Abstract

Cancer has become a major threat to global health. With the development of computer science, artificial intelligence (AI) has been widely applied in histopathological image (HI) analysis. This study used bibliometrics to analyze publications on AI in HI from 2001 to 2021, exploring the research status and potential future research directions. A total of 2844 publications from the Web of Science Core Collection were included in the bibliometric analysis. Countries/regions, institutions, authors, journals, keywords, and references were analyzed using VOSviewer and CiteSpace. The results showed that the number of publications has grown rapidly in the last five years. The USA is the most productive and influential country, with 937 publications and 23,010 citations, and most of the authors and institutions with the highest numbers of publications and citations are from the USA. Keyword analysis showed that breast cancer, prostate cancer, colorectal cancer, and lung cancer are the tumor types of greatest concern. Co-citation analysis showed that classification and nucleus segmentation are the main research directions of AI-based HI studies. Transfer learning and self-supervised learning in HI are on the rise. This study is the first bibliometric analysis of AI in HI across multiple indicators, providing insights for researchers to identify key cancer types and understand research trends in the application of AI to HI.

1. Introduction

Cancer is a widespread disease and a leading cause of death worldwide. In 2020, more than 19 million new cancer cases were diagnosed worldwide, and there were over 10 million cancer deaths [1]. Early diagnosis and treatment are essential to reduce cancer mortality. Histopathological diagnosis is the gold standard for tumor diagnosis, grading, and classification. Although CT, MRI, and other imaging methods are used clinically to examine tumors, a definitive cancer diagnosis can only be made by biopsy and histopathological examination. Therefore, accurate histopathological diagnosis is critical for cancer screening and diagnosis. Histopathological examination is a highly subjective task that relies on professional knowledge and experience to evaluate tumor tissue structure and cell morphology in pathological sections. Unfortunately, experienced pathologists are often in short supply. Objective and accurate artificial intelligence (AI) methods for histopathology could reduce clinical misdiagnoses caused by subjectivity and the shortage of professionals [2].

AI is a technology that uses computers and algorithms to imitate human intelligence. With the rapid development of computer technology, machine learning and deep learning methods have been widely used in image processing [3]. As data-driven approaches, these methods learn features relevant to downstream tasks from datasets. Digital pathology enables large-scale digitization of histopathological slides, and the resulting massive growth of histopathological image (HI) data has accelerated the development of AI in this field. AI has been used in early cancer diagnosis [4,5], cancer mutation detection [6,7], nuclear segmentation [8,9], prognosis evaluation [10,11], and other tasks. Combining AI with pathologists is a promising way to address the shortage of professional pathologists in some remote regions [12,13,14]. Because a large number of papers have been published on AI applications in HI, a quantitative analysis of these papers is worthwhile to grasp the overall research status and trends.

Bibliometrics is a method for quantitatively analyzing large numbers of documents in a given field. By counting the authors, countries, journals, and citation relationships of the published literature, bibliometrics can visualize the connections and structure of research topics to identify current research hotspots and future development trends [15]. Many reviews have covered AI in HI [16,17,18,19], but only one bibliometric study has been published, and it addresses histopathological image classification of breast cancer [20]. That study is limited to the classification task and to breast cancer. A bibliometric analysis covering multiple cancers and multiple tasks in the application of AI to HI is therefore needed. Quantitative analysis of the literature data can objectively reveal the research themes and emerging trends in the field.

This study is the first bibliometric study to provide a comprehensive view of the application of AI in HI. We collected publications from 2001 to 2021 in the Web of Science Core Collection database and then counted and visualized multiple indicators using VOSviewer and CiteSpace. This study summarizes the prevailing cancer types, methods, and development trends of AI in HI, and provides a reference to help future researchers identify key points.

2. Materials and Methods

2.1. Search Methods

To ensure comprehensive literature coverage, six citation indexes of the Web of Science Core Collection (WOSCC) database were searched: Science Citation Index Expanded (SCI-Expanded), Social Sciences Citation Index (SSCI), Conference Proceedings Citation Index-Science (CPCI-S), Arts & Humanities Citation Index (A & HCI), Emerging Sources Citation Index (ESCI), and Conference Proceedings Citation Index-Social Sciences & Humanities (CPCI-SSH). Using the Exact search function, we collected articles, proceedings papers, and review articles published from 2001 to 2021; the search was completed on a single day (5 May 2022) so that database updates would not affect the results. The records were exported from WOSCC as a “plain text” file with “Full Record and Cited References”, which contains the titles, abstracts, authors, keywords, journals, and reference records of the collected literature.

Because research on AI in HI is described with a wide variety of methodological terms, we tried to include as many of these terms as possible [21]. The search rules were as follows: TS = (“deep learning” OR “artificial intelligence” OR “machine learning” OR “feature extraction” OR “intelligent learning” OR “feature extraction” OR “feature mining” OR “feature embedding” OR “instance segmentation” OR “Semantic segmentation” OR “image* segmentation” OR superpixel OR “data mine” OR “neural network” OR “deep network” OR “neural learning” OR “neural nets model” OR “artificial neural network” OR “deep neural network” OR “Convolutional Neural Networks” OR CNN OR “supervised learning” OR “semi-supervised” OR “unsupervised learning” OR “unsupervised clustering” OR adversarial generative OR “Bayes network” OR self-supervised OR “active learning” OR “few-shot learning” OR “continual learning” OR “transfer learning” OR “domain adaptation” OR “metric learning” OR “contrastive learning” OR “reinforcement learning” OR “meta learning” OR “knowledge graph” OR “graph learning” OR “graph mining” OR SVM) AND TS = (‘histopatholo* image*’ OR “whole slide image*” OR “slide image*” OR “digital pathology”).
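A Boolean topic query of this size is easy to mistype by hand (the string above repeats “feature extraction”, for example). The sketch below is a hypothetical helper, not part of the authors' workflow, that assembles a TS = (...) query from term lists, quotes multi-word phrases, and drops case-insensitive duplicates. The function names and the abbreviated term lists are illustrative assumptions; the real query should still be checked in the Web of Science interface.

```python
def dedupe(terms):
    """Drop repeated terms (case-insensitive), keeping the first occurrence."""
    seen = set()
    unique = []
    for term in terms:
        key = term.lower()
        if key not in seen:
            seen.add(key)
            unique.append(term)
    return unique


def topic_group(terms):
    """Join terms with OR inside a TS = (...) field tag.

    Multi-word terms are wrapped in double quotes so they are searched as phrases.
    """
    formatted = [f'"{t}"' if " " in t else t for t in dedupe(terms)]
    return "TS = (" + " OR ".join(formatted) + ")"


def wos_query(method_terms, image_terms):
    """Combine the method block and the image block with AND."""
    return topic_group(method_terms) + " AND " + topic_group(image_terms)


# Abbreviated, illustrative term lists (not the full lists used in the study).
methods = ["deep learning", "machine learning", "feature extraction",
           "feature extraction", "CNN", "SVM"]
images = ["histopatholo* image*", "whole slide image*", "digital pathology"]

query = wos_query(methods, images)
print(query)
```

Keeping the two term groups as separate lists mirrors the structure of the published query (methods AND image types) and makes it straightforward to add or remove terms without breaking the parentheses.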

2.2. Bibliometric Analysis Methods

Next, we analyzed indicators such as the countries/regions, institutions, authors, keywords, and references of the publications retrieved above. Co-authorship analysis reflects the cooperative relationships between countries, authors, and institutions. Co-occurrence analysis measures how often terms (e.g., keywords) appear together in the same documents, revealing relationships among research topics. Co-citation analysis counts how often two references are cited together by later documents; it is used to explore patterns in influential literature and the structural characteristics of the field [22].
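All three analyses reduce to counting unordered pairs of items that appear in the same record. The sketch below illustrates this under simple assumptions: each record is a small dictionary with hypothetical field names (not the WoS export format), each record contributes 1 to every pair it contains (full counting; VOSviewer also offers fractional counting), and a node's total link strength is taken as the sum of the weights of its links.

```python
from collections import Counter
from itertools import combinations


def pair_counts(records, field):
    """Count how many records contain each unordered pair of items in `field`."""
    counts = Counter()
    for record in records:
        items = sorted(set(record[field]))  # deduplicate and order within one record
        counts.update(combinations(items, 2))
    return counts


def total_link_strength(pairs):
    """Sum the weights of all links attached to each node."""
    strength = Counter()
    for (a, b), weight in pairs.items():
        strength[a] += weight
        strength[b] += weight
    return strength


# Hypothetical toy records; field names are illustrative only.
records = [
    {"countries": ["USA", "China"], "keywords": ["deep learning", "breast cancer"],
     "refs": ["R1", "R2"]},
    {"countries": ["USA", "Netherlands"], "keywords": ["deep learning", "segmentation"],
     "refs": ["R1", "R2", "R3"]},
    {"countries": ["China"], "keywords": ["deep learning", "breast cancer"],
     "refs": ["R2", "R3"]},
]

coauthorship = pair_counts(records, "countries")  # country co-authorship
cooccurrence = pair_counts(records, "keywords")   # keyword co-occurrence
cocitation = pair_counts(records, "refs")         # reference co-citation
print(total_link_strength(coauthorship))
```

The same counting function serves all three networks; only the field being paired changes, which is why co-authorship, co-occurrence, and co-citation maps share the same node-and-link visual form.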

We performed statistical and visual analyses using VOSviewer (version 1.6.18, Leiden University, Leiden, The Netherlands) [23], CiteSpace (version 5.8.R3, Drexel University, Philadelphia, PA, USA) [24], and Microsoft Excel. VOSviewer was used for the co-authorship analysis of countries/regions, authors, and institutions and for the co-occurrence analysis of author keywords. CiteSpace was used for the co-citation analysis of references and for timeline visualization, with the log-likelihood ratio (LLR) as the algorithm for generating cluster labels. For the co-citation network, the modularity Q value and the weighted mean silhouette S value were used to measure the clustering effect and network homogeneity, respectively; higher values of both indicate a more reasonable and effective clustering [25,26]. A dual-map overlay of journals was drawn [27] to analyze the distribution of citation trajectories and literature information. The journal impact factor (IF), taken from the 2020 impact factors of the Web of Science core database, was used as an indicator of journal quality; a higher IF generally indicates that the articles a journal publishes are cited more frequently. In addition, the numbers of publications by country/region were mapped using Python 3.6 (Guido van Rossum, Amsterdam, Netherlands). Microsoft Excel was used to produce statistical charts.
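To make the modularity Q value concrete, the sketch below computes Newman's modularity for a weighted, undirected network given a cluster assignment, using the per-cluster form Q = Σ_c [L_c/m − (d_c/2m)²], where m is the total edge weight, L_c the weight of edges inside cluster c, and d_c the summed node strength in c. We assume this standard definition is the one CiteSpace reports; its internal implementation and the silhouette S computation are not reproduced here.

```python
from collections import defaultdict


def modularity(edges, community):
    """Newman's modularity Q for a weighted, undirected graph.

    edges: dict mapping (u, v) -> weight, each undirected edge listed once.
    community: dict mapping node -> cluster id.
    """
    m = sum(edges.values())          # total edge weight
    internal = defaultdict(float)    # weight of edges inside each cluster
    degree = defaultdict(float)      # summed node strength per cluster
    for (u, v), w in edges.items():
        degree[community[u]] += w
        degree[community[v]] += w
        if community[u] == community[v]:
            internal[community[u]] += w
    return sum(internal[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)


# Toy graph: two triangles joined by a single edge (unit weights).
edges = {("a", "b"): 1, ("b", "c"): 1, ("a", "c"): 1,
         ("d", "e"): 1, ("e", "f"): 1, ("d", "f"): 1,
         ("c", "d"): 1}
community = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
q = modularity(edges, community)  # 2 * (3/7 - (7/14)**2) = 5/14
print(round(q, 3))
```

Q grows as more of the edge weight falls inside clusters than would be expected by chance, so a co-citation network with Q close to 1 has sharply separated research topics.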

3. Results

3.1. Global Publications Trends

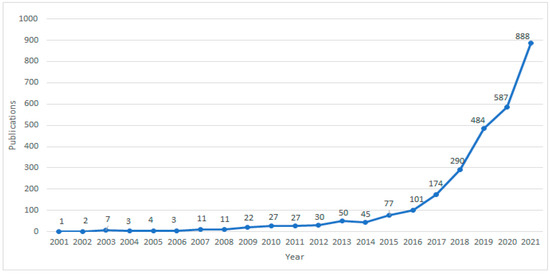

Using the retrieval method described in Section 2.1, we retrieved a total of 2844 papers, including 1714 articles, 945 proceedings papers, and 185 review articles. The annual number of AI studies on histopathological images grew explosively over the past five years, from 174 to 888 (Figure 1), and publications from these five years account for 85.2% of the total, indicating that AI-based pathological analysis has become a hot research topic in recent years.

Figure 1.

Global Publication Trends on AI in HI from 2001 to 2021.

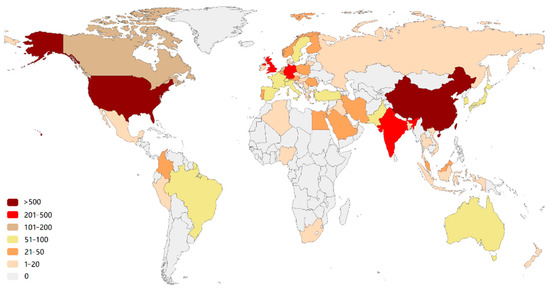

3.2. Countries and Regions

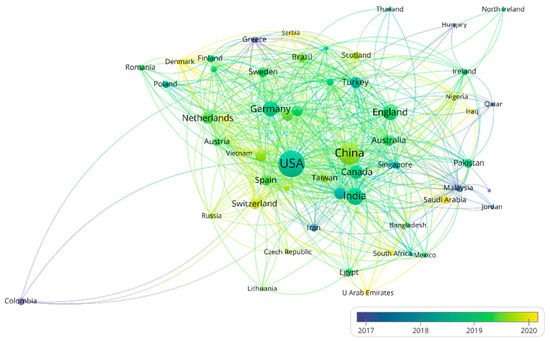

More than 58 countries have participated in research on AI applications in HI (Figure 2). The top 10 most productive countries and regions are shown in Table S1. The USA is the most productive (937 publications) and most influential (23,010 citations) country, followed by China (550 publications and 6631 citations). Notably, some countries (e.g., the Netherlands and France) have relatively high citation counts but modest numbers of publications. This is largely due to a few highly cited review articles, e.g., “A survey on deep learning in medical image analysis” by Litjens et al. [28] from the Netherlands (4078 citations). The co-authorship network of countries is shown in Figure 3. AI-based HI research began in the USA as early as 2001 [29], whereas in China it began in 2009 [30]. The USA cooperates with many countries and sits at the center of the network, with the highest total link strength (731). Cooperation among the other countries is less intense.

Figure 2.

Country production map based on the total publications. The color indicates the number of publications.

Figure 3.

The network of countries/regions’ co-authorship analysis. Node color and size indicate average publication year and the number of publications, respectively; line thickness indicates the strength of the relationship. Each country/region in this map has at least 5 collaborative publications. The average publication years of the countries/regions range from 2017 to 2020.

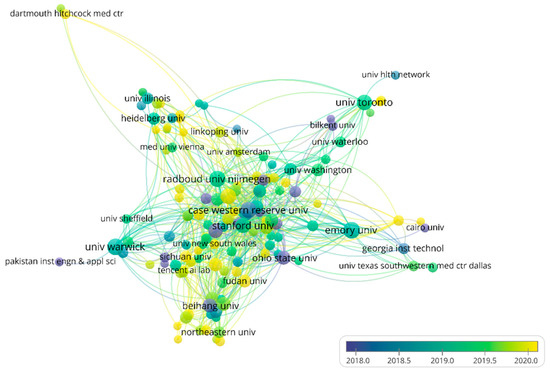

3.3. Institutions

About 3160 institutions have participated in research on AI in HI. The top 10 most productive institutions are shown in Table S2; six of them are in the United States. The top two institutions are Emory University and the University of Warwick, with 55 and 54 publications, respectively. Emory University has mainly focused on combining AI with genomics to analyze HI [31,32,33], whereas the University of Warwick has focused on segmentation in HI [34,35,36]. Radboud University Nijmegen (citations = 6316) and Stanford University (citations = 5423) are the two most-cited institutions. In particular, the review “A survey on deep learning in medical image analysis” by Litjens et al. [28] (citations = 4078) and the skin cancer classification study “Dermatologist-level classification of skin cancer with deep neural networks” by Esteva et al. [37] (citations = 4273) account for most of the citations of these two institutions, respectively. The co-authorship network of institutions is shown in Figure 4. Most sub-networks consist of institutions from the same country, indicating, unsurprisingly, that institutions within a country are more likely to collaborate. Most of the institutions at the center of the network are from the USA, suggesting that extensive cooperation may be conducive to publishing more papers.

Figure 4.

The network of institutions’ co-authorship analysis. Node color and size indicate the average publication year and the number of publications, respectively; line thickness indicates the strength of the relationship. Each institution in this map has at least 10 collaborative publications. The average publication years of the institutions range from 2018 to 2020.

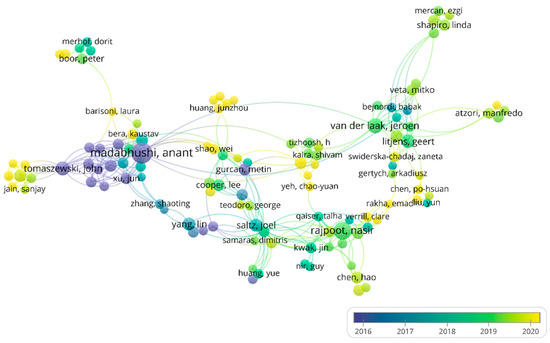

3.4. Authors

All of the top 10 most productive authors are from Western countries, half of them from the USA (Table S3). Dr. Anant Madabhushi is the most prolific author, with 72 publications, and was an early contributor to AI-based histopathological analysis of breast cancer [38,39,40,41]. The co-authorship network of authors is shown in Figure 5. A sub-network has formed around Dr. Madabhushi, suggesting that collaboration can promote research progress. The many yellow nodes in Figure 5, which represent authors with more recent average publication years, indicate that more and more researchers are entering the field of AI in HI analysis, reflecting its promise.

Figure 5.

The network of authors’ co-authorship analysis. Node color and size indicate the average publication year and the number of publications, respectively; line thickness indicates the strength of the relationship. Each author in this map has at least 7 collaborative publications. The average publication years of the authors range from 2016 to 2020.

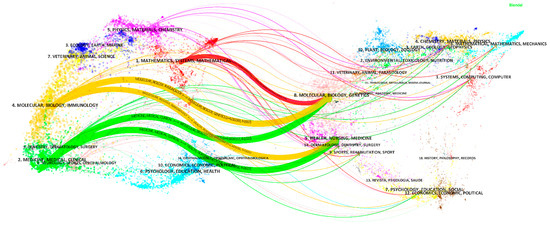

3.5. Journals

The top 10 journals by number of publications are shown in Table S4. Scientific Reports has published the most papers. The two most cited journals are Medical Image Analysis and IEEE Transactions on Medical Imaging, which have high IFs (8.545 and 10.048, respectively). Their high IFs and citation counts suggest that these two journals are of high quality and influence in the application of AI in HI. Figure 6 is a dual-map overlay of journals publishing AI-related research on HI, with the citing journal map on the left and the cited journal map on the right. The citing papers are mainly distributed in three areas: (1) Mathematics, Systems, Mathematical; (2) Molecular, Biology, Immunology; (3) Medicine, Medical, Clinical. The cited papers are mainly distributed in two areas: (4) Molecular, Biology, Genetics; (5) Health, Nursing, Medicine. The dual map shows five main citation paths, indicating that research on AI in HI is mainly published in areas (1), (2), and (3) and mainly cites work from areas (4) and (5).

Figure 6.

Dual-map overlay of journals on the application of AI in HI. The width of each path is proportional to the z-score-scaled citation frequency. From top to bottom, the main paths (citing area → cited area) are: MATHEMATICS, SYSTEMS, MATHEMATICAL → MOLECULAR, BIOLOGY, GENETICS (z = 1.84, f = 986); MOLECULAR, BIOLOGY, IMMUNOLOGY → MOLECULAR, BIOLOGY, GENETICS (z = 4.01, f = 1937); MOLECULAR, BIOLOGY, IMMUNOLOGY → HEALTH, NURSING, MEDICINE (z = 2.60, f = 1321); MEDICINE, MEDICAL, CLINICAL → MOLECULAR, BIOLOGY, GENETICS (z = 4.66, f = 2222); and MEDICINE, MEDICAL, CLINICAL → HEALTH, NURSING, MEDICINE (z = 5.56, f = 2617).
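The z values in the caption are standardized citation frequencies. A minimal sketch of z-score scaling is shown below, assuming the usual definition z = (f − mean) / standard deviation computed over all discipline-pair paths in the overlay (not only the five listed); whether CiteSpace uses the population or sample standard deviation is an assumption here (population is used). The input list is hypothetical.

```python
from statistics import mean, pstdev


def z_scores(frequencies):
    """Standardize citation frequencies: z = (f - mean) / population std."""
    mu = mean(frequencies)
    sigma = pstdev(frequencies)
    return [(f - mu) / sigma for f in frequencies]


# Hypothetical path frequencies; the real overlay contains many more paths.
print(z_scores([120, 450, 986, 1321, 1937, 2222, 2617]))
```

As a consistency check, the five (f, z) pairs in the caption lie on a single straight line, as expected if one linear standardization was applied to every path.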

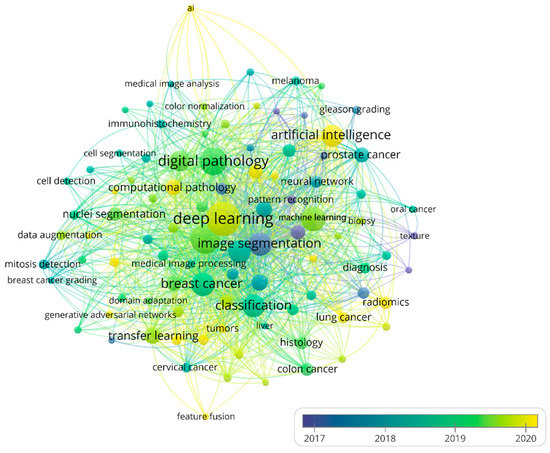

3.6. Keywords

We summarize the top 10 most frequent method and cancer-type keywords to identify hotspots of AI in HI (Table S5). Among the method keywords, “deep learning” has the most occurrences (n = 657), followed by “convolutional neural network” (n = 445), the main image analysis method in deep learning. Image data are often high-dimensional, and deep learning extracts features from images automatically and efficiently; these features can often be adapted to downstream tasks without manual feature engineering [42]. The four cancer keywords with the most occurrences are “breast cancer” (n = 298), “prostate cancer” (n = 90), “colorectal cancer” (n = 55), and “lung cancer” (n = 45), indicating that these cancers are current research hotspots. One possible reason is that breast cancer and lung cancer are the cancers with the highest mortality rates in the United States and China, respectively, and prostate cancer and colorectal cancer have shown rapidly increasing incidence rates in recent years [43]. Among cancer types with more than five occurrences, the three least frequent are “ovarian cancer”, “pancreatic cancer”, and “thyroid cancer”. The keyword co-occurrence network, built after merging keywords with similar meanings, is shown in Figure 7. The large yellow node in Figure 7 represents deep learning, an emerging and well-developed area, suggesting that deep learning will remain of high research interest in the future.
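Merging keywords with similar meanings before counting can change the rankings noticeably. The sketch below shows one way to do this: normalize case, map synonyms to a canonical form, count each keyword at most once per paper, and keep keywords above an occurrence threshold (Figure 7 uses at least 11). The synonym map is a hypothetical example; the paper does not publish its merging rules.

```python
from collections import Counter

# Hypothetical synonym map; the study's actual merging rules are not published.
SYNONYMS = {
    "cnn": "convolutional neural network",
    "convolutional neural networks": "convolutional neural network",
    "breast carcinoma": "breast cancer",
}


def normalize(keyword):
    """Lower-case, trim, and map a keyword to its canonical form."""
    key = keyword.strip().lower()
    return SYNONYMS.get(key, key)


def keyword_frequencies(papers, min_occurrences=1):
    """Count each normalized author keyword once per paper; keep frequent ones."""
    counts = Counter()
    for keywords in papers:
        counts.update({normalize(k) for k in keywords})
    return {k: n for k, n in counts.items() if n >= min_occurrences}


papers = [
    ["Deep learning", "CNN"],
    ["deep learning", "Convolutional Neural Networks", "Breast cancer"],
    ["Breast carcinoma", "deep learning"],
]
print(keyword_frequencies(papers))
print(keyword_frequencies(papers, min_occurrences=3))
```

Without the synonym map, “CNN” and “Convolutional Neural Networks” would be counted as two separate keywords, each falling below a threshold they jointly exceed.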

Figure 7.

The network of author keywords’ co-occurrence analysis. Node color and size indicate average publication year and the number of occurrences, respectively; line thickness indicates the strength of the relationship. Each keyword in this map occurs at least 11 times. The average publication years of the keywords range from 2017 to 2020.

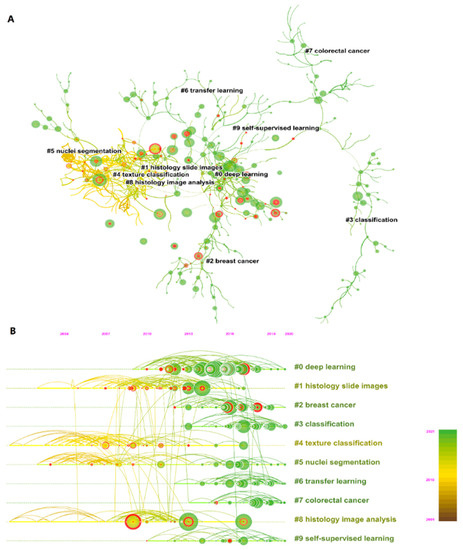

3.7. Co-Citation References

The top 10 most co-cited references are summarized in Table 1. The paper by Ronneberger et al. [44] has the highest co-citation frequency (358); their U-Net is widely used in medical image segmentation. The modularity Q and weighted mean silhouette S of the co-citation network (Figure 8A) are 0.9149 and 0.9556, respectively, indicating a good clustering effect and clearly defined topics. We selected the top 10 clusters and plotted a timeline map [45,46] (Figure 8B) to trace their development over time. Deep learning is the largest cluster in the period from 2010 to 2020. Clusters 2 and 7 show a sharp recent increase in co-cited studies on breast cancer and colorectal cancer. Clusters 3, 4, 5, 6, and 9 (classification, texture classification, nuclei segmentation, transfer learning, and self-supervised learning, respectively) have been research hotspots of AI in HI analysis in recent years. Texture classification (cluster 4) and nuclei segmentation (cluster 5) were studied earlier, generally with traditional machine learning or feature engineering [47,48,49,50]. Transfer learning and self-supervised learning emerged between 2010 and 2021 [51,52,53,54]. These methods are discussed further in Section 4.
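Cluster labels such as “transfer learning” or “self-supervised learning” are chosen with the log-likelihood ratio. The sketch below uses Dunning's G² statistic on a 2×2 table (term vs. other terms, inside vs. outside the cluster) and keeps only terms that are over-represented in the cluster. This is a standard formulation that we assume is close to CiteSpace's; the exact term extraction and weighting in CiteSpace are not reproduced, and the counts are hypothetical.

```python
import math


def log_likelihood_ratio(a, b, c, d):
    """Dunning's G^2 for a 2x2 contingency table.

    a: term count inside the cluster       b: term count outside the cluster
    c: other-term count inside the cluster d: other-term count outside
    """
    n = a + b + c + d
    rows = [a + b, c + d]  # term vs. other terms
    cols = [a + c, b + d]  # inside vs. outside the cluster
    observed = [a, b, c, d]
    expected = [rows[0] * cols[0] / n, rows[0] * cols[1] / n,
                rows[1] * cols[0] / n, rows[1] * cols[1] / n]
    return 2 * sum(o * math.log(o / e) for o, e in zip(observed, expected) if o > 0)


def rank_labels(cluster_counts, background_counts):
    """Rank over-represented cluster terms by G^2 (highest first)."""
    in_total = sum(cluster_counts.values())
    out_total = sum(background_counts.values())
    scores = {}
    for term, a in cluster_counts.items():
        b = background_counts.get(term, 0)
        if a * out_total > b * in_total:  # relative frequency higher inside the cluster
            scores[term] = log_likelihood_ratio(a, b, in_total - a, out_total - b)
    return sorted(scores, key=scores.get, reverse=True)


cluster = {"self-supervised": 8, "deep learning": 10}
background = {"self-supervised": 2, "deep learning": 40, "segmentation": 30}
print(rank_labels(cluster, background))
```

In this toy example “deep learning” is equally frequent inside and outside the cluster, so it is not chosen as a label even though it is the most common term; LLR favors terms that distinguish a cluster rather than terms that are merely frequent.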

Table 1.

Top 10 most co-cited publications of AI in HI.

Figure 8.

(A) The co-citation map of references. (B) Timeline visualization of the co-citation map. Cluster labels were selected with the log-likelihood ratio. Lines indicate reference relations, and the colors of lines and circles from yellow to green represent the years from 2001 to 2021. Red circles indicate citation bursts, i.e., the number of citations to a publication increased rapidly over one or more years. Vertically, clusters are ordered from largest (top, labeled “#0”) to smallest (bottom). The horizontal axis is the timeline from 2001 to 2021. Each node represents a publication; larger nodes indicate more co-citations.

4. Discussion

In this study, we conducted a bibliometric analysis of 2844 documents to understand the research status, hotspots, and development trends in the application of AI to HI analysis. Over the past 20 years, the number of publications has increased year after year, especially sharply in the past five years. Breast cancer, prostate cancer, colorectal cancer, and lung cancer are the four most studied cancer types. The USA is the most influential and productive country, with far more citations than any other country, and it has collaborated extensively with many countries. American researchers and institutions have the longest history of research on AI in pathology and have published many high-quality articles. Countries and institutions should further strengthen cooperation to promote advanced and interdisciplinary frontier research.

Combining the keyword co-occurrence and reference co-citation analyses, we summarize the main directions and challenges of AI in HI analysis. On the one hand, deep-learning-based classification and nuclei segmentation appear to be the two main research directions at present. Deep learning methods usually require large annotated datasets to reduce overfitting and improve generalization. On the other hand, publications on transfer learning and self-supervised learning have increased rapidly, indicating that these two directions could become hotspots in the future.

Classification based on deep learning. Classification aims to diagnose cancer and identify cancerous tissue, and AI methods can help make cancer identification more objective and accurate. Most mainstream deep network architectures for classification accept input images that are much smaller than a histopathological image. A scanned histopathological image usually contains billions of pixels, so it must be preprocessed into patches of appropriate size before being fed to the deep network. Typically, the image is divided into non-overlapping patches of the same size, a process that can generate thousands of patches per image. Pathologists then manually annotate the dataset at the patch level, and the deep learning model is trained on it to extract relevant features under the guidance of the annotations. In general, the more annotated data there are, the better the classification performance of the model. The best deep learning models are comparable to professional pathologists [45,61], but most pathology models still cannot match pathologists' performance. The biggest challenge in current classification problems is that training accurate models requires large datasets with physician annotations, which are often difficult to obtain. A common practice at present is to expand the data with augmentation methods (e.g., color changes and random cropping). Collecting large annotated datasets [62,63] and using pre-training methods such as transfer learning [64,65,66] and self-supervised learning [67,68] are effective ways to improve classification accuracy. In addition, automated annotation procedures [69,70,71] provide an alternative to manual annotation by pathologists and reduce the demand for professionals in data annotation.
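The patching and augmentation steps described above can be sketched with NumPy. The example assumes the slide region is already loaded as an H × W × C array (reading real whole-slide formats requires a dedicated library, which is not shown), discards border pixels that do not fill a full tile, and uses a deliberately simple augmentation (random crop, horizontal flip, brightness scaling) as a stand-in for the color changes and cropping mentioned in the text; stain-specific color augmentation is not shown. All function names are illustrative.

```python
import numpy as np


def tile_image(image, patch_size):
    """Split an H x W x C array into non-overlapping patch_size x patch_size tiles.

    Tiles are returned in row-major order; border pixels that do not fill a
    complete tile are discarded.
    """
    h, w, c = image.shape
    rows, cols = h // patch_size, w // patch_size
    cropped = image[: rows * patch_size, : cols * patch_size]
    tiles = cropped.reshape(rows, patch_size, cols, patch_size, c).swapaxes(1, 2)
    return tiles.reshape(rows * cols, patch_size, patch_size, c)


def augment(patch, rng, crop_size):
    """Random crop, random horizontal flip, and brightness scaling (illustrative)."""
    top = rng.integers(0, patch.shape[0] - crop_size + 1)
    left = rng.integers(0, patch.shape[1] - crop_size + 1)
    out = patch[top : top + crop_size, left : left + crop_size].astype(np.float32)
    if rng.random() < 0.5:
        out = out[:, ::-1]          # horizontal flip
    out *= rng.uniform(0.9, 1.1)    # simple intensity change
    return np.clip(out, 0, 255).astype(np.uint8)


# Small stand-in "image"; a hypothetical 50,000 x 50,000-pixel slide tiled at
# 256 px would give (50000 // 256) ** 2 = 38025 patches.
image = np.arange(4 * 6 * 3).reshape(4, 6, 3)
tiles = tile_image(image, 2)

rng = np.random.default_rng(0)
patch = rng.integers(0, 256, size=(8, 8, 3), dtype=np.uint8)
aug = augment(patch, rng, crop_size=6)
print(tiles.shape, aug.shape)
```

In practice, patches that are mostly background (glass) are usually filtered out before training; that step is omitted here for brevity.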

Nuclei segmentation. Nuclei segmentation supports the analysis of cancer tissue features and the evaluation of grade and prognosis. Nuclear features are significant in histopathology, as pathologists may grade tumors or assess prognosis based on cell morphology and structure. For example, mitosis is closely related to breast cancer grading [72], and nuclear density, size, and other indicators are significantly associated with cancer prognosis [73]. Segmenting nuclei from tissue images to extract nuclear features therefore provides the basis for further analysis [74,75]. To build a nuclei segmentation dataset, a pathologist selects representative regions of interest (ROIs) from HI and annotates the cell/nucleus contours and types using annotation software. A single ROI typically contains hundreds of cells of several types, so annotating an accurate segmentation ground truth takes great effort. In deep-learning-based nuclei segmentation, the model predicts, for each pixel of the input image, the probability that it belongs to a cell/nucleus; pixels whose probability exceeds a threshold are assigned to the cell/nucleus region. One challenge in nuclei segmentation is overlapping and clustered nuclei. Because nuclei are annotated at the cell instance level, annotation is more difficult. Moreover, few datasets are publicly available, making it difficult for segmentation methods to generalize across datasets [76].
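The thresholding step, and why clustered nuclei are a challenge, can be illustrated on a toy probability map. The sketch below thresholds per-pixel probabilities and then groups foreground pixels into objects with a simple 4-connected labeling (breadth-first search). The probability values are hypothetical rather than model output; real pipelines typically add instance-separation steps such as watershed, which are not shown.

```python
from collections import deque

import numpy as np


def threshold_mask(prob_map, threshold=0.5):
    """Foreground = pixels whose predicted nucleus probability exceeds the threshold."""
    return prob_map > threshold


def label_components(mask):
    """4-connected component labeling by breadth-first search.

    Returns (labels, count): labels is 0 for background and 1..count for objects.
    """
    labels = np.zeros(mask.shape, dtype=int)
    current = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue
        current += 1
        labels[start] = current
        queue = deque([start])
        while queue:
            r, c = queue.popleft()
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if (0 <= nr < mask.shape[0] and 0 <= nc < mask.shape[1]
                        and mask[nr, nc] and not labels[nr, nc]):
                    labels[nr, nc] = current
                    queue.append((nr, nc))
    return labels, current


# Hypothetical probability map with two nuclei separated by background.
separate = np.array([[0.9, 0.8, 0.1, 0.7, 0.9],
                     [0.8, 0.9, 0.2, 0.9, 0.8]])
touching = separate.copy()
touching[0, 2] = 0.6  # the two nuclei now touch

print(label_components(threshold_mask(separate))[1])
print(label_components(threshold_mask(touching))[1])
```

A single pixel above the threshold between two nuclei is enough to merge them into one object, which is why instance-level annotation and dedicated instance segmentation methods matter for clustered nuclei.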

Transfer learning. Several large, fully annotated datasets currently exist for downstream tasks such as image classification [61], segmentation [77], and object detection [78,79]. In HI, datasets are usually annotated for a specific downstream task in a specific cancer or tissue [80,81,82], which hinders research on other cancer types of interest. Transfer learning leverages knowledge from a source domain to improve learning performance in a target domain [83]. When large annotated datasets are difficult to obtain, transfer learning can improve the performance and robustness of models trained on relatively small datasets in downstream analysis. In HI analysis, transferring knowledge gained from other large datasets to the target cancer domain can improve the performance of downstream tasks [54,84,85,86]. The difficulty of histopathological transfer learning is that most studies transfer models pretrained on the ImageNet dataset [61] to the medical domain [87]. ImageNet contains natural objects, whereas HI datasets contain repetitive tissue structures and textures, so the two domains differ significantly in feature distribution and resolution. Transfer learning methods suited to the large histopathology datasets now available could further improve the performance of downstream tasks [87,88,89].
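In practice, transfer learning for HI usually means fine-tuning a deep network pretrained on ImageNet, which requires a deep learning framework and pretrained weights. As a minimal, framework-free analogy, the sketch below illustrates parameter transfer with logistic regression on synthetic data: weights learned on a large source dataset initialize training on a small, related target dataset, compared with training from scratch on the target data alone. The data, weights, and step counts are all hypothetical, and the printed losses depend on how related the tasks are and on the random seed.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(w, X, y):
    """Mean binary cross-entropy of a logistic model with weights w."""
    p = np.clip(sigmoid(X @ w), 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def train_logreg(X, y, w_init=None, lr=0.1, steps=200):
    """Full-batch gradient descent on the logistic loss.

    With w_init=None the model starts from zeros ("from scratch"); passing
    weights learned on a source task turns this into a simple fine-tuning step.
    """
    w = np.zeros(X.shape[1]) if w_init is None else w_init.copy()
    for _ in range(steps):
        grad = X.T @ (sigmoid(X @ w) - y) / len(y)
        w -= lr * grad
    return w


def make_data(w_true, n, rng):
    """Hypothetical synthetic data: Gaussian features, labels from a logistic model."""
    X = rng.normal(size=(n, len(w_true)))
    y = (rng.random(n) < sigmoid(X @ w_true)).astype(float)
    return X, y


rng = np.random.default_rng(0)
w_source = np.array([2.0, -1.0, 0.5, 0.0, 1.0])
w_target = w_source + np.array([0.3, 0.0, -0.2, 0.1, 0.0])  # related but shifted task

X_src, y_src = make_data(w_source, 5000, rng)    # large labelled source set
X_tgt, y_tgt = make_data(w_target, 30, rng)      # small labelled target set
X_test, y_test = make_data(w_target, 2000, rng)  # held-out target data

w_pre = train_logreg(X_src, y_src, steps=500)                     # "pre-training"
w_scratch = train_logreg(X_tgt, y_tgt, steps=20)                  # target data only
w_finetuned = train_logreg(X_tgt, y_tgt, w_init=w_pre, steps=20)  # transfer

print("from scratch:", log_loss(w_scratch, X_test, y_test))
print("fine-tuned:  ", log_loss(w_finetuned, X_test, y_test))
```

The analogy also shows the caveat raised in the text: transfer helps only insofar as the source task resembles the target task, which is the concern with moving from ImageNet to histopathology.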

Self-supervised learning. Self-supervised learning obtains representations from unlabeled data by discovering features common to the images in a dataset, without annotation [90]. It constructs pretext tasks to learn good representations of inherent features during training and then fine-tunes on downstream tasks using labeled data [91]. Pretext tasks for HI are generally constructed from contextual, multi-resolution, and semantic features [51,92], and downstream tasks are addressed by pre-training and fine-tuning. Appropriate pretext tasks that exploit prior information in HI can extract effective HI features [93]. Because self-supervised learning mines semantic information from the images themselves, it is a future development direction for AI in medicine and for HI analysis [94]. Designing appropriate pretext tasks for HI and obtaining general features are the key points in applying self-supervised learning to HI.
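One widely used self-supervised formulation is contrastive learning (also among the search terms in Section 2.1), where the pretext task is to recognize which two augmented views come from the same image patch. The sketch below computes the NT-Xent (InfoNCE-style) loss used in SimCLR-like methods from two batches of embeddings; it is a generic example, not the specific pretext tasks of the cited HI studies, and the toy embeddings stand in for the outputs of an encoder that is not shown.

```python
import numpy as np


def nt_xent(z1, z2, temperature=0.5):
    """NT-Xent loss for two augmented views of the same N images.

    z1, z2: (N, D) embeddings; row i of z1 and row i of z2 form a positive pair,
    and the other 2N - 2 embeddings in the batch act as negatives.
    """
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # cosine similarity
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)                    # exclude self-pairs
    n = z1.shape[0]
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(n)])
    log_prob = sim[np.arange(2 * n), positives] - np.log(np.exp(sim).sum(axis=1))
    return float(-log_prob.mean())


# Toy embeddings: matching views (aligned) vs. views paired with the wrong image.
views_a = np.eye(4)
views_b = np.eye(4)
print(nt_xent(views_a, views_b), nt_xent(views_a, views_b[[1, 2, 3, 0]]))
```

Minimizing this loss pulls the two views of each patch together and pushes other patches apart, so the encoder learns features without labels; a small labeled dataset is then used for fine-tuning, as described above.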

Limitations

This study is the first bibliometric analysis of the application of AI to HI that covers a comprehensive range of cancer types. It also has limitations. Only literature in the WOSCC database was analyzed, so documents in other databases may have been missed. To avoid the influence of automatic keyword selection by WOSCC, only author-provided keywords were used in the keyword analysis, which may have omitted some keywords. Finally, recently published papers may be underrepresented in citation-based analyses because they have had less time to accumulate citations.

In future studies, researchers could conduct in-depth bibliometric analyses of the most studied cancer types listed in Table S5. Such studies should also include more databases to obtain more comprehensive publication data and manually select keywords to obtain a more accurate keyword analysis.

5. Conclusions

In summary, AI has become an important research method for HI analysis. Breast cancer, prostate cancer, colorectal cancer, and lung cancer are the most studied cancer types. Deep-learning-based classification and nucleus segmentation may remain research hotspots for a long time, and transfer learning and self-supervised learning are likely development trends of future research. Overall, this study provides insights into the structure and trends of research on AI-based HI analysis.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/ijerph191811597/s1. Table S1: Top 10 most productive countries of AI in HI from 2001 to 2021; Table S2: Top 10 most productive institutions of AI in HI from 2001 to 2021; Table S3: Top 10 most productive authors of AI in HI from 2001 to 2021; Table S4: Top 10 journals publishing research on AI in HI from 2001 to 2021; Table S5: Top 10 most frequently used keywords for methods and cancer types in publications of AI in HI.

Author Contributions

Conceptualization, W.Z.; data curation, W.Z.; methodology, W.Z. and Z.D.; software, W.Z. and Z.D.; validation, Z.D. and Y.L.; writing—original draft preparation, W.Z.; writing—review and editing, Z.D., Y.L. and H.S.; supervision, H.X. and H.D.; funding acquisition, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the Central Universities of Central South University grant number 1053320211619.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors acknowledge the developers of the VOSviewer and CiteSpace bibliometric analysis tools.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Mao, J.J.; Pillai, G.G.; Andrade, C.J.; Ligibel, J.A.; Basu, P.; Cohen, L.; Khan, I.A.; Mustian, K.M.; Puthiyedath, R.; Dhiman, K.S. Integrative oncology: Addressing the global challenges of cancer prevention and treatment. CA Cancer J. Clin. 2022, 72, 144–164. [Google Scholar] [CrossRef] [PubMed]

- Underwood, J.C. More than meets the eye: The changing face of histopathology. Histopathology 2017, 70, 4–9. [Google Scholar] [CrossRef] [PubMed]

- Zhang, C.; Lu, Y. Study on artificial intelligence: The state of the art and future prospects. J. Ind. Inf. Integr. 2021, 23, 100224. [Google Scholar] [CrossRef]

- Wang, K.S.; Yu, G.; Xu, C.; Meng, X.H.; Zhou, J.; Zheng, C.; Deng, Z.; Shang, L.; Liu, R.; Su, S.; et al. Accurate diagnosis of colorectal cancer based on histopathology images using artificial intelligence. BMC Med. 2021, 19, 76. [Google Scholar] [CrossRef] [PubMed]

- Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A Dataset for Breast Cancer Histopathological Image Classification. IEEE Trans. Biomed. Eng. 2016, 63, 1455–1462. [Google Scholar] [CrossRef]

- Chen, M.Y.; Zhang, B.; Topatana, W.; Cao, J.S.; Zhu, H.P.; Juengpanich, S.; Mao, Q.J.; Yu, H.; Cai, X.J. Classification and mutation prediction based on histopathology H&E images in liver cancer using deep learning. NPJ Precis. Oncol. 2020, 4, 14. [Google Scholar] [CrossRef]

- Coudray, N.; Ocampo, P.S.; Sakellaropoulos, T.; Narula, N.; Snuderl, M.; Fenyo, D.; Moreira, A.L.; Razavian, N.; Tsirigos, A. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat. Med. 2018, 24, 1559–1567. [Google Scholar] [CrossRef]

- Huang, J.J.; Wang, T.; Zheng, D.Q.; He, Y.J. Nucleus segmentation of cervical cytology images based on multi-scale fuzzy clustering algorithm. Bioengineered 2020, 11, 484–501.

- Zhao, J.; Dai, L.; Zhang, M.; Yu, F.; Li, M.; Li, H.F.; Wang, W.J.; Zhang, L. PGU-net plus: Progressive Growing of U-net plus for Automated Cervical Nuclei Segmentation. In Proceedings of the 1st International Workshop on Multiscale Multimodal Medical Imaging (MMMI), Shenzhen, China, 13 October 2019; pp. 51–58.

- Wang, X.D.; Chen, Y.; Gao, Y.S.; Zhang, H.Q.; Guan, Z.H.; Dong, Z.; Zheng, Y.X.; Jiang, J.R.; Yang, H.Q.; Wang, L.M.; et al. Predicting gastric cancer outcome from resected lymph node histopathology images using deep learning. Nat. Commun. 2021, 12, 1637.

- Wulczyn, E.; Steiner, D.F.; Moran, M.; Plass, M.; Reihs, R.; Tan, F.; Flament-Auvigne, I.; Brown, T.; Regitnig, P.; Chen, P.H.C.; et al. Interpretable survival prediction for colorectal cancer using deep learning. NPJ Digit. Med. 2021, 4, 71.

- Ahmad, Z.; Rahim, S.; Zubair, M.; Abdul-Ghafar, J. Artificial intelligence (AI) in medicine, current applications and future role with special emphasis on its potential and promise in pathology: Present and future impact, obstacles including costs and acceptance among pathologists, practical and philosophical considerations. A comprehensive review. Diagn. Pathol. 2021, 16, 24.

- Försch, S.; Klauschen, F.; Hufnagl, P.; Roth, W. Artificial intelligence in pathology. Dtsch. Ärzteblatt Int. 2021, 118, 199.

- Steiner, D.F.; Nagpal, K.; Sayres, R.; Foote, D.J.; Wedin, B.D.; Pearce, A.; Cai, C.J.; Winter, S.R.; Symonds, M.; Yatziv, L. Evaluation of the use of combined artificial intelligence and pathologist assessment to review and grade prostate biopsies. JAMA Netw. Open 2020, 3, e2023267.

- Donthu, N.; Kumar, S.; Mukherjee, D.; Pandey, N.; Lim, W.M. How to conduct a bibliometric analysis: An overview and guidelines. J. Bus. Res. 2021, 133, 285–296.

- Duchene, D.A.; Lotan, Y.; Cadeddu, J.A.; Sagalowsky, A.I.; Koeneman, K.S. Histopathology of surgically managed renal tumors: Analysis of a contemporary series. Urology 2003, 62, 827–830.

- Colling, R.; Pitman, H.; Oien, K.; Rajpoot, N.; Macklin, P.; CM-Path AI in Histopathology Working Group; Bachtiar, V.; Booth, R.; Bryant, A.; Bull, J. Artificial intelligence in digital pathology: A roadmap to routine use in clinical practice. J. Pathol. 2019, 249, 143–150.

- Sultan, A.S.; Elgharib, M.A.; Tavares, T.; Jessri, M.; Basile, J.R. The use of artificial intelligence, machine learning and deep learning in oncologic histopathology. J. Oral Pathol. Med. 2020, 49, 849–856.

- Kayser, K.; Görtler, J.; Bogovac, M.; Bogovac, A.; Goldmann, T.; Vollmer, E.; Kayser, G. AI (artificial intelligence) in histopathology—From image analysis to automated diagnosis. Folia Histochem. Cytobiol. 2009, 47, 355–361.

- Khairi, S.S.M.; Abu Bakar, M.A.; Alias, M.A.; Abu Bakar, S.; Liong, C.Y.; Rosli, N.; Farid, M. Deep Learning on Histopathology Images for Breast Cancer Classification: A Bibliometric Analysis. Healthcare 2022, 10, 10.

- Lin, T.; Shen, Z.; Wu, H.; Chen, Z.; Hu, J.; Pan, J.; Kong, J. The global research of artificial intelligence on prostate cancer: A 22-year bibliometric analysis. Front. Oncol. 2022, 12, 843735.

- Trujillo, C.M.; Long, T.M. Document co-citation analysis to enhance transdisciplinary research. Sci. Adv. 2018, 4, e1701130.

- van Eck, N.J.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538.

- Chen, C. Searching for intellectual turning points: Progressive knowledge domain visualization. Proc. Natl. Acad. Sci. USA 2004, 101, 5303–5310.

- Rawashdeh, M.; Ralescu, A. Center-wise intra-inter silhouettes. In Proceedings of the International Conference on Scalable Uncertainty Management, Marburg, Germany, 17–19 September 2012; pp. 406–419.

- Muff, S.; Rao, F.; Caflisch, A. Local modularity measure for network clusterizations. Phys. Rev. E 2005, 72, 056107.

- Chen, C.; Leydesdorff, L. Patterns of connections and movements in dual-map overlays: A new method of publication portfolio analysis. J. Assoc. Inf. Sci. Technol. 2014, 65, 334–351.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Tourassi, G.D.; Markey, M.K.; Lo, J.Y.; Floyd, C.E., Jr. A neural network approach to breast cancer diagnosis as a constraint satisfaction problem. Med. Phys. 2001, 28, 804–811.

- Wang, Y.; Crookes, D.; Eldin, O.S.; Wang, S.; Hamilton, P.; Diamond, J. Assisted diagnosis of cervical intraepithelial neoplasia (CIN). IEEE J. Sel. Top. Signal Processing 2009, 3, 112–121.

- Kothari, S.; Phan, J.H.; Stokes, T.H.; Wang, M.D. Pathology imaging informatics for quantitative analysis of whole-slide images. J. Am. Med. Inf. Assoc. 2013, 20, 1099–1108.

- Mobadersany, P.; Yousefi, S.; Amgad, M.; Gutman, D.A.; Barnholtz-Sloan, J.S.; Vega, J.E.V.; Brat, D.J.; Cooper, L.A.D. Predicting cancer outcomes from histology and genomics using convolutional networks. Proc. Natl. Acad. Sci. USA 2018, 115, E2970–E2979.

- Saltz, J.; Gupta, R.; Hou, L.; Kurc, T.; Singh, P.; Nguyen, V.; Samaras, D.; Shroyer, K.R.; Zhao, T.H.; Batiste, R.; et al. Spatial Organization and Molecular Correlation of Tumor-Infiltrating Lymphocytes Using Deep Learning on Pathology Images. Cell Rep. 2018, 23, 181–193.

- Sirinukunwattana, K.; Raza, S.E.A.; Tsang, Y.W.; Snead, D.R.J.; Cree, I.A.; Rajpoot, N.M. Locality Sensitive Deep Learning for Detection and Classification of Nuclei in Routine Colon Cancer Histology Images. IEEE Trans. Med. Imaging 2016, 35, 1196–1206.

- Graham, S.; Vu, Q.D.; Raza, S.E.A.; Azam, A.; Tsang, Y.W.; Kwak, J.T.; Rajpoot, N. Hover-Net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images. Med. Image Anal. 2019, 58, 101563.

- Qaiser, T.; Sirinukunwattana, K.; Nakane, K.; Tsang, Y.W.; Epstein, D.; Rajpoot, N. Persistent Homology for Fast Tumor Segmentation in Whole Slide Histology Images. In Proceedings of the 20th Conference on Medical Image Understanding and Analysis (MIUA), Loughborough Univ, Loughborough, UK, 6–8 July 2016; pp. 119–124.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Agner, S.C.; Rosen, M.A.; Englander, S.; Tomaszewski, J.E.; Feldman, M.D.; Zhang, P.; Mies, C.; Schnall, M.D.; Madabhushi, A. Computerized image analysis for identifying triple-negative breast cancers and differentiating them from other molecular subtypes of breast cancer on dynamic contrast-enhanced MR images: A feasibility study. Radiology 2014, 272, 91.

- Wang, H.; Roa, A.C.; Basavanhally, A.N.; Gilmore, H.L.; Shih, N.; Feldman, M.; Tomaszewski, J.; Gonzalez, F.; Madabhushi, A. Mitosis detection in breast cancer pathology images by combining handcrafted and convolutional neural network features. J. Med. Imaging 2014, 1, 034003.

- Xu, J.; Xiang, L.; Liu, Q.; Gilmore, H.; Wu, J.; Tang, J.; Madabhushi, A. Stacked sparse autoencoder (SSAE) for nuclei detection on breast cancer histopathology images. IEEE Trans. Med. Imaging 2015, 35, 119–130.

- Basavanhally, A.; Ganesan, S.; Shih, N.; Mies, C.; Feldman, M.; Tomaszewski, J.; Madabhushi, A. A boosted classifier for integrating multiple fields of view: Breast cancer grading in histopathology. In Proceedings of the 2011 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Chicago, IL, USA, 30 March–2 April 2011; pp. 125–128.

- Faust, O.; Hagiwara, Y.; Hong, T.J.; Lih, O.S.; Acharya, U.R. Deep learning for healthcare applications based on physiological signals: A review. Comput. Methods Programs Biomed. 2018, 161, 1–13.

- Xia, C.F.; Dong, X.S.; Li, H.; Cao, M.M.; Sun, D.A.Q.; He, S.Y.; Yang, F.; Yan, X.X.; Zhang, S.L.; Li, N.; et al. Cancer statistics in China and United States, 2022: Profiles, trends, and determinants. Chin. Med. J. 2022, 135, 584–590.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Chen, C.M.; Ibekwe-SanJuan, F.; Hou, J.H. The Structure and Dynamics of Cocitation Clusters: A Multiple-Perspective Cocitation Analysis. J. Am. Soc. Inf. Sci. Technol. 2010, 61, 1386–1409.

- Guzmán, M.V.; Chen, C. CiteSpace: A Practical Guide for Mapping Scientific Literature; Nova Science: Hauppauge, NY, USA, 2016; 169p, ISBN 978-1-53610-280-2/978-1-53610-295-6.

- Naik, S.; Doyle, S.; Agner, S.; Madabhushi, A.; Feldman, M.; Tomaszewski, J. Automated gland and nuclei segmentation for grading of prostate and breast cancer histopathology. In Proceedings of the 2008 5th IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Paris, France, 14–17 May 2008; pp. 284–287.

- Al-Kofahi, Y.; Lassoued, W.; Lee, W.; Roysam, B. Improved automatic detection and segmentation of cell nuclei in histopathology images. IEEE Trans. Biomed. Eng. 2009, 57, 841–852.

- Sertel, O.; Kong, J.; Lozanski, G.; Shana’ah, A.; Catalyurek, U.; Saltz, J.; Gurcan, M. Texture classification using nonlinear color quantization: Application to histopathological image analysis. In Proceedings of the 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; pp. 597–600.

- Sertel, O.; Kong, J.; Catalyurek, U.V.; Lozanski, G.; Saltz, J.H.; Gurcan, M.N. Histopathological image analysis using model-based intermediate representations and color texture: Follicular lymphoma grading. J. Signal Processing Syst. 2009, 55, 169–183.

- Srinidhi, C.L.; Kim, S.W.; Chen, F.D.; Martel, A.L. Self-supervised driven consistency training for annotation efficient histopathology image analysis. Med. Image Anal. 2022, 75, 102256.

- Mahapatra, D.; Kuanar, S.; Bozorgtabar, B.; Ge, Z.Y. Self-supervised Learning of Inter-label Geometric Relationships for Gleason Grade Segmentation. In Proceedings of the 3rd MICCAI Workshop on Domain Adaptation and Representation Transfer (DART), Strasbourg, France, 27 September–1 October 2021; pp. 57–67.

- Li, C.; Xue, D.; Kong, F.J.; Hu, Z.J.; Chen, H.; Yao, Y.D.; Sun, H.Z.; Zhang, L.; Zhang, J.P.; Jiang, T.; et al. Cervical Histopathology Image Classification Using Ensembled Transfer Learning. In Proceedings of the 7th International Conference on Information Technology in Biomedicine (ITIB), Kamien Slaski, Poland, 17–19 June 2019; pp. 26–37.

- Buddhavarapu, V.G.; Jothi, J.A.A. An experimental study on classification of thyroid histopathology images using transfer learning. Pattern Recognit. Lett. 2020, 140, 1–9.

- Bejnordi, B.E.; Veta, M.; van Diest, P.J.; van Ginneken, B.; Karssemeijer, N.; Litjens, G.; van der Laak, J.; CAMELYON16 Consortium. Diagnostic Assessment of Deep Learning Algorithms for Detection of Lymph Node Metastases in Women with Breast Cancer. JAMA 2017, 318, 2199–2210.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Janowczyk, A.; Madabhushi, A. Deep learning for digital pathology image analysis: A comprehensive tutorial with selected use cases. J. Pathol. Inform. 2016, 7, 29.

- Cireşan, D.C.; Giusti, A.; Gambardella, L.M.; Schmidhuber, J. Mitosis detection in breast cancer histology images with deep neural networks. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Nagoya, Japan, 22–26 September 2013; pp. 411–418.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25: 26th Annual Conference on Neural Information Processing Systems 2012, Lake Tahoe, NV, USA, 3–6 December 2012.

- Althnian, A.; AlSaeed, D.; Al-Baity, H.; Samha, A.; Bin Dris, A.; Alzakari, N.; Abou Elwafa, A.; Kurdi, H. Impact of Dataset Size on Classification Performance: An Empirical Evaluation in the Medical Domain. Appl. Sci. 2021, 11, 796.

- Thian, Y.L.; Ng, D.W.; Hallinan, J.; Jagmohan, P.; Sia, S.Y.; Mohamed, J.S.A.; Quek, S.T.; Feng, M.L. Effect of Training Data Volume on Performance of Convolutional Neural Network Pneumothorax Classifiers. J. Digit. Imaging 2022.

- Ben Hamida, A.; Devanne, M.; Weber, J.; Truntzer, C.; Derangere, V.; Ghiringhelli, F.; Forestier, G.; Wemmert, C. Deep learning for colon cancer histopathological images analysis. Comput. Biol. Med. 2021, 136, 104730.

- Kaczmarzyk, J.R.; Kurç, T.M.; Abousamra, S.; Gupta, R.R.; Saltz, J.H.; Koo, P.K. Evaluating histopathology transfer learning with ChampKit. arXiv 2022, arXiv:2206.06862.

- Zheng, Y.; Li, C.; Zhou, X.; Chen, H.; Xu, H.; Li, Y.; Zhang, H.; Li, X.; Sun, H.; Huang, X.; et al. Application of Transfer Learning and Ensemble Learning in Image-level Classification for Breast Histopathology. arXiv 2022, arXiv:2204.08311.

- Schirris, Y.; Gavves, E.; Nederlof, I.; Horlings, H.M.; Teuwen, J. DeepSMILE: Contrastive self-supervised pre-training benefits MSI and HRD classification directly from H&E whole-slide images in colorectal and breast cancer. Med. Image Anal. 2022, 79, 102464.

- Mahapatra, D.; Poellinger, A.; Shao, L.; Reyes, M. Interpretability-Driven Sample Selection Using Self Supervised Learning for Disease Classification and Segmentation. IEEE Trans. Med. Imaging 2021, 40, 2548–2562.

- Bulten, W.; Bandi, P.; Hoven, J.; van de Loo, R.; Lotz, J.; Weiss, N.; van der Laak, J.; van Ginneken, B.; Hulsbergen-van de Kaa, C.; Litjens, G. Epithelium segmentation using deep learning in H&E-stained prostate specimens with immunohistochemistry as reference standard. Sci. Rep. 2019, 9, 864.

- Tellez, D.; Balkenhol, M.; Otte-Holler, I.; van de Loo, R.; Vogels, R.; Bult, P.; Wauters, C.; Vreuls, W.; Mol, S.; Karssemeijer, N.; et al. Whole-Slide Mitosis Detection in H&E Breast Histology Using PHH3 as a Reference to Train Distilled Stain-Invariant Convolutional Networks. IEEE Trans. Med. Imaging 2018, 37, 2126–2136.

- Jackson, C.R.; Sriharan, A.; Vaickus, L.J. A machine learning algorithm for simulating immunohistochemistry: Development of SOX10 virtual IHC and evaluation on primarily melanocytic neoplasms. Mod. Pathol. 2020, 33, 1638–1648.

- Elston, C.W.; Ellis, I.O. Pathological prognostic factors in breast cancer. I. The value of histological grade in breast cancer: Experience from a large study with long-term follow-up. Histopathology 1991, 19, 403–410.

- Stierer, M.; Rosen, H.; Weber, R. Nuclear pleomorphism, a strong prognostic factor in axillary node-negative small invasive breast cancer. Breast Cancer Res. Treat. 1992, 20, 109–116.

- Brieu, N.; Pauly, O.; Zimmermann, J.; Binnig, G.; Schmidt, G. Slide-Specific Models for Segmentation of Differently Stained Digital Histopathology Whole Slide Images. In Proceedings of the Conference on Medical Imaging: Image Processing, San Diego, CA, USA, 1–3 March 2016.

- Feng, Y.Q.; Zhang, L.; Yi, Z. Breast cancer cell nuclei classification in histopathology images using deep neural networks. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 179–191.

- Xing, F.; Yang, L. Robust nucleus/cell detection and segmentation in digital pathology and microscopy images: A comprehensive review. IEEE Rev. Biomed. Eng. 2016, 9, 234–263.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Kumar, N.; Verma, R.; Anand, D.; Zhou, Y.; Onder, O.F.; Tsougenis, E.; Chen, H.; Heng, P.-A.; Li, J.; Hu, Z. A multi-organ nucleus segmentation challenge. IEEE Trans. Med. Imaging 2019, 39, 1380–1391.

- Gamper, J.; Koohbanani, N.A.; Benes, K.; Graham, S.; Jahanifar, M.; Khurram, S.A.; Azam, A.; Hewitt, K.; Rajpoot, N. PanNuke dataset extension, insights and baselines. arXiv 2020, arXiv:2003.10778.

- Veeling, B.S.; Linmans, J.; Winkens, J.; Cohen, T.; Welling, M. Rotation equivariant CNNs for digital pathology. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 16–20 September 2018; pp. 210–218.

- Torrey, L.; Shavlik, J. Transfer learning. In Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques; IGI Global: Hershey, PA, USA, 2010; pp. 242–264.

- Kieffer, B.; Babaie, M.; Kalra, S.; Tizhoosh, H.R. Convolutional neural networks for histopathology image classification: Training vs using pre-trained networks. In Proceedings of the 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), Montreal, QC, Canada, 28 November–1 December 2017; pp. 1–6.

- Celik, Y.; Talo, M.; Yildirim, O.; Karabatak, M.; Acharya, U.R. Automated invasive ductal carcinoma detection based using deep transfer learning with whole-slide images. Pattern Recognit. Lett. 2020, 133, 232–239.

- Talo, M. Automated classification of histopathology images using transfer learning. Artif. Intell. Med. 2019, 101, 101743.

- Kora, P.; Ooi, C.P.; Faust, O.; Raghavendra, U.; Gudigar, A.; Chan, W.Y.; Meenakshi, K.; Swaraja, K.; Plawiak, P.; Acharya, U.R. Transfer learning techniques for medical image analysis: A review. Biocybern. Biomed. Eng. 2022, 42, 79–107.

- Wang, P.; Li, P.F.; Li, Y.M.; Wang, J.X.; Xu, J. Histopathological image classification based on cross-domain deep transferred feature fusion. Biomed. Signal Process. Control 2021, 68, 102705.

- Zoetmulder, R.; Gavves, E.; Caan, M.; Marquering, H. Domain- and task-specific transfer learning for medical segmentation tasks. Comput. Methods Programs Biomed. 2022, 214, 106539.

- Shurrab, S.; Duwairi, R. Self-supervised learning methods and applications in medical imaging analysis: A survey. arXiv 2021, arXiv:2109.08685.

- Ohri, K.; Kumar, M. Review on self-supervised image recognition using deep neural networks. Knowl.-Based Syst. 2021, 224, 107090.

- Koohbanani, N.A.; Unnikrishnan, B.; Khurram, S.A.; Krishnaswamy, P.; Rajpoot, N. Self-Path: Self-Supervision for Classification of Pathology Images With Limited Annotations. IEEE Trans. Med. Imaging 2021, 40, 2845–2856.

- Yang, P.; Hong, Z.; Yin, X.; Zhu, C.; Jiang, R. Self-supervised visual representation learning for histopathological images. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; pp. 47–57.

- Krishnan, R.; Rajpurkar, P.; Topol, E.J. Self-supervised learning in medicine and healthcare. Nat. Biomed. Eng. 2022.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).