1. Introduction

Information systems (IS) are key in modern societies [1]. They have grown over the years and underpin most of the technological services enjoyed daily by millions of citizens. Software development companies, which are increasingly numerous and have a significant impact on the Gross Domestic Product (GDP) of countries [2], struggle to stand out in a very competitive market. The key to their commercial success lies in developing quality software products that meet users’ needs, solve their problems, and are familiar to a large majority of people [3,4].

ISs are present in many areas of everyday life, such as healthcare, banking, and e-commerce. Among them, the health sector handles large budgets each year, with estimates of over $100 million for the largest institutions [5]. Given the size and complexity of healthcare systems, it is essential that citizens use them responsibly in order to achieve economic sustainability [6]. Along these lines, personal health records (PHRs) can promote efficient healthcare use, as they allow patients to follow the evolution of their diseases at all times. These systems store medical data that users can consult with an electronic device. Although PHRs are not widely known, several studies indicate a remarkable willingness on the part of patients to share their data with their doctors [7]. PHRs can be directly connected to hospital facilities, giving rise to tethered systems. They can also be found as standalone applications, on both cell phones and PCs, which connect to a server that provides access to the data. They are usually web-based, and users access their profile with an ID and password to update their medical data. These systems can be free or paid. Among web-based PHRs, Kaiser Permanente stands out, with 12.5 million users served in 2022 (http://about.kaiserpermanente.org/who-we-are, accessed on 27 May 2022).

Convenience in the use of IS is key to meeting the functional demands of users [8]. In this vein, usability has been identified as one of the most important factors determining the success of software applications [9]. Designing software systems correctly is a challenge for software companies seeking to make their products widely known in the market. It is essential that usability aspects are taken into account when testing applications, so that flaws can be detected and corrected before the applications are launched on the market.

Usability evaluations are relevant when developing software applications because they allow one to detect the inconveniences and small annoyances that commonly arise during use and that complicate understanding and operation. Among all the software quality factors, usability is the one that enables users to use systems comfortably [10]. Therefore, usability is important to boost the adoption of software applications [9]. In particular, it is recommended that the usability evaluation be adapted to the characteristics of the system under analysis, allowing one to delve into its specific aspects [11,12]. Computer-aided usability evaluation (CAUE) tools are proving very helpful for gathering more information and involving a greater number of auditors [13]. This type of system centralises the usability analysis of software systems and may provide auditors with usability requirements catalogues, instructions on how to evaluate usability, access credentials for the systems to be evaluated, and so on. With these tools, the evaluation procedure is clarified before the evaluation starts, and more accurate results may be produced.

This paper proposes a usability evaluation workflow for PHRs. The core of the method is the centralisation of tasks, using a CAUE tool developed by the authors called Usevalia. In addition, the following components were added to the workflow:

heuristics adapted so that the auditors agree on the usability concepts to be evaluated;

a set of tasks for learning how PHRs work; and

a set of usability requirements catalogues from which a checklist is derived, guiding the usability assessment process.

To better understand the strengths and weaknesses of the framework, the Method Evaluation Model (MEM), adapted to PHRs, was applied. MEM [14] is a theoretical model for evaluating IS design methods. It incorporates two dimensions: actual effectiveness and adoption in practice. Moreover, it combines Rescher’s theory of methodological pragmatism, a theory for validating methodological knowledge [15], with the Technology Acceptance Model (TAM) [16], a theoretical model for explaining and predicting user acceptance of IT.

MEM is appropriate for assessing whether an IS will be used, and it can be adapted to a particular system. In the case of PHRs, practitioners’ perception of effectiveness was considered much more important than adoption in practice. For this purpose, the perceived-effectiveness parameters were used to measure the effects on the decision to use the framework [16,17,18]. Only the perceived-effectiveness part of MEM had previously been hypothesised in the literature, based on TAM and the Theory of Reasoned Action (TRA).

TRA is based on beliefs that influence attitudes, which in turn lead to intentions and, finally, guide or generate behaviours. TAM adapts this relationship between belief, attitude, intention, and behaviour, as it arises in the use of IT, into a model of user acceptance. The goal of TAM is to provide insights into the factors that determine technology acceptance. Moreover, it is capable of explaining user behaviour across a broad range of end-user computing technologies and user populations while remaining parsimonious and theoretically grounded [16]. In a literature review conducted by Hu et al. [19], TAM was considered a valuable and useful model for explaining and predicting user acceptance of IT, notably among university students and among executives in business organisations.

The usability evaluations were carried out with the proposed workflow. As a result, it was possible to analyse the usability of PHRs, shedding light on the importance of employing adapted and well-defined methods in usability evaluations on domain-specific ISs. The following research questions were proposed to organise the research:

This paper presents a novel workflow for usability evaluation. To the best of our knowledge, no other approach addresses both theoretical and practical perspectives at the same time. The theoretical merit of the workflow is that it provides auditors with detailed information on how to proceed with evaluations. In particular, auditors can consult a catalogue of usability requirements, to get a precise idea of how usability should be implemented in systems; usability heuristics specifically designed for e-Health systems, which allow for a deeper understanding of the specific usability aspects to be assessed in the audit; and a checklist that reinforces the usability concepts of each heuristic. In this case, the checklist consists of two questions for each heuristic. The practical merit of the workflow, and the core of the proposed approach, is the CAUE tool Usevalia. This tool provides the technological infrastructure for the usability evaluations and is available to auditors 24/7. It is an electronic information system that auditors use as a database for training and for documenting the usability evaluations to be carried out. With this workflow, auditors have all the materials needed to perform usability evaluations remotely, which makes it possible to reach auditors all over the world and to involve them by simplifying the evaluation tasks.

The paper is organised as follows. Section 2 describes the PHR selection protocol along with the proposed workflow for assessing the usability of PHR systems. Section 3 presents the results for the parameters that highlight the benefits of using the CAUE tool Usevalia, as well as the results of the usability evaluations of PHRs. In Section 4, both assessments are analysed; in particular, Usevalia and the PHRs are evaluated for a better understanding of the results. Finally, Section 5 presents some concluding remarks and proposes future work.

2. Materials and Methods

This section describes the components of the experiment. First, the PHR selection protocol is explained. Then, the heuristics used in the usability evaluations are described. A catalogue of usability requirements, which served to reinforce the evaluation process, is presented, and the checklist based on this catalogue and extracted from the literature is reviewed. In addition, a set of common tasks in PHRs is proposed to guide the evaluation. The Usevalia system is also described. Finally, a MEM based on TAM is introduced to expose the strengths and weaknesses of the whole workflow.

2.1. PHRs Search

The selection of the PHRs was based on a search protocol previously proposed in literature [

20]. The search string “PHR providers OR PHR website” was used in the following databases to search for PHRs: Medline, ACM Digital Library, IEEE Digital Library, and ScienceDirect. The manuscripts found were read in order to detect PHRs. Reporting quality guidelines established by the Preferred Reporting Items for Systematic reviews and Meta-Analysis (PRISMA) group were taken into account to make the search as accurate and unbiased as possible [

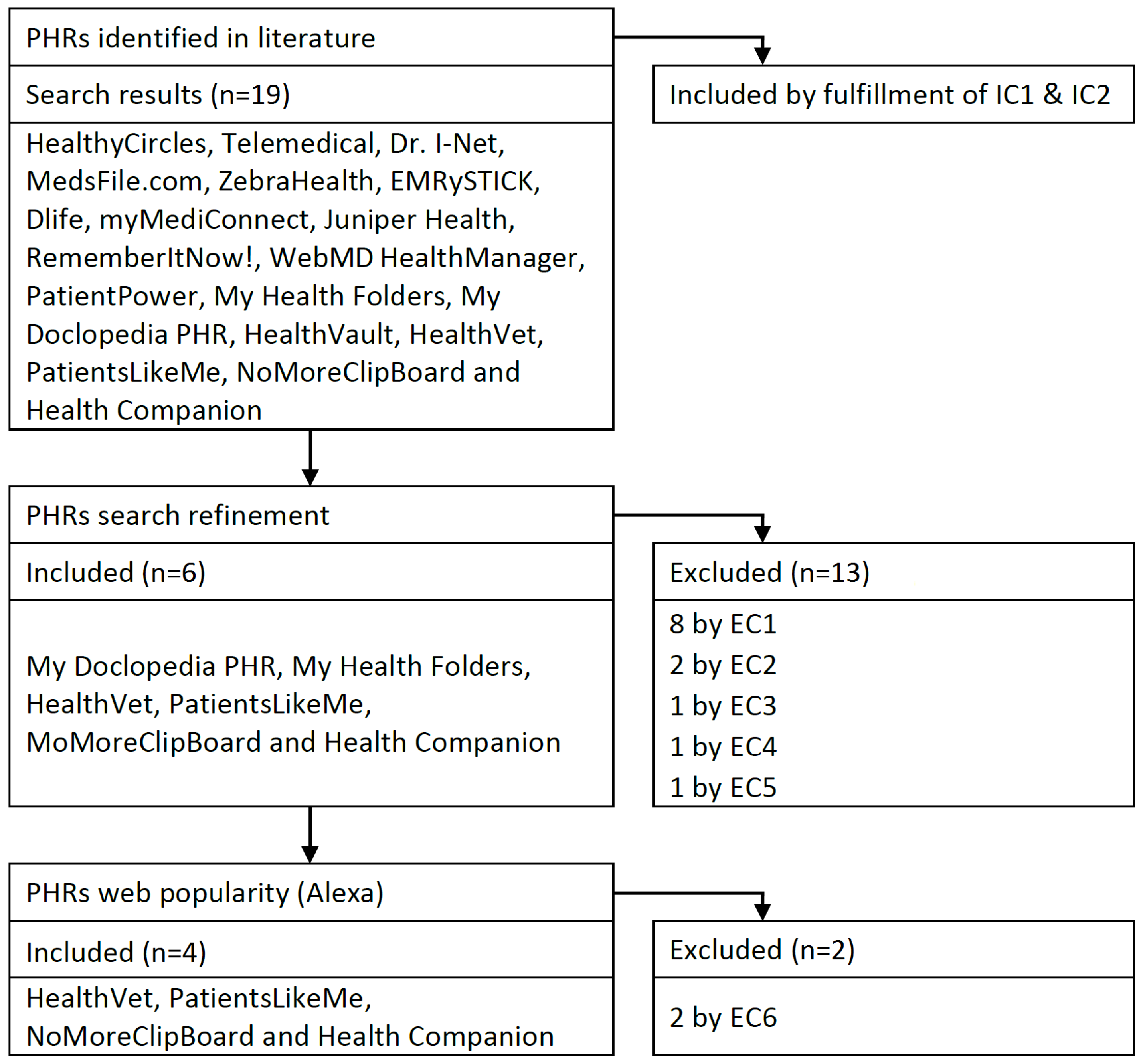

21]. The following inclusion criteria (IC) were taken into account: IC1, the PHR had to be free of charge and IC2, the PHR had to be available on the web. In total 19 PHRs were found.

A set of exclusion criteria (EC) was proposed in order to refine the search results: EC1, PHR not available; EC2, PHR not free; EC3, no login possible; EC4, PHR malfunctioning; EC5, PHR available only in the US; and EC6, low popularity. EC6 was applied by means of the Alexa tool (alexa.com/siteinfo, accessed on 27 May 2022), which ranks web portals according to their number of daily visits. Based on the popularity results observed for the web portals, a threshold of 10 million in the Alexa ranking was set to identify low-popularity websites. This service was withdrawn on 1 May 2022, but the popularity analysis was carried out before then.

MedsFile, EMRySTICK, Dlife, Healthy-Circles, Dr. I-Net, Telemedical, ZebraHealth, and HealthVault were excluded from the study by EC1; Juniper Health and myMediConnect by EC2; RememberItNow! by EC3; WebMD HealthManager by EC4; and PatientPower by EC5. Applying EC6 removed My Health Folders and My Doclopedia from the results.

Figure 1 summarises the entire process and shows the selected PHRs.
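The screening above can be sketched in Python as a simple filter. This is a minimal illustration, not the authors' procedure: the helper names (`screen`, `is_low_popularity`) are hypothetical, the exclusion assignments are those reported above, the four retained PHRs are assumed to be the ones discussed in the Conclusions, and EC6 assumes that an Alexa rank larger than 10 million means low popularity (a larger rank indicating fewer daily visits).

```python
# Sketch of the PHR screening in Section 2.1 (hypothetical helper names).
LOW_POPULARITY_RANK = 10_000_000  # EC6 threshold on the Alexa rank


def is_low_popularity(alexa_rank: int, threshold: int = LOW_POPULARITY_RANK) -> bool:
    """EC6, assuming a larger Alexa rank means fewer daily visits."""
    return alexa_rank > threshold


# First exclusion criterion met by each discarded PHR, as reported in the text.
EXCLUSIONS = {
    "MedsFile": "EC1", "EMRySTICK": "EC1", "Dlife": "EC1",
    "Healthy-Circles": "EC1", "Dr. I-Net": "EC1", "Telemedical": "EC1",
    "ZebraHealth": "EC1", "HealthVault": "EC1",
    "Juniper Health": "EC2", "myMediConnect": "EC2",
    "RememberItNow!": "EC3",
    "WebMD HealthManager": "EC4",
    "PatientPower": "EC5",
    "My Health Folders": "EC6", "My Doclopedia": "EC6",
}

# Assumption: the four PHRs discussed in the Conclusions are the ones retained.
RETAINED = ["HealthVet", "PatientsLikeMe", "NoMoreClipBoard", "Health Companion"]


def screen(candidates: list[str], exclusions: dict[str, str]) -> tuple[list[str], dict[str, int]]:
    """Return the PHRs that pass screening and the number excluded per criterion."""
    kept: list[str] = []
    per_criterion: dict[str, int] = {}
    for name in candidates:
        criterion = exclusions.get(name)
        if criterion is None:
            kept.append(name)
        else:
            per_criterion[criterion] = per_criterion.get(criterion, 0) + 1
    return kept, per_criterion


candidates = list(EXCLUSIONS) + RETAINED  # the 19 PHRs meeting IC1 and IC2
selected, per_criterion = screen(candidates, EXCLUSIONS)
print(len(candidates), selected)
print(per_criterion)
```

With the Alexa ranks reported in the Conclusions for the retained PHRs (from 2,907 to 2,463,289), `is_low_popularity` returns `False` for all four, which is consistent with them passing EC6.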

2.2. Usability Heuristics

Usability evaluations of IS can be carried out using different techniques, such as cognitive walkthrough, the think-aloud protocol, and expert evaluation. In this study, expert evaluation based on heuristics was employed [22].

Heuristics formed the basis of the evaluations. A wide variety of heuristics has been proposed in the literature, among which the 10 Principles of Design by Nielsen are well known (please visit nngroup.com/articles/ten-usability-heuristics, accessed on 27 May 2022) and have been used in a vast number of studies. These heuristics can be adapted when evaluating particular ISs such as PHRs. In this study, a collection of heuristics based on the 10 Principles of Design by Nielsen and specially designed for eHealth systems was employed [23]. They are as follows:

Match: ensure that the graphic components of the user interface match the users’ mental model;

Visibility: make it visible when the system is processing;

Memory: give users information on how to perform a task in the system;

Minimalist: display only the basic information and avoid unnecessary elements in the GUI;

Error: warn users of an error and provide information to solve it;

Consistency: make it easy to visually distinguish the different sections of the web page;

Control: allow users to undo or cancel any action taken in the system; and

Flexibility: keep past actions in a history log to allow their reuse.

A checklist was also used [23] to ensure that all auditors considered the same concepts in their evaluations. To this end, two mapping questions were posed for each heuristic. In addition, a catalogue of usability software requirements was used in this step. This catalogue was proposed by the authors of the paper and validated in previous studies [24]. It is available at https://docentis.inf.um.es/catalogue/ (accessed on 27 May 2022) (user and password: requisite; the results must be filtered to find the usability catalogue). The checklist is depicted in Table 1.

2.3. Tasks Performance

A collection of tasks was performed before proceeding with the usability evaluations. PHRs have health-specific functionalities with which the auditors had to become familiar. To this end, the tasks were related to the common uses of these ISs. Moreover, two user profiles, medical staff and patient, were taken into account to define the tasks in the evaluations more accurately [25,26]. The user profiles allowed us to simulate the mental model of the typical user when taking actions in the PHRs. Recommendations from the American Health Information Management Association (AHIMA) were also considered when proposing the tasks [27]. These considerations allowed a more complete evaluation of the PHRs.

Table 2 shows all the tasks carried out by the auditors before scoring each heuristic and going deeper into the assessments.

2.4. Usevalia Tool

Usevalia is an automated, heuristic inspection-based tool for usability audits. It supports multi-user audits, so a team can work simultaneously on the same audit. The usability audit process in Usevalia starts when the chief auditor logs in with a username and password. Once logged in, any user has two options: (1) define the application to be audited, and (2) define the catalogue of usability guidelines to be used in the audit. When selecting the web application to be evaluated, the user must fill in a form indicating the name, link address, web category, and description of the application. Next, the scoring scheme used to evaluate each usability guideline is defined. Scoring schemes can be created and deleted in the tool’s database, and the values of a scheme (e.g., high, medium, low) are specified when it is created. However, auditors are not allowed to delete a scoring scheme from the database. The teams of auditors participating in the usability audit are also managed within the tool by the chief auditor. When creating an audit team, the team name, the users who will play the auditor role, and a team description must be provided. An audit group associated with an audit cannot be deleted until that audit is closed.
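Two of the rules above (who may delete a scoring scheme, and when an audit group may be deleted) can be made concrete with a small data model. This is a hypothetical sketch, not Usevalia's code: the classes and functions are invented for illustration, and it assumes that the chief auditor is the role allowed to delete scoring schemes, which the text implies but does not state.

```python
# Hypothetical data model for two Usevalia rules described above; not the tool's code.
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    CHIEF_AUDITOR = "chief auditor"
    AUDITOR = "auditor"


@dataclass
class ScoringScheme:
    name: str
    values: list[str]  # e.g. ["high", "medium", "low"]


@dataclass
class AuditGroup:
    name: str
    description: str
    members: list[str]
    open_audits: set[str] = field(default_factory=set)  # audits not yet closed


def can_delete_scheme(role: Role) -> bool:
    # Auditors may not delete scoring schemes; the chief auditor is assumed to be able to.
    return role is Role.CHIEF_AUDITOR


def can_delete_group(group: AuditGroup) -> bool:
    # A group associated with an audit cannot be deleted until that audit is closed.
    return not group.open_audits


scheme = ScoringScheme("three-level", ["high", "medium", "low"])
team = AuditGroup("Team A", "PHR auditors", ["auditor1", "auditor2"], {"HealthVet audit"})
print(can_delete_scheme(Role.AUDITOR), can_delete_group(team))  # False False
team.open_audits.discard("HealthVet audit")  # the audit is closed
print(can_delete_group(team))  # True
```

Tracking open audits as a set on the group keeps the deletion check a constant-time test; a real tool would more likely query its database for unclosed audits referencing the group.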

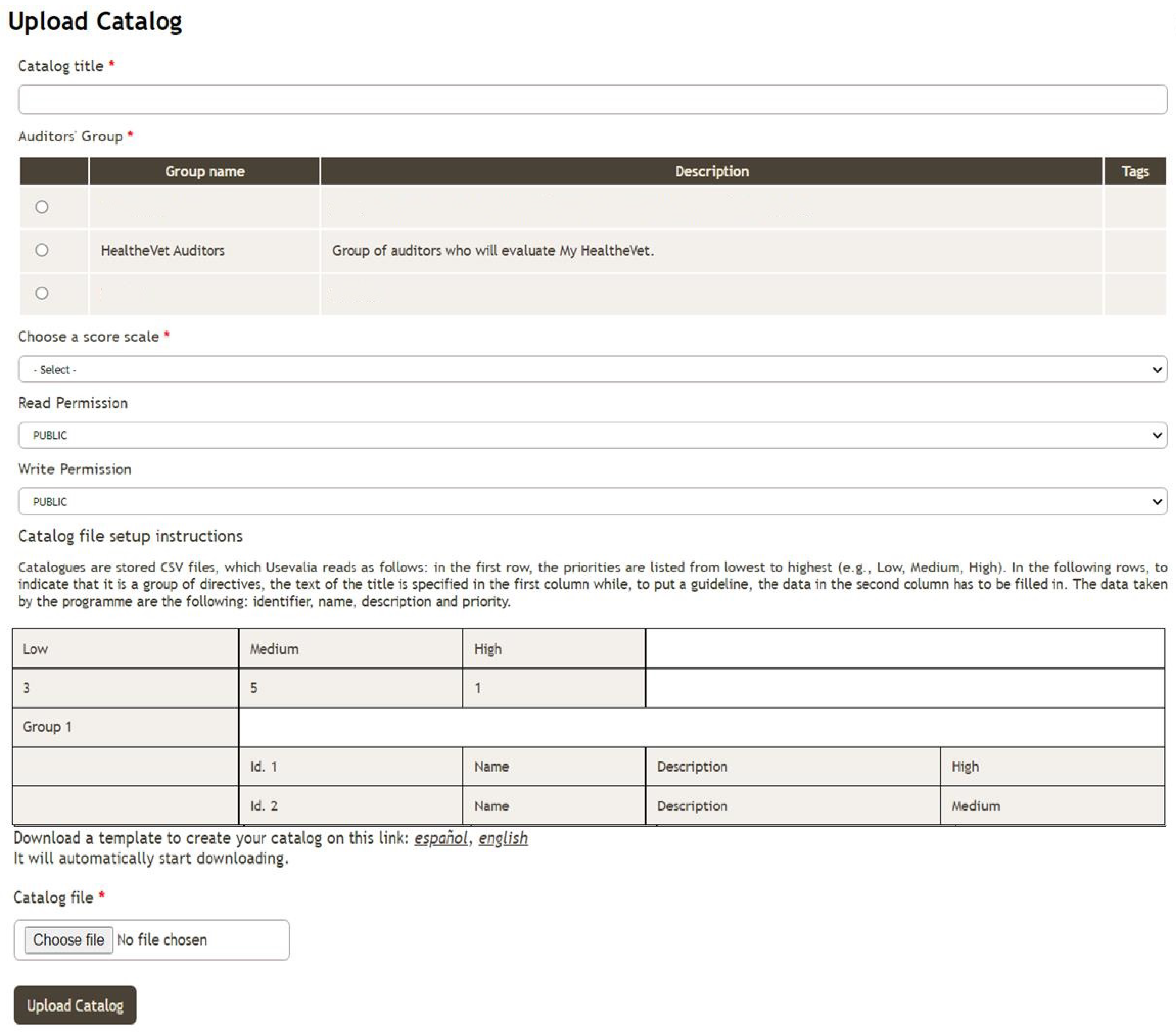

The chief auditor can import a catalogue to be used to assess the system under test in a new audit. A repository of templates can be accessed from the Upload/Download catalogue page to create a collection of guidelines or to reuse a previously defined catalogue (see Figure 2). The catalogues are stored in CSV files, which Usevalia reads as follows. The first row lists the priorities from lowest to highest, and the following rows contain the items of the catalogue: the first column holds the title, and the second column holds the guidelines. For each guideline, the following information is stored: identifier, name, description, and priority. Before uploading the catalogue, the catalogue’s title, the team to which it will belong, the scoring scheme, and the read/write permissions must be filled in on the form. Only the chief auditor can delete a catalogue from the application, and only when it is not being used in another audit. A catalogue employed in the usability evaluations of the PHRs can be downloaded in CSV format at http://umubox.um.es/index.php/s/IDWChOi6jy6uAyS (accessed on 27 May 2022).
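The catalogue description leaves the exact column layout open, so the following is only a sketch of how such a file could be read with Python's standard `csv` module. It assumes (this is not confirmed by the text) that each guideline row holds the item title followed by the guideline's identifier, name, description, and priority, in that order; the sample catalogue and the `parse_catalogue` helper are hypothetical.

```python
# Sketch of reading a guideline catalogue CSV under an ASSUMED column layout:
# row 1 lists the priorities from lowest to highest; every other row holds the item
# title followed by the guideline's identifier, name, description, and priority.
import csv
import io
from dataclasses import dataclass


@dataclass
class Guideline:
    item: str
    identifier: str
    name: str
    description: str
    priority: str


def parse_catalogue(text: str) -> tuple[list[str], list[Guideline]]:
    rows = [row for row in csv.reader(io.StringIO(text)) if row]  # skip blank lines
    priorities = [p.strip() for p in rows[0]]
    guidelines = []
    for row in rows[1:]:
        item, identifier, name, description, priority = (c.strip() for c in row[:5])
        if priority not in priorities:
            raise ValueError(f"unknown priority {priority!r} for guideline {identifier}")
        guidelines.append(Guideline(item, identifier, name, description, priority))
    return priorities, guidelines


# Hypothetical two-guideline catalogue; quoting lets a description contain commas.
SAMPLE = """low,medium,high
Visibility,V1,Progress indicator,"Show when the system is processing, e.g. a spinner",high
Error,E1,Error messages,Explain how to recover from an error,medium
"""
priorities, guidelines = parse_catalogue(SAMPLE)
rank = {p: i for i, p in enumerate(priorities)}  # position in row 1 = priority rank
most_urgent = max(guidelines, key=lambda g: rank[g.priority])
print(most_urgent.identifier)  # V1
```

Because the first row orders priorities from lowest to highest, a priority's position in that row can serve directly as a sortable rank, as in the last lines of the example.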

Subsequently, the chief auditor defines the type of audit (e.g., basic, standard, or task-based). Next, the auditor or team of auditors can start evaluating the application using the pre-loaded guideline catalogue, with each auditor performing the heuristic evaluation. When x days remain before the end of the audit, the system sends a reminder e-mail to the auditors performing the assessment so that they know the time limit is approaching. Once the audit expiration time has passed, the audit is closed automatically.
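The reminder and automatic-closing behaviour can be sketched as a check run once a day. This is a hypothetical illustration, not Usevalia's implementation: `x_days` stands for the unspecified "x days" of the text, the deadline is assumed to be the last day of the audit, and the reminder is assumed to be sent once, exactly `x_days` before the deadline.

```python
# Sketch of the deadline handling described above (hypothetical names; x_days is the
# unspecified "x days" of the text).
from dataclasses import dataclass
from datetime import date


@dataclass
class Audit:
    name: str
    deadline: date          # assumed to be the last day of the audit
    auditors: list[str]
    closed: bool = False


def daily_check(audit: Audit, today: date, x_days: int) -> list[str]:
    """Run once a day. Returns the auditors to e-mail; closes the audit once it expires."""
    if audit.closed:
        return []
    if today > audit.deadline:
        audit.closed = True  # expiration time over: close automatically
        return []
    if (audit.deadline - today).days == x_days:
        return list(audit.auditors)  # reminder sent once, exactly x days before the end
    return []


audit = Audit("HealthVet audit", date(2022, 1, 31), ["auditor1", "auditor2"])
print(daily_check(audit, date(2022, 1, 28), x_days=3))  # ['auditor1', 'auditor2']
print(daily_check(audit, date(2022, 2, 1), x_days=3), audit.closed)  # [] True
```

Comparing for equality rather than "at most x days left" avoids sending a reminder every day of the final stretch; a scheduler that might skip days would need a "reminder already sent" flag instead.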

Finally, Usevalia allows one to create, delete, evaluate, close, and reopen audits, and to generate and display audit statistics. Among the statistics displayed are the overall percentage of passed and failed guidelines in the audit, the number of auditors who marked each guideline as passed or failed, and the number of passed and failed guidelines at each priority level. Once the lead auditor has selected an audit for which to generate a report, they receive a link to download it. The report is a PDF file containing all the information related to the selected audit. The tool is available to the HCI community at https://giis.inf.um.es:446/drupal2/usevalia (accessed on 27 May 2022). It is worth noting that the Usevalia interface was first structured based on the authors’ experience in web design; its usability was then evaluated using the authors’ usability evaluation workflow, which provided some tips to improve the final design. In terms of user experience, Usevalia is a user-friendly system. It supports auditors in usability evaluations, as they can find all the information they need to carry out the audits, and its interface is simple and intuitive. In Usevalia, all options are always displayed in a column on the left side. This menu consists of the following sections: Apps, Auditor Groups, Scoring Scales, Catalogues, and Audits. In the first option, Apps, it is possible to create, edit, or delete the record of an application to be assessed. Each record stores basic information about the app: name, category, URL, and a brief description. The second option, Auditor Groups, stores the participants who will perform the audits; this information can be edited at any time. The third option, Scoring Scales, allows different scales to be created for scoring the heuristics considered in each usability evaluation. To guide the evaluation, usability catalogues can be consulted in the fourth option, Catalogues, along with the checklist. Finally, the Audits option allows one to manage the outcome of the audit work.

Figure 2 shows an example of the look and feel of Usevalia.
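The audit statistics listed above can be sketched from a table of auditor verdicts. The input format and the `audit_statistics` helper are hypothetical, and the sketch assumes that counts are taken over individual auditor verdicts (one per auditor and guideline); the tool may instead aggregate per guideline.

```python
# Sketch of the audit statistics described for Usevalia (hypothetical input format).
from collections import Counter


def audit_statistics(verdicts: dict[str, dict[str, bool]],
                     priority_of: dict[str, str]) -> dict:
    """verdicts[auditor][guideline_id] is True if that auditor marked the guideline passed.
    Counts are taken over individual auditor verdicts (an assumption)."""
    total: Counter = Counter()
    per_guideline: dict[str, Counter] = {}
    per_priority: dict[str, Counter] = {}
    for marks in verdicts.values():
        for gid, passed in marks.items():
            outcome = "passed" if passed else "failed"
            total[outcome] += 1
            per_guideline.setdefault(gid, Counter())[outcome] += 1
            per_priority.setdefault(priority_of[gid], Counter())[outcome] += 1
    n = sum(total.values())
    return {
        "pct_passed": 100 * total["passed"] / n if n else 0.0,
        "pct_failed": 100 * total["failed"] / n if n else 0.0,
        "per_guideline": per_guideline,  # auditors marking each guideline passed/failed
        "per_priority": per_priority,    # passed/failed verdicts per priority level
    }


stats = audit_statistics(
    {"auditor1": {"V1": True, "E1": False}, "auditor2": {"V1": True, "E1": True}},
    {"V1": "high", "E1": "medium"},
)
print(stats["pct_passed"], stats["pct_failed"])  # 75.0 25.0
print(stats["per_priority"]["medium"]["failed"])  # 1
```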

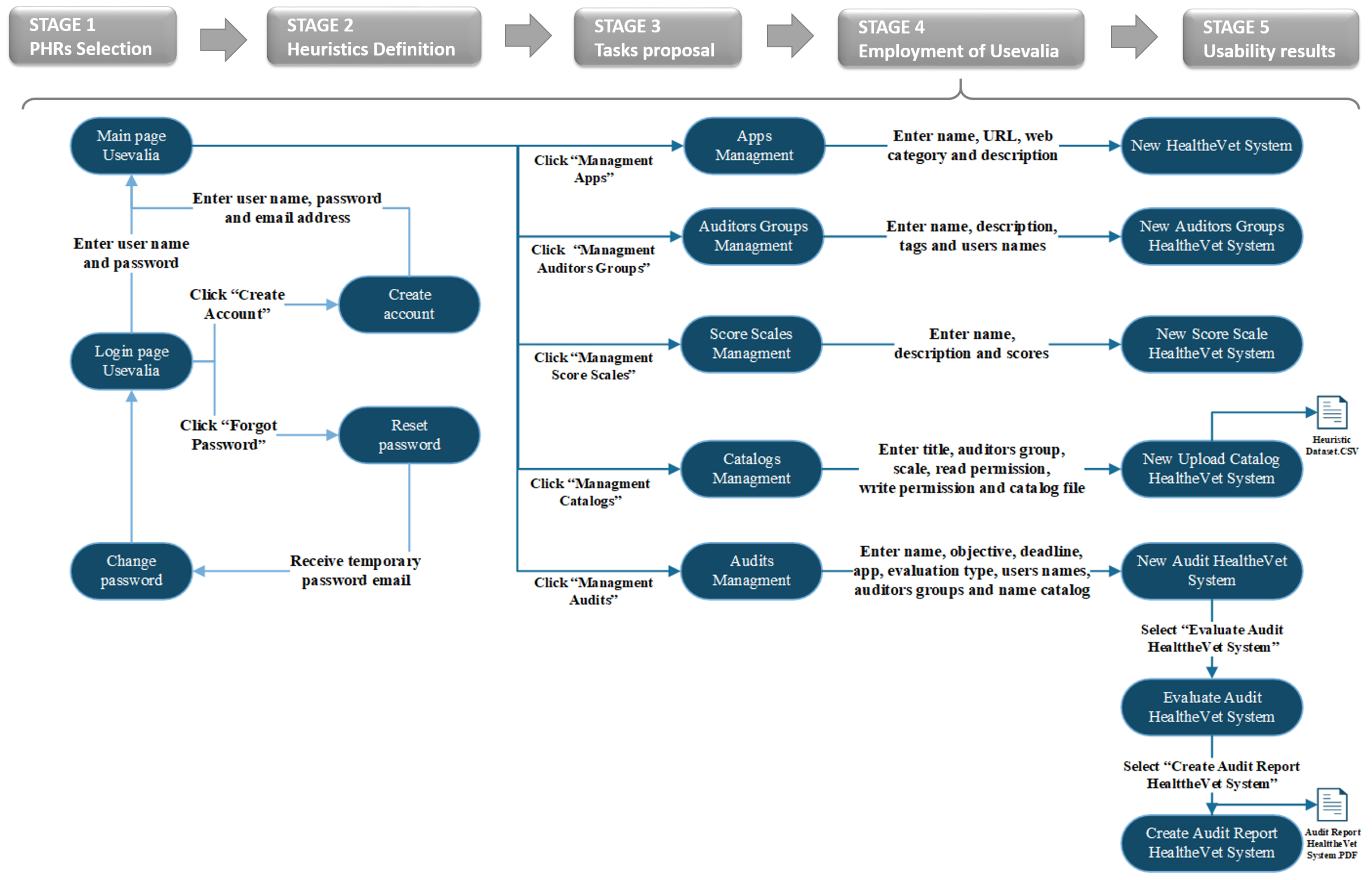

2.5. Usability Assessment Protocol

Usability evaluation is a complex task. A protocol has to be established to ensure that all auditors perform the evaluation under the same conditions [28]. The whole evaluation protocol consisted of the following items: (1) the Usevalia tool, (2) the adapted heuristics, (3) the performance of the proposed tasks, and (4) the evaluation of the PHRs based on the heuristics and the checklist, with the usability requirements catalogue available to the auditors. All this information was held in Usevalia, and the auditors could consult it at any time and from anywhere with an electronic device.

Figure 3 shows the whole workflow, illustrated with an audit of HealthVet using the CAUE tool Usevalia.

In this study, the audits were conducted with Usevalia by experts in usability, who had experience in user interface design and in software quality factors such as accessibility and usability. In particular, the work was carried out by students of the User Interfaces course, a subject taught in the first semester of the fourth year of the Degree in Computer Science at the University of Murcia. This subject deals with the design of graphical user interfaces from the perspective of software quality. Working in pairs, students chose the PHR to be evaluated from those presented in Section 2.1. The first step of each evaluation was to perform all the tasks proposed in Table 2. After a thorough examination of the PHR, each heuristic was scored on a Likert scale ranging from 1 (very poorly supported) to 5 (very well supported), with a value of 0 meaning not supported.
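As a sketch of how these scores can be aggregated per heuristic, the following computes the mean score of each heuristic over a set of evaluations and picks the highest-scoring one. The two evaluations are invented for illustration (they are not the study's data), the helper names are hypothetical, and a 0 ("not supported") is assumed to enter the mean as a score of 0.

```python
# Sketch: aggregating the 0-5 heuristic scores (hypothetical data and helper names).
from statistics import mean

HEURISTICS = ["Match", "Visibility", "Memory", "Minimalist",
              "Error", "Consistency", "Control", "Flexibility"]


def checked(score: int) -> int:
    """0 = not supported; 1 (very poorly) to 5 (very well supported)."""
    if not 0 <= score <= 5:
        raise ValueError(f"score out of range: {score}")
    return score


def heuristic_means(evaluations: list[dict[str, int]]) -> dict[str, float]:
    """Mean score per heuristic over all evaluations; a 0 counts as a score of 0."""
    return {h: mean(checked(e[h]) for e in evaluations) for h in HEURISTICS}


# Two hypothetical evaluations, one score per heuristic in HEURISTICS order.
evaluations = [
    dict(zip(HEURISTICS, [4, 3, 2, 3, 1, 4, 2, 0])),
    dict(zip(HEURISTICS, [5, 3, 3, 2, 2, 3, 1, 1])),
]
means = heuristic_means(evaluations)
best = max(means, key=means.get)
print(best, means[best])  # Match 4.5
```

Whether 0 should count as a score or be treated as missing changes the means; the text does not specify this, so the choice here is only one reasonable option.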

2.6. Method Evaluation Model (MEM)

In this paper, a total of 7 variables were defined to assess the experience of employing Usevalia. These variables were determined using MEM [

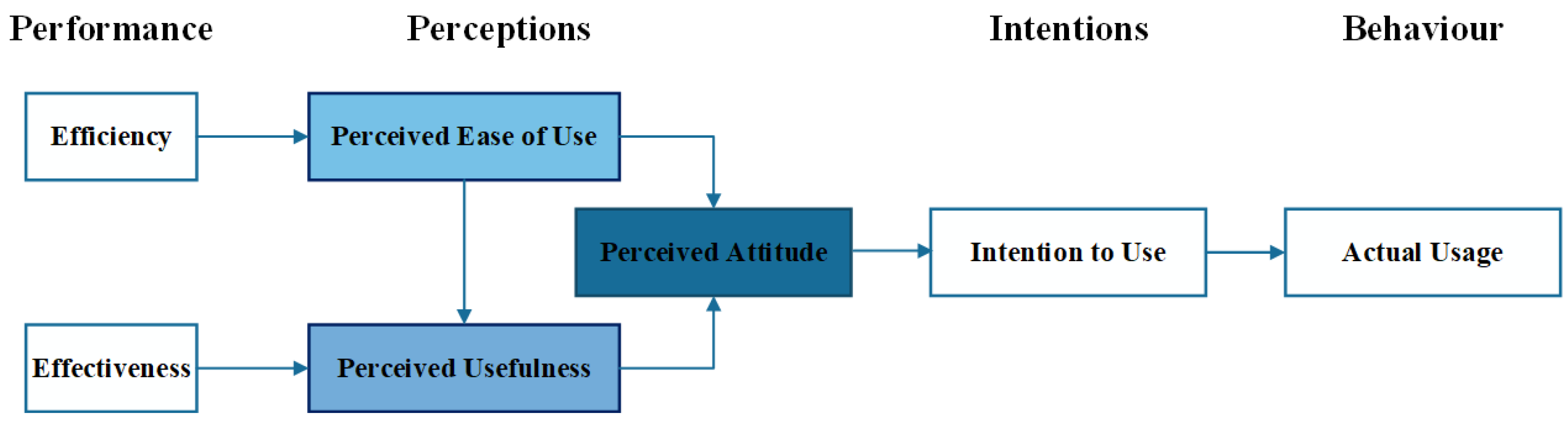

17], which was adapted to assess the skills and knowledge of auditors to perform usability audits with the CAUE tool. The variables were classified into 4 types: (1) performance-based variables, which measure how well subjects can use a tool to conduct usability audits in the PHR realm (i.e., effectiveness and productivity); (2) perception-based variables, which measure the perceived usefulness (PU), perceived ease of use (PEU), and perceived attitude (PA) that subjects believe a usability audit tool has; (3) intention-based variable, which measure the perceived intention of auditors to conduct audits with Usevalia (i.e., intention to use), and (4) behaviour-based variable, which measures the actual usage of Usevalia in the PHR audits (i.e., actual usage).

Figure 4 depicts MEM adapted to the audit of PHRs based on Usevalia and TAM, both of which are employed in the study. It is a reduced TAM that excludes the behaviour-based and performance-based variables. The behaviour-based variable (i.e., actual usage) is not evaluated because several prior empirical studies have reported a strong, significant causal link between intention to use and actual behaviour [17,19,29,30]. It is hypothesised that the degree to which auditors perceived Usevalia as easy to use affected both their perception of the tool’s usefulness and their attitude toward using it in general. Attitude is, in turn, also influenced by the tool’s usefulness as perceived by the auditors.

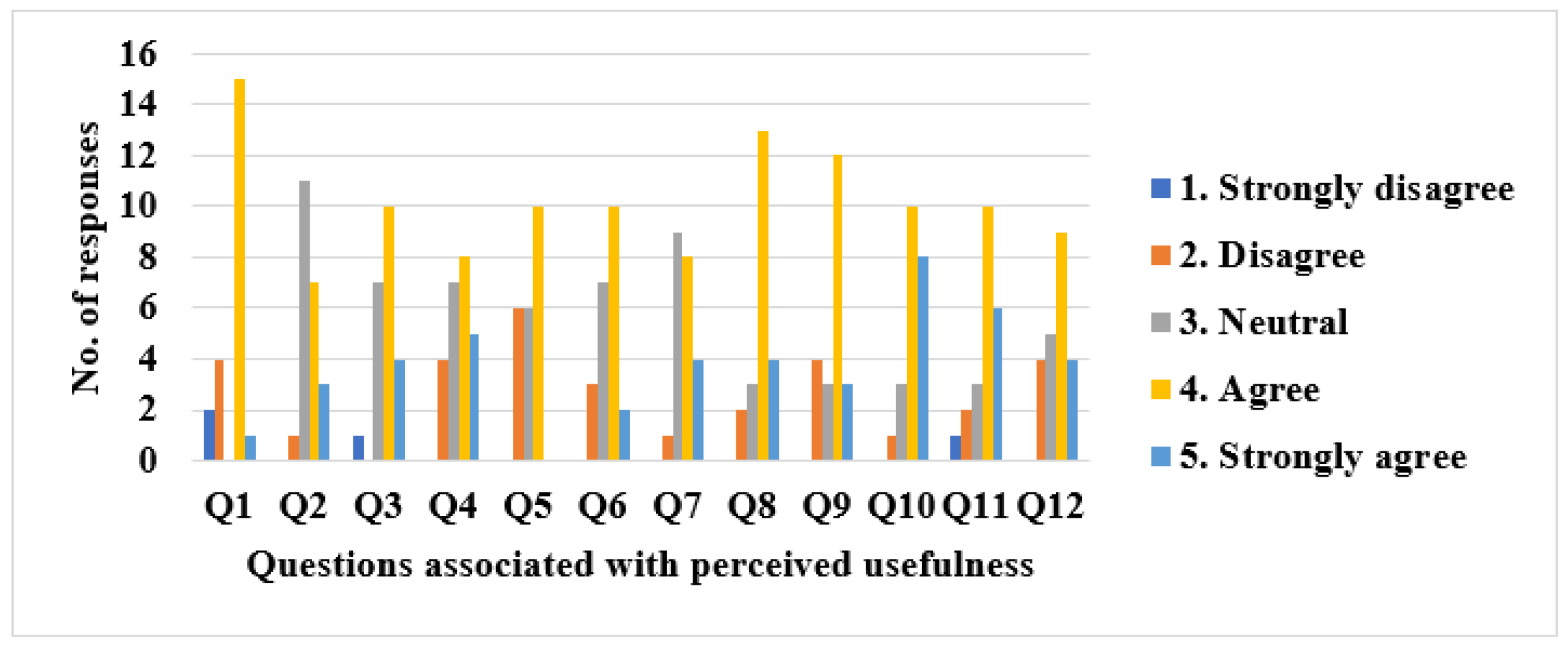

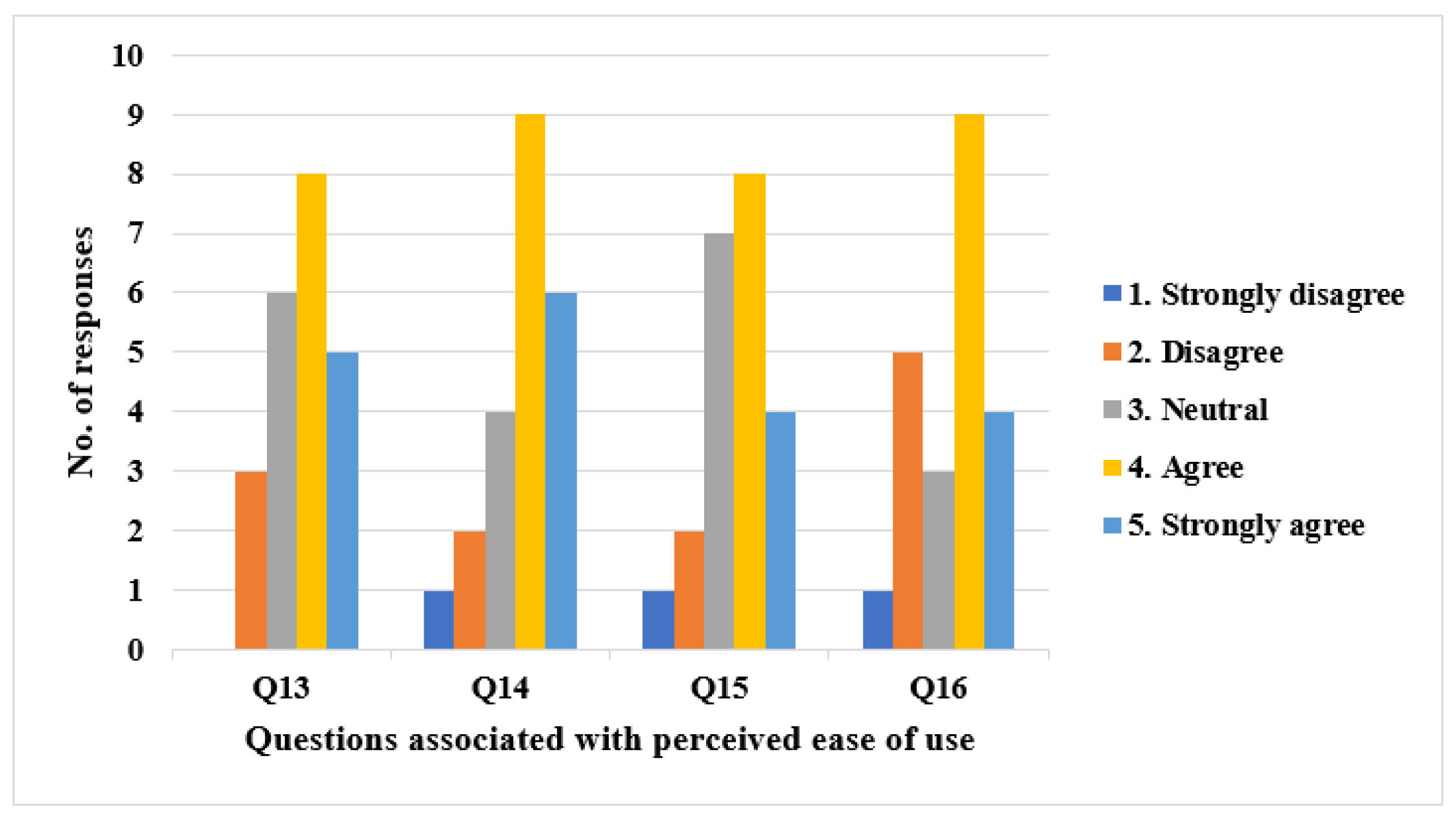

The TAM questionnaire on the use of Usevalia was completed by 22 students of the User Interfaces course who carried out the usability evaluations. All these students shared a common background in user interface evaluation. The questionnaire contained 18 questions divided into 3 blocks: Block 1 (PU), questions Q1–Q12; Block 2 (PEU), questions Q13–Q16; and Block 3 (PA), questions Q17–Q18 (see Table 3).
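A common way to score such a questionnaire is to average each respondent's answers within a block and then average the block scores over respondents. The sketch below follows the item-to-block mapping given above; the helper names, the 1 to 5 answer scale, and the sample answers are assumptions for illustration only, since Table 3 is not reproduced here.

```python
# Sketch: per-construct scores from the 18-item TAM questionnaire (hypothetical helpers).
from statistics import mean

# Item-to-construct mapping given in the text; Table 3 holds the actual wording.
BLOCKS = {
    "PU": range(1, 13),    # Q1-Q12
    "PEU": range(13, 17),  # Q13-Q16
    "PA": range(17, 19),   # Q17-Q18
}


def construct_scores(answers: dict[int, int]) -> dict[str, float]:
    """Average one respondent's answers within each block (question number -> answer)."""
    missing = [q for items in BLOCKS.values() for q in items if q not in answers]
    if missing:
        raise ValueError(f"missing answers for questions {missing}")
    return {c: mean(answers[q] for q in items) for c, items in BLOCKS.items()}


def sample_means(respondents: list[dict[int, int]]) -> dict[str, float]:
    """Mean construct score over all respondents (22 in this study)."""
    per_person = [construct_scores(a) for a in respondents]
    return {c: mean(p[c] for p in per_person) for c in BLOCKS}


# Hypothetical answers on an assumed 1-5 agreement scale.
r1 = {q: 4 for q in range(1, 19)}
r2 = {q: 5 for q in range(1, 19)} | {17: 3}
print(construct_scores(r2))
print(sample_means([r1, r2]))
```

Averaging within a block before averaging across respondents gives each construct the same scale regardless of how many items it has (12 for PU versus 2 for PA).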

5. Conclusions

This paper has proposed a new workflow specifically designed for usability assessments. Moreover, it has analysed the outcome of applying a methodology that encourages decentralisation. Decentralisation is gaining relevance today for promoting access to, and protection of, health data [105]. New decentralised technological approaches can involve a greater number of participants in audit processes, generating more extensive and detailed results [106].

The auditors’ satisfaction with the CAUE tool Usevalia was evaluated. According to TAM, the variables evaluated strongly influence the eventual use of a system [107], in this case, the use of Usevalia to perform usability audits based on heuristic inspection. The results were positive: users’ evaluation of Usevalia was satisfactory. Using TAM, the relationships among three variables were investigated: PEU, PA, and PU. Most of the causal relationships between these variables are well supported, and the study reaffirms the appropriateness of applying TAM to measure user acceptance of technology.

Acceptance of the system was found to be directly influenced by PEU, PA, and PU. The results illustrate that PEU is a key element [34] linking PU and PA. This suggests that it is important to foster users’ confidence that the system is easy to use. Technology is advancing rapidly, and society needs time to adapt to the changes brought about by new systems. If users find a system difficult to use, they may conclude that the benefits they will gain are not worth the effort.

The usability results were also analysed. Across all the usability evaluations conducted in this study, the Match heuristic obtained the highest mean score, 3.92. This heuristic requires that there be no major differences in terminology or in the way information is presented between the real and virtual worlds. This finding suggests that PHR development has so far focused on this aspect, reducing cognitive load [108]. This is relevant, as PHRs are intended for a wide audience and their use should be as simple as possible [97].

A virtual environment as an alternative to the real world offers great advantages for the healthcare sector [109]. Mechanisms need to be found to extend the use of PHRs. Among the alternatives, promoting the automatic uploading of medical data from healthcare facilities could, a priori, attract a wider audience. This idea is in line with the Lazy User Model [110], which studies how actors decide which IS to use to perform their tasks. Uploading large amounts of medical information can become an overwhelming task for users, who may, as a result, abandon the PHR. By contrast, patients would find it comfortable simply to review and query their medical data. Several PHRs addressed this option. In NoMoreClipBoard, the cc:me feature allows the medical specialist to send information automatically to the patient’s profile. In HealthVet, the data are displayed for premium users. It is worth noting that such practices may create responsibilities regarding health data; in this vein, ensuring privacy and security is crucial. Fervent debates have arisen in the academic community about the appropriateness of giving users full power over their health data [111,112].

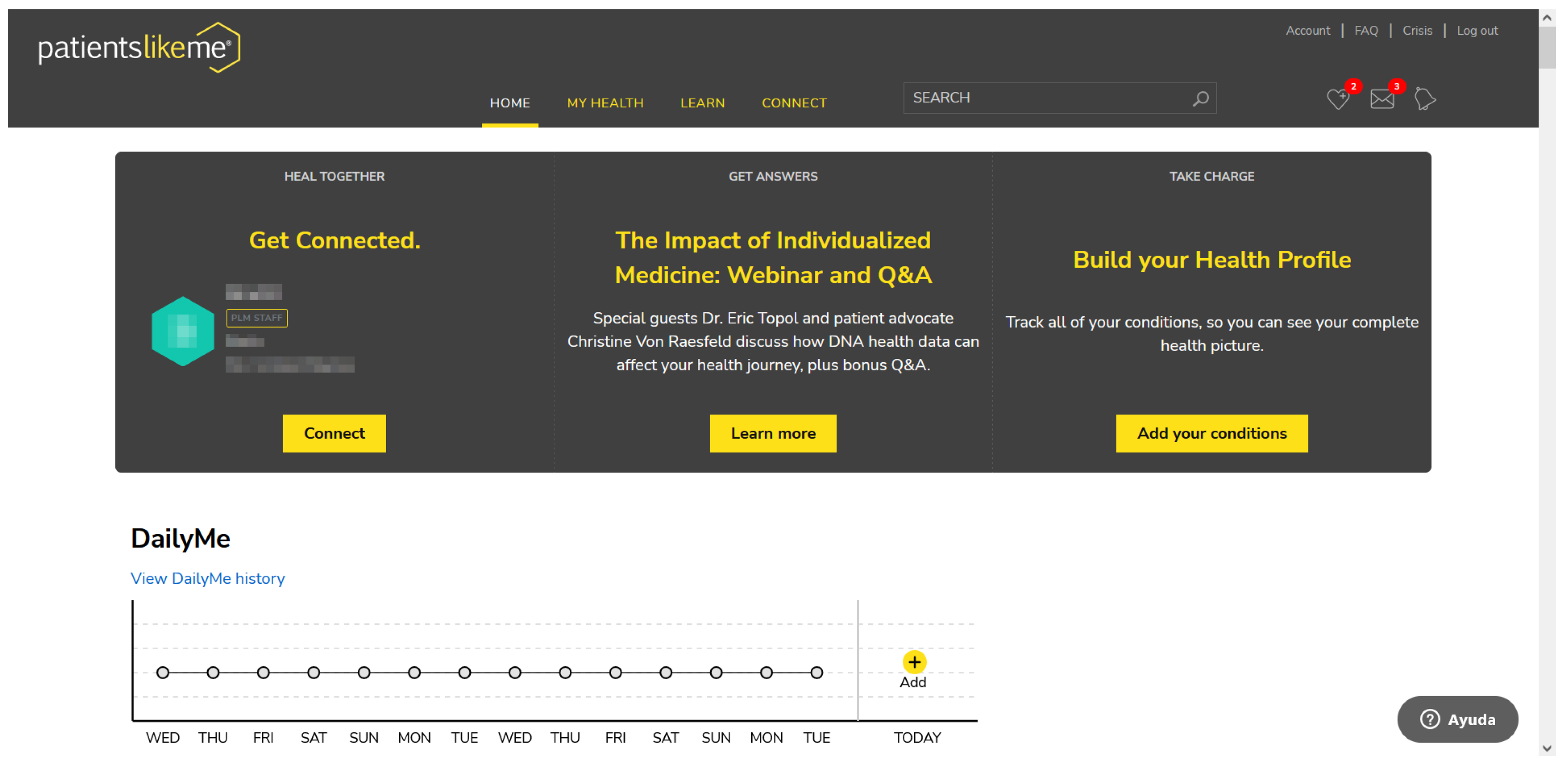

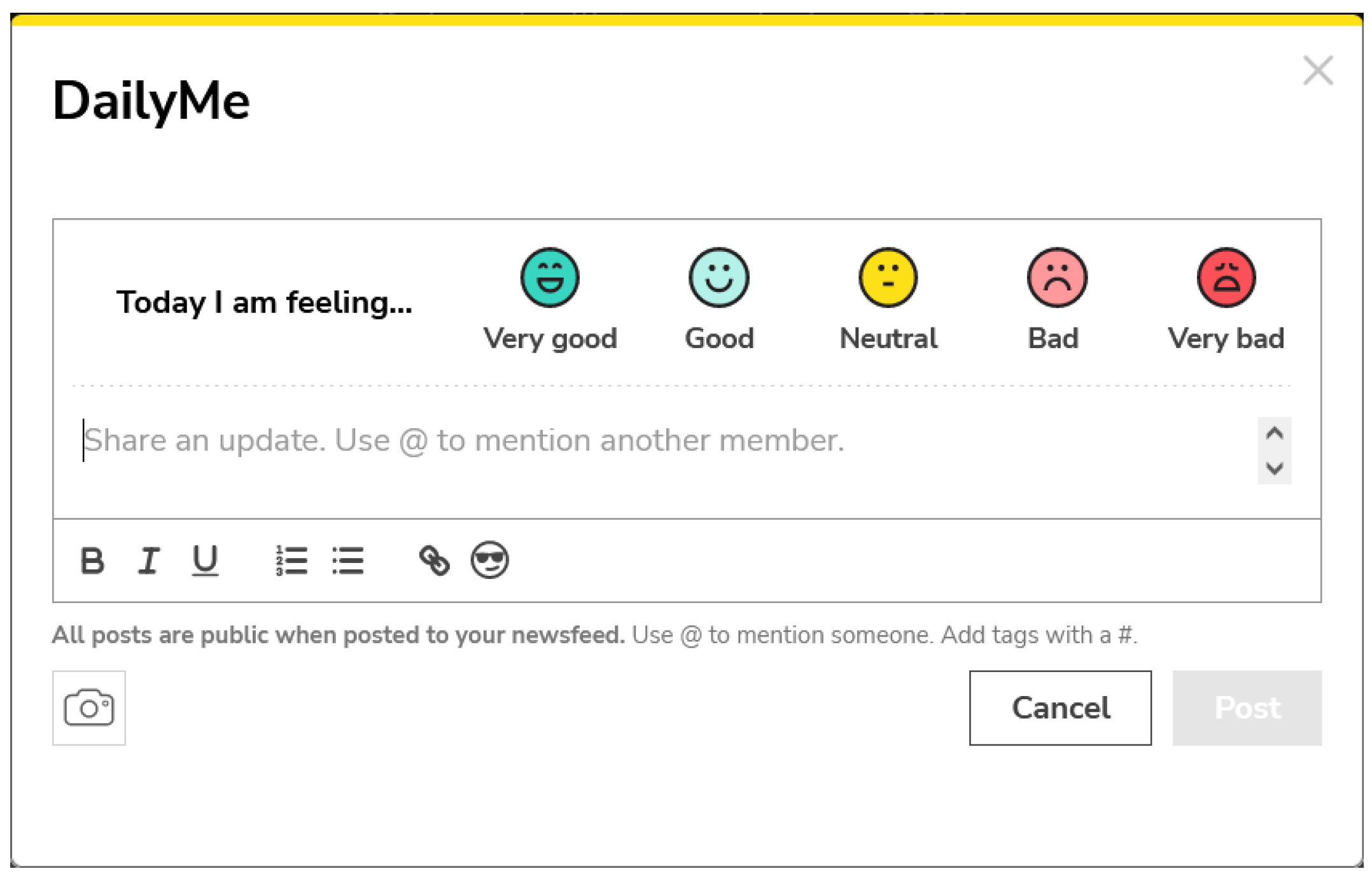

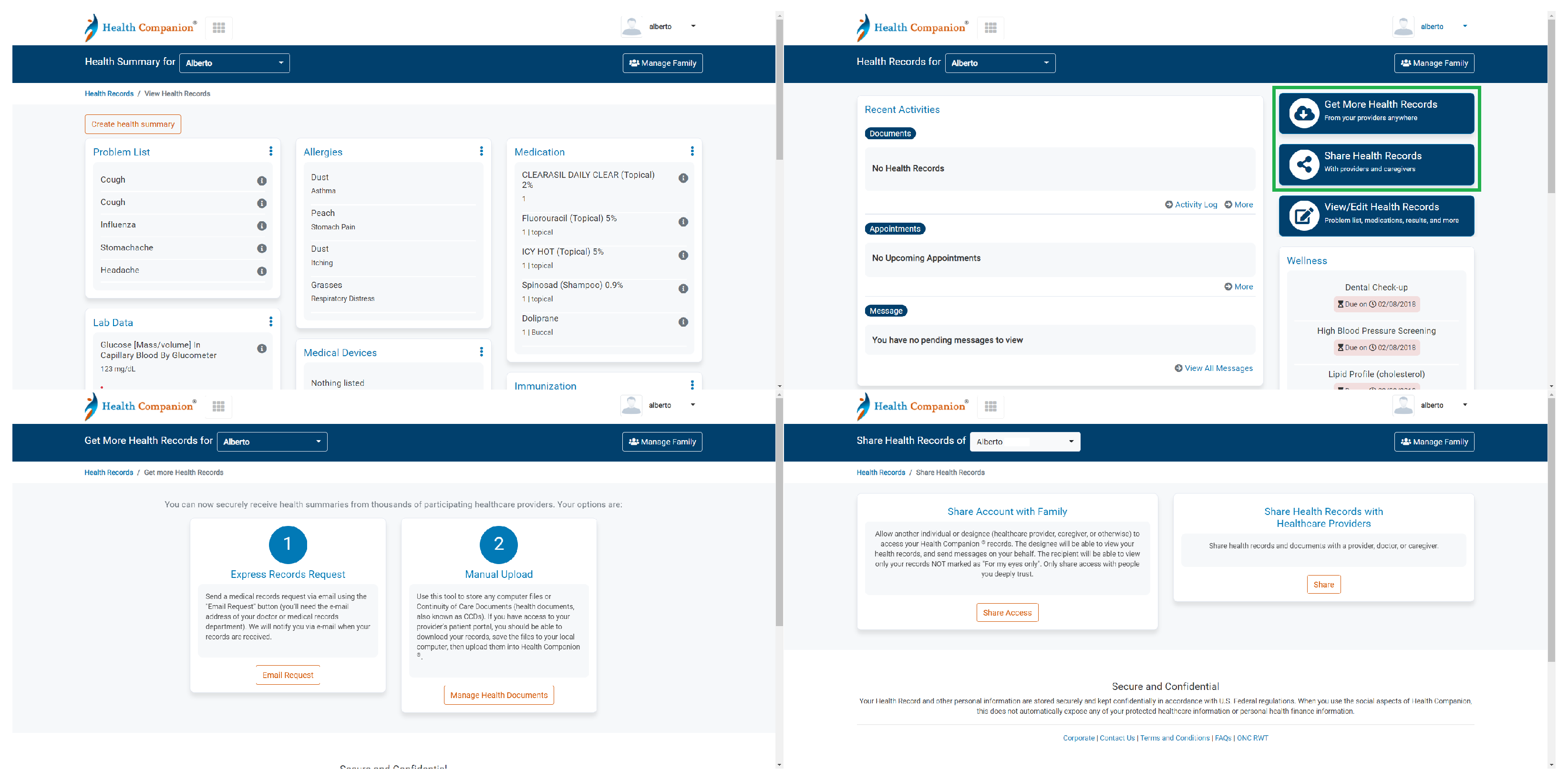

Some PHRs attempt to alleviate the sense of work overload when uploading medical data, and to motivate users, by offering socialisation alternatives, which improve coping among patients [113]. PatientsLikeMe focuses on the concept of social networking: users must at least indicate their conditions in order to contact other users with the same diseases. By receiving support from other patients, they benefit from the use of the PHR, which is its main objective. The HealthVet portal promotes a sense of community among former members of the US military. Although the PHR is not itself a means of communicating with other users, its My community section presents a multitude of ways to connect users in the real world. In NoMoreClipBoard and Health Companion, no options for socialisation between users were found, although Health Companion has recently launched a mobile application that allows video conferencing with medical doctors. This user-to-user socialisation feature could be related to the success of the PHRs. The popularity of the web portals was observed in the Alexa™ ranking: the PHRs with user-to-user socialisation options ranked higher, with HealthVet ranked 2,907th in popularity, PatientsLikeMe 256,628th, NoMoreClipBoard 1,598,077th, and Health Companion 2,463,289th.

As future work, we propose to further develop the Usevalia tool to provide it with more functionality. To this end, technical features will be added to allow usability evaluations based on other methods that do not focus solely on expert review. In addition, we will consider extending usability evaluations to other areas, such as banking, e-commerce, or digital newspapers, where advertising can be very intrusive, since such mass-use web portals need to be studied from a usability perspective.

Automatic testing of GUI designs could be employed to detect inconsistency issues. Widget detection in GUIs has been identified as one of the biggest challenges in automatic GUI testing [114]. This type of test is important, as it gathers information about the event sequences that trigger GUI failures and detects unnecessary events, which can then be eliminated. Reported algorithms achieved a detection rate above 95.4% for irrelevant events in failure sequences, with a processing time of about 10 s [115]. Therefore, these tools should be analysed as a means of producing GUIs that are more attractive to users.