1. Introduction

In late 2019, SARS-CoV-2 infections (later recognized as COVID-19) started appearing in patients across China and quickly spread to the entire world, exhibiting highly contagious characteristics [1]. Within months, the virus started a global pandemic, severely overwhelming the healthcare infrastructure, even in developed countries [2]. During such emergency situations with an unprecedented influx of patients, hospitals become severely resource constrained. In the case of novel infectious diseases such as COVID-19, initial patient assessment is usually carried out utilizing radiographic imaging such as X-rays and CT scans [3]. This initial assessment is crucial in determining the severity of disease in order to establish an effective triage system for patients. Radiographic scans such as chest X-rays (CXR) are a rapid way of examining patients for symptoms of lung-related problems. They are primarily used for disease diagnosis but have also proven to be an effective modality for detecting disease progression [4]. Radiologists often use successive CXRs to monitor the progression of infection in patients. Careful examination of CXRs reveals where and how much improvement or deterioration has taken place, which is then used to determine a future course of treatment. Although this may require only a quick glance by an expert radiologist, in emergency situations, automated assessment of disease progression from radiographic scans can save precious time and can therefore be highly desirable.

Medical imaging is widely recognized as a vital resource for assisting radiologists to diagnose diseases and monitor their progression. Chest X-rays, due to their fast imaging speed, lower radiation, and relatively lower costs, are the most commonly used modality for the examination and diagnosis of pulmonary diseases [5]. Although other modalities such as computed tomography (CT) or magnetic resonance imaging (MRI) reveal high-resolution, detailed anatomy in 3D mode, CXRs are widely available and inexpensive and are therefore extensively used by radiologists [6]. Considering the widespread use of CXRs in COVID-19 and other infectious pulmonary diseases, researchers have widely investigated the use of image processing, machine learning, and techniques based on deep learning to interpret them. Although considerable success has been achieved in the detection of infectious diseases from CXRs, they are still regarded as the most challenging plain film to interpret correctly [7].

Advances in artificial intelligence techniques, particularly deep learning, have led to several breakthroughs in many challenging medical image analysis and interpretation tasks, including detection, grading, delineation, and even understanding of pathological disorders in radiographic scans [8]. The availability of huge volumes of imaging datasets and the superior performance of learning algorithms have enabled methods based on deep learning to surpass human experts in disease identification [9]. Regarding CXRs, deep convolutional neural networks (CNNs) have achieved strong performance in the detection and diagnosis of thoracic diseases and have successfully differentiated between bacterial and viral pneumonia [10]. In the case of COVID-19 infection, early-stage CXRs reveal interstitial infiltration in peripheral lung and subpleural regions, which gradually develops into ground glass opacities and consolidations with varying degrees of density [11]. The health of patients with COVID-19 infection can rapidly worsen from a mild to a moderate or severe state in a matter of days. In such cases, it becomes necessary to monitor the progression of patients by obtaining CXRs on a regular basis. Radiologists are therefore required to investigate the progression of infection in patients by simultaneously reading the current and previous CXRs. Spotting subtle changes between the two scans is a cumbersome and sometimes challenging task. Hence, an AI-assisted method to predict disease progression from successive scans becomes highly beneficial.

Radiologists compare successive radiographic scans to determine disease progression (i.e., change in disease status over time) in a particular patient. Most current prediction systems work with a single scan to determine the severity of a disease and utilize that score to infer its prognosis. For instance, Signoroni et al. [12] developed a multipurpose network to detect COVID-19 pneumonia, segment and align lung regions, and output severity scores by dividing the lungs into six regions. A regression head was trained on a large dataset with severity scores provided by expert radiologists for the purpose of estimating disease severity. The model achieved a mean absolute error (MAE) of 1.8. In a similar study, Cohen et al. [13] pretrained a DenseNet [14] model on 18 common radiological findings from several publicly available datasets. A linear regression model was then trained on severity scores for pneumonia extent and opacity scores provided by three expert radiologists. Amer et al. [15] trained a deep learning model to simultaneously detect and localize pneumonia in CXRs. The localization maps were then used to estimate a pneumonia ratio indicating severity of infection. Although these works effectively determined disease severity, their use of a single image did not allow them to compute the relative difference in successive scans.

Sun et al. [16] used a time-aware LSTM to predict disease progression using demographics and laboratory tests. The outcome was computed in terms of survival and mortality. The LSTM network received these readings about patients at different times and attempted to predict survival or mortality. They showed that their model was capable of predicting mortality with a high degree of accuracy. Shamout et al. [17] showed that considering CXRs along with clinical tests and patient demographics was paramount in predicting the risk of deterioration in the near future. They were able to estimate a deterioration risk curve for patients that indicated the occurrence of adverse events in the future. Pu et al. [18] utilized CT scans to determine COVID-19 disease severity and progression through segmentation and registration of the lung boundary. They identified the regions with pneumonitis and assessed progression using the segmented regions. They also generated heatmaps to indicate the affected regions, which were rated as “acceptable” by the radiologists. Feng et al. [19] also studied chest CT scans along with clinical characteristics to predict disease progression. They scored each of the five lung lobes on the basis of their involvement in the infection, and the scores were then summed to obtain an overall severity score. A similar study was conducted by Zhang et al. [20] to demonstrate the capability of AI in determining disease progression from CT scans. Sriram et al. [21] developed a deterioration prediction model in terms of adverse events occurring within 96 h and mortality within the same duration from single or multiple scans. They showed that a transformer-based architecture yielded the best performance when used with multiple scans.

Although the use of a single image to predict severity and disease progression is dominant in the existing literature, we believe that a better estimation of disease progression could be made if multiple scans are utilized simultaneously. In this regard, we propose to utilize multiple successive scans (at least two) simultaneously to predict disease progression by comparison through deep learning methods. We model disease progression as a sequence learning problem utilizing CXR-specific features from successive scans. This strategy can be used in conjunction with severity detection models, which can only predict severity scores but cannot differentiate between two CXRs with similar severity scores. There can be subtle changes indicating improvement or deterioration within a particular severity class, which the existing methods cannot detect. Automated patient monitoring systems can make good use of disease progression systems that utilize successive scans to detect positive or negative changes.

The primary objectives of this study are as follows:

Development of a novel sequence learning strategy for successive radiographs that can detect subtle changes in CXRs and determine improvement or deterioration.

Proposal of a patient profiling method to monitor disease progression in individual patients. The method incorporates positive or negative change in radiographs compared to a reference scan, duration between scans, and patient age to model deterioration as a function of time and age.

2. Materials and Methods

2.1. Datasets

In this study, we used a large dataset from the Valencian Region Medical Image Bank (BIMCV COVID-19) [22], which contains 2465 COVID-19 CXRs from 1311 patients. From this data, we extracted 1735 CXRs of 582 patients who had multiple scans from multiple sessions along with the associated radiologist reports. The majority of patients had 2, 3, or 4 scans, whereas some patients had as many as 12 or 15 scans from subsequent sessions (the frequency distribution of the dataset is provided as Figure S1 in the Supplementary Material). This subset was then annotated with the help of a radiologist in terms of improvement and deterioration, based on the associated reports and visual examination of the scans (the visual changes that appear in CXRs in response to COVID-19 infection are provided in Figure S2 in the Supplementary Material). There was an insufficient number of samples reported by the radiologist as showing no change. Therefore, the no-change assessment was made on the basis of the confidence scores of the trained model, such that a small difference between the two class probabilities was interpreted as negligible change or no change. The dataset was split into training and validation sets, with proportions of 70% and 30%, respectively.
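The no-change rule described above can be illustrated with a short sketch. The function name `progression_label` and the `margin` value of 0.1 are hypothetical; the source does not state the threshold that was actually used.

```python
def progression_label(p_improve: float, p_deteriorate: float, margin: float = 0.1) -> str:
    """Map the two class probabilities to a label.

    A gap smaller than `margin` (an assumed value, not taken from the paper)
    is interpreted as negligible change.
    """
    if abs(p_improve - p_deteriorate) < margin:
        return "no change"
    return "improvement" if p_improve > p_deteriorate else "deterioration"


print(progression_label(0.52, 0.48))  # no change
print(progression_label(0.90, 0.10))  # improvement
```

In practice the margin would have to be tuned on validation data, since it directly controls how many pairs are reported as unchanged.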

The second dataset was the COVID chest X-ray dataset [23], which was used to evaluate the performance of the proposed disease progression detection method. From a total of 472 patients, 177 were excluded because they had only one CXR. Therefore, 930 scans from 295 individual patients were used. Each set of scans was annotated with various attributes, including scan acquisition date, age, gender, survival, need for supplemental oxygen, intubation, ICU admission, and others. This dataset was used to test the cross-dataset performance of the proposed method in determining disease progression for individual patients. It was also used to assess model robustness and effectiveness of the proposed method.

Figure 1 shows the population statistics for both the datasets used in this study.

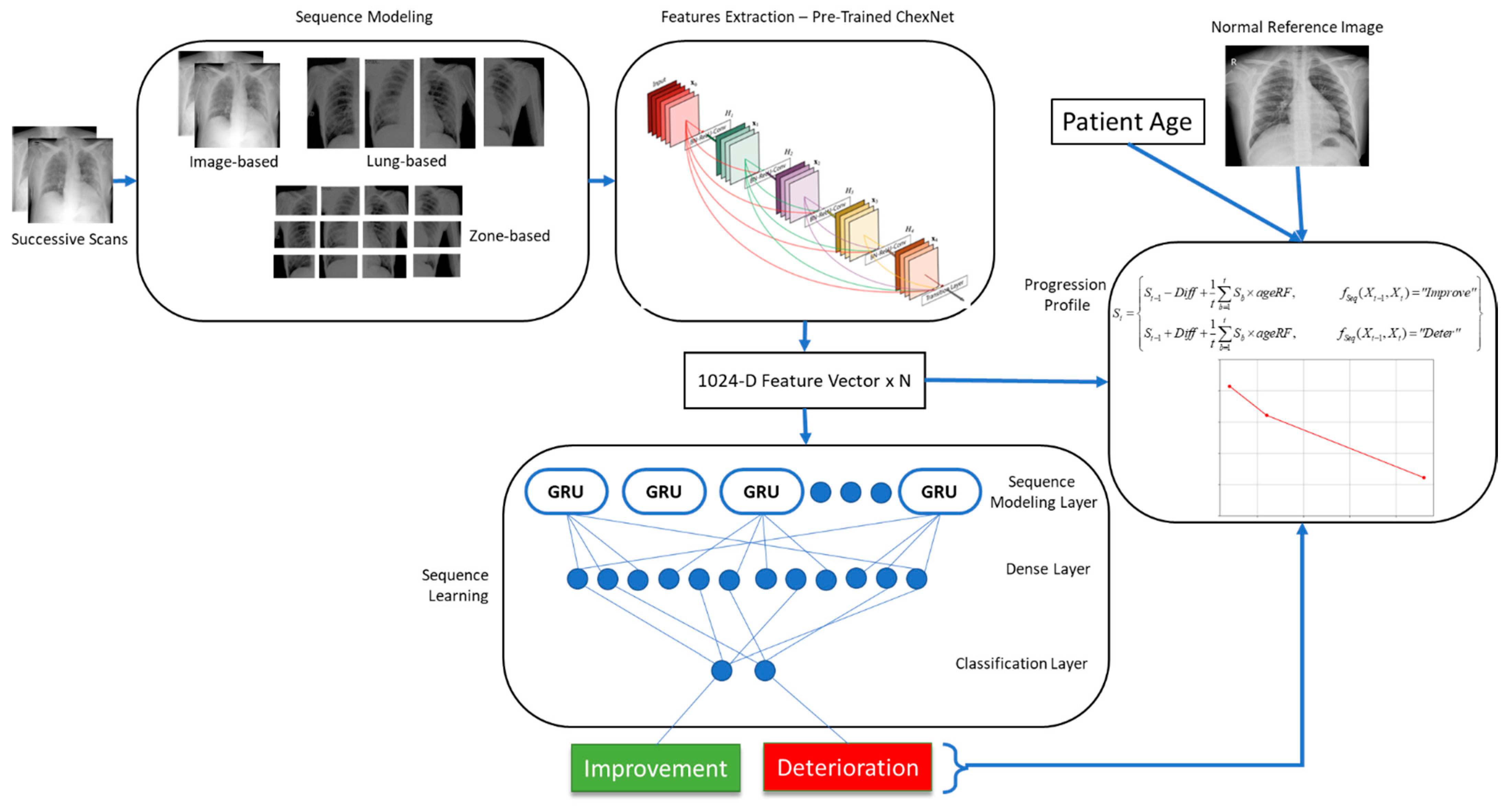

2.2. Proposed Progression Detection Framework

This method relies on a deep convolutional neural network to extract features from individual radiographic scans. This network should be capable of identifying a wide variety of abnormalities in CXRs. For this purpose, we trained a deep CNN to classify CXRs into mild, moderate, and severe infections of COVID-19. Features extracted by this network for a pair of scans were then fed into a long short-term memory (LSTM) network for sequence learning. This sequence was then classified into two classes of improvement and deterioration. The length of the sequence depended on the granularity of the annotations. For image-level annotations, the sequence consisted of feature vectors from two images. Similarly, for lung- and zone-level annotations, the sequence length was increased to 4 and 12, respectively (zone-based segmentation of CXR is provided as Figure S3 in the Supplementary Material).

The features were extracted from convolutional layers, where each neuron was sensitive to a particular visual characteristic in the image. Corresponding features could be compared to effectively learn about the changes in image characteristics. The LSTM network then determined progression of the COVID-19 infection via sequence analysis.

Disease progression detection in this work was modeled as a multiclass classification problem via sequence learning as follows:

$$y = f_{Seq}([X_{t-1}, X_t]) \tag{1}$$

where the input is a pair of successive CXRs in the frontal view $[X_{t-1}, X_t]$, the output is a label $y \in \{I, D\}$ indicating whether any improvement (I) or deterioration (D) is noticed in the pair of scans, and $f_{Seq}$ is the sequence learning function. In order to achieve this, we utilized a pretrained model, ChexNet [8], to extract CXR-specific features from both images. Further details regarding each component in the proposed framework, shown in Figure 2, are provided in the subsequent sections.

2.3. Feature Extraction

Each scan in the pair of successive scans was separately fed to the ChexNet model for feature extraction. This network was pretrained on a large dataset containing images representing 14 different lung pathologies. The network was able to detect lung diseases at the level of an expert radiologist. To use this network as a feature extractor, the last classification layer of the network was dropped, and activations from the second-last layer (7 × 7 × 1024) were obtained as feature maps. Each of these maps was generated by one of the neurons in the CNN that had become sensitive to a particular characteristic in the CXR image. The presence of that particular pattern was indicated by the position of the high activations in the map. The number of high activations in a map showed the size of that pattern in the scan. We believe that accumulating activations from each map reveals the strength of specific patterns in a particular CXR. Therefore, we used global average pooling (7 × 7) to obtain a 1024-dimensional feature vector for each image. Each value in this vector indicated the presence, absence, or strength of individual neuronal activations in the image. The output obtained from the feature extraction module is as follows:

$$F_t = f_{ChexNet}(X_t) \tag{2}$$

where $f_{ChexNet}$ is the feature extractor function (the ChexNet model in this case), $X_t$ is the input CXR image, and $F_t$ is the resulting feature vector. The extracted features from both scans were then constructed into a sequence, which was then utilized by the sequence modeling module for learning. The sequence was obtained as follows:

$$S = [F_{t-1}, F_t] \tag{3}$$

In addition to extracting features from the entire image, more fine-grained features were also extracted from each scan. Firstly, each lung was segmented from both scans, and features were extracted from them individually. For both the left and right lung in each image, a separate 1024-dimensional feature vector was obtained. The sequence was then constructed by combining the extracted feature vectors as given in (4), resulting in a sequence length of 4.

$$S = [F^{L}_{t-1}, F^{L}_{t}, F^{R}_{t-1}, F^{R}_{t}] \tag{4}$$

where $F^{L}_{t-1}$, $F^{L}_{t}$, $F^{R}_{t-1}$, and $F^{R}_{t}$ are the features extracted from the left lung in the previous scan, the left lung in the current scan, the right lung in the previous scan, and the right lung in the current scan, respectively. Here, the features extracted from individual lungs were considered finer-grained and more expressive than the features extracted from the entire image.
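The pooling and sequence construction steps described above can be sketched in NumPy. This is only an illustration under stated assumptions: random arrays stand in for the 7 × 7 × 1024 ChexNet activations, and the helper names `global_average_pool`, `build_sequence`, and `fake_activations` are hypothetical.

```python
import numpy as np


def global_average_pool(feature_maps: np.ndarray) -> np.ndarray:
    """Collapse an (H, W, C) activation volume into a C-dimensional vector."""
    return feature_maps.mean(axis=(0, 1))


def build_sequence(prev_regions, curr_regions) -> np.ndarray:
    """Order vectors region by region: [prev_1, curr_1, prev_2, curr_2, ...]."""
    seq = []
    for f_prev, f_curr in zip(prev_regions, curr_regions):
        seq.extend([f_prev, f_curr])
    return np.stack(seq)


rng = np.random.default_rng(0)


def fake_activations() -> np.ndarray:
    """Random stand-in for the 7 x 7 x 1024 output of the feature extractor."""
    return rng.random((7, 7, 1024))


# Image-level: one vector per scan, sequence length 2.
image_seq = build_sequence([global_average_pool(fake_activations())],
                           [global_average_pool(fake_activations())])

# Lung-level: left and right lung per scan, sequence length 4.
prev_lungs = [global_average_pool(fake_activations()) for _ in range(2)]
curr_lungs = [global_average_pool(fake_activations()) for _ in range(2)]
lung_seq = build_sequence(prev_lungs, curr_lungs)

print(image_seq.shape, lung_seq.shape)  # (2, 1024) (4, 1024)
```

The lung-level ordering follows the description in the text (left previous, left current, right previous, right current).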

Going one step further, we also subdivided each lung into three zones of upper, middle, and lower and extracted features from each zone using the ChexNet model. These features were the most fine-grained features and regarded as the most expressive ones. The sequence length in this case became 12. The final sequence in this case was constructed as follows:

2.4. Sequence Modeling

Convolutional features output by neurons in deeper layers of the ChexNet model were sensitive to specific properties in CXRs corresponding to the 14 abnormalities the network was trained to recognize. The labeled sequences we generated from the dataset were strictly unidirectional. If the order of a sequence labeled as improvement or deterioration were reversed, its label would also be reversed, giving rise to a new sample. Utilizing this strategy, we expanded our dataset by reversing pairs of images labeled “improvement” to obtain samples labeled “deterioration” and vice versa. These sequences were then used to train the deep sequence learning model.
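The reversal-based expansion can be sketched as follows. The scan identifiers and the helper name `augment_by_reversal` are hypothetical; the sketch operates on image pairs before feature extraction, which is one reasonable reading of "reversing pairs of images".

```python
FLIP = {"improvement": "deterioration", "deterioration": "improvement"}


def augment_by_reversal(pairs, labels):
    """Return the original pairs plus time-reversed copies with flipped labels."""
    out_pairs, out_labels = list(pairs), list(labels)
    for (prev_scan, curr_scan), label in zip(pairs, labels):
        out_pairs.append((curr_scan, prev_scan))  # swap temporal order
        out_labels.append(FLIP[label])            # the label flips with it
    return out_pairs, out_labels


# Hypothetical scan identifiers for one patient.
pairs = [("scan_day1", "scan_day4"), ("scan_day4", "scan_day9")]
labels = ["deterioration", "improvement"]

aug_pairs, aug_labels = augment_by_reversal(pairs, labels)
print(len(aug_pairs))                # 4
print(aug_pairs[2], aug_labels[2])   # ('scan_day4', 'scan_day1') improvement
```

Each labeled pair yields exactly one extra sample, so the expanded set is twice the original size and balanced between the two classes.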

2.5. Deep Sequence Learning

Sequence learning is a common machine learning task, where an algorithm attempts to learn from time series data such as text sentences, speech audio, videos, and sensor readings to solve classification and regression problems. In this work, we employed a deep sequence learning technique to determine improvement or deterioration in successive radiographic scans obtained over time. For this purpose, gated recurrent units (GRUs) were used to perform sequence learning.

The inbuilt memory mechanism implemented via gates in GRUs allows them to effectively model both short and long sequences without running into vanishing gradient problems. These characteristics make them suitable candidates for sequence learning of subtle change detection in successive CXRs.

The sequence learning model was constructed using the ChexNet model, followed by a GRU layer, a dense layer, and finally a classification layer. The input layer took N images/patches from two successive scans, depending on the granularity of feature extraction, which were input to the feature extraction network (ChexNet). This network output a 1024-dimensional vector for each image. The sequence constructed from the N feature vectors was then forwarded to the sequence learning layer consisting of 1024 GRUs. This was followed by a dense layer of 512 neurons, and finally the classification layer output probabilities for the two classes (the complete network architecture is provided in the Supplementary Material as Table S1).
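To make the data flow through the sequence model concrete, the following NumPy sketch runs a forward pass through a single GRU layer, a dense layer, and a softmax classifier. It is not the TensorFlow implementation used in this work: the weights are random, the hidden and dense sizes are reduced from 1024 and 512 to keep the example light, the ReLU activation in the dense layer is an assumption, and the class name `TinyGRUClassifier` is hypothetical. The gate equations follow the standard GRU formulation with the update convention h = z * h_prev + (1 - z) * h_candidate.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()


class TinyGRUClassifier:
    """GRU layer -> dense layer -> softmax, forward pass only (untrained)."""

    def __init__(self, feat_dim, hidden, dense, n_classes, seed=0):
        rng = np.random.default_rng(seed)
        init = lambda *shape: rng.normal(0.0, 0.1, shape)
        # Input (W), recurrent (U), and bias (b) parameters for the
        # update gate "z", reset gate "r", and candidate state "h".
        self.W = {g: init(hidden, feat_dim) for g in "zrh"}
        self.U = {g: init(hidden, hidden) for g in "zrh"}
        self.b = {g: np.zeros(hidden) for g in "zrh"}
        self.W_dense, self.b_dense = init(dense, hidden), np.zeros(dense)
        self.W_out, self.b_out = init(n_classes, dense), np.zeros(n_classes)

    def gru_step(self, x, h):
        z = sigmoid(self.W["z"] @ x + self.U["z"] @ h + self.b["z"])
        r = sigmoid(self.W["r"] @ x + self.U["r"] @ h + self.b["r"])
        h_cand = np.tanh(self.W["h"] @ x + self.U["h"] @ (r * h) + self.b["h"])
        return z * h + (1.0 - z) * h_cand

    def predict_proba(self, sequence):
        h = np.zeros(self.b["z"].shape[0])
        for x in sequence:  # one step per feature vector in the sequence
            h = self.gru_step(x, h)
        hidden = np.maximum(0.0, self.W_dense @ h + self.b_dense)  # ReLU (assumed)
        return softmax(self.W_out @ hidden + self.b_out)


model = TinyGRUClassifier(feat_dim=1024, hidden=32, dense=16, n_classes=2)
sequence = np.random.default_rng(1).random((2, 1024))  # stand-in for [F_prev, F_curr]
probs = model.predict_proba(sequence)
print(probs.shape)  # (2,)
```

The same forward pass applies unchanged to sequences of length 4 or 12, since the GRU consumes one feature vector per step regardless of how many regions are extracted.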

2.6. Progression and Severity Detection

The proposed progression detection method can be used to estimate disease progression in CXRs when they are compared with known CXRs. The output of the detection model, which predicts either improvement or deterioration, can be far more useful in determining progression if it is quantified. In an attempt to quantify the change in successive scans, we devised a progression estimation function (PEF) in which features from the current CXR were compared with those of the previous CXR and the normal reference CXR. The inverse cosine similarity using deep features extracted from the ChexNet model was then used as a quantification mechanism for positive or negative change in both scans. An additional reference distance was also computed by taking the difference of the two CXRs from a reference normal scan. Furthermore, age has also been noted as a potential risk factor associated with COVID-19 patients. In this regard, we computed an age risk factor for these patients by analyzing historical data. A risk score was computed using (6) for each age group (as depicted in Figure S4 in the Supplementary Material).

where $\mathrm{ageRF}_a$ is the risk factor associated with the age group $a$, and the two remaining terms denote the number of COVID-19 cases in the age group $a$ and the number of COVID-19 deaths in the group, respectively. The risk factor was simply the ratio of deaths in a particular age group to the total number of COVID-19 deaths reported for a period of time. The risk factor scores for this study were calculated from CDC data for the US [24].
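The ratio described in the text (deaths in one age group divided by total deaths over the same period) can be illustrated with a minimal sketch. The age groups and counts below are hypothetical placeholders, not the CDC figures used in this study, and the function name `age_risk_factors` is an assumption.

```python
def age_risk_factors(deaths_by_group: dict) -> dict:
    """Share of all reported deaths that falls in each age group."""
    total = sum(deaths_by_group.values())
    if total == 0:
        raise ValueError("no deaths reported; risk factors are undefined")
    return {group: deaths / total for group, deaths in deaths_by_group.items()}


# Hypothetical counts for illustration only (NOT the CDC data).
deaths = {"0-17": 5, "18-49": 45, "50-64": 150, "65+": 800}
risk = age_risk_factors(deaths)
print(risk["65+"])  # 0.8
```

Because the factors are shares of a common total, they sum to one across age groups and lie between 0 and 1, which makes them convenient as a multiplicative penalty.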

This risk factor was then used as a penalty term in the PEF to determine overall progression by modeling quicker deterioration and slower recovery rates for older patients compared to younger patients. The algorithm for determining patient progression profile is provided below as Algorithm 1.

Algorithm 1: Progression Estimation Function

Input:

Fref = The reference normal CXR

Fprev = The previous CXR

Fcurr = The current CXR

d = The duration in days between the two successive CXRs

ageRF = Age risk factor computed from historical data using Equation (6)

PEF (Fref, Fprev, Fcurr, d, ageRF)

1. Extract features from all three input scans to construct the feature vectors Fref, Fprev, and Fcurr using the ChexNet feature extractor.

2. Compute the inverse cosine similarity between the feature vectors.

3. Compute the relative difference (Diff) between successive CXRs using a weighted summation.

4. Add Diff to or subtract it from the previous profile score based on the prediction of the sequence model; the score remains unchanged if no change is reported.

5. Return St
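A rough sketch of one PEF update step is given below. Several details are assumptions rather than the authors' formulation: "inverse cosine similarity" is taken to mean 1 minus the cosine similarity, the weights `w_pair` and `w_ref` are placeholders, a higher score is taken to mean a worse condition, and the age risk factor is applied so that deterioration is amplified and recovery is damped for higher-risk groups. The duration d is left out because the text does not state how it enters the computation.

```python
import numpy as np


def cosine_distance(a: np.ndarray, b: np.ndarray) -> float:
    """1 - cosine similarity (assumed meaning of 'inverse cosine similarity')."""
    return 1.0 - float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def pef_update(score_prev, f_ref, f_prev, f_curr, age_rf, prediction,
               w_pair=0.5, w_ref=0.5):
    """Update a patient's profile score from one pair of successive scans."""
    # Change between the two successive scans.
    d_pair = cosine_distance(f_prev, f_curr)
    # Change in distance from the normal reference scan.
    d_ref = abs(cosine_distance(f_ref, f_curr) - cosine_distance(f_ref, f_prev))
    diff = w_pair * d_pair + w_ref * d_ref  # weighted summation (weights assumed)

    if prediction == "deterioration":
        return score_prev + diff * (1.0 + age_rf)  # faster decline with higher risk
    if prediction == "improvement":
        return score_prev - diff * (1.0 - age_rf)  # slower recovery with higher risk
    return score_prev  # no change reported


# Toy 3-dimensional vectors standing in for 1024-dimensional features.
f_ref = np.array([1.0, 0.0, 0.0])
f_prev = np.array([1.0, 1.0, 0.0])
f_curr = np.array([1.0, 2.0, 0.0])

worse = pef_update(0.0, f_ref, f_prev, f_curr, age_rf=0.8, prediction="deterioration")
better = pef_update(0.0, f_ref, f_prev, f_curr, age_rf=0.8, prediction="improvement")
print(worse > 0.0, better < 0.0)  # True True
```

Calling `pef_update` once per successive pair and carrying the returned score forward yields a progression profile for a single patient.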

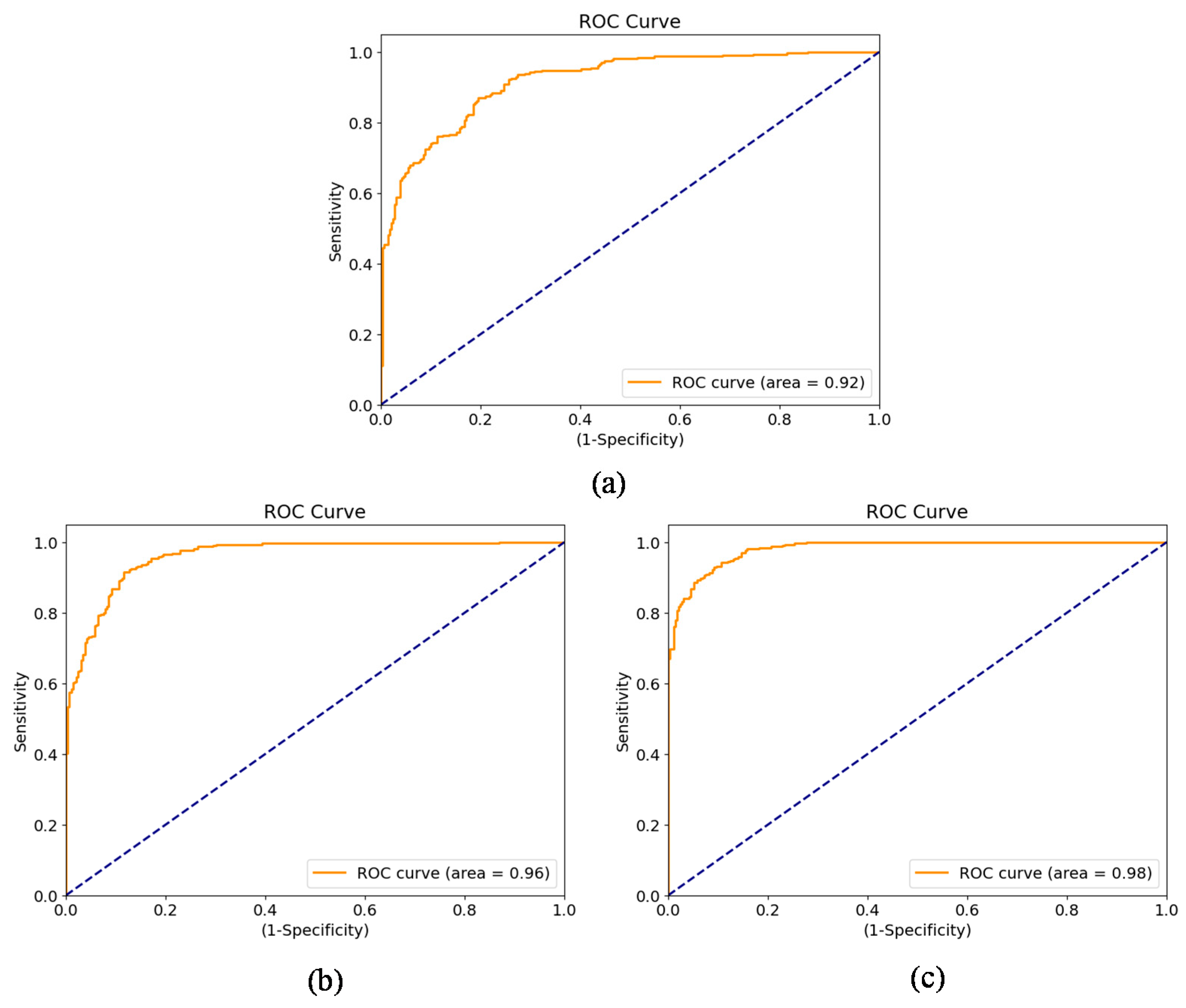

Different sets of experiments were designed to determine optimality of the proposed framework and evaluate its performance with the optimal set of parameters. For this purpose, we experimented with a variety of feature extraction strategies from CXRs. Some of the most capable CNN architectures were evaluated as feature extractors for our sequence learning problem. Finally, the architecture of sequence learning model consisting of GRUs was optimized through experimentation.

2.7. Experimental Setup

The proposed framework was implemented in Google TensorFlow 2.4 (Google, Mountain View, CA, USA) with CUDA support. All the experiments were performed on a PC equipped with a 10th Gen Intel Core i7 CPU (Intel Corporation, San Jose, CA, USA) with 16 GB RAM and an Nvidia RTX 3060 GPU (Nvidia, Santa Clara, CA, USA) with 12 GB memory, running Microsoft Windows 10 (Microsoft, Redmond, WA, USA).

4. Discussion

Previous studies have proposed a wide variety of methods to predict progression in COVID-19 patients [4, 16, 18, 19]. However, little attention has been paid to analyzing time series observations and modeling the sequential characteristics of COVID-19 patients. Radiologists regard it as essential to observe the relationship between historical and current observations (vital signs, radiographic scans, physiological characteristics, lab tests, etc.) in order to make informed decisions regarding patient health [16]. It has been found that more accurate predictions regarding disease progression can be made by considering both historical and current readings. In this regard, Sriram et al. [21] studied methods based on both single and multiple scans and found that the sequence-based models outperformed those based on single scans. Their multi-image prediction method yielded superior performance in predicting probabilities of ICU admission, intubation, and mortality. Their approach utilized feature pooling and a transformer to perform sequential analysis of multiple CXRs. The authors predicted adverse events with considerable accuracy, but individual patient profiling was not performed. In a similar study, Sun et al. [16] showed that time series analysis of clinical biomarkers can be effectively modeled in order to perform survival prediction in COVID-19 patients. Although they were able to predict disease outcome, they did not utilize radiographic scans. In another study, Jiao et al. [30] proposed a method based on deep learning to predict patient admission, ICU admission, mechanical ventilation, death, and discharge using CXR and clinical data including lab tests and vital signs. However, they used a single image, and the method’s dependency on the availability of clinical data means its use is limited in situations where past observations are unavailable.

Several challenges exist when considering sequences of observations (radiographs or other clinical records) for determining progression. Irregular intervals between observations and missing values are among the problems researchers have to deal with. Deep learning methods have exhibited outstanding performance in prediction tasks. They have also been found to achieve excellent performance in time series prediction. Disease progression detection is intuitively regarded as a sequence observation problem. Therefore, deep sequence learning models are the best candidates for solving this problem. In this work, a method based on sequence learning was proposed and evaluated on COVID-19 datasets to determine disease progression using successive radiographic scans. Deep convolutional features from a pair of scans were utilized by the sequence learning model consisting of GRUs to determine improvement or deterioration. The proposed method was able to predict the change with considerable accuracy. This model forms the basis for a disease progression estimation function that attempts to quantify change and construct disease progression profiles for individual patients. The cases with both deterioration and improvement were more challenging, yet the proposed method was able to detect changes and accurately determine progression.

In most studies, deep learning models are considered black boxes, with very little focus on their interpretability, which restricts their adoption in clinical settings. Medical experts desire interpretable models and are keen to understand how a prediction can be interpreted. In this regard, further work can be carried out to make the proposed method interpretable to some extent. One possibility is to incorporate gradient-weighted class activation mapping (Grad-CAM [31]) to visualize biomarkers in the sequence of radiographic scans. It can be helpful for the radiologist if the model can highlight regions where improvement or deterioration has taken place. This would significantly reduce the time needed to prepare the diagnosis report based on the sequence of scans. Similarly, including other parameters such as comorbidities and other relevant clinical variables in the overall framework is expected to enhance the prediction performance of the method. Segmentation-based preprocessing can also be incorporated to further enhance the proposed model. Finally, the mathematical model of the framework needs to be improved so that the progression estimation is carried out on a uniform scale, which would allow better overall management of patients, particularly in emergency situations.

5. Conclusions

In this work, we showed that a deep sequence learning model can be used to determine disease progression in COVID-19 CXRs. It was also observed that features extracted from the ChexNet model were superior in their representation ability for COVID-19 CXRs. The proposed method of deriving patient progression profile using positive or negative change in conjunction with the patient age can also be used with other modalities of medical imaging, such as CT scans, MRI, and skin lesion images, to monitor disease progression and even predict prognosis. We believe that our method has promise and that further research is needed to improve the radiographic scan comparison and patient profiling method, which can be helpful in developing an automated triage system for use in unforeseen emergency situations.

One of the shortcomings of the proposed method is that the progression profile is not generated on a uniform scale. Therefore, in its current state, patient progression profiles cannot be directly compared to assess which patient is deteriorating faster than the rest. Similarly, we have not considered other variables that add risk for deteriorating COVID-19 patients, such as comorbidities.

In the future, we intend to use multipurpose deep learning methods to automatically quantify improvement or deterioration in successive scans and also predict prognosis. We also plan to use a diverse dataset to fine-tune models for detecting COVID-19-specific abnormalities along with their severity in a single end-to-end sequence learning architecture. Furthermore, integration of other risk factors with the proposed method can reveal interesting insights into disease progression and prognosis prediction of COVID-19 patients.