Making Decision-Making Visible—Teaching the Process of Evaluating Interventions

Abstract

1. Introduction

2. Cognitive Mapping

3. EBP Curricula

4. Problem Solving in Medicine

4.1. Heuristics and Causal Models

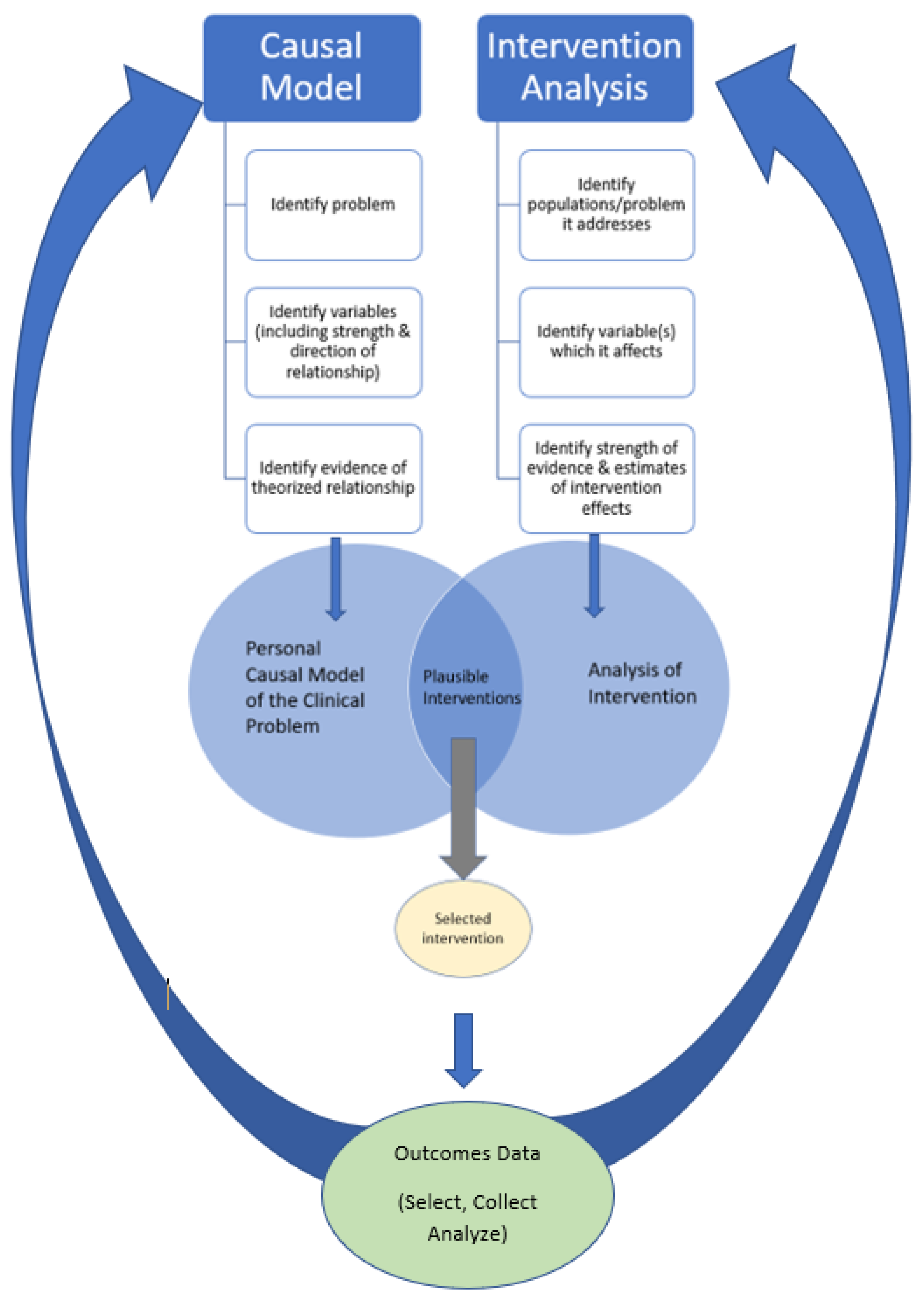

4.2. Causal Models and Interventions

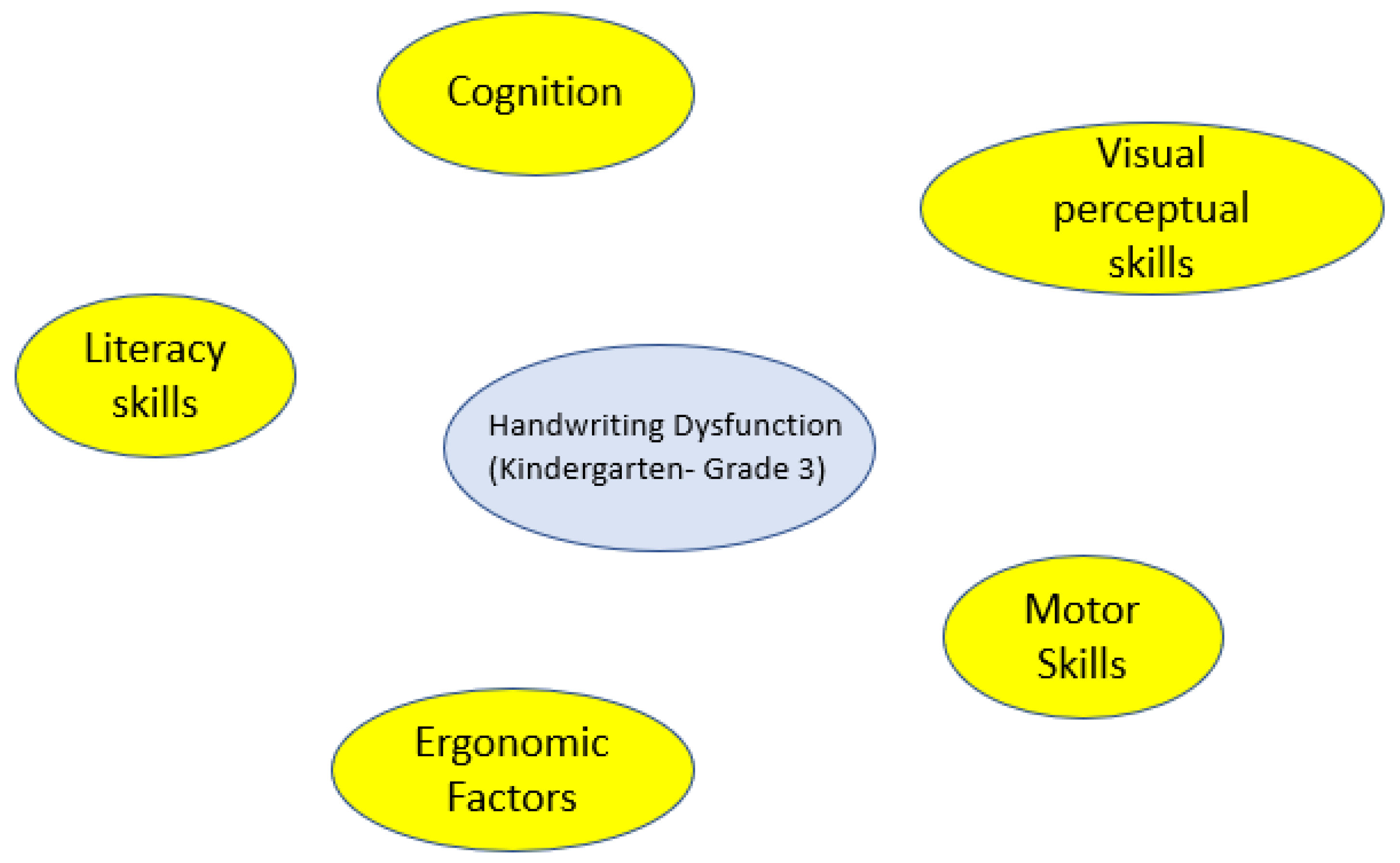

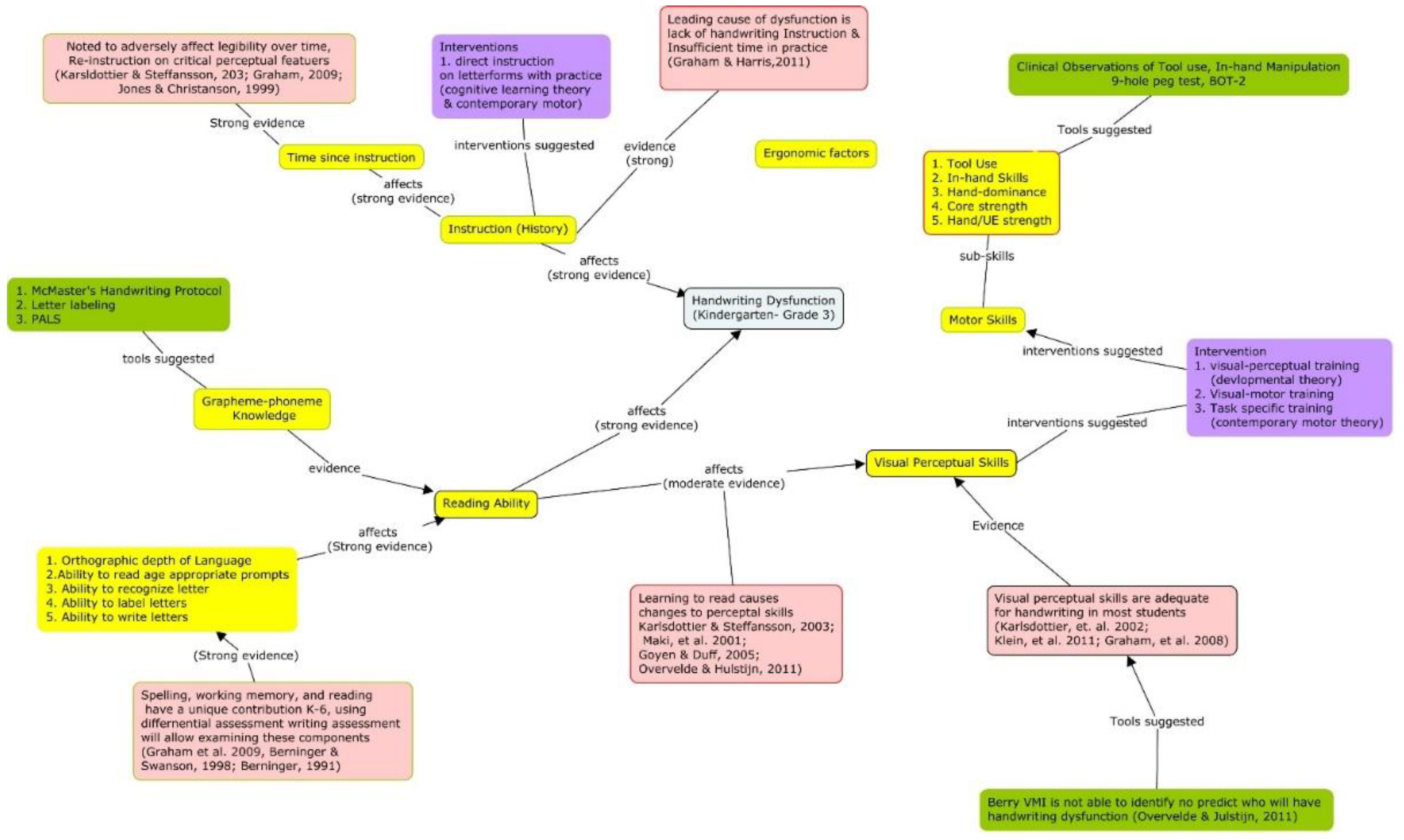

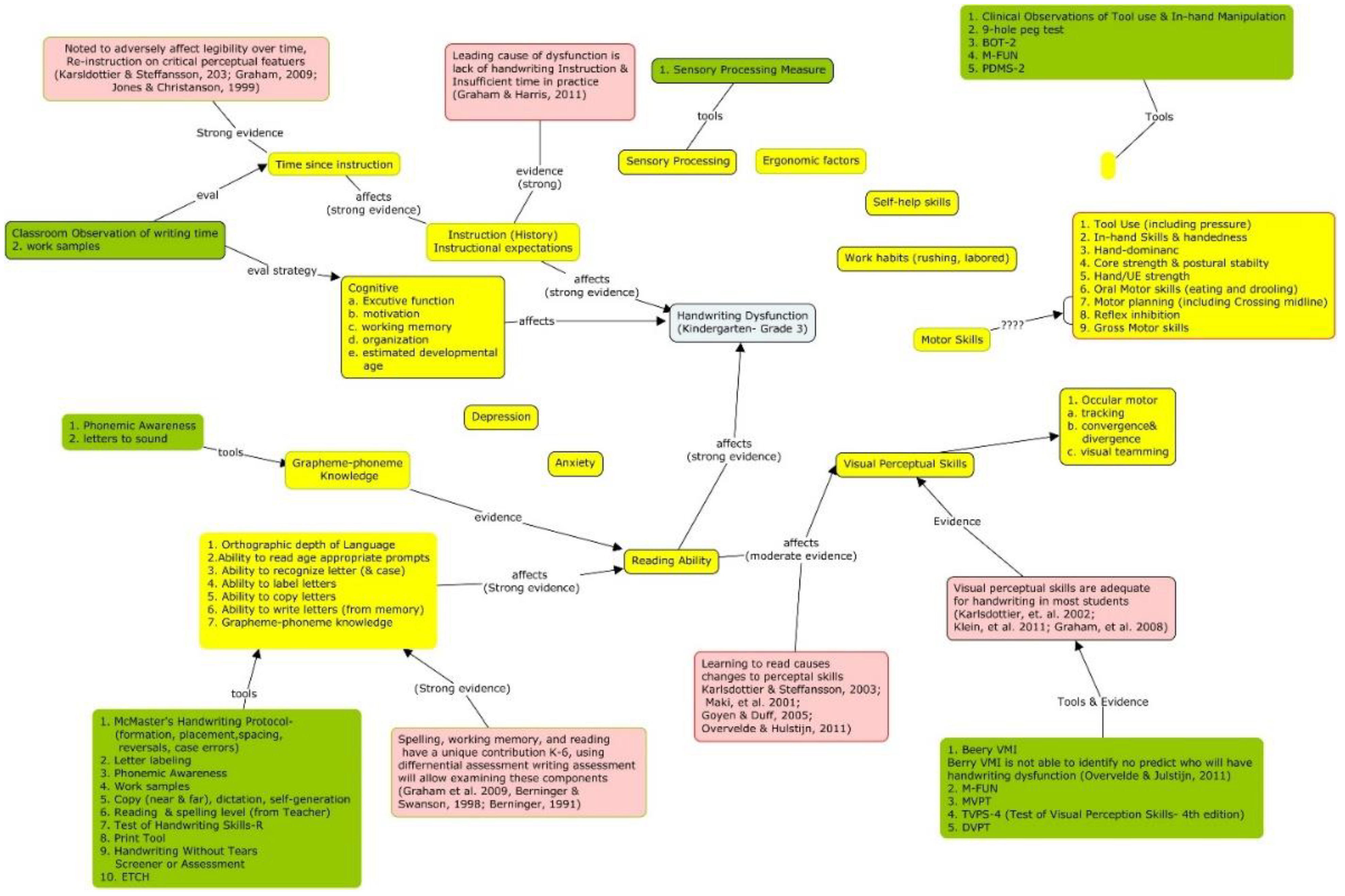

4.3. Causal Mapping

4.4. Making Decision-Making Visible

4.5. Intervention Theory

4.6. Teaching How to Think about Interventions

Clinical Example of Intervention Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Intervention Rubric

| Category | “Best Practice” | Competent | Inadequate |
|---|---|---|---|
| Name | Clearly names the intervention and provides alternative names that may be associated with it (e.g., The Listening Program and auditory integration training), and links to resources that provide specific, in-depth information about the intervention. | Provides only the name of the specific intervention being described and links to resources that provide specific, in-depth information about the intervention. | Does not clearly name the intervention or provide links to resources. |
| Theory “Why it works” | Clearly describes the theoretical model(s) and tenets that were used to develop the intervention, including the elements itemized in the notes below this table. | Identifies the theoretical model used to develop the intervention. | Labels the theoretical model used to develop the intervention. |
| Population | Clearly describes what population should be considered and/or ruled out as potential candidates for this intervention. | Labels and describes what clinical population would benefit and not benefit from this intervention. | Provides only minimal information on inclusion/exclusion for the intervention. |
| Measuring the Impact of Intervention | Clearly describes “best practice” aspects of measuring outcomes of the intervention, including the elements itemized in the notes below this table. | Provides major indicators that should be monitored for measuring the impact of the intervention. | Does not provide any information on measuring the impact of the intervention. |
| Intervention Process “Key elements of Intervention” | Clearly describes the theorized process, or evidence of the process, by which the intervention causes the change. | Based on a broad theory (e.g., dynamic systems, diffusion of innovation), and/or heavy reliance on values and beliefs. | Does not address. |
| Clinical reasoning | Clearly describes important indicators that should be considered while reflecting on the client-intervention-outcome process. Clearly describes all alternative interventions which should be considered and ruled out due to clinical and/or client characteristics (e.g., consistent with differential diagnosis). | Highlights some indicators that should be considered while reflecting on the client-intervention-outcome process. Identifies some alternative interventions which should be considered. | Does not provide any indicators that should be considered while reflecting on the client-intervention-outcome process. Does not identify any alternative interventions which should be considered. |
| Evidence “What Works” | Peer-reviewed evidence: Clearly describes the strength of evidence to support using this intervention with each population. Clinical evidence: Provides a clear synthesis of clinical evidence. | Peer-reviewed evidence: Uses multiple-person/multiple-site narratives/clinical experience to describe the client change experienced when using this intervention. Clinical evidence: Describes a systematic outcomes data collection system, including the list of data points collected; however, only some clinical outcomes data are provided. | Peer-reviewed evidence: No peer-reviewed evidence is provided; relies on expert opinion and personal experience. Clinical evidence: Anecdotal experience with a general description of client outcomes and heavy reliance on goal achievement (unstandardized and/or lacks evidence of reliability/validity, limited interpretability of change scores). |

- Name: Intervention has a clear name that allows it to be distinguished from other interventions.

- Interventions which have more resources available, such as protocols or manuals, provide deeper understanding of the assessment, application (activities which should and should not be done), and the theoretical underpinnings which provide explanation of how the intervention causes a different outcome.

- Fidelity Tools: provide criteria to assess current practice for similarities and differences, and to assess whether the intervention as applied locally is consistent with the intervention as theorized or as delivered in efficacy studies.

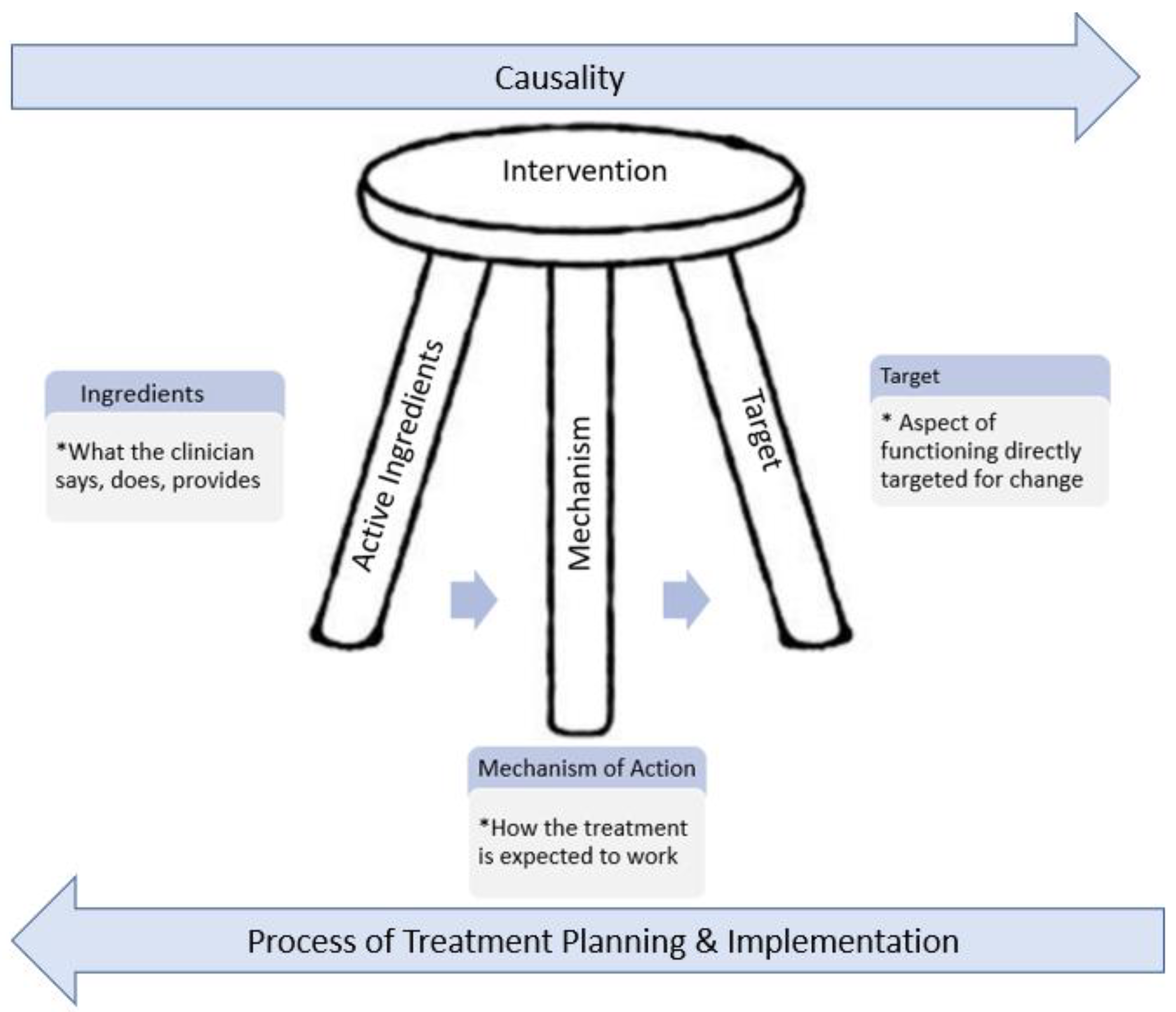

- Theory (“Why it works”): Interventions are developed using treatment theory, which identifies a specific group of activities and specifies the mechanism by which the active ingredients of a treatment intervention produce change in the treatment target. In other words, it identifies the aspect of function that is directly impacted by the treatment. A well-defined treatment theory will distinguish active ingredients (those which cause the change) from inactive ingredients. See Figure 5 and Hart & Ehde [82] for a more extensive discussion of treatment theory.

- Active ingredients involve at a minimum those things that the clinician does, says, and applies to the client that influence a targeted outcome. These include both communicative processes and sequential healing, learning, and environmental processes.

- Essential ingredients are those activities which must be included in order for it to be a given intervention.

- Mechanism of Action: clearly identifies the processes by which the ingredients bring about change on the outcome target.

- Measurable/observable treatment targets: “things” that you measure or observe to know if the intervention is beginning to work in therapy, i.e., clinical process measures.

- Population: Clearly defines who is or is not a member of the population of interest, including clinical characteristics.

- Inclusion/exclusion criteria: the clinical or client characteristics indicating that the intervention should or should not be applied.

- Measuring the Impact of Intervention: Clearly describes a “best practice” aspect for choosing and observing/measuring outcomes of the intervention including:

- Using the ICF Framework, identifying the measurement constructs that are indicators of change theoretically “caused” by the intervention. See Addendum A: Outcome Domains Related to Rehabilitation.

- Collecting client-reported outcomes at baseline (before), during, at the end of, and after discharge from therapy. Distal measures should include satisfaction, value of change, and functional change outside of the medical model indicators (e.g., activity, participation, or quality of life levels of the ICF). Distal measures will provide evidence that the clinical interventions translated to meaningful change in life circumstances.

- Identifying and describing the standardized subjective and objective measures that should be used, including the psychometrics and appropriate clinical interpretations of the scores obtained.

- Identification of assessment and evaluation tools which have known reliability, sensitivity, and predictive validity, when possible.

- Describe “best practice” for non-standardized data points, e.g., provides clinical data indicators which should be systematically collected locally if the intervention is provided.

- Intervention Process (“Key elements of Intervention”)

- Clinical reasoning: clearly describes the important indicators (e.g., person, organizational, and socio-political factors) that should be considered in the intervention process, including conditional decisions regarding strategy feasibility, applicability, and appropriateness in the specific situation.

- Evidence (“What Works”)

Peer-reviewed evidence:

- Clearly describes the strength of evidence supporting the use of the intervention with each population.

- Peer-reviewed evidence would be provided when possible.

Clinical evidence:

- Provides a synthesis of clinical outcomes data, including the number of sites using the intervention and the number of clinicians (indicators of replication)

- Provides the estimated number of clients who have received the intervention and who have pre-post outcome data

- Provides estimates of the number and characteristics of patients who did not respond to treatment in the expected time frame.

- Provides information on how they minimized bias and allocated patients to the “new” intervention (compared to standard treatment)

References

- Lehane, E.; Leahy-Warren, P.; O’Riordan, C.; Savage, E.; Drennan, J.; O’Tuathaigh, C.; O’Connor, M.; Corrigan, M.; Burke, F.; Hayes, M.; et al. Evidence-based practice education for healthcare professions: An expert view. BMJ Evid. Based Med. 2019, 24, 103–108.

- Melnyk, B.M.; Fineout-Overholt, E.; Giggleman, M.; Choy, K. A test of the ARCC© model improves implementation of evidence-based practice, healthcare culture, and patient outcomes. Worldviews Evid. Based Nurs. 2017, 14, 5–9.

- Wu, Y.; Brettle, A.; Zhou, C.; Ou, J.; Wang, Y.; Wang, S. Do educational interventions aimed at nurses to support the implementation of evidence-based practice improve patient outcomes? A systematic review. Nurse Educ. Today 2018, 70, 109–114.

- Saunders, H.; Gallagher-Ford, L.; Kvist, T.; Vehviläinen-Julkunen, K. Practicing healthcare professionals’ evidence-based practice competencies: An overview of systematic reviews. Worldviews Evid. Based Nurs. 2019, 16, 176–185.

- Williams, N.J.; Ehrhart, M.G.; Aarons, G.A.; Marcus, S.C.; Beidas, R.S. Linking molar organizational climate and strategic implementation climate to clinicians’ use of evidence-based psychotherapy techniques: Cross-sectional and lagged analyses from a 2-year observational study. Implement. Sci. 2018, 13, 85.

- Melnyk, B.M. Culture eats strategy every time: What works in building and sustaining an evidence-based practice culture in healthcare systems. Worldviews Evid. Based Nurs. 2016, 13, 99–101.

- Aarons, G. Mental health provider attitudes towards adoption of evidence-based practice: The Evidence-Based Practice Attitudes Scale (EBPAS). Ment. Health Serv. Res. 2004, 6, 61–74.

- Locke, J.; Lawson, G.M.; Beidas, R.S.; Aarons, G.A.; Xie, M.; Lyon, A.R.; Stahmer, A.; Seidman, M.; Frederick, L.; Oh, C. Individual and organizational factors that affect implementation of evidence-based practices for children with autism in public schools: A cross-sectional observational study. Implement. Sci. 2019, 14, 1–9.

- Konrad, M.; Criss, C.J.; Telesman, A.O. Fads or facts? Sifting through the evidence to find what really works. Interv. Sch. Clin. 2019, 54, 272–279.

- Goel, A.P.; Maher, D.P.; Cohen, S.P. Ketamine: Miracle drug or latest fad? Pain Manag. 2017.

- Snelling, P.J.; Tessaro, M. Paediatric emergency medicine point-of-care ultrasound: Fundamental or fad? Emerg. Med. Australas. 2017, 29, 486–489.

- Scurlock-Evans, L.; Upton, P.; Upton, D. Evidence-based practice in physiotherapy: A systematic review of barriers, enablers and interventions. Physiotherapy 2014, 100, 208–219.

- Upton, D.; Stephens, D.; Williams, B.; Scurlock-Evans, L. Occupational therapists’ attitudes, knowledge, and implementation of evidence-based practice: A systematic review of published research. Br. J. Occup. Ther. 2014, 77, 24–38.

- Jeffery, H.; Robertson, L.; Reay, K.L. Sources of evidence for professional decision-making in novice occupational therapy practitioners: Clinicians’ perspectives. Br. J. Occup. Ther. 2020, 0308022620941390.

- Robertson, L.; Graham, F.; Anderson, J. What actually informs practice: Occupational therapists’ views of evidence. Br. J. Occup. Ther. 2013, 76, 317–324.

- Hamaideh, S.H. Sources of Knowledge and Barriers of Implementing Evidence-Based Practice Among Mental Health Nurses in Saudi Arabia. Perspect. Psychiatr. Care 2016, 53, 190–198.

- Shepherd, D.; Csako, R.; Landon, J.; Goedeke, S.; Ty, K. Documenting and Understanding Parent’s Intervention Choices for Their Child with Autism Spectrum Disorder. J. Autism Dev. Disord. 2018, 48, 988–1001.

- Mathes, T.; Buehn, S.; Prengel, P.; Pieper, D. Registry-based randomized controlled trials merged the strength of randomized controlled trails and observational studies and give rise to more pragmatic trials. J. Clin. Epidemiol. 2018, 93, 120–127.

- National Library of Medicine. ClinicalTrials.gov Background. Available online: https://clinicaltrials.gov/ct2/about-site/background (accessed on 29 January 2021).

- Wilson, G.T. Manual-based treatments: The clinical application of research findings. Behav. Res. Ther. 1996, 34, 295–314.

- Holmqvist, R.; Philips, B.; Barkham, M. Developing practice-based evidence: Benefits, challenges, and tensions. Psychother. Res. 2015, 25, 20–31.

- Abry, T.; Hulleman, C.S.; Rimm-Kaufman, S.E. Using indices of fidelity to intervention core components to identify program active ingredients. Am. J. Eval. 2015, 36, 320–338.

- Glasgow, R.E.; Eckstein, E.T.; ElZarrad, M.K. Implementation Science Perspectives and Opportunities for HIV/AIDS Research: Integrating Science, Practice, and Policy. JAIDS J. Acquir. Immune Defic. Syndr. 2013, 63, S26–S31.

- Khalil, H. Knowledge translation and implementation science: What is the difference? JBI Evid. Implement. 2016, 14, 39–40.

- Aarons, G.A.; Ehrhart, M.G.; Farahnak, L.R.; Sklar, M. Aligning Leadership Across Systems and Organizations to Develop a Strategic Climate for Evidence-Based Practice Implementation. Annu. Rev. Public Health 2014, 35, 255–274.

- Yadav, B.; Fealy, G. Irish psychiatric nurses’ self-reported sources of knowledge for practice. J. Psychiatr. Ment. Health Nurs. 2012, 19, 40–46.

- Cañas, A.J.; Novak, J.D. Re-examining the foundations for effective use of concept maps. In Concept Maps: Theory, Methodology, Technology, Proceedings of the Second International Conference on Concept Mapping, San Jose, Costa Rica, 5–8 September 2006; Universidad de Costa Rica: San Jose, Costa Rica; pp. 494–502.

- Vallori, A.B. Meaningful learning in practice. J. Educ. Hum. Dev. 2014, 3, 199–209.

- Miri, B.; David, B.-C.; Uri, Z. Purposely Teaching for the Promotion of Higher-order Thinking Skills: A Case of Critical Thinking. Res. Sci. Educ. 2007, 37, 353–369.

- Elsawah, S.; Guillaume, J.H.A.; Filatova, T.; Rook, J.; Jakeman, A.J. A methodology for eliciting, representing, and analysing stakeholder knowledge for decision making on complex socio-ecological systems: From cognitive maps to agent-based models. J. Environ. Manag. 2015, 151, 500–516.

- Wang, M.; Wu, B.; Kirschner, P.A.; Michael Spector, J. Using cognitive mapping to foster deeper learning with complex problems in a computer-based environment. Comput. Hum. Behav. 2018, 87, 450–458.

- Wu, B.; Wang, M.; Grotzer, T.A.; Liu, J.; Johnson, J.M. Visualizing complex processes using a cognitive-mapping tool to support the learning of clinical reasoning. BMC Med. Educ. 2016, 16, 216.

- Sun, M.; Wang, M.; Wegerif, R. Using computer-based cognitive mapping to improve students’ divergent thinking for creativity development. Br. J. Educ. Technol. 2019, 50, 2217–2233.

- Derbentseva, N.; Safayeni, F.; Cañas, A.J. Concept maps: Experiments on dynamic thinking. J. Res. Sci. Teach. 2007, 44, 448–465.

- Özesmi, U.; Özesmi, S.L. Ecological models based on people’s knowledge: A multi-step fuzzy cognitive mapping approach. Ecol. Model. 2004, 176, 43–64.

- Täuscher, K.; Abdelkafi, N. Visual tools for business model innovation: Recommendations from a cognitive perspective. Creat. Innov. Manag. 2017, 26, 160–174.

- Village, J.; Salustri, F.A.; Neumann, W.P. Cognitive mapping: Revealing the links between human factors and strategic goals in organizations. Int. J. Ind. Ergon. 2013, 43, 304–313.

- Ahn, A.C.; Tewari, M.; Poon, C.-S.; Phillips, R.S. The limits of reductionism in medicine: Could systems biology offer an alternative? PLoS Med. 2006, 3, e208.

- Grocott, M.P.W. Integrative physiology and systems biology: Reductionism, emergence and causality. Extrem. Physiol. Med. 2013, 2, 9.

- American Physical Therapy Association. Vision 2020. Available online: http://www.apta.org/AM/Template.cfm?Section=Vision_20201&Template=/TaggedPage/TaggedPageDisplay.cfm&TPLID=285&ContentID=32061. (accessed on 3 January 2013).

- Wolkenhauer, O.; Green, S. The search for organizing principles as a cure against reductionism in systems medicine. FEBS J. 2013, 280, 5938–5948.

- Zorek, J.; Raehl, C. Interprofessional education accreditation standards in the USA: A comparative analysis. J. Interprofessional Care 2013, 27, 123–130.

- McDougall, A.; Goldszmidt, M.; Kinsella, E.; Smith, S.; Lingard, L. Collaboration and entanglement: An actor-network theory analysis of team-based intraprofessional care for patients with advanced heart failure. Soc. Sci. Med. 2016, 164, 108–117.

- Gambrill, E. Critical Thinking in Clinical Practice; John Wiley & Sons: Hoboken, NJ, USA, 2012.

- Fava, L.; Morton, J. Causal modeling of panic disorder theories. Clin. Psychol. Rev. 2009, 29, 623–637.

- Pilecki, B.; Arentoft, A.; McKay, D. An evidence-based causal model of panic disorder. J. Anxiety Disord. 2011, 25, 381–388.

- Scaffa, M.E.; Wooster, D.M. Effects of problem-based learning on clinical reasoning in occupational therapy. Am. J. Occup. Ther. 2004, 58, 333–336.

- Masek, A.; Yamin, S. Problem based learning for epistemological competence: The knowledge acquisition perspectives. J. Tech. Educ. Train. 2011, 3. Available online: https://publisher.uthm.edu.my/ojs/index.php/JTET/article/view/257 (accessed on 29 January 2021).

- Schmidt, H.G.; Rikers, R.M. How expertise develops in medicine: Knowledge encapsulation and illness script formation. Med. Educ. 2007, 41, 1133–1139.

- Facione, N.C.; Facione, P.A. Critical Thinking and Clinical Judgment. In Critical Thinking and Clinical Reasoning in the Health Sciences: A Teaching Anthology; Insight Assessment/The California Academic Press: Millbrae CA, USA, 2008; pp. 1–13. Available online: https://insightassessment.com/wp-content/uploads/ia/pdf/CH-1-CT-CR-Facione-Facione.pdf (accessed on 29 January 2021).

- Newell, A. Unified Theories of Cognition; Cambridge University Press: Cambridge, MA, USA, 1990.

- Howard, J. Bursting the Big Data Bubble: The Case for Intuition-Based Decision Making; Liebowitz, J., Ed.; Auerbach: Boca Raton, FL, USA, 2015; pp. 57–69.

- Bornstein, B.H.; Emler, A.C. Rationality in medical decision making: A review of the literature on doctors’ decision-making biases. J. Eval. Clin. Pract. 2001, 7, 97–107.

- Mamede, S.; Schmidt, H.G.; Rikers, R. Diagnostic errors and reflective practice in medicine. J. Eval. Clin. Pract. 2007, 13, 138–145.

- Klein, J.G. Five pitfalls in decisions about diagnosis and prescribing. BMJ: Br. Med. J. 2005, 330, 781.

- Schwartz, A.; Elstein, A.S. Clinical reasoning in medicine. Clin. Reason. Health Prof. 2008, 3, 223–234.

- Mann, K.; Gordon, J.; MacLeod, A. Reflection and reflective practice in health professions education: A systematic review. Adv. Health Sci. Educ. 2009, 14, 595–621.

- Cross, V. Introducing physiotherapy students to the idea of ‘reflective practice’. Med. Teach. 1993, 15, 293–307.

- Donaghy, M.E.; Morss, K. Guided reflection: A framework to facilitate and assess reflective practice within the discipline of physiotherapy. Physiother. Theory Pract. 2000, 16, 3–14.

- Entwistle, N.; Ramsden, P. Understanding Learning; Croom Helm: London, UK, 1983.

- Routledge, J.; Willson, M.; McArthur, M.; Richardson, B.; Stephenson, R. Reflection on the development of a reflective assessment. Med. Teach. 1997, 19, 122–128.

- King, G.; Currie, M.; Bartlett, D.J.; Gilpin, M.; Willoughby, C.; Tucker, M.A.; Baxter, D. The development of expertise in pediatric rehabilitation therapists: Changes in approach, self-knowledge, and use of enabling and customizing strategies. Dev. Neurorehabilit. 2007, 10, 223–240.

- Johns, C. Nuances of reflection. J. Clin. Nurs. 1994, 3, 71–74.

- Koole, S.; Dornan, T.; Aper, L.; Scherpbier, A.; Valcke, M.; Cohen-Schotanus, J.; Derese, A. Factors confounding the assessment of reflection: A critical review. BMC Med. Educ. 2011, 11, 104.

- Larrivee, B. Development of a tool to assess teachers’ level of reflective practice. Reflective Pract. 2008, 9, 341–360.

- Rassafiani, M. Is length of experience an appropriate criterion to identify level of expertise? Scand. J. Occup. Ther. 2009, 1–10.

- Wainwright, S.; Shepard, K.; Harman, L.; Stephens, J. Factors that influence the clinical decision making of novice and experienced physical therapists. Phys. Ther. 2011, 91, 87–101.

- Benner, P. From Novice to Expert; Addison-Wesley Publishing Company: Menlo Park, CA, USA, 1984.

- Eva, K.W.; Cunnington, J.P.; Reiter, H.I.; Keane, D.R.; Norman, G.R. How can I know what I don’t know? Poor self assessment in a well-defined domain. Adv. Health Sci. Educ. 2004, 9, 211–224.

- Eva, K.W.; Regehr, G. “I’ll never play professional football” and other fallacies of self-assessment. J. Contin. Educ. Health Prof. 2008, 28, 14–19.

- Galbraith, R.M.; Hawkins, R.E.; Holmboe, E.S. Making self-assessment more effective. J. Contin. Educ. Health Prof. 2008, 28, 20–24.

- Furnari, S.; Crilly, D.; Misangyi, V.F.; Greckhamer, T.; Fiss, P.C.; Aguilera, R. Capturing causal complexity: Heuristics for configurational theorizing. Acad. Manag. Rev. 2020.

- Gopnik, A.; Glymour, C.; Sobel, D.M.; Schulz, L.E.; Kushnir, T.; Danks, D. A theory of causal learning in children: Causal maps and Bayes nets. Psychol. Rev. 2004, 111, 3–32.

- Hattori, M.; Oaksford, M. Adaptive non-interventional heuristics for covariation detection in causal induction: Model comparison and rational analysis. Cogn. Sci. 2007, 31, 765–814.

- Friston, K.; Schwartenbeck, P.; FitzGerald, T.; Moutoussis, M.; Behrens, T.; Dolan, R.J. The anatomy of choice: Dopamine and decision-making. Philos. Trans. R. Soc. B: Biol. Sci. 2014, 369, 20130481.

- Lu, H.; Yuille, A.L.; Liljeholm, M.; Cheng, P.W.; Holyoak, K.J. Bayesian generic priors for causal learning. Psychol. Rev. 2008, 115, 955.

- Sloman, S. Causal Models: How People Think about the World and Its Alternatives; Oxford University Press: New York, NY, USA, 2005.

- Waldmann, M.R.; Hagmayer, Y. Causal reasoning. In The Oxford Handbook of Cognitive Psychology; Oxford Library of Psychology, Oxford University Press: New York, NY, USA, 2013; pp. 733–752.

- Rehder, B.; Kim, S. How causal knowledge affects classification: A generative theory of categorization. J. Exp. Psychol. 2006, 32, 659–683.

- Davies, M. Concept mapping, mind mapping and argument mapping: What are the differences and do they matter? High. Educ. 2011, 62, 279–301.

- Daley, B.J.; Torre, D.M. Concept maps in medical education: An analytical literature review. Med. Educ. 2010, 44, 440–448.

- McHugh Schuster, P. Concept Mapping a Critical-Thinking Approach to Care Planning; F.A.Davis: Philadelphia, PA, USA, 2016.

- Blackstone, S.; Iwelunmor, J.; Plange-Rhule, J.; Gyamfi, J.; Quakyi, N.K.; Ntim, M.; Ogedegbe, G. Sustaining nurse-led task-shifting strategies for hypertension control: A concept mapping study to inform evidence-based practice. Worldviews Evid. Based Nurs. 2017, 14, 350–357.

- Powell, B.J.; Beidas, R.S.; Lewis, C.C.; Aarons, G.A.; McMillen, J.C.; Proctor, E.K.; Mandell, D.S. Methods to improve the selection and tailoring of implementation strategies. J. Behav. Health Serv. Res. 2017, 44, 177–194.

- Mehlman, M.J. Quackery. Am. J. Law Med. 2005, 31, 349–363.

- Ruiter, R.A.; Crutzen, R. Core processes: How to use evidence, theories, and research in planning behavior change interventions. Front. Public Health 2020, 8, 247.

- Bannigan, K.; Moores, A. A model of professional thinking: Integrating reflective practice and evidence based practice. Can. J. Occup. Ther. 2009, 76, 342–350.

- Krueger, R.B.; Sweetman, M.M.; Martin, M.; Cappaert, T.A. Occupational Therapists’ Implementation of Evidence-Based Practice: A Cross Sectional Survey. Occup. Ther. Health Care 2020, 1–24.

- Cole, J.R.; Persichitte, K.A. Fuzzy cognitive mapping: Applications in education. Int. J. Intell. Syst. 2000, 15, 1–25.

- Harden, R.M. What is a spiral curriculum? Med. Teach. 1999, 21, 141–143.

- Clark, A.M. What are the components of complex interventions in healthcare? Theorizing approaches to parts, powers and the whole intervention. Soc. Sci. Med. 2013, 93, 185–193.

- Whyte, J.; Dijkers, M.P.; Hart, T.; Zanca, J.M.; Packel, A.; Ferraro, M.; Tsaousides, T. Development of a theory-driven rehabilitation treatment taxonomy: Conceptual issues. Arch. Phys. Med. Rehabil. 2014, 95, S24–S32.e22.

- Levack, W.M.; Meyer, T.; Negrini, S.; Malmivaara, A. Cochrane Rehabilitation Methodology Committee: An international survey of priorities for future work. Eur. J. Phys. Rehabil. Med. 2017, 53, 814–817.

- Hart, T.; Ehde, D.M. Defining the treatment targets and active ingredients of rehabilitation: Implications for rehabilitation psychology. Rehabil. Psychol. 2015, 60, 126.

- Dombrowski, S.U.; O’Carroll, R.E.; Williams, B. Form of delivery as a key ‘active ingredient’ in behaviour change interventions. Br. J. Health Psychol. 2016, 21, 733–740.

- Wolf, S.L.; Winstein, C.J.; Miller, J.P.; Taub, E.; Uswatte, G.; Morris, D.; Giuliani, C.; Light, K.E.; Nichols-Larsen, D.; for the EXCITE Investigators. Effect of Constraint-Induced Movement Therapy on Upper Extremity Function 3 to 9 Months After Stroke: The EXCITE Randomized Clinical Trial. JAMA 2006, 296, 2095–2104.

- Hoare, B.J.; Wallen, M.A.; Thorley, M.N.; Jackman, M.L.; Carey, L.M.; Imms, C. Constraint-induced movement therapy in children with unilateral cerebral palsy. Cochrane Database Syst. Rev. 2019, 2011, CD003681.

- Gordon, A.M.; Hung, Y.C.; Brandao, M.; Ferre, C.L.; Kuo, H.C.; Friel, K.; Petra, E.; Chinnan, A.; Charles, J.R. Bimanual training and constraint-induced movement therapy in children with hemiplegic cerebral palsy: A randomized trial. Neurorehabil. Neural Repair 2011, 25, 692–702.

- Waddell, K.J.; Strube, M.J.; Bailey, R.R.; Klaesner, J.W.; Birkenmeier, R.L.; Dromerick, A.W.; Lang, C.E. Does task-specific training improve upper limb performance in daily life poststroke? Neurorehabilit. Neural Repair 2017, 31, 290–300.

- Lang, C.E.; Lohse, K.R.; Birkenmeier, R.L. Dose and timing in neurorehabilitation: Prescribing motor therapy after stroke. Curr. Opin. Neurol. 2015, 28, 549.

- Jette, A.M. Opening the Black Box of Rehabilitation Interventions. Phys. Ther. 2020, 100, 883–884.

- Lenker, J.A.; Fuhrer, M.J.; Jutai, J.W.; Demers, L.; Scherer, M.J.; DeRuyter, F. Treatment theory, intervention specification, and treatment fidelity in assistive technology outcomes research. Assist. Technol. 2010, 22, 129–138.

- Johnston, M.V.; Dijkers, M.P. Toward improved evidence standards and methods for rehabilitation: Recommendations and challenges. Arch. Phys. Med. Rehabil. 2012, 93, S185–S199.

- Sinha, Y.; Silove, N.; Hayen, A.; Williams, K. Auditory integration training and other sound therapies for autism spectrum disorders (ASD). Cochrane Database Syst. Rev. 2011.

- Bérard, G. Hearing Equals Behavior; Keats Publishing: New Canaan, CT, USA, 1993.

- American Speech-Language-Hearing Association. Auditory Integration Training [Technical Report]. Available online: https://www2.asha.org/policy/TR2004-00260/ (accessed on 22 January 2021).

| Step | Action |
|---|---|
| Step 1 | Identify possible problem(s), concepts, etc. |
| Step 2 | Reflect on your beliefs, knowledge, experiences, and place any variable which may be affecting the problem on the map |
| Step 3 | Seek empirical evidence and background information |
| Step 4 | Identify and link interventions to the variables they affect |
| Step 5 | Assess applicability, feasibility to context and client |
| Step 6 | Identify and collect outcomes data points locally which will allow assessing accuracy of decision |
| Step 7 | Reflect on outcomes data |
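As a rough illustration of Steps 1, 2, and 4, a causal map can be treated as a small directed graph in which an arrow from one node to another reads "is believed to affect". The sketch below is only one possible representation, not the authors' tool: the class name `CausalMap`, its methods, and the placeholder labels ("variable A", "intervention X", and so on) are all hypothetical and chosen purely for illustration.

```python
from collections import defaultdict


class CausalMap:
    """Hypothetical sketch: a directed graph where an edge a -> b
    means 'a is believed to affect b'."""

    def __init__(self):
        self.edges = defaultdict(set)   # cause -> set of effects
        self.interventions = set()      # nodes that are interventions

    def add_link(self, cause, effect):
        # Step 2: place a variable on the map and link it to what it affects.
        self.edges[cause].add(effect)

    def add_intervention(self, name, target):
        # Step 4: link an intervention to the variable it is thought to affect.
        self.interventions.add(name)
        self.add_link(name, target)

    def reaches(self, start, goal):
        # Depth-first search: is there any causal path from start to goal?
        stack, seen = [start], set()
        while stack:
            node = stack.pop()
            if node == goal:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(self.edges.get(node, ()))
        return False

    def candidate_interventions(self, problem):
        # Interventions with at least one mapped causal path to the problem.
        return sorted(i for i in self.interventions if self.reaches(i, problem))


# Placeholder labels only; a real map would use clinical variables.
cmap = CausalMap()
cmap.add_link("variable A", "problem")            # Steps 1-2
cmap.add_link("variable B", "variable A")
cmap.add_link("variable C", "unrelated outcome")
cmap.add_intervention("intervention X", "variable B")  # Step 4
cmap.add_intervention("intervention Y", "variable C")

print(cmap.candidate_interventions("problem"))
```

In this toy map only "intervention X" has a mapped path to the problem. The sketch says nothing about the strength of evidence for each link (Step 3) or about feasibility and outcomes (Steps 5 to 7), which remain matters of clinical judgment and local data.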

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Benfield, A.; Krueger, R.B. Making Decision-Making Visible—Teaching the Process of Evaluating Interventions. Int. J. Environ. Res. Public Health 2021, 18, 3635. https://doi.org/10.3390/ijerph18073635
