Constructing Epidemiologic Cohorts from Electronic Health Record Data

Abstract

1. Introduction

1.1. Electronic Health Record Data as a Historical Cohort

1.2. Ongoing Example: Diabetes and Heart Failure Hospitalization

2. Important Data Machinery Components for Historical Cohort Studies with EHRs

2.1. Selecting Research Participants

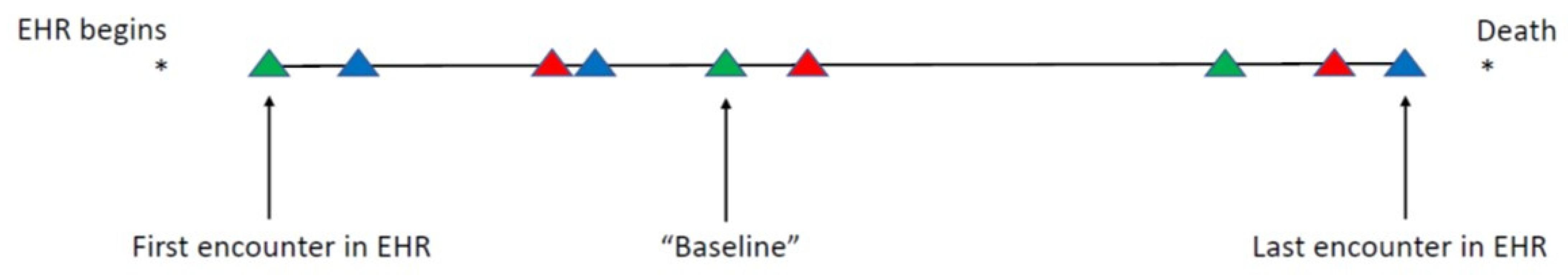

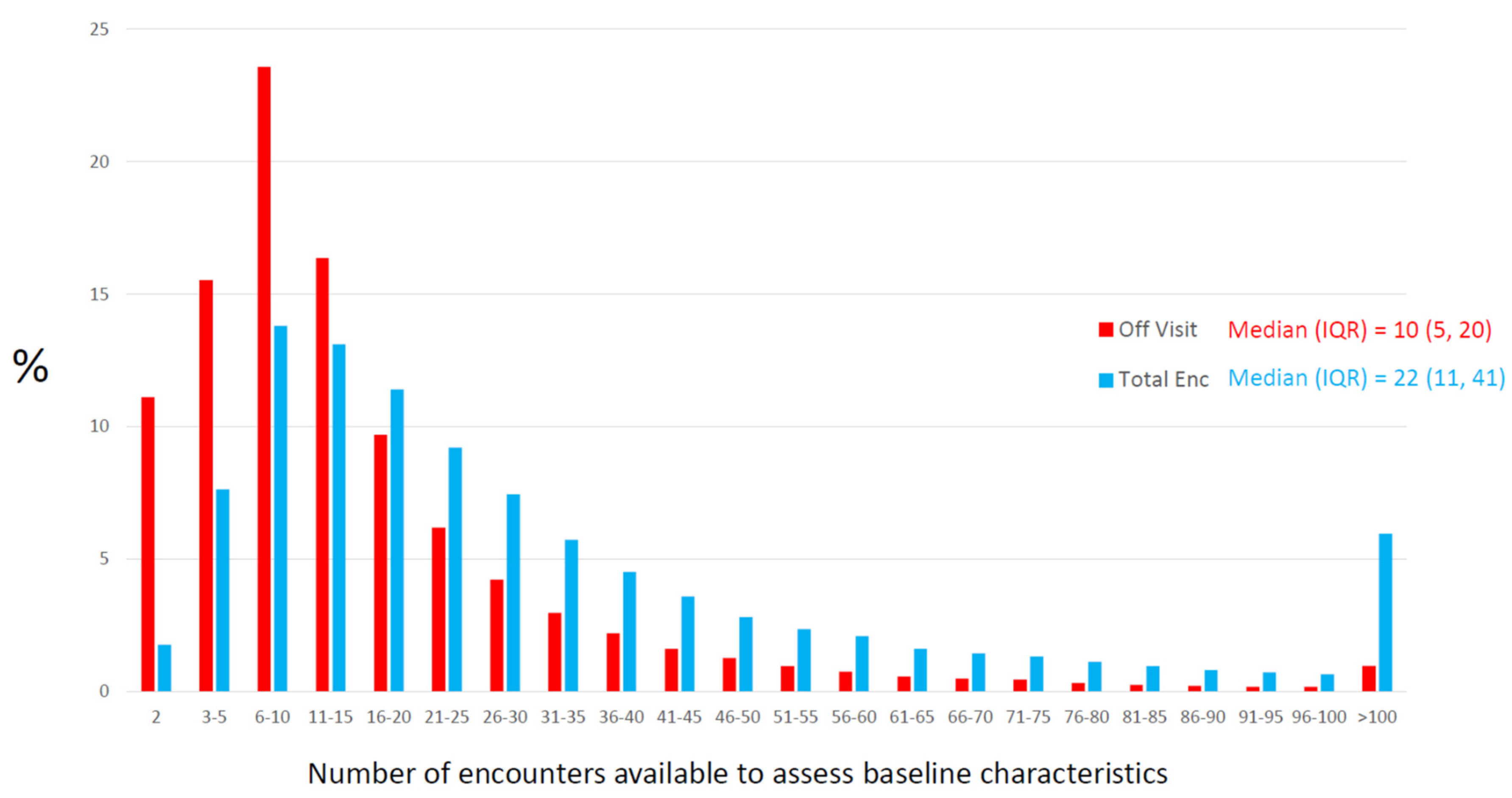

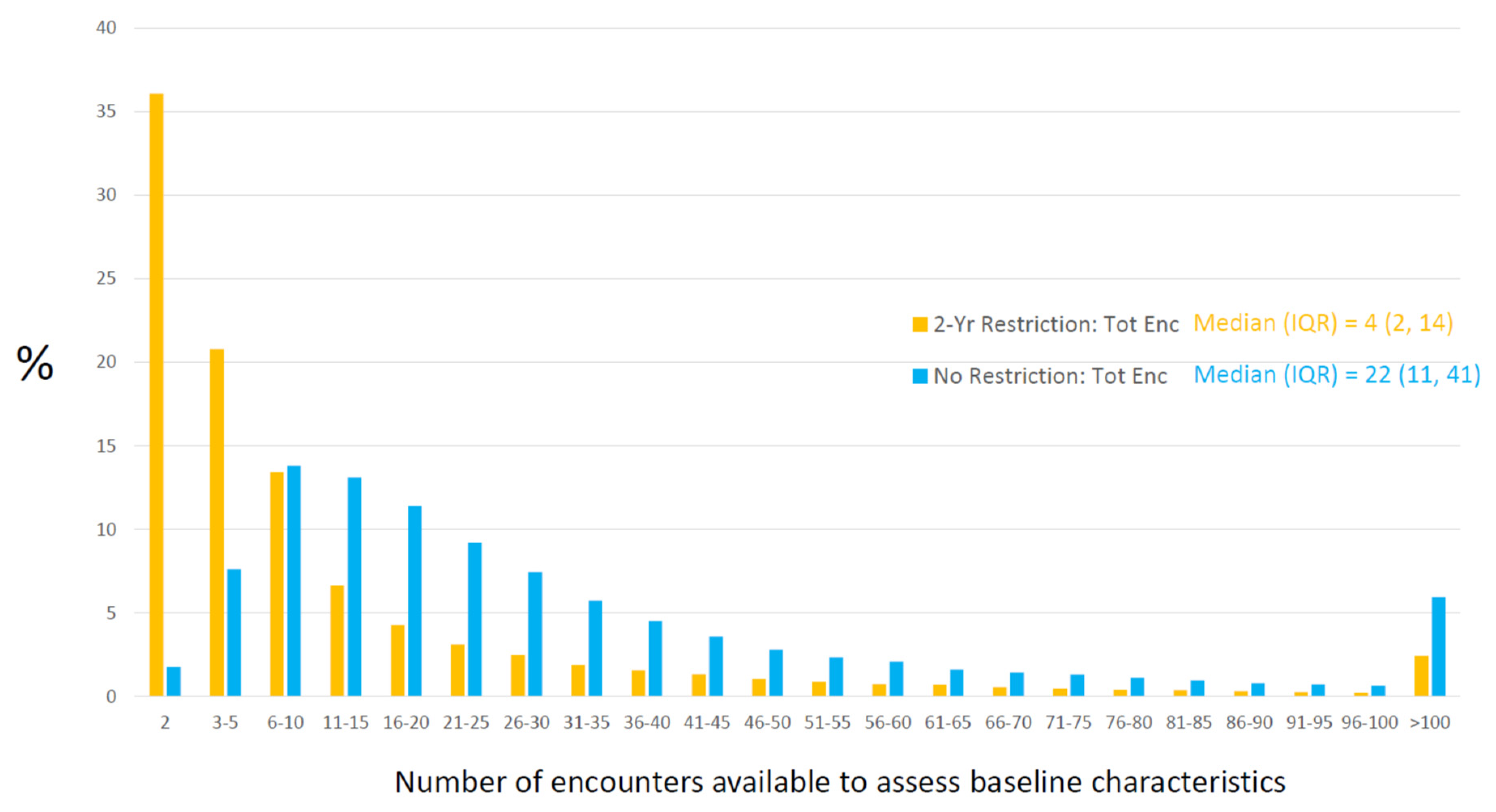

2.2. Defining “Baseline” and Assembly of Baseline Characteristics

2.2.1. EHR Data: What Is Available

2.2.2. Opportunity for Information

2.2.3. Creating Rules for the 99%

2.2.4. Hidden Missingness

2.2.5. Quantitative Data: Measurement Error and Missing Data

2.3. Follow-Up for Future Outcomes

3. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Prospective Study | Retrospective Study with EHR |
|---|---|---|
| Selecting Research Participants | | |
| Baseline | | |
| Assembling Baseline Characteristics | | |
| Follow-up for Future Outcomes | | |

| Term or Phrase | Definition |
|---|---|
| Encounter | Any professional contact between a patient and a healthcare organization, including primary care, specialty care, laboratory testing, emergency department visits, hospital admissions, etc. |
| Opportunity for Information | The collection of pre-baseline encounters that could provide usable research information. It can be expressed in units of time (days from the first encounter to the baseline encounter) or as a number of encounters (between the first and baseline encounters). |
| Creating Rules for the 99% | When assembling baseline characteristics for an EHR-based retrospective study, rules must be created for determining the presence or absence of qualitative characteristics and the values of quantitative characteristics. This informal expression implies that the rules implemented will be imperfect: they work well for the majority of patients but rarely for all of them. |
| Looking for Yes | An expression applied when determining the presence or absence of a binary characteristic, denoting that rules typically look only for positive affirmations of the characteristic and rarely for negative affirmations. |
| Hidden Missingness | A phrase describing the scenario where a qualitative condition (e.g., a diagnosis) is labeled “absent” but was never queried or investigated in clinical practice. Thus, the condition’s true status is actually undetermined despite the “absent” label. |
| Weak No | A scenario where a qualitative condition (e.g., a diagnosis) is labeled absent based on weak information. |
| Strong No | A scenario where a qualitative condition (e.g., a diagnosis) is labeled absent based on strong information. |
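The glossary definitions of Opportunity for Information, Looking for Yes, and the weak/strong no distinction can be made concrete with a small sketch. Everything in it is hypothetical: the `Encounter` record, the function names, the illustrative ICD-10 codes (I10, I50.9), and especially the rule that five or more pre-baseline encounters turn an absent code into a “strong no”. The article does not specify such a threshold; it stands in here only as one possible proxy for “strong information”.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Encounter:
    """Hypothetical minimal encounter record (real EHR extracts hold far more)."""
    patient_id: str
    encounter_date: date
    diagnosis_codes: frozenset = frozenset()


def opportunity_for_information(encounters, baseline_date):
    """Return (days, n_encounters) of pre-baseline history for one patient.

    days: days from the first encounter to the baseline date.
    n_encounters: encounters dated strictly before the baseline date.
    """
    prior = [e.encounter_date for e in encounters if e.encounter_date < baseline_date]
    if not prior:
        return 0, 0  # the baseline encounter is the first contact: no history
    return (baseline_date - min(prior)).days, len(prior)


def classify_condition(encounters, baseline_date, codes, strong_min_encounters=5):
    """Label a condition "present", "weak no", or "strong no".

    "Looking for yes": only positive evidence (a matching code on or before
    baseline) is searched for; no record ever affirms absence directly.
    The encounter-count threshold for a "strong no" is a hypothetical proxy.
    """
    if any(e.diagnosis_codes & codes
           for e in encounters if e.encounter_date <= baseline_date):
        return "present"
    # No code found: the label is "absent" either way; how much history
    # existed decides whether that absence is weakly or strongly supported.
    _, n_prior = opportunity_for_information(encounters, baseline_date)
    return "strong no" if n_prior >= strong_min_encounters else "weak no"


if __name__ == "__main__":
    baseline = date(2020, 1, 10)
    history = [
        Encounter("p1", date(2018, 1, 10), frozenset({"I10"})),
        Encounter("p1", date(2019, 6, 1)),
        Encounter("p1", baseline),  # the baseline encounter itself
    ]
    print(opportunity_for_information(history, baseline))              # (730, 2)
    print(classify_condition(history, baseline, frozenset({"I10"})))    # present
    print(classify_condition(history, baseline, frozenset({"I50.9"})))  # weak no
```

In this sketch a heart failure code that never appears yields “weak no” because the patient has only two prior encounters; the same missing code in a patient with a long, dense record would be reported as “strong no”, even though neither label rests on a documented negative finding.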

| Baseline Characteristic | No Restriction | 2-Year Restriction |
|---|---|---|
| Hypertension | 71% (n = 56,653) | 67% (n = 53,350) |
| High cholesterol | 69% (n = 54,652) | 64% (n = 51,003) |
| Coronary bypass surgery | 7% (n = 5293) | 6% (n = 4673) |
| Heart failure | 11% (n = 9026) | 10% (n = 8170) |
| Acute myocardial infarction | 8% (n = 6516) | 7% (n = 5362) |
| Chest pain | 22% (n = 17,179) | 15% (n = 12,141) |
| Shortness of breath | 16% (n = 12,993) | 12% (n = 9784) |
| Depression | 25% (n = 19,812) | 21% (n = 16,901) |
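The implied denominator is roughly the same in both columns of the table (for example, 56,653 / 0.71 and 53,350 / 0.67 both give close to 80,000 patients), which is consistent with the 2-year restriction narrowing the window searched for diagnosis codes rather than shrinking the cohort. Assuming that reading, the comparison can be sketched as below. The function names, the `(diagnosis_dates, baseline_date)` cohort layout, and the approximation of 2 years as 730 days are all hypothetical choices, not the article's implementation.

```python
from datetime import date

# Assumption: "2 years" approximated as 730 days; the article's exact rule may differ.
TWO_YEAR_LOOKBACK_DAYS = 730


def has_condition(diagnosis_dates, baseline_date, lookback_days=None):
    """True if any diagnosis is recorded on or before baseline.

    With lookback_days set, only diagnoses within that many days before
    baseline count; None means all available history is searched.
    """
    for d in diagnosis_dates:
        if d > baseline_date:
            continue  # post-baseline records belong to follow-up, not baseline
        if lookback_days is None or (baseline_date - d).days <= lookback_days:
            return True
    return False


def prevalence(cohort, lookback_days=None):
    """Return (percent, n) in the style of the table's "71% (n = 56,653)" cells.

    cohort: list of (diagnosis_dates, baseline_date) pairs, one per patient.
    The denominator is the whole cohort under both rules.
    """
    n = sum(has_condition(dx, base, lookback_days) for dx, base in cohort)
    return round(100 * n / len(cohort)), n


if __name__ == "__main__":
    baseline = date(2020, 1, 1)
    cohort = [
        ([date(2015, 3, 1)], baseline),  # old diagnosis only
        ([date(2019, 5, 1)], baseline),  # recent diagnosis
        ([], baseline),                  # never coded
        ([date(2021, 1, 1)], baseline),  # coded only after baseline
    ]
    print(prevalence(cohort))                          # (50, 2)
    print(prevalence(cohort, TWO_YEAR_LOOKBACK_DAYS))  # (25, 1)
```

Because the denominator stays fixed, every patient whose only qualifying code predates the window moves from “present” to “absent”, which in the glossary's terms is a weak no rather than a documented absence.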

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Williams, B.A. Constructing Epidemiologic Cohorts from Electronic Health Record Data. Int. J. Environ. Res. Public Health 2021, 18, 13193. https://doi.org/10.3390/ijerph182413193