Rise of Clinical Studies in the Field of Machine Learning: A Review of Data Registered in ClinicalTrials.gov

Abstract

1. Introduction

1.1. Background

1.2. Research Motivation and Objective

2. Materials and Methods

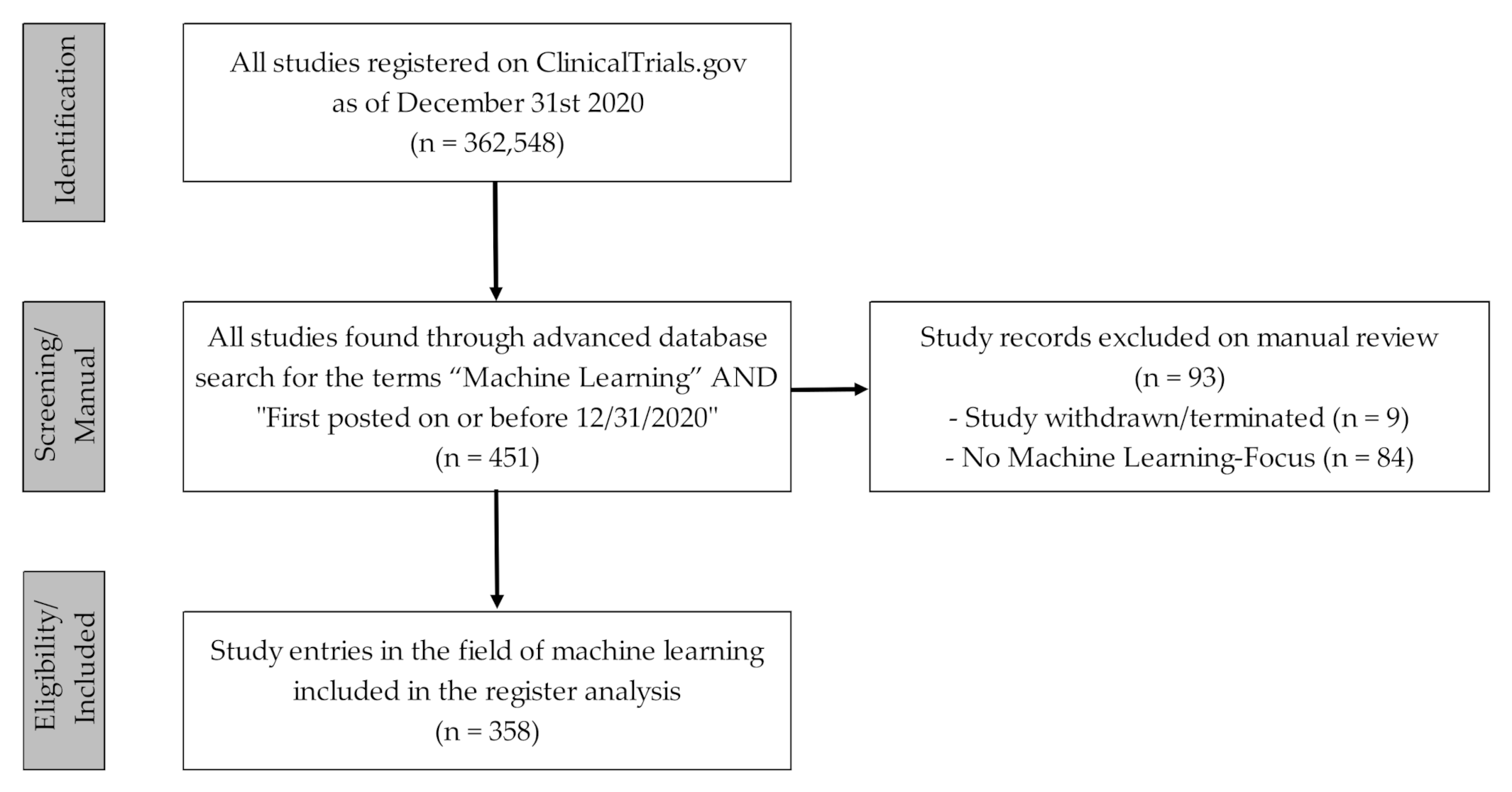

2.1. Data Acquisition and Processing

2.2. Data Evaluation and Analysis

3. Results

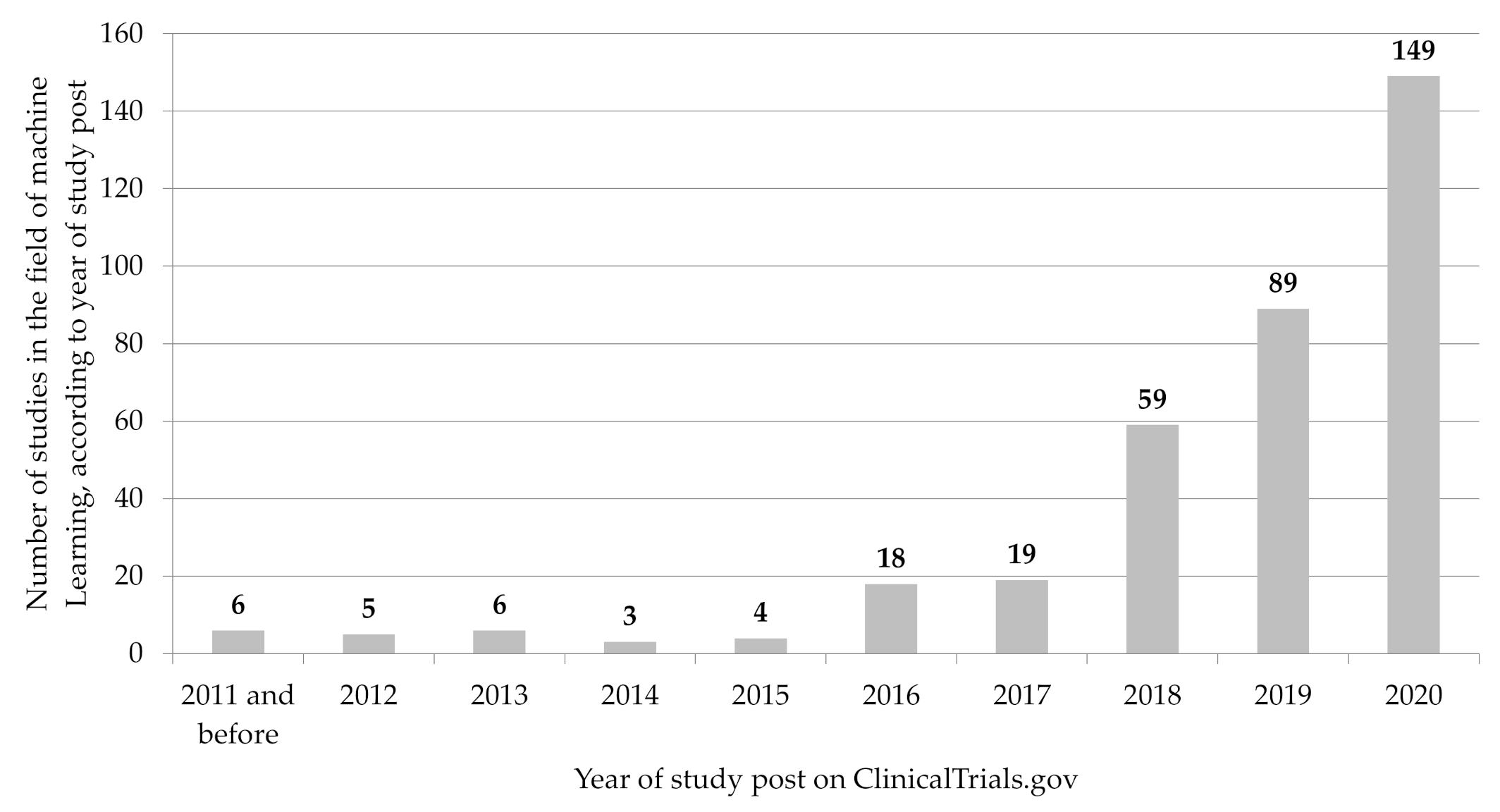

3.1. Registration of ML-Related Studies over Time

3.2. Medical Field of Application

3.3. Patient Recruitment and Study Organization

3.4. Study Type and Design

4. Discussion and Conclusions

4.1. Studies in the Field of ML

- Blomberg et al. set out to analyze whether an ML-based algorithm could recognize out-of-hospital cardiac arrests from audio files of calls to the emergency medical dispatch center (NCT04219306; [60]);

- Jaroszewski et al. aimed to evaluate an ML-driven risk assessment and intervention platform intended to increase the use of psychiatric crisis services (NCT03633825; [61]);

- Mohr et al. aimed to evaluate and compare a smartphone intervention for depression and anxiety that uses ML to optimize treatment for participants (NCT02801877; [62]).

4.2. Regulatory Framework and Aspects

- Tailored regulatory framework for AI/ML-based SaMD;

- Good machine-learning practice;

- Patient-centered approach, incorporating transparency to users;

- Regulatory science methods related to algorithm bias and robustness;

- Real-world performance [71].

4.3. Methodological Notes

5. Summary for Decisionmakers

- In recent years, a growing number of ML algorithms have been developed for the health care sector, offering considerable potential to improve medical diagnostics and treatment. Using a quantitative analysis of registry data, the present study gives an overview of the recent development and current status of clinical studies in the field of ML.

- Based on an analysis of data from the registry platform ClinicalTrials.gov, we show that the number of registered clinical studies in the field of ML has increased every year since 2015, with a particularly steep increase in the last two years.

- The studies analyzed were initiated by a variety of medical specialties, addressed a wide range of medical issues, and used different types of data.

- Although academic institutions and (university) hospitals initiated most studies, a growing number of ML-related algorithms are moving into clinical translation as industry funding increases.

- The increase in the number of studies shows how important it is to further develop current medical device regulations, specifically with regard to the ML-based software product category. The recommendations recently presented by the FDA can provide an important impetus for this.

- Future research with trial registry data might address more detailed sub-analyses of individual groups of studies.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Grant, J.; Green, L.; Mason, B. Basic research and health: A reassessment of the scientific basis for the support of biomedical science. Res. Eval. 2003, 12, 217–224.

2. Green, L.W.; Ottoson, J.M.; García, C.; Hiatt, R.A. Diffusion theory and knowledge dissemination, utilization, and integration in public health. Annu. Rev. Public Health 2009, 30, 151–174.

3. Morris, Z.S.; Wooding, S.; Grant, J. The answer is 17 years, what is the question: Understanding time lags in translational research. J. R. Soc. Med. 2011, 104, 510–520.

4. Contopoulos-Ioannidis, D.G.; Alexiou, G.A.; Gouvias, T.C.; Ioannidis, J.P. Medicine. Life cycle of translational research for medical interventions. Science 2008, 321, 1298–1299.

5. Trochim, W.; Kane, C.; Graham, M.J.; Pincus, H.A. Evaluating translational research: A process marker model. Clin. Transl. Sci. 2011, 4, 153–162.

6. Murdoch, T.B.; Detsky, A.S. The Inevitable Application of Big Data to Health Care. JAMA 2013, 309, 1351–1352.

7. Raghupathi, W.; Raghupathi, V. Big data analytics in healthcare: Promise and potential. Health Inf. Sci. Syst. 2014, 2, 3.

8. Wang, Y.; Hajli, N. Exploring the path to big data analytics success in healthcare. J. Bus. Res. 2017, 70, 287–299.

9. Mehta, N.; Pandit, A. Concurrence of big data analytics and healthcare: A systematic review. Int. J. Med. Inform. 2018, 114, 57–65.

10. Ngiam, K.Y.; Khor, I.W. Big data and machine learning algorithms for health-care delivery. Lancet Oncol. 2019, 20, e262–e273.

11. Deo, R.C. Machine Learning in Medicine. Circulation 2015, 132, 1920–1930.

12. U.S. National Library of Medicine. Machine Learning; MeSH Unique ID: D000069550. 2016. Available online: https://www.ncbi.nlm.nih.gov/mesh/2010029 (accessed on 7 January 2021).

13. Camacho, D.M.; Collins, K.M.; Powers, R.K.; Costello, J.C.; Collins, J.J. Next-Generation Machine Learning for Biological Networks. Cell 2018, 173, 1581–1592.

14. Chen, P.-H.C.; Liu, Y.; Peng, L. How to develop machine learning models for healthcare. Nat. Mater. 2019, 18, 410–414.

15. Uribe, C.F.; Mathotaarachchi, S.; Gaudet, V.; Smith, K.C.; Rosa-Neto, P.; Bénard, F.; Black, S.E.; Zukotynski, K. Machine Learning in Nuclear Medicine: Part 1-Introduction. J. Nucl. Med. 2019, 60, 451–458.

16. Erickson, B.J.; Korfiatis, P.; Akkus, Z.; Kline, T.L. Machine Learning for Medical Imaging. RadioGraphics 2017, 37, 505–515.

17. Kohli, M.; Prevedello, L.M.; Filice, R.W.; Geis, J.R. Implementing Machine Learning in Radiology Practice and Research. Am. J. Roentgenol. 2017, 208, 754–760.

18. Bonekamp, D.; Kohl, S.; Wiesenfarth, M.; Schelb, P.; Radtke, J.P.; Götz, M.; Kickingereder, P.; Yaqubi, K.; Hitthaler, B.; Gählert, N.; et al. Radiomic Machine Learning for Characterization of Prostate Lesions with MRI: Comparison to ADC Values. Radiology 2018, 289, 128–137.

19. Thrall, J.H.; Li, X.; Li, Q.; Cruz, C.; Do, S.; Dreyer, K.; Brink, J. Artificial Intelligence and Machine Learning in Radiology: Opportunities, Challenges, Pitfalls, and Criteria for Success. J. Am. Coll. Radiol. 2018, 15, 504–508.

20. Burian, E.; Jungmann, F.; Kaissis, G.A.; Lohöfer, F.K.; Spinner, C.D.; Lahmer, T.; Treiber, M.; Dommasch, M.; Schneider, G.; Geisler, F.; et al. Intensive Care Risk Estimation in COVID-19 Pneumonia Based on Clinical and Imaging Parameters: Experiences from the Munich Cohort. J. Clin. Med. 2020, 9, 1514.

21. Kelchtermans, P.; Bittremieux, W.; De Grave, K.; Degroeve, S.; Ramon, J.; Laukens, K.; Valkenborg, D.; Barsnes, H.; Martens, L. Machine learning applications in proteomics research: How the past can boost the future. Proteomics 2014, 14, 353–366.

22. Fröhlich, H.; Balling, R.; Beerenwinkel, N.; Kohlbacher, O.; Kumar, S.; Lengauer, T.; Maathuis, M.H.; Moreau, Y.; Murphy, S.A.; Przytycka, T.M.; et al. From hype to reality: Data science enabling personalized medicine. BMC Med. 2018, 16, 150.

23. Wong, D.; Yip, S. Machine learning classifies cancer. Nature 2018, 555, 446–447.

24. Casagranda, I.; Costantino, G.; Falavigna, G.; Furlan, R.; Ippoliti, R. Artificial Neural Networks and risk stratification models in Emergency Departments: The policy maker’s perspective. Health Policy 2016, 120, 111–119.

25. Maier-Hein, L.; Vedula, S.S.; Speidel, S.; Navab, N.; Kikinis, R.; Park, A.; Eisenmann, M.; Feussner, H.; Forestier, G.; Giannarou, S.; et al. Surgical data science for next-generation interventions. Nat. Biomed. Eng. 2017, 1, 691–696.

26. Lundberg, S.M.; Nair, B.; Vavilala, M.S.; Horibe, M.; Eisses, M.J.; Adams, T.; Liston, D.E.; Low, D.K.; Newman, S.F.; Kim, J.; et al. Explainable machine-learning predictions for the prevention of hypoxaemia during surgery. Nat. Biomed. Eng. 2018, 2, 749–760.

27. Cleophas, T.J.; Zwinderman, A.H. Machine Learning in Medicine—A Complete Overview; Springer International Publishing: Cham, Switzerland, 2020.

28. García-Ordás, M.T.; Arias, N.; Benavides, C.; García-Olalla, O.; Benítez-Andrades, J.A. Evaluation of Country Dietary Habits Using Machine Learning Techniques in Relation to Deaths from COVID-19. Healthcare 2020, 8, 371.

29. Gerke, S.; Babic, B.; Evgeniou, T.; Cohen, I.G. The need for a system view to regulate artificial intelligence/machine learning-based software as medical device. NPJ Digit. Med. 2020, 3, 53.

30. Stern, A.D.; Price, W.N. Regulatory oversight, causal inference, and safe and effective health care machine learning. Biostatistics 2020, 21, 363–367.

31. Subbaswamy, A.; Saria, S. From development to deployment: Dataset shift, causality, and shift-stable models in health AI. Biostatistics 2020, 21, 345–352.

32. McCray, A.T.; Ide, N.C. Design and implementation of a national clinical trials registry. J. Am. Med. Inf. Assoc. 2000, 7, 313–323.

33. McCray, A.T. Better access to information about clinical trials. Ann. Intern. Med. 2000, 133, 609–614.

34. Zarin, D.A.; Tse, T.; Ide, N.C. Trial Registration at ClinicalTrials.gov between May and October 2005. N. Engl. J. Med. 2005, 353, 2779–2787.

35. Zarin, D.A.; Tse, T.; Williams, R.J.; Califf, R.M.; Ide, N.C. The ClinicalTrials.gov results database—Update and key issues. N. Engl. J. Med. 2011, 364, 852–860.

36. U.S. National Library of Medicine. ClinicalTrials.gov → Advanced Search. Available online: https://clinicaltrials.gov/ct2/search/advanced (accessed on 7 January 2021).

37. Ehrhardt, S.; Appel, L.J.; Meinert, C.L. Trends in National Institutes of Health Funding for Clinical Trials Registered in ClinicalTrials.gov. JAMA 2015, 314, 2566–2567.

38. Ross, J.S.; Mulvey, G.K.; Hines, E.M.; Nissen, S.E.; Krumholz, H.M. Trial publication after registration in ClinicalTrials.Gov: A cross-sectional analysis. PLoS Med. 2009, 6, e1000144.

39. Cihoric, N.; Tsikkinis, A.; Miguelez, C.G.; Strnad, V.; Soldatovic, I.; Ghadjar, P.; Jeremic, B.; Dal Pra, A.; Aebersold, D.M.; Lössl, K. Portfolio of prospective clinical trials including brachytherapy: An analysis of the ClinicalTrials.gov database. Radiat. Oncol. 2016, 11, 48.

40. Chen, Y.-P.; Lv, J.-W.; Liu, X.; Zhang, Y.; Guo, Y.; Lin, A.-H.; Sun, Y.; Mao, Y.-P.; Ma, J. The Landscape of Clinical Trials Evaluating the Theranostic Role of PET Imaging in Oncology: Insights from an Analysis of ClinicalTrials.gov Database. Theranostics 2017, 7, 390–399.

41. Zippel, C.; Ronski, S.C.; Bohnet-Joschko, S.; Giesel, F.L.; Kopka, K. Current Status of PSMA-Radiotracers for Prostate Cancer: Data Analysis of Prospective Trials Listed on ClinicalTrials.gov. Pharmaceuticals 2020, 13, 12.

42. Bell, S.A.; Tudur Smith, C. A comparison of interventional clinical trials in rare versus non-rare diseases: An analysis of ClinicalTrials.gov. Orphanet J. Rare Dis. 2014, 9, 170.

43. Subramanian, J.; Madadi, A.R.; Dandona, M.; Williams, K.; Morgensztern, D.; Govindan, R. Review of ongoing clinical trials in non-small cell lung cancer: A status report for 2009 from the ClinicalTrials.gov website. J. Thorac. Oncol. Off. Publ. Int. Assoc. Study Lung Cancer 2010, 5, 1116–1119.

44. Hirsch, B.R.; Califf, R.M.; Cheng, S.K.; Tasneem, A.; Horton, J.; Chiswell, K.; Schulman, K.A.; Dilts, D.M.; Abernethy, A.P. Characteristics of oncology clinical trials: Insights from a systematic analysis of ClinicalTrials.gov. JAMA Intern. Med. 2013, 173, 972–979.

45. Califf, R.M.; Zarin, D.A.; Kramer, J.M.; Sherman, R.E.; Aberle, L.H.; Tasneem, A. Characteristics of clinical trials registered in ClinicalTrials.gov, 2007–2010. JAMA 2012, 307, 1838–1847.

46. Benke, K.; Benke, G. Artificial Intelligence and Big Data in Public Health. Int. J. Environ. Res. Public Health 2018, 15, 2796.

47. Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

48. Kulkarni, S.; Seneviratne, N.; Baig, M.S.; Khan, A.H.A. Artificial Intelligence in Medicine: Where Are We Now? Acad. Radiol. 2020, 27, 62–70.

49. Lee, D.; Yoon, S.N. Application of Artificial Intelligence-Based Technologies in the Healthcare Industry: Opportunities and Challenges. Int. J. Environ. Res. Public Health 2021, 18, 271.

50. Sidey-Gibbons, J.A.M.; Sidey-Gibbons, C.J. Machine learning in medicine: A practical introduction. BMC Med. Res. Methodol. 2019, 19, 64.

51. Brinker, T.J.; Hekler, A.; Utikal, J.S.; Grabe, N.; Schadendorf, D.; Klode, J.; Berking, C.; Steeb, T.; Enk, A.H.; von Kalle, C. Skin Cancer Classification Using Convolutional Neural Networks: Systematic Review. J. Med. Internet Res. 2018, 20, e11936.

52. Kawasaki, T.; Kidoh, M.; Kido, T.; Sueta, D.; Fujimoto, S.; Kumamaru, K.K.; Uetani, T.; Tanabe, Y.; Ueda, T.; Sakabe, D.; et al. Evaluation of Significant Coronary Artery Disease Based on CT Fractional Flow Reserve and Plaque Characteristics Using Random Forest Analysis in Machine Learning. Acad. Radiol. 2020, 27, 1700–1708.

53. Kickingereder, P.; Isensee, F.; Tursunova, I.; Petersen, J.; Neuberger, U.; Bonekamp, D.; Brugnara, G.; Schell, M.; Kessler, T.; Foltyn, M.; et al. Automated quantitative tumour response assessment of MRI in neuro-oncology with artificial neural networks: A multicentre, retrospective study. Lancet Oncol. 2019, 20, 728–740.

54. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

55. Hekler, A.; Utikal, J.S.; Enk, A.H.; Solass, W.; Schmitt, M.; Klode, J.; Schadendorf, D.; Sondermann, W.; Franklin, C.; Bestvater, F.; et al. Deep learning outperformed 11 pathologists in the classification of histopathological melanoma images. Eur. J. Cancer 2019, 118, 91–96.

56. Campanella, G.; Hanna, M.G.; Geneslaw, L.; Miraflor, A.; Werneck Krauss Silva, V.; Busam, K.J.; Brogi, E.; Reuter, V.E.; Klimstra, D.S.; Fuchs, T.J. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat. Med. 2019, 25, 1301–1309.

57. Velasco-Garrido, M.; Zentner, A.; Busse, R. Health Systems, Health Policy and Health Technology Assessment. In Health Technology Assessment and Health Policy-Making in Europe. Current Status, Challenges and Potential; Velasco-Garrido, M., Kristensen, F.B., Nielsen, C.P., Busse, R., Eds.; WHO Regional Office for Europe: Copenhagen, Denmark, 2008.

58. Beck, A.C.C.; Retèl, V.P.; Bhairosing, P.A.; van den Brekel, M.W.M.; van Harten, W.H. Barriers and facilitators of patient access to medical devices in Europe: A systematic literature review. Health Policy 2019, 123, 1185–1198.

59. U.S. National Library of Medicine. ClinicalTrials.gov Protocol Registration Quality Control Review Criteria. Available online: https://prsinfo.clinicaltrials.gov/ProtocolDetailedReviewItems.pdf (accessed on 1 February 2021).

60. Blomberg, S.N.; Folke, F.; Ersbøll, A.K.; Christensen, H.C.; Torp-Pedersen, C.; Sayre, M.R.; Counts, C.R.; Lippert, F.K. Machine learning as a supportive tool to recognize cardiac arrest in emergency calls. Resuscitation 2019, 138, 322–329.

61. Jaroszewski, A.C.; Morris, R.R.; Nock, M.K. Randomized controlled trial of an online machine learning-driven risk assessment and intervention platform for increasing the use of crisis services. J. Consult. Clin. Psychol. 2019, 87, 370–379.

62. Mohr, D.C.; Schueller, S.M.; Tomasino, K.N.; Kaiser, S.M.; Alam, N.; Karr, C.; Vergara, J.L.; Gray, E.L.; Kwasny, M.J.; Lattie, E.G. Comparison of the Effects of Coaching and Receipt of App Recommendations on Depression, Anxiety, and Engagement in the IntelliCare Platform: Factorial Randomized Controlled Trial. J. Med. Internet Res. 2019, 21, e13609.

63. Tesche, C.; Otani, K.; De Cecco, C.N.; Coenen, A.; De Geer, J.; Kruk, M.; Kim, Y.-H.; Albrecht, M.H.; Baumann, S.; Renker, M.; et al. Influence of Coronary Calcium on Diagnostic Performance of Machine Learning CT-FFR: Results from MACHINE Registry. JACC Cardiovasc. Imaging 2020, 13, 760–770.

64. Baumann, S.; Renker, M.; Schoepf, U.J.; De Cecco, C.N.; Coenen, A.; De Geer, J.; Kruk, M.; Kim, Y.H.; Albrecht, M.H.; Duguay, T.M.; et al. Gender differences in the diagnostic performance of machine learning coronary CT angiography-derived fractional flow reserve -results from the MACHINE registry. Eur. J. Radiol. 2019, 119, 108657.

65. De Geer, J.; Coenen, A.; Kim, Y.H.; Kruk, M.; Tesche, C.; Schoepf, U.J.; Kepka, C.; Yang, D.H.; Nieman, K.; Persson, A. Effect of Tube Voltage on Diagnostic Performance of Fractional Flow Reserve Derived from Coronary CT Angiography With Machine Learning: Results From the MACHINE Registry. Am. J. Roentgenol. 2019, 213, 325–331.

66. Wan, N.; Weinberg, D.; Liu, T.-Y.; Niehaus, K.; Ariazi, E.A.; Delubac, D.; Kannan, A.; White, B.; Bailey, M.; Bertin, M.; et al. Machine learning enables detection of early-stage colorectal cancer by whole-genome sequencing of plasma cell-free DNA. BMC Cancer 2019, 19, 832.

67. Lin, J.; Ariazi, E.; Dzamba, M.; Hsu, T.-K.; Kothen-Hill, S.; Li, K.; Liu, T.-Y.; Mahajan, S.; Palaniappan, K.K.; Pasupathy, A.; et al. Evaluation of a sensitive blood test for the detection of colorectal advanced adenomas in a prospective cohort using a multiomics approach. J. Clin. Oncol. 2021, 39, 43.

68. Prabhakar, B.; Singh, R.K.; Yadav, K.S. Artificial intelligence (AI) impacting diagnosis of glaucoma and understanding the regulatory aspects of AI-based software as medical device. Comput. Med. Imaging Graph. 2021, 87, 101818.

69. Zippel, C.; Bohnet-Joschko, S. Post market surveillance in the German medical device sector—current state and future perspectives. Health Policy 2017, 121, 880–886.

70. European Parliament. Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices, amending Directive 2001/83/EC, Regulation (EC) No 178/2002 and Regulation (EC) No 1223/2009 and repealing Council Directives 90/385/EEC and 93/42/EEC. Off. J. Eur. Union 2017, 117, 1–175.

71. FDA. Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan. 2021. Available online: https://www.fda.gov/media/145022/download (accessed on 19 February 2021).

72. Bate, A.; Hobbiger, S.F. Artificial Intelligence, Real-World Automation and the Safety of Medicines. Drug Saf. 2020.

73. Broome, D.T.; Hilton, C.B.; Mehta, N. Policy Implications of Artificial Intelligence and Machine Learning in Diabetes Management. Curr. Diabetes Rep. 2020, 20, 5.

74. Cohen, I.G.; Evgeniou, T.; Gerke, S.; Minssen, T. The European artificial intelligence strategy: Implications and challenges for digital health. Lancet Digit. Health 2020, 2, e376–e379.

75. Pesapane, F.; Volonté, C.; Codari, M.; Sardanelli, F. Artificial intelligence as a medical device in radiology: Ethical and regulatory issues in Europe and the United States. Insights Imaging 2018, 9, 745–753.

76. Larson, D.B.; Harvey, H.; Rubin, D.L.; Irani, N.; Tse, J.R.; Langlotz, C.P. Regulatory Frameworks for Development and Evaluation of Artificial Intelligence-Based Diagnostic Imaging Algorithms: Summary and Recommendations. J. Am. Coll. Radiol. 2020.

77. Scherer, J.; Nolden, M.; Kleesiek, J.; Metzger, J.; Kades, K.; Schneider, V.; Bach, M.; Sedlaczek, O.; Bucher, A.M.; Vogl, T.J.; et al. Joint Imaging Platform for Federated Clinical Data Analytics. JCO Clin. Cancer Inform. 2020, 4, 1027–1038.

78. Grobler, L.; Siegfried, N.; Askie, L.; Hooft, L.; Tharyan, P.; Antes, G. National and multinational prospective trial registers. Lancet 2008, 372, 1201–1202.

79. Hasselblatt, H.; Dreier, G.; Antes, G.; Schumacher, M. The German Clinical Trials Register: Challenges and chances of implementing a bilingual registry. J. Evid. Based Med. 2009, 2, 36–40.

80. Ogino, D.; Takahashi, K.; Sato, H. Characteristics of clinical trial websites: Information distribution between ClinicalTrials.gov and 13 primary registries in the WHO registry network. Trials 2014, 15, 428.

81. Maros, M.E.; Capper, D.; Jones, D.T.W.; Hovestadt, V.; von Deimling, A.; Pfister, S.M.; Benner, A.; Zucknick, M.; Sill, M. Machine learning workflows to estimate class probabilities for precision cancer diagnostics on DNA methylation microarray data. Nat. Protoc. 2020, 15, 479–512.

| Characteristic | Absolute (n) | Relative (%) * |
|---|---|---|
| Overall study status * | | |
| Patient recruitment | | |
| Open | 198 | 55 |
| Not open | 160 | 45 |
| Recruitment status | | |
| Not yet recruiting | 64 | 18 |
| Recruiting | 134 | 37 |
| Enrolling by invitation | 15 | 4 |
| Active, not recruiting | 22 | 6 |
| Suspended | 5 | 1 |
| Completed | 95 | 27 |
| Unknown status | 23 | 6 |
| Study results | | |
| Studies with results | 6 | 2 |
| Studies without results | 352 | 98 |
| Organization/Cooperation | | |
| Number of study locations | | |
| Single study location | 288 | 80 |
| Multiple study locations | 46 | 13 |
| Not clear | 24 | 7 |
| National/International | | |
| National | 345 | 96 |
| International | 13 | 4 |
| Study location/Recruiting country ** | | |
| The United States of America | 144 | 40 |
| China | 34 | 9 |
| The United Kingdom | 28 | 8 |
| Canada | 23 | 6 |
| France | 18 | 5 |
| Switzerland | 14 | 4 |
| Germany | 13 | 4 |
| Israel | 12 | 3 |
| Spain | 12 | 3 |
| Netherlands | 11 | 3 |
| All others (Republic of Korea, Italy, Belgium, etc.) | 67 | 19 |
| Lead sponsor | | |
| University/Hospital | 292 | 82 |
| Industry | 66 | 18 |
| Funding Sources ** | | |
| Industry | 86 | 24 |
| All others (individuals, universities, organizations) | 314 | 88 |
| Government agencies | 19 | 5 |
| National Institutes of Health (NIH) *** | 11 | 3 |
| Other U.S. Federal Agency *** | 8 | 2 |
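
The relative values in the table above can be reproduced from the absolute counts. The following is a minimal sketch, not the authors' analysis code: it assumes that the denominator is the total number of studies, taken here as the sum of the "Open" and "Not open" rows, and that percentages are rounded to whole numbers. The function name `relative_shares` is hypothetical.

```python
# Absolute counts copied from the "Recruitment status" rows of the table above.
recruitment_status = {
    "Not yet recruiting": 64,
    "Recruiting": 134,
    "Enrolling by invitation": 15,
    "Active, not recruiting": 22,
    "Suspended": 5,
    "Completed": 95,
    "Unknown status": 23,
}


def relative_shares(counts: dict[str, int], total: int) -> dict[str, int]:
    """Return each count as a whole-number percentage of `total`."""
    return {label: round(100 * n / total) for label, n in counts.items()}


# Assumption: the denominator is the number of studies, derived here from
# the "Open" (198) and "Not open" (160) rows of the same table.
n_studies = 198 + 160

shares = relative_shares(recruitment_status, n_studies)
for label, pct in shares.items():
    print(f"{label:<25} {recruitment_status[label]:>4} {pct:>3} %")
```

Under these assumptions the recruitment-status counts add up to the same total as the two patient-recruitment rows. The rounded shares add up to 99 rather than 100, which is an effect of rounding each row separately.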

| Characteristic | Absolute (n) | Relative (%) * |
|---|---|---|
| Population studied | | |
| Age group ** | | |
| Included children | 74 | 21 |
| Included adults | 341 | 95 |
| Included older adults (age > 65 years) | 320 | 89 |
| Gender of participants | | |
| Both | 333 | 93 |
| Female only | 20 | 6 |
| Male only | 5 | 1 |
| Study type and design | | |
| Observational Studies *** | 230 | 64 |
| Observational Model | | |
| Cohort | 154 | 43 |
| Case-Control | 26 | 7 |
| Case-Only | 26 | 7 |
| Other | 24 | 7 |
| Time Perspective | | |
| Prospective | 140 | 39 |
| Retrospective | 57 | 16 |
| Cross Sectional | 17 | 5 |
| Other | 16 | 4 |
| Interventional Studies *** | 128 | 36 |
| Allocation | | |
| Randomized | 66 | 18 |
| Non-Randomized | 17 | 5 |
| N/A | 45 | 13 |
| Intervention Model | | |
| Single Group Assignment | 48 | 13 |
| Parallel Assignment | 69 | 19 |
| Other (crossover, sequential, etc.) | 11 | 3 |
| Masking/Blinding | | |
| None (Open Label) | 77 | 22 |
| Masked | 51 | 14 |
| Single (Participant or Outcomes Assessor) | 19 | 5 |
| Double or triple | 32 | 9 |
| Primary purpose | | |
| Diagnostic | 37 | 10 |
| Treatment | 26 | 7 |
| Prevention | 12 | 3 |
| Supportive Care | 11 | 3 |
| Other | 42 | 12 |
| Intervention/treatment type ** | | |
| Behavioral | 40 | 11 |
| Device | 86 | 24 |
| Diagnostic Test | 77 | 22 |
| Drug | 17 | 5 |
| Procedure | 13 | 4 |
| Other | 155 | 43 |
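
Some groups in the table above add up to their base, while the groups marked ** exceed it. The sketch below checks this with the counts from the table. It rests on assumptions that the table itself does not state: the ** marker is read as "a study can fall into several categories", and the base for each group (all studies, observational studies only, or interventional studies only) is inferred from the sums. The names `GROUPS` and `classify` are hypothetical.

```python
# Bases taken from the "Observational Studies" and "Interventional Studies" rows.
N_OBSERVATIONAL = 230
N_INTERVENTIONAL = 128
N_STUDIES = N_OBSERVATIONAL + N_INTERVENTIONAL

# Each entry: (counts copied from the table above, assumed base for the group).
GROUPS = {
    "Gender of participants": ([333, 20, 5], N_STUDIES),
    "Observational model": ([154, 26, 26, 24], N_OBSERVATIONAL),
    "Time perspective": ([140, 57, 17, 16], N_OBSERVATIONAL),
    "Allocation": ([66, 17, 45], N_INTERVENTIONAL),
    "Primary purpose": ([37, 26, 12, 11, 42], N_INTERVENTIONAL),
    "Age group **": ([74, 341, 320], N_STUDIES),
    "Intervention/treatment type **": ([40, 86, 77, 17, 13, 155], N_STUDIES),
}


def classify(counts: list[int], base: int) -> str:
    """Compare the sum of a group's counts with its assumed base."""
    total = sum(counts)
    if total == base:
        return "mutually exclusive"
    if total > base:
        return "multiple categories per study"
    return "incomplete"


results = {name: classify(counts, base) for name, (counts, base) in GROUPS.items()}
for name, verdict in results.items():
    print(f"{name:<32} {verdict}")
```

Read this way, percentages in the ** groups are not expected to add up to 100, because each study can contribute to more than one row.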

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zippel, C.; Bohnet-Joschko, S. Rise of Clinical Studies in the Field of Machine Learning: A Review of Data Registered in ClinicalTrials.gov. Int. J. Environ. Res. Public Health 2021, 18, 5072. https://doi.org/10.3390/ijerph18105072
