Abstract

Nucleus segmentation of fluorescence microscopy images is a critical step in quantifying measurements in cell biology. Automatic and accurate nucleus segmentation has powerful applications in characterizing nucleus morphology. However, existing methods have limited capacity to segment challenging samples accurately, such as noisy images and clumped nuclei. In this paper, inspired by the cascaded U-Net (or W-Net) and its remarkable performance improvement in medical image segmentation, we propose a novel framework called Attention-enhanced Simplified W-Net (ASW-Net), which uses a cascade-like structure with between-net connections. Results show that this lightweight model reaches remarkable segmentation performance on the BBBC039 testing set (aggregated Jaccard index, 0.90). In addition, our proposed framework outperforms state-of-the-art methods in segmentation performance. We further explore the effectiveness of the designed network by visualizing its deep features. Notably, our proposed framework is open source.

1. Introduction

Image segmentation plays a vital role in cell biology in acquiring quantitative measurements [1]. From microscopy-based measurement [2], multiplex imaging analysis [3,4], to high-content screening [5,6], image segmentation is crucial to the characterization of cell signaling and morphology, such as the size and shape.

One of the critical steps for quantifying measurements in fluorescence microscopy is accurate nucleus segmentation. It is the first step in identifying cell borders [7], enabling each cell to be characterized. Automatic nucleus segmentation removes the need to draw nuclear contours manually, which is inefficient and makes subtle visual features hard to assess [8]. Over the past decades, significant research efforts have been made to improve the performance of nucleus segmentation [9]. For instance, thresholding [10], watershed algorithms [11,12], and active contours [13,14] are some of the dominant approaches to this segmentation task. However, these methods rely heavily on expert knowledge to set parameters, and the parameters must be readjusted for different experimental conditions. Moreover, these classical algorithms do not work effectively in some challenging cases, such as noisy images and crowded nuclei [1,7,15].

Deep learning has recently succeeded in image segmentation tasks and often achieves much higher performance on popular benchmarks than traditional methods [16]. From multi-organ segmentation on CT images [17,18] to tumor-tissue segmentation on histopathological images [19,20], deep learning has achieved outstanding performance improvements. These segmentation tasks can be formulated as classifying each pixel with a semantic label [16]. Hence, nucleus segmentation can be considered a problem of classifying each pixel into a semantic category. Previous works illustrate that deep learning models such as U-Net [23] and W-Net [24] are effective in nucleus segmentation [1,21,22]. The architecture of U-Net is broadly understood as an encoder convolutional neural network followed by a decoder network. The encoder extracts features related to the segmentation task, and the decoder then constructs the mask given only those features. The traditional U-Net architecture consists of basic convolution, pooling, and deconvolution operations. In contrast, W-Net is a network with a cascaded U-shaped architecture [24]. In W-Net, the between-net connections are designed to preserve features from shallow layers to deep layers by concatenation operations [24]. This cascade structure yields a noticeable accuracy improvement. However, W-Net is a computationally heavy network due to its cascade architecture. Thus, a large amount of data is required to train W-Net, and training a well-fitted model takes longer than for U-Net.

Given the importance of nucleus segmentation and the limited data currently available for it, in this study we revisited the challenge of nucleus segmentation by incorporating ideas from W-Net. In particular, inspired by U-Net and W-Net, we proposed a novel method for nucleus segmentation, named attention-enhanced simplified W-Net (ASW-Net). Our proposed model is a lightweight network utilizing a cascade-like structure with between-net connections. An attention gate is connected to the convolution block before each up-sampling process to extract representative features effectively. In this framework, we also use interior expansion, a post-processing method, to improve nucleus segmentation accuracy.

In this study, we propose a novel framework with ASW-Net for the nucleus segmentation of fluorescence microscopy. Attention gates are applied to ASW-Net for better learning ability. Moreover, we visualize the deep features extracted from ASW-Net to interpret how this model effectively improves segmentation performance.

We have released ASW-Net as a publicly available segmentation tool for the community. The pre-trained model and all support materials are available at https://github.com/Liuzhe30/ASW-Net.

2. Materials and Methods

2.1. Benchmark Dataset

In total, we employed two image datasets in our experiment: the BBBC039 dataset [5] and a ganglioneuroblastoma image set [25].

BBBC039 is a high-throughput chemical screen on the human osteosarcoma U2OS cell line, available from the Broad Bioimage Benchmark Collection (http://www.broad.mit.edu/bbbc) (accessed date: 22 May 2021). This image set includes only the DNA channel of a single field of view. The collection has around 23,165 nuclei annotated by expert biologists, covering varied perturbations from one experiment. The dataset consists of 200 images, each 520 × 696 pixels in size. To allow comparison with previous work, we used the same data partitions that the Broad Institute suggests: the collection is split into 100 training, 50 validation, and 50 testing images. In total, more than 72 million pixels were used in our pixel classification task for nucleus segmentation.
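The pixel count quoted above follows directly from the image size and the number of images. The short check below is only an arithmetic illustration using the figures stated in this section; the variable names are ours.

```python
# Figures taken from the dataset description in this section.
N_IMAGES = 200
HEIGHT, WIDTH = 520, 696
SPLIT = {"training": 100, "validation": 50, "testing": 50}

# The three partitions should account for every image.
assert sum(SPLIT.values()) == N_IMAGES

pixels_per_image = HEIGHT * WIDTH
total_pixels = N_IMAGES * pixels_per_image
pixels_per_split = {name: n * pixels_per_image for name, n in SPLIT.items()}

print(f"pixels per image: {pixels_per_image:,}")  # 361,920
print(f"total pixels:     {total_pixels:,}")      # 72,384,000
for name, count in pixels_per_split.items():
    print(f"{name:>10}: {count:,}")
```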

The ganglioneuroblastoma dataset includes ten images containing 2773 nuclei. This image set is highly heterogeneous in magnification (20×, 40×, or 63×) and in signal-to-noise ratio (SNR): 70% of the dataset has normal SNR and 30% has low SNR. We used this collection as external validation to check the generalization capability of ASW-Net across different magnifications, cell types, and SNR levels.

2.2. Algorithm Framework

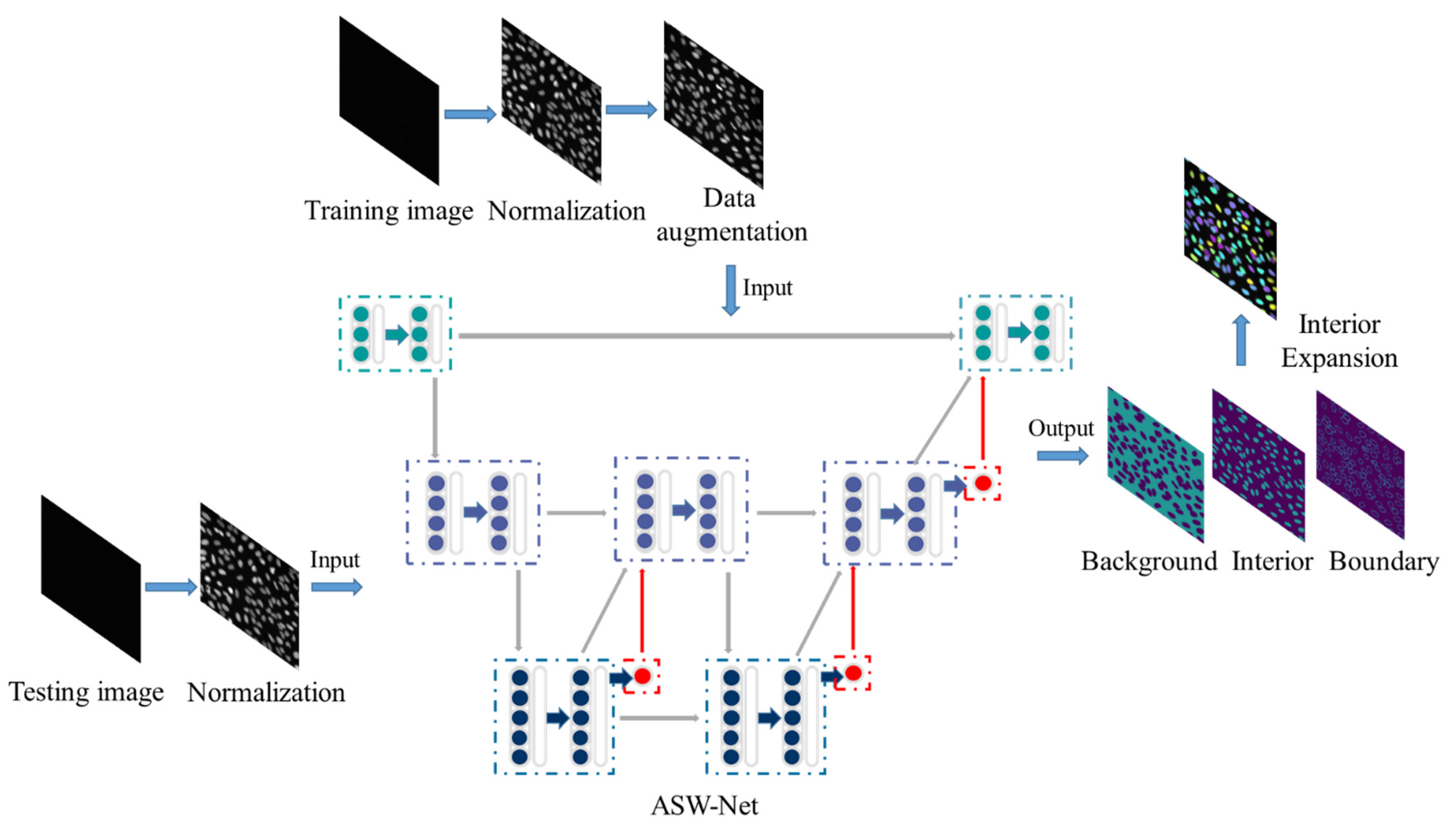

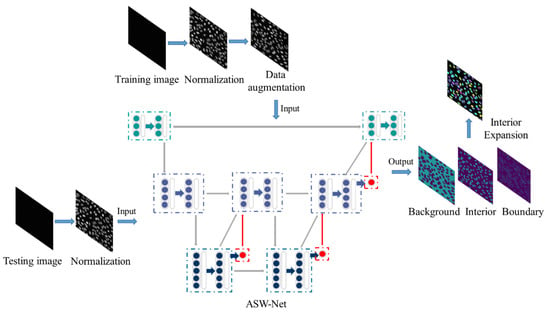

Our overall framework for nuclear instance segmentation contains three parts: image pre-processing, network architecture, and image post-processing (see Figure 1). In pre-processing, we first applied gray-scale normalization to make contours and instances clearer in the source image. To limit over-fitting, we also applied data augmentation (rotation and flip) to the training set. The processed images were then fed into our proposed deep-learning network, ASW-Net, for training or testing, to predict whether a given pixel is on the edge of a nucleus, inside a nucleus, or in the background. The detailed structure of ASW-Net is described in the next section. Because the boundaries in the raw predictions are thick, we implemented the interior expansion algorithm to thin the nuclear boundaries and improve prediction accuracy. After these procedures, we obtained the final segmentation results.
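As an illustration of the pre-processing step, the following is a minimal NumPy sketch of gray-scale normalization and rotation/flip augmentation. The exact normalization and the rotation angles are not specified in the text, so min-max scaling to [0, 1] and rotations by multiples of 90° are assumptions, and the function names `normalize_gray` and `augment` are hypothetical.

```python
import numpy as np

def normalize_gray(image):
    """Rescale intensities to [0, 1] (min-max scaling; an assumed form of normalization)."""
    image = image.astype(np.float32)
    lo, hi = image.min(), image.max()
    if hi == lo:  # constant image: avoid division by zero
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)

def augment(image, mask, rng):
    """Apply the same random rotation (multiple of 90 degrees) and flips to image and mask."""
    k = int(rng.integers(0, 4))  # number of quarter turns; odd k swaps height and width
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    if rng.random() < 0.5:
        image, mask = np.fliplr(image), np.fliplr(mask)
    if rng.random() < 0.5:
        image, mask = np.flipud(image), np.flipud(mask)
    return image, mask

# Synthetic stand-in for one 520 x 696 image and a toy label mask.
rng = np.random.default_rng(0)
raw = rng.integers(0, 4096, size=(520, 696)).astype(np.uint16)
img = normalize_gray(raw)
labels = (img > 0.5).astype(np.uint8)
aug_img, aug_lbl = augment(img, labels, rng)
```

Applying identical transforms to the image and its label mask keeps every pixel aligned with its class, which is the property the augmentation must preserve.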

Figure 1.

Overview of the proposed framework for nuclear instance segmentation. This pipeline contains image pre-processing, network architecture, and image post-processing. ASW-Net is trained on the training images, and appropriate weights are found during training. In the testing process, ASW-Net predicts the probability that each pixel belongs to the background, interior, or boundary class. An interior expansion algorithm is then applied to obtain the final instance segmentation results. The details of ASW-Net are explained in Figure 2.

2.3. Model Design

2.3.1. Network Architecture

Inspired by the classical structures of U-Net [23], W-Net [24], and other pioneering research works, we proposed ASW-Net, a deep learning-based tool for cell nucleus segmentation of fluorescence microscopy. As a simplified W-Net, ASW-Net can extract more features from raw images than U-Net while being lighter than W-Net. The attention mechanism also endows the model with better learning ability and interpretability.

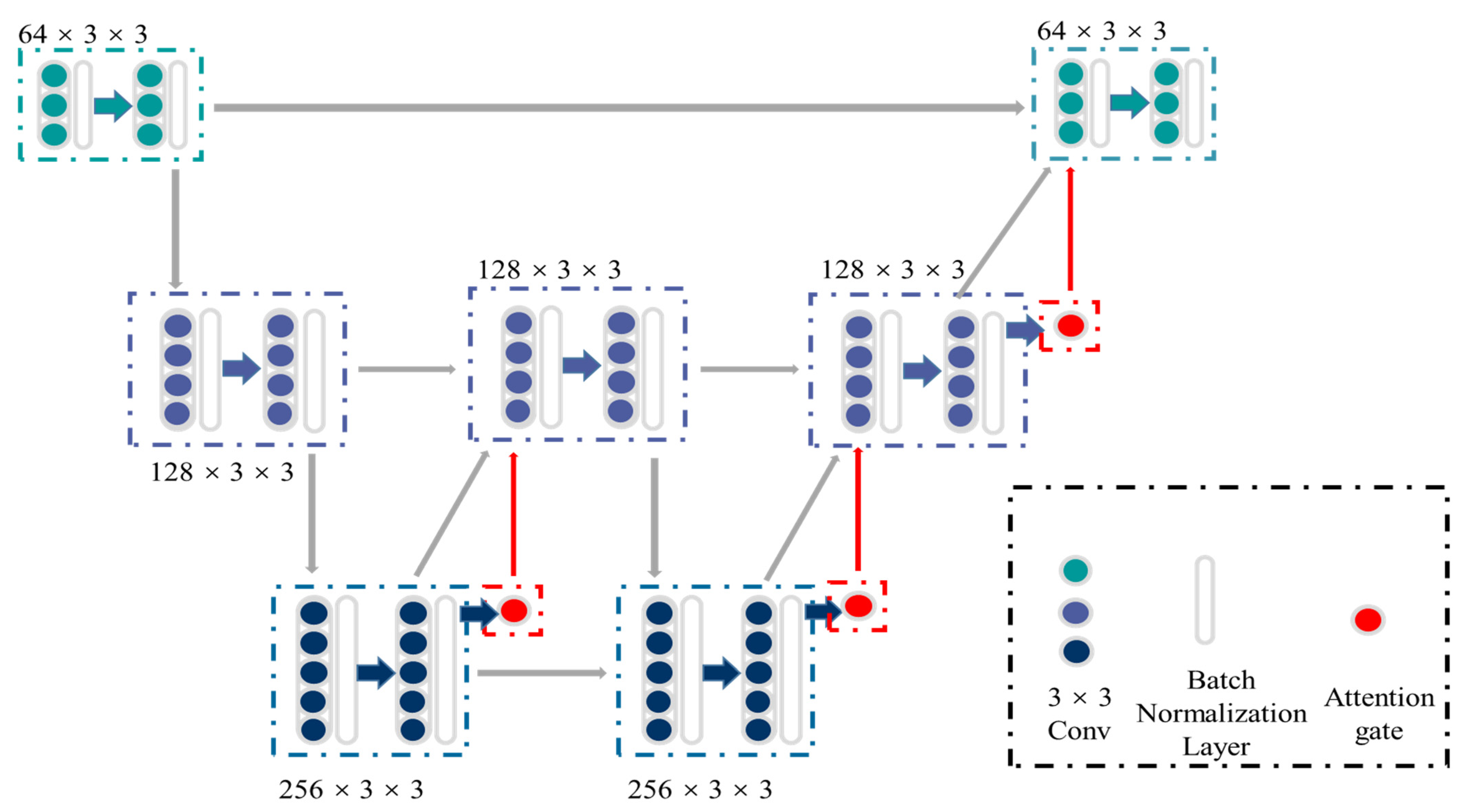

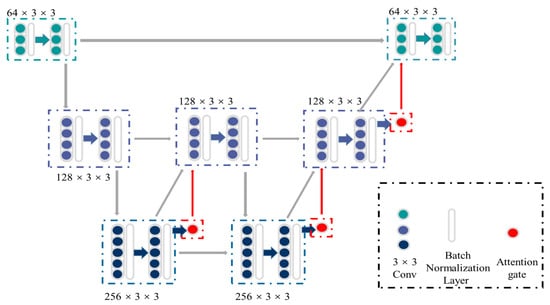

Figure 2 shows the detailed structure of ASW-Net, which contains three down-sampling processes (encoding phase), three up-sampling processes (decoding phase), and three attention gates. First, the input images are fed into two successive down-sampling processes, followed by an up-sampling process. Since the original input consists of features from a shallow level and carries unusable noise with a high probability, the first up-sampling process does not feed it back into the model. To learn the deeper features more purely, we only decode and re-enter the information generated before the second down-sampling.

Figure 2.

The structure of ASW-Net. There are three down-sampling processes (encoding phase), three up-sampling processes (decoding phase), and three attention gates, which form the shape of a ‘W’. The green and blue dots represent the convolutional blocks, while the white transparent boxes represent the batch normalization layers and the red dots represent the attention gates. The gray, green, blue, and red arrows represent the data flow.

After this, we conducted a further down-sampling process followed by two up-sampling processes and combined the original input with the output information to merge the deep and shallow features. In addition, batch normalization layers were implemented to reduce saturation [26], and attention gates were attached to all the up-sampling blocks to increase the accuracy of predicting object boundaries. Finally, we obtained the classification output by combining the original input with the up-sampled result and convolving them.
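The text does not give the internal form of the attention gates. As a rough illustration only, the sketch below implements a common additive attention gate (the form popularized by Attention U-Net) in NumPy; using this form for ASW-Net is an assumption, not a description of the released model. The weight matrices stand in for 1 × 1 convolutions, biases are omitted for brevity, and all names and sizes are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attention_gate(x, g, w_x, w_g, psi):
    """Additive attention gate (assumed form, biases omitted).

    x:   (H, W, Cx) feature map to be gated
    g:   (H, W, Cg) gating signal, already resampled to the same spatial size
    w_x: (Cx, Ci), w_g: (Cg, Ci), psi: (Ci, 1) act as 1x1 convolutions
    """
    joint = np.maximum(x @ w_x + g @ w_g, 0.0)  # ReLU over the summed projections
    alpha = sigmoid(joint @ psi)                # (H, W, 1) attention coefficients in (0, 1)
    return x * alpha, alpha                     # features rescaled pixel by pixel

# Toy tensors with hypothetical sizes.
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8, 16))
g = rng.normal(size=(8, 8, 32))
w_x = rng.normal(size=(16, 4)) * 0.1
w_g = rng.normal(size=(32, 4)) * 0.1
psi = rng.normal(size=(4, 1)) * 0.1

gated, alpha = attention_gate(x, g, w_x, w_g, psi)
print(gated.shape, alpha.shape)  # (8, 8, 16) (8, 8, 1)
```

Because each coefficient lies strictly between 0 and 1, the gate can only attenuate features, so regions that receive coefficients close to 1 (for example nuclear edges) dominate what is passed to the up-sampling block.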

2.3.2. Implementation Details

Our model was implemented, trained, and tested using the open-source software libraries Keras [27] and TensorFlow [28] on NVIDIA Tesla V100 GPUs. The main hyperparameters, such as the kernel size of the convolutional blocks and the learning rate, were explored. We also adopted an early stopping strategy and a save-best strategy: when the validation loss did not decrease for 10 epochs during training, the training process was stopped, and the best model parameters were saved. In all cases, the weights were initialized with the default settings in Keras, and the parameters were trained using an RMSProp optimizer [29], which dynamically adapts the learning rate during model training. Our proposed network was trained by minimizing the weighted cross-entropy loss function [30] for semantic segmentation.
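To make the training criteria concrete, the sketch below writes out a per-class weighted cross-entropy and the early-stopping rule with a patience of 10 epochs in plain NumPy and Python. It is not the Keras code used for ASW-Net: the class weights in the example are hypothetical (the text does not list them), and both function names are ours.

```python
import numpy as np

def weighted_cross_entropy(probs, onehot, class_weights, eps=1e-7):
    """Mean pixel-wise cross-entropy with one weight per class.

    probs, onehot: (H, W, C) predicted probabilities and one-hot labels
    class_weights: (C,) weights, e.g. larger for the rare boundary class
    """
    probs = np.clip(probs, eps, 1.0)  # guard against log(0)
    per_pixel = -(onehot * np.log(probs) * class_weights).sum(axis=-1)
    return float(per_pixel.mean())

def early_stopping_epoch(val_losses, patience=10):
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops once the validation loss has not improved for `patience`
    consecutive epochs; the model from `best_epoch` is the one kept.
    """
    best, best_epoch = float("inf"), -1
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# One pixel whose true class is "boundary" (index 2), with hypothetical weights.
probs = np.array([[[0.5, 0.25, 0.25]]])
onehot = np.array([[[0.0, 0.0, 1.0]]])
loss = weighted_cross_entropy(probs, onehot, np.array([1.0, 1.0, 2.0]))

# Validation loss improves for three epochs, then plateaus.
stop_epoch, best_epoch = early_stopping_epoch([1.0, 0.8, 0.7] + [0.75] * 15)
```

In Keras the same behavior is normally obtained with the `EarlyStopping` and `ModelCheckpoint` callbacks monitoring the validation loss.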

2.4. Post-Processing: Interior Expansion Algorithm to Convert a 3-Class Label to an Instance Label

We chose pixels with an interior probability greater than 0.5 as seeds. Each seeded nucleus expanded its territory by one pixel at each iteration. During the iterations, the interior probability gradually declined, and the boundary probability increased. The seeded nucleus stopped expanding when the boundary probability reached a local maximum along its expansion direction. To prevent a seeded nucleus from expanding into other nuclei in clumped objects, we discarded the boundary pixels added in the last iteration. The union of the remaining pixels and the pixels of the original interior seed was the final output of this algorithm.
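The procedure above can be sketched as a seeded region-growing routine. The version below is a simplified reading, not the released implementation: 4-connectivity, the rule "claim a neighbor only while the boundary probability keeps rising", and the final step of discarding grown pixels that sit on a local maximum of boundary probability are all assumptions made for illustration, and the function names are hypothetical.

```python
from collections import deque
import numpy as np

STEPS = ((-1, 0), (1, 0), (0, -1), (0, 1))  # 4-connectivity (an assumption)

def neighbors(r, c, shape):
    """Yield the in-bounds 4-neighbors of pixel (r, c)."""
    for dr, dc in STEPS:
        nr, nc = r + dr, c + dc
        if 0 <= nr < shape[0] and 0 <= nc < shape[1]:
            yield nr, nc

def label_seeds(interior_prob, threshold=0.5):
    """Give each connected group of pixels with interior probability > threshold its own id."""
    mask = interior_prob > threshold
    labels = np.zeros(mask.shape, dtype=int)
    next_id = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue
        next_id += 1
        labels[start] = next_id
        queue = deque([start])
        while queue:  # breadth-first flood fill of one seed
            r, c = queue.popleft()
            for nr, nc in neighbors(r, c, mask.shape):
                if mask[nr, nc] and not labels[nr, nc]:
                    labels[nr, nc] = next_id
                    queue.append((nr, nc))
    return labels

def interior_expansion(interior_prob, boundary_prob, max_iter=100):
    """Grow each seed one pixel per iteration, then drop the ridge pixels."""
    labels = label_seeds(interior_prob)
    grown = np.zeros(labels.shape, dtype=bool)
    frontier = list(zip(*np.nonzero(labels)))
    for _ in range(max_iter):
        ring = []
        for r, c in frontier:
            for nr, nc in neighbors(r, c, labels.shape):
                # Claim a free neighbor only while the boundary probability keeps rising.
                if not labels[nr, nc] and boundary_prob[nr, nc] > boundary_prob[r, c]:
                    labels[nr, nc] = labels[r, c]
                    grown[nr, nc] = True
                    ring.append((nr, nc))
        if not ring:
            break
        frontier = ring
    # Discard grown pixels lying on a local maximum of boundary probability (the ridge).
    for r, c in zip(*np.nonzero(grown)):
        if all(boundary_prob[r, c] >= boundary_prob[n] for n in neighbors(r, c, labels.shape)):
            labels[r, c] = 0
    return labels

# Two seeds separated by a boundary ridge in a one-row toy image.
interior = np.array([[0.0, 0.9, 0.9, 0.0, 0.0, 0.0, 0.9, 0.9, 0.0]])
boundary = np.array([[0.5, 0.1, 0.1, 0.4, 0.9, 0.4, 0.1, 0.1, 0.5]])
instances = interior_expansion(interior, boundary)
print(instances.tolist())  # [[0, 1, 1, 1, 0, 2, 2, 2, 0]]
```

In the toy example each seed gains one pixel on the side facing the other nucleus, while the ridge pixel between them stays unassigned, so the two instances remain separated.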

2.5. Performance Evaluation Metrics

A commonly used evaluation metric for nucleus segmentation is the Aggregated Jaccard Index (AJI). To quantitatively evaluate the performance of ASW-Net and other nucleus segmentation methods, we adopted four measures: AJI [8], Dice coefficient (DICE1) [31], ensemble Dice (DICE2) [19], and panoptic quality (PQ) [21]. PQ is the product of Detection Quality (DQ) and Segmentation Quality (SQ). These metrics compare the area of overlap between the predicted area of a nucleus and its ground-truth area. A higher metric value leads to more accurate downstream measurements, such as area and perimeter.
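For orientation, the sketch below computes the building blocks of these metrics: the Dice coefficient and Jaccard index of two binary masks, and PQ from already-matched instances using the standard definitions DQ = TP / (TP + FP/2 + FN/2) and SQ = mean IoU over matched pairs. It is not the evaluation code used in this study; the instance matching itself is assumed to have been done beforehand, and AJI and ensemble Dice, which aggregate over matched instances, are not reproduced here.

```python
import numpy as np

def dice(pred, gt):
    """Dice coefficient of two binary masks: 2|A and B| / (|A| + |B|)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    total = pred.sum() + gt.sum()
    return 2.0 * np.logical_and(pred, gt).sum() / total if total else 1.0

def jaccard(pred, gt):
    """Jaccard index (IoU) of two binary masks: |A and B| / |A or B|."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    return np.logical_and(pred, gt).sum() / union if union else 1.0

def panoptic_quality(matched_ious, n_false_pos, n_false_neg):
    """Return (DQ, SQ, PQ) from the IoUs of matched pairs and the unmatched counts."""
    tp = len(matched_ious)
    if tp == 0:
        return 0.0, 0.0, 0.0
    dq = tp / (tp + 0.5 * n_false_pos + 0.5 * n_false_neg)
    sq = sum(matched_ious) / tp
    return dq, sq, dq * sq

pred = np.array([[1, 1, 0], [0, 0, 0]])
gt = np.array([[1, 0, 0], [1, 0, 0]])
print(dice(pred, gt), jaccard(pred, gt))

# Two matched nuclei plus one spurious and one missed nucleus (made-up numbers).
dq, sq, pq = panoptic_quality([0.8, 0.6], n_false_pos=1, n_false_neg=1)
```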

3. Results

3.1. Performance of Proposed Framework and Other Existing Nucleus Segmentation Methods

To evaluate the prediction performance of our proposed method, we tested ASW-Net against CellProfiler (see Supplementary Note for parameter settings) [32], U-Net [23], and SW-Net (ASW-Net without attention gates) with ground truth as a baseline. Experimental results show that ASW-Net achieved satisfactory accuracy in classification even with insufficient labeled training samples (Figure S1). It can be seen in Table 1 that among all of the tested methods, our proposed framework has the highest score for all indicators except DICE2.

Table 1.

The performance comparison between ASW-Net and other existing methods on BBBC039 nuclei set.

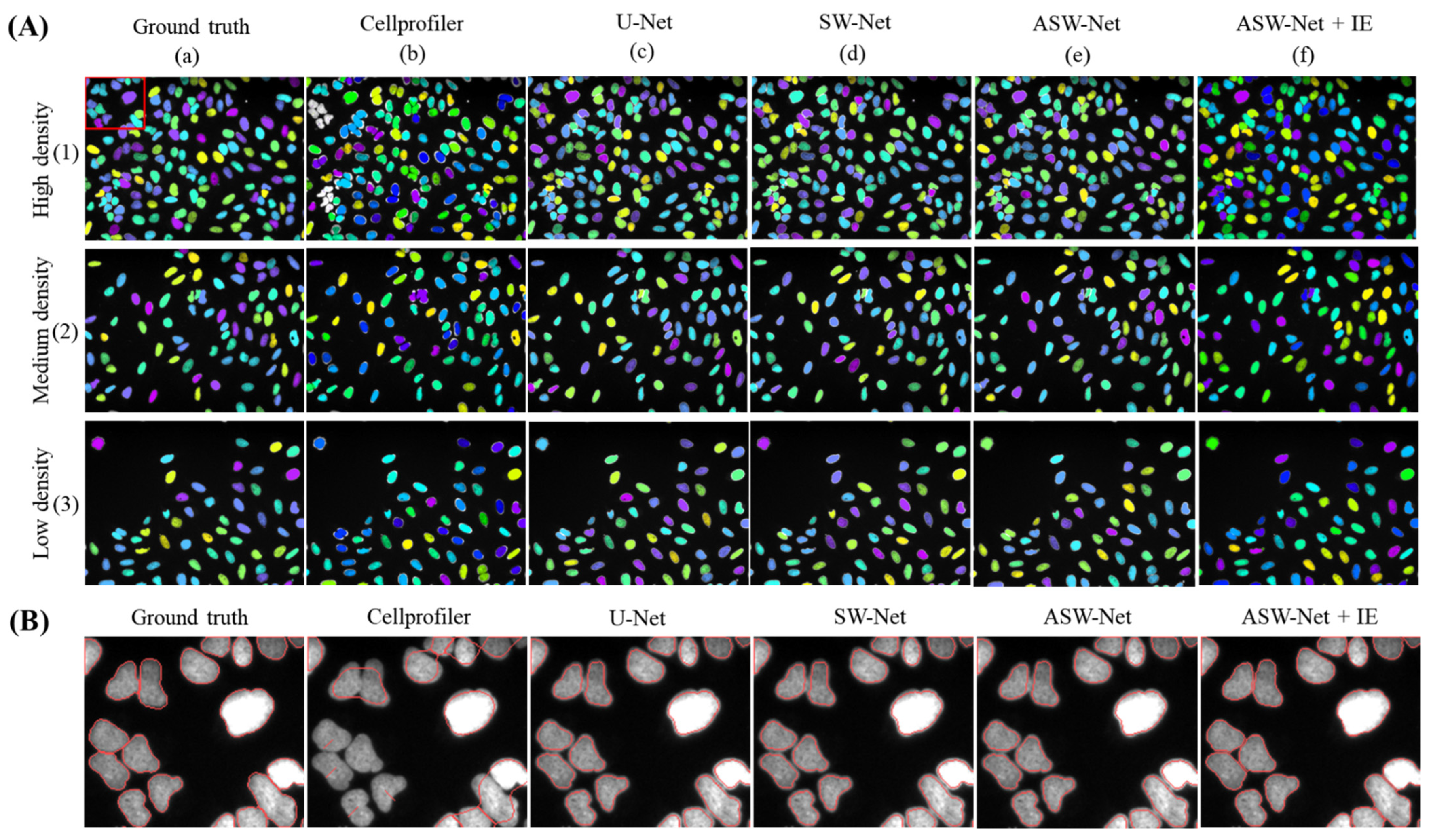

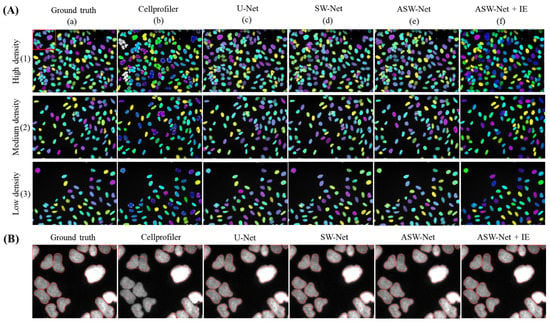

3.1.1. ASW-Net Performs Better in Different Nuclear Density

To assess the classification stability of ASW-Net, three images with different nuclear distribution densities were randomly selected for a case study. As shown in Figure 3A, CellProfiler performs poorly in the case of high nuclear density (see Figure 3A(b)(1)), while ASW-Net generates reliable masks at low, medium, and high nuclear density. Furthermore, ASW-Net (SW-Net with attention gates) performs better than SW-Net, the same network without the attention mechanism, which supports embedding the attention mechanism in our method.

Figure 3.

Visualized comparison between ASW-Net and other existing methods against the ground truth. (A) Three images with different nuclear distribution densities were randomly selected for this study. From top to bottom are nuclear images of high, medium, and low density, respectively. From left to right are the masks of the ground truth and the predictions of CellProfiler, U-Net, SW-Net, ASW-Net, and ASW-Net plus interior expansion, respectively. The nuclear masks are colored randomly. (B) Magnified segmentation results and the ground truth. From left to right are the mask outlines of the ground truth and the predictions of CellProfiler, U-Net, SW-Net, ASW-Net, and ASW-Net plus interior expansion, respectively.

3.1.2. ASW-Net Excels at Segmentation in Low SNR Dataset

We tested our proposed framework on the ganglioneuroblastoma dataset, which has a lower signal-to-noise ratio and different cell types from the BBBC039 image set. We evaluated the framework separately on the high SNR and low SNR subsets of the ganglioneuroblastoma dataset. As shown in Table 2 and Table 3, none of the five methods performed as well as on the previous dataset, which confirms the difficulty of accurate nuclei segmentation. However, our proposed framework still achieved satisfactory segmentation results and outperformed the other methods, especially on the low SNR ganglioneuroblastoma image set. It is worth mentioning that ASW-Net performs better than U-Net and SW-Net, the two deep learning networks without attention gates. This indicates that the attention mechanism used in ASW-Net probably helps the model transfer to this noisy dataset.

Table 2.

The performance comparison on high SNR ganglioneuroblastoma dataset.

Table 3.

The performance comparison on low SNR ganglioneuroblastoma dataset.

3.2. Ablation Study

To examine whether a particular component of ASW-Net was vital or necessary, we carried out an ablation study by removing some network elements. The experiments performed in this section shared the same features and hyper-parameters. As is shown in Table 4, the implementation of attention mechanisms, data augmentation (rotation and flip), and post-processing (watershed) all contribute to the proposed method. Specifically, the addition of image rotation and flip significantly improved the prediction performance (∆AJI = −1.451 for no rotation, ∆AJI = −1.214 for no flip), which may be due to the undiversified training dataset. Moreover, the data augmentation also helped improve the generalization capability of the model.

Table 4.

Ablation study of ASW-Net.

Attention gates were beneficial for achieving better prediction accuracy (∆AJI = −0.587 for no attention) at the same time. On the one hand, the introduction of attention gates made the number of parameters increase, thus enhancing the learning ability of the model. On the other hand, the attention gates attached in the up-sampling process were conducive to more efficient feature extraction.

We also tested whether two post-processing methods, the watershed and interior expansion algorithms, helped improve segmentation accuracy. Both methods have a positive effect on prediction performance. The introduction of the watershed improves the performance slightly (∆AJI = +0.005), possibly because the watershed algorithm helps separate connected cell nuclei [33]. However, we do not observe a significant improvement from the watershed algorithm because ASW-Net already predicts the boundary class effectively, so clumped nuclei are separated well after thresholding the probabilistic map of ASW-Net. The other post-processing method, interior expansion, was also shown to be effective by the ablation study. Compared with thresholding the probabilistic map, the interior expansion algorithm automatically expands each boundary to the most likely location and keeps the final boundary continuous and only one pixel wide. Although ASW-Net classifies pixels into the background, interior, and boundary classes with high accuracy, the performance can be further improved through post-processing.

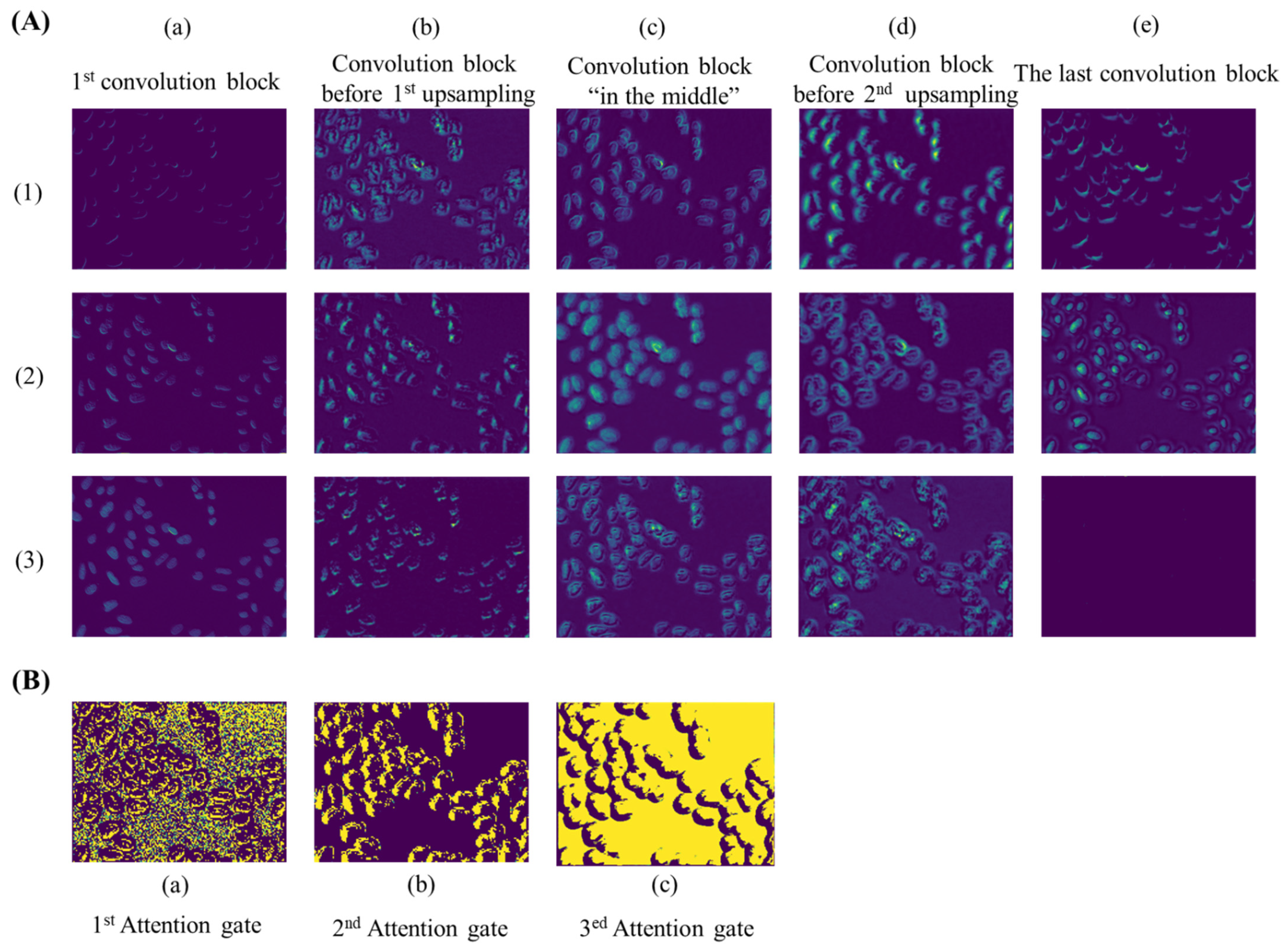

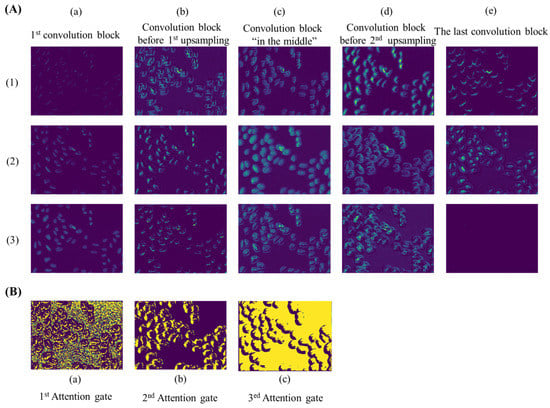

3.3. Visualization of Deep Features Extracted from Images

As an automatic feature extraction process, deep learning learns high-level abstract features from the original inputs [34]. Thus, to further explore the effectiveness of convolution blocks at different depths of ASW-Net, we visualized the feature map of each convolutional layer; the results are shown in Figure 4A. As described earlier, the convolution blocks before the first up-sampling (Figure 4A(b)) and before the second up-sampling (Figure 4A(d)) incorporate the deepest abstract features, which means these two layers were able to capture more effective information for prediction. Specifically, the convolutional kernels shown in Figure 4A(b)(1), Figure 4A(d)(2), and Figure 4A(d)(3) all captured the edge of the cell nucleus and its adjacent pixels, which made the nuclear edge appear blurred and bold in the corresponding feature maps. Figure 4A(b)(2), Figure 4A(b)(3), and Figure 4A(d)(1) show that these kernels played important roles in sharpening and positioning one side of the nucleus. In addition, Figure 4A(a) shows that the feature maps generated by the shallow convolution layer are much closer to the actual images: the weight distribution of all feature maps looks very similar to the input images. Moreover, both abstract features and raw information similar to the input can be seen in Figure 4A(c), since this layer combines the outputs of the layers shown in Figure 4A(a) and Figure 4A(b). Finally, comparing the visualization results of the first convolution block (Figure 4A(a)) with those of the last convolution block (Figure 4A(e)), we observed that the final convolutional layer reflects the most favorable information for segmentation. The center and the edge of the nucleus are separated at the last layer (see Figure 4A(e)(2)), which supports the effectiveness and interpretability of the deep neural network.

Figure 4.

Visualization of feature maps extracted by convolution layers at different depths of ASW-Net. (A): (a) to (e), from left to right, represent the features extracted by the first convolution block, the convolution block before the first up-sampling, the convolution block in the middle, the convolution block before the second up-sampling, and the last convolution block, respectively. (B): (a) to (c), from left to right, represent the features extracted by the first, the second, and the third attention gate.

As for the attention gates (see Figure 4B), the distribution of weights gradually shifts from diffuse to focused on edge information as the network deepens. This indicates that the attention gates learned which parts were worthy of attention, which helps improve the prediction ability of the model.

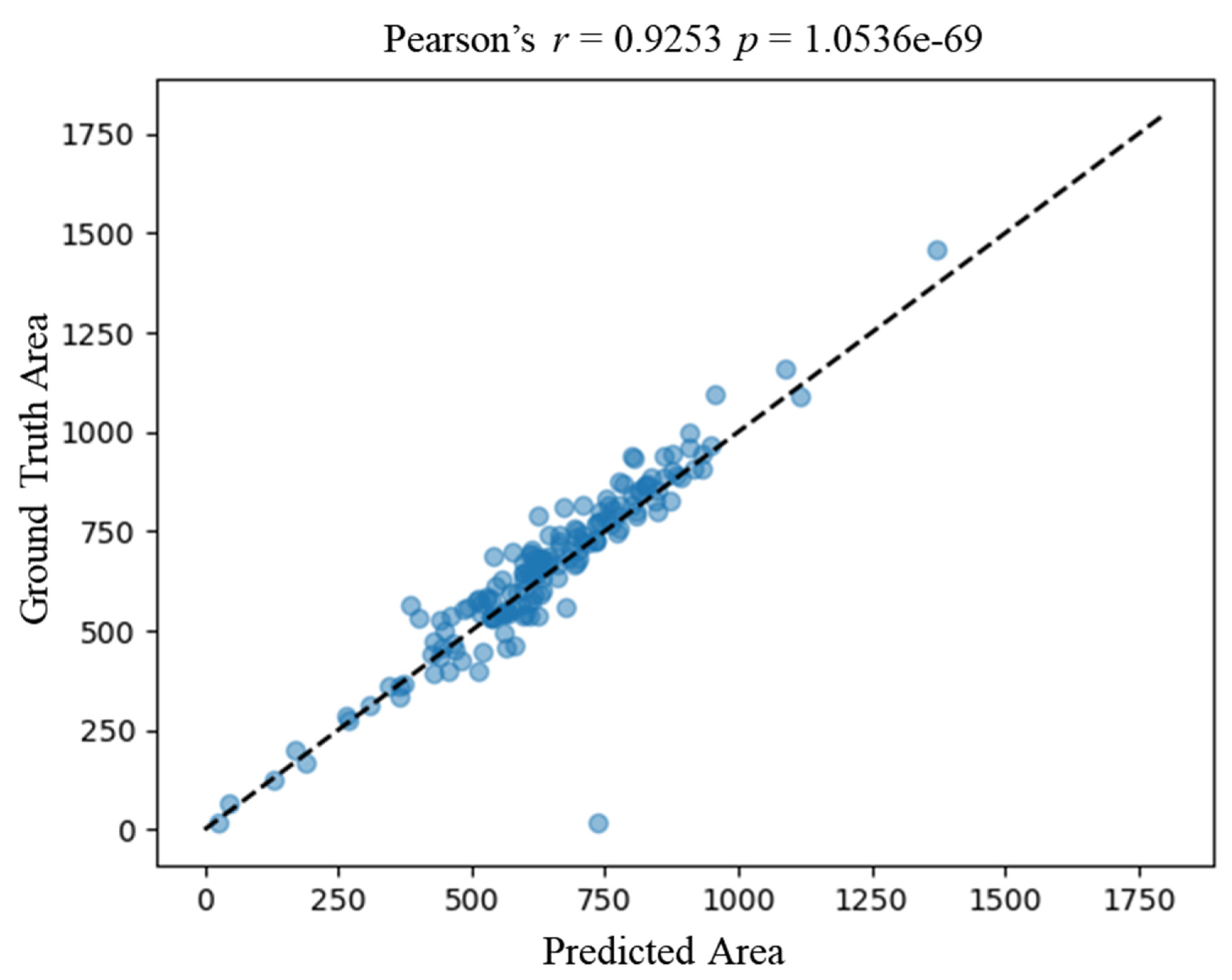

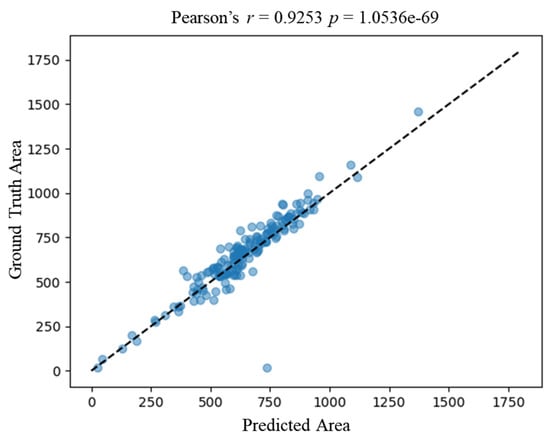

3.4. Strong Correlation of Downstream Metric Derived from Experts and Proposed Framework

We verified the correlation of downstream metrics derived from the ground truth annotation by experts and our proposed framework. We then calculated the area of each nucleus derived from expert annotation and automatic segmentation by the proposed framework. Figure 5 shows a strong correlation of the nucleus area between the ground truth and the prediction, with a correlation coefficient of 0.9253 (Pearson p-value < 0.05). The correlation result verifies the accuracy of automatic segmentation at the cellular level.
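The comparison above amounts to measuring the area of each nucleus in both label images and computing the Pearson correlation between the two sets of areas. The NumPy sketch below illustrates this under the assumption that matched nuclei share the same integer id in the ground-truth and predicted label images; the function names and the toy areas are hypothetical.

```python
import numpy as np

def nucleus_areas(label_image, n_nuclei):
    """Pixel count of each nucleus with id 1..n_nuclei (id 0 is background)."""
    counts = np.bincount(label_image.ravel(), minlength=n_nuclei + 1)
    return counts[1:n_nuclei + 1]

def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    return float(np.corrcoef(x, y)[0, 1])

# Toy label images: three nuclei whose predicted areas differ slightly from the truth.
gt_labels = np.repeat([1, 2, 3], [4, 9, 16])
pred_labels = np.repeat([1, 2, 3], [5, 8, 17])

gt_area = nucleus_areas(gt_labels, 3)      # areas 4, 9, 16
pred_area = nucleus_areas(pred_labels, 3)  # areas 5, 8, 17
r = pearson_r(pred_area, gt_area)
print(f"Pearson r = {r:.4f}")
```

A coefficient close to 1 means the predicted areas track the expert-derived areas closely, although it does not by itself rule out a constant offset or scale between them.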

Figure 5.

Correlation between the area predicted by the proposed framework and the ground truth area. The x-axis represents the predicted area, and the y-axis represents the ground truth area. Each blue dot represents a nucleus. The dashed line represents the ideal correlation, Area_ground_truth = Area_predicted.

4. Discussion

Nucleus segmentation is a prerequisite for automated downstream analysis, including characterization of nucleus morphology, identification and detection of cells, and quantitative measurement of protein expression. However, manually drawing the nuclear contours is time-consuming and laborious. Automatic and accurate nucleus segmentation would alleviate this difficulty and enable biologists to yield insights into intrinsic features of nucleus morphology.

In this study, we proposed a deep learning-based framework built on ASW-Net to automatically segment nuclei in fluorescence microscopy images by integrating the advantages of the attention mechanism and W-Net and by adopting a cascade-like structure with between-net connections. We have shown that our lightweight model outperforms state-of-the-art methods in segmentation performance. We also showed that the attention mechanism provides useful information for nucleus segmentation, leading to improved performance. The attention mechanism endows our proposed tool with better learning ability and interpretability, making the tool likely to translate well to practical usage. The automatic segmentation results of our proposed framework are consistent with the ground truth, as verified by downstream metrics such as the area of each nucleus. We believe that the automatic segmentation framework can mitigate the difficulty of drawing nucleus contours manually and provide more accurate segmentation than other tools.

While our proposed tool achieves promising performance in nucleus segmentation of fluorescence microscopy images, there are a couple of technical limitations from the perspective of deep learning techniques, such as the lack of a theoretical explanation of the effectiveness of our architecture and the need to tune hyper-parameters for an optimal model. We also compared our proposed framework with the Cellpose architecture [35]. Our proposed framework outperformed the Cellpose architecture on the BBBC039 dataset and performed about 3% worse than the Cellpose architecture on the ganglioneuroblastoma dataset, which is expected since Cellpose has far more trainable parameters than ASW-Net. At the same time, our lightweight ASW-Net produces results faster. In summary, the attention mechanism and the resolution fusion module introduced in ASW-Net can also be applied to other neural network backbones to help improve performance.

5. Conclusions

We implemented ASW-Net as a publicly available segmentation tool for the research community. The model achieves remarkable performance in nucleus segmentation. In the future, our model can be retrained on other types of nucleus images, such as highly multiplexed imaging data.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/genes13030431/s1, Figure S1: Receiver operating characteristic of pixel classification of ASW-Net. Blue, orange, green, and red curves are the average, background, interior, and boundary ROC curves, respectively. Supplementary Note: CellProfiler pipeline parameter settings.

Author Contributions

W.P., Z.L. and G.N.L. conceived the idea of this research. Z.L. designed and implemented the model structure of ASW-Net. W.P. collected and processed the dataset, trained, evaluated, and visualized the model. W.P. and X.Z. implemented the post-processing algorithm. K.Y. and W.S. tested the model. W.P. and Z.L. wrote the manuscript. G.N.L. and F.X. supervised the manuscript. All authors contributed to the article and approved the submitted version. All authors have read and agreed to the published version of the manuscript.

Funding

The study was supported by the National Natural Science Foundation of China (No: 81971292, 82150610506, 12104088); Natural Science Foundation of Shanghai (No: 21ZR1428600); Shanghai Sailing Program (No: 19YF1400200); Shanghai Key Laboratory of Psychotic Disorders Open Grant (No: 13dz2260500).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data analyzed in this study are curated from public domain.

Acknowledgments

The support of the Center of High-Performance Computing (HPC) of Shanghai Jiao Tong University for this work is gratefully acknowledged.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Caicedo, J.C.; Roth, J.; Goodman, A.; Becker, T.; Karhohs, K.W.; Broisin, M.; Molnar, C.; McQuin, C.; Singh, S.; Theis, F.J.; et al. Evaluation of deep learning strategies for nucleus segmentation in fluorescence images. Cytom. Part A 2019, 95, 952–965.

- Gu, Y. Automated scanning electron microscope based mineral liberation analysis. An introduction to JKMRC/FEI mineral liberation analyser. J. Miner. Mater. Charact. Eng. 2003, 2, 33–41.

- Gerdes, M.J.; Sevinsky, C.J.; Sood, A.; Adak, S.; Bello, M.O.; Bordwell, A.; Can, A.; Corwin, A.; Dinn, S.; Filkins, R.J.; et al. Highly multiplexed single-cell analysis of formalin-fixed, paraffin-embedded cancer tissue. Proc. Natl. Acad. Sci. USA 2013, 110, 11982–11987.

- Giesen, C.; Wang, H.A.O.; Schapiro, D.; Zivanovic, N.; Jacobs, A.; Hattendorf, B.; Schüffler, P.J.; Grolimund, D.; Buhmann, J.M.; Brandt, S.; et al. Highly multiplexed imaging of tumor tissues with subcellular resolution by mass cytometry. Nat. Methods 2014, 11, 417–422.

- Ljosa, V.; Sokolnicki, K.L.; Carpenter, A.E. Annotated high-throughput microscopy image sets for validation. Nat. Methods 2012, 9, 637.

- Boutros, M.; Heigwer, F.; Laufer, C. Microscopy-based high-content screening. Cell 2015, 163, 1314–1325.

- Hollandi, R.; Szkalisity, A.; Toth, T.; Tasnadi, E.; Molnar, C.; Mathe, B.; Grexa, I.; Molnar, J.; Balind, A.; Gorbe, M.; et al. nucleAIzer: A parameter-free deep learning framework for nucleus segmentation using image style transfer. Cell Syst. 2020, 10, 453–458.e6.

- Kumar, N.; Verma, R.; Sharma, S.; Bhargava, S.; Vahadane, A.; Sethi, A. A dataset and a technique for generalized nuclear segmentation for computational pathology. IEEE Trans. Med. Imaging 2017, 36, 1550–1560.

- Meijering, E. Cell segmentation: 50 years down the road [life sciences]. IEEE Signal Process. Mag. 2012, 29, 140–145.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Malpica, N.; de Solórzano, C.O.; Vaquero, J.J.; Santos, A.; Vallcorba, I.; García-Sagredo, J.M.; del Pozo, F. Applying watershed algorithms to the segmentation of clustered nuclei. Cytometry 1997, 28, 289–297.

- Xie, L.; Qi, J.; Pan, L.; Wali, S. Integrating deep convolutional neural networks with marker-controlled watershed for overlapping nuclei segmentation in histopathology images. Neurocomputing 2020, 376, 166–179.

- Li, G.; Liu, T.; Tarokh, A.; Nie, J.; Guo, L.; Mara, A.; Holley, S.; Wong, S.T. 3D cell nuclei segmentation based on gradient flow tracking. BMC Cell Biol. 2007, 8, 40.

- Xu, C.; Prince, J.L. Snakes, shapes, and gradient vector flow. IEEE Trans. Image Process. 1998, 7, 359–369.

- Caicedo, J.C.; Goodman, A.; Karhohs, K.W.; Cimini, B.A.; Ackerman, J.; Haghighi, M.; Heng, C.; Becker, T.; Doan, M.; McQuin, C.; et al. Nucleus segmentation across imaging experiments: The 2018 Data Science Bowl. Nat. Methods 2019, 16, 1247–1253.

- Minaee, S.; Boykov, Y.Y.; Porikli, F.; Plaza, A.J.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021.

- Gibson, E.; Giganti, F.; Hu, Y.; Bonmati, E.; Bandula, S.; Gurusamy, K.; Davidson, B.R.; Pereira, S.P.; Clarkson, M.J.; Barratt, D.C. Automatic multi-organ segmentation on abdominal CT with dense V-networks. IEEE Trans. Med. Imaging 2018, 37, 1822–1834.

- Yang, X.; Lei, Y.; Liu, Y.; Tian, S.; Higgins, K.; Beitler, J.J.; Yu, D.S.; Jiang, X.; Liu, T.; Curran, W.J.; et al. Automatic multi-organ segmentation in thorax CT images using U-Net-GAN. In Medical Imaging 2019: Computer-Aided Diagnosis; SPIE: Washington, DC, USA, 2019; p. 35.

- Vu, Q.D.; Graham, S.; Kurc, T.; To, M.N.N.; Shaban, M.; Qaiser, T.; Koohbanani, N.A.; Khurram, S.A.; Kalpathy-Cramer, J.; Zhao, T.; et al. Methods for segmentation and classification of digital microscopy tissue images. Front. Bioeng. Biotechnol. 2019, 7, 53.

- Huang, X.; He, H.; Wei, P.; Zhang, C.; Zhang, J.; Chen, J. Tumor tissue segmentation for histopathological images. In Proceedings of the 1st ACM International Conference on Multimedia in Asia, MMAsia, Beijing, China, 16–18 December 2019.

- Graham, S.; Vu, Q.D.; Raza, S.E.A.; Azam, A.; Tsang, Y.W.; Kwak, J.T.; Rajpoot, N. Hover-Net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images. Med. Image Anal. 2019, 58, 101563.

- Zeng, Z.; Xie, W.; Zhang, Y.; Lu, Y. RIC-Unet: An improved neural network based on Unet for nuclei segmentation in histology images. IEEE Access 2019, 7, 21420–21428.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9351.

- Chen, W.; Zhang, Y.; He, J.; Qiao, Y.; Chen, Y.; Shi, H.; Tang, X. W-Net: Bridged U-Net for 2D Medical Image Segmentation. arXiv 2018, arXiv:1807.04459, 1–13.

- Kromp, F.; Bozsaky, E.; Rifatbegovic, F.; Fischer, L.; Ambros, M.; Berneder, M.; Weiss, T.; Lazic, D.; Dörr, W.; Hanbury, A.; et al. An annotated fluorescence image dataset for training nuclear segmentation methods. Sci. Data 2020, 7, 262.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In 32nd International Conference on Machine Learning, ICML 2015; Springer: Cham, Switzerland, 2015; Volume 1, pp. 448–456.

- Ketkar, N. Introduction to Keras. In Deep Learning with Python; Apress: New York, NY, USA, 2017; pp. 97–111.

- Abadi, M. TensorFlow: Learning functions at scale. ACM SIGPLAN Not. 2016, 51, 1.

- Wichrowska, O.; Maheswaranathan, N.; Hoffman, M.W.; Colmenarejo, S.G.; Denii, M.; De Freitas, N.; Sohl-Dickstein, J. Learned optimizers that scale and generalize. In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, Australia, 6–11 August 2017; Volume 8, pp. 5744–5753.

- Ben Naceur, M.; Akil, M.; Saouli, R.; Kachouri, R. Fully automatic brain tumor segmentation with deep learning-based selective attention using overlapping patches and multi-class weighted cross-entropy. Med. Image Anal. 2020, 63, 101692.

- Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

- McQuin, C.; Goodman, A.; Chernyshev, V.; Kamentsky, L.; Cimini, B.A.; Karhohs, K.W.; Doan, M.; Ding, L.; Rafelski, S.M.; Thirstrup, D.; et al. CellProfiler 3.0: Next-generation image processing for biology. PLoS Biol. 2018, 16, e2005970.

- Sharif, J.M.; Miswan, M.F.; Ngadi, M.A.; Salam, M.S.H.; bin Abdul Jamil, M.M. Red blood cell segmentation using masking and watershed algorithm: A preliminary study. In Proceedings of the 2012 International Conference on Biomedical Engineering, Macau, China, 28–30 May 2012; pp. 258–262.

- Farias, G.; Dormido-Canto, S.; Vega, J.; Rattá, G.A.; Vargas, H.; Hermosilla, G.; Alfaro, L.; Valencia, A. Automatic feature extraction in large fusion databases by using deep learning approach. Fusion Eng. Des. 2016, 112, 979–983.

- Stringer, C.; Wang, T.; Michaelos, M.; Pachitariu, M. Cellpose: A generalist algorithm for cellular segmentation. Nat. Methods 2020, 18, 100–106.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).