Neural-Network-Based Approaches for Optimization of Machining Parameters Using Small Dataset

Abstract

1. Introduction

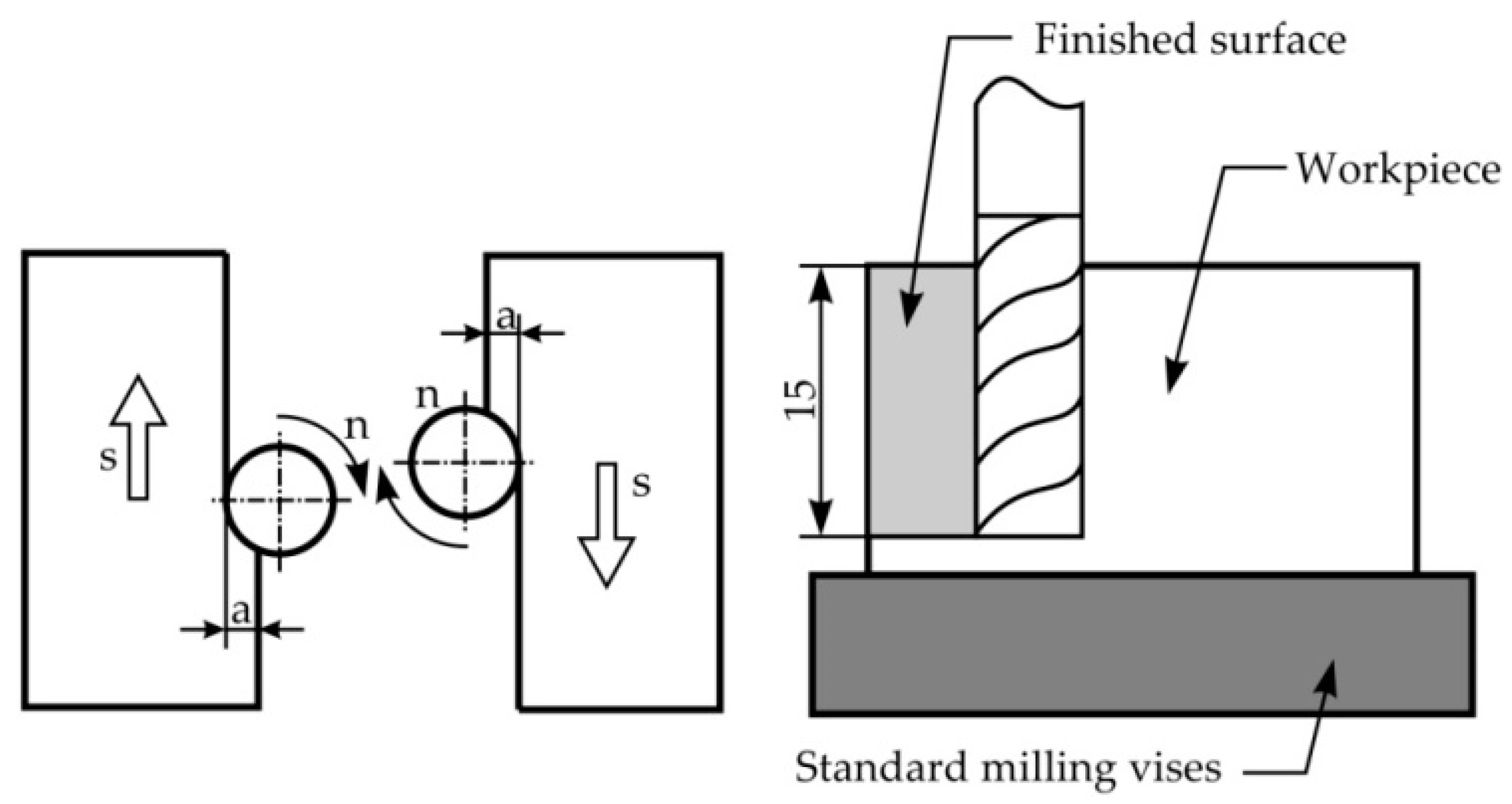

2. Experimental Procedure

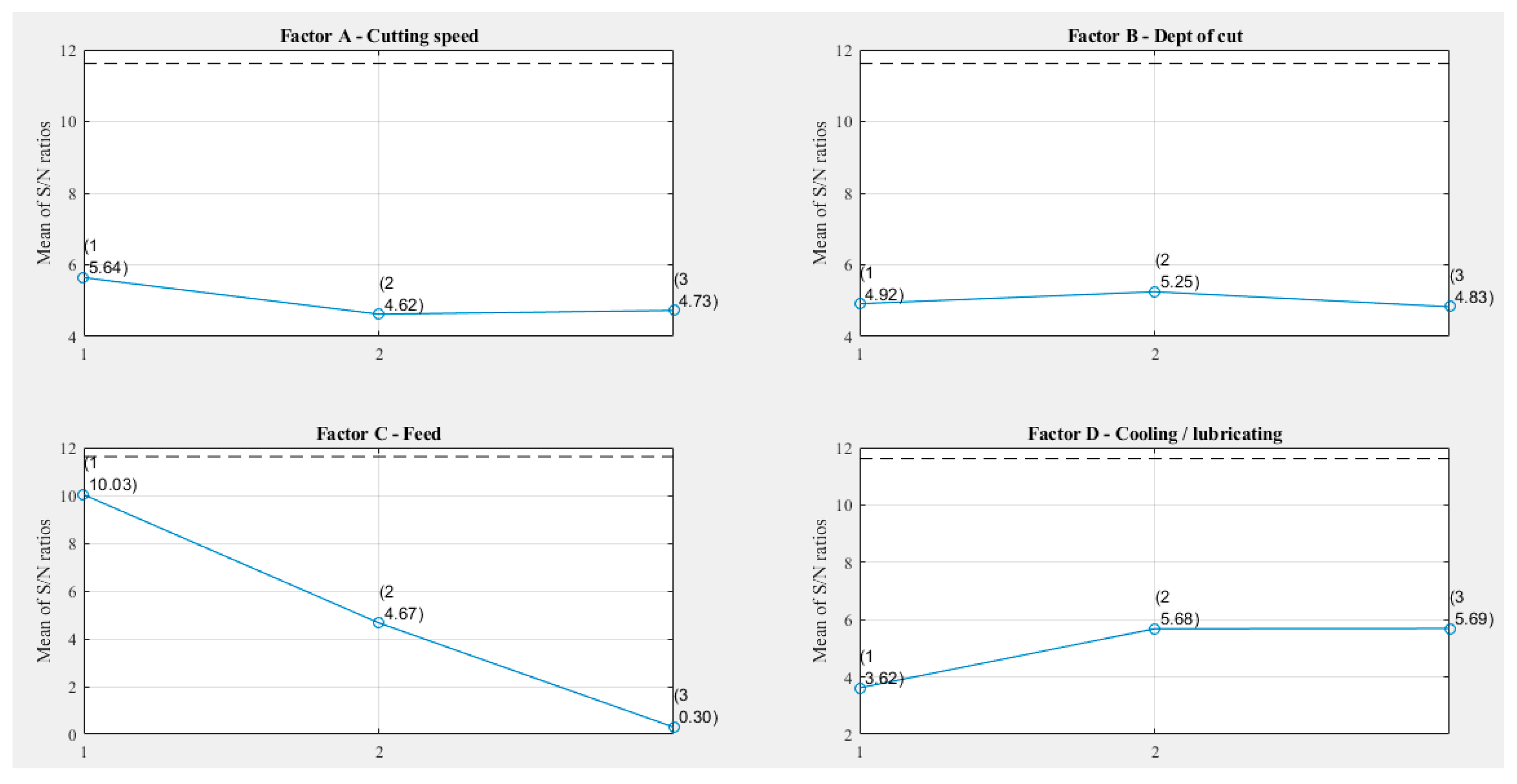

3. Taguchi Method for Optimization of Cutting Parameters

- Smaller is better:

- Bigger is better:

- Nominal is the best:
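The three quality characteristics above correspond to the standard Taguchi signal-to-noise (S/N) ratios. The formulas are not reproduced in this text, so the common textbook forms are assumed here: smaller is better, S/N = -10·log10((1/n)·Σ yᵢ²); bigger is better, S/N = -10·log10((1/n)·Σ 1/yᵢ²); nominal is the best, S/N = 10·log10(ȳ²/s²), where yᵢ are the n replicate responses, ȳ their mean and s their sample standard deviation. The Python sketch below implements these forms (the function names are illustrative). Because Ra is a smaller-is-better response, the first form is the relevant one, and it reproduces the tabulated S/N of trial 2 from that trial's three replicates.

```python
import math
from statistics import mean, stdev


def sn_smaller_is_better(values):
    """S/N = -10 * log10(mean of y_i^2); suitable for responses such as Ra."""
    return -10.0 * math.log10(mean(y * y for y in values))


def sn_bigger_is_better(values):
    """S/N = -10 * log10(mean of 1 / y_i^2); all responses must be non-zero."""
    return -10.0 * math.log10(mean(1.0 / (y * y) for y in values))


def sn_nominal_is_best(values):
    """S/N = 10 * log10(ybar^2 / s^2), with s the sample standard deviation."""
    ybar = mean(values)
    s = stdev(values)  # needs at least two replicates that are not all equal
    return 10.0 * math.log10(ybar * ybar / (s * s))


if __name__ == "__main__":
    # Replicate Ra values (µm) of trial 2 from the experimental table
    ra_trial_2 = [0.477, 0.476, 0.476]
    print(round(sn_smaller_is_better(ra_trial_2), 4))  # prints 6.4418 (table: 6.441781)
```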

4. ANOVA Technique

- The influence on the arithmetic mean roughness (Ra) of parameters not considered in the experiment was negligible;

- The small value of the error term indicates that the experiment can be considered successful.

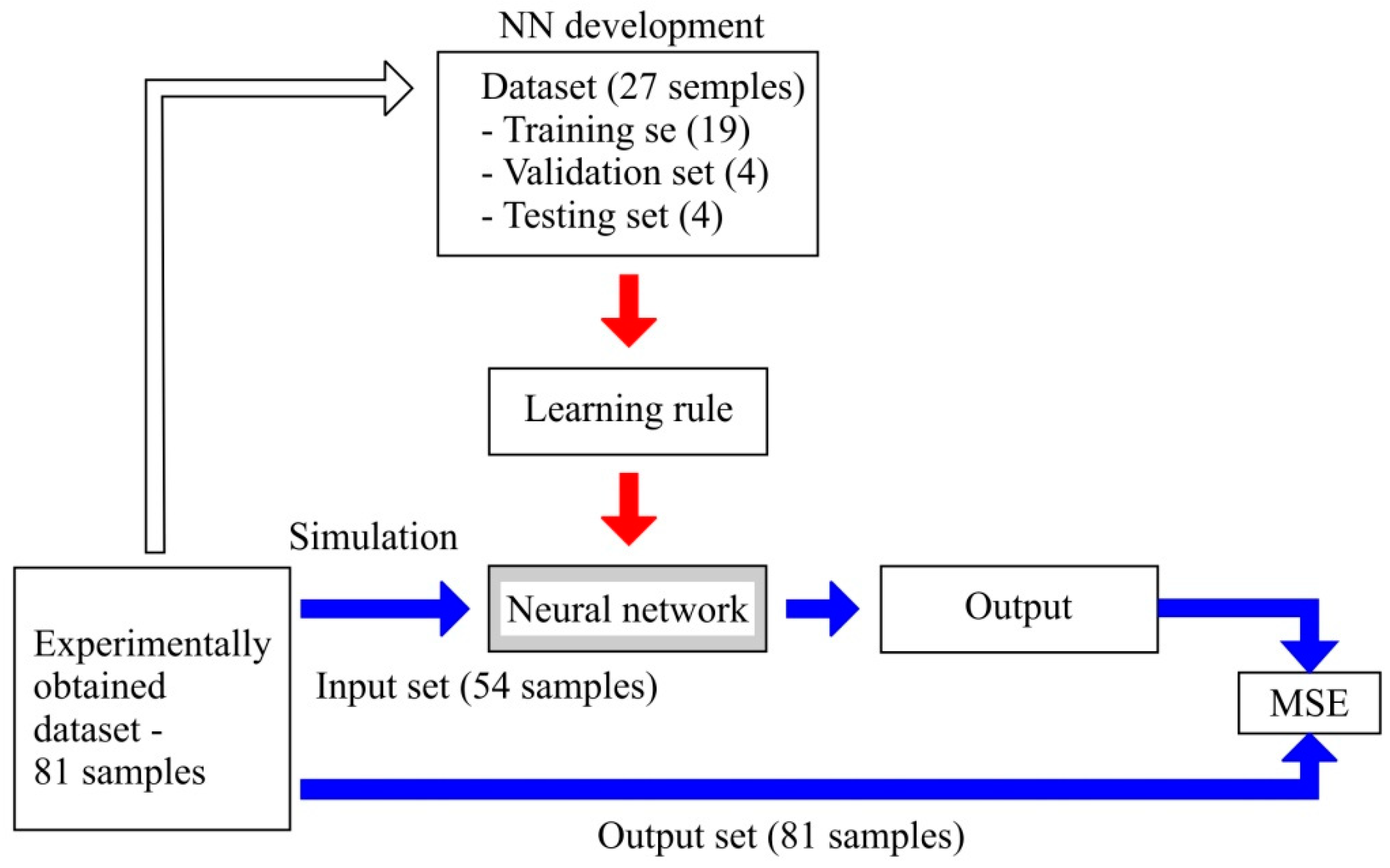

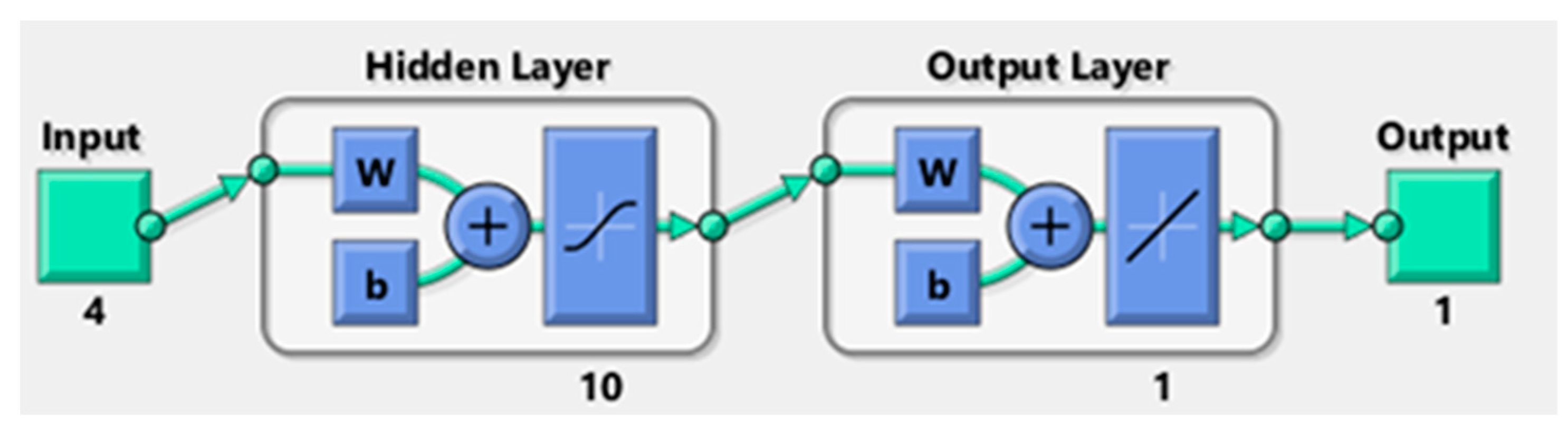

5. Artificial Neural Networks

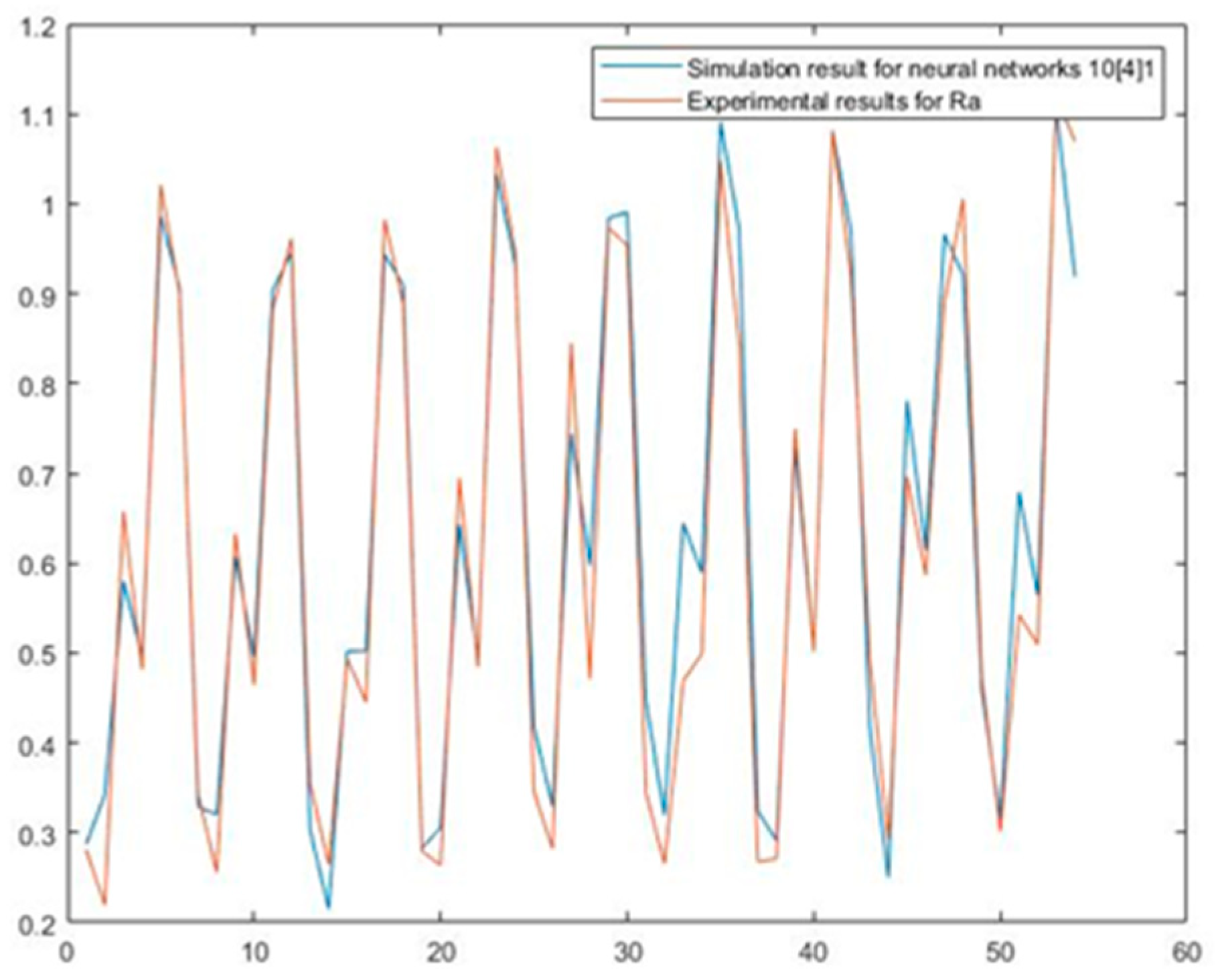

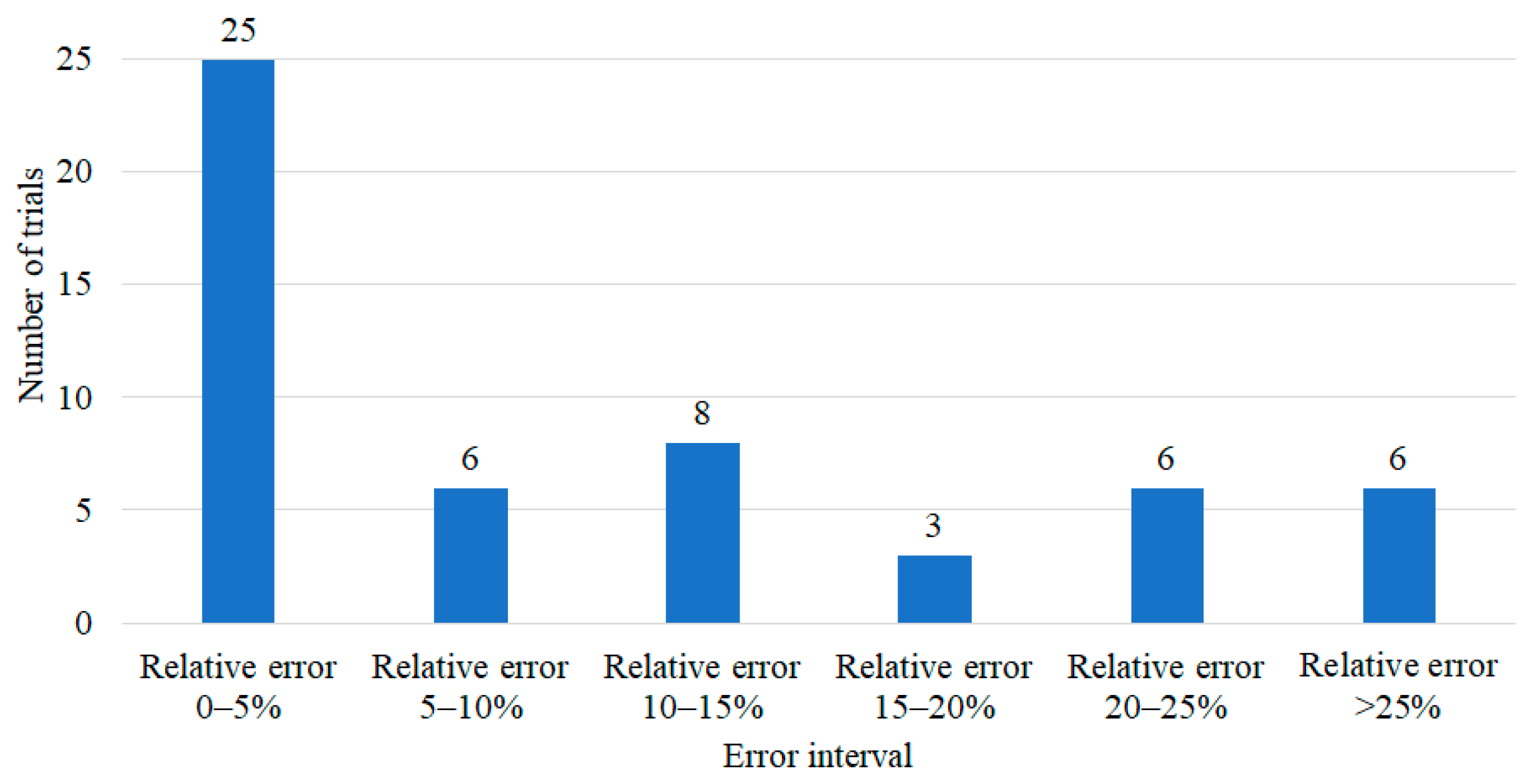

6. Results and Discussions

7. Conclusions

- When an experiment is designed and conducted using the Taguchi technique, the number of samples is smaller than in a full factorial plan. In such a case, the neural network has to be developed from a small experimental dataset. Because the available data are limited, the network topology and the training algorithm must be planned carefully.

- In this study, the dataset used for ANN development contained 27 samples. The full factorial plan was used to simulate and evaluate the neural network.

- Neural network performance can be improved by trial and error, that is, by varying the training algorithm, the number of hidden layers and neurons, the learning parameters, the transfer function, etc. Of the 108 neural network models developed, the topology with four neurons in the input layer, one hidden layer with ten neurons and one neuron in the output layer (4-10-1) had the lowest mean squared error (MSE), equal to 0.00444271. The 4-10-1 network was trained using the Bayesian regularization (BR) algorithm. Its results and MSE levels were acceptable for a model predicting the arithmetic mean roughness (Ra).

- Twenty-seven input-output pairs are considered a small dataset in the context of neural network modeling. This study shows that good predictions can nevertheless be obtained and that a small dataset is not an obstacle.

- Compared to conventional methods, the advantages of ANNs are their higher speed, their simplicity and their ability to learn from examples.

- A disadvantage is that the application of this method requires an experimentally determined dataset, which can be expensive and time-consuming in some cases.
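The 4-10-1 topology discussed in these conclusions can be illustrated with a minimal sketch: four inputs, one hidden layer of ten tanh neurons and one linear output neuron, fitted to the 27 average Ra values. Several choices here are assumptions made only for illustration: the -1/0/+1 coding of each factor level (including the ordering emulsion, dry, air for the cooling technique), the tanh/linear transfer functions, and the training rule. The network is trained with plain full-batch gradient descent plus L2 weight decay, which is a simpler stand-in for, not an implementation of, the Bayesian regularization (BR) algorithm used in the study. No data are held out, so the printed MSE is a training error only.

```python
import math
import random

# Average Ra (µm) of the 27 trials, in trial order, from the experimental table
RA = [
    0.3513, 0.476, 0.929, 0.261, 0.515, 0.972, 0.260, 0.632, 0.868,
    0.369, 0.510, 0.934, 0.318, 0.566, 1.084, 0.305, 0.814, 0.975,
    0.422, 0.577, 0.924, 0.300, 0.521, 1.090, 0.284, 0.727, 0.940,
]
COOLING = [0, 1, 2, 1, 2, 0, 2, 0, 1] * 3  # 0 = emulsion, 1 = dry, 2 = air

# Hypothetical input coding: speed, depth, feed and cooling levels mapped to -1, 0, +1
X = [[i // 9 - 1, (i // 3) % 3 - 1, i % 3 - 1, COOLING[i] - 1] for i in range(27)]


def init_params(n_in=4, n_hidden=10, seed=0):
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    return w1, [0.0] * n_hidden, w2, 0.0


def forward(params, x):
    """tanh hidden layer followed by a single linear output neuron."""
    w1, b1, w2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    return h, sum(w * hj for w, hj in zip(w2, h)) + b2


def mse(params, X, Y):
    return sum((forward(params, x)[1] - y) ** 2 for x, y in zip(X, Y)) / len(X)


def train(X, Y, epochs=500, lr=0.05, decay=1e-4, seed=0):
    """Full-batch gradient descent on MSE + (decay / 2) * sum of squared weights."""
    w1, b1, w2, b2 = init_params(seed=seed)
    n = len(X)
    for _ in range(epochs):
        gw1 = [[0.0] * len(X[0]) for _ in w1]
        gb1 = [0.0] * len(b1)
        gw2 = [0.0] * len(w2)
        gb2 = 0.0
        for x, y in zip(X, Y):
            h, out = forward((w1, b1, w2, b2), x)
            err = 2.0 * (out - y) / n  # d(MSE)/d(output) for this sample
            gb2 += err
            for j, hj in enumerate(h):
                gw2[j] += err * hj
                dh = err * w2[j] * (1.0 - hj * hj)  # back-propagate through tanh
                gb1[j] += dh
                for k, xk in enumerate(x):
                    gw1[j][k] += dh * xk
        # Gradient step; weight decay is applied to the weights, not the biases
        for j in range(len(w1)):
            w2[j] -= lr * (gw2[j] + decay * w2[j])
            b1[j] -= lr * gb1[j]
            for k in range(len(w1[j])):
                w1[j][k] -= lr * (gw1[j][k] + decay * w1[j][k])
        b2 -= lr * gb2
    return w1, b1, w2, b2


if __name__ == "__main__":
    start = init_params()
    params = train(X, RA)
    print(f"training MSE before: {mse(start, X, RA):.4f}, after: {mse(params, X, RA):.5f}")
```

In a real study, the trial-and-error search described above would wrap a function like `train` in a loop over topologies and training algorithms, and would judge each candidate on held-out data rather than on the training error alone.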

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| No. | NN Architecture | R | Number of Epochs | MSE | No. | NN Architecture | R | Number of Epochs | MSE |

|---|---|---|---|---|---|---|---|---|---|

| Levenberg–Marquardt (LM) algorithm | | | | | Bayesian regularization (BR) algorithm | | | | |

| 1 | 4 × 1 × 1 | 0.91322 | 6 | 0.0020 | 1 | 4 × 1 × 1 | 0.98901 | 41 | 0.00189 |

| 2 | 4 × 2 × 1 | 0.9556 | 11 | 0.0019 | 2 | 4 × 2 × 1 | 0.99467 | 44 | 0.00096 |

| 3 | 4 × 3 × 1 | 0.97698 | 6 | 0.0027 | 3 | 4 × 3 × 1 | 0.99430 | 97 | 0.00027 |

| 4 | 4 × 4 × 1 | 0.983 | 9 | 0.0047 | 4 | 4 × 4 × 1 | −0.9837 | 58 | 0.056499 |

| 5 | 4 × 8 × 1 | 0.979 | 7 | 0.006 | 5 | 4 × 8 × 1 | 0.98222 | 125 | 2.14 × 10⁻¹⁴ |

| 6 | 4 × 10 × 1 | 0.799 | 6 | 0.014 | 6 | 4 × 10 × 1 | 0.98051 | 173 | 1.75 × 10⁻¹⁴ |

| 7 | 4 × 1 × 1 × 1 | 0.976 | 7 | 0.0012 | 7 | 4 × 1 × 1 × 1 | 0.98909 | 1000 | 0.00189 |

| 8 | 4 × 2 × 2 × 1 | 0.975 | 8 | 0.0057 | 8 | 4 × 2 × 2 × 1 | 0.98356 | 37 | 0.0577 |

| 9 | 4 × 3 × 2 × 1 | 0.943 | 6 | 0.0012 | 9 | 4 × 3 × 2 × 1 | 0.9838 | 37 | 0.0577 |

| 10 | 4 × 5 × 2 × 1 | 0.9964 | 9 | 0.00074 | 10 | 4 × 5 × 2 × 1 | 0.9838 | 38 | 0.0577 |

| 11 | 4 × 8 × 4 × 1 | 0.846 | 8 | 0.027 | 11 | 4 × 8 × 4 × 1 | 0.98402 | 38 | 0.0577 |

| 12 | 4 × 10 × 4 × 1 | 0.890 | 8 | 0.019 | 12 | 4 × 10 × 4 × 1 | 0.9838 | 38 | 0.0577 |

| 13 | 4 × 2 × 2 × 2 × 1 | 0.960 | 19 | 0.032 | 13 | 4 × 2 × 2 × 2 × 1 | −0.78219 | 86 | 0.0577 |

| 14 | 4 × 3 × 2 × 2 × 1 | 0.965 | 17 | 0.0018 | 14 | 4 × 3 × 2 × 2 × 1 | 0 | 21 | 0.0667 |

| 15 | 4 × 4 × 3 × 2 × 1 | 0.9837 | 15 | 0.0013 | 15 | 4 × 4 × 3 × 2 × 1 | 0 | 35 | 0.0643 |

| 16 | 4 × 5 × 3 × 2 × 1 | 0.960 | 8 | 0.013 | 16 | 4 × 5 × 3 × 2 × 1 | 0.91736 | 32 | 0.0662 |

| 17 | 4 × 7 × 4 × 2 × 1 | 0.950 | 12 | 0.006 | 17 | 4 × 7 × 4 × 2 × 1 | 0 | 31 | 0.0664 |

| 18 | 4 × 10 × 4 × 2 × 1 | 0.99713 | 4 | 0.00020 | 18 | 4 × 10 × 4 × 2 × 1 | 0.90476 | 41 | 0.0658 |

| Resilient backpropagation (RP) algorithm | | | | | Gradient descent (GDX) algorithm | | | | |

| 1 | 4 × 1 × 1 | 0.97513 | 6 | 3.16 × 10⁻⁵ | 1 | 4 × 1 × 1 | 0.97565 | 141 | 0.00058136 |

| 2 | 4 × 2 × 1 | 0.9436 | 6 | 0.010833 | 2 | 4 × 2 × 1 | −0.9199 | 56 | 0.006586 |

| 3 | 4 × 3 × 1 | 0.9633 | 6 | 0.0024626 | 3 | 4 × 3 × 1 | 0.94019 | 140 | 0.0042864 |

| 4 | 4 × 4 × 1 | 0.82739 | 6 | 0.0044551 | 4 | 4 × 4 × 1 | 0.5879 | 88 | 0.0028835 |

| 5 | 4 × 8 × 1 | 0.97874 | 6 | 0.012403 | 5 | 4 × 8 × 1 | 0.7549 | 186 | 0.0060558 |

| 6 | 4 × 10 × 1 | 0.99259 | 11 | 0.021152 | 6 | 4 × 10 × 1 | −0.91372 | 6 | 0.031521 |

| 7 | 4 × 1 × 1 × 1 | 0.9148907 | 16 | 0.0011574 | 7 | 4 × 1 × 1 × 1 | 0.82356 | 74 | 0.0037192 |

| 8 | 4 × 2 × 2 × 1 | 0.29668 | 7 | 0.019677 | 8 | 4 × 2 × 2 × 1 | 0.27105 | 81 | 0.033783 |

| 9 | 4 × 3 × 2 × 1 | 0.053017 | 8 | 0.039857 | 9 | 4 × 3 × 2 × 1 | 0.89452 | 77 | 0.042865 |

| 10 | 4 × 5 × 2 × 1 | 0.95375 | 17 | 0.010477 | 10 | 4 × 5 × 2 × 1 | 0.91328 | 117 | 0.012526 |

| 11 | 4 × 8 × 4 × 1 | 0.99162 | 19 | 0.012794 | 11 | 4 × 8 × 4 × 1 | 0.76334 | 74 | 0.0075099 |

| 12 | 4 × 10 × 4 × 1 | 0.96849 | 6 | 0.0019671 | 12 | 4 × 10 × 4 × 1 | 0.30189 | 54 | 0.020986 |

| 13 | 4 × 2 × 2 × 2 × 1 | 0.96881 | 30 | 0.0028199 | 13 | 4 × 2 × 2 × 2 × 1 | −0.903229 | 77 | 0.03963 |

| 14 | 4 × 3 × 2 × 2 × 1 | −0.055383 | 9 | 0.019247 | 14 | 4 × 3 × 2 × 2 × 1 | −0.24808 | 77 | 0.03962 |

| 15 | 4 × 4 × 3 × 2 × 1 | 0.40091 | 10 | 0.034651 | 15 | 4 × 4 × 3 × 2 × 1 | 0.92829 | 116 | 0.023847 |

| 16 | 4 × 5 × 3 × 2 × 1 | 0.96214 | 18 | 0.0026711 | 16 | 4 × 5 × 3 × 2 × 1 | 0.93969 | 64 | 0.037863 |

| 17 | 4 × 7 × 4 × 2 × 1 | 0.93932 | 15 | 0.002873 | 17 | 4 × 7 × 4 × 2 × 1 | 0.9381 | 6 | 0.034819 |

| 18 | 4 × 10 × 4 × 2 × 1 | 0.93649 | 15 | 0.0023631 | 18 | 4 × 10 × 4 × 2 × 1 | 0.95615 | 124 | 0.025836 |

| Quasi-Newton backpropagation (BFG) algorithm | | | | | Scaled conjugate gradient backpropagation (SCG) algorithm | | | | |

| 1 | 4 × 1 × 1 | 0.97722 | 13 | 0.002691 | 1 | 4 × 1 × 1 | 0.97859 | 15 | 0.0017497 |

| 2 | 4 × 2 × 1 | 0.92073 | 32 | 0.0044498 | 2 | 4 × 2 × 1 | 0.92591 | 17 | 0.012217 |

| 3 | 4 × 3 × 1 | 0.9232 | 16 | 0.007515 | 3 | 4 × 3 × 1 | 0.88958 | 12 | 0.0026530 |

| 4 | 4 × 4 × 1 | 0.97149 | 14 | 0.0024259 | 4 | 4 × 4 × 1 | 0.96801 | 15 | 0.00099602 |

| 5 | 4 × 8 × 1 | 0.78725 | 25 | 0.0044701 | 5 | 4 × 8 × 1 | 0.77885 | 24 | 0.0061669 |

| 6 | 4 × 10 × 1 | 0.89511 | 42 | 0.0075095 | 6 | 4 × 10 × 1 | 0.9683 | 75 | 0.0030131 |

| 7 | 4 × 1 × 1 × 1 | 0.9739 | 16 | 0.0023739 | 7 | 4 × 1 × 1 × 1 | 0.98276 | 21 | 0.0005 |

| 8 | 4 × 2 × 2 × 1 | 0.94194 | 45 | 0.0025083 | 8 | 4 × 2 × 2 × 1 | 0.9474 | 13 | 0.0070227 |

| 9 | 4 × 3 × 2 × 1 | 0.89233 | 7 | 0.042685 | 9 | 4 × 3 × 2 × 1 | −0.727 | 7 | 0.04 |

| 10 | 4 × 5 × 2 × 1 | 0.96475 | 27 | 0.004755 | 10 | 4 × 5 × 2 × 1 | 0.97979 | 27 | 0.0015945 |

| 11 | 4 × 8 × 4 × 1 | 0.93033 | 18 | 0.022565 | 11 | 4 × 8 × 4 × 1 | 0.91423 | 14 | 0.01604 |

| 12 | 4 × 10 × 4 × 1 | 0.90857 | 14 | 0.00099236 | 12 | 4 × 10 × 4 × 1 | 0.81501 | 6 | 0.04416 |

| 13 | 4 × 2 × 2 × 2 × 1 | 0.84936 | 13 | 0.026458 | 13 | 4 × 2 × 2 × 2 × 1 | 0.055 | 12 | 0.047 |

| 14 | 4 × 3 × 2 × 2 × 1 | 0.96006 | 14 | 0.013682 | 14 | 4 × 3 × 2 × 2 × 1 | −0.0726 | 7 | 0.040627 |

| 15 | 4 × 4 × 3 × 2 × 1 | 0.057505 | 12 | 0.040046 | 15 | 4 × 4 × 3 × 2 × 1 | 0.899 | 11 | 0.0083887 |

| 16 | 4 × 5 × 3 × 2 × 1 | 0.90791 | 9 | 0.017959 | 16 | 4 × 5 × 3 × 2 × 1 | 0.97423 | 28 | 0.0096273 |

| 17 | 4 × 7 × 4 × 2 × 1 | 0.78003 | 9 | 0.032067 | 17 | 4 × 7 × 4 × 2 × 1 | 0.463 | 10 | 0.025448 |

| 18 | 4 × 10 × 4 × 2 × 1 | 0.96165 | 20 | 0.0037442 | 18 | 4 × 10 × 4 × 2 × 1 | 0.67 | 14 | 0.014792 |

References

1. Khorasani, A.M.; Yazdi, M.R.S.; Safizadeh, M.S. Analysis of machining parameters effects on surface roughness: A review. Int. J. Comput. Mater. Sci. Surf. Eng. 2012, 5, 68–84.

2. Lu, C. Study on prediction of surface quality in machining process. J. Mater. Processing Technol. 2008, 205, 439–450.

3. Al Hazza, M.H.; Adesta, E.Y. Investigation of the effect of cutting speed on the Surface Roughness parameters in CNC End Milling using Artificial Neural Network. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Kuala Lumpur, Malaysia, 2–4 July 2013; p. 012089.

4. Alharthi, N.H.; Bingol, S.; Abbas, A.T.; Ragab, A.E.; El-Danaf, E.A.; Alharbi, H.F. Optimizing cutting conditions and prediction of surface roughness in face milling of AZ61 using regression analysis and artificial neural network. Adv. Mater. Sci. Eng. 2017, 2017, 7560468.

5. Beatrice, B.A.; Kirubakaran, E.; Thangaiah, P.R.J.; Wins, K.L.D. Surface roughness prediction using artificial neural network in hard turning of AISI H13 steel with minimal cutting fluid application. Procedia Eng. 2014, 97, 205–211.

6. Hossain, M.I.; Amin, A.N.; Patwari, A.U. Development of an artificial neural network algorithm for predicting the surface roughness in end milling of Inconel 718 alloy. In Proceedings of the 2008 International Conference on Computer and Communication Engineering, Kuala Lumpur, Malaysia, 13–15 May 2008; pp. 1321–1324.

7. Huang, B.P.; Chen, J.C.; Li, Y. Artificial-neural-networks-based surface roughness Pokayoke system for end-milling operations. Neurocomputing 2008, 71, 544–549.

8. Kadirgama, K.; Noor, M.; Zuki, N.; Rahman, M.; Rejab, M.; Daud, R.; Abou-El-Hossein, K. Optimization of surface roughness in end milling on mould aluminium alloys (AA6061-T6) using response surface method and radian basis function network. Jordan J. Mech. Ind. Eng. 2008, 2, 209–214.

9. Pal, S.K.; Chakraborty, D. Surface roughness prediction in turning using artificial neural network. Neural Comput. Appl. 2005, 14, 319–324.

10. Ezugwu, E.; Fadare, D.; Bonney, J.; Da Silva, R.; Sales, W. Modelling the correlation between cutting and process parameters in high-speed machining of Inconel 718 alloy using an artificial neural network. Int. J. Mach. Tools Manuf. 2005, 45, 1375–1385.

11. Radha Krishnan, B.; Vijayan, V.; Parameshwaran Pillai, T.; Sathish, T. Influence of surface roughness in turning process—An analysis using artificial neural network. Trans. Can. Soc. Mech. Eng. 2019, 43, 509–514.

12. Vrabel, M.; Mankova, I.; Beno, J.; Tuharský, J. Surface roughness prediction using artificial neural networks when drilling Udimet 720. Procedia Eng. 2012, 48, 693–700.

13. Saric, T.; Simunovic, G.; Simunovic, K. Use of neural networks in prediction and simulation of steel surface roughness. Int. J. Simul. Model. 2013, 12, 225–236.

14. Kumar, R.; Chauhan, S. Study on surface roughness measurement for turning of Al 7075/10/SiCp and Al 7075 hybrid composites by using response surface methodology (RSM) and artificial neural networking (ANN). Measurement 2015, 65, 166–180.

15. Muñoz-Escalona, P.; Maropoulos, P.G. Artificial neural networks for surface roughness prediction when face milling Al 7075-T7351. J. Mater. Eng. Perform. 2010, 19, 185–193.

16. Fang, N.; Pai, P.S.; Edwards, N. Neural network modeling and prediction of surface roughness in machining aluminum alloys. J. Comput. Commun. 2016, 4, 1–9.

17. Karagiannis, S.; Stavropoulos, P.; Ziogas, C.; Kechagias, J. Prediction of surface roughness magnitude in computer numerical controlled end milling processes using neural networks, by considering a set of influence parameters: An aluminium alloy 5083 case study. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2014, 228, 233–244.

18. Zain, A.M.; Haron, H.; Sharif, S. Prediction of surface roughness in the end milling machining using Artificial Neural Network. Expert Syst. Appl. 2010, 37, 1755–1768.

19. Zhong, Z.; Khoo, L.; Han, S. Prediction of surface roughness of turned surfaces using neural networks. Int. J. Adv. Manuf. Technol. 2006, 28, 688–693.

20. Benardos, P.; Vosniakos, G.C. Prediction of surface roughness in CNC face milling using neural networks and Taguchi’s design of experiments. Robot. Comput.-Integr. Manuf. 2002, 18, 343–354.

21. Eser, A.; Aşkar Ayyıldız, E.; Ayyıldız, M.; Kara, F. Artificial intelligence-based surface roughness estimation modelling for milling of AA6061 alloy. Adv. Mater. Sci. Eng. 2021, 2021, 5576600.

22. Vardhan, M.V.; Sankaraiah, G.; Yohan, M. Prediction of surface roughness & material removal rate for machining of P20 steel in CNC milling using artificial neural networks. Mater. Today Proc. 2018, 5, 18376–18382.

23. Asiltürk, I.; Akkuş, H. Determining the effect of cutting parameters on surface roughness in hard turning using the Taguchi method. Measurement 2011, 44, 1697–1704.

24. Bagci, E.; Aykut, Ş. A study of Taguchi optimization method for identifying optimum surface roughness in CNC face milling of cobalt-based alloy (stellite 6). Int. J. Adv. Manuf. Technol. 2006, 29, 940.

25. Ghani, J.A.; Choudhury, I.; Hassan, H. Application of Taguchi method in the optimization of end milling parameters. J. Mater. Processing Technol. 2004, 145, 84–92.

26. Özsoy, N. Experimental investigation of surface roughness of cutting parameters in T6 aluminum alloy milling process. Int. J. Comput. Exp. Sci. Eng. (IJCESEN) 2019, 5, 105–111.

27. Ranganath, M.; Vipin, M.R.; Prateek, N. Optimization of surface roughness in CNC turning of aluminium 6061 using taguchi techniques. Int. J. Mod. Eng. Res. 2015, 5, 42–50.

28. Kovač, P. Design of Experiment; University of Novi Sad, Faculty of Technical Sciences: Novi Sad, Serbia, 2015.

29. Košarac, A.; Mlađenović, C.; Zeljković, M. Prediction of surface roughness in end milling using artificial neural network. In Proceedings of the International scientific conference ETIKUM 2021, Novi Sad, Serbia, 2–4 December 2021.

30. Ficko, M.; Begic-Hajdarevic, D.; Cohodar Husic, M.; Berus, L.; Cekic, A.; Klancnik, S. Prediction of Surface Roughness of an Abrasive Water Jet Cut Using an Artificial Neural Network. Materials 2021, 14, 3108.

31. Miljković, Z.; Aleksendrić, D. Artificial Neural Networks, Solved Exercises and Elements of Theory; Faculty of Mechanical Engineering: Belgrade, Serbia, 2009.

32. Sanjay, C.; Jyothi, C. A study of surface roughness in drilling using mathematical analysis and neural networks. Int. J. Adv. Manuf. Technol. 2006, 29, 846–852.

| Author, Year | Work Material | Process | Cutting Conditions |

|---|---|---|---|

| Munoz-Escalona, et al. (2010) [15] | AA7075-T7351 | Face milling | Experimental input parameters: Cutting speed (600, 800, 1000 m/min), feed rate (0.1, 0.2, 0.3 mm/tooth), axial depth of cut (3, 3.5, 4 mm). Feature extraction: chip width, and chip thickness. |

| Benardos et al. (2002) [20] | AA Series 2 | Face milling | Experimental input parameters: Cutting speed (300, 500, 700 m/min), feed rate (0.08, 0.14, 0.2 mm/tooth), depth of cut (0.25, 0.75, 1.2 mm), tool engagement (30%, 60%, 100%), cutting fluid (yes, no). |

| Alharthi et al. (2017) [4] | AZ61 magnesium alloy | Face milling | Experimental input parameters: Cutting speed (500, 1000, 1500, 2000 m/min), feed rate (50, 100, 150, 200 mm/min), depth of cut (0.5, 1, 1.5, 2 mm). |

| Šarić et al. (2013) [13] | S235JRG2 steel | Face milling | Experimental input parameters: Number of revolutions (400, 600, 800 rpm), feed rate (100, 300, 500 mm/min), depth of cut (0.5, 1, 1.5 mm), cooling /lubricating technique: without cooling, through the tool, outside cooling. |

| Kadirgama et al. (2008) [8] | AA6061-T6 | End milling | Experimental input parameters: Cutting speed (100, 140, 180 m/min), feed rate (0.1, 0.15, 0.2 mm/rev), axial depth of cut (0.1, 0.15, 0.2 mm), radial depth of cut (2, 3.5, 5 mm). |

| Huang et al. (2008) [7] | AA6061 | End milling | Experimental input parameters: Cutting speed (1750, 1800, 1850, 1900, 2050, 2100, 2200, 2250, 2300, 2400, 2500 rpm), depth of cut (0.04, 0.05, 0.06, 0.07, 0.08) and feed rate (6, 8, 10, 12, 13, 14, 15, 16, 17, 18, 19, 20/min). |

| Karagiannis et al. (2014) [17] | AA5083 | End milling | Experimental input parameters: Cutting speed (5000, 6000, 7000 rpm), depth of cut (0.5, 1, 1.5 mm), and feed rate (0.05, 0.08, 0.1 mm/tooth) Tool geometry parameters: core diameter (%), flute angle (°), rake angle (°), first relief angle (°) and second relief angle (°). Output parameters: Ra, Ry, Rz. |

| Hossain et al. (2008) [6] | Inconel 718 | End milling | Experimental input parameters: Cutting speed (20, 30, 40 m/min), axial depth of cut (0.4, 0.6, 0.8 mm) and feed rate (0.04, 0.075, 0.11 mm/tooth). |

| Al Hazza et al. (2013) [3] | AISI H13 | End milling | Experimental input parameters: Cutting speed 150 up to 250 m/min, feed rate 0.05–0.15 mm/rev and depth of cut 0.1–0.2 mm. Output parameters: Ra, Rt, Rz, Rq. |

| Zain et al. (2010) [18] | Titanium alloy (Ti-6Al-4V) | End milling | Experimental input parameters: Cutting speed (124.53, 130, 144.22, 160, 167.03 m/min), feed rate (0.025, 0.03, 0.046, 0.07, 0.083 mm/tooth), radial rake angle (6.2, 7, 9.5, 13.0, 14.8°). |

| Vardhan et al. (2018) [22] | P20 | Milling | Experimental input parameters: Nose radius (0.8, 1.2 mm), cutting speed (75, 80, 85, 90, 95 m/min), feed rate (0.1, 0.125, 0.75, 1, 1.25, 1.5 mm/tooth), axial depth of cut (0.5, 0.5, 0.8 mm), radial depth of cut (0.3, 0.4, 0.5, 0.6, 0.7 mm). Output parameters: material removal rate (MRR) and surface roughness (Ra). |

| Eser et al. (2021) [21] | AA6061 | Milling | Experimental input parameters: Cutting speed (100, 150, 200 m/min), depth of cut (1, 1.5, 2 mm) and feed rate (0.1, 0.15, 0.2 mm/rev) |

| Fang et al. (2016) [16] | AA 2024-T351 | Turning | Experimental input parameters: Cutting speed (150, 250, 350 m/min), depth of cut (0.8 mm constant), tool nose radius (0.8 mm constant), feed rate (varied at five levels based on the ratio to the tool radius: 1.0, 1.5, 2.0, 2.5, and 3.0). |

| Krishnan et al. (2019) [11] | AA6063 | Turning | Experimental input parameters: Cutting speed (2000 rpm), feed rate (0.1 mm/rev), depth of cut (0.5 mm). Feature extraction: frequency range grayscale value, major peak frequency (F1), and the principal component magnitude squared (F2). |

| Kumar et al. (2015) [14] | AA7075/10/SiCp and AA7075 Hybrid Composites | Turning | Experimental input parameters: Cutting speed (80, 110, 140, 170 m/min), feed rate (0.05, 0.1, 0.15, 0.2 mm/rev), approaching angle (45, 60, 75, 90°). Experiments were conducted without cooling/lubricating media (dry cutting). |

| Zhong et al. (2006) [19] | Aluminum and copper | Turning | Experimental input parameters: Tool insert grade (TiAlN coated carbide, PCD), tool insert nose radius (0.2, 0.4, 0.8 mm), tool insert rake angle (0, +5, +15 deg), workpiece material (aluminum, copper), cutting speed (500, 1000, 2500 rev/min), feed rate (0.01, 0.1, 0.2 rev/min), depth of cut (0.05, 0.5, 1 mm). Output parameters: Surface roughness parameters Ra, Rt. |

| Pal et al. (2005) [9] | Mild steel | Turning | Experimental input parameters: Cutting speed (325, 420, 550 m/min), depth of cut (0.2, 0.5, 0.8 mm) and feed rate (0.04, 0.1, 0.2 mm/rev). |

| Beatrice et al. (2014) [5] | Hardened steel H13 | Turning | Experimental input parameters: Feed rate (0.05, 0.075, 0.1 mm/rev), cutting speed (75, 95, 115 m/min), depth of cut (0.5, 0.75, 1 mm). |

| Ezugwu et al. (2005) [10] | Inconel 718 | Turning | Experimental input parameters: Cutting speed (20, 30, 40, 50 m/min), feed rate (0.25, 0.30 mm/rev), cutting time (312, 774 s) and the coolant delivery pressure (110, 150, 203 bar). Seven output parameters were observed: cutting force (Fz) and feed force (Fx), power consumption (P), surface roughness (Ra), average flank wear (VB), maximum flank wear (VBmax), nose wear (VC). |

| Vrabel et al. (2012) [12] | Udimet 720 | Drilling | Experimental input parameters: Feed rate, cutting speed, thrust force. Output parameters: drill flank wear VB and surface roughness. |

| Author, Year | ANN Types, Training Algorithm, Network Topology | Dataset | Remarks |

|---|---|---|---|

| Munoz-Escalona, et al. (2010) [15] | The radial basis function NN (RBF NN), generalized regression (GRNN) networks, and feed forward back propagation neural network (FFBP NN) were compared. | Taguchi DoE, L9 orthogonal array. | The presented results show a good correlation between the surface roughness and thickness of the chip. FFBP neural network shows better results than radial basis network. |

| Benardos et al. (2002) [20] | FFBP NN Training algorithm: Levenberg–Marquardt (LM) Network topology 5-3-1 showed the best performance. | The dataset contained 27 samples (18 used for training, 4 for validation, 5 for testing). | ANN was able to predict the surface roughness with a mean squared error (MSE) equal to 1.86%. |

| Alharthi et al. (2017) [4] | FFBP NN Training algorithms: LM, Momentum Network topology 3-6-1 showed the best performance. | The experiment was conducted based on a full factorial plan. The dataset contained 64 samples (80% training set, 20% testing, and validation set). | This paper analyzed two models: ANN and regression model. The predicted values of Ra were compared to the results of the experiment. Both models could predict Ra with high accuracy. The determination coefficients were 95% for the best neural network and 94% for regression analysis. |

| Šarić et al. (2013) [13] | Several different NN models were analyzed: RBF NN, FFBP NN, modular NN (MNN) Training algorithm—not stated. Optimal network topology—not stated. | Not stated. | Different learning rules and transfer functions were analyzed. For all three NN types the best results were obtained with the sigmoid transfer function. The Delta learning rule provided the best results for FFBP NN and MNN, whereas the normalized-cumulative delta provided the best results for RBF NN. Results show that all networks could be implemented efficiently in surface roughness prediction. |

| Kadirgama et al. (2008) [8] | RBF NN compared to response surface method (RSM). | Not stated. | RBF NN can predict the arithmetic mean roughness more accurately than RSM. |

| Huang et al. (2008) [7] | FFBP NN, Training algorithm—not stated. Network topology 5-8-7-1 showed the best performance. | The dataset contained 336 samples. | After launching the adaptive control function, the arithmetic mean roughness Ra value was smaller. |

| Karagiannis et al. (2014) [17] | FFBP NN Training algorithm—not stated. Network topology 8-7-5-4-3 showed the best performance. | The dataset contained 18 samples based on an L18 (2¹ × 3⁷) orthogonal array. | The network had 3 output parameters: Ra, Ry, Rz. Coefficient of correlation during training R = 1, validation R = 0.89, testing R = 0.93. For enhancement of the FFBP NN model, researchers needed to increase the number of trials. |

| Hossain et al. (2008) [6] | FFBP NN Training algorithm LM Network topology—not stated. | The dataset contained 27 samples. | NN had very good predictive performance. |

| Al Hazza et al. (2013) [3] | FFBP NN Training algorithm LM Network topology 3-20-4-4 showed the best performance. | The dataset contained 20 samples, a data ratio of 70:15:15. | Predicted and experimental data were in good agreement. |

| Zain et al. (2010) [18] | FFBP NN Training algorithm: gradient descent with momentum and adaptive learning rate backpropagation. Network topology 3-1-1 showed the best performance. | The dataset contained 24 samples, with a data ratio of 85:15. | NN model could predict Ra using a small dataset. The small number of samples was not a hindrance to obtaining good prediction results. |

| Vardhan et al. (2018) [22] | FFBP NN Training algorithm—not stated. Network topology 5-8-8-2 showed the best performance. | The dataset contained 50 samples based on an L50 (2¹ × 5⁴) orthogonal array. | The developed ANN network predicted the arithmetic mean roughness Ra and MRR with a deviation of 4.3785% and 17.45823% compared to the test data set. |

| Eser et al. (2021) [21] | FFBP NN Five training algorithms: Broyden–Fletcher–Goldfarb–Shanno (BFGS), central pattern generator (CPG), Levenberg–Marquardt (LM), resilient backpropagation (RP), scaled conjugate gradient (SCG) Network topology—not stated. | The dataset contained 27 samples. | The results of comparing RSM and ANN models were presented in this research. Both models provided results very close to experimentally obtained ones. The ANN trained using the SCG algorithm showed the best results. |

| Fang et al. (2016) [16] | Two NN models were used: FFBP NN and RBF NN. Training algorithm and network topology—not stated. | The dataset contained 45 samples (38 training sets, 7 test sets). | This paper presented a comparison of the prediction of the arithmetic mean roughness (Ra) obtained using the RBF and MLP models. The second model provided better results, especially in the prediction of maximum roughness height. |

| Krishnan et al. (2019) [11] | FFBP NN Training algorithm—not stated. Network topology 6-10-1 showed the best performance. | The dataset contained 40 samples. | The shown methodology used an ANN to detect the errors in the surface roughness of the materials. |

| Kumar et al. (2015) [14] | FFBP NN Training algorithm—variable learning rate backpropagation (GDX) The neural network had 2 hidden layers and 10 neurons. | The experiment was conducted based on a full factorial plan. The dataset contained 64 samples. | Response surface methodology (RSM) was used for the analysis of the experimental data. ANN prediction and RSM were compared to experimental data. The correlation coefficient of the RSM to the experiment was 0.9972 and that of the ANN to the experiment 0.99571. Results showed that the ANN had a greater deviation than the RSM prediction. |

| Zhong et al. (2006) [19] | FFBP NN Training algorithm—not stated. Network topology 7-14-18-2 showed the best performance | The dataset contained 304 samples (274 training sets, 30 testing sets). | Surface roughness parameters predicted by the neural network were in good agreement with experimentally obtained ones. |

| Pal et al. (2005) [9] | FFBP NN, Training algorithm—not stated. NN 5-5-1 showed the best performance | The dataset had 27 samples; 20 were used for training and 7 for testing. | Predicted surface roughness was compared with experimental data and was in good agreement. |

| Beatrice et al. (2014) [5] | FFBP NN Training algorithm LM. Network topology 3-7-7-1 showed the best performance in surface roughness prediction. | The dataset contained 27 samples based on the L27 orthogonal array. Out of that number 23 samples were used for training and 4 for testing. | A neural network model was developed using a small dataset. Despite this, the model predicted the arithmetic mean roughness with considerable accuracy, since the error between the NN model simulation results and experimentally obtained ones was less than 7%. |

| Ezugwu et al. (2005) [10] | FFBP NN Training algorithms: LM and Bayesian regularization (BR). Network topology 5-10-10-7 trained by the BR algorithm showed the best performance. | The dataset contained 102 samples. LM algorithm dataset ratio: 50:25:25 BR algorithm dataset ratio 67:33. | Two neural networks were made, having one and two layers, 10 and 15 neurons in each, trained by two algorithms, LM and BR. The best results were shown by a neural network trained using the BR algorithm, with two layers and 15 neurons in the hidden layer. |

| Vrabel et al. (2012) [12] | FFBP NN Training algorithm—not stated. NN 3-4-1 and 3-5-1 showed the best performance in tool wear prediction and NN 4-6-1 and 4-6-4-1 in surface roughness prediction. | Dataset had 42 samples; 32 were used for training and 10 for testing. | NN 3-5-1 was used in the prediction of tool wear. The average RMS error was 12.7%. NN 4-6-4-1 was used for the prediction of surface roughness, with an average RMS error of 2.64%. |

| Factor | Level 1 | Level 2 | Level 3 |

|---|---|---|---|

| Cutting speed (m/min) | 120 | 160 | 200 |

| Axial depth of cut (mm) | 0.6 | 0.8 | 1 |

| Feed per tooth (mm/tooth) | 0.05 | 0.1 | 0.15 |

| Cooling/lubricating technique | Emulsion | Dry cut | Air |
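The conclusions state that the full factorial plan was used to simulate and evaluate the trained network. With the four factors above at three levels each, that plan has 3⁴ = 81 combinations, against the 27 trials of the Taguchi design. A small sketch that generates this grid from the level table follows; the dictionary keys are illustrative names chosen here, not identifiers from the study.

```python
from itertools import product

# Factor levels from the table above (key names are hypothetical)
LEVELS = {
    "cutting_speed_m_min": [120, 160, 200],
    "axial_depth_mm": [0.6, 0.8, 1.0],
    "feed_mm_tooth": [0.05, 0.1, 0.15],
    "cooling": ["Emulsion", "Dry cut", "Air"],
}


def full_factorial(levels):
    """Every combination of factor levels, one dict per run (last factor varies fastest)."""
    names = list(levels)
    return [dict(zip(names, combo)) for combo in product(*levels.values())]


grid = full_factorial(LEVELS)
print(len(grid))  # 81 = 3**4 runs, versus 27 in the L27 orthogonal array
```

Each of these 81 input rows could then be passed to the trained network to obtain a predicted Ra for every level combination.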

| Element | Fe | Si | Cu | Mn | Mg | Zn | Cr | Ti | Other Each | Al |

|---|---|---|---|---|---|---|---|---|---|---|

| % | 0.5 | 0.4 | 1.2–2 | 0.3 | 2.1–2.9 | 5.1–6.1 | 0.21 | 0.21 | 0.15 | Balance |

| Trial No. | Cutting Speed (m/min) | Axial Depth of Cut (mm) | Feed per Tooth (mm/tooth) | Cooling/Lubricating Technique | Ra 1 (µm) | Ra 2 (µm) | Ra 3 (µm) | Average Ra (µm) | S/N Ratio (dB) |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 120 | 0.6 | 0.05 | Emulsion | 0.348 | 0.361 | 0.345 | 0.3513 | 9.085613 |

| 2 | 120 | 0.6 | 0.1 | Dry machining | 0.477 | 0.476 | 0.476 | 0.476 | 6.441781 |

| 3 | 120 | 0.6 | 0.15 | Air cooling | 0.936 | 0.925 | 0.926 | 0.929 | 0.639686 |

| 4 | 120 | 0.8 | 0.05 | Dry machining | 0.264 | 0.262 | 0.259 | 0.261 | 11.68051 |

| 5 | 120 | 0.8 | 0.1 | Air cooling | 0.515 | 0.514 | 0.516 | 0.515 | 5.763855 |

| 6 | 120 | 0.8 | 0.15 | Emulsion | 0.973 | 0.971 | 0.973 | 0.972 | 0.243697 |

| 7 | 120 | 1 | 0.05 | Air cooling | 0.261 | 0.259 | 0.259 | 0.260 | 11.71168 |

| 8 | 120 | 1 | 0.1 | Emulsion | 0.574 | 0.689 | 0.633 | 0.632 | 3.98291 |

| 9 | 120 | 1 | 0.15 | Dry machining | 0.868 | 0.865 | 0.87 | 0.868 | 1.232942 |

| 10 | 160 | 0.6 | 0.05 | Emulsion | 0.327 | 0.39 | 0.40 | 0.369 | 8.665359 |

| 11 | 160 | 0.6 | 0.1 | Dry machining | 0.529 | 0.499 | 0.501 | 0.510 | 5.854275 |

| 12 | 160 | 0.6 | 0.15 | Air cooling | 0.939 | 0.929 | 0.935 | 0.934 | 0.589963 |

| 13 | 160 | 0.8 | 0.05 | Dry machining | 0.319 | 0.319 | 0.315 | 0.318 | 9.960567 |

| 14 | 160 | 0.8 | 0.1 | Air cooling | 0.566 | 0.569 | 0.563 | 0.566 | 4.943671 |

| 15 | 160 | 0.8 | 0.15 | Emulsion | 1.082 | 1.088 | 1.083 | 1.084 | −0.70326 |

| 16 | 160 | 1 | 0.05 | Air cooling | 0.309 | 0.301 | 0.306 | 0.305 | 10.30452 |

| 17 | 160 | 1 | 0.1 | Emulsion | 0.825 | 0.809 | 0.809 | 0.814 | 1.783956 |

| 18 | 160 | 1 | 0.15 | Dry machining | 0.978 | 0.973 | 0.975 | 0.975 | 0.216939 |

| 19 | 200 | 0.6 | 0.05 | Emulsion | 0.434 | 0.419 | 0.413 | 0.422 | 7.493751 |

| 20 | 200 | 0.6 | 0.1 | Dry machining | 0.558 | 0.598 | 0.575 | 0.577 | 4.776484 |

| 21 | 200 | 0.6 | 0.15 | Air cooling | 0.923 | 0.93 | 0.919 | 0.924 | 0.686561 |

| 22 | 200 | 0.8 | 0.05 | Dry machining | 0.3 | 0.306 | 0.296 | 0.300 | 10.43829 |

| 23 | 200 | 0.8 | 0.1 | Air cooling | 0.53 | 0.514 | 0.519 | 0.521 | 5.663246 |

| 24 | 200 | 0.8 | 0.15 | Emulsion | 1.088 | 1.095 | 1.088 | 1.090 | −0.75119 |

| 25 | 200 | 1 | 0.05 | Air cooling | 0.284 | 0.282 | 0.287 | 0.284 | 10.92344 |

| 26 | 200 | 1 | 0.1 | Emulsion | 0.723 | 0.732 | 0.725 | 0.727 | 2.773295 |

| 27 | 200 | 1 | 0.15 | Dry machining | 0.938 | 0.938 | 0.943 | 0.940 | 0.540524 |

| Factor | Level 1 | Level 2 | Level 3 | Delta | Rank |

|---|---|---|---|---|---|

| Cutting speed | 5.64 | 4.62 | 4.73 | 1.02 | 3 |

| Axial depth of cut | 4.92 | 5.25 * | 4.83 | 0.42 | 4 |

| Feed per tooth | 10.03 * | 4.67 | 0.30 | 9.73 | 1 |

| Cooling/lubricating techniques | 3.62 | 5.68 | 5.69 * | 2.07 | 2 |

| | Speed | Depth of Cut | Feed | Cooling/Lubricating | S/N (dB) | Ra Calculated (µm) | Ra Test (µm) |

|---|---|---|---|---|---|---|---|

| Level | 1 | 2 | 1 | 3 | 11.619 | 0.262458 | 0.2556 |
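The response table and the optimum above can be recomputed from the S/N column of the experimental table. The sketch assumes the usual Taguchi procedure: average the S/N ratios at each level of each factor, rank factors by delta (maximum minus minimum level mean), pick the level with the highest mean S/N (higher is better for a smaller-is-better response), and predict the optimum with the additive model S/N_opt = grand mean + Σ(best level mean - grand mean). The calculated Ra is then obtained by inverting the smaller-is-better form for a single value, S/N = -20·log10(Ra), which is an assumption that happens to match the tabulated 0.262458 µm. Level indices in the code are 0-based.

```python
from statistics import mean

# S/N ratios (dB) of the 27 trials, in trial order, copied from the experimental table
SN = [
    9.085613, 6.441781, 0.639686, 11.68051, 5.763855, 0.243697, 11.71168, 3.98291, 1.232942,
    8.665359, 5.854275, 0.589963, 9.960567, 4.943671, -0.70326, 10.30452, 1.783956, 0.216939,
    7.493751, 4.776484, 0.686561, 10.43829, 5.663246, -0.75119, 10.92344, 2.773295, 0.540524,
]

# Level index (0, 1, 2) of every factor in each trial, read off the experimental table.
# Cooling/lubricating: 0 = emulsion, 1 = dry, 2 = air (order of the factor-level table)
FACTORS = {
    "cutting speed": [i // 9 for i in range(27)],
    "axial depth of cut": [(i // 3) % 3 for i in range(27)],
    "feed per tooth": [i % 3 for i in range(27)],
    "cooling/lubricating": [0, 1, 2, 1, 2, 0, 2, 0, 1] * 3,
}


def response_table(sn, factors):
    """Mean S/N per level, delta (max - min) and rank for each factor."""
    table = {}
    for name, levels in factors.items():
        means = [mean(s for s, lv in zip(sn, levels) if lv == k) for k in range(3)]
        table[name] = {"means": means, "delta": max(means) - min(means)}
    ordered = sorted(table, key=lambda n: table[n]["delta"], reverse=True)
    for rank, name in enumerate(ordered, start=1):
        table[name]["rank"] = rank
    return table


def predict_optimum(sn, table):
    """Additive-model prediction at the best (highest mean S/N) level of each factor."""
    grand = mean(sn)
    best = {name: row["means"].index(max(row["means"])) for name, row in table.items()}
    sn_opt = grand + sum(max(row["means"]) - grand for row in table.values())
    ra_opt = 10 ** (-sn_opt / 20)  # invert S/N = -20 * log10(Ra)
    return best, sn_opt, ra_opt


if __name__ == "__main__":
    table = response_table(SN, FACTORS)
    for name, row in table.items():
        print(name, [round(m, 2) for m in row["means"]], round(row["delta"], 2), row["rank"])
    best, sn_opt, ra_opt = predict_optimum(SN, table)
    print(best, round(sn_opt, 3), round(ra_opt, 4))
```

Run as a script, it should closely reproduce the level means, deltas and ranks of the response table, and an optimum at levels 1, 2, 1 and 3 (0-based: 0, 1, 0, 2) with a predicted S/N of about 11.619 dB and Ra of about 0.2625 µm.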

| Factor | DOF | Sum of Squares | Variance | F | Percent |

|---|---|---|---|---|---|

| A (cutting speed) | 2 | 0.024 | 0.012 | 8.347 | 1.163% |

| B (axial depth of cut) | 2 | 0.005 | 0.003 | 1.884 | 0.263% |

| C (feed per tooth) | 2 | 1.914 | 0.957 | 659.569 | 91.932% |

| D (cooling/lubricating technique) | 2 | 0.112 | 0.056 | 38.654 | 5.388% |

| Error | 18 | 0.03 | 0.001 | | 1.254% |

| Total | 26 | 2.08 | | | 100% |
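The ANOVA table can be reproduced, under stated assumptions, from the 27 average Ra values of the experimental table. For each factor, the sum of squares is the number of trials per level times Σ(level mean - grand mean)²; the variation not explained by the four main effects is pooled as error with 26 - 8 = 18 degrees of freedom; F is the factor variance divided by the error variance; and the percent contribution is the factor sum of squares divided by the total. The letters A to D are assumed to follow the order of the factor-level table (A = cutting speed, B = axial depth of cut, C = feed per tooth, D = cooling/lubricating technique). This is an illustrative sketch, not the authors' software.

```python
from statistics import mean

# Average Ra (µm) of the 27 trials, in trial order, from the experimental table
RA = [
    0.3513, 0.476, 0.929, 0.261, 0.515, 0.972, 0.260, 0.632, 0.868,
    0.369, 0.510, 0.934, 0.318, 0.566, 1.084, 0.305, 0.814, 0.975,
    0.422, 0.577, 0.924, 0.300, 0.521, 1.090, 0.284, 0.727, 0.940,
]

# Level index per trial (assumed mapping: A = speed, B = depth, C = feed, D = cooling)
FACTORS = {
    "A": [i // 9 for i in range(27)],
    "B": [(i // 3) % 3 for i in range(27)],
    "C": [i % 3 for i in range(27)],
    "D": [0, 1, 2, 1, 2, 0, 2, 0, 1] * 3,
}


def anova(y, factors):
    """Main-effects ANOVA for an orthogonal array; residual variation is pooled as error."""
    grand = mean(y)
    ss_total = sum((v - grand) ** 2 for v in y)
    rows = {}
    for name, levels in factors.items():
        ss = 0.0
        for k in sorted(set(levels)):
            group = [v for v, lv in zip(y, levels) if lv == k]
            ss += len(group) * (mean(group) - grand) ** 2
        rows[name] = {"dof": len(set(levels)) - 1, "ss": ss}
    dof_error = len(y) - 1 - sum(r["dof"] for r in rows.values())
    ss_error = ss_total - sum(r["ss"] for r in rows.values())
    ms_error = ss_error / dof_error
    for r in rows.values():
        r["ms"] = r["ss"] / r["dof"]  # "Variance" column
        r["F"] = r["ms"] / ms_error
        r["percent"] = 100 * r["ss"] / ss_total
    rows["Error"] = {"dof": dof_error, "ss": ss_error, "percent": 100 * ss_error / ss_total}
    return rows, ss_total


if __name__ == "__main__":
    rows, ss_total = anova(RA, FACTORS)
    for name, r in rows.items():
        print(name, r["dof"], round(r["ss"], 3), round(r.get("F", float("nan")), 1), f'{r["percent"]:.2f}%')
    print("Total", len(RA) - 1, round(ss_total, 3))
```

With these inputs the sums of squares come out close to the tabulated 0.024, 0.005, 1.914, 0.112 and 2.08, which supports the assumed letter mapping.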

| No. | Training Algorithm | ANN Architecture | MSE |

|---|---|---|---|

| 1 | SCG | 4 (1) 1 | 0.0053 |

| 2 | SCG | 4 (3) 1 | 0.0305 |

| 3 | SCG | 4 (4) 1 | 0.00997 |

| 4 | SCG | 4 (2-2) 1 | 0.0225 |

| 5 | SCG | 4 (5-2) 1 | 0.0063 |

| 6 | SCG | 4 (5-2-3) 1 | 0.0102 |

| 7 | BFG | 4 (1) 1 | 0.0127 |

| 8 | BFG | 4 (10-4) 1 | 0.0172 |

| 9 | BFG | 4 (10-4-2) 1 | 0.015 |

| 10 | GDX | 4 (1) 1 | 0.0043 |

| 11 | GDX | 4 (3) 1 | 0.0194 |

| 12 | GDX | 4 (8) 1 | 0.0680 |

| 13 | RP | 4 (1) 1 | 0.00348 |

| 14 | RP | 4 (8-4) 1 | 0.01593 |

| 15 | BR | 4 (10) 1 | 0.0025 |

| 16 | BR | 4 (1-1) 1 | 0.0028 |

| 17 | LM | 4 (5-2) 1 | 0.00823 |

| 18 | LM | 4 (10-4-2) 1 | 0.01287 |
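The trial-and-error search described in the conclusions amounts to a grid over training algorithms and topologies (six algorithms and 18 topologies in Appendix A, 108 models in total) followed by selection of the lowest MSE. A minimal sketch of that selection rule, applied to the MSE values of the summary table above, is shown below; the variable names and the tuple representation of hidden layers are illustrative choices.

```python
from itertools import product

ALGORITHMS = ["LM", "BR", "RP", "GDX", "BFG", "SCG"]
# Hidden-layer sizes of the 18 topologies in Appendix A (4 inputs, 1 output)
HIDDEN = [(1,), (2,), (3,), (4,), (8,), (10,), (1, 1), (2, 2), (3, 2), (5, 2), (8, 4), (10, 4),
          (2, 2, 2), (3, 2, 2), (4, 3, 2), (5, 3, 2), (7, 4, 2), (10, 4, 2)]

# Trial-and-error grid: every training algorithm combined with every topology
candidates = list(product(ALGORITHMS, HIDDEN))

# (algorithm, hidden layers, MSE) rows copied from the summary table
SUMMARY = [
    ("SCG", (1,), 0.0053), ("SCG", (3,), 0.0305), ("SCG", (4,), 0.00997),
    ("SCG", (2, 2), 0.0225), ("SCG", (5, 2), 0.0063), ("SCG", (5, 2, 3), 0.0102),
    ("BFG", (1,), 0.0127), ("BFG", (10, 4), 0.0172), ("BFG", (10, 4, 2), 0.015),
    ("GDX", (1,), 0.0043), ("GDX", (3,), 0.0194), ("GDX", (8,), 0.0680),
    ("RP", (1,), 0.00348), ("RP", (8, 4), 0.01593),
    ("BR", (10,), 0.0025), ("BR", (1, 1), 0.0028),
    ("LM", (5, 2), 0.00823), ("LM", (10, 4, 2), 0.01287),
]


def topology(hidden):
    """Format hidden-layer sizes as an input-hidden-output string, e.g. 4-10-1."""
    return "-".join(str(n) for n in (4, *hidden, 1))


best_alg, best_hidden, best_mse = min(SUMMARY, key=lambda row: row[2])
print(len(candidates), best_alg, topology(best_hidden), best_mse)  # 108 BR 4-10-1 0.0025
```

Selecting on the lowest MSE alone is the rule stated in the text; with only 27 samples, a held-out or cross-validated MSE would be a safer basis for that comparison than a training error.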

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kosarac, A.; Mladjenovic, C.; Zeljkovic, M.; Tabakovic, S.; Knezev, M. Neural-Network-Based Approaches for Optimization of Machining Parameters Using Small Dataset. Materials 2022, 15, 700. https://doi.org/10.3390/ma15030700
