In this study, a modified triangular stereo FTP method is implemented to assess its compatibility with, and performance in, automated dent inspection. In addition, two contributions are presented. First, an automatic band-pass filter is introduced (Section 2.2).

Secondly, a strategy for the reduction of phase unwrapping errors is shown (Section 2.4). All phase-based single-shot methods initially output a wrapped (or modulo 2π) phase. After spatial unwrapping, two approaches can be found in the literature to relate phase to depth. In systems calibrated using the pinhole model [34], the object phase can be used directly to solve the stereo correspondence problem, followed by triangulation [31,32]. Alternatively, depth can be expressed as a function of the *phase shift* of the object, calculated with respect to a *reference plane*, as commonly done in phase-height mapping methods [22,33]. While triangular stereo methods are generally more accurate, suitable for extended depth ranges, and easier to calibrate outside the lab [30,35], here it is shown that using the phase shift reduces unwrapping errors and that this advantage can be brought to triangular stereo methods by means of a *virtual reference plane*.

2.1. Notation and System Geometry

Throughout the article, the pinhole camera model is used for both the camera and the projector [34]. Without loss of generality, the camera optical centre is placed at the world origin $\mathbf{O}_c = [0, 0, 0]^T$ and oriented so that the world z-axis coincides with the camera optical axis. $\mathbf{K}_c$ is the camera intrinsic matrix, while, owing to this placement, its rotation matrix and translation vector are simply $\mathbf{R}_c = \mathbf{I}$ (the 3 × 3 identity matrix) and $\mathbf{t}_c = [0, 0, 0]^T$, respectively. The projector optical centre is placed at $\mathbf{O}_p$ and is freely oriented; its intrinsic matrix, rotation matrix, and translation vector are denoted by $\mathbf{K}_p$, $\mathbf{R}_p$, and $\mathbf{t}_p$, respectively. Lens distortion correction is handled with the radial and tangential model [34], but is not explicitly reported in the following formulae. The camera-projector calibration is assumed to be known, obtained with one of the several methods available in the literature [31,36,37,38]. The described system geometry is represented in Figure 1.

$\Pi_c$ and $\Pi_p$ are the camera and projector image planes, respectively. In particular, $\Pi_c$ is the plane on which the image acquired by the camera lies after distortion correction has been applied, while the distortion-free fringe image chosen as projector input lies on $\Pi_p$. On each image, a pixel coordinate system $(u, v)$ is defined, with $c = [u_c, v_c]^T$ and $p = [u_p, v_p]^T$ being two generic points lying on the camera and projector images, respectively.

A sinusoidal fringe pattern is chosen with fringes perpendicular to the $u$-axis, with period $T_p$ (in pixels) and frequency $f_p = 1/T_p$. A red-coloured stripe is drawn over one central period, following the intensity trend (Figure 2). After projection, the corresponding points can be identified in the camera image using a weighted average of the red intensity values along the $u$-axis, which yields sub-pixel accuracy. The use of a similar centerline was also proposed in [31].
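The sub-pixel localisation of the red stripe can be sketched as an intensity-weighted centroid computed independently for each camera row. This is a minimal illustration, assuming the red channel has already been isolated as a list of rows and lens distortion is ignored; the `threshold` parameter is a hypothetical background cut-off, not a value used in this study.

```python
def stripe_centroid(red_row, threshold=0.0):
    """Sub-pixel u-coordinate of the red stripe in one camera row.

    red_row   -- red-channel intensities along the u-axis, one value per pixel.
    threshold -- hypothetical background level; values at or below it get zero weight.
    Returns None when no pixel exceeds the threshold.
    """
    weights = [max(value - threshold, 0.0) for value in red_row]
    total = sum(weights)
    if total == 0.0:
        return None
    # Intensity-weighted average of the pixel indices (centre of mass along u).
    return sum(u * w for u, w in enumerate(weights)) / total


def stripe_centerline(red_image, threshold=0.0):
    """Apply stripe_centroid to every row v; returns a list of (u, v) pairs."""
    points = []
    for v, row in enumerate(red_image):
        u = stripe_centroid(row, threshold)
        if u is not None:
            points.append((u, v))
    return points


# Usage: the stripe is found in row 0 (centroid 13/6, about 2.167); row 1 is skipped.
print(stripe_centerline([[0, 1, 3, 2, 0], [0, 0, 0, 0, 0]]))
```

Rows without any red response are simply dropped, so the returned centerline may be shorter than the image height.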

The virtual reference plane $\Pi_r$ is defined by the equation $z = z_r$, so that it lies squarely in front of the camera, where $z_r$ is chosen as the average depth of the world points of the red stripe, readily calculated by triangulation.

The aim of FTP is to find the generic 3D point $H$ belonging to the object, observed as $c$ from the camera and as $p$ from the projector, with $A$ being the intersection of the ray $\overline{\mathbf{O}_p p}$ with $\Pi_r$.

2.2. Automatic Band-Pass Filter

In FTP, a band-pass filter must be applied to isolate the fundamental spectrum and filter out the DC component and the upper harmonics [17,22,29]. The band of interest can be identified by the interval $[f_0 - r, f_0 + r]$, where $f_0$ is the fundamental frequency as seen from the camera and $r$ is an appropriate radius. Experimentally, the fundamental spectrum is observed to vary significantly when objects are scanned at different distances, so the band-pass must be selected manually at each scan. This becomes impractical when dealing with a large number of scans at varying distances, a problem that the automatic band-pass filter solves.
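As an illustration of the band-pass step, the following sketch filters a single image row in the frequency domain and keeps only the bins inside $[f_0 - r, f_0 + r]$. It is a toy under stated assumptions: a naive DFT stands in for the FFT, frequencies are expressed in cycles per pixel, and the radius $r = f_0/2$ in the usage line is purely illustrative, not the value adopted in this paper.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform (stands in for an FFT)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def band_pass_row(row, f0, r):
    """Keep only the bins whose signed frequency (cycles/pixel) lies in [f0 - r, f0 + r]."""
    n = len(row)
    filtered = []
    for k, coeff in enumerate(dft(row)):
        freq = k / n if k <= n // 2 else (k - n) / n   # negative frequencies above n/2
        filtered.append(coeff if f0 - r <= freq <= f0 + r else 0j)
    # Result: complex fundamental component, ~ (b/2) * exp(i(2*pi*f0*u + phi)).
    return idft(filtered)


# Usage: one fringe row with f0 = 1/8 cycles/pixel and a constant phase of 0.3 rad.
f0 = 1 / 8
row = [0.5 + 0.5 * math.cos(2 * math.pi * f0 * u + 0.3) for u in range(64)]
g_hat = band_pass_row(row, f0, r=f0 / 2)   # r = f0/2 is an illustrative choice
print(round(cmath.phase(g_hat[0]), 6))     # 0.3
```

The DC term and the negative-frequency copy are discarded, so the argument of the filtered signal gives the wrapped phase directly.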

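The estimation of $f_0$ assumes that the area of major interest is at depth $z_r$ (as calculated in Section 2.1). The intersection of the camera optical axis with $\Pi_r$ is simply $\mathbf{Z} = [0, 0, z_r]^T$, which is projected onto the projector image plane to find $p_0$:

$$p_0 = \pi\left(\mathbf{K}_p\left(\mathbf{R}_p \mathbf{Z} + \mathbf{t}_p\right)\right),$$

where $\pi(\cdot)$ is a function that divides a homogeneous coordinate by its third element. On $\Pi_p$, two points $p_1$ and $p_2$ can be identified around $p_0$ along the $u$-axis, covering a full period $T_p$. Then, $p_1$ is deprojected back onto $\Pi_r$ and, from there, projected to the camera:

$$c_1 = \pi\left(\mathbf{K}_c \mathbf{P}_1\right),$$

where

$$\mathbf{P}_1 = \mathbf{O}_p + \lambda_1 \mathbf{d}_1, \quad \mathbf{d}_1 = \mathbf{R}_p^T \mathbf{K}_p^{-1} \begin{bmatrix} p_1 \\ 1 \end{bmatrix}, \quad \mathbf{O}_p = -\mathbf{R}_p^T \mathbf{t}_p, \quad \lambda_1 = \frac{z_r - O_{p,z}}{d_{1,z}}.$$

The same applies to $p_2$, giving $c_2$. Lens distortion is corrected accordingly in all steps. $c_1$ and $c_2$ lie on $\Pi_c$ at a distance corresponding to the projector period and its orientation. From Pythagorean geometry, $T_c$ is calculated as the projection of the segment $\overline{c_1 c_2}$ onto the $u$-axis. Finally, $f_0 = 1/T_c$, and $r$ is set in the same way throughout this paper.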
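The estimation of $f_0$ can be sketched in plain Python as follows. The sketch assumes distortion-free pinhole models, the world-to-projector convention $\mathbf{x}_p = \mathbf{R}_p \mathbf{X} + \mathbf{t}_p$, and a symmetric choice of $p_1$ and $p_2$ at $\pm T_p/2$ from $p_0$; all helper names (`deproject_to_plane`, `estimate_f0`, and so on) are hypothetical.

```python
def mat_vec(M, x):
    """3x3 matrix times 3-vector."""
    return [sum(M[i][j] * x[j] for j in range(3)) for i in range(3)]


def transpose(M):
    return [[M[j][i] for j in range(3)] for i in range(3)]


def det3(A):
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


def solve3(M, b):
    """Solve M x = b by Cramer's rule (used here for K^-1 [u, v, 1]^T)."""
    d = det3(M)
    x = []
    for col in range(3):
        A = [row[:] for row in M]
        for i in range(3):
            A[i][col] = b[i]
        x.append(det3(A) / d)
    return x


def pi_h(x):
    """pi(.): divide a homogeneous coordinate by its third element."""
    return [x[0] / x[2], x[1] / x[2]]


def deproject_to_plane(p, Kp, Rp, tp, z_r):
    """Intersect the projector ray through pixel p with the plane z = z_r."""
    Rt = transpose(Rp)
    Op = [-c for c in mat_vec(Rt, tp)]                  # O_p = -R_p^T t_p
    d = mat_vec(Rt, solve3(Kp, [p[0], p[1], 1.0]))      # ray direction, world frame
    lam = (z_r - Op[2]) / d[2]
    return [o + lam * di for o, di in zip(Op, d)]


def estimate_f0(Kc, Kp, Rp, tp, z_r, T_p):
    """Fundamental frequency f0 (cycles/pixel along u) seen by the camera at depth z_r."""
    Z = [0.0, 0.0, z_r]
    p0 = pi_h(mat_vec(Kp, [a + b for a, b in zip(mat_vec(Rp, Z), tp)]))
    p1 = [p0[0] - T_p / 2, p0[1]]                        # hypothetical symmetric choice
    p2 = [p0[0] + T_p / 2, p0[1]]
    c1 = pi_h(mat_vec(Kc, deproject_to_plane(p1, Kp, Rp, tp, z_r)))
    c2 = pi_h(mat_vec(Kc, deproject_to_plane(p2, Kp, Rp, tp, z_r)))
    T_c = abs(c2[0] - c1[0])                             # projection of c1c2 on the u-axis
    return 1.0 / T_c


# Usage: identical intrinsics, parallel axes, 100 mm baseline along x.
K = [[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(estimate_f0(K, K, I3, [-100.0, 0.0, 0.0], z_r=500.0, T_p=20.0))
```

With identical intrinsics and parallel optical axes, the camera sees the projector period unchanged ($T_c = T_p = 20$ px, so $f_0 = 0.05$); doubling the camera focal length halves $f_0$, which is why a fixed manual band is fragile.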

The band-pass estimation does not require any user input and allows for some automation [29,35] and extended adaptability of FTP to different system geometries and varying distances from the object, particularly when scanning surfaces roughly placed in front of the camera, as in the application discussed here. The automatic band-pass successfully isolates the main spectrum (Figure 3) and was used in all of the following simulations (Section 3) and experiments (Section 4).

2.3. Virtual Reference Image

On the domain of the points of $\Pi_r$ observed by the camera and illuminated by the projector, the relation between the projector and camera image planes is bijective. This makes it possible to build a virtual reference image as seen from the camera.

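The generic point $p$ is projected to the world point $\mathbf{A} \in \Pi_r$, which is, in turn, observed from the camera as $c_r$. $c_r$ is calculated from $p$ as

$$c_r = \pi\left(\mathbf{K}_c \mathbf{A}\right), \qquad \mathbf{A} = \mathbf{O}_p + \lambda\, \mathbf{R}_p^T \mathbf{K}_p^{-1} \begin{bmatrix} p \\ 1 \end{bmatrix}, \tag{5}$$

where $\lambda$ is such that the $z$-component of $\mathbf{A}$ equals $z_r$. In other words, the effect of Equation (5) is to find the line passing through $\mathbf{O}_p$ and $p$ that intersects $\Pi_r$ at a world point $\mathbf{A}$, and then project $\mathbf{A}$ onto $\Pi_c$. As usual, camera and projector coordinates are also corrected for lens distortion.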

With this simple strategy, each point of the camera image is mapped to its corresponding point on the projected fringe image on $\Pi_p$, from which its intensity value is taken. As the mapping generally produces non-integer coordinates, the final camera image is derived by cubic interpolation. The red stripe is not needed in this image.
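The construction of the virtual reference image can be sketched by running the mapping of Equation (5) in reverse: each camera pixel is back-projected onto $\Pi_r$ and then projected onto $\Pi_p$. The sketch makes assumptions not stated in the text: no lens distortion, the convention $\mathbf{x}_p = \mathbf{R}_p \mathbf{X} + \mathbf{t}_p$, and a hypothetical projector pattern $0.5 + 0.5\cos(2\pi u_p/T_p)$ evaluated analytically in place of the cubic interpolation of the stored fringe image.

```python
import math


def mat_vec(M, x):
    return [sum(M[i][j] * x[j] for j in range(3)) for i in range(3)]


def det3(A):
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


def solve3(M, b):
    """Solve M x = b by Cramer's rule."""
    d = det3(M)
    cols = []
    for col in range(3):
        A = [row[:] for row in M]
        for i in range(3):
            A[i][col] = b[i]
        cols.append(det3(A) / d)
    return cols


def pi_h(x):
    return [x[0] / x[2], x[1] / x[2]]


def camera_to_projector_via_plane(u, v, Kc, Kp, Rp, tp, z_r):
    """Inverse of the Eq. (5) mapping: camera pixel -> point A on z = z_r -> projector pixel."""
    d = solve3(Kc, [u, v, 1.0])                  # camera ray direction (camera at origin)
    A = [z_r / d[2] * di for di in d]            # intersection with the virtual plane
    return pi_h(mat_vec(Kp, [a + b for a, b in zip(mat_vec(Rp, A), tp)]))


def virtual_reference_image(width, height, Kc, Kp, Rp, tp, z_r, T_p):
    """Return (intensity, phase) rows of a noise-free virtual reference image."""
    intensity, phase = [], []
    for v in range(height):
        row_i, row_ph = [], []
        for u in range(width):
            u_p, _ = camera_to_projector_via_plane(u, v, Kc, Kp, Rp, tp, z_r)
            ph = 2 * math.pi * u_p / T_p        # hypothetical phase origin at u_p = 0
            row_ph.append(ph)
            row_i.append(0.5 + 0.5 * math.cos(ph))
        intensity.append(row_i)
        phase.append(row_ph)
    return intensity, phase


K = [[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
img, ref_phase = virtual_reference_image(3, 1, K, K, I3, [-100.0, 0.0, 0.0], 500.0, 20.0)
```

Because the pattern is synthesised rather than acquired, the same loop also yields the reference phase at every camera pixel, free of noise, albedo, and ambient-light effects.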

The phase variation due to perspective is clearly visible in Figure 4. In terms of frequency content, the virtual reference image is the same as the one that would be acquired by placing a real plane at the same position; however, the effects of light diffusion, object albedo, and environmental illumination are not introduced.

The virtual reference image is then used to calculate the phase shift, similarly to phase-height methods [22,33] but without a physical plane. The usefulness of this step is explained in Section 2.4.

2.4. Reduction of Noise Effect over Phase Jumps

With single-shot FTP, the choice of unwrapping algorithms is limited to spatial ones. To estimate the absolute phase $\Phi$, spatial phase unwrapping cannot rely on information other than the wrapped phase map $\phi_w$ which, due to the presence of noise, may present *genuine* or *fake* 2π-jumps [39]. The two are indistinguishable, which makes spatial unwrapping such a challenging task [40].

In both stereo triangulation and phase-height mapping methods, $\Phi$ is related to the world $z$ coordinate. While stereo triangulation directly relates $\Phi$ to a point on $\Pi_p$ [31,32], phase-height mapping estimates $z$ as a function of the *phase shift* from a reference phase $\Phi_r$ calculated over a plane [22,33].

Although the proposed method uses a stereo triangulation approach, it also exploits the calculation of the phase shift $\Delta\phi$ with respect to a *virtual reference image* (Section 2.3) to reduce the number of 2π-jumps, both genuine and fake. Consequently, unwrapping errors are reduced, as there is a lower probability that noise interferes with 2π-jumps.

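The virtual reference and the object intensity values observed from the camera can be represented, respectively, as

$$r(u, v) = a_r(u, v) + b_r(u, v)\cos\left[2\pi f_0 u + \phi_r(u, v)\right]$$

and

$$g(u, v) = a(u, v) + b(u, v)\cos\left[2\pi f_0 u + \phi(u, v)\right],$$

where $f_0$ is the fundamental frequency (Section 2.2), $a_r$ and $a$ are background intensities, $b_r$ and $b$ are intensity modulations, $\phi_r$ represents the phase modulation over the virtual reference image (caused by perspective only), and $\phi$ is the phase modulation over the object, caused by perspective, object depth, and noise.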

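After the fast Fourier transform (FFT), band-pass filtering, and inverse FFT (without aliasing) of $g$, which yield the complex signal $\hat{g}(u, v)$, the wrapped object phase can be obtained as

$$\phi_w(u, v) = \arg\left[\hat{g}(u, v)\right] = \mathcal{W}\left[2\pi f_0 u + \phi(u, v)\right],$$

where $\mathcal{W}[\cdot]$ stands for the wrapping operation (or modulo 2π). Then, the classic direct method estimates the unwrapped object phase as

$$\hat{\Phi}(u, v) = \mathcal{U}\left[\phi_w(u, v)\right], \tag{11}$$

where $\mathcal{U}[\cdot]$ represents a generic spatial unwrapping algorithm. Note that $\hat{\Phi}$ is still a *relative* phase, with uncertain fringe order.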

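Furthermore, as in phase-height mapping methods [22,33], the wrapped phase shift can be calculated from a new signal obtained by multiplying the filtered object signal $\hat{g}$ by the complex conjugate of the filtered reference $\hat{r}$ [22]:

$$\Delta\phi_w(u, v) = \arg\left[\hat{g}(u, v)\, \hat{r}^*(u, v)\right] = \mathcal{W}\left[\phi(u, v) - \phi_r(u, v)\right],$$

and the unwrapped object phase can also be obtained as

$$\hat{\Phi}(u, v) = \Phi_r(u, v) + \mathcal{U}\left[\Delta\phi_w(u, v)\right], \tag{13}$$

where $\Phi_r(u, v) = 2\pi f_0 u + \phi_r(u, v)$ is the absolute reference phase and $\mathcal{U}\left[\Delta\phi_w\right]$ is the unwrapped phase shift.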
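The conjugate-product step can be illustrated with synthetic analytic signals standing in for the band-pass-filtered object and reference rows. In this hypothetical example (amplitudes, $f_0$, and both phase modulations are made up), the carrier $2\pi f_0 u$ cancels in the product, so the argument returns the wrapped difference $\mathcal{W}[\phi - \phi_r]$ directly.

```python
import cmath
import math


def wrapped_phase_shift(g_hat, r_hat):
    """Delta phi_w = arg(g_hat * conj(r_hat)), element by element."""
    return [cmath.phase(g * r.conjugate()) for g, r in zip(g_hat, r_hat)]


# Usage with synthetic analytic signals (hypothetical amplitudes and phases).
n, f0 = 64, 1 / 8
phi = [0.8 * math.sin(2 * math.pi * u / n) for u in range(n)]    # object modulation
phi_r = [0.01 * u for u in range(n)]                              # perspective-only modulation
g_hat = [0.3 * cmath.exp(1j * (2 * math.pi * f0 * u + phi[u])) for u in range(n)]
r_hat = [0.5 * cmath.exp(1j * (2 * math.pi * f0 * u + phi_r[u])) for u in range(n)]
dphi_w = wrapped_phase_shift(g_hat, r_hat)
# The carrier cancels: dphi_w equals W[phi - phi_r]; amplitudes do not affect the argument.
```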

Equations (

11) and (

13) should lead to the same result. However, the interaction of noise over

-jumps will be greater in Equation (

11) than in Equation (

13). In fact, under the general assumption of FTP,

and

must vary very slowly compared to the fundamental frequency

[

22], and as a direct consequence,

presents less

-jumps compared to

. An example is shown in

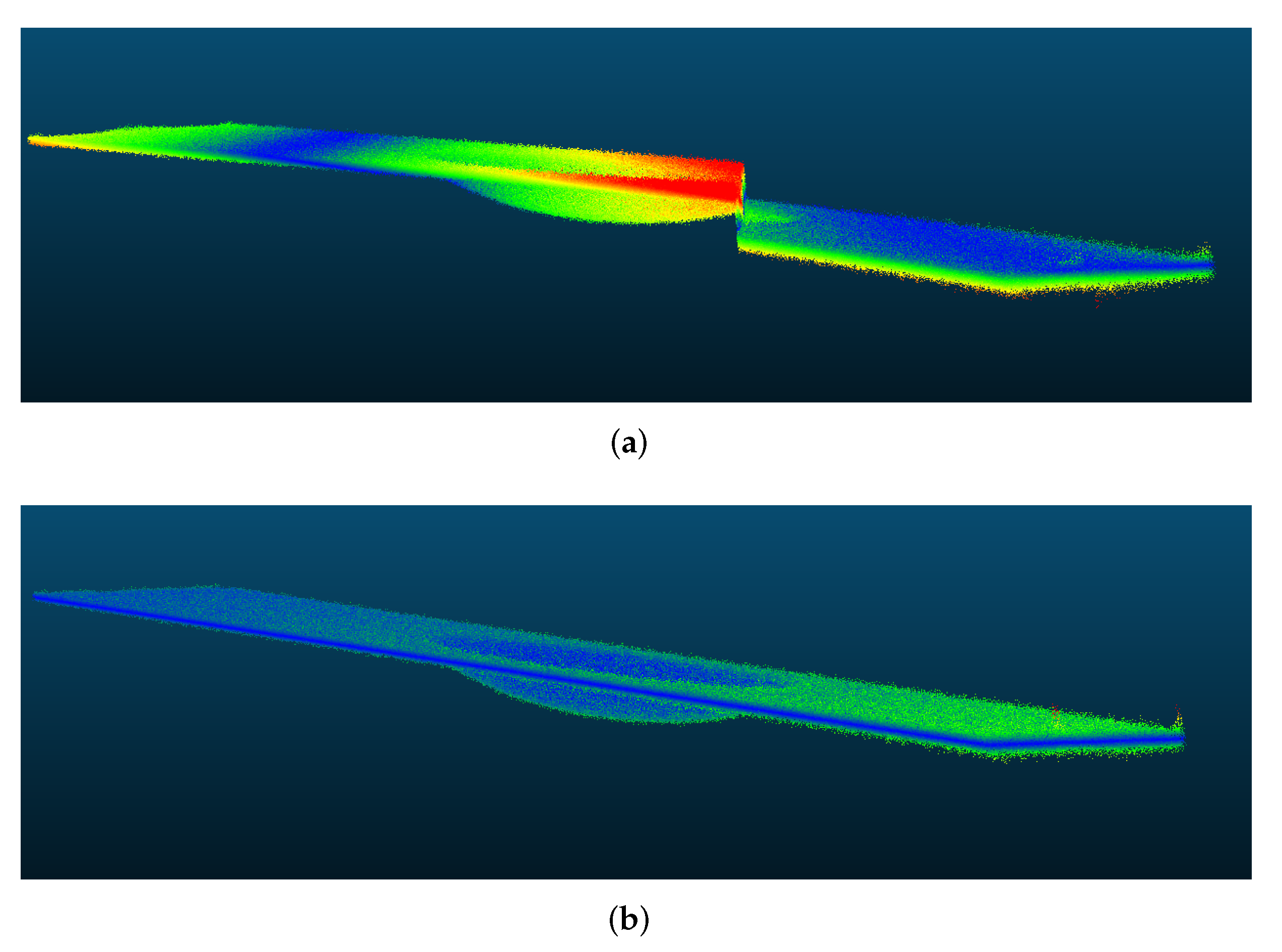

Figure 5.
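The effect of removing the carrier can be quantified on a synthetic one-dimensional profile by counting 2π-jumps in $\phi_w$ and in $\Delta\phi_w$. The profile below (fringe period of 16 px, a slow sinusoidal object term, and a linear perspective term) is hypothetical and only meant to mirror the situation sketched in Figure 5.

```python
import math


def wrap(x):
    """W[.]: wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(x), math.cos(x))


def count_jumps(wrapped):
    """Count neighbouring samples whose difference exceeds pi in magnitude (2*pi-jumps)."""
    return sum(1 for a, b in zip(wrapped, wrapped[1:]) if abs(b - a) > math.pi)


# Hypothetical 1D profile: 256 samples, fringe period 16 px.
n, f0 = 256, 1 / 16
phi = [3.0 * math.sin(2 * math.pi * u / n) for u in range(n)]   # slow object term
phi_r = [0.5 * u / n for u in range(n)]                          # slow perspective term
phi_w = [wrap(2 * math.pi * f0 * u + phi[u]) for u in range(n)]  # wrapped as in Eq. (11)
dphi_w = [wrap(phi[u] - phi_r[u]) for u in range(n)]             # wrapped as in Eq. (13)
print(count_jumps(phi_w), count_jumps(dphi_w))                   # 16 2
```

Here the carrier produces 16 jumps in the wrapped object phase, while the phase shift wraps only twice, where $\phi - \phi_r$ briefly drops below $-\pi$.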

Therefore, Equation (13) deals with the unwrapping of the signal $\Delta\phi_w$, which contains a slower trend, superimposed modulo 2π on the same noise distribution found in $\phi_w$ (which originates solely from the object image, as the virtual reference is noise-free). This leads to a more faithful unwrapped phase.

To show the practical effect of this, white noise is added to $\phi$ in the same example of Figure 5. The wrapped object phase and phase shift are shown in Figure 6: the task of unwrapping clearly becomes more challenging due to the interaction of noise with jumps, and the probability of such interference is lower in the second case, owing to fewer 2π-jumps.

The phase unwrapped with the algorithm by Itoh [41] is shown in Figure 7. Even in this very simple case, noise causes a genuine jump of $\phi_w$ to be missed, thus propagating the error to the right. The unwrapping of $\Delta\phi_w$ in Equation (13), instead, is more robust and outputs the correct result.
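Itoh's method [41] integrates the wrapped differences between neighbouring samples. The sketch below applies it to both formulations, Equation (11) and Equation (13), on the same kind of hypothetical profile; to keep the outcome deterministic, a single noisy sample (a 2.8 rad spike) replaces the white noise used in Figure 7.

```python
import math


def wrap(x):
    """W[.]: wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(x), math.cos(x))


def itoh_unwrap(wrapped):
    """Itoh's 1D method: cumulative sum of the wrapped differences of neighbouring samples."""
    if not wrapped:
        return []
    out = [wrapped[0]]
    for a, b in zip(wrapped, wrapped[1:]):
        out.append(out[-1] + wrap(b - a))
    return out


# Hypothetical profile (fringe period 16 px) plus one noisy sample.
n, f0 = 256, 1 / 16
phi = [3.0 * math.sin(2 * math.pi * u / n) for u in range(n)]
phi_r = [0.5 * u / n for u in range(n)]
Phi = [2 * math.pi * f0 * u + phi[u] for u in range(n)]        # true object phase
Phi_r = [2 * math.pi * f0 * u + phi_r[u] for u in range(n)]    # absolute reference phase
noisy = list(Phi)
noisy[10] += 2.8                                               # hypothetical noise spike (rad)

direct = itoh_unwrap([wrap(x) for x in noisy])                 # Eq. (11)
wrapped_shift = [wrap(x - ref) for x, ref in zip(noisy, Phi_r)]
shift = [ref + d for ref, d in zip(Phi_r, itoh_unwrap(wrapped_shift))]   # Eq. (13)
# direct is off by -2*pi from sample 11 onwards; shift is exact except at sample 10.
```

The spike pushes one neighbouring difference of the fast-varying $\phi_w$ beyond π, so a genuine jump is misread and a $-2\pi$ offset propagates to all following samples. The same spike on the slowly varying $\Delta\phi_w$ keeps both differences below π, so only the corrupted sample itself is affected.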

Basic single-shot structured-light applications cannot rely on additional knowledge to eliminate noise, and a spatial unwrapping algorithm cannot count on information other than the wrapped phase itself. Therefore, reducing the number of 2π-jumps increases performance, regardless of the unwrapping algorithm used. This was confirmed by the following simulations (Section 3) and experiments (Section 4).

Furthermore, simulations in the absence of noise revealed that using Equation (13) in place of Equation (11) increases numerical precision. This can be explained by the fact that, in computers, complex numbers are generally stored in algebraic (Cartesian) form and the argument is extracted by means of the atan2 function. Numerically, its asymptote regions are more sensitive to machine precision error, and Equation (13) leads to a reduced error, as the slowly varying signal crosses those regions fewer times. The size of the error reduction depended on sampling and period.

2.5. Stereo Correspondence

Before proceeding with triangulation, the relative unwrapped phase $\hat{\Phi}$ must be shifted accordingly to obtain the absolute phase $\Phi$, following

$$\Phi(u, v) = \hat{\Phi}(u, v) + 2\pi k, \tag{14}$$

where $k$ is the difference from the correct fringe order [40].

Additional information is needed to find $k$, for example, the absolute phase value at the red stripe [31], estimated from the phase values $\hat{\Phi}_i$ at the $N$ pixels of the red stripe. Then, each $\Phi$ value may be directly associated with its $u_p$ coordinate, taking into account the projector resolution along the $u$-axis.

Equivalently, Equation (14) can be rewritten as Equation (17), in which the reference term is obtained through Equation (5). Applying this relation to each of the red stripe $u$-coordinates found on $\Pi_c$ makes it possible to solve for $k$. The average $k$ is selected and rounded to the nearest integer. Numerically, this approach delivers increased accuracy, as $k$ is correctly forced to be an integer.
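The fringe-order estimate can be sketched as follows, assuming that the absolute phase expected at each red-stripe pixel is already available (for instance, from the known position of the stripe on the projector image). Equation (14) is solved for $k$ at each stripe pixel, the values are averaged, and the result is rounded; the function names are hypothetical.

```python
import math


def estimate_fringe_order(rel_phase, abs_phase):
    """Solve Eq. (14) for k at every stripe pixel, average, and round to the nearest integer.

    rel_phase -- relative unwrapped phase at the stripe pixels.
    abs_phase -- absolute phase expected at the same pixels (assumed known).
    """
    ks = [(a - r) / (2 * math.pi) for r, a in zip(rel_phase, abs_phase)]
    return round(sum(ks) / len(ks))


def to_absolute(rel_phase_map, k):
    """Eq. (14): shift every relative phase value by 2*pi*k."""
    return [[ph + 2 * math.pi * k for ph in row] for row in rel_phase_map]


# Usage: three stripe pixels whose true order offset is 3, with small phase noise.
rel = [1.0, 1.1, 0.95]
noise = [0.05, -0.1, 0.02]
expected_abs = [r + 6 * math.pi + e for r, e in zip(rel, noise)]
k = estimate_fringe_order(rel, expected_abs)
```

Averaging before rounding lets the noise of individual stripe pixels cancel, while rounding enforces the integer nature of $k$.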

Once $k$ is known, Equation (17) can be used to retrieve the $u_p$ coordinate corresponding to each camera point. Finally, $v_p$ is calculated via epipolar geometry and, with all the corresponding pairs $c$ and $p$ available, one may proceed with stereo triangulation.
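For the final step, a simplified triangulation can be sketched by intersecting the camera ray through $c$ with the set of world points that project onto the projector column $u_p$. This ray-plane variant uses $u_p$ only and is not necessarily the epipolar-plus-triangulation procedure used in this study; it assumes distortion-free pinhole models, the camera at the world origin, and the convention $\mathbf{x}_p = \mathbf{R}_p \mathbf{X} + \mathbf{t}_p$.

```python
def mat_vec(M, x):
    return [sum(M[i][j] * x[j] for j in range(3)) for i in range(3)]


def det3(A):
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


def solve3(M, b):
    """Solve M x = b by Cramer's rule."""
    d = det3(M)
    cols = []
    for col in range(3):
        A = [row[:] for row in M]
        for i in range(3):
            A[i][col] = b[i]
        cols.append(det3(A) / d)
    return cols


def triangulate_ray_plane(c, u_p, Kc, Kp, Rp, tp):
    """World point on the camera ray through c whose projector u-coordinate equals u_p.

    Along X = lam * d the projector homogeneous coordinates are lam * a + b, so
    (lam * a[0] + b[0]) / (lam * a[2] + b[2]) = u_p is linear in lam.
    """
    d = solve3(Kc, [c[0], c[1], 1.0])      # camera ray direction (camera at the origin)
    a = mat_vec(Kp, mat_vec(Rp, d))
    b = mat_vec(Kp, tp)
    lam = (u_p * b[2] - b[0]) / (a[0] - u_p * a[2])
    return [lam * di for di in d]


K = [[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Point [10, 5, 600] seen at c = (202000/600, 149000/600) and at projector column 170.
X = triangulate_ray_plane([202000 / 600, 149000 / 600], 170.0, K, K, I3, [-100.0, 0.0, 0.0])
```

The denominator vanishes when the camera ray is parallel to the projector column plane, so a practical implementation would guard against it.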