Abstract

The paper considers the problem of algorithmic differentiation of information matrix difference equations for calculating the information matrix derivatives in the information Kalman filter. The equations are presented in the form of a matrix MWGS (modified weighted Gram–Schmidt) transformation. The solution is based on special methods for the algorithmic differentiation of the matrix MWGS transformation of two types: forward (MWGS-LD) and backward (MWGS-UD). The main result of the work is a new MWGS-based array algorithm for computing the information matrix sensitivity equations. The algorithm is robust to machine round-off errors because the MWGS orthogonalization procedure is applied at each step. The obtained results are applied to the problem of parameter identification for state-space models of discrete-time linear stochastic systems. Numerical experiments confirm the efficiency of the proposed solution.

1. Introduction

Matrix orthogonal transformations are widely used in solving various problems of computational linear algebra [1].

The problem of calculating the values of the derivatives in matrix orthogonal transformations arises in automatic differentiation [2], in perturbation and control theories, and in differential geometry, for example, when calculating Lyapunov exponents [3,4], numerically solving the matrix differential Riccati equation [5,6] and the Riccati sensitivity equation [7,8], and computing higher-order derivatives in optimum experimental design [9]. In Kalman filtering theory [10,11,12,13,14], orthogonal transformations are used to compute the solution of the matrix difference Riccati equation efficiently.

The methods for calculating the values of derivatives in matrix orthogonal transformations are similar in their properties to automatic (algorithmic) differentiation methods. Three methods for calculating derivatives are currently most common:

- symbolic (analytical) differentiation;

- numerical differentiation;

- automatic (algorithmic) differentiation.

Symbolic differentiation yields exact analytical formulas for the derivatives of the elements of a parameterized matrix, but this approach is computationally expensive and is not suitable for solving problems in real time. With numerical differentiation, the result depends significantly on many factors, for example, on the step size. In contrast, algorithmic (automatic) differentiation produces neither expressions for the derivatives nor their tabular approximations; instead, it computes the values of the derivatives at a given point for given values of the function arguments. This requires knowledge of the expression for the function or, at least, a computer program that evaluates it.
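The step-size dependence of numerical differentiation can be seen in a few lines. The following is our own minimal Python illustration (not taken from the paper): a forward difference of `exp` at x = 1, whose exact derivative is known. Large steps are dominated by truncation error and very small steps by round-off error; the helper names `forward_difference` and `fd_error` are hypothetical.

```python
import math


def forward_difference(f, x, h):
    """One-sided finite-difference approximation of f'(x) with step h."""
    return (f(x + h) - f(x)) / h


def fd_error(h, x=1.0):
    """Absolute error of the forward difference for f = exp (exact derivative: exp(x))."""
    return abs(forward_difference(math.exp, x, h) - math.exp(x))


# Large steps suffer from truncation error, tiny steps from round-off error.
for h in (1e-2, 1e-5, 1e-8, 1e-11, 1e-14):
    print(f"h = {h:.0e}   error = {fd_error(h):.1e}")
```

An algorithmic-differentiation tool, by contrast, would return exp(1) up to round-off without any step size to tune.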

Consider a rectangular parameterized matrix $A(\theta) \in \mathbb{R}^{r \times s}$, $r \ge s$, and a diagonal matrix $D_A(\theta) \in \mathbb{R}^{r \times r}$, where $\theta$ is a scalar real parameter. The problem of algorithmic differentiation of the matrix MWGS transformation (MWGS, the modified weighted Gram–Schmidt orthogonalization [10]) is to find, at a given point $\hat{\theta}$, the triangular (upper or lower) matrix of derivatives $B'_{\theta}$ and the diagonal derivative matrix $D'_{B,\theta}$, using the known parameterized matrices $A(\theta)$ and $D_A(\theta)$ together with the unit triangular (upper or lower) matrix $B(\theta)$ and the diagonal matrix $D_B(\theta)$ obtained as the result of the MWGS transformation (where $A = W B^{T}$ and $W^{T} D_A W = D_B$).

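Suppose that the domain of the parameter $\theta$ is such that the matrix $A(\theta)$ has full column rank and the diagonal matrix $D_A(\theta) > 0$. In what follows, for convenience of presentation, we denote $A'_{\theta} = \partial A / \partial \theta$ and $D'_{A,\theta} = \partial D_A / \partial \theta$. We call the two pairs of matrices $\{A, D_A\}$ and $\{B, D_B\}$ the MWGS-based arrays.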

In our recent papers [15,16], we have proposed two methods for algorithmic differentiation of the MWGS-based arrays. These computational methods are based on the forward MWGS-LD orthogonalization ([16], p. 66) and the backward MWGS-UD orthogonalization procedure ([10], p. 127).

In this paper, we further develop our recent results. Our research aims to construct a novel computational algorithm for evaluating the derivatives of the MWGS factors of the information matrix Y. First, we show how our previously proposed methods for algorithmic differentiation of the MWGS-based arrays can be applied to construct a new MWGS-based array algorithm for computing the information matrix sensitivity equations. Second, we demonstrate how the proposed algorithm can be efficiently applied to the parameter identification problem when gradient-based optimization methods are used.

The paper is organized as follows. Section 2 provides basic definitions associated with the information form of the Kalman filter, discusses the MWGS-based array algorithm for computing the information matrix, and presents two algorithms for computing the derivatives of the MWGS-based arrays. Section 3 contains the main result of the paper: the new MWGS-based array algorithm for computing the information matrix sensitivity equations. Section 4 discusses the implementation details of the proposed algorithm and demonstrates how it can be applied to the parameter identification problem for a practical stochastic system model. Finally, Section 5 concludes the paper.

2. Methodology

2.1. Information Kalman Filter and Information Matrix

The information Kalman filter (IKF) is an alternative formulation of the well-known Kalman filter (KF) [17]. The IKF differs from the KF in that it computes not the error covariance matrix P but its inverse $Y = P^{-1}$, known as the information matrix. When there is no a priori information about the initial state value, the IKF is particularly useful because it can easily start from $Y_0 = 0$, whereas in this case the initial error covariance matrix is not defined. Additionally, an implementation of the IKF can be computationally cheaper when the size of the measurement vector is greater than the size of the state vector [11]. The information filter also does not use the same state vector representation as the KF; it utilizes the so-called information state $\hat{y} = Y \hat{x}$ instead ([11], p. 263).

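Consider a discrete-time linear stochastic system

$$x_k = F_{k-1} x_{k-1} + G_{k-1} w_{k-1}, \quad (1)$$
$$z_k = H_k x_k + v_k, \quad (2)$$

where $x_k \in \mathbb{R}^n$ is the state and $z_k \in \mathbb{R}^m$ are the measurements; $k$ is a discrete-time instant. The process noise $w_k$ and the measurement noise $v_k$ are Gaussian white-noise processes with zero mean and covariance matrices $Q_k$ and $R_k$. That means

$$\mathbf{E}\{w_k w_j^{T}\} = Q_k \delta_{kj}, \qquad \mathbf{E}\{v_k v_j^{T}\} = R_k \delta_{kj}, \quad (3)$$

where $\delta_{kj}$ denotes the Kronecker delta function. The initial state vector is $x_0 \sim \mathcal{N}(\bar{x}_0, \Pi_0)$.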

Suppose that the matrices $F_k$ and $Q_k$ are invertible [11]. Consider the problem of information filtering: at each discrete-time instant k, calculate the information state estimate $\hat{y}_{k|k}$ given the measurements $z_1, \ldots, z_k$. The solution is given by the conventional IKF equations [11]:

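I. Time Update: The predicted information state estimate $\hat{y}_{k|k-1}$ and the predicted information matrix $Y_{k|k-1}$ obey the difference equations

$$\hat{y}_{k|k-1} = \left(I - K_k G_{k-1}^{T}\right) F_{k-1}^{-T}\, \hat{y}_{k-1|k-1}, \quad (4)$$
$$Y_{k|k-1} = \left(I - K_k G_{k-1}^{T}\right) M_k, \quad (5)$$

where

$$M_k = F_{k-1}^{-T}\, Y_{k-1|k-1}\, F_{k-1}^{-1}, \quad (6)$$
$$K_k = M_k G_{k-1} \left(G_{k-1}^{T} M_k G_{k-1} + Q_{k-1}^{-1}\right)^{-1}. \quad (7)$$

II. Measurement Update: The updated (filtered) information state estimate $\hat{y}_{k|k}$ obeys

$$\hat{y}_{k|k} = \hat{y}_{k|k-1} + H_k^{T} R_k^{-1} z_k. \quad (8)$$

The filtered information matrix $Y_{k|k}$ satisfies the difference equation

$$Y_{k|k} = Y_{k|k-1} + H_k^{T} R_k^{-1} H_k. \quad (9)$$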

Equations (4)–(9) can be derived from the KF formulas by taking into account the definitions of the information matrix and the information state.
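The equivalence with the covariance-form KF can be checked numerically for one step. The sketch below is our own illustration; it assumes the information-form recursion in the standard form of [11], namely $M_k = F^{-T} Y F^{-1}$, $K_k = M_k G (G^{T} M_k G + Q^{-1})^{-1}$, $Y_{k|k-1} = (I - K_k G^{T}) M_k$, $\hat{y}_{k|k-1} = (I - K_k G^{T}) F^{-T} \hat{y}_{k-1|k-1}$, $Y_{k|k} = Y_{k|k-1} + H^{T} R^{-1} H$, $\hat{y}_{k|k} = \hat{y}_{k|k-1} + H^{T} R^{-1} z_k$ (the names $M_k$, $K_k$ and all test matrices are ours). It compares the result with the inverse covariances and with $Y \hat{x}$ from the covariance filter.

```python
import numpy as np

rng = np.random.default_rng(3)
n, q, m = 4, 2, 3
F = np.eye(n) + 0.1 * rng.standard_normal((n, n))   # invertible transition matrix
G = rng.standard_normal((n, q))
H = rng.standard_normal((m, n))
C = rng.standard_normal((n, n))
P = C @ C.T + np.eye(n)                    # filtered covariance P_{k-1|k-1}
Q = np.diag(rng.uniform(0.5, 1.5, q))
R = np.diag(rng.uniform(0.5, 1.5, m))
x_prev = rng.standard_normal(n)            # filtered estimate x_{k-1|k-1}
z = rng.standard_normal(m)                 # measurement z_k

# Covariance-form Kalman filter (one time update + one measurement update)
P_pred = F @ P @ F.T + G @ Q @ G.T
x_pred = F @ x_prev
K_kf = P_pred @ H.T @ np.linalg.inv(H @ P_pred @ H.T + R)
P_filt = (np.eye(n) - K_kf @ H) @ P_pred
x_filt = x_pred + K_kf @ (z - H @ x_pred)

# Information form: Y = P^{-1}, y = Y x
Y = np.linalg.inv(P)
y_prev = Y @ x_prev
Fi = np.linalg.inv(F)
M = Fi.T @ Y @ Fi                                            # M_k
K = M @ G @ np.linalg.inv(G.T @ M @ G + np.linalg.inv(Q))    # K_k
I_n = np.eye(n)
Y_pred = (I_n - K @ G.T) @ M
y_pred = (I_n - K @ G.T) @ Fi.T @ y_prev
Y_filt = Y_pred + H.T @ np.linalg.inv(R) @ H
y_filt = y_pred + H.T @ np.linalg.inv(R) @ z

print(np.allclose(Y_pred, np.linalg.inv(P_pred)), np.allclose(Y_filt, np.linalg.inv(P_filt)))
```

Note that the information form inverts F, Q, R and one q-by-q matrix, which is where the four full matrix inversions of the conventional IKF come from.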

In what follows, we use the following notation. Let B be a unit triangular matrix, which can be either unit upper triangular, i.e., $B = U$ (with 1’s on the main diagonal), or unit lower triangular, i.e., $B = L$. D is a diagonal matrix.

2.2. The MWGS-Based Array Algorithm for Computing the Information Matrix

Let us consider Equations (5)–(7) and (9). They allow one to compute the information matrix at each discrete-time instant k. To improve the numerical robustness to machine round-off errors, in [18] we proposed a new MWGS-based array algorithm for computing the information matrices and the information states in the IKF.

The MWGS-based array computations use the numerically stable modified weighted Gram–Schmidt (MWGS) orthogonalization procedure for updating the required quantities. (The MWGS is computationally more accurate than the conventional Gram–Schmidt algorithm [19].) In [18], we used both the forward MWGS-LD and the backward MWGS-UD orthogonalization procedures.

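Each iteration of these IKF implementations has the following form: given a pair of matrices $\{A, D_A\}$ (the so-called pre-arrays), compute a pair of matrices $\{B, D_B\}$ (the post-arrays) using the MWGS orthogonalization procedure

$$A = W B^{T}, \quad (10)$$

where $A \in \mathbb{R}^{r \times s}$ ($r \ge s$) is a rectangular matrix and the MWGS transformation matrix $W \in \mathbb{R}^{r \times s}$ produces the block triangular matrix $B \in \mathbb{R}^{s \times s}$ with 1’s on the main diagonal. The matrix $B$ is either an upper triangular or a lower triangular block matrix such that

$$A^{T} D_A A = B D_B B^{T}, \quad (11)$$

where $D_A \in \mathbb{R}^{r \times r}$ and $D_B \in \mathbb{R}^{s \times s}$ are diagonal matrices and $W^{T} D_A W = D_B$; see ([10], Lemma VI.4.1) for details.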
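To make transformation (10)–(11) concrete, here is a minimal NumPy sketch of both sweeps, assuming the relations $A = W B^{T}$, $W^{T} D_A W = D_B$, and $A^{T} D_A A = B D_B B^{T}$ with $D_A = \mathrm{diag}(d)$. The forward sweep orthogonalizes the columns of A first-to-last and yields a unit lower triangular $B = L$; the backward sweep goes last-to-first and yields a unit upper triangular $B = U$. The function names `mwgs_ld` and `mwgs_ud` are ours, and the code is a plain textbook implementation rather than the authors' MATLAB code.

```python
import numpy as np


def mwgs_ld(A, d):
    """Forward MWGS: A = W @ L.T, L unit lower triangular, W.T @ diag(d) @ W = diag(D)."""
    W = np.array(A, dtype=float)              # copy; columns become w_1, ..., w_s
    s = W.shape[1]
    L, D = np.eye(s), np.zeros(s)
    for j in range(s):                        # columns first-to-last
        D[j] = W[:, j] @ (d * W[:, j])        # weighted squared norm of w_j
        for k in range(j + 1, s):
            L[k, j] = (W[:, j] @ (d * W[:, k])) / D[j]
            W[:, k] -= L[k, j] * W[:, j]      # D_A-orthogonalize column k against w_j
    return W, L, D


def mwgs_ud(A, d):
    """Backward MWGS: A = W @ U.T, U unit upper triangular, W.T @ diag(d) @ W = diag(D)."""
    W = np.array(A, dtype=float)
    s = W.shape[1]
    U, D = np.eye(s), np.zeros(s)
    for j in range(s - 1, -1, -1):            # columns last-to-first
        D[j] = W[:, j] @ (d * W[:, j])
        for k in range(j):
            U[k, j] = (W[:, j] @ (d * W[:, k])) / D[j]
            W[:, k] -= U[k, j] * W[:, j]
    return W, U, D


rng = np.random.default_rng(0)
A = rng.standard_normal((6, 4))               # pre-array A, r = 6 >= s = 4
d = rng.uniform(0.5, 2.0, 6)                  # D_A = diag(d) > 0
M = A.T @ (d[:, None] * A)                    # A^T D_A A
W, L, D = mwgs_ld(A, d)
W2, U, D2 = mwgs_ud(A, d)
print(np.allclose(M, L @ np.diag(D) @ L.T), np.allclose(M, U @ np.diag(D2) @ U.T))
```

Both sweeps factor the same product $A^{T} D_A A$ without ever forming it explicitly, which is the source of the numerical robustness mentioned above.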

We have proved ([18], Statement 1) that Algorithm 1 is algebraically equivalent to the IKF given by Equations (4)–(9). Thus, we have obtained an alternative method for calculating the information matrix Y within the MWGS-based array algorithm. A significant advantage of Algorithm 1 is its numerical robustness to machine round-off errors. A detailed discussion can be found in [18,20].

| Algorithm 1 [18]. The MWGS-based array IKF. |

| Initialization. Let . Compute the modified Cholesky decomposition. (The modified Cholesky decomposition has the form $A = B D B^{T}$, where A is a symmetric positive definite matrix, D is a diagonal matrix, and B is a unit triangular (lower or upper) matrix [1,11].) . Set the initial values and , . ▹ For do I. Time Update. Apply the modified Cholesky decomposition for the process noise covariance matrix . Compute matrices and . Find the MWGS factors of matrix as follows: I.A. In the case of the forward MWGS-LD factorization (i.e., ), the following steps should be done: I.B. In the case of the backward MWGS-UD factorization (i.e., ), one has to follow the next steps: Given , find the predicted information state estimate: II. Measurement Update. Apply the modified Cholesky factorization for the measurement noise covariance matrix . Compute matrices and . Find the filtered MWGS factors : Next, compute the filtered estimate by (8). ▹ End. |
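The measurement update (9) fits naturally into the MWGS array form. The sketch below is our own illustration, not the exact pre-array layout of Algorithm 1 (see [18] for that): assuming $Y_{k|k-1} = L D L^{T}$ and $R_k = L_R D_R L_R^{T}$, the pre-arrays $A = [L^{T};\ L_R^{-1} H_k]$ and $D_A = \mathrm{diag}(D, D_R^{-1})$ satisfy $A^{T} D_A A = Y_{k|k-1} + H_k^{T} R_k^{-1} H_k$, so one MWGS-LD sweep returns the filtered factors. The helpers `modified_cholesky` and `measurement_update_ld` are hypothetical names.

```python
import numpy as np


def mwgs_ld(A, d):
    """Forward MWGS: A = W @ L.T, L unit lower triangular, W.T @ diag(d) @ W = diag(D)."""
    W = np.array(A, dtype=float)
    s = W.shape[1]
    L, D = np.eye(s), np.zeros(s)
    for j in range(s):
        D[j] = W[:, j] @ (d * W[:, j])
        for k in range(j + 1, s):
            L[k, j] = (W[:, j] @ (d * W[:, k])) / D[j]
            W[:, k] -= L[k, j] * W[:, j]
    return W, L, D


def modified_cholesky(S):
    """S = L @ diag(D) @ L.T with L unit lower triangular (S symmetric positive definite)."""
    n = S.shape[0]
    L, D = np.eye(n), np.zeros(n)
    for j in range(n):
        D[j] = S[j, j] - (L[j, :j] ** 2) @ D[:j]
        for i in range(j + 1, n):
            L[i, j] = (S[i, j] - (L[i, :j] * L[j, :j]) @ D[:j]) / D[j]
    return L, D


def measurement_update_ld(L_pred, D_pred, H, R):
    """Hypothetical MWGS-LD form of (9): returns filtered factors of Y_pred + H^T R^{-1} H."""
    L_R, D_R = modified_cholesky(R)
    H_bar = np.linalg.solve(L_R, H)             # L_R^{-1} H
    A = np.vstack([L_pred.T, H_bar])            # pre-array A
    d = np.concatenate([D_pred, 1.0 / D_R])     # pre-array D_A = diag(D, D_R^{-1})
    _, L, D = mwgs_ld(A, d)
    return L, D


rng = np.random.default_rng(1)
n, m = 4, 3
B = rng.standard_normal((n, n))
Y_pred = B @ B.T + np.eye(n)
C = rng.standard_normal((m, m))
R = C @ C.T + np.eye(m)
H = rng.standard_normal((m, n))
L_pred, D_pred = modified_cholesky(Y_pred)
L_filt, D_filt = measurement_update_ld(L_pred, D_pred, H, R)
Y_filt = L_filt @ np.diag(D_filt) @ L_filt.T
print(np.allclose(Y_filt, Y_pred + H.T @ np.linalg.solve(R, H)))
```

Only the unit triangular $L_R$ and the diagonal $D_R$ are inverted here, which illustrates why the array form avoids most full matrix inversions.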

2.3. Algorithmic Differentiation of the MWGS-Based Arrays

When solving practical problems of parameter identification [21], the discrete-time linear stochastic model (1)–(2) is often parameterized. This means that the system matrices may depend on an unknown parameter $\theta$, which should be estimated together with the hidden state vector from the available measurements. In this case, any parameter estimation scheme includes two components: a filtering method for computing an identification criterion and an optimization algorithm for finding the optimal value $\hat{\theta}$. Together, they form the so-called adaptive filtering scheme [22].

It is well known that gradient-based optimization algorithms converge fast and are therefore preferred for practical implementation [8]. They require the gradient of the identification criterion, which leads to the problem of computing the derivatives of the adaptive filter. The related vector- and matrix-type equations are called the filter sensitivity equations with respect to the unknown parameter $\theta$.

Consider the conventional information Kalman filter given by Equations (4)–(9) and the MWGS-based IKF (Algorithm 1). For the conventional IKF, the matrix sensitivity equations for the information matrix can be constructed by direct differentiation of (5)–(7) and (9). This is not difficult, and the result is as follows:
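A sketch of this direct differentiation, under the assumption that (5)–(7) and (9) have the standard form $M_k = F^{-T} Y F^{-1}$, $K_k = M_k G C_k^{-1}$ with $C_k = G^{T} M_k G + Q^{-1}$, $Y_{k|k-1} = (I - K_k G^{T}) M_k$, $Y_{k|k} = Y_{k|k-1} + H^{T} R^{-1} H$ (time indices dropped; $M_k$, $C_k$, $K_k$ are our names), gives $M'_k = F^{-T} Y' F^{-1} - F^{-T} F'^{T} M_k - M_k F' F^{-1}$, $K'_k = (M'_k G + M_k G' - K_k C'_k) C_k^{-1}$, $Y'_{k|k-1} = (I - K_k G^{T}) M'_k - (K'_k G^{T} + K_k G'^{T}) M_k$, and $Y'_{k|k} = Y'_{k|k-1} + H'^{T} R^{-1} H + H^{T} R^{-1} H' - H^{T} R^{-1} R' R^{-1} H$. The Python check below (our own, with hypothetical test matrices linear in $\theta$) compares these formulas with central finite differences.

```python
import numpy as np


def ikf_info_step(Y, F, G, Q, H, R):
    """Information-matrix recursion of the conventional IKF (time + measurement update)."""
    Fi = np.linalg.inv(F)
    M = Fi.T @ Y @ Fi
    K = M @ G @ np.linalg.inv(G.T @ M @ G + np.linalg.inv(Q))
    Y_pred = (np.eye(len(Y)) - K @ G.T) @ M
    return Y_pred, Y_pred + H.T @ np.linalg.inv(R) @ H


def ikf_info_sensitivity(Y, dY, F, dF, G, dG, Q, dQ, H, dH, R, dR):
    """Derivatives of Y_{k|k-1} and Y_{k|k}, obtained by differentiating each equation."""
    Fi, Qi, Ri = np.linalg.inv(F), np.linalg.inv(Q), np.linalg.inv(R)
    M = Fi.T @ Y @ Fi
    dM = Fi.T @ dY @ Fi - Fi.T @ dF.T @ M - M @ dF @ Fi
    C = G.T @ M @ G + Qi
    Ci = np.linalg.inv(C)
    dC = dG.T @ M @ G + G.T @ dM @ G + G.T @ M @ dG - Qi @ dQ @ Qi
    K = M @ G @ Ci
    dK = (dM @ G + M @ dG - K @ dC) @ Ci
    T = np.eye(len(Y)) - K @ G.T
    dY_pred = T @ dM - (dK @ G.T + K @ dG.T) @ M
    dY_filt = dY_pred + dH.T @ Ri @ H + H.T @ Ri @ dH - H.T @ Ri @ dR @ Ri @ H
    return dY_pred, dY_filt


def sym(X):
    return 0.5 * (X + X.T)


# Test matrices depending linearly on theta: X(theta) = base + theta * slope.
rng = np.random.default_rng(2)
n, q, m = 3, 2, 2
names = ("Y", "F", "G", "Q", "H", "R")
base = dict(Y=np.eye(n), F=np.eye(n) + 0.1 * rng.standard_normal((n, n)),
            G=rng.standard_normal((n, q)), Q=np.eye(q),
            H=rng.standard_normal((m, n)), R=np.eye(m))
slope = dict(Y=0.1 * sym(rng.standard_normal((n, n))), F=0.1 * rng.standard_normal((n, n)),
             G=0.1 * rng.standard_normal((n, q)), Q=0.1 * sym(rng.standard_normal((q, q))),
             H=0.1 * rng.standard_normal((m, n)), R=0.1 * sym(rng.standard_normal((m, m))))


def mats(theta):
    return [base[k] + theta * slope[k] for k in names]


theta, h = 0.3, 1e-6
args = [v for k, X in zip(names, mats(theta)) for v in (X, slope[k])]
dY_pred, dY_filt = ikf_info_sensitivity(*args)
plus, minus = ikf_info_step(*mats(theta + h)), ikf_info_step(*mats(theta - h))
fd_pred = (plus[0] - minus[0]) / (2 * h)
fd_filt = (plus[1] - minus[1]) / (2 * h)
print(np.allclose(dY_pred, fd_pred, atol=1e-6), np.allclose(dY_filt, fd_filt, atol=1e-6))
```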

However, the corresponding solution for the MWGS-based array IKF (Algorithm 1) is not obvious. Finding new computational methods for evaluating the derivatives of the MWGS factors of the information matrix Y is the aim of our research.

Let us consider two of our methods for algorithmic differentiation of the MWGS-based arrays. They were proposed in [15,16].

Case 1. Consider the forward MWGS-LD orthogonalization procedure (10) and (11), where $B = L$ (unit lower triangular matrix) and $D_B = D$ (diagonal matrix).

Lemma 1

([16]). Let the entries of the pre-arrays $A$ and $D_A$ in (11) be known differentiable functions of a parameter θ. Consider transformation (11) in Case 1. Given the derivatives of the pre-arrays, $A'_{\theta}$ and $D'_{A,\theta}$, we can calculate the corresponding derivatives of the post-arrays:

where , , are strictly lower triangular, diagonal and strictly upper triangular parts of the matrix product , respectively. and are diagonal and strictly lower triangular parts of the product .
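To indicate where formulas of this shape come from, the following is our own short derivation (not reproduced from [16]; the grouping of terms there may differ). In Case 1, write $A = W L^{T}$, $W^{T} D_A W = D$, and $A^{T} D_A A = L D L^{T}$, and let $\Phi = L^{-1} L'_{\theta}$, which is strictly lower triangular because $L$ has a unit diagonal. Differentiating $A^{T} D_A A$ in two ways and using $L^{-1} A^{T} = W^{T}$:

```latex
\begin{aligned}
L^{-1}\,\bigl(A^{T} D_A A\bigr)'_{\theta}\,L^{-T}
  &= \Phi D + D'_{\theta} + D\,\Phi^{T}, \\
L^{-1}\,\bigl(A^{T} D_A A\bigr)'_{\theta}\,L^{-T}
  &= X + X^{T} + V, \qquad
  X = W^{T} D_A A'_{\theta} L^{-T}, \quad
  V = W^{T} D'_{A,\theta} W, \\
D'_{\theta} &= 2 X_D + V_D, \qquad
L'_{\theta} = L\,\bigl(X_L + X_U^{T} + V_L\bigr)\,D^{-1}.
\end{aligned}
```

The last line follows by matching the strictly lower triangular and diagonal parts of the two right-hand sides ($\Phi D$ is strictly lower, $D \Phi^{T}$ strictly upper). Only $L^{-1}$ and $D^{-1}$ are inverted, and no derivative of $W$ is needed. The backward (UD) case is analogous: with $X = W^{T} D_A A'_{\theta} U^{-T}$ one obtains $D'_{\theta} = 2 X_D + V_D$ and $U'_{\theta} = U\,(X_U + X_L^{T} + V_U)\,D^{-1}$.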

Case 2. Consider the backward MWGS-UD orthogonalization procedure (10) and (11), where $B = U$ (unit upper triangular matrix) and $D_B = D$ (diagonal matrix).

Lemma 2

([15]). Let the entries of the pre-arrays $A$ and $D_A$ in (11) be known differentiable functions of a parameter θ. Consider transformation (11) in Case 2. Given the derivatives of the pre-arrays, $A'_{\theta}$ and $D'_{A,\theta}$, we can calculate the corresponding derivatives of the post-arrays:

where , , are strictly lower triangular, diagonal and strictly upper triangular parts of the matrix product , respectively. and are diagonal and strictly upper triangular parts of the product .

Applying Lemmas 1 and 2, we construct the corresponding algorithms (Algorithms 2 and 3) for calculating the values of the derivatives of the MWGS-based arrays for given parameterized matrices $A(\theta)$ and $D_A(\theta)$.

Remark 1.

Function MWGS-LD($A$, $D_A$) implements the forward MWGS orthogonalization procedure.

Remark 2.

Function MWGS-UD($A$, $D_A$) implements the backward MWGS orthogonalization procedure.

Thus, Algorithms 2 and 3 have the following properties:

- They allow one to calculate, at a given point, the values of the derivatives of the elements of the matrix factors obtained by the MWGS transformation of a pair of parameterized matrices. There is no need to calculate the derivatives of the elements of the MWGS transformation matrix.

- They require only matrix additions and multiplications, plus the inversion of one unit triangular matrix and one diagonal matrix. Therefore, they have a simple structure and are easy to implement in program code.

It should be noted that the results of Lemma 2 and Algorithm 3 have been successfully applied in [24] to construct an efficient UD-based algorithm for computing the maximum likelihood sensitivity of continuous-discrete systems.

| Algorithm 2. Diff_LD (LD-based derivative computation). |

| ▹ Input data: , , , |

| , , . ▹ Begin 1 evaluate ← , ← ; 2 evaluate ←, ← ; 3 compute ← MWGS-LD(,); ▹ For do 4 compute X←; 5 split X into three parts ←X; 6 compute V←; 7 split V into three parts ←V; 8 obtain result ← ; 9 obtain result ← . ▹ End for ▹ End. ▹ Output data: , ; , . |
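The structure of Algorithm 2 (one MWGS-LD sweep, then a loop over the parameters that forms and splits two products) can be sketched in NumPy as follows. This is our own reading, assuming $X = W^{T} D_A A'_{\theta_i} L^{-T}$ and $V = W^{T} D'_{A,\theta_i} W$ as in the derivation after Lemma 1; the exact products used in [16] may be grouped differently. The names `mwgs_ld` and `diff_ld` are hypothetical, and the result is checked against central finite differences.

```python
import numpy as np


def mwgs_ld(A, d):
    """Forward MWGS: A = W @ L.T, L unit lower triangular, W.T @ diag(d) @ W = diag(D)."""
    W = np.array(A, dtype=float)
    s = W.shape[1]
    L, D = np.eye(s), np.zeros(s)
    for j in range(s):
        D[j] = W[:, j] @ (d * W[:, j])
        for k in range(j + 1, s):
            L[k, j] = (W[:, j] @ (d * W[:, k])) / D[j]
            W[:, k] -= L[k, j] * W[:, j]
    return W, L, D


def diff_ld(A, d, dA_list, dd_list):
    """Sketch of Diff_LD: derivatives of the MWGS-LD post-arrays {L, D}.

    A, d    : pre-arrays at the given point (D_A = diag(d));
    dA_list : derivatives dA/dtheta_i, one per parameter;
    dd_list : diagonals of dD_A/dtheta_i, one per parameter.
    """
    W, L, D = mwgs_ld(A, d)                     # step 3: one MWGS-LD sweep
    LinvT = np.linalg.inv(L).T                  # the only triangular inversion
    dL_list, dD_list = [], []
    for dA, dd in zip(dA_list, dd_list):        # loop over the parameters
        X = W.T @ (d[:, None] * dA) @ LinvT     # X = W^T D_A A' L^{-T}
        V = W.T @ (dd[:, None] * W)             # V = W^T D_A' W
        dD_list.append(2.0 * np.diag(X) + np.diag(V))
        Phi = np.tril(X, -1) + np.triu(X, 1).T + np.tril(V, -1)
        dL_list.append((L @ Phi) / D)           # L Phi D^{-1} (column scaling)
    return L, D, dL_list, dD_list


rng = np.random.default_rng(4)
A0, A1 = rng.standard_normal((6, 4)), rng.standard_normal((6, 4))
d0, d1 = rng.uniform(1.0, 2.0, 6), rng.uniform(-0.5, 0.5, 6)
theta, h = 0.2, 1e-6
L, D, (dL,), (dD,) = diff_ld(A0 + theta * A1, d0 + theta * d1, [A1], [d1])
_, Lp, Dp = mwgs_ld(A0 + (theta + h) * A1, d0 + (theta + h) * d1)
_, Lm, Dm = mwgs_ld(A0 + (theta - h) * A1, d0 + (theta - h) * d1)
print(np.allclose(dL, (Lp - Lm) / (2 * h), atol=1e-5),
      np.allclose(dD, (Dp - Dm) / (2 * h), atol=1e-5))
```

Computing the MWGS sweep and $L^{-1}$ once, outside the parameter loop, mirrors steps 3–9 of Algorithm 2.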

| Algorithm 3. Diff_UD (UD-based derivative computation). |

| ▹ Input data: , , , |

| , , . ▹ Begin 1 evaluate ← , ← ; 2 evaluate ←, ← ; 3 compute ← MWGS-UD(,); ▹ For do 4 compute X←; 5 split X into three parts ←X; 6 compute V←; 7 split V into three parts ←V; 8 obtain result ← ; 9 obtain result ← . ▹ End for ▹ End. ▹ Output data: , ; . |
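Algorithm 3 has the same structure with a backward sweep. In the sketch below (our own, with the hypothetical names `mwgs_ud` and `diff_ud`), the only changes relative to the LD case are the column order of the sweep and the use of strictly upper triangular parts, assuming $U'_{\theta} = U\,(X_U + X_L^{T} + V_U)\,D^{-1}$ and $D'_{\theta} = 2X_D + V_D$ with $X = W^{T} D_A A'_{\theta} U^{-T}$.

```python
import numpy as np


def mwgs_ud(A, d):
    """Backward MWGS: A = W @ U.T, U unit upper triangular, W.T @ diag(d) @ W = diag(D)."""
    W = np.array(A, dtype=float)
    s = W.shape[1]
    U, D = np.eye(s), np.zeros(s)
    for j in range(s - 1, -1, -1):
        D[j] = W[:, j] @ (d * W[:, j])
        for k in range(j):
            U[k, j] = (W[:, j] @ (d * W[:, k])) / D[j]
            W[:, k] -= U[k, j] * W[:, j]
    return W, U, D


def diff_ud(A, d, dA_list, dd_list):
    """Sketch of Diff_UD: derivatives of the MWGS-UD post-arrays {U, D}."""
    W, U, D = mwgs_ud(A, d)                     # step 3: one MWGS-UD sweep
    UinvT = np.linalg.inv(U).T                  # the only triangular inversion
    dU_list, dD_list = [], []
    for dA, dd in zip(dA_list, dd_list):        # loop over the parameters
        X = W.T @ (d[:, None] * dA) @ UinvT     # X = W^T D_A A' U^{-T}
        V = W.T @ (dd[:, None] * W)             # V = W^T D_A' W
        dD_list.append(2.0 * np.diag(X) + np.diag(V))
        Psi = np.triu(X, 1) + np.tril(X, -1).T + np.triu(V, 1)
        dU_list.append((U @ Psi) / D)           # U Psi D^{-1} (column scaling)
    return U, D, dU_list, dD_list


rng = np.random.default_rng(5)
A0, A1 = rng.standard_normal((6, 4)), rng.standard_normal((6, 4))
d0, d1 = rng.uniform(1.0, 2.0, 6), rng.uniform(-0.5, 0.5, 6)
theta, h = 0.2, 1e-6
U, D, (dU,), (dD,) = diff_ud(A0 + theta * A1, d0 + theta * d1, [A1], [d1])
_, Up, Dp = mwgs_ud(A0 + (theta + h) * A1, d0 + (theta + h) * d1)
_, Um, Dm = mwgs_ud(A0 + (theta - h) * A1, d0 + (theta - h) * d1)
print(np.allclose(dU, (Up - Um) / (2 * h), atol=1e-5),
      np.allclose(dD, (Dp - Dm) / (2 * h), atol=1e-5))
```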

3. Main Result

The New MWGS-Based Array Algorithm for Computing the Information Matrix Sensitivity Equations

Now we are ready to present the main result: the new MWGS-based array algorithm for computing the information matrix sensitivity equations. We extend the functionality of Algorithm 1 so that it computes not only the values of the information matrix Y using the MWGS-based arrays but also the values of its derivatives.

Let $\hat{\theta}$ be a given value of the parameter $\theta$.

The new Algorithm 4 naturally extends any MWGS-based IKF implementation to the evaluation of the information matrix sensitivities.

| Algorithm 4. The differentiated MWGS-based array. |

| Initialization. Let . Evaluate the initial value of information matrix . Find , . Apply the modified Cholesky factorization . Find , . Set the initial values and . ▹ For do I. Time Update. I.1 Evaluate matrices , , and . Find , , and , . I.2 Use the modified Cholesky decomposition for matrices and to find , and , . I.3 Given the MWGS factors and their derivatives , find their predicted values and their derivatives () as follows: I.A. In the case of the forward MWGS-LD factorization (i.e., ), the following steps should be taken:

|

I.B. In the case of the backward MWGS-UD factorization (i.e., ), one has to take the following steps:

|

| II. Measurement Update. II.1 Evaluate matrices and . Find and , . II.2 Use the modified Cholesky decomposition for matrices and to find , and , . II.3 Given the MWGS factors and their derivatives , find the corresponding pairs of matrices and () as follows:

▹ End. |
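Step II.3 can be illustrated by combining the measurement-update pre-arrays with Diff_LD. The sketch below is our own and rests on two stated assumptions: the pre-arrays are taken as $A = [L^{T};\ H]$, $D_A = \mathrm{diag}(D, R^{-1})$, which reproduces (9) but is not necessarily the exact block layout of Algorithm 4, and $R = \mathrm{diag}(r)$ is diagonal, so its modified Cholesky factors are trivial. The derivative pre-arrays are $A' = [L'^{T};\ H']$ and $D'_A = \mathrm{diag}(D', -R^{-1} R' R^{-1})$. The result is checked against the differentiated Equation (9); all function names are hypothetical.

```python
import numpy as np


def mwgs_ld(A, d):
    """Forward MWGS: A = W @ L.T, L unit lower triangular, W.T @ diag(d) @ W = diag(D)."""
    W = np.array(A, dtype=float)
    s = W.shape[1]
    L, D = np.eye(s), np.zeros(s)
    for j in range(s):
        D[j] = W[:, j] @ (d * W[:, j])
        for k in range(j + 1, s):
            L[k, j] = (W[:, j] @ (d * W[:, k])) / D[j]
            W[:, k] -= L[k, j] * W[:, j]
    return W, L, D


def diff_ld(A, d, dA, dd):
    """Single-parameter LD derivative of the MWGS post-arrays (cf. Algorithm 2)."""
    W, L, D = mwgs_ld(A, d)
    X = W.T @ (d[:, None] * dA) @ np.linalg.inv(L).T
    V = W.T @ (dd[:, None] * W)
    Phi = np.tril(X, -1) + np.triu(X, 1).T + np.tril(V, -1)
    return L, D, (L @ Phi) / D, 2.0 * np.diag(X) + np.diag(V)


def mu_sensitivity_ld(L_pred, D_pred, dL_pred, dD_pred, H, dH, r, dr):
    """Hypothetical sketch of step II.3 (LD case) for a diagonal R = diag(r)."""
    A = np.vstack([L_pred.T, H])                   # pre-array A
    dA = np.vstack([dL_pred.T, dH])                # its derivative
    d = np.concatenate([D_pred, 1.0 / r])          # D_A = diag(D, R^{-1})
    dd = np.concatenate([dD_pred, -dr / r**2])     # derivative of D_A
    return diff_ld(A, d, dA, dd)


def d_ldl(L, D, dL, dD):
    """Derivative of L diag(D) L^T by the product rule."""
    return dL @ np.diag(D) @ L.T + L @ np.diag(dD) @ L.T + L @ np.diag(D) @ dL.T


rng = np.random.default_rng(6)
n, m = 4, 2
L_pred = np.eye(n) + np.tril(rng.standard_normal((n, n)), -1)
D_pred = rng.uniform(1.0, 2.0, n)
dL_pred = np.tril(rng.standard_normal((n, n)), -1)   # derivative of a unit triangular factor
dD_pred = rng.standard_normal(n)
H, dH = rng.standard_normal((m, n)), rng.standard_normal((m, n))
r, dr = rng.uniform(0.5, 1.5, m), rng.standard_normal(m)

L_f, D_f, dL_f, dD_f = mu_sensitivity_ld(L_pred, D_pred, dL_pred, dD_pred, H, dH, r, dr)
Ri, dR = np.diag(1.0 / r), np.diag(dr)
dY_expected = (d_ldl(L_pred, D_pred, dL_pred, dD_pred) + dH.T @ Ri @ H + H.T @ Ri @ dH
               - H.T @ Ri @ dR @ Ri @ H)
print(np.allclose(d_ldl(L_f, D_f, dL_f, dD_f), dY_expected))
```

In this arrangement, filling the pre-arrays, calling the derivative routine, and reading off the post-arrays are exactly the three implementation steps listed in Section 4.1.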

4. Discussion

4.1. Implementation Details of Algorithm 4

Let us consider the computational scheme of the constructed algorithm in detail. The new Algorithm 4 is built on one of the two variants of the MWGS transformation and on the corresponding method of algorithmic differentiation of this orthogonal transformation. Therefore, Algorithms 2 and 3 can be regarded as the basic computational tools for implementing Algorithm 4. From this point of view, the software implementation of the new algorithm is simple and transparent. It consists of only three steps:

- Fill in the block pre-arrays with the available data.

- Execute the algorithm for calculating derivatives in the matrix MWGS transformation of the type corresponding to Case 1 or Case 2.

- Obtain the block post-arrays and read off the required results from them as matrix blocks.

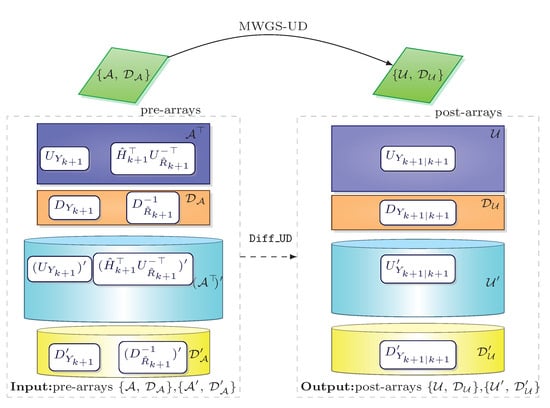

The implementation scheme of the measurement update step in Algorithm 4 based on the MWGS-UD transformation is shown in Figure 1. A scheme for the MWGS-LD transformation can be drawn in the same way.

Figure 1.

Implementation scheme of the measurement update step in Algorithm 4 based on the MWGS-UD transformation.

The computational complexity of the novel Algorithm 4 is mainly determined by the computational complexity of Algorithm 1, i.e., the MWGS-based information-form Kalman filtering algorithm. A detailed analysis of its computational complexity is given in ([18], Section 5.2).

It was shown that the conventional IKF and Algorithm 1 have computational complexity of the same order. However, the IKF requires four full matrix inversions to calculate the information matrix Y, whereas Algorithm 1 requires only one full matrix inversion, namely of the matrix $F_{k-1}$. Besides, if the matrices $Q_k$ and $R_k$ are positive definite and do not depend on k, then their modified Cholesky decompositions need to be performed only once, at the initialization step of the MWGS-based algorithm. Similarly, if the matrix $F_k$ is nonsingular and does not depend on k, then F needs to be inverted only once, at the initialization step. Algorithm 4 additionally requires only one inversion of a unit triangular matrix and one inversion of a diagonal matrix (see Algorithm 2 or 3). Therefore, no additional full matrix inversions are required.

To summarize, evaluating the information matrix sensitivities with the conventional IKF (Equations (5)–(7) and (19)–(23)) requires eight full matrix inversions, whereas the new Algorithm 4 needs no full matrix inversions beyond the single one already required by Algorithm 1 and otherwise inverts only a unit triangular matrix and a diagonal matrix. Thus, the newly proposed algorithm is computationally more efficient than the conventional IKF in terms of matrix inversions.

4.2. Application of the Results Obtained to the Problem of Parameter Identification

In practice, the matrices characterizing the discrete-time linear stochastic system (1)–(2) are often known only up to certain parameters. Consider the important problem of parameter identification [21]. Assume that the elements of the system matrices $F_k$, $G_k$, $H_k$, $Q_k$, $R_k$, and $\Pi_0$ are functions of an unknown system parameter vector $\theta$, which needs to be identified. For the sake of simplicity, instead of $F_k(\theta)$, $G_k(\theta)$, $H_k(\theta)$, etc., we write $F_k$, $G_k$, $H_k$, etc.

We wish to demonstrate how the new Algorithm 4 can be applied to solve the parameter identification problem of the practical stochastic system model.

Consider the instrument error model for one channel of the INS (Inertial Navigation System) given as follows [25]:

where , , and the subscripts x, y, A, and G denote “axis x”, “axis y”, “Accelerometer”, and “Gyro”, respectively.

In the state vector, the first element is the random error in reading the velocity along axis x of a GSP, the second element is the angular error in determining the local vertical, the third is the random error of the accelerometer reading, and the fourth is the constant gyro drift rate.

The constants , g, a are, respectively, equal to the rate of data arrival and processing, the gravity acceleration, and the semi-major axis of the Earth. The quantities and . The constants and are elements of the accepted model of the correlation function . Numerical values of the model parameters are given in Table 1.

Table 1.

Numerical values of parameters.

Equations (38) and (39) correspond to the general model (1)–(2). Note that in our case none of the system model matrices depend on k.

Let us suppose that parameter is unknown and needs to be identified. This means that the model parameter , and therefore , .

To solve the parameter identification problem, we use the Active Principle of Adaptation (APA) [26,27,28,29], which consists of constructing an Auxiliary Performance Index (API) [23,28,30] and minimizing it by a gradient-based numerical procedure.

The APA approach to system adaptation under parameter uncertainty differs in that it controls the state prediction error indirectly, in the form of the API. The API has to satisfy two main requirements:

- it depends on the system observable values only;

- it attains its minimum coincidently with the Original Performance Index (OPI).

The API satisfies the relation

if the OPI is defined as the expected (Euclidean) norm of the state prediction error. Thus, the API and the OPI have the same minimizing argument.

To construct the API, we build a Standard Observable Model (SOM), i.e., we perform the corresponding change of basis in the state space of representation (1)–(2). The model

is equivalent to the original model (1)–(2) and is its canonical representation, where is the new state vector; , , and are matrices of the following form:

From representation (42), (43) it follows that the maximum observability index of the system is .

Using the results of [26,27,28], we construct the auxiliary performance index (API)

for which the auxiliary process is written in the form:

where is the prediction state estimate obtained in an adaptive filter.

Next, to obtain a workable estimate of (44), we replace it with the realizable performance index

To find the optimal value of the unknown parameter by minimizing the API (46), existing numerical optimization methods can be used. All non-search (gradient-based) methods require the calculation of the API gradient. Assuming that a gradient-based optimization method is chosen for the parameter identification procedure, from (46) we can write the expression for the API gradient:

Evaluating (47) requires the computation of sensitivities (partial derivatives) of the auxiliary process with respect to the adjustable parameter . Various methods for calculating the sensitivities based on the standard Kalman filter are discussed in detail in [8].

Now we are ready to demonstrate how the new Algorithm 4 can be applied to construct a computational scheme for the numerical identification of the parameter based on the API approach.

The identification of unknown parameter and the estimation of state vector of the system (38)–(39) according to the criterion

can be performed simultaneously by the following algorithm.

Let us consider the practical application of Algorithm 5 to identify the unknown value of in the model (38)–(39). We simulated the sequence of output signals with the “true” value of . All computer code was written in MATLAB.

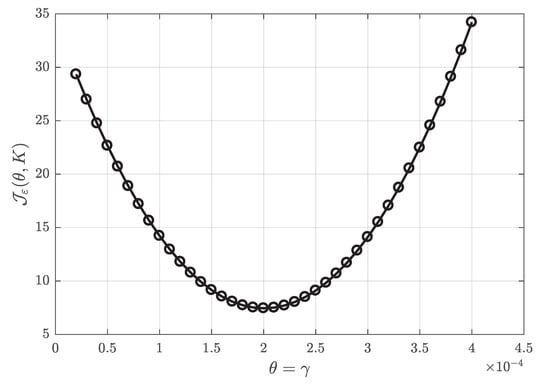

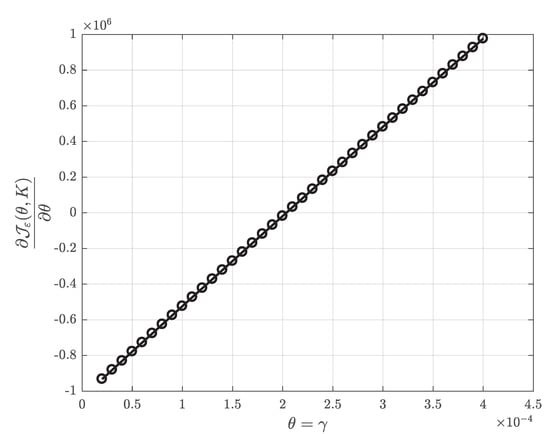

To conduct the numerical experiments, we implemented Algorithms 1–5 as MATLAB functions. Then, we calculated the API (46) and the API gradient (47) for different values of . The results are illustrated in Figure 2 and Figure 3.

| Algorithm 5. The API-based parameter identification computational scheme. |

| BEGIN |

| ✓ Assign an initial parameter estimate for . REPEAT

|

| END |

Figure 2.

The values of the API identification criterion depending on values of parameter , calculated using Algorithms 1 and 5.

Figure 3.

The values of the API gradient depending on values of parameter , calculated using Algorithms 4 and 5.

As can be seen from these figures, the minimum point of the API coincides with the true value of the parameter. Furthermore, the API gradient is negative to the left of its zero and positive to the right of it, which corresponds to the minimum of the API. This evidence supports our theoretical derivations.

Next, to solve the parameter identification problem, we use the built-in function fminunc of the MATLAB Optimization Toolbox, which implements a gradient-based unconstrained minimization method. Algorithm 5 was incorporated into fminunc to compute the API and its gradient. We chose the initial value and the stopping criteria epsf=, epsx=.

Results summarized in Table 2 show that the computed estimate comes close to the true parameter value .

Table 2.

Performance of the API-based identification of the model parameter .

Thus, we conclude that the newly constructed Algorithm 4 can be efficiently applied to the parameter identification problem when a gradient-based optimization method is used.
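The overall workflow (a filter that returns a criterion together with its gradient, passed to a gradient-based optimizer) can be mimicked outside MATLAB. The toy below is our own and deliberately swaps in simpler components: a scalar covariance-form Kalman filter instead of the MWGS-based IKF, the mean squared innovation instead of the API (46), and SciPy's `scipy.optimize.minimize` with `jac=True` (which expects the objective to return the pair value/gradient) instead of fminunc. The model $x_k = a x_{k-1} + w_k$, $z_k = x_k + v_k$ and all names are illustrative assumptions; $dJ/da$ is obtained by propagating the filter sensitivity equations alongside the filter.

```python
import numpy as np
from scipy.optimize import minimize


def simulate(a, q, r, n, seed=0):
    """Simulate x_k = a x_{k-1} + w_k, z_k = x_k + v_k, starting from x_0 = 0."""
    rng = np.random.default_rng(seed)
    w = np.sqrt(q) * rng.standard_normal(n)
    v = np.sqrt(r) * rng.standard_normal(n)
    x, z = 0.0, np.empty(n)
    for k in range(n):
        x = a * x + w[k]
        z[k] = x + v[k]
    return z


def criterion_and_gradient(theta, z, q, r):
    """Mean squared innovation J(a) of a scalar Kalman filter and its derivative dJ/da."""
    a = float(theta[0])
    xp, P, dxp, dP = 0.0, q, 0.0, 0.0      # x_{1|0}, P_{1|0} and their sensitivities
    J = dJ = 0.0
    for zk in z:
        e, de = zk - xp, -dxp              # innovation and its sensitivity
        S = P + r
        K, dK = P / S, dP * r / S**2
        xf, dxf = xp + K * e, dxp + dK * e + K * de
        Pf, dPf = P * r / S, dP * r**2 / S**2
        J, dJ = J + e * e, dJ + 2.0 * e * de
        xp, dxp = a * xf, xf + a * dxf                        # time update of the estimate
        P, dP = a * a * Pf + q, 2.0 * a * Pf + a * a * dPf    # and of the covariance
    return J / len(z), np.array([dJ / len(z)])


q, r, a_true = 1.0, 1.0, 0.8
z = simulate(a_true, q, r, n=20000)
res = minimize(criterion_and_gradient, x0=[0.3], args=(z, q, r), jac=True,
               method="L-BFGS-B", bounds=[(-0.99, 0.99)])
print(res.x[0])
```

The estimate should land close to the true value 0.8; the point of the example is only that an exact sensitivity-based gradient lets a quasi-Newton optimizer run without finite differences.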

5. Conclusions

This paper presents a new MWGS-based array algorithm for computing the information matrix sensitivity equations. We have extended the functionality of the MWGS-based information-form Kalman filtering methods so that they compute not only the values of the information matrix using the MWGS-based arrays but also the values of its derivatives. The proposed algorithm is robust to machine round-off errors because the MWGS orthogonalization procedure is applied at each step.

Moreover, we have demonstrated how the new Algorithm 4 can be applied to the parameter identification problem for a practical stochastic system model, namely a simplified version of the instrument error model of the INS. We have also suggested a new API-based parameter identification computational scheme. Numerical experiments conducted in MATLAB confirm the efficiency of the proposed solution.

Author Contributions

Conceptualization, A.T. and J.T.; methodology, A.T. and J.T.; software, A.T.; validation, J.T.; formal analysis, A.T. and J.T.; investigation, A.T. and J.T.; resources, A.T. and J.T.; data curation, A.T. and J.T.; writing—original draft preparation, J.T.; writing—review and editing, A.T. and J.T.; visualization, A.T. and J.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| MWGS | Modified weighted Gram–Schmidt transformation |

| MWGS-LD | Forward MWGS procedure |

| MWGS-UD | Backward MWGS procedure |

| KF | Kalman filter |

| IKF | Information Kalman filter |

| TU | Time update step |

| MU | Measurement update step |

| APA | Active principle of adaptation |

| API | Auxiliary Performance Index |

References

- Golub, G.H.; Van Loan, C.F. Matrix Computations; Johns Hopkins University Press: Baltimore, MD, USA, 1983.

- Giles, M. An Extended Collection of Matrix Derivative Results for Forward and Reverse Mode Algorithmic Differentiation; Report 08/01; Oxford University Computing Laboratory: Oxford, UK, 2008; 23p.

- Dieci, L.; Eirola, T. Applications of Smooth Orthogonal Factorizations of Matrices. In The IMA Volumes in Mathematics and Its Applications; Springer: New York, NY, USA, 2000; Volume 119, pp. 141–162.

- Dieci, L.; Russell, R.D.; Van Vleck, E.S. On the Computation of Lyapunov Exponents for Continuous Dynamical Systems. SIAM J. Numer. Anal. 1997, 34, 402–423.

- Kunkel, P.; Mehrmann, V. Smooth factorizations of matrix valued functions and their derivatives. Numer. Math. 1991, 60, 115–131.

- Dieci, L. On smooth decompositions of matrices. SIAM J. Matrix Anal. Appl. 1999, 20, 800–819.

- Åström, K.-J. Maximum Likelihood and Prediction Error Methods. Automatica 1980, 16, 551–574.

- Gupta, N.K.; Mehra, R.K. Computational aspects of maximum likelihood estimation and reduction in sensitivity function calculations. IEEE Trans. Autom. Control 1974, AC-19, 774–783.

- Walter, S.F. Structured Higher-Order Algorithmic Differentiation in the Forward and Reverse Mode with Application in Optimum Experimental Design. Ph.D. Thesis, Humboldt-Universität zu Berlin, Berlin, Germany, 2011.

- Bierman, G.J. Factorization Methods for Discrete Sequential Estimation; Academic Press: New York, NY, USA, 1977.

- Grewal, M.S.; Andrews, A.P. Kalman Filtering: Theory and Practice Using MATLAB, 4th ed.; John Wiley & Sons, Inc.: New York, NY, USA, 2015.

- Kailath, T.; Sayed, A.H.; Hassibi, B. Linear Estimation; Prentice Hall: Hoboken, NJ, USA, 2000.

- Gibbs, B.P. Advanced Kalman Filtering, Least-Squares and Modeling: A Practical Handbook; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2011.

- Grewal, M.S.; Weill, L.R.; Andrews, A.P. Global Positioning Systems, Inertial Navigation, and Integration; John Wiley & Sons, Inc.: New York, NY, USA, 2001.

- Tsyganova, J.V.; Kulikova, M.V. State sensitivity evaluation within UD based array covariance filters. IEEE Trans. Autom. Control 2013, 58, 2944–2950.

- Tsyganova, Y.V.; Tsyganov, A.V. On the Computation of Derivatives within LD Factorization of Parametrized Matrices. Bull. Irkutsk. State Univ. Ser. Math. 2018, 23, 64–79. (In Russian)

- Kaminski, P.G.; Bryson, A.E.; Schmidt, S.F. Discrete square-root filtering: A survey of current techniques. IEEE Trans. Autom. Control 1971, AC-16, 727–735.

- Tsyganova, J.V.; Kulikova, M.V.; Tsyganov, A.V. A general approach for designing the MWGS-based information-form Kalman filtering methods. Eur. J. Control 2020, 56, 86–97.

- Björck, A. Solving linear least squares problems by Gram-Schmidt orthogonalization. BIT Numer. Math. 1967, 7, 1–21.

- Tsyganova, J.V.; Kulikova, M.V.; Tsyganov, A.V. Some New Array Information Formulations of the UD-based Kalman Filter. In Proceedings of the 18th European Control Conference (ECC), Napoli, Italy, 25–28 June 2019; pp. 1872–1877.

- Ljung, L. System Identification: Theory for the User, 2nd ed.; Prentice Hall PTR: Upper Saddle River, NJ, USA, 1999.

- Kulikova, M.V. Likelihood Gradient Evaluation Using Square-Root Covariance Filters. IEEE Trans. Autom. Control 2009, 54, 646–651.

- Semushin, I.V.; Tsyganova, J.V.; Tsyganov, A.V. Numerically Efficient LD-computations for the Auxiliary Performance Index Based Control Optimization under Uncertainties. IFAC-PapersOnLine 2018, 51, 568–573.

- Boiroux, D.; Juhl, R.; Madsen, H.; Jørgensen, J.B. An Efficient UD-Based Algorithm for the Computation of Maximum Likelihood Sensitivity of Continuous-Discrete Systems. In Proceedings of the 2016 IEEE 55th Conference on Decision and Control (CDC), ARIA Resort & Casino, Las Vegas, NV, USA, 12–14 December 2016; pp. 3048–3053.

- Broxmeyer, C. Inertial Navigation Systems; McGraw-Hill Book Company: Boston, MA, USA, 1956.

- Semushin, I.V. Adaptation in Stochastic Dynamic Systems—Survey and New Results I. Int. J. Commun. Netw. Syst. Sci. 2011, 4, 17–23.

- Semushin, I.V. Adaptation in Stochastic Dynamic Systems—Survey and new results II. Int. J. Commun. Netw. Syst. Sci. 2011, 4, 266–285.

- Semushin, I.V.; Tsyganova, J.V. Adaptation in Stochastic Dynamic Systems—Survey and New Results IV: Seeking Minimum of API in Parameters of Data. Int. J. Commun. Netw. Syst. Sci. 2013, 6, 513–518.

- Semushin, I.V. The APA based time-variant system identification. In Proceedings of the 53rd IEEE Conference on Decision and Control (CDC), Los Angeles, CA, USA, 15–17 December 2014; pp. 4137–4141.

- Tsyganova, Y.V. Computing the gradient of the auxiliary quality functional in the parametric identification problem for stochastic systems. Autom. Remote Control 2011, 72, 1925–1940.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).