1. Introduction

Since the late 18th century, the manufacturing industry has undergone multiple transformations. The shift from manual techniques to automated systems was driven by mechanization and steam power [1].

Each subsequent industrial revolution has brought groundbreaking technologies that have progressively reshaped how goods are produced, distributed, and consumed, from electricity and assembly lines in the early 20th century to computer-controlled automation in its latter half. Today, the integration of digital technologies into manufacturing processes has reached an unprecedented scale, fundamentally altering operational efficiency, resource management, and environmental performance [2].

Recent evidence suggests that the digitalization of manufacturing has significantly improved the carbon emission efficiency of exports, demonstrating that modern technological integration not only enhances productivity but also contributes to more sustainable industrial practices [3].

Moreover, the presence of digital industries in urban areas has been shown to promote innovation ecosystems that speed up the development and adoption of new technologies [2].

This shift toward digitally enabled manufacturing is also reshaping global competitiveness, as nations and regions that successfully combine innovative capacity with advanced digitalization levels are better positioned to lead in international markets [4]. In this context, understanding how to employ emerging technologies to optimize manufacturing processes and overcome quality control challenges has become a major concern for both industry and academia.

These technological advances have become essential in the shipbuilding sector, where they have boosted the quality of final products and streamlined production workflows. Modern defect detection technologies now enable shipyards to perform precise inspections at various stages of assembly, which makes it possible to detect deviations early on and reduce the need for costly rework [5].

Quality control processes have thus evolved from traditional manual inspections to highly integrated systems that monitor dimensional accuracy, structural integrity, and compliance with design specifications throughout the entire construction cycle. Implementing these frameworks reduces defects, improves customer satisfaction, and optimizes resource allocation by identifying inefficiencies before they propagate throughout the process [6].

These digital technologies are being adopted globally at an accelerating rate, with shipyards in many regions reporting significant improvements in traceability, production efficiency, and defect management. For example, smart factory initiatives in China have shown that using intelligent initial design models combined with data-driven case analysis can substantially reduce production time and improve coordination between departments [7].

However, the competitive environment remains challenging, especially for European shipyards. They face pressure from lower-cost producers in Asia while trying to uphold their reputation for building technologically sophisticated, high-quality vessels [8].

To remain competitive, many European shipyards have adopted a collaborative model that relies on an extensive network of subcontractors to supply specialized components and perform specific manufacturing tasks. This approach enables shipyards to focus on system integration and final assembly while harnessing the expertise and flexibility of smaller suppliers. It also distributes financial risk and allows for quicker adaptation to market fluctuations and changing regulatory standards [9].

Given the massive scale and complexity of modern vessels, the early detection of defects in smaller structural components is essential to guaranteeing the final product’s overall integrity and safety. A typical ship is assembled hierarchically from thousands of individual elements, beginning with one of the smallest fabricated units known as simple sub-assemblies and progressing to more complex structures called T sub-assemblies. These fundamental components are then grouped and welded together to create larger modular blocks; these blocks are assembled into the main hull sections and other primary divisions of the vessel.

Because this construction process relies on the precise fit and alignment of countless prefabricated pieces, even minor dimensional deviations or weld defects at the subassembly level can propagate upward through the assembly hierarchy, leading to misalignment, structural weakness, or the need for extensive and costly rework during block integration.

Defects that remain undetected in the early stages of production may compromise the ship’s structural performance under operational loads, which could affect its long-term durability, reliability, and fuel efficiency. Therefore, it is essential to implement strict quality control measures during sub-assembly and block fabrication stages. This ensures that production schedules are maintained, costs are controlled, and the final vessel meets strict safety standards and regulatory requirements.

Machine learning and computer vision techniques have emerged as powerful tools for automating defect detection across various industrial domains [10]. They offer substantial improvements in inspection speed, consistency, and accuracy compared to manual methods, and can identify patterns and anomalies that human inspectors might overlook, especially when working with large volumes of components or complex geometric structures [11].

Within the broader field of automated inspection, point cloud processing has gained significant attention as a powerful method for capturing and analyzing three-dimensional geometric information with high precision. Generated through laser scanning or photogrammetry, point clouds provide dense spatial representations of physical objects, enabling detailed analysis of surface geometry, dimensional conformance, and structural integrity [12]. Applying machine learning algorithms directly to point cloud representations makes it possible to detect deviations from nominal geometry, identify surface defects, and assess assembly quality entirely automatically.

One particularly promising approach within this domain involves the use of reconstruction-based models, where a neural network learns to encode the normal geometric characteristics of defect-free components and then attempts to reconstruct input point clouds. In this paradigm, regions of the input that deviate significantly from the learned distribution, such as dents, warping, or misaligned features, result in higher reconstruction errors, which can be interpreted as indicators of potential defects [13].

Recent advancements in deep learning have demonstrated that reconstruction-based architectures are highly effective for unsupervised defect detection, where models are trained exclusively on defect-free samples without requiring labeled examples of anomalies [14]. These architectures learn compact latent representations that capture the underlying distribution of normal data. During inference, inputs that deviate significantly from this learned distribution yield elevated reconstruction errors and can therefore be flagged.

This unsupervised strategy is especially valuable in manufacturing environments where labeled defect data are scarce and costly to obtain, as the model can be trained only on examples of acceptable components and subsequently used to identify anomalies during production. While several studies have addressed defect detection in shipbuilding, most existing approaches depend on traditional inspection protocols or human expertise, and the use of advanced deep learning architectures with three-dimensional geometric data has received limited attention. For example, research on traditional shipbuilding practices has used logic-based analysis frameworks to systematically identify potential failure modes [15]. Similarly, deep learning has been applied to detect painting defects in shipyards using image-based convolutional networks [16], and machine learning classifiers have been deployed for automated weld inspection based on sensor data [17]. However, none of these studies has investigated point cloud autoencoders or reconstruction-based anomaly detection models for shipbuilding components.

The use of 3D reconstruction and point cloud-based methods for defect detection has become popular in many manufacturing sectors. For example, in additive manufacturing, one study combines in situ point cloud processing with machine learning to rapidly identify surface defects during printing, enabling real-time quality monitoring and early intervention [18].

Similarly, depth-based approaches have been developed to enhance anomaly detection in industrial components by simulating depth information to augment training data and improve model robustness [19]. Additionally, lightweight network architectures specifically designed for 3D industrial anomaly detection have demonstrated that efficient point cloud processing can achieve high accuracy while maintaining computational feasibility for deployment in production environments [20].

Recent studies have shown that deep learning-based 3D reconstruction can be effectively employed for defect detection in industrial components. For example, a recent 3D anomaly detection method based solely on point cloud reconstruction demonstrates that autoencoder-style reconstruction can localize industrial surface defects without relying on large memory banks or pre-trained models [21]. Other authors propose full point-cloud reconstruction pipelines for 3D anomaly detection on industrial parts, demonstrating competitive performance on datasets such as MVTec 3D-AD [22]. Reconstruction-based autoencoders have also been applied to surface inspection scenarios, where deviations are detected by comparing reconstructed and measured shapes from laser sensors [23]. In parallel, several reviews highlight the growing role of point-cloud deep learning in industrial production and defect inspection, with reconstruction-based methods emerging as a key direction for automatic quality control [24,25].

Unlike these works, which mainly focus on generic benchmark datasets or other manufacturing domains, the application of reconstruction-based point cloud models to real industrial components presents an opportunity to extend their use to more complex and critical scenarios, such as shipbuilding sub-assemblies.

This work focuses on the use of point cloud reconstruction algorithms to detect overshooting defects in 3D printed representations of simple and T subassemblies, whose blueprints were provided by a company working as a subcontractor to a shipyard. To achieve this, we evaluate four unsupervised learning architectures, each trained to model the geometry of defect-free samples. Following reconstruction, an Isolation Forest algorithm is applied to the resulting error metrics to classify defective components. The purpose of this study is to compare these approaches and identify suitable options for automated overshooting detection in a realistic industrial setting. Our goal is to offer practical insights for implementing reliable quality control in shipbuilding and other high-precision manufacturing environments.

Based on this context, the main contributions of this work are threefold. First, we propose an unsupervised, reconstruction-based pipeline for detecting overshooting defects in shipbuilding sub-assemblies using 3D point clouds and anomaly scores derived from reconstruction errors. Second, we systematically compare several deep learning architectures for point cloud reconstruction under a common training and evaluation setup. We analyze their convergence behavior, computational cost, and detection performance. Third, we demonstrate the practical relevance of this approach on multiple sub-assembly geometries, showing how the choice of architecture and anomaly threshold affects the trade-off between defect detection capability and false alarms, and providing guidelines for its adoption in industrial quality control.

This paper is structured in six main sections. After the introduction, Section 2 describes the industrial context and characteristics of the case study components. Section 3 presents our approach. Section 4 describes the methods and materials used. Section 5 presents the experimental setup, implementation details, results, and comparative analysis. Section 6 concludes with a discussion and recommendations for future research directions.

2. Case Study

A ship’s structure is built according to a hierarchical assembly process. The vessel is divided into large blocks, each of which is composed of smaller sub-blocks. These sub-blocks are assembled from multiple types of subassemblies, which are manufactured separately and joined through welding and fitting operations to form the final structural units. Among these elements, the T-subassemblies represent critical structural components located at the junctions where transverse and longitudinal beams intersect. They play an essential role in ensuring the vessel’s overall stiffness and load-bearing capacity, and therefore require high precision in their fabrication.

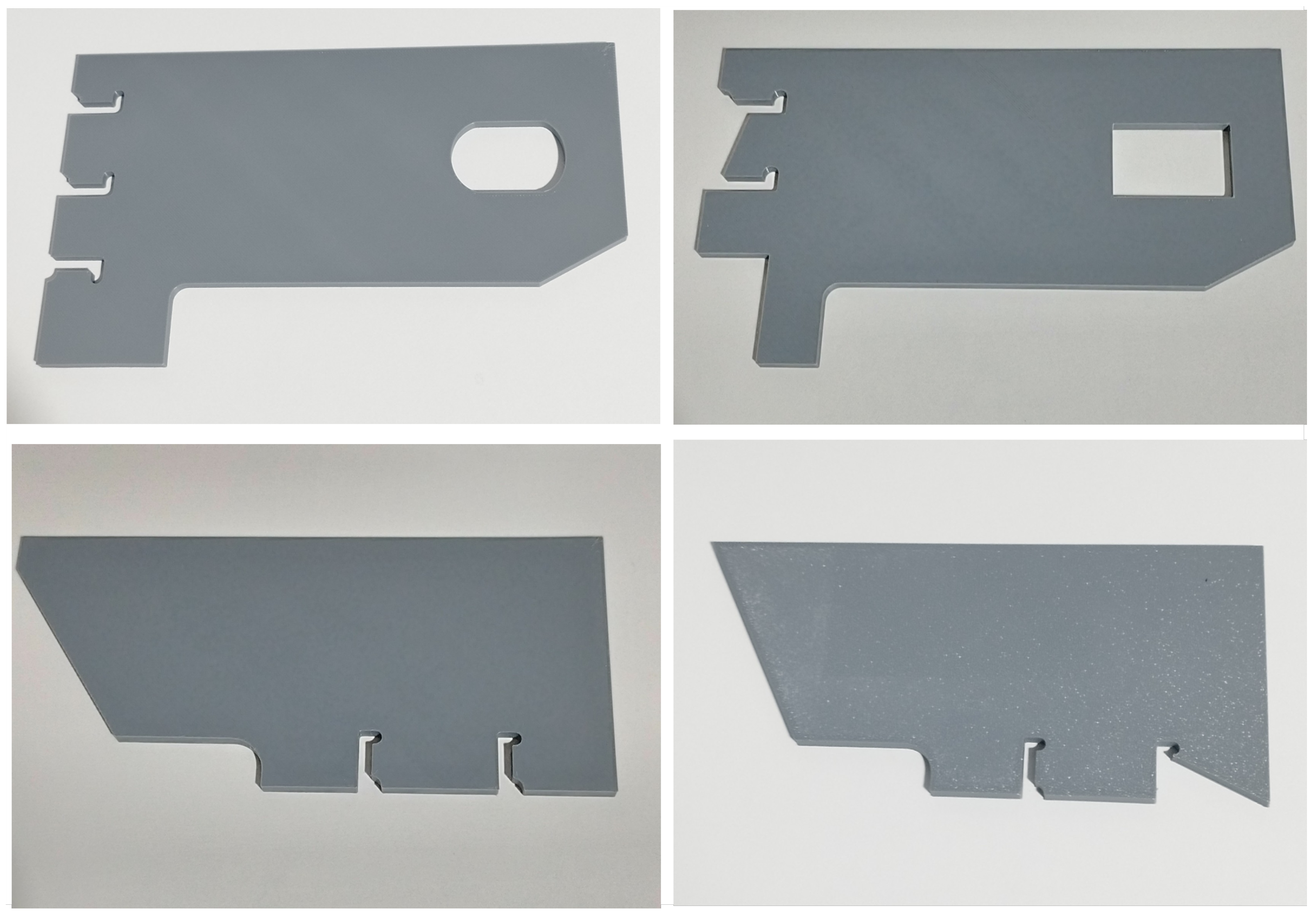

For this study, a company that works as an auxiliary supplier for a shipyard provided us with the official blueprints of a few representative T-subassemblies, as the original components are too large to handle directly. These parts were selected due to their complex geometry and the frequent occurrence of dimensional deviations during manufacturing. One of the most common issues observed during production is the overshooting defect, which occurs when material is unintentionally removed beyond the intended design surface during the cutting process. This typically happens when the thermal or mechanical cutting tool slightly exceeds the programmed trajectory, or when positioning or alignment errors cause the torch or blade to advance too far. As a result, small recesses or notches are generated along the edge, where the actual contour lies inside the nominal boundary, reducing the effective contact area at welded joints and potentially affecting both assembly precision and structural integrity.

Identifying such defects is essential, as even minor deviations can compromise the assembly process and the structural performance of the parts. To address this challenge, we propose a system that leverages 3D point cloud data to identify anomalies in the geometry of the T-subassemblies.

The approach proposed is unsupervised, thereby eliminating the necessity for labeled defective samples during the training phase. This is of particular importance in industrial settings, where defects are often highly variable and unpredictable, making it impractical to collect representative datasets of all possible failure scenarios.

To this end, the T-subassemblies were reproduced through 3D printing based on the original blueprints provided by the company. The models were fabricated using a fused deposition modeling (FDM) printer and polylactic acid (PLA) filament, which were selected for their ease of use, dimensional stability, and cost efficiency. This process yielded precise replicas that preserve the overall geometry of the original designs at a substantially reduced scale, making them much easier to handle.

Figure 1 shows a few pictures of these replicas. The pieces on the right are the non-defective ones, while the pieces on the left include overshooting defects.

3. Approach

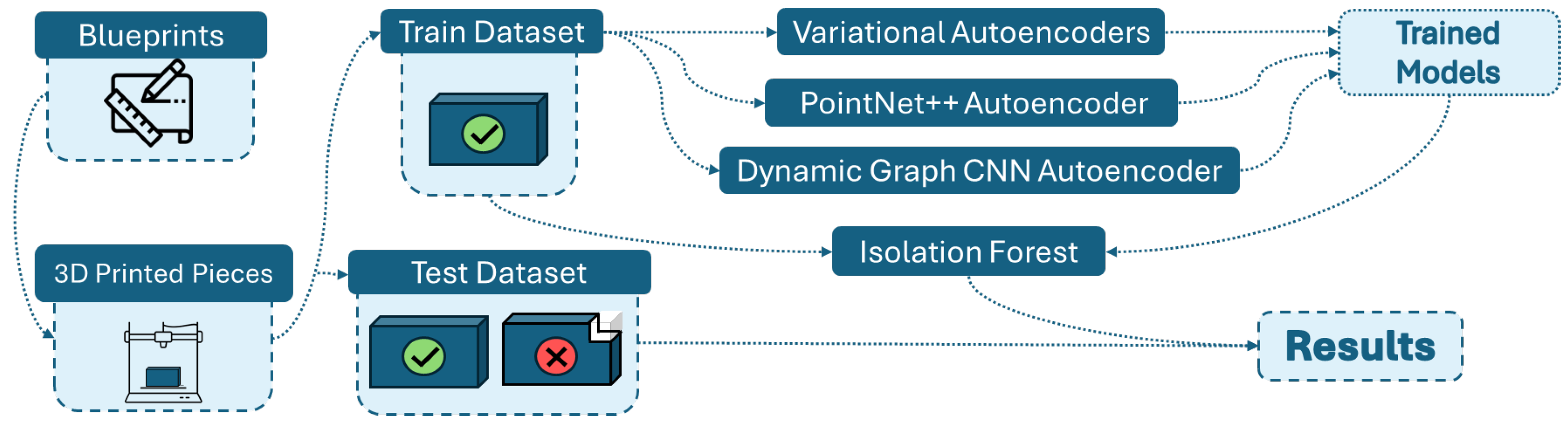

Our approach follows a four-stage reconstruction-based anomaly detection pipeline, illustrated in Figure 2. The main stages are: (1) component modeling and defect definition, (2) point cloud acquisition and dataset construction, (3) training of point cloud reconstruction models on non-defective data, and (4) anomaly detection using Isolation Forest on reconstruction error features.

For our approach, we relied on the company’s official blueprints of three different components, referred to as T-subassemblies. These parts are characterized by their large size and considerable weight, with lengths ranging from 3 to 5 m and masses between 181 and 931 kg. Due to their dimensions, the handling or manipulation of the original pieces was impractical. Therefore, we used 3D printing technology to reproduce the components, which allowed for quicker prototyping while maintaining the geometric fidelity of the original designs.

To make the replicas easier to manipulate and to fit within the limitations of the 3D printing equipment, we uniformly scaled the models down to 5% of their original dimensions, reducing, for example, a 4 m component to approximately 20 cm in length. Additionally, based on the blueprint geometry, we generated versions of the components that intentionally incorporated overshooting defects. These defects were designed to look like the imperfections seen in real manufacturing processes, so we could analyze and compare the effect of these defects in a controlled experimental setting.

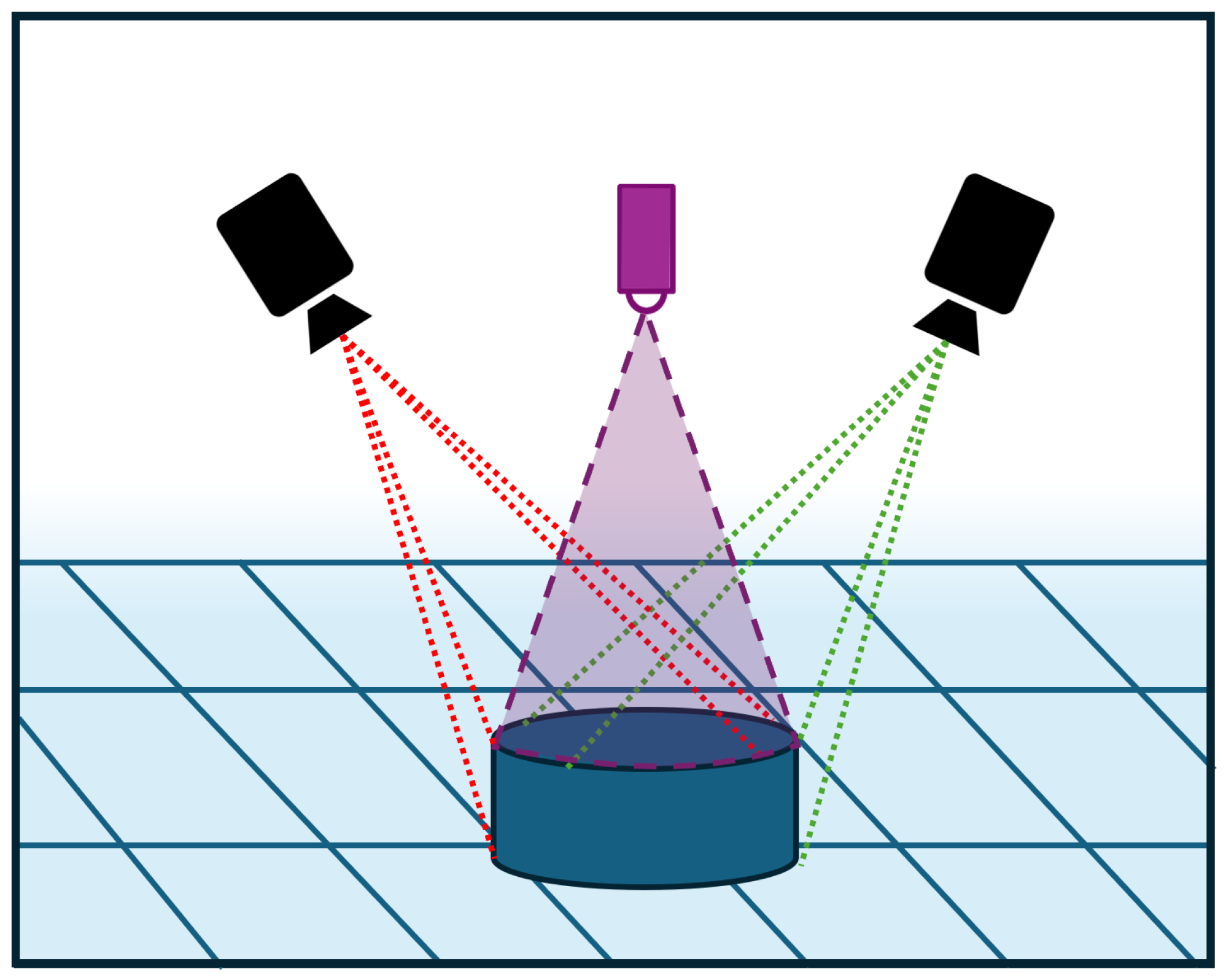

Once the 3D printed pieces were ready, our next step was to capture their geometric information as point clouds. To do this, we used an Intel RealSense D455 depth camera to acquire multiple point clouds of each component, both non-defective and defective, from different positions and orientations. Varying the viewpoints and placements allowed us to better replicate realistic acquisition conditions, with the aim of improving the model’s capacity to generalize to diverse real-world scenarios. After the data was collected, the point clouds corresponding to the non-defective components were randomly divided into three subsets: 70% for training, 20% for testing, and 10% for validation. In contrast, all defective component samples were used exclusively during the validation phase. This partitioning reflects the unsupervised nature of our approach: the model is trained solely on non-defective data, and its ability to identify defects is evaluated independently.
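The partitioning described above can be sketched in a few lines. This is only an illustration under stated assumptions: the scan identifiers, the sample counts, and the helper name `split_normal_samples` are hypothetical and do not come from the study.

```python
import random


def split_normal_samples(sample_ids, seed=0):
    """Shuffle defect-free sample ids and split them 70/20/10."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)
    n_train = len(ids) * 7 // 10
    n_test = len(ids) * 2 // 10
    return {
        "train": ids[:n_train],
        "test": ids[n_train:n_train + n_test],
        "val": ids[n_train + n_test:],
    }


# Hypothetical scan identifiers, used only for illustration.
normal_ids = [f"normal_{i:03d}" for i in range(100)]
defective_ids = [f"defect_{i:03d}" for i in range(40)]

splits = split_normal_samples(normal_ids)
# Defective scans never enter training; they are held out for evaluation.
held_out_defective = list(defective_ids)
```

Keeping the defective scans in a separate list makes it harder to leak them into the training subset by accident.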

In the third stage (model training), we selected four architectures commonly used for unsupervised reconstruction of 3D point cloud data: a Variational Autoencoder (VAE), FoldingNet, a Dynamic Graph CNN (DGCNN) Autoencoder, and a PointNet++ Autoencoder. These models were chosen to provide a diverse representation of state-of-the-art approaches in point cloud feature extraction and reconstruction. By comparing their performance under the same experimental conditions, we aimed to evaluate how different geometric encoding strategies influence the model’s ability to represent complex shapes and to distinguish between defective and non-defective components.

In the final stage (anomaly detection), after training the models, we computed reconstruction metrics for each sample, specifically the Earth Mover’s Distance (EMD) and the Chamfer Distance (CD), to quantify the similarity between the input and reconstructed point clouds. We then used these metrics as input features for the Isolation Forest algorithm to perform anomaly detection. The Isolation Forest was trained exclusively on the reconstruction metrics obtained from the non-defective components, allowing it to learn the distribution of normal reconstruction errors.
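To make the two reconstruction metrics concrete, the following brute-force sketch computes them with NumPy and SciPy. It assumes one common convention (Chamfer Distance as the sum of mean squared nearest-neighbor distances in both directions, and EMD as the mean cost of an optimal one-to-one matching between equally sized clouds); the exact variants used in the study may differ, and the function names are illustrative.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def pairwise_sq_dists(a, b):
    """Squared Euclidean distances between rows of a (N, 3) and b (M, 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sum(diff ** 2, axis=-1)


def chamfer_distance(a, b):
    """Symmetric Chamfer Distance (assumed convention: squared, averaged)."""
    d = pairwise_sq_dists(a, b)
    return d.min(axis=1).mean() + d.min(axis=0).mean()


def earth_movers_distance(a, b):
    """Exact EMD for equally sized clouds via optimal assignment."""
    cost = np.sqrt(pairwise_sq_dists(a, b))
    rows, cols = linear_sum_assignment(cost)
    return cost[rows, cols].mean()


rng = np.random.default_rng(0)
cloud = rng.uniform(-1.0, 1.0, size=(256, 3))
# Stand-in for a model output: the input plus small noise.
reconstruction = cloud + rng.normal(scale=0.01, size=cloud.shape)

# Two-dimensional feature vector later passed to the anomaly detector.
features = np.array([
    chamfer_distance(cloud, reconstruction),
    earth_movers_distance(cloud, reconstruction),
])
```

The dense distance matrix grows quadratically with the number of points, so for clouds of 4096 points a GPU implementation or an approximate EMD is usually preferable.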

5. Experiments and Results

This section describes the experiments that were performed and their results.

5.1. Experimental Setup

To ensure a fair comparison across all architectures, we employed a consistent experimental setup for training and evaluation. All models were trained for 200 epochs with the Chamfer Distance as the reconstruction loss function [35], processing point clouds with 4096 points per sample. Training was performed with a batch size of 8 and a learning rate of

, using the Adam optimizer. All experiments were performed on an NVIDIA RTX A6000 GPU, and models were implemented in TensorFlow/Keras. This uniform training configuration allows for direct performance comparison under identical conditions, isolating the impact of architectural differences on defect detection capability.

Variational Autoencoder (VAE): Following the architecture described in Section 4, the encoder processes the input point cloud through three 1D convolutional layers with 64, 128, and 256 filters, each followed by batch normalization and ReLU activation. After global max pooling, the aggregated features are projected into two 128-dimensional vectors representing the mean $\mu$ and the log-variance $\log \sigma^2$ of the latent distribution. The latent vector $z$ is sampled using the reparameterization trick as $z = \mu + \sigma \odot \epsilon$ with $\epsilon \sim \mathcal{N}(0, I)$, where the log-variance is clipped to a bounded range to ensure numerical stability. The decoder then expands this latent representation through fully connected layers of dimensions 512 and 1024, both with batch normalization and ReLU activations, before projecting to $N \times 3$ output coordinates, where $N = 4096$ is the number of points per sample.
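The sampling step can be illustrated with a short NumPy sketch of the standard reparameterization trick. The clipping bounds used here are placeholders, since the actual range is not preserved in this text, and `sample_latent` is an illustrative name.

```python
import numpy as np


def sample_latent(mu, log_var, rng, clip_range=(-10.0, 10.0)):
    """Draw z = mu + sigma * eps with eps ~ N(0, I).

    clip_range is a placeholder assumption, not the value used in the study.
    """
    log_var = np.clip(log_var, *clip_range)   # keeps exp() well-behaved
    sigma = np.exp(0.5 * log_var)
    eps = rng.standard_normal(mu.shape)
    return mu + sigma * eps


rng = np.random.default_rng(0)
mu = np.zeros((8, 128))                       # batch of 8, 128-dim latent
extreme_log_var = np.full((8, 128), 1000.0)   # would overflow without clipping
z = sample_latent(mu, extreme_log_var, rng)
```

Because the randomness is isolated in `eps`, gradients can flow through `mu` and `log_var` when the same expression is written in a deep learning framework.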

FoldingNet (FN): As mentioned in Section 3, the encoder follows the PointNet structure with three 1D convolutional layers (64, 128, and 512 filters) followed by global max pooling and a fully connected layer that produces a 128-dimensional latent code. The decoder begins with a fixed 2D grid of predefined size and coordinate range. This grid is tiled across the batch and concatenated with the expanded latent vector at each point. The concatenated representation is then processed through two fully connected layers with 512 neurons and ReLU activation, progressively folding the 2D grid into 3D space. The final layer outputs 3 coordinates per point, reconstructing the target point cloud through this learned deformation process.
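The construction of the decoder input (grid tiling and concatenation with the latent code) can be sketched as follows. The grid side of 64 (giving 4096 points) and the coordinate range [-1, 1] are assumptions made for illustration, and both function names are hypothetical.

```python
import numpy as np


def make_grid(side=64, low=-1.0, high=1.0):
    """Fixed 2D grid with side*side points (size and range are assumed)."""
    axis = np.linspace(low, high, side)
    u, v = np.meshgrid(axis, axis, indexing="ij")
    return np.stack([u.ravel(), v.ravel()], axis=-1)        # (side*side, 2)


def folding_input(latent, grid):
    """Tile the grid over the batch and append the latent code to each point."""
    batch, dim = latent.shape
    n_points = grid.shape[0]
    tiled_grid = np.broadcast_to(grid, (batch, n_points, 2))
    tiled_code = np.broadcast_to(latent[:, None, :], (batch, n_points, dim))
    return np.concatenate([tiled_grid, tiled_code], axis=-1)


rng = np.random.default_rng(0)
latent = rng.standard_normal((8, 128))     # batch of 8 latent codes
grid = make_grid()
decoder_input = folding_input(latent, grid)  # shape (8, 4096, 130)
```

Each row of `decoder_input` pairs one grid location with the shape code, which is what the fully connected folding layers then map to a 3D coordinate.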

Dynamic Graph CNN (DGCNN): Consistent with the formulation in Section 3, the encoder constructs dynamic graphs at each layer by computing edge features between points and their $k$-nearest neighbors in feature space. Four edge convolutional layers with 64, 64, 128, and 256 filters are applied, where each EdgeConv operation computes $x_i' = \max_{j \in \mathcal{N}(i)} h_\Theta(x_i, x_j - x_i)$. After global max pooling over all point features, a fully connected layer produces the 128-dimensional latent vector $z$. The decoder consists of two fully connected layers with 512 and 1024 neurons, followed by a final projection to $N \times 3$ coordinates. Although the decoder does not employ graph convolutions, the rich latent representation learned through dynamic neighborhood aggregation in the encoder enables accurate reconstruction.
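A minimal NumPy sketch of one edge convolution is given below, assuming the usual DGCNN edge feature built from a point and the offset to each of its k nearest neighbors, followed by a shared linear layer, ReLU, and max aggregation. Excluding a point from its own neighborhood and the random weights are illustrative choices, not details taken from the study.

```python
import numpy as np


def knn_indices(features, k):
    """Indices of the k nearest neighbours of each point in feature space."""
    features = np.asarray(features, dtype=float)
    d = ((features[:, None, :] - features[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d, np.inf)               # assumption: exclude the point itself
    return np.argsort(d, axis=1)[:, :k]


def edge_features(features, k):
    """Concatenate each point with the offsets to its neighbours: (N, k, 2C)."""
    features = np.asarray(features, dtype=float)
    idx = knn_indices(features, k)
    neighbours = features[idx]                               # (N, k, C)
    centre = np.repeat(features[:, None, :], k, axis=1)      # (N, k, C)
    return np.concatenate([centre, neighbours - centre], axis=-1)


def edge_conv(features, weight, k):
    """Shared linear layer + ReLU on every edge, then max over neighbours."""
    edges = edge_features(features, k)
    activated = np.maximum(edges @ weight, 0.0)
    return activated.max(axis=1)                             # (N, F)


rng = np.random.default_rng(0)
points = rng.standard_normal((32, 3))
weight = rng.standard_normal((6, 64)) * 0.1   # random stand-in for learned weights
out = edge_conv(points, weight, k=4)          # shape (32, 64)
```

Calling `edge_conv` again on `out` recomputes the neighborhoods in the new feature space, which is what makes the graph dynamic.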

PointNet++ Autoencoder (PN++AE): Following the architecture design mentioned in Section 3, the encoder employs three set abstraction layers. The first layer uses Farthest Point Sampling (FPS) to select 1024 centroids, groups each with its $k$ nearest neighbors, and applies 1D convolutions with 64, 64, and 128 filters to compute local features. The second layer downsamples to 256 centroids with the same grouping strategy, applying convolutions with 128, 128, and 256 filters. The third layer performs global aggregation with convolutions of 256, 512, and 512 filters, producing a global feature vector. This global representation is mapped through a fully connected layer with 512 neurons and batch normalization to obtain the 128-dimensional latent code. The decoder mirrors the structure used in previous models, employing fully connected layers with 1024 and 2048 neurons, each followed by batch normalization and ReLU activation, before projecting to the final $N \times 3$ output. This hierarchical encoding strategy enables the model to capture multi-scale geometric information for accurate reconstruction.
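Farthest Point Sampling, used by the set abstraction layers to choose centroids, can be sketched with a simple greedy loop. This is a generic reference version rather than the implementation used in the experiments; the starting index is an arbitrary choice.

```python
import numpy as np


def farthest_point_sampling(points, n_samples, start=0):
    """Greedily pick points that maximise the distance to those already chosen."""
    n = points.shape[0]
    selected = np.empty(n_samples, dtype=int)
    min_dist = np.full(n, np.inf)   # squared distance to nearest selected point
    current = start
    for i in range(n_samples):
        selected[i] = current
        d = np.sum((points - points[current]) ** 2, axis=1)
        min_dist = np.minimum(min_dist, d)
        current = int(np.argmax(min_dist))
    return selected


rng = np.random.default_rng(0)
cloud = rng.uniform(-1.0, 1.0, size=(4096, 3))
centroid_idx = farthest_point_sampling(cloud, 1024)   # first abstraction level
centroids = cloud[centroid_idx]
```

Compared with uniform random sampling, this selection covers the shape more evenly, which helps preserve thin or sparsely scanned regions.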

Isolation Forest: To classify reconstructed samples as defective or non-defective, we apply the Isolation Forest algorithm to the reconstruction errors produced by each model. For every model and reference part, we first compute the Chamfer Distance (CD) and Earth Mover’s Distance (EMD) between the input and its reconstruction on the training (defect-free) set and the test (defective) set, storing these values as two-dimensional feature vectors. The Isolation Forest is then trained exclusively on the error distributions of normal samples, treating the (CD, EMD) pairs as input features in an unsupervised setting.

Once fitted, the model is evaluated on the combined set of normal and defective samples by predicting anomaly labels and computing an anomaly score for each observation. To study the sensitivity of the detector, we sweep the contamination parameter from 0.001 to 0.5 in steps of 0.001, generating a separate model and evaluation for each value. For every contamination level, we report precision, recall, F1-score, and ROC-AUC, together with confusion matrices. This analysis quantifies how the choice of contamination threshold affects the trade-off between correctly identifying overshooting defects and avoiding false alarms across the different reconstruction architectures.
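The sweep can be sketched with scikit-learn as shown below. The (CD, EMD) features are synthetic stand-ins generated for illustration, not data from the study, and a coarse grid of contamination values replaces the 0.001 step to keep the example fast.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.metrics import f1_score, precision_score, recall_score, roc_auc_score

rng = np.random.default_rng(0)

# Synthetic (CD, EMD) features standing in for real reconstruction errors.
normal_train = rng.normal([0.010, 0.050], [0.002, 0.005], size=(300, 2))
normal_eval = rng.normal([0.010, 0.050], [0.002, 0.005], size=(100, 2))
defect_eval = rng.normal([0.030, 0.120], [0.004, 0.010], size=(100, 2))

X_eval = np.vstack([normal_eval, defect_eval])
y_true = np.concatenate([np.zeros(100, dtype=int), np.ones(100, dtype=int)])

results = []
for contamination in (0.001, 0.01, 0.05, 0.1, 0.2, 0.3, 0.4, 0.5):
    forest = IsolationForest(contamination=contamination, random_state=0)
    forest.fit(normal_train)                      # defect-free features only
    # predict() returns -1 for anomalies; map that to label 1 (defective).
    y_pred = (forest.predict(X_eval) == -1).astype(int)
    # score_samples() is lower for anomalies, so negate it for ROC-AUC.
    anomaly_score = -forest.score_samples(X_eval)
    results.append({
        "contamination": contamination,
        "precision": precision_score(y_true, y_pred, zero_division=0),
        "recall": recall_score(y_true, y_pred, zero_division=0),
        "f1": f1_score(y_true, y_pred, zero_division=0),
        "roc_auc": roc_auc_score(y_true, anomaly_score),
    })

best = max(results, key=lambda r: r["f1"])
```

In scikit-learn, `contamination` only moves the decision threshold on the anomaly score, so ROC-AUC depends on the fitted trees rather than on the threshold, while precision, recall, and F1 change with it.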

5.2. Results and Analysis

In this section, we present the experimental results for all combinations of reconstruction model and sub-assembly. As previously mentioned, we considered three different sub-assemblies, which we refer to as objects 206, 221, and 301. These identifiers were randomly assigned and do not correspond to any internal naming convention; their sole purpose is to preserve the requested confidentiality of the industrial data.

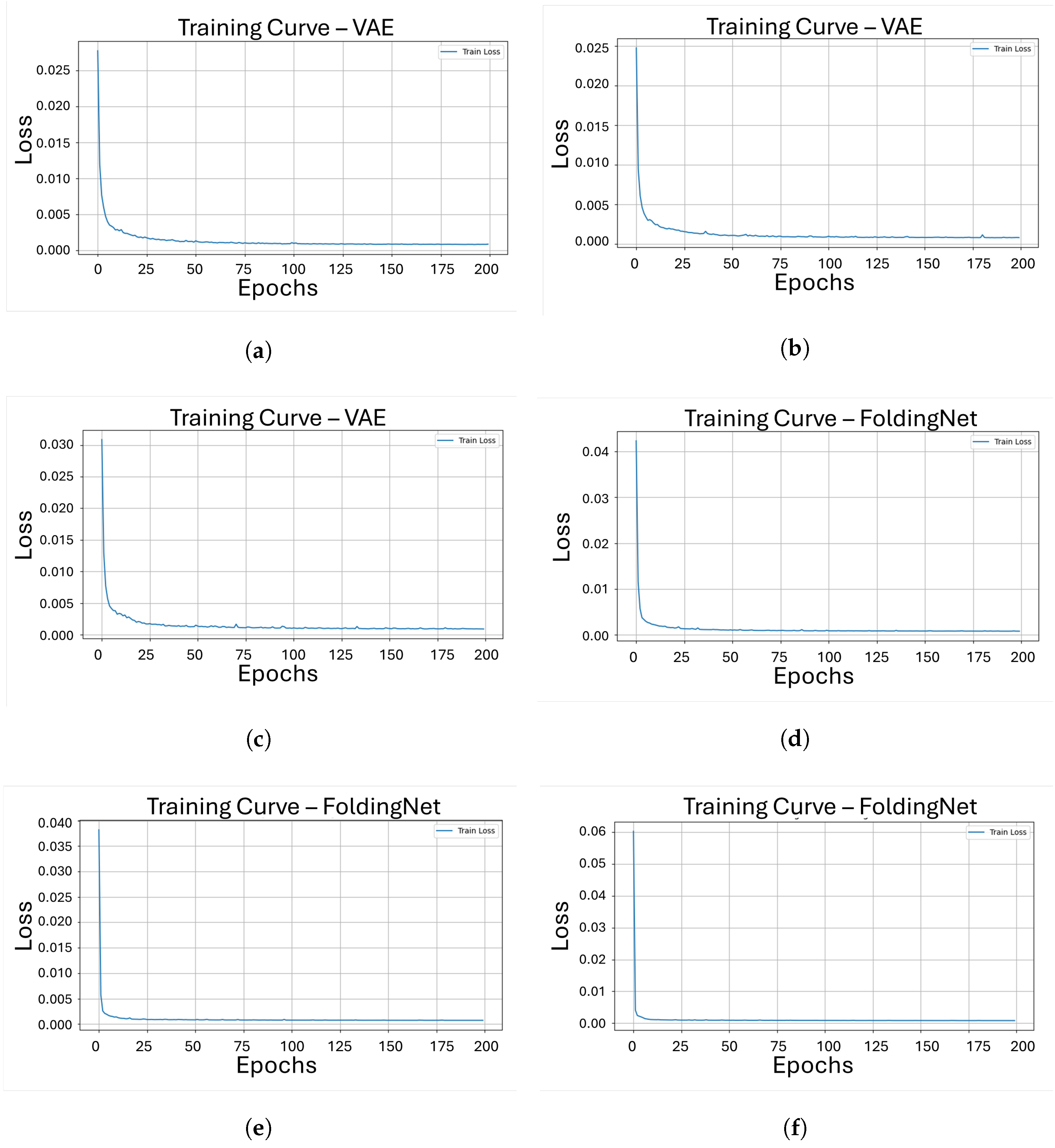

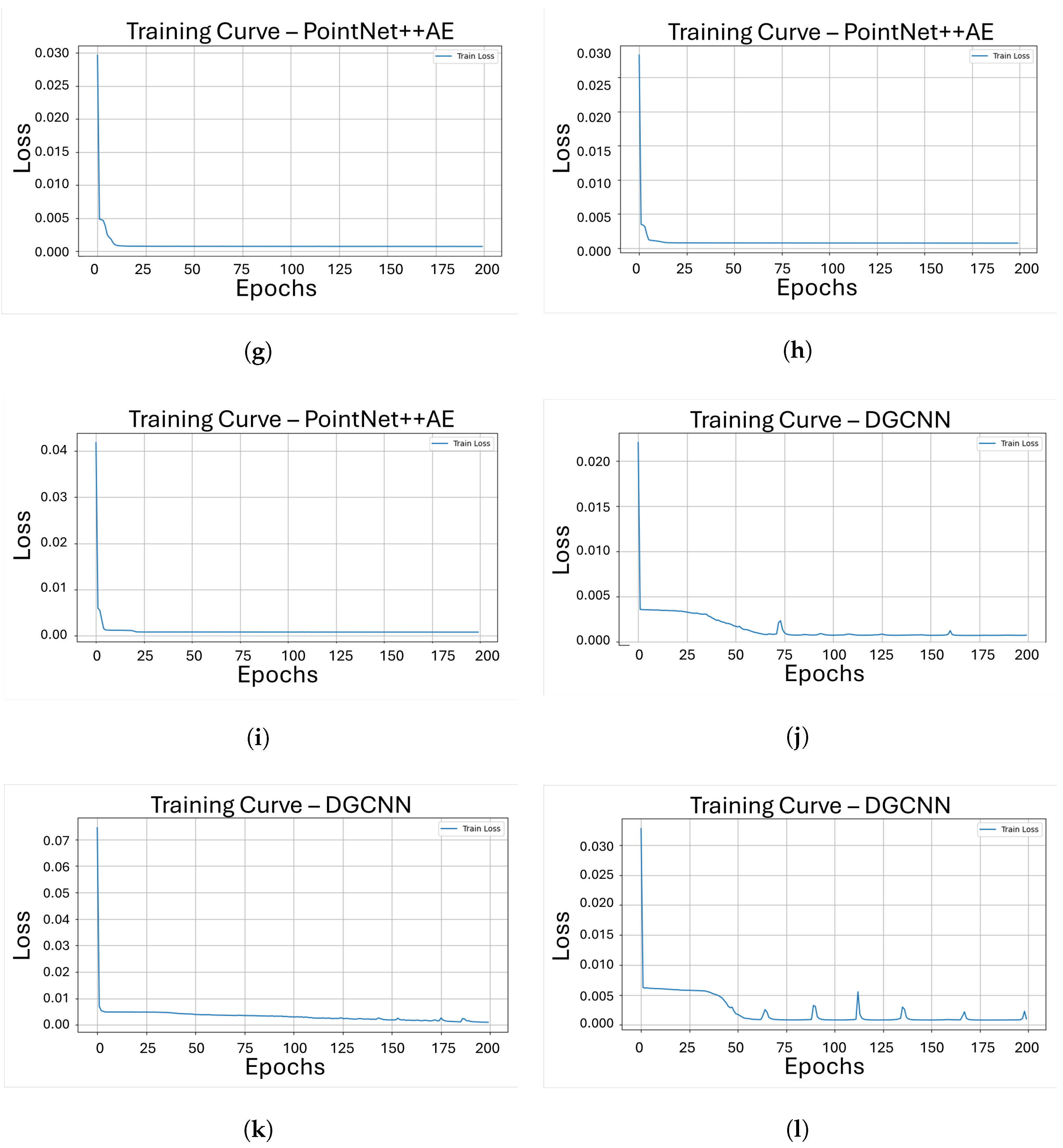

Figure 4 shows the evolution of the training loss, measured with the Chamfer Distance, over 200 epochs for each model-object pair. Figure 4a–c correspond to the VAE model trained on objects 206, 221, and 301, respectively; Figure 4d–f show the same curves for FoldingNet; Figure 4g–i illustrate the behavior of the PointNet++ Autoencoder; and Figure 4j–l show the results for DGCNN. Across all cases, the loss generally decreases as the number of epochs increases, indicating that the models progressively improve their reconstruction capability under the common training setup.

From the loss curves in Figure 4, it can be observed that the VAE, FoldingNet, and PointNet++ Autoencoder exhibit a broadly similar training behavior, with the Chamfer Distance steadily decreasing as the number of epochs increases and converging to low loss values relatively early in the training process. In contrast, the DGCNN shows a less stable trajectory, particularly for objects 206 and 301, where the loss occasionally increases during training, producing noticeable spikes before continuing to decrease. This behavior suggests that the DGCNN model requires more epochs to achieve comparable levels of reconstruction error and that its dynamic graph construction makes the training process more challenging compared to other architectures under the same conditions.

We also evaluated the computational characteristics of the four reconstruction models by comparing their training time, number of parameters, and GPU memory usage. Table 1 summarizes these aspects and provides a quantitative view of the relation between model complexity and computational cost.

The average training time represents the mean training duration across the three objects (206, 221, and 301) for each model. Among the evaluated architectures, the VAE is the fastest to train (347.50 s on average), followed by FoldingNet (437.27 s) and DGCNN (554.35 s), while PointNet++AE requires noticeably more time (935.29 s). This tendency is consistent with the relative complexity of the architectures, since PointNet++AE incorporates multiple hierarchical set abstraction layers and a larger number of parameters.

The number of trainable parameters reflects the capacity of each model to learn complex representations from the data. PointNet++AE exhibits the highest parameter count, with 28,403,776 trainable parameters, followed by DGCNN with 14,220,800 and the VAE with 13,426,688 parameters. In contrast, FoldingNet is substantially more compact, with only 602,755 trainable parameters, which explains in part its competitive training time despite operating on point clouds of 4096 points. The non-trainable parameters are negligible in all cases, appearing only in the VAE and PointNet++AE due to the use of batch normalization layers.

Regarding computational load, all models were trained on the same NVIDIA RTX A6000 GPU (Nvidia, Santa Clara, CA, USA) and showed similar VRAM usage, in the range of approximately 45–47 GB, as shown in Table 1. PointNet++AE and FoldingNet show slightly higher memory consumption than the VAE and DGCNN, which is consistent with their decoder designs and internal feature representations. Overall, these results highlight that while PointNet++AE offers the highest representational capacity, it does so at the cost of increased training time, whereas FoldingNet provides a more lightweight alternative with lower parameter count and moderate computational demands.

After training all reconstruction models, we evaluate their effectiveness in the overshooting detection task. To this end, we apply the Isolation Forest algorithm to the reconstruction errors (Chamfer Distance and Earth Mover’s Distance) of each model/object combination and study how the detection performance changes with the contamination parameter.

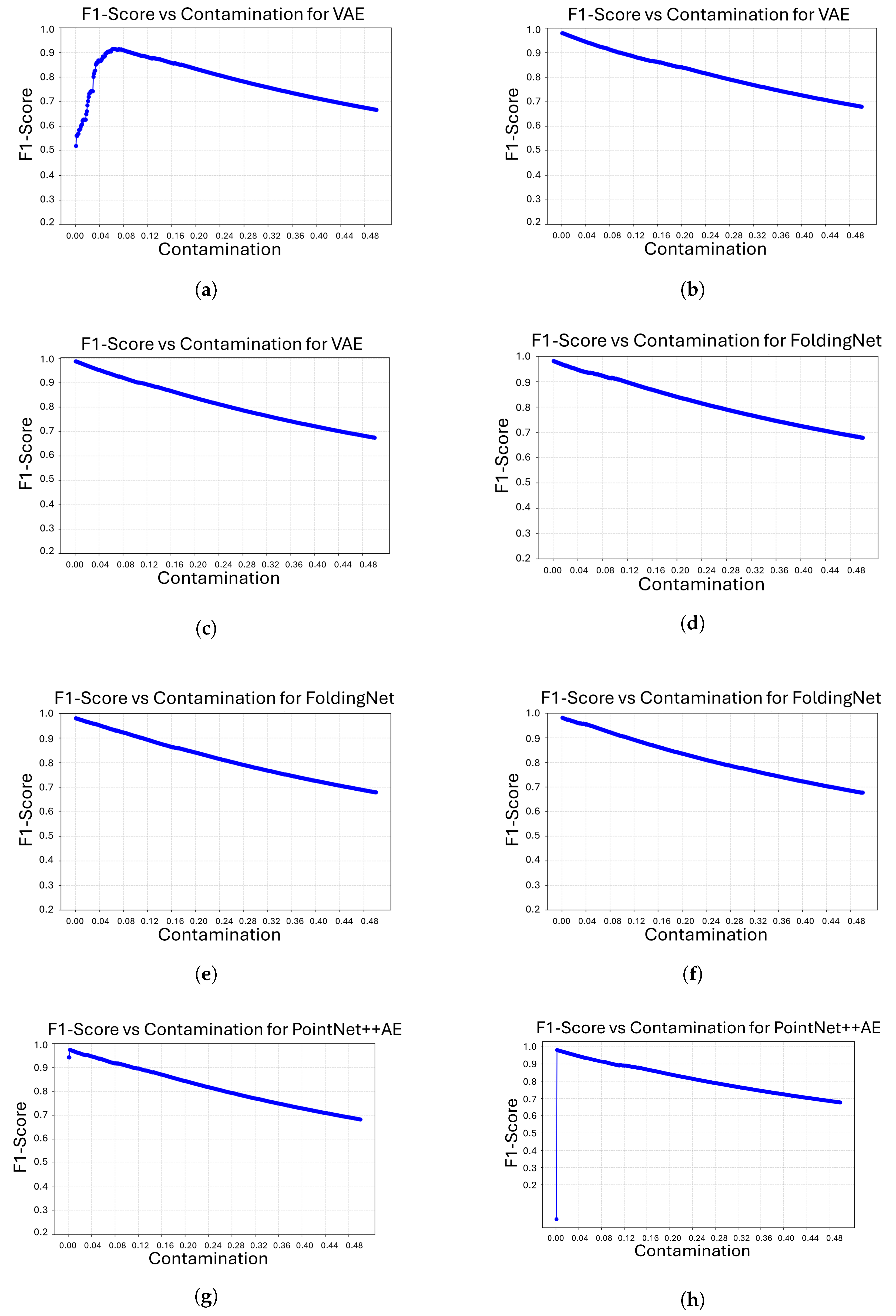

Figure 5 shows the evolution of the F1-score as a function of the contamination parameter for all model/object combinations. In general, all architectures achieve their highest F1 values at relatively low contamination levels, after which performance gradually deteriorates as the assumed proportion of anomalies increases. This behavior is consistent with the role of contamination as a decision threshold in the Isolation Forest algorithm. Overly large contamination values force the classifier to incorrectly label a high number of samples as anomalous, which negatively impacts the balance between precision and recall.

Some differences between models and objects can also be observed. For most cases, the optimal F1-score is reached at very low contamination values, indicating that a conservative anomaly threshold is sufficient to separate defective from non-defective samples. However, the VAE for object 206 requires a slightly higher contamination level to attain its maximum F1-score, suggesting that the reconstruction error distribution for this object is less sharply separated. Similarly, the DGCNN model for objects 221 and, in particular, 206 attains its best F1-scores at higher contamination values than the other architectures, which indicates that its anomaly scores are more dispersed and require a less restrictive threshold to correctly identify overshooting defects.

The best validation performance achieved by each reconstruction model on the three objects is detailed in Table 2. For every model/object pair, we select the contamination value that maximizes the F1-score and report the associated precision, recall, and ROC-AUC. The results show that all architectures can achieve high detection performance, with F1-scores above 0.97 and ROC-AUC values close to 1.0 for objects 221 and 301 in most cases, which indicates a clear distinction between overshooting defects and non-defective parts. FoldingNet and PointNet++AE are the most consistent, attaining F1-scores around 0.98 for all three objects at very low contamination levels (between 0.001 and 0.003). This suggests that their reconstruction error distributions allow Isolation Forest to operate with a conservative anomaly threshold. The VAE shows slightly more variability, especially for object 206, where the optimal F1-score (0.914) is lower than for the other objects and is reached at a higher contamination value of 0.062. This hints at a less distinct separation between normal and defective samples for this geometry. DGCNN shows the strongest contrast across objects: it achieves the highest F1-score (0.991) for object 301, but its performance drops to 0.795 for object 206, and its optimal operating points for objects 206 and 221 require much larger contamination values (0.176 and 0.098, respectively). This behavior is consistent with the more irregular training curves observed earlier and suggests that while DGCNN can perform well on certain shapes, its anomaly scores are less stable across different sub-assemblies than those of FoldingNet and PointNet++AE.

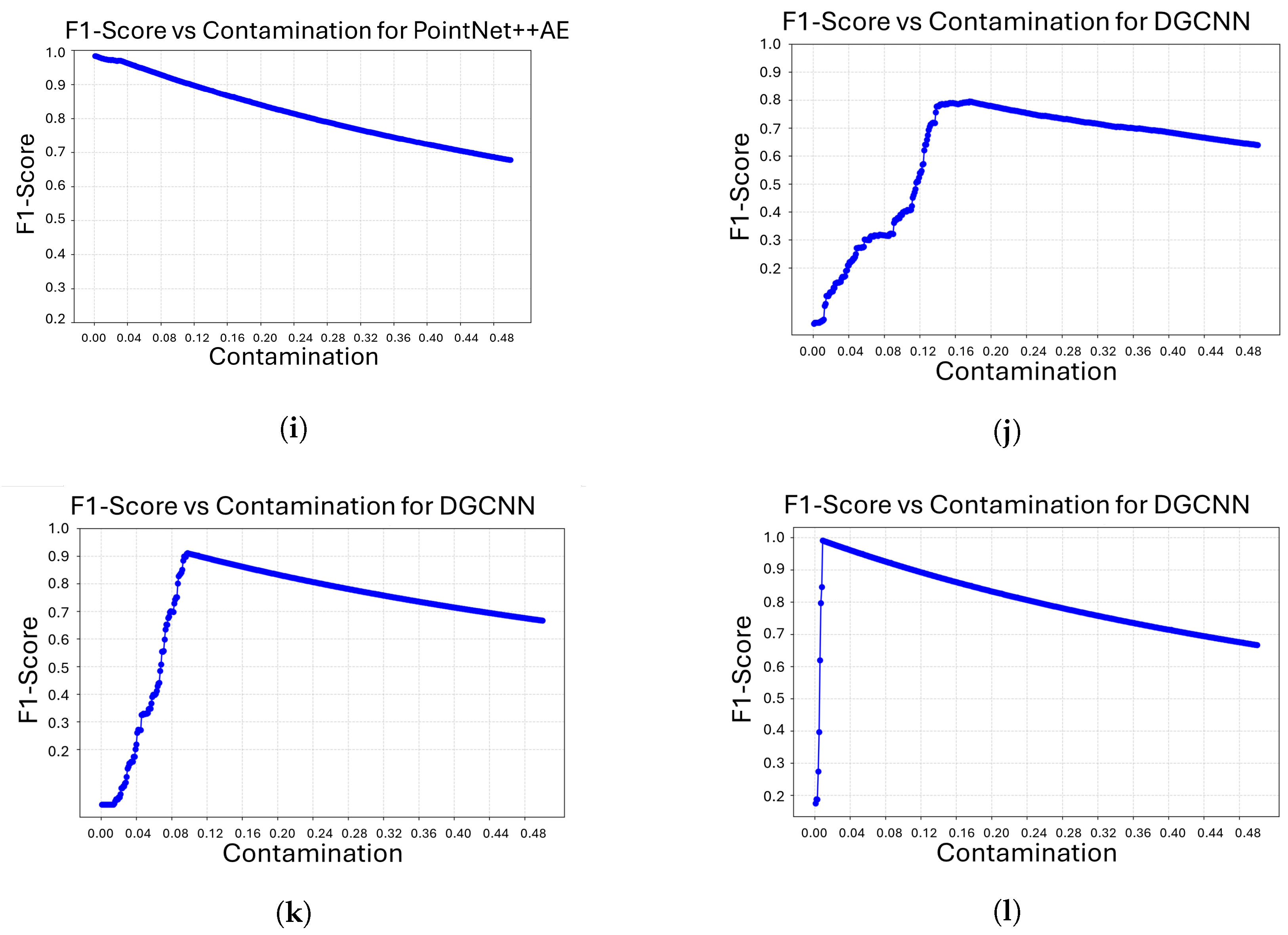

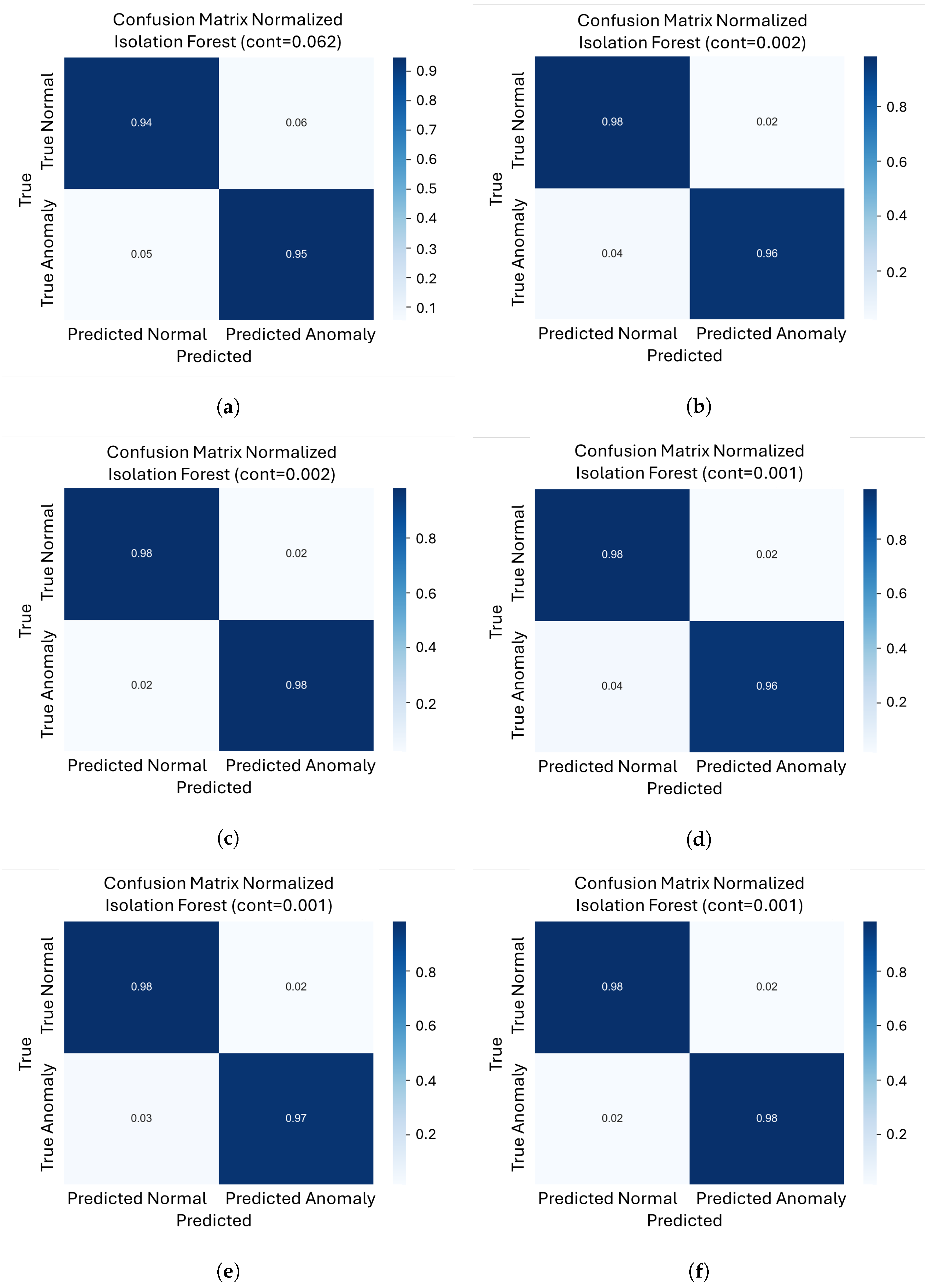

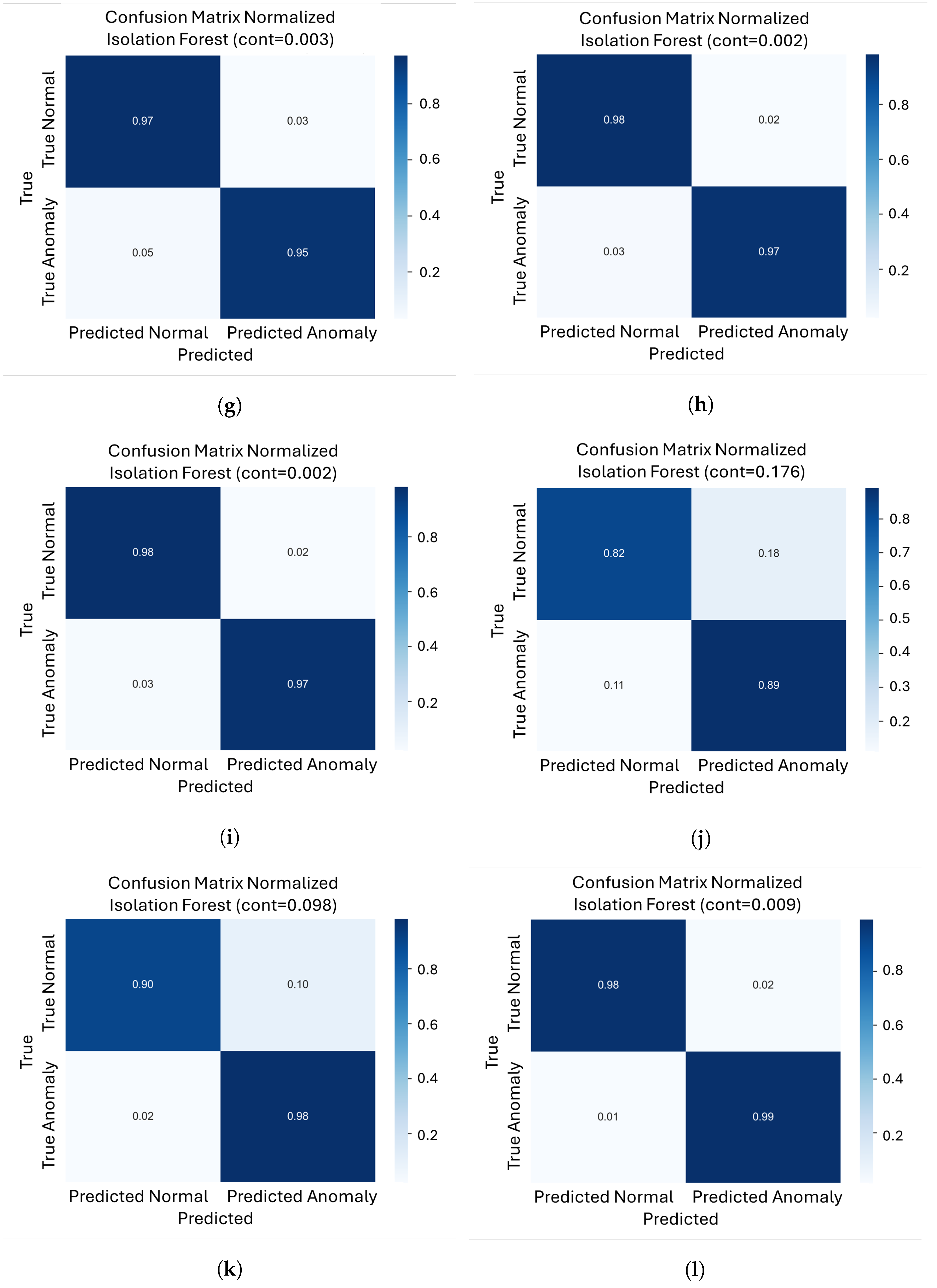

To further evaluate how each model balances false positives and false negatives at its optimal operating point, we analyze the normalized confusion matrices (Figure 6a–l) for every model/object pair. These matrices were computed at the contamination level that maximizes the F1-score for each case.

For the VAE, object 301 shows very low error rates, with roughly 2% false positives and 2% false negatives. Object 221 exhibits similar behavior, with approximately 2% false positives and 4% false negatives. In contrast, object 206 exhibits higher misclassifications, with approximately 6% false positives and 5% false negatives, making it the most challenging case for this model.

For FoldingNet, all three objects exhibit consistently low error rates at very low contamination values. Objects 206, 221, and 301 show a balanced range of false positives (2–3%) and false negatives (3–4%), indicating an optimal compromise between missing defects and incorrectly flagging normal parts.

For PointNet++AE, objects 301 and 221 have approximately 2% false positives and 3% false negatives. Object 206 has a slightly higher error rate, with around 3% false positives and about 5% false negatives.

For DGCNN, object 301 shows a very low false negative rate (around 1%) and a very low false positive rate (around 2%), indicating that almost all defects are detected with few false alarms. However, for object 221, the false positive rate increases to around 10%, while the false negative rate remains at around 2%. The most extreme case is object 206, which has approximately 18% false positives and 11% false negatives.
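For reference, the false positive and false negative percentages discussed above can be derived from binary predictions as in the following sketch. It assumes that the matrices are normalized per true class (each row sums to 1), which is one common convention and may differ from the normalization used for Figure 6. The function name and the label encoding (0 for normal, 1 for defective) are hypothetical.

```python
from typing import Dict, Sequence


def normalized_confusion(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Row-normalized 2x2 confusion matrix for labels 0 (normal) and 1 (defective).

    Assumption: rates are normalized by the number of samples in each true class.
    """
    tn = fp = fn = tp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 0 and pred == 0:
            tn += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 1 and pred == 0:
            fn += 1
        else:
            tp += 1
    n_normal = tn + fp
    n_defect = fn + tp
    return {
        "tn_rate": tn / n_normal if n_normal else 0.0,
        "fp_rate": fp / n_normal if n_normal else 0.0,  # normal parts flagged as defective
        "fn_rate": fn / n_defect if n_defect else 0.0,  # defects that were missed
        "tp_rate": tp / n_defect if n_defect else 0.0,
    }
```

With this convention, a false positive rate of 2% means that 2 out of every 100 non-defective parts are flagged, independently of how many defective parts the validation set contains.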

6. Conclusions and Future Works

Reconstruction-based models have become valuable tools for unsupervised anomaly detection, especially when labeled defective samples are limited. These architectures implicitly capture the typical geometric patterns of the underlying components by learning to encode and reconstruct only normal data. Anomalies are revealed as regions where the reconstruction differs from the input, providing a natural way to highlight defects without supervision. In the context of 3D point clouds, this approach is particularly appealing for quality control because it provides an automated method for identifying geometric deviations directly from the reconstructed shape. This study’s results showed that, when combined with Isolation Forest, reconstruction-based anomaly detection can achieve very high performance for overshooting defects. However, the results also revealed clear differences between architectures in terms of robustness and consistency.

Overall, FoldingNet and PointNet++ Autoencoder produced the most reliable results across the three objects, consistently achieving F1-scores close to 0.98 with very low contamination values. The VAE also delivered strong results for two of the objects but showed a more severe performance decline for the most challenging geometry (206). DGCNN exhibited the greatest variability; it produced the best result for object 301 but performed markedly worse for objects 206 and 221.

Examining each object individually reveals more specific behavior. For object 301, DGCNN achieved the highest F1-score (0.991) and nearly perfect recall (0.998). This indicates that the dynamic graph representation is especially effective at separating normal and defective samples for this particular geometry. The other three models also performed well on this object, with F1-scores above 0.98, but they did not match the combination of recall and overall F1-score achieved by DGCNN. For object 221, FoldingNet and the PointNet++ Autoencoder performed similarly, both achieving F1-scores around 0.981 with high precision and recall. The VAE followed closely behind, while DGCNN required a higher contamination level to reach an F1-score of 0.901. The differences are even more evident for object 206, where FoldingNet achieved the best result (an F1-score of 0.980) with a good balance of precision and recall, followed by the PointNet++ Autoencoder with an F1-score of 0.974. In contrast, the VAE dropped to 0.914 and DGCNN fell to 0.795, confirming the latter’s significant sensitivity to the specific geometry of the sub-assembly.

Taken together, these findings suggest that, although DGCNN can perform very well for specific shapes, such as object 301, its behavior is less predictable across different components. FoldingNet, by contrast, offers a more stable compromise: it consistently ranks among the top performers for all three objects, with high F1-scores, low required contamination, and relatively low model complexity. The PointNet++ Autoencoder also shows strong, consistent performance, particularly for objects 221 and 206, making it a solid alternative when a higher-complexity model is acceptable.

From a practical point of view, our results indicate that FoldingNet is the most suitable default choice for overshooting detection in new shipbuilding sub-assemblies, as it offers a robust trade-off between accuracy, stability across geometries, and model complexity. The PointNet++ Autoencoder can be recommended when a slightly higher computational cost is acceptable in exchange for comparable performance, particularly on geometries similar to objects 206 and 221. DGCNN, given its strong dependence on the underlying geometry, should be reserved for scenarios where its behavior can be validated on shapes close to object 301.

In conclusion, this study showed that reconstruction-based anomaly detection on 3D point clouds, combined with an unsupervised Isolation Forest classifier, is a viable strategy for identifying overshooting defects in shipbuilding sub-assemblies. Beyond the specific numerical results, the study shows the importance of choosing architectures that balance detection performance, stability across different geometries, and computational cost. These results can provide practical information for shipyards and subcontractors interested in integrating 3D reconstruction models into their quality control workflows.

For future work, we first suggest expanding the number and diversity of objects considered, including different types of sub-assemblies and defect geometries, to more thoroughly assess the generalization capability of the reconstruction models. We also suggest training and evaluating the full pipeline directly on point clouds acquired from real shipbuilding components instead of relying solely on 3D-printed representations. In addition, we plan to extend the proposed reconstruction-based approach from component-level defect detection to point-wise or region-wise defect localization on the 3D point cloud, in order to better support rework procedures in complex shipbuilding environments. Finally, we suggest integrating the best-performing models into an actual industrial inspection workflow to validate their performance under real-time constraints and evaluate their impact on shipyard quality control processes.