Hierarchical Self-Distillation with Attention for Class-Imbalanced Acoustic Event Classification in Elevators

Abstract

1. Introduction

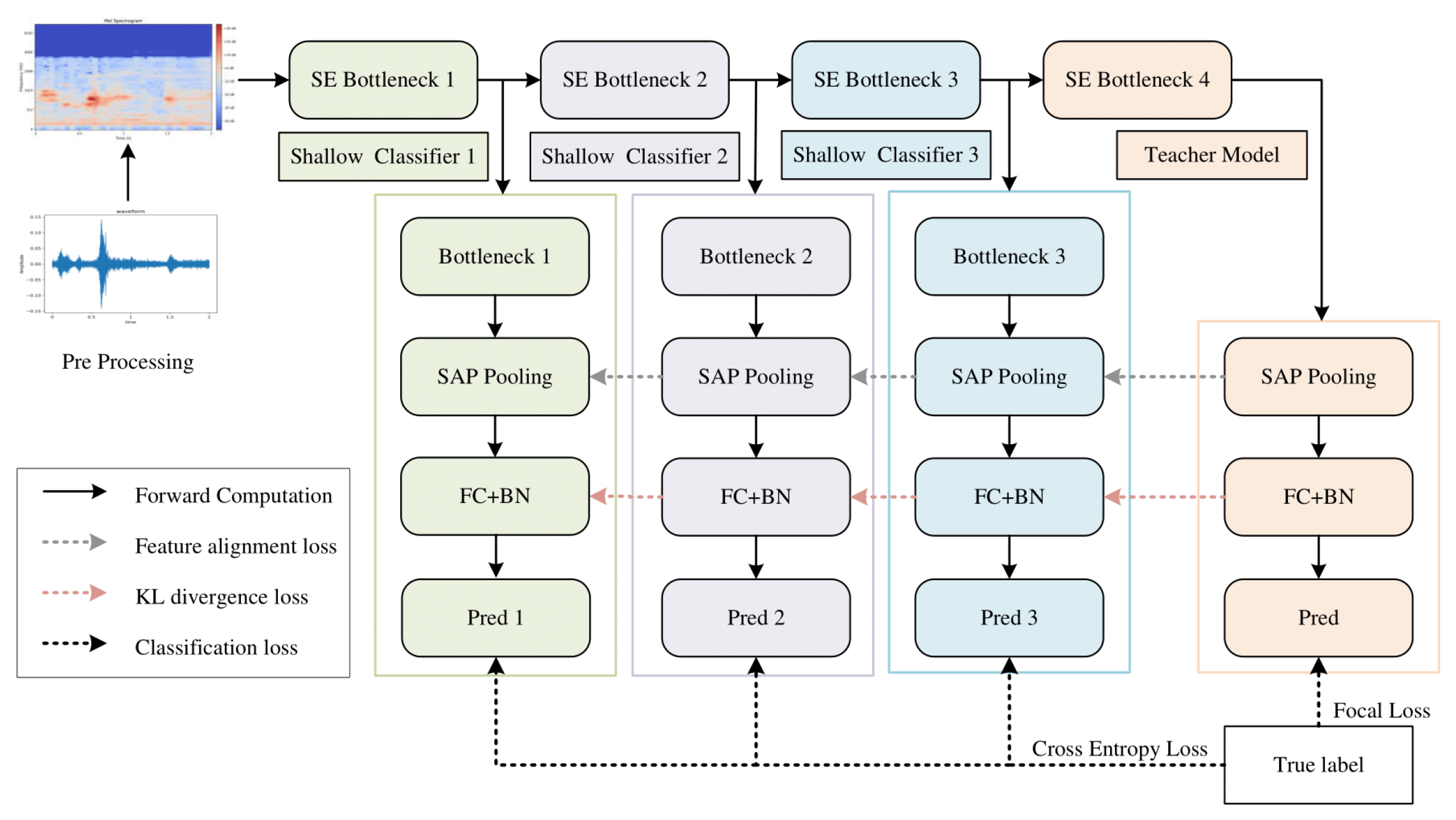

- 1.

- Shallow classifier branches are incorporated into three intermediate layers of the ResNetSE backbone, designating the deepest layer as the teacher and the shallower ones as students. The knowledge is then transferred from the teacher to the students via a combination of the KL divergence loss and the feature alignment loss.

- 2.

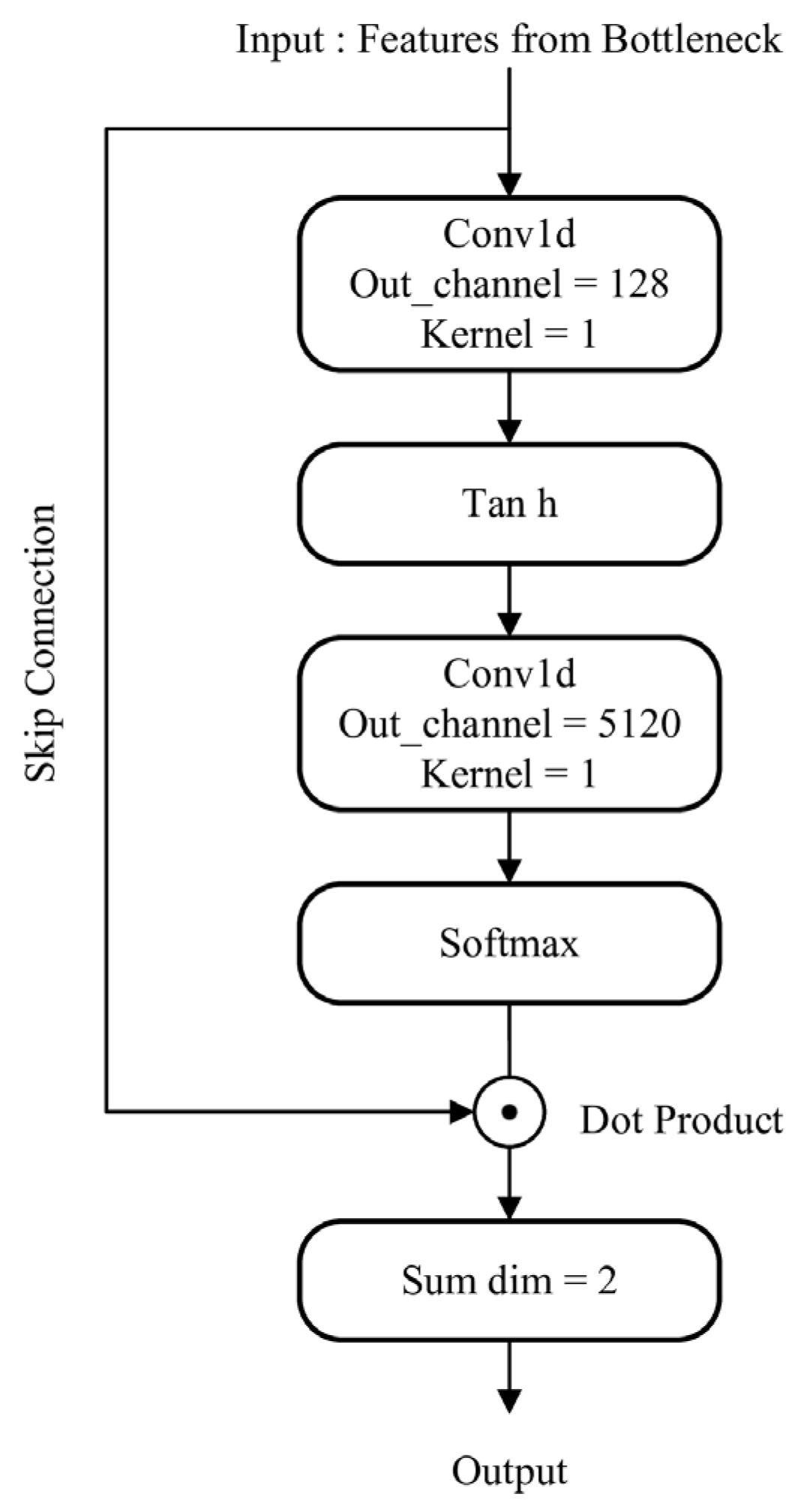

- To address the mixed acoustic interference in elevator environments, we introduced a self-attentive temporal pooling layer into the classifier network architecture, which enhances the ability to capture complex audio features through an adaptive weighting mechanism and effectively solves the problem of mixed interference of sound signals.

- 3.

- To mitigate data imbalance, the teacher network utilizes a hierarchical loss function derived from Focal Loss, which yields a marked gain in the model’s discriminative power for minority classes by reassigning importance to challenging samples.

- 4.

- Rigorous testing across two benchmark audio datasets—UrbanSound8K and an elevator audio dataset—demonstrates that the self-distillation approach and the two introduced modules are highly effective.
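
The teacher-to-student transfer described in the first contribution can be illustrated with a minimal sketch. It assumes a temperature-softened KL divergence between teacher and student class distributions and a mean-squared feature alignment term. The function names, the temperature, and the weights `alpha` and `beta` are hypothetical and are not taken from the paper. A classification loss on the ground-truth labels would be added on top of these two terms.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def feature_alignment_loss(student_feat, teacher_feat):
    """Mean squared error between aligned student and teacher features."""
    return sum((s - t) ** 2 for s, t in zip(student_feat, teacher_feat)) / len(teacher_feat)


def student_distill_loss(student_logits, teacher_logits, student_feat, teacher_feat,
                         temperature=3.0, alpha=0.5, beta=0.1):
    """Weighted sum of the KL term and the feature alignment term.

    temperature, alpha and beta are illustrative values (assumptions).
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl_term = kl_divergence(p_teacher, p_student)
    fa_term = feature_alignment_loss(student_feat, teacher_feat)
    return alpha * kl_term + beta * fa_term


# Toy example: a student that disagrees with the teacher gets a positive loss.
teacher_logits = [2.0, 0.5, -1.0]
student_logits = [0.0, 0.0, 0.0]
loss = student_distill_loss(student_logits, teacher_logits, [0.0, 0.0], [0.1, 0.2])
print(f"distillation loss: {loss:.4f}")
```

Both terms vanish when the student reproduces the teacher's logits and features exactly, so the loss only penalizes disagreement between a shallow branch and the deepest classifier.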

2. Related Works

2.1. Environmental Sound Classification

2.2. Knowledge Distillation

3. Methodology

3.1. Overview of ResNetSE-SD

3.2. Shallow Classifiers

3.3. Loss of Self-Distillation

4. Experiments and Results

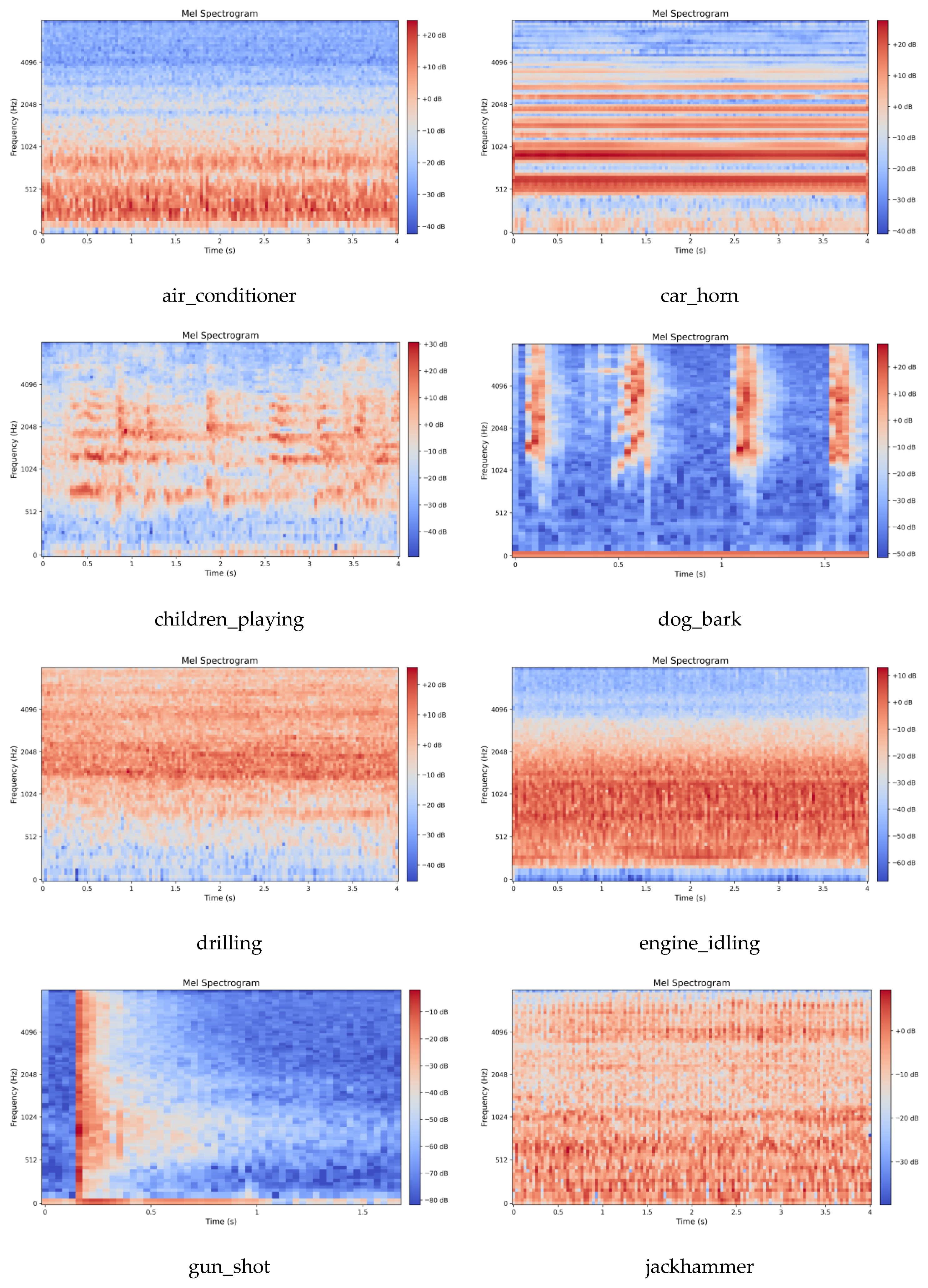

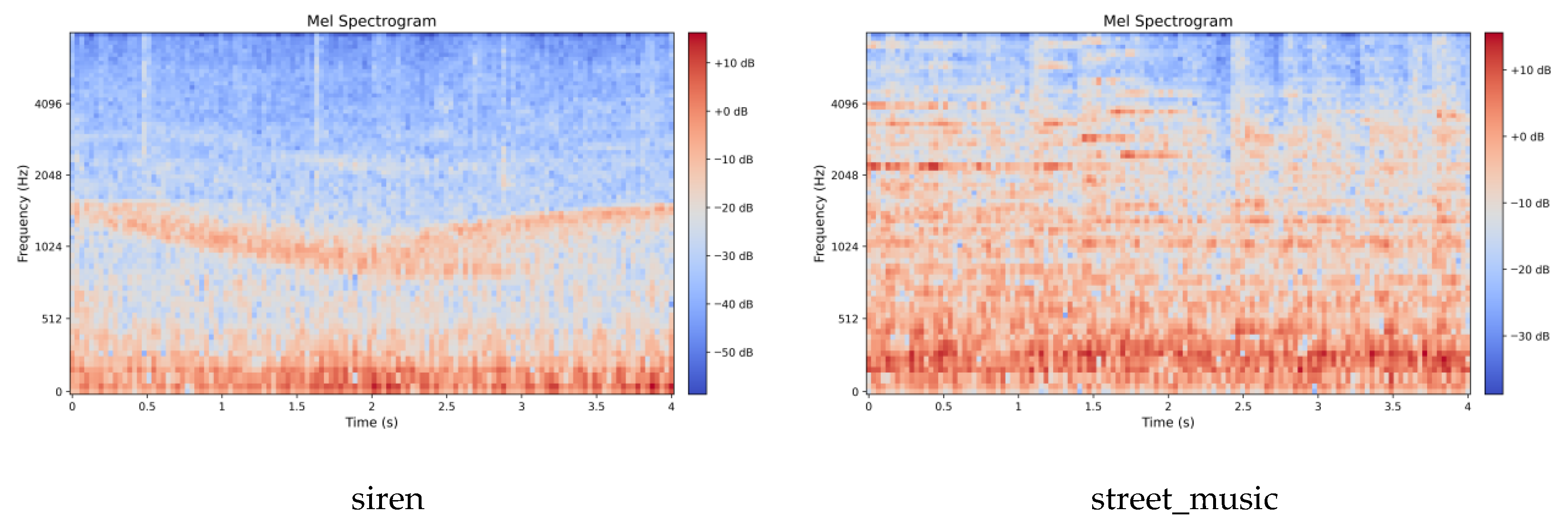

4.1. Datasets

4.2. Experiment Settings

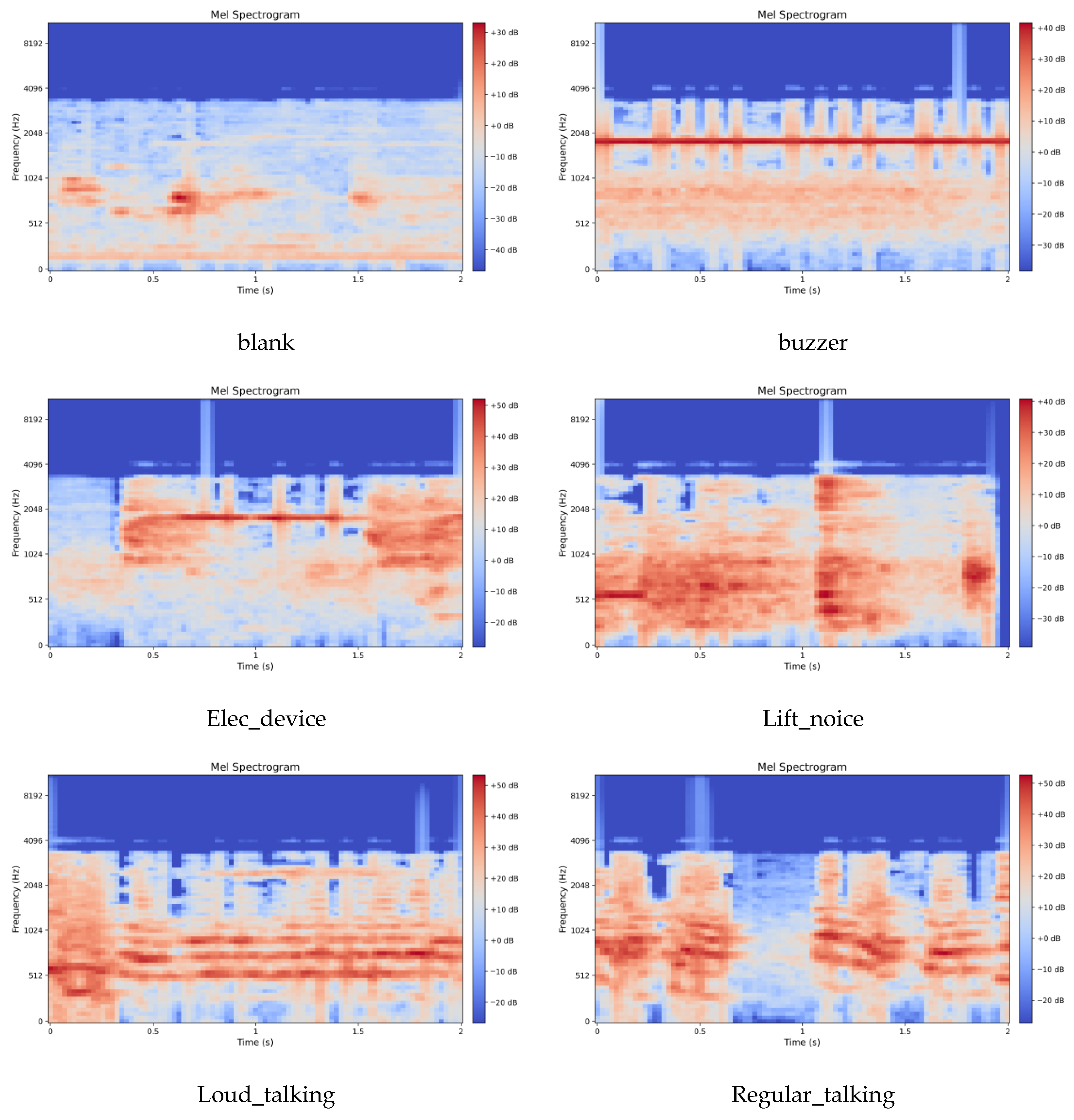

4.3. Feature Extraction

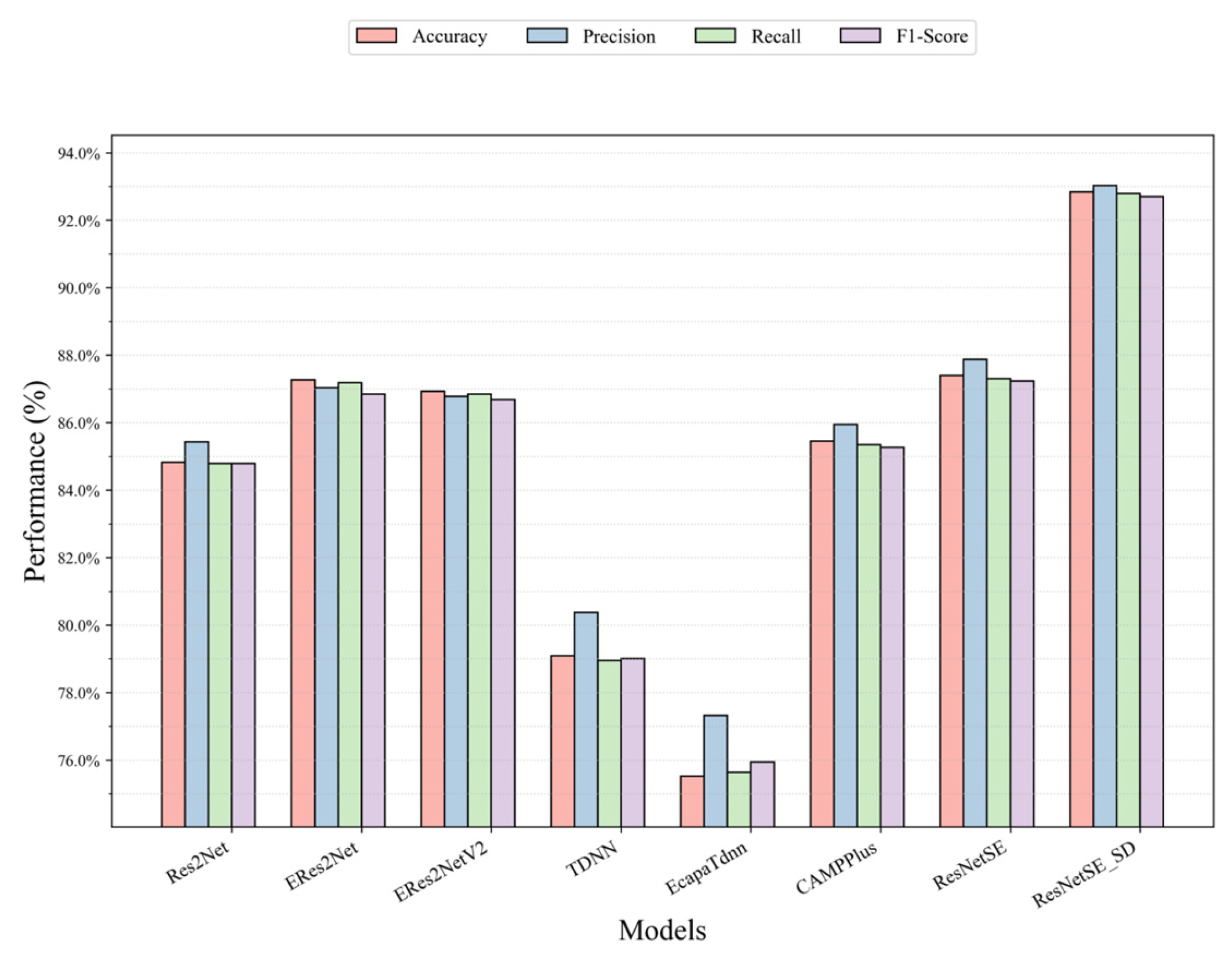

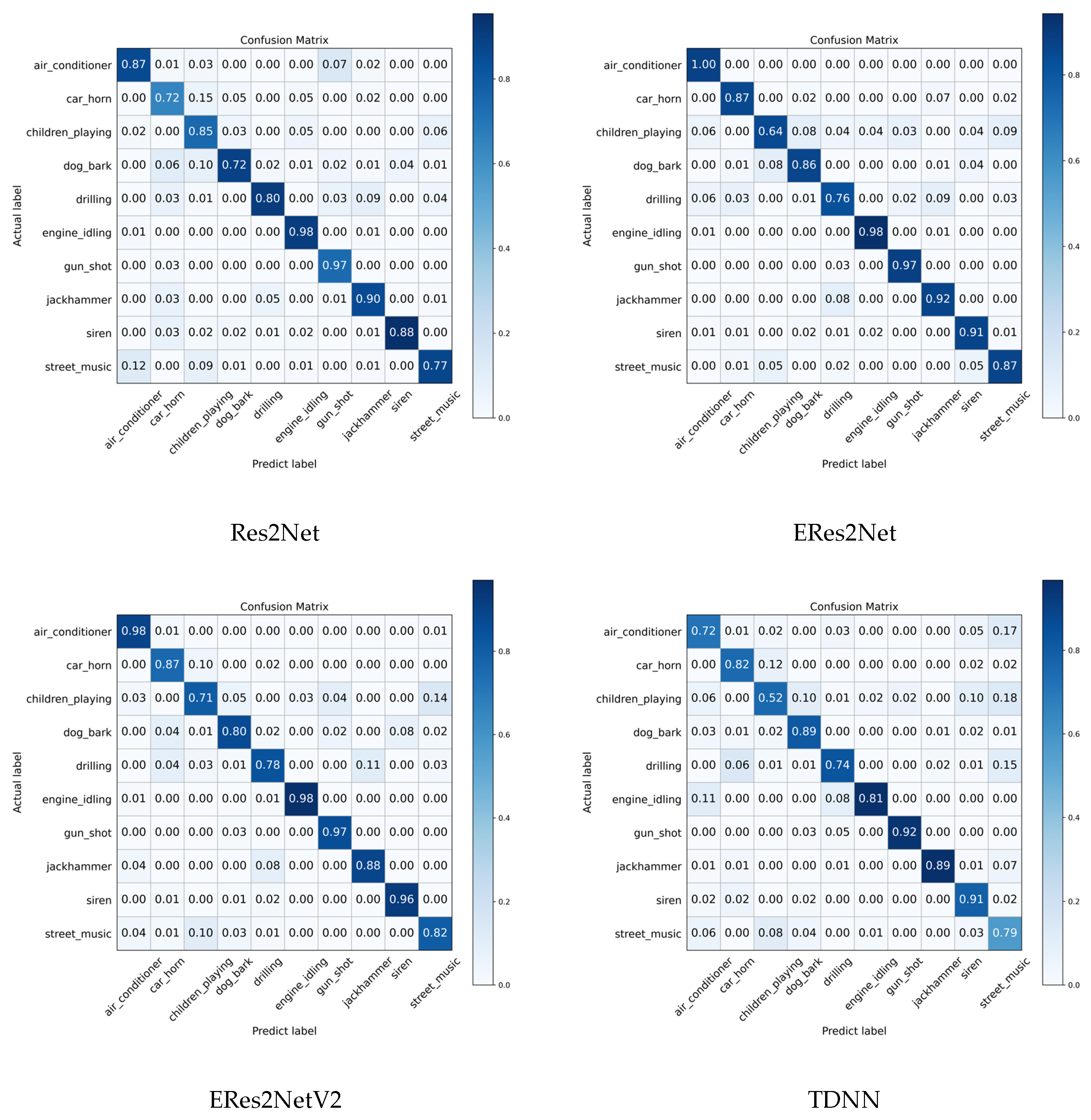

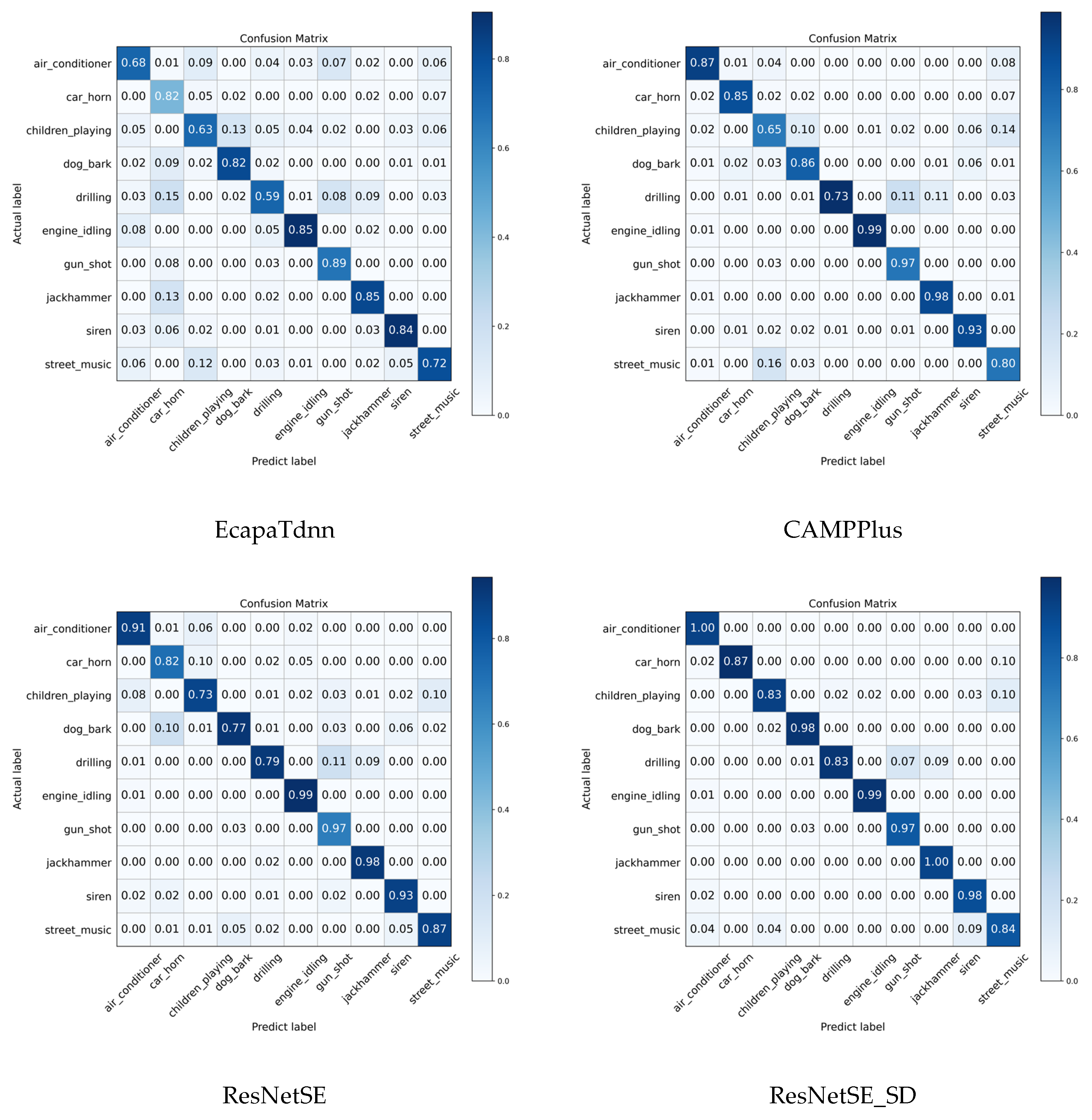

4.4. Results of the UrbanSound8K Dataset

4.5. Results of the Elevator Audio Dataset

4.6. Ablation Study

4.6.1. Impact of Pooling Layers

4.6.2. Influence of Different Loss Functions

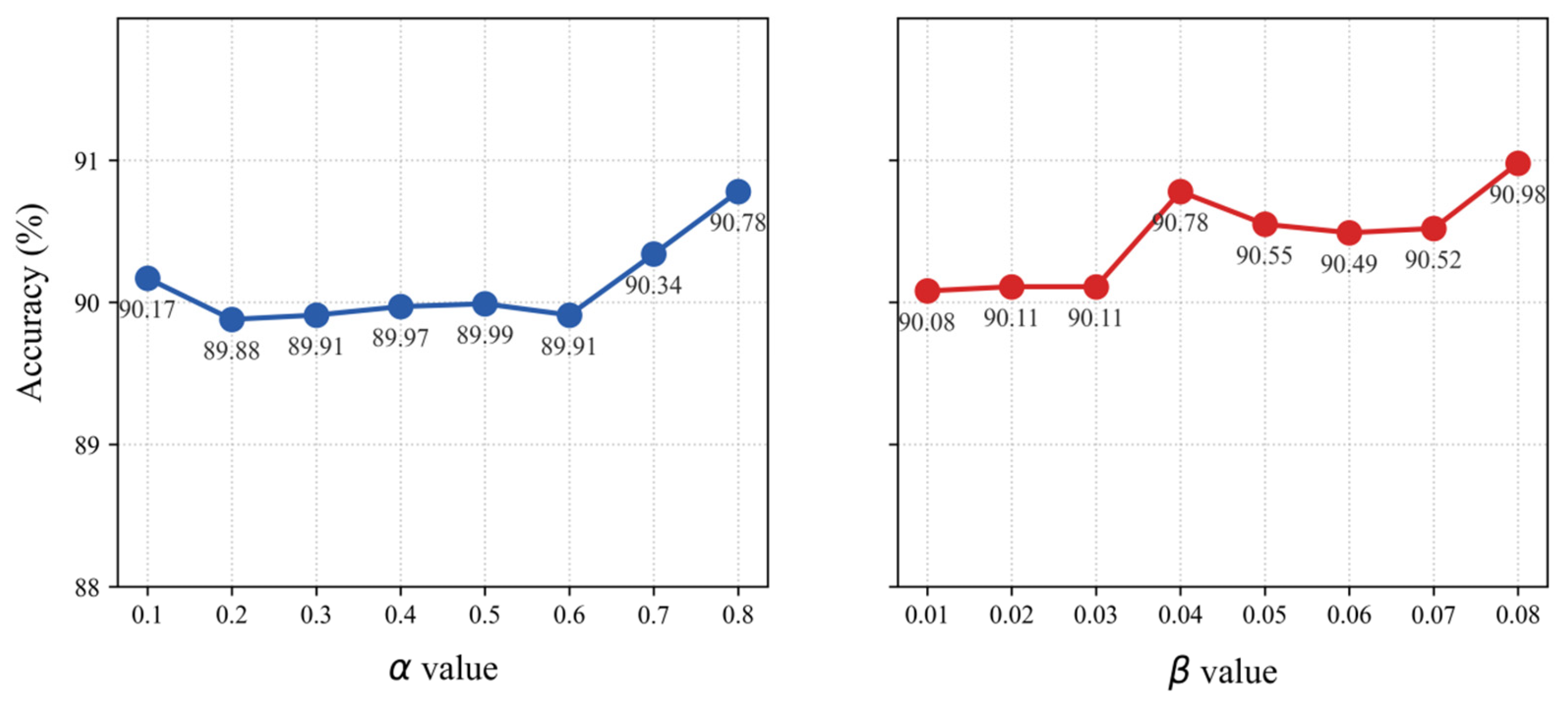

4.7. Hyper-Parameters Sensitivity Study

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Fold | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| 1 | 95.45% | 96.34% | 95.45% | 95.27% |

| 2 | 94.79% | 94.99% | 94.38% | 94.44% |

| 3 | 90.63% | 91.13% | 90.32% | 90.21% |

| 4 | 97.12% | 97.40% | 96.97% | 96.95% |

| 5 | 96.88% | 97.38% | 96.81% | 96.87% |

| 6 | 95.45% | 97.10% | 95.18% | 95.58% |

| 7 | 97.73% | 98.02% | 97.62% | 97.67% |

| 8 | 96.59% | 96.84% | 96.30% | 96.26% |

| 9 | 95.45% | 95.51% | 95.12% | 95.02% |

| 10 | 96.59% | 96.93% | 96.43% | 96.31% |
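
As a quick arithmetic check, the per-fold accuracies in the table above can be averaged. The short sketch below uses only the accuracy column; the variable names are illustrative. The resulting mean agrees with the 10-fold cross-validation accuracy of 95.67% listed in the evaluation-protocol table.

```python
from statistics import mean, stdev

# Accuracy (%) for folds 1 to 10, copied from the table above.
fold_accuracy = [95.45, 94.79, 90.63, 97.12, 96.88,
                 95.45, 97.73, 96.59, 95.45, 96.59]

mean_accuracy = mean(fold_accuracy)
std_accuracy = stdev(fold_accuracy)  # sample standard deviation across folds

print(f"mean accuracy: {mean_accuracy:.2f}%")
print(f"std across folds: {std_accuracy:.2f}")
```

The standard deviation is included because fold 3 is noticeably lower than the others, which a mean alone would hide.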

| | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |

|---|---|---|---|---|---|---|

| blank | 1908 | 16 | 64 | 24 | 3 | 25 |

| buzzer | 16 | 203 | 10 | 1 | 1 | 0 |

| elec_device | 158 | 10 | 192 | 0 | 3 | 12 |

| lift_noise | 58 | 0 | 2 | 73 | 1 | 6 |

| loud_talking | 10 | 0 | 3 | 2 | 60 | 20 |

| regular_talking | 91 | 0 | 6 | 7 | 26 | 430 |

| | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |

|---|---|---|---|---|---|---|

| blank | 1920 | 12 | 72 | 24 | 1 | 11 |

| buzzer | 7 | 220 | 3 | 0 | 0 | 1 |

| elec_device | 107 | 5 | 249 | 2 | 3 | 9 |

| lift_noise | 45 | 1 | 2 | 86 | 0 | 6 |

| loud_talking | 3 | 0 | 2 | 3 | 70 | 17 |

| regular_talking | 33 | 0 | 11 | 5 | 8 | 503 |

| | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |

|---|---|---|---|---|---|---|

| blank | 1936 | 9 | 69 | 18 | 0 | 8 |

| buzzer | 7 | 220 | 3 | 0 | 0 | 1 |

| elec_device | 107 | 7 | 252 | 2 | 1 | 6 |

| lift_noise | 36 | 0 | 1 | 97 | 1 | 5 |

| loud_talking | 0 | 0 | 0 | 1 | 76 | 18 |

| regular_talking | 32 | 0 | 7 | 7 | 16 | 498 |

| | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |

|---|---|---|---|---|---|---|

| blank | 1901 | 12 | 85 | 13 | 2 | 27 |

| buzzer | 17 | 212 | 2 | 0 | 0 | 0 |

| elec_device | 120 | 6 | 236 | 0 | 4 | 9 |

| lift_noise | 68 | 1 | 5 | 61 | 1 | 4 |

| loud_talking | 4 | 0 | 3 | 2 | 59 | 27 |

| regular_talking | 56 | 0 | 20 | 2 | 14 | 468 |

| | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |

|---|---|---|---|---|---|---|

| blank | 1905 | 16 | 73 | 19 | 1 | 26 |

| buzzer | 12 | 217 | 2 | 0 | 0 | 0 |

| elec_device | 123 | 6 | 234 | 4 | 3 | 5 |

| lift_noise | 47 | 0 | 3 | 83 | 1 | 6 |

| loud_talking | 4 | 0 | 5 | 2 | 59 | 25 |

| regular_talking | 36 | 0 | 19 | 7 | 14 | 484 |

| | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |

|---|---|---|---|---|---|---|

| blank | 1930 | 12 | 62 | 30 | 1 | 5 |

| buzzer | 7 | 223 | 1 | 0 | 0 | 0 |

| elec_device | 106 | 14 | 250 | 0 | 2 | 3 |

| lift_noise | 33 | 0 | 2 | 102 | 1 | 2 |

| loud_talking | 3 | 0 | 0 | 4 | 73 | 15 |

| regular_talking | 39 | 0 | 14 | 8 | 14 | 485 |

| | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |

|---|---|---|---|---|---|---|

| blank | 1904 | 19 | 88 | 15 | 1 | 13 |

| buzzer | 6 | 222 | 3 | 0 | 0 | 0 |

| elec_device | 88 | 7 | 274 | 4 | 1 | 1 |

| lift_noise | 43 | 0 | 4 | 92 | 1 | 0 |

| loud_talking | 5 | 0 | 2 | 2 | 70 | 16 |

| regular_talking | 40 | 0 | 15 | 9 | 12 | 484 |

| | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |

|---|---|---|---|---|---|---|

| blank | 1925 | 8 | 75 | 18 | 0 | 14 |

| buzzer | 6 | 222 | 3 | 0 | 0 | 0 |

| elec_device | 69 | 7 | 285 | 3 | 2 | 9 |

| lift_noise | 31 | 0 | 1 | 100 | 2 | 6 |

| loud_talking | 3 | 0 | 0 | 1 | 77 | 14 |

| regular_talking | 21 | 0 | 12 | 1 | 13 | 513 |
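
Per-class precision, recall, and F1-score can be derived directly from a confusion matrix. The sketch below uses the last matrix above and assumes that rows are true classes and columns are predicted classes; the function name is illustrative. Under that assumption, the recall and precision values it produces can be compared against the per-class results table.

```python
CLASSES = ["blank", "buzzer", "elec_device", "lift_noise",
           "loud_talking", "regular_talking"]

# Last confusion matrix above; rows = true class, columns = predicted (assumed).
CONFUSION = [
    [1925, 8, 75, 18, 0, 14],
    [6, 222, 3, 0, 0, 0],
    [69, 7, 285, 3, 2, 9],
    [31, 0, 1, 100, 2, 6],
    [3, 0, 0, 1, 77, 14],
    [21, 0, 12, 1, 13, 513],
]


def per_class_metrics(matrix):
    """Return a list of (precision, recall, f1) tuples, one per class."""
    n = len(matrix)
    results = []
    for k in range(n):
        true_positive = matrix[k][k]
        row_total = sum(matrix[k])                      # samples whose true class is k
        col_total = sum(matrix[i][k] for i in range(n))  # samples predicted as k
        recall = true_positive / row_total if row_total else 0.0
        precision = true_positive / col_total if col_total else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((precision, recall, f1))
    return results


metrics = per_class_metrics(CONFUSION)
for name, (precision, recall, f1) in zip(CLASSES, metrics):
    print(f"{name:16s} P={precision:.2%} R={recall:.2%} F1={f1:.2%}")
```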


| Module | Stage1 | Stage2 | Stage3 |
|---|---|---|---|
| Input layer | (c = 64, h = 80, w = 98) | (c = 128, h = 40, w = 49) | (c = 256, h = 20, w = 25) |
| Feature alignment module | Kernel size = 7 × 7; channels = 512; stride = 8; padding = 3 × 3; output: (512, h = 10, w = 13) | Kernel size = 5 × 5; channels = 512; stride = 4; padding = 2 × 2; output: (512, h = 10, w = 13) | Kernel size = 7 × 7; channels = 512; stride = 8; padding = 3 × 3; output: (512, h = 10, w = 13) |
| SAP layer | Reshape (1, 512 × 10, 13); Self-Attentive Pooling (5120, 128) | Reshape (1, 512 × 10, 13); Self-Attentive Pooling (5120, 128) | Reshape (1, 512 × 10, 13); Self-Attentive Pooling (5120, 128) |
| Feature classifier | BatchNorm (5120); Fully Connected Layer (192); BatchNorm (192); Fully Connected Layer (6) | BatchNorm (5120); Fully Connected Layer (192); BatchNorm (192); Fully Connected Layer (6) | BatchNorm (5120); Fully Connected Layer (192); BatchNorm (192); Fully Connected Layer (6) |
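
The output shapes of the feature alignment module follow from the standard convolution size formula. The sketch below is a worked check for the Stage1 and Stage2 columns, assuming a dilation of 1 and floor rounding (the convention used by common deep learning frameworks). The helper name is illustrative.

```python
def conv_output_size(size, kernel, stride, padding):
    """Output length of a convolution along one axis (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


# Stage1: input (h = 80, w = 98), kernel 7, stride 8, padding 3.
stage1 = (conv_output_size(80, 7, 8, 3), conv_output_size(98, 7, 8, 3))

# Stage2: input (h = 40, w = 49), kernel 5, stride 4, padding 2.
stage2 = (conv_output_size(40, 5, 4, 2), conv_output_size(49, 5, 4, 2))

# The SAP layer reshapes (512, h, w) into 512 * h features per time step.
sap_input_dim = 512 * stage1[0]

print("Stage1 output (h, w):", stage1)
print("Stage2 output (h, w):", stage2)
print("SAP input dimension:", sap_input_dim)
```

Both stages map to the same (h = 10, w = 13) grid, which is what allows the shallow branches to share the 5120-dimensional SAP input listed in the table.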

| Class | Sample Count | Percentage |

|---|---|---|

| blank | 10,201 | 59.3% |

| buzzer | 1154 | 6.7% |

| elec_device | 1872 | 10.9% |

| lift_noise | 700 | 4.1% |

| loud_talking | 476 | 2.8% |

| regular_talking | 2800 | 16.3% |
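
The degree of class imbalance in the table above can be quantified with a short calculation. The sketch below recomputes the percentage column from the sample counts and reports the ratio between the largest and smallest classes; the variable names are illustrative.

```python
# Sample counts per class, copied from the table above.
sample_counts = {
    "blank": 10201,
    "buzzer": 1154,
    "elec_device": 1872,
    "lift_noise": 700,
    "loud_talking": 476,
    "regular_talking": 2800,
}

total_samples = sum(sample_counts.values())

# Share of each class in percent, rounded to one decimal as in the table.
percentages = {
    name: round(100.0 * count / total_samples, 1)
    for name, count in sample_counts.items()
}

# Ratio between the most and least frequent classes.
imbalance_ratio = max(sample_counts.values()) / min(sample_counts.values())

for name, share in percentages.items():
    print(f"{name:16s} {share:5.1f}%")
print(f"total samples: {total_samples}")
print(f"imbalance ratio (blank / loud_talking): {imbalance_ratio:.1f}")
```

The blank class is roughly 21 times larger than loud_talking, which is the imbalance that the Focal Loss based objective is meant to counteract.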

| Models | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| Res2Net | 84.82% | 85.43% | 84.78% | 84.78% |

| ERes2Net | 87.27% | 87.03% | 87.19% | 86.84% |

| ERes2NetV2 | 86.93% | 86.77% | 86.84% | 86.68% |

| TDNN | 79.09% | 80.38% | 78.95% | 79.01% |

| EcapaTdnn | 75.52% | 77.32% | 75.63% | 75.94% |

| CAMPPlus | 85.45% | 85.95% | 85.35% | 85.27% |

| ResNetSE | 87.39% | 87.88% | 87.30% | 87.23% |

| ResNetSE_SD | 92.84% | 93.02% | 92.79% | 92.69% |

| Evaluation Protocol | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| Random Split | 92.84% | 93.02% | 92.79% | 92.69% |

| 10-Fold Cross-Validation | 95.67% | 96.20% | 95.50% | 95.50% |

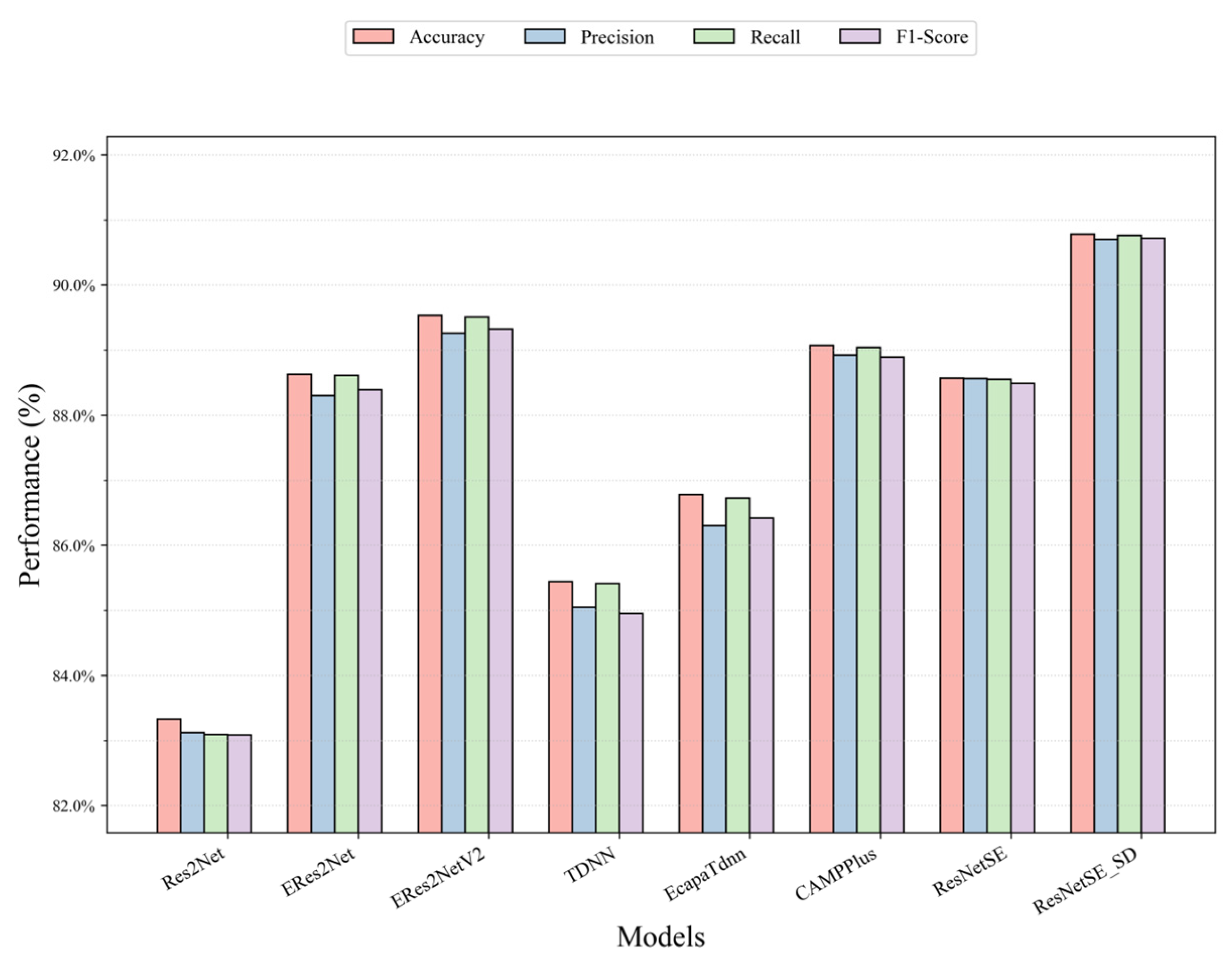

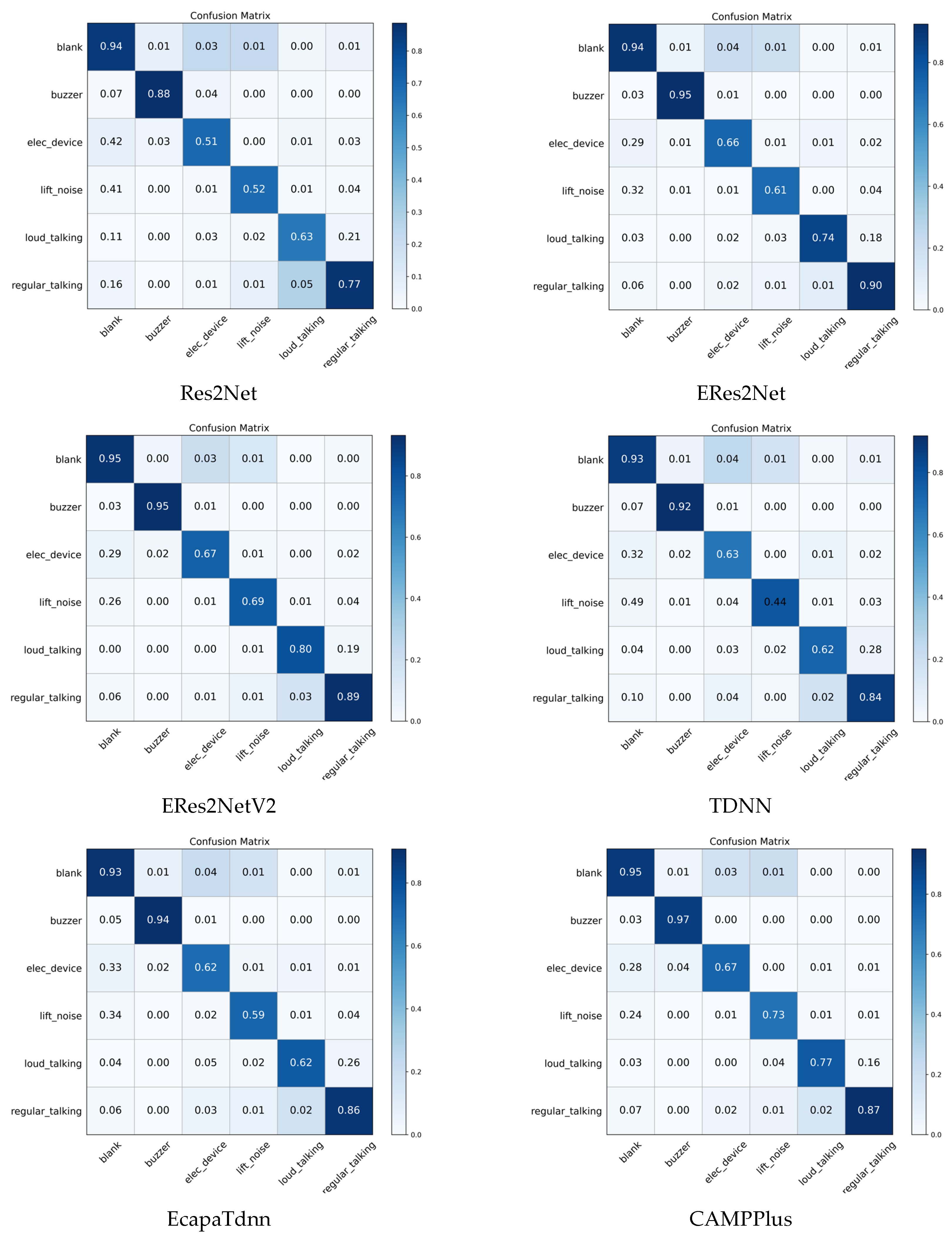

| Models | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| Res2Net | 83.33% | 83.12% | 83.09% | 83.08% |

| ERes2Net | 88.63% | 88.30% | 88.61% | 88.39% |

| ERes2NetV2 | 89.53% | 89.26% | 89.51% | 89.32% |

| TDNN | 85.44% | 85.05% | 85.41% | 84.95% |

| EcapaTdnn | 86.78% | 86.30% | 86.72% | 86.42% |

| CAMPPlus | 89.07% | 88.92% | 89.04% | 88.89% |

| ResNetSE | 88.57% | 88.56% | 88.55% | 88.49% |

| ResNetSE_SD | 90.78% | 90.70% | 90.76% | 90.72% |

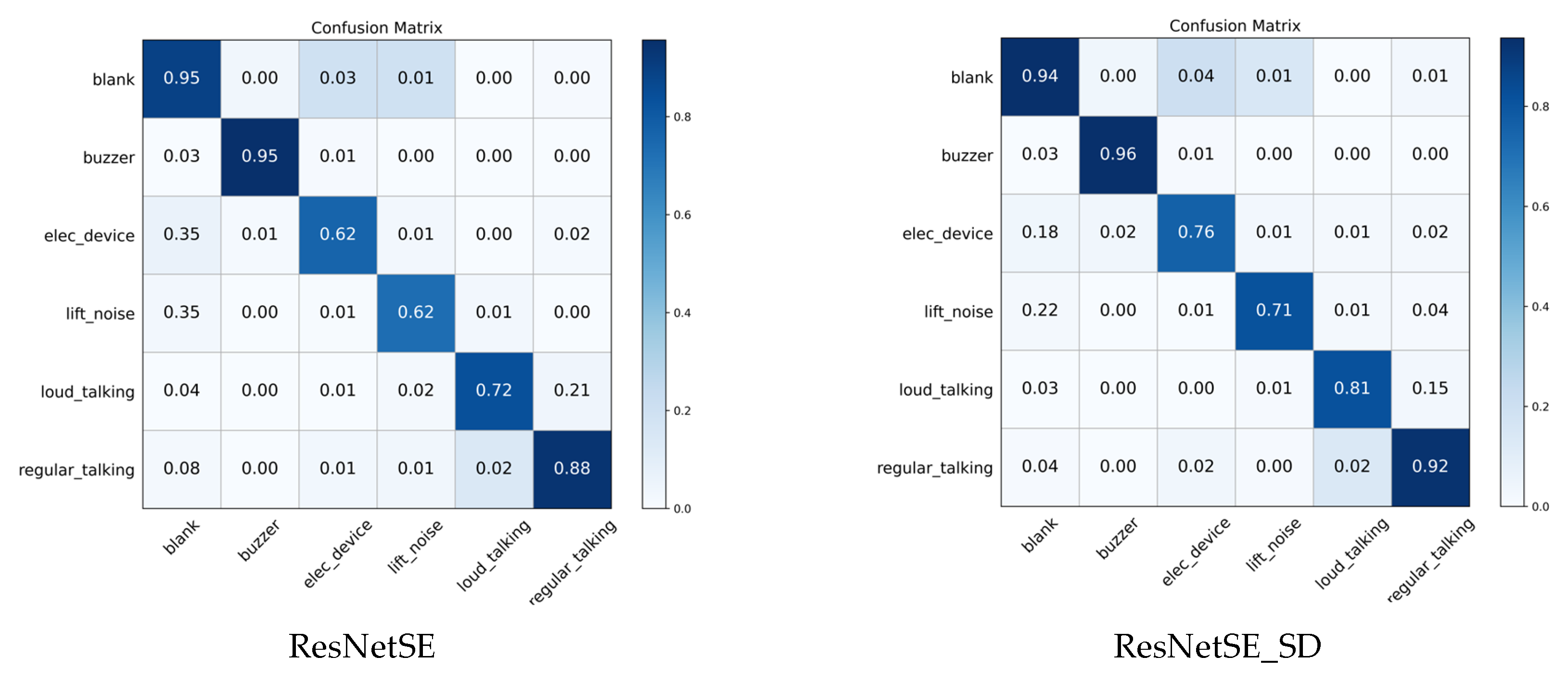

| Models | Metric | Blank | Buzzer | Elec_Device | Lift_Noise | Loud_Talking | Regular_Talking |
|---|---|---|---|---|---|---|---|
| Res2Net | Precision | 85.14% | 88.65% | 69.31% | 68.22% | 63.83% | 87.22% |
| | Recall | 93.53% | 87.88% | 51.20% | 52.14% | 63.16% | 76.79% |
| | F1-Score | 89.14% | 88.26% | 58.90% | 59.11% | 63.49% | 81.67% |
| ERes2Net | Precision | 90.78% | 92.44% | 73.45% | 71.67% | 85.37% | 91.96% |
| | Recall | 94.12% | 95.24% | 66.40% | 61.43% | 73.68% | 89.82% |
| | F1-Score | 92.42% | 93.82% | 69.75% | 66.15% | 79.10% | 90.88% |
| ERes2NetV2 | Precision | 91.41% | 93.22% | 75.90% | 77.60% | 80.85% | 92.91% |
| | Recall | 94.90% | 95.24% | 67.20% | 69.29% | 80.00% | 88.93% |
| | F1-Score | 93.12% | 94.22% | 71.29% | 73.21% | 80.42% | 90.88% |
| TDNN | Precision | 87.77% | 91.77% | 67.24% | 78.21% | 73.75% | 87.48% |
| | Recall | 93.19% | 91.77% | 62.93% | 43.57% | 62.11% | 83.57% |
| | F1-Score | 90.39% | 91.77% | 65.01% | 55.96% | 67.43% | 85.48% |
| EcapaTdnn | Precision | 89.56% | 90.79% | 69.64% | 72.17% | 75.64% | 88.64% |
| | Recall | 93.38% | 93.94% | 62.40% | 59.29% | 62.11% | 86.43% |
| | F1-Score | 91.43% | 92.34% | 65.82% | 65.10% | 68.21% | 87.52% |
| CAMPPlus | Precision | 91.12% | 89.56% | 76.00% | 70.83% | 80.22% | 95.10% |
| | Recall | 94.61% | 96.54% | 66.67% | 72.86% | 76.84% | 86.61% |
| | F1-Score | 92.83% | 92.92% | 71.02% | 71.83% | 78.49% | 90.65% |
| ResNetSE | Precision | 91.28% | 89.52% | 70.98% | 75.41% | 82.35% | 94.16% |
| | Recall | 93.33% | 96.10% | 73.07% | 65.71% | 73.68% | 86.43% |
| | F1-Score | 92.29% | 92.69% | 72.01% | 70.23% | 77.78% | 90.13% |
| ResNetSE_SD | Precision | 93.67% | 93.67% | 75.80% | 81.30% | 81.91% | 92.27% |
| | Recall | 94.36% | 96.10% | 76.00% | 71.43% | 81.05% | 91.61% |
| | F1-Score | 94.02% | 94.87% | 75.90% | 76.05% | 81.48% | 91.94% |

| Models | Params (M) | FLOPs (G) |

|---|---|---|

| Res2Net | 4.16 | 0.11 |

| ERes2Net | 6.61 | 3.36 |

| ERes2NetV2 | 5.46 | 2.10 |

| TDNN | 2.54 | 0.42 |

| EcapaTdnn | 5.71 | 0.94 |

| CAMPPlus | 7.18 | 1.11 |

| ResNetSE | 6.82 | 3.66 |

| ResNetSE_SD | 18.18 | 4.92 |

| Metric | ResNetSE | ResNetSE-SD | Overhead |

|---|---|---|---|

| Training time/epoch | 25 s | 32 s | +28% |

| GPU memory (peak) | 2630 MB | 2968 MB | +12.8% |

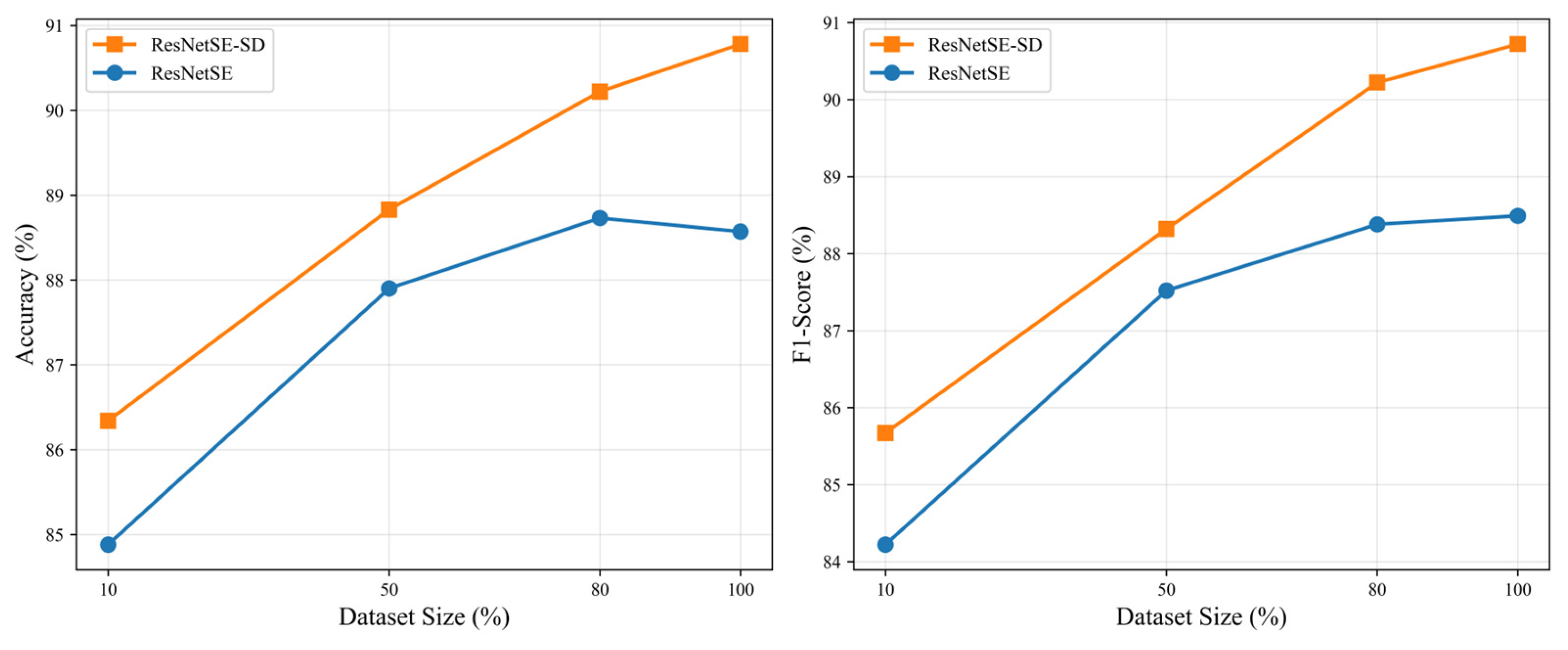

| Models | Metric | 10% | 50% | 80% | 100% |
|---|---|---|---|---|---|
| ResNetSE | Accuracy | 84.88% | 87.90% | 88.73% | 88.57% |
| | Precision | 84.53% | 87.47% | 88.35% | 88.57% |
| | Recall | 84.88% | 87.85% | 88.70% | 88.57% |
| | F1-Score | 84.22% | 87.52% | 88.38% | 88.57% |
| ResNetSE_SD | Accuracy | 86.34% | 88.83% | 90.22% | 90.78% |
| | Precision | 85.91% | 88.37% | 90.26% | 90.78% |
| | Recall | 86.33% | 88.78% | 90.44% | 90.78% |
| | F1-Score | 85.67% | 88.32% | 90.22% | 90.78% |

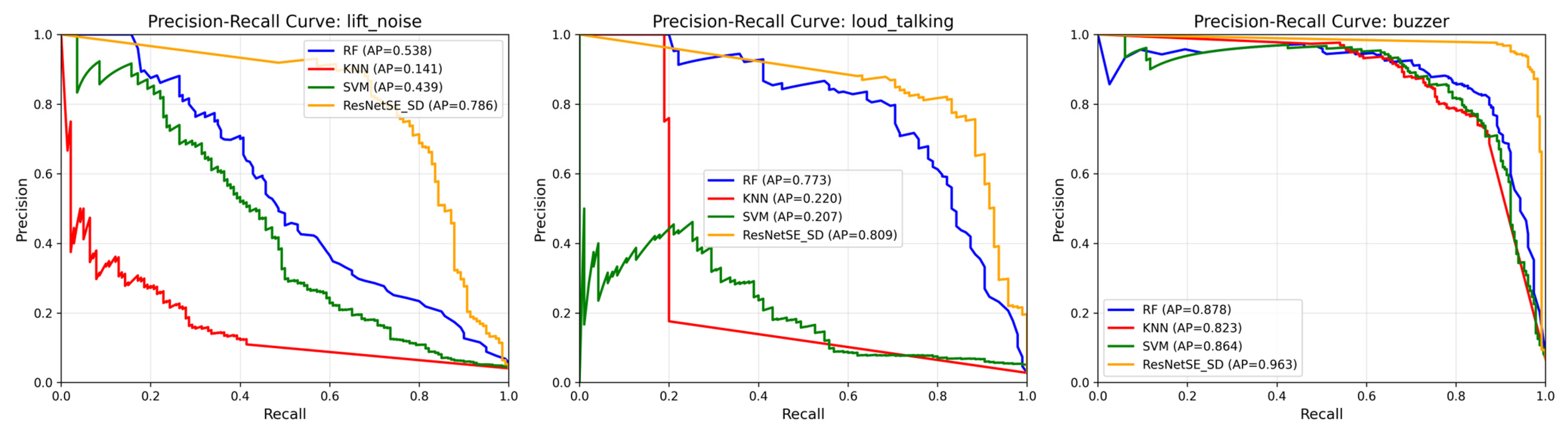

| Models | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| SVM (RBF) | 46.47% | 67.09% | 46.47% | 51.13% |

| Random Forest | 79.72% | 79.75% | 79.72% | 78.30% |

| k-NN (k = 5) | 63.62% | 59.46% | 63.62% | 54.71% |

| ResNetSE_SD | 90.78% | 90.70% | 90.76% | 90.72% |

| SpecAugment Configuration | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| Frequency Masking | 89.79% | 89.48% | 89.77% | 89.45% |

| Time Masking | 89.82% | 89.55% | 89.79% | 89.44% |

| Time Warping | 89.76% | 89.69% | 89.74% | 89.65% |

| No Augmentation | 90.78% | 90.70% | 90.76% | 90.72% |

| Pooling Layer | UrbanSound8K Accuracy (%) | Elevator Audio Dataset Accuracy (%) |

|---|---|---|

| ASP | 89.32% | 90.28% |

| TAP | 87.84% | 89.73% |

| TSP | 89.20% | 90.28% |

| SAP (our model) | 92.84% | 90.78% |
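
The difference between attention-based pooling and a plain temporal average can be made concrete with a small sketch. It assumes the commonly used form of self-attentive pooling, in which each frame receives a score v·tanh(W h + b) and the scores are normalized with a softmax over time. The parameter names `W`, `b`, and `v` and the toy dimensions are hypothetical, and the layer used in the paper may differ in detail.

```python
import math


def temporal_average_pooling(frames):
    """Unweighted mean of frame-level features over time."""
    steps = len(frames)
    return [sum(f[d] for f in frames) / steps for d in range(len(frames[0]))]


def self_attentive_pooling(frames, W, b, v):
    """Attention-weighted mean of frame-level features.

    frames: list of T feature vectors of size D
    W: A x D projection, b: bias of size A, v: context vector of size A
    Returns (pooled_vector, attention_weights).
    """
    scores = []
    for h in frames:
        hidden = [
            math.tanh(sum(W[j][d] * h[d] for d in range(len(h))) + b[j])
            for j in range(len(W))
        ]
        scores.append(sum(v[j] * hidden[j] for j in range(len(v))))
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    pooled = [
        sum(w * h[d] for w, h in zip(weights, frames))
        for d in range(len(frames[0]))
    ]
    return pooled, weights


# Toy input: 3 frames with 2 features each (illustrative values).
frames = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
W = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.6]]
b = [0.0, 0.1, -0.1]
v = [1.0, -0.5, 0.8]

pooled, weights = self_attentive_pooling(frames, W, b, v)
print("attention weights:", [round(w, 3) for w in weights])
print("SAP output:", [round(x, 3) for x in pooled])
print("average pooling output:", temporal_average_pooling(frames))
```

With all attention parameters set to zero the weights become uniform and the result reduces to average pooling, so the attention scores are the only source of any difference between the two pooling strategies.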

| Classification Loss | KL Divergence Loss | Feature Alignment Loss | Accuracy of ResNetSE_SD |

|---|---|---|---|

| ✔ | | | 88.86% |

| ✔ | ✔ | 89.65% | |

| ✔ | ✔ | 88.83% | |

| ✔ | ✔ | ✔ | 90.78% |

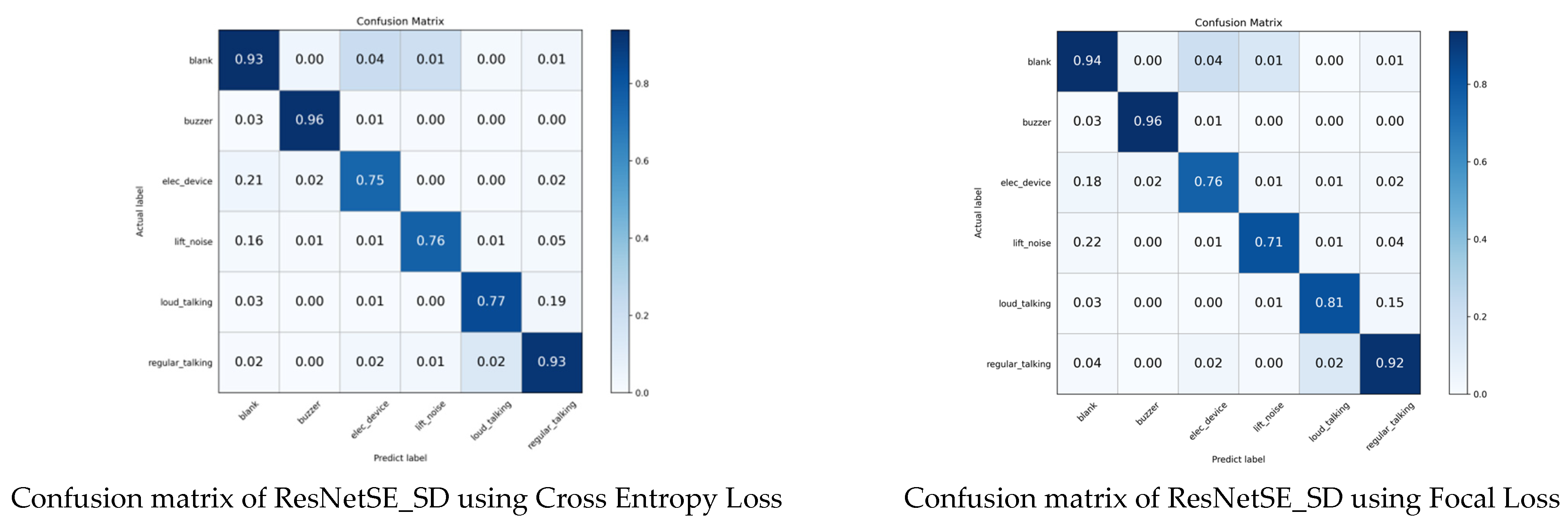

| Loss Function | Accuracy |

|---|---|

| CrossEntropy Loss | 90.34% |

| Focal Loss | 90.78% |
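
To make the comparison above concrete, the following sketch contrasts cross-entropy with the standard Focal Loss for a single sample. It shows the generic formulation, not the hierarchical variant used for the teacher network, and the values of `gamma` and `alpha` are illustrative assumptions.

```python
import math


def cross_entropy(probs, target):
    """Negative log-likelihood of the target class."""
    return -math.log(probs[target])


def focal_loss(probs, target, gamma=2.0, alpha=1.0):
    """Standard focal loss: -alpha * (1 - p_t)^gamma * log(p_t)."""
    p_t = probs[target]
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy sample (confident, correct) and a hard sample (low target probability).
easy_probs = [0.9, 0.1]
hard_probs = [0.3, 0.7]

for label, probs in (("easy", easy_probs), ("hard", hard_probs)):
    ce = cross_entropy(probs, 0)
    fl = focal_loss(probs, 0)
    print(f"{label}: cross-entropy={ce:.4f} focal={fl:.4f} ratio={fl / ce:.2f}")
```

The modulating factor (1 - p_t)^gamma shrinks the loss of well-classified samples far more than that of hard ones, which shifts the gradient toward difficult examples such as those from minority classes. With gamma = 0 the expression reduces to cross-entropy.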

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Share and Cite

Yang, S.; Chou, L.; Li, H.; Xu, Z.; Feng, B.; Lei, J. Hierarchical Self-Distillation with Attention for Class-Imbalanced Acoustic Event Classification in Elevators. Sensors 2026, 26, 589. https://doi.org/10.3390/s26020589
