A Hybrid Multi-Scale Transformer-CNN UNet for Crowd Counting

Abstract

1. Introduction

2. Related Work

2.1. Model Design for Crowd Counting

2.2. Vision Transformer

2.3. Loss Function

3. Methods

3.1. Overall Architecture

3.2. Multi-Scale Vision Transformer Block

3.3. Dynamic Convolutional Attention Block

3.4. Loss Function

4. Experiments

4.1. Datasets and Implementation Details

4.2. Evaluation Metrics

4.3. Comparisons with State-of-the-Art Methods

| Method | Venue | Params (M) | SHA MAE | SHA MSE | SHB MAE | SHB MSE | UCF_CC_50 MAE | UCF_CC_50 MSE | UCF-QNRF MAE | UCF-QNRF MSE | NWPU-Crowd MAE | NWPU-Crowd MSE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MCNN [5] | CVPR’16 | 0.13 | 110.2 | 173.2 | 26.4 | 41.3 | 377.6 | 509.1 | 277.0 | 426.0 | 218.5 | 700.6 |
| CSRNet [6] | CVPR’18 | 16.3 | 68.2 | 115.0 | 10.6 | 16.0 | 266.1 | 397.5 | 120.3 | 208.0 | 104.9 | 433.5 |
| CANNet [10] | CVPR’19 | 18.1 | 62.3 | 100.0 | 7.8 | 12.2 | 212.2 | 243.7 | 107.0 | 183.0 | 93.6 | 489.9 |
| SFCN+ [32] | CVPR’19 | 38.6 | 64.8 | 107.5 | 7.6 | 13.0 | 214.2 | 318.2 | 102.0 | 171.4 | 95.5 | 608.3 |
| BL [33] | CVPR’19 | 21.5 | 62.8 | 101.8 | 7.7 | 12.7 | 229.3 | 308.2 | 88.7 | 154.8 | 93.6 | 470.4 |
| AMRNet [34] | ECCV’20 | 59.3 | 61.6 | 98.4 | 7.0 | 11.0 | 184.0 | 265.8 | 86.6 | 152.2 | - | - |
| DM-Count [23] | NeurIPS’20 | 21.5 | 59.7 | 95.7 | 7.4 | 11.8 | 211.0 | 291.5 | 85.6 | 148.3 | 88.4 | 388.6 |
| LibraNet [35] | ECCV’20 | 17.9 | 55.9 | 97.1 | 7.3 | 11.3 | 181.2 | 262.2 | 88.1 | 143.7 | - | - |
| Semi [36] | ICCV’21 | 16.7 | 66.9 | 125.6 | 12.3 | 17.9 | - | - | 130.3 | 226.3 | 105.8 | 445.3 |
| P2PNet [30] | ICCV’21 | 19.2 | 52.7 | 85.1 | 6.3 | 9.9 | 172.7 | 256.2 | 85.3 | 154.5 | 77.4 | 362.0 |
| CLTR [37] | ECCV’22 | 41.0 | 56.9 | 95.2 | 6.5 | 10.6 | - | - | 85.8 | 141.3 | 61.9 | 246.3 |
| TransCrowd [38] | SCIS’22 | 90.4 | 66.1 | 105.1 | 9.3 | 16.1 | - | - | 97.2 | 168.5 | 88.4 | 400.5 |
| FIDTM [31] | TMM’22 | 66.6 | 57.0 | 103.4 | 6.9 | 11.8 | 171.4 | 233.1 | 89.0 | 153.6 | 51.4 | 107.6 |
| DMCNet [39] | WACV’23 | - | 58.5 | 84.6 | 8.6 | 13.7 | - | - | - | - | 96.5 | 164.0 |
| PET [28] | ICCV’23 | 51.7 | 49.3 | 78.8 | 6.2 | 9.7 | - | - | 79.5 | 144.3 | 74.4 | 328.5 |
| FGENet [29] | MMM’24 | - | 51.6 | 85.0 | 6.3 | 10.5 | 142.6 | 215.9 | 85.2 | 158.8 | - | - |
| VMambaCC [40] | arXiv’24 | - | 51.9 | 81.3 | 7.5 | 12.5 | - | - | - | - | 88.4 | 144.7 |
| MobileCount [41] | Neurocomputing | 3.4 | 89.4 | 146.0 | 9.0 | 15.4 | 284.8 | 392.8 | 131.1 | 222.6 | - | - |
| HMSTUNet (ours) | - | 61.9 | 49.1 | 77.8 | 6.2 | 10.3 | 142.1 | 192.7 | 77.9 | 132.5 | 43.2 | 119.6 |
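
The MAE and MSE columns in the comparison table are not defined in the text that survives for Section 4.2. The following is a minimal sketch, assuming the convention common in the crowd-counting literature: MAE is the mean absolute difference between predicted and ground-truth counts per image, and "MSE" is the square root of the mean squared difference. The function names and the sample counts are hypothetical and are not taken from the paper.

```python
import math
from typing import Sequence


def _check(pred: Sequence[float], true: Sequence[float]) -> None:
    """Reject empty or mismatched inputs before computing a metric."""
    if len(pred) == 0 or len(pred) != len(true):
        raise ValueError("pred and true must be non-empty and of equal length")


def mae(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute error between predicted and ground-truth counts."""
    _check(pred, true)
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(pred)


def mse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Root of the mean squared count error (assumed crowd-counting 'MSE')."""
    _check(pred, true)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(pred))


# Hypothetical per-image counts, for illustration only (not from the paper).
predicted = [100.0, 250.0, 40.0]
ground_truth = [110.0, 240.0, 43.0]

print(f"MAE = {mae(predicted, ground_truth):.2f}")
print(f"MSE = {mse(predicted, ground_truth):.2f}")
```

Under this assumed definition, MSE weights large per-image errors more heavily than MAE, which is why the two columns can rank methods differently on datasets with a few extremely dense images.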

4.4. Ablation Study

4.4.1. Component-Wise Ablation Study

4.4.2. Loss Function Ablation Study

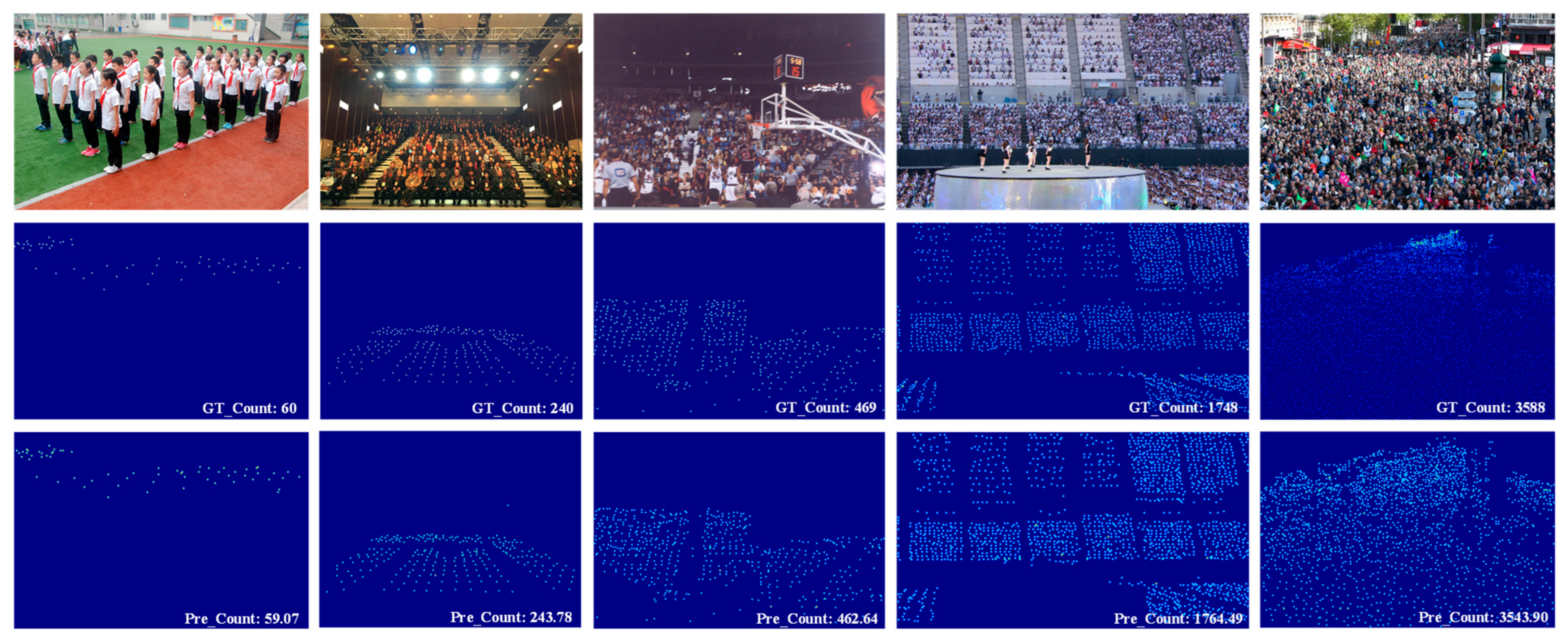

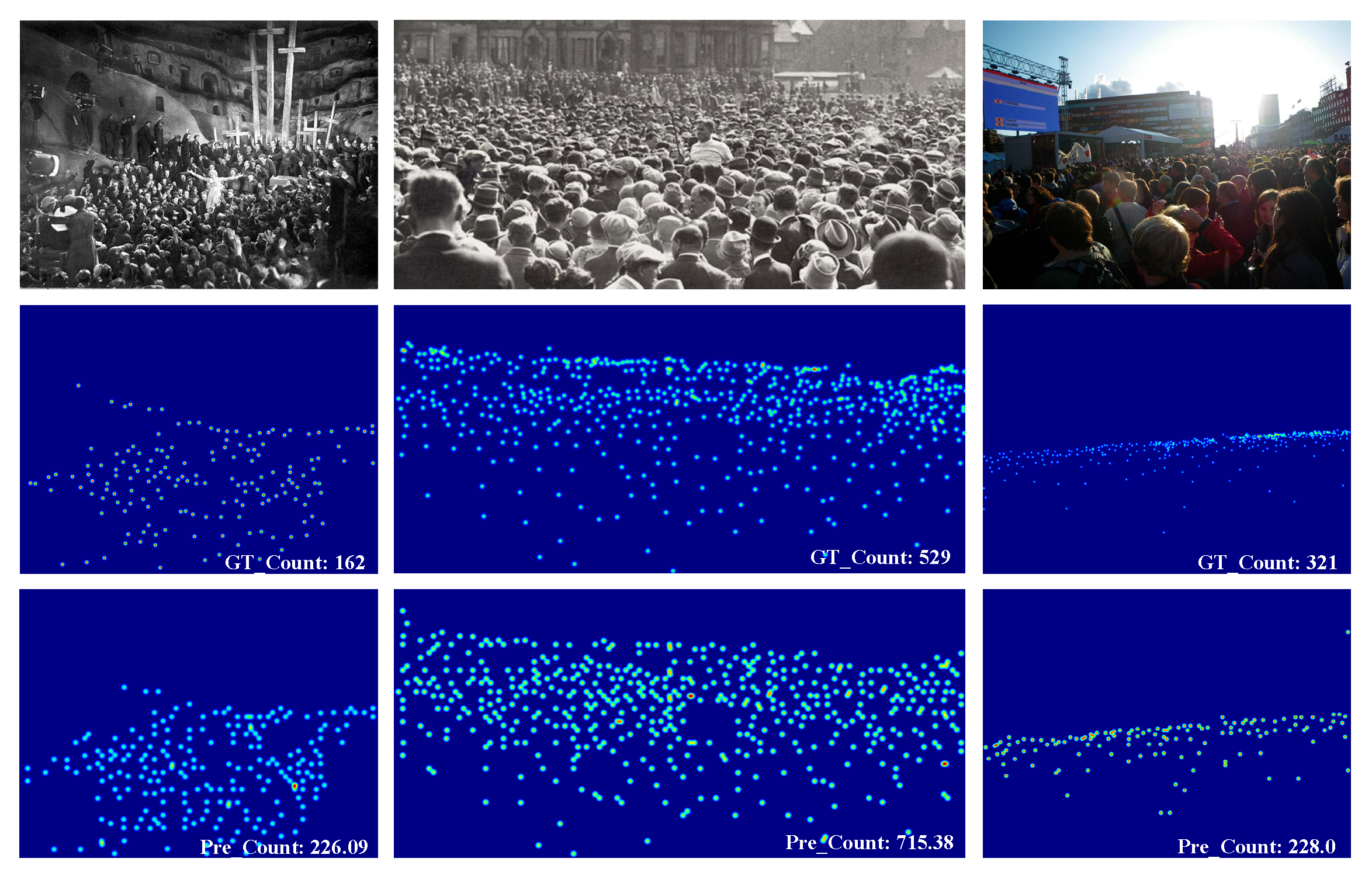

4.5. Visualization

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Saleh, S.A.M.; Suandi, S.A.; Ibrahim, H. Recent survey on crowd density estimation and counting for visual surveillance. Eng. Appl. Artif. Intell. 2015, 41, 103–114.

2. Luo, A.; Yang, F.; Li, X.; Nie, D.; Jiao, Z.; Zhou, S.; Cheng, H. Hybrid graph neural networks for crowd counting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 11693–11700.

3. Zhang, C.; Li, H.; Wang, X.; Yang, X. Cross-scene crowd counting via deep convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 833–841.

4. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

5. Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-image crowd counting via multi-column convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 589–597.

6. Li, Y.; Zhang, X.; Chen, D. CSRNet: Dilated convolutional neural networks for understanding the highly congested scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1091–1100.

7. Liu, L.; Qiu, Z.; Li, G.; Liu, S.; Ouyang, W.; Lin, L. Crowd counting with deep structured scale integration network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1774–1783.

8. Chen, Z.; Zhong, F.; Luo, Q.; Zhang, X.; Zheng, Y. EdgeViT: Efficient visual modeling for edge computing. In Proceedings of the International Conference on Wireless Algorithms, Systems, and Applications, Dalian, China, 24–26 November 2022; pp. 393–405.

9. Wang, A.; Chen, H.; Lin, Z.; Han, J.; Ding, G. RepViT: Revisiting mobile CNN from ViT perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 15909–15920.

10. Liu, W.; Salzmann, M.; Fua, P. Context-aware crowd counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5099–5108.

11. Han, T.; Bai, L.; Liu, L.; Ouyang, W. Steerer: Resolving scale variations for counting and localization via selective inheritance learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 21848–21859.

12. Jiang, X.; Zhang, L.; Xu, M.; Zhang, T.; Lv, P.; Zhou, B.; Yang, X.; Pang, Y. Attention scaling for crowd counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4706–4715.

13. Lin, H.; Ma, Z.; Ji, R.; Wang, Y.; Hong, X. Boosting crowd counting via multifaceted attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19628–19637.

14. Tian, Y.; Chu, X.; Wang, H. CCTrans: Simplifying and improving crowd counting with transformer. arXiv 2021, arXiv:2109.14483.

15. Qian, Y.; Zhang, L.; Hong, X.; Donovan, C.; Arandjelovic, O.; Fife, U.; Harbin, P. Segmentation Assisted U-shaped Multi-scale Transformer for Crowd Counting. In Proceedings of the British Machine Vision Conference, London, UK, 21–24 November 2022.

16. Zhang, Z.; Zhang, H.; Zhao, L.; Chen, T.; Arik, S.Ö.; Pfister, T. Nested hierarchical transformer: Towards accurate, data-efficient and interpretable visual understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; pp. 3417–3425.

17. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

18. Peng, Z.; Huang, W.; Gu, S.; Xie, L.; Wang, Y.; Jiao, J.; Ye, Q. Conformer: Local features coupling global representations for visual recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 367–376.

19. Lee, Y.; Kim, J.; Willette, J.; Hwang, S.J. MPViT: Multi-path vision transformer for dense prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7277–7286.

20. Wang, W.; Chen, W.; Qiu, Q.; Chen, L.; Wu, B.; Lin, B.; He, X.; Liu, W. Crossformer++: A versatile vision transformer hinging on cross-scale attention. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 3123–3136.

21. Lempitsky, V.; Zisserman, A. Learning to count objects in images. Adv. Neural Inf. Process. Syst. 2010, 23, 1324–1332.

22. Wan, J.; Chan, A. Adaptive density map generation for crowd counting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1130–1139.

23. Wang, B.; Liu, H.; Samaras, D.; Nguyen, M.H. Distribution matching for crowd counting. Adv. Neural Inf. Process. Syst. 2020, 33, 1595–1607.

24. Peyré, G.; Cuturi, M. Computational optimal transport: With applications to data science. Found. Trends Mach. Learn. 2019, 11, 355–607.

25. Idrees, H.; Saleemi, I.; Seibert, C.; Shah, M. Multi-source multi-scale counting in extremely dense crowd images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2547–2554.

26. Idrees, H.; Tayyab, M.; Athrey, K.; Zhang, D.; Al-Maadeed, S.; Rajpoot, N.; Shah, M. Composition loss for counting, density map estimation and localization in dense crowds. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 532–546.

27. Wang, Q.; Gao, J.; Lin, W.; Li, X. NWPU-crowd: A large-scale benchmark for crowd counting and localization. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2141–2149.

28. Liu, C.; Lu, H.; Cao, Z.; Liu, T. Point-query quadtree for crowd counting, localization, and more. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 1676–1685.

29. Ma, H.Y.; Zhang, L.; Wei, X.Y. FGENet: Fine-grained extraction network for congested crowd counting. In Proceedings of the International Conference on Multimedia Modeling, Amsterdam, The Netherlands, 29 January–1 February 2024; pp. 43–56.

30. Song, Q.; Wang, C.; Jiang, Z.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Wu, Y. Rethinking counting and localization in crowds: A purely point-based framework. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3345–3354.

31. Liang, D.; Xu, W.; Zhu, Y.; Zhou, Y. Focal inverse distance transform maps for crowd localization. IEEE Trans. Multimed. 2023, 25, 6040–6052.

32. Wang, Q.; Gao, J.; Lin, W.; Yuan, Y. Learning from synthetic data for crowd counting in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8198–8207.

33. Ma, Z.; Wei, X.; Hong, X.; Gong, Y. Bayesian loss for crowd count estimation with point supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6141–6150.

34. Liu, X.; Yang, J.; Ding, W.; Wang, T.; Wang, Z.; Xiong, J. Adaptive mixture regression network with local counting map for crowd counting. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 241–257.

35. Liu, L.; Lu, H.; Zou, H.; Xiong, H.; Cao, Z.; Shen, C. Weighing counts: Sequential crowd counting by reinforcement learning. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 164–181.

36. Meng, Y.; Zhang, H.; Zhao, Y.; Yang, X.; Qian, X.; Huang, X.; Zheng, Y. Spatial uncertainty-aware semi-supervised crowd counting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 15529–15539.

37. Liang, D.; Xu, W.; Bai, X. An end-to-end transformer model for crowd localization. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 38–54.

38. Liang, D.; Chen, X.; Xu, W.; Zhou, Y.; Bai, X. TransCrowd: Weakly-supervised crowd counting with transformers. Sci. China Inf. Sci. 2022, 65, 160104.

39. Wang, M.; Cai, H.; Dai, Y.; Gong, M. Dynamic mixture of counter network for location-agnostic crowd counting. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 167–177.

40. Ma, H.Y.; Zhang, L.; Shi, S. VMambaCC: A visual state space model for crowd counting. arXiv 2024, arXiv:2405.03978.

41. Wang, P.; Gao, C.; Wang, Y.; Li, H.; Gao, Y. MobileCount: An efficient encoder-decoder framework for real-time crowd counting. Neurocomputing 2020, 407, 292–299.

| Dataset | Number of Images | Training/Validation/Test | Total Count | Max Count | Min Count | Avg. Resolution |
|---|---|---|---|---|---|---|
| SHA | 482 | 300/-/182 | 241,677 | 3139 | 33 | 589 × 868 |
| SHB | 716 | 400/-/316 | 88,488 | 578 | 9 | 768 × 1024 |
| UCF_CC_50 | 50 | - | 63,974 | 4543 | 94 | 2101 × 2888 |
| UCF-QNRF | 1535 | 1201/-/334 | 1,251,642 | 12,865 | 49 | 2013 × 2902 |
| NWPU-Crowd | 5109 | 3109/500/1500 | 2,133,375 | 20,033 | 0 | 2191 × 3209 |
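
The dataset statistics give a total annotation count and an image count for each benchmark, from which the average crowd size per image follows by division. A small sketch of that arithmetic, using only the values in the table; the function and variable names are illustrative and not from the paper.

```python
# (number of images, total annotated count) copied from the dataset table.
DATASETS = {
    "SHA": (482, 241_677),
    "SHB": (716, 88_488),
    "UCF_CC_50": (50, 63_974),
    "UCF-QNRF": (1535, 1_251_642),
    "NWPU-Crowd": (5109, 2_133_375),
}


def mean_count_per_image(num_images: int, total_count: int) -> float:
    """Average number of annotated people per image."""
    if num_images <= 0:
        raise ValueError("num_images must be positive")
    return total_count / num_images


for name, (num_images, total_count) in DATASETS.items():
    avg = mean_count_per_image(num_images, total_count)
    print(f"{name}: {avg:.1f} annotated people per image")
```

The per-image averages make the density gap between benchmarks explicit, for example between the sparse SHB scenes and the very dense UCF_CC_50 images.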

| Method | SHA MAE | SHA MSE | NWPU-Crowd MAE | NWPU-Crowd MSE |
|---|---|---|---|---|
| Baseline | 72.2 | 129.3 | 80.3 | 245.2 |
| Baseline + S1 (MSViT + DCAB) | 58.4 | 90.7 | 57.9 | 182.4 |
| Baseline + S2 (MSViT + DCAB) | 56.2 | 87.9 | 55.6 | 180.7 |
| Baseline + S3 (MSViT + DCAB) | 54.6 | 86.8 | 53.4 | 178.3 |
| Baseline + MSViT | 52.1 | 83.6 | 46.9 | 138.4 |
| Baseline + DCAB | 51.8 | 83.4 | 45.1 | 137.5 |
| Baseline + MSViT + DCAB | 49.1 | 77.8 | 43.2 | 119.6 |
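
The ablation results can be read as relative error reductions with respect to the baseline. A minimal sketch of that calculation, using only the MAE values reported in the ablation table; the helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` lowers an error relative to `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (baseline - improved) / baseline


# MAE values copied from the ablation table, keyed by dataset then variant.
ABLATION_MAE = {
    "SHA": {
        "Baseline": 72.2,
        "Baseline + MSViT": 52.1,
        "Baseline + DCAB": 51.8,
        "Baseline + MSViT + DCAB": 49.1,
    },
    "NWPU-Crowd": {
        "Baseline": 80.3,
        "Baseline + MSViT": 46.9,
        "Baseline + DCAB": 45.1,
        "Baseline + MSViT + DCAB": 43.2,
    },
}

for dataset, rows in ABLATION_MAE.items():
    base = rows["Baseline"]
    for variant, value in rows.items():
        if variant == "Baseline":
            continue
        drop = relative_reduction(base, value)
        print(f"{dataset} | {variant}: MAE reduced by {drop:.1f}% vs. baseline")
```

Expressing the ablation as percentages makes the SHA and NWPU-Crowd columns comparable even though their absolute error scales differ.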

| Loss Function | SHA MAE | SHA MSE |
|---|---|---|
|  | 56.2 | 95.9 |
|  | 53.8 | 89.6 |
|  | 52.7 | 87.1 |
|  | 49.1 | 77.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.


Zhao, K.; He, C.; Peng, S.; Lu, T. A Hybrid Multi-Scale Transformer-CNN UNet for Crowd Counting. Sensors 2026, 26, 333. https://doi.org/10.3390/s26010333
