1. Introduction

Industry 4.0 environments place high demands on privacy, when camera-based systems monitor workflows, ensure safety, and optimize production [

1,

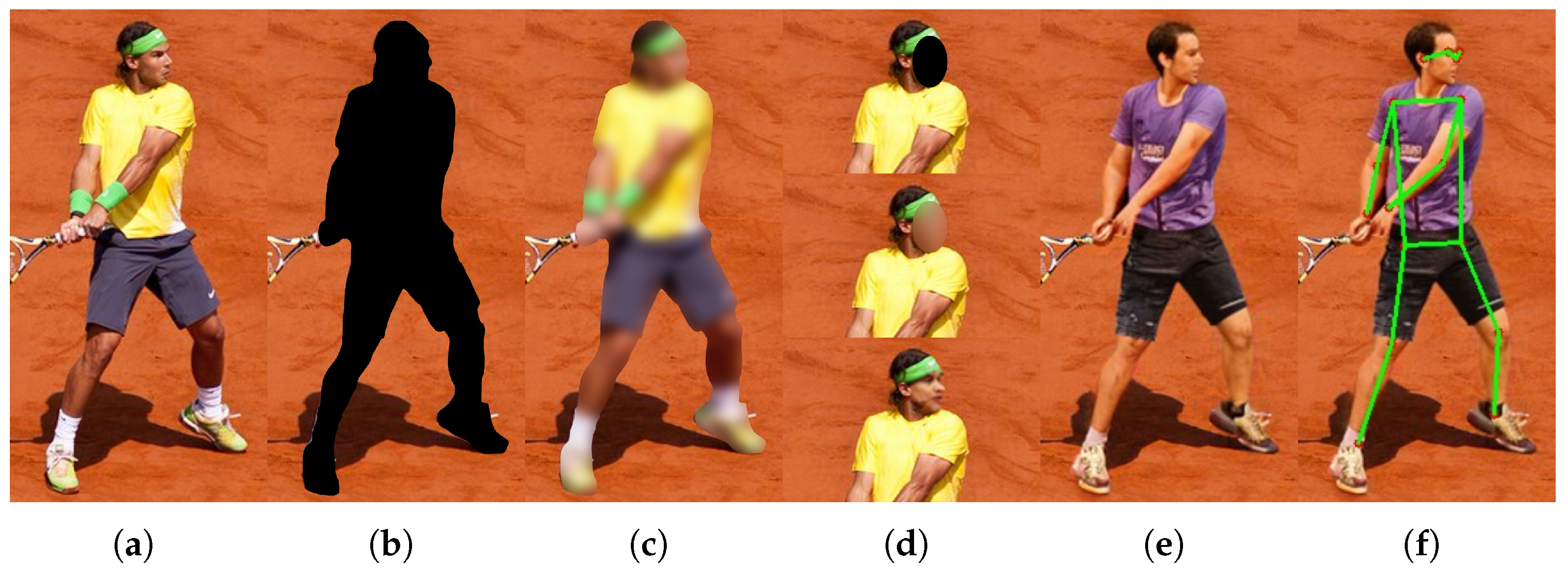

2]. These systems inevitably capture privacy-sensitive data, including human features that can uniquely identify a specific person. Traditional anonymization relies mainly on face blurring, face synthesis, or full-body obfuscation, examples of which are illustrated in

Figure 1. Face anonymization is used most frequently, while approaches addressing full-body or appearance-based anonymization are still less common [

3].

Beyond facial characteristics, human pose and gait patterns represent biometric identifiers capable of revealing identity even when the face is obscured [6]. Surveys on gait recognition establish it as a biomarker for long-distance and non-cooperative scenarios [7,8,9]. Appearance-based methods further explain how gait recognition operates using visual cues when faces are absent [10]. Occlusion-focused work provides a systematic taxonomy showing that gait remains a viable biometric even when body parts and faces are obscured by obstacles or clothing [11]. Together, these findings highlight gait as one of the most distinctive biometric traits. These factors collectively limit their applicability for systematic evaluation of anonymization, where unmodified pixel data and surveillance-relevant viewpoints are essential.

Anonymization methods differ not only in how strongly they suppress visual appearance, but also in whether they preserve the structural representation of the human body. This study considers anonymization that replaces visual appearance while maintaining the overall human structure, such that motion patterns remain observable. The analysis is conducted as a proof of principle under these conditions, assessing whether identity-related information encoded in motion persists despite the removal of visual appearance cues.

Beyond traditional anonymization techniques, advanced methods generate synthetic full-body replacements instead of obfuscating or shifting the original pixels. Realistic full-body anonymization, as illustrated in Figure 1e, introduces an unexplored privacy risk: despite synthetic appearance, pose and motion trajectories remain structurally linked to the original individual. This raises the question of whether gait-based identity cues persist after such pose-driven anonymization. Existing work provides no metric to quantify what magnitude of keypoint displacement is required to disrupt gait sufficiently for recognition algorithms to fail, and recent analyses show that robustness of skeleton-based gait recognition under structural perturbations remains insufficiently understood [12,13,14]. This study is the first to explicitly address this problem and to investigate whether gait remains intact to a degree that enables identity linkage across original and anonymized domains through systematic experimentation.

Records of a person walking alone are enough to raise valid privacy concerns, as gait can be exploited without the target’s consent. Even at a distance and without cooperation, records can provide sufficient data for gait-based recognition [15]. This enables identification in public and private spaces, as gait does not require physical contact or active participation and is measurable from common surveillance footage [16]. Consequently, gait recognition presents unique challenges for privacy protection, as individuals may be unaware that such biometric processing is taking place.

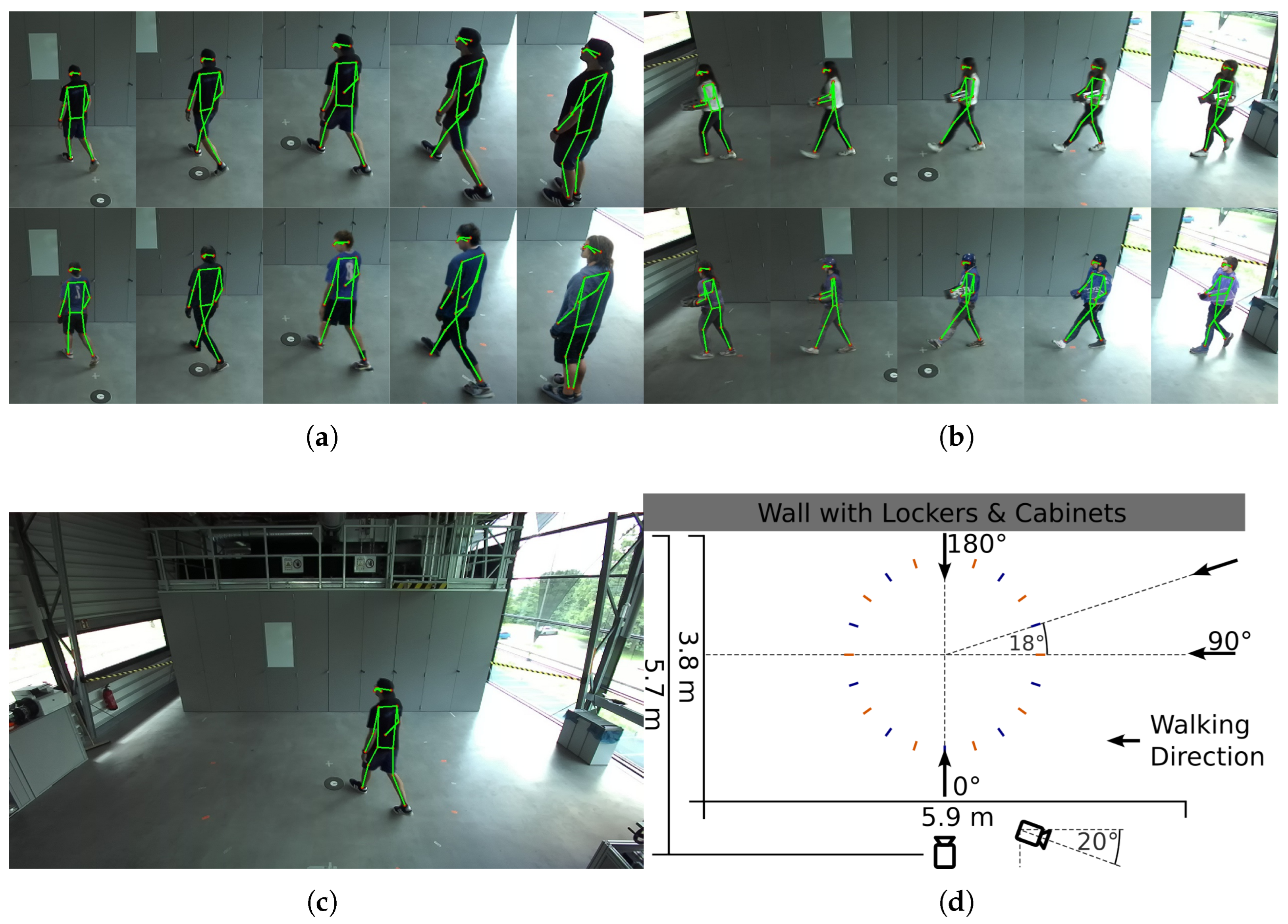

However, studying the privacy risks of gait requires datasets that provide both full visual identity and gait-relevant motion cues, which existing resources only partially satisfy. Public gait datasets such as CASIA [17] and GRIDDS [18] offer large-scale pose- or silhouette-based benchmarks, yet not all include original, unaltered RGB sequences. Their viewpoints are restricted to canonical side views, whereas real-world surveillance employs oblique or top-down CCTV viewpoints. Datasets that adopt a CCTV perspective, such as CCPG [19], often contain pre-obfuscated faces, preventing an assessment of anonymization effects on full-body appearance. In addition, several datasets require formal licensing agreements that can conflict with industrial compliance policies, and some older collections no longer process access requests. These constraints limit their suitability for systematically analyzing how anonymization alters biometric information.

Further, privacy regulations, such as the European GDPR [20], the Canadian PIPEDA [21], and the South Korean PIPA [22], demand safeguards when processing such data. Anonymization offers a promising way to enable legally compliant monitoring, since it can reduce or remove personal identifiers while still allowing data use. However, traditional methods (e.g., blurring, masking) often degrade information that may be required for downstream tasks [23], while still leaving certain biometric cues intact [24]. For example, masking can obscure carried objects, and face blurring does not alter gait, leaving identity partly exposed. In regulated industrial environments, an anonymization method is therefore considered insufficient whenever identity linkage through gait remains possible.

Traditional anonymization approaches, such as blurring and pixelation, are widely applied for their simplicity but are often ineffective for specific tasks. Beyond information loss, they have been shown to be reversible [25,26,27]. Anonymization strategies such as [28] remove individuals entirely from frames and supply only their pose. Although this provides privacy guarantees, the resulting heavily altered images have limited applicability for downstream analysis.

In contrast, realistic anonymization methods generate synthetic replacements that aim to be natural-looking and can even be context-preserving, allowing continued use of the data. These methods are more sophisticated but still limited, with only a few focusing on realistic full-body anonymization, such as the work of Brkic et al. [29] and Hukkelås et al. with DeepPrivacy2 [5]. More recently, the diffusion-based approach of Zwick et al., FADM (Full Body Anonymization using Diffusion Models) [30], was proposed, enabling context-aware anonymization that better preserves downstream utility. An example of realistic full-body methods is shown in Figure 1. As these methods preserve both pose and global scene consistency, they form the most relevant test case for evaluating residual biometric leakage beyond visual appearance.

While anonymization is essential for privacy compliance, its integration into vision pipelines is not without consequences. Several studies have demonstrated performance drops in core computer vision tasks when anonymized data are used. In an additional study, Hukkelås et al. [31] reported a sharp decline in object detection, segmentation, and pose estimation when applying both traditional and realistic anonymization. Traditional methods such as full-body blurring or pixelation caused severe degradation. Blurred pedestrians were almost entirely undetectable in instance segmentation tasks. The realistic approach preserved performance more effectively but still introduced a consistent accuracy loss across all tasks.

Triess et al. [32] show that full-body pixelation renders individuals essentially invisible to pose estimation and action recognition systems. Refs. [33,34] further confirm this trend, finding that anonymization reduces model accuracy, though the extent depends on the method and task. These results emphasize that anonymization not only protects privacy but also reshapes the data in ways that impact downstream learning.

Earlier work by the author [35] expanded this by analyzing how anonymization altered the learning process of models compared to training on unaltered data. Further, the influence of anonymization on classes co-occurring with anonymized individuals was investigated. The results demonstrated that image-level modifications through anonymization propagated into training, leading to measurable shifts in feature representations and inference accuracy. This provided a systematic methodology to evaluate not only performance losses but also the mechanisms by which anonymization influenced the model.

Building on this foundation, the present study investigates whether realistic full-body anonymization can conceal identity beyond visual appearance. Specifically, it focuses on the effects of gait as a robust biometric marker. This focus is motivated by the growing dependence on monitoring in Industry 4.0, where operational safety and efficiency must be balanced with legal and ethical obligations to protect individual privacy. Similar requirements apply to healthcare, where long-term observation of individuals is necessary, but personal identity must remain protected.

To date, no study has systematically tested whether realistic anonymization removes gait-based identity cues, despite preserving the original pose. The authors of DeepPrivacy2 explicitly acknowledge this risk, noting that their DensePose-based anonymization might retain pose information, which has the potential for gait-based identification. Triess et al. [32] showed that DeepPrivacy2 maintained pose recognition and action recognition capabilities, but they did not evaluate its impact on person identification via gait. Recent work such as GaitGuard [36] reflects a growing awareness of gait anonymization needs, but the presented method mostly keeps the visual appearance of the individual.

This research therefore aims to close this gap. We extend the existing evaluation methodology of [35] to address gait-related questions raised by Hukkelås et al. [5]. We further extend the findings of Triess et al. [32] to additional downstream tasks. Specifically, the study assesses whether realistic full-body anonymization, while obscuring visual identity, also alters gait. By comparing the performance of algorithms trained on original versus anonymized data, the study evaluates whether anonymization effectively disrupts gait-based identification while retaining utility for intended applications.

The novelty of this study lies in systematically evaluating whether realistic, pose-preserving anonymization disrupts identity-relevant gait characteristics. The analysis quantifies keypoint shifts introduced by anonymization, examines their effect on downstream gait-based person identification, and tests whether anonymized sequences remain linkable to identities in unaltered recordings. As no established metric describes how much pose distortion is required to invalidate gait recognition, controlled experiments tailored to this question are essential. This work provides the first such systematic evaluation and applies it to realistic full-body anonymization.

3. Results

First, keypoint shifts between original and anonymized frames are measured to characterize pose distortions relevant for gait. Subsequently, pose-based gait recognition performance is reported for open-set and mixed-set configurations, comparing models trained on original versus anonymized poses under matched training conditions. The analyses focus on convergence behavior, domain transfer between original and anonymized data, and the influence of scene context such as object carrying and clothing changes on recognition accuracy.

3.1. Regarding Pose Differences Between Original and Anonymized Images

To quantify how anonymization affects pose estimation, keypoint positions of the original and anonymized images were compared. Table 2 summarizes the positional shifts per joint. The keypoint comparison reveals that anonymization alters poses non-uniformly. Head-related points (nose, eyes, ears) remained relatively stable with mean shifts below 5 pixels, while the largest displacements occurred at extremities such as elbows, wrists, knees, and ankles (up to ≈13 pixels). The displacement increased with distance from the body center, indicating that minor central inaccuracies propagated along the limbs.
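As an illustration of the kind of per-joint comparison described above, the following minimal sketch computes the mean Euclidean displacement of each joint between frame-aligned original and anonymized keypoints. The joint names, the dictionary-based data layout, and the function name `mean_joint_shift` are assumptions made for this example, and the coordinates are toy values, not measurements from the study.

```python
import math

# Subset of joint names used for illustration; the actual keypoint layout is an assumption.
JOINTS = ["nose", "left_wrist", "right_ankle"]


def mean_joint_shift(original, anonymized):
    """Return the mean Euclidean displacement (in pixels) per joint.

    original, anonymized: lists of frames, each frame a dict mapping
    joint name -> (x, y). Frames are assumed to be aligned one-to-one.
    """
    if len(original) != len(anonymized):
        raise ValueError("sequences must have the same number of frames")
    totals = {joint: 0.0 for joint in JOINTS}
    counts = {joint: 0 for joint in JOINTS}
    for frame_o, frame_a in zip(original, anonymized):
        for joint in JOINTS:
            # Skip joints that the pose estimator missed in either frame.
            if joint in frame_o and joint in frame_a:
                (xo, yo), (xa, ya) = frame_o[joint], frame_a[joint]
                totals[joint] += math.hypot(xa - xo, ya - yo)
                counts[joint] += 1
    return {j: totals[j] / counts[j] for j in JOINTS if counts[j] > 0}


# Toy keypoints (hypothetical values chosen for easy arithmetic).
orig = [
    {"nose": (100.0, 50.0), "left_wrist": (80.0, 150.0), "right_ankle": (110.0, 300.0)},
    {"nose": (102.0, 50.0), "left_wrist": (84.0, 152.0), "right_ankle": (118.0, 300.0)},
]
anon = [
    {"nose": (103.0, 54.0), "left_wrist": (86.0, 158.0), "right_ankle": (110.0, 313.0)},
    {"nose": (102.0, 53.0), "left_wrist": (84.0, 162.0), "right_ankle": (123.0, 312.0)},
]

shifts = mean_joint_shift(orig, anon)
for joint, value in shifts.items():
    print(f"{joint}: {value:.1f} px")
```

In this toy input the head joint moves least and the ankle most, which mirrors the qualitative pattern reported above without reproducing any actual table values.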

Right-side joints underwent slightly larger deviations than their left counterparts, caused by the camera perspective and walking direction, which mainly exposed the left side and partially occluded the right body side. Therefore, pose estimation on anonymized frames showed reduced stability for right-side joints, amplifying errors for body parts farther from the camera. This view-dependent effect demonstrates that anonymization interacts with viewpoint geometry.

Lower-body joints (hips, knees, ankles) experienced strong shifts of five to nine pixels, directly affecting stride- and rhythm-related geometry. The cumulative displacement from hips to ankles suggests that anonymization mainly perturbs limb geometry and motion cues, while upper-body alignment remains stable. As gait recognition depends on precise joint movements over time and space, these distortions reduce the consistency of pose sequences and can change the features the model learns to recognize gait patterns.

When models were trained and evaluated across different data types (original and anonymized), variations in joint positions likely hindered model adaptation, leading to uneven performance and lower accuracy across datasets. Overall, anonymization introduced measurable geometric distortions, most prominent at motion-critical joints, with possible impacts on both pose stability and gait-based model generalization.

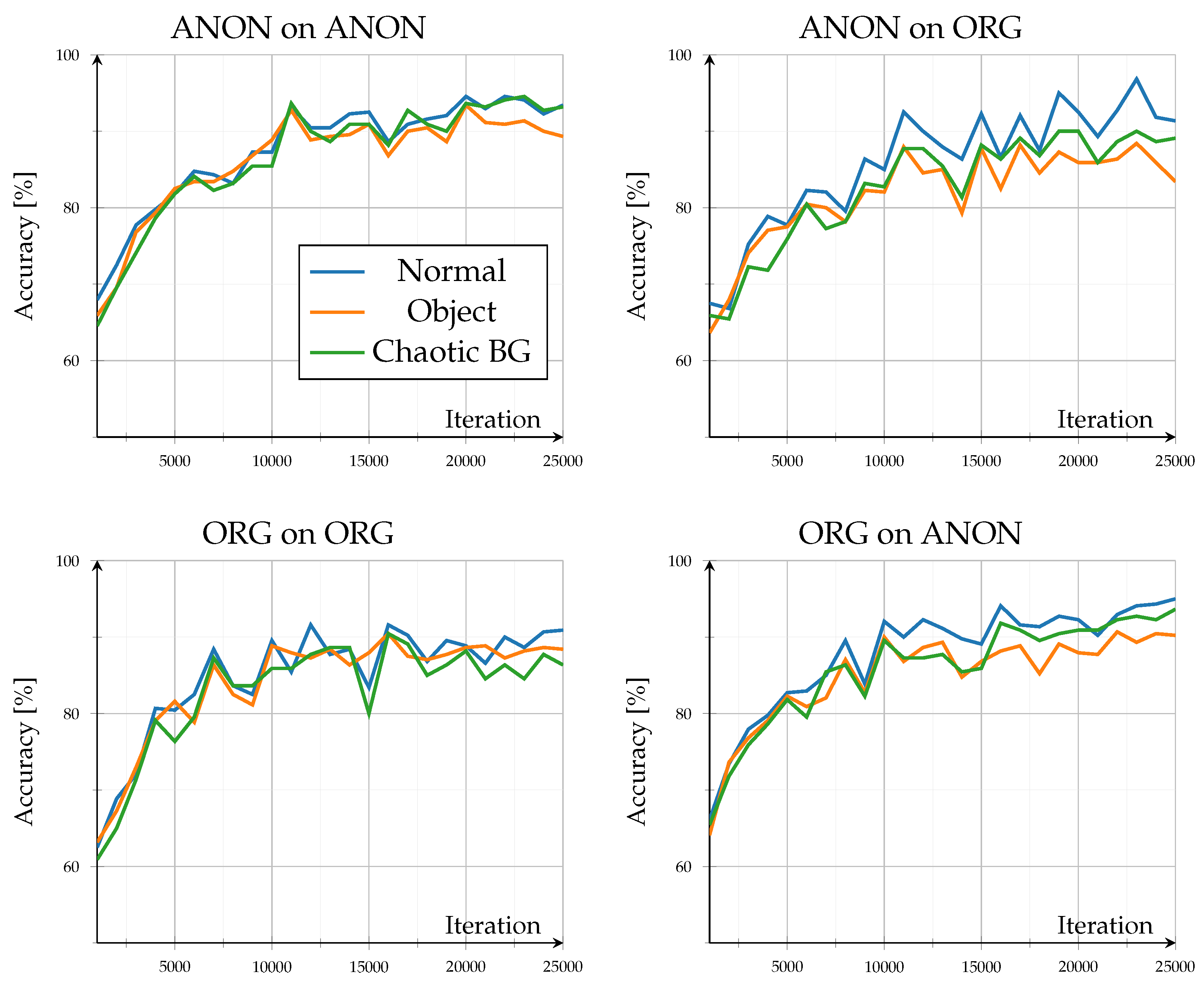

3.2. Open Set

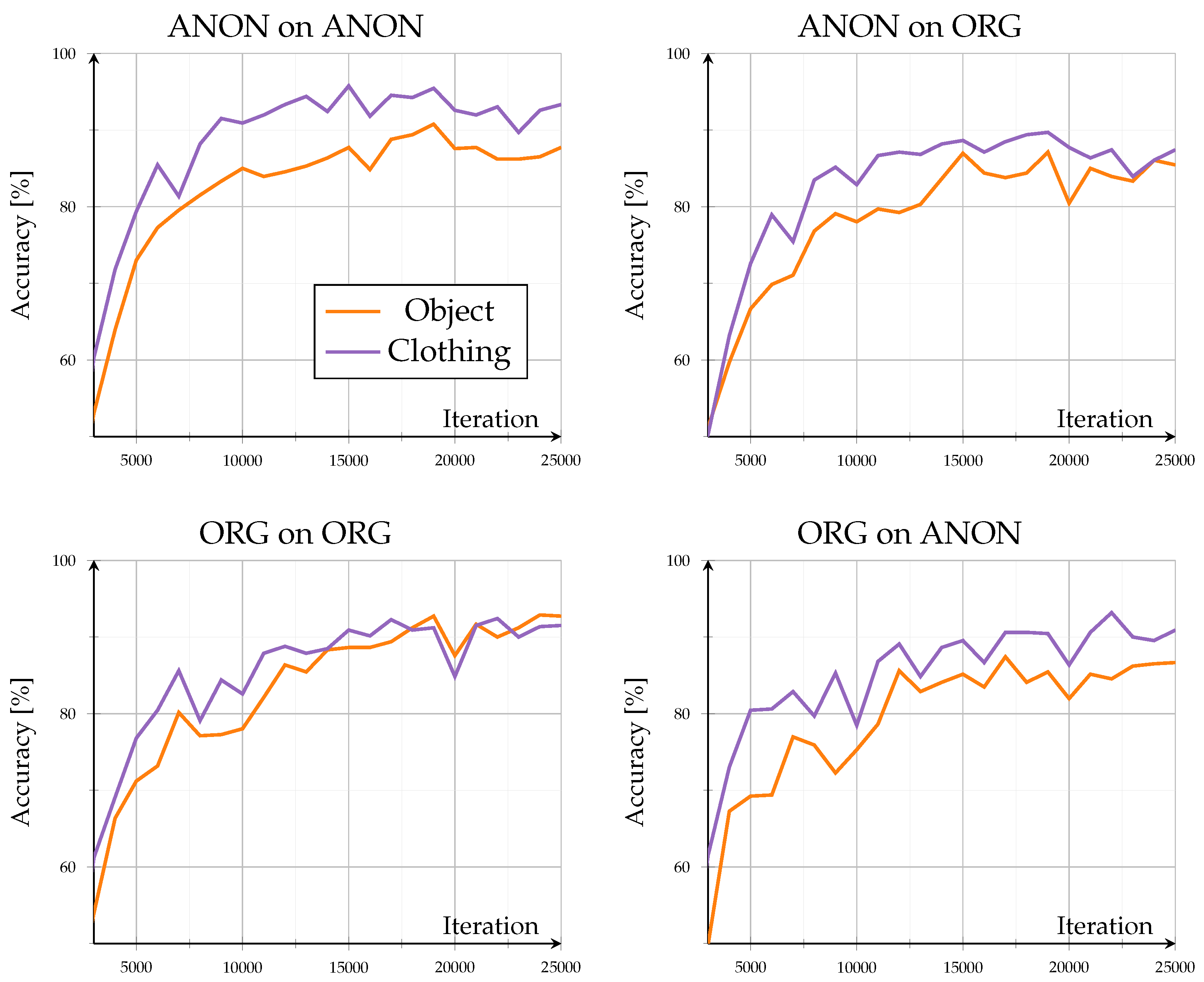

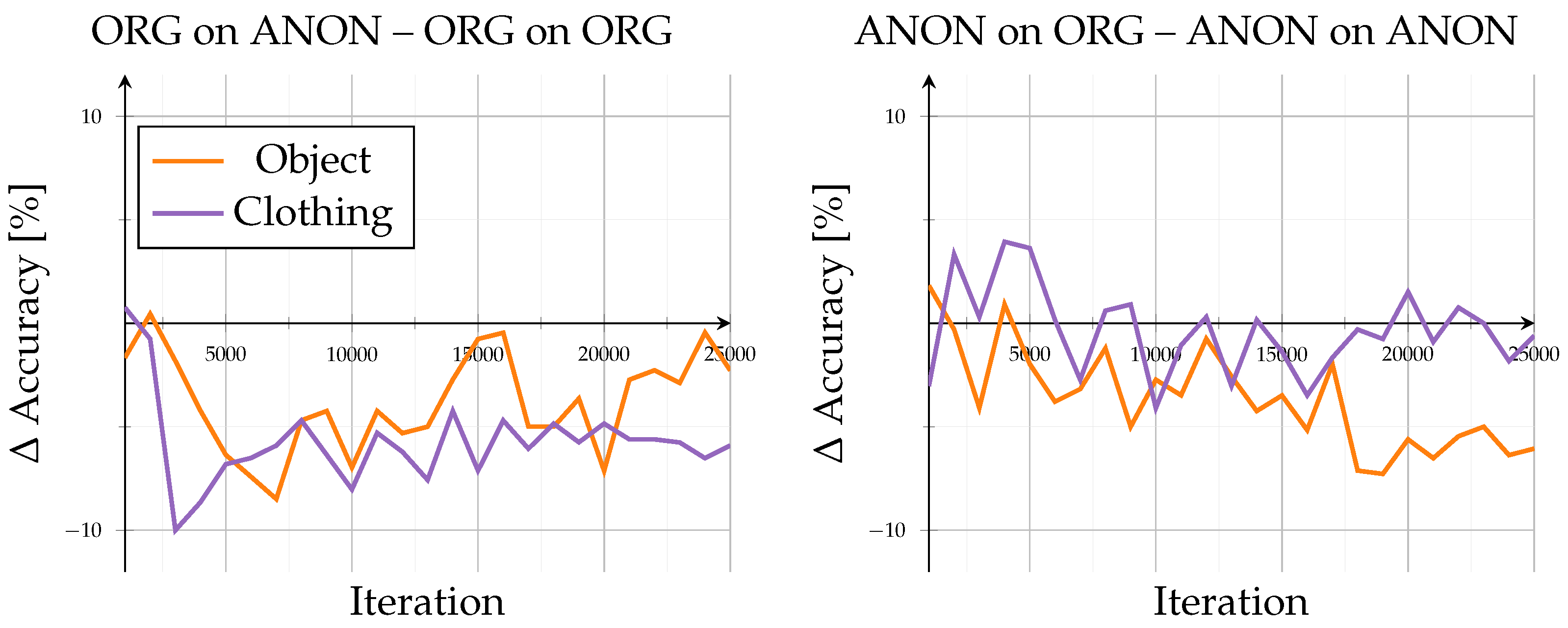

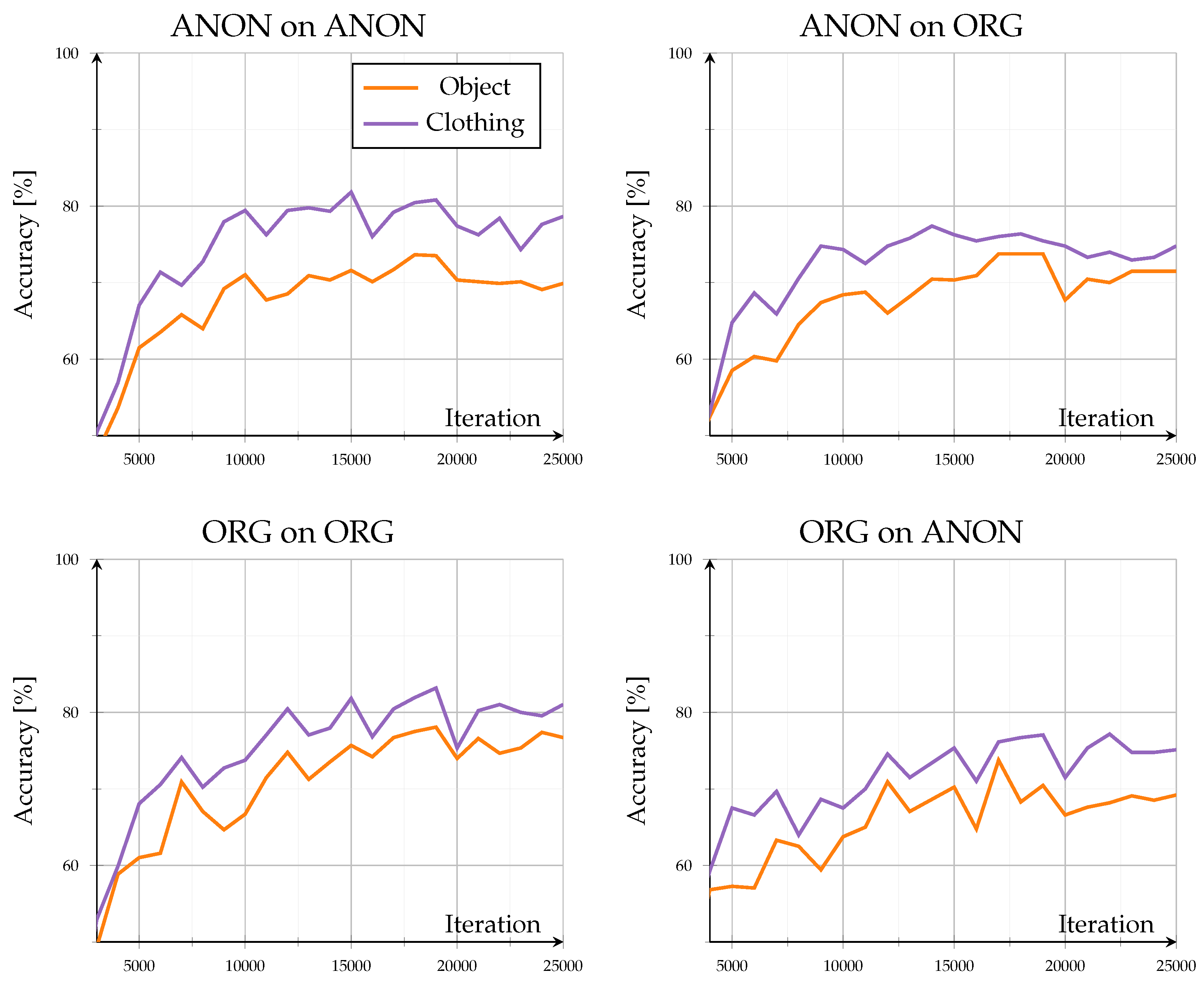

The open-set evaluation investigated how models generalized to unseen identities. Across all configurations, performance saturated beyond roughly 20,000 iterations, demonstrating stable convergence without overfitting (see Figure 3). Accuracy on unseen data remained high, ranging between 83.4% and 95% after 25,000 iterations, consistent with the results reported by the authors of GPGait++ for comparable datasets. Scene-specific variations stayed within ±2% throughout training, indicating that background complexity and object interaction exerted only minor influence in both data domains. Overall trends showed minimal differences: training and testing on the same data type (Org on ORG, ANON on ANON) led to lower inter-scene variance, whereas cross-type evaluations (Org on Anon, ANON on ORG) exhibited a slightly higher variance.

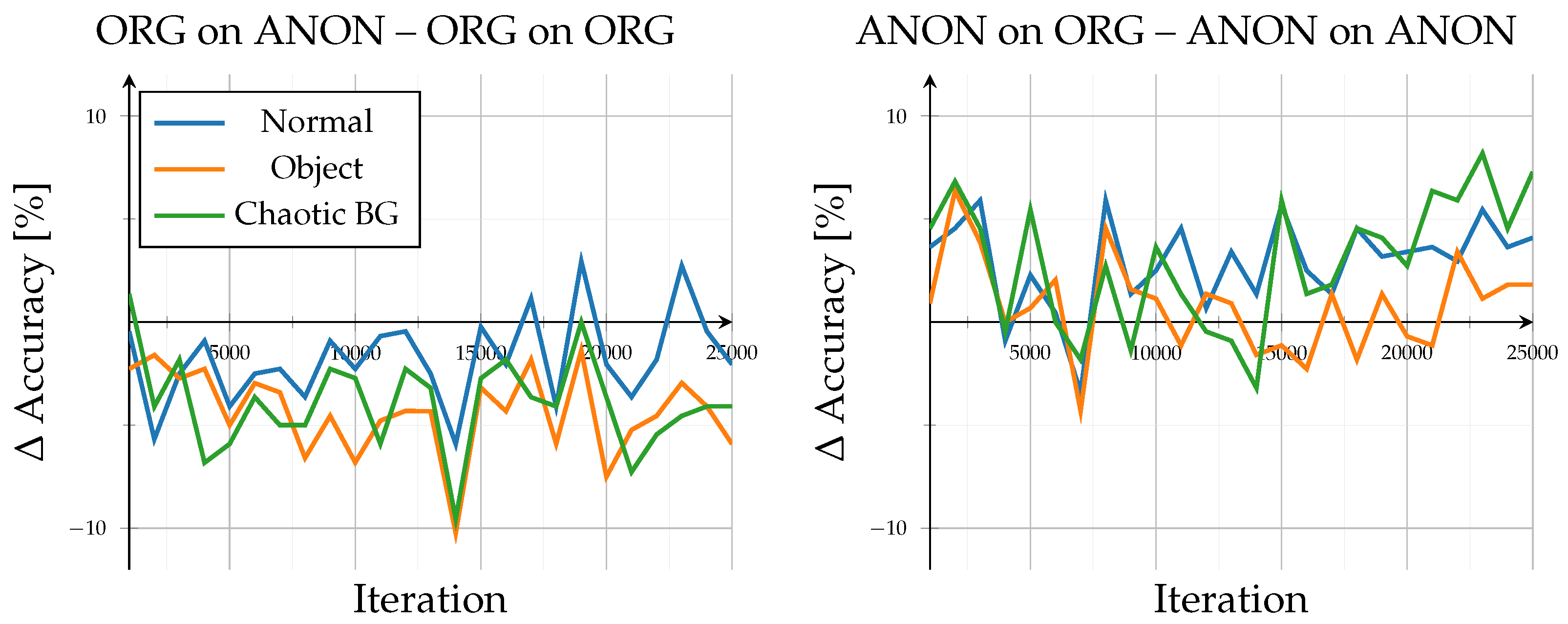

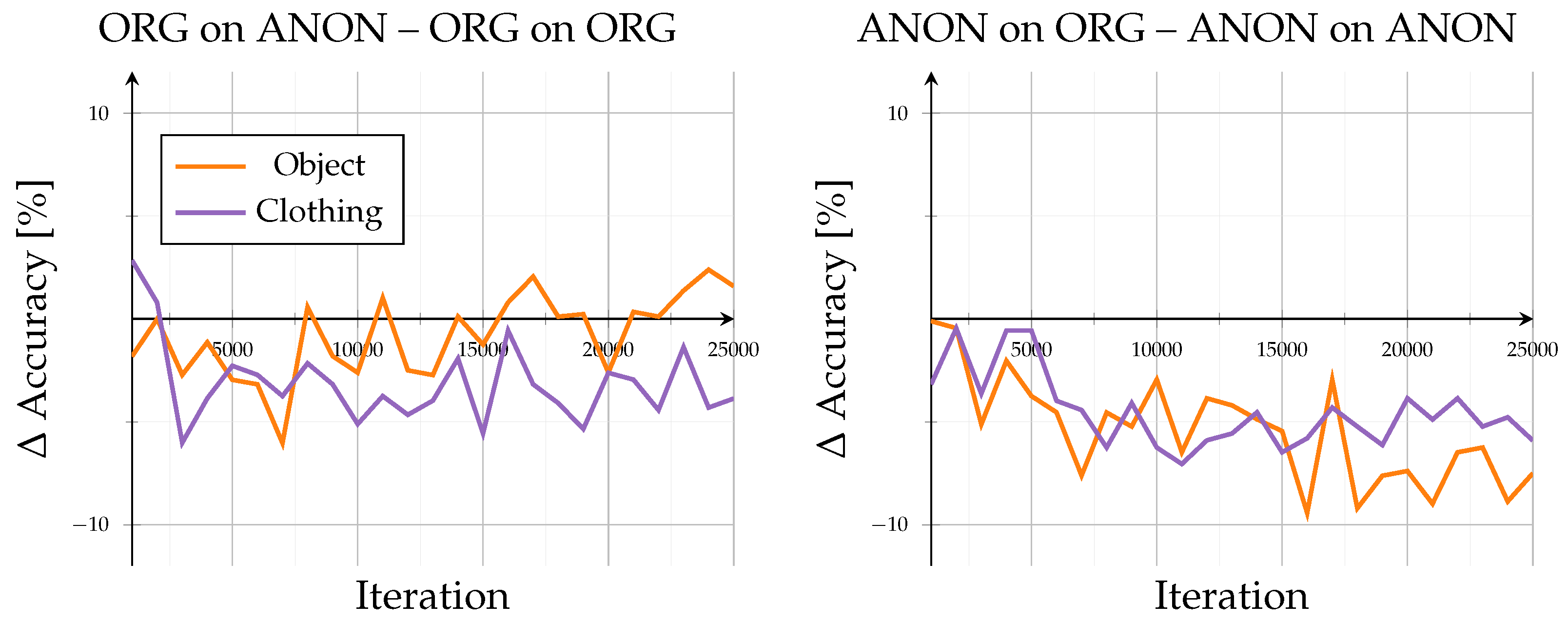

A detailed comparison of evaluation data exchange, as in Figure 4, provides further insight into model generalization. When switching the evaluation data type (Org on Anon–Org on ORG; ANON on ORG–ANON on ANON), the model trained on original data exhibited a consistent performance decline, with accuracy reductions between −2% and −5.9% at 25,000 iterations and drops of up to −10% around iteration 14,000. In contrast, the model trained on anonymized data performed better when evaluated on original data, showing accuracy gains of approximately 1.8% to 7.3%. However, this configuration displayed stronger fluctuations, alternating between positive and negative changes, indicating less stable adaptation behavior across data domains.
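The differences discussed above amount to a per-checkpoint subtraction: accuracy on the switched evaluation data type minus accuracy on the matching data type, for the same trained model. The sketch below shows this arithmetic under the assumption that accuracies are stored per iteration in dictionaries; the function name `cross_domain_delta` and all numbers are hypothetical and serve only to demonstrate the calculation.

```python
def cross_domain_delta(same_domain, cross_domain):
    """Accuracy change in percentage points when only the evaluation data type is switched.

    same_domain, cross_domain: dicts mapping training iteration -> accuracy in percent,
    both produced by the same trained model.
    """
    if same_domain.keys() != cross_domain.keys():
        raise ValueError("both curves must cover the same checkpoints")
    return {
        iteration: round(cross_domain[iteration] - same_domain[iteration], 2)
        for iteration in sorted(same_domain)
    }


# Hypothetical accuracy curves, not results from the study.
org_on_org = {14000: 88.0, 20000: 91.0, 25000: 92.0}
org_on_anon = {14000: 78.0, 20000: 87.5, 25000: 88.0}

delta = cross_domain_delta(org_on_org, org_on_anon)
worst_iteration = min(delta, key=delta.get)  # checkpoint with the largest drop

print(delta)
print("largest drop at iteration", worst_iteration)
```

A negative value means the model lost accuracy on the switched domain, and a positive value means it gained. Reporting both the final-checkpoint value and the largest excursion separates the converged behavior from transient fluctuations during training.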

Despite cross-domain discrepancies, accuracy consistently exceeded 80%, indicating that anonymization preserved essential gait structures. Overall, the results confirm the high robustness of pose-based gait recognition under realistic anonymization, showing moderate but asymmetric domain transfer effects and minimal sensitivity to environmental context.

As this open-set configuration assessed unseen identities under known scene conditions, the consistently high accuracies demonstrate strong cross-subject generalization. The model captured person-specific motion and pose characteristics rather than memorizing training identities, indicating that both original and anonymized pose representations preserved identity-discriminative information. The minimal difference between Org on ORG and ANON on ANON configurations further confirms that anonymization did not alter the fundamental gait dynamics required for a reliable cross-identity recognition.

3.3. Mixed-Set

3.3.1. Seen-Set (Known Identities Under New Situations)

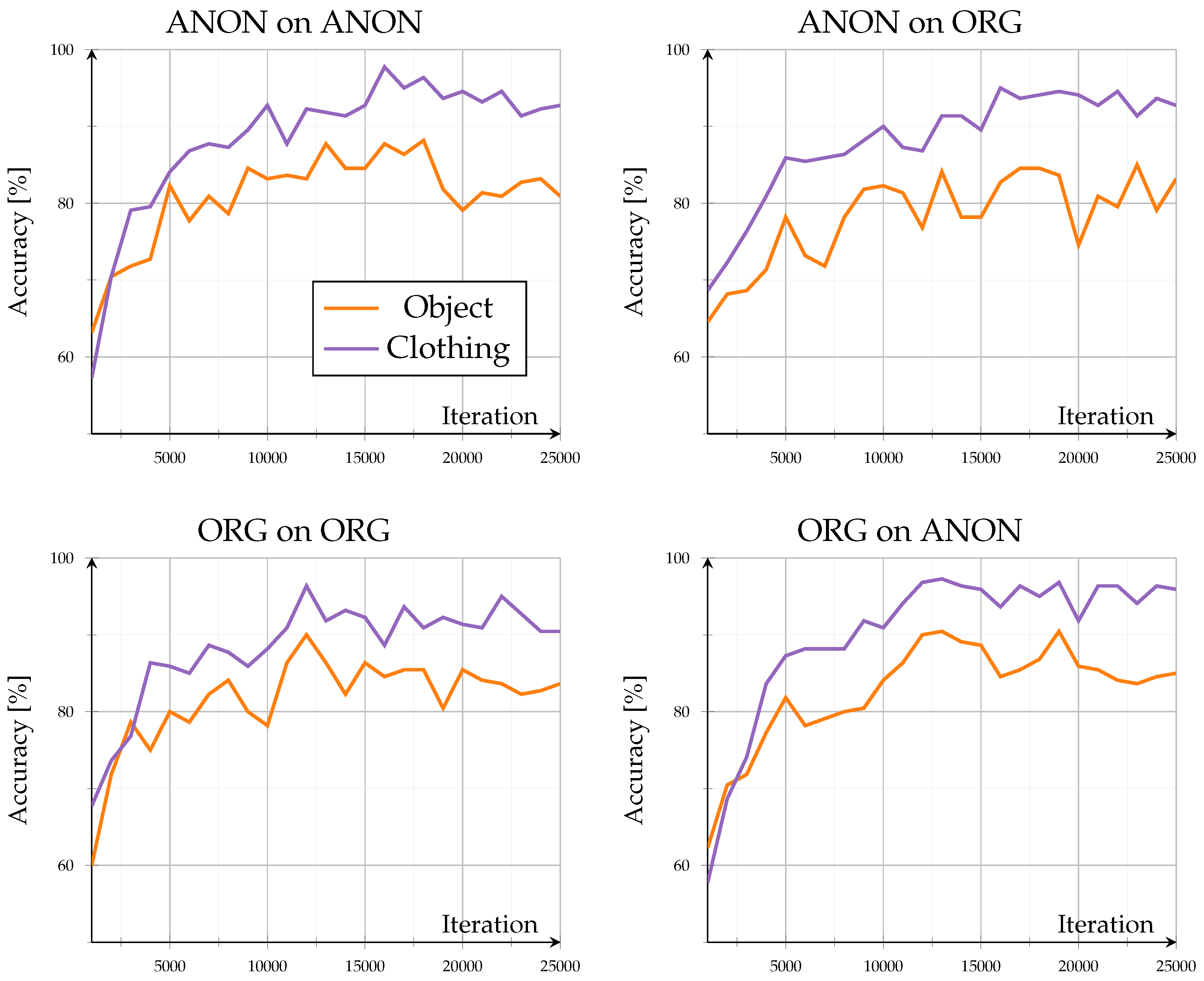

Evaluation on known identities under new situations revealed how the model adapted to contextual variations while retaining previously learned identity representations. Across all configurations, convergence stabilized beyond approximately 20,000 iterations, with accuracies between 85.5% and 93.3% after 25,000 iterations (see Figure 5). The accuracy curves showed progression without notable oscillations, confirming stable learning behavior on familiar identities.

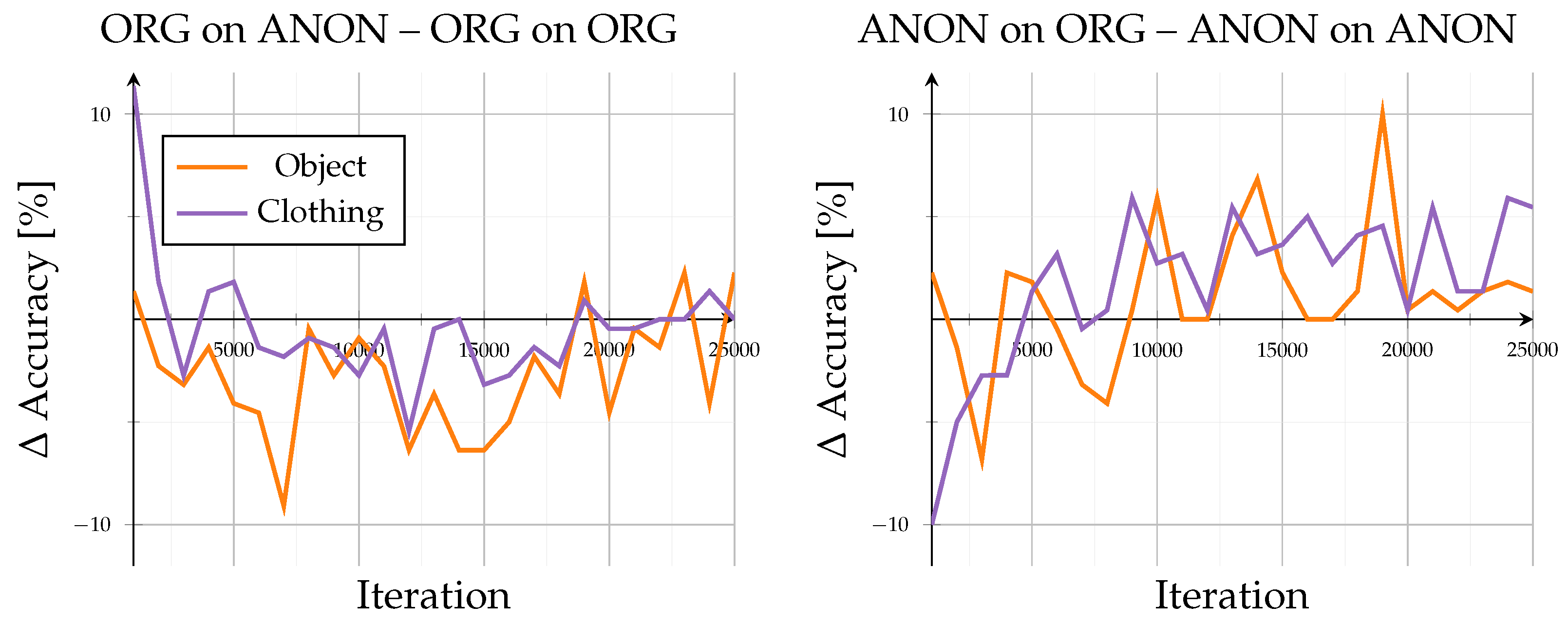

Models trained and evaluated within the same domain (Org on ORG, ANON on ANON) achieved comparable accuracy, with only minor influence from scene variation. In contrast, cross-domain configurations (Org on Anon, ANON on ORG) exhibited reduced performance when switching between data types (compare Figure 6): Org on Anon resulted in moderate declines of −2.3 to −5.9% at 25,000 iterations, while ANON on ORG showed stronger fluctuations with drops between −0.6 and −6.1%.

Scene variation only had a minor influence (≤2%). Clothing-change scenes consistently outperformed object-interaction scenes by 4 to 6%, indicating that pose alterations caused by carrying objects impaired clarity more than gait alteration through changed clothing. The overall stability suggests that for known identities, anonymization mainly affects appearance rather than the structural gait representations.

3.3.2. Unseen-Set (New Identities Under New Situations)

Evaluation on unseen identities under new situations assessed the model’s ability to generalize to novel subjects and contexts. All configurations reached high performance between 80.9 and 95.9% after 25,000 iterations (see Figure 7). Training saturated around 20,000 iterations, confirming stable convergence without overfitting. Scene-specific deviations remained below ±2%, indicating strong robustness to intra-scene variations, comparable to that observed for known identities.

Models trained and evaluated on identical data domains (Org on ORG, ANON on ANON) showed smooth progression from approximately 70% to 95%, flattening after about 20,000 iterations with only ±1% local fluctuations. The absence of sharp oscillations or curve crossovers indicated low variance and stable convergence within same-domain training. In contrast, cross-domain evaluations revealed distinct asymmetry (compare Figure 8): Org on Anon led to decreases between −2 and −5.9%, reaching up to −10% around 14,000 iterations, whereas ANON on ORG produced moderate positive shifts (1.8 to 7.3%) accompanied by increased oscillations and minor drops (−1 to −2%), showing higher instability than the Org-trained configuration.

The observed asymmetry suggests that training on anonymized data introduces a greater diversity in features, enhancing robustness toward real data but increasing variance. Accuracies above 80% across all conditions confirm that anonymization preserves identity-discriminative motion patterns. As evaluation occurred on previously unseen scenes, the results demonstrate strong cross-scene generalization, showing that the models maintained stable gait representations despite environmental variation, which affected performance less than the underlying gait structure.

3.3.3. Combination of Seen and Unseen Set (New Situations, Known and Unknown Identities)

Evaluation on the mixed configuration of seen and unseen identities under new situations examined the joint influence of identity and scene novelty. As in the previous experiments, convergence remained stable beyond approximately 20,000 iterations without signs of divergence (see Figure 9). Accuracies ranged between 69.2% and 81% after 25,000 iterations. Although evaluated on the same scenes as the seen and unseen subsets, the mixed setup yielded notably lower results, indicating a compounding effect in which unfamiliar identities and altered scene conditions jointly reduced recognition stability. The decline suggests a non-linear interaction between scene and identity novelty, challenging the model’s capacity to maintain consistent feature representations across diverse conditions.

Scene-related changes were most evident in object-interaction sequences (±6%), showing that carrying or manipulating objects increased pose variability and occlusion effects, thereby reducing stability. Clothing-change scenes remained comparatively consistent, as appearance variations influenced motion features less strongly. Domain-matched configurations (Org on ORG, ANON on ANON) yielded the highest performance, while cross-domain setups amplified oscillations due to combined domain and scene shifts. Differential analyses revealed accuracy drops under cross-evaluation (compare Figure 10): Org on Anon decreased by −3 to −6%, occasionally reaching −7%, with final iteration values ranging from −3.9 to 1.6%; ANON on ORG exhibited stronger declines between −5.9 and −7.5% at 25,000 iterations, with negative peaks up to −9.4%.

Compared to the open-set results, models trained on anonymized data showed higher resilience, suggesting that exposure to anonymized variability helps reduce environmental bias. The results indicate that scene variation, rather than anonymization, is the main factor limiting cross-scene generalization. Despite lower overall accuracies, all configurations stayed above roughly 70% accuracy, showing that key gait patterns remain preserved even in unseen environments.

3.3.4. Combined Interpretation of the Mixed-Set

The combined interpretation summarizes overall model behavior across all mixed-set configurations, linking training stability, scene sensitivity, and domain transfer effects.

Training Stability: Across all settings, convergence was reached at approximately 20,000 iterations, confirming stable training and the absence of overfitting.

Performance per Configuration: The evaluation for IDs seen in training showed stable recognition of known identities under new situations with minimal impact from anonymization. The unseen configuration confirmed strong cross-subject generalization in new environments, indicating that gait-discriminative structures remained largely preserved. For the mixed configuration, combining known and unknown identities in unseen scenes proved most challenging, with accuracies between 69% and 81%, demonstrating that scene and identity novelty together reduced recognition stability.

Scene and Domain Influence: Scene changes with object interaction caused the strongest accuracy decreases, as carrying objects modified poses and introduced occlusions. Clothing-change scenes remained more stable, since appearance variation had less effect on motion features. Models trained and evaluated within the same domain (Org on ORG, ANON on ANON) showed higher stability, while cross-domain setups exhibited amplified oscillations due to domain shifts. Differential analyses revealed asymmetric transfer behavior: Org on Anon decreased by −3 to −6%, whereas ANON on ORG dropped by −5.9 to −7.5%, indicating that anonymized training led to slightly higher loss when tested on original data.

General Interpretation: Compared to the open-set results, models trained on anonymized data exhibited higher stability, indicating that increased variability in anonymized training samples enhanced adaptation to new environments. Scene variation, rather than anonymization, remained the primary factor limiting generalization across different conditions. Despite lower overall accuracies, all models achieved accuracy scores above 70%, confirming that core gait characteristics were preserved even when both identity and environment differed from the training data.
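The evaluation configurations discussed in this section differ along two axes: whether an identity was seen during training and whether a scene was seen during training. The following sketch shows one way such a partition could be expressed. The identity labels, scene names, subset labels, and the function `assign_subset` are hypothetical, and treating known identities in known scenes as training data is an assumption of this example rather than a description of the study's protocol.

```python
def assign_subset(identity, scene, train_ids, train_scenes):
    """Map one recorded sequence to an evaluation subset.

    known identity + known scene  -> "train"        (assumed to be training data)
    new identity   + known scene  -> "open_set"     (unseen identities, known scenes)
    known identity + new scene    -> "mixed_seen"   (known identities, new situations)
    new identity   + new scene    -> "mixed_unseen" (new identities, new situations)
    """
    known_id = identity in train_ids
    known_scene = scene in train_scenes
    if known_scene:
        return "train" if known_id else "open_set"
    return "mixed_seen" if known_id else "mixed_unseen"


# Hypothetical identities and scene names.
train_ids = {"P01", "P02"}
train_scenes = {"plain_walk"}
sequences = [
    ("P01", "plain_walk"),
    ("P03", "plain_walk"),
    ("P01", "carry_box"),
    ("P03", "carry_box"),
    ("P02", "coat"),
]

subsets = {}
for identity, scene in sequences:
    label = assign_subset(identity, scene, train_ids, train_scenes)
    subsets.setdefault(label, []).append((identity, scene))

# The combined mixed configuration is the union of the seen and unseen subsets.
mixed_combined = subsets.get("mixed_seen", []) + subsets.get("mixed_unseen", [])
print(subsets)
```

Keeping the two axes explicit makes it easier to see why the combined configuration is the hardest: it is the only one in which a probe may differ from the training data in both identity and scene.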

4. Discussion

The conducted experiments systematically assessed the influence of realistic full-body anonymization on pose-based gait recognition. Accuracies above 80% in the open- and mixed-set experiments demonstrated that essential gait-discriminative structures remained preserved despite anonymization. The following sections discuss these results regarding generalization behavior, domain transfer asymmetries, and environmental context.

4.1. Stability and Retention of Core Gait Patterns

The keypoint analysis showed that the observed distortions were predominantly systematic rather than stochastic, with consistent offsets across sequences and subjects and directionally stable displacements that increased with kinematic distance from the torso. As a result, the temporal structure of joint trajectories remained coherent despite anonymization, enabling gait-based models to exploit stable motion patterns.
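One possible way to make the distinction between systematic and stochastic distortion concrete is to split the displacement vectors of a joint into their mean offset and the scatter around that offset. The sketch below is an illustration under that assumption and is not the analysis used in the study; the function name `offset_and_scatter` and the toy vectors are hypothetical.

```python
import math


def offset_and_scatter(displacements):
    """Split 2D displacement vectors of one joint into offset and scatter.

    displacements: list of (dx, dy), anonymized minus original keypoint position.
    Returns (offset_norm, scatter): the length of the mean displacement vector and
    the root-mean-square distance of the displacements from that mean.
    """
    n = len(displacements)
    if n == 0:
        raise ValueError("at least one displacement is required")
    mean_dx = sum(dx for dx, _ in displacements) / n
    mean_dy = sum(dy for _, dy in displacements) / n
    offset_norm = math.hypot(mean_dx, mean_dy)
    scatter = math.sqrt(
        sum((dx - mean_dx) ** 2 + (dy - mean_dy) ** 2 for dx, dy in displacements) / n
    )
    return offset_norm, scatter


# Toy cases: a constant shift versus zero-mean jitter of the same magnitude.
constant_shift = [(3.0, 4.0)] * 4
zero_mean_jitter = [(5.0, 0.0), (-5.0, 0.0), (0.0, 5.0), (0.0, -5.0)]

systematic = offset_and_scatter(constant_shift)
stochastic = offset_and_scatter(zero_mean_jitter)
print("constant shift:", systematic)
print("zero-mean jitter:", stochastic)
```

Both toy cases have a displacement magnitude of 5 pixels in every frame, yet only the first has a non-zero offset with no scatter. A distortion of the first kind moves a trajectory as a whole and leaves its temporal shape intact, which is consistent with the argument that coherent joint trajectories survive anonymization.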

Despite lower absolute accuracies in the mixed configuration, all models maintained accuracy values of 70% or more (80 to 90%). This consistent baseline shows that anonymization does not remove the motion patterns over time that are essential for recognizing a person’s gait. This stability demonstrates that realistic anonymization preserves functional gait information necessary for recognition tasks. However, the persistence of high accuracy for known identities also indicates that identity-specific motion characteristics remain present, suggesting that such methods anonymize visual appearance but not motion identity through gait. This observation confirms the assumption made by the authors of DeepPrivacy2 that gait-related features are likely retained under anonymization based on human pose.

4.2. Cross-Identity and Cross-Scene Generalization

High accuracies in both the open-set and mixed-set unseen configurations verify strong cross-subject generalization even under anonymized conditions. The preserved performance indicates that identity-discriminative motion patterns remain intact when visual appearance is replaced by synthetic body representations. This also confirms the assumption of the DeepPrivacy2 authors that gait-related information remains present after anonymization. All configurations achieved high performance between 80% and 96%, aligning with the original GPGait++ benchmarks (reported mean accuracies of 83.5% on the CASIA-B dataset). The observed training behavior further confirms that anonymization does not disrupt structural pose consistency. Hence, gait recognition on anonymized data remains reliable as long as body-joint geometry and temporal coherence are preserved.

4.3. Domain Transfer and Asymmetry

Cross-domain evaluations revealed asymmetric behavior between original and anonymized data. Org on Anon configurations consistently decreased by approximately −3 to −6%, whereas ANON on ORG evaluations fluctuated more strongly, reaching up to 7% with increased variance. This asymmetry suggests that anonymized training introduces greater feature diversity, improving robustness toward real data but reducing stability. These findings correspond to observations for pure visual data by [5,33,35], who reported that augmented visual variability enhanced generalization at the expense of precision. Consequently, domain adaptation strategies or multi-domain fine-tuning may further mitigate this imbalance and strengthen cross-domain transfer.

4.4. Influence of Scene Context and Occlusion

Scene-related factors, particularly object-interaction sequences, produced the largest accuracy decrease. Carrying or manipulating objects modified local pose geometry and caused partial occlusions, which degraded recognition stability by up to ±6%. In contrast, clothing-change scenes remained comparatively stable, as they altered appearance while only slightly affecting motion dynamics. This is consistent with the findings of [43], which report that different clothing styles have only a limited effect on gait performance.

Our results indicate that environmental and occlusion effects, rather than anonymization, constitute the dominant limitations in cross-scene generalization. Since anonymized rendering preserves overall body topology, the observed degradation mainly originates from reduced joint visibility rather than generation artifacts.

4.5. Limitations

The scope of the dataset necessarily limits the extent to which the findings can be generalized. The study involved a restricted number of participants within a narrow age range, which precludes population-level conclusions. This design choice was deliberate, as the objective was a proof-of-principle analysis that isolates whether gait-based identity information persists under realistic full-body anonymization, rather than a comprehensive assessment across demographic groups.

Further constraints on generalization were introduced by the recording configuration. All sequences were captured from a single, fixed CCTV-like viewpoint, reflecting a common industrial surveillance setup but limiting insight into how the observed effects scale across heterogeneous camera geometries or oblique viewing angles. As a result, the reported findings should be interpreted as representative of similar surveillance configurations rather than arbitrary deployment scenarios.

In addition, the controlled nature of the recording environment reduced variability typically encountered in real-world industrial or healthcare settings. Factors such as diverse backgrounds, clothing styles, crowd interactions, and long-term behavioral changes were not fully represented. While this controlled setup enabled a focused analysis of anonymization-induced effects, it did not capture the full complexity of operational deployments.

Our analysis also assumed relatively stable sensing conditions. Pose estimation was performed on RGB data acquired under consistent lighting and motion conditions, and increased sensor noise, motion blur, or partial occlusions may interact with anonymization-induced distortions in ways not observed in the present experiments. Such effects could further influence pose stability and, consequently, gait-based recognition performance.

Finally, the evaluation was tied to a specific sensing and processing pipeline, including a single RGB camera, a particular pose estimation model, and one representative realistic anonymization approach. Although these components reflect widely used configurations, different sensors, pose extractors, or anonymization strategies may lead to quantitatively different outcomes.

5. Conclusions

This study investigated whether realistic full-body anonymization based on pose removed identity cues contained in human gait. Taken together, the findings demonstrate that realistic full-body anonymization suppresses appearance but preserves motion-based identity, revealing a fundamental privacy limitation previously unquantified. The results provide the first systematic evidence that pose-preserving anonymization does not break gait identity, addressing an open question left by earlier work on skeleton robustness and motion perturbations. This investigation also delivers the first quantitative analysis of anonymization-induced keypoint shifts and their direct impact on downstream gait recognition performance. By establishing a systematic evaluation framework across original and anonymized domains, the study closes a gap where no assessment protocol or distortion threshold previously existed.

The pose comparison revealed that anonymization introduced systematic but moderate geometric distortions, with small shifts at the head and larger displacements at joints located farther from the torso. Lower-body keypoints relevant for gait showed mean shifts of five to nine pixels, while overall body topology remained intact. These distortions reduced pose stability but did not alter the underlying motion patterns that enabled gait-based discrimination, indicating that appearance-level anonymization leaves the biometric signal intact.

Across all open-set and mixed-set configurations, pose-based gait recognition with GPGait++ maintained high accuracies. Models trained and evaluated within the same domain (Org on ORG, ANON on ANON) reached comparable performance, indicating that anonymized poses retained sufficient identity-discriminative information for reliable recognition. Cross-domain evaluations exposed an asymmetric domain transfer: Org on Anon consistently reduced accuracy, whereas ANON on ORG yielded moderate gains but with increased variance. Training on anonymized data therefore introduces greater feature diversity and improves robustness to real data, at the cost of higher instability.

Scene-related variation affected performance more strongly than anonymization itself. Object-interaction sequences, which induced occlusions and local pose perturbations, caused the largest accuracy drops, while clothing-change scenes remained comparatively robust, consistent with reports of limited influence of typical clothing shapes on walking mechanics. Overall, the results indicate that realistic full-body anonymization in its current form anonymizes appearance but not gait identity: core motion patterns and cross-identity discriminability are preserved. For privacy-critical applications, this implies that this anonymization alone is insufficient to neutralize gait as a biometric and must be complemented by gait-targeted obfuscation or domain-adaptation mechanisms.

6. Future Work

Future work on visual full-body anonymization should focus on mechanisms that explicitly target motion identity. Since performance degradation primarily arises from occlusions and reduced joint visibility, occlusion-aware pose refinement, 3D joint completion, or temporal keypoint smoothing may further stabilize anonymized pose sequences.
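As a minimal sketch of what temporal keypoint smoothing could look like, the following example applies a centered moving average to the trajectory of a single joint. The window size, the function name `smooth_trajectory`, and the toy trajectory are assumptions for illustration; other filters could be substituted, and whether smoothing helps in practice would need to be evaluated.

```python
def smooth_trajectory(points, window=3):
    """Centered moving average over a list of (x, y) positions of one joint.

    At the sequence boundaries the window is truncated to the available frames.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(points)):
        lo = max(0, i - half)
        hi = min(len(points), i + half + 1)
        xs = [p[0] for p in points[lo:hi]]
        ys = [p[1] for p in points[lo:hi]]
        smoothed.append((sum(xs) / len(xs), sum(ys) / len(ys)))
    return smoothed


# Toy ankle trajectory with frame-to-frame jitter in x (hypothetical values).
ankle = [(0.0, 0.0), (3.0, 0.0), (0.0, 0.0), (3.0, 0.0), (0.0, 0.0)]
smoothed = smooth_trajectory(ankle, window=3)
print(smoothed)
```

The jitter of 3 pixels between consecutive frames shrinks to at most 1 pixel after smoothing. A wider window suppresses more jitter but also flattens fast limb movements, so the window would have to be chosen relative to the frame rate and walking cadence.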

Extending the evaluation to heterogeneous camera placements, including wall-mounted and oblique viewpoints, provides an important direction for further work and allows clearer separation of viewpoint-related variability and anonymization-induced effects across realistic deployment scenarios.

Beyond viewpoint diversity, scaling the evaluation to larger and more diverse participant populations is essential to assess generalization across age ranges, body types, clothing styles, and long-term behavioral variability. Such extensions would enable a more comprehensive understanding of how anonymization-induced pose distortions interact with population-level gait diversity.

Progress also depends on dedicated anonymization-aware gait datasets that provide paired original–anonymized recordings, environment metadata, and standardized protocols for evaluating motion identity leakage.

Finally, integrating multimodal sensing and testing additional anonymization methods offer a promising direction for privacy-by-design monitoring solutions. Advancements in these areas will support anonymization strategies that preserve downstream utility while mitigating risks associated with gait-based identity leakage.