1. Introduction

Advances in radar detection technology have led to the widespread use of inverse synthetic aperture radar (ISAR) sparse imaging recovery based on compressed sensing theory. The development of compressed sensing theory, together with progress in solving algorithms [1] and research on regularization methods, has encouraged extensive study [2] of sparse signal processing in radar detection. The theoretical framework for transforming signals from the time domain to the frequency domain gave rise to the concept of sparse signal recovery. Despite numerous challenges, various solution algorithms have been developed. At the same time, sparse coding algorithms and the demands of radar signal processing have driven each other forward, resulting in a series of applications and innovations in the radar field. Against this backdrop, the development of the field is reviewed below.

Sparse signal recovery involves transforming time-domain signals into band-limited frequency-domain signals by means of transforms such as the Fourier transform and the cosine transform. The evolution of transform theory, the wavelet transform, and compressed sensing theory has enabled sparse recovery to go beyond the Nyquist sampling limit, recovering the original signal from fewer observations than the Nyquist rate requires. Sparse recovery is typically posed as the minimization of an objective function consisting of a recovery error term and a sparsity-inducing term. The sparsity-inducing term, also called the regularization term, enforces sparsity most directly through the L0 norm. However, the L0 norm is non-convex, and minimizing it is an NP-hard problem [3]. To address this, Mallat et al. [4] first applied the matching pursuit (MP) algorithm to sparse signal decomposition; the improved orthogonal matching pursuit (OMP) algorithm [5] was subsequently applied to this field, as were the iterative hard thresholding (IHT) algorithm [6] and the hard thresholding pursuit (HTP) algorithm [7]. On the other hand, to avoid the non-convex L0 optimization problem, Donoho et al. [8] replaced the L0 norm with the L1 norm and proved that, under suitable conditions, the minimum L0 norm solution and the minimum L1 norm solution are equivalent. The resulting convex relaxation with an L1 norm penalty is also known as the LASSO (least absolute shrinkage and selection operator) problem [9]. A series of algorithms has been developed to solve it, such as the least-angle regression (LARS) algorithm [10].
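The two formulations discussed above can be written compactly as follows. This is a generic sketch: the symbols s (observation vector), D (dictionary or measurement matrix), x (sparse coefficient vector), and λ (regularization weight) are notation assumed here for illustration and are not taken from the cited works.

```latex
% L0-regularized recovery (non-convex, NP-hard in general)
\min_{x} \; \| s - D x \|_2^2 + \lambda \| x \|_0 ,
\qquad \| x \|_0 = \#\{ i : x_i \neq 0 \}

% Convex L1 relaxation (LASSO)
\min_{x} \; \| s - D x \|_2^2 + \lambda \| x \|_1 ,
\qquad \| x \|_1 = \sum_i | x_i |
```

In both problems the first term is the recovery error and the second term induces sparsity; only the second problem is convex.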

The rapid progress of sparse coding algorithms and the growing need for sparse recovery in radar signal processing highlight the importance of using sparse coding to enhance radar imaging performance. Sparse coding helps to overcome challenges in radar signal processing, such as detection and anti-jamming performance, and thus to achieve high-quality imaging [11]. The motion of some targets can also give rise to sparse observations, for which some scholars have proposed solution algorithms [12]. With the development of new radar systems, frequency-domain sparse radar, bistatic radar, and other systems have appeared. Among them, the authors of [13] point out that, owing to the bistatic transceiver configuration and the need to switch beams constantly while observing the target, echo loss and sparse apertures easily occur during observation. Various algorithms, such as the ROMP algorithm, have been proposed to improve the resolution of inverse synthetic aperture radar images [14]. Reference [15] proposes a Bayesian bistatic ISAR algorithm for obtaining images at low signal-to-noise ratios. Qiu et al. [16] proposed a model that combines low-rank and sparse prior constraints for inverse synthetic aperture radar imaging to address the imaging problem under different sparse data patterns, and developed two reconstruction algorithms within the framework of the alternating direction method of multipliers. Zhang et al. [17] proposed a sparse ISAR autofocus imaging algorithm based on sparse Bayesian learning. In [18], the Cauchy–Newton algorithm was proposed to solve the weighted L1 norm-constrained optimization problem and thereby reconstruct the target image.

This study addresses sparse imaging recovery in inverse synthetic aperture radar by establishing a sparse aperture imaging model and proposing a sparse imaging recovery algorithm based on an improved Alternating Direction Method of Multipliers. The effectiveness of the algorithm is evaluated by analyzing the recovery results under varying noise levels and sparsity.

The contributions of this paper are summarized as follows:

The sparse imaging model of the inverse synthetic aperture radar is transformed into a constrained optimization problem.

An improved Alternating Direction Method of Multipliers is proposed, in which the control parameters are updated during the iterations to accelerate convergence.

2. Sparse Aperture Imaging Model

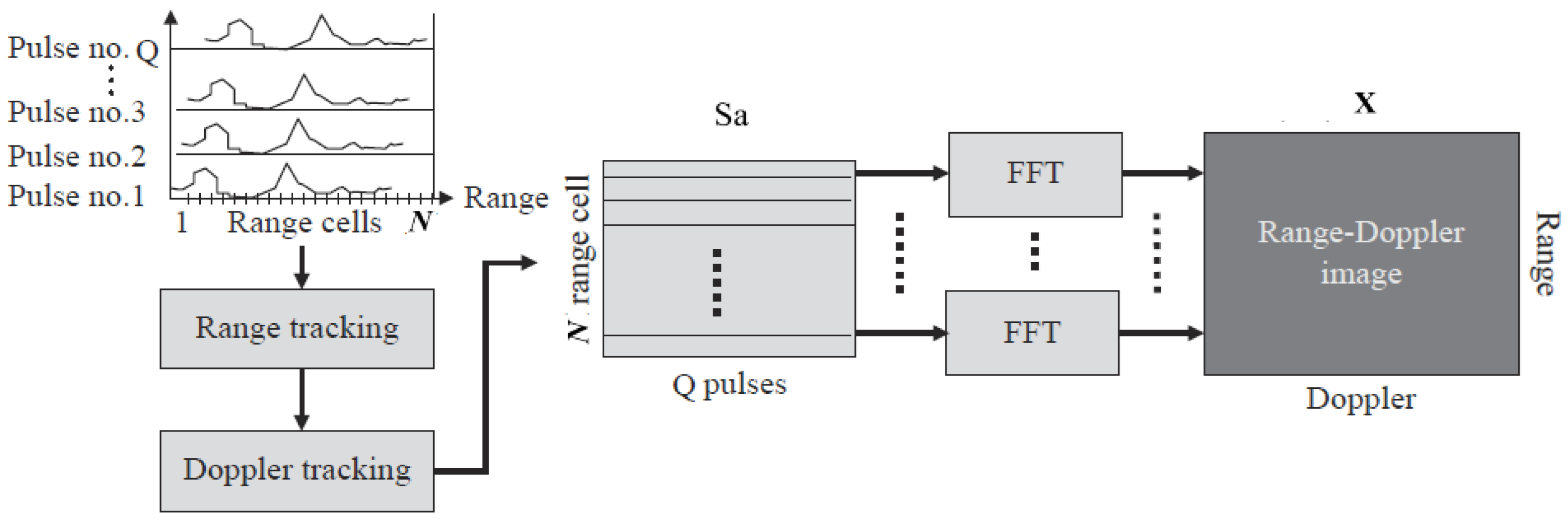

The physical ISAR imaging process is shown as follows. The echo signal can be derived from the ISAR image X and the echo model matrix.

The radar echo model used in this paper, as described in the literature [13], assumes that there are Q pulses in the full-aperture echo signal with a cumulative rotation angle of Δθ, and the definition of the sparse basis matrix is as follows:

Among them, N is the number of range units in the ISAR image, M is the number of Doppler units, and Q is the number of pulses in the full aperture. In the elements of the sparse basis matrix, the subscript q is the index of the pulse and the superscript m is the index of the Doppler unit. θ(n) and β(n) are the rotation angle and the bistatic angle, respectively, as they change over time during the imaging period. The two-dimensional target is imaged into N range units and M Doppler units by the matrix $D_a$, but in practice the echo signal received by the bistatic ISAR is corrupted by noise, so the model of the echo signal is

Formula (2) is the observation model of the ISAR image, which describes the echoes from the target. More details can be found in the literature [13]. In Formula (2), $S_a$ is the full-aperture 2D echo data after motion compensation and phase compensation, X is the signal to be recovered, and the additive term is the noise in the measurement process. In an actual measurement, there is no guarantee that all of the aperture data are received. Assuming that the number of valid pulses that can be received is K, the effective data actually received are

Here, the column selection function of the matrix keeps a few selected columns of the matrix it operates on and sets the other columns to 0. In this paper, X is recovered mainly from the pair S and D, and the larger the number of effective pulses K, the better the recovery of the signal. In this study, the noise is assumed to be Gaussian white noise [13]. The probability density function of the noise is

where X is known.

Since radar images are assumed to be highly sparse in this study, each pixel of the target image can be assumed to obey a Laplace distribution with a given parameter. Thus,

Assuming that the individual pixels are independently distributed, the probability density function of X is

To estimate X, according to the Bayesian criterion,

Substituting the above formulas and simplifying yields

where $\odot$ denotes element-wise multiplication of matrices, and $\|\cdot\|_F$ is the Frobenius norm of a matrix, defined as $\|A\|_F = \sqrt{\sum_{i,j} |a_{ij}|^2}$, where $a_{ij}$ is the element of $A$ with index $(i, j)$.

In the optimization process, the problem is solved for X column by column, and the matrix L is called the regularized sparse parameter, which balances the recovery error term and the regularization term. The larger the noise level, the larger the corresponding element of L should be; the smaller the noise level, the smaller it should be. In this study, all elements of L are assumed to take the same value, so the problem can be further simplified.
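The derivation outlined in this section can be sketched as follows. The sketch assumes real-valued i.i.d. Gaussian noise with variance σ², an independent Laplace prior with scale b_ij on each pixel x_ij, and an observation model of the form S = DX + E. The symbols σ, b_ij, l_ij, E, and λ are notation assumed here, and the constant factors may differ from those in the paper's formulas (for example, under a complex Gaussian convention).

```latex
% Likelihood (Gaussian noise) and prior (independent Laplace pixels)
p(S \mid X) \propto \exp\!\left( -\frac{1}{2\sigma^2} \| S - D X \|_F^2 \right),
\qquad
p(X) = \prod_{i,j} \frac{1}{2 b_{ij}} \exp\!\left( -\frac{|x_{ij}|}{b_{ij}} \right)

% MAP estimate via Bayes' rule: maximize p(S|X) p(X), i.e. minimize its negative logarithm
\hat{X} = \arg\max_X \, p(S \mid X)\, p(X)
        = \arg\min_X \, \frac{1}{2\sigma^2} \| S - D X \|_F^2 + \sum_{i,j} \frac{|x_{ij}|}{b_{ij}}

% Multiply by 2\sigma^2 and collect the weights into L, with l_{ij} = 2\sigma^2 / b_{ij}
\hat{X} = \arg\min_X \, \| S - D X \|_F^2 + \| L \odot X \|_1

% If every l_{ij} equals the same value \lambda
\hat{X} = \arg\min_X \, \| S - D X \|_F^2 + \lambda \| X \|_1
```

Here the L1 norm of a matrix is taken entrywise, as the sum of the absolute values of its elements. Under these assumptions l_ij grows with the noise variance, which agrees with the statement that a noisier measurement calls for larger elements of L.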

3. Inverse Synthetic Aperture Radar Sparse Imaging Recovery Based on Improved Alternating Direction Method of Multipliers

To solve the above optimization problem, this paper proposes a solution method based on an improved Alternating Direction Method of Multipliers (ADMM), derived from the traditional one [19], in which the above problem is first transformed into a constrained problem.

The Lagrange multiplier method is then applied, with a quadratic penalty term added to the objective function of the above problem. The algorithm proceeds by alternately minimizing over the primal variables X and U and maximizing over the dual variable (a version of the Lagrange multiplier method). The pseudo-code of the algorithm is given in Algorithm 1.

| Algorithm 1. Inverse synthetic aperture radar sparse imaging recovery algorithm based on Improved Alternating Direction Method of Multipliers |

| (noise parameter) |

1. Begin

2. k = 0

6. While not converge do

11. end

13. end |

The algorithm encodes N signals using the same matrix. In this algorithm, the soft-threshold function is applied to each element of its matrix argument. When N is large, step 7 of the algorithm is the most time-consuming step. By utilizing the left eigenvectors and eigenvalues of the matrix involved, this step can be simplified to an operation whose complexity depends on K, the sparsity of the signal representation; that is, only K columns of the dictionary D are used to represent each signal. If a large K is used, the signal is recovered almost perfectly, but so is the noise. Otherwise, the signal is not recovered perfectly, but the noise is suppressed. Although the value of the penalty parameter affects the convergence rate, the convergence of the algorithm itself does not depend on its value. The selection of this parameter is discussed in detail in the literature [10].
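The alternating updates described above can be illustrated with a minimal sketch of scaled-form ADMM. It assumes the simplified objective ||S − DX||_F² + λ||U||_1 with the constraint X = U and a scaled dual variable V. The function names `soft_threshold` and `admm_l1`, the fixed penalty `rho`, the fixed iteration count, and the use of an eigendecomposition of DᴴD in the X-update are illustrative assumptions; this is one reading of the description, not the paper's Algorithm 1.

```python
import numpy as np


def soft_threshold(Z, t):
    """Element-wise soft threshold: shrink each magnitude by t (real or complex)."""
    mag = np.abs(Z)
    scale = np.maximum(1.0 - t / np.maximum(mag, 1e-30), 0.0)
    return Z * scale


def admm_l1(S, D, lam, rho=1.0, n_iter=200):
    """Minimize ||S - D X||_F^2 + lam * ||U||_1 subject to X = U (scaled-form ADMM)."""
    M = D.shape[1]
    N = S.shape[1]
    # Eigendecomposition of the Hermitian Gram matrix D^H D, computed once.
    w, Q = np.linalg.eigh(D.conj().T @ D)
    DhS = D.conj().T @ S
    X = np.zeros((M, N), dtype=DhS.dtype)
    U = np.zeros_like(X)
    V = np.zeros_like(X)  # scaled dual variable
    for _ in range(n_iter):
        # X-update: solve (2 D^H D + rho I) X = 2 D^H S + rho (U - V)
        B = 2.0 * DhS + rho * (U - V)
        X = Q @ ((Q.conj().T @ B) / (2.0 * w + rho)[:, None])
        # U-update: element-wise soft threshold with threshold lam / rho
        U = soft_threshold(X + V, lam / rho)
        # Dual update: accumulate the constraint violation X - U
        V = V + X - U
    return U
```

Because the eigendecomposition is computed once, the linear system in the X-update can be re-solved for any value of `rho` at the cost of matrix products only, which is convenient if the penalty is changed between iterations.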

In this study, the parameters are changed during the iterations to achieve faster convergence. At iterations whose index is a multiple of 10, the difference between the optimization variable X and its auxiliary variable U is compared with the difference between U and its value saved at the previous such iteration. Algorithm 2 shows how the parameter is updated depending on the relationship between these two differences.

Our proposal combines Algorithms 1 and 2. The algorithm can effectively accelerate convergence and ensure that the objective function quickly converges to the optimized objective value. The control parameter p of the adjustment, which is a real number, is chosen arbitrarily and takes the value 10 in this paper. The smaller p is, the more frequently the penalty parameter and V are updated.

In Algorithm 2, the penalty parameter is changed according to the relationship between two differences: the difference between X and U, and the difference between U and its previously saved value. If the former is larger than the latter, the penalty parameter should be increased to put more penalty on the third term of the objective function shown in Formula (12), and vice versa. The convergence of the method therefore benefits from the tuning of this parameter.

Although the penalty parameter and V are changed during the iterations, the complexity of the algorithm is unchanged; only the penalty in each iteration is adjusted so as to drive the variables X and U toward their optimal values.

| Algorithm 2. Algorithm for updating the parameters of Improved Alternating Direction Method of Multipliers |

| (adjustment of control parameter) |

(iterative variables)

1. Begin

4. end

12. end

16. end

17.end |
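The parameter update can be sketched with the residual-balancing heuristic commonly used with ADMM, which matches the verbal description of Algorithm 2. The function names `update_penalty` and `is_update_iteration`, the threshold `mu`, and the scaling factor `tau` are hypothetical choices for illustration; the paper's exact update rule and constants may differ.

```python
import numpy as np


def update_penalty(X, U, U_saved, V, rho, mu=10.0, tau=2.0):
    """Residual-balancing update of the penalty rho and the scaled dual V.

    mu and tau are hypothetical constants, not values taken from the paper.
    """
    r = np.linalg.norm(X - U)                # primal residual: gap between X and U
    s = rho * np.linalg.norm(U - U_saved)    # dual residual: change in U since last check
    if r > mu * s:
        rho_new = rho * tau      # constraint X = U is lagging: penalize it more
    elif s > mu * r:
        rho_new = rho / tau      # U is still moving a lot: relax the penalty
    else:
        rho_new = rho
    # The scaled dual V (multiplier divided by rho) is rescaled when rho changes.
    V_new = V * (rho / rho_new)
    return rho_new, V_new


def is_update_iteration(k, p=10):
    """True at iterations k = p, 2p, 3p, ... (p = 10 in the paper)."""
    return k > 0 and k % p == 0
```

In an ADMM loop, `update_penalty` would be called only when `is_update_iteration(k, p)` is true, with `U_saved` holding the value of U stored at the previous check.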

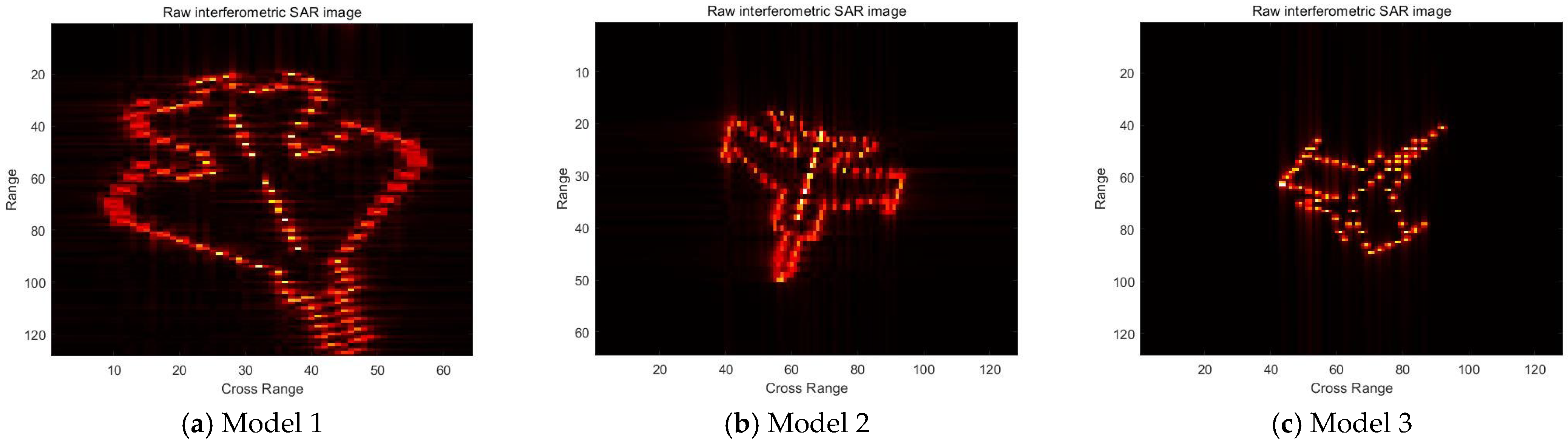

4. Results and Discussion

The experiments use simulated ISAR imaging results for three different airplane models, whose ISAR image sizes are 64 × 128, 128 × 64, and 128 × 128, respectively, where the first number is the number of cross-range units, denoted M, and the second is the number of Doppler units, denoted N. The axes of the images are in units of image pixels, whose number depends on the size of the measurement matrix. In the following experiments, the measurement matrix is initialized as a random matrix in which the corresponding size parameter is 128. The original K values of the three models are 64, 128, and 128, respectively. Their original ISAR images, denoted by X, are shown in Figure 1:

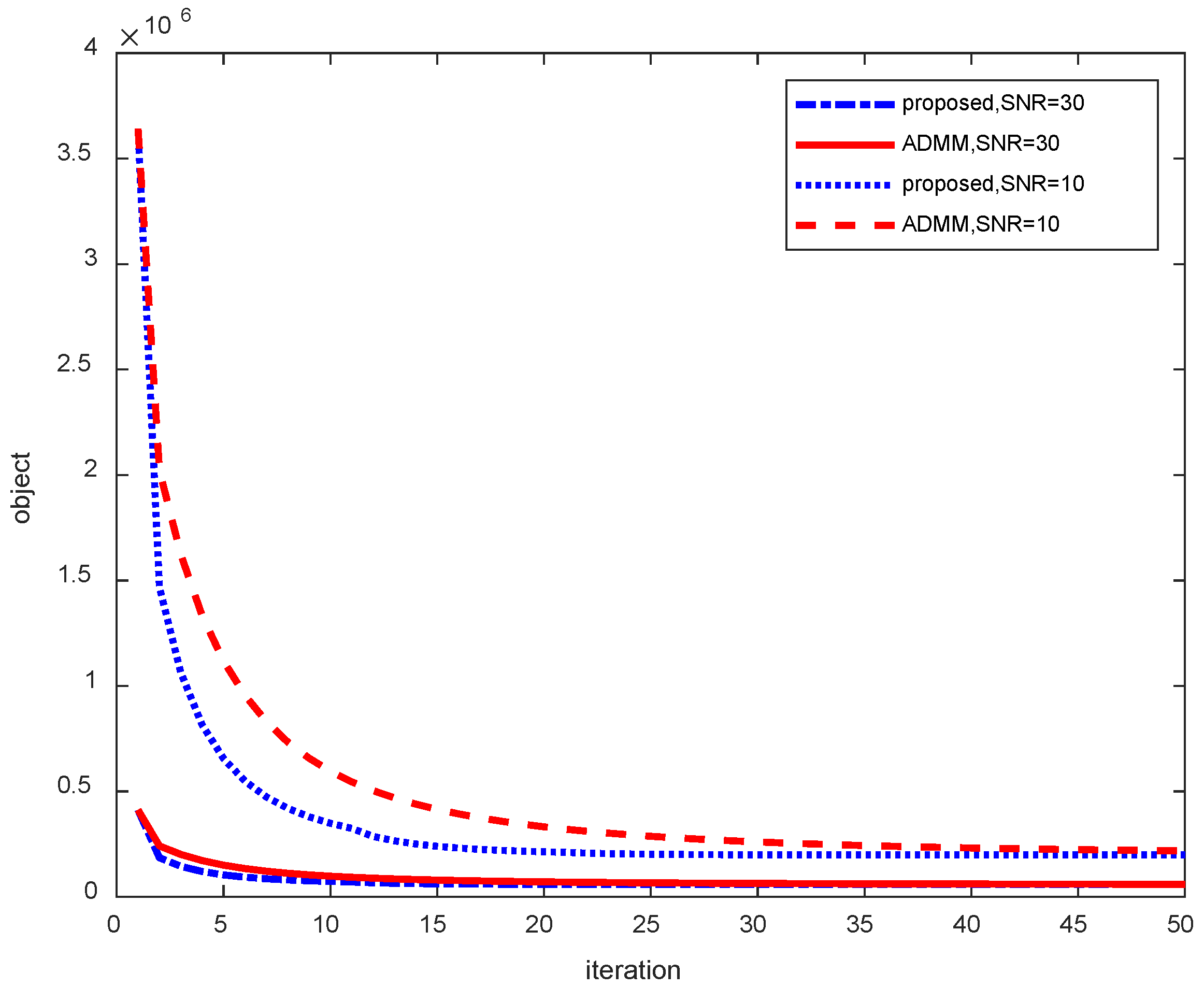

First, the convergence speed of the improved algorithm is compared with that of the original Alternating Direction Method of Multipliers. For each airplane model, one measurement line is selected, and the change in the objective function value with the number of iterations is shown in Figure 2.

Figure 2 shows the convergence curves of model 1–model 3 at the 10th, 30th, and 60th measurement points with equal signal-to-noise ratios, where the horizontal coordinate is the number of iterations and the vertical coordinate is the value of the objective function. Comparison of the results leads to the conclusion that the convergence speed of the improved algorithm is higher than that of the original Alternating Direction Method of Multipliers. The degree of improvement varies from case to case, e.g., for model 2 observation 30, the improvement starts at the 12th iteration step and is small relative to the other two cases.

Figure 3 shows the convergence of each algorithm under different noise levels for model 1. The objective function converges to a larger value in the noisier case (signal-to-noise ratio of 10). Conversely, with less noise, the objective function converges to a smaller value, as shown in Figure 4. In terms of convergence speed, the case with relatively little noise converges faster and is already close to its final value at the 10th iteration, whereas the noisier case (signal-to-noise ratio of 10) converges more slowly and approaches its final value only at about the 25th iteration.

To quantitatively analyze the effect of sparse recovery, the original image is compared with the processed image. The relative recovery error (RRE) is defined as

where $\|\cdot\|_F$ is the Frobenius norm, which for a matrix $A$ with elements $a_{ij}$ is defined as $\|A\|_F = \sqrt{\sum_{i,j} |a_{ij}|^2}$.
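As an illustration, the RRE can be computed as below, assuming the common form in which the Frobenius norm of the difference between the processed and original images is divided by the Frobenius norm of the original image. The function name `relative_recovery_error` and the argument names are assumptions, and the paper's exact formula (for example, a squared or logarithmic variant) may differ.

```python
import numpy as np


def relative_recovery_error(X, X_hat):
    """Frobenius norm of (X_hat - X) divided by the Frobenius norm of X."""
    X = np.asarray(X)
    X_hat = np.asarray(X_hat)
    # np.linalg.norm with default arguments is the Frobenius norm for matrices.
    return np.linalg.norm(X_hat - X) / np.linalg.norm(X)
```

A value of 0 means perfect recovery, and a value of 1 is what an all-zero estimate would give.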

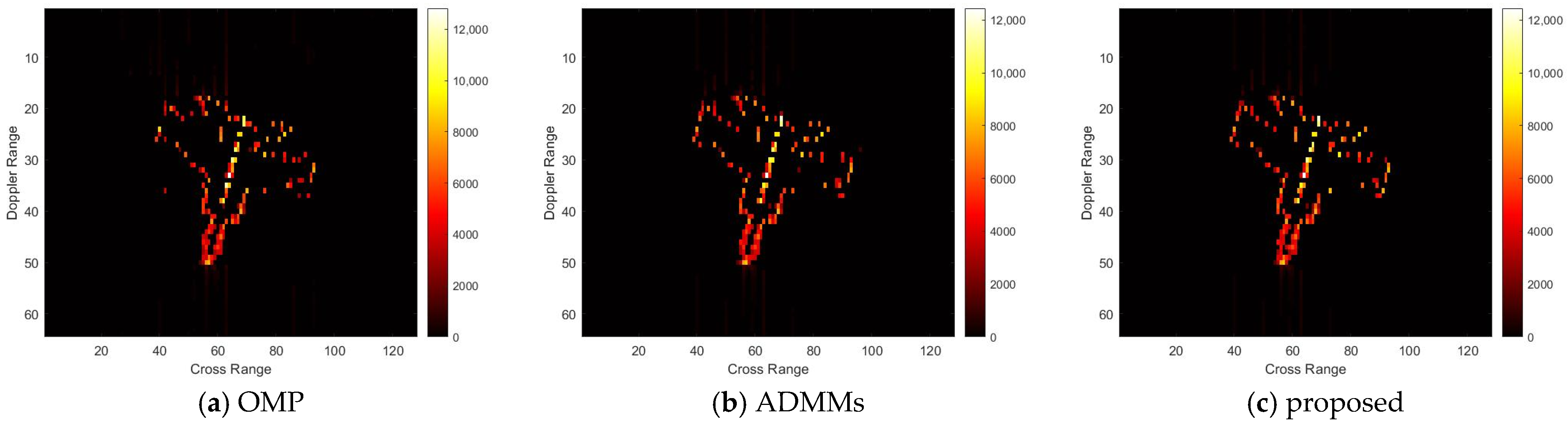

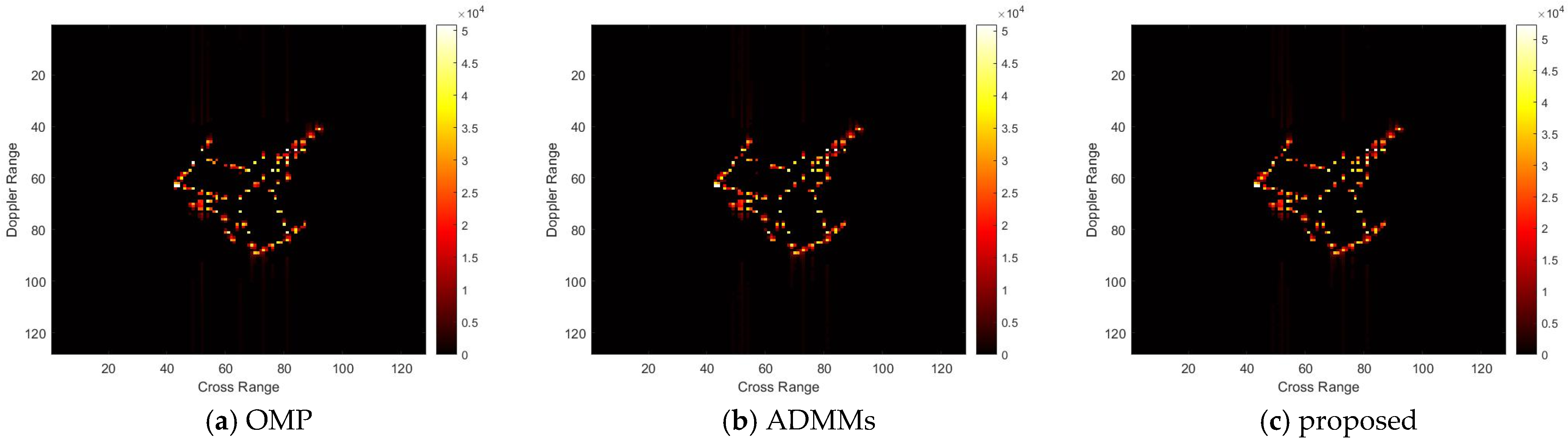

For model 1, the relative recovery errors at different noise levels and measurement sparsity values are shown in Table 1 below. The imaging results are shown in Figure 5 and Figure 6 below. In this experiment, our proposal achieves the same results as ADMM for the different SNRs and sparsity values K. This is expected, since our proposal is an improvement of ADMM and, in the worst case, its results coincide with those of ADMM when p in Algorithm 2 is very large. For the same SNR, the smallest RRE is achieved for the largest sparsity K, since the fitting error is small in this setting. A detailed analysis of the effect of the SNR and K on the RRE is given in the following experiments.

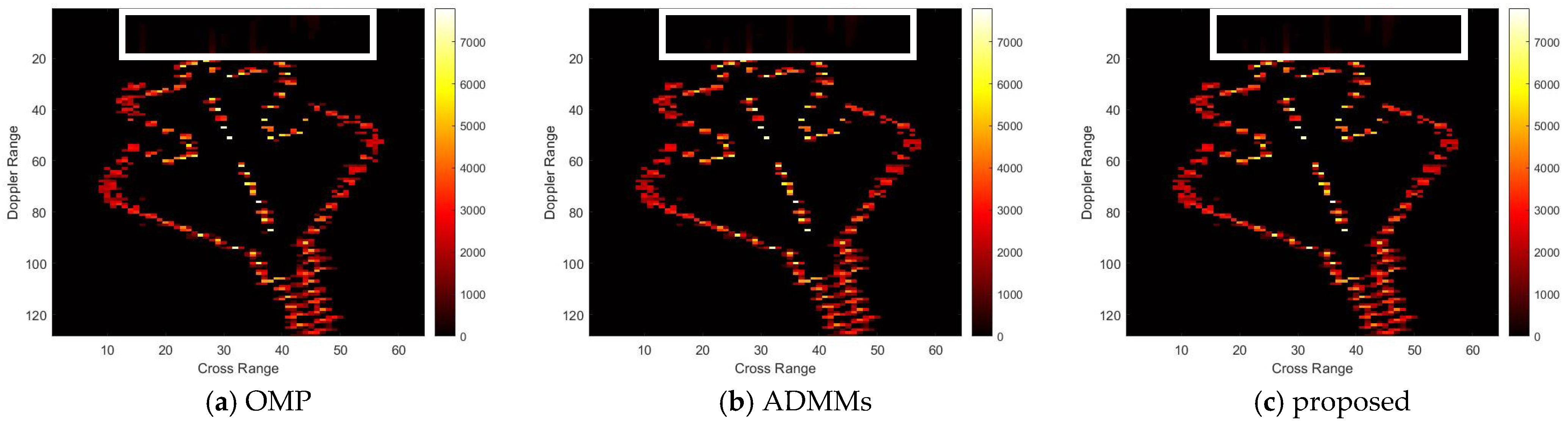

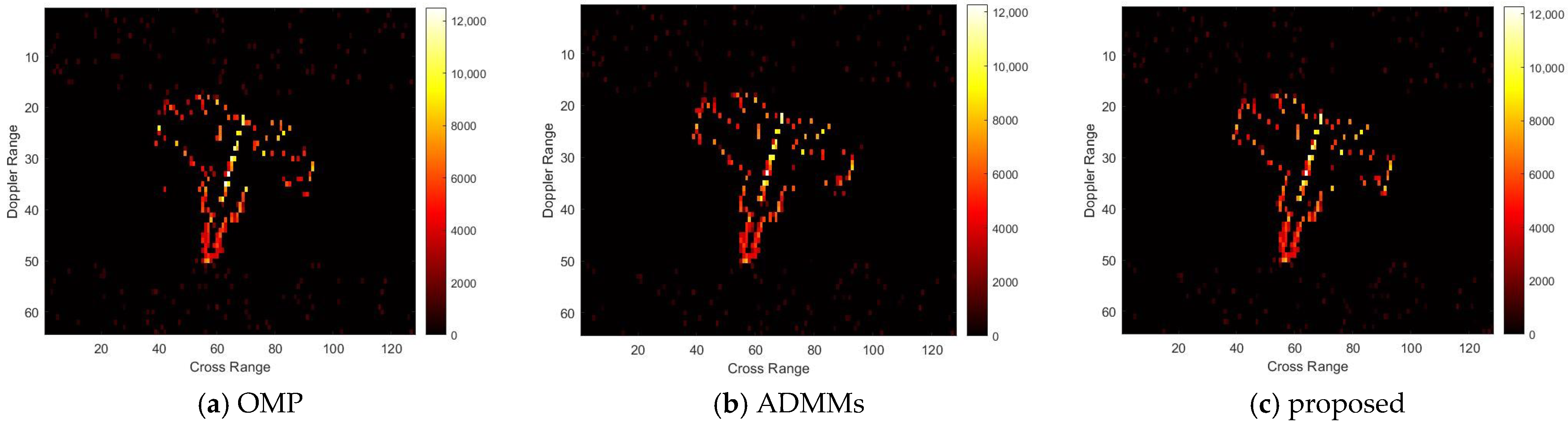

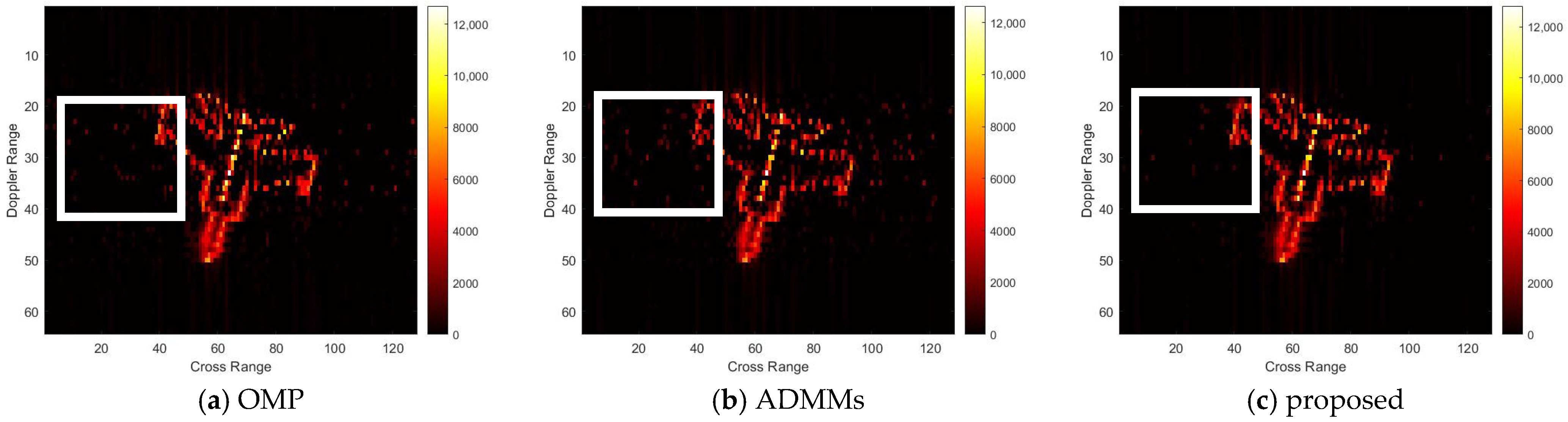

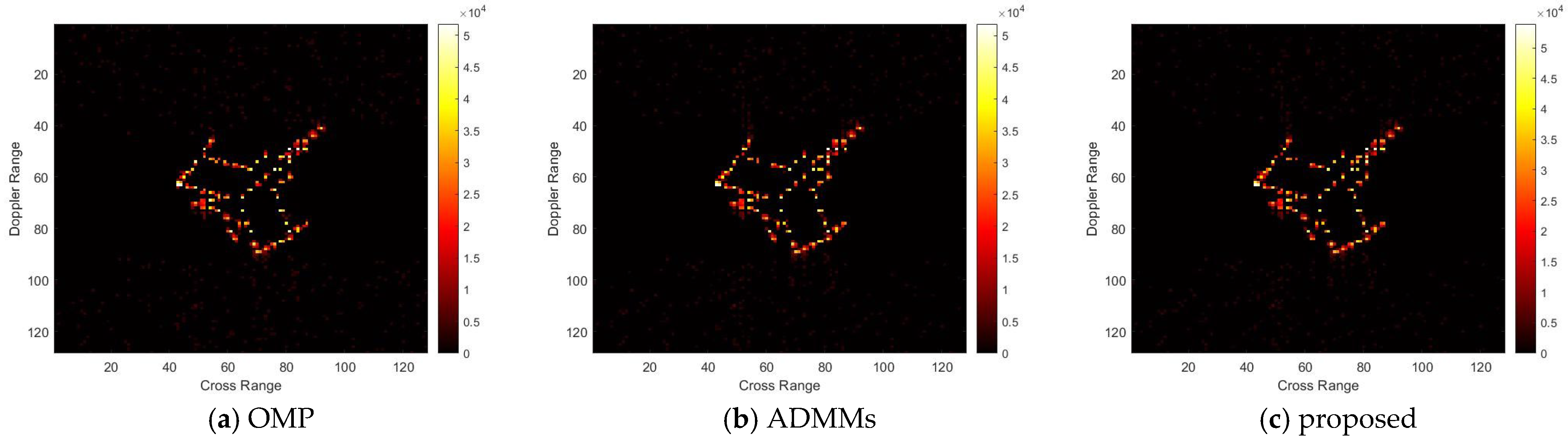

For model 2, the relative recovery errors at different noise levels and measurement sparsity values are shown in Table 2 below. The values marked in bold are the best results among all the compared algorithms. The imaging results are shown in Figure 7, Figure 8, Figure 9, and Figure 10 below. In this experiment, our proposal achieves the best results, especially in the experiments with large sparsity K (the last column of Table 2). From the white rectangles in Figure 8 and Figure 9, we can conclude that our proposal suppresses the noise better than the other algorithms.

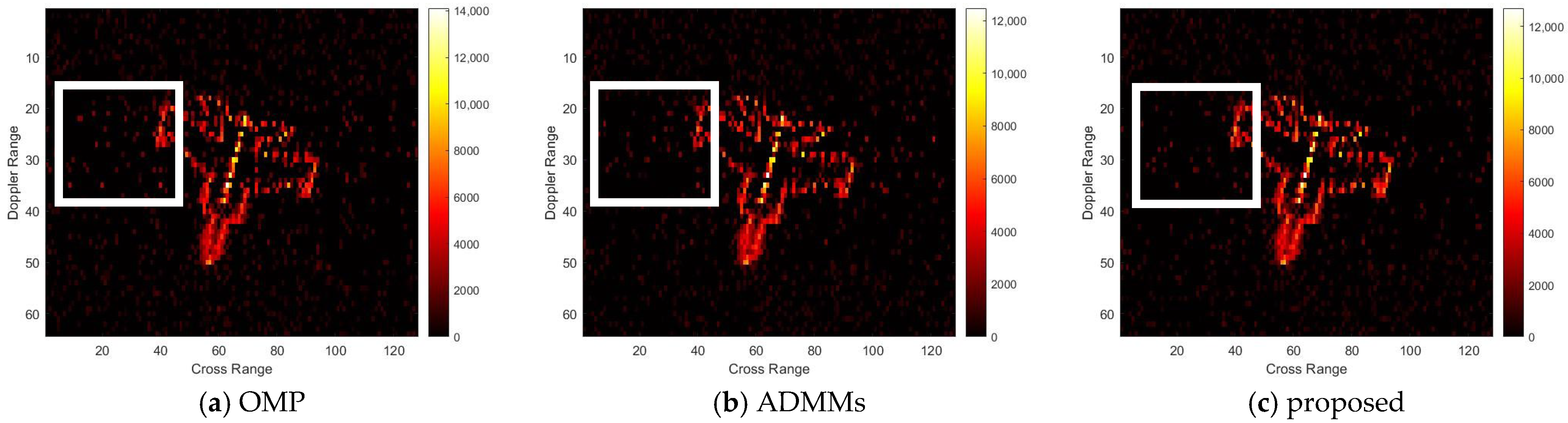

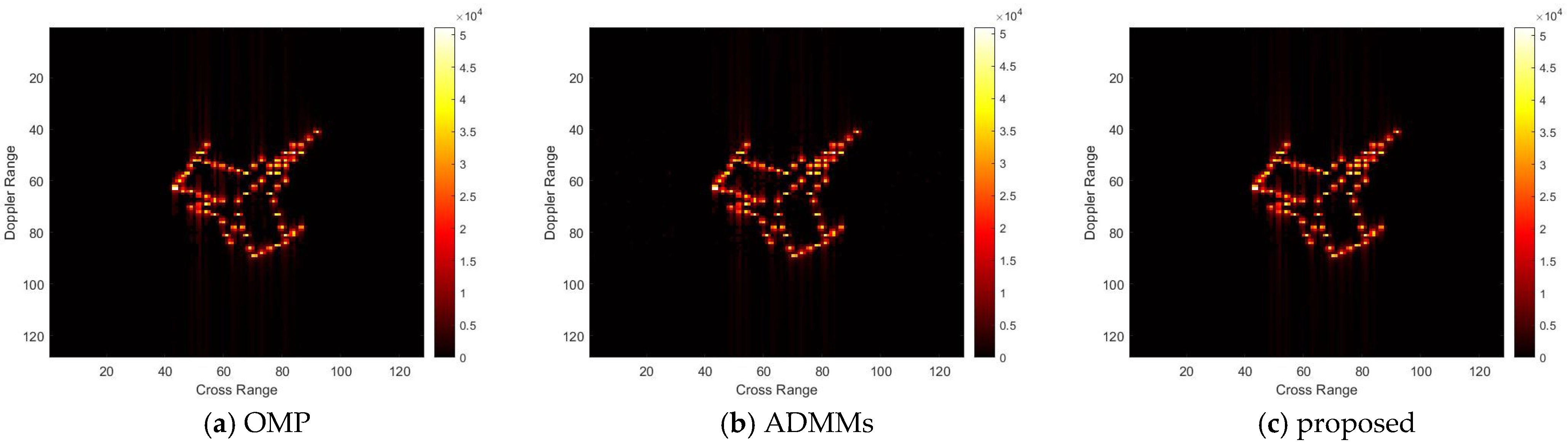

For model 3, the relative recovery errors at different noise levels and measurement sparsity values are shown in Table 3 below. The values marked in bold are the best results among all the compared algorithms. The imaging results are shown in Figure 11, Figure 12, Figure 13, and Figure 14 below. In this experiment, the same conclusion can be drawn as for airplane model 2.

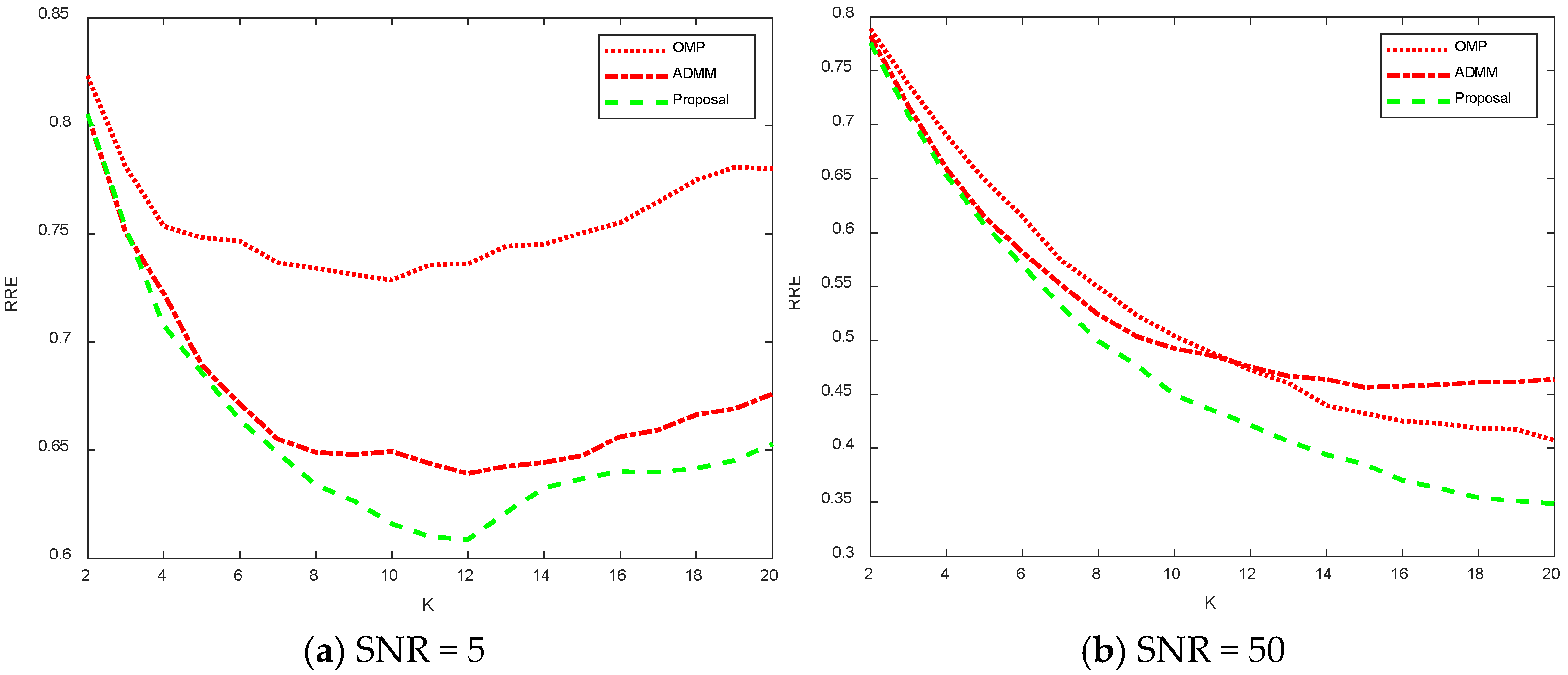

Figure 15 compares the recovery error for different noise levels and sparsity values. Figure 15a shows the recovery error for model 2 at SNR = 5 for different sparsity values. It can be seen that, as the value of K increases (that is, as the representation becomes less sparse), the recovery error first decreases and then increases. Initially, a less sparse representation fits the signal better, so the error decreases. However, because the noise is strong in the SNR = 5 case, the recovery process begins to recover the noise as the sparsity continues to decrease, so the recovery error relative to the original signal gradually increases. Among the three algorithms, OMP has the largest recovery error and the algorithm proposed in this paper has the smallest. In Figure 15b, SNR = 50 and the noise is relatively small. As a result, the recovery error decreases gradually as the sparsity decreases and more received signals are used to recover the original signal. The algorithm proposed in this paper again obtains a smaller relative recovery error than the other two algorithms.

OMP is a greedy method that is not guaranteed to converge to the optimal solution, so its RRE is large. ADMM and our proposal both solve the convex approximation, and our proposal tunes the parameters to obtain a better solution to the original constrained problem than ADMM does, so it achieves the smallest RRE.
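For reference, the greedy behavior of OMP can be seen in a minimal generic sketch: at each step it picks the single dictionary column most correlated with the residual and never revisits earlier choices. The function name `omp` and its arguments are assumptions, and this is a textbook form of the method, not necessarily the variant used in the experiments.

```python
import numpy as np


def omp(D, s, k):
    """Greedy orthogonal matching pursuit: approximate s with k columns of D."""
    residual = s.copy()
    support = []
    x = np.zeros(D.shape[1], dtype=np.result_type(D, s))
    for _ in range(k):
        # Pick the atom most correlated with the current residual.
        corr = np.abs(D.conj().T @ residual)
        if support:
            corr[support] = 0.0  # never pick the same atom twice
        support.append(int(np.argmax(corr)))
        # Least-squares fit on the selected atoms, then update the residual.
        coef, *_ = np.linalg.lstsq(D[:, support], s, rcond=None)
        residual = s - D[:, support] @ coef
        x[:] = 0.0
        x[support] = coef
    return x
```

Each selection is final, so an early wrong choice cannot be undone, which is one reason a greedy method can end at a worse solution than a convex formulation.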

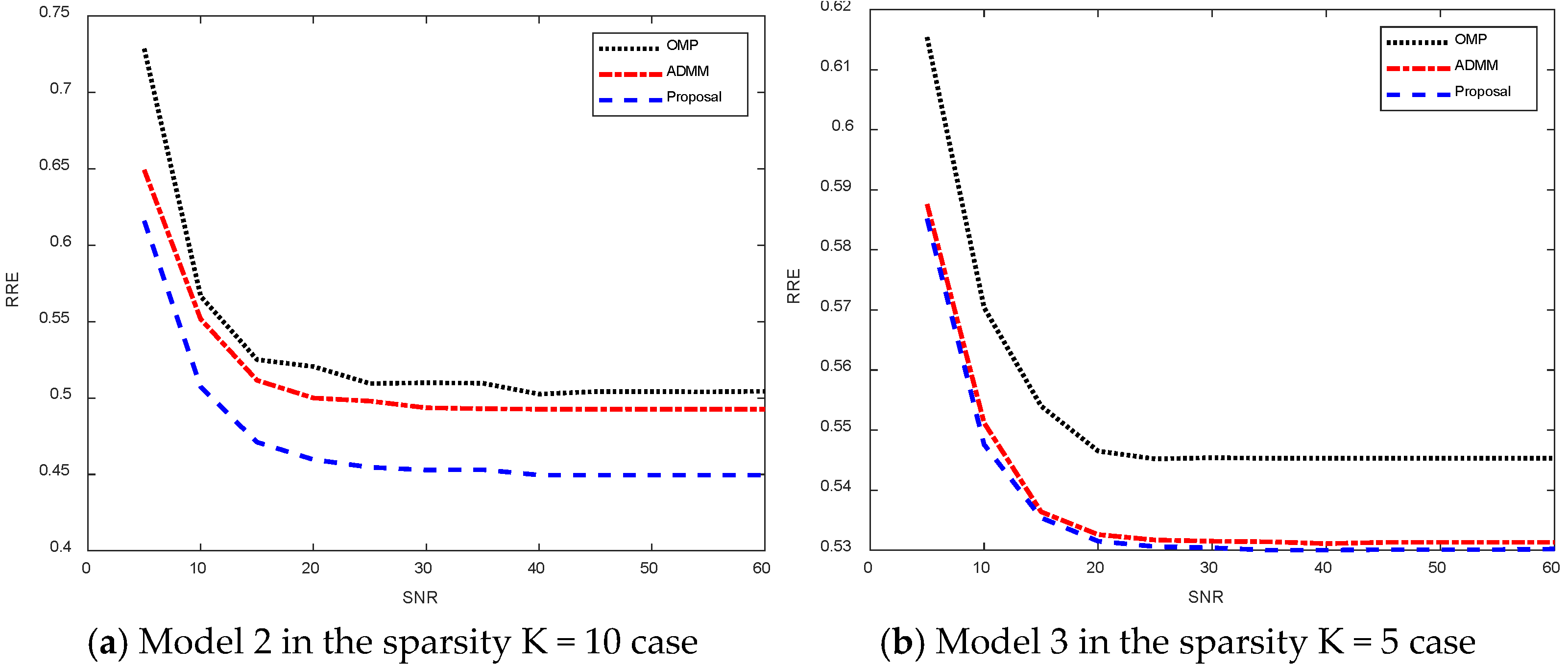

Figure 16 shows the relative recovery error curves of the different algorithms for different signal-to-noise ratios. Figure 16a shows the relative recovery error for model 2 with sparsity K = 10. As the noise decreases, each algorithm stabilizes at a certain relative recovery error, which is the error caused by sparsity. The value at which the proposed algorithm stabilizes is smaller than that of the other two algorithms, so the proposed algorithm achieves better sparse recovery. Figure 16b shows the relative recovery error for model 3 with sparsity K = 5, which also stabilizes at a certain value as the noise decreases; again, the proposed algorithm obtains a smaller relative recovery error.

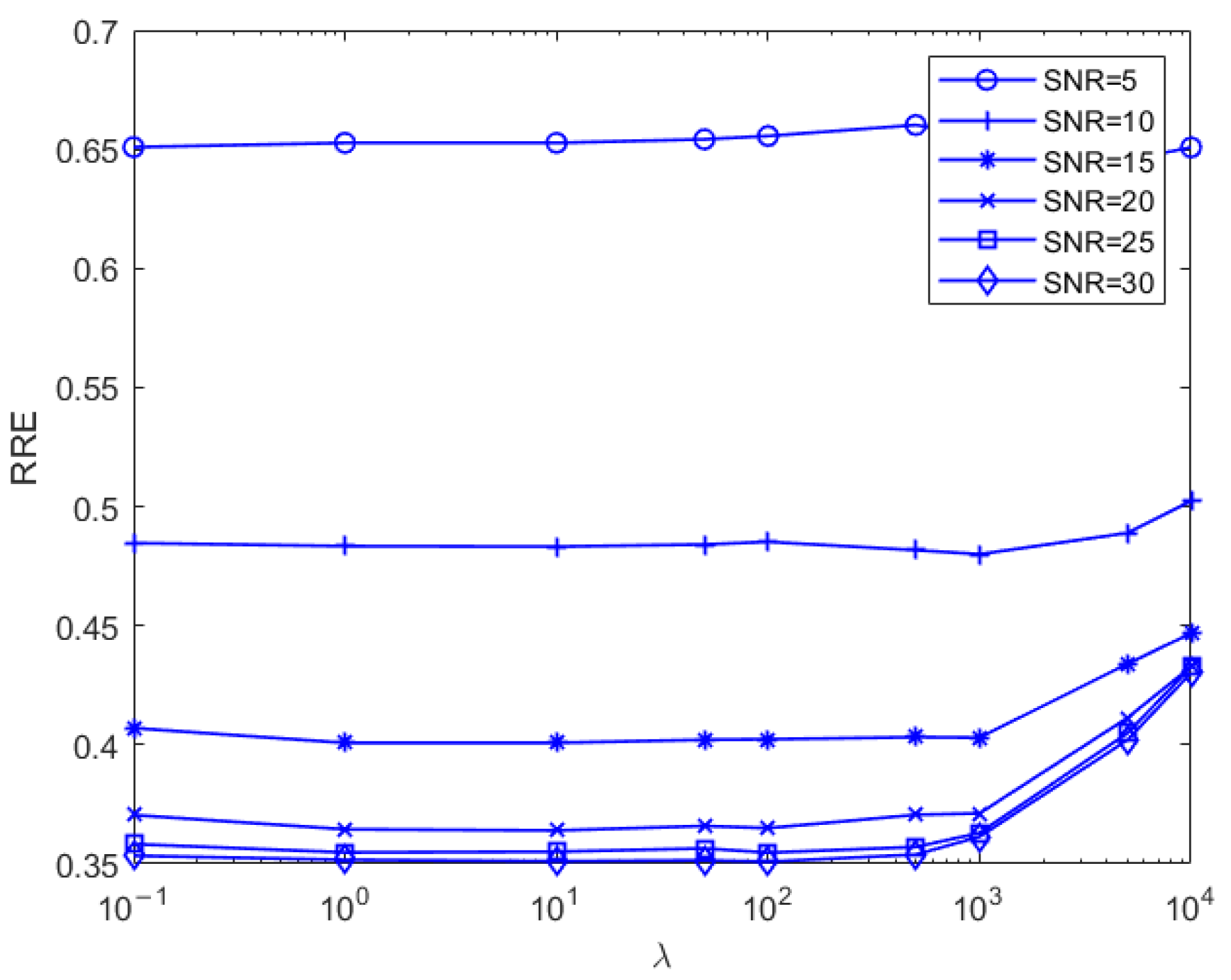

Figure 17 shows, for model 2 with sparsity K = 20, the relative recovery error curves for different λ values at different noise levels. It can be concluded that the optimal λ value is 5000 when the noise is relatively large, where a smaller relative recovery error is achieved by increasing the sparsity to suppress the noise. As the noise level decreases, the optimal λ value also decreases. When the signal-to-noise ratio exceeds 15, a λ value between 1 and 100 yields a near-optimal relative recovery error.

As shown in Equation (11), the parameter λ balances the fitting error and noise suppression. If the SNR is large, such as 30 dB, a small value of λ should be selected to give a large weight to the fitting error in the objective function, so that a small fitting error is achieved by minimizing the objective function. Conversely, a large value of λ should be used when the SNR is small, since a large λ can suppress severe noise.

The effect of the parameter update frequency on the proposed algorithm is analyzed below, where the number i denotes that the parameters are updated every i iterations. The recovery time is measured for model 2 with K = 10. From Table 4, it can be concluded that the least time is consumed when the parameters are changed every 10 iterations. As i increases, the parameters are changed less often and the amount of computation gradually decreases, but the convergence speed also decreases, so there exists an optimal value of i that minimizes the time consumed. In this experiment, the optimal update interval is 10 iterations.