ITap: Index Finger Tap Interaction by Gaze and Tabletop Integration

Abstract

1. Introduction

- (1) Does ITap’s manual cursor mode achieve superior target selection performance, in terms of speed and accuracy, compared to the traditional gaze + pinch interaction method?

- (2) Does ITap offer improved usability, comfort, and reduced cognitive load in scrolling and swiping tasks compared to conventional pinch-based gestures?

2. Related Work

2.1. Touch-Based Interaction

2.2. Gaze-Based Interaction

3. ITap Methodology

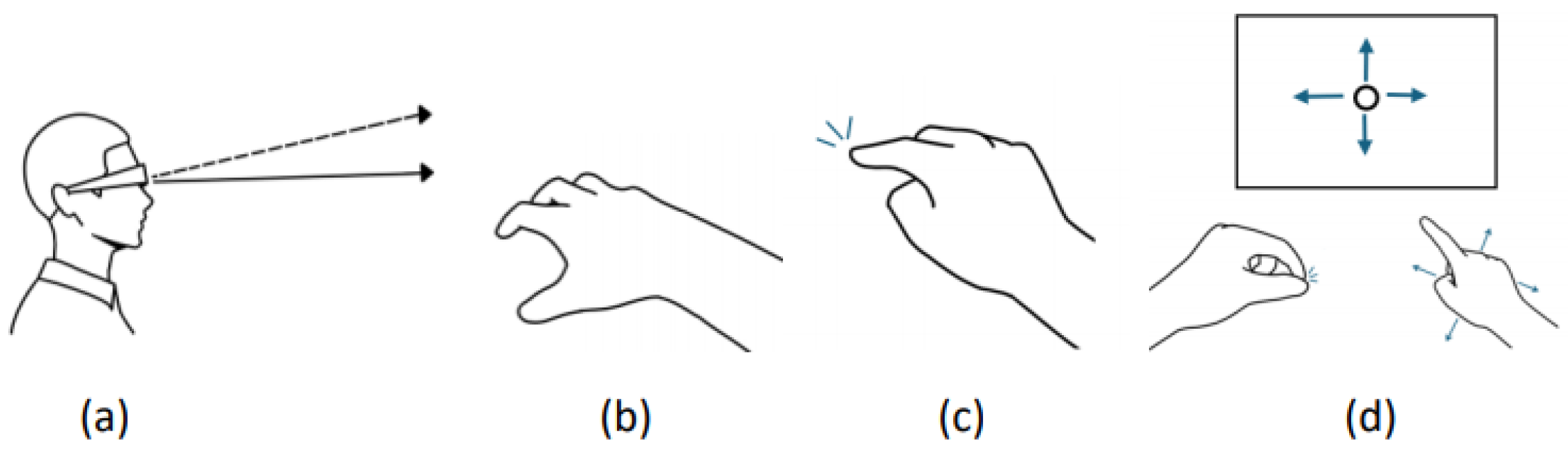

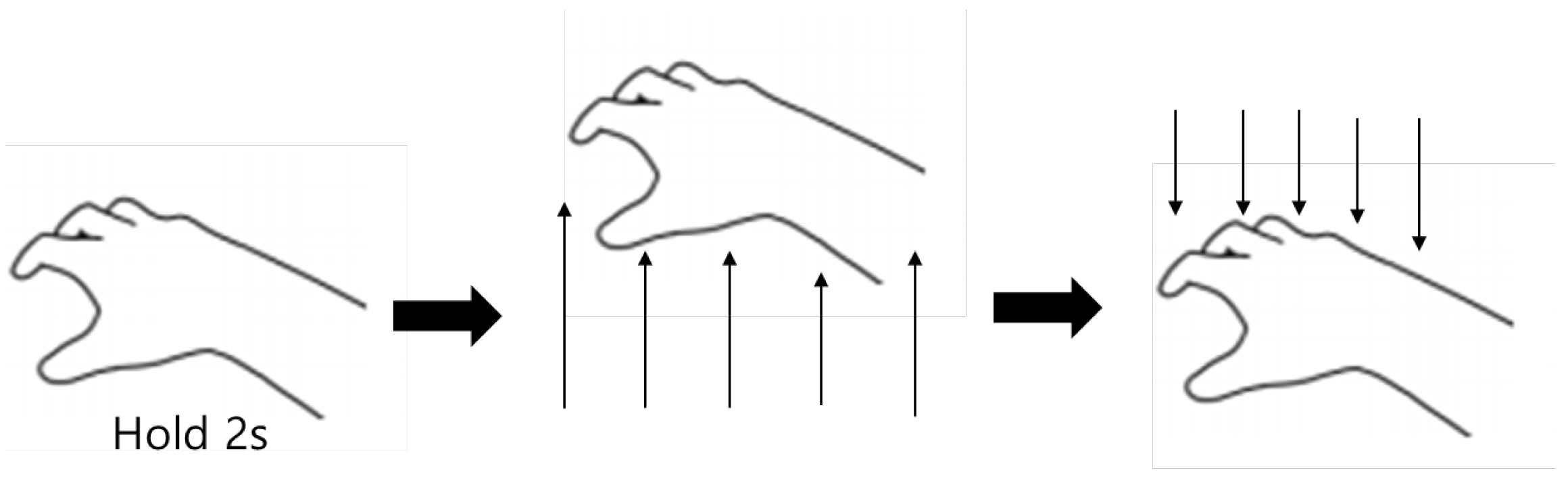

3.1. ITap

3.2. ITap Design Consideration

3.3. Implementation

4. Experiment

4.1. Ethical Considerations

- Ethics Committee Name: Bioethics Review Committee, Kumoh National Institute of Technology

- Approval Code: 202412-HR-011-01

- Approval Date: 26 December 2024

4.2. Participants and Experimental Setup

4.3. Experiment 1: Target Selection Accuracy and Speed

4.4. Experiment 2: Scrolling and Swiping Usability

4.5. Limitations of the Experiment

5. Results

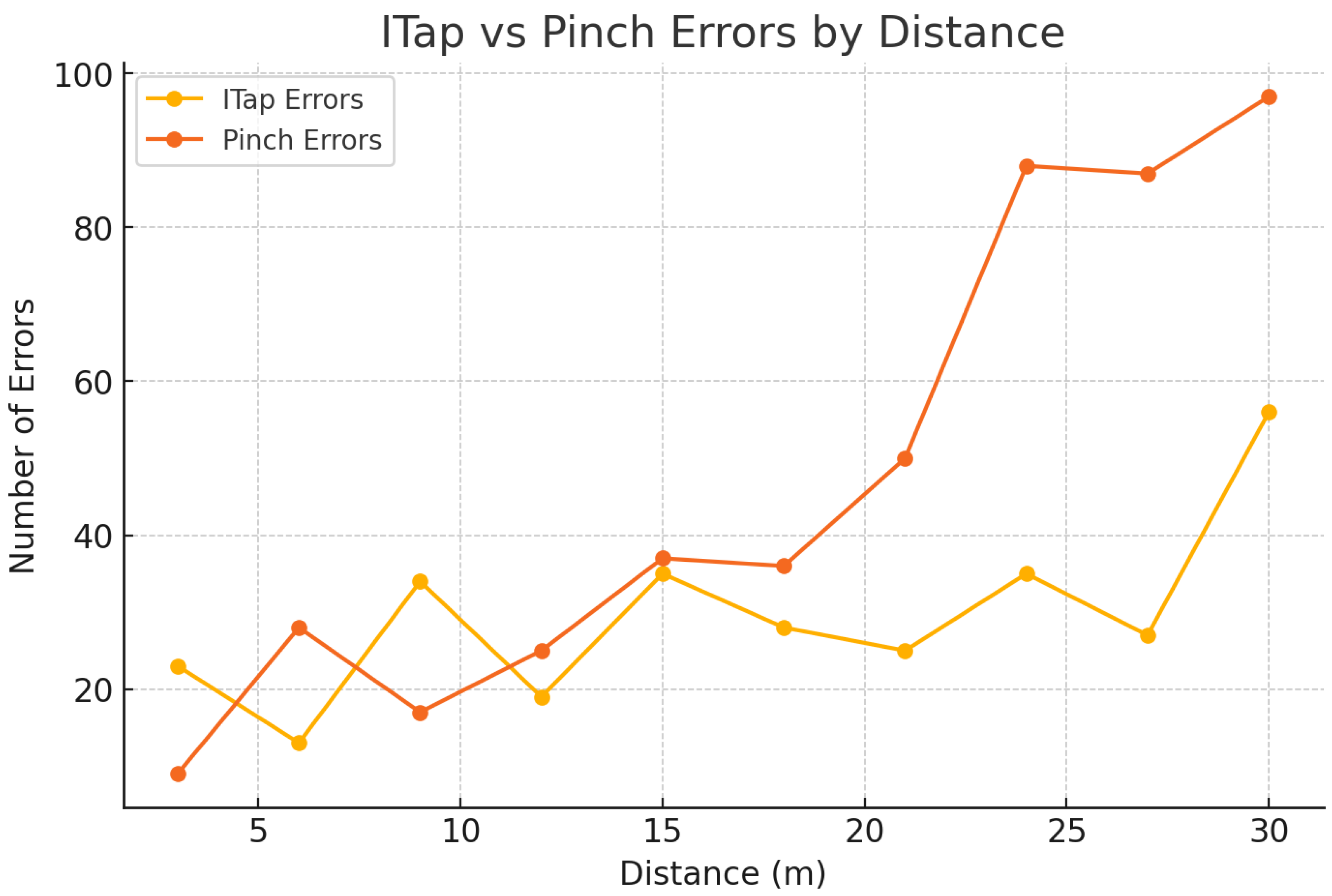

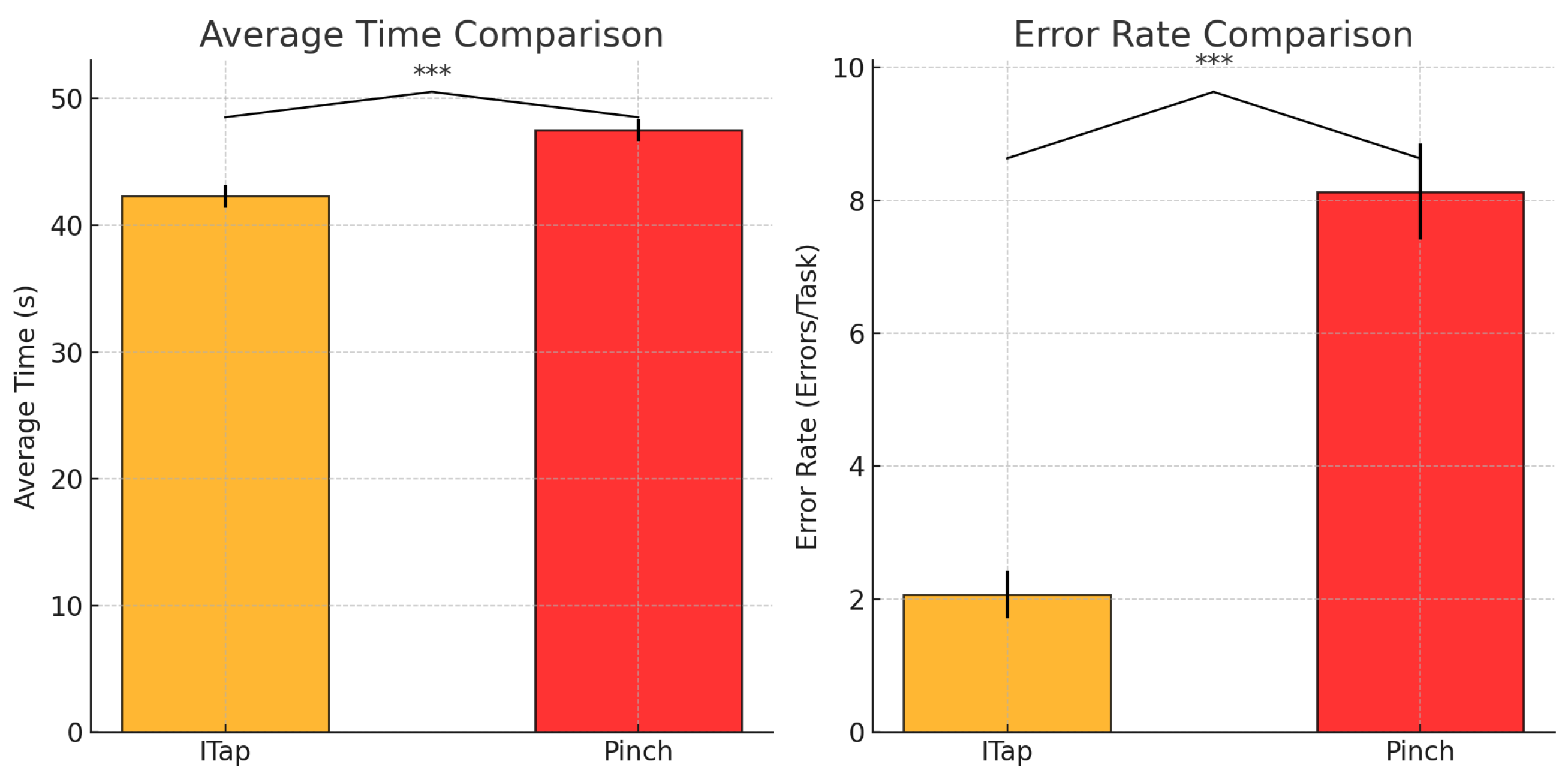

5.1. Selection Facilitation Task Completion Time

5.2. Disambiguation Task Performance

5.3. Usability Evaluation

6. Discussion

6.1. Experiment 1: Target Selection and Disambiguation

6.2. Experiment 2: Usability in Scrolling and Swiping Tasks

6.3. General Implications and Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Kim, J.; Lee, J.; Ahn, J.-H.; Kim, Y. ITap: Index Finger Tap Interaction by Gaze and Tabletop Integration. Sensors 2025, 25, 2833. https://doi.org/10.3390/s25092833

AMA Style: Kim J, Lee J, Ahn J-H, Kim Y. ITap: Index Finger Tap Interaction by Gaze and Tabletop Integration. Sensors. 2025; 25(9):2833. https://doi.org/10.3390/s25092833

Chicago/Turabian Style: Kim, Jeonghyeon, Jemin Lee, Jung-Hoon Ahn, and Youngwon Kim. 2025. "ITap: Index Finger Tap Interaction by Gaze and Tabletop Integration" Sensors 25, no. 9: 2833. https://doi.org/10.3390/s25092833

APA Style: Kim, J., Lee, J., Ahn, J.-H., & Kim, Y. (2025). ITap: Index Finger Tap Interaction by Gaze and Tabletop Integration. Sensors, 25(9), 2833. https://doi.org/10.3390/s25092833