The user-based evaluation aimed at responding to the following research questions:

Before the session, the interaction with the system was explained to participants, detailing all the possible gestures and their operation. More specifically, the facilitator guided participants through the system via a short tutorial. In each session, the facilitator wore the AR glasses while the contents displayed on the glasses were also projected on the laptop screen, and then performed a detailed walkthrough of the system using the appropriate gestures. When the training was completed, the facilitator answered any questions from the users. Furthermore, as all participants were first-time users, they were provided with a printed summary of the gestures and were instructed to use it whenever needed during the experiment. Participants were also informed that they could carry out the experiment either sitting on a stool or standing up. During the experiment, the facilitator monitored the whole process through the laptop screen while taking handwritten notes of user comments, interactions with the system, errors, assistance required, and task success. This evaluation aimed to determine the usability of the developed User Interface for users who are specialists in the field and who perform the inspection process as part of their daily job tasks. Furthermore, the intuitiveness and naturalness of the proposed gestures were evaluated.

Scenarios

Defect Detection in the microchip-production line. In this user evaluation, the gesture-based AR application for the PLOs of the microelectronics-assembly production lines was evaluated. More specifically, the application displays the UI that a user has to interact with, through hand gestures, in order to perform the PCB defect-inspection process. The system was evaluated through three main tasks.

Task 1: Initiate the inspection process. In this task, users were asked to initiate the PCB inspection process. In the initial screen of the application, there is a menu with three options. In order to initiate the PCB inspection process, the user has to select the second menu item with the finger-count gesture. The task is successful when the user performs the correct gesture and the pop up message shows up on the screen. Then, the user has to carry out a thumbs-up gesture to start the inspection.

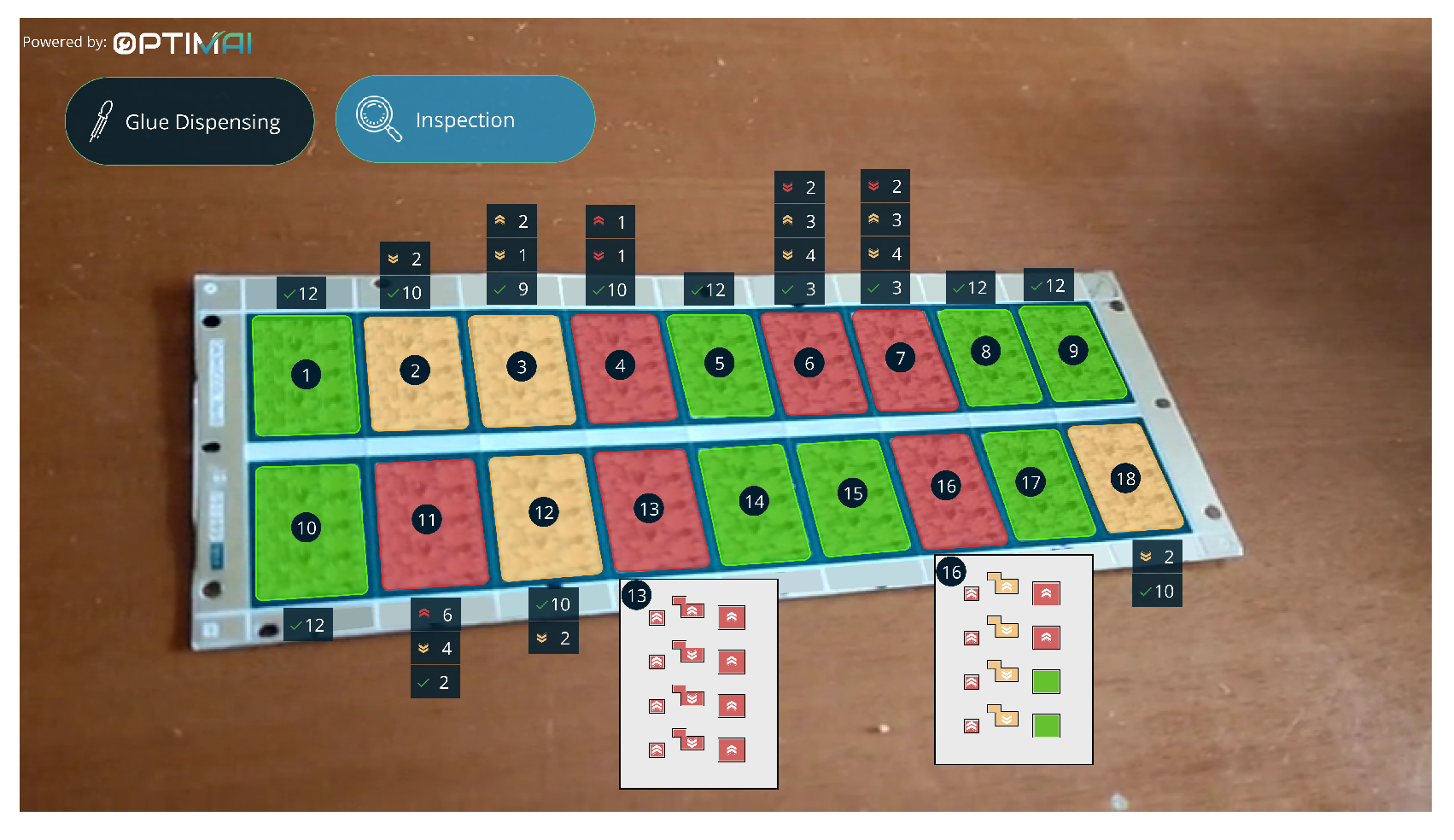

Task 2: View inspection analysis results. Users were asked to view the inspection results and report which microchips were defective and why, which were good, and which acceptable. This task is considered successful if the user is able to see the defects and point out the defective, good, and acceptable microchips and provide a rationale for this.

Task 3: Deactivate the system. Finally, the user was asked to deactivate the system by performing the correct gesture.

Defect Detection in the antenna-production line. In this user evaluation, the gesture-based AR application for the PLOs of the antenna-production line was evaluated. In this case, the application displays the UI that a user has to interact with, through hand gestures, in order to perform the antenna defect-inspection process and view prediction analysis results. The system was evaluated through two main tasks.

Task 1a: Enable defect updates. In the initial screen of the application, there is a menu with two options. In order to initiate the defect updates, the user has to select the second menu item with the finger-count gesture. The task is successful when the user performs the correct gesture and the pop up message with the alerts shows up on the screen.

Task 1b: Stop Production. The alerts received for each antenna passing through the machine initially indicate that the antennas are OK. After a few seconds, the system detects a defective antenna, notifies the user, and prompts them to stop the production with the closed-fist gesture. The task is considered successful when the user performs the gesture and the pop-up message for initiating manual inspection appears.

Task 1c: Carry out defect inspection. As the pop-up message suggests, the user has to perform the finger-count-one gesture to select the first item from the menu and begin the inspection process. Then, they have to position the antenna in front of the glasses and perform the thumbs-up gesture. The task is considered successful when the user can see the red rectangle surrounding the defect on the antenna and repeat the displayed message describing the type of defect.

Task 1d: Return to Home Screen. Finally, when the inspection is completed, the user is prompted to go to the Home Screen with the correct gesture to start the second scenario.

Task 2a: Select the appropriate menu item for obtaining updates. The user has to initiate the defect-update process again by selecting the second menu item with the finger-count gesture.

Task 2b: Prediction analysis. This time, the system predicts that there is an upcoming defect. Therefore, the system prompts the user to stop the production and watch the simulation video of the defect by selecting the third menu item. The task is complete when the user has watched the video.

Task 2c: Deactivate the system. Finally, the user has to deactivate the system with the closed fist gesture.

Bill of Materials inspection in lift manufacturing. In this user evaluation, the gesture-based AR application for the PLOs of the lift manufacturing was evaluated. In this case, the application displays the UI that a user has to interact with, through hand gestures, in order to perform the lift Bill of Materials inspection process, calibrate the motors for the lift velocity, and view unit-testing diagrams for sound, velocity, and pressure. The system was evaluated through three main tasks.

Task 1a: Initiate the inspection process. The user has to perform the finger-count-one gesture to select the first item from the menu. Following the prompt from a pop-up message, the user then starts the inspection process by making a thumbs-up gesture.

Task 1b: Tool collection for the inspection process. The user has to directly look at the tools and gather the ones that are highlighted by the system. The task is considered successful if the user picks up all the tools indicated by the system.

Task 1c: Lift Bill of Materials inspection. In this task, the user must focus directly on the lift Bill of Materials and verify each part against the type specified in the order. If a part matches the indicated type, the user should perform a thumbs-up gesture. Conversely, if a part does not match the specified type, the user should perform a thumbs-down gesture and then proceed to replace the part with the correct type. This process continues until all parts listed in the Bill of Materials are correct according to the specified types.

Lift velocity calibration. Task 2a: Initiate the calibration process. The user has to perform the gesture finger count two to select the second item from the menu. Following the prompt from a pop up message, the user confirms the initiation of the calibration process by making a thumbs-up gesture.

Task 2b: Calibration of the lift velocity. The user uses gestures to calibrate the velocity of the lift. The calibration is conducted by turning each of the three motors to the right or left, depending on the required adjustment. The user selects the appropriate motor by performing the finger-count one, two, or three gesture, respectively. Then, using the slide-left or slide-right gesture, they start turning the motor. To stop the motor, the operator performs the closed-fist gesture.

Task 2c: Complete the calibration process. The user has to perform the confirmation gesture to finish the calibration process.

Lift unit testing. Task 3a: Initiate the unit-testing process. The user has to perform the gesture finger count three to select the third item from the menu. Following the prompt from a pop up message, the user confirms the initiation of the unit-testing process by making a thumbs-up gesture.

Task 3b: Lift unit-testing diagrams. The user carefully looks at the diagrams in front of them. The task is considered successful if the user indicates the correct max value of the sound in those diagrams.

Task 3c: Complete the unit testing. The user has to close the diagrams and complete the step using a thumbs-up gesture.
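The calibration interaction described in Task 2b can be read as a small state machine: a finger-count gesture selects a motor, a slide gesture starts turning it, and a closed fist stops it. The Python sketch below illustrates that reading only. The gesture labels, the `CalibrationSession` class, and the rule that a motor can only be selected while nothing is turning are hypothetical choices made for this example, not a description of the evaluated system.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical gesture labels; the recogniser's real output format is not given in the text.
FINGER_COUNT = {"finger_one": 1, "finger_two": 2, "finger_three": 3}
SLIDE = {"slide_left": "left", "slide_right": "right"}


@dataclass
class CalibrationSession:
    """Tracks which motor is selected and whether it is currently turning."""
    selected_motor: Optional[int] = None
    turning: Optional[str] = None  # "left", "right", or None when stopped
    log: List[str] = field(default_factory=list)

    def handle(self, gesture: str) -> None:
        if gesture in FINGER_COUNT and self.turning is None:
            # Assumption: a motor can only be (re)selected while nothing is turning.
            self.selected_motor = FINGER_COUNT[gesture]
            self.log.append(f"select motor {self.selected_motor}")
        elif gesture in SLIDE and self.selected_motor is not None:
            self.turning = SLIDE[gesture]
            self.log.append(f"turn motor {self.selected_motor} {self.turning}")
        elif gesture == "closed_fist" and self.turning is not None:
            self.turning = None
            self.log.append(f"stop motor {self.selected_motor}")
        # Any other gesture, or a gesture that makes no sense in the current state, is ignored.


session = CalibrationSession()
for g in ["finger_two", "slide_right", "closed_fist"]:
    session.handle(g)
print(session.log)
```

Ignoring gestures that do not fit the current state keeps the sketch tolerant of recognition noise; a deployed system would more likely give the operator explicit feedback instead.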

Results

This section presents the key findings from the evaluations. Initially, we discuss the success of each task based on experiments conducted at the three pilot sites. Subsequently, participant satisfaction regarding task performance is examined through the analysis of responses to the SEQ. Insights into system usability, as perceived by participants, are derived from the results of the SUS questionnaires. Qualitative feedback from participants, obtained through semi-structured debriefing interviews, is also summarized. Finally, this section provides valuable design guidelines for gesture-based AR systems in industrial environments.

Task Success. Regarding the microelectronics application evaluation, all participants successfully completed Task 3 by performing the correct gesture. For Task 1, 70% of the participants performed the correct gesture. The remaining participants were somewhat confused and initially performed the wrong gesture: two of them performed the correct one after seeing no response from the system (the task was therefore marked as a partial success), and the other received a hint from the expert (the task was therefore marked as failed). For Task 2, 90% of the users could distinguish the acceptable and borderline acceptable microchips. However, 56% of these users could not explain why the defective microchips were defective by relating the defect to the glue annotation areas and numbers, so the task was marked as partially successful for them. Furthermore, one of the users was colorblind and could not tell the colored microchips apart; they therefore inferred the answer, and the task was marked as failed. In summary, Task 3 was 100% successful, Task 1 was 70% successful, 20% partially successful, and 10% failed, and Task 2 was 40% successful, 50% partially successful, and 10% failed.

During the antenna-application evaluation, all participants successfully completed Tasks 1b, 2a, and 2b. In Task 1a, 20% of the participants were not sure how to select the menu item and had to consult the cheatsheet before performing the correct gesture. In Task 1c, users were asked about the type of defect during the inspection process, and three of them could not determine it, because the font size was considered somewhat small and because the message could not be seen clearly when the glasses were misplaced. In Task 1d, 20% of the participants did not remember the gesture for returning to the Home Screen and needed to look at the cheatsheet. Finally, in Task 2c, 20% of the users did not remember how to deactivate the system and performed the correct gesture only after consulting the cheatsheet. No task was failed outright. In summary, Tasks 1b, 2a, and 2b were 100% successful, Tasks 1a, 1d, and 2c were 80% successful and 20% partially successful, and Task 1c was 70% successful and 30% partially successful.

During the evaluation of lift manufacturing, all participants successfully completed the tasks related to the selection of menu items (Tasks 1a, 2a, 3a), as well as the main tasks, including the calibration of lift velocity (Tasks 2b, 2c), understanding the unit-testing diagrams (Task 3b), and inspection of the Bill of Materials (Task 1c). However, during Task 1c, two participants reported difficulties in reading the text and understanding the detection indicators. Regarding the collection of the tools for the inspection process (Task 1b), 60% of the participants successfully completed the task, 30% completed it partially, and 10% failed. The main reason for the failures, based on the observer notes, was the placement of the UI that indicated the tools to be collected, their quantity, and the remaining items. Placed in the bottom right corner of the AR glasses, this UI was blurry for some participants, impeding readability. Moreover, one participant who failed the task was not familiar with English, which is the language of all the UIs. Furthermore, Task 3c, involving unit-testing completion, proved challenging for 20% of participants, who initially performed the wrong gesture (Confirmation) and performed the correct one only after the system did not respond; for them, the task was marked as partially successful. In summary, Tasks 1a, 1c, 2a, 2b, 2c, 3a, and 3b were 100% successful, Task 1b was 60% successful, 30% partially successful, and 10% failed, and Task 3c was 80% successful and 20% partially successful.

Overall, the most important difficulties encountered pertained to recalling the appropriate gestures to perform and understanding the icons that annotate the detected defects and the parts of the Bill of Materials. This highlights the need for training users before the actual system usage, which is expected to be the norm when the system is deployed in the foreseen setting.
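As a sketch of how the per-task outcomes reported in this section can be aggregated, the Python snippet below averages the share of fully successful completions for each application. The dictionary layout and function name are illustrative. The 80% value for lift Task 3c is inferred from its 20% partial-success rate, and the unweighted mean over tasks is an assumption about the aggregation, so rounding may differ slightly from aggregate figures quoted in the Discussion.

```python
from statistics import mean

# Share of participants (in %) who fully succeeded in each task, as reported in this section.
full_success = {
    "microelectronics": {"1": 70.0, "2": 40.0, "3": 100.0},
    "antenna": {
        "1a": 80.0, "1b": 100.0, "1c": 70.0, "1d": 80.0,
        "2a": 100.0, "2b": 100.0, "2c": 80.0,
    },
    "lift": {
        "1a": 100.0, "1b": 60.0, "1c": 100.0,
        "2a": 100.0, "2b": 100.0, "2c": 100.0,
        "3a": 100.0, "3b": 100.0,
        "3c": 80.0,  # inferred: 20% of participants were only partially successful
    },
}


def mean_success(per_task):
    """Unweighted mean of per-task full-success rates, in percent."""
    return mean(per_task.values())


site_means = {app: round(mean_success(tasks), 1) for app, tasks in full_success.items()}
for app, value in site_means.items():
    print(f"{app}: {value}% fully successful on average")
```

An unweighted mean treats every task equally regardless of how many sub-steps it involves; weighting by task or by participant would be an equally defensible choice and could shift the figures slightly.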

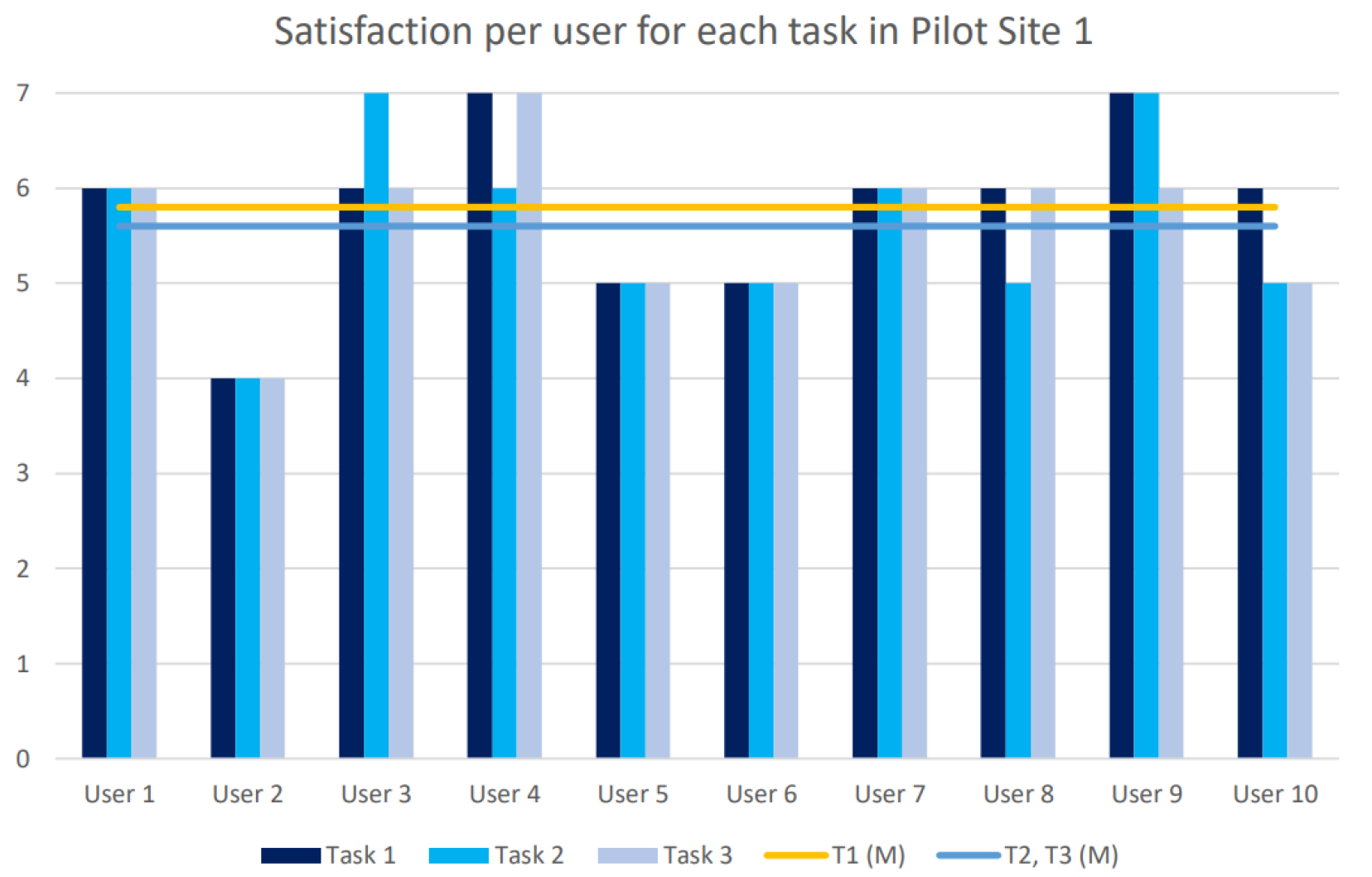

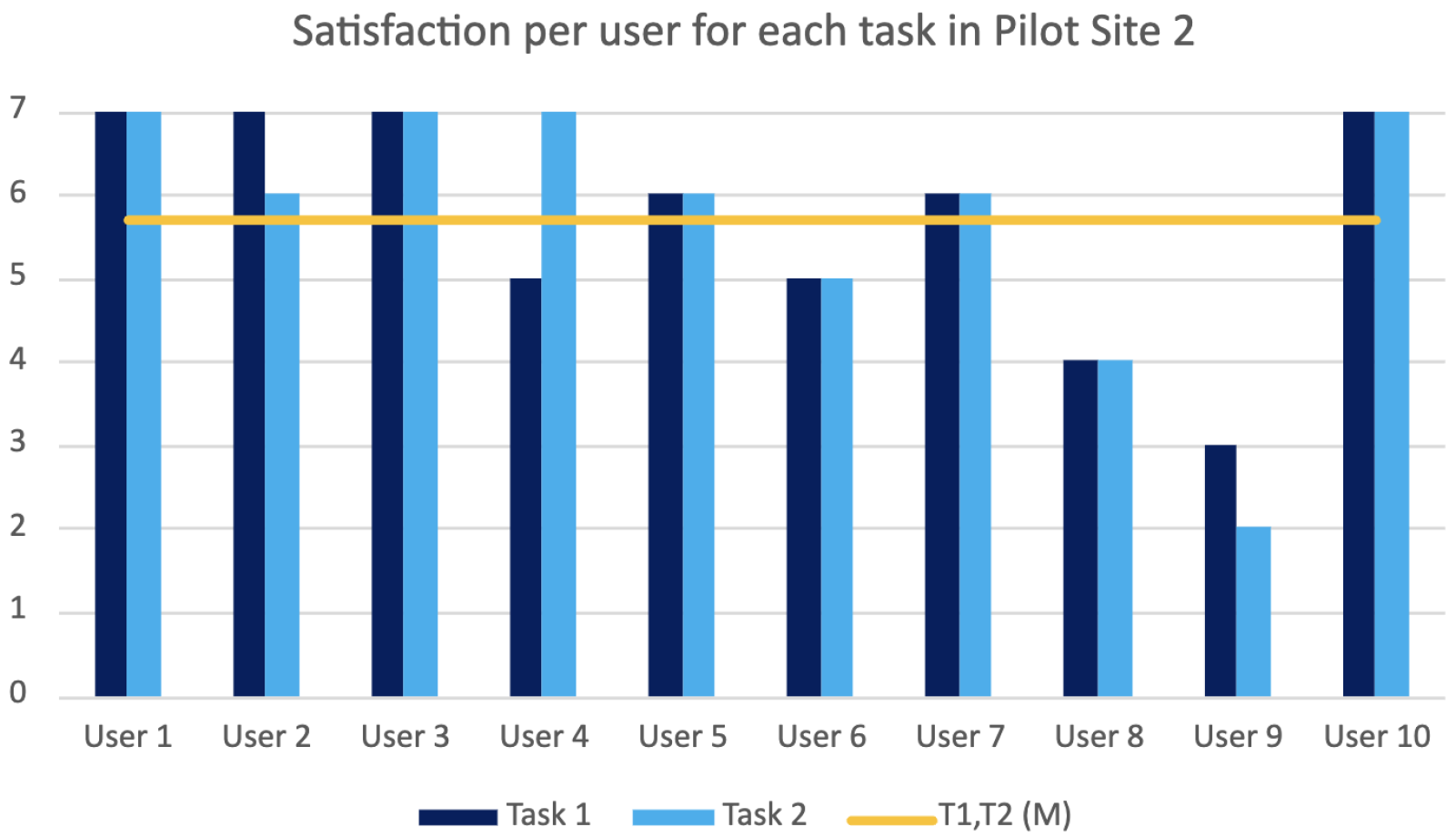

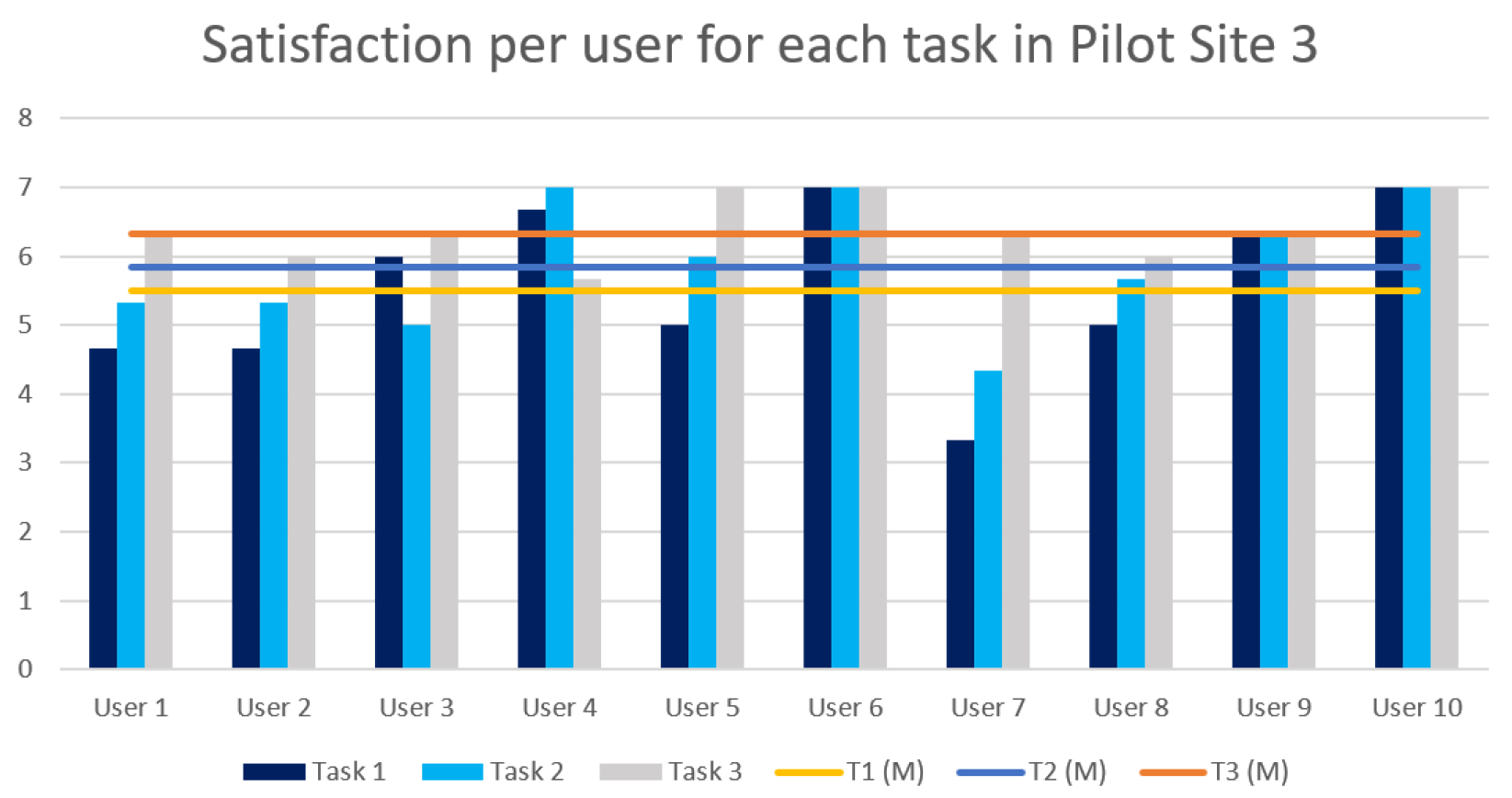

Satisfaction per task. In this section, we discuss the satisfaction of the participants regarding each task, based on their responses to the SEQ questionnaire. For each pilot site, the participants were asked to rate the difficulty of each task on a scale from 1 (= very difficult) to 7 (= very easy). The results are presented in detail in Table 5, Table 6 and Table 7.

For Pilot Site 1, as also illustrated in Figure 5, participants rated all three tasks as relatively easy, with average scores above 5.5, indicating good perceived usability. Task 1 was perceived as slightly easier than Tasks 2 and 3, though the differences are minor. The standard deviations are below 1.0, suggesting consistent responses across participants. For Pilot Site 2 (see Figure 6), the scores indicate generally positive task experiences. However, the higher standard deviations suggest greater variability in participant responses. For Pilot Site 3, results per user are provided in Figure 7. These scores show an ascending trend in satisfaction across tasks, with Task 3 achieving the highest mean. Task 1 had the lowest score and highest variability, possibly due to interface design challenges mentioned earlier in the manuscript.

It is noted that users were asked to rate each task as a whole rather than each sub-task separately.
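For readers who want to reproduce this kind of per-task summary, the Python sketch below computes the mean (M) and standard deviation (SD) of SEQ ratings on the 1 to 7 scale. The ratings are made-up placeholder values, not the study's data; the function name is hypothetical, and the use of the sample (n - 1) standard deviation is an assumption, since the text does not state which variant was used.

```python
from statistics import mean, stdev

SEQ_MIN, SEQ_MAX = 1, 7  # 1 = very difficult, 7 = very easy


def summarize_seq(ratings):
    """Return (mean, sample SD) for one task's SEQ ratings."""
    if any(not SEQ_MIN <= r <= SEQ_MAX for r in ratings):
        raise ValueError("SEQ ratings must lie between 1 and 7")
    return mean(ratings), stdev(ratings)


# Placeholder ratings for a single task (NOT data from the study).
example_ratings = [6, 5, 7, 6, 5, 6, 7, 5, 6, 6]
m, sd = summarize_seq(example_ratings)
print(f"M = {m:.2f}, SD = {sd:.2f}")
```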

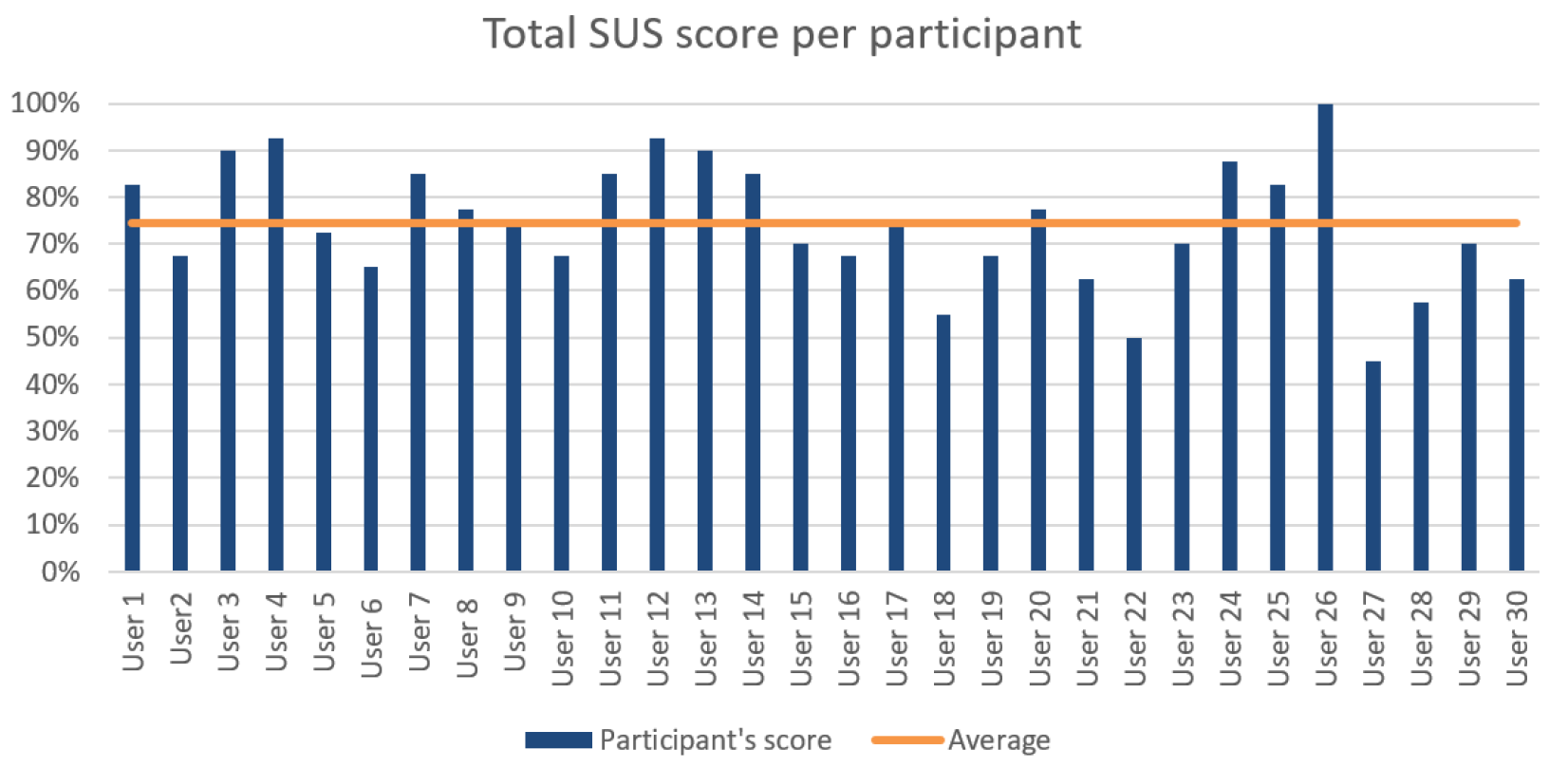

System usability. The SUS questionnaire findings were also very encouraging. The average SUS score was 74.43% (Figure 8), exceeding the required acceptability threshold of 70% [90]. Thus, the results indicate that the system usability scored a B grade (i.e., between 74.1% and 77.1%) [89], indicating satisfactory performance.

Table 8 summarizes the average score (M) and Standard Deviation (SD) results from the SUS questionnaire for each pilot site and overall.
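SUS scores such as those discussed above follow the questionnaire's standard scoring rule: each of the ten items is rated from 1 to 5, odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a score between 0 and 100. A minimal Python sketch of this rule follows. The function names and the example responses are illustrative, and the 70-point threshold is the one cited in the text.

```python
ACCEPTABILITY_THRESHOLD = 70  # acceptability threshold cited in the text


def sus_score(responses):
    """Compute a 0-100 SUS score from ten item ratings (1-5), given in questionnaire order."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly ten ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5


def is_acceptable(score):
    """True when the score reaches the acceptability threshold."""
    return score >= ACCEPTABILITY_THRESHOLD


# Placeholder responses from one hypothetical participant (NOT study data).
example_responses = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
score = sus_score(example_responses)
print(score, is_acceptable(score))
```

Averaging the per-participant scores, per site and overall, then gives the kind of M and SD summary that Table 8 reports.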

User impressions. In the final phase of the evaluation, a semi-structured interview was conducted with each participant. These interviews were very informative about participants’ opinions of the system and provided useful input for future improvements. The findings common to all the systems are the following:

- Users expressed that they were satisfied with the system.
- Only two participants felt nausea while wearing the AR glasses.
- The vast majority of users (93%) claimed that the system would be useful in assisting them to become more aware of the situation at hand, given the instant feedback and the clear UI.
- The majority of users (70%) highlighted that they would use it in their daily operations.

However, 10% of participants stated that they would prefer to keep using the equipment they already have, considering its high precision, whereas 33% of the participants pointed out that the specific AR glasses were not ergonomic or comfortable enough to wear throughout an entire work shift and that they would therefore prefer not to use the system in their daily operations. Finally, 6% of the participants did not find the system useful in their daily operations compared to their existing equipment, which they would not like to have substituted. Attributes of the system that were praised by the users were the following:

- The precision of gesture recognition;
- The simplicity of the interaction method;
- The high resolution and clear view of the AR glasses;
- The detailed information provided by the system;
- The real-time information provided all at once;
- The clarity and simplicity of the User Interface;
- The innovative technology;
- The ease of learning concerning the system;
- The prevention of dangers.

Pertinent user quotes include “Well done! The gestures work flawlessly. This is very important to allow me to focus on my job”; “I like the information that comes all at once right away when something happens with the production line”; “The visuals are clear and the graphics are nice”; “I liked that you can perform the tasks keeping a safe distance from the machine”.

On the other hand, features that users did not like were the following:

- The flickering of the GUI during the inspection process;
- The AR glasses used, which felt uncomfortable for long-term usage;
- The blurry image of the message caused by inappropriate placement of the AR headset;
- The rather small font size of information regarding the detected defects;
- The extra step of performing a thumbs-up gesture to start the inspection;
- The detection of defects that are already obvious to the operator, which was considered pointless, although the system was seen as having potential for defects that are harder to spot (from the antenna case study);
- The fact that detections were not visualized instantly on the AR glasses.

Suggestions made by the participants were to:

- ‘Lock’ detections at a certain position so that they do not move along with the user’s field of view, to avoid the flickering effect caused by the continuous change of location of the inspection target;
- Avoid highlighting materials which are good, in the microchip case study, and indicate to the user only materials which are rejectable or borderline acceptable;
- Add Key Performance Indicators (KPIs) that a product should reach;
- Provide statistics pertaining to the production line, such as the percentage of defective products identified over the worker’s shift;
- Use wireless AR glasses;
- Provide animated guidance on how to perform each gesture;
- Offer voice commands, which some participants felt could work better than gestures;
- Support multiple languages.

Discussion. This user study aimed to assess the usability and the efficiency of the proposed GUI widgets library as well as the intuitiveness of the gestures integrated in the system. Specifically, the study sought to determine how easy and user-friendly the system is for an operator to use, whether it supports operators in their daily job tasks, and how satisfied users are with it. To answer these questions and to identify the limitations and problems of the system, we observed participants’ behavior with the system and analyzed their answers to the questionnaires and their comments. Regarding the usability aspect, most users were able to complete the tasks in the scenarios, exhibiting a task performance of 83.7% (70% successful completion on average for Pilot Site 1, 87.1% for Pilot Site 2, and 94% for Pilot Site 3). The users themselves found the tasks rather easy to accomplish, with an average rating of 5.77 (5.67 for Pilot Site 1, 5.70 for Pilot Site 2, and 5.95 for Pilot Site 3) on a scale from 1 (very difficult) to 7 (very easy), thus yielding a rate of 82.42% for ease of use. An analysis of the reasons that led to the partially successful or unsuccessful completion of some tasks, together with the feedback provided by users during the interviews, highlighted five main strands:

- The learnability of the system without prior training;
- The clarity and readability of the UI;
- Barriers introduced by the dynamic nature of the system;
- Difficulty in recalling and executing multiple gestures;
- The language of the system.

In more detail, it turned out that, even after the short demonstration, participants could not always recollect the correct gestures to carry out or the meaning of specific icons. Nevertheless, the system is not intended to be a ‘walk-up-and-use’ system; instead, extensive training is expected to be provided before its actual usage in manufacturing operational environments. Furthermore, some users advocated for incorporating voice commands as an alternative, citing their ease of memorization. However, deploying this feature presents challenges, as certain pilot sites operate in noisy environments, while others require complete silence. In terms of readability, it was concluded that, especially for first-time users, it would be preferable to employ a larger font size and to position UI elements closer to the center of the screen; these changes are going to be introduced to the pertinent widgets and the proposed library. In addition, an important finding is that, although the system has the potential to provide real-time feedback and visualize defect information on top of a physical object, users suggested ‘locking’ the visualization in a specific place instead of rendering annotations in real time so that they constantly adapt to the user’s field of view. The option for locking and unlocking the visualization is also an imminent future extension of the widget library. Finally, support for multiple languages is a feature that is going to be integrated into the next version of the system.

In terms of suitability for supporting users in their daily job tasks, conclusions can be drawn on the one hand from the task success performance achieved, as discussed above, as well as from participants’ pertinent responses to the corresponding interview question. In this respect, it is notable that 86% of the participants suggested that they find the system useful in becoming more aware of a situation at hand they may be facing, while 70% indicated that they would use it in their daily job operations. Considering that the system is a research prototype, the results are very encouraging and promising for the potential of such context-aware adaptive AR technology for enhancing factory workers’ situational awareness for zero-defect operations. An analysis of users’ responses that were negative indicated that concerns reflect two important aspects that are of general research interest and extend beyond the scope of the current work:

- The comfort of the AR device, especially in the context of a full working shift;
- The trust of operators regarding new technologies and their reluctance to abandon their existing tools and approaches.

With regard to the first, additional studies that range beyond the current work need to be conducted. At the same time, it is hoped that, with advancements in hardware, AR glasses will become even more lightweight and comfortable in the future. Regarding the latter, this is currently an active area of research in the field of explainable AI, but also reflects concerns explored in the literature regarding the adoption of technological innovations in the workplace. Findings from relevant studies [91] indicate that resilience and opportunities for information and training are key ingredients for technology acceptance; considering therefore that the current evaluation was conducted without extensive information and training beforehand, it can be concluded that concerns expressed by workers are natural and expected.

Regarding the third research question, the satisfaction of participants with the system, analysis of the SUS score, and participants’ feedback during the interview yielded positive results. In this regard, the participants expressed positive feedback regarding the system’s user-friendliness, intuitiveness, and ease of learning, particularly regarding the level of granularity and feedback available in a single snapshot of the application. However, there were findings regarding shortcomings of the system based on the users’ opinions, as these have already been analyzed regarding usability improvements. Additional features were also suggested by participants and will be considered in future versions of the system. To summarize the findings from the evaluations based on our observations and the users’ feedback, it is believed that the system exhibits significant potential in assisting users in identifying and rectifying defects within production lines. Despite the majority of users lacking training and exposure to the specific system, they were able to complete the required scenarios successfully, demonstrating the system’s ease of learning and intuitive interface. Both experienced and inexperienced operators were able to utilize the system and carry out the necessary tasks, although it was noted that some users required additional time to become familiar with the gesture-based interface. This study is subject to two main limitations. Firstly, due to the in situ testing of the applications in only three manufacturing pilot sites, the number of users involved in the evaluation was limited. Secondly, the study lacked a cognitive walkthrough for the other two developed applications, which could have provided a more in-depth understanding of the cognitive processes involved in using the interface prior to its development. 
Nevertheless, this was partially compensated by the co-creation workshops that were conducted with representative end-users, which informed our understanding of the context of use and end-user requirements.

Guidelines for the design of gesture-based AR systems in industrial settings. This section summarizes the guidelines for the design of gesture-based AR systems, as these were produced based on the systematic review of the literature and complemented with findings from the cognitive walkthrough and user-based evaluation of the applications developed.

- (G1) Mid-air gestures are suitable for interactions when the user’s primary focus is not on the system.
- (G2) Users exhibit a preference for traditional interactions (e.g., point and click or touch), but the use of hand gestures makes the experience more immersive and enticing for the users, capturing their attention.
- (G3) Simple mid-air gestures are easier to remember. When complicated gestures are required, a training phase and a tutorial are beneficial.
- (G4) Users have small tolerance for delays and errors in gesture recognition.
- (G5) Dynamic gestures have been more widely integrated and tested in the literature; therefore, they constitute an approach that is safe to adopt.
- (G6) Choose the gestures to employ by taking into account the AR technology that the system is going to use as well as the functionality it needs to provide to the users.
- (G7) Follow a Human-Centered Design approach to decide upon the final gesture set, the functionality that the system will offer, as well as the information that it will embed in order to be suitable for the task at hand.
- (G8) Avoid overwhelming the user with unnecessary information (e.g., in our case, showing that everything is ok regarding a produced piece of equipment) and extraneous messages (e.g., in our case, different consecutive messages for defect detection and defect predictions).
- (G9) When providing information that originates from system reasoning (e.g., information on defect predictions), always provide an account of the underlying rationale.
- (G10) When providing information that originates from system reasoning (e.g., information on defect predictions), offer operators the possibility to optionally verify or contradict the correctness of the system’s decision-making.
- (G11) Offer the option for ‘locking’ the information visualization to a stable location in the user’s field of view, even if this ‘contradicts’ the dynamic nature and inherent possibilities of the AR system.
- (G12) Comfort of the equipment is of paramount importance for its adoption in a working setting.