Abstract

With the increasing demand for time-series analysis, driven by the proliferation of IoT devices and real-time data-driven systems, detecting change points in time series has become critical for accurate short-term prediction. The variability in patterns necessitates frequent analysis to sustain high performance by acquiring the hyperparameter. The Cumulative Sum (CUSUM) method, based on calculating the cumulative values within a time series, is commonly used for change detection due to its early detection of small drifts, simplicity, low computational cost, and robustness to noise. However, its effectiveness heavily depends on the hyperparameter configuration, as a single setup may not be universally suitable across the entire time series. Consequently, fine-tuning is often required to achieve optimal results, yet this selection process is traditionally performed through trial and error or prior expert knowledge, which introduces subjectivity and inefficiency. To address this challenge, several strategies have been proposed to facilitate hyperparameter optimizations, as traditional methods are impractical. Meta-learning-based techniques present viable alternatives for periodic hyperparameter optimization, enabling the selection of configurations that adapt to dynamic scenarios. This work introduces a meta-modeling scheme designed to automate the recommendation of hyperparameters for the CUSUM algorithm. Benchmark datasets from the literature were used to evaluate the proposed framework. The results indicate that this framework preserves high accuracy while significantly reducing time requirements compared to Grid Search and Genetic Algorithm optimization.

1. Introduction

The growing technological evolution in several domains has resulted in a large amount of data production over time. Massive amounts of data have been generated from various applications, such as application logs, sensor devices, and metrics monitoring, among others. In most domains, time-series analysis proves to be a needed and valuable strategy to provide early change detection, which provides adequate decision-making. For instance, advanced sensing technologies and methodologies, such as those proposed by [1], have highlighted the importance of high-precision change-point detection in diverse applications, including industrial monitoring and healthcare systems [2]. However, each time-series scenario demands methods capable of modeling an accurate analysis and adapting to each changing behavior. Meanwhile, traditional methods often face challenges in adapting to the dynamic nature of real-world scenarios, necessitating accurate strategies.

In offline data analysis, it is generally assumed that the data are generated by a nonstationary distribution, which means the behavior has not been the same throughout the data stream [3]. In this way, a notable problem that has been introduced by time-series studies is that changes might occur over time, which can lead to poor prediction performance and ineffective decisions if the set of hyperparameters is not adequate. However, due to the fundamental challenges in time-series patterns, in a real-world scenario, time series show highly nonlinear and nonstationary patterns [4].

Abrupt structural changes occur in many fields. To detect them, statistical analysis aims to identify these time points [5]. In general, the change-point analysis detects whether or not the change has occurred [6]. Due to structural changes that time series suffer from, the quantification and detection of these change points are considered a challenge. Considering change-point analysis and traditional techniques, the Cumulative Sum (CUSUM) algorithm has been widely used for detecting mean shifts since first introduced [7]. Due to its simplicity and ease of use beyond efficiency, it has been considered to be a base for several other proposed approaches, as it is robust to noise and has a low computational cost [8,9,10,11].

CUSUM is designed to identify the discrepancy among sequential numerical data. However, its performance can decrease due to missing representative data or incorrect hyperparameter configuration [12]. Thus, achieving good performance usually requires fine-tuning, which involves expert knowledge [13]. In this sense, to choose hyperparameters, it is necessary to solve fundamental problems in different scenarios to input the correct set, which is both time-consuming and abstract. To avoid a trial-and-error approach for hyperparameter configuration, different cases involving data analysis have been successfully solved with Automated ML (AutoML) over the years [14,15,16,17,18].

AutoML has been widely adopted in machine learning to enhance performance metrics [19], specifically in hyperparameter optimization (HPO), to tackle the inherent complexity and computational intensity of tuning machine learning models [18,20]. In the context of time series, AutoML methods have gained significant acceptance in forecasting [21] and data cleaning [22,23]. Time-series data originate from diverse sources, exhibiting significant variability, while user objectives for drift detection also differ. No single detection method consistently performs well across all scenarios, and tuning algorithm parameters is often complex and unintuitive without expert guidance. Moreover, the high cost of obtaining ground truth data makes the evaluation of detection algorithms a challenging task [24,25].

To do so, in this paper, we aim at using an AutoML-based solution to recommend a set of hyperparameters to CUSUM focusing on high predictive performance and low computational time consumption. In this manner, we propose a framework built on meta-learning (MtL) to automatically configure hyperparameters without human intervention. This strategy aims to make decisions in an objective, data-driven, and automated way [26]. Oriented by metadata information, a suitable set of hyperparameters may be automatically chosen based on performance in a certain scenario. The automatic process reduces human efforts and improves performance, adapting to the problem at hand [27]. In this context, MtL has been widely used as a strategy in various optimization problems [28,29,30].

Recent studies have applied MtL strategies in the context of change-point detection, leveraging their ability to adapt dynamically to evolving data streams. For instance, MtL techniques have been successfully employed for HPO in drift detection methods, significantly reducing manual interventions and computational costs associated with traditional trial-and-error strategies. Silva et al. [20], for example, utilized a lightweight unsupervised MtL strategy specifically designed to enhance drift detector tuning, demonstrating improved adaptability and efficiency in streaming data environments. Martins et al. [25] also applied meta-learning to dynamically adjust active learning parameters in data stream classification, illustrating substantial performance improvements under changing data distributions. However, despite these advancements, existing MtL applications primarily focus on generalized drift detection or classifier performance tuning. In contrast, our proposed approach uniquely integrates a dedicated meta-learning mechanism specifically tailored to automate hyperparameter recommendation for the CUSUM algorithm, directly addressing the gap of real-time parameter adjustment in statistical change-point detection tasks. Thus, this work presents a novel meta-recommendation framework explicitly designed to optimize CUSUM parameters, enabling more responsive, accurate, and computationally efficient drift detection in diverse real-world scenarios.

Thus, given the dependence of the CUSUM algorithm on hyperparameters, we suggest an MtL framework to dynamically recommend a suitable set of hyperparameters to CUSUM for change-point detection in time series. Our framework benefits from the collected signal based on a sliding window to determine the most suitable configuration by extracting relevant meta-features that characterize the underlying time-series dynamics. These meta-features are used to build a meta-learning model that maps time-series characteristics to a set of CUSUM hyperparameters. Our pipeline significantly reduces the time consumption in comparison to the other tested methods in order to find out the suitable hyperparameters, hence, enabling the use of the CUSUM in online environments with complex time-series behavior.

Here, we evaluated the two important aspects (predictive performance and time consumption) from the hyperparameters’ recommendation by MtL to the CUSUM prediction. To have a fair comparison, we analyzed the performance of the proposed framework in comparison to the Grid Search (GS) and Genetic Algorithm (GA) optimization approaches. The CUSUM’s hyperparameters were varied to analyze their relationship with the two main performance metrics. We summarize the main contributions of this work as follows:

- 1.

- Proposed an MtL framework to provide a guided selection of a suitable set of hyperparameters for CUSUM, which resulted to be a significant improvement in drift detection performance.

- 2.

- Analyzed the two-way relationship of drift detection performance and time consumption while varying methods and algorithms (ML baseline).

- 3.

- With the proposed automatic fine-tuning, the traditional CUSUM can be applied in scenarios with stationary time-series changes and even in online scenarios.

We believe that our contribution has potential for real-world applications where timely and accurate detection of change points is critical. In particular, industrial and engineering systems increasingly rely on data-driven monitoring for anomaly detection and predictive maintenance. For example, Sun et al. [11] applied CUSUM-based strategies for fault detection in engines, showcasing the algorithm’s effectiveness in high-reliability systems. Similarly, Kravchik and Shabtai [13] employed change detection methods for identifying cyber-attacks in industrial control systems, where early drift detection is vital for preventing disruptions. The proposed meta-learning approach, similar to Martins et al. [25], enhances these applications by eliminating the need for manual or heuristic hyperparameter selection, which is often infeasible in online or resource-constrained environments. By significantly reducing the time required to identify optimal configurations, our framework supports real-time responsiveness and adaptability, making it suitable for deployment in Industry 4.0 scenarios, such as smart manufacturing, energy systems, and autonomous infrastructure monitoring.

The remainder of this work is organized as follows: Section 2 gives an overview of mean shift detection, focusing on hyperparameters’ optimization, CUSUM, and MtL. Section 3 defines the task and its configuration steps, while Section 4 presents our proposed framework to recommend a proper set of hyperparameters for the CUSUM algorithm, the material used for experiments, the techniques, and metrics adopted. Section 5 shows the results and raises a discussion about them and their advantages and drawbacks. Section 6 presents the main conclusions and directions for future work.

2. Related Work

Time-series analysis has been widely explored in several fields. With respect to the mean shift task, it might occur in several learning problems. Thus, techniques have been developed to identify parts of the distribution in which the relationship between features and targets changes, which is known as concept drift detection [31]. Regarding the univariate distribution, the traditional techniques in detecting quickly abrupt changes have been well studied (e.g., CUSUM) [11,32]. In general, these methods are built as signal analyzers, which are based on the classifier’s performance or incoming data. However, the distribution changes over time, requiring updates or even retraining of models [33]. As mentioned above, the presence of concept drift may cause a significant drop in the accuracy of the models. The presence of changing distribution boosted researchers to develop flexible strategies and an adaptive mechanism to tackle several time-series scenarios. Taking into account the fine-tuning methods, MtL has been applied as an intelligent solution to face these challenges [1,34,35,36].

Designing and tuning ML algorithms requires substantial expertise. Hyperparameter optimization methods, such as Grid Search, Random Search, and Bayesian Search, are commonly employed to improve performance metrics [37]. Tuning involves selecting the hyperparameters for an ML model to achieve suitable performance on a specific task. In contrast, fine-tuning refers to the process of taking a pre-trained model and making minor adjustments to its parameters to adapt it to a new but related task. This approach leverages the existing knowledge of the model, allowing efficient adaptation with typically less data and computational resources [38]. Considering the plethora of possible configurations, automatic recommendation strategies often reduce processing time by pruning the search space, aiming to quickly identify the set of hyperparameters. When building ML models for a specific task, we might take advantage of the experience of related tasks, which can assist the decision-making process. MtL is concerned with observing and learning from ML experiments to provide better predictive performance. For that, it correlates the problem space and the algorithm space with the goal of maximizing performance.

Recent research has focused on improving hyperparameter optimization (HPO) for time-series methods. Jati et al. [21] proposed H-Pro, a technique that leverages data hierarchies to address test-validation mismatches in temporal cross-validation. Zhang et al. [39] introduced SSL-HPT, a self-supervised learning framework that significantly accelerates HPO compared to search-based methods. Wu et al. [40] developed an automated HPO approach using parallel genetic algorithms, demonstrating improved performance and reduced time costs for IoT time-series prediction. Fristiana et al. [41] conducted a comprehensive survey of HPO methods for deep learning in time series classification, finding that metaheuristic algorithms and Bayesian optimization are commonly used. These studies highlight the importance of efficient HPO techniques in enhancing the performance of time-series models across various domains, including forecasting, classification, and IoT applications [21,39,40,41]. However, all of them rely on costly computational methods or require storing long sequences of data. Conversely, Silva et al. [23] proposed a lightweight unsupervised method to tune algorithms for time series. Nevertheless, this method is limited to drift detectors, requiring specific changes to trigger the tuning process.

Concerning recommendations, an example is the model-agnostic meta-learning proposed by [42], which learns an initial set of parameters for the model (in general, a neural network). In this way, it can solve new learning tasks and be applied to a range of different models, achieving relevant performance. Another work in this area is presented by [43]. It proposes an algorithm built on meta-learning to learn from the prior distribution of the model parameters. The authors stated that applying MtL for recommendation speeds up model adaptation and improves performance.

In comparison to the mentioned works, our meta-learning proposal provides a solution focused on CUSUM. Considering its particularities mapped by particular meta-features we propose a more robust and efficient approach by leveraging knowledge from related tasks, enabling faster adaptation, reduced computational costs, and improved model performance across diverse time-series challenges, including hyperparameter optimization [37,42,43], concept drift detection [1,35,36], and recommendation systems [44,45,46].

3. Problem Statement

Due to the dynamical world, approaches must handle nonstationary distributions, i.e., detecting concept drift. The definition of concept drift refers to statistical properties of the target changes over time in unforeseen ways [47]. According to [48,49], given a period of time, the concept drift can be formally represented between two time points (t and ) by:

where represents the joint distribution at time t between X (set of input variables) and y (target variable); in this sense, denotes the joint distribution at time . Concept drift is related to covariance shift in the distribution, i.e., at time t, it can be inferred that concept drift refers to the joint probability change in X and y. Thus, can be defined as a composition of . In this context, concept drift refers to changes in comparison to input data and output, resulting in a variation in the distribution. In this paper, we focused on abrupt concept drift and its impact on predictive performance. To provide reliable results, timely detection was considered essential.

3.1. The Cumulative Sum Algorithm

CUSUM [7] is a statistical technique based on the Sequential Probability Ratio Test (SPRT) [50]. This algorithm is the well-known change-point detector and assumes the distribution before and after the change-point occurrence is completely specified. Suppose that the sequential observations represent the observed signal. The upper CUSUM statistic is defined as . Set . For a chosen and h, drift and threshold, respectively, the time of a change in the signal is estimated by observing when exceeds h. After a change has been detected, g is reset to zero, and the last t for which is taken as an estimate of the change time. The hyperparameter called drift is related to drift correction. Thus, it prevents false positives in the absence of an actual change or a slow drift.

The CUSUM algorithm detects the mean shift of the signal by checking when the test statistic g exceeds some threshold h. Summing consecutive signal values, a large g indicates the mean has changed. Therefore, CUSUM is a sequential analysis technique that accumulates deviations from target values. For that, there must be a correct hyperparameterization for each scenario.

Based on the literature, we inferred there are many improvements in terms of the MtL process and hyperparameters’ recommendation. Regarding time-series analysis and CUSUM, the change-point detection poses challenges not only about the signal structure but also that the MtL process cannot be costly. Therefore, the proposed approach implies identifying suitable hyperparameter values for the CUSUM in order to automate its process.

3.2. Meta-Learning for Tuning

Given a task from a plethora of options, the basic configuration and the appropriate steps to hyperparameters’ recommendation built on MtL can be designed. The pipeline is built on two macro steps: (1) Meta-learning modeling; and (2) Meta-learning predictions. The first step consists of learning from historical information. Regarding the second step, ML aims to automatically predict a suitable configuration for algorithms to predict them according to the input data.

Formally, let denote the CUSUM algorithm associated with a hyperparameter space . A vector of hyperparameters for is denoted by , where . Given a data set composed of meta-features from a distribution , we have combinations of CUSUM and respective hyperparameters. As we are facing a multi-output problem, is associated with a single instance.

The hyperparameters’ recommendation problem is described as how to find a function able to map . There are several ways and alternatives for modeling the mapping function. In this paper, we followed through meta-learning, which is considered a fast solution and task-independent for not requiring information from the input data set at hand [51]. In summary, the pipeline consists of data preparation (metadata), inducting ML models to learn from historical information, and then tuning ML algorithms.

In the context of meta-learning, a meta-database is a repository that stores meta-examples, each representing a learning problem along with the performance metrics of various algorithms applied to it. Each meta-example comprises a set of descriptive features, known as meta-features, which characterize the problem’s properties, and a target attribute, referred to as the meta-target, indicating the optimal algorithm or performance outcome for that problem. This structure facilitates the analysis and selection of suitable algorithms for new problems by leveraging prior knowledge encapsulated in the meta-database [52].

4. Experimental Methodology

We evaluated the performance of the proposed approach by assessing the effectiveness of CUSUM detection using detection metrics, specifically the F1-Score. It is worth noting that our proposal offers an integrated solution for hyperparameter tuning; however, our primary focus is on improving the drift detection rate. Since we want to explore how chosen hyperparameters affect the performance and propose a meta-modeling, we use straightforward comparison using CUSUM to provide an overview of meta-recommendation achievements when considering both hyperparameters ( and h). Regarding the whole process, the pipeline is built on two macro steps: (1) meta-learning modeling; and (2) meta-learning predictions. The first step consists of learning from historical information. Regarding the second step, ML aims to automatically quickly find a better set of ML models by predicting them according to the input data. In summary, the pipeline consists of data preparation (metadata), inducting ML models to learn from historical information, and then tuning hyperparameters from ML algorithms.

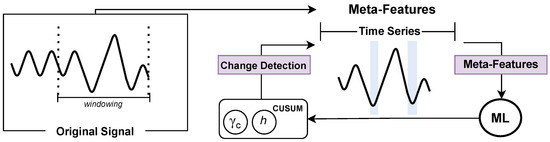

In order to obtain a robust and well-performing pipeline, we propose the architecture shown in Figure 1. The framework is fashioned in five steps: (1) Metadata preparation; (2) Meta-feature extraction; (3) Meta-model induction; (4) Hyperparameters’ prediction; (5) Evaluation step, which defines the meta-targets, the classes/labels to be predicted by the MtL recommender system. In summary, it checks for abrupt change detection in the original signal.

Figure 1.

Overview of the problem and experimental framework.

In this context, we propose a framework, which is built on meta-models to configure CUSUM’s and h hyperparameters to detect change points in time series. An MtL approach was introduced to generate metadata that supports the automatic selection of CUSUM hyperparameters, enabling faster adaptation than GS while maintaining accuracy. The next subsections present the proposed approach in detail, the datasets employed in this work, the evaluation metrics used, and the experimental setup.

To assess the impact of different meta-feature domains on the meta-learning process, we conducted an analysis of the extracted meta-features using the TSFEL (Time-Series Feature Extraction Library) package. This analysis aimed to evaluate how different types of meta-features contribute to both predictive performance and computational efficiency. The extracted meta-features were categorized into statistical, temporal, and spectral domains, and experiments were conducted selectively using different combinations of these feature sets.

In this study, we defined seven experimental setups to examine the effect of each meta-feature category: (1) Using all available TSFEL features; (2) Using only statistical meta-features; (3) Using only temporal meta-features; (4) Using only spectral meta-features; (5) Combining statistical and spectral features; (6) Combining statistical and temporal features; and (7) Combining temporal and spectral features. Each of these configurations was evaluated in terms of F1-Score and processing time.

4.1. Metadata Preparation and Meta-Feature Extraction

Data preparation is a typical process usually adopted in ML pipelines. Although inspired by human learning, our proposal involves MtL techniques to compose our dataset. Regarding the MtL workflow in this work, it is considered a learning process applied to metadata, which consists of a large set of features extracted from the input time series. This input signal consists of a time series from a fixed-size slide window. Each part of this distribution represents a sub-dataset of the previous one. Following a divide-and-conquer strategy, we segment the input time series into smaller, fixed-size windows. From each segment, relevant statistical, temporal, and spectral characteristics are extracted to compose the metadata, ensuring a detailed representation of the time-series dynamics.

Due to ML pipelines, features must be extracted from time series to turn them into a set of properties that characterizes the distribution. Composing the second step, those features were obtained from TSFEL. Introduced by [53], this package computes features across temporal, statistical, and spectral domains built to provide fast exploratory data analysis and feature extraction. Then, the extracted features, called meta-features, are combined with meta-targets, which are the most suitable CUSUM hyperparameters collected based on GS’s performance. For each window, we saved the set of hyperparameters that had the best performance for the respective time series. Using the meta-database, the MtL step induces a meta-model.

4.2. Regression Meta-Model (Recommender)

Following the framework, the third step is related to training the ML algorithm. Since the proposed method is based on MtL strategies, an associated base-learner is required. Regression models provide the final decision of a given sample based on its feature vector. Several of them were selected based on their proven capabilities, interpretability, and suitability in related problems and because there are well-known algorithms with relevant results in different problems, as follows: Decision Tree (DT), which is widely applied to represent a series of rules that lead to a class or value. It was chosen due to its interpretability and straightforward handling of nonlinear relationships, essential for meta-learning tasks involving clear rule-based decision making [54,55]; Random Forest (RF) was included for its robustness, as it aggregates multiple DTs, reducing variance and improving generalization capabilities. It is particularly advantageous in managing high-dimensional metadata and noisy datasets and provides more accurate predictions [56,57]; Support Vector Machine (SVM), which is a statistical learning algorithm, used for supervised ML, was selected due to its effectiveness in handling complex patterns and providing stable predictions in high-dimensional feature spaces. SVM excels in achieving precise regression outcomes [58,59].

By explicitly outlining these choices, our approach benefits from the complementary strengths of these models, enabling a robust and comprehensive meta-learning solution for hyperparameter recommendation in time-series analysis. This strategic combination aligns seamlessly with the framework’s overall objectives, supporting the adaptability required for handling diverse time-series scenarios and effectively addressing practical challenges such as computational efficiency, real-time responsiveness, and adaptability to concept drift. Moreover, employing different models allows us to explicitly validate the advantages and limitations of each, thereby providing insights into their performance boundaries and ensuring a more informed and balanced selection strategy in practical applications.

4.3. Datasets

To compare the classical CUSUM and the proposed solution, predictive performance, and time processing were considered. For that, we adopted benchmark datasets. The benchmark datasets include both real and synthetic ones that are used in the literature in general, which were collected from various online sources, including WorldBank, Eurostat, GapMinder, and Yahoo Finance. Before performing the experiments, all datasets underwent meticulous preprocessing to ensure the reliability and reproducibility of our findings. The preprocessing pipeline involved initial data cleaning, including the removal of missing values and anomalies identified through exploratory data analysis. Subsequently, normalization techniques were applied to standardize the scales across the different features, thus avoiding potential biases during meta-model training and ensuring consistent feature contributions. Table 1 summarizes the main aspects of each dataset used, outlining the number of samples, abrupt changes, and the percentage of detected change points.

Table 1.

Summary of the datasets used in the experiment.

4.4. Additional Optimization Methods

In addition to the proposed framework, two additional methods were explored in this experiment: Grid Search and Genetic Algorithm optimization. These methods were chosen because they are used in most of the hyperparameter optimization studies [61,62,63].

Regarding the Genetic Algorithm, it was conducted using an evolutionary algorithm software modeling package called Distributed Evolutionary Algorithms in Python 3.8 (DEAP) [64]. Selection was performed by roulette selection. Additional runtime parameters were determined empirically, which are: population size—10; generations—100; mutation rate—; crossover rate—.

For the Grid Search, at each step, all possible combinations were analyzed, storing the best performance, considering F1-Score and time processing. However, to choose the best combination, meta-models were used, as described in Section 4.2. To improve the exploratory analysis, not only a single meta-model for each hyperparameter was inducted, but also multi-output regression meta-models to predict both.

The main contributions made by this research, supported by the experimental results, were summarized under four perspectives. First, the results were presented considering the predictive performance of the meta-learners and the two traditional hyperparameters’ optimization previously mentioned (GS and GA). Second, further details were explored, and the impact of the recommendation procedure was discussed. Afterward, the meta-feature influence on the recommendation was assessed. Finally, an analysis was conducted considering the advantages and drawbacks of the proposed hyperparameters’ optimization framework.

Among the deterministic approaches, the GS is the most basic hyperparameters’ optimization method due to its simplicity. Also known as full factorial design, GS is fashioned on a finite set of values for each hyperparameter. To do so, it evaluates the Cartesian product of these sets to find a suitable one. However, GS is an exhaustive search method that requires the democratization of the hyperparameter space. It suffers from the number of function evaluations that grow exponentially with the dimensionality of the configuration space. Regardless of its drawbacks, it is still the most used method in the literature [65,66].

Considering probabilistic optimization methods, GA is a population-based method that evolves its population by applying mutations and crossover to generate a better configuration. In tuning tasks, it can be useful for complex optimization problems that require a large number of hyperparameters. Therefore, GA helps to find an optimal set of values in a domain [67].

4.5. Implementations

To carry out the experiments, the Python language was used, as well as the respective standard libraries for data manipulation. Taking into account the CUSUM algorithm, the detecta package was used, which is a module that provides the implementation of CUSUM. The CUSUM algorithm was used to identify the change points in the time series. One of the ways to implement CUSUM is to calculate the Cumulative Sum of positive and negative changes, comparing it with a threshold, where, if this threshold is reached, the change is detected, and the alert occurs, then the sum is reset, and the analysis starts again. In addition, CUSUM also has a hyperparameter called drift, which is introduced to avoid detecting changes in the absence of a real or slow change. Thus, the CUSUM’s performance in detecting change points is directly related to the input set of hyperparameters. As a default in the experiment, the values of were used for threshold while for the drift, we used: . From these, the performance analysis was elaborated.

4.6. Evaluation Metrics

The performance was evaluated from different scenarios through the F1-Score, which is calculated through the Precision and Recall. Precision and Recall are calculated by analyzing the Confusion Matrix. The respective diagonal values of this matrix are True Positive (TP) and True Negative (TN), which are divided by the sum of the values of the entire matrix (n). Recall and Precision are often used to evaluate the effectiveness of classification methods based on False Negative (FN) and False Positive (FP). In this work, these metrics were used for a fair comparison of the quality of the results obtained. Furthermore, the processing time from metadata preparation to prediction was computed.

For a fair analysis, the metrics were compared to the GS and the GA optimization to find a suitable set of hyperparameters and optimize them, respectively. Thus, it is possible to estimate the overall work execution with an additional perspective of performance analysis. In the experiments, the time consumption was calculated as the average of 30 runs.

5. Results and Discussions

5.1. Predictive Performance

The predictive performance obtained by the proposed approach, the baseline methods, and each time consumption is presented in Table 2. Observing each performance, in general, we infer that the best results were obtained from GS and GA methods, as expected. Regarding the Grid Search, it happens because all the possibilities in the predefined scenario were combined and tested in a sort of grid. The combination that yielded the best performance was selected. Considering GA’s performance, as it is based on an evolutionary process, it is efficient in comparison to traditional methods. Due to its optimization capabilities, both continuous and discrete values are tested to find an optimal result, which is an advantage in comparison to GS. However, it is time-consuming.

Table 2.

Mean values F1-Score and time for each combination of algorithm and dataset. We took advantage of the original and multi-output (MO) version of each algorithm. The bold values correspond to the best mean result for each metric in the dataset.

From meta-models, some particular results can be observed. As a single meta-model for each hyperparameter, multi-output meta-models were explored. When time processing is considered, smooth variability can be observed. Due to the small number of hyperparameters, the discrepancy between these methods is low. However, as the set of hyperparameters increases, this difference tends to be more expressive.

Comparing GS and GA optimization to each meta-model, regardless of dataset or meta-model, the proposed framework is less time-consuming than those. This is mostly related to the fact that, after the induction process, as meta-models learn from a meta-dataset, to predict them is faster than finding out each set of hyperparameters to each time series, as the training process is executed only once.

5.2. Analysis of Recommendation Procedures

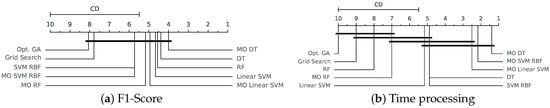

We also used the Friedman statistical test [68] to compare the performance of each strategy. Then, we used the Nemenyi post hoc test [69] with confidence to create the critical distance (CD) diagrams in Figure 2a,b, for F1-Score and time processing.

Figure 2.

Comparison of F1-score and time performances among the algorithms, and the meta-model versions with multi-output according to Nemenyi test with .

The evaluation of predictive performance using the F1-Score suggests no statistically significant differences among the tested methods (GS, GA optimization, and the proposed meta-learning models) at . This indicates that the proposed HPO framework achieves comparable accuracy to GS and GA, both of which are widely regarded as strong optimization baselines. However, a different trend is observed when analyzing computational efficiency. Statistically significant differences were found in time processing across all methods, highlighting the advantage of the proposed approach. Among the tested strategies, multi-output meta-models demonstrated the most conservative behavior in terms of computational cost, followed by single meta-models trained for each hyperparameter. Notably, the rank differences in processing time are substantial, reinforcing the impact of computational efficiency in hyperparameter selection.

When examining the practical implications of these findings, it becomes evident that the proposed HPO framework not only maintains high predictive accuracy comparable to GS and GA but also significantly reduces processing time due to its meta-learning-based design. This reduction in computational overhead is a crucial advantage, particularly in dynamic environments where rapid hyperparameter tuning is essential for real-time applications.

5.3. Meta-Feature Analysis

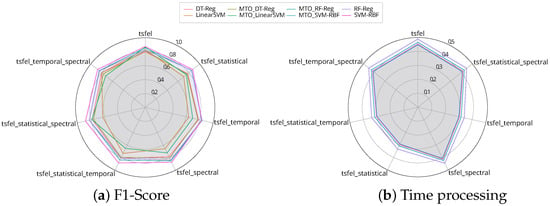

Taking advantage of the MtL framework for further analysis, we observed the impact of each meta-feature in inducting meta-models. Figure 3 shows seven different cases regarding each meta-feature domain and each evaluated metric: (1) TSFEL refers to extracting all domains from time series; (2) Extracting only statistical meta-features; (3) Only temporal ones; (4) Extracting meta-features in spectral domain; (5, 6, and 7) Combining each of the meta-features from TSFEL library.

Figure 3.

F1-Score and time processing from each meta-feature from TSFEL library.

Considering the predictive performance, we observed that the meta-features, regardless of the domain, did not significantly influence the performance. It is most related to the type of the meta-model. Instead, the meta-features domain had a significant effect on the time processing. As shown in Figure 3b, extracting all meta-features available in the TSFEL library and the spectral ones were the highest time-consuming due to their properties. The temporal was the lower focus on time.

The obtained results suggest that the meta-feature domains have considerable differences among them, and some are time-consuming. This study also shows that the predictive performance is similar for each case because these meta-feature domains did not influence the meta-model induction, indicating that the hyperparameters’ optimization framework might deal with different scenarios.

In our framework, we employed the TSFEL to generate comprehensive meta-features grouped into three primary domains: statistical, temporal, and spectral. Statistical features such as mean, variance, skewness, and kurtosis were particularly valuable in capturing the distributional properties of the data and contributed substantially to model performance by providing baseline characteristics of the time series. Temporal features, including autocorrelation and zero-crossing rate, captured the time-dependent behaviors and periodicities in the data, further enhancing the predictive accuracy of the framework.

Spectral features, such as spectral entropy and frequency-domain statistics, were instrumental in identifying patterns in the frequency domain, allowing the meta-model to detect subtle and complex variations in the time series. Our experiments indicated that combining statistical and temporal features generally resulted in higher predictive performance, suggesting their complementary nature in capturing different aspects of the time-series dynamics. Conversely, while spectral features added valuable insights, they significantly increased computational overhead due to the higher complexity of frequency-domain calculations.

Overall, this detailed meta-feature analysis highlighted that a carefully balanced combination of statistical and temporal features could effectively optimize both prediction accuracy and computational efficiency. Further research could focus on identifying feature-selection strategies to minimize computational demands while maintaining high predictive capability, particularly relevant for real-time applications.

5.4. MtL Framework: Advantages and Drawbacks

The results showed that there is a significant reduction in the time consumption when using the MtL framework. In particular, the best predictive results were achieved with GS and GA optimization. However, the proposed approach does not have statistical differences with these methods, which shows a considerable advantage and promising applications.

According to the reported results and focusing on time processing, the MtL framework demonstrated a superior capability to deal with different scenarios, maintaining the average time consumption. However, to achieve good performance, it requires an ideal set of hyperparameters in the metadata preparation stage, which might be considered a disadvantage. Given its reliance on meta-models, representative metadata, and target variables must be carefully prepared to ensure effective learning.

The primary advantage of the MtL framework lies in its structural simplicity. Unlike GA optimization, it does not require defining a complex objective function, simplifying implementation considerably. Furthermore, the MtL approach can leverage synthetic datasets with clearly identified change points to effectively train meta-models.

Despite these advantages, deploying the MtL framework in real-world streaming environments presents several challenges. One critical challenge is effectively managing concept drift in live-streaming data, where model accuracy may degrade over time if drift is not promptly and adequately addressed. Thus, ensuring the framework can continuously adapt through incremental updates without significant performance degradation is crucial. Additionally, addressing the computational resource constraints and latency considerations inherent in live data processing environments will be essential to ensure practicality and scalability in real-world scenarios.

Moreover, potential limitations exist regarding the generalizability of the MtL framework to unseen data distributions. Since the meta-learning approach heavily depends on previously observed metadata, it may encounter difficulties in accurately predicting optimal hyperparameters for datasets exhibiting characteristics significantly different from the training distributions. Thus, ensuring diversity and representativeness in the training metadata is vital to enhance the robustness and broader applicability of the framework.

6. Conclusions and Future Work

In this paper, we proposed an MtL framework to recommend a set of hyperparameters for CUSUM. For that, we have extracted meta-features to describe a time series and an ideal hyperparameter set was obtained based on GS to compose the meta-dataset. Concerning the highlighted subjects, the framework had no statistical difference in comparison to GS and GA optimization, which means that it has competitive predictive performance. Moreover, it has shown less time consumption than the others.

In future research, we aim to extend the experimental evaluation to other drift scenarios and types of concept drift to gather further insights into the proposed framework. Furthermore, we intend to investigate the integration of deep learning techniques into our MtL framework. Leveraging deep neural networks could enhance feature extraction and automated hyperparameter tuning processes, potentially improving prediction accuracy and further reducing computational overhead. Such an integration might enable the framework to dynamically adapt to complex and evolving time series data, making it more robust and effective for real-world applications.

Another promising direction involves extending our proposed MtL framework to other drift detection algorithms beyond CUSUM to evaluate its versatility and effectiveness across diverse methodologies. Exploring these extensions would validate the framework’s broader applicability and potentially contribute to a generalized solution for hyperparameter optimization in various concept drift detection contexts.

Additionally, future research should explore real-world deployment challenges, particularly addressing the handling of concept drift in live-streaming data environments. Practical deployments must consider the computational constraints and latency requirements inherent to real-time processing. The framework may benefit from incremental learning methods, capable of continuously updating the model without extensive retraining phases. It is also crucial to develop robust drift detection and adaptation mechanisms to maintain reliable performance over extended operational periods, thus enhancing the usability and applicability of the MtL framework in diverse industrial settings.

Author Contributions

Writing—original draft, J.F.L.; Supervision, S.B.J. and L.F.d.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Alalhareth, M.; Hong, S.C. Enhancing the Internet of Medical Things (IoMT) Security with Meta-Learning: A Performance-Driven Approach for Ensemble Intrusion Detection Systems. Sensors 2024, 24, 3519. [Google Scholar] [CrossRef]

- Gama, J. Knowledge Discovery from Data Streams; CRC Press: Boca Raton, FL, USA, 2010. [Google Scholar]

- Zhang, W.; Bifet, A. FEAT: A Fairness-Enhancing and Concept-Adapting Decision Tree Classifier. In Proceedings of the Discovery Science; Appice, A., Tsoumakas, G., Manolopoulos, Y., Matwin, S., Eds.; Springer: Cham, Switzerland, 2020; pp. 175–189. [Google Scholar]

- Gonçalves, P.M., Jr.; de Carvalho Santos, S.G.; Barros, R.S.; Vieira, D.C. A comparative study on concept drift detectors. Expert Syst. Appl. 2014, 41, 8144–8156. [Google Scholar] [CrossRef]

- Basseville, M.; Nikiforov, I.V. Detection of Abrupt Changes: Theory and Application; Prentice Hall: Englewood Cliffs, NJ, USA, 1993; Volume 104. [Google Scholar]

- Aminikhanghahi, S.; Cook, D.J. A survey of methods for time series change point detection. Knowl. Inf. Syst. 2017, 51, 339–367. [Google Scholar] [CrossRef] [PubMed]

- Page, E.S. Continuous inspection schemes. Biometrika 1954, 41, 100–115. [Google Scholar] [CrossRef]

- Luo, Y.; Li, Z.; Wang, Z. Adaptive CUSUM control chart with variable sampling intervals. Comput. Stat. Data Anal. 2009, 53, 2693–2701. [Google Scholar] [CrossRef]

- Oh, H.; Lee, S. Modified residual CUSUM test for location-scale time series models with heteroscedasticity. Ann. Inst. Stat. Math. 2019, 71, 1059–1091. [Google Scholar] [CrossRef]

- Lee, S.; Lee, S. Exponential family QMLE-based CUSUM test for integer-valued time series. Commun. Stat.-Simul. Comput. 2023, 52, 2022–2043. [Google Scholar] [CrossRef]

- Sun, H.; Cheng, Y.; Jiang, B.; Lu, F.; Wang, N. Anomaly Detection Method for Rocket Engines Based on Convex Optimized Information Fusion. Sensors 2024, 24, 415. [Google Scholar] [CrossRef]

- Lee, S.; Lee, S.; Moon, M. Hybrid change point detection for time series via support vector regression and CUSUM method. Appl. Soft Comput. 2020, 89, 106101. [Google Scholar] [CrossRef]

- Kravchik, M.; Shabtai, A. Detecting cyber attacks in industrial control systems using convolutional neural networks. In Proceedings of the 2018 Workshop on Cyber-Physical Systems Security and PrivaCy, Toronto, ON, Canada, 19 October 2018; pp. 72–83. [Google Scholar]

- Li, Y.; Zha, D.; Venugopal, P.; Zou, N.; Hu, X. Pyodds: An end-to-end outlier detection system with automated machine learning. In Companion, Proceedings of the Web Conference 2020, New York, NY, USA, 20–24 April 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 153–157. [Google Scholar]

- Bhatnagar, A.; Kassianik, P.; Liu, C.; Lan, T.; Yang, W.; Cassius, R.; Sahoo, D.; Arpit, D.; Subramanian, S.; Woo, G.; et al. Merlion: A machine learning library for time series. arXiv 2021, arXiv:2109.09265. [Google Scholar]

- Lai, K.H.; Zha, D.; Wang, G.; Xu, J.; Zhao, Y.; Kumar, D.; Chen, Y.; Zumkhawaka, P.; Wan, M.; Martinez, D.; et al. TODS: An automated time series outlier detection system. Proc. AAAI Conf. Artif. Intell. 2021, 35, 16060–16062. [Google Scholar] [CrossRef]

- Talpur, F.; Korejo, I.A.; Chandio, A.A.; Ghulam, A.; Talpur, M.S.H. ML-Based Detection of DDoS Attacks Using Evolutionary Algorithms Optimization. Sensors 2024, 24, 1672. [Google Scholar] [CrossRef]

- Westergaard, G.; Erden, U.; Mateo, O.A.; Lampo, S.M.; Akinci, T.C.; Topsakal, O. Time Series Forecasting Utilizing Automated Machine Learning (AutoML): A Comparative Analysis Study on Diverse Datasets. Information 2024, 15, 39. [Google Scholar] [CrossRef]

- LeDell, E.; Poirier, S. H2O AutoML: Scalable automatic machine learning. In Proceedings of the AutoML Workshop at ICML, Vienna, Austria, 17–18 July 2020. [Google Scholar]

- Salehin, I.; Islam, M.S.; Saha, P.; Noman, S.; Tuni, A.; Hasan, M.M.; Baten, M.A. AutoML: A systematic review on automated machine learning with neural architecture search. J. Inf. Intell. 2024, 2, 52–81. [Google Scholar] [CrossRef]

- Zhang, P.; Jiang, X.; Holt, G.M.; Laptev, N.P.; Komurlu, C.; Gao, P.; Yu, Y. Self-supervised learning for fast and scalable time series hyper-parameter tuning. arXiv 2021, arXiv:2102.05740. [Google Scholar]

- Shende, M.K.; Feijoo-Lorenzo, A.E.; Bokde, N.D. cleanTS: Automated (AutoML) tool to clean univariate time series at microscales. Neurocomputing 2022, 500, 155–176. [Google Scholar] [CrossRef]

- Silva, R.P.; Junior, S.B.; Zarpelão, B.B.; De Melo, L.F. Unsupervised Tuning for Drift Detectors Using Change Detector Segmentation. IEEE Access 2024, 12, 54256–54271. [Google Scholar] [CrossRef]

- Van den Burg, G.J.; Williams, C.K. An evaluation of change point detection algorithms. arXiv 2020, arXiv:2003.06222. [Google Scholar]

- Martins, V.E.; Cano, A.; Junior, S.B. Meta-learning for dynamic tuning of active learning on stream classification. Pattern Recognit. 2023, 138, 109359. [Google Scholar] [CrossRef]

- Vanschoren, J. Meta-learning: A survey. arXiv 2018, arXiv:1810.03548. [Google Scholar]

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for hyper-parameter optimization. In Proceedings of the 25th Annual Conference on Neural Information Processing Systems (NIPS 2011), Granada, Spain, 12–15 December 2011; Advances in Neural Information Processing Systems 24. pp. 1–9. [Google Scholar]

- Abraham, A. Meta learning evolutionary artificial neural networks. Neurocomputing 2004, 56, 1–38. [Google Scholar] [CrossRef]

- Wang, X.; Smith-Miles, K.; Hyndman, R. Rule induction for forecasting method selection: Meta-learning the characteristics of univariate time series. Neurocomputing 2009, 72, 2581–2594. [Google Scholar] [CrossRef]

- Bui, K.H.N.; Yi, H. Optimal hyperparameter tuning using meta-learning for big traffic datasets. In Proceedings of the 2020 IEEE International Conference on Big Data and Smart Computing (BigComp), Busan, Republic of Korea, 19–22 February 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 48–54. [Google Scholar]

- Sebastiao, R.; Gama, J. A study on change detection methods. In Proceedings of the 14th Portuguese Conference on Artificial Intelligence, EPIA, Aveiro, Portugal, 12–15 October 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 12–15. [Google Scholar]

- Wang, D.; Yu, Y.; Rinaldo, A. Univariate mean change point detection: Penalization, CUSUM and optimality. Electron. J. Stat. 2020, 14, 1917–1961. [Google Scholar] [CrossRef]

- Krawczyk, B.; Minku, L.L.; Gama, J.; Stefanowski, J.; Woźniak, M. Ensemble learning for data stream analysis: A survey. Inf. Fusion 2017, 37, 132–156. [Google Scholar] [CrossRef]

- Ali, S.; Smith-Miles, K.A. A meta-learning approach to automatic kernel selection for support vector machines. Neurocomputing 2006, 70, 173–186. [Google Scholar] [CrossRef]

- Rossi, A.L.D.; de Leon Ferreira, A.C.P.; Soares, C.; De Souza, B.F. MetaStream: A meta-learning based method for periodic algorithm selection in time-changing data. Neurocomputing 2014, 127, 52–64. [Google Scholar] [CrossRef]

- Gabbay, I.; Shapira, B.; Rokach, L. Isolation forests and landmarking-based representations for clustering algorithm recommendation using meta-learning. Inf. Sci. 2021, 574, 473–489. [Google Scholar] [CrossRef]

- Truong, A.; Walters, A.; Goodsitt, J.; Hines, K.; Bruss, C.B.; Farivar, R. Towards Automated Machine Learning: Evaluation and Comparison of AutoML Approaches and Tools. In Proceedings of the 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 4–6 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1471–1479. [Google Scholar]

- Ali, Y.A.; Awwad, E.M.; Al-Razgan, M.; Maarouf, A. Hyperparameter search for machine learning algorithms for optimizing the computational complexity. Processes 2023, 11, 349. [Google Scholar] [CrossRef]

- Jati, A.; Ekambaram, V.; Pal, S.; Quanz, B.; Gifford, W.M.; Harsha, P.; Siegel, S.; Mukherjee, S.; Narayanaswami, C. Hierarchical Proxy Modeling for Improved HPO in Time Series Forecasting. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 6–10 August 2023; KDD ’23. pp. 891–900. [Google Scholar]

- Wu, D.; Guan, Q.; Fan, Z.; Deng, H.; Wu, T. Automl with parallel genetic algorithm for fast hyperparameters optimization in efficient iot time series prediction. IEEE Trans. Ind. Inform. 2022, 19, 9555–9564. [Google Scholar] [CrossRef]

- Fristiana, A.H.; Alfarozi, S.A.I.; Permanasari, A.E.; Pratama, M.; Wibirama, S. A Survey on Hyperparameters Optimization of Deep Learning for Time Series Classification. IEEE Access 2024, 12, 191162–191198. [Google Scholar] [CrossRef]

- Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1126–1135. [Google Scholar]

- Yamagata, T.; Santos-Rodríguez, R.; Flach, P. Continuous Adaptation with Online Meta-Learning for Non-Stationary Target Regression Tasks. Signals 2022, 3, 66–85. [Google Scholar] [CrossRef]

- Brazdil, P.B.; Soares, C.; Da Costa, J.P. Ranking learning algorithms: Using IBL and meta-learning on accuracy and time results. Mach. Learn. 2003, 50, 251–277. [Google Scholar] [CrossRef]

- Soares, C.; Brazdil, P.B. Selecting parameters of SVM using meta-learning and kernel matrix-based meta-features. In Proceedings of the ACM Symposium on Applied Computing, New York, NY, USA, 23–27 April 2006; pp. 564–568. [Google Scholar]

- Zöller, M.A.; Huber, M.F. Survey on automated machine learning. arXiv 2019, arXiv:1904.12054. [Google Scholar]

- Widmer, G.; Kubat, M. Learning in the presence of concept drift and hidden contexts. Mach. Learn. 1996, 23, 69–101. [Google Scholar] [CrossRef]

- Gama, J.; Žliobaitė, I.; Bifet, A.; Pechenizkiy, M.; Bouchachia, A. A survey on concept drift adaptation. ACM Comput. Surv. (CSUR) 2014, 46, 1–37. [Google Scholar] [CrossRef]

- Lu, J.; Liu, A.; Dong, F.; Gu, F.; Gama, J.; Zhang, G. Learning under concept drift: A review. IEEE Trans. Knowl. Data Eng. 2018, 31, 2346–2363. [Google Scholar] [CrossRef]

- Wald, A. Sequential Analysis; Courier Corporation: North Chelmsford, MA, USA, 2004. [Google Scholar]

- Vanschoren, J. Meta-learning. In Automated Machine Learning; Springer: Cham, Switzerland, 2019; pp. 35–61. [Google Scholar]

- Fernandes, L.H.d.S.; Lorena, A.C.; Smith-Miles, K. Towards understanding clustering problems and algorithms: An instance space analysis. Algorithms 2021, 14, 95. [Google Scholar] [CrossRef]

- Barandas, M.; Folgado, D.; Fernandes, L.; Santos, S.; Abreu, M.; Bota, P.; Liu, H.; Schultz, T.; Gamboa, H. TSFEL: Time series feature extraction library. SoftwareX 2020, 11, 100456. [Google Scholar] [CrossRef]

- Quinlan, J. C4.5 Programs for Machine Learning; Morgan Kaufmann: San Mateo, CA, USA, 1992. [Google Scholar]

- Sharma, T.C.; Jain, M. WEKA approach for comparative study of classification algorithm. Int. J. Adv. Res. Comput. Commun. Eng. 2013, 2, 1925–1931. [Google Scholar]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Scornet, E.; Biau, G.; Vert, J.P. Consistency of random forests. Ann. Stat. 2015, 43, 1716–1741. [Google Scholar] [CrossRef]

- Vapnik, V.N. The Nature of Statistical Learning Theory; Springer Inc.: New York, NY, USA, 1995. [Google Scholar]

- Wang, L. Support Vector Machines: Theory and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2005; Volume 177. [Google Scholar]

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the IEEE International Conference on Computer Vision. ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; IEEE: Piscataway, NJ, USA, 2001; Volume 2, pp. 416–423. [Google Scholar]

- Lameski, P.; Zdravevski, E.; Mingov, R.; Kulakov, A. SVM Parameter Tuning with Grid Search and Its Impact on Reduction of Model Over-fitting. In Rough Sets, Fuzzy Sets, Data Mining, and Granular Computing; Springer: Berlin/Heidelberg, Germany, 2015; pp. 464–474. [Google Scholar]

- Bouktif, S.; Fiaz, A.; Ouni, A.; Serhani, M.A. Optimal deep learning lstm model for electric load forecasting using feature selection and genetic algorithm: Comparison with machine learning approaches. Energies 2018, 11, 1636. [Google Scholar] [CrossRef]

- Jiang, Y.; Tong, G.; Yin, H.; Xiong, N. A pedestrian detection method based on genetic algorithm for optimize XGBoost training parameters. IEEE Access 2019, 7, 118310–118321. [Google Scholar] [CrossRef]

- Fortin, F.A.; De Rainville, F.M.; Gardner, M.A.; Parizeau, M.; Gagné, C. DEAP: Evolutionary Algorithms Made Easy. J. Mach. Learn. Res. 2012, 13, 2171–2175. [Google Scholar]

- Mantovani, R.G.; Rossi, A.L.D.; Vanschoren, J.; Bischl, B.; de Carvalho, A.C.P.L.F. Effectiveness of Random Search in SVM hyper-parameter tuning. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–8. [Google Scholar]

- Feurer, M.; Hutter, F. Hyperparameter optimization. In Automated Machine Learning. The Springer Series on Challenges in Machine Learning; Springer: Cham, Switzerland, 2019; pp. 3–33. [Google Scholar]

- Leung, F.H.F.; Lam, H.K.; Ling, S.H.; Tam, P.K.S. Tuning of the structure and parameters of a neural network using an improved genetic algorithm. IEEE Trans. Neural Netw. 2003, 14, 79–88. [Google Scholar] [CrossRef]

- Friedman, M. The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J. Am. Stat. Assoc. 1937, 32, 675–701. [Google Scholar] [CrossRef]

- Nemenyi, P. Distribution-Free Multiple Comparisons. Ph.D. Thesis, Princeton University, Princeton, NJ, USA, 1963. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).