Abstract

This study analyzes human sleep disorders using a non-contact approach, focusing on periodic limb movement disorder (PLMD) during sleep. Sleep data were captured in real time with ultrasonic sensors, and the model estimates and classifies PLMD from these data using a machine learning-based random forest classifier. Hardware schemes play a vital role in capturing the sleep data in real time. A field-programmable gate array (FPGA)-based accelerator for the random forest classifier was designed to analyze PLMD. This novel approach can help subjects decide on further medication. The PLMD estimation was implemented in Verilog HDL, with simulation and synthesis performed in Xilinx Vivado 2021.1. The proposed method was validated on a Xilinx Zynq-7000 Zed board (XC7Z020-CLG484).

1. Introduction

Sleep disorders can significantly impact daily life, making advanced technologies crucial for achieving optimal health and well-being within the medical system. New innovations are essential for improving sleep quality and promoting a happier, healthier life. In 2004, the World Health Organization (WHO) conducted a meeting on sleep challenges [1]. According to the international classification of sleep disorders, there are over 70 distinct types of sleep-related conditions [2]. Research on identifying sleep disorders is scarce, with many existing studies concentrating on the detection of sleep apnea [3,4]. Periodic limb movement disorders are characterized by polysomnographic features that include disruptions in sleep continuity, such as arousal and awakenings [5]. PLMD, one of these more than 70 sleep disorders, has been observed in approximately 4–11% of the general adult population and 5–8% of the pediatric population [5]. There are no available approaches for the early clinical analysis of PLMD. Subjects with PLMD can improve their sleep through continuous monitoring, and a specialist can detect PLMD early through technological intervention [5]. Our proposed accelerator aims to analyze the different stages of the disorder (early, middle, and high-level) and to assist doctors and patients with PLMD using a non-contact monitoring approach.

In response to the limitations of available sleep disorder detection methods, and to enable extended monitoring over periods of weeks or months, innovative methods for conducting measurements have been developed. PLMD-related data were captured from the sleep posture and movements of the population. Collecting real-time sleep data at a high resolution (every second) over the course of days for individual patients is quite challenging in standard practice. In this regard, artificial intelligence and cutting-edge deep learning technologies are essential for analyzing PLMD. This research integrated multiple challenges, such as sleep postures, periodic movement of sleep postures, static posture with lower limb movement, and data capture with non-wearable and non-contact sensors. Data mining, storage, and processing of PLMD were carried out using computing methods.

The periodic and aperiodic movements of the lower limb in sleep conditions have been estimated using different approaches, such as bed-based monitoring [6]. Bed-based sensor fusion for position changes was evaluated by Maggi and Sauter [7]. Actigraphy systems are preferred for PLMD analyses [8]. The RFID-based non-invasive method with a CNN algorithm was used to estimate sleep postures and movements [9]. Probabilistic data-driven approaches are preferred for analyzing PLMD data [10]. In [11], a trained specialist manually evaluated the Emfit (electromechanical film) sensor readings to diagnose PLMD. Although no assessment of individual events was conducted, the average number of limb movements recorded by EMG (electromyography) and Emfit demonstrated a strong correlation, with a Spearman’s coefficient of 0.87.

Sensor fusion is the preferred method for characterizing PLMD. Principal component analysis (PCA) was used for the measurement fusion. The orthogonal linear transformation performed by PCA remaps the signals onto a new coordinate framework, in which the coordinates are ordered by the eigenvalues of the newly derived eigenvectors [12]. Recent studies have presented decision fusion using a naive Bayes classifier trained during cross-validation [6]. However, effective PLMD analysis requires suitable computation methods. The aforementioned studies involved sensing and analysis using machine learning approaches. The real-time challenge lies in running the PLMD computations, such as PCA, probabilistic methods, and naive Bayes classifiers. Cloud and edge computing approaches based on GPUs and CPUs have been utilized. At the edge computing level, a low-power replacement for the GPU is essential for an individual’s PLMD analysis.

This study provides an edge computing solution with an FPGA for PLMD analysis using machine learning algorithms. The novel contributions of the study are as follows:

- A machine learning system was designed to identify conditions using sleep data collected from an unobtrusive array of non-contact ultrasonic sensors.

- A hardware accelerator was designed for the estimation of PLMD based on a random forest classifier.

- The subject’s PLMD was monitored using measurement fusion with FPGA-based edge computations.

This section presents the requirements and background of the PLMD analysis. This motivated us to investigate PLMD using the methods proposed in Section 2. The results of the proposed method are presented in the form of experimental validation in Section 3. Conclusions and future scope are presented in Section 4.

2. Methods

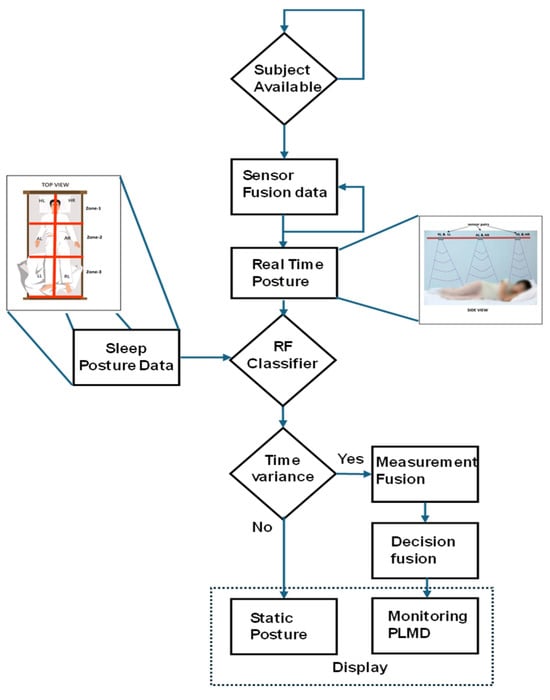

Figure 1 presents the flowchart for the movement analysis and monitoring of subjects with PLMD, with blue arrows indicating the step-by-step procedure. This approach will assist early clinical studies and healthcare professionals in monitoring the progress of PLMD treatment.

Figure 1.

Flowchart of PLMD analysis and monitoring.

2.1. Machine Learning-Based Posture Analysis

Sleep postures were analyzed to determine the PLMD stage and status. This section explains data capture and the machine learning analysis of the recorded data, as summarized in Algorithm 1. The postures were classified using a random forest classifier. Sleep posture analysis was performed using training and test datasets, and the results were validated with real-time data. The proposed approach considered the sleep data reported in previous studies [13,14]. The sleep data comprise two sets, static and adaptive; both were captured as echo signals and converted into distances by the ultrasonic sensor arrays. Xi represents a sensor feature, whose dimensions depend on the position and timing of the sensor. Sleep posture labels are represented by yi; six pairs of sleep postures were labeled as static and time-variant. D is the training dataset, T is the number of decision trees, M is the total number of features, and m is the number of features selected for each tree. The algorithm begins with input and initialization. For each tree, n instances are sampled with replacement from D to form D_t, and m features are randomly selected from the M available features. The tree is then trained with D_t and the selected features. After the random forest algorithm has run, the trained postures are stored in the forest F.

| Algorithm 1 Sleep Posture Classifier-Based Random Forest Algorithm |

|

In Line 1, we start with the input dataset D, which consists of multiple data points represented as pairs, (X1, y1), (X2, y2), …, (Xn, yn), where each Xi denotes the input features, and yi is the corresponding posture label. Line 2 initializes an empty list called forest (F), which will eventually store the collection of decision trees. In Line 3, a loop is initiated to run T times, with each iteration dedicated to training one decision tree. In Line 4, a bootstrap sample D_t is created by randomly sampling data points from D with replacement, introducing variability and promoting model robustness. Line 5 randomly selects a subset of m features from the total set of M features; these are used for splitting nodes in the tree, ensuring diversity among the trees in the forest. In Line 6, a decision tree is trained using the bootstrap sample D_t and the selected subset of features. Line 7 ensures that the tree is grown fully without any pruning, allowing it to learn detailed patterns from the training data. In Line 8, this fully grown decision tree is appended to forest F, progressively building the ensemble. Finally, in Line 9, after all T trees have been trained and added to the forest, the complete set of trees is returned as the final output, i.e., the fully trained random forest model.
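The line-by-line description above can be sketched in software as follows. This is a minimal pure-Python illustration, not the authors' Verilog implementation: the function names (`grow_tree`, `train_forest`, `predict_forest`), the Gini split criterion, the exhaustive threshold search, and the `seed` parameter are assumptions introduced for the example. Following the text, each tree draws a bootstrap sample, uses one random subset of m features, and is grown without pruning.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def grow_tree(rows, labels, features):
    """Grow an unpruned tree that splits only on the given feature indices."""
    if len(set(labels)) == 1:
        return labels[0]                      # pure node becomes a leaf
    best = None
    for f in features:
        for thr in sorted({r[f] for r in rows}):
            left = [i for i, r in enumerate(rows) if r[f] <= thr]
            right = [i for i, r in enumerate(rows) if r[f] > thr]
            if not left or not right:
                continue                      # skip splits that separate nothing
            score = (len(left) * gini([labels[i] for i in left])
                     + len(right) * gini([labels[i] for i in right]))
            if best is None or score < best[0]:
                best = (score, f, thr, left, right)
    if best is None:                          # no usable split: majority leaf
        return Counter(labels).most_common(1)[0][0]
    _, f, thr, left, right = best
    return (f, thr,
            grow_tree([rows[i] for i in left], [labels[i] for i in left], features),
            grow_tree([rows[i] for i in right], [labels[i] for i in right], features))


def predict_tree(node, x):
    """Walk from the root to a leaf; internal nodes are tuples, leaves are labels."""
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if x[f] <= thr else right
    return node


def train_forest(X, y, n_trees, m, seed=0):
    """Bootstrap sample, random feature subset, full-depth tree, append to forest."""
    rng = random.Random(seed)
    n, total_features = len(X), len(X[0])
    forest = []                                        # empty forest F
    for _ in range(n_trees):                           # one iteration per tree
        idx = [rng.randrange(n) for _ in range(n)]     # sample n rows with replacement
        feats = rng.sample(range(total_features), m)   # choose m of the M features
        tree = grow_tree([X[i] for i in idx], [y[i] for i in idx], feats)
        forest.append(tree)
    return forest


def predict_forest(forest, x):
    """Majority vote over the predictions of all trees."""
    votes = Counter(predict_tree(t, x) for t in forest)
    return votes.most_common(1)[0][0]
```

In this sketch each row of `X` would hold the six sensor distances of one sample and `y` the posture label as a string; string labels matter because tuples are reserved for internal tree nodes.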

2.2. Periodic Limb Movement Disorder (PLMD) Analysis and Monitoring

PLMD analysis of a subject in real time is challenging; in this study, it was integrated with sleep posture and time variance, as shown in Algorithm 2.

| Algorithm 2 Periodic Limb Movement Disorder (PLMD) Analysis Algorithm |

|

PLMD analysis was built on sleep posture analysis and uses variables such as the current posture, the posture start time, plmd_episodes, and the movement duration, all of which are initialized at the start. The loop ran for as long as the sensor data provided the respective distances, and the results were analyzed in every iteration. Line 5 identifies the respective posture: the sleep postures trained in the previous algorithm, with yi as the posture label, are matched against the current posture. The analysis began by measuring the limb movement using fusion measurement. Movement duration is a fusion measurement parameter computed from the difference between the sensor fusion data of the previous and current limb states. Decision fusion determines the subject’s state and the status of PLMD based on a predefined standard threshold value. However, if the movement duration parameter introduces ambiguity, it may hinder the ability of decision fusion to accurately distinguish between subjects with and without PLMD. If the presence of PLMD is confirmed, its duration also indicates the PLMD stage.
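As a rough software illustration of the loop described above, the sketch below counts PLMD episodes from a stream of posture labels and lower-limb distances. It is an assumption-laden sketch rather than the authors' Algorithm 2: the function name `count_plmd_episodes`, the movement tolerance `move_tol_cm`, and the minimum duration `min_duration` are hypothetical, and the real thresholds are not given in the text. The variable names `current_posture`, `movement_duration`, and `plmd_episodes` follow the paragraph above.

```python
def count_plmd_episodes(samples, move_tol_cm=2.0, min_duration=2):
    """Count limb-movement episodes in (posture, lll_cm, llr_cm) samples.

    A sample counts as movement when either lower-limb distance differs
    from the previous sample by more than move_tol_cm (hypothetical value).
    A run of movement samples is an episode when it lasts at least
    min_duration samples (hypothetical threshold standing in for the
    predefined threshold of the decision fusion step).
    """
    current_posture = None
    previous_limbs = None
    movement_duration = 0
    plmd_episodes = 0

    for posture, lll, llr in samples:
        if posture != current_posture:
            # Posture changed: restart the duration count for the new posture.
            current_posture = posture
            movement_duration = 0
        elif previous_limbs is not None:
            moved = (abs(lll - previous_limbs[0]) > move_tol_cm
                     or abs(llr - previous_limbs[1]) > move_tol_cm)
            if moved:
                movement_duration += 1
            else:
                # Movement ended: decide whether the run was long enough.
                if movement_duration >= min_duration:
                    plmd_episodes += 1
                movement_duration = 0
        previous_limbs = (lll, llr)

    # Close a run that is still open when the data end.
    if movement_duration >= min_duration:
        plmd_episodes += 1
    return plmd_episodes
```

With these settings a single-sample change followed by a static limb is not counted, which mirrors the idea that a brief pose change should not be reported as PLMD.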

2.3. Hardware-Based Accelerator for Posture Analysis

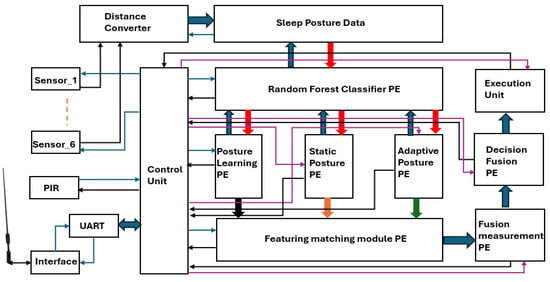

The analysis and monitoring of PLMD with a VLSI architecture are presented in Figure 2.

Figure 2.

The overall architecture of PLMD analysis and monitoring.

The analysis was performed using sleep data capture and distance normalization. A posture-learning processing element (PE) based on a random forest classifier (RFC) was employed for learning updates from the captured sleep data. The current posture was registered using static and adaptive PEs, and the real-time sleep postures were compared with the learned postures for matching. Limb movements were measured using the fusion measurement PE, and the stage of PLMD was confirmed by the decision fusion PE. The decision was displayed and communicated through the execution unit. The control unit operated at 100 MHz and controlled all processing elements.

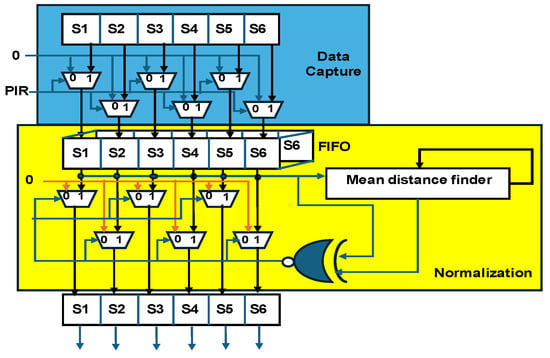

Data Capture and Normalization

Figure 3 shows the hardware scheme for data capture and normalization. In this study, data were collected using six pairs of ultrasonic sensors, with the readings stored in arrays S1–S6. Data collection was enabled using a PIR sensor, which triggered the recording process, and all the external interfaces with the control unit are represented by blue lines. The FIFO buffer is defined with dimensions L × W × H, where L (length) = 20 bits, W (width) = 6 arrays, and H (height) = 40. The height value corresponds to the data stored at four iterations per second for 10 min (4 iterations × 10 min = 40 data blocks).

Figure 3.

Hardware scheme for data capture and normalization.

The mean distance finder plays a key role in the normalization process. Normalization was parameterized using two values, namely early normalization and encoded normalization, which correspond to different physical conditions of the subject. Early normalization refers to distance computation in the absence of a subject and may vary depending on time and environmental conditions. Encoded normalization, on the other hand, is based on trained sensor data specific to the subject. The mean distance finder processes both normalization values and provides feedback by comparing the previous mean distance with the current mean distance, enabling precise normalization across multiple data iterations.
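The capture buffer and mean distance finder can be pictured with the short sketch below. It is only an illustrative software model under stated assumptions: the class name `CaptureBuffer`, the function `normalize`, and the choice to express normalization as the empty-bed baseline minus the current reading are hypothetical and are not taken from the hardware design. The default depth of 40 rows and the six sensor arrays follow the FIFO dimensions given above.

```python
from collections import deque


class CaptureBuffer:
    """Fixed-depth FIFO of sensor rows; the oldest row drops out when full."""

    def __init__(self, depth=40, sensors=6):
        self.sensors = sensors
        self.fifo = deque(maxlen=depth)

    def push(self, readings):
        """Store one row of distances, one value per sensor array (S1 to S6)."""
        if len(readings) != self.sensors:
            raise ValueError("expected one reading per sensor")
        self.fifo.append(tuple(readings))

    def mean_distance(self):
        """Per-sensor mean over the rows currently held in the FIFO."""
        if not self.fifo:
            raise ValueError("buffer is empty")
        n = len(self.fifo)
        return [sum(row[s] for row in self.fifo) / n for s in range(self.sensors)]


def normalize(readings, empty_bed_mean):
    """Assumed early normalization: baseline without a subject minus reading."""
    return [base - r for r, base in zip(readings, empty_bed_mean)]
```

A caller could keep the previous result of `mean_distance()` and compare it with the next one, which is the feedback step the mean distance finder is described as performing.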

2.4. Hardware-Based Accelerator for PLMD Analysis and Monitoring

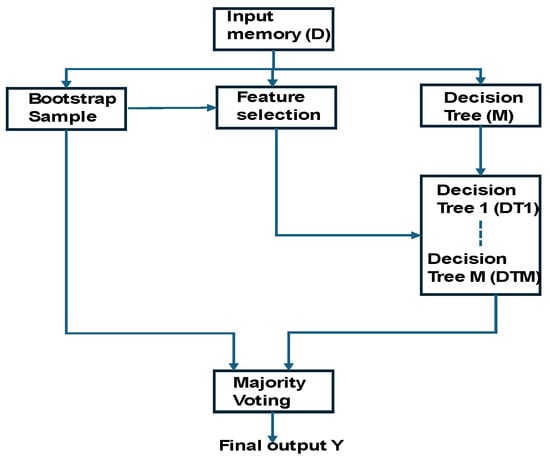

2.4.1. Random Forest-Based Fusion Decision

The random forest-based fusion decision (RFFD) flowchart is presented in Figure 4. Sensory data (D) were captured and stored in the input memory. This input was then shared with the bootstrap sampling, feature selection, and decision tree construction modules (denoted as M). The bootstrap samples were directed to both majority voting and feature selection processes. A series of decision trees were constructed up to M, and each tree was integrated with its corresponding selected features. Posture features were selected based on posture labels, such as supine pose, covering both static and movement postures. The decision trees were constructed using the random forest method, and the number of iterations (m) was determined based on the accuracy results. The final fusion decision was established through a majority voting system across the ensemble of trees.

Figure 4.

Flowchart of random forest-based fusion decision (RFFD).

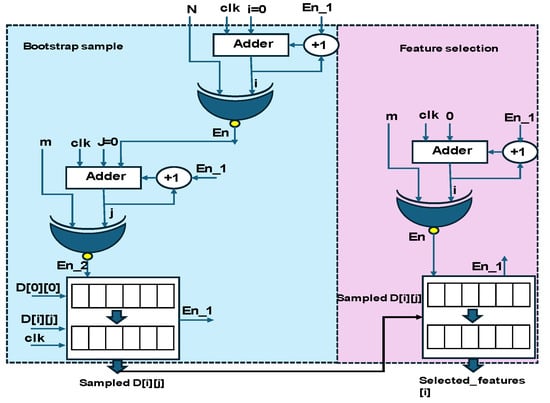

The bootstrap sample and feature selection hardware schemes are shown in Figure 5. They were processed based on two iterations and resulted in the sampled data D[i][j]. The resulting data were fetched into the feature selection module. The features of the pose were considered from the standard posture methods [13]. In digital models, the binary array patterns derived from data capture and normalization were used as references. The selected features [i] were fed to the RF decision tree.

Figure 5.

Hardware schemes of bootstrap sample modules and feature selection.

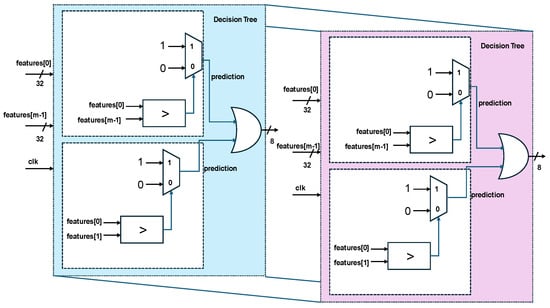

The RF decision tree comprises several submodules, the number of which depends on the required accuracy, and evaluates the selected features through comparisons, as shown in Figure 6.

Figure 6.

Hardware schemes of random forest-based decision tree.

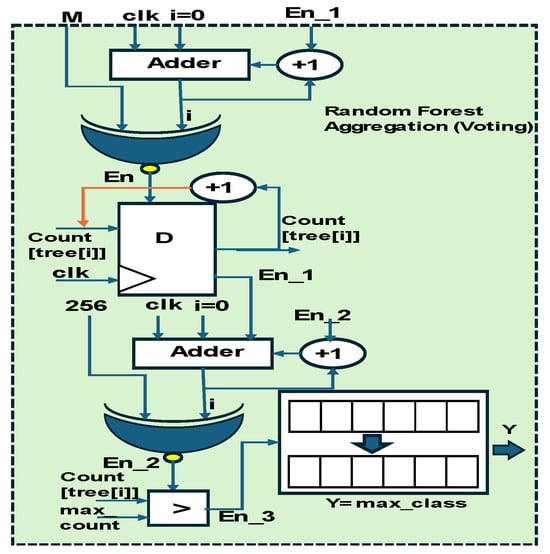

The final stage of the RF modules is voting, as shown in Figure 7. This is initiated by storing the count values, which are compared with the maximum value; the Y output is retained as the max class.

Figure 7.

Hardware scheme of random forest voting.
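The voting stage can be mirrored in a few lines of software. The sketch below is an assumption-based model of the count-and-compare behavior described above, not the actual circuit: the function name `majority_vote`, the explicit `classes` list, and the tie rule (the class listed first wins) are choices made for this illustration.

```python
def majority_vote(tree_outputs, classes):
    """Return the class predicted by the most trees.

    counts plays the role of the stored count values; the second loop
    compares each count with the running maximum and keeps the max class.
    Ties go to the class that appears first in classes (assumed rule).
    """
    counts = {c: 0 for c in classes}
    for out in tree_outputs:
        counts[out] += 1

    max_class, max_count = None, -1
    for c in classes:
        if counts[c] > max_count:
            max_class, max_count = c, counts[c]
    return max_class
```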

2.4.2. Fusion Measurement Based on Time Variance

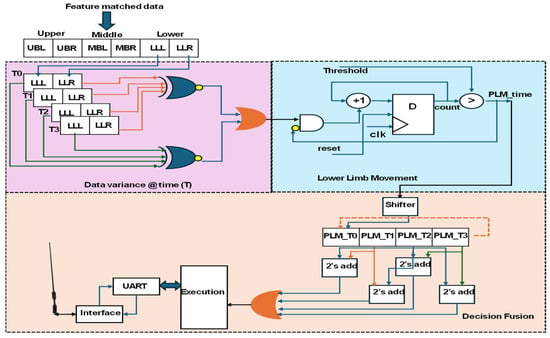

This module determines whether the subject is in a static or adaptive posture and detects PLMD using the feature-matching PE, the decision fusion PE, and the fusion measurement PE. As shown in Figure 4, in the RF classifier, a static posture is considered a time-invariant posture as per standard [13], and subject movement from point to point within the sleeping area represents an adaptive posture, as defined in [14]. The feature-matching PE was constructed using a binary search tree [15] and plays a vital role in the fusion measurement and decision fusion PEs. The hardware schemes at the core of the PLMD analysis are shown in Figure 8.

Figure 8.

Hardware schemes of fusion measurement and decision fusion.

The feature-matching processing element (PE) provided posture confirmation, which served as input to the fusion measurement PE. The integrated data variance over time, in both static posture and lower limb movement, was used to evaluate PLM (periodic limb movement) duration. Data variance was captured at four iterations per second and stored over T1 to T3 time intervals. The lower limb—left (LLL) and lower limb—right (LLR) readings were compared each second to detect variations. When limb movement was detected, a duration counting module was activated, with thresholds set based on the subject’s PLMD stage. The duration values were stored in a FIFO buffer in the decision fusion block using a circular shifter mechanism. By comparing adjacent time windows, the system identified the duration of limb movement and inferred the subject’s PLMD stage. Before clinical diagnosis, the execution module shares this information with either a doctor or the subject’s caregiver, enabling a better understanding of PLMD.
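To make the time-variance idea concrete, the sketch below turns windows of lower-limb readings into logic flags and measures how long movement persists. It is a simplified sketch under assumptions: the names `motion_flag`, `limb_states`, and `longest_movement_run`, the tolerance `tol_cm`, and the definition of duration as the longest run of consecutive windows with any limb in motion are hypothetical and may differ from the hardware's duration counter.

```python
def motion_flag(window, tol_cm=2.0):
    """Logic 1 if the readings in one time window vary by more than tol_cm."""
    return 1 if max(window) - min(window) > tol_cm else 0


def limb_states(lll_windows, llr_windows, tol_cm=2.0):
    """Pair the LLL and LLR flags for each time window (T1, T2, ...)."""
    return [(motion_flag(l, tol_cm), motion_flag(r, tol_cm))
            for l, r in zip(lll_windows, llr_windows)]


def longest_movement_run(states):
    """Longest run of consecutive windows in which at least one limb moves."""
    best = run = 0
    for lll_flag, llr_flag in states:
        run = run + 1 if (lll_flag or llr_flag) else 0
        best = max(best, run)
    return best
```

Each window here would hold the four readings captured in one second, so one unit of the returned run length corresponds to one second in this sketch.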

3. Results

The results of the proposed PLMD monitoring method using hardware schemes are presented in this section.

3.1. Experimental Setup

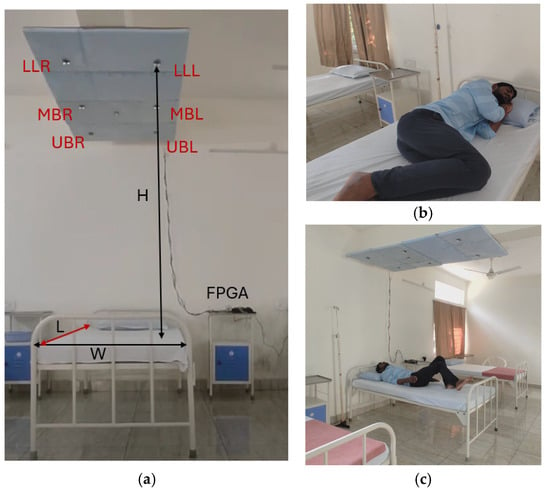

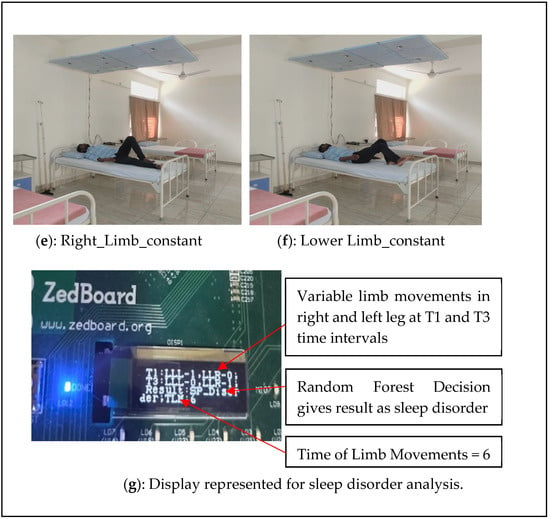

The PLMD monitoring experimental setup, shown in Figure 9a–c, comprised ultrasonic sensors for sleep data capture and a PIR sensor for detecting whether the bed was occupied. These sensors were positioned on the ceiling above the bed, facing towards it. Data processing and PLMD detection were carried out using a field-programmable gate array (FPGA) device. Six pairs of PWM-based ultrasonic sensors (manufactured by Rhydo Labz, India) were used to capture real-time sleep posture data.

Figure 9.

(a–c) Illustration of the experimental setup of contactless sleep posture analysis.

The ultrasonic sensors operated at a frequency of 40 kHz with a 5 V DC supply, drawing less than 20 mA of current. Each sensor emits ultrasonic pulses and converts the returning echoes into distances using the pulse width modulation technique. The sensors were precisely positioned to detect distances ranging from 2 cm to 4 m, providing stable, non-contact measurement data essential for accurate posture analysis. The complete analysis of the experiment is shown in Figure 10.

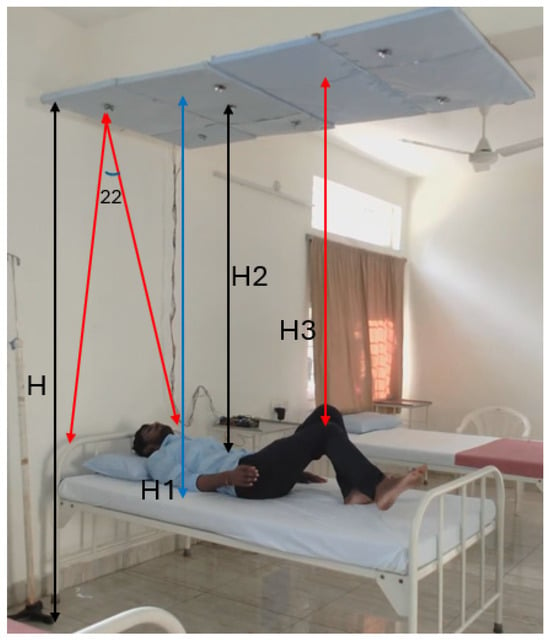

Figure 10.

Illustration of the experimental setup for analysis with sensors placed at a distance.

Figure 9a represents the environmental setup for the experiment. The distance between the sensor array and the bed was recorded as the height (H), the distance between the front and end of the bed was recorded as the length (L), and the width (W) was recorded as the difference between the left and right edges of the bed. Sensor arrays were positioned at the upper side (UBR, UBL), middle (MBR, MBL), and lower side (LLL, LLR). The distance was captured every 0.25 s from all sensors concurrently and processed using the hardware schemes. Machine learning was performed using the sensor’s distance and the time variance between distances for PLMD detection.

In this study, the distance between the ceiling and floor (H) was 2.8 m, and the distance between the sensor array and the bed (H1) was 2.2 m. The ultrasonic sensor operated with a coverage angle of 22°; the area covered on the bed at the H1 distance was around 88 cm. H2 was 2.1 m, and H3 was 1.9 m.

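$$\text{Distance covered on bed at } H_1 = \tan(22^\circ) \times 2.2\ \text{m} \approx 88\ \text{cm}$$

$$\text{Distance covered on bed at } H_2 = \tan(22^\circ) \times 2.1\ \text{m} \approx 84\ \text{cm}$$

$$\text{Distance covered on bed at } H_3 = \tan(22^\circ) \times 1.9\ \text{m} \approx 76\ \text{cm}$$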

The H3 distance refers to the area covered on the bed (76 cm). In this regard, lower limb movement at H3 was recorded with event-driven conditions every 0.25 s.
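The coverage figures can be checked with a few lines of Python. This is only a worked-arithmetic sketch; the function name `coverage_cm` is introduced here for illustration, and the formula is the tan(22°) × height relation stated in the text.

```python
import math


def coverage_cm(height_m, angle_deg=22.0):
    """Distance covered on the bed, in cm, for a sensor at height_m metres."""
    return math.tan(math.radians(angle_deg)) * height_m * 100.0


for name, height in (("H1", 2.2), ("H2", 2.1), ("H3", 1.9)):
    print(f"{name}: {coverage_cm(height):.1f} cm")
```

The computed values fall just under 89 cm, 85 cm, and 77 cm, which match the reported 88 cm, 84 cm, and 76 cm once the fractional part is dropped.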

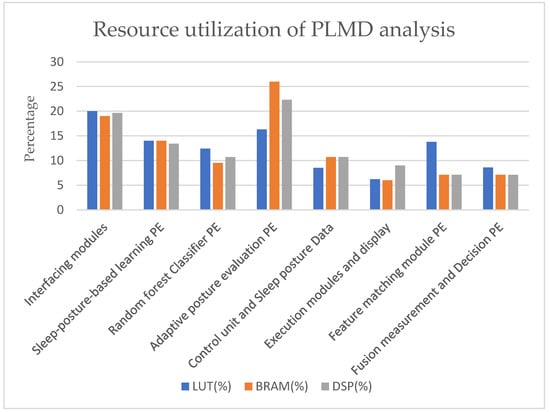

3.2. Resource Utilization

The Xilinx Zynq-7000 Zed board FPGA was used to compute the proposed PLMD monitoring algorithms. Table 1 outlines the resource consumption for PLMD analysis and monitoring using hardware schemes. A Xilinx reconfigurable device (San Jose, CA, USA) from the Zynq family was used, namely an XC7Z020-1CSG484 Zed board. In this approach, a processing system (PS) was used to record time-stamped tasks during service execution. The Zed board’s programmable logic (PL) is responsible for controlling the logic and interfacing. To achieve system-level synchronization, the PS and PL were integrated using the AXI lite protocol. The Zed board includes block RAM (BRAM) consisting of 140 blocks of 36 kb each (equivalent to 4.9 Mb) and 220 digital signal processing (DSP) slices, which were partially employed in this method. The FPGA operated at 100 MHz and was synchronized with the external components using UART communication modules and PWDC modules for the ultrasonic sensors.

Table 1.

FPGA resource utilization for PLMD analysis accelerator.

Among the various computing approaches, edge computation is the most efficient and accurate. FPGA-reconfigurable devices excel in power efficiency and parallel processing capabilities [16]. In the field of PLMD analysis, FPGAs are at the forefront of providing adaptive position–event solutions with respect to time variance. The resource utilization of FPGAs was evaluated using three metrics: lookup table (LUT), block RAM (BRAM), and digital signal processing (DSP) slices.

The overall resource consumption was as follows: 59% of LUT, 60% of BRAM (2.94 Mb), and 51% of DSP slices. The resource utilization of each module is presented in Figure 11. The x-axis represents the computational modules, and the y-axis shows the percentage of resource utilization. The interfacing modules consumed 20% of the LUT, 19% of the BRAM, and 20% of the DSP slices of overall device utilization, indicating more LUT consumption in these modules. The adaptive posture PE consumed the most BRAM and DSP slices, around 26% and 22%, respectively. It also involved more traversing steps and data storage. It is a key player in PLMD feature measurement.

Figure 11.

Resource utilization of PLMD analysis accelerator.

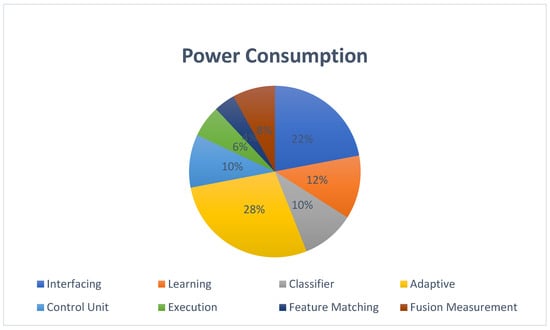

The power consumption of the FPGA during operation is shown in Figure 12. According to the Xilinx power estimator (XPE), the total device power consumption was 1.4 W. In the overall power distribution, the adaptive posture PE accounted for 28% of the power consumption. XPE analysis also provided power consumption data for the other components. The second-highest power demand was attributed to interfacing with the external modules.

Figure 12.

Device power consumption of PLMD analysis accelerator.

The total power consumption of the device was 1.4 W, comprising 0.98 W of dynamic power and 0.42 W of static power. The hardware configuration employed 12 pipeline stages (S) and operated with a device clock period (Tclk) of 10 ns. The validation process involved 40 iterations (N). Equations (2) and (3) describe the 510 ns latency observed in the proposed hardware scheme.

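$$\text{Latency per iteration} = S \times T_{clk} = 12 \times 10\ \text{ns} = 120\ \text{ns} \tag{2}$$

$$\text{Total latency} = (N + S - 1) \times T_{clk} = (40 + 12 - 1) \times 10\ \text{ns} = 510\ \text{ns} \tag{3}$$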
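The same latency arithmetic can be reproduced in code. This is a small sketch for checking the numbers; the function names are introduced here for illustration, and the formulas are the ones given above for a pipeline of S stages processing N iterations at clock period Tclk.

```python
def per_iteration_latency_ns(stages, t_clk_ns):
    """Time for one iteration to pass through all pipeline stages."""
    return stages * t_clk_ns


def total_latency_ns(iterations, stages, t_clk_ns):
    """Pipelined latency: the first result takes `stages` cycles,
    and each further iteration adds one cycle."""
    return (iterations + stages - 1) * t_clk_ns


print(per_iteration_latency_ns(12, 10))   # 120
print(total_latency_ns(40, 12, 10))       # 510
```

Without pipelining, 40 iterations at 120 ns each would take 4800 ns, so the overlap between stages accounts for the much shorter 510 ns total.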

The system demonstrated an overall accuracy of 97.5%. This figure was calculated by combining the data from multiple sensors. Of the 40 predictions made, 39 were accurate. The accuracy and error rate calculations are shown in Equations (4) and (5), respectively. As indicated in Equations (6) and (7), the system achieved an accuracy of 97.5% with a corresponding error rate of 2.5%.

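$$\text{Accuracy} = \frac{\text{Number of accurate predictions}}{\text{Total number of predictions}} \tag{4}$$

$$\text{Error rate} = 1 - \text{Accuracy} \tag{5}$$

$$\text{Accuracy} = \frac{39}{40} = 97.5\% \tag{6}$$

$$\text{Error rate} = 1 - 0.975 = 2.5\% \tag{7}$$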

3.3. Experimental Results

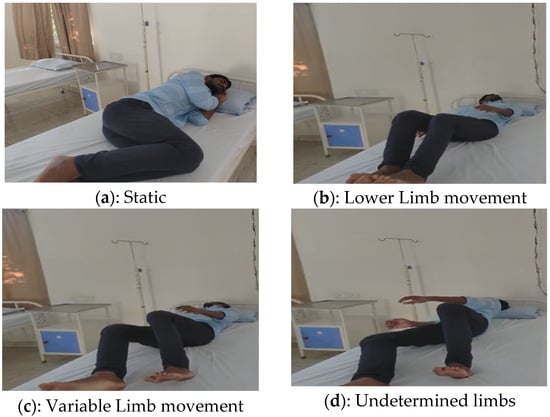

The experimental validations are represented in Figure 13a–g. The PLMD experimental results are shown in Figure 13a–f. The findings indicate that the proposed hardware schemes are versatile for the detection of PLMD. In Figure 13a, the subject is positioned in a static posture facing toward the right in the fetal pose. After some time in the supine position, lower limb movements were observed (Figure 13b,c). Figure 13d shows the undetermined states of the limbs due to continuous variable limb movements. PLMD versatility was observed with constant right limb movements, as shown in Figure 13e. Figure 13f represents constant movements in the right and left limbs. All the sleep postures were analyzed continuously using the FPGA accelerator. The determined sleep disorder is shown in Figure 13g for the postures shown in Figure 13c–d. Posture versus limb movements with respect to the period were classified as both-limb and one-limb movements, and both were included in the analysis. The PLMD analysis, displayed on the LCD of the Zed board FPGA, is illustrated in Figure 13g, presenting an alternative approach to limb movement analysis. In this experiment, T1 marks the initial event where the lower limb—left (LLL) is in motion, represented as logic ‘1’, while the lower limb—right (LLR) remains static, denoted as logic ‘0’. At event T3, the LLL becomes static (logic ‘0’), and the LLR is in motion (logic ‘1’). The time of limb movement (TLM), representing the duration of limb activity, was measured as six units. Each unit duration may vary depending on the individual’s condition or PLMD stage.

Figure 13.

(a–g) Experiment results of PLMD with a volunteer.

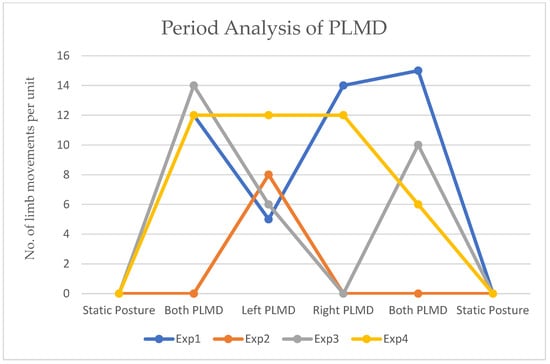

PLMD was detected with four different lower limb movements: In Exp1, both left and right limbs moved. In Exp2, the right limb remained constant, whereas the left limb moved. In Exp3, only the right lower limb moved. In Exp4, limb movement was constant across various durations; thus, it was classified as a non-PLMD status. When both limbs changed position for only one instant and then maintained a static position, it was considered a pose change and therefore not PLMD. The x-axis represents lower limb movement events, including static, as well as PLMD in the left, right, and both limbs, while the y-axis shows the number of limb movements per unit time.

Figure 14 shows the experimental results with respect to periods. The x-axis is labeled as the event type, and the y-axis counts the number of movement units. PLMD patients displayed limb movements in different forms. In Exp1, subjects presented both limb movements with 12 and 15 units, and individual limb movements were observed, with 5 units for the left limb and 14 units for the right. In Exp2, only left lower limb movements were recorded, with eight units. As mentioned in the above accuracy analysis, the offset values from static to limb movements were not clear, and the error rate was approximately 2.5% in this case. Similarly, movements in both limbs and the left limb alone were observed in Exp3. Each unit was determined from the velocity and step size of the limb movement from the initial pose to another pose, as computed from the ultrasonic sensor distances. In Exp4, limb movement was constant across various durations and therefore was classified as non-PLMD status. The limitation of early-stage PLMD analysis is that small movements are challenging to analyze, particularly the very small step sizes at the beginning of limb movements.

Figure 14.

Experiment results of PLMD analysis with respect to periods.

3.4. Comparison

The proposed hardware schemes for PLMD detection are compared with relevant research methods in Table 2. Novel hardware schemes were developed for the identification and monitoring of PLMD. Non-wearable approaches have been developed with 97.5% accuracy and the utilization of machine learning algorithms for analysis. FPGA is advantageous in this approach for the concurrent computation of algorithms with synchronization.

Table 2.

Comparison of sleep posture analysis with relevant research methods.

4. Conclusions

In this study, PLMD detection and its challenges were investigated using hardware accelerators. The proposed approach involves data capture and normalization using non-wearable sensors and hardware schemes, the results of which were fed to the accelerators. PLMD monitoring and analysis were performed using a hardware accelerator with a random forest classifier and binary-based feature-matching algorithms. These tools proved effective, with an accuracy of 97.5% and a power consumption of around 1.4 W for computation. The limitation is that the early stages of PLMD involve small movements with small step sizes, which are difficult to analyze. Future research should focus on analyzing early movements with small step sizes and velocity. Partial reconfiguration is another possible future method for analyzing multiple scenarios.

Author Contributions

Conceptualization, S.K.G., M.C.C., G.D.V., N.J. and S.D.; methodology, M.S., S.K.G., M.C.C. and S.D.; validation, M.S., M.C.C., G.D.V., M.B., D.H.K. and N.J.; writing—original draft preparation, M.S., M.C.C., S.K.G., M.B. and D.H.K.; writing—review and editing, M.C.C., S.K.G., G.D.V., N.J. and S.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article and available at: https://github.com/Chinnaiah21/Ultrasonic-sensor-data. Further inquiries can be directed to the corresponding author.

Acknowledgments

The EDA Tool and FPGA hardware were supported by the B V Raju Institute of Technology, Medak (Dist.), Narsapur.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| PLMD | Periodic limb movement disorder |

| FPGA | Field-programmable gate array |

| GPU | Graphics Processing Unit |

| CNN | Convolutional neural network |

| Xi | Sensor Feature, considering units in centimeters |

| yi | Sleep posture labels, considering in seconds |

| D | Training dataset |

| T | Number of decision trees |

| M | Total Features, considering units in seconds |

| m | Sampled with features |

| D_t | Bootstrap sample (D), per second |

| LLL | Lower limb—left |

| LLR | Lower limb—right |

| UBR | Upper-bed, right-side |

| UBL | Upper-bed, left-side |

| MBR | Middle-bed, right-side |

| MBL | Middle-bed, left-side |

| TLM | Time of limb movement |

| UART | Universal asynchronous receiver/transmitter |

| RFID | Radiofrequency identification |

| RFFD | Random forest-based fusion decision |

| RF | Random forest |

References

- World Health Organization. Regional Office for Europe. WHO Technical Meeting on Sleep and Health: Bonn, Germany, 22–24 January 2004. Available online: https://iris.who.int/bitstream/handle/10665/349782/WHO-EURO-2004-4242-44001-62044-eng.pdf (accessed on 11 November 2024).

- American Academy of Sleep Medicine. International Classification of Sleep Disorders, 2nd ed.; ICSD-2; American Academy of Sleep Medicine: Darien, IL, USA, 2005.

- Hwang, S.H.; Lee, H.J.; Yoon, H.N.; Jung, D.W.; Lee, Y.-J.G.; Jeong, D.-U.; Park, K.S. Unconstrained sleep apnea monitoring using polyvinylidene fluoride film-based sensor. IEEE Trans. Bio-Med. Eng. 2014, 61, 2125–2134.

- Beattie, Z.T.; Hagen, C.C.; Pavel, M.; Hayes, T.L. Classification of breathing events using load cells under the bed. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; Volume 2009, pp. 3921–3924.

- Drakatos, P.; Olaithe, M.; Verma, D.; Ilic, K.; Cash, D.; Fatima, Y.; Higgins, S.; Young, A.H.; Chaudhuri, K.R.; Steier, J.; et al. Periodic limb movements during sleep: A narrative review. J. Thorac. Dis. 2021, 13, 6476–6494.

- Waltisberg, D.; Amft, O.; Brunner, D.P.; Troster, G. Detecting disordered breathing and limb movement using in-bed force sensors. IEEE J. Biomed. Health Inform. 2016, 21, 930–938.

- Maggi, A.; Sauter, M. Measuring Device for Detecting Positional Changes of Persons in Beds. U.S. Patent 14/130 396, 15 May 2014.

- Gschliesser, V.; Frauscher, B.; Brandauer, E.; Kohnen, R.; Ulmer, H.; Poewe, W.; Högl, B. PLM detection by actigraphy compared to polysomnography: A validation and comparison of two actigraphs. Sleep Med. 2009, 10, 306–311.

- Hu, X.; Naya, K.; Li, P.; Miyazaki, T.; Wang, K.; Sun, Y. Non-Invasive Sleeping Posture Recognition and Body Movement Detection Based on RFID. In Proceedings of the 2018 IEEE International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), Halifax, NS, Canada, 30 July–3 August 2018; pp. 1817–1820.

- Cesari, M.; Christensen, J.A.E.; Jennum, P.; Sorensen, H.B.D. Probabilistic Data-Driven Method for Limb Movement Detection during Sleep. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 163–166.

- Rauhala, E.; Virkkala, J.; Himanen, S.-L. Periodic limb movement screening as an additional feature of Emfit sensor in sleep disordered breathing studies. J. Neurosci. Methods 2009, 178, 157–161.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

- Sravanthi, M.; Gunturi, S.K.; Chinnaiah, M.C.; Lam, S.-K.; Vani, G.D.; Basha, M.; Janardhan, N.; Krishna, D.H.; Dubey, S. A Field-Programmable Gate Array-Based Adaptive Sleep Posture Analysis Accelerator for Real-Time Monitoring. Sensors 2024, 24, 7104.

- Sravanthi, M.; Gunturi, S.K.; Chinnaiah, M.C.; Lam, S.-K.; Vani, G.D.; Basha, M.; Janardhan, N.; Krishna, D.H.; Dubey, S. Adaptive FPGA-Based Accelerators for Human–Robot Interaction in Indoor Environments. Sensors 2024, 24, 6986.

- Thathsara, M.; Lam, S.-K.; Kawshan, D.; Piyasena, D. Hardware Accelerator for Feature Matching with Binary Search Tree. In Proceedings of the 2024 IEEE International Symposium on Circuits and Systems (ISCAS), Singapore, 19–22 May 2024; pp. 1–5.

- Wan, Z.; Yu, B.; Li, T.Y.; Tang, J.; Zhu, Y.; Wang, Y.; Raychowdhury, A.; Liu, S. A Survey of FPGA-Based Robotic Computing. IEEE Circuits Syst. Mag. 2021, 21, 48–74.

- Kolappan, S.; Krishnan, S.; Murray, B.J.; Boulos, M.I. A low-cost approach for wide-spread screening of periodic leg movements related to sleep disorders. In Proceedings of the 2017 IEEE Canada International Humanitarian Technology Conference (IHTC), Toronto, ON, Canada, 21–22 July 2017; pp. 105–108.

- Kye, S.; Moon, J.; Lee, T.; Lee, S.; Lee, K.; Shin, S.-C.; Lee, Y.S. Detecting periodic limb movements in sleep using motion sensor embedded wearable band. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1087–1092.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).