Abstract

To address the complexity of network architecture design and the low computational efficiency caused by varying numbers of modalities in multimodal cloud detection, this paper proposes M2Cloud, an efficient and unified multimodal cloud detection model that can process data from any number of modalities. The core innovation of M2Cloud lies in its novel multimodal data fusion method, which avoids architectural changes when new modalities are added, thereby significantly reducing incremental computational cost and improving overall efficiency. Furthermore, the proposed multimodal data fusion module generalizes well and can be integrated into other network architectures in a plug-and-play manner, which enhances its practicality and flexibility. To address the challenge of unified multimodal feature extraction, we adopt two key strategies: (1) constructing, for each modality, a feature extraction module that shares a common structure but has independent weights, to preserve the inherent features of each modality; (2) using cosine similarity to adaptively learn complementary features between different modalities, thereby reducing redundant information. Experimental results demonstrate that M2Cloud matches or surpasses state-of-the-art (SOTA) performance on the public multimodal datasets WHUS2-CD and WHUS2-CD+, verifying its effectiveness in the unified multimodal cloud detection task. This work offers new insights and technical support for multimodal data fusion and cloud detection, with both theoretical and practical value.

1. Introduction

Cloud detection, as a core task within the realm of remote sensing technology, holds a pivotal position in numerous key areas such as weather prediction [1], climate simulation [2], and environmental monitoring [3]. With the swift advancements in science and technology, a diverse array of technical methodologies has emerged to enhance both the accuracy and efficiency of cloud detection processes.

Traditional methods are mainly based on threshold setting [4,5], statistical analysis [6,7], and physical modeling [8,9], which provide a solid foundation for understanding cloud characteristics. These approaches reveal the basic properties and distribution patterns of clouds through careful analysis of remote sensing data. While effective in many scenarios, they face significant limitations: threshold-based methods often fail to distinguish clouds from snow due to their nearly identical reflectance in visible and near-infrared bands; statistical approaches rely heavily on manual feature engineering; and physical models are constrained by predefined rules that cannot adapt to complex, dynamic cloud patterns. These fundamental constraints hinder their performance in challenging detection scenarios.

To address these limitations, deep learning has emerged as a powerful alternative and has brought revolutionary changes to cloud detection. In particular, Convolutional Neural Networks (CNNs) have become mainstream tools for cloud detection owing to their strong feature extraction and classification capabilities, significantly improving detection accuracy [10,11,12]. Some studies add auxiliary branch networks to the backbone network [13,14,15] to improve feature extraction. Meanwhile, the Transformer architecture, with its strong ability to capture long-range dependencies and contextual information, has further improved the robustness and generalization of cloud detection models [16,17,18].

However, in complex and variable scenes, the feature similarity between clouds and bright ground backgrounds (especially snow and water) remains a persistent challenge in cloud detection. With the continuous progress of satellite technology, remote sensing data have become increasingly abundant [19]. Multimodal learning opens a new path for improving cloud detection performance through deep fusion of information from multiple sources. However, existing multimodal methods [20,21] remain fundamentally limited by their requirement for a dedicated backbone network per modality, an architecture that becomes increasingly inefficient as new modalities are introduced. This raises a challenging research question: how can we design an efficient, unified multimodal framework that processes data from any number of modalities and thus yields a modality-independent cloud detection model?

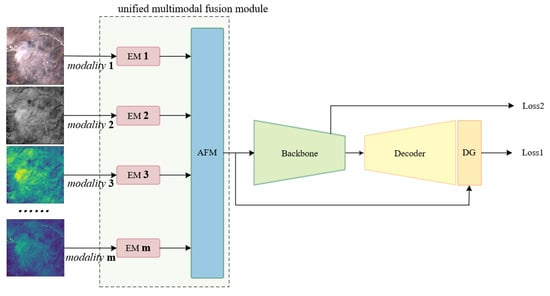

To address the aforementioned challenges, this paper introduces a highly scalable, unified multimodal cloud detection model designated as M2Cloud, which incorporates two key advances over conventional multimodal frameworks. First, our modality-agnostic fusion strategy eliminates the need for architectural modifications when incorporating new modalities. Second, the system demonstrates plug-and-play interoperability across backbone architectures. The architectural framework of M2Cloud is illustrated in Figure 1. The primary contributions of this study are delineated as follows:

Figure 1.

An overview of M2Cloud.

- A novel approach to multimodal data fusion: To process a variable number of modalities, we reconsider the fusion strategy for multimodal data and devise a scheme that requires no structural modification of the network architecture when a new modality is introduced. This approach minimizes the incremental computational cost of each additional modality, thereby improving overall efficiency. Furthermore, the multimodal data fusion module generalizes well and can be integrated into other network architectures in a plug-and-play fashion, which increases its practicality and flexibility.

- Construction and exploration of a multimodal cloud detection model: Through deep integration of multimodal data, the proposed M2Cloud model matches or surpasses SOTA accuracy on public multimodal datasets. This outcome not only validates the efficacy of M2Cloud in unified multimodal cloud detection tasks but also offers a practical reference methodology for constructing similar models.

2. Related Work

2.1. Cloud Detection Method Based on Deep Learning

Convolutional Neural Networks (CNNs) have shown exceptional capability in extracting spectral and spatial features, owing to their local receptive fields, weight sharing, translation invariance, and pooling. These attributes enable deep architectures to efficiently capture high-level semantic representations, which are pivotal for remote sensing cloud detection [22]. However, the conventional encoder–decoder structure often suffers from spatial information loss and feature dilution. To address these challenges, several studies [23,24,25] have incorporated attention mechanisms into their frameworks. Furthermore, to strengthen the ability of deep networks to capture complex features, researchers have explored multi-feature fusion strategies [15,26,27].

CNNs excel at local connectivity and feature extraction but struggle to capture global context. Conversely, Transformer models, exemplified by ViT (Vision Transformer) [28] and Swin Transformer [29], are adept at global feature extraction and contextual understanding. Singh introduced SSATR-CD, a vision transformer-based method for cloud detection in Sentinel-2 images [30]. Recognizing the complementary strengths of Transformers in global feature extraction and CNNs in local feature extraction, several studies have combined the two to improve cloud detection networks [31]. Gu et al. [32] employed a hybrid Transformer–CNN approach to concurrently extract semantic and spatial details, particularly when dealing with high- and low-resolution images. MAFNet [33] proposed a multi-branch attention fusion network that integrates a ResNet50 dual-branch and a Swin Transformer for feature extraction. Its Multi-Branch Attention Fusion Module (MAFM) enhances location information through positional encoding and uses Multi-Branch Aggregation Attention (MAA) to fuse same-level features from the two branches, improving boundary segmentation and small-object detection. These hybrid methods underscore the considerable potential of combining CNNs with Transformers for cloud detection. However, they primarily focus on feature-level fusion within single-modality data rather than on the challenges of multimodal fusion.

2.2. Multimodal Cloud Detection Method

Visible light is extensively used in satellite imagery and other domains owing to its high definition and rich information content. In visible imagery, cloud identification relies primarily on the grayscale values of the red, green, and blue channels or on the texture and structure of the image. Notably, clouds exhibit higher reflectance in the near-infrared (NIR) band, producing a pronounced contrast against the background that facilitates their identification and discrimination [34]. Integrating multispectral data from the visible and near-infrared bands is a pivotal approach in multimodal cloud detection [35,36,37] and markedly improves the accuracy and robustness of cloud detection.

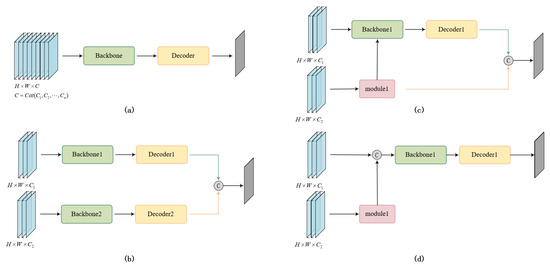

Effectively distinguishing clouds from high-brightness backgrounds (such as snow and water bodies) in complex scenarios based solely on the visible and near-infrared (VNIR) bands still poses significant challenges [38,39]. Mid-wave and short-wave infrared (SWIR) channels can be used to differentiate clouds from bright backgrounds because of their distinctive spectral response [40]; in particular, SWIR bands are notably effective at distinguishing clouds from snow-covered areas [41,42]. Meanwhile, vegetation, water bodies, urban areas, barren land, and other land covers differ significantly from clouds in top-of-atmosphere (TOA) reflectance across spectral bands, providing abundant feature information for land cover classification [43]. Geographic information offers important prior knowledge for cloud and snow detection in remote sensing images [21], and integrating geospatial data enhances the spatial localization capability of cloud detection models [44,45]. However, when processing multimodal data, these methods often simply concatenate images from different modalities as input to the feature extraction network, as illustrated in Figure 2a. This approach overlooks the complex correlations and complementarity among multimodal data across different bands and types, thereby limiting how deeply and broadly the multimodal information is exploited.

Figure 2.

Multimodal fusion methods in existing cloud detection networks. (a) Simple concatenation of multimodal data along the channel dimension. (b–d) Specialized dual-modal fusion architectures.

Wu et al. [21] designed a “geographic information encoder” that encodes the elevation, latitude, and longitude of the image into a set of auxiliary maps, which are then fed into the detection network. Ma et al. [46] fused the differential features of the image with top-of-atmosphere (TOA) reflectance information, reducing both the misclassification of thin clouds and bright surface features and missed detections. Fan et al. [47] guided the model to attend to the contextual spatial relationships between clouds and cloud shadows by fusing prior knowledge extracted from dark channels, thermal channels, and the NDVI (Normalized Difference Vegetation Index). Xu et al. [48] proposed a gradient-aware feature aggregation module (GAFAM) that focuses on gradient changes within cloud boundary regions and uses gradient information to guide feature aggregation. These methods are limited to dual-modal settings and cannot effectively integrate data from more than two modalities. Furthermore, they typically employ a separate feature extraction network for each modality, as illustrated in Figure 2b–d. Consequently, the original model must be redesigned and adjusted whenever the number of modalities increases, which significantly reduces efficiency.

2.3. Multimodal Datasets for Cloud Detection

The WHUS2-CD dataset [43], compiled by Wuhan University, includes Sentinel-2A imagery with 13 spectral bands at resolutions of 10 m, 20 m, and 60 m. It comprises 32 images, featuring diverse land covers like farmland, forests, and snow/ice. The dataset spans April 2018 to May 2020, covering all seasons. WHUS2-CD+ adds four images with snow/ice scenes.

The CloudSEN12+ dataset [49] expands CloudSEN12 [50], doubling expert-labeled annotations to become the largest for cloud and cloud shadow detection in Sentinel-2 imagery. It includes over 50,000 image patches with diverse cloud scenarios, labeled in two sizes: 509 × 509 and 2000 × 2000 pixels at 10 m resolution.

The GF1_WHU dataSets [51], a cloud detection dataset released by the SENDIMAGE Laboratory of Wuhan University, include 108 GF-1 wide field of view (WFV) Level-2A scenes together with corresponding reference cloud and cloud shadow masks. The dataset has a spatial resolution of 16 m and covers four multispectral bands: red, green, blue, and near-infrared.

The MODIS dataset [40] is a fully annotated cloud detection dataset split into 1192 training images, 80 validation images, and 150 test images. It is based on data from the Moderate Resolution Imaging Spectroradiometer (MODIS), one of NASA’s key Earth observation instruments. The dataset includes the 10 most discriminative channels and covers four main scenarios: ocean, land, sea–land interface, and polar glaciers.

The 38-Cloud dataset [52] has 18 training and 20 test Landsat 8 scenes. The 95-Cloud dataset [53], an extension, includes 75 diverse training scenes but uses the same 20 test scenes as the 38-Cloud. Both datasets feature red, green, blue, and near-infrared spectral channels.

The SPARCS dataset [54] covers 80 complete Landsat 8 scenes. During data processing, only bands 2 (blue), 3 (green), 4 (red), and 5 (near-infrared) are selected as input channels.

The Levir_CS dataset [21] is a large-scale dataset for cloud and snow detection, consisting of 4168 GF-1 WFV satellite images. These images are distributed globally and cover a variety of land types such as plains, plateaus, water bodies, deserts, and snow and ice, as well as combinations of these types. For each scene in the Levir_CS dataset, a four-band multispectral image, a digital elevation model (DEM) image, and a ground-truth annotation are provided.

Table 1 summarizes these datasets.

Table 1.

Information of the datasets.

3. Method

3.1. Overall Network and Motivation

This paper proposes a unified multimodal cloud detection model that can handle tasks with an arbitrary number of modalities. To address the challenges of unified multimodal feature extraction, it employs two strategies:

- For each modality, a feature extraction module with a shared, consistent structure but independent weights is established. This module learns the specific distribution characteristics within each modality while preventing the direct blending of modalities with substantial discrepancies, thereby preserving their inherent features. Additionally, a unique identifier, acting as an inductive bias, is assigned to each modality category.

- The utilization of cosine similarity enables the network to adaptively learn complementary features across different modalities, mitigating the redundancy of similar features.

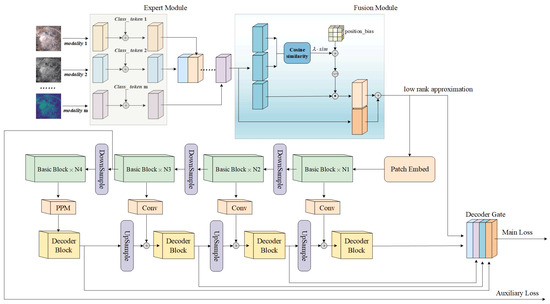

Consequently, our model comprises four core modules: the Modal Feature Expert Module, the Adaptive Multimodal Fusion Module, the backbone network, and the decoder as illustrated in Figure 3. In the subsequent subsections, we delve into the specifics of each module.

Figure 3.

An overview of our proposed network. Unified multimodal cloud detection process: data from each modality are fed into expert modules that share the same structure but have independent weights. The extracted feature maps are then stacked along the channel dimension, and complementary information is fused by computing cosine similarity. Subsequently, a low-rank approximation of the fused feature maps is fed into the backbone network for further feature extraction. This approximation also serves as an auxiliary input to the decoder gate, aiding the decoding process of cloud detection.

3.2. Modal Feature Expert Module

In constructing a unified multimodal cloud detection model, it is vital to ensure that feature extraction modules across different modalities share a consistent structure, while each module maintains independent weights to capture modal-specific distribution characteristics. To avoid feature corruption from directly mixing disparate modalities, we adopt an inductive bias approach by assigning a unique identifier to each modality category. Specifically, we initialize these modal class labels using a truncated cosine function within the interval . In practice, we employ a partial discrete sampling of cosine function values within [0,].

Let be the number of modal branches and i be the branch index (from 0 to ), then inter-class identifier of the ith modality can be expressed as

where represents the angle in radians calculated by

Given the monotonically decreasing nature of the cosine function within the specified range, and by disrupting its inherent symmetry (considering only a portion of one half-period), each modal category can be assigned a unique, non-repeating cosine value as an identifier. This approach guarantees that, despite the potential similarities in features between two modal categories, their identifiers remain distinguishable due to different angles, thereby effectively mitigating the risk of multimodal features mutually canceling out in specific scenarios.
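Because the angle formula above did not survive extraction, the sketch below assumes evenly spaced angles θ_i = i·(π/2)/N for N modality branches; `modality_identifiers` is a hypothetical helper, not the authors' code. It only illustrates the property the text relies on: sampling cosine on part of a half-period yields distinct, monotonically decreasing identifiers.

```python
import math


def modality_identifiers(num_modalities, max_angle=math.pi / 2):
    """Return one cosine-valued identifier per modality branch.

    ASSUMPTION: theta_i = i * max_angle / N for i = 0..N-1. Because cosine is
    strictly decreasing on [0, pi], any 0 < max_angle <= pi yields N distinct values.
    """
    if num_modalities < 1:
        raise ValueError("need at least one modality branch")
    step = max_angle / num_modalities
    return [math.cos(i * step) for i in range(num_modalities)]


ids = modality_identifiers(4)
print([round(v, 4) for v in ids])  # [1.0, 0.9239, 0.7071, 0.3827]
```

Any other sampling rule that stays inside one monotonic half-period would preserve the same uniqueness guarantee.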

Furthermore, we make the modality class labels learnable by enabling gradients on them, and we use matrix broadcasting to expand these identifiers to the size of the feature maps. This helps the network learn token-level feature information during training. Consequently, the model not only exploits the complementary information among different modalities but also remains stable in complex and dynamic environments. Notably, the model can automatically discard the modality class token in unimodal scenarios.
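The broadcasting step can be sketched with plain nested lists, assuming the identifier is added to every element of the modality's feature map (the text does not state whether it is added or concatenated). In a framework such as PyTorch the identifier would typically be a learnable `torch.nn.Parameter` so gradients reach it; `tag_with_identifier` and `stack_modalities` are hypothetical names.

```python
def tag_with_identifier(feature_map, identifier):
    """Add a scalar identifier to every element of a C x H x W nested list.

    This mimics broadcasting a (1, 1, 1) tensor against a (C, H, W) tensor.
    """
    return [[[value + identifier for value in row] for row in channel]
            for channel in feature_map]


def stack_modalities(feature_maps, identifiers):
    """Tag each modality's features with its identifier, then concatenate on channels."""
    if len(feature_maps) != len(identifiers):
        raise ValueError("need exactly one identifier per modality")
    stacked = []
    for fmap, ident in zip(feature_maps, identifiers):
        stacked.extend(tag_with_identifier(fmap, ident))
    return stacked


rgb = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(3)]  # 3 channels, 2 x 2
nir = [[[1.0, 1.0], [1.0, 1.0]]]                    # 1 channel, 2 x 2
stacked = stack_modalities([rgb, nir], [1.0, 0.5])
print(len(stacked), stacked[3][0][0])  # 4 1.5
```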

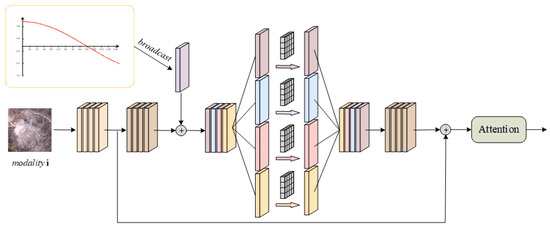

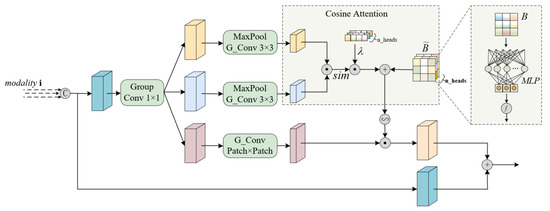

Because computational complexity grows linearly with the number of modalities, this section adopts a lightweight design. Specifically, the i-th modality signal is fed into its corresponding expert module (EM). As depicted in Figure 4, the initial features are first mapped into a higher-dimensional space. To balance feature extraction capability and computational efficiency, group convolution with G groups is used, which reduces the parameters and floating-point operations (FLOPs) to 1/G of those of a standard convolution. Furthermore, residual connections are introduced to facilitate cross-layer feature propagation and fusion.
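The 1/G saving can be checked with a small parameter count. The channel width (64) and G = 4 below are hypothetical values chosen only for illustration; the helpers count weights of a bias-free convolution.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a k x k convolution (bias omitted).

    Each group maps c_in/groups input channels to c_out/groups output
    channels, so the total is (c_in/groups) * c_out * k * k.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("c_in and c_out must be divisible by groups")
    return (c_in // groups) * c_out * k * k


def conv_macs(c_in, c_out, k, height, width, groups=1):
    """Multiply-accumulates for a stride-1 convolution with 'same' padding."""
    return conv_params(c_in, c_out, k, groups) * height * width


standard = conv_params(64, 64, 3)            # 36864 weights
grouped = conv_params(64, 64, 3, groups=4)   # 9216 weights
print(standard // grouped)                   # 4, i.e. G
```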

Figure 4.

The architecture of the expert module.

3.3. Adaptive Multimodal Fusion Module

Repeatedly stacking similar features does not introduce new perceptual information into the neural network. When dealing with multimodal data, the principal challenge lies in exploring and extracting complementary features among different modalities while preserving the semantic information of each modality in a compact, low-dimensional representation. This is the core issue in achieving feature fusion that is agnostic to the number of modalities.

From the perspective of spatial cosine distance, a larger cosine distance between two basis vectors (or feature vectors) indicates a more significant difference in their spatial orientation, often perceived as a potential indicator of feature complementarity. When two feature vectors from different modalities exhibit a large cosine distance in space, they may represent unique information or attributes specific to their respective modalities. In the fusion process, these features can complement one another, thereby providing a more comprehensive and accurate description of the data.

As shown in Figure 5, we denote the high-order multimodal matrix received from the EMs as X, in which the n modalities are stacked only along the channel dimension, without fusion. Each modality can be decomposed into m individual subspaces along the channel dimension. Initially, X is projected into three distinct copies through linear transformations, with each modality having its own set of weights, represented as

where , for all . For and , the cosine similarity can be calculated using the following formula:

where ⊙ denotes the Hadamard product. A learnable modifier scales the similarity scores, which helps stabilize training and improves model performance. It is initialized to log(10) and, during training, is restricted to the range [log(10), log(100)] by the formula

when it is updated. Consequently, the scale applied to the similarity scores never exceeds 100, which avoids the adverse effect of excessively large or small values on training. A relative position bias is added to the similarity scores to account for the relative positions of elements; this bias is produced by a multi-layer perceptron (MLP) and scaled by a sigmoid activation function, as given by

where sgn(B) is the sign function of the relative position offset B, and the scaling factor maps the magnitude of the offset to a suitable range.
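A minimal sketch of the scaled cosine similarity, assuming the learnable log-scale is clamped to [log 10, log 100] and then exponentiated, as the text describes. The position bias is passed in precomputed because the MLP formula is not reproduced here; all function names are hypothetical.

```python
import math

LOG_SCALE_MIN = math.log(10.0)   # initial value and lower bound stated in the text
LOG_SCALE_MAX = math.log(100.0)  # upper bound stated in the text


def effective_scale(log_scale):
    """Clamp the learnable log-scale to [log 10, log 100], then exponentiate."""
    return math.exp(min(max(log_scale, LOG_SCALE_MIN), LOG_SCALE_MAX))


def cosine_similarity(a, b, eps=1e-8):
    """Dot product of a and b divided by the product of their L2 norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / max(norms, eps)


def scaled_similarity(queries, keys, log_scale, bias=None):
    """Score matrix S[i][j] = scale * cos(q_i, k_j) + bias[i][j] (bias optional)."""
    scale = effective_scale(log_scale)
    scores = [[scale * cosine_similarity(q, k) for k in keys] for q in queries]
    if bias is not None:
        scores = [[s + b for s, b in zip(row, brow)] for row, brow in zip(scores, bias)]
    return scores


print(scaled_similarity([[1.0, 0.0]], [[2.0, 0.0], [0.0, 3.0]], math.log(10.0)))
```

Because cosine similarity lies in [-1, 1], the clamped scale bounds every score (before bias) to at most 100 in magnitude.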

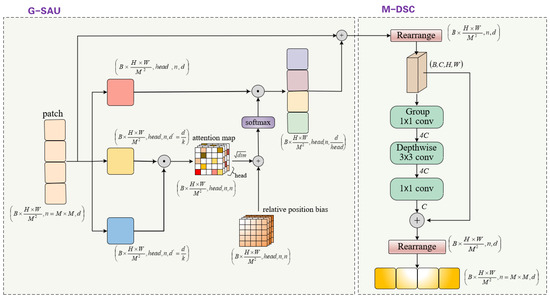

Figure 5.

The architecture of the adaptive fusion module.

After the cosine similarity matrix is obtained, the attention weights are computed with the softmax function. The cosine distance matrix is then derived and combined with a copy of the data so that features are weighted by their dissimilarity (heterogeneous relationships). The fusion process is expressed as follows:

Here, and denote the Softmax function applied in different dimensions, respectively. Here, the similarity is converted to the difference value, which is normalized and dot-multiplied with to obtain the fused complementary feature representation .
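Since the fusion equation was lost in extraction, the sketch below is only one plausible reading of "similarity converted to difference, normalized, and multiplied with a copy of the data": it assumes the difference is 1 − softmax weight, renormalized per row, and uses a single softmax rather than the two described. `complementary_fusion` is a hypothetical helper.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def complementary_fusion(scores, values):
    """Weight `values` by how dissimilar each key is to the query.

    ASSUMPTION: similarity weights a are turned into differences 1 - a and
    renormalized so each row sums to 1; the paper's exact formula may differ.
    """
    fused = []
    for row in scores:
        diff = [1.0 - a for a in softmax(row)]
        total = sum(diff)
        if total == 0.0:  # a single key: nothing to contrast against
            weights = [1.0 / len(diff)] * len(diff)
        else:
            weights = [d / total for d in diff]
        fused.append([sum(w * v[j] for w, v in zip(weights, values))
                      for j in range(len(values[0]))])
    return fused


# One query that is very similar to key 0 and dissimilar to key 1.
out = complementary_fusion([[10.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]])
print([round(x, 4) for x in out[0]])
```

The output is dominated by the value of the least similar key, which is the intended "complementary" behavior.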

To deeply fuse feature representations and effectively reduce dimensionality, we employ a global convolution operation that focuses on channel paths, extracts cross-modal shared features, and eliminates redundant information. Additionally, we introduce residual connections to maintain feature integrity and prevent performance degradation. The final features are

3.4. Backbone

We previously highlighted the potential of combining CNNs and Transformers for cloud detection. However, existing hybrid models often adopt a parallel design, using CNN and Transformer modules independently as feature encoders. While this leverages the strengths of both, it increases model complexity and computational demands. Moreover, due to the inherent differences in feature extraction between CNNs and Transformers, current studies are limited to simple fusion strategies like concatenation or weighted summation, which may not fully capture the complex interactions between these features.

To address these issues, this paper proposes an innovative hybrid basic block that integrates CNNs and Transformers as the core component of the network backbone. Additionally, we design corresponding stacking principles to ensure flexible scalability, allowing the model to adapt to varying scales and complexity requirements.

3.4.1. Basic Block

Multimodal feature maps contain richer information, which suggests that multimodal extrapolation may require a larger network architecture to accommodate diverse feature bases and extract features comprehensively. Within the basic block, we design two operators arranged in series: the Global Self-Attention Unit (G-SAU) and the Multigroup Depthwise Separable Convolution (M-DSC).

G-SAU, with its unconstrained receptive field, excels at modeling long-range dependencies. Meanwhile, convolution is highly sensitive to the contextual relationships between local pixels, which helps compensate for the potential weakness of self-attention in capturing local information. In M-DSC, we adopt a convolution-based inverted residual structure to replace the MLP-based feed-forward network of the conventional ViT. This design facilitates the extraction of texture details from small targets and further enhances the model’s feature extraction capability in complex multimodal scenes. The entire process is shown in Figure 6.

Figure 6.

Structure of the basic block.

In G-SAU, despite the variations among different modal information, their spatial geometric information remains consistent. Therefore, multimodal self-attention is employed to learn these rich spatial features. The input feature map is divided along the spatial dimensions into non-overlapping patches of size . Subsequently, different linear transformations are applied to the features of each patch, mapping them into three latent state spaces. To alleviate the computational burden, the dimensions of the queries (Q) and keys (K) are sparsified to of the original dimensions, while the dimensions of the values (V) remain unchanged. Within each window, for a feature matrix X composed of n tokens, the computation of self-attention is conducted as follows:

where . d and are the original and sparsified feature dimensions, respectively. and denote the light weights and normal weights, respectively, and B represents the relative position deviation matrix.
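The window split and the saving from shrinking the query/key dimension can be sketched as follows. The patch size and sparsification ratio are missing from the text, so p = 2 on a 4 × 4 toy grid, a 7 × 7 window, d = 64, and a reduced Q/K width of 16 are hypothetical values; only the two attention matrix products are counted.

```python
def window_partition(feature, p):
    """Split an H x W grid (list of rows) into non-overlapping p x p windows.

    Windows are returned in row-major order, each flattened to p * p tokens.
    """
    height, width = len(feature), len(feature[0])
    if height % p or width % p:
        raise ValueError("H and W must be divisible by the window size p")
    windows = []
    for top in range(0, height, p):
        for left in range(0, width, p):
            windows.append([feature[top + i][left + j]
                            for i in range(p) for j in range(p)])
    return windows


def attention_macs(n_tokens, d_qk, d_v):
    """Multiply-accumulates of the two attention matmuls in one window.

    Q K^T costs n * n * d_qk and (softmax) V costs n * n * d_v; the linear
    projections are not counted.
    """
    return n_tokens * n_tokens * (d_qk + d_v)


grid = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4 x 4 toy map
print(window_partition(grid, 2)[0])  # [0, 1, 4, 5]
# 7 x 7 window (49 tokens), d = 64; Q/K reduced to a hypothetical 16 dims.
print(attention_macs(49, 64, 64), attention_macs(49, 16, 64))  # 307328 192080
```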

In M-DSC, we initially reshape the one-dimensional sequence features to revert them back into , thereby restoring their spatial structure:

To enhance feature representation, we introduce grouped pointwise convolutions for blocks with odd indices, dividing feature maps into g groups and applying independent linear transformations. For even-indexed blocks, we use standard convolutions. Within each group, depthwise separable convolutions (combining depthwise and pointwise convolutions) are employed. This diversifies feature transformations and boosts expressiveness. Group feature fusion is performed via a weighted sum along the channel dimension, constructing the final local perception representation:

where and represent the results after the self-attention operation and the output of the local perception unit, respectively; represents k × k (k = 1 here) convolution; and represents k × k (k = 3) depthwise separable convolution (depthwise convolution + pointwise convolution). With the aforementioned operations, we can efficiently model local relationships.
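To see why depthwise separable and grouped pointwise convolutions keep M-DSC light, the sketch below counts weights for a hypothetical 128-channel layer with g = 4. It also encodes the odd/even rule, assuming 0-based block indices (the text does not say which convention is used); the weighted-sum group fusion is not modeled.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a dense k x k convolution (bias omitted)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """Depthwise k x k (one filter per input channel) followed by a 1 x 1 pointwise conv."""
    return c_in * k * k + c_in * c_out


def grouped_pointwise_params(c_in, c_out, groups):
    """1 x 1 convolution split into `groups` independent channel groups."""
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    return (c_in // groups) * c_out


def block_pointwise_kind(block_index):
    """ASSUMPTION: 0-based indices; odd blocks use grouped, even blocks standard pointwise convs."""
    return "grouped" if block_index % 2 == 1 else "standard"


print(standard_conv_params(128, 128, 3))        # 147456
print(depthwise_separable_params(128, 128, 3))  # 17536
```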

3.4.2. Architecture Deployment

To construct a robust network architecture for parsing multi-scale semantic features, we devise a hierarchical, multi-resolution backbone. This backbone divides the feature extraction process into four distinct resolution levels, with each subsequent level being downsampled by a factor of two to capture a spectrum of information ranging from fine to coarse details.

In implementation, each resolution level comprises a predetermined number of identical basic blocks, and this number differs by level. At the first, second, and fourth resolution levels, we use the same number of basic blocks (N1, N2, and N4, respectively, with N1 = N2 = N4 = 2) to ensure stable and efficient feature extraction. Conversely, the third resolution level may require more capacity because of its potentially richer semantic information, so we increase its number of basic blocks (N3 = 16) to strengthen feature extraction.
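The stage layout can be tabulated directly from the stated depths. The 128 × 128 feature size entering stage 1 is a hypothetical value, since the stem's downsampling factor is not given here.

```python
STAGE_DEPTHS = (2, 2, 16, 2)  # N1, N2, N3, N4 from the text


def stage_shapes(height, width, depths=STAGE_DEPTHS):
    """Return (height, width, num_blocks) per stage; each stage halves H and W.

    ASSUMPTION: (height, width) is the feature size entering stage 1.
    """
    shapes = []
    for level, depth in enumerate(depths):
        factor = 2 ** level
        if height % factor or width % factor:
            raise ValueError("input size must be divisible by 2**(num_stages - 1)")
        shapes.append((height // factor, width // factor, depth))
    return shapes


for h, w, n in stage_shapes(128, 128):
    print(f"{h}x{w}: {n} blocks")
print("total blocks:", sum(STAGE_DEPTHS))  # total blocks: 22
```

Concentrating 16 of the 22 blocks at the 1/4-resolution stage keeps most of the depth where token counts are moderate.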

3.5. Decoder

In our network architecture, we adopt the standard upsampling technique and skip connections as the core components of the decoder. At the lowest level of the network, we integrate the Pyramid Pooling Module (PPM) [55] to enhance the capability of capturing global context information.

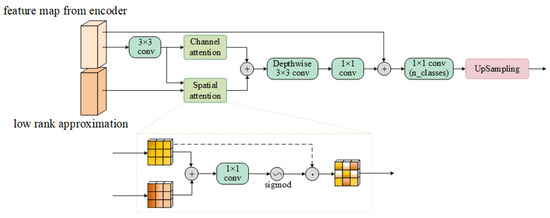

To further improve the accuracy and efficiency of recovering spatial detail from deep features, we introduce the decoder gate (DG) mechanism, an efficient second-order operation. Because deep feature maps are limited in their ability to capture spatial details accurately, the DG mechanism incorporates shallow feature maps as guidance. These shallow feature maps preserve finer spatial structures and edge details, effectively compensating for the blurriness of deep features. The structure of the proposed decoder gate is depicted in Figure 7. The process before the classifier can be approximated as

where and represent the feature map of the cascade at the decoder and the output of the approximate feature extraction module at the multimodal fusion module, and and denote channel attention and spatial attention, respectively.

Figure 7.

Structure of the decoder gate (DG).

3.6. Loss Function

To solve the problem of feature degradation in deep networks, an auxiliary classifier is introduced into the third stage of the backbone network, which enhances feature representation by incorporating additional supervised signals to accelerate and optimize the learning process during the initial training stage. However, over-reliance on shallow auxiliary branches may weaken deep feature utilization and affect model performance. To this end, we design a dynamic loss allocation strategy. During the initial training stages, the auxiliary classifier is assigned a high loss weight () to provide strong supervisory signals that combat vanishing gradients and accelerate convergence, particularly crucial for capturing the spectral contrasts characteristic of cloud detection tasks. As training progresses, a linear decay factor (( − )/epochs) facilitates a smooth transition from auxiliary-to-main classifier dominance, maintaining stable optimization while allowing gradual adaptation to deeper feature representations. In the final training stages, a minimal auxiliary weight () preserves subtle supervisory signals without over-reliance on shallow features, ensuring the model develops robust deep feature discriminability while retaining sensitivity to fine cloud boundaries. The dynamic balance between auxiliary and main loss components effectively optimizes feature learning across all network depths while maintaining the model’s ability to capture both global cloud characteristics and local boundary details. The total loss can be expressed as follows:

and () are two hyperparameters that denote the proportions allocated to the auxiliary branch at the beginning and end of the training, and and denote the losses of the main and auxiliary branches, respectively.
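The dynamic allocation can be sketched as a linear schedule. The start and end weights (0.4 and 0.1) are hypothetical, and the combined form L = L_main + α·L_aux is an assumption because the total-loss equation was lost in extraction.

```python
def aux_weight(epoch, total_epochs, alpha_start, alpha_end):
    """Linearly decay the auxiliary weight from alpha_start (epoch 0) to alpha_end (final epoch).

    Each epoch subtracts (alpha_start - alpha_end) / total_epochs.
    """
    progress = min(max(epoch / total_epochs, 0.0), 1.0)
    return alpha_start - (alpha_start - alpha_end) * progress


def total_loss(main_loss, aux_loss, epoch, total_epochs, alpha_start=0.4, alpha_end=0.1):
    """ASSUMED form: L = L_main + alpha(epoch) * L_aux (alpha values are hypothetical)."""
    return main_loss + aux_weight(epoch, total_epochs, alpha_start, alpha_end) * aux_loss


for e in (0, 50, 100):
    print(e, round(aux_weight(e, 100, 0.4, 0.1), 3))
```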

4. Experiment

4.1. Datasets and Evaluation Metrics

In this study, we use the WHUS2-CD cloud detection dataset constructed by Wuhan University. Its core advantage is that it contains the complete multispectral, multi-resolution information of all 13 Sentinel-2 spectral bands and provides complex surface scenes (farmland, forest, snow and ice, etc.) through 32 images covering all seasons. To target the difficulty of cloud–snow confusion, the enhanced version, WHUS2-CD+, adds four high-resolution images of snow and ice scenes, which makes detection harder in regions where the spectra of clouds and snow overlap. This dataset has been widely used as a benchmark for cloud detection. Its multispectral characteristics, complete seasonal coverage, and targeted enhancement make it well suited for verifying the robustness of the algorithm under different land surface conditions and meteorological scenarios, and thus for validating the advantages of the proposed method.

Because the VNIR, VRE/SWIR, and Ca/WV/Cir bands have spatial resolutions of 10 m, 20 m, and 60 m, respectively, the WHUS2-CD dataset divides the 13 bands into three resolution-based groups of modalities. Clouds exhibit high reflectance in the near-infrared (NIR) band, which creates a strong contrast with the background and aids their identification and discrimination [34]. We therefore treat the NIR band as a separate modality in this paper, which yields four modalities in total. To establish a unified multimodal processing framework, all bands originally at 20 m and 60 m are resampled to 10 m by bilinear interpolation.
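The grouping and resampling step can be illustrated with a short NumPy sketch. It assumes that each band is available as a 2-D array at its native resolution, keyed by its Sentinel-2 band name. The band-to-group assignment follows the Sentinel-2 band specification, but the group keys and helper functions are hypothetical. The interpolation convention used here (pixel-centre alignment with edge clamping) is one common choice, so results may differ slightly from whichever library routine was used in the paper.

```python
from typing import Dict, List, Tuple

import numpy as np

# Sentinel-2 band groups treated as modalities, with native resolution in metres.
# The dictionary keys are illustrative names, not identifiers from the paper.
MODALITY_GROUPS: Dict[str, Tuple[List[str], int]] = {
    "visible": (["B2", "B3", "B4"], 10),
    "nir": (["B8"], 10),
    "vre_swir": (["B5", "B6", "B7", "B8A", "B11", "B12"], 20),
    "ca_wv_cir": (["B1", "B9", "B10"], 60),
}


def bilinear_upsample(band: np.ndarray, factor: int) -> np.ndarray:
    """Upsample a 2-D band by an integer factor with bilinear interpolation."""
    h, w = band.shape
    # Map output pixel centres back to input coordinates and clamp at the borders.
    ys = np.clip((np.arange(h * factor) + 0.5) / factor - 0.5, 0, h - 1)
    xs = np.clip((np.arange(w * factor) + 0.5) / factor - 0.5, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[:, None]  # vertical weights, shape (H_out, 1)
    wx = (xs - x0)[None, :]  # horizontal weights, shape (1, W_out)
    band = band.astype(np.float64)
    top = band[y0][:, x0] * (1 - wx) + band[y0][:, x1] * wx
    bottom = band[y1][:, x0] * (1 - wx) + band[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def build_modalities(bands: Dict[str, np.ndarray], target_res: int = 10) -> Dict[str, np.ndarray]:
    """Resample every band to target_res and stack each group into a (C, H, W) array."""
    modalities = {}
    for name, (band_ids, res) in MODALITY_GROUPS.items():
        factor = res // target_res  # 1 for 10 m bands, 2 for 20 m, 6 for 60 m
        layers = [bilinear_upsample(bands[b], factor) for b in band_ids]
        modalities[name] = np.stack(layers, axis=0)
    return modalities
```

With a factor of 1 the interpolation weights are zero, so the 10 m bands pass through unchanged. The resulting four arrays hold 3, 1, 6, and 3 channels on a common 10 m grid.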

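Performance is evaluated with the metrics conventionally adopted in cloud detection research (accuracy, precision, recall, F1-score, and MIoU), which ensures direct comparability with existing studies. The key metrics are defined as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \qquad \mathrm{MIoU} = \frac{1}{N} \sum_{i=1}^{N} \frac{TP_i}{TP_i + FP_i + FN_i}$$

where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. The subscript $i$ denotes counts computed for class $i$, and N is the number of classes in the dataset.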
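For a binary cloud mask (N = 2), all of these metrics can be computed from a single confusion matrix. The NumPy sketch below is illustrative. It treats class 1 as cloud, computes precision and recall for the cloud class only, and averages IoU over both classes. This section does not state whether the reported figures were accumulated per image or over the whole test set, so the hypothetical `cloud_metrics` helper leaves that choice to the caller.

```python
import numpy as np


def cloud_metrics(pred: np.ndarray, target: np.ndarray) -> dict:
    """Accuracy, precision, recall, F1 and MIoU for binary masks (1 = cloud, 0 = clear)."""
    n = 2  # number of classes N
    pred = pred.ravel().astype(np.int64)
    target = target.ravel().astype(np.int64)
    # Confusion matrix: rows index the ground truth, columns the prediction.
    cm = np.bincount(target * n + pred, minlength=n * n).reshape(n, n)
    tn, fp, fn, tp = cm[0, 0], cm[0, 1], cm[1, 0], cm[1, 1]
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    # Per-class IoU_i = TP_i / (TP_i + FP_i + FN_i); the diagonal holds TP_i.
    inter = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - inter
    return {
        "accuracy": (tp + tn) / cm.sum(),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "miou": float(np.mean(inter / union)),
    }


if __name__ == "__main__":
    gt = np.array([[1, 1, 0], [0, 1, 0]])
    pr = np.array([[1, 0, 0], [1, 1, 0]])
    print(cloud_metrics(pr, gt))
```

If a mask contains no predicted or true cloud pixels, some denominators become zero and NumPy returns `nan` with a warning. A production version would need to handle that case explicitly.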

4.2. Implementation Details

Our model is implemented in PyTorch (Python 3.9) and trained on an RTX 3090 GPU. We use the AdamW optimizer with a weight decay of 0.01. The learning rate rises during a linear warmup over the first 5 epochs to its initial value and then follows a cosine decay over 100 epochs to a minimum learning rate. Training uses cross-entropy loss with label smoothing and a batch size of 16, which is limited by GPU memory. Data augmentation consists of random horizontal flipping (p = 0.5) and random rotation. These hyperparameters are determined by grid search on the validation set.
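The learning-rate schedule and the smoothed loss can be sketched as follows. This is an illustrative sketch under stated assumptions. The warmup occupies the first 5 of 100 total epochs, and the cosine phase spans the remaining 95. The base and minimum learning rates and the smoothing factor `eps` are placeholders, because their values are not given in this paragraph. The smoothed target (1 − ε)·one-hot + ε/K follows the common convention, the same one used by the `label_smoothing` argument of PyTorch’s `CrossEntropyLoss`. The function names are hypothetical.

```python
import math

import numpy as np


def learning_rate(epoch: int, base_lr: float, min_lr: float,
                  warmup_epochs: int = 5, total_epochs: int = 100) -> float:
    """Linear warmup to base_lr, then cosine decay that reaches min_lr at total_epochs."""
    if epoch < warmup_epochs:
        # Ramp linearly so that the last warmup epoch uses base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    # Fraction of the post-warmup phase completed, clamped to [0, 1].
    t = min((epoch - warmup_epochs) / (total_epochs - warmup_epochs), 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * t))


def smoothed_cross_entropy(logits: np.ndarray, labels: np.ndarray, eps: float) -> float:
    """Mean cross-entropy against label-smoothed targets (1 - eps) * one_hot + eps / K."""
    k = logits.shape[-1]
    # Numerically stable log-softmax over the class axis.
    shifted = logits.astype(np.float64) - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Spread eps uniformly over all K classes, then add the remaining mass to the true class.
    targets = np.full_like(log_probs, eps / k)
    targets[np.arange(len(labels)), labels] += 1.0 - eps
    return float(-(targets * log_probs).sum(axis=-1).mean())
```

In a PyTorch training loop, the same curve can be obtained with `torch.optim.lr_scheduler.LambdaLR`: the lambda returns `learning_rate(epoch, base_lr, min_lr) / base_lr`, because that scheduler multiplies the optimizer’s initial learning rate by the returned factor.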

4.3. Main Properties

Contribution of Multimodal Data to Network Performance. To analyze how multimodal data affect network performance, we design a baseline model, Cloud_3, which uses only the three visible bands (blue, green, and red) as the standard input of a single-modal cloud detection network. Cloud_4 adds the near-infrared (NIR) band and thus uses the complete VNIR (visible and near-infrared) bands as input. Cloud_10 further adds the six VRE/SWIR bands, and Cloud_13 adds the three Ca/WV/Cir bands, so that all 13 bands are used. In these models, the bands are simply concatenated along the channel dimension without any fusion, which is the input scheme of most current multimodal cloud detection networks.

Experimental results on the WHUS2-CD dataset show clear performance differences among the four models, as listed in Table 2.

Table 2.

Comparison of evaluation metrics of different baseline models on the WHUS2-CD dataset.

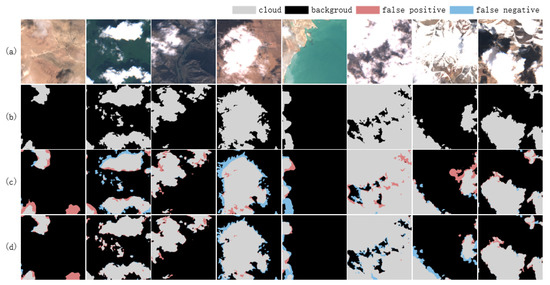

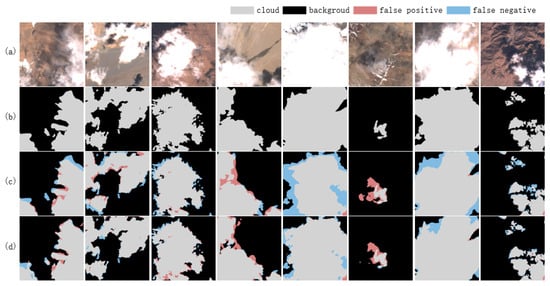

As the input expands from the three visible bands to all thirteen bands, the cloud detection model shows progressive improvement in accuracy, precision, recall, F1-score, and MIoU. Adding the NIR band (from Cloud_3 to Cloud_4) yields a significant improvement, which highlights the critical role of the NIR band in cloud detection, as shown in Figure 8. Adding the six VRE/SWIR bands (from Cloud_4 to Cloud_10) brings a further, though smaller, gain. In contrast, adding the three Ca/WV/Cir bands (from Cloud_10 to Cloud_13) yields only marginal gains. Nevertheless, Cloud_13 achieves the highest values on all evaluation metrics. This suggests that adding bands continues to improve network performance, albeit with diminishing marginal returns. Furthermore, recall improves steadily as bands are added, indicating that integrating multimodal data effectively reduces missed detections.

Figure 8.

The important role of NIR band in cloud detection. (a) Image, (b) ground truth, (c) Cloud_3, and (d) Cloud_4.

Contribution of multimodal fusion to network performance. To investigate the specific impact of multimodal fusion on network performance, we introduce the Cloud_(3,1) model. Cloud_(3,1) uses the same VNIR bands as Cloud_4 but treats the visible bands and the NIR band as two distinct modal inputs, in order to exploit the complementarity between modalities. Cloud_(3,1,6) extends Cloud_(3,1) with the six VRE/SWIR bands as a third modality, which provides broader spectral information. Cloud_(3,1,6,3) further adds the three Ca/WV/Cir bands as a fourth modality. With all 13 bands as input, this model aims to further improve the expressiveness and generalization capability of multimodal fusion through complete spectral coverage.
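A small NumPy sketch makes the two input schemes concrete: the Cloud_n baselines of the previous experiment and the Cloud_(3,1,...) fusion variants. The configuration names follow the text. The band-name lists are taken from the Sentinel-2 band specification and assume all bands have already been resampled to 10 m. The helper functions are hypothetical and only prepare inputs; they do not implement the fusion module itself.

```python
from typing import Dict, List

import numpy as np

# Sentinel-2 band names per group (all assumed already resampled to 10 m).
VISIBLE = ["B2", "B3", "B4"]
NIR = ["B8"]
VRE_SWIR = ["B5", "B6", "B7", "B8A", "B11", "B12"]
CA_WV_CIR = ["B1", "B9", "B10"]

# Concatenation baselines: one flat band list per model.
CONCAT_CONFIGS = {
    "Cloud_3": VISIBLE,
    "Cloud_4": VISIBLE + NIR,
    "Cloud_10": VISIBLE + NIR + VRE_SWIR,
    "Cloud_13": VISIBLE + NIR + VRE_SWIR + CA_WV_CIR,
}

# Multimodal fusion variants: one band list per modality.
FUSION_CONFIGS = {
    "Cloud_(3,1)": [VISIBLE, NIR],
    "Cloud_(3,1,6)": [VISIBLE, NIR, VRE_SWIR],
    "Cloud_(3,1,6,3)": [VISIBLE, NIR, VRE_SWIR, CA_WV_CIR],
}


def concat_input(bands: Dict[str, np.ndarray], model: str) -> np.ndarray:
    """Single (C, H, W) array: bands stacked along the channel axis, no fusion."""
    return np.stack([bands[b] for b in CONCAT_CONFIGS[model]], axis=0)


def modality_inputs(bands: Dict[str, np.ndarray], model: str) -> List[np.ndarray]:
    """One (C_m, H, W) array per modality, to be handled by a fusion module."""
    return [np.stack([bands[b] for b in group], axis=0) for group in FUSION_CONFIGS[model]]
```

Concatenating the two Cloud_(3,1) arrays reproduces the Cloud_4 input exactly. The two models therefore see identical data and differ only in whether the modality structure is kept, which is what the comparison isolates.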

As shown in Table 3, the models with multimodal fusion (Cloud_(3,1), Cloud_(3,1,6), and Cloud_(3,1,6,3)) outperform the single-modal models that directly concatenate multiple bands across the evaluation metrics. Notably, compared with Cloud_4, Cloud_(3,1) raises the F1-score from 96.50% to 96.90% and the MIoU from 93.38% to 94.09%. Figure 9 illustrates this improvement in cloud detection. Because Cloud_4 and Cloud_(3,1) use exactly the same four bands, this result indicates that the gain comes from the fusion itself. Treating the visible and near-infrared bands as independent modal inputs and fusing them effectively exploits their complementarity and thereby improves performance.

Table 3.

The evaluation metrics of different multimodal models on the WHUS2-CD dataset.

Figure 9.

Important role of multimodal fusion module in cloud detection. (a) Image, (b) ground truth, (c) Cloud_4, and (d) Cloud_(3,1).

Cloud_(3,1,6,3) achieves the highest values on all evaluation metrics. This further confirms that multimodal fusion can exploit richer spectral information and significantly enhance the model’s representational and generalization abilities. In contrast, directly concatenating multiple bands may fail to capture the latent relationships between modalities. The fusion models perform particularly well in recall, which shows that multimodal fusion improves the detection of cloud targets by reducing missed detections. On the more complex WHUS2-CD+ dataset, Cloud_(3,1,6,3) still achieves the highest values on all evaluation metrics, as shown in Table 4.

Table 4.

The evaluation metrics of different multimodal models on the WHUS2-CD+ dataset.

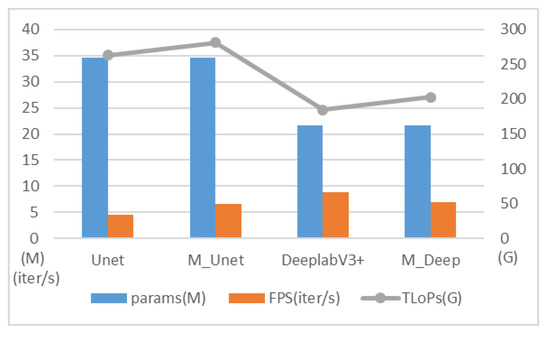

Contribution of the unified multimodal fusion module to the network performance. To evaluate the impact of the proposed unified multimodal fusion module, we deploy it on two classic segmentation models, UNet [56] and DeepLabV3+ [57]. The baseline UNet and DeepLabV3+ take the 13 concatenated spectral bands directly as input. In contrast, M_UNet and M_Deep follow three steps. They (1) divide the 13 bands into four modality groups according to their spectral characteristics, (2) process these groups with our unified multimodal fusion module for coordinated feature extraction and cross-modal fusion, and (3) feed the fused features into the original network architectures, adjusting only the number of input channels. All subsequent layers are left unchanged. As shown in Table 5, integrating the module consistently improves the key evaluation metrics for both UNet and DeepLabV3+. This demonstrates its ability to integrate multimodal information effectively and enhance segmentation performance.

Table 5.

Comparison of evaluation metrics of different multimodal models on the WHUS2-CD dataset.

For UNet, the improvement in precision is particularly pronounced, indicating that the module enhances the model’s ability to identify fine-grained details. For DeepLabV3+, the improvement is more modest. Even so, the consistent gains across both models highlight the module’s adaptability to different architectures and its effectiveness in handling multimodal data.

Figure 10 compares the efficiency of the models. Compared with UNet, M_UNet shows only a limited increase in computational complexity and parameter count, yet it achieves a significant improvement in FPS. This indicates that the multimodal fusion module improves the inference speed of the UNet architecture. UNet itself retains rich detail through its skip connections and performs well in tasks that demand high recall. The multimodal fusion module further enhances the feature extraction of M_UNet, improving both accuracy and MIoU. It also increases inference efficiency by integrating features compactly and reducing redundant computation.

Figure 10.

Comparison of the impact of unified multimodal fusion modules on network efficiency.

DeepLabV3+ achieves efficient feature extraction through atrous convolution and the ASPP module, which makes it suitable for processing high-resolution images. Integrating the multimodal fusion module further strengthens its feature integration and improves both recall and MIoU. However, M_Deep adds computation to the lightweight design of DeepLabV3+, which lowers its inference speed.

Ablation study. We establish a baseline that feeds the four modal inputs (13 bands in total) to the backbone network. We then add modules one at a time to verify the contribution of each, as detailed in Table 6. Our conclusions are as follows: (1) The baseline model processes the 13-band multimodal data with the backbone alone. Although it already performs well, its recall and MIoU leave room for improvement, which suggests limitations in its feature extraction and fusion capabilities. (2) Adding the expert module (EM) to the baseline improves accuracy, precision, F1-score, and MIoU. This indicates that the EM effectively extracts features from multimodal data and thereby improves classification accuracy and segmentation performance. However, recall declines slightly, from 96.33% to 96.26%, possibly because some information is lost during EM feature extraction. (3) Further adding the Adaptive Fusion Module (AFM) to the EM-enhanced baseline yields the best values on all metrics. In particular, recall increases from 96.26% to 96.58%. These results demonstrate that the AFM effectively merges multimodal features and compensates for the EM’s shortcomings in extracting detailed information. Consequently, a better balance between precision and recall is achieved, further improving overall performance.

Table 6.

Evaluation of the proposed unified multimodal fusion module with different settings on the WHUS2-CD dataset.

Our model achieves competitive accuracy with 69.814 M parameters and 271.599 G FLOPs, and it requires 291.336 MB of memory. On an NVIDIA RTX 3090 GPU, it processes images at 4.58 FPS (218.4 ms per inference). This makes it suitable for offline batch processing, such as remote sensing image analysis. Future work will focus on improving the model’s efficiency through hardware-software co-design so that it becomes viable for large-scale real-time applications.
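Throughput figures such as these are typically obtained by timing repeated forward passes after a few warm-up runs. The sketch below is a generic, framework-agnostic harness, not the authors’ benchmarking code, and `measure_throughput` and its defaults are hypothetical. When timing a PyTorch model on a GPU, the `infer` callable should end with `torch.cuda.synchronize()`. CUDA kernels launch asynchronously, so without that call the timer would stop before the work finishes.

```python
import time
from typing import Callable, Tuple


def measure_throughput(infer: Callable[[], object], warmup: int = 5,
                       runs: int = 20) -> Tuple[float, float]:
    """Return (mean latency in ms, frames per second) for a zero-argument inference call."""
    for _ in range(warmup):
        infer()  # discard warm-up runs (lazy initialisation, caching, clock ramp-up)
    start = time.perf_counter()
    for _ in range(runs):
        infer()
    elapsed_s = time.perf_counter() - start
    latency_ms = 1000.0 * elapsed_s / runs
    return latency_ms, 1000.0 / latency_ms


if __name__ == "__main__":
    # Stand-in workload; replace with a call that runs one model forward pass.
    latency, fps = measure_throughput(lambda: sum(range(100_000)))
    print(f"{latency:.3f} ms per call, {fps:.1f} calls per second")
```

FPS and per-image latency are reciprocal. For example, 1000 / 218.4 ms ≈ 4.58 FPS, which is consistent with the figures reported above.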

In conclusion, the ablation results highlight the significant contribution of the proposed unified multimodal fusion module (EM + AFM) to model performance. They also clearly validate its pivotal role in the feature extraction and fusion of multispectral data.

4.4. Comparisons

To comprehensively evaluate the proposed model on cloud detection, we use all thirteen spectral bands of the WHUS2-CD dataset as network input. We compare it with the classical semantic segmentation frameworks UNet [56] and DeepLabV3+ [57]. We also compare it with state-of-the-art methods in the field, namely AFMUnet [12], CSDFormer [39], and TransGA-Net [48]. These comparative models represent distinct architectural paradigms: AFMUnet is based on a convolutional neural network (CNN), CSDFormer incorporates the Transformer architecture, and TransGA-Net adopts a hybrid CNN–Transformer design. Together they provide a comprehensive benchmark across architectural paradigms.

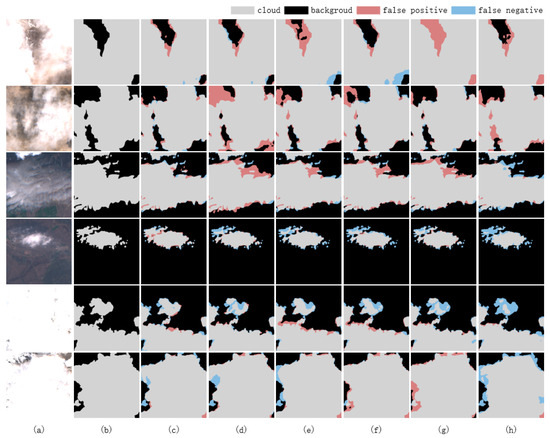

On the WHUS2-CD dataset, the proposed model achieves an overall accuracy of 98.91%, a recall of 96.58%, an F1-score of 96.99%, and an MIoU of 94.26%, as detailed in Table 7. These metrics exceed those of the comparison models, which validates the efficacy of exploiting multispectral information for cloud detection. Figure 11 presents qualitative comparisons under various land covers.

Table 7.

The evaluation metrics of different models on the WHUS2-CD dataset.

Figure 11.

Comparison of cloud detection results using various methods under various land covers on the WHUS2-CD dataset. (a) Image, (b) ground truth, (c) ours, (d) Unet, (e) deeplabv3+, (f) AFMUnet, (g) CSDFormer, and (h) TransGA-Net.

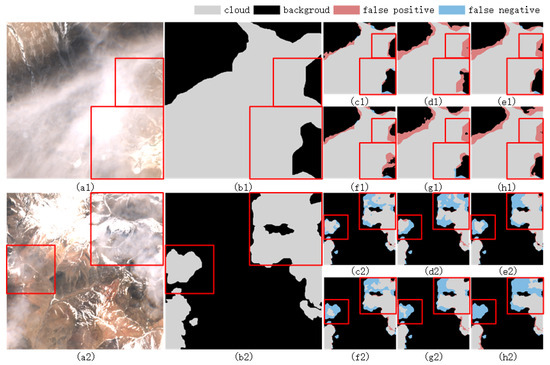

To further challenge the model, we use the WHUS2-CD+ dataset, which augments WHUS2-CD with four additional images of snow/ice scenes. As shown in Table 8, the proposed model achieves an overall accuracy of 98.65%, surpassing UNet (97.95%), DeepLabV3+ (98.40%), AFMUnet (98.57%), CSDFormer (98.49%), and TransGA-Net (98.25%). This result underscores the model’s robustness in complex scenes, particularly in discriminating clouds from snow/ice. Specifically, the proposed model reaches a precision of 97.42%, higher than that of the other models, which indicates a low false detection rate when distinguishing clouds from snow/ice. Its recall of 93.96% is lower than UNet’s 96.04%. Even so, the combination of high precision and competitive recall shows that the model maintains strong detection capability while minimizing false detections. Notably, its F1-score of 95.61% is the highest among all compared models, which further affirms its overall performance in complex scenes. Its MIoU of 91.83% also exceeds that of the other models, indicating superior pixel-level segmentation accuracy. Figure 12 shows a typical complex scene in which snow coexists with overlying thin clouds. In this scene, the proposed model effectively distinguishes cloud from snow/ice, preserves details, and produces clear boundaries.

Table 8.

The evaluation metrics of different models on the WHUS2-CD+ dataset.

Figure 12.

Comparison of cloud detection results using various methods in a typical complex scene with coexisting snow under thin clouds on the WHUS2-CD+ dataset. (a1,a2) Image, (b1,b2) ground truth, (c1,c2) ours, (d1,d2) Unet, (e1,e2) deeplabv3+, (f1,f2) AFMUnet, (g1,g2) CSDFormer, and (h1,h2) TransGA-Net.

The proposed model consistently outperforms models from all three architectural paradigms. This suggests that its design effectively integrates multi-spectral and multi-scale information and thereby enhances cloud detection performance. These findings show that the model performs well on both the WHUS2-CD and WHUS2-CD+ datasets, particularly in complex scenes that require discriminating clouds from snow/ice. We attribute this performance to its full use of multispectral information, its adaptability to multi-resolution data, and its robustness in complex scenes. These attributes make it a promising candidate for a wide range of remote sensing cloud detection applications.

5. Discussion

Advantage. The unified multimodal fusion module introduced in this paper handles multi-scale features, accommodates inputs of diverse resolutions, and enhances the model’s generalization ability. It effectively integrates multimodal information by exploiting the spectral characteristics of the various bands, and it is particularly effective at distinguishing clouds from snow/ice in complex scenes. By leveraging multimodal data, the model surpasses the comparison models across the evaluation metrics. It also shows substantial potential for practical applications, notably in differentiating intricate land cover types.

Disadvantage. The model’s performance depends heavily on input data quality, as noise or missing data can degrade detection accuracy. Additionally, processing multi-spectral, multi-resolution, and multimodal data increases computational complexity, especially for large-scale remote sensing tasks, requiring substantial resources. While the model excels in distinguishing clouds from snow/ice, challenges remain in extreme scenarios, such as thin cloud cover or highly similar spectral signatures, leading to potential false positives or missed detections. These limitations suggest key areas for future research: robust preprocessing for noisy data, computational efficiency optimization, enhanced edge-case detection through improved features or physical models, and expanded training datasets for better generalization.

6. Conclusions

In this paper, we propose a multimodal data fusion method that addresses the challenge of designing network architectures for a variable number of modalities. Unlike existing methods, it requires no structural modification of the network architecture when new modalities are introduced. This significantly reduces incremental computational cost and improves overall efficiency. In addition, the proposed multimodal data fusion module generalizes well and can be integrated into other network architectures in a plug-and-play manner, which greatly enhances its practicality and flexibility.

Based on this method, we further construct a multimodal cloud detection model, M2Cloud. Through the deep integration of multimodal data, we achieve excellent performance on the public multimodal datasets WHUS2-CD and WHUS2-CD+, reaching or even surpassing the current state of the art (SOTA). The experimental results demonstrate that M2Cloud performs exceptionally well in the unified multimodal cloud detection task, which not only verifies its effectiveness but also offers a feasible reference method for the construction of similar models. The research in this paper provides new ideas and technical support for the field of multimodal data fusion and cloud detection, and holds important theoretical significance and application value.

However, real-world deployment may require additional optimization for resource-constrained edge devices—a direction we plan to explore in future work. While our current cosine-based identifiers work well for the sensor configurations in this study, extending them to novel sensor types requires further investigation. Important directions for future work include developing adaptive methods for new sensor types, incorporating atmospheric physical models to better handle edge cases, and employing physics-guided learning to enhance performance on difficult detection scenarios such as thin cloud layers. Emerging fusion paradigms also warrant investigation: graph-based approaches for the explicit modeling of spectral–spatial cloud relationships, and multimodal transformers for cross-sensor feature attention. These may particularly benefit edge-case detection.

Author Contributions

Conceptualization, methodology, writing—original draft preparation, writing—review and editing, funding acquisition, Y.M.; software, validation, W.Z.; project administration, P.C.; resources, W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 62261038.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Aybar, C.; Mateo-García, G.; Acciarini, G.; Růžička, V.; Meoni, G.; Longépé, N.; Gómez-Chova, L. Onboard Cloud Detection and Atmospheric Correction with Efficient Deep Learning Models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 19518–19529. [Google Scholar] [CrossRef]

- Romano, F.; Cimini, D.; Di Paola, F.; Gallucci, D.; Larosa, S.; Nilo, S.T.; Ricciardelli, E.; Iisager, B.D.; Hutchison, K. The Evolution of Meteorological Satellite Cloud-Detection Methodologies for Atmospheric Parameter Retrievals. Remote Sens. 2024, 16, 2578. [Google Scholar] [CrossRef]

- Zhao, Z.; Zhang, F.; Wu, Q.; Li, Z.; Tong, X.; Li, J.; Han, W. Cloud Identification and Properties Retrieval of the Fengyun-4A Satellite Using a ResUnet Model. IEEE Trans. Geosci Remote 2023, 61, 4102318. [Google Scholar] [CrossRef]

- Ma, J.; Liao, Y.; Guan, L. A Cloud Detection Algorithm Based on FY-4A/GIIRS Infrared Hyperspectral Observations. Remote Sens. 2024, 16, 481. [Google Scholar] [CrossRef]

- Massetti, L.; Materassi, A.; Sabatini, F. NSKY-CD: A System for Cloud Detection Based on Night Sky Brightness and Sky Temperature. Remote Sens. 2023, 15, 3063. [Google Scholar] [CrossRef]

- Wang, M.; Wang, X.; Pi, Y.; Ke, S. Automatic Cloud Detection in Remote Sensing Imagery Using Saliency-Based Mixed Features. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5408915. [Google Scholar] [CrossRef]

- Liang, K.; Yang, G.; Zuo, Y.; Chen, J.; Sun, W.; Meng, X.; Chen, B. A Novel Method for Cloud and Cloud Shadow Detection Based on the Maximum and Minimum Values of Sentinel-2 Time Series Images. Remote Sens. 2024, 16, 1392. [Google Scholar] [CrossRef]

- Chang, H.; Fan, X.; Huo, L.; Hu, C. Improving Cloud Detection in WFV Images Onboard Chinese GF-1/6 Satellite. Remote Sens. 2023, 15, 5229. [Google Scholar] [CrossRef]

- Shang, H.; Letu, H.; Xu, R.; Wei, L.; Wu, L.; Shao, J.; Nagao, T.M.; Nakajima, T.Y.; Riedi, J.; He, J.; et al. A hybrid cloud detection and cloud phase classification algorithm using classic threshold-based tests and extra randomized tree model. Remote Sens. Environ. 2024, 302, 113957. [Google Scholar] [CrossRef]

- Surya, S.R.; Rahiman, M.A. CSDUNet: Automatic Cloud and Shadow Detection from Satellite Images Based on Modified U-Net. J. Indian Soc. Remote Sens. 2024, 52, 1699–1715. [Google Scholar] [CrossRef]

- Li, A.; Li, X.; Ma, X. Residual Dual U-Shape Networks with Improved Skip Connections for Cloud Detection. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5000205. [Google Scholar] [CrossRef]

- Du, W.; Fan, Z.; Yan, Y.; Yu, R.; Liu, J. AFMUNet: Attention Feature Fusion Network Based on a U-Shaped Structure for Cloud and Cloud Shadow Detection. Remote Sens. 2024, 16, 1574. [Google Scholar] [CrossRef]

- Zhou, X.; Xie, X.; Huang, H.; Shao, Z.; Huang, X. WodNet: Weak Object Discrimination Network for Cloud Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5627020. [Google Scholar] [CrossRef]

- Dong, J.; Wang, Y.; Yang, Y.; Yang, M.; Chen, J. MCDNet: Multilevel cloud detection network for remote sensing images based on dual-perspective change-guided and multi-scale feature fusion. Int. J. Appl. Earth Obs. 2024, 129, 103820. [Google Scholar] [CrossRef]

- Zhao, C.; Zhang, X.; Kuang, N.; Luo, H.; Zhong, S.; Fan, J. Boundary-Aware Bilateral Fusion Network for Cloud Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5403014. [Google Scholar] [CrossRef]

- Tan, H.; Sun, S.; Cheng, T.; Shu, X. Transformer-Based Cloud Detection Method for High-Resolution Remote Sensing Imagery. Comput. Mater. Contin. 2024, 80, 661–678. [Google Scholar] [CrossRef]

- Gu, G.; Weng, L.; Xia, M.; Hu, K.; Lin, H. Multipath Multiscale Attention Network for Cloud and Cloud Shadow Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5404215. [Google Scholar] [CrossRef]

- Wang, L.; Li, R.; Duan, C.; Zhang, C.; Meng, X.; Fang, S. A Novel Transformer Based Semantic Segmentation Scheme for Fine-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6506105. [Google Scholar] [CrossRef]

- Zhang, J.; Song, L.; Wang, Y.; Wu, J.; Li, Y. Attention Mechanism with Spatial Spectrum Dense Connection and Context Dynamic Convolution for Cloud Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5408211. [Google Scholar] [CrossRef]

- Chen, L.; Pan, J.; Zhang, Z. A novel cloud detection method based on segmentation prior and multiple features for Sentinel-2 images. Int. J. Remote Sens. 2023, 44, 5101–5120. [Google Scholar] [CrossRef]

- Wu, X.; Shi, Z.; Zou, Z. A geographic information-driven method and a new large scale dataset for remote sensing cloud/snow detection. ISPRS J. Photogramm. 2021, 174, 87–104. [Google Scholar] [CrossRef]

- Johnston, T.; Young, S.R.; Hughes, D.; Patton, R.M.; White, D. Optimizing convolutional neuralnetworks for cloud detection. In Proceedings of the Machine Learning on HPC Environments, Denver, CO, USA, 12–17 November 2017. [Google Scholar]

- Zhang, C.; Weng, L.; Ding, L.; Xia, M.; Lin, H. CRSNet: Cloud and Cloud Shadow Refinement Segmentation Networks for Remote Sensing Imagery. Remote Sens. 2023, 15, 1664. [Google Scholar] [CrossRef]

- He, M.; Zhang, J. Radiation Feature Fusion Dual-Attention Cloud Segmentation Network. Remote Sens. 2024, 16, 2025. [Google Scholar] [CrossRef]

- Qian, J.; Ci, J.; Tan, H.; Xu, W.; Jiao, Y.; Chen, P. Cloud Detection Method Based on Improved DeeplabV3+ Remote Sensing Image. IEEE Access 2024, 12, 9229–9242. [Google Scholar] [CrossRef]

- Du, X.; Wu, H. Gated aggregation network for cloud detection in remote sensing image. Vis. Comput. 2023, 40, 2517–2536. [Google Scholar] [CrossRef]

- Wang, J.; Li, Y.; Fan, X.; Zhou, X.; Wu, M. MRFA-Net: Multi-Scale Receptive Feature Aggregation Network for Cloud and Shadow Detection. Remote Sens. 2024, 16, 1456. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021. [Google Scholar]

- Singh, R.; Biswas, M.; Pal, M. A transformer-based cloud detection approach using Sentinel 2 imageries. Int. J. Remote Sens. 2023, 44, 3194–3208. [Google Scholar] [CrossRef]

- Lu, C.; Xia, M.; Qian, M.; Chen, B. Dual-Branch Network for Cloud and Cloud Shadow Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5410012. [Google Scholar] [CrossRef]

- Gu, G.; Wang, Z.; Weng, L.; Lin, H.; Zhao, Z.; Zhao, L. Attention Guide Axial Sharing Mixed Attention (AGASMA) Network for Cloud Segmentation and Cloud Shadow Segmentation. Remote Sens. 2024, 16, 2435. [Google Scholar] [CrossRef]

- Gu, H.; Gu, G.; Liu, Y.; Lin, H.; Xu, Y. Multi-Branch Attention Fusion Network for Cloud and Cloud Shadow Segmentation. Remote Sens. 2024, 16, 2308. [Google Scholar] [CrossRef]

- Yao, X.; Guo, Q.; Li, A.; Shi, L. Optical Remote Sensing Cloud Detection Based on Random Forest Only Using the Visible Light and Near-Infrared Image Bands. Eur. J. Remote Sens. 2022, 55, 150–167. [Google Scholar] [CrossRef]

- Liu, Y.; Wang, W.; Li, Q.; Min, M.; Yao, Z. DCNet: A Deformable Convolutional Cloud Detection Network for Remote Sensing Imagery. IEEE Geosci. Remote Sens. Lett. 2021, 19, 8013305. [Google Scholar] [CrossRef]

- Gong, C.; Long, T.; Yin, R.; Jiao, W.; Wang, G. A Hybrid Algorithm with Swin Transformer and Convolution for Cloud Detection. Remote Sens. 2023, 15, 5264. [Google Scholar] [CrossRef]

- Gong, C.; Yin, R.; Long, T.; Jiao, W.; He, G.; Wang, G. Spatial–Temporal Approach and Dataset for Enhancing Cloud Detection in Sentinel-2 Imagery: A Case Study in China. Remote Sens. 2024, 16, 973. [Google Scholar] [CrossRef]

- Zhang, H.K.; Luo, D.; Roy, D. Improved Landsat Operational Land Imager (OLI) Cloud and Shadow Detection with the Learning Attention Network Algorithm (LANA). Remote Sens. 2024, 16, 1321. [Google Scholar] [CrossRef]

- Li, J.; Wang, Q. CSDFormer: A cloud and shadow detection method for landsat images based on transformer. Int. J. Appl. Earth Obs. 2024, 129, 103799. [Google Scholar] [CrossRef]

- Li, X.; Yang, X.; Li, X.; Lu, S.; Ye, Y.; Ban, Y. GCDB-UNet: A novel robust cloud detection approach for remote sensing images. Knowl. Based Syst. 2022, 238, 107890. [Google Scholar] [CrossRef]

- Zhang, J.; Shi, X.; Wu, J.; Song, L.; Li, Y. Cloud Detection Method Based on Spatial–Spectral Features and Encoder–Decoder Feature Fusion. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5407915. [Google Scholar] [CrossRef]

- Wang, Y.; Gu, L.; Li, X.; Gao, F.; Jiang, T. Coexisting Cloud and Snow Detection Based on a Hybrid Features Network Applied to Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5405515. [Google Scholar] [CrossRef]

- Li, J.; Wu, Z.; Hu, Z.; Jian, C.; Luo, S.; Mou, L.; Zhu, X.X.; Molinier, M. A Lightweight Deep Learning-Based Cloud Detection Method for Sentinel-2A Imagery Fusing Multiscale Spectral and Spatial Features. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5401219. [Google Scholar] [CrossRef]

- Chen, G.; Xu, W.; Li, C.; Jing, W. GS—CDNet: A remote sensing image cloud detection method with geographic spatial data integration. Int. J. Remote Sens. 2024, 45, 9108–9130. [Google Scholar] [CrossRef]

- Cao, Y.; Sui, B.; Zhang, S.; Qin, H. Cloud Detection From High-Resolution Remote Sensing Images Based on Convolutional Neural Networks with Geographic Features and Contextual Information. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6003405. [Google Scholar] [CrossRef]

- Ma, N.; Sun, L.; He, Y.; Zhou, C.; Dong, C. CNN-TransNet: A Hybrid CNN-Transformer Network with Differential Feature Enhancement for Cloud Detection. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1001705. [Google Scholar] [CrossRef]

- Fan, S.; Song, T.; Jin, G.; Jin, J.; Li, Q.; Xia, X. A Lightweight Cloud and Cloud Shadow Detection Transformer with Prior-Knowledge Guidance. IEEE Geosci. Remote Sens. Lett. 2024, 21, 8003405. [Google Scholar] [CrossRef]

- Xu, K.; Wang, W.; Deng, X.; Wang, A.; Wu, B.; Jia, Z. TransGA-Net: Integration Transformer with Gradient-Aware Feature Aggregation for Accurate Cloud Detection in Remote Sensing Imagery. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6002805. [Google Scholar] [CrossRef]

- Aybar, C.; Bautista, L.; Montero, D.; Contreras, J.; Ayala, D.; Prudencio, F.; Loja, J.; Ysuhuaylas, L.; Herrera, F.; Gonzales, K.; et al. CloudSEN12+: The largest dataset of expert-labeled pixels for cloud and cloud shadow detection in Sentinel-2. Data Brief 2024, 56, 110852. [Google Scholar] [CrossRef]

- Aybar, C.; Ysuhuaylas, L.; Loja, J.; Gonzales, K.; Herrera, F.; Bautista, L.; Yali, R.; Flores, A.; Diaz, L.; Cuenca, N.; et al. CloudSEN12, a global dataset for semantic understanding of cloud and cloud shadow in Sentinel-2. Sci. Data 2022, 9, 782.

- Li, Z.; Shen, H.; Li, H.; Xia, G.; Gamba, P.; Zhang, L. Multi-feature combined cloud and cloud shadow detection in GaoFen-1 wide field of view imagery. Remote Sens. Environ. 2017, 191, 342–358.

- Mohajerani, S.; Saeedi, P. Cloud-Net: An end-to-end cloud detection algorithm for Landsat 8 imagery. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1029–1032.

- Mohajerani, S.; Saeedi, P. Cloud and cloud shadow segmentation for remote sensing imagery via filtered jaccard loss function and parametric augmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4254–4266.

- Hughes, M.J.; Kennedy, R. High-quality cloud masking of Landsat 8 imagery using convolutional neural networks. Remote Sens. 2019, 11, 2591.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).