Abstract

This study addresses the challenges of real-time spectroscopic sensing in industrial applications, where external factors such as temperature fluctuations, pressure variations, and particle size distribution significantly affect measurement accuracy. Conventional quantitative analytical methods often neglect these dynamic influences, leading to erroneous concentration estimates. To overcome these limitations, we propose an integrated modeling framework that combines a discrete-time process model with a physics-based spectroscopic sensor model, explicitly accounting for the dynamic properties of the system. A key contribution of this work is the development and application of an Adaptive Kalman Filter (AKF) that systematically corrects measurement distortions caused by external disturbances. Unlike conventional filtering techniques, the AKF jointly estimates the process states and the unknown calibration factors, adapting to changing process conditions while remaining robust to sensor noise and environmental variability. Furthermore, to address cases where full observability is not achievable, we introduce a reduced-order Adaptive Kalman Filter (rAKF), which estimates the concentrations with fewer estimated variables and therefore lower computational complexity. A comprehensive series of simulations was conducted to assess the sensitivity of the estimation to variations in the external signal type, noise levels, and initial values of parameters and states. The results show that both the AKF and the rAKF outperform conventional filtering techniques, including the Extended Kalman Filter. The proposed approaches enhance the reliability of spectroscopic sensor measurements, enabling more precise real-time estimates that can be used for monitoring and advanced process control in industrial settings.

1. Introduction

Spectroscopic sensors, such as infrared and Raman spectroscopy-based sensors, play a crucial role in both laboratory research and industrial applications, where they are extensively employed for chemical analysis, material characterization, and real-time process monitoring. These sensors provide valuable insights into molecular composition and structural properties, enabling the precise identification and quantification of chemical species in diverse environments [1,2].

In practical applications, spectroscopic measurements are influenced by various physical factors. Changes in sample properties, such as viscosity and temperature, as well as variations in the measurement environment, such as the optical path length, can introduce nonlinearities that distort the spectral response through both additive and multiplicative effects. These distortions may compromise measurement accuracy and reliability. It is therefore essential to use advanced algorithms that compensate for these unwanted influences and ensure robust data interpretation. In this contribution, we consider only temperature variations, as they are a common perturbation in the process industry (e.g., in fermentation tanks) and are easy to recreate for testing. However, the approach can be extended to account for other types of perturbations.

Several authors have proposed data-driven approaches for modeling spectral responses without assuming predefined functional dependencies on wavelength. In the context of this work, an overview by [3] examines data-driven methods designed to mitigate the impact of temperature variations on Near-Infrared (NIR) spectra. Notable algorithms include Extended Multiplicative Signal Correction [4], Local and Global Partial Least-Squares calibration strategies [5], Extended Loading Space Standardization, and Systematic Error Prediction [6]. While these data-driven approaches have demonstrated effectiveness, they rely on an informative dataset for calibration, which is typically performed offline. In addition, they lack the flexibility to integrate prior knowledge, limiting their adaptability in dynamic environments.

To overcome these limitations, we propose modeling the spectral response using physics-based spectral methods [7,8]. This approach represents the spectral response through parametric models, formulated as a linear combination of peak functions that carry physicochemical significance. These models inherently account for nonlinear effects, such as peak shifts and deformations, providing a more interpretable and adaptable framework for spectral analysis.

Following the physics-based approach, the integration of the sensor model with the process dynamic model leads to a nonlinear state-space representation in which the output equation is nonlinear and contains unknown parameters. Although Kalman filters are standard algorithms for state estimation in linear time-varying systems, the model in this application does not conform to this standard structure. However, it can be reformulated in a standard form by applying the transformation suggested in [9] for continuous-time models. This transformation results in a time-variant linear output map in which the previously unknown parameters appear linearly in the state equation, multiplying the inputs, which facilitates their estimation within a structured framework. For this structure, ref. [10] presents an approach based on two interconnected Kalman-like filters, and ref. [11] proposes one that combines a Kalman filter with a recursive least-squares (RLS) algorithm. Kalman-filter-based approaches for linear time-varying systems have been shown to offer faster convergence and more flexible tuning of the filter dynamics than adaptive observers such as those described in [12].

The convenient structure of the model obtained through state transformation comes at the cost of introducing additional state variables, resulting in an overparameterized model and increased computational effort for estimation. To address this issue, an alternative prediction-error approach has been proposed in [13]; it avoids the state transformation but leads to highly complex recursive equations for computing the sensitivity of the prediction error with respect to states and parameters.

To address the aforementioned limitations, the present contribution extends the authors’ earlier work in continuous time [14] by incorporating a discrete-time formulation, more sophisticated and realistic sensor models, and the explicit consideration of process disturbances. Moreover, it builds upon the results presented in [15] by conducting a formal observability analysis and introducing a novel reduced-order estimator that decreases the dimensionality of the system and, consequently, the computational complexity. In addition, a thorough comparative evaluation of the proposed estimators is provided, benchmarked against the standard Extended Kalman Filter.

The paper is organized as follows: Section 2 describes an integrated discrete-time model of the process dynamics and the spectroscopic information. Section 3 presents a standard Extended Kalman Filter and two versions of an Adaptive Kalman Filter for performing online calibration. It also provides a convergence analysis of the AKF that exploits the specific structure of the model to clarify the conditions that need to be fulfilled. Section 4 first illustrates the modeling approach with experimental water spectra and then presents a simulated example that addresses the problem of correcting the effect of an external variable on the measurement of a mixture of two components. It compares the performance of all filters and performs a sensitivity analysis with respect to the external signal, the noise level, and the initial values of parameters and states. Finally, the last section summarizes the conclusions and outlines future work.

2. Process and Sensor Modeling

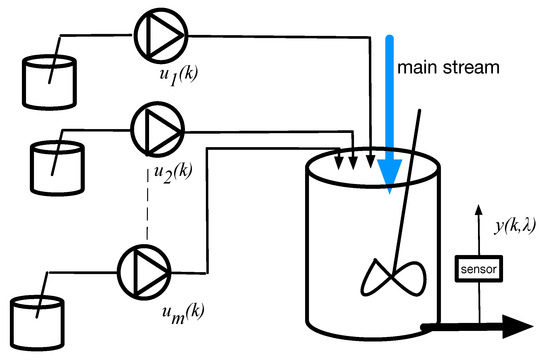

Consider a process in which the concentration of each component in the mixture is controlled independently and the mixture is monitored by a spectroscopic sensor, as shown in Figure 1.

Figure 1.

Basic mixing process.

The transport and mixing dynamics are represented by a discrete-time linear model as follows:

where is the input vector, is the state vector, and denotes the concentration vector. The state disturbance vector, , is a sequence of independent zero-mean Gaussian random vectors with covariance matrix . The dimensions of the state-space model matrices are , , and . The model assumes that is stable in the discrete-time sense, i.e., all its eigenvalues lie strictly inside the unit circle (the discrete-time counterpart of the Hurwitz condition), and it has been validated in previous research [16].
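To make the structure of Equation (1) concrete, the sketch below simulates a model of the assumed form x(k+1) = A x(k) + B u(k) + w(k), c(k) = C x(k), and checks discrete-time stability through the spectral radius of A. The symbol names and all numerical values are our own assumptions (a hypothetical two-component system with first-order lags), not the matrices used in Section 4.

```python
import numpy as np


def is_schur_stable(A):
    """Discrete-time stability: every eigenvalue lies strictly inside the unit circle."""
    return bool(np.max(np.abs(np.linalg.eigvals(A))) < 1.0)


def simulate_mixing(A, B, C, u_seq, x0, q_std=0.0, seed=0):
    """Simulate x(k+1) = A x(k) + B u(k) + w(k) and return c(k) = C x(k).

    w(k) is assumed zero-mean Gaussian with covariance q_std**2 * I."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    concentrations = []
    for u in u_seq:
        concentrations.append(C @ x)
        w = q_std * rng.standard_normal(x.shape)
        x = A @ x + B @ u + w
    return np.array(concentrations)


# Hypothetical two-component example: each concentration follows a first-order
# lag driven by the flow rate of the corresponding component.
A = np.diag([0.9, 0.8])
B = np.diag([0.1, 0.2])
C = np.eye(2)
u_seq = [np.array([1.0, 0.5])] * 200        # constant flow rates (arbitrary units)
c = simulate_mixing(A, B, C, u_seq, x0=[0.0, 0.0])
```

With constant flows and no disturbance, the concentrations settle at the steady state (I − A)^{-1} B u, which for these hypothetical matrices is [1.0, 0.5].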

The sensor output, denoted as , represents the absorbance of the mixture and can be written, as described in [15], as

where contains the cross products between the functions modeling the effect of the external signals and the peak function representing the spectral response of the sensor. The vector is the concentration vector and is the vector of unknown calibration factors. The model also considers that the absorbance of different components is influenced by an exogenous variable . We assume that the output disturbances are independent Gaussian noise processes with zero mean and covariance matrix . Notice that Equation (2) can also be written as

where and .
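The bilinear structure of the sensor model can be illustrated with a short sketch. For illustration only, we assume that each component i has a few Gaussian peaks and that the external variable enters through the functions [1, T], so that the regressor of component i is the Kronecker (cross) product of [1, T] with its peak functions; all peak positions, widths, and parameter values are hypothetical. The sketch checks that the absorbance is linear in the calibration factors when the concentrations are known, and linear in the concentrations when the calibration factors are known.

```python
import numpy as np


def gaussian(wl, center, width):
    """Gaussian peak function on a wavelength grid."""
    return np.exp(-0.5 * ((wl - center) / width) ** 2)


def regressor(wl, T, centers, widths):
    """Cross products of the external-signal functions [1, T] with the peaks.

    Returns a (len(wl), 2 * n_peaks) matrix with columns [g_1..g_m, T*g_1..T*g_m]."""
    peaks = np.column_stack([gaussian(wl, m, s) for m, s in zip(centers, widths)])
    return np.hstack([f * peaks for f in (1.0, T)])


# Hypothetical two-component sensor (values chosen for illustration only)
wl = np.linspace(850.0, 1050.0, 201)
T = 25.0
peak_sets = [([900.0, 960.0], [15.0, 20.0]), ([980.0, 1020.0], [10.0, 25.0])]
thetas = [np.array([1.0, 0.5, -0.010, 0.002]), np.array([0.8, 0.3, 0.005, -0.003])]
c = np.array([0.3, 0.7])

Phis = [regressor(wl, T, *ps) for ps in peak_sets]
y = sum(ci * Phi @ th for ci, Phi, th in zip(c, Phis, thetas))

# Linear in the stacked calibration factors when c is known ...
M_theta = np.hstack([ci * Phi for ci, Phi in zip(c, Phis)])
theta_all = np.concatenate(thetas)
# ... and linear in the concentrations when the calibration factors are known
G_c = np.column_stack([Phi @ th for Phi, th in zip(Phis, thetas)])
```

This two-sided linearity is what the EKF linearizes and what the state transformation of Section 3.2 exploits.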

Remark 1.

While the sensor model is primarily designed for spectroscopic sensors, its framework can also accommodate sensors in other applications where sensor characteristics depend on external factors and may vary over time. Drifting sensor gains and nonlinear behaviors are common in various tactical sensors. Several Kalman filters have been proposed in this field (see, for instance, [17,18]). The method proposed in this paper could potentially be applied to these contexts, although further research is required.

3. On-Line Correction

On-line correction methods estimate concentrations from spectral measurements even when these measurements are affected by exogenous variables. The on-line calibration problem can therefore be stated as follows:

Given the process and sensor models, Equations (1) and (2), and the measurements of , and , respectively, the problem is to estimate the concentrations and the calibration factors .

We model the unknown parameters as constants perturbed by a zero-mean stochastic variable, satisfying the following equation:

3.1. Extended Kalman Filter

By augmenting the state vector with the parameters, i.e., , Equations (1), (2) and (4) can be written as follows:

where

and .

The EKF update equations are

where the estimation error is

for all . The gain matrix associated with the state is updated as follows:

where the linearized measurement model is given by with

for all . In practice, the wavelength range is discretized, and the measurements at the different wavelengths are either stacked into a single measurement vector or used to update the Kalman filter sequentially, one wavelength at a time. The same applies to the AKF and its variant in the following sections.
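A minimal EKF sketch for the augmented state [x; θ] is given below. As a simplification, it assumes that the stacked-wavelength output has the bilinear form y = Σ_i c_i Φ_i θ_i with c = C x (one regressor matrix Φ_i per component, all wavelengths stacked), a random-walk model for θ, and no input acting on θ; the function and variable names are our own, not the paper's. A finite-difference check confirms the analytic Jacobian used for the linearization.

```python
import numpy as np


def output_and_jacobian(x, theta, C, Phis):
    """Bilinear output y = sum_i c_i * Phi_i @ theta_i with c = C x.

    Phis: list of (n_wl, p) regressor matrices (all wavelengths stacked).
    Returns y and its Jacobian with respect to the augmented state [x; theta]."""
    c = C @ x
    thetas = theta.reshape(len(Phis), -1)
    G = np.column_stack([Phi @ th for Phi, th in zip(Phis, thetas)])  # dy/dc
    y = G @ c
    H_x = G @ C                                                       # dy/dx
    H_theta = np.hstack([ci * Phi for ci, Phi in zip(c, Phis)])       # dy/dtheta
    return y, np.hstack([H_x, H_theta])


def ekf_step(xi, P, u, y_meas, A, B, C, Phis, Q, R):
    """One prediction/update cycle on xi = [x; theta], theta modeled as a random walk."""
    n, n_xi = A.shape[0], xi.size
    F = np.eye(n_xi)
    F[:n, :n] = A                      # x(k+1) = A x(k) + B u(k); theta(k+1) = theta(k)
    xi_pred = F @ xi
    xi_pred[:n] += B @ u
    P_pred = F @ P @ F.T + Q
    # Linearize the output around the prediction
    y_pred, H = output_and_jacobian(xi_pred[:n], xi_pred[n:], C, Phis)
    S = H @ P_pred @ H.T + R
    K = np.linalg.solve(S, H @ P_pred).T   # equals P_pred H^T S^{-1} (S, P_pred symmetric)
    xi_new = xi_pred + K @ (y_meas - y_pred)
    P_new = (np.eye(n_xi) - K @ H) @ P_pred
    return xi_new, P_new


# Hypothetical check: random regressors for two components, 50 wavelengths
rng = np.random.default_rng(0)
C = np.eye(2)
Phis = [rng.standard_normal((50, 3)) for _ in range(2)]
xi0 = rng.standard_normal(8)                    # [x (2 states); theta (2 x 3)]
y0, H0 = output_and_jacobian(xi0[:2], xi0[2:], C, Phis)
eps = 1e-6
H_fd = np.column_stack([
    (output_and_jacobian((xi0 + e)[:2], (xi0 + e)[2:], C, Phis)[0]
     - output_and_jacobian((xi0 - e)[:2], (xi0 - e)[2:], C, Phis)[0]) / (2 * eps)
    for e in eps * np.eye(8)])
xi1, P1 = ekf_step(xi0, np.eye(8), np.zeros(2), y0, np.diag([0.9, 0.8]), np.eye(2),
                   C, Phis, 1e-4 * np.eye(8), 1e-2 * np.eye(50))
```

Because the output is linear along each individual coordinate of [x; θ], the central differences match the analytic Jacobian up to rounding error.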

Remark 2.

In a stochastic framework, the matrices and represent noise covariance values, capturing the uncertainty associated with system dynamics and measurements. Conversely, in a deterministic approach, where randomness is not considered, these matrices function as tuning parameters. This perspective allows for adjusting and according to specific needs or prior knowledge, treating them as hyperparameters or regularization terms in the estimation process.

Remark 3.

The accuracy of the estimates obtained with the Extended Kalman Filter (EKF) depends on several key factors, such as the accuracy of the linearization, the observability of the system, the nature of the noise, the initial conditions, and the underlying system dynamics. Because these factors are application dependent, the specific conditions for achieving convergence vary from one application to another.

3.2. Adaptive Kalman Filter

To transform the nonlinear model into a time-variant linear model, it is necessary to define a new variable . This approach was proposed by [9] for continuous-time systems. By multiplying the state recursion in (1) by , we derive the following overparameterized time-variant linear model:

A drawback of this overparameterization is that it neglects certain dependencies between redundant variables. As a result, the initially simple noise properties become more intricate and exhibit correlations. Consequently, noise is not explicitly taken into account in the following equations.
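The lifting step can be checked numerically. The sketch below assumes that the new variable is the Kronecker product z = x ⊗ θ (one common way of collecting the products of states and parameters; the exact definition used here may differ) and verifies that z obeys a linear recursion in which θ enters linearly through an input-dependent matrix, and that the bilinear products c ⊗ θ become a linear function of z. The dimensions and values are hypothetical. The same algebra shows where the noise complication comes from: multiplying the disturbance w(k) by θ yields w(k) ⊗ θ, whose statistics depend on the unknown parameters.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m, p = 3, 2, 4                      # states, inputs, parameters (hypothetical)
A = 0.5 * rng.standard_normal((n, n))
B = rng.standard_normal((n, m))
C = rng.standard_normal((2, n))
x, u, theta = rng.standard_normal(n), rng.standard_normal(m), rng.standard_normal(p)
I_p = np.eye(p)

# Lifted variable (assumed form): z = x ⊗ theta, dimension n * p
z = np.kron(x, theta)

# Reference: propagate x with the original recursion, then form x(k+1) ⊗ theta
z_next_direct = np.kron(A @ x + B @ u, theta)

# Lifted LTV recursion: z(k+1) = (A ⊗ I_p) z(k) + Psi(k) theta,
# with Psi(k) = (B ⊗ I_p)(u(k) ⊗ I_p) depending only on the known input
A_bar = np.kron(A, I_p)
Psi_k = np.kron(B, I_p) @ np.kron(u.reshape(-1, 1), I_p)
z_next_lifted = A_bar @ z + Psi_k @ theta

# The bilinear products c ⊗ theta, with c = C x, are linear in z
C_bar = np.kron(C, I_p)
```

In this toy case the lifted state has n·p = 12 entries, compared with n + p = 7 for the augmented state of the EKF, which illustrates the overparameterization mentioned in the Introduction.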

An Adaptive Kalman Filter with distinct error dynamics for the state and parameters can be derived using the algorithm proposed by Zhang [11], resulting in the following equations:

where the estimation error is

The state gain matrix is updated as follows:

Remark 4.

Equations (24)–(26) calculate the matrices involved in determining the state gain matrix, denoted as . The matrices and are tuning parameters. Selecting their values can be challenging because, due to the model’s overparameterization, they no longer represent the noise covariances.

The gain matrix associated with the parameters update is given by the following recursive equations:

where is a forgetting factor and is an auxiliary variable defined as follows:

Remark 5.

Equations (28)–(31) calculate the matrices associated with the parameter gain matrix, , and the gain matrix related to the estimated parameter variations, , used in the state estimation equation. These equations can be interpreted as a recursive least-squares estimator with a forgetting factor [11].
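The following is a minimal sketch of a discrete-time adaptive Kalman filter of the type proposed by Zhang, written for a generic model x(k+1) = A x(k) + B u(k) + Φ(k) θ, y(k) = C x(k). It follows the commonly published form of that algorithm, but the variable names (P, Σ, K, Υ, Ω, S, Γ, λ) are our own and may not map one-to-one onto Equations (20)–(31). In this application the output matrix is time-variant, so a different C would be passed at each step; the toy example uses a constant C and noise-free data with hypothetical values.

```python
import numpy as np


def akf_step(x_hat, th_hat, P, S, Ups, A, B, u, Phi, C, y, Q, R, lam):
    """One step of a Zhang-type adaptive Kalman filter (sketch).

    Model: x(k+1) = A x(k) + B u(k) + Phi(k) theta, y(k) = C x(k).
    u and Phi are taken at the previous instant, y is the new measurement."""
    I = np.eye(A.shape[0])
    # State part: standard Kalman gain and covariance update
    P_pred = A @ P @ A.T + Q
    Sigma = C @ P_pred @ C.T + R
    K = P_pred @ C.T @ np.linalg.inv(Sigma)
    P_new = (I - K @ C) @ P_pred
    # Sensitivity of the state estimate to theta (Ups) and parameter regressor (Omega)
    Omega = C @ (A @ Ups + Phi)
    Ups_new = (I - K @ C) @ (A @ Ups + Phi)
    # Parameter part: RLS with forgetting factor lam
    Lam = np.linalg.inv(lam * Sigma + Omega @ S @ Omega.T)
    Gamma = S @ Omega.T @ Lam
    S_new = (S - S @ Omega.T @ Lam @ Omega @ S) / lam
    # Innovation-driven updates of parameters and states
    x_pred = A @ x_hat + B @ u + Phi @ th_hat
    innov = y - C @ x_pred
    th_new = th_hat + Gamma @ innov
    x_new = x_pred + K @ innov + Ups_new @ (th_new - th_hat)
    return x_new, th_new, P_new, S_new, Ups_new


# Toy noise-free example (hypothetical values): theta enters through a randomly
# varying regressor, which provides persistent excitation.
rng = np.random.default_rng(0)
A = np.array([[0.9, 0.1], [0.0, 0.5]])
B = np.zeros((2, 1))
C = np.eye(2)
theta = np.array([1.5, -0.7])
Q, R, lam = 1e-2 * np.eye(2), 1e-2 * np.eye(2), 0.98

x = np.zeros(2)
x_hat, th_hat = np.zeros(2), np.zeros(2)
P, S, Ups = np.eye(2), 100.0 * np.eye(2), np.zeros((2, 2))
for k in range(300):
    u, Phi = np.zeros(1), rng.standard_normal((2, 2))
    x = A @ x + B @ u + Phi @ theta          # true system
    y = C @ x                                 # noise-free measurement
    x_hat, th_hat, P, S, Ups = akf_step(x_hat, th_hat, P, S, Ups,
                                        A, B, u, Phi, C, y, Q, R, lam)
```

The state update contains the extra term Υ(k)[θ̂(k) − θ̂(k−1)], which propagates each parameter correction into the state estimate; this is what gives the state and parameter errors their distinct dynamics.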

3.3. Reduced Order Adaptive Kalman Filter

A reduced-order AKF (rAKF) is obtained if the original states are excluded from the extended model, i.e., contains only a function of the new state variables , . The system equations can then be written as

where

The estimates of and are then obtained using Equations (20) and (21). The original state estimate can subsequently be derived by solving the following least-squares problem:

Thus,
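If the new state variables are the products z = x ⊗ θ (an assumption made here for illustration), the least-squares problem has a closed-form solution: since z = (I_n ⊗ θ) x and (I_n ⊗ θ)ᵀ(I_n ⊗ θ) = ‖θ‖² I_n, the minimizer is x̂ = (I_n ⊗ θ̂ᵀ) ẑ / ‖θ̂‖². The sketch below implements this and compares it with a generic least-squares solve; the function name and values are hypothetical.

```python
import numpy as np


def recover_state(z_hat, theta_hat):
    """Closed-form solution of min_x ||z_hat - (I_n ⊗ theta_hat) x||^2.

    Assumes z = x ⊗ theta; requires theta_hat to be non-zero."""
    p = theta_hat.size
    Z = z_hat.reshape(-1, p)                 # row i approximates x_i * theta
    return Z @ theta_hat / (theta_hat @ theta_hat)


# Hypothetical estimates: exact products plus small estimation noise
rng = np.random.default_rng(0)
x_true = np.array([0.2, 0.5, 0.3])
theta_hat = np.array([1.0, -0.5, 2.0])
z_hat = np.kron(x_true, theta_hat) + 1e-3 * rng.standard_normal(9)

x_closed = recover_state(z_hat, theta_hat)
# Generic least squares with the explicit (I_n ⊗ theta_hat) matrix, for comparison
M = np.kron(np.eye(3), theta_hat.reshape(-1, 1))
x_lstsq = np.linalg.lstsq(M, z_hat, rcond=None)[0]
```

The division by ‖θ̂‖² shows that this recovery is ill-conditioned while θ̂ is close to zero, for instance right after a zero initialization of the parameters.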

3.4. Convergence Properties

The Adaptive Kalman Filters converge if key assumptions on the model structure and on the persistency of excitation of the input signals, i.e., and , are satisfied [11].

Assumption A1.

The pairs and are uniformly completely controllable and observable, respectively.

Given that is stable, it is always possible to choose a positive definite matrix such that the pair is uniformly completely controllable, i.e., there exist constants and such that the controllability Gramian satisfies the following:

On the other hand, if satisfies the condition that there exist constants and such that the observability Gramian satisfies the following:

then the pair is uniformly completely observable. This condition is crucial for ensuring parameter convergence, and it implies that the exogenous signal must exhibit a form of persistent excitation.
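To see why the exogenous signal must be exciting, the sketch below computes a windowed observability Gramian for a toy time-varying pair in which two constant parameters (transition matrix I) are observed through the output row [1, T(k)], a hypothetical stand-in for a sensor map that depends linearly on temperature. With a constant temperature the Gramian is singular, so the two parameters cannot be separated; with step changes it becomes positive definite. The step magnitudes are our assumption.

```python
import numpy as np


def observability_gramian(A_seq, H_seq):
    """Windowed Gramian W = sum_i Phi_i^T H_i^T H_i Phi_i for an LTV pair.

    A_seq[i] maps the state from instant i to i + 1, H_seq[i] is the output matrix
    at instant i, and Phi_i is the transition matrix from the window start to i."""
    n = H_seq[0].shape[1]
    Phi = np.eye(n)
    W = np.zeros((n, n))
    for A_i, H_i in zip(A_seq, H_seq):
        W += Phi.T @ H_i.T @ H_i @ Phi
        Phi = A_i @ Phi
    return W


N = 300
A_seq = [np.eye(2)] * N                          # constant parameters
T_const = np.full(N, 20.0)                       # constant temperature (deg C)
T_steps = 20.0 + 5.0 * (np.arange(N) // 75)      # hypothetical steps at 75, 150, 225
W_const = observability_gramian(A_seq, [np.array([[1.0, T]]) for T in T_const])
W_steps = observability_gramian(A_seq, [np.array([[1.0, T]]) for T in T_steps])
eig_const = np.linalg.eigvalsh(W_const)
eig_steps = np.linalg.eigvalsh(W_steps)
```

For the constant profile the smallest eigenvalue is zero up to rounding, whereas the stepped profile yields a strictly positive lower bound, which is the kind of constant required in the Gramian condition above.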

Remark 6.

Assumption 1 only addresses the conditions that ensure the boundedness of , , and .

Remark 7.

In Assumption 1, is interpreted as a matrix of stacked vectors, each containing the values corresponding to one wavelength in the set Λ.

Remark 8.

Note that for the full-state model of the AKF, Equation (17), the first elements of , which correspond to the state vector , are zero, and the matrix has a block-diagonal structure. This structure renders the state unobservable, as the observability Gramian then has a zero block on its diagonal. In contrast, the rAKF equations, Equation (32), lead to an observability Gramian with non-zero blocks on its diagonal.
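The effect described in Remark 8 can be reproduced on a toy example, sketched below under our own assumptions: a scalar process state x with transition a, two lifted states z with the same transition, and an output row [0, 1, T(k)] that does not involve x directly (mimicking the zero entries of the AKF output map). The Gramian of the augmented pair has an entire zero row and column for x, whereas the reduced pair, with x removed, yields a positive definite Gramian. All values are hypothetical.

```python
import numpy as np


def observability_gramian(A, H_seq):
    """Windowed Gramian sum_i (A^i)^T H_i^T H_i A^i for a constant transition A."""
    n = A.shape[0]
    Phi, W = np.eye(n), np.zeros((n, n))
    for H in H_seq:
        W += Phi.T @ H.T @ H @ Phi
        Phi = A @ Phi
    return W


N = 50
T = 20.0 + 5.0 * np.sin(2 * np.pi * np.arange(N) / 10)  # hypothetical temperature
a = 0.9                                                  # toy scalar process model

# AKF-like augmented state [x; z]: block-diagonal transition, and an output map
# with a zero entry for x (x influences y only through z)
A_full = np.diag([a, a, a])
W_full = observability_gramian(A_full, [np.array([[0.0, 1.0, Tk]]) for Tk in T])

# rAKF-like reduced state [z] only
A_red = np.diag([a, a])
W_red = observability_gramian(A_red, [np.array([[1.0, Tk]]) for Tk in T])
```

The zero row and column of the augmented Gramian correspond to the zero entries of the final AKF gain discussed in Section 4.1.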

Assumption A2.

The input signal is persistently exciting in the sense that there exists an integer and a constant such that

where and are defined by Equations (25) and (28), respectively.

Assumption 2 deals with the evolution of in (32) and imposes conditions on the input signal, . This assumption is required to ensure the boundedness of . Notice that is related to the input signal through in Equations (28) and (31).
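Persistent excitation conditions of this kind can be checked numerically on simulated or recorded signals. The sketch below assumes that the condition has the windowed form Σ_{i=k}^{k+L−1} Ωᵀ(i) Σ⁻¹(i) Ω(i) ≥ αI for all k; this is our reading, Ω and Σ are our names for the two matrices appearing in the assumption, and the exact weighting in the original condition may differ. As a hypothetical example, the regressors are built from two flow-rate signals with Σ = 1.

```python
import numpy as np


def pe_level(Omegas, Sigmas, L):
    """Worst-case excitation level over all windows of length L.

    Returns min_k lambda_min( sum_{i=k}^{k+L-1} Omega_i^T Sigma_i^{-1} Omega_i ).
    A strictly positive value alpha means the windowed inequality holds with that alpha."""
    terms = [Om.T @ np.linalg.solve(Sg, Om) for Om, Sg in zip(Omegas, Sigmas)]
    levels = []
    for k in range(len(terms) - L + 1):
        M = sum(terms[k:k + L])
        levels.append(np.linalg.eigvalsh(M).min())
    return min(levels)


# Hypothetical regressors built from two flow-rate signals, with Sigma = 1
N, L = 300, 40
k = np.arange(N)
u_var = np.column_stack([1 + 0.5 * np.sin(2 * np.pi * k / 40),
                         1 + 0.5 * np.cos(2 * np.pi * k / 40)])
u_const = np.ones((N, 2))
Sigmas = [np.eye(1)] * N
alpha_var = pe_level([row.reshape(1, 2) for row in u_var], Sigmas, L)
alpha_const = pe_level([row.reshape(1, 2) for row in u_const], Sigmas, L)
```

Every 40-sample window of the periodic inputs gives α ≈ 5, whereas constant inputs give α = 0 for any window length, because all regressors then point in the same direction.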

Remark 9.

Assumptions 1 and 2 require the input and the external variable to satisfy persistent excitation conditions, which are not always met in practice. Recent works have addressed this problem [19,20]; analyzing these approaches in the context of this application is the subject of future work.

4. Experimental and Simulation Results

This section presents both experimental and simulation-based results to illustrate and evaluate the proposed modeling and state estimation approach. First, the modeling methodology is demonstrated using experimental data. Following this, a simulated case study is introduced to explore the behavior and performance of the proposed state estimation algorithm under a broad range of operating conditions, highlighting its robustness and effectiveness.

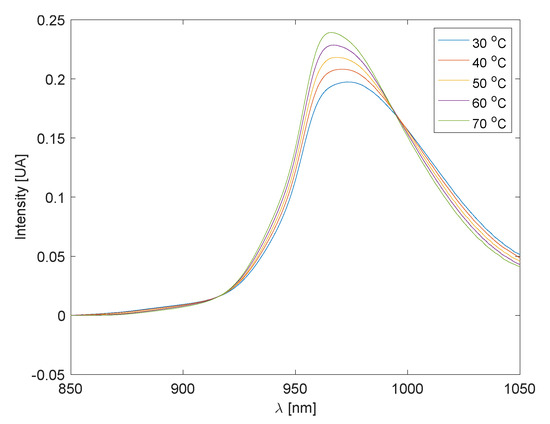

To illustrate the modeling approach using experimental data, we consider the water absorbance spectra in the wavelength range of 850 nm to 1050 nm at different temperatures, as shown in Figure 2. The dataset used for this purpose comes from [5].

Figure 2.

Water spectra for different temperatures.

Following a physics-based approach, the water spectra can be represented as a linear combination of peak functions as follows:

where the peak functions are defined in terms of Gaussian parameters, namely the center and width . A two-step approach is used to identify the model parameters and validate this structure against experimental data.

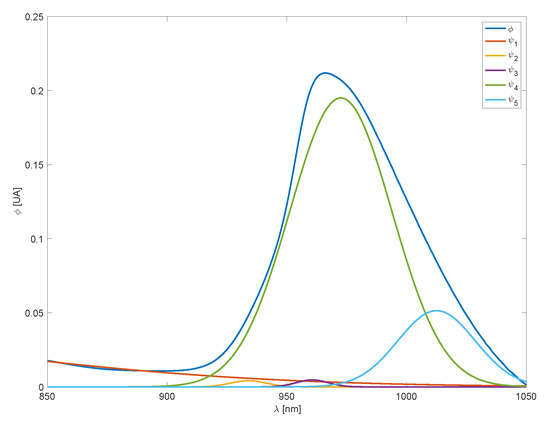

First, for a given temperature, all parameters are estimated by fitting the model to the measured spectrum through nonlinear least squares. The resulting basis functions, scaled by their respective linear coefficients, are shown in Figure 3.

Figure 3.

Water spectra at 60 °C and basis functions.

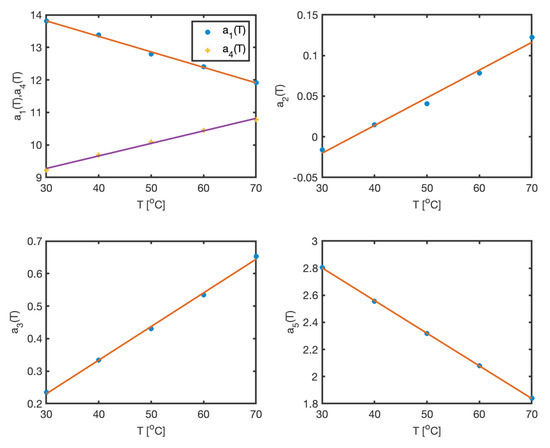

In the second step, the Gaussian parameters (, ) are kept fixed, and the linear coefficients are computed for all temperature conditions. These coefficients are then regressed against temperature. The results, depicted in Figure 4, support the assumed model structure, in this case a linear dependence on temperature. The estimated parameters and the corresponding regression results are summarized in Table 1. The maximum approximation error across the entire dataset is less than 1.

Figure 4.

Linear dependence of coefficients on temperature.

Table 1.

Parameters for water absorbance spectra model.
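A minimal sketch of the second identification step is given below on synthetic spectra; the peak centers, widths, and coefficients are hypothetical and are not the values in Table 1, and the Wülfert data are not used. With the Gaussian parameters fixed, the peak coefficients follow from linear least squares at each temperature, and a first-order polynomial in temperature is then fitted to each coefficient.

```python
import numpy as np


def gaussian_basis(wl, centers, widths):
    """Matrix whose columns are Gaussian peaks with fixed centers and widths."""
    return np.column_stack([np.exp(-0.5 * ((wl - m) / s) ** 2)
                            for m, s in zip(centers, widths)])


# Hypothetical peak parameters and temperature-linear coefficients
wl = np.linspace(850.0, 1050.0, 201)
G = gaussian_basis(wl, centers=[900.0, 960.0, 1000.0], widths=[20.0, 25.0, 30.0])
temps = np.array([30.0, 40.0, 50.0, 60.0, 70.0])
a0 = np.array([0.10, 0.40, 0.20])          # intercepts (hypothetical)
a1 = np.array([0.002, -0.001, 0.003])      # temperature slopes (hypothetical)
rng = np.random.default_rng(0)
spectra = [G @ (a0 + a1 * T) + 1e-4 * rng.standard_normal(wl.size) for T in temps]

# Step 2a: linear least squares for the peak coefficients at each temperature
coefs = np.array([np.linalg.lstsq(G, s, rcond=None)[0] for s in spectra])

# Step 2b: regress each coefficient against temperature (first-order fit);
# np.polyfit returns the highest-degree coefficients first
slopes, intercepts = np.polyfit(temps, coefs, 1)
```

Keeping the Gaussian parameters fixed turns the second step into two linear problems, so only the first step requires a nonlinear solver.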

Having validated the modeling approach on real spectral responses, we now illustrate the main ideas of the estimator with the simulated mixing of two components presented in [15], where and are the flow rates of each component, is the mixture temperature, and is the measured spectrum.

The discrete state space model, which represents the transport and the mixing of the two components, is defined by the following matrices:

The measured spectrum accounts for the absorbance spectra of each component and the effect of temperature, i.e.,

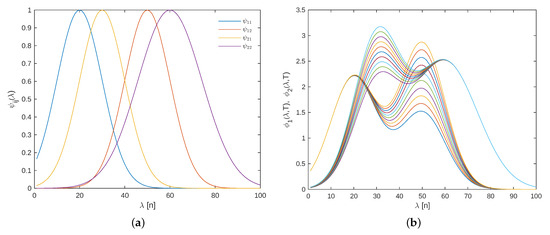

where absorbance of each component is modeled as a linear combination of two Gaussian peaks, as illustrated in Figure 5a, and described by the following parameterization:

where represents the Gaussian peaks associated with the model of each component i. Note that in this case, the external variable influences the absorbance of each component linearly, as illustrated in Figure 5b.

Figure 5.

(a) Basis spectral functions. (b) Spectral response of each component for different temperatures.

Rearranging terms, Equation (44) can be written in the form of Equation (2), where the set of parameters represents the parameters of the sensor model, which are assumed to be unknown. Their nominal values are , and the vectors are as follows:

The simulation examples initially focus on a base case to highlight the core features of the algorithms under specific conditions. Subsequently, a sensitivity analysis explores the variations in initial parameters, state conditions, and noise levels to further distinguish the algorithms’ performance under different scenarios.

4.1. Base Case

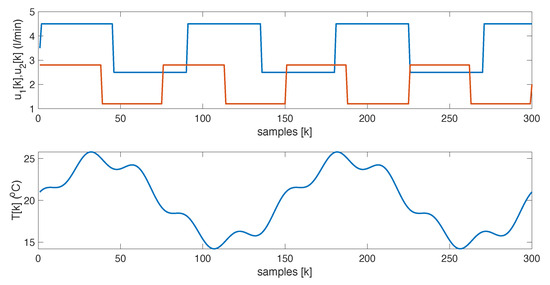

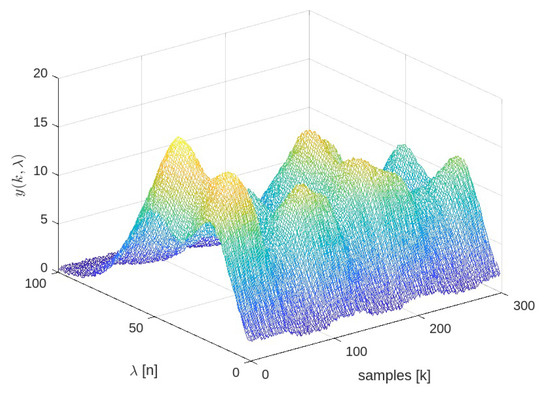

The base case considers zero-mean Gaussian disturbances in both the sensor measurements and the state equations, with variances and , respectively. It also accounts for step changes in the flow rates of each component and periodic variations in the external variable, as shown in Figure 6. The effect of these changes and the noise on the spectral response can be seen in Figure 7.

Figure 6.

Flow rates and mixture temperature.

Figure 7.

Spectral-time sensor response. The color represents the intensity of the spectral signal.

In the following simulations, for both the EKF and the AKF, the estimated parameters are initialized to zero and the states are initialized as . To illustrate the evolution of the estimated states and parameters, we consider a single realization of the state and measurement noise.

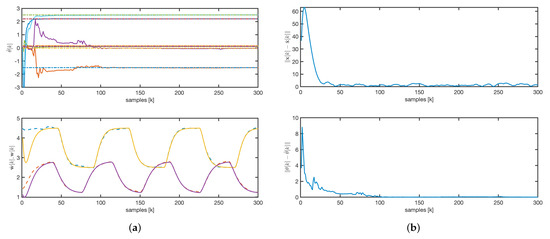

For the EKF, we consider the following parameters and initial conditions: the matrix is chosen to be a diagonal matrix, and . As illustrated in Figure 8a, the estimated parameters converge to their true values more rapidly than the state. This observation is further supported by the evolution of the state and parameter error norms shown in Figure 8b.

Figure 8.

EKF: (a) parameters and concentrations evolution. Solid line: estimated values. Dashed line: real values. (b) State and parameters error norm.

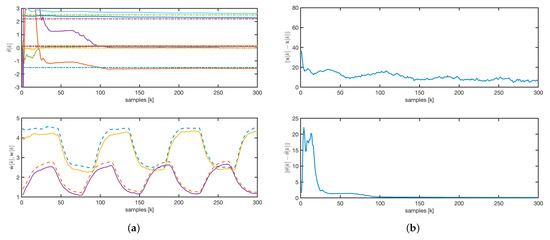

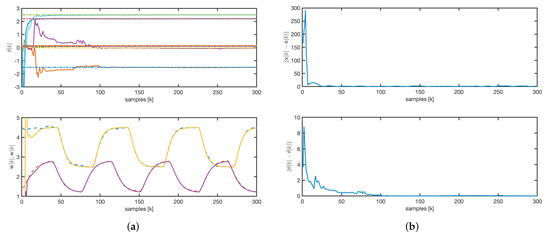

For the AKF, the matrix was chosen as a constant diagonal matrix, , and . The observer initial conditions for are set to zero. The estimated concentrations quickly converge to the real values, although some parameters take longer to stabilize, as shown in Figure 9a. The variability of the inputs, namely the flow rates and the temperature, provides the excitation required for both the estimated concentrations and the parameters to converge. Furthermore, the state error asymptotically converges to zero, as depicted in Figure 9b.

Figure 9.

AKF: (a) parameters and concentrations evolution. Solid line: estimated values. Dashed line: real values. (b) State and parameters error norm.

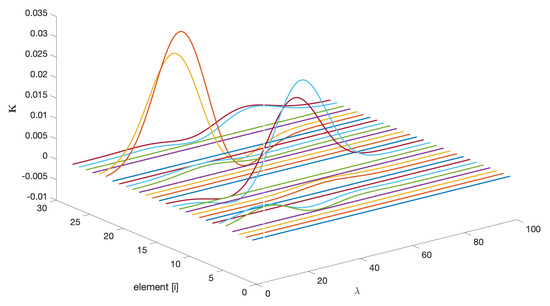

In adaptive applications, once the algorithm has converged, it is informative to analyze the structure of the final observer gain, in this case . Figure 10 leads to two important observations: first, the gains associated with the estimation of the state variables are zero; second, the wavelength dependencies are linked to the location of the basis functions (see Figure 5a). The zero gains indicate a lack of observability of the associated states, which is consistent with Remark 8.

Figure 10.

Elements of the AKF final vector gain as a function of .

The evolution of the estimated parameters for the rAKF follows a similar pattern to that of the AKF, as shown in Figure 11a. However, as illustrated in Figure 11a,b, the estimated concentrations exhibit much faster dynamics, driven by the observer design rather than the open-loop system dynamics.

Figure 11.

rAKF: (a) parameters and concentrations evolution. Solid line: estimated values. Dashed line: real values. (b) State and parameters error norm.

A comparative analysis of the dynamic responses depicted in Figure 8, Figure 9 and Figure 11 shows that both the AKF and rAKF algorithms are effective in parameter estimation. The rAKF provides faster dynamics for the estimated concentrations because these estimates are driven by the observer design rather than by the open-loop process dynamics. In contrast, the EKF takes longer to converge, with the state converging much more slowly than the parameters.

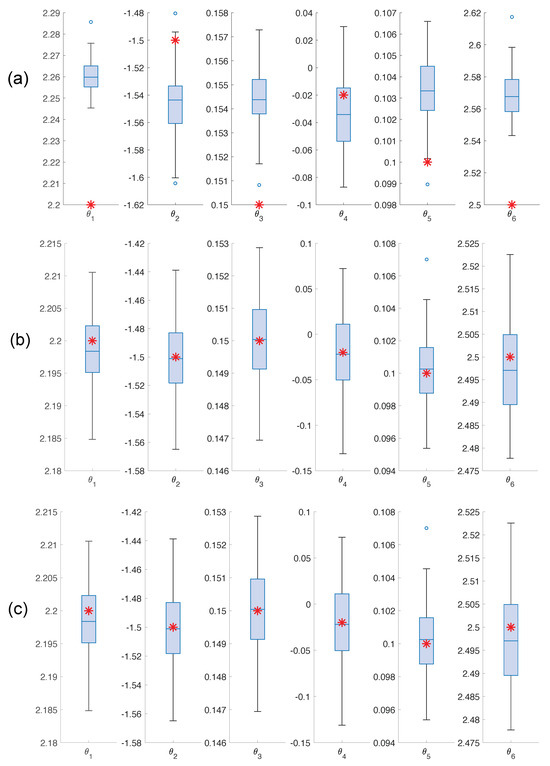

A comprehensive analysis across multiple realizations enhances the robustness and reliability of the results by capturing the variability introduced by different noise instances. Table 2 summarizes the results for one hundred noise realizations, presenting the mean values and standard deviations of the Root Mean Squared Errors (RMSEs) for both states and parameters. The results indicate that the EKF exhibits higher mean values of and than the AKF and rAKF. Since lower RMSE values indicate better performance, the AKF and rAKF outperform the EKF on these measures. Although the rAKF initially shows larger errors than the AKF, it converges faster. For detailed information about the accuracy of each estimate, refer to the boxplots of the estimated parameters in Figure 12, which show that both the AKF and rAKF provide more accurate parameter estimates than the EKF.
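The performance measures can be computed as sketched below. Because the exact definition is not restated in this section, we assume that the RMSE of one realization is the square root of the time average of the squared Euclidean error norm, and that Table 2 reports the mean and sample standard deviation of this value over the noise realizations. The callback `run` is hypothetical and stands in for one simulation of a filter; the toy run simply adds Gaussian noise to a zero trajectory.

```python
import numpy as np


def rmse(estimates, truth):
    """RMSE over time of the Euclidean estimation error (one realization).

    estimates, truth: arrays of shape (n_steps, n_variables)."""
    err = np.asarray(estimates) - np.asarray(truth)
    return np.sqrt(np.mean(np.sum(err ** 2, axis=1)))


def monte_carlo_summary(run, n_runs=100):
    """Mean and sample standard deviation of the RMSE over noise realizations.

    `run(seed)` is a hypothetical callback returning (estimates, truth)."""
    values = np.array([rmse(*run(seed)) for seed in range(n_runs)])
    return values.mean(), values.std(ddof=1)


def toy_run(seed):
    """Hypothetical stand-in for one filter simulation: truth plus noise (std 0.1)."""
    rng = np.random.default_rng(seed)
    truth = np.zeros((50, 2))
    return truth + 0.1 * rng.standard_normal(truth.shape), truth


mean_rmse, std_rmse = monte_carlo_summary(toy_run)
```

For the toy run the mean RMSE is close to 0.1·√2 ≈ 0.141, since each of the two error components has a standard deviation of 0.1.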

Table 2.

Performance measures.

Figure 12.

Boxplot for the parameter estimates (a) EKF (b) AKF (c) rAKF. A red asterisk represents the values of the real parameters.

4.2. Sensitivity Analysis

The following simulations demonstrate the sensitivity of the estimates to variations in the external signal, initial parameters, states, and noise levels. The values of , , and are consistent with those used in the base case.

To analyze the effect of the external signal profile, two additional profiles are considered. The first consists of a constant value of 20 °C with step changes at time instants 75, 150, and 225. This profile provides less dynamic information than the one considered in the base case. The second profile is simply a constant value of 20 °C, which does not provide enough information for estimating the parameters and thus violates the persistent excitation assumption required for convergence.

Based on the results summarized in Table 3, the EKF, AKF, and rAKF exhibit distinct performance characteristics under the two signal profiles, Steps and Constant. The Adaptive Kalman Filter (AKF) consistently performs best in both scenarios, showing the lowest mean RMSE for the state and , together with the lowest variability. This indicates that the AKF provides more accurate and reliable estimates across different conditions. In contrast, the Extended Kalman Filter (EKF) exhibits the highest mean RMSE values and the greatest variability for both state variables, making it less consistent and less accurate than the AKF. The reduced-order Adaptive Kalman Filter (rAKF) performs better than the EKF but falls short of the AKF, with intermediate mean errors and variability. Specifically, while the rAKF matches the AKF in estimating under both signal profiles, it does not achieve the same accuracy for the states. Compared with the results for the dynamically richer external signal (Table 2), the EKF performs poorly in estimating both the state variables and the parameters, whereas the AKF and rAKF provide similar estimates of the state variables. As expected, all algorithms yield worse parameter estimates when the signal has fewer time variations. For the AKF and rAKF, the lack of persistent excitation mainly affects parameter estimation.

Table 3.

Performance measures for different external signal profiles.

To analyze the effect of initial conditions, two sets are considered: Initial Condition 1, where the state and parameters are zero, and Initial Condition 2, where and . The results summarized in Table 4 clearly show that the EKF is highly sensitive to the choice of initial conditions, whereas the AKF and rAKF provide consistent results regardless of initial conditions. From a practical perspective, this robustness in initialization is a significant advantage, simplifying the setup of the estimation algorithms.

Table 4.

Performance measures for different initial conditions.

Finally, Table 5 summarizes the results obtained when the noise level of the base case is increased by 50% and 100%. With a 50% increase, the AKF shows the lowest mean RMSE for the parameters with the smallest variability, indicating superior robustness to moderate noise. The rAKF follows closely, performing slightly better than the EKF in terms of both parameter and state estimation accuracy. The EKF, while reasonably effective, shows higher mean errors and variability.

Table 5.

Performance measures for different noise levels.

With a 100% increase in the noise level, all algorithms exhibit larger mean RMSEs and variability. The AKF maintains a relatively better performance, although its state error increases compared with the 50% scenario. The rAKF performs comparably to the AKF, with a slight increase in errors and variability, but still outperforms the EKF. The EKF displays the highest mean RMSEs and variability for both state variables at both noise levels. Overall, while all algorithms are affected by increased noise, the AKF and rAKF are more robust and consistent than the EKF.

5. Conclusions

This research introduces a novel discrete-time correction algorithm for spectroscopic applications based on physics-based models and an Adaptive Kalman Filter (AKF). The AKF is formulated using an extended model of the process and the spectroscopic sensor, enabling the simultaneous estimation of system states and calibration factors while accounting for the influence of external variables. Additionally, an observability analysis of the AKF led to the development of a reduced-order AKF (rAKF), designed to improve computational efficiency by reducing the number of estimated variables.

The results demonstrate that the AKF provides robust and accurate state estimation across various conditions, outperforming both the rAKF and the Extended Kalman Filter (EKF). The rAKF offers a computationally efficient alternative with faster convergence, making it suitable for scenarios where reduced complexity is necessary. In contrast, the EKF exhibits higher estimation errors and variability, confirming the advantages of the proposed approaches over conventional filtering techniques.

These findings highlight the potential of the AKF and rAKF for enhancing spectroscopic sensing, particularly in applications such as Near-Infrared (NIR) and Raman spectroscopy. However, the practical implementation of these filters requires careful consideration of the persistent excitation conditions necessary for ensuring asymptotic convergence. Since real-world applications may not always satisfy these conditions, future research should focus on developing relaxed convergence criteria to ensure stable performance even under limited excitation.

The promising performance of the proposed AKF framework suggests significant opportunities for advancing spectroscopic sensing technologies. Future studies will explore real-time laboratory validations and extend the methodology to broader sensing applications, further refining the capabilities of adaptive filtering in spectroscopic analysis.

Author Contributions

Conceptualization, D.S. and T.A.J.; methodology, J.Y.; software, D.S.; validation, D.S., T.A.J. and J.Y.; formal analysis, T.A.J.; investigation, D.S., T.A.J. and J.Y.; resources, D.S.; data curation, D.S. and J.Y.; writing—original draft preparation, D.S.; writing—review and editing, T.A.J. and J.Y.; project administration, D.S.; funding acquisition, D.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Fondecyt, grant number 1221225.

Institutional Review Board Statement

Not applicable for studies not involving humans or animals.

Informed Consent Statement

Not applicable for studies not involving humans.

Data Availability Statement

The water NIR spectra are available from https://github.com/salvadorgarciamunoz/eiot/tree/master/pyEIOT, accessed on 8 April 2025. Please note that these data were produced by Wülfert et al. [5], and all copyright remains with the authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bakeev, K.A. (Ed.) Process Analytical Technology; Blackwell Publishing Ltd.: Oxford, UK, 2005.

- Chen, Z.; Lovett, D.; Morris, J. Process analytical technologies and real time process control a review of some spectroscopic issues and challenges. J. Process Control 2011, 21, 1467–1482.

- Hageman, J.; Westerhuis, J.; Smilde, A. Temperature Robust Multivariate Calibration: An Overview of Methods for Dealing with Temperature Influences on near Infrared Spectra. J. Infrared Spectrosc. 2005, 13, 53–62. [Google Scholar] [CrossRef]

- Martens, H.; Stark, E. Extended multiplicative signal correction and spectral interference subtraction: New preprocessing methods for near infrared spectroscopy. J. Pharm. Biomed. Anal. 1991, 9, 625–635. [Google Scholar] [CrossRef] [PubMed]

- Wülfert, F.; Kok, W.; Smilde, A. Influence of Temperature on Vibrational Spectra and Consequences for the Predictive Ability of Multivariate Models. Anal. Chem. 1998, 70, 1761–1767. [Google Scholar] [CrossRef] [PubMed]

- Chen, Z.; Morris, J.; Martin, E. Modelling Temperature-Induced Spectral Variations in Chemical Process Monitoring. IFAC Proc. Vol. 2004, 37, 553–558. [Google Scholar] [CrossRef]

- Alsmeyer, F.; Koß, H.J.; Marquardt, W. Indirect Spectral Hard Modeling for the Analysis of Reactive and Interacting Mixtures. Appl. Spectrosc. 2004, 58, 975–985. [Google Scholar] [CrossRef] [PubMed]

- Wöhl, J.; Kopp, W.A.; Yevlakhovych, I.; Bahr, L.; Koss, H.J.; Leonhard, K. Completely Computational Model Setup for Spectroscopic Techniques: The Ab Initio Molecular Dynamics Indirect Hard Modeling Approach. J. Phys. Chem. A 2022, 126, 2845–2853. [Google Scholar] [CrossRef] [PubMed]

- Martino, D.D.; Germani, A.; Manes, C.; Palumbo, P. State observers for systems with linear state dynamics and polynomial output. In Proceedings of the 43rd IEEE Conference on Decision and Control, Nassau, Bahamas, 14–17 December 2004; pp. 3886–3891. [Google Scholar]

- Ticlea, A.; Besancon, G. Adaptive observer design for discrete time LTV systems. Int. J. Control 2016, 89, 2385–2395. [Google Scholar] [CrossRef]

- Zhang, Q. Adaptive Kalman filter for actuator fault diagnosis. Automatica 2018, 93, 333–342. [Google Scholar] [CrossRef]

- Guyader, A.; Zhang, Q. Adaptive Observer for Discrete Time Linear Time Varying Systems. IFAC Proc. Vol. 2003, 36, 1705–1710. [Google Scholar] [CrossRef]

- Sbarbaro, D.; Johansen, T.A.; Yañez, J. A Prediction Error Adaptive Kalman filter for online spectral measurement correction and concentration estimation. In 34th European Symposium on Computer Aided Process Engineering/15th International Symposium on Process Systems Engineering; Manenti, F., Reklaitis, G.V., Eds.; Computer Aided Chemical Engineering; Elsevier: San Diego, CA, USA, 2024; Volume 53, pp. 1735–1740. [Google Scholar] [CrossRef]

- Sbarbaro, D.; Johansen, T. On-line calibration of spectroscopic sensors based on state observers. IFAC-PapersOnLine 2020, 53, 11681–11685. [Google Scholar] [CrossRef]

- Sbarbaro, D.; Johansen, T.; Yanez, J. Adaptive Kalman Filter for On-Line Spectroscopic Sensor Corrections. In Proceedings of the 2023 9th International Conference on Control, Decision and Information Technologies (CoDIT), Rome, Italy, 3–6 July 2023; pp. 2109–2114. [Google Scholar] [CrossRef]

- Johansen, T.A.; Sbarbaro, D. Lyapunov-based optimizing control of nonlinear blending processes. IEEE Trans. Control Syst. Technol. 2005, 13, 631–638. [Google Scholar] [CrossRef]

- Liu, Z.; Chen, Y.; Lu, Y. Mid-State Kalman Filter for Nonlinear Problems. Sensors 2022, 22, 1302. [Google Scholar] [CrossRef]

- Vincent, T.; Khargonekar, P. A class of nonlinear filtering problems arising from drifting sensor gains. IEEE Trans. Autom. Control 1999, 44, 509–520. [Google Scholar] [CrossRef]

- Pyrkin, A.; Son, T.M.; Cuong, N.Q.; Sinetova, M. Adaptive observer design for time-varying systems with relaxed excitation conditions. IFAC-PapersOnLine 2022, 55, 312–317. [Google Scholar] [CrossRef]

- Rodrigo Marco, V.; Kalkkuhl, J.C.; Raisch, J.; Seel, T. Regularized adaptive Kalman filter for non-persistently excited systems. Automatica 2022, 138, 110147. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).