1. Introduction

In inverse scattering for microwave breast imaging, a quantitative description of the breast, namely its dielectric properties, is computed from measurements of the scattered field induced by the presence of the breast. From a mathematical viewpoint, this problem is ill-posed, due in part to the lack of access to evanescent waves, and non-linear because of multiple scattering effects inside the breast. To address these difficulties, iterative optimization methods incorporating prior information have been developed to minimize the deviation between the calculated and measured scattered field distributions. The contrast source inversion method (CSI) [1,2,3], the distorted Born iterative method (DBIM) [4,5], the Gauss–Newton method (GN) [6], and the limited-memory Broyden–Fletcher–Goldfarb–Shanno method (L-BFGS) [7] are examples of deterministic optimization algorithms used for medical applications. Recent deep learning methods, such as artificial neural networks (ANNs) based on perceptrons and convolutional neural networks (CNNs), are also under investigation. Once trained, these models are deterministic, but they result from a stochastic optimization process that incorporates an entire database as prior information. Numerous studies have shown that microwave imaging techniques can be improved by combining them with deep learning, as proposed in [8,9,10,11].

More specifically, Yingying Qin et al. [2] and Mojabi et al. [12] investigated the use of multichannel CNNs and U-NETs, respectively, to combine microwave and ultrasound images using the databases described in [13] and [14]. In [15], Ambrosanio et al. used smart automated generation of 2D maps to create a large database, on which they trained an ANN to transform the measured scattered field into dielectric properties.

Flores et al. [16] used a quadratic BIM algorithm through a complex-valued U-NET, with the database in [13]. Khoshdel et al. [17] performed tumor detection using an automatically generated 3D database of random breast tumors. Marijn Borghouts et al. [18] generated a tumor probability map. Costanzo and Flores used reinforcement learning techniques to perform tumor detection [19].

Our contribution is part of the historical framework of the GeePs-L2S microwave camera [20,21]. We investigate the possibility of training deep learning models to transform the qualitative images produced by the microwave camera, which represent the distribution of induced currents obtained by backpropagating the measured scattered field, into quantitative images representing the distribution of the object's dielectric properties. Compared with other techniques, the proposed method operates in the spectral domain, which makes the process less sensitive to noise, thanks to high-spatial-frequency filtering, and less computationally expensive. Contributions associating deep learning with spectral imaging exist in the optical domain [22], but to the best of our knowledge, a spectral approach has not been investigated for quantitative microwave imaging of the breast. In addition, most existing papers on deep learning for microwave breast imaging rely on realistic databases created from MRI scans of a limited number of patients. Our model is instead trained on a limited three-dimensional in-house database built from anthropomorphic breast phantoms. There are five major reasons for this choice: to test the validity of the proposed spectral quantitative method with a limited amount of data; to offer low-cost realistic data that can be easily manipulated; to avoid the constraints of health and personal data protection legislation; to provide printable anthropomorphic models, inspired by MRI scans, that build up a reference database alongside physical phantoms for experimentation; and to easily increase and diversify the data by designing phantoms of various shapes and sizes. Extending our 3D database requires large-scale production of anthropomorphic cavities and the associated simulations, which is time-consuming; it will therefore be investigated in future work. This paper focuses on assessing the proposed imaging technique rather than on performance metrics relevant to an extensive database. The preliminary results presented here are intended to validate the proposed concept.

This paper is organized as follows. Section 2 describes the database production process: the anthropomorphic breast model files are used to calculate the truncated spectrum of the induced currents in the planar configuration of the microwave camera. The optimization process, including the normalization–denormalization applied to the data and the architecture of the U-NETs, is detailed in Sections 2.5 and 2.6. Selected numerical results illustrating both the strengths and limitations of the approach are shown in Section 3. Finally, the scope of this contribution and its future perspectives are discussed in Section 4.

2. Materials and Methods

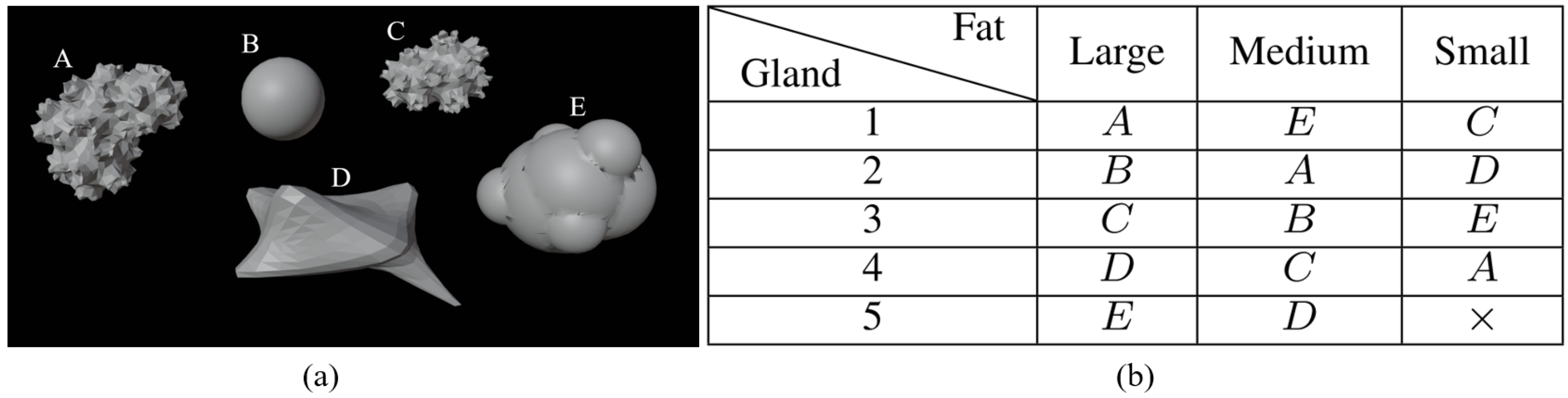

2.1. Generation of Anthropomorphic Breast Phantoms

To implement deep learning techniques, we created a database based on three-dimensional anthropomorphic models inspired by the GeePs-L2S breast phantom [23,24]. The breast is divided into three parts (skin-associated fat, glandular tissue, and tumors), as shown in Figure 1. In addition to the GeePs-L2S breast model, we developed three models for fat and skin and five for glandular tissue. This enabled us to produce 14 models without tumors (the 3 × 5 combinations minus one, as glandular tissue cavity number 5 does not fit inside the smallest fat cavity). This number rose to 56 by considering each model without tumors (case 1) or with tumors placed in the adipose tissue (case 2), in the glandular tissue (case 3), or in both (case 4). In a second step, we considered the mirror image of each phantom to double the number of models. Because the phantoms are volumetric, and because of wave propagation, the mirror image does not correspond to a 180-degree rotation. Finally, a set of 1792 images (training and validation) at the center of the models was obtained by rotating them, a full rotation corresponding to 16 different views.

Different tumor models are shown in Figure 2a. Their size varies from 0.5 cm to 2 cm, and they are placed at different locations to create various configurations (cases 2, 3, and 4).

Figure 2b shows the distribution of tumor shapes, labeled A, B, C, D, or E, per cavity. The tumors are distributed so that each tumor is associated with a given cavity only once. As a result, there is no correlation between the use of a specific shape in the phantom and the presence of a tumor in the constructed breast.

The training and validation sets are nevertheless correlated, as the current dataset is built from combinations of the same cavities. The data are randomly shuffled and split into 80% for training (1433 spectra) and 20% for validation (359 spectra). The uncorrelated GeePs-L2S phantom is set aside for testing; the testing set therefore contains 32 spectra (16 rotations and their mirror images). This example is used to show that generalization seems feasible with a wider dataset.
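The dataset sizes quoted in this section follow from simple combinatorics, which the short Python sketch below recomputes. The 80/20 split fraction, the shuffling with `random.Random`, and the seed are our assumptions, chosen because they reproduce the 1433/359 partition reported above; they are not necessarily the authors' procedure.

```python
import random

# Counts taken from Section 2.1; the GeePs-L2S phantom is kept for testing only.
N_FAT = 3           # fat/skin cavities
N_GLAND = 5         # glandular cavities
N_INCOMPATIBLE = 1  # glandular cavity 5 does not fit in the smallest fat cavity
N_CASES = 4         # no tumor, adipose, glandular, both
N_MIRROR = 2        # original phantom and its mirror image
N_VIEWS = 16        # rotations over a full turn


def dataset_size():
    """Return (tumor-free phantoms, phantoms over all cases, total spectra)."""
    base = N_FAT * N_GLAND - N_INCOMPATIBLE
    with_cases = base * N_CASES
    return base, with_cases, with_cases * N_MIRROR * N_VIEWS


def split_indices(n, train_fraction=0.8, seed=0):
    """Shuffle sample indices and split them into training/validation lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = int(train_fraction * n)  # 0.8 * 1792 = 1433.6 -> 1433
    return idx[:n_train], idx[n_train:]


base, with_cases, total = dataset_size()
train, val = split_indices(total)
test_size = 1 * N_MIRROR * N_VIEWS  # GeePs-L2S phantom: 16 views x 2
print(base, with_cases, total, len(train), len(val), test_size)
# prints: 14 56 1792 1433 359 32
```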

The dielectric properties of the various parts of the phantom are taken from standard reference databases, e.g., IFAC [25] or SUPELEC RECIPES [26]. The values of the relative permittivity, $\varepsilon_r$, and conductivity, $\sigma$, of each tissue used in the simulations are given in Table 1 at the operating frequency. Because human tissues exhibit variability, we added, as a first attempt, a variation to these dielectric properties. Larger variabilities will be investigated in the future, as studies in the field report stronger variations of the dielectric properties due to tissue dehydration [27] or from canine stroke measurements [28,29]. The values are constant within each cavity because of simulation constraints (the numerical model is based on a method-of-moments solution of the surface integral equation). The permittivity variations between phantoms are uniformly distributed.
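The per-cavity variation can be sketched as below. We assume a multiplicative perturbation drawn uniformly within a hypothetical relative bound `rel_var`, applied independently to $\varepsilon_r$ and $\sigma$ (the text states uniformity only for the permittivity). The function name `perturb_cavity` and the placeholder values are illustrative, not taken from the study.

```python
import random


def perturb_cavity(eps_r, sigma, rel_var, rng):
    """Return perturbed (eps_r, sigma) for one cavity of one phantom.

    Each property is scaled by an independent factor drawn uniformly in
    [1 - rel_var, 1 + rel_var]. The result is used as a constant value over
    the whole cavity, as required by the surface integral equation solver.
    rel_var is a hypothetical bound; the study's value is not restated here.
    """
    eps = eps_r * (1.0 + rng.uniform(-rel_var, rel_var))
    sig = sigma * (1.0 + rng.uniform(-rel_var, rel_var))
    return eps, sig


rng = random.Random(1)
# Placeholder nominal values and bound, NOT the Table 1 data of this study.
samples = [perturb_cavity(10.0, 1.0, 0.1, rng) for _ in range(1000)]
```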

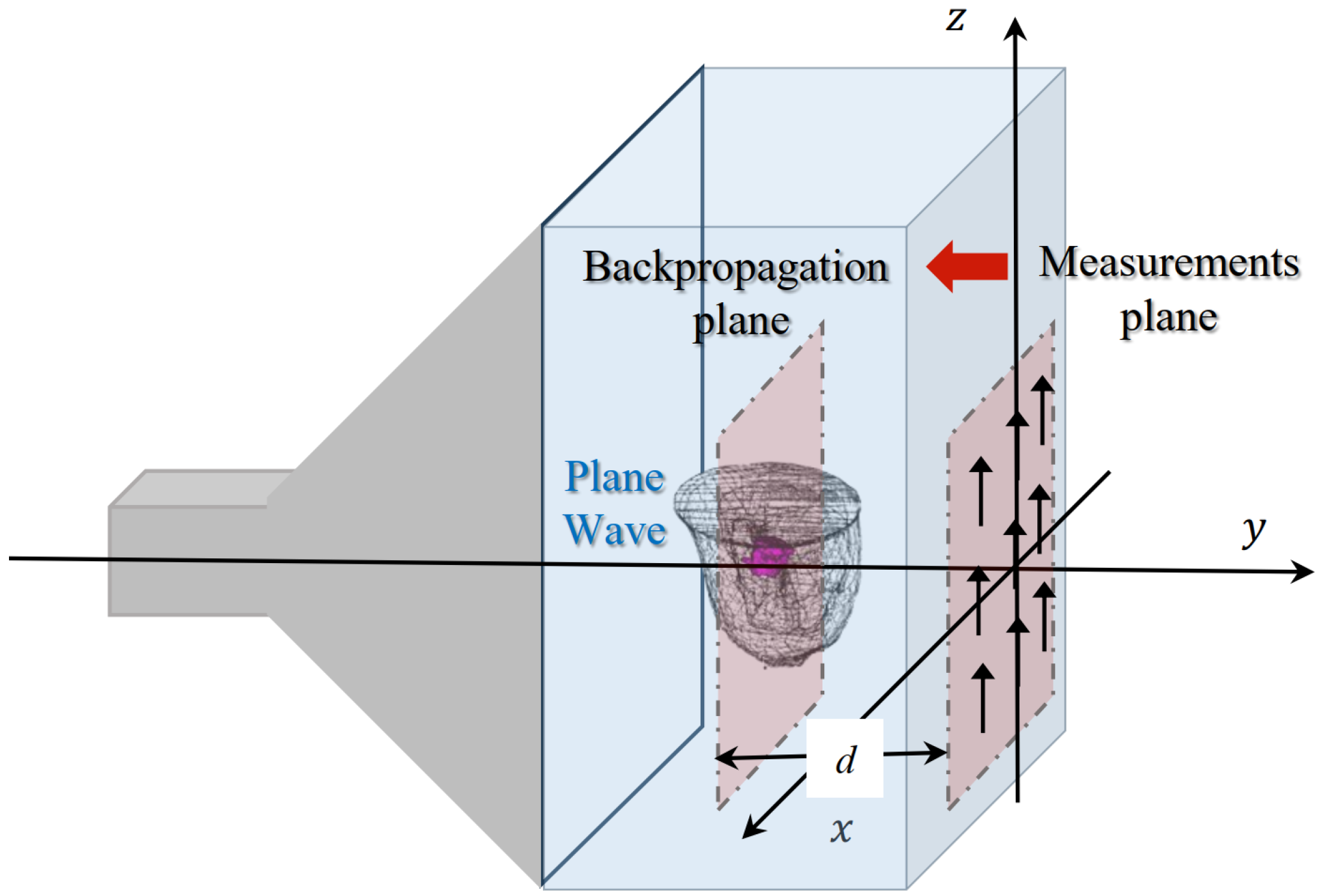

2.2. Experimental Set-Up: GeePs-L2S Planar Camera

The various breast phantoms in the database are studied by placing them inside the GeePs-L2S planar microwave camera, as illustrated in Figure 3 [21]. The phantom model is placed in a water tank of relative permittivity $\varepsilon_{r,w}$ and conductivity $\sigma_w$. The tank is illuminated by a monochromatic plane wave polarized along the z-axis at frequency $f$. The scattered field is computed at the 64 × 64 = 4096 points of the retina (the locations of the dipoles) using the commercial electromagnetic software WIPL-D [30], which is based on a surface integral formulation solved by the method of moments. These computations constitute the simulated data for the imaging problem, which is solved with a backpropagation algorithm. The observation plane is placed at a distance $d$ from the retina, corresponding to the location of a tumor inside the fatty region of the GeePs-L2S testing phantom, shown in pink in Figure 3. This choice of location also facilitates the investigation of other three-dimensional algorithms, such as diffraction tomography or deep learning models for volumetric reconstruction. We then compute the filtered spectrum of the induced currents at the tumor position using the Fourier diffraction theorem.

2.3. Backpropagation Projection Algorithm

The forward electromagnetic problem states that the induced currents $\underline{J}$ can be computed by solving the integral equation given in (1), where $G$ is Green's function, $\underline{E}$ is the total electric field, $k(\underline{r})$ is the wavenumber at the point $\underline{r}$, $k_0$ is the wavenumber of the coupling medium (water), $\nabla$ is the nabla operator, and $\underline{\underline{I}}$ is the identity matrix. A single bar below a quantity indicates a vector, and a double bar indicates a matrix.
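Equation (1) itself is not reproduced in this version of the text. For reference, one standard form of the volume integral equation that is consistent with the symbols listed above is given below; the $e^{j\omega t}$ time dependence and the free-space scalar Green's function of the coupling medium are our assumptions, and the authors' scaling of $\underline{J}$ may differ by a constant factor.

$$
\underline{E}(\underline{r}) = \underline{E}^{\mathrm{inc}}(\underline{r})
 + \left(\underline{\underline{I}} + \frac{\nabla\nabla}{k_0^{2}}\right)
   \int_V G(\underline{r},\underline{r}')\,\underline{J}(\underline{r}')\,d\underline{r}',
\qquad
\underline{J}(\underline{r}') = \left(k^{2}(\underline{r}') - k_0^{2}\right)\underline{E}(\underline{r}'),
\qquad
G(\underline{r},\underline{r}') = \frac{e^{-jk_0|\underline{r}-\underline{r}'|}}{4\pi|\underline{r}-\underline{r}'|}
$$

A common scalar approximation, which we assume corresponds to the reduction used next, keeps only the z-components and drops the $\nabla\nabla$ term, leaving $E_s(\underline{r}) \approx \int_V G(\underline{r},\underline{r}')\,J(\underline{r}')\,d\underline{r}'$.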

The dipoles placed on the retina of the camera measure the vertical (z) component of the total electric field. The field scattered by the phantom, $E_s$, is deduced by subtracting the incident field along the z-axis. Assuming that depolarization inside the phantom is negligible, Equation (1) reduces to a scalar equation. The z-component of the scattered field on the retina, $E_s(x, y, d)$, at a distance $d$ from the object, is then given by (3).

Using Weyl's angular spectrum expansion, Green's function can be written as a superposition of plane waves, given in (4).

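If only propagative waves are considered, Equation (5) requires $k_z = \sqrt{k_0^2 - k_x^2 - k_y^2}$ to be real. This condition is only satisfied in the so-called visible range, a region of the $(k_x, k_y)$ plane bounded by a circle of radius $k_0$, according to (6): $k_x^2 + k_y^2 \le k_0^2$.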

Further calculations lead to the Fourier diffraction theorem (7) [31,32]. It states that the Fourier transform of the induced currents, $\tilde{J}$, is linked to the filtered spectrum of the scattered field, $\tilde{E}_s$, restricted to the visible domain.
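For completeness, the chain from the Weyl expansion to the Fourier diffraction theorem can be written out under explicit conventions that are our assumptions: $e^{j\omega t}$ time dependence, scalar relation $E_s = \int_V G\,J\,d\underline{r}'$, retina at $z = d$, object located at $z' < d$, and the Fourier transform sign conventions stated below. Signs and constant factors may differ from the authors' Equations (4) to (7).

$$
\frac{e^{-jk_0|\underline{r}-\underline{r}'|}}{4\pi|\underline{r}-\underline{r}'|}
 = \frac{1}{8\pi^{2} j}\iint_{\mathbb{R}^2}
   \frac{e^{-j\left[k_x(x-x') + k_y(y-y') + k_z|z-z'|\right]}}{k_z}\,dk_x\,dk_y,
\qquad k_z = \sqrt{k_0^{2} - k_x^{2} - k_y^{2}}
$$

$$
\tilde{E}_s(k_x,k_y) = \iint E_s(x,y,d)\,e^{j(k_x x + k_y y)}\,dx\,dy,
\qquad
\tilde{J}(\underline{k}) = \int_V J(\underline{r}')\,e^{j\underline{k}\cdot\underline{r}'}\,d\underline{r}'
$$

$$
\tilde{J}(k_x, k_y, k_z) = 2j\,k_z\,e^{jk_z d}\,\tilde{E}_s(k_x,k_y),
\qquad k_x^{2} + k_y^{2} \le k_0^{2}
$$

Outside the disk, $k_z = -j\sqrt{k_x^2 + k_y^2 - k_0^2}$ and the corresponding waves are evanescent, which is why only the visible range is retained.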

The backpropagation projection equation (8) [20] follows directly; it gives an approximation of the induced currents inside the phantom.
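The visible-range weighting implied by the Fourier diffraction theorem can be sketched in plain Python. The function `fdt_current_spectrum` below is a hypothetical helper, not the authors' code: it takes a centred grid of scattered-field spectrum samples and applies the factor $2j k_z e^{jk_z d}$, the form the theorem takes under an assumed $e^{j\omega t}$ convention with the object below the retina. Pixels outside a disk of radius `radius_factor * k0` are zeroed, so `radius_factor=0.5` mimics the half-visible-domain filter of Section 2.4. An inverse 2D FFT of the result (for example `numpy.fft.ifft2` after `numpy.fft.ifftshift`) would then give a backpropagated current map; that step is not shown.

```python
import cmath
import math


def fdt_current_spectrum(es_spec, dk, k0, d, radius_factor=1.0):
    """Weight a centred scattered-field spectrum by 2j*kz*exp(j*kz*d).

    es_spec[i][j] is the sample at kx = (i - n//2)*dk, ky = (j - n//2)*dk.
    Pixels with kx^2 + ky^2 > (radius_factor*k0)^2 are set to zero, which
    implements the visible-range (radius_factor=1) or half-visible
    (radius_factor=0.5) filter. Assumes an e^{jwt} time convention.
    """
    if not 0 < radius_factor <= 1:
        raise ValueError("radius_factor must lie in (0, 1]")
    n = len(es_spec)
    kmax2 = (radius_factor * k0) ** 2
    out = [[0j] * n for _ in range(n)]
    for i in range(n):
        kx = (i - n // 2) * dk
        for j in range(n):
            ky = (j - n // 2) * dk
            kt2 = kx * kx + ky * ky
            if kt2 <= kmax2:
                kz = math.sqrt(max(k0 * k0 - kt2, 0.0))  # real inside the disk
                out[i][j] = 2j * kz * cmath.exp(1j * kz * d) * es_spec[i][j]
    return out
```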

This formula integrates the induced currents of the entire 3D phantom onto a single projective plane, which makes the backpropagated currents shown in Figure 4a difficult to interpret. It also means that the projective plane mixes information from the different parts of the phantom, which makes tumor localization difficult. To assess the possibility of tumor detection, we calculate the difference between the induced currents with and without the tumor (Figure 4b). Tumor information is present in the differential image, which demonstrates the sensitivity of the method to the presence of the tumor.

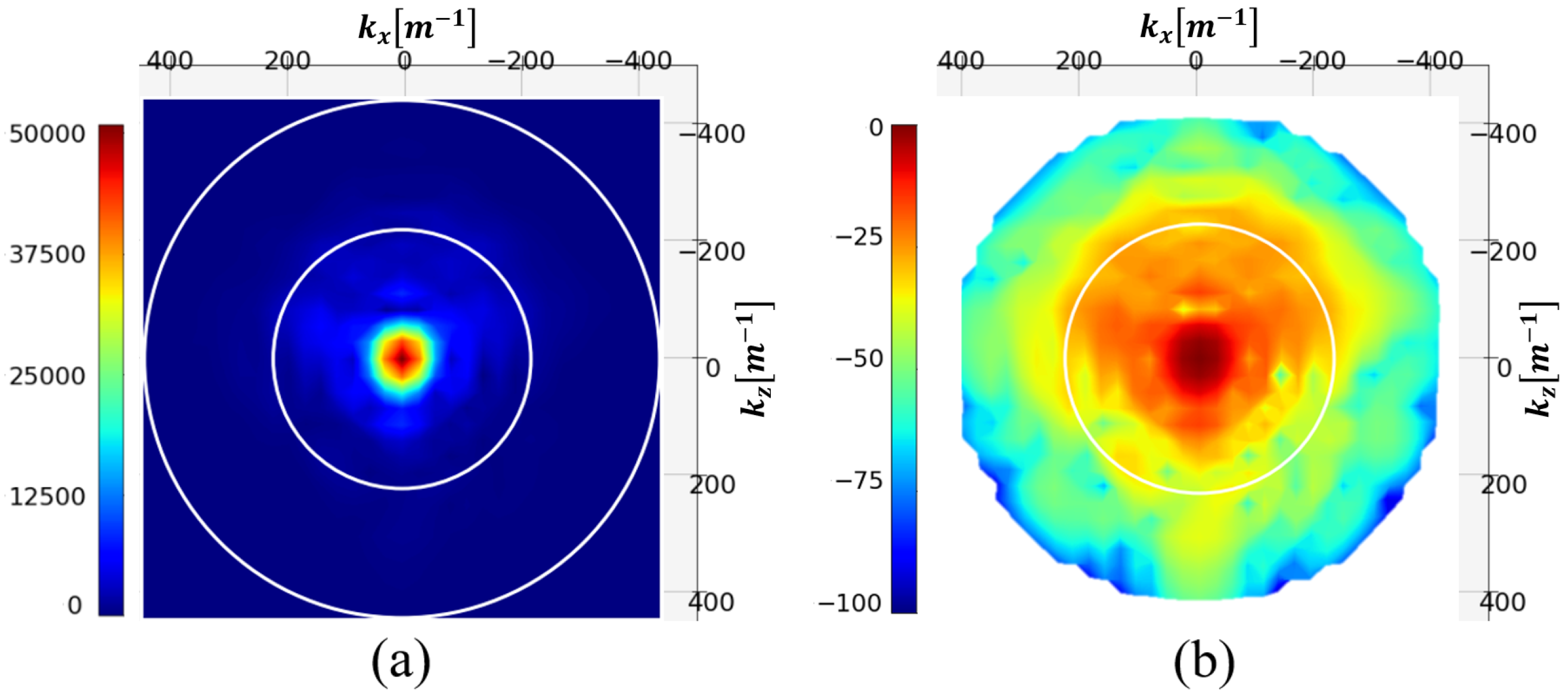

2.4. Spectral Filtering

Once the distribution of the scattered field on the retina of the camera has been obtained, its spectrum can be computed by a fast Fourier transform, and the spectrum of the induced currents then follows from (7). We introduce the normalized spectral density (NSD) of $\tilde{J}$, given by (9).

The measurement is conducted in water with 64 × 64 dipoles spaced by a distance $\Delta$. The spectrum $\tilde{J}$ is therefore composed of 4096 pixels, and its extent is $2\pi/\Delta$ along each axis. The Fourier diffraction theorem naturally filters out the high spatial frequencies of the current spectrum $\tilde{J}$: according to Equation (6), the spectrum is bounded by a circle of diameter $2k_0$, referred to as the visible range, which reduces the number of pixels to 805.

Figure 5 represents the modulus of the $\tilde{J}$ distribution of the test phantom and its NSD in decibels (dB). The two white circles represent the limit of the visible range (outer circle) and this same limit divided by two (inner circle).

The figure shows that the spectrum nearly vanishes outside the inner circle, i.e., outside the half visible domain. Filtering out the spatial frequencies outside this circle should therefore not significantly degrade the image. This operation reduces the spectrum to 197 pixels.
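The pixel counts quoted above depend on how many spectral samples fall inside each disk, which the sketch below counts on a centred 64 × 64 grid. With a dipole spacing $\Delta$ and wavelength $\lambda$ in water, the disk radius in pixels is $k_0/\Delta k = 64\Delta/\lambda$. Since the spacing is not restated in this text, we assume a radius slightly above 16 pixels (a spacing just under $\lambda/4$), which reproduces the 805 and 197 pixels reported. With a radius of exactly 16 pixels the count would be 797, which shows how sensitive these totals are to the exact spacing.

```python
def disk_pixel_count(n, radius_px):
    """Count pixels of a centred n x n spectral grid inside a disk.

    Pixel (i, j) sits at integer offsets from the zero frequency, with
    i, j in [-n//2, n - n//2 - 1], as after numpy.fft.fftshift.
    """
    r2 = radius_px * radius_px
    lo, hi = -(n // 2), n - n // 2
    return sum(1 for i in range(lo, hi) for j in range(lo, hi) if i * i + j * j <= r2)


visible = disk_pixel_count(64, 16.05)   # assumed radius k0/dk of about 16.05 px
half = disk_pixel_count(64, 16.05 / 2)  # half visible domain
print(64 * 64, visible, half)           # prints: 4096 805 197
```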

Hence, spectral filtering offers several advantages. First, spectrum truncation reduces the number of unknowns to be determined from 4096 to 197 pixels, thereby reducing the complexity of the problem. Second, filtering out the non-visible part of the spectrum, and beyond, removes the information carried by evanescent waves, which is inaccessible because of measurement noise. This natural truncation in the backpropagation algorithm acts as a regularization technique, which makes the spectral approach less sensitive to added noise.

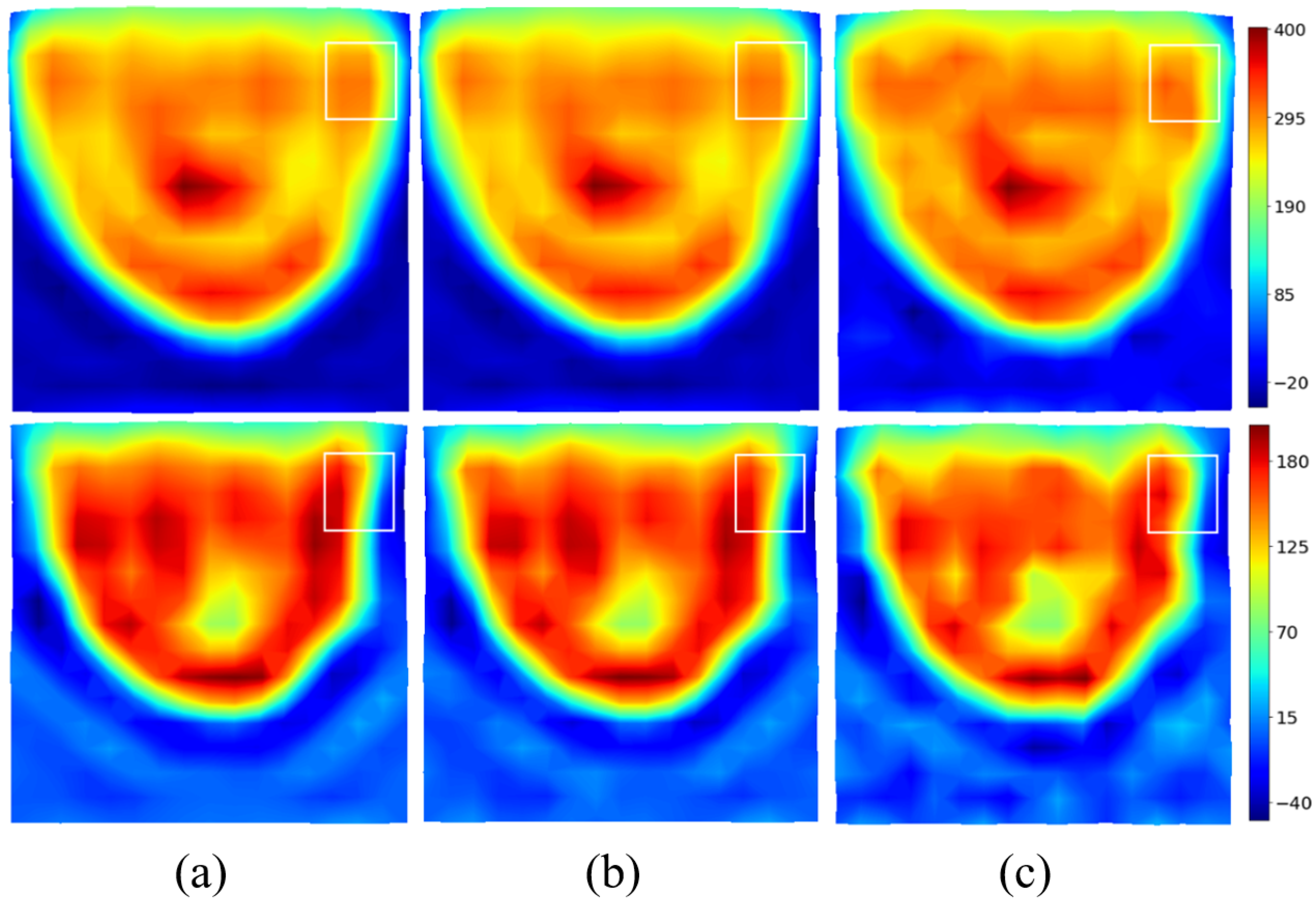

The real and imaginary parts of the induced current distributions are reconstructed for different signal-to-noise ratios (SNRs) and shown in Figure 6. Additive white Gaussian noise (AWGN) is added to the data before filtering. As expected, the method is very robust: an SNR of 10 dB has a limited effect on the reconstruction. In the following, the spectrum of the induced currents, restricted to half the visible domain, is used as the input of the U-NETs.
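The noise experiment can be reproduced in spirit with the sketch below, which adds complex AWGN at a prescribed SNR and measures the resulting SNR. Defining the SNR from the mean signal power over all samples, and splitting the noise power equally between real and imaginary parts, are our assumptions; the authors' exact noise model is not detailed here.

```python
import math
import random


def add_awgn(samples, snr_db, rng):
    """Return samples plus complex white Gaussian noise at the given SNR (dB).

    Noise power = mean signal power / 10**(snr_db / 10), split equally
    between the real and imaginary parts.
    """
    p_signal = sum(abs(s) ** 2 for s in samples) / len(samples)
    p_noise = p_signal / 10 ** (snr_db / 10)
    std = math.sqrt(p_noise / 2)
    return [s + complex(rng.gauss(0.0, std), rng.gauss(0.0, std)) for s in samples]


def measured_snr_db(clean, noisy):
    """Empirical SNR of a noisy copy with respect to the clean samples."""
    p_s = sum(abs(c) ** 2 for c in clean)
    p_n = sum(abs(a - c) ** 2 for a, c in zip(noisy, clean))
    return 10 * math.log10(p_s / p_n)
```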

2.5. From Qualitative to Quantitative Imaging

Training a deep learning model is an optimization process that requires a reference: to optimize the spectrum of the induced currents, a target must be chosen. The simplest and most logical approach would be a qualitative-to-qualitative optimization, i.e., optimizing the approximate induced currents with respect to the theoretical currents calculated using Equation (2). However, from a computational viewpoint, this choice is not satisfactory, because the variations of the field inside the phantom complicate the optimization of the induced current map. The complex contrast $C$, given in Equation (10), seems to be a better choice, as it is strongly linked to the different parts of the breast, although it is a more challenging target.
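Equation (10) is not reproduced in this version of the text. A common definition, which we assume here, is $C = k^2/k_0^2 - 1 = \varepsilon_c/\varepsilon_{c,w} - 1$, with the complex relative permittivity $\varepsilon_c = \varepsilon_r - j\sigma/(\omega\varepsilon_0)$ under an $e^{j\omega t}$ convention and non-magnetic media. The sketch below converts $(\varepsilon_r, \sigma)$ to contrast and back; the water properties and frequency used in the check are placeholders, not the values of this study.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity (F/m)


def complex_permittivity(eps_r, sigma, freq):
    """Relative complex permittivity eps_r - j*sigma/(omega*eps0) (e^{jwt})."""
    omega = 2 * math.pi * freq
    return complex(eps_r, -sigma / (omega * EPS0))


def contrast(eps_r, sigma, eps_r_w, sigma_w, freq):
    """Complex contrast C = eps_c / eps_cw - 1 with respect to the water."""
    eps_c = complex_permittivity(eps_r, sigma, freq)
    eps_cw = complex_permittivity(eps_r_w, sigma_w, freq)
    return eps_c / eps_cw - 1


def properties_from_contrast(c, eps_r_w, sigma_w, freq):
    """Invert the definition, eps_c = eps_cw * (1 + C), into (eps_r, sigma)."""
    eps_c = complex_permittivity(eps_r_w, sigma_w, freq) * (1 + c)
    omega = 2 * math.pi * freq
    return eps_c.real, -eps_c.imag * omega * EPS0
```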

First, the data must be standardized and normalized before training the U-NETs. The choice of scaling method depends on the values of the parameters to be optimized. Here, the imaginary parts of the dielectric contrast and of the induced currents are small compared with their real parts, which can lead to balance problems when the error is backpropagated through the neural network. Min–max normalization ensures that the real and imaginary parts follow equivalent dynamics. For this reason, the induced currents and the reference contrast $C$ are rescaled to the interval [0, 1] using the standard min–max scaling formula, applied separately to the real and imaginary parts.
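The separate min–max scaling of real and imaginary parts can be sketched as follows. Whether the minimum and maximum are taken per sample or over the whole training set is not specified here, so the functions simply use whatever collection of values they receive, and they return the bounds needed to invert the scaling after inference. The helper names are illustrative.

```python
def _bounds(xs):
    lo, hi = min(xs), max(xs)
    return lo, (hi - lo) or 1.0  # avoid division by zero for constant data


def minmax_complex(values):
    """Rescale real and imaginary parts separately to [0, 1].

    Returns the scaled values and the (min, span) pairs needed to undo it.
    """
    re_lo, re_span = _bounds([v.real for v in values])
    im_lo, im_span = _bounds([v.imag for v in values])
    scaled = [complex((v.real - re_lo) / re_span, (v.imag - im_lo) / im_span)
              for v in values]
    return scaled, (re_lo, re_span), (im_lo, im_span)


def inverse_minmax_complex(scaled, re_bounds, im_bounds):
    """Map [0, 1]-scaled values back to the original ranges."""
    (re_lo, re_span), (im_lo, im_span) = re_bounds, im_bounds
    return [complex(s.real * re_span + re_lo, s.imag * im_span + im_lo)
            for s in scaled]
```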

To avoid exploding gradients during U-NET training, and because deep learning models are pseudo-probabilistic models, the input data should be close to 0. It is therefore desirable for the real and imaginary parts of the spectrum to lie within a narrow interval around zero. Hence, a simple normalization is applied to generate the input and reference spectra used in the optimization process. This operation preserves the Fourier relationship, as it only modifies the magnitude of the quantities and not their phase.
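One normalization consistent with this description, assumed here, is division by the largest modulus of the spectrum. A positive real scale factor leaves every phase unchanged, preserves the Fourier relationship by linearity, and bounds the real and imaginary parts by 1 in absolute value. The exact factor used by the authors is not given in this text, and the helper names are illustrative.

```python
import cmath


def normalize_spectrum(spec):
    """Divide a complex spectrum by its largest modulus.

    A positive real factor leaves every phase unchanged and bounds the
    real and imaginary parts by 1 in absolute value. Returns the scale so
    that the operation can be undone after inference.
    """
    scale = max(abs(v) for v in spec)
    if scale == 0:
        return list(spec), 1.0
    return [v / scale for v in spec], scale


def max_phase_change(a, b):
    """Largest phase difference (rad) between two spectra, ignoring zeros."""
    return max((abs(cmath.phase(x) - cmath.phase(y))
                for x, y in zip(a, b) if x != 0), default=0.0)
```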

The aim of the U-NET pair is to perform a full transformation of the normalized input spectrum into the output contrast spectrum, using the normalized reference spectrum as the target. The input, reference, and output of the U-NETs for the test example are shown in Figure 7.

Second, the denormalization and destandardization process required to compute the $\varepsilon_r$ and $\sigma$ values from the contrast reconstructed by the U-NETs is conducted as follows. We introduce the means of the real and imaginary parts, respectively, of all the pixels outside the phantom (in water) on the reconstructed image. As the dielectric properties of water are known, the operations given in (16) and (17) calibrate the reconstructed real and imaginary values with respect to water, making the process less sensitive to oscillations caused by spectral reconstruction errors.

By applying min–max scaling a second time, the calibrated real and imaginary parts are rescaled to prescribed intervals that ensure that the maximum and minimum values reconstructed by the U-NETs are positive. Equations (18) and (19) then give the dielectric properties $\varepsilon_r$ and $\sigma$ reconstructed by the U-NETs.

Finally, applying the phantom contour adds prior information by enforcing constant values of $\varepsilon_r$ and $\sigma$ outside the phantom.
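Equations (16) to (19) are not reproduced here, so the following is only a simplified, hypothetical stand-in for the calibration and contour steps. It assumes the contrast is defined relative to water, so that the correct value outside the phantom is zero: the mean reconstructed value in water is treated as a bias and subtracted, and pixels outside the contour are then set to the water value. The second min–max rescaling described above is deliberately omitted.

```python
def calibrate_and_mask(contrast_map, inside):
    """Hypothetical water calibration followed by contour masking.

    contrast_map: 2D list of complex contrasts reconstructed by the network.
    inside: 2D list of booleans, True inside the phantom contour.
    With the contrast defined relative to water, water pixels should be 0, so
    their mean reconstructed value is treated as a bias and subtracted; pixels
    outside the contour are then forced to the water value (0).
    """
    water = [c for row_c, row_m in zip(contrast_map, inside)
             for c, m in zip(row_c, row_m) if not m]
    if not water:
        raise ValueError("at least one pixel must lie outside the phantom")
    bias = sum(water) / len(water)
    return [[(c - bias) if m else 0j for c, m in zip(row_c, row_m)]
            for row_c, row_m in zip(contrast_map, inside)]
```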

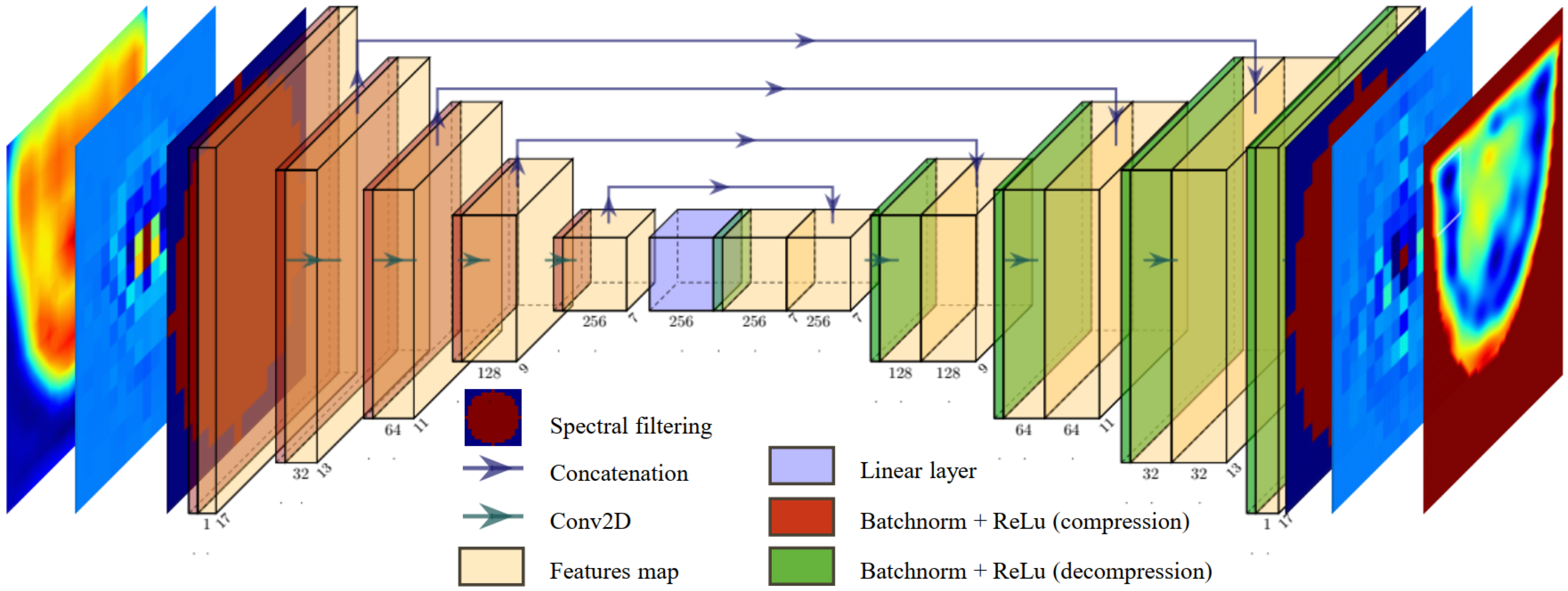

2.6. U-NETs for Spectral Reconstruction of Dielectric Contrast

The model was developed in-house in Python, using functions from the open-source PyTorch library [33]. For spectrum enhancement, we chose a pair of identical U-NETs [34], with the architecture illustrated in Figure 8. One U-NET is designed to optimize the real part of the spectrum, while the second focuses on the imaginary part. The spectral filter is applied to both the spectral input and the output of the U-NET pair. In this way, the U-NETs exclusively optimize the filtered part and perform a proper pixel-to-pixel transformation of the spectrum.

To perform the reconstructions, the spectra are grouped into batches of three. In the compression phase, we implement a series of filters of decreasing size, with zero-padding adjustments that ensure gradual compression of the spectrum without pooling operations. The information is thus compressed through convolutions that progressively reduce the dimensions of each feature map. The successive filters generate an increasing number of feature maps, gathering the statistical information needed to modify the spectrum.

During the decompression phase, the filter dimensions are unchanged, and the padding is gradually increased to recover the initial spectrum size at the end of the network. The features of the U-NET architecture are presented in Table 2.
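Shrinking the feature maps with convolutions instead of pooling, and enlarging them again with larger padding, follows from the standard output-size formula $n_{out} = \lfloor (n + 2p - k)/s \rfloor + 1$ (the formula documented for PyTorch `Conv2d` with dilation 1). The kernel and padding sequences below are hypothetical illustrations of this mechanism, not the values of Table 2.

```python
def conv_out(size, kernel, padding, stride=1):
    """Output length of a convolution along one axis (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def sizes_through(size, layers):
    """Feature-map side lengths after each (kernel, padding) layer."""
    sizes = [size]
    for kernel, padding in layers:
        sizes.append(conv_out(sizes[-1], kernel, padding))
    return sizes


# Hypothetical settings, NOT those of Table 2: shrink without padding,
# then grow back with the same kernels and gradually larger padding.
print(sizes_through(64, [(9, 0), (7, 0), (5, 0), (3, 0)]))  # [64, 56, 50, 46, 44]
print(sizes_through(44, [(3, 2), (5, 4), (7, 6), (9, 8)]))  # [44, 46, 50, 56, 64]
```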

Batch normalization is applied before each convolution to speed up the learning process, and the ReLU function is introduced to add non-linearity to the U-NETs. The transition from compression to decompression is a linear layer.

The Adam optimizer [35] minimizes the weighted mean absolute percentage error (WMAPE) loss for the real and imaginary components of the spectrum, given by Equations (20) and (21), respectively. The optimizer parameters are set to their default PyTorch values: learning rate $\eta = 10^{-3}$ and inertial coefficients $(\beta_1, \beta_2) = (0.9, 0.999)$.
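Equations (20) and (21) are not reproduced here. The sketch below uses the usual definition of WMAPE, $\sum|y-\hat{y}|/\sum|y|$, which we assume matches them up to a possible factor of 100, and evaluates it separately on the real and imaginary parts, one loss per U-NET. In PyTorch the same expression could be written with `torch.abs` and `torch.sum` and minimized with `torch.optim.Adam` at its default settings.

```python
def wmape(target, prediction, eps=1e-12):
    """Weighted mean absolute percentage error: sum|y - y_hat| / sum|y|."""
    num = sum(abs(t - p) for t, p in zip(target, prediction))
    den = sum(abs(t) for t in target)
    return num / max(den, eps)  # eps guards against an all-zero target


def wmape_real_imag(target, prediction):
    """Separate WMAPE losses for the real and imaginary parts of complex data."""
    loss_re = wmape([t.real for t in target], [p.real for p in prediction])
    loss_im = wmape([t.imag for t in target], [p.imag for p in prediction])
    return loss_re, loss_im
```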

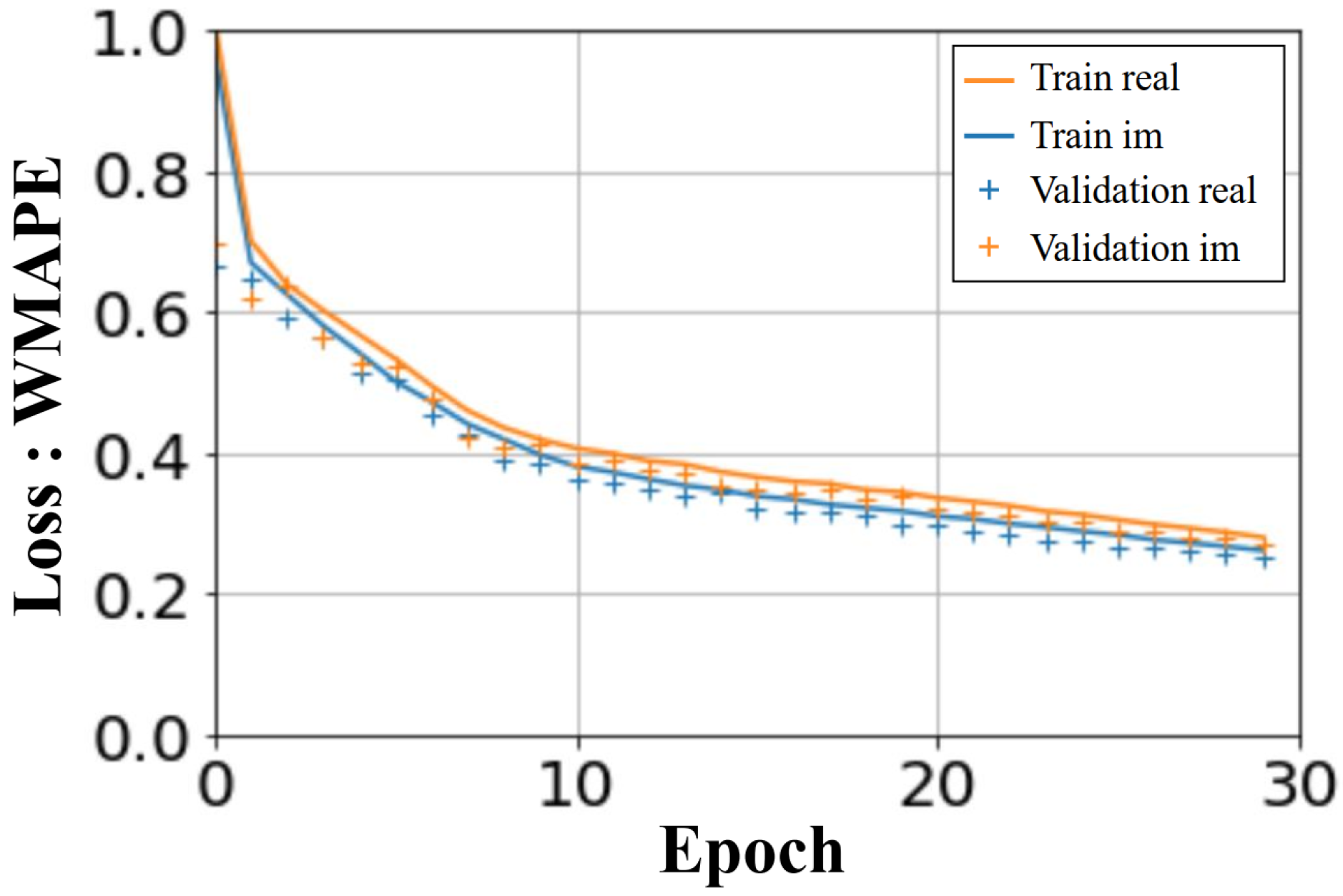

The U-NETs were trained for 30 epochs. The learning phase took less than an hour and a half on an NVIDIA RTX A2000 (8 GB) GPU. Figure 9 shows the WMAPE loss as a function of the number of epochs; the displayed values are averages over all processed batches. Similar convergence is observed for training and validation, for both the real and imaginary parts.

4. Discussion

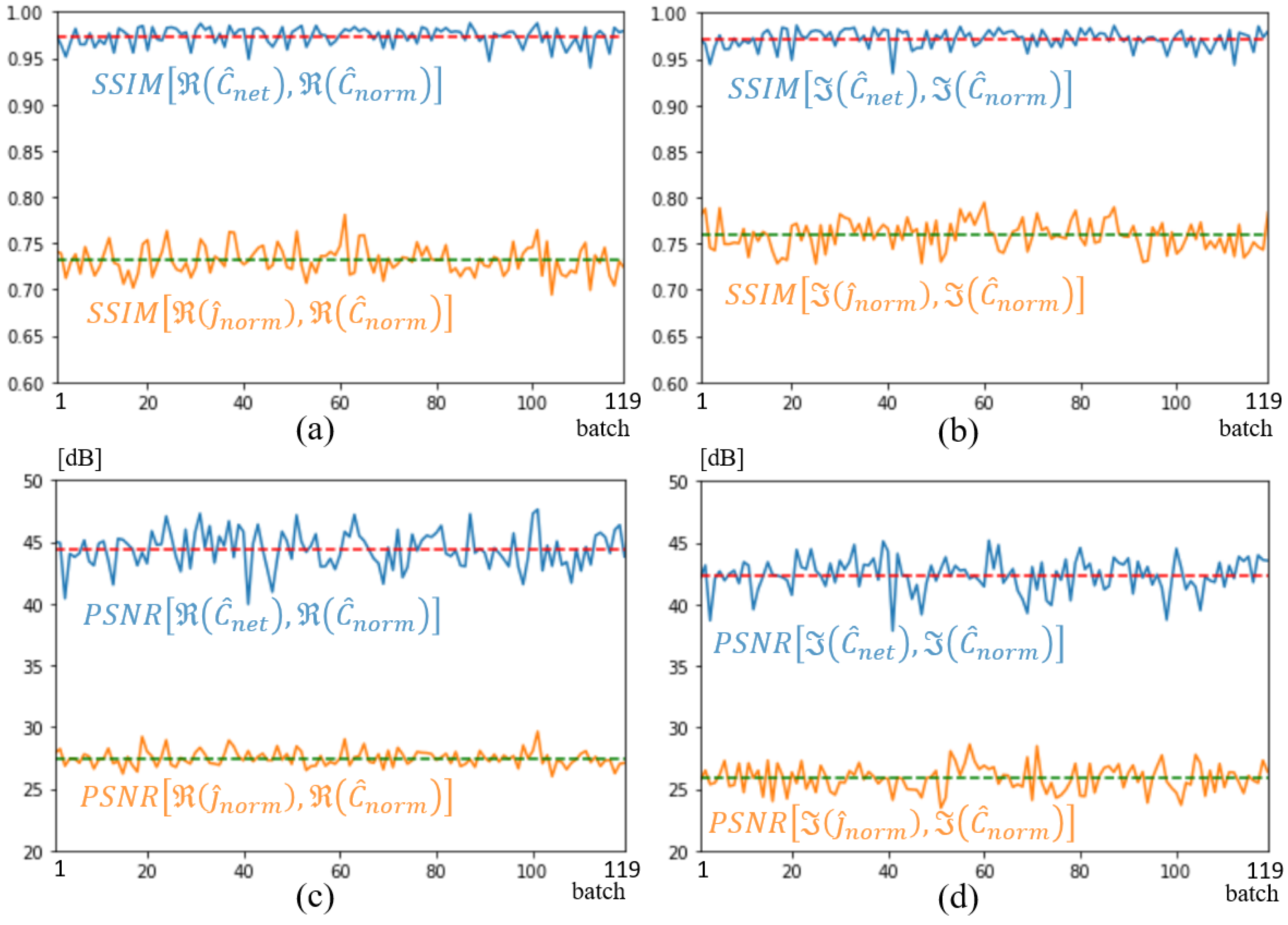

The main objective of this paper was to combine U-NETs with a spectral method to produce real-time quantitative breast imaging. The preliminary results presented in the previous section validate the method and confirm its potential, both in terms of efficiency, with an SSIM of 0.97 in validation and 0.83 in testing, and in terms of robustness, down to an SNR of 10 dB. A second achievement was the development of an in-house, realistic, diverse, low-cost, and easy-to-use 3D database. To our knowledge, both developments are new.

However, this work is a first step. Although research on model architectures such as CNNs, U-NETs, ANNs, and CVCNNs remains important, the most essential aspects of deep learning remain the quality and quantity of the database and the pre- and post-processing of the data, since the neural network parameters are optimized from the available dataset. Unfortunately, the number of breast phantoms currently available worldwide remains limited. In the literature, studies that clearly avoid data leakage by using uncorrelated training and testing sets, and that achieve generalization in image enhancement, rely either on a large amount of automatically generated data [15] or on information from other modalities [12]. This is not the case here. Consequently, overfitting is unavoidable with such a small database, and the training and validation sets are necessarily correlated because of their combinatorial construction. This is clearly an obstacle to generalization, and techniques such as regularization or dropout cannot compensate for a lack of data.

To address this issue, we propose in this study to begin with a small in-house database, with the aim of optimizing and extending it in the future. The idea is to study both the reconstruction process and the data themselves in an optimized way. This would enable us to better understand the behavior of the neural network as a function of the database, i.e., its size, processing, and potential biases. The database would become a customizable input, and the optimization process would be seen as a joint optimization of the model and the data.

This database must be realistic, diversified, and easy to develop. This is why we propose a combination of anthropomorphic breast cavities derived from modifications of a realistic phantom generated from MRI scans [13]. Working with phantoms not only frees us from legal and ethical issues, but also gives us control over the entire database development program. Moreover, printable twins of the constructed cavities can be used for experiments or to calibrate measurements.

However, conducting research in this way comes at a cost both in terms of development time and effort. This is why this study focuses primarily on assessing the validity of the method rather than its performance.

Does the combination of U-NETs with a spectral method of very low computational cost, compared with classical optimization methods (DBIM, CSI, GN, etc.), yield promising results? The answer is positive, judging by the images and associated metrics presented in the previous section.