Abstract

In response to the global trend of population aging, providing elderly individuals with suitable leisure and entertainment has become increasingly important. This study aims to use artificial intelligence (AI) technology to offer the elderly a healthy and enjoyable exercise and leisure experience. A human–machine interactive system is designed using computer vision, a subfield of AI, to promote positive physical adaptation for the elderly. The relevant literature on the needs of the elderly, technology, exercise, leisure, and AI techniques is reviewed. Case studies of interactive devices for exercise and leisure for the elderly, both domestic and international, are summarized to establish the prototype concept for system design. The proposed interactive exercise and leisure system is developed by integrating motion-sensing interfaces and real-time object detection using the YOLO algorithm. The system’s effectiveness is evaluated through questionnaire surveys and participant interviews, with the collected survey data analyzed statistically using IBM SPSS 26 and AMOS 23. Findings indicate that (1) AI technology provides new and enjoyable interactive experiences for the elderly’s exercise and leisure; (2) the system has positive impacts on the elderly’s health and well-being; and (3) the system’s acceptance and attractiveness increase when elements related to personal experiences are incorporated into the system.

1. Introduction

1.1. Research Background and Motivation

1.1.1. Research Background

The global aging population has been steadily increasing in recent years. In Taiwan, for example, by the end of March 2018, 14.05% of the population was aged 65 and older, officially marking the onset of an aged society. With low birth rates, many older adults now face the challenge of living alone, making home safety and suitable leisure activities critical issues. Upon retirement, seniors often experience a shift in focus, requiring emotional adjustment and the need to find new interests and life goals.

Although previous studies have suggested various leisure activities for the elderly, traditional ones, such as chess or walking in parks, remain dominant compared to those enjoyed by younger generations. In 1997, the World Health Organization defined quality of life as an individual’s perception of their position in life, encompassing personal goals, expectations, standards, and concerns. To better meet the needs of the elderly, it is essential to explore innovative leisure activities that incorporate modern technology to enhance their physical health, as well as their overall quality of life [1].

1.1.2. Research Motivation

As the global aging population grows, elderly individuals face challenges such as home safety, adjusting to a new life focus, and engaging in meaningful leisure activities. Since traditional leisure options dominate, it has become urgent to explore how modern technology can better address their evolving needs [2]. With retirement prompting significant lifestyle changes, the rapid development of technology presents new opportunities to enhance their quality of life.

This research aims to explore how artificial intelligence (AI) can create innovative, enjoyable, and health-promoting leisure activities to improve the quality of life for the elderly [3]. Specifically, the study seeks to develop a human–machine interactive system that enables elderly individuals to engage in exercise and leisure using AI techniques such as object detection and interactive motion sensing. The system’s impact on the elderly will be evaluated statistically through prototype development and experience activities in elderly-related settings, with a focus on its effects on physical health. In this study, “physical health” is defined as the “normal functioning of the body”; accordingly, “improving or promoting the physical health” in the remainder of this paper means “having better uses of the functions of the user’s body”.

Additionally, the feasibility of integrating technology into leisure activities for the elderly will be thoroughly explored, providing valuable insights for future research in this field.

1.2. Literature Review

1.2.1. Needs of Aging for the Elderly

As individuals age, their physical and mental functions gradually decline, affecting motor, perceptual, and cognitive abilities, which in turn impact daily life. Huang [4] identified four key indicators of aging: loss of reproductive ability, graying hair, reduced physiological functions, and the onset of chronic diseases. Aging is a complex process influenced by genetics, lifestyle, and health, leading to a decline in the ability to perform daily activities. However, appropriate physical activity can help maintain organ function and delay aging [5]. Aging is not solely driven by disease—factors like reduced physical agility, external response abilities, weight loss, and changes in social and health perceptions also play a role [6]. Elderly individuals often face difficulties using technology due to these age-related changes. Chen [7] noted that the gap in using technological products is wider for the elderly, often due to physiological factors. However, if designers consider aging-related needs, they can create products better suited to the elderly’s physiological and psychological conditions [8].

As the aging population grows, gerontology research increasingly addresses not only the physiological aspects of aging but also the psychological challenges faced by the elderly, aiming to help them live healthier and more fulfilling lives [9]. Rowe and Kahn [10] defined successful aging as involving (1) reducing disease and disability, (2) maintaining cognitive and physical functions, and (3) active participation in daily activities. Baltes and Baltes [11] viewed aging success as a psychological adaptation process, encompassing (1) selection, (2) optimization, and (3) compensation, known as the SOC model. Lin [12] expanded these ideas, defining successful aging as the ability to adapt well to aging, emphasizing physical health, psychological well-being, and maintaining strong family and social relationships to enjoy life in old age.

Successful aging is shaped by individual choices and behaviors, driven by personal autonomy. Franklin and Tate [13] emphasized that life satisfaction is key to successful aging. Regular exercise helps reduce anxiety, depression, and negative emotions, boosts self-esteem, and enhances cognitive function, thereby improving life satisfaction [14]. The concept of “active aging”, introduced in Singapore, encourages ongoing learning and embodies the spirit of aging without feeling aged. Education can delay physical aging and prevent psychological and social decline, promoting successful aging [15].

In addition to active learning, leisure activities play a vital role in successful aging. Li and Gao [16] found a strong positive correlation between leisure participation and successful aging. Heintzman and Mannell [17] showed that leisure activities benefit physical and mental health, skill development, stress relief, and social connections. Yan and Guo [18] observed that leisure education helps elderly individuals improve health, meet personal needs, refocus life, reduce stress, and gain enjoyment, promoting psychological, physiological, and social benefits. Hsieh [19] emphasized that engaging in meaningful activities and maintaining strong family and social ties are essential for successful aging, and various positive approaches to aging contribute to this process [20].

In response to the aging population trend, countries worldwide have implemented strategies to help elderly individuals age with dignity, health, and happiness. The concept of active aging encourages regular participation in physical leisure activities, promoting successful aging and addressing the challenges of growing older.

1.2.2. Exercise and Leisure Activities for the Elderly

After retirement, elderly individuals who stay at home for long periods need activities that refresh both their body and mind. The International Society for Gerontechnology defines gerontechnology as the design of technologies and environments that enable elderly individuals to live independently, healthily, comfortably, and safely while participating in society. Engaging in activities promotes better health for the elderly, as staying home without engaging in daily activities can hinder their sense of well-being. In contrast, social activities help foster a sense of health and well-being [21]. Lin [22] categorized elderly leisure activities into three types: solitary, social, and fitness-oriented. Hughes [23] noted the shift from tangible recreational products like books and tapes to cloud-based media like e-books, music, and videos.

Lian [24] highlighted that leisure activities improve life satisfaction, emotional well-being, and physical fitness, and slow the decline in physical functions for elderly individuals. Digital interactive behaviors, in turn, rely on systems that offer real-world models for learning digital operations [25]. Unlike younger individuals, the elderly are slower in both physical activity and visual processing, requiring entertainment options tailored to their capabilities. Caprani et al. [26] found that elderly individuals perform better with touchscreens than with other input devices and prefer them. To improve adoption rates, Pal et al. [27] suggested designing systems with ease of operation in mind, using user-friendly interfaces and simple button operations. Thus, touchscreens should be prioritized in designing such devices.

1.2.3. Orange Technology and Applications

Wang [28,29] introduced the concept of orange technology, which emphasizes human-centeredness and humanitarian care, focusing on developing technologies that enhance health, happiness, and well-being. The color orange, a mix of red and yellow, symbolizes brightness, health, happiness, and warmth. Liu and Kao [30] described orange as a balance between the energy of red and the liveliness of yellow, associated with joy and sunlight. In color theory, orange represents passion, charm, creativity, determination, and achievement, evoking warmth and inspiration.

As detailed by Wang [31], Liu and Chen [32], and Chen [33], orange technology encompasses three main areas, health technology, happiness technology, and care technology, outlined as follows. (A) Health technology includes (a) enhancing the health or quality of life for the elderly, (b) integrating computer communications and medical systems, and (c) building cloud platforms for health promotion, disease prevention, telemedicine, mobility, and home care. (B) Happiness technology involves (a) personal and national happiness indices, (b) methods to measure happiness, and (c) applying technology to improve happiness levels. (C) Care technology focuses on designing innovative products and systems that promote human care and connection.

The application of AI in the home domain has expanded significantly, encompassing areas from security monitoring to smart assistants. Today, nearly all household systems are integrated with AI, with four main categories identified [34]: (1) entertainment and information, (2) automated control, (3) security monitoring, and (4) healthcare. Of these, entertainment and information applications are already embedded in homes, and future developments are expected to focus on the latter three categories.

Examples of current smart home applications include facial recognition security locks, smart assistants, and care robots. Existing systems can be classified into five dimensions based on usage: health monitoring, environmental monitoring, companionship, social interaction, and entertainment [27]. Xu and Bai [35] noted that children working away from home often have limited communication with elderly parents, and the greatest risk for the elderly is not just health concerns, but also isolation and loneliness.

The demographic structure of the elderly population is shifting, with an increasing proportion of elderly individuals, leading to an aging society. After 65, most people transition from the workforce to family life, gradually moving from independence to requiring assistance with daily activities. To ease the burden on caregivers, the market is seeing a rise in care robots. Sharkey and Sharkey [36] identified three main functions of care robots: (1) assisting the elderly or caregivers with daily tasks, (2) monitoring the elderly’s behavior and health, and (3) providing companionship. These robots not only address physiological care but also cater to psychological needs. Additionally, smart assistants, integrated with the Internet of Things (IoT), manage household information, enable remote control of appliances, and provide reminders, such as weather forecasts or traffic updates, preventing inconveniences when leaving the house.

Several existing cases of orange technology applications are analyzed and compared in this study from the aspects of human–machine interfacing format, AI technique type, and interaction scheme, as shown in Table 1.

Table 1. Analysis of existing cases of orange technology.

1.2.4. AI and Computer Vision Technology

Artificial intelligence (AI) is a technology that enables computers to simulate human-like thinking and perform specific tasks on behalf of humans. The concept was first introduced by John McCarthy in a 1956 research project on AI, marking the emergence of AI as a distinct academic discipline [40].

Before 1956, AI was in its early stages, with scientists working to develop machines capable of performing human tasks. A key contribution came in 1936 when Alan Turing published his paper “On Computable Numbers, with an Application to the Entscheidungsproblem” [41], introducing the abstract computing device now known as the Turing machine and laying the foundation for modern computers and AI theory. In 1943, Warren Sturgis McCulloch and Walter Harry Pitts Jr. published “A Logical Calculus of the Ideas Immanent in Nervous Activity”, introducing artificial neural networks (ANNs) and demonstrating their mathematical relationship to logical expressions, inspiring further research in the field [42].

AI became an established research area only after John McCarthy formally defined it, marking the beginning of its first golden era from 1956 to 1970. During this period, major advancements included machine learning, expert systems, and natural language processing. In 1957, American psychologist Frank Rosenblatt proposed the Perceptron model, and by 1960, it was applied in the Mark 1 Perceptron system to distinguish gender in photographs. This invention was pivotal in the AI field and laid the foundation for modern neural network design [43].

AI entered its second golden era from 1980 to 1990, with breakthroughs such as the Hopfield Neural Network introduced in 1982, advancing speech recognition technologies. After 2006, advancements in graphics card technology and expanded storage capacity significantly improved hardware parallel processing, enabling rapid access to vast data. The introduction of deep learning in 2012 led to major breakthroughs in speech recognition, computer vision, and natural language processing.

It is noteworthy that computer vision, a subfield of AI, has seen significant development over the past two decades and is now widely applied across various domains, including industry, healthcare, and transportation. This field focuses on enabling machines to “see”, often referred to as machine vision. By using cameras and computational systems, computer vision substitutes human visual perception, allowing for the identification, tracking, and measurement of objects, while mimicking human cognitive processes to interpret and recognize images. The core principle of computer vision is to simulate human vision using cameras that capture images and send them to a computer system for real-time processing. The system analyzes these images frame by frame, using algorithms like image segmentation, smoothing, and edge sharpening, which enhance the computer’s ability to process image content efficiently.

One important technique in computer vision is the deformable part model (DPM), which represents an object as a combination of parts. For instance, it models a human as a combination of the head, body, arms, and legs. Felzenszwalb et al. [44] found that the DPM is a more accurate method for object detection compared to traditional hand-crafted feature approaches, such as sliding window feature extraction followed by classification. The model enhances the use of histogram of oriented gradients features and introduces both global and local models, significantly improving object detection accuracy. However, the DPM has drawbacks, including complex features, slower computation speed, and reduced performance when detecting objects that undergo rotation or stretching.

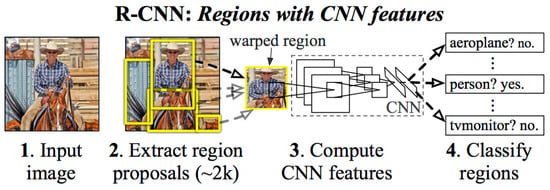

Another computer vision method is the region-based convolutional neural network (R-CNN), proposed by Girshick et al. [45]. This method applies the selective search algorithm to generate candidate regions, which are then processed through a CNN to extract desired features. The extracted features are sent to multiple support vector machine (SVM) classifiers, and regions with high classification accuracy are retained as the final object detection regions. The R-CNN process generates approximately 2000 candidate regions from the original image through selective search. Each candidate region requires CNN feature extraction and SVM classification, resulting in significant computational load and slower detection speeds. However, the R-CNN method offers the advantage of substantially improved detection accuracy and introduces a deep learning-based framework for object detection. An architecture of the R-CNN is shown in Figure 1.

Figure 1. The region-based convolutional neural network (R-CNN) architecture proposed by Girshick et al. [45].

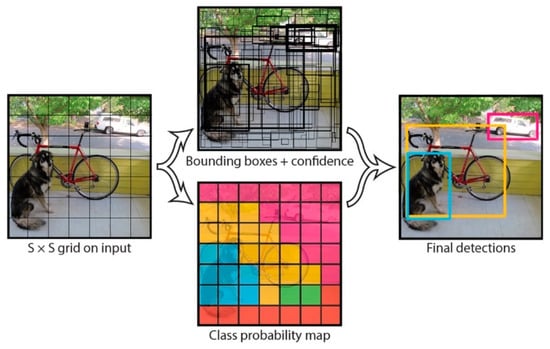

A third technique in computer vision is the YOLO (You Only Look Once) algorithm [46] for real-time object detection. The algorithm divides an image into a grid and detects objects within each grid cell, as shown in Figure 2. Redmon et al. [47] highlighted three advantages of the YOLO algorithm: (1) it enables real-time image processing with less than 25 milliseconds of delay, (2) it reasons over the whole image during inference, reducing background error rates to less than half of those of the Fast R-CNN algorithm, and (3) it learns generalized representations of objects, making it less prone to errors when processing new or unseen images. However, the YOLO algorithm also has drawbacks, including difficulty in detecting tightly clustered small objects (e.g., flocks of birds) and challenges in generalizing to objects with uncommon aspect ratios.

Figure 2. An illustration of the YOLO model proposed by Redmon et al. [47].
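The grid idea can be illustrated with a small sketch. It only shows how an object's bounding-box centre maps to a single grid cell; it is not the detection network itself. The grid size of 7 and the 448 × 448 input follow the original YOLO paper rather than the system described here, and the function name `responsible_cell` is a hypothetical one chosen for illustration.

```python
def responsible_cell(box, image_w, image_h, s=7):
    """Return the (row, col) of the grid cell that contains the centre of `box`.

    `box` is (x_min, y_min, x_max, y_max) in pixels. `s` is the number of
    cells per side (assumed to be 7, as in the original YOLO paper).
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2.0  # box centre, x
    cy = (y_min + y_max) / 2.0  # box centre, y
    # Scale the centre into grid units and clamp to the last cell so that a
    # centre lying exactly on the right or bottom edge stays inside the grid.
    col = min(int(cx / image_w * s), s - 1)
    row = min(int(cy / image_h * s), s - 1)
    return row, col


# A box centred at (200, 300) in a 448 x 448 image falls in row 4, column 3.
print(responsible_cell((100, 200, 300, 400), 448, 448))  # (4, 3)
```

Because each object is assigned to one cell by its centre, several small objects whose centres fall in the same cell compete for that cell's predictions, which is consistent with the difficulty with tightly clustered small objects noted above.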

Currently, YOLO has also been applied in elderly care, primarily for fall detection among elderly individuals. Sun et al. [48] categorized human postures into four types, standing, sitting, leaning, and falling, using labeled images to generate extensive training data. Lu and Chu [49] proposed three methods for fall detection: wearable devices, environmental sensors, and image recognition. For wearable devices, tri-axial accelerometers placed on the elderly person’s body collect movement speed changes, determining a fall when acceleration exceeds a preset threshold [50]. Regarding environmental sensors, Su et al. [51] suggested using ceiling-mounted Doppler radar to detect falls by motion analysis.
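The wearable-device approach can be sketched as a simple threshold test on the magnitude of the tri-axial acceleration. This is a minimal illustration under stated assumptions, not the method of [50]: the threshold of 2.5 g, the sample format, and the function names are all hypothetical.

```python
import math


def acceleration_magnitude(ax, ay, az):
    """Euclidean norm of one tri-axial accelerometer sample (in g)."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def detect_fall(samples, threshold_g=2.5):
    """Return the index of the first sample whose magnitude exceeds the
    threshold, or None if no sample does.

    `threshold_g` is a hypothetical preset value; a real system would
    calibrate it on recorded data.
    """
    for i, (ax, ay, az) in enumerate(samples):
        if acceleration_magnitude(ax, ay, az) > threshold_g:
            return i
    return None


# Resting samples are close to 1 g; the third sample is a sudden spike.
readings = [(0.0, 0.0, 1.0), (0.1, 0.2, 1.0), (1.8, 1.5, 2.0), (0.0, 0.0, 1.0)]
print(detect_fall(readings))  # 2
```

A single-threshold rule is easy to run on a small wearable, but vigorous everyday movements can also exceed the threshold, which is one reason image-based methods are considered alongside it.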

Finally, image recognition is one of the most widely used computer vision technologies. Agrawal et al. [52] applied background subtraction to identify foreground objects in an image. By using human body contours and template matching, the individual in the image was classified. The distance between the person’s bounding box and the center of the body was then measured to determine whether a fall had occurred.
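The first steps of such an image-based pipeline, background subtraction followed by locating the foreground region, can be sketched as follows. The sketch uses plain nested lists of grayscale values and a hypothetical difference threshold of 30; it stops at the bounding box and centroid and does not reproduce the template matching or the fall decision rule of [52], which are not detailed here.

```python
def foreground_mask(frame, background, threshold=30):
    """Mark pixels whose grayscale value differs from the background by more
    than `threshold` (a hypothetical value) as foreground."""
    return [
        [abs(p - b) > threshold for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def bounding_box_and_centroid(mask):
    """Return ((top, left, bottom, right), (row_mean, col_mean)) of the
    foreground pixels, or (None, None) if the mask is empty."""
    coords = [
        (r, c)
        for r, row in enumerate(mask)
        for c, is_foreground in enumerate(row)
        if is_foreground
    ]
    if not coords:
        return None, None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    box = (min(rows), min(cols), max(rows), max(cols))
    centroid = (sum(rows) / len(rows), sum(cols) / len(cols))
    return box, centroid


# A 4 x 4 dark background with a bright 2 x 2 object in the middle.
background = [[10] * 4 for _ in range(4)]
frame = [row[:] for row in background]
for r in (1, 2):
    for c in (1, 2):
        frame[r][c] = 200

box, centroid = bounding_box_and_centroid(foreground_mask(frame, background))
print(box, centroid)  # (1, 1, 2, 2) (1.5, 1.5)
```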

The differences in the training models of the YOLO, DPM, and R-CNN techniques are organized in this study, as shown in Table 2. Several existing cases of computer vision-based works for interactive experience are analyzed and compared in this study from the aspects of presentation form, technique used, and interaction scheme, as shown in Table 3.

Table 2. Differences in the training models of the YOLO, DPM, and R-CNN techniques.

Table 3. Analysis of existing cases of computer vision-based interactive experience works.

1.3. Research Goal and Process

1.3.1. System Design Concepts

In this study, a review of the relevant literature is conducted to explore the physical and mental functional states of the elderly, as well as potential issues of aging. Key concepts such as active aging and successful aging, along with challenges faced by elderly individuals when using technological products, are also discussed. Based on the case analysis, it is observed that AI and computer vision technologies have become increasingly advanced, benefiting not only interactive exhibitions but also leisure and entertainment for the elderly.

This study aims to design a technology-based exercise and leisure system that enables the elderly to enjoy gaming experiences while benefiting from sensory stimulation, hand–eye coordination, and physical exercise. By integrating AI and computer vision technologies, particularly the YOLO algorithm, into leisure activities, the system can enrich the lives of older adults while helping them maintain a healthy mind and body through appropriate physical activity.

More specifically, several prototype concepts derived in this study for designing such a system are outlined as follows.

- (1) Selecting appropriate leisure and entertainment themes for the elderly and using situational simulations to foster a sense of connection to reality during device use.

- (2) Designing YOLO algorithm-based recognition techniques for the human–machine interface and integrating Kinect-based skeletal recognition schemes, enabling the system to detect body movements and allowing participants to interact naturally.

- (3) Designing motion-based interactions that provide an intuitive mode of engagement, reducing operational difficulty and minimizing the burden on the elderly.

- (4) Designing appropriate game interactions to enhance the elderly’s cognitive awareness of life and physical activity, thereby improving their physiological functions through entertainment.

1.3.2. Research Goal

This study aims to answer the following research questions.

- (1) What are the advantages of integrating AI technologies into exercise and leisure activities for the elderly?

- (2) How can target detection and computer vision technologies be incorporated into exercise and leisure activities for the elderly?

- (3) How can AI technologies be used to enhance the positive effects of exercise and leisure activities for the elderly?

The research objectives of this study are summarized as follows.

- (1) The application of AI technologies in human–machine interaction and their forms of expression will be explored.

- (2) The correlation between exercise and leisure activities and the lives of the elderly will be examined, and a system prototype will be constructed.

- (3) Current AI applications and cases in the field of leisure and entertainment will be investigated, and system design concepts will be summarized.

- (4) According to the literature review on the relation between leisure activities and the elderly, an interactive system will be proposed for the elderly using AI technologies.

- (5) Through surveys and user interviews, the usability and effect of the developed system will be explored.

1.3.3. Research Process

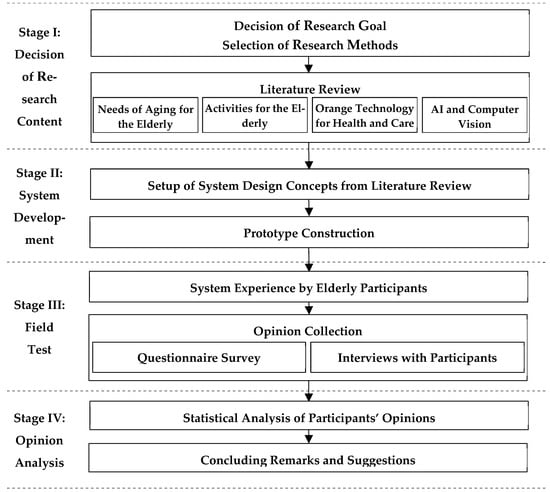

The research process of this study, illustrated in Figure 3, is divided into the four stages described below. Further details of the process are given in subsequent sections.

Figure 3. The flowchart of the research process of this study.

- (1) Stage I: Decision of Research Content. The research goal and the research methods of this study are determined based on a literature review conducted from four perspectives: (1) the needs of the elderly in aging, (2) leisure activities for the elderly, (3) orange technology for health and care, and (4) AI and computer vision.

- (2) Stage II: System Development. The design concepts of the proposed system are derived from the reviewed literature. Then, a prototype system is constructed accordingly.

- (3) Stage III: Field Test. The prototype system is tested at a care center, where the elderly are invited to experience the system. Their opinions about using the system are collected through questionnaires and interviews.

- (4) Stage IV: Opinion Analysis. The users’ opinions are analyzed statistically using the SPSS and AMOS packages; conclusions are then drawn, and suggestions for future research are provided.

2. Methods

2.1. Prototype Development

In this study, a prototype of the proposed interactive exercise and leisure system for the elderly was developed. Following Chi and Guo [56] and based on the methods of Connell and Shafer [57], the development process was divided into seven stages: rapid planning, rapid analysis, rapid development, demonstration and evaluation, prototype revision, requirement approval, and finalization of specification requirements. Additionally, Eliason [58] outlined a four-step prototype development process: requirement analysis, system design, system implementation, and system evaluation.

In this study, the following four steps are followed to build and assess the prototype of the proposed interactive exercise and leisure system.

- (1) Requirement analysis: Through a literature review, the issues that the elderly currently face in leisure activities are identified. AI technology and the YOLO algorithm are explored, and their applications to leisure activities for the elderly are summarized, culminating in the formulation of the design principles for the system.

- (2) Prototype design: Based on the derived design concepts, the desired interactive exercise and leisure system for the elderly is designed, and an interactive flowchart is created to illustrate the system’s interaction process.

- (3) Prototype development: The motion-sensing technique and the YOLO algorithm are integrated for use in the proposed system for the elderly.

- (4) Prototype evaluation: A user experience activity is held to evaluate the system’s effectiveness. Public demonstrations, questionnaire surveys, and user interviews are carried out to analyze the users’ feedback and assess the system’s performance.

2.2. Questionnaire Survey

The questionnaire survey method is a research approach that collects participants’ opinions through questionnaires, enabling the direct gathering of large amounts of information. Ye and Ye [59] recommended that when designing a questionnaire, specific steps, formats, principles, approaches, and methods should be followed to ensure objectivity, clarity, adequacy, logical consistency, rationality, adaptability, and specificity. Based on statistical analysis results, the effectiveness of the system can be assessed. The implementation steps conducted in this study for the questionnaire survey are as follows.

- (1) Survey time: After a participant experiences the interactive exercise and leisure system, they are invited to complete a questionnaire, which takes approximately 5 min.

- (2) Survey participants: Questionnaires are distributed anonymously to all elderly participants. Each participant’s experience session lasts about 10 min, followed by 5 min for completing the questionnaire.

- (3) Survey implementation steps: (i) Explain the questionnaire items to the participant. (ii) Distribute a questionnaire to each participant. (iii) Request the participant to fill out the questionnaire.

The questionnaire for this study is designed using the technology acceptance model (TAM) [60] and the strategic experiential modules (SEMs) [61], with modifications based on the specific characteristics of this research. Two scales are developed: system usability and user experience.

After participants interact with the proposed interactive system, quantitative questionnaires are distributed and collected, and the valid samples are analyzed. Additionally, a random sample of elderly participants is selected for semi-structured interviews, and the verbatim transcripts are analyzed for qualitative validation. The elderly participants’ behavior and experiences with AI in leisure activities are assessed using the TAM, allowing the impact of AI technology on the elderly to be evaluated. The SEMs are also employed to assess users’ experiences.

The two scales, system usability and user experience, used in the questionnaire survey are explained in more detail in the following.

- (1) System usability evaluation: The purpose of this evaluation is to assess the elderly’s acceptance of technology integration in leisure activities, as well as the usability of the system proposed in this study across various aspects. The questionnaire design is primarily based on the TAM [60], with modifications made to suit the specific characteristics of this study. The questionnaire consists of 12 questions, labeled T1 to T12, as shown in Table 4.

Table 4. Questions designed for evaluation of system usability in the questionnaire survey.


- (2) User experience evaluation: The purpose of this evaluation is to assess the users’ experience and feelings after interacting with the system proposed in this study. The questionnaire design is primarily based on the SEMs [61], with modifications made to suit the specific characteristics of this study. The questionnaire consists of 20 questions, labeled S1 to S20, as shown in Table 5.

Table 5. Questions designed for evaluation of user experience in the questionnaire survey.


The Likert five-point scale [62] was adopted for the questionnaire design, with options ranging from “strongly disagree”, “disagree”, “neutral”, “agree”, to “strongly agree”, assigned the scores of 1, 2, 3, 4, and 5, respectively.
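The scoring rule above can be expressed as a small sketch that converts textual responses to the scores 1 to 5 and averages them. The function names and the sample responses are hypothetical illustrations, not data from this study, and the statistical analysis itself is carried out with SPSS and AMOS rather than with this code.

```python
# Mapping of the five Likert options to the scores stated in the text.
LIKERT_SCORES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def score_responses(responses):
    """Convert a list of Likert option labels to their numeric scores."""
    return [LIKERT_SCORES[r.strip().lower()] for r in responses]


def mean_score(responses):
    """Average score of one questionnaire item over all respondents."""
    scores = score_responses(responses)
    return sum(scores) / len(scores)


# Hypothetical answers of four respondents to a single item.
answers = ["agree", "strongly agree", "neutral", "agree"]
print(score_responses(answers))  # [4, 5, 3, 4]
print(mean_score(answers))       # 4.0
```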

2.3. Interviews with Participants

The interview survey method involves direct communication between the researcher and interviewee, allowing for verbal questions and responses that capture objective facts, emotions, attitudes, and value judgments. This approach provides flexibility for interviewees to elaborate on their opinions. In this study, a semi-structured interview approach is used, as described by Flick [63], where topics are introduced with open-ended questions, allowing interviewees to respond based on their knowledge. Lin et al. [64] noted that semi-structured interviews offer a flexible format that enables participants to express their thoughts authentically. This method is employed to explore elderly participants’ experiences with the proposed system.

3. Results

3.1. System Design

3.1.1. The Design Concept

As mentioned earlier, the proportion of elderly individuals has been steadily increasing in recent years, bringing greater attention to issues related to their daily lives and leisure activities. In response, more communities are organizing group activities for the elderly, encouraging them to leave their homes and engage with others. These initiatives help foster social skills while promoting physical activity and cognitive engagement.

Many current studies focus on applying AI to elderly care and healthcare, with less attention given to exercise and leisure. Therefore, this study aims to incorporate AI technology to develop an interactive exercise and leisure system tailored for elderly individuals.

The system, called “Grandpa and Grandma Move”, lets elderly users exercise their hand muscles in response to the system’s content and activities. The goal is to stimulate their visual and auditory perception and build hand strength as they follow on-screen instructions, so that the leisure experience also trains the brain. The system is further intended to improve elderly users’ understanding and experience of technology, and to provide a sense of achievement and enjoyment that enhances physical function while fostering a positive, active attitude toward aging.

In more detail, the proposed system integrates two AI techniques: interactive motion sensing with Kinect hardware and real-time object recognition using the YOLO algorithm. The Kinect is equipped with a dual-lens camera that captures images and applies computer vision to identify the user’s skeleton and, from it, the user’s body actions. The YOLO algorithm, trained by deep learning on an image database of balls and tennis rackets seen from various perspectives that was constructed in this study, detects the presence of the ball and the tennis racket.

Two activities are featured to enhance human–machine interaction: an elastic ball exercise and a tennis exercise. These activities are designed to provide leisure and entertainment for older adults.

The proposed system, which primarily includes a computer host, a TV screen, a webcam, and a Kinect motion-sensing camera, is designed as follows.

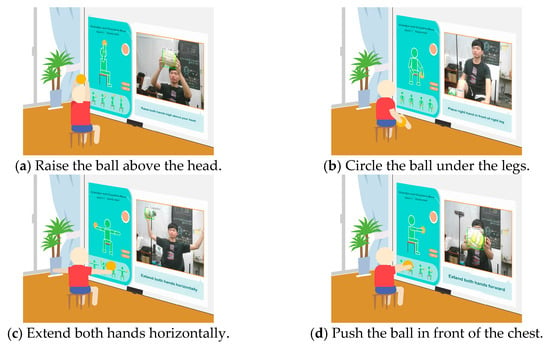

- (1)

- Elastic Ball Exercise Experience: This exercise, based on the elderly person’s past experiences and entertainment activities, is designed to engage their visual and auditory perception as well as hand strength. The participant holds an elastic ball and follows the system’s instructions to perform four actions. The YOLO algorithm detects whether the ball is in the participant’s hand, and if it moves out of range, a prompt appears to ask them to pick it up again for an optimal experience. The process is illustrated in Figure 4.

Figure 4. Illustrations of elastic ball exercise game played on the proposed system.

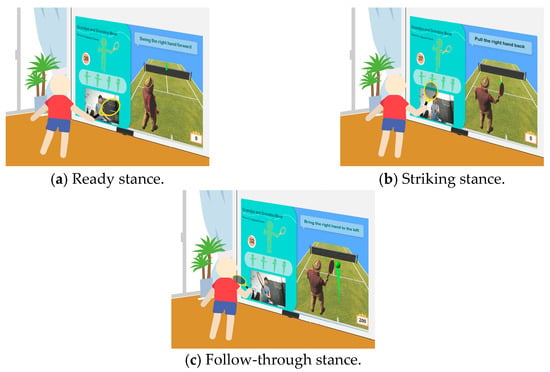

- (2)

- Tennis Exercise Experience: Based on the elderly person’s background, this exercise uses hand and leg muscles to operate the device, aiming to activate visual and auditory perception, as well as leg strength. The participant holds a tennis racket and follows system instructions to swing and hit the ball. The YOLO algorithm detects in real time whether the racket is in the participant’s hand and tracks its orientation. The racket must face the camera; if it is sideways or out of range, a prompt appears on the TV screen to ask the user to reposition the racket for the best experience. The tennis exercise process is shown in Figure 5.

Figure 5. Illustrations of tennis exercise game played on the proposed system.

The two exercises differ in posture: the elastic ball exercise is performed while sitting, whereas the tennis exercise is performed while standing. Together, they offer a more comprehensive physical training experience for the elderly participant.

3.1.2. The Interactive Process

AI technology is integrated into the proposed system to create an interactive exercise and leisure platform tailored for the elderly. The interaction proceeds in four main steps. It begins with a standby screen, where the user starts the game by adopting a T-pose; the user then selects either the elastic ball exercise or the tennis exercise and carries out the corresponding activities. Kinect-based motion-sensing interaction identifies the user’s skeleton, and the YOLO deep learning algorithm detects the presence of the ball and the tennis racket. A storyboard illustrating the interactive process scenarios is presented in Table 6.
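The T-pose check that starts the game can be illustrated with a simple geometric test on tracked joints. The sketch below is only one possible formulation: the joint names, the coordinate convention (metres, with y pointing up), and both thresholds are assumptions made for illustration and do not describe the system's actual rules.

```python
def is_t_pose(joints, height_tol=0.15, min_reach=0.40):
    """Return True when both arms are held out roughly horizontally.

    joints maps a joint name to an (x, y, z) position in metres, with y
    pointing up. Joint names and thresholds are illustrative assumptions.
    """
    for side in ("left", "right"):
        shoulder = joints[f"shoulder_{side}"]
        elbow = joints[f"elbow_{side}"]
        wrist = joints[f"wrist_{side}"]
        # Elbow and wrist must stay close to shoulder height.
        if abs(elbow[1] - shoulder[1]) > height_tol:
            return False
        if abs(wrist[1] - shoulder[1]) > height_tol:
            return False
        # The wrist must be far enough out to the side of the shoulder.
        if abs(wrist[0] - shoulder[0]) < min_reach:
            return False
    return True


# Hypothetical skeleton frames: arms stretched out, then arms hanging down.
t_pose = {
    "shoulder_left": (-0.20, 1.40, 2.0),
    "elbow_left": (-0.48, 1.41, 2.0),
    "wrist_left": (-0.74, 1.39, 2.0),
    "shoulder_right": (0.20, 1.40, 2.0),
    "elbow_right": (0.48, 1.40, 2.0),
    "wrist_right": (0.74, 1.42, 2.0),
}
arms_down = dict(t_pose, wrist_left=(-0.25, 0.85, 2.0), wrist_right=(0.25, 0.85, 2.0))
```

In practice, such a test would usually be required to hold for several consecutive frames before the game starts, so that a passing gesture does not trigger it by accident.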

Table 6.

Storyboard for interactive scenarios.

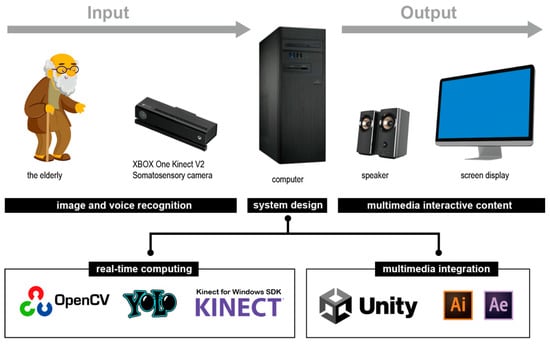

3.1.3. System Architecture

The “Grandpa and Grandma Move” interactive system is developed using Unity, integrating the OpenCV computer vision library with Kinect infrared motion sensing, which tracks body movements and depth for motion control. Two prop-detection models trained with the YOLO deep learning algorithm are incorporated into the system to identify which experience the user has selected. A Kinect sensor captures the user’s skeletal data, and the system compares these movements with pre-set models stored in a backend database to verify task completion. The interface is displayed on a TV screen.

In terms of visual design, Illustrator is used to create the main visuals and related elements, which are then imported into After Effects for animation production. The completed animations are placed in Unity for further adjustments and content creation. For 3D content, 3ds Max is used to create the models and adjust character animations, after which the finalized models are imported into Unity for further animation tweaks and coding. This process creates an engaging and entertaining leisure experience for users. The system architecture of the “Grandpa and Grandma Move” is shown in Figure 6.

Figure 6.

Illustration of the architecture of the proposed system.

3.1.4. Main Technologies

The “Grandpa and Grandma Move” interactive system consists of two main components: the motion-sensing interactive interface and the animation content. The motion-sensing interface relies on Kinect’s skeletal detection function, using the user’s skeletal poses to interact with the system. The animation content is generated by Unity, which processes signals from Kinect motion detection and the YOLO algorithm in OpenCV to recognize user actions and trigger corresponding animations. The development environment and hardware/software equipment are outlined in Table 7, with the implemented techniques described in the following sections.

Table 7.

The environment and hardware/software equipment of the proposed interactive system.

- (A)

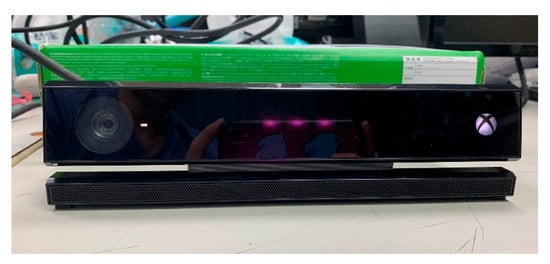

- Motion-based Interaction Detection

The “Grandpa and Grandma Move” interactive system utilizes the Kinect SDK along with the second-generation Kinect motion-sensing camera (shown in Figure 7) to implement motion-sensing operations through computer vision techniques. The Kinect camera includes a depth sensor, an RGB camera, and a microphone array with four units. The depth sensor, which combines an infrared emitter and an infrared camera, uses the time-of-flight (TOF) technique to measure distance based on the time difference between emitted and reflected infrared light. This allows the system to calculate the distance from the object to the depth camera.
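The time-of-flight relation described above can be sketched numerically: the light covers the sensor-to-object distance twice, so the distance is half the round-trip path. This is an idealized form of the relation; how the sensor obtains the time difference internally is not covered here, and the function name and sample value are illustrative assumptions.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s):
    """Distance to the reflecting surface from the round-trip time of light."""
    # The light travels to the object and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A round trip of 20 nanoseconds corresponds to roughly 3 metres.
distance = tof_distance_m(20e-9)
```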

Figure 7.

The second-generation Kinect motion-sensing camera used in the proposed interactive exercise and leisure system for the elderly.

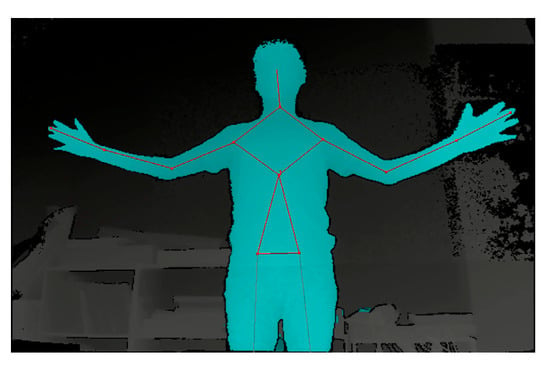

The Kinect SDK also supports human tracking and skeletal recognition, enabling the motion-sensing interaction algorithms of the “Grandpa and Grandma Move” system to focus on two main tasks: body skeleton recognition and tracking, as well as limb movement recognition. Details of these functions are described in the following sections.

- (1)

- Body skeleton recognition and tracking

The FAAST (Flexible Action and Articulated Skeleton Toolkit) and Kinect are used to track the user’s body skeleton and obtain joint coordinates, focusing on the upper body and thigh movements. An illustration of the tracked joint points is shown in Figure 8.

Figure 8.

The joint points of a human body tracked by the FAAST.

- (2)

- Limb movement recognition

Using the joint positions captured by FAAST, Kinect’s limb recognition function is used to identify the user’s movements and calculate hand bending angles. This algorithm module, shown in Table 8, includes four sets of limb movements, each triggering a corresponding event when specific conditions are met. Additionally, four continuous movements and one tennis swing movement have been designed for elderly users to experience on the “Grandpa and Grandma Move” system, with body recognition descriptions for these five movements provided in Table 9.
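A bending angle of the kind mentioned above can be obtained from three tracked joint positions as the angle between the two limb segments that meet at the middle joint. The following is a minimal sketch under that assumption; the function names, the sample coordinates, and the 100-degree threshold are hypothetical and are not taken from Table 8 or Table 9.

```python
import math


def joint_angle_deg(a, b, c):
    """Angle at joint b, in degrees, formed by the 3D points a-b-c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(ba[i] * bc[i] for i in range(3))
    norm = math.sqrt(sum(v * v for v in ba)) * math.sqrt(sum(v * v for v in bc))
    if norm == 0:
        raise ValueError("coincident joints: angle is undefined")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def is_arm_bent(shoulder, elbow, wrist, threshold_deg=100.0):
    """True when the elbow angle falls below an assumed threshold."""
    return joint_angle_deg(shoulder, elbow, wrist) < threshold_deg


# Hypothetical joint positions in metres: a straight arm and a right-angle bend.
straight = joint_angle_deg((0, 0, 0), (0.3, 0, 0), (0.6, 0, 0))
right_angle = joint_angle_deg((0, 0, 0), (0.3, 0, 0), (0.3, 0.3, 0))
```

A movement event could then be triggered when the angle crosses the threshold, which keeps the rule independent of the user's distance from the camera.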

Table 8.

Body movement recognition algorithm module for the proposed “Grandpa and Grandma Move” interactive system.

Table 9.

Limb movement event for the proposed “Grandpa and Grandma Move” interactive system.

The proposed system uses limb movement recognition to control content flow and interactions in both the elastic ball and tennis experiences. By tracking the relative positions of body joint points and the bending angles of hands and legs, the system enables motion sensing to operate the interactive exercise and leisure system. The process is divided into five parts, including two scenarios—the elastic ball and tennis experiences—each implemented through an algorithm as described in Table 10.

Table 10.

Motion-sensing interactive control algorithm for the proposed “Grandpa and Grandma Move” interactive system.

- (B)

- Computer Vision and Object Detection

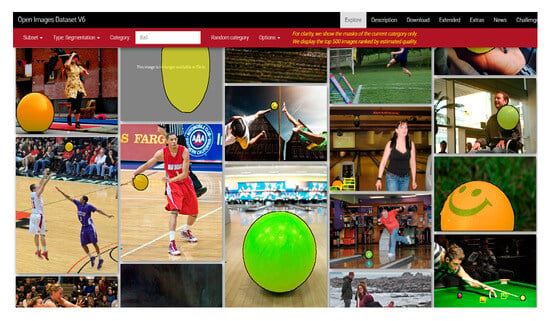

The proposed interactive system integrates AI and multimedia techniques, using OpenCV for object detection. OpenCV’s deep neural network (DNN) module loads models trained in the major deep learning frameworks and runs inference with them, and from version 3.4.3 onward, it supports the YOLO algorithm. YOLO’s advantage lies in predicting bounding boxes and classes in a single pass of a convolutional neural network (CNN) over the image, which makes detection faster than with multi-stage detectors. In this study, YOLOv3 is used for real-time object detection, with the model trained on the Google Colab platform, which provides free access to high-performance GPUs. The object detection process involves setting up training images, model training, and integrating the detection process, each step of which is described in detail in the following sections.

- (1)

- Setting up training images

In this study, 1000 pre-labeled training images were collected from the Open Images Dataset using the OIDv4_ToolKit tool. These images were then converted into the format required for the YOLO algorithm. Some sample images are shown in Figure 9.
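The format conversion can be illustrated as follows. YOLO label files store one object per line as a class index followed by the box centre, width, and height, all normalized by the image size. The sketch assumes that the source labels give pixel-coordinate corners (left, top, right, bottom); the function name, class index, and sample values are illustrative.

```python
def to_yolo_line(class_id, left, top, right, bottom, img_w, img_h):
    """Convert a pixel-corner box into a normalized YOLO label line."""
    x_center = (left + right) / 2.0 / img_w
    y_center = (top + bottom) / 2.0 / img_h
    width = (right - left) / img_w
    height = (bottom - top) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical box for class 0 (for example, the ball) in a 640x480 image.
line = to_yolo_line(0, 100, 50, 300, 250, 640, 480)
```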

Figure 9.

Sample training images obtained from the Open Images Dataset for the YOLO algorithm.

- (2)

- Model training

To enable real-time detection, the tiny version of YOLOv3 was used, trading some recognition accuracy for a higher frame rate. Google Colab was used for model configuration and training. The labeled images were uploaded to Google Drive, and file paths were extracted. Pre-trained YOLO model weights were downloaded for reference, and after 8000 iterations, the loss curve stabilized, indicating successful convergence.

- (3)

- Integrating the object detection process

The OpenCV package and trained model files were imported into Unity, where the parameters and weight files were configured for object detection. Using real-time video capture from the camera, the system identifies the elastic ball and highlights it. The model’s confidence in the predicted bounding box is around 93%, indicating a 93% likelihood that the object within the box is a ball. An example is shown in Figure 10.

Figure 10.

An example of object detection results.

Object detection, implemented through computer vision in this study, enables interactions in both the elastic ball and tennis experiences. By applying the trained YOLO model to the real-time video captured by the camera, the system detects the presence of the target object. The object detection process consists of five steps, each corresponding to one of three tasks: (1) identifying the object (elastic ball or tennis racket), (2) checking if the elastic ball is in the current frame, and (3) checking if the tennis racket is in the current frame, as outlined by the algorithms in Table 11.
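The per-frame presence check, together with the prompt shown when the prop leaves the camera's view, can be sketched as a small state tracker. This is a hedged illustration rather than the algorithm of Table 11: the class name, the confidence threshold, and the number of tolerated missed frames are assumptions, and each detection is represented simply as a (label, confidence) pair.

```python
class PropPresenceMonitor:
    """Tracks whether a required prop stays visible across video frames."""

    def __init__(self, target_label, min_confidence=0.5, max_missed_frames=15):
        self.target_label = target_label
        self.min_confidence = min_confidence
        self.max_missed_frames = max_missed_frames
        self.missed_frames = 0

    def update(self, detections):
        """Process one frame; return True if the reposition prompt should show.

        detections is a list of (label, confidence) pairs for this frame.
        """
        seen = any(
            label == self.target_label and conf >= self.min_confidence
            for label, conf in detections
        )
        # Reset the counter on a sighting, otherwise count one more miss.
        self.missed_frames = 0 if seen else self.missed_frames + 1
        return self.missed_frames >= self.max_missed_frames


# Hypothetical detections for five consecutive frames.
monitor = PropPresenceMonitor("ball", max_missed_frames=3)
frames = [[("ball", 0.93)], [], [("racket", 0.80)], [("ball", 0.30)], [("ball", 0.91)]]
prompts = [monitor.update(frame) for frame in frames]
```

Tolerating a few missed frames before prompting avoids flickering messages when a single frame is misdetected, which matters for users who may move slowly.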

Table 11.

Object detection algorithms for the proposed interactive system.

3.2. Experimental Design

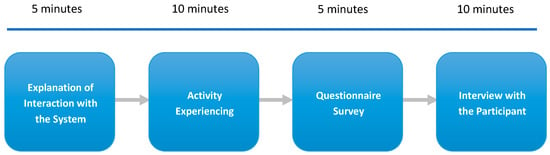

A prototype of the proposed interactive exercise and leisure system for the elderly was developed in this study. After a 5 min briefing on system usage, each participant engaged with the system for 10 min. They then completed a 5 min questionnaire and participated in a 10 min interview. This process allowed for validating the system’s usability and assessing the users’ experiences. The experimental procedure is outlined in Figure 11. The study was conducted across nine sites in Taiwan, specifically in Changhua County and Chiayi City.

Figure 11.

The experimental process for an elderly participant to use the proposed system.

3.3. Analysis of Questionnaire Survey Results

After using the interactive system, participants filled out a questionnaire. A total of 128 questionnaires were collected, with four invalid due to incomplete responses, leaving 124 valid. The survey gathered participants’ basic information and assessed two key indicators: system usability and user experience.

3.3.1. Sample Structure Analysis

The first part of the questionnaire collected basic participant information, including gender, age, and prior experience with similar technologies (Table 12). The data showed that 20.2% of participants were male and 79.8% were female. The two largest age groups, 55–74 years and 75–84 years, each accounted for 37.9% of the sample. Additionally, 78.2% had no prior experience with interactive systems, indicating limited exposure to similar technologies among the elderly.

Table 12.

Statistical summary of the participants’ basic information.

3.3.2. Analysis of Reliability and Validity of Questionnaire Survey Results

To evaluate the “system usability” and “user experience” of the interactive system, IBM SPSS 26 and AMOS 23 software were used to perform reliability and validity analyses on the collected questionnaire data. Scores for the two scales were assigned on a 1–5 Likert scale, with each question corresponding to an item listed in Table 4 and Table 5. The results, shown in Table 13 and Table 14, include the minimum (Min.), maximum (Max.), means, and standard deviations (S.D.) for the data. The process of verifying the reliability and validity of the questionnaire data followed five steps, as described below.

Table 13.

Statistics of the questionnaire data of the scale of “system usability”.

Table 14.

Statistics of the questionnaire data of the scale of “user experience”.

- (A)

- Step 1: Verification of the adequacy of the questionnaire dataset

To verify the adequacy of the collected questionnaire data, the Kaiser–Meyer–Olkin (KMO) test and Bartlett’s test of sphericity [65] were used. A KMO value above 0.50 and a Bartlett’s test significance below 0.05 indicate that the data are suitable for further structural analysis.

The KMO and Bartlett’s test results were calculated using data from Table 13 and Table 14 in SPSS, as shown in Table 15. Both KMO values exceeded 0.50, and Bartlett’s significance was below 0.05, confirming that the data are adequate for further analysis.
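For reference, the overall KMO measure compares the squared correlations between items with their squared partial correlations, where the partial correlations are derived from the inverse of the correlation matrix. The sketch below implements this definition in plain Python on a small hypothetical correlation matrix; it is not the SPSS procedure used in the study and does not reproduce the values in Table 15.

```python
def invert_matrix(matrix):
    """Invert a small square matrix with Gauss-Jordan elimination."""
    n = len(matrix)
    # Augment each row with the matching row of the identity matrix.
    aug = [
        [float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        # Partial pivoting keeps the elimination numerically stable.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure from a correlation matrix."""
    n = len(corr)
    inv = invert_matrix(corr)
    sum_r2 = 0.0
    sum_p2 = 0.0
    for i in range(n):
        for j in range(n):
            if i != j:
                # Partial correlation between items i and j given the rest.
                partial = -inv[i][j] / (inv[i][i] * inv[j][j]) ** 0.5
                sum_r2 += corr[i][j] ** 2
                sum_p2 += partial ** 2
    return sum_r2 / (sum_r2 + sum_p2)


# Hypothetical correlation matrix for three items.
corr = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
value = kmo(corr)
```

For this example, the value lies above the 0.50 criterion stated above.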

- (B)

- Step 2: Finding the latent dimensions of the questions from the collected data

The structural analysis for the questionnaire survey aims to categorize the questions of each scale into meaningful subsets, each representing a latent dimension. Exploratory factor analysis (EFA) using principal component analysis and the varimax method with Kaiser normalization was conducted in SPSS. The results, based on the inputs from Table 13 and Table 14, are presented in Table 16 and Table 17 for the two scales: system usability and user experience.

Table 16.

Rotated component matrix of the first scale, “system usability”.

Table 17.

Rotated component matrix of the second scale, “user experience”.

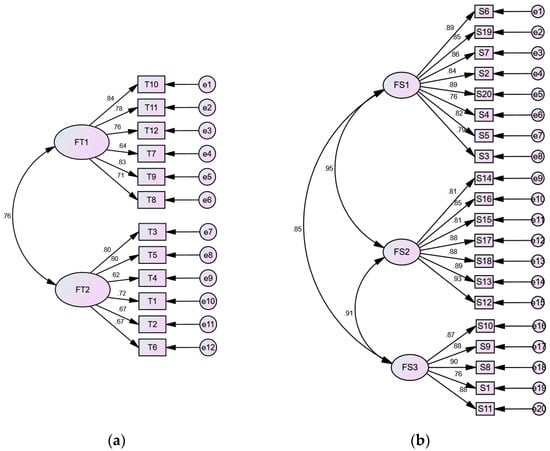

For the first scale of system usability, the variables T1 through T12, representing the twelve questions asked about this scale, are divided into two groups, FT1 = (T10, T11, T12, T7, T9, T8) and FT2 = (T3, T5, T4, T1, T2, T6), aligning with two latent dimensions termed in this study as “perceived ease of use” and “perceived usefulness”, respectively.

Likewise, for the second scale of user experience, the results are divided into three groups, FS1 = (S6, S19, S7, S2, S20, S4, S5, S3), FS2 = (S14, S16, S15, S17, S18, S13, S12), and FS3 = (S10, S9, S8, S1, S11), corresponding to three latent dimensions termed “sensation and emotion”, “action and connection”, and “cognition and technology awareness”, respectively. These results are comprehensively summarized in Table 18.
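The grouping step can be illustrated as follows, assuming that each question is assigned to the component on which it has the largest absolute rotated loading and that questions within a group are listed in descending order of that loading. The loadings below are hypothetical and are not the values in Table 16 or Table 17.

```python
def group_items_by_loading(loadings):
    """Assign each item to the component with its largest absolute loading.

    loadings maps an item name to a list of rotated loadings, one per
    component. Returns a dict: component index -> items sorted by
    descending loading on that component.
    """
    groups = {}
    for item, values in loadings.items():
        best = max(range(len(values)), key=lambda k: abs(values[k]))
        groups.setdefault(best, []).append((abs(values[best]), item))
    return {
        comp: [item for _, item in sorted(members, reverse=True)]
        for comp, members in sorted(groups.items())
    }


# Hypothetical rotated loadings for four items on two components.
example = {
    "T10": [0.85, 0.21],
    "T3": [0.18, 0.82],
    "T11": [0.80, 0.30],
    "T5": [0.25, 0.78],
}
groups = group_items_by_loading(example)
```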

Table 18.

Collection of questions of the five latent dimensions of the two scales.

- (C)

- Step 3: Verifying the reliability of the collected questionnaire data

Reliability refers to the consistency of a dataset across repetitions [66]. In this study, reliability was assessed using the Cronbach’s α coefficient [67]. A Cronbach’s α coefficient exceeding 0.35 indicates that the data are reliable, and a value surpassing 0.70 signifies high reliability [68]. Using the data from Table 13 and Table 14, the Cronbach’s α coefficients for the two scales and five latent dimensions are provided in Table 19. All coefficients exceed 0.70, confirming that the collected datasets are reliable for further analysis.
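Cronbach’s α for a set of k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the respondents’ total scores. A minimal sketch of this computation is given below; the scores are hypothetical and are not the survey data summarized in Table 13 and Table 14.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list of respondent scores."""
    k = len(item_scores)
    if k < 2:
        raise ValueError("at least two items are required")
    # Sum of the sample variances of the individual items.
    item_var_sum = sum(variance(item) for item in item_scores)
    # Variance of each respondent's total score across all items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    total_var = variance(totals)
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical scores of five respondents on three items.
items = [
    [4, 5, 3, 5, 4],
    [4, 5, 3, 4, 4],
    [5, 5, 3, 5, 4],
]
alpha = cronbach_alpha(items)
```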

Table 19.

The questions of the two scales and the five latent dimensions, as well as corresponding Cronbach’s α coefficients.

- (D)

- Step 4: Verification of applicability of the structural model established with the dimensions

Before validating the questionnaire data, the appropriateness of the structural models based on the latent dimensions has to be verified. This was achieved using confirmatory factor analysis (CFA) with AMOS software, resulting in two structural model graphs, one for each scale, shown in Figure 12. The CFA also generated fit indices for the “system usability” and “user experience” scales, as presented in Table 20. The fit indices χ2/df, CFI, and RMSEA for each scale are within acceptable ranges, “1 to 5”, “greater than 0.9”, and “between 0.05 and 0.08”, respectively, indicating a good fit between the structural model and the collected data, as suggested by Hu and Bentler [69].
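The relation between these indices can be sketched with the commonly used formula RMSEA = sqrt(max(χ2 − df, 0) / (df · (N − 1))). Some software uses N instead of N − 1, so this is an assumption rather than a statement about AMOS. The χ2, df, and CFI inputs below are hypothetical and are not the values in Table 20; only the sample size of 124 and the acceptance ranges come from the text.

```python
import math


def rmsea(chi2, df, n):
    """Root mean square error of approximation for a sample of size n."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def fit_summary(chi2, df, cfi, n):
    """Compute chi2/df and RMSEA and compare them with the stated ranges."""
    ratio = chi2 / df
    err = rmsea(chi2, df, n)
    return {
        "chi2/df": ratio,
        "CFI": cfi,
        "RMSEA": err,
        # Acceptance ranges as stated in the text above.
        "chi2/df ok": 1.0 <= ratio <= 5.0,
        "CFI ok": cfi > 0.9,
        "RMSEA ok": 0.05 <= err <= 0.08,
    }


# Hypothetical model results for the 124 valid questionnaires.
summary = fit_summary(chi2=90.0, df=53, cfi=0.95, n=124)
```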

Figure 12.

Confirmatory factor analysis (CFA) results using AMOS: (a,b) are the structural models of scales “system usability” and “user experience” generated through CFA, respectively.

Table 20.

Fitness indexes of the structural models of the two indicators of “system usability” and “user experience” generated through CFA.

- (E)

- Step 5: Verification of the validity of the collected questionnaire data

After confirming that the model structures of the two scales fit the questionnaire data, the validity of the data was analyzed. In Figure 12, all factor loading values (standardized regression weights) for the two scales, along the paths from FT1 and FT2 to questions T1–T12 and from FS1, FS2, and FS3 to questions S1–S20, exceed the threshold of 0.5, indicating good construct validity. This is further supported by the construct validity values for all latent dimensions, computed via CFA and detailed in Table 21, where each value exceeds the 0.6 threshold. These steps collectively verify the reliability and validity of the questionnaire data, allowing for further analysis of each latent dimension.

Table 21.

The construct validity values of the latent dimensions of the two scales “system usability” and “user experience” generated through CFA.

3.3.3. Analysis of Questionnaire Data About the Scale of System Usability

The usability scale in this study was designed to assess participants’ perceptions of the AI-based system’s usability and acceptance, based on the TAM and its dimensions of “perceived ease of use” and “perceived usefulness”. A brief summary of the results is provided below.

- (A)

- Data analysis for the latent dimension of “perceived ease of use”

The question items T10, T11, T12, T7, T9, and T8 focus on “perceived ease of use”, assessing the system’s simplicity and ease of operation. These items examine participants’ perceptions of factors like operational simplicity, interface clarity, ease of learning, and usage fluency. The analysis of this dimension is presented in Table 22, with key findings summarized below.

Table 22.

An analysis of responses to questions on the latent dimension of “perceived ease of use”.

- (1)

- The average scores for T10, T11, and T12 range from 4.66 to 4.71 with 100% agreement, indicating the system is easy to operate and interactive.

- (2)

- T9 has an average score of 4.71 and a 99.2% agreement rate, suggesting the interface design meets user needs, though further optimization is recommended.

- (3)

- T7 and T8 received 98.4% agreement, with some users requesting additional learning support, suggesting the inclusion of tutorials or more application scenarios.

The survey results show users have highly positive views of the system’s convenience, interactivity, and interface, with minor improvement opportunities (e.g., T7 and T8) suggesting the need for additional support for new users.
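The two statistics reported for each item, the average score and the agreement rate, can be computed as sketched below. The sketch assumes that the agreement rate is the share of responses scored 4 (“agree”) or 5 (“strongly agree”); this reading is consistent with the reported figures, since 122 of 124 valid responses gives 98.4%. The response distribution used here is hypothetical.

```python
from statistics import mean


def summarize_item(scores, agree_from=4):
    """Return (average score, agreement rate in percent) for one item."""
    agree = sum(1 for s in scores if s >= agree_from)
    return round(mean(scores), 2), round(100.0 * agree / len(scores), 1)


# Hypothetical distribution of 124 responses: 90 fives, 32 fours, 2 threes.
scores = [5] * 90 + [4] * 32 + [3] * 2
avg, agreement = summarize_item(scores)
```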

- (B)

- Data analysis for the latent dimension of “perceived usefulness”

The question items T3, T5, T4, T1, T2, and T6 focus on the system’s contributions to physical health, including the coordination of hands, feet, and eyes, as well as its perceived usefulness in promoting health and exercise. These items assess aspects such as coordination improvement, health benefits, relaxation, and willingness to continue using the system. The analysis of the latent dimension “perceived usefulness” is presented in Table 23, with the following key points summarized.

Table 23.

An analysis of responses to questions on the latent dimension of “perceived usefulness”.

- (1)

- T3, T4, and T5 scored above 4.70, with T5 scoring the highest at 4.80, indicating that participants perceived a clear improvement in hand–foot movement and coordination.

- (2)

- T1 and T6 received scores of 4.75 and 4.76, with a 99.2% agreement rate, showing strong recognition of the system’s health benefits and acceptance of continued use.

- (3)

- T2 received a 100% agreement rate, reflecting unanimous satisfaction with the system’s ability to make recreational exercise easier and more enjoyable.

- (4)

- T4 had a slightly lower agreement rate of 97.6%, suggesting that enhancing foot movement and adding health data tracking could improve the user experience.

The results show that users perceive the system as highly effective for enhancing exercise, providing health benefits, and offering a relaxing experience, with a strong willingness to continue using it. Further improvements in foot movement and health features are recommended.

3.3.4. Analysis of Questionnaire Data About the Scale of User Experience

The user experience scale in this study aims to understand participants’ experiences after interacting with the AI-based system. The evaluation scale was designed with reference to Schmitt’s experiential strategy module [61]. It includes three latent dimensions: “sensation and emotion”, “action and connection”, and “cognition and technology awareness”. A brief summary of the analysis of these three latent dimensions is as follows.

- (A)

- Data analysis for the latent dimension of “sensation and emotion”

The question items S6, S19, S7, S2, S20, S4, S5, and S3 focus on participants’ visual, auditory, and emotional responses, such as vitality, affinity, and pleasure. These are classified as “sensation and emotion” experiences and align with the “sense” and “feel” modules in SEMs theory, which emphasize emotional responses to sensory stimuli. The analysis of this latent dimension is presented in Table 24, which reveals the following points.

Table 24.

An analysis of responses to questions on the latent dimension of “sensation and emotion”.

- (1)

- The average scores for all items of this latent dimension ranged from 4.68 to 4.84, with over 98% of participants agreeing, indicating positive evaluations of visual, auditory, and emotional responses.

- (2)

- S19 received the highest score of 4.84, reflecting the participants’ strong agreement on the system’s entertainment value and interactive appeal, while S20 scored 4.79, indicating the system’s success in motivating the participants to explore technology used in exercise and leisure activities.

- (3)

- S7 and S3 scored 4.73 and 4.72, respectively, confirming the key role of audiovisual feedback in sustaining the engagement and enhancing participation of the elderly users.

The results demonstrate that the system excels in offering sensory stimulation and emotional experiences, boosting satisfaction and engagement, and achieving the goals of bringing the “sensation and emotion” experience to the participants.

- (B)

- Data analysis for the latent dimension of “action and connection”

Question items S14, S16, S15, S17, S18, S13, and S12 focus on participants’ social behaviors (e.g., recommending and sharing) and their intention to continue using the system (e.g., re-engagement and increased motivation for leisure and entertainment). These can be classified as “action and connection” experiences, aligning with the “action experience” and “relational experience” modules in SEMs theory, which emphasize user behavior and societal connections. The analysis of the “action and connection” dimension is shown in Table 25.

Table 25.

An analysis of responses to questions on the latent dimension of “action and connection”.

- (1)

- S14 and S15, both with an average score of 4.80 and a 100% agreement rate, indicate strong social propagation effects and participants’ strong intentions to recommend and share the system.

- (2)

- S16, with an average score of 4.81 and a 100% agreement rate, reflects participants’ very high willingness to reuse the system and their clear intention to continue using it.

- (3)

- S17, with an average score of 4.77, and S18, with an average score of 4.83, were highly rated, demonstrating that the system effectively motivates participants to engage in more beneficial activities, enhancing their motivation for leisure, entertainment, and health.

- (4)

- S12, with an average score of 4.77 and a 98.4% agreement rate, shows that participants recognize the system’s role in enhancing their perception of leisure entertainment and its value.

The questionnaire results reveal strong agreement with the “action and connection” experience, indicating that the system promotes social behaviors, enhances motivation, and increases the perceived value of leisure and entertainment, successfully meeting the goals of the module design.

- (C)

- Data analysis for the latent dimension of “cognition and technology awareness”

The items S10, S9, S8, S1, and S11 focus on participants’ understanding of technology, changes in attitudes, and perceptions of the interactive system after the experience. These can be categorized as “cognition and technology awareness” experiences. Additionally, they align with the “thinking experience” module in SEMs theory, which emphasizes encouraging users to think and inspiring their cognition. The analysis of the latent dimension “cognition and technology awareness” is presented in Table 26. Based on this table, the following points are summarized.

Table 26.

An analysis of responses to questions on the latent dimension “cognition and technology awareness”.

- (1)

- S10, with an average score of 4.70, and S11, with an average score of 4.71, received high ratings and over 96% agreement, indicating that the system effectively enhances participants’ understanding of and attitudes toward technology, improving their awareness.

- (2)

- S9, with an average score of 4.71, shows that participants developed a higher acceptance of and willingness to explore technology, highlighting the system’s potential to inspire engagement with new technologies.

- (3)

- S8 received the highest average score of 4.81, with a 99.2% agreement rate, reflecting strong recognition of the tech–entertainment combination. Additionally, S1 scored 4.72, suggesting the system’s high appeal and entertainment value based on participants’ perceptions.

The questionnaire results show that participants highly agree with all aspects of the “cognition and technology awareness” experience. The system not only successfully enhances technological awareness and changes attitudes but also excels in interactivity and entertainment, achieving the anticipated goals of the system design.

3.4. Analysis of Results of Interviews with Participants

After the interactive system experience, 10 elderly participants were invited for interviews, which were conducted using a semi-structured format. The interview content was divided into three areas: (1) system interface operation, (2) experience perception, and (3) views on the integration of AI into leisure activities for the elderly. The first area focused on participants’ opinions about the system’s interface and how smoothly the learning process went. The second area explored the participants’ feelings and experiences with the overall system and its content. The third area discussed participants’ thoughts on the introduction of AI into leisure activities for elderly people.

3.4.1. Record of User Interviews

Table 27 presents the content and organization of the user interview results, listed in order according to the areas discussed. The invited interviewees are labeled P1~P10.

Table 27.

The record of the user interviews.

3.4.2. Summary of Interview Results

Based on the participant feedback, AI technology in elderly leisure activities is viewed as a positive innovation with a strong appeal and potential in senior entertainment. The participants showed a positive attitude toward the technology and expressed a desire for more similar activities in the future to improve their quality of life and sense of involvement. The following are more detailed findings.

- (1)

- System Interface is easy to understand and operate—Participants found the interface clear and intuitive, with most reporting smooth learning and operation, although some initially faced difficulties.

- (2)

- The interaction schemes are innovative, promoting physical health—The novel, body movement-based interaction effectively trained physical functions while fostering emotional connections, increasing happiness and engagement.

- (3)

- The exhibition and design of the proposed system received high praise—The exhibition layout and digital content were engaging and suitable for elderly users, with friendly staff and enjoyable game content enhancing the experience.

- (4)

- AI Technology enhances interaction and willingness to participate—AI technology made activities more interactive and interesting, sparking interest and openness to new technologies, and encouraging eagerness for future activities.

- (5)

- Future development suggestions are proposed: participants expressed a desire for more frequent activities to benefit their health and entertainment and suggested diversifying content and gameplay to better meet their needs.

In short, the proposed system provides elderly users with an enjoyable, health-promoting experience, receiving highly positive feedback on the interface, interaction design, and their willingness to engage.

4. Discussion and Conclusions

4.1. Discussion

This study has described the interactive exercise and leisure system developed for the elderly, together with its public demonstrations, experimental process, user interviews, questionnaire surveys, and result analysis. Although users suggested improvements to the elderly-focused design and visual interface, overall feedback was mostly positive: with the introduction of AI techniques, the system was perceived as more innovative and entertaining than previous leisure activities.

The reliability and validity of responses to the “system usability” and “user experience” questionnaire items were found to exceed standard thresholds, confirming the trustworthiness of the data. Questionnaire results indicated that the average score for most items was above 4 on a 1-to-5 scale, reflecting positive responses from elderly participants regarding their experience with and acceptance of the AI-powered interactive system.
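As an illustration of the kind of check summarized above, one common reliability statistic for Likert-type questionnaires is Cronbach's alpha. The following is a minimal sketch, assuming responses are stored as one list of 1-to-5 item scores per participant. The function names and any data passed to them are hypothetical, and the sketch does not reproduce the SPSS and AMOS procedures or the specific thresholds used in this study.

```python
from statistics import mean, variance


def cronbach_alpha(responses):
    """Return Cronbach's alpha for a table of questionnaire scores.

    responses: list of rows, one row per participant, each row a list
    of item scores (hypothetical layout, not the study's data format).
    """
    k = len(responses[0])  # number of questionnaire items
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two participants")
    # Sample variance of each item (column) across participants.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each participant's total score.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def item_means(responses):
    """Return the mean score of each item across participants."""
    return [mean(column) for column in zip(*responses)]
```

Calling `cronbach_alpha(rows)` on the score table of one questionnaire dimension gives a single coefficient, and `item_means(rows)` gives the per-item averages that can be compared against the midpoint of the 1-to-5 scale.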

In more detail, the user interviews and questionnaire surveys revealed the following findings:

- (1) The interactive system was found to provide positive emotional experiences, such as happiness and joy, for the elderly.

- (2) The system’s gameplay was considered novel and easy to engage with, making it interesting and attractive to elderly users.

- (3) The elderly expressed a positive attitude toward the integration of AI, indicating good acceptance of AI-based leisure systems.

- (4) The system was believed to enhance physical activity and hand–eye coordination.

- (5) The elderly were able to easily understand the system’s interaction methods, reflecting a positive experience.

- (6) The system’s usability and ease of use averaged above 4.48 on a 1-to-5 scale, showing it is well suited for elderly users.

Furthermore, this study reviewed why the proposed system works satisfactorily for its users. The reasons are listed below.

- (1) Several sports were tested during the system design process, and ultimately, ball manipulation games and racket-based tennis were selected for their ease of play and suitability for elderly users.

- (2) Elderly users prefer ball games over tennis because the simpler, symmetrical shape of the ball is easier for the YOLO algorithm to detect, whereas the racket in tennis adds complexity.

- (3) The use of the real-time, versatile AI algorithm YOLO lowers the difficulty threshold, making it easier for elderly users to progress through the games and thereby increasing their willingness to engage with the system.

- (4) These AI-driven, easy-to-play games, combined with media prompts, enhance elderly users’ interest in continuous gameplay, effectively achieving the system’s goal of improving their physical health.
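The game logic that turns detections into game progress is not detailed here, but the idea of a lowered difficulty threshold can be sketched in a hedged way. The example below assumes an object detector such as YOLO has already produced labeled bounding boxes with confidence scores, and it counts a "hit" when the center of a detected ball falls inside a target zone enlarged by a tolerance margin. The `Box` and `Detection` types, the `ball_hits_target` function, the label `"ball"`, and all parameter values are hypothetical and are not taken from the system described in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in pixel coordinates (hypothetical type)."""
    x1: float
    y1: float
    x2: float
    y2: float

    def center(self):
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)


@dataclass(frozen=True)
class Detection:
    """One detector output: class label, confidence score, bounding box."""
    label: str
    confidence: float
    box: Box


def ball_hits_target(detections, target, min_confidence=0.5, tolerance=0.0):
    """Return True if any confident ball detection is centered in the target.

    tolerance enlarges the target zone on every side, in pixels; a larger
    value makes the game more forgiving (an assumed design, for illustration).
    """
    grown = Box(target.x1 - tolerance, target.y1 - tolerance,
                target.x2 + tolerance, target.y2 + tolerance)
    for det in detections:
        # Skip other object classes and low-confidence detections.
        if det.label != "ball" or det.confidence < min_confidence:
            continue
        cx, cy = det.box.center()
        if grown.x1 <= cx <= grown.x2 and grown.y1 <= cy <= grown.y2:
            return True
    return False
```

In this sketch, raising `tolerance` or lowering `min_confidence` makes a hit easier to register, which is one plausible way a designer could tune difficulty for elderly players.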

4.2. Concluding Remarks

With technological advancements, interactive technology in elderly leisure and entertainment has become more common. Unlike activities for younger people, systems for the elderly focus on addressing age-related physical and mental challenges and improving interactions with technology. Intuitive interfaces stimulate creativity and learning in the elderly. In this study, AI technologies, including motion sensing and computer vision, are used to guide elderly users through simple actions, helping them engage in exercise and leisure activities while connecting with technology.

A literature review of related cases was conducted to explore AI applications in elderly leisure experiences. Design principles derived from this review were then applied to create an AI-based system with motion-sensing and target-detection capabilities. The resulting system, called “Grandpa and Grandma Move”, offers an intuitive platform that enhances the enjoyment of exercise and leisure activities for elderly users.

After prototype development and public demonstrations, questionnaire surveys and user interviews were conducted to evaluate the system’s effectiveness. Statistical analysis using SPSS and AMOS indicated that the system effectively enhances the leisure and entertainment experience of elderly participants, as supported by the three key observations described below.

- (1) AI technology enhances interactive leisure experiences for the elderly: Elderly users were fascinated by the motion-sensing technology of the interactive exercise and leisure system designed in this study, and the user interviews showed that they considered the system novel and enjoyable.

- (2) The proposed interactive system promotes elderly health and well-being: The majority of elderly participants gave positive feedback in the survey and interviews. They found the system’s interface smooth and enjoyable and reported improvements in hand–eye coordination and cognitive abilities, which contribute to a healthy, active lifestyle.

- (3) Aligning the system with familiar experiences boosts acceptance: The system was designed to enhance the physical functions of the elderly while providing entertainment. With familiar elements such as elastic ball and tennis exercises incorporated, participants reported higher acceptance and engagement, making the system more attractive than traditional leisure activities and encouraging continued use.

4.3. Suggestions for Future Research

In this study, an interactive exercise and leisure system suitable for elderly users has been constructed. Owing to time and resource limitations, the system design can still be improved in future research. Some suggestions are listed below.

- (1) The movements required could be simpler and clearer: The motion-sensing system might benefit from prioritizing simple postures and ergonomic design, considering elderly users’ physical abilities. Additional guidance, such as animation and audio, could help explain how to operate the system.

- (2) The difficulty of object recognition may need further consideration: The YOLO algorithm struggles with irregular objects, such as a tennis racket viewed from the side. To improve recognition, users could interact with objects like the racket from a frontal position, and the design could take the algorithm’s limitations into account to enhance interaction diversity.

- (3) Additional visual and auditory feedback could enhance the system: To improve the user experience, more visual and auditory feedback might be added. In addition, introducing multiplayer modes (cooperative or competitive) could further enrich the interaction.

- (4) The system could be expanded to other elderly leisure environments: The system could be extended to other settings for the elderly (e.g., community centers, parks, rehabilitation centers), with adjustments for specific functions, creating a modular system that adapts to different settings.

- (5) Other applications and studies may also be conducted: The proposed system could be adapted to meet the needs of individuals in recovery and those with disabilities, with game content and interaction style adjusted to provide new health benefits. The sample size could be expanded to improve the representativeness and statistical power of the results. Multi-day use of the system could be studied to further support the claim of a positive impact on health. Finally, system usability, which might change with prolonged use, could be investigated.

Author Contributions

Conceptualization, C.-M.W. and Y.-C.L.; methodology, C.-M.W., C.-H.S. and Y.-C.L.; validation, C.-H.S.; investigation, C.-H.S. and Y.-C.L.; data curation, Y.-C.L.; writing—original draft preparation, C.-M.W. and C.-H.S.; writing—review and editing, C.-M.W. and C.-H.S.; visualization, C.-H.S.; supervision, C.-M.W.; funding acquisition, C.-M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

All subjects gave their informed consent for inclusion before they participated in the study.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.
