Advancing Textile Damage Segmentation: A Novel RGBT Dataset and Thermal Frequency Normalization

Abstract

1. Introduction

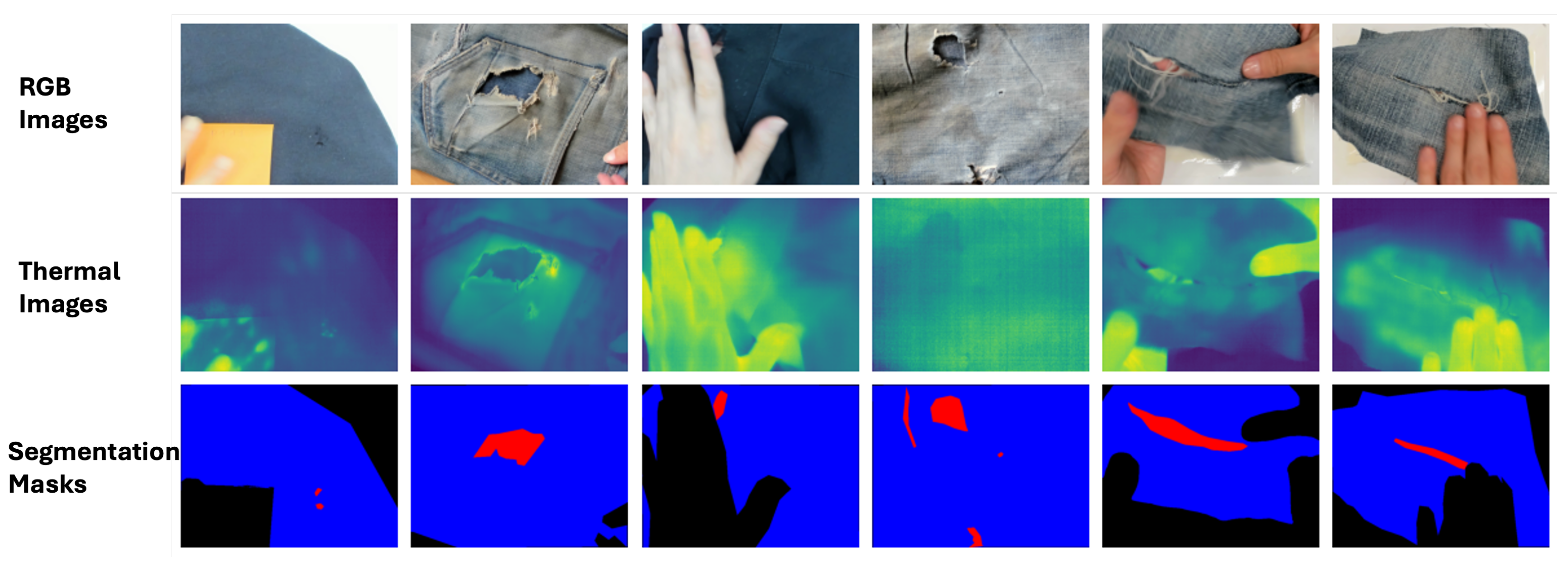

- The RGBT-Textile dataset, a novel RGB-Thermal image dataset of damaged textile materials, intended primarily for segmenting textile materials and their damages, together with benchmarks and experimental results. The dataset is available at https://drive.google.com/drive/folders/1HLri3SDPHSY0AsAmaEloh_0Q13E91u2v (accessed on 28 March 2025).

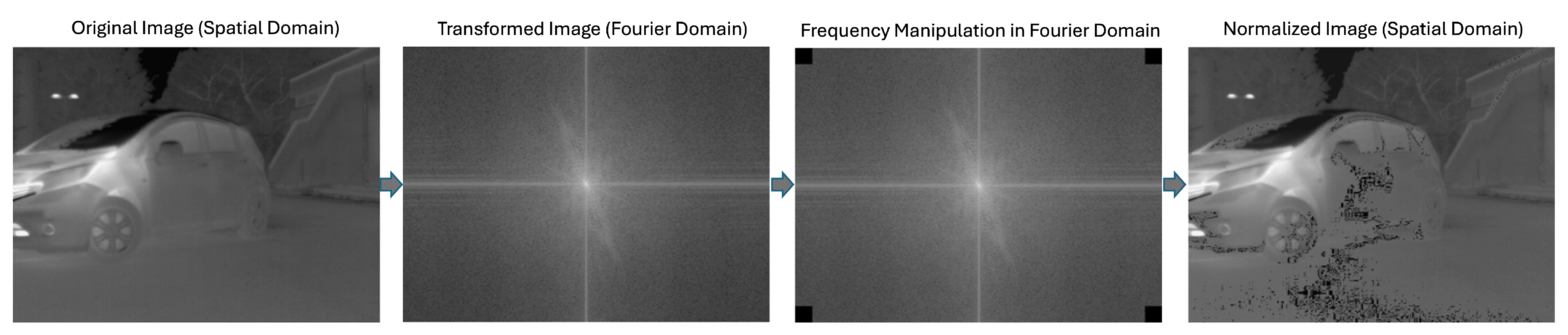

- ThermoFreq, a frequency-based thermal image normalization technique that selectively adjusts frequency components according to their statistical distribution, improving the segmentation performance of RGBT models.

- Experiments with SOTA RGBT segmentation models on benchmark datasets, highlighting the effectiveness of ThermoFreq in addressing temperature noise.
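ThermoFreq is described here only at a high level: it selectively adjusts frequency components according to their statistical distribution. The following sketch illustrates that general idea under stated assumptions. It is not the authors' implementation. It assumes a single-channel thermal frame stored as a 2D NumPy array and applies a hypothetical rule: any non-DC frequency component whose magnitude exceeds the mean plus `tolerance` standard deviations of the non-DC magnitudes is clamped to that limit. Phases and the DC (mean temperature) term are left unchanged. The function name `frequency_normalize` and the `tolerance` parameter are illustrative. `tolerance` is only loosely analogous to the tolerance thresholds examined in Section 6.2, whose exact definition is not given in this section.

```python
import numpy as np


def frequency_normalize(thermal: np.ndarray, tolerance: float = 2.0) -> np.ndarray:
    """Illustrative frequency-domain normalization of a single-channel thermal frame.

    Hypothetical rule (not the published ThermoFreq algorithm): non-DC frequency
    components whose magnitude exceeds mean + tolerance * std of all non-DC
    magnitudes are clamped to that limit; phases and the DC term are unchanged.
    The result is min-max scaled to [0, 1].
    """
    x = np.asarray(thermal, dtype=np.float64)
    spectrum = np.fft.fft2(x)
    magnitude = np.abs(spectrum)
    phase = np.angle(spectrum)

    # Statistics of the non-DC magnitudes (index 0 of the flattened array is DC).
    ac = magnitude.ravel()[1:]
    limit = ac.mean() + tolerance * ac.std()

    # Clamp outlying magnitudes. The rule depends only on magnitude, which is
    # symmetric for real input, so the spectrum stays conjugate-symmetric and
    # the inverse transform is real up to rounding error.
    clamped = np.minimum(magnitude, limit)
    clamped[0, 0] = magnitude[0, 0]  # keep the mean temperature level

    out = np.fft.ifft2(clamped * np.exp(1j * phase)).real

    # Min-max scale for use as network input; a (near-)constant frame maps to zeros.
    lo, hi = out.min(), out.max()
    if np.isclose(hi, lo):
        return np.zeros_like(out)
    return (out - lo) / (hi - lo)
```

Clamping rather than zeroing outlying components keeps every frequency present while limiting how much a few dominant patterns, such as strong periodic temperature stripes, can outweigh the rest of the spectrum. A very large `tolerance` disables the clamping and reduces the function to plain min-max scaling.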

2. Related Works

2.1. RGBT Datasets

2.2. RGBT Segmentation Models

3. RGBT-Textile Dataset

3.1. Data Collection Protocol and Setups

3.2. Diversity of Textile Damage and Materials

4. Thermal Frequency Normalization: ThermoFreq

5. Experiments

6. Results and Discussion

6.1. Evaluation of ThermoFreq with Transformer and CNN-Based Models

6.2. Impact of Different Tolerance Thresholds

6.3. Class-Specific Performance on RGBT-Textile Dataset

6.3.1. Background

6.3.2. Garment

6.3.3. Damaged Area

6.4. Robustness to Synthetic Thermal Noise

6.5. Comparative Analysis

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | Three-dimensional |
| CNN | Convolutional Neural Network |
| mIoU | Mean Intersection over Union |
| RGBT | RGB (color imaging) and thermal infrared imaging |
| SOTA | State-of-the-art |


| Damage Type | Size Range (mm²) | Material Type | Thermal Variation (°C) | Occurrence Frequency (%) |
|---|---|---|---|---|
| Holes | 5–50 | Cotton, Blended | 1.2–2.5 | 30 |
| Stains | 10–120 | Polyester, Synthetic | 0.5–1.8 | 25 |
| Tears | 15–150 | All materials | 1.0–3.0 | 20 |
| Stitching Errors | 5–30 | Cotton, Synthetic | 0.7–1.5 | 15 |
| Discolorations | 20–100 | Blended, Polyester | 0.6–1.2 | 10 |

| Model | CRM_RGBTSeg | MMS- Former-84 | UNet | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Backbone | Swin Base | Swin Small | Swin Tiny | ResNet18 | ResNet50 | |||||

| Normalization | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| Datasets | ||||||||||

| KAIST Multispectral Pedestrian | 0.552 | 0.567 | 0.544 | 0.560 | 0.512 | 0.554 | 0.552 | 0.565 | 0.550 | 0.555 |

| LLVIP Dataset | 0.682 | 0.701 | 0.680 | 0.692 | 0.664 | 0.679 | 0.663 | 0.675 | 0.670 | 0.673 |

| MF Dataset | 0.578 | 0.586 | 0.572 | 0.582 | 0.556 | 0.570 | 0.578 | 0.589 | 0.577 | 0.580 |

| OSU Thermal Pedestrian | 0.980 | 0.994 | 0.980 | 0.994 | 0.970 | 0.994 | 0.980 | 0.992 | 0.990 | 0.993 |

| McubeS | 0.523 | 0.545 | 0.519 | 0.542 | 0.497 | 0.530 | 0.504 | 0.522 | 0.512 | 0.520 |

| PST900 | 0.877 | 0.889 | 0.871 | 0.885 | 0.849 | 0.880 | 0.874 | 0.855 | 0.872 | 0.875 |

| RGBT-Textile (Ours) | 0.842 | 0.846 | 0.840 | 0.843 | 0.822 | 0.835 | 0.842 | 0.852 | 0.840 | 0.845 |

| Dataset\Model | CRM_RGBTSeg | MMSFormer | UNet-ResNet18 | UNet-ResNet50 | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tolerance | ||||||||||||||||||||

| KAIST Multispectral Pedestrian | 0.552 | 0.563 | 0.564 | 0.463 | 0.367 | 0.552 | 0.561 | 0.562 | 0.464 | 0.365 | 0.550 | 0.560 | 0.561 | 0.460 | 0.365 | 0.555 | 0.565 | 0.566 | 0.462 | 0.368 |

| LLVIP Dataset | 0.682 | 0.697 | 0.698 | 0.600 | 0.501 | 0.663 | 0.671 | 0.672 | 0.574 | 0.475 | 0.670 | 0.684 | 0.685 | 0.589 | 0.482 | 0.673 | 0.688 | 0.689 | 0.590 | 0.485 |

| MFNet Dataset | 0.556 | 0.578 | 0.579 | 0.480 | 0.381 | 0.578 | 0.585 | 0.586 | 0.487 | 0.389 | 0.577 | 0.595 | 0.596 | 0.489 | 0.387 | 0.580 | 0.598 | 0.599 | 0.491 | 0.390 |

| OSU Thermal Pedestrian | 0.980 | 0.990 | 0.991 | 0.793 | 0.694 | 0.980 | 0.988 | 0.989 | 0.791 | 0.692 | 0.990 | 0.993 | 0.994 | 0.790 | 0.693 | 0.993 | 0.996 | 0.996 | 0.792 | 0.695 |

| McubeS | 0.523 | 0.541 | 0.542 | 0.444 | 0.345 | 0.504 | 0.518 | 0.519 | 0.421 | 0.322 | 0.512 | 0.529 | 0.531 | 0.422 | 0.327 | 0.520 | 0.535 | 0.537 | 0.425 | 0.330 |

| PST900 | 0.877 | 0.885 | 0.868 | 0.748 | 0.639 | 0.874 | 0.881 | 0.872 | 0.729 | 0.625 | 0.872 | 0.883 | 0.870 | 0.735 | 0.628 | 0.875 | 0.886 | 0.873 | 0.737 | 0.630 |

| RGBT-Textile (Ours) | 0.822 | 0.842 | 0.843 | 0.745 | 0.646 | 0.842 | 0.848 | 0.849 | 0.751 | 0.652 | 0.840 | 0.856 | 0.857 | 0.749 | 0.650 | 0.845 | 0.861 | 0.862 | 0.754 | 0.655 |

| Performance Metrics\Model | CRM_RGBTSeg | MMSFormer | UNet-ResNet18 | UNet-ResNet50 | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Tolerance | ||||||||||||||||||||

| mIoU/Whole dataset | 0.822 | 0.842 | 0.841 | 0.830 | 0.820 | 0.810 | 0.828 | 0.827 | 0.817 | 0.808 | 0.840 | 0.856 | 0.857 | 0.749 | 0.650 | 0.845 | 0.861 | 0.862 | 0.754 | 0.655 |

| Precision/Whole dataset | 0.830 | 0.850 | 0.849 | 0.838 | 0.828 | 0.818 | 0.835 | 0.834 | 0.824 | 0.815 | 0.848 | 0.864 | 0.865 | 0.757 | 0.656 | 0.853 | 0.870 | 0.871 | 0.759 | 0.661 |

| Recall/Whole dataset | 0.815 | 0.835 | 0.834 | 0.823 | 0.815 | 0.803 | 0.820 | 0.819 | 0.811 | 0.803 | 0.832 | 0.849 | 0.850 | 0.738 | 0.646 | 0.837 | 0.854 | 0.855 | 0.742 | 0.650 |

| mIoU/Background | 0.850 | 0.870 | 0.869 | 0.858 | 0.850 | 0.836 | 0.854 | 0.853 | 0.845 | 0.838 | 0.860 | 0.878 | 0.879 | 0.769 | 0.661 | 0.865 | 0.882 | 0.883 | 0.773 | 0.665 |

| mIoU/Garment | 0.810 | 0.830 | 0.829 | 0.820 | 0.810 | 0.796 | 0.813 | 0.812 | 0.808 | 0.798 | 0.825 | 0.842 | 0.843 | 0.740 | 0.633 | 0.830 | 0.848 | 0.849 | 0.745 | 0.640 |

| mIoU/Damaged area | 0.580 | 0.645 | 0.640 | 0.630 | 0.620 | 0.565 | 0.629 | 0.623 | 0.617 | 0.608 | 0.590 | 0.655 | 0.660 | 0.550 | 0.448 | 0.605 | 0.670 | 0.675 | 0.555 | 0.452 |
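The table above reports mIoU, precision and recall over the whole dataset and per class (background, garment, damaged area). As a reference for how such scores are commonly derived, the following pure-Python sketch builds a confusion matrix from flattened pixel labels, computes per-class IoU, precision and recall, and averages the IoU values into mIoU. The label convention (0 = background, 1 = garment, 2 = damaged area) and all helper names are assumptions for illustration. The paper's exact averaging scheme (for example, per image versus over all pixels) is not stated in this section.

```python
from typing import Dict, List, Sequence

# Assumed label convention: 0 = background, 1 = garment, 2 = damaged area.
CLASS_NAMES = ("background", "garment", "damaged area")


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """Rows are ground-truth classes, columns are predicted classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_scores(cm: List[List[int]]) -> List[Dict[str, float]]:
    """IoU, precision and recall for each class; 0.0 where a denominator is zero."""
    n = len(cm)
    scores = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, labelled otherwise
        fn = sum(cm[c]) - tp                       # labelled c, predicted otherwise
        union = tp + fp + fn
        scores.append({
            "iou": tp / union if union else 0.0,
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
        })
    return scores


def mean_iou(cm: List[List[int]]) -> float:
    """Unweighted mean of the per-class IoU values."""
    scores = per_class_scores(cm)
    return sum(s["iou"] for s in scores) / len(scores)


if __name__ == "__main__":
    truth = [0, 0, 1, 1, 2, 2]
    pred = [0, 1, 1, 1, 2, 0]
    cm = confusion_matrix(truth, pred, len(CLASS_NAMES))
    for name, s in zip(CLASS_NAMES, per_class_scores(cm)):
        print(name, s)
    print("mIoU:", mean_iou(cm))
```

For the six toy pixels in the usage example, the per-class IoU values are 1/3, 2/3 and 1/2, giving a mean IoU of 0.5. Treating a class absent from both prediction and ground truth as IoU 0.0 is one convention among several. Some evaluation code instead excludes such classes from the mean.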

| Dataset | CRM_RGBTSeg (Orig) | CRM_RGBTSeg (Aug) | MMSFormer (Orig) | MMSFormer (Aug) | UNet-ResNet50 (Orig) | UNet-ResNet50 (Aug) |
|---|---|---|---|---|---|---|
| KAIST Multispectral Pedestrian | 0.567 | 0.541 | 0.565 | 0.538 | 0.555 | 0.512 |
| LLVIP Dataset | 0.701 | 0.683 | 0.675 | 0.651 | 0.673 | 0.634 |
| MF Dataset | 0.586 | 0.569 | 0.589 | 0.563 | 0.580 | 0.547 |
| RGBT-Textile (Ours) | 0.846 | 0.843 | 0.852 | 0.831 | 0.845 | 0.809 |
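Assume the Orig and Aug columns give scores on the original thermal input and on input with synthetic thermal noise added, as the title of Section 6.4 suggests. Under that assumption, each model's relative degradation can be computed directly from the table. The dictionary copies the table values. `relative_drop` and `most_robust` are hypothetical helper names introduced for this illustration.

```python
from typing import Dict, Tuple

# (Orig, Aug) scores copied from the robustness table above.
# Reading "Orig"/"Aug" as clean vs. synthetic-noise thermal input is an assumption.
ORIG_AUG: Dict[str, Dict[str, Tuple[float, float]]] = {
    "KAIST Multispectral Pedestrian": {
        "CRM_RGBTSeg": (0.567, 0.541), "MMSFormer": (0.565, 0.538), "UNet-ResNet50": (0.555, 0.512),
    },
    "LLVIP Dataset": {
        "CRM_RGBTSeg": (0.701, 0.683), "MMSFormer": (0.675, 0.651), "UNet-ResNet50": (0.673, 0.634),
    },
    "MF Dataset": {
        "CRM_RGBTSeg": (0.586, 0.569), "MMSFormer": (0.589, 0.563), "UNet-ResNet50": (0.580, 0.547),
    },
    "RGBT-Textile (Ours)": {
        "CRM_RGBTSeg": (0.846, 0.843), "MMSFormer": (0.852, 0.831), "UNet-ResNet50": (0.845, 0.809),
    },
}


def relative_drop(orig: float, aug: float) -> float:
    """Percentage of the original score lost under the augmented (noisy) input."""
    return (orig - aug) / orig * 100.0


def most_robust(dataset: str) -> str:
    """Model with the smallest relative drop on the given dataset."""
    scores = ORIG_AUG[dataset]
    return min(scores, key=lambda model: relative_drop(*scores[model]))


if __name__ == "__main__":
    for dataset, models in ORIG_AUG.items():
        drops = ", ".join(f"{m}: {relative_drop(*s):.2f}%" for m, s in models.items())
        print(f"{dataset} -> {drops}")
```

Under this reading, CRM_RGBTSeg shows the smallest relative drop on every dataset in the table. On RGBT-Textile, for example, its drop is about 0.35%, compared with about 2.46% for MMSFormer and 4.26% for UNet-ResNet50.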

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rayhan, F.; Joshi, J.; Ren, G.; Hernandez, L.; Petreca, B.; Baurley, S.; Berthouze, N.; Cho, Y. Advancing Textile Damage Segmentation: A Novel RGBT Dataset and Thermal Frequency Normalization. Sensors 2025, 25, 2306. https://doi.org/10.3390/s25072306
