Construction of a Real-Time Detection for Floating Plastics in a Stream Using Video Cameras and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Acquisition

2.2. Data Preprocessing

2.3. Detecting, Tracking, and Counting Algorithms

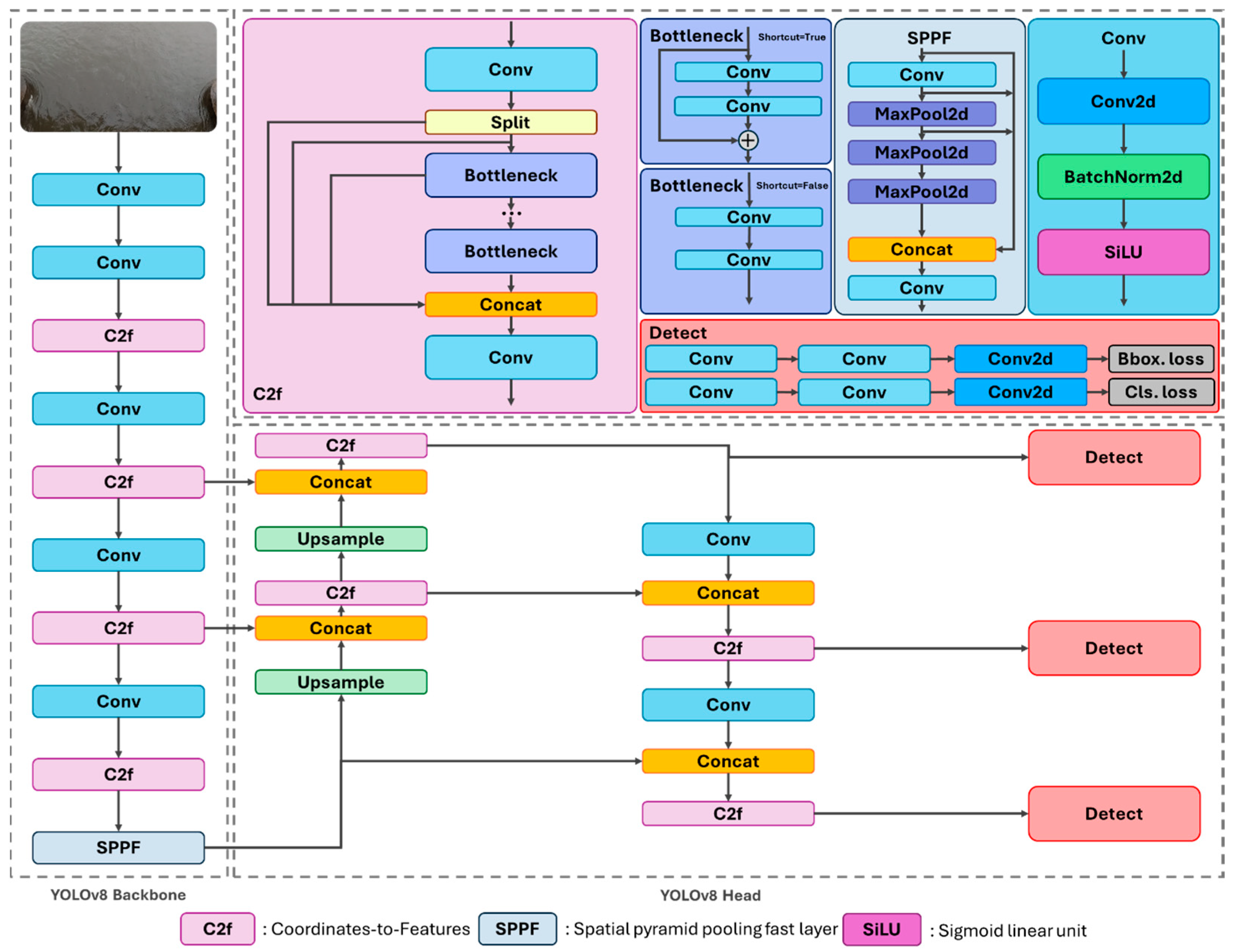

2.3.1. Modified YOLOv8

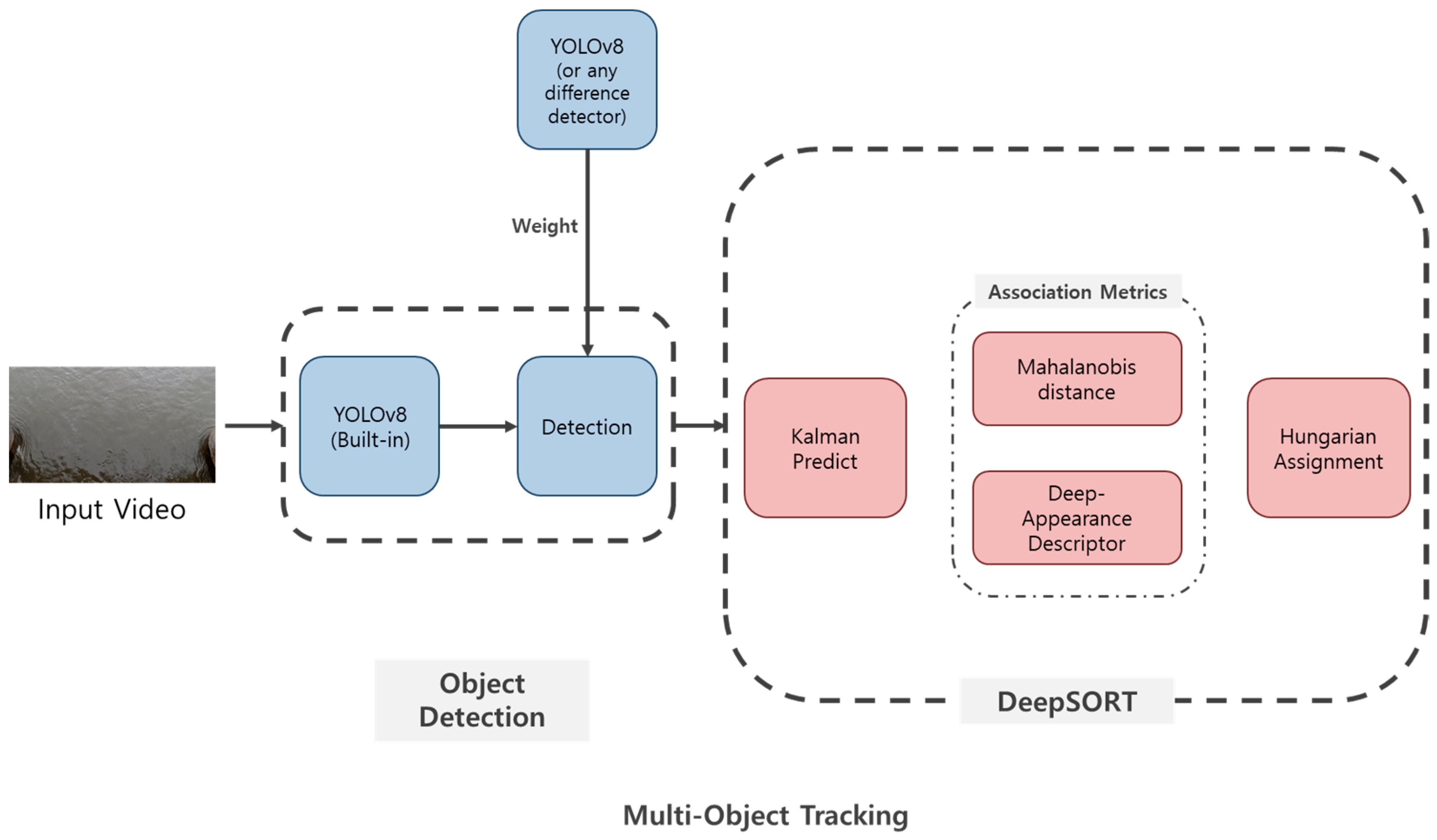

2.3.2. Deep-SORT

2.3.3. Model Evaluation

Object-Classification Performance

Object-Detection Performance

Object-Counting Performance

3. Results and Discussion

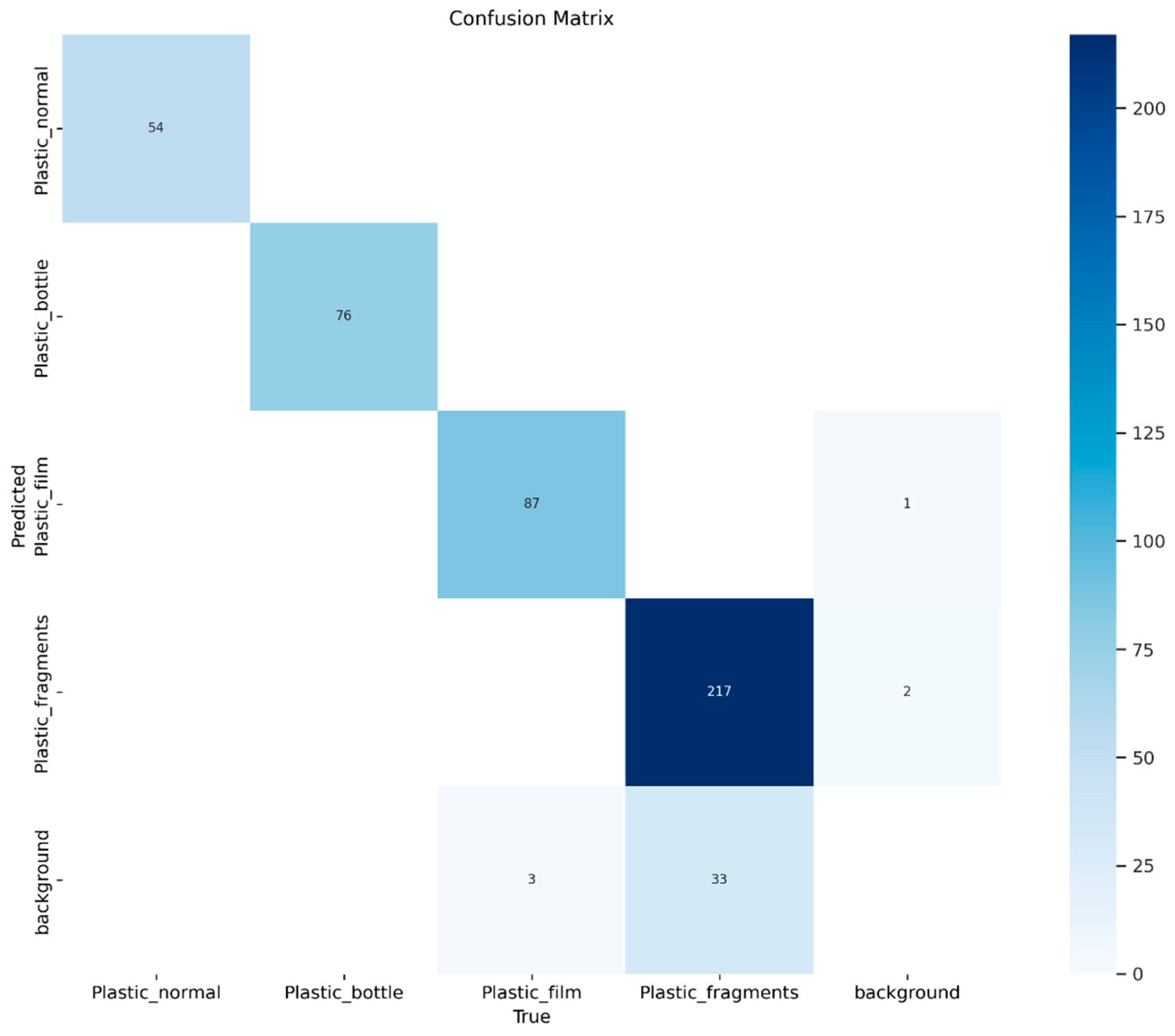

3.1. Object Classification

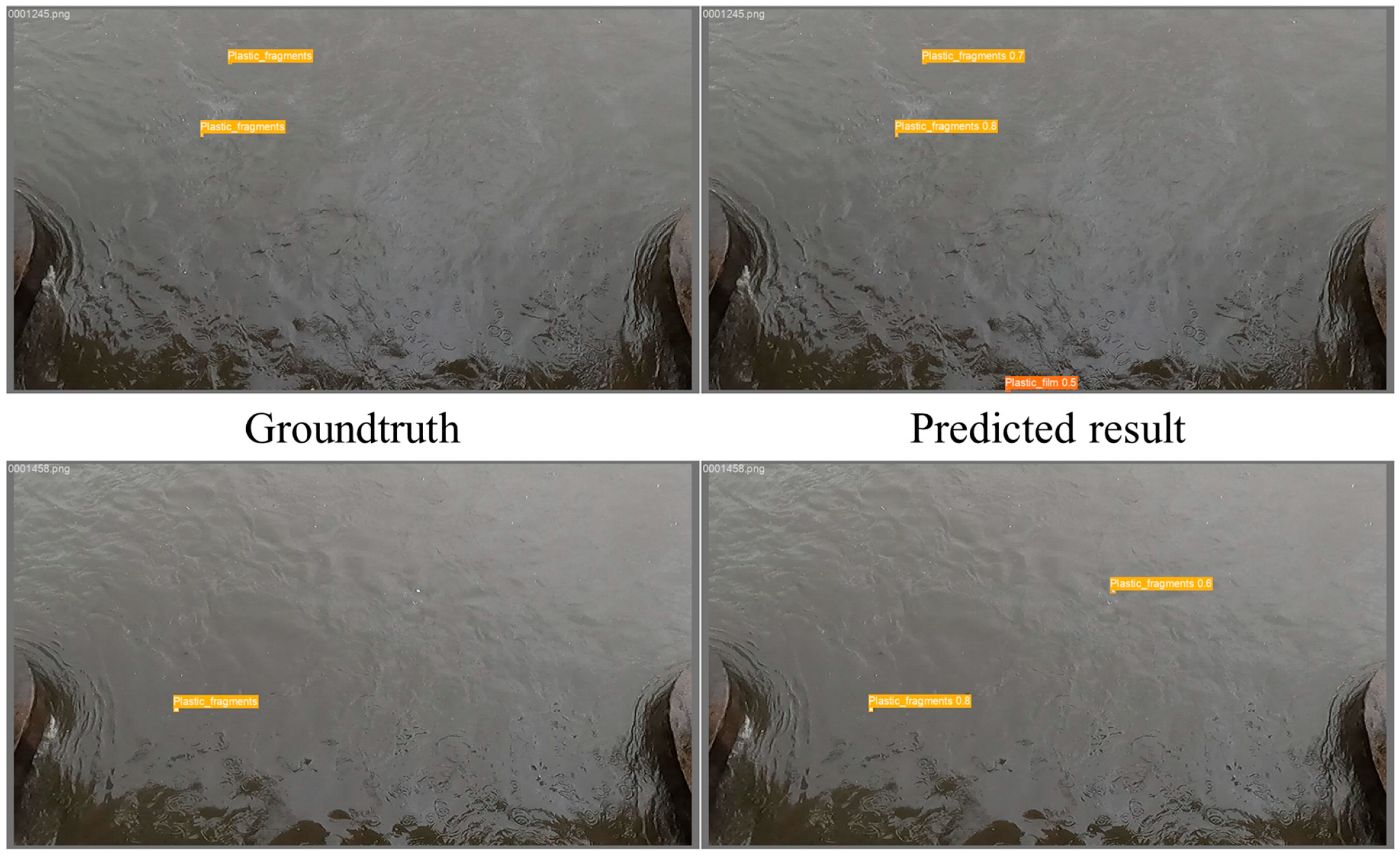

3.2. Object Detection

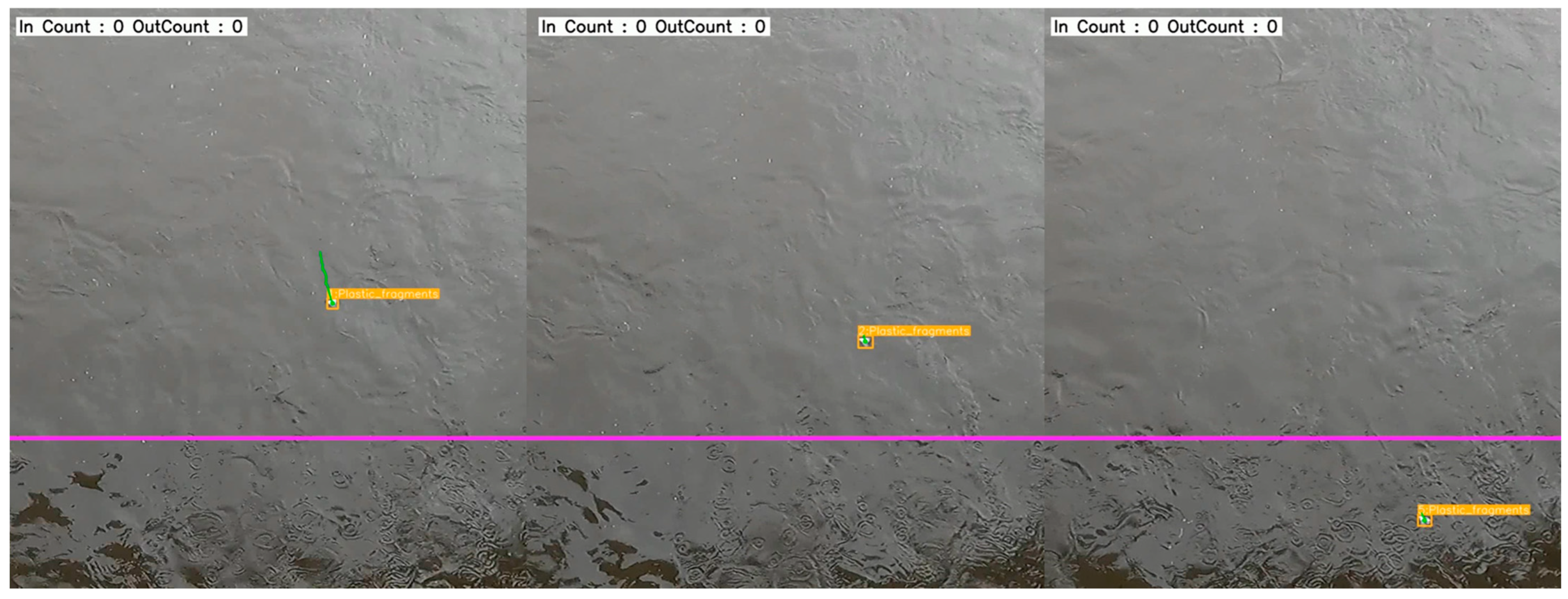

3.3. Object Counting

4. Conclusions

- The model achieved a mean average precision (mAP) of 0.990 on the training/validation set and 0.992 on the test set at an Intersection over Union (IoU) threshold of 0.5, and a test mAP of 0.714 under the stricter criterion of averaging over IoU thresholds from 0.5 to 0.95 in steps of 0.05 (IoU = 0.5:0.05:0.95). Compared with binary-class detection models from prior studies, this four-class model demonstrated robust classification and detection capabilities despite the added complexity of distinguishing diverse plastic debris types. These results underscore its potential for practical field application, particularly during rainfall events and across varying debris morphologies.

- Several factors influencing model performance were identified. Visual similarity between the film and fragment debris types hindered accurate detection and contributed to higher error rates; of the four classes, Plastic_fragments had the lowest test F1-score (0.947) and the lowest test mAP at IoU = [0.5:0.05:0.95] (0.628). Additionally, class imbalance, most notably the predominance of the fragments class, may have caused the model to overfit that class and underfit the others, leading to misclassifications. To improve performance, strategies such as balancing class representation through selective data augmentation and improving feature extraction for small, morphologically similar plastic debris were proposed.

- Tracking and counting plastic debris proved challenging because objects frequently submerged and re-emerged under dynamic hydrological conditions. Of the 32 debris items tracked, only six were followed successfully across the designated counting line. Further improvements are therefore required, particularly in handling environmental variability, object size, and debris type, to make tracking more reliable.
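The counting step described above can be illustrated with a minimal sketch. It assumes that the tracker (e.g., Deep-SORT) outputs, for each track ID, the sequence of bounding-box centroids per frame, and that the counting line is horizontal in image coordinates; the data format and the `count_line_crossings` helper are hypothetical and are not the authors' implementation. An item is counted once, when its centroid moves from one side of the line to the other between consecutive frames. An item that submerges before the line and re-emerges after it under a new track ID produces no crossing in either track, which is one way items are missed.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) centroid in pixel coordinates (assumed format)


def count_line_crossings(tracks: Dict[int, List[Point]], line_y: float) -> List[int]:
    """Return IDs of tracks whose centroid crosses the horizontal line y = line_y.

    Each track is counted at most once, at its first crossing.
    """
    counted = []
    for track_id, centroids in tracks.items():
        for (_, y_prev), (_, y_curr) in zip(centroids, centroids[1:]):
            # The side of the line flips between consecutive frames -> crossing.
            if (y_prev < line_y) != (y_curr < line_y):
                counted.append(track_id)
                break
    return counted


# Hypothetical tracker output for three debris items.
tracks = {
    1: [(100, 50), (102, 80), (105, 120)],  # moves past y = 100: counted
    2: [(300, 40), (301, 60)],              # lost before the line (e.g., submerged)
    3: [(500, 130), (498, 160)],            # first seen after the line: never crosses
}
print(count_line_crossings(tracks, line_y=100))
```

Using the side of the line rather than the sign of a product keeps the test well defined when a centroid lands exactly on the line.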

Supplementary Materials

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Hyperparameter | Value | Construction environment | Specification |
|---|---|---|---|
| Image size | 1280 | GPU | NVIDIA A100 40 GB |
| Epochs | 1000 | OS | Ubuntu 22.04 |
| Optimizer | SGD | Software environment | Python 3.12 |
| Learning rate | 0.01 | | PyTorch 2.4.0 |
| Augmentation | 90 degrees | | Torchvision 0.19.0 |
| | 0.5 scale | | CUDA 12.1 |
| | | | cuDNN 9.1.0 |
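If the hyperparameters above are mapped onto the Ultralytics YOLOv8 training interface, they correspond to the argument names below. This is an assumption: the table does not name the training framework, and the authors' modified YOLOv8 architecture is not reproduced here. In Ultralytics, `degrees` sets the range of random rotation (± degrees) and `scale` the range of random scaling gain. The model configuration and dataset paths passed to the hypothetical `train` helper are placeholders.

```python
# Hypothetical mapping of the tabulated hyperparameters onto Ultralytics
# `model.train()` argument names; the values come from the table.
TRAIN_ARGS = {
    "imgsz": 1280,       # input image size in pixels
    "epochs": 1000,
    "optimizer": "SGD",
    "lr0": 0.01,         # initial learning rate
    "degrees": 90.0,     # random rotation range, +/- degrees
    "scale": 0.5,        # random scaling gain, +/- fraction
}


def train(model_cfg: str, data_yaml: str):
    """Train a YOLOv8 model with TRAIN_ARGS (requires `pip install ultralytics`)."""
    from ultralytics import YOLO  # imported lazily so this module loads without it

    model = YOLO(model_cfg)  # e.g., a custom model YAML for a modified network
    return model.train(data=data_yaml, **TRAIN_ARGS)


if __name__ == "__main__":
    # Training is not started here; supply real model and dataset paths to `train`.
    print(TRAIN_ARGS)
```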

| Class | Train/Validation Precision | Train/Validation Recall | Train/Validation F1-Score | Test Precision | Test Recall | Test F1-Score |
|---|---|---|---|---|---|---|
| Overall | 0.984 | 0.980 | 0.982 | 0.980 | 0.979 | 0.980 |
| Plastic_normal | 0.992 | 1.000 | 0.996 | 0.983 | 1.000 | 0.991 |
| Plastic_bottle | 0.994 | 1.000 | 0.997 | 0.988 | 1.000 | 0.993 |
| Plastic_film | 0.997 | 0.989 | 0.993 | 0.989 | 0.982 | 0.985 |
| Plastic_fragments | 0.952 | 0.930 | 0.941 | 0.962 | 0.932 | 0.947 |
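The F1-score in the table is the harmonic mean of precision (P) and recall (R), F1 = 2PR/(P + R). The short check below recomputes it from the test-set values in the table; differences in the last digit are expected because the tabulated precision and recall are themselves rounded.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for the test split, taken from the table
test_rows = {
    "Overall": (0.980, 0.979, 0.980),
    "Plastic_normal": (0.983, 1.000, 0.991),
    "Plastic_bottle": (0.988, 1.000, 0.993),
    "Plastic_film": (0.989, 0.982, 0.985),
    "Plastic_fragments": (0.962, 0.932, 0.947),
}

for name, (p, r, reported) in test_rows.items():
    print(f"{name:18s} recomputed F1 = {f1_score(p, r):.4f} (reported {reported:.3f})")
```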

| Class | Train/Validation mAP (IoU = 0.5) | Train/Validation mAP (IoU = [0.5:0.05:0.95]) | Test mAP (IoU = 0.5) | Test mAP (IoU = [0.5:0.05:0.95]) |
|---|---|---|---|---|
| Overall | 0.990 | 0.699 | 0.992 | 0.714 |
| Plastic_normal | 0.995 | 0.738 | 0.995 | 0.754 |
| Plastic_bottle | 0.995 | 0.736 | 0.995 | 0.752 |
| Plastic_film | 0.995 | 0.720 | 0.994 | 0.720 |
| Plastic_fragments | 0.973 | 0.601 | 0.985 | 0.628 |
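The two mAP columns differ in how strictly a predicted box must overlap its ground-truth box. IoU is the area of intersection divided by the area of union; mAP at IoU = 0.5 counts a prediction as correct when IoU is at least 0.5, whereas mAP at IoU = [0.5:0.05:0.95] averages AP over the ten thresholds 0.50, 0.55, and so on up to 0.95 (the COCO convention). The sketch below computes IoU for axis-aligned boxes, counts how many of those thresholds a slightly shifted prediction passes, and checks that the Overall test row is consistent with an unweighted mean of the four per-class values; that averaging rule is inferred from the numbers, not stated by the authors.

```python
from statistics import mean
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# The ten COCO-style IoU thresholds from 0.50 to 0.95 in steps of 0.05
THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]

pred, gt = (0, 0, 10, 10), (2, 0, 12, 10)  # prediction shifted 2 px sideways
score = iou(pred, gt)  # intersection 80 / union 120
passed = [t for t in THRESHOLDS if score >= t]
print(f"IoU = {score:.3f}; true positive at {len(passed)}/10 thresholds")

# Per-class test mAP values from the table; compare their mean with the Overall row.
test_map50 = {"normal": 0.995, "bottle": 0.995, "film": 0.994, "fragments": 0.985}
test_map50_95 = {"normal": 0.754, "bottle": 0.752, "film": 0.720, "fragments": 0.628}
print(f"mean mAP@0.5 = {mean(test_map50.values()):.4f}")            # table: 0.992
print(f"mean mAP@[0.5:0.95] = {mean(test_map50_95.values()):.4f}")  # table: 0.714
```

A prediction that is only 2 px off already fails the stricter thresholds, which is why the [0.5:0.05:0.95] values are much lower than the IoU = 0.5 values, especially for small fragments.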

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, H.; Byeon, S.; Kim, J.H.; Shin, J.-K.; Park, Y. Construction of a Real-Time Detection for Floating Plastics in a Stream Using Video Cameras and Deep Learning. Sensors 2025, 25, 2225. https://doi.org/10.3390/s25072225
