1. Introduction

In today’s digital landscape, the seamless integration of technology into everyday life has heightened the demand for secure and reliable authentication mechanisms [

1]. Among various biometric authentication techniques [

2,

3], face recognition technology stands out as an efficient and user-friendly solution, bridging the gap between physical and digital identities. However, face recognition systems are increasingly vulnerable to sophisticated presentation attacks (PAD) [

4], where adversaries exploit advanced attack presentation (AP) techniques such as high-resolution video replays, 3D masks, and AI-generated synthetic images to bypass authentication mechanisms [

5]. These threats are continuously evolving, demanding robust, adaptive, and future-proof PAD strategies capable of mitigating both known and emerging attack vectors. The dynamic nature of such attacks underscores the pressing need for more advanced countermeasures that not only address current vulnerabilities, but also anticipate future threats.

A major challenge in enhancing PAD systems lies in their ability to effectively detect and neutralize sophisticated presentation attacks [

6]. Traditional PAD approaches, though effective to a certain extent, primarily rely on data-driven models trained on specific attack patterns, limiting their ability to generalize beyond known threats. As a result, these systems struggle to counter novel attack presentation (AP) techniques [

7], making them a significant security vulnerability. The inability to adapt to new forms of attacks poses a serious risk to critical security infrastructures, financial systems, and sensitive data repositories. Therefore, ensuring the resilience and robustness of face recognition technologies is no longer just a technical challenge, but a crucial security imperative. As biometric authentication becomes increasingly embedded in global security frameworks [

8], maintaining its integrity against ever-evolving cyber threats is paramount [

9]. The motivation behind this research stems from this urgency, driving the need for more intelligent, adaptive, and scalable PAD solutions.

Recent studies have explored similar approaches to enhance PAD systems. For instance, ref. [

10] proposed a one-class knowledge distillation framework for face PAD, where a teacher network is trained on source domain data, and a student network learns to mimic the teacher’s representations only using genuine face samples from the target domain. During testing, the similarity between the teacher and student network representations is used to distinguish between genuine and attack samples. This method showed improved performance in cross-domain PAD scenarios. Another notable work by [

11] introduced a meta-teacher framework for face anti-attack presentation (AP), where a meta-teacher is trained to supervise PA detectors more effectively. The meta-teacher learns to provide a better-suited supervision than handcrafted labels, significantly improving the performance of PA detectors. Building upon these advancements, our research integrates the teacher–student learning paradigm with PAD methodologies to enhance the adaptability and robustness of PAD systems against evolving presentation attacks. This integration aims to improve the generalization capabilities of PAD systems, ensuring their effectiveness in diverse and unseen attack scenarios. Another notable study [

12] introduced a dual-teacher knowledge distillation method, incorporating perceptual and generative knowledge to improve generalization across different attack types, while leveraging domain alignment techniques to mitigate training instability. Furthermore, the learning meta-patterns [

13] approach enhances the robustness of PAD systems by focusing on intrinsic attack presentation (AP) cues rather than superficial characteristics, thus improving the generalization capabilities. Additionally, federated learning [

14] has been explored as a means to strengthen face PAD models while preserving data privacy, allowing multiple entities to collaboratively train detection systems without sharing raw data.

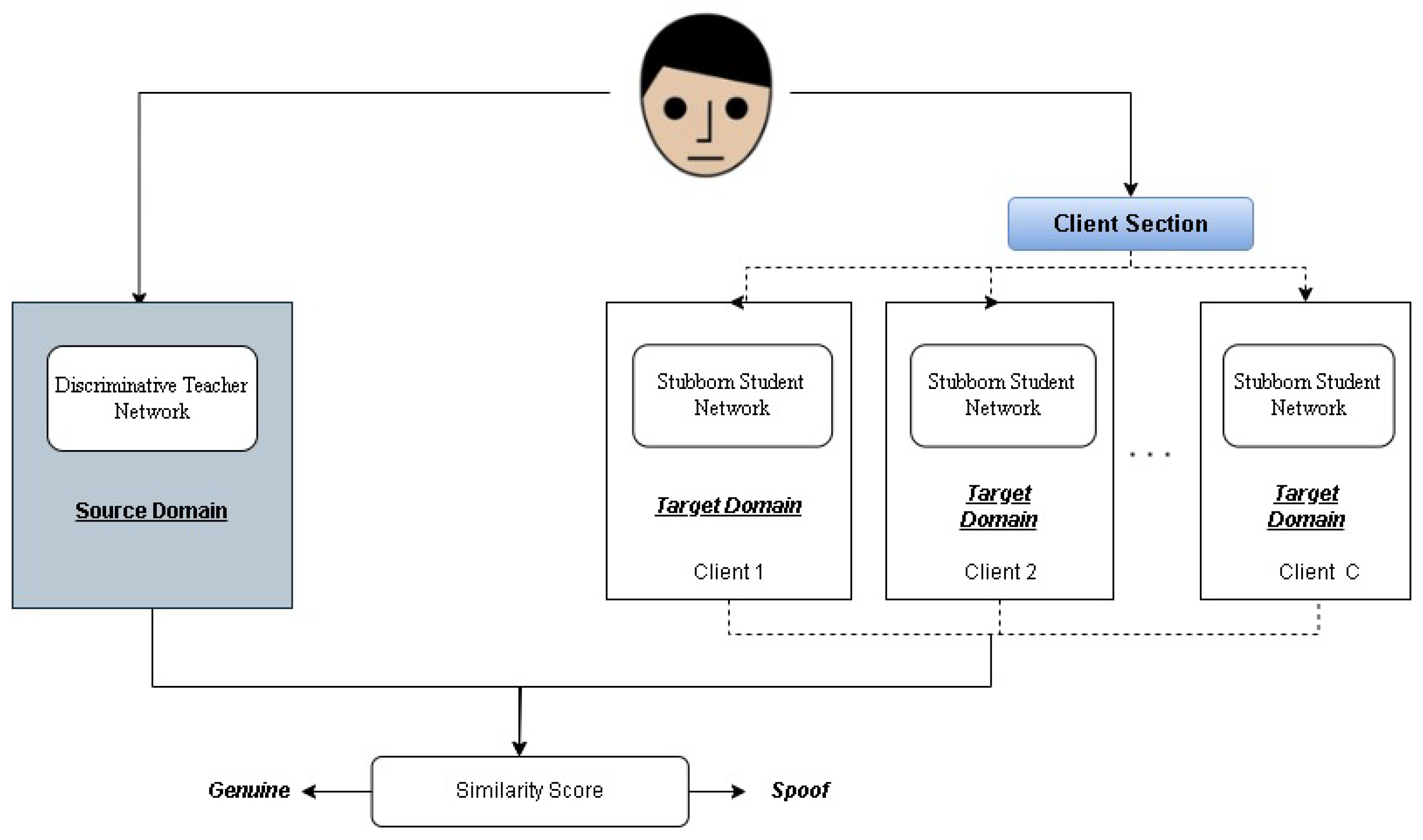

These advancements have collectively contributed to the ongoing evolution of PAD technologies, reinforcing the necessity for adaptive and intelligent defense mechanisms. Our study makes a significant contribution to biometric security by proposing an innovative integration of a teacher–student learning framework with state-of-the-art PAD methodologies. The proposed approach aims to enhance the adaptability and accuracy of PAD systems, enabling them to effectively counter both known and emerging attack presentation (AP) attacks. By leveraging the deep knowledge of a teacher network—trained on an extensive dataset of both genuine and synthetic attack samples—the model guides a student network to specialize in recognizing subtle and complex facial features within targeted domains (refer to

Figure 1). This strategic fusion bridges the gap between traditional PAD models and adaptive learning mechanisms, ensuring that the system remains resilient against evolving threats. Additionally, this framework lays the foundation for a scalable and adaptable security blueprint, enabling future research to build on these advancements, for more robust and trustworthy biometric authentication systems.

The rapid evolution of attack presentation (AP) techniques [

15], combined with the widespread adoption of face recognition systems in critical sectors, necessitates a shift from reactive security measures to proactive, learning-driven approaches. Traditional static defense mechanisms are no longer sufficient; modern security demands intelligent, self-improving systems that continuously adapt to new attack methodologies. Ensuring the integrity and trustworthiness of biometric authentication systems [

16] is essential for securing applications ranging from personal devices to global security infrastructures. Addressing this challenge requires moving beyond conventional PAD methods and implementing advanced machine-learning-driven solutions capable of predicting, evolving with, and mitigating emerging threats. This research directly addresses these concerns by leveraging cutting-edge AI-driven PAD methodologies, offering a scalable, resilient, and adaptable approach to biometric security.

The contributions of our research to the biometric security field and face presentation attack detection systems, which are different from the present literature, can be summarized in the following bullet points:

Innovative Integration of Teacher–Student Learning Framework: We introduce a new application of the teacher–student learning paradigm to PAD systems, using the extensive knowledge of a teacher network trained on various real and synthetic attack vectors to guide a student network in accurately identifying genuine facial features.

Enhanced Adaptability to Novel Attack Presentation (AP) Attacks: Our methodology significantly enhances the adaptability of PAD systems, enabling them to effectively counter both known and emergent attack presentation (AP) attacks. This adaptability is crucial for maintaining the integrity of biometric authentication systems amid a constantly changing environment of threats.

Scalable and Adaptable Blueprint for Future Research: The proposed integration of machine learning frameworks with advanced PAD techniques provides a scalable and adaptable blueprint for future endeavors in biometric security. This contribution lays the groundwork for further innovations in the domain, potentially leading to more resilient and trustworthy authentication systems.

Empirical Validation Against State-of-the-Art Solutions: Through rigorous experimentation and validation on benchmark datasets, our research demonstrated superior performance and efficacy in detecting attack presentation (AP) attacks, as compared to previous solutions. This empirical evidence substantiates the practical applicability and effectiveness of our proposed methodology.

Fresh Perspective on Biometric Authentication Vulnerabilities: By synergizing advanced PAD methodologies with a teacher–student learning framework, our study offers a fresh perspective on addressing the vulnerabilities inherent in biometric authentication systems. This contribution provides new avenues for enhancing security and making face recognition technologies more reliable.

This manuscript has the follow parts:

Section 2 presents work related to the field, and

Section 3, features detailed methodology information.

Section 4 presents our observation and experimental results, along with technical details.

Section 5 describes our implementation in detail.

Section 6 presents limitations and future suggestions. In

Section 7, we present the conclusions.

3. Methodology

Our methodology addresses the growing complexity of biometric security threats by enhancing face presentation attack detection (PAD) accuracy. At its core, our approach integrates a teacher–student learning framework with advanced PAD techniques to improve adaptability and real-world performance. Traditional PAD methods, while effective against known attack presentation (AP) tactics, often struggle with novel and sophisticated attack strategies. To overcome this limitation, our model is designed to enhance generalization and robustness, ensuring stronger defense against emerging threats.

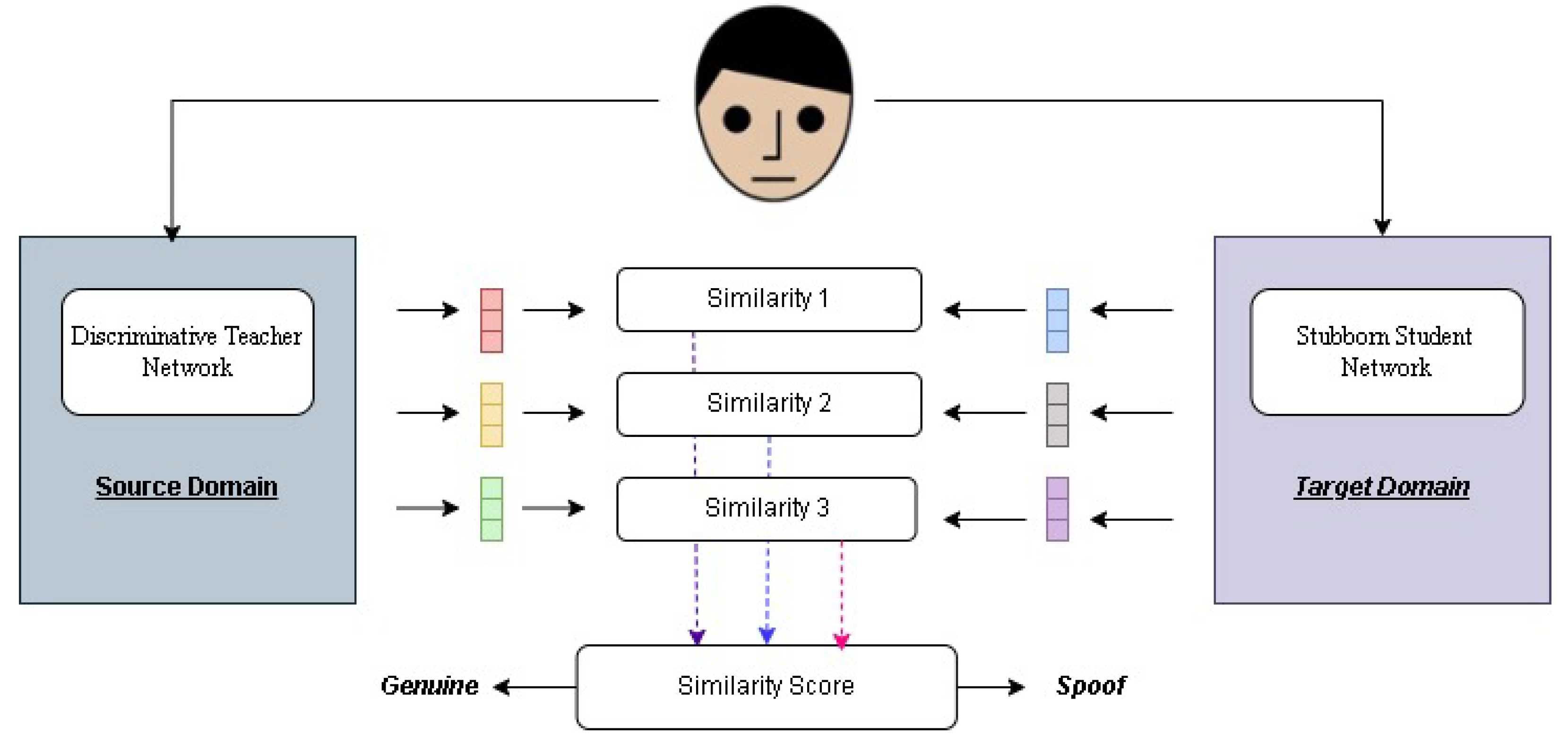

In our system, a mentor network focuses on a diverse set of genuine and attack samples and transfers its knowledge to a learner network. This knowledge distillation process enables the student network to differentiate between real and spoofed facial presentations with high precision, even when encountering unseen attack types shown in

Figure 2. Unlike conventional PAD methods, which rely heavily on dataset-specific features, our approach enhances adaptability by incorporating deep-learning-based feature analysis and adversarial training to improve detection accuracy.

By shifting from static, dataset-dependent PAD models to a more dynamic and learning-driven system, our method establishes a new benchmark in biometric security. This adaptability ensures that face recognition technologies remain resilient and trustworthy, even as cyber threats evolve in complexity.

3.1. Innovative Integration of the Teacher–Student Learning Framework

To enhance face presentation attack detection (PAD), we put-forward a mentor–learner studying framework that effectively transfers knowledge from a well-trained teacher network to a more adaptable student network. This approach addresses key challenges in PAD by ensuring the student network can generalize well to unseen attack types, while remaining computationally efficient.

At the core of this framework, the teacher network is trained on a diverse dataset that includes a wide range of attack presentation (AP) attacks, from sophisticated 3D mask attacks to photo and video-based spoofs. By learning intricate patterns that differentiate genuine faces from attacks, the teacher network develops a robust feature-extraction capability, ensuring accurate classification across different attack scenarios. This knowledge distillation process enables the student network to inherit critical decision-making patterns from the teacher, while remaining lightweight and adaptable.

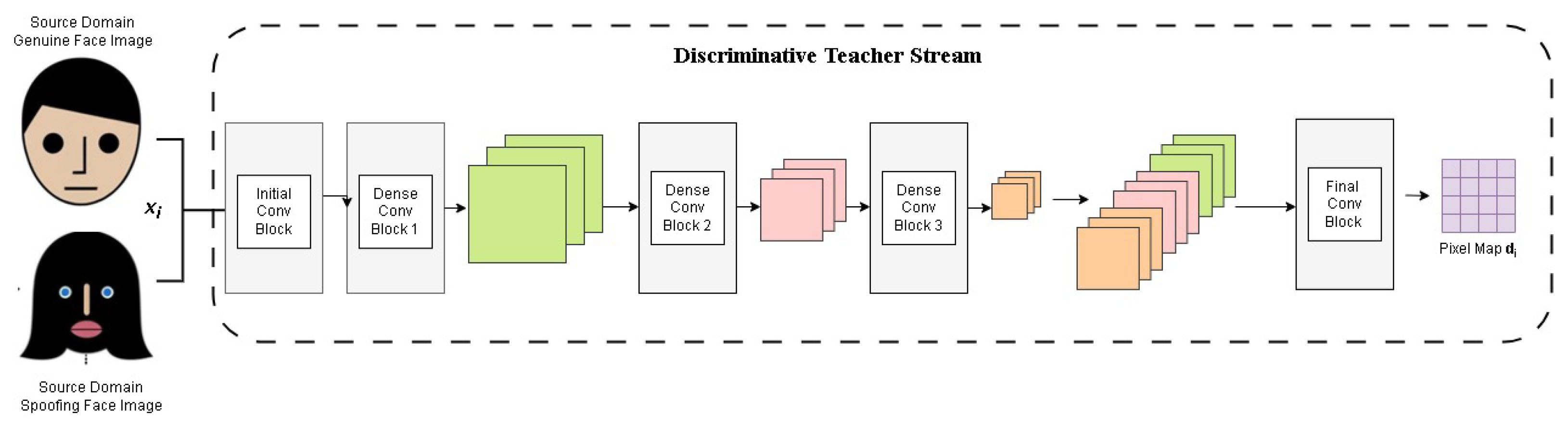

The teacher network is designed to prioritize and extract essential features that distinguish genuine facial attributes from spoofed ones. It employs deep convolutional layers to analyze biometric data and identify subtle texture, motion, and depth cues indicative of attack presentations (APs) as shown in

Figure 3. The extracted feature representations serve as the foundation for the student network, allowing it to make informed decisions with minimal training data.

Unlike the mentor network, the learner network focuses on refining its understanding of genuine face representations within specific target domains. Instead of directly learning from attack samples, the student absorbs the distilled knowledge provided by the teacher network, allowing it to recognize genuine faces with high precision. This strategic specialization makes the student network more efficient and adaptable, particularly in scenarios where attack presentation (AP) attacks continuously evolve.

The training process is carefully structured to expose the student network to a curated subset of data used to train the mentor network. This subset is optimized to highlight the subtle, yet distinctive cues of genuine faces, ensuring the student network remains focused on authenticity detection, without unnecessary complexity. The learning process is reinforced through multilevel similarity measurement, where the student progressively refines its feature representation to align closely with the teacher’s outputs, as detailed in Algorithm 1.

| Algorithm 1: Algorithm for Inequitable Teacher Network Training |

Input: Veritable face images from (), Attack Presentations (AP) samples from (), Maximum training Iteration , Hyper parameters for training, learning Rate , Batch size Results: DT parameters

- 1.

Initialize Model parameters as, → for IT and for FCB; - 2.

For

to

do - 3.

specimen , specimen with stamps from - 4.

Extracting feature by encoding - 5.

Pixel map prediction by processing with - 6.

Comp with and like in Equation ( 1) - 7.

Update . - 8.

end for loop - 9.

Result: return ;

|

Our teacher–student framework represents a significant leap in PAD methodologies by ensuring that face recognition systems remain adaptive, scalable, and resistant to emerging attack types. By enabling efficient knowledge transfer and real-time generalization, this framework not only enhances current PAD systems but also lays the foundation for future advancements in biometric security.

3.2. Advanced PAD Methodologies

To improve the precision and resilience of face presentation attack detection (PAD), cutting-edge deep learning techniques are incorporated into our system. By leveraging leading edge techniques, the model attains improved feature extraction and attack presentation (AP) sensing through a combination of Convolutional Neural Networks (CNNs), activation functions, pooling mechanisms, and optimized loss functions. These components collectively enable a more comprehensive analysis of facial textures, expressions, and biometric cues, effectively distinguishing genuine faces from spoofed presentations.

Convolutional Neural Networks (CNNs) serve as the backbone of our PAD system, demonstrating an exceptional capability for processing facial image data. CNNs extract hierarchical feature representations through multiple layers of convolution operations, which help detect texture inconsistencies and attack presentation (AP) artifacts. The convolution operation is mathematically defined as

where

represents the output mapping of features,

I is the input image, and

K is convolutional quintessence. This operation enables the network to capture spatial hierarchies in the input facial data, allowing it to identify distinct patterns indicative of attack presentations (APs).

Following convolution, the activation function introduces a roundabout into network, allowing it to learn intricate patterns that differentiate real and fake facial presentations. We employ the Rectified Linear Unit (ReLU) activation function, which is mathematically defined as follows:

ReLU ensures that only positive values are retained in the feature map, effectively filtering out irrelevant information and tweaking the network’s ability to focus on important facial details. This improves the model’s ability to pick out genuine from fake faces, even with challenging lighting and pose variations.

To manage computational complexity and retain essential information, pooling layers are applied to reduce feature map dimensions, while preserving key patterns. Max pooling is a widely used technique in our model, defined as:

where

is the pooled feature map and

represents a local region in the input feature map. This operation ensures that only the most significant features are retained, making the network resilient to spatial variations in the input images.

The effectiveness of the CNN model is further enhanced through loss function optimization, which ensures accurate classification of genuine and spoofed facial images. We use a cross-entropy loss function, widely adopted for classification tasks, defined as

where

z represents the true class label,

denotes the predicted probability, and the summation runs over all classes

n. This function measures the deviation between the speculated and true labels, guiding the model to refine its predictions through backpropagation and gradient descent optimization. Our deep-learning-based PAD model undergoes end-to-end training, where

Convolutional layers extract low-level and high-level facial features.

Activation functions introduce non-linearity to capture complex patterns.

Pooling layers reduce the feature map size, retaining key information.

The loss function optimizes the network performance through backpropagation.

By integrating these advanced deep learning methodologies, our approach significantly improves PAD accuracy, making it more robust to varied lighting conditions, pose variations, and unseen attack types.

3.3. Pertinacious Student Network Training

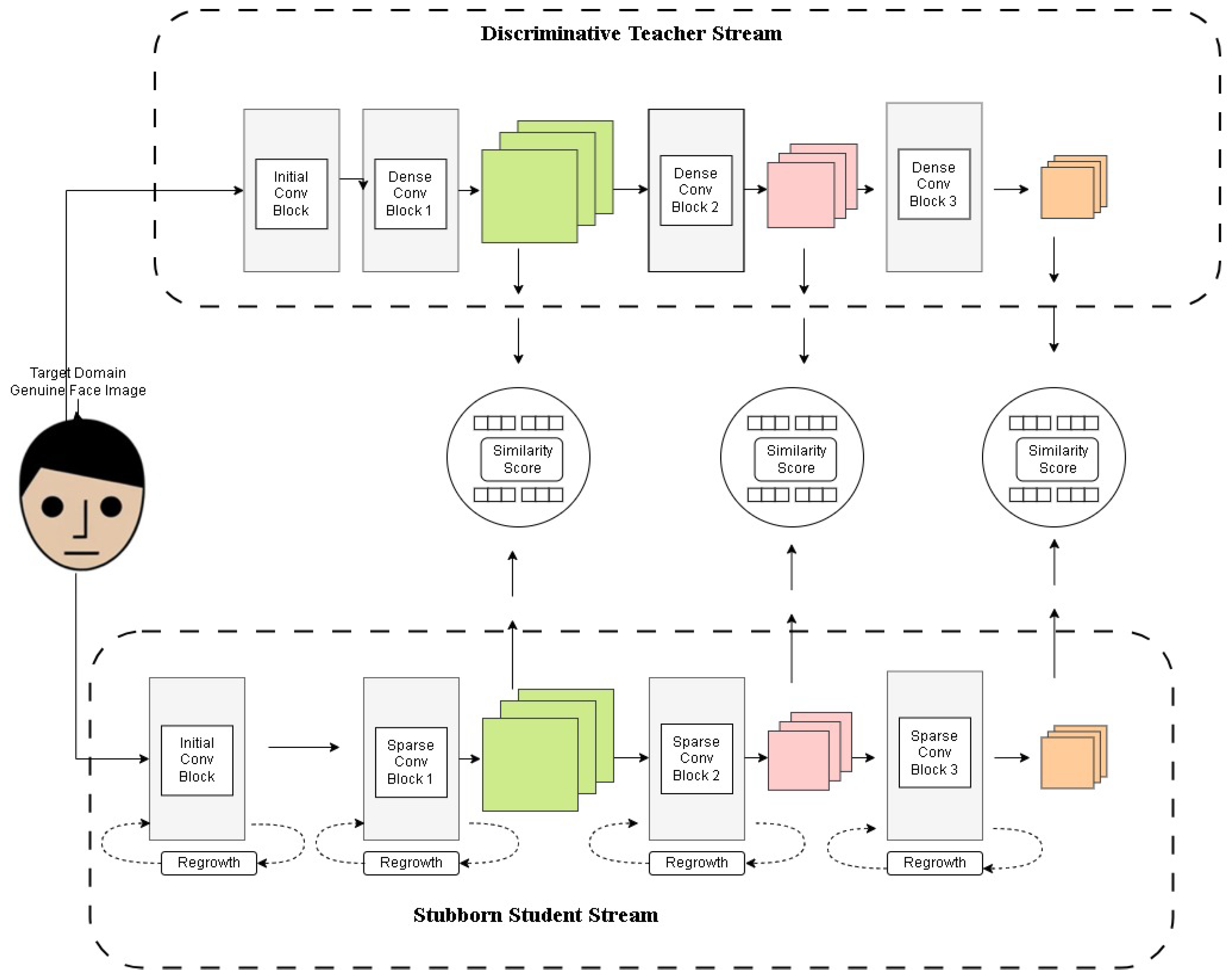

To further enhance the strength and adaptability of the PAD system, we introduce the Pertinacious Student (PS) network. The PS network is designed to refine its understanding of genuine facial representations by learning from the Teacher Network (IT), while maintaining computational efficiency. The key objective of the PS network is to ensure that its feature representations closely align with those extracted by the IT network, thereby enabling it to generalize well to unseen target domains.

During training, real face photos from the target domain are input to both the mentor network (IT) and learner network (PS) for feature extraction. The feature representations extracted from both networks are compared using cosine similarity, allowing the PS network to iteratively adjust its parameters to match the teacher’s learned representations.

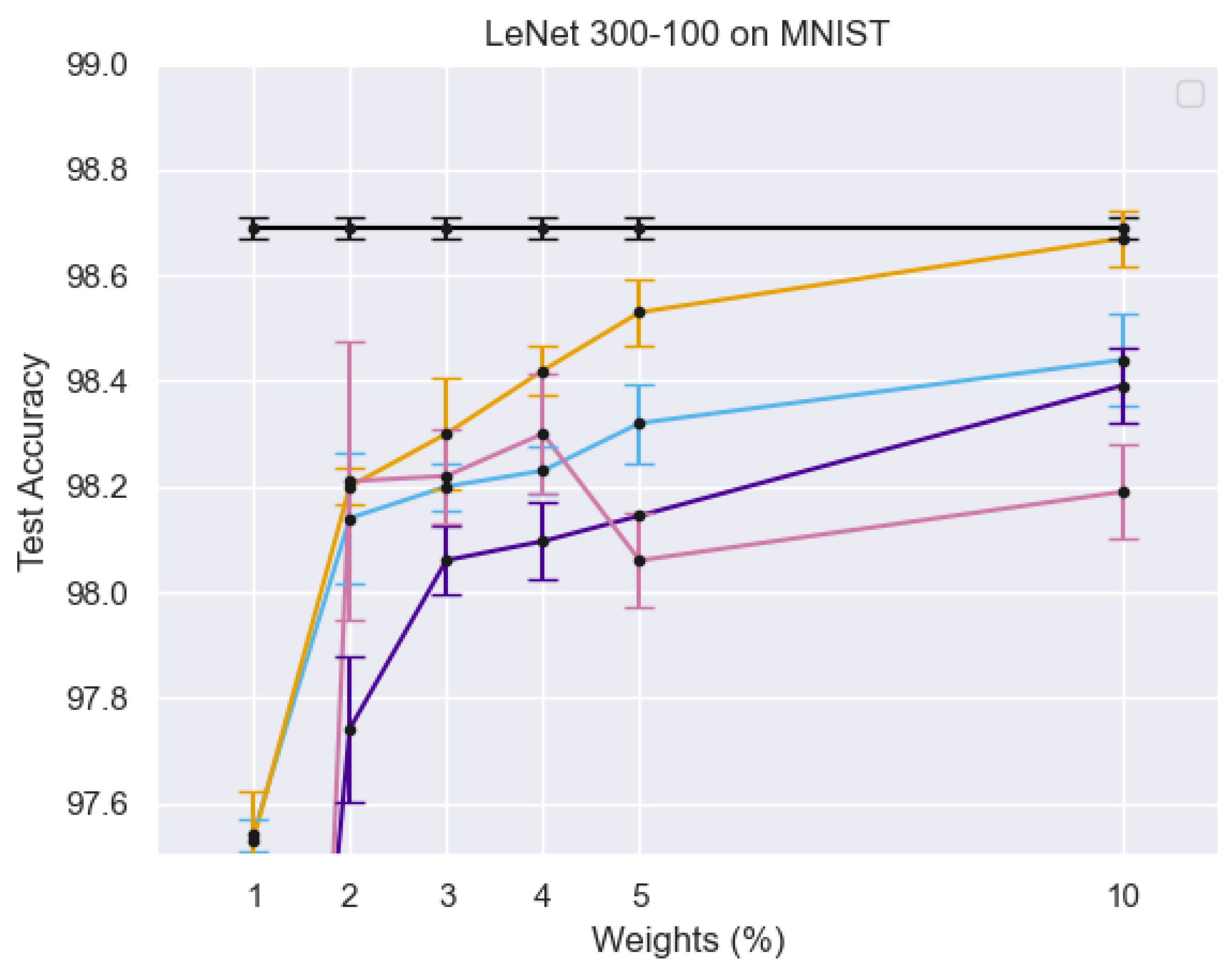

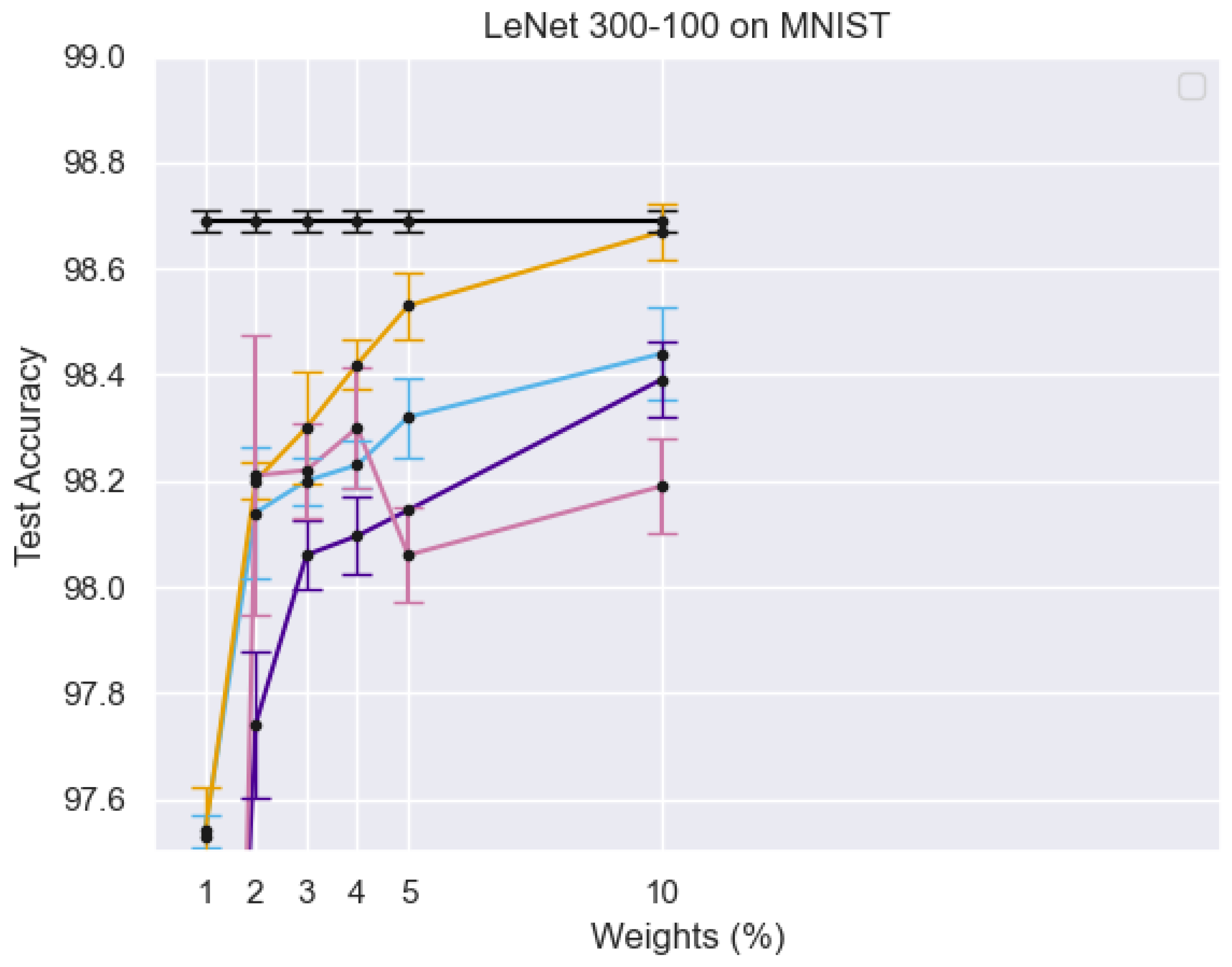

Figure 4 illustrates the training process of the Student Network, where real face images from the target domain are passed through both the teacher and student networks. The different curves in the graph represent test accuracy trends under varying percentages of active weights, showing the impact of sparse training on model performance. The error bars indicate the variance in accuracy, demonstrating the robustness of the PS network. The results suggest that using a limited number of active weights still achieves competitive accuracy, reinforcing the efficiency of the sparse training strategy.

Figure 5 illustrates the training process of the PS network, where target domain face images are processed within both the teacher and student networks. Multi-level similarity is computed to optimize the PS network using sparse training.

X-axis (Weights %): Represents the percentage of active parameters in the sparse PS network.

Y-axis (Test Accuracy): Measures the model’s performance on the MNIST dataset. Different Colored Lines: Represent different experimental configurations, highlighting variations in model architectures, training strategies, or sparsity levels. Black Line with Error Bars: Likely represents a baseline model or fully dense network for comparison. Other Lines: Show how test accuracy improves or fluctuates as the percentage of active weights increases, demonstrating the impact of sparse training on classification performance.

The feature representations generated by the IT and PS networks are given by

where

are feature representations from the teacher network (IT), and

are feature representations from the student network (PS).

A cosine similarity function is used to evaluate the sequence between these feature representations, as defined in Equation (

6):

where

f and

represent feature vectors. The Learning Similarity (LS

S) function, which serves as the optimization objective, is defined as

where

are weighting factors controlling the contribution of different feature levels.

The learner network optimization function is formulated as follows:

where

and

are parameters of the mentor and learner networks, respectively.

Employing multiple PS networks increases the total number of features in the system, which poses a challenge in terms of computational efficiency. To mitigate this, we introduce a sparse training technique, where the PS network utilizes sparse convolution kernels, ensuring that only a selected percentage (

) of the parameters are active. This client and source domain setup is shown in

Figure 6.

The determination of active and inactive parameters in the convolution layers is defined as follows:

where

represents active parameters,

denotes inactive parameters,

is the initial indicator, and

is the threshold.

To maintain network efficiency, pruning is applied using Equation (

10), where a portion of the least important parameters is removed:

New parameters are grown through an adaptive regrowth mechanism, defined as

where

and

are smoothing factors. The updated growth selection is defined in Equation (

13):

To further enhance the PS network’s resilience, we incorporate adversarial training and data augmentation. The adversarial loss function, optimizing detection against adversarial samples, is defined as

Random cropping, rotation, flipping, and color jittering improve the student network’s generalization ability.

The final training objective of the PS network combines both adversarial and supervised loss functions:

where

and

balance the contributions of the adversarial and supervised loss The overall pertinacious student network training algorithm shown in Algorithm 2.

3.4. Classification Techniques

Traditional classification methods often focus solely on facial appearance, neglecting contextual factors such as facial expressions and background information. However, these factors can significantly impact classification performance, especially for challenging samples.

We employ facial expression analysis to enhance the classification performance by capturing subtle variations in eye movements, eyebrow positioning, and mouth shape. This allows our model to differentiate natural expressions from artificially manipulated ones, a key indicator of attack presentation (AP) attacks. Our method builds upon existing facial analysis techniques, such as those in [

32], where facial micro-movements were used to improve biometric authentication.

Background cues often provide additional discriminatory information in PAD. Object detection and scene recognition techniques are integrated into our model to analyze the surrounding environment of an input face. This helps in detecting inconsistencies in the scene, which are common in photo, video, and deepfake-based attacks.

Given an input face image, our model performs feature extraction using a deep convolutional network, followed by a classification stage. The extracted features are processed using a fully connected layer and a softmax function to classify the image as genuine or attack:

where

W represents the weight matrix,

F is the feature vector extract, and

b is a bias term.

To further increase the effectiveness of our PAD model, we employ adversarial training, where the model is exposed to synthetically generated attack samples. Instead of detailing the adversarial loss, we refer the reader to prior works on adversarial learning.

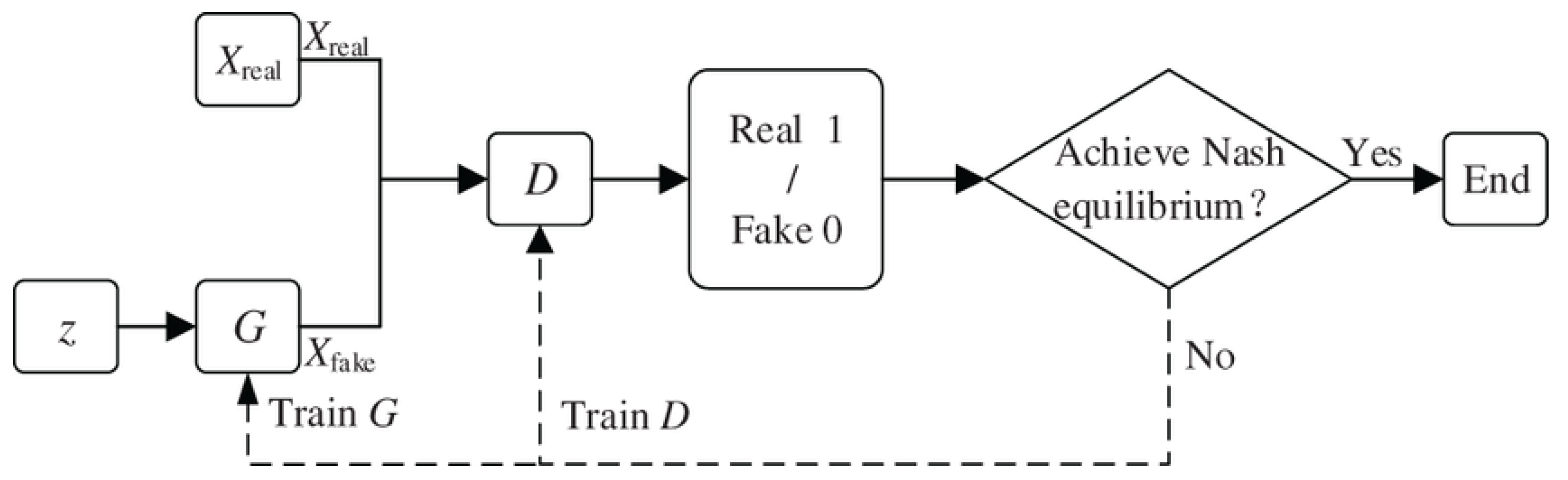

We utilize a GAN network shown in

Figure 7, where

| Algorithm 2: Pertinacious Student Network Training Algorithm |

Input: Genuine face images from , IT parameters , sparse selection (SS) density , initial regrowth rate , learning rate , maximum training iterations , batch size , and regrowth period T Result: SS parameters

- 1.

Initialize model parameters ; - 2.

For each convolutional layer, determine the parameter count for the m-th layer; - 3.

Identify indices for active parameters totaling as per Equation ( 6), and for inactive parameters totaling as per Equation ( 7); - 4.

Set parameters for all ; - 5.

For

to

do - (a)

Sample examples from ; - (b)

Extract features , , using , and features , , using ; - (c)

Calculate with , , , , , as shown in Equation ( 4); - (d)

Update active parameters in using , , and ; - (e)

Adjust regrowth rate using cosine decay; - (f)

If

then - i.

Count active parameters ; - ii.

Identify indices for the parameters to be pruned as per Equation ( 8); - iii.

Update active and inactive parameter indices: , ; - iv.

Identify indices for the parameters to be grown as per Equations ( 9)–( 12); - v.

Update active and inactive parameter indices: , ;

- 6.

Compute similarity between teacher and student representations using the defined measurement; - 7.

Calculate loss based on the weighted similarity sum at different feature levels; - 8.

Update Pertinacious Student (PS) network weights using gradient descent or an appropriate optimizer, minimizing with respect to student network parameters (); - 9.

Apply sparse training to adjust the non-zero parameter density in the PS network based on indicator magnitudes; - 10.

Perform regular parameter regrowth by pruning inactive parameters and growing new active ones based on their potential to reduce ;

Result: Return

|

Figure 7.

Basic structure of a generative adversarial network (GAN) [

33].

Figure 7.

Basic structure of a generative adversarial network (GAN) [

33].

During training, the teacher network acts as the discriminator, while the student network learns adversarial patterns. The adversarial loss function follows the standard GAN formulation, as in Equation (

16). This ensures that the student network learns more generalized attack features, improving its ability to detect unseen attacks.

The use of synthetic data in our model raises concerns regarding privacy and ethical implications, particularly the risk of misuse and data exposure. To mitigate these risks, we propose incorporating differential privacy techniques in synthetic data generation to prevent leakage of sensitive information. Additionally, access control mechanisms, including encryption and authentication protocols, can help regulate access to generated data. We also implement adversarial training techniques to minimize unintended data disclosure and enhance privacy protection. Bias in attack sample generation is another critical concern, and to address this, we ensure the use of balanced datasets that provide an equal representation of different demographic groups. Furthermore, fairness-aware training techniques and fairness evaluation metrics such as disparate impact and equalized odds are employed to mitigate algorithmic bias and ensure unbiased classification.

3.5. Network Architecture and Implementation Details

Our model consists of a deep convolutional neural network (CNN) with the following architecture:

Input Layer: Takes face images of size 224 × 224 pixels.

Convolutional Layers (5 layers): Extracts spatial features from facial images.

Batch Normalization and ReLU Activations: Ensures stable training and non-linearity.

Pooling Layers (2 layers): Reduces dimensionality, while preserving key facial features.

Fully Connected Layer (1 layer): Maps features to classification scores.

Softmax Output Layer: Classifies the image as real or attack.

Our model was trained using PyTorch, and the following hyperparameters were used:

Batch size: 32

Learning rate: 0.0001 (using Adam optimizer)

Number of epochs: 50

Pretrained weights: The model was initialized with weights from ResNet-50, followed by fine-tuning.

Before classification, face images were cropped using MTCNN (Multi-task Cascaded Convolutional Networks) to ensure accurate face localization. The cropped face was then resized to 224 × 224 pixels before being passed through the neural network.

During inference, the trained model evaluates input face images using both the mentor and learner networks. The teacher network extracts multi-level feature representations, which are processed using Equation (

17):

where

computes the cosine similarity between the teacher and student network features.

The final classification decision is based on a threshold

:

To further improve prediction accuracy, we use

Ensemble Learning: Aggregates outputs from the teacher and student networks.

Fine-Tuning: Adjusts the model for target-specific datasets.

Transfer Learning: Adapts the model to new attack variations with minimal re-training.

4. Observations and Experimental Results

To form an opinion of the effectiveness of our present model, in general and in client-specific domain adaptation scenarios, we conducted experiments using multiple protocols. These protocols depicted synthetic real-world settings, ensuring that the model maintained a high accuracy and adaptability across different PAD environments. We evaluated the model’s performance using cross-domain face PAD datasets, including IDIAP REPLAY-ATTACK [

20], CASIA-FASD [

34], MSU-MFSD [

35], NTU ROSE-YOUTU [

36], and OULU-NPU [

37].

Our approach introduces computational overheads due to the incorporation of multiple facial expression variations and diverse backdrops, which increase the processing power required for feature extraction and classification. This added complexity can impact inference speed, particularly on resource-constrained devices. To address these challenges, we discussed various optimizations, including model compression through pruning and quantization to reduce the model size, parallel processing leveraging GPU acceleration, and the use of lightweight data augmentation techniques to balance computational costs and data diversity. Furthermore, we explored the potential of edge AI models to ensure efficient deployment on resource-limited devices, without compromising accuracy.

To ensure a standardized evaluation of face presentation attack detection (PAD) performance, we used the internationally recognized ISO/IEC 30107-3 [

38] standard for biometric PAD appraisal. The following key performance metrics were considered:

Attack Presentation Classification Error Rate (APCER): Measures the ratio of attack presentations incorrectly classified as genuine.

Bona Fide Presentation Classification Error Rate (BPCER): Measures the ratio of Bona Fide Presentations (BPs) incorrectly classified as attacks.

Detection Error Tradeoff (DET) Curves: Visualize the tradeoff between APCER and BPCER, helping in performance comparisons.

4.1. General One-Class Domain Adaptation

We tested our face PAD system under one-class domain adaptation using the CIMN One-Class Domain Adaptation protocol, inspired by prior domain adaptation methods mentioned in

Table 1. The experiment followed these steps:

Each dataset was treated as a separate domain, serving as a source or target domain in different experimental settings.

The model was trained with full source domain training data and genuine face data from the target domain’s training set.

Performance was assessed using the target domain’s test set, evaluating the model’s generalization ability.

Comparisons wee made against the OULU One-Class Adaptation Structure A protocol from recent studies, to ensure a standardized evaluation.

4.2. Client-Specific Domain Adaptation

Our model’s quality in client-specific one-class domain adaptation was further evaluated using the CIM-N Client-Specific One-Class Domain Adaptation protocol. This setup enhanced the model performance for each target client by using limited authentic face data.

The experiment setup included

Ten clients selected from the NTU ROSE-YOUTU dataset, each representing a unique target domain.

Each client had 50 real-life face videos and 110 attack videos.

Twenty-five real face videos sample and 110 attack videos were used as the test set.

One frame per client from the remaining 25 authentic videos was used as training data.

Source Domains: CASIA-FASD (C), IDIAP REPLAY-ATTACK (I), and MSU-MFSD (M). The experiment was conducted under three sub-protocols: C-N-CS, I-N-CS, and M-N-CS, evaluating 10 client-specific tasks per protocol as sown in

Table 2.

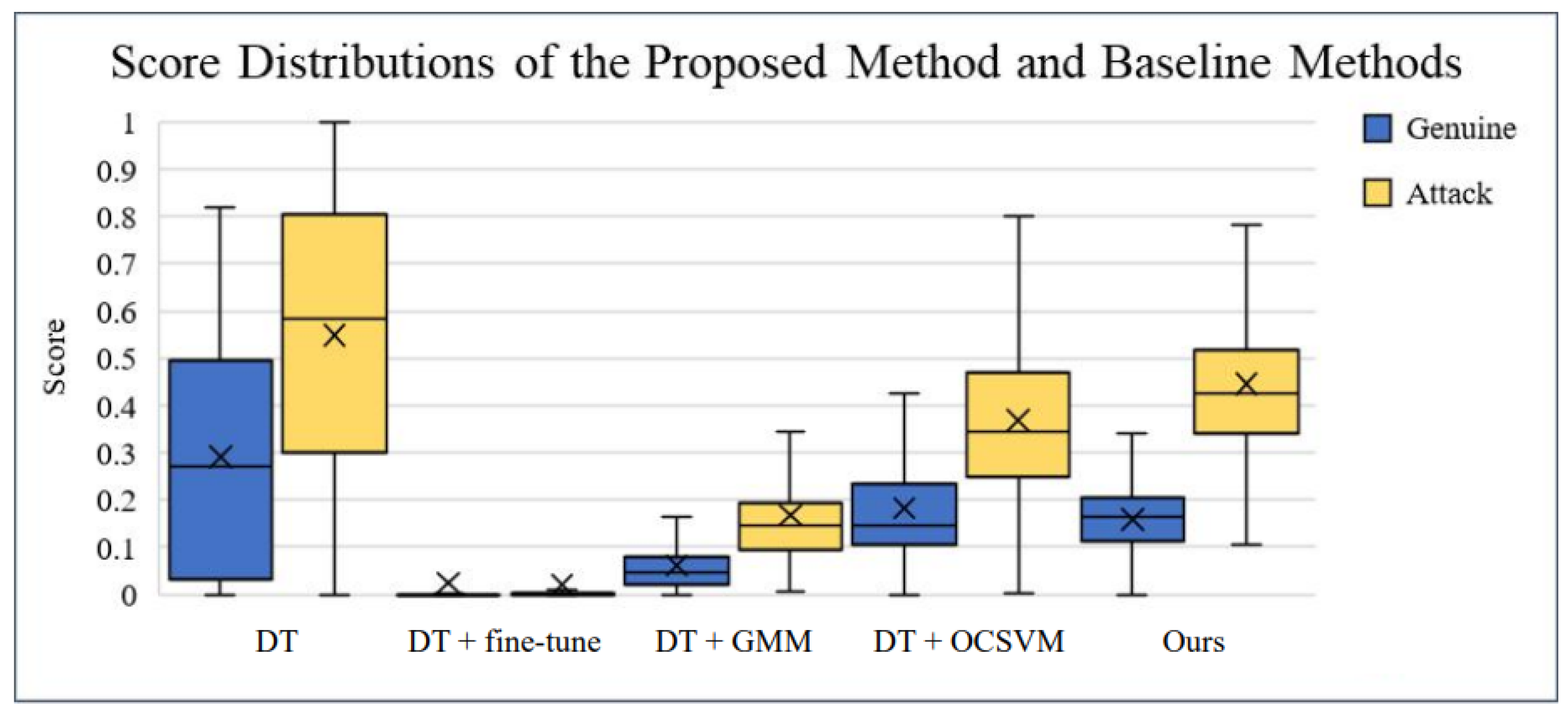

4.3. Comparison with Conventional Methods

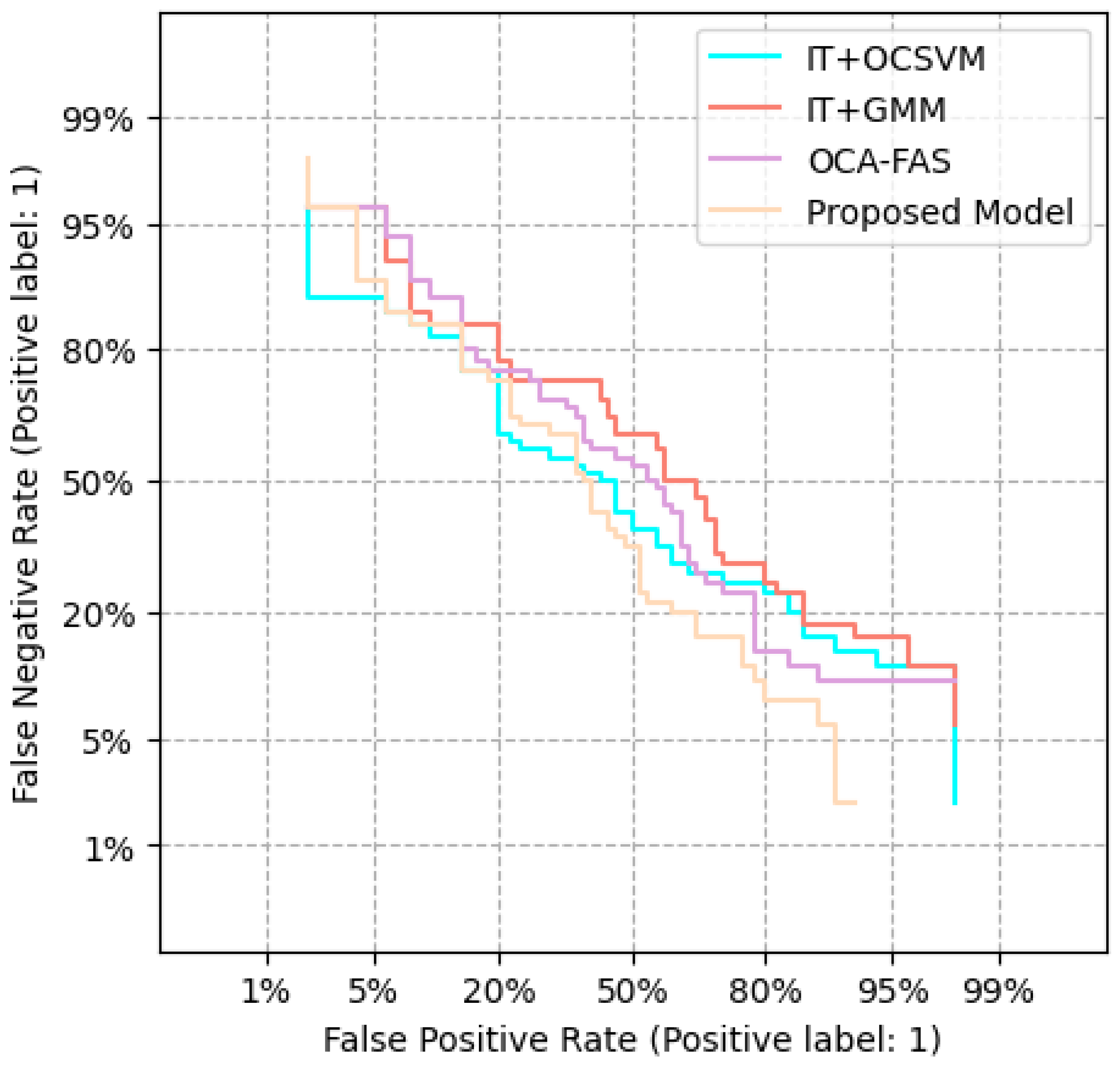

To validate the performance of our presented system, we implemented several conventional PAD techniques in one-class domain adaptation. These included

Depth Regression Network (DT): Used as the backbone method, trained on the source domain data shown in

Figure 8.

IT + OCSVM: One-Class Support Vector Machine classifier trained using features from the pre-trained IT model.

IT + GMM: Gaussian Mixture Model trained using IT-extracted features, commonly used for one-class classification.

OCA-FAS: A recent one-class adaptation method for face PAD.

Unsupervised Domain Adaptation Methods: Comparisons were made against KSA, ADA, UDA, and USDAN-Un.

4.4. Key Observations

Our model consistently outperformed the existing PAD methods in general, one-class, and client-specific domain adaptation.

APCER and BPCER values were significantly lower, ensuring fewer false positives and negatives.

The introduction of facial expression-based classification and background analysis contributed to improved cross-domain generalization.

DET curves confirm that our model maintained better performance trade-offs compared to the conventional methods.

5. Details of Implementation

To ensure a fair evaluation, we followed a standardized preprocessing pipeline across all datasets. The implementation details, including data preprocessing, model training, and evaluation settings, are discussed below.

We adopted a consistent preprocessing approach for the five datasets used in this study: CASIA-FASD [

34], IDIAP REPLAY-ATTACK [

20], MSU-MFSD [

35], NTU ROSE-YOUTU [

21], and OULU-NPU [

37]. The data preprocessing steps were as follows:

Frame Sampling: Unlike previous works that randomly selected 50 frames per video, we followed the data selection protocol of [

10], uniformly selecting 25 frames per video. This ensured comparability with standard PAD methods.

Facial Detection and Alignment: We used the dlib library for face detection and alignment, ensuring consistent cropping and frontal alignment across datasets.

Facial Resizing: All face images were resized to 128 × 128 pixels, maintaining consistency and reducing computational overhead.

To validate the impact of frame selection, we compared results using 50 random frames and 25 uniformly selected frames. The results indicated minimal performance differences, supporting our decision to adopt the standardized 25-frame selection.

The final number of images obtained per dataset after preprocessing were CASIA-FASD—30,000, IDIAP REPLAY-ATTACK—58,400, MSU-MFSD—13,700, NTU ROSE-YOUTU—161,950, and OULU-NPU—246,750.

We used the Adam optimizer to train both the DT network and SS network. The IT network was trained over 8400 renewals with a small batch size of 30 and learning rate of

. The SS network was trained over 1500 iterations with a mini-batch size of 25 and the same learning rate. In our framework, we set the weights in Equation (

4) to be equal, each with a value of 0.33. For tests with SS densities of 10% and 1%, we set the regrowth period

T to 60 and the initial regrowth rate

to 50% and 20%, respectively, using cosine decay to adjust the regrowth rate.

We used PyTorch version 1.7.0 to develop our suggested framework as well as the baseline techniques, ensuring compatibility and leveraging the deep learning framework’s capabilities.

5.1. General Domain Adaptation Experiments

5.1.1. Experiment with CIMN-OCDA

We tested the efficiency of our suggested methodology for face presentation attack detection (PAD) using the CIMN-OCDA protocol. Our approach was compared to baseline methods, all utilizing the same IT model as feature extractor. The student (PS) model’s density was fixed at 10%. We evaluated the cross-dataset achievements of the face PAD techniques with two experimental setting: ideal, and demanding. The experimental results for the ideal settings are shown in

Table 3.

The Half Total Error Rate (HTER) performance was directly computed on the target dataset’s test set using the optimal threshold in the ideal environment.

Table 3 shows the results for this optimal configuration. In the demanding setting, the HTER was computed at a threshold selected on a validation set, with the False Rejection Rate (FRR) set to 10%. For trials using dataset I as the target, authentic face samples from the development set served as the evidence set. For datasets C, M, and N, which did not have a development set, we used 20% of the real face samples from the target dataset’s training set as a validation set, as shown in

Table 4.

Table 5 presents the outcomes for the demanding situation.

Our proposed methodology consistently achieved a significant reduction in HTER across the various tasks compared to the DT method

Table 6. The average HTER reduction exceeded 10%, demonstrating the effectiveness of our strategy in improving face PAD performance with a small number of authentic face samples from the target domain. Furthermore, our technique outperformed the streamline methods using 1C domain adaptation, achieving the best performance in the ideal and challenging trial scenarios shown in

Table 7.

In

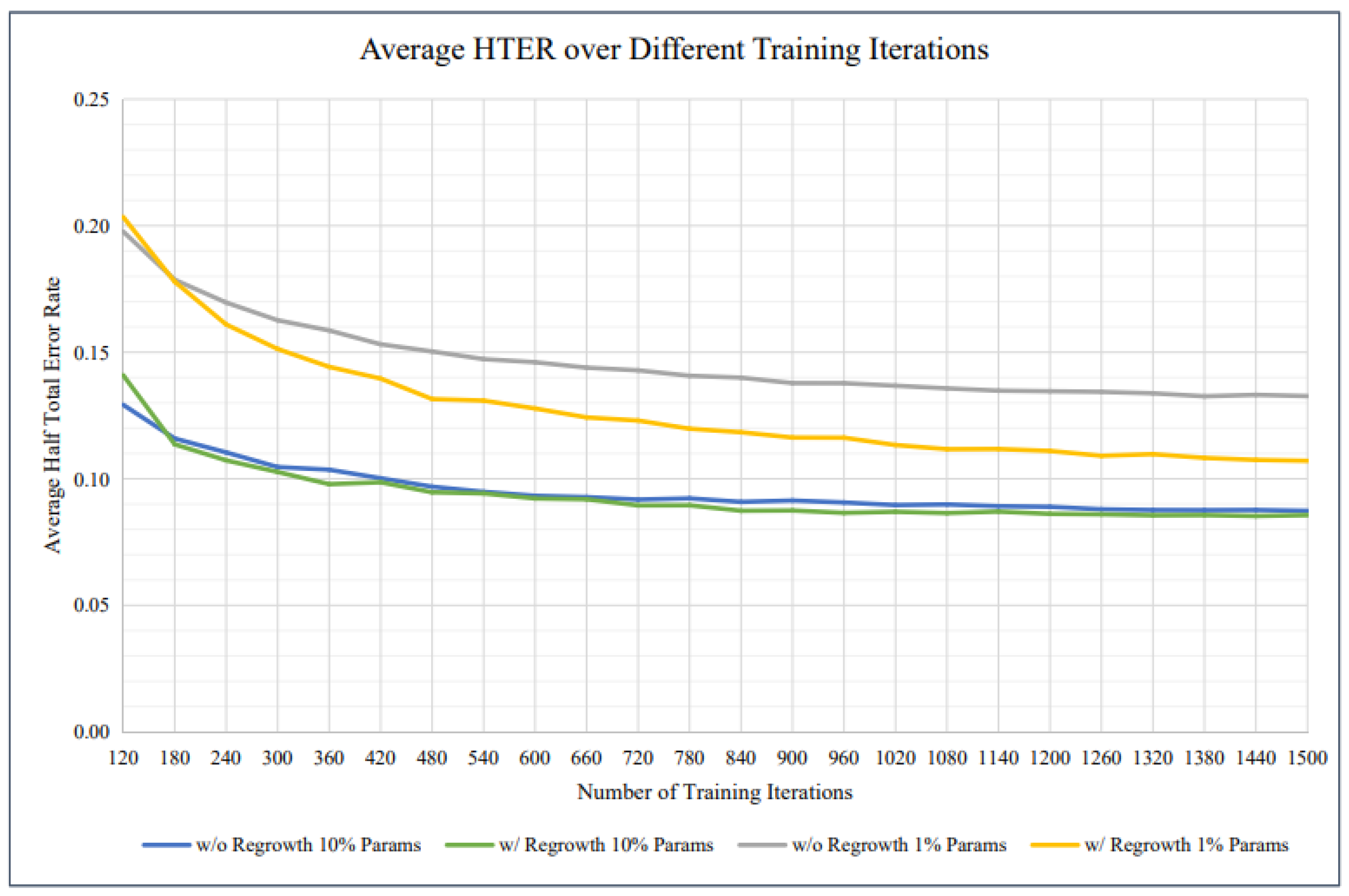

Figure 9, apresents a comparative analysis of average Half Total Error Rate (HTER) across different training iterations for models trained with and without parameter regrowth. The graph includes four curves representing different conditions: models trained without regrowth using 10% parameters (blue line), with regrowth using 10% parameters (green line), without regrowth using 1% parameters (gray line), and with regrowth using 1% parameters (yellow line). The trends indicate that models incorporating parameter regrowth generally achieve lower HTER, demonstrating improved performance. Additionally, models trained with 10% parameters consistently outperform those using only 1% parameters. This comparison highlights the effectiveness of regrowth strategies in enhancing model training efficiency and accuracy.

5.1.2. Experiments on OULU-OCA-SA

For our proposed technique against OCA-FAS using the OULU-OCA-SA protocol, a recent method for PAD, we tested our approach on Protocol 3 in OULU-OCA-SA, setting the density of the Student (SS) model to 10%. The experimental results for Protocol 3, as shown in

Table 4, revealed that our technique significantly outperformed both DTN and OCA-FAS. Our approach achieved an AUC of 99.98% and an Average Classification Error Rate (ACER) of 0.08%. Incorporating genuine face samples from the targeted domain for domain adaptation led to a 3.49% reduction in ACER and a 1.46% improvement in AUC compared to our baseline technique shown in

Figure 10.

5.2. Client-Specific Domain Adaptation Experiments

We conducted experiments in a general one-class domain adaptation setting, demonstrating the strong effectiveness of our proposed method for improving face presentation attack detection (PAD) performance using only a few genuine face images from specific target clients shown in

Table 8.

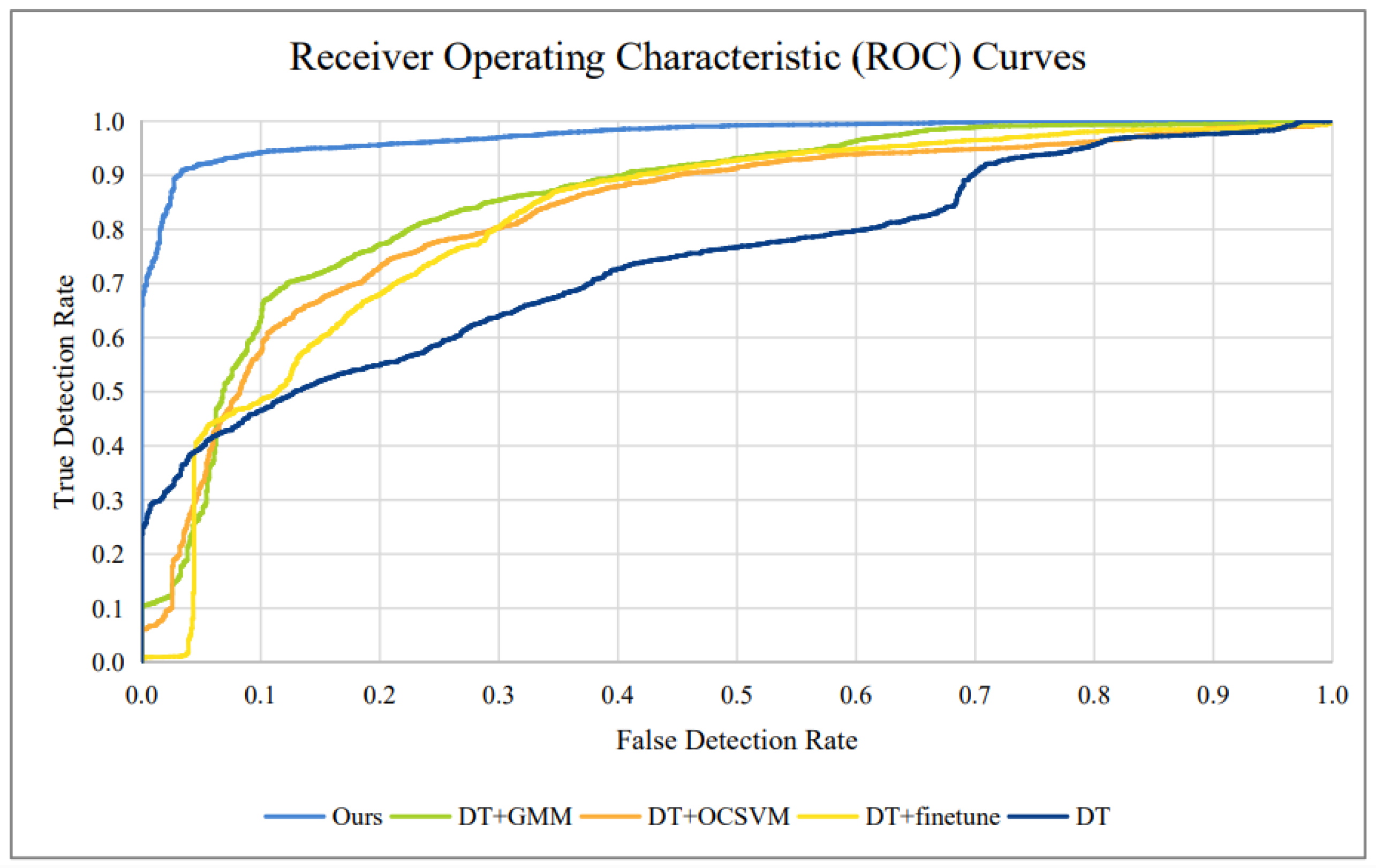

Furthermore,

Figure 11 depicts the Receiver Operating Characteristic (ROC) curves of our presented approach and baseline methods on job 1. For various False Detection Rate (FDR) situations, the True Detection Rate of our technique outperformed the TDRs of the baseline methods. The benefit of our strategy was especially noticeable for low FDR thresholds.

To evaluate the robustness of our approach across cultural and ethnic contexts, we conducted tests on datasets containing diverse ethnic groups and cultural backgrounds. Our findings suggested that variations in skin tone, facial structure, and cultural expressions could influence model performance. While our method demonstrated strong generalization across different ethnicities, minor discrepancies in accuracy were observed due to dataset imbalances. To enhance fairness and inclusivity, we propose expanding the training data to incorporate additional cultural variations, thereby improving the model performance across diverse populations.

7. Conclusions

In this research, we proposed a series of novel strategies to enhance the classification accuracy of face presentation attack detection (PAD) models, with a focus on challenging cases. Our approach expands face PAD by incorporating facial expressions and dynamic backdrops, leveraging data augmentation techniques, adversarial training, ensemble learning, fine-tuning, transfer learning, model interpretability, and attention mechanisms. Through rigorous experimentation on benchmark datasets, we demonstrated that our proposed methodology significantly outperformed existing approaches. The improvements achieved in classification accuracy can enhance the security and reliability of face recognition systems in real-world applications. Furthermore, our refined evaluation framework followed standardized preprocessing protocols, including the uniform selection of 25 frames per video, ensuring fair benchmarking with state-of-the-art PAD methods. While our method showed substantial potential, certain limitations remain. Future research should focus on addressing dataset biases, improving cross-domain adaptability, scaling to larger datasets, enhancing robustness against novel attack strategies, and enabling real-time implementation. By refining and extending the methodologies presented, we can further enhance the effectiveness and applicability of face PAD systems. Ultimately, our work contributes to strengthening the security and reliability of facial recognition systems, ensuring their robustness across diverse real-world environments.