Abstract

Online video platforms have enabled unprecedented access to diverse content, but minors and other vulnerable viewers can also be exposed to highly graphic or violent materials. This study addresses the need for a nuanced method of filtering gore by developing a segmentation-based approach that selectively blurs blood. We recruited 37 participants to watch both blurred and unblurred versions of five gory video clips. Eye-based physiological and gaze data, including eye openness ratio, blink frequency, and eye fixations, were recorded via a webcam and eye tracker. Our results demonstrate that partial blood blurring substantially lowers perceived gore in more brutal scenes. Additionally, participants exhibited distinctive physiological reactions when viewing clips with higher gore, such as decreased eye openness and more frequent blinking. Notably, individuals with a stronger fear of blood showed an even greater tendency to blink, suggesting that personal sensitivities shape responses to graphic content. These findings highlight the potential of segmentation-based blurring as a balanced content moderation strategy, reducing distress without fully eliminating narrative details. By allowing users to remain informed while minimizing discomfort, this approach could prove valuable for video streaming services seeking to accommodate diverse viewer preferences and safeguard vulnerable audiences.

1. Introduction

With the expansion of internet infrastructure, more people than ever have access to high-speed online services [1,2]. This increased connectivity has fueled the global popularity of streaming platforms such as YouTube and Over-The-Top (OTT) media services (e.g., Netflix). According to a Statista report, approximately 3.1 billion OTT video mobile apps were downloaded worldwide as of 2023 [3]. Short-form video platforms like YouTube Shorts, TikTok, and Reels have also shown rapid growth, highlighting the pervasiveness of online video consumption. As smartphones and mobile networks become widespread, video streaming has evolved into a daily activity for a broad spectrum of users.

Despite the convenience and entertainment value of online videos, harmful or inappropriate material—such as gory or violent clips—remains easily accessible to minors with minimal oversight. For instance, social media platforms like Instagram, Snapchat, and TikTok can expose children and adolescents to unsuitable content [4,5], and a large-scale Mozilla Foundation study found that approximately 15% of user “regrets” on YouTube were due to violent or graphic content recommended by the platform [6].

Viewers are also becoming desensitized to violent imagery, often seeking more intense stimuli for continued engagement [7]. This issue is amplified by the ease with which anyone—including children—can encounter disturbing scenes [8,9]. Moreover, prolonged exposure to violent media correlates with increased aggression and hostility [10,11], and unexpected encounters with blood or gore can trigger strong disgust or revulsion [12]. These findings underscore the need for targeted solutions that limit exposure to graphic content without eliminating its informative or contextual elements.

Many studies have explored automated techniques to detect and filter explicit or harmful video content (e.g., violence or nudity) through machine learning and deep learning [9,13,14]. Some methods block or warn about flagged segments [8,15], while others remove violent frames entirely [16] or apply a blurring technique to obscure inappropriate scenes or objects [17,18,19,20,21]. Although these strategies effectively limit audiences’ exposure to problematic content, most research focuses on detection accuracy and filtering performance, neglecting to examine how such interventions influence user experiences.

Studies on viewers’ physiological and behavioral responses to gruesome or frightening material have revealed that variables like blink frequency, eye gaze, and heart rate shift when exposed to violent imagery [22,23,24,25]. However, many of these works compare highly disturbing stimuli with entirely neutral clips, leaving a gap in understanding how partial reductions in gore—such as segmentation-based blurring—affect the viewing experience. Additionally, individual differences in fear or phobia may significantly alter users’ reactions, including avoidance behavior and elevated arousal [26,27,28].

To address these gaps, we developed a segmentation-based blood-blurring model aimed at reducing the intensity of violent scenes. We then compared user experiences when watching blurred versus unblurred versions of the same clips. Using a webcam and an eye tracker, we collected eye-focused physiological and gaze data—Eye Openness Ratio, Blink Frequency, and Eye Fixations—to answer the following research questions:

- RQ1: To what extent does a segmentation-based blood blurring reduce viewers’ perceived gore?

- RQ2: How do different levels of gore influence viewing experience (i.e., eye-focused physiological reactions and eye gaze)?

- RQ3: In what ways is an individual’s fear level (i.e., blood- or injury-related phobias) associated with the viewing experience of gory videos?

Through an experiment involving 37 participants, we observed that (1) our blood-blurring model effectively lowers perceived gore, (2) participants exhibit significant differences in physiological and attentional indicators when viewing more graphic footage, and (3) an individual’s fear of blood (particularly blood-self) correlates with increased blink frequency.

Our study makes several contributions to Human–Computer Interaction (HCI). First, it transcends binary comparisons of highly violent versus neutral content by investigating how varying levels of gore affect viewer experience. Second, by monitoring physiological signals and gaze patterns, it shows that partial blurring can mitigate distress without fully removing underlying details. Third, these insights inform design implications for interactive video platforms, such as adjustable blood blurring or responsive moderation aligned with personal gore tolerance. Ultimately, our work supports more nuanced content moderation strategies, helping viewers stay informed without unnecessary exposure to graphic imagery.

2. Related Work

2.1. Detection and Filtering of Inappropriate Video Content

A significant body of research has focused on detecting and filtering inappropriate or harmful content in videos, such as violence and nudity, using machine learning and deep learning approaches. For instance, researchers have proposed methods that analyze visual cues (e.g., bloodstains and weapons) and auditory signals (e.g., gunshots and screams) to identify violent scenes [9,13]. Others have expanded these techniques to handle multiple modalities, including text, images, and video, in order to recognize not only violence or sexual content but also hate speech [14].

Beyond mere detection, several studies focus on warning users or completely blocking access to content deemed inappropriate. One system [8] issues alerts to a guardian whenever TikTok detects violent or unethical clips, allowing the guardian to restrict access. Similarly, other approaches filter or remove flagged segments, effectively preventing underage users from encountering explicit video content [15]. Some systems even delete frames containing violent scenes to reconstruct clean versions of the content [16].

Another line of research focuses on using blurring techniques to hide explicit or graphic visuals in a video rather than blocking the entire content. Some methods identify entire scenes that are considered inappropriate or violent and apply a blur to these sections [17,18]. Other advanced approaches concentrate on detecting harmful or explicit objects in images or videos [19,20,21], using machine learning or deep learning to locate the problematic areas and obscure them without affecting the rest of the scene.

Although these strategies effectively limit the audience’s exposure to inappropriate or harmful content, most studies concentrate on detection accuracy and filtering performance without thoroughly examining how such measures affect the user’s viewing experience. Our study not only develops a deep learning model to detect harmful content in violent scenes and apply blurring but also explores how this intervention shapes viewers’ experiences. By examining physiological data (i.e., eye openness ratio and blink frequency), behavioral data (i.e., eye gaze), and self-reported (i.e., perceived gore) data, we seek to clarify the impact of blurring on audience reactions. This user-centered perspective provides valuable insights for developing advanced content moderation systems that balance user safety with a nuanced, customizable viewing experience.

2.2. Behavioral and Physiological Reactions to Inappropriate or Harmful Content

Research suggests that individuals exhibit distinctive facial reactions, including eye squeezing, when viewing inappropriate or harmful content. One study [29] reported more pronounced facial muscle tension, such as furrowed brows, among participants watching fear-related stimuli (e.g., accident scenes, aggressive animals) compared to those observing neutral images. Another investigation [25] found that viewers cringed at disgust-inducing images (e.g., spoiled food, contaminated objects).

In addition, several works [22,23,30] noted that blink frequency often rises in response to violent or gore-heavy imagery, possibly reflecting a heightened startle reflex. Further evidence indicates that participants blinked more often when viewing aggressive images involving explosions, gunshots, or violence, suggesting that heightened aggression can amplify bodily responses [31]. Another work discovered that blink frequency increased when viewing aggressive video clips that elicited disgust, reflecting both attention diversion and an emotional avoidance response [24].

Many viewers avert their gaze more swiftly when confronted with threatening or repulsive content. Researchers [25,28] observed that participants showed shortened fixation times and a faster tendency to avoid frightening stimuli compared to neutral scenes.

Heart rate, skin conductance, blood pressure, and respiration can also shift considerably during the viewing of gruesome or frightening material. Studies [11,22,23,32,33,34,35] found increased heart rate in response to fear-inducing clips, violent scenes, or the presence of blood. Electrodermal Activity (EDA) refers to electrical changes on the skin surface that result from sweat gland activation, which is often triggered by emotional or physiological arousal. EDA tends to rise when individuals see images of snakes, open wounds, or realistic blood colors [22,32,33,36], signaling heightened autonomic arousal. Research also links blood phobia to higher blood pressure [27], and multiple experiments reported accelerated breathing rates among those confronted with frightening film clips or surgeries [27,32,33].

Most previous work compares physiological responses to highly disturbing content against responses to entirely neutral material. These comparisons do not always capture how partially reducing gore influences audience reactions. Our study addresses this gap by examining user responses to the same video clips presented in both blurred and unblurred formats. This design clarifies how decreasing the visibility of graphic elements affects blinking, gaze, and other measurements. It also extends beyond mere avoidance by investigating whether participants actually look at or ignore the gruesome objects in both versions of the same clip.

2.3. Viewing Experiences Depending on Individual Characteristics

Several studies have examined how personal fears or phobias influence responses to gory or disturbing content. Researchers have classified participants into groups based on blood–injection–injury (BII) or blood phobia, and then compared their reactions when viewing surgical footage or other graphic stimuli [26,27]. Results showed that individuals with higher blood-related fears reported stronger anxiety and displayed elevated physiological arousal, such as increased heart rate, blood pressure, or heightened avoidance behaviors.

Other investigations have focused on variations in fear responses at the individual level rather than within predefined phobia groups. Some studies relied on validated scales such as the Fear Survey Schedule (FSS) [37], the Fear of Pain Questionnaire (FPQ) [38], and the Fear Questionnaire (FQ) [39] to assess each participant’s fear levels [28,30]. Higher fear ratings correlated with stronger physiological reactions, such as more frequent blinking, faster breathing, or a tendency to look away when viewing gory or threatening scenes. In a virtual reality setting, greater intensity of frightening stimuli was associated with more pronounced gaze aversion, an effect that was magnified among participants who scored high on fear questionnaires [28].

Many previous studies have compared videos featuring intense or explicit content (e.g., violence, gore, or other disturbing material) with entirely neutral or nonviolent footage. This approach leaves a gap in our understanding of how partial reductions in gore might influence viewers. To address this gap, our study presents the same clips in both blurred and unblurred forms, and then measures physiological and gaze-related responses. In addition, fear of blood is assessed through subscales that distinguish between blood-self and blood-other, allowing for a deeper analysis of how individual blood-related sensitivities interact with different levels of gore in the same video.

3. Methodology

3.1. Development of a Blood Detection and Blurring Algorithm

In this study, we aim to detect blood in video content and apply blurring to enhance viewers’ overall watching experience. To achieve this, we develop and evaluate a model capable of segmenting and blurring blood regions in video frames.

For blood detection in videos, we adopt YOLO-based instance segmentation models. YOLO is known for its rapid inference speed and high accuracy. Among the available models, we focused on YOLOv5s-seg and YOLOv8s-seg. YOLO offers various model sizes—nano (n), small (s), medium (m), large (l), and xlarge (x)—with different levels of complexity. We selected the small (s) models to balance performance and inference speed.

To fine-tune the YOLO models to detect blood, we utilized three open blood-segmentation datasets [40,41,42]. In total, 4787 images (and their corresponding annotations) were used. These images included various red objects—such as bloodstained items (e.g., knives, tissues), bleeding faces and body parts, injuries, gore movie scenes, surgical images, blood droplets, and specimen containers/syringes holding blood—as well as non-blood images (e.g., red carpets, red clothes/accessories, red fruits, red text, general faces, and objects). The annotation files contained segmentation boundary coordinates for all visible blood regions in each image. We trained instance segmentation models on the datasets described above, focusing on YOLOv5s-seg and YOLOv8s-seg. The hyperparameters for both models were set as follows: epoch = 50 or 100, batch = 64, and imgsz = 640.

To evaluate model performance, we performed 5-fold cross-validation. The evaluation metrics were Precision, Recall, and Mean Average Precision (mAP), and the results are shown in Table 1. The mAP metric provides a comprehensive assessment of detection performance by computing precision at various recall levels. Specifically, we adopted the following two variations of mAP [43]:

- mAP50: This version considers a true positive if the predicted and ground-truth bounding boxes have an Intersection over Union (IoU) of at least 0.5. Since a 50% overlap is relatively lenient, this metric can be viewed as a less strict standard of detection.

- mAP50-95: Here, IoU thresholds range from 0.50 to 0.95 in increments of 0.05. Each threshold’s mAP score is calculated, and these scores are then averaged. This approach is more stringent, requiring a larger overlap between predicted and ground-truth boxes than the previous criterion.
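The IoU criterion behind both metrics can be illustrated with a short sketch. It is not taken from the evaluation code used in this study: the function names `box_iou` and `thresholds_passed` and the `(x1, y1, x2, y2)` box format are assumptions made for illustration. For the mask metrics, the same ratio would be computed over pixel sets instead of rectangles.

```python
def box_iou(a, b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred, truth):
    """Thresholds at which a prediction would count as a true positive."""
    iou = box_iou(pred, truth)
    return [t for t in IOU_THRESHOLDS if iou >= t]
```

A prediction that overlaps the ground truth with an IoU of about 0.67 counts as a true positive for mAP50 but only at four of the ten thresholds used by mAP50-95, which is why the second metric is the stricter one.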

Table 1.

Comparison of YOLO-based blood detection via instance segmentation: bounding boxes vs. segmentation masks.

| Metric | YOLOv5s-seg (Epoch = 50) | YOLOv5s-seg (Epoch = 100) | YOLOv8s-seg (Epoch = 50) | YOLOv8s-seg (Epoch = 100) |
|---|---|---|---|---|
| Box | | | | |
| Precision | 0.825 | 0.869 | 0.787 | 0.837 |
| Recall | 0.695 | 0.732 | 0.633 | 0.691 |
| mAP50 | 0.744 | 0.776 | 0.703 | 0.757 |
| mAP50-95 | 0.498 | 0.555 | 0.503 | 0.578 |
| Mask | | | | |
| Precision | 0.789 | 0.828 | 0.764 | 0.819 |
| Recall | 0.647 | 0.683 | 0.605 | 0.655 |
| mAP50 | 0.688 | 0.726 | 0.661 | 0.718 |
| mAP50-95 | 0.377 | 0.432 | 0.388 | 0.452 |

By comparing both mAP50 and mAP50-95, we can gauge not only the overall detection ability of our models but also how they perform under increasingly strict IoU requirements. The results indicate that our YOLO-based models detect blood across diverse scenarios. The YOLOv8s-seg model trained for 100 epochs achieved the highest mAP50-95 for both boxes (0.578) and masks (0.452) and exhibited the most balanced performance in blood segmentation, making it our chosen model for subsequent analyses.

To blur blood with the chosen YOLOv8s-seg model, we first used ffmpeg [44] to extract the audio track and split the video into individual frames at a rate of 30 fps. Next, we used cv2 [45] to read each frame and ran instance segmentation of blood on it. This process generated a pixel-wise segmentation map that assigned each pixel to a detected object instance, providing both box coordinates (for bounding boxes) and mask coordinates (for the segmented regions). The box coordinates, stored in a separate file along with frame numbers, were later used in the gaze-tracking analysis. The mask coordinates, which capture precise pixel boundaries, were used to apply blur to the identified blood regions. We then re-rendered each processed frame, combined the frames with the previously extracted audio track, and produced a final output video in which the blood content was blurred.
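The mask-restricted blur step can be sketched as follows. This is a minimal illustration rather than the implementation used in this study: the source does not specify the blur kernel, so a simple box blur is assumed, and `blur_masked_region`, its `radius` parameter, and the nested-list grayscale frame are hypothetical stand-ins for real image arrays.

```python
def blur_masked_region(frame, mask, radius=1):
    """Return a copy of a grayscale frame with a box blur applied only
    where mask is True.

    frame: list of rows of pixel intensities.
    mask:  list of rows of booleans with the same shape (True = blood).
    """
    height, width = len(frame), len(frame[0])
    out = [row[:] for row in frame]
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue  # pixels outside the blood mask stay untouched
            # Average over the neighbourhood, clipped at the frame borders.
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            window = [frame[j][i] for j in ys for i in xs]
            out[y][x] = sum(window) / len(window)
    return out
```

Because the average is written only at masked pixels, everything outside the segmented blood region keeps its original value, which is the property that distinguishes this approach from blurring a whole scene.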

3.2. Recruitment

Between December 2024 and January 2025, we conducted an experiment to examine the effectiveness of blurring blood scenes. A recruitment poster was created and distributed via the university’s online communities and bulletin boards, inviting potential participants to complete a screening survey. This survey, administered through Google Forms, included an adapted version of the Multidimensional Blood/Injury Phobia Inventory (MBPI) [46]. We adapted three blood-related subscales from the MBPI: Blood-self (4 items), Injury (6 items), and Blood/injury-Others (5 items). The participants answered each item on a 4-point Likert scale (1 = very slightly or not at all; 4 = extremely). The survey also included sample images and video materials containing blood that participants would later view during the experiment. We recruited individuals who completed the survey and indicated they had no issues watching videos containing blood.

A total of 50 individuals initially indicated both their willingness to participate and their comfort with watching gory videos via the survey. However, 13 failed to schedule a visit or did not attend due to personal reasons (e.g., flu or scheduling conflicts), leaving 37 final participants (15 females; age: M = 24.7, SD = 2.0). This study was conducted after obtaining approval from the Institutional Review Board (IRB) (approval number: KHGIRB-24-701). All participants were fully informed about the study’s objectives, procedures, and data protection measures, and they voluntarily consented to participate. They also agreed to the collection and use of their data for research purposes and were informed of their right to withdraw at any time.

3.3. Experiment

Each participant was invited individually to a laboratory that was fully set up for the experiment. The authors were present to provide and explain the research guidelines. During the experiment, only one participant and the authors remained in the laboratory, and no one else was permitted to enter or leave, ensuring that participants could concentrate on the experiment.

As a preview, participants first watched a 1.5 min clip from Saving Private Ryan (1998) (01:28:45–01:30:15), in which a soldier shot in the abdomen during war receives treatment. The preview reminded them that the experiment would involve scenes containing this kind of blood and gore. They were then asked to sign the consent form before proceeding with the main part of the experiment. Five primary video clips were used:

- Clip (1): Parasite (2019). A South Korean black comedy–thriller film directed by Bong Joon-ho. This clip includes a scene in which a knife murder turns a party into complete chaos. The clip (00:00:02–00:02:03) is from YouTube [47].

- Clip (2): Dr. Romantic (2016). A South Korean television medical melodrama. This clip shows an emergency patient undergoing abdominal surgery in a hospital. The clip (00:03:44–00:08:02) is from YouTube [48].

- Clip (3): Nasal Tip Plasty Surgery. This clip shows a cosmetic surgical scene. The clip (00:00:11–00:00:47) is from YouTube [49].

- Clip (4): The Witch: Part 2 (2022). A South Korean science fiction action horror film by Park Hoon-jung. This clip shows bullets being removed one by one from the body of a person lying as if dead. The clip (00:31:27–00:32:40) is from Naver Series On, which is an online VOD service [50].

- Clip (5): The Revenant (2015). An American epic Western action drama film directed by Alejandro G. Iñárritu. This clip shows a group of passersby discovering a person who collapsed after fighting a bear and administering emergency treatment. The clip (00:29:23–00:32:29) is from Naver Series On, which is an online VOD service.

All five video clips feature bloody scenes that might require blurring for some viewers. Each clip was provided in two versions (original and blurred), resulting in a total of 10 clips. Although the chosen clips differ in duration, we retained each clip’s natural length to preserve important contextual information because the contextual information strongly shapes perceived gore. Our repeated-measures design, where each participant viewed both the original and blurred versions of the same clip, mitigates potential effects from varying run times. To minimize learning effects, each participant viewed all 10 video clips in a randomized, counter-balanced order, accounting for both the clip sequence and the version type.

While each participant watched the videos, we collected three sensor-based measurements—Eye Openness Ratio, Blink Frequency, and Gaze Coordinates—using a webcam and an eye tracker. Therefore, prior to watching the short clip from Saving Private Ryan (1998), each participant underwent an individual calibration session to enable precise tracking of gaze coordinates and eye openness.

Eye Openness Ratio: We used the Eye Aspect Ratio (EAR) algorithm [51] to track the degree of eye openness. EAR is a computer vision technique that measures how open or closed a person’s eyes are by tracking specific facial landmarks around each eye. The algorithm computes a ratio of the vertical distances between the eyelids to the horizontal distance across the eye: when the eyes are more open, the ratio is larger, and when they are closed or blinking, the ratio decreases. We implemented the EAR algorithm in Python (v3.10.4) using the Dlib (v19.24.6) library [52].
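The ratio described above can be written compactly. The sketch below uses the commonly cited six-landmark form of EAR, in which two vertical eyelid distances are divided by twice the horizontal eye width. The landmark ordering `p1..p6` and the function name `eye_aspect_ratio` are assumptions for illustration, and the exact landmark indices used in this study are not stated in the text.

```python
import math


def eye_aspect_ratio(eye):
    """EAR from six (x, y) eye landmarks ordered p1..p6.

    p1 and p4 are the horizontal eye corners; (p2, p6) and (p3, p5)
    are the upper/lower eyelid pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


# Hypothetical landmark coordinates for an open and a fully closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0), (2, 0), (3, 0), (2, 0), (1, 0)]
print(eye_aspect_ratio(open_eye), eye_aspect_ratio(closed_eye))
```

Dividing by the horizontal width makes the value largely independent of how far the face is from the camera, so the ratio mainly tracks eyelid separation.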

Blink Frequency: To measure blink frequency, we also used the EAR algorithm and set 0.25 as the EAR threshold for counting blinks. Previous studies measuring blink frequency [53,54,55,56,57,58] indicate that 0.25 is an empirically appropriate threshold: it balances the risk of missing blinks against the risk of overcounting them because of subtle eye movements (noise), and it gives reliable results across different conditions such as lighting and face angles.
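One plausible way to turn a thresholded EAR series into a blink count is to count each run of consecutive below-threshold samples as a single blink. The sketch below follows that assumption. The 0.25 threshold comes from the text, while the function name `count_blinks` and the `min_frames` parameter are hypothetical and the actual counting rule used in this study may differ.

```python
def count_blinks(ear_series, threshold=0.25, min_frames=1):
    """Count blinks as runs of consecutive samples with EAR below threshold.

    min_frames is an illustrative guard against single-sample noise.
    """
    blinks = 0
    run = 0  # length of the current below-threshold run
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a run that lasts until the end of the series
        blinks += 1
    return blinks
```

Blink frequency for a clip would then be the count divided by the clip duration.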

Gaze Coordinates: To collect users’ gaze coordinates, we used a Tobii Eye Tracker 5 (Tobii, Stockholm, Sweden), which does not require any direct attachment to the participant. The device typically maintains an accuracy of about 30 pixels (1.01°) from the user’s true gaze point across the entire screen [59], and it has been widely used in UX and cognitive studies involving gaze-based metrics [60,61,62,63]. As in previous research, all experiments were conducted on a Full-HD (1920 × 1080) monitor to ensure consistent resolution conditions for all participants. We integrated the Tobii Game Integration SDK (v9.0.4.26) within a Unity environment to gather gaze coordinates (x and y) along with timestamps in milliseconds. Before the experiment, each participant underwent a personal calibration using the Tobii Experience app (v1.69.32600). During calibration, the participants were instructed to keep their bodies and heads stable, moving only their eyes to follow a point on the screen. After the calibration, participants were allowed to move their head and body freely while watching the video during the experiment. The gaze coordinates were used to determine whether a participant’s gaze fell inside the box coordinates of the blood regions (original version) or the blurred regions (blurred version) of the video.
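The check of whether a gaze point falls inside a blood region reduces to a point-in-rectangle test per video frame. The sketch below shows one way this could look. The data layout (a dictionary from frame number to a list of `(x1, y1, x2, y2)` boxes), the function names, and the mapping from millisecond timestamps to frames at 30 fps are all assumptions, and the mapping presumes that the gaze clock starts together with the video.

```python
def gaze_in_any_box(gaze_xy, boxes):
    """True if the gaze point lies inside at least one (x1, y1, x2, y2) box."""
    x, y = gaze_xy
    return any(x1 <= x <= x2 and y1 <= y <= y2 for (x1, y1, x2, y2) in boxes)


def fraction_on_blood(gaze_samples, boxes_by_frame, fps=30):
    """Share of gaze samples that land on a blood (or blurred) region.

    gaze_samples:   list of (t_ms, x, y) tuples in screen pixels.
    boxes_by_frame: dict mapping frame number -> list of boxes.
    """
    if not gaze_samples:
        return 0.0
    hits = 0
    for t_ms, x, y in gaze_samples:
        frame = int(t_ms * fps / 1000)  # timestamp -> video frame index
        if gaze_in_any_box((x, y), boxes_by_frame.get(frame, [])):
            hits += 1
    return hits / len(gaze_samples)
```

Given the tracker accuracy of about 30 pixels reported above, a practical variant might pad each box by a small margin before testing. That padding is a design option and not something the text describes.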

We applied calibration to three types of sensor data—Eye Openness Ratio, Blink Frequency, and Gaze Coordinates. The Eye Openness Ratio and Blink Frequency, recorded via a webcam using the Eye Aspect Ratio (EAR), were logged whenever a change in eye movement was detected (see Figure 1). On average, about three data points were captured per 0.1 s, so we used interpolation to normalize the values at 0.1 s intervals. Similarly, the Gaze Coordinates from an eye tracker were logged at approximately 30 frames per second, and we employed an averaging interpolation method to align them with the same 0.1 s intervals.
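Aligning irregularly logged samples to a common 0.1 s grid can be done by averaging all samples that fall into the same interval. The sketch below shows this averaging step only. The function name `resample_mean` and the `(t_ms, value)` sample format are hypothetical, and intervals without samples are simply left out here, whereas the interpolation described above would fill them.

```python
from collections import defaultdict


def resample_mean(samples, bin_ms=100):
    """Average (t_ms, value) samples within fixed-width time bins.

    Returns a dict mapping bin index -> mean value; bin 0 covers
    0-99 ms, bin 1 covers 100-199 ms, and so on.
    """
    buckets = defaultdict(list)
    for t_ms, value in samples:
        buckets[int(t_ms // bin_ms)].append(value)
    return {b: sum(v) / len(v) for b, v in sorted(buckets.items())}
```

Once both the EAR stream and the gaze stream share the same bin indices, they can be compared interval by interval.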

Figure 1.

Experimental environment for video clip viewing. (a) A participant performing an eye-tracking calibration procedure (an eye tracker is attached to the lower bezel of the monitor). (b) A participant watching the experimental video while sensor data (e.g., Eye Openness Ratio and Blink Frequency) are collected.

Over the course of about one hour, participants viewed 10 video clips, 5 original versions and 5 blurred to reduce bloody content, and then completed a brief interview after each viewing. Following each video, they rated its gore level on a 7-point Likert scale (1 = not gory at all; 7 = extremely gory), in line with prior research that used a 7-point scale to capture nuanced differences in perceived brutality [64,65]. They also provided a written rationale for their rating and participated in an interview about their viewing experience. To facilitate relative comparisons, the participants were instructed to treat the clip from Saving Private Ryan (1998) as having a score of 4, the midpoint of the 7-point scale.

The participants were informed that they could take breaks before or after viewing any video clip, and that they were free to close their eyes or look away from the screen if they felt uncomfortable. They were also assured that there would be no penalty for taking such breaks. All interviews were recorded with the participants’ consent, and upon completing the experiment, each participant received KRW 20,000 (approximately USD 15) as compensation.

4. Results

In this section, we use both user survey responses (the level of perceived gore) and sensor data (Eye Openness Ratio, Blink Frequency, and Eye Fixation on Blood Regions Using Gaze Coordinates) to examine the effects of our blurring model through statistical analyses. First, we ran a Shapiro–Wilk test to check for normality. Based on those results, we performed either a nonparametric test (a Wilcoxon signed-rank test, or a Friedman test for comparisons among three or more samples) or a parametric test (a t-test, or an ANOVA for three or more samples). All analyses were conducted at a 95% confidence level (α = 0.05).
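The test-selection rule in the paragraph above amounts to a small decision table, sketched below. The function name `choose_test` and its arguments are hypothetical, and the normality flag is assumed to come from the Shapiro–Wilk result. The sketch only names the test and does not compute any statistic.

```python
def choose_test(all_normal, n_conditions):
    """Name the test implied by the normality result and the number of
    related samples being compared."""
    if n_conditions < 2:
        raise ValueError("need at least two samples to compare")
    if n_conditions == 2:
        return "t-test" if all_normal else "Wilcoxon signed-rank test"
    return "ANOVA" if all_normal else "Friedman test"
```

For example, comparing the original and blurred versions of one clip involves two samples, while comparing all five clips within one version involves five.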

We present our findings for RQ1 in Section 4.1, for RQ2 in Section 4.2, Section 4.3 and Section 4.4, and for RQ3 in Section 4.5.

4.1. Comparison of Perceived Gore Across Multiple Perspectives

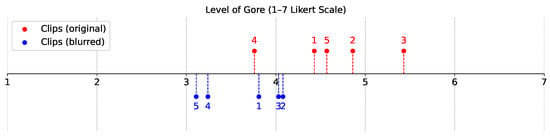

We examined whether the perceived level of gore differed across the video clips for each version (original vs. blurred). A Friedman test showed statistically significant differences in both versions (). A Dunn–Bonferroni post-hoc analysis indicated that for the original version, Clip 4 differed significantly from Clips 1, 2, 3, and 5, whereas for the blurred version, Clip 5 differed significantly from Clips 1, 2, and 3, and Clip 4 differed significantly from Clips 2 and 3. All of these pairwise differences were significant at the 95% confidence level (see Figure 2 and Table 2).

Figure 2.

The perceived level of gore.

Table 2.

Perceived gore ratings by clip and version.

Next, we investigated whether blurring influenced perceived gore. Overall, the participants rated the original versions of the clips as more gory than the blurred versions for all five videos. Statistical tests confirmed significant differences in perceived gore between the two versions of each clip.

We also examined a potential contrast effect by comparing two viewing orders: the original-first group (N = 19) and the blurred-first group (N = 18). Our statistical analysis confirmed significant differences in Clips 1, 2, 3, and 5 for the original-first group, and in Clips 1, 3, 4, and 5 for the blurred-first group. The p-values for Clip 4 in the original-first group () and Clip 2 in the blurred-first group () did not reach the conventional 95% significance threshold, but they suggest a marginal effect that might be considered meaningful under a less conservative threshold () or a one-sided test. Despite these borderline values, the overall pattern indicates that perceived gore was consistently lower in the blurred versions regardless of viewing order.

We analyzed the open-ended responses that the participants provided to explain their perceived gore ratings for each clip. Two authors employed affinity diagramming with open coding to categorize these responses, iterating until they reached consensus. The relevant excerpts were then examined to develop detailed descriptions and illustrative examples of participant behaviors. As a result, we identified six key factors, as shown in Table 3.

Table 3.

Factors influencing perceived gore.

These perceived levels of gore—and the differences for each pair of clips—were confirmed by the sensor data analyses presented in the following sections.

4.2. Eye Openness Ratio During Gory Video Viewing

We measured each participant’s Eye Openness Ratio (based on EAR) at 0.1 s intervals while they were watching the video clips. Because individual EAR values can differ widely, we normalized each value by dividing it by the highest EAR value that a particular participant reached during the clip. As a result, we obtained a ratio ranging from 0 to 1 for every 0.1 s interval. We then averaged these ratios to obtain each participant’s mean Eye Openness Ratio for that clip as shown in Table 4. Unfortunately, due to an error in the EAR measurement code, the detailed EAR data for the first 13 participants were compromised. As a result, we excluded those 13 participants from the Eye Openness Ratio analysis and used only the remaining 24 participants’ data.
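The per-participant normalization described above can be expressed in a few lines. The sketch assumes that `ear_values` holds one participant's EAR samples for one clip at 0.1 s intervals; the function name is hypothetical.

```python
def mean_eye_openness_ratio(ear_values):
    """Normalize EAR samples by the participant's peak EAR for the clip,
    then average the resulting ratios (each in the range 0 to 1)."""
    peak = max(ear_values)
    if peak <= 0:
        raise ValueError("peak EAR must be positive")
    ratios = [value / peak for value in ear_values]
    return sum(ratios) / len(ratios)
```

Dividing by each participant's own peak removes differences in baseline eye shape, so the means can be compared across participants and versions.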

Table 4.

Eye Openness Ratio by clip and version.

In general, participants tended to keep their eyes more closed when watching the original clips, possibly due to frowning or partially shutting their eyes in response to the graphic bloodshed. We found significant differences in the Eye Openness Ratio for Clips 2, 3, and 5, which were rated as relatively gorier than Clips 1 and 4.

We conducted a statistical analysis to determine whether viewing order (original-first vs. blurred-first) influenced participants’ Eye Openness Ratio. Among those who watched the original version first, the results generally mirrored the overall trends: Clips 3 and 5, which were rated as relatively gorier, showed significant differences in eye openness between the original and blurred versions. For Clip 2, which was identified as the second-goriest, the p-value (0.054) was slightly above the threshold, so it was not deemed significant. In contrast, participants who watched the blurred version first did not exhibit statistically significant differences for any clip. However, these findings require cautious interpretation due to the smaller sample sizes in each group, a consequence of losing data from 13 participants in this measurement.

Our qualitative interview analysis corroborated these findings. Some participants reported that they tended to close their eyes more often when watching gorier scenes. For example, P30 and P1, referring to Clip 3 (original), commented the following:

“The scene was so graphic that it was hard to keep my eyes open.”

“I felt like it was an actual nose surgery, so I couldn’t stop grimacing the whole time. And of course, since I’ve got a nose too, my imagination was all the more easily triggered, I guess.”

On the other hand, some participants indicated that they closed their eyes less frequently when viewing blurred versions of the clips. For instance, P11 commented the following on Clip 1 (blurred):

“Now that the bloody area on the face is covered (by blurring), it feels like a lot of the gruesome elements are gone, so I definitely noticed myself grimacing less frequently.”

4.3. Blink Frequency

We measured each participant’s Blink Frequency (using EAR) at 0.1 s intervals while they watched the video clips, adopting a threshold of 0.25 to identify blinks based on prior studies. Table 5 presents the mean absolute blink counts of the participants for each clip. Because these counts reflect total occurrences, the average blink count tends to be higher for longer clips. For reference, a normal blink rate is approximately 17 times per minute [66].
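As an illustration of threshold-based blink counting, the sketch below counts one blink each time the EAR drops below 0.25 after having been at or above it. The text does not state how consecutive below-threshold samples were handled, so treating a run of them as a single blink is an assumption, and the function names are hypothetical.

```python
def count_blinks(ear_samples, threshold=0.25):
    """Count open-to-closed transitions in a sequence of EAR samples."""
    blinks = 0
    eye_closed = False
    for ear in ear_samples:
        if ear < threshold and not eye_closed:
            # First sample below the threshold: a new blink starts here.
            blinks += 1
            eye_closed = True
        elif ear >= threshold:
            # Eye has reopened; the next drop counts as another blink.
            eye_closed = False
    return blinks


def blinks_per_minute(blink_count, n_samples, interval_s=0.1):
    """Convert an absolute blink count into a per-minute rate."""
    duration_min = n_samples * interval_s / 60.0
    return blink_count / duration_min


# Hypothetical trace with two separate dips below the threshold.
trace = [0.30, 0.30, 0.20, 0.18, 0.30, 0.31, 0.22, 0.30]
print(count_blinks(trace))  # 2
```

Converting counts to a per-minute rate, as in `blinks_per_minute`, is one way to compare clips of different lengths against the reference rate cited above.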

Table 5.

Blink Frequency by clip and version.

We observed that the participants generally blinked more when watching the original versions compared to the blurred versions, except for Clip 4, which was rated the least gory. Statistical analysis confirmed significant differences in Blink Frequency for the other four clips.

To further investigate potential order effects, we split participants into two groups based on which version (original or blurred) they viewed first. Among those who started with the original version, no clip showed a statistically significant difference in blink frequency between the original and blurred versions. Conversely, participants who began with the blurred version exhibited significant differences in Blink Frequency for Clips 2, 3, and 5, which were comparatively more gory.

4.4. Analyzing Eye Fixations on Blood Regions Using Gaze Coordinates

We measured each participant’s Eye Fixations on blood regions using gaze coordinates at 0.1 s intervals during video viewing. Table 6 summarizes the average fixation ratio for each clip. To normalize these data, we calculated the proportion of 0.1 s intervals in which participants fixated on blood (or blurred) regions, relative to the total number of frames containing such regions in each clip. Thus, a value of 50 would indicate that a participant fixated on the blood (or blurred) region for 50% of the time in which such a region was on screen.
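The ratio can be sketched as follows. For simplicity, this example represents each blood region as an axis-aligned bounding box, whereas the study used segmentation output; the box representation, the function name `fixation_ratio`, and the sample coordinates are all assumptions made for illustration.

```python
def fixation_ratio(gaze_points, blood_boxes):
    """Percentage of region-containing intervals in which gaze hit the region.

    gaze_points: list of (x, y) gaze coordinates, one per interval.
    blood_boxes: list of (x0, y0, x1, y1) boxes, or None when the
                 interval contains no blood (or blurred) region.
    """
    relevant = 0
    hits = 0
    for (gx, gy), box in zip(gaze_points, blood_boxes):
        if box is None:
            continue  # no region on screen, so the interval is not counted
        relevant += 1
        x0, y0, x1, y1 = box
        if x0 <= gx <= x1 and y0 <= gy <= y1:
            hits += 1
    if relevant == 0:
        return 0.0
    return 100.0 * hits / relevant


# Hypothetical data: five intervals, four of which contain a region.
gaze = [(50, 50), (200, 200), (60, 40), (10, 10), (300, 300)]
boxes = [(0, 0, 100, 100), (0, 0, 100, 100), None,
         (0, 0, 100, 100), (0, 0, 100, 100)]
print(fixation_ratio(gaze, boxes))  # 50.0
```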

Table 6.

Eye fixation ratio on blood regions by clip and version.

We observed that participants tended to fixate longer on the blood area when the gore level was lower. Statistical analysis revealed significant differences in Eye Fixations for Clips 2, 3, and 5, which were rated more gory than Clips 1 and 4. The result confirmed that the participants spent more time looking at the blurred-box area in the blurred version, suggesting they avoided this region when the blood was fully visible.

We also explored whether viewing order (original first vs. blurred first) affected participants’ Eye Fixations. In the blurred-first group, Clips 1, 2, and 3 showed significantly longer fixations on the blood area in the blurred version. For Clips 2 and 3—the most gory clips—this outcome is consistent with the findings for the entire participant sample. By contrast, in the original-first group, only Clip 1 exhibited a significant difference, with participants devoting more fixation time to the blood area in the original version. Two possible reasons may account for this discrepancy. First, a “learning effect” could have occurred, since many participants were already familiar with the movie Parasite (from which Clip 1 was taken). This film is extremely famous in South Korea due to winning four Academy Awards in 2020, and 30 participants reported having seen it before this experiment. Second, Clip 1 depicts an outdoor party with many people and diverse objects, unlike the relatively contained settings of the other clips. Consequently, there were multiple elements that might draw viewers’ attention, thus reducing the relative focus on the blood area.

Our interview findings also complemented the Eye Fixations results. For instance, when asked about Clip 1, P15 noted the following:

“I had already seen this movie before, which might have influenced me. Also, it has a lot of elements that are distracting beyond just the gory parts.”

Meanwhile, P13 and P32 described how the blur helped them watch the blood area in Clips 2 and 5, respectively:

“Maybe because it was blurred, it felt less tiring than the original version. The scene of putting one’s hand in the belly to find the aorta was blurred, which made it more comfortable to watch.”

“Because it was blurred, I didn’t think it was so gory that I couldn’t keep watching.”

These comments underscore how blurring can reduce discomfort when viewing blood, making graphic scenes more tolerable for participants.

4.5. Blood/Injury Phobia Questionnaire

We employed the Multidimensional Blood/Injury Phobia Inventory (MBPI) [46] to assess participants’ blood- and injury-related phobias. Specifically, we selected three subscales focusing on blood-related fears from the MBPI:

- Blood-Self (four items): evaluates fears associated with one’s own blood.

- Injury (six items): captures apprehension about sustaining injuries and the negative emotional responses that accompany them.

- Blood/Injury-Others (five items): measures fears triggered by witnessing others’ blood or injuries.

Each item was rated on a four-point Likert scale, ranging from 1 (very slightly or not at all) to 4 (extremely). For the Blood-Self subscale, the average score was 1.66 (SD = 0.55). The Injury subscale had an average score of 2.86 (SD = 0.63), and the Blood/Injury-Others subscale showed an average score of 2.16 (SD = 0.68). Overall, participants’ Blood-Self scores were relatively low, whereas their Injury scores were comparatively high.

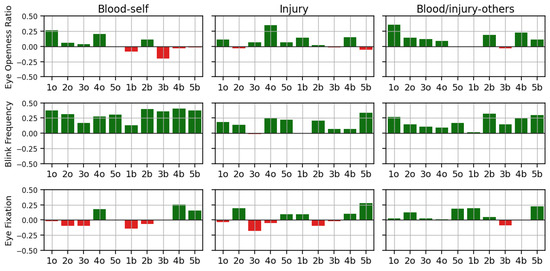

We then examined how participants’ MBPI subscales related to their sensor data—Eye Openness Ratio, Blink Frequency, and Eye Fixations—using Pearson’s correlation (see Figure 3). Notably, Blink Frequency exhibited stronger correlations than the other measures. In particular, we found that Blink Frequency and Blood-Self showed a moderate yet statistically meaningful association. Although these correlations are not extremely high, they still suggest a significant relationship worthy of further investigation.
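For readers unfamiliar with the statistic, Pearson’s correlation between a subscale score and a sensor measure can be computed as in the sketch below. The data values are invented purely for illustration and do not come from the study; the function name `pearson_r` is likewise hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Invented example: Blood-Self subscale means and blink counts
# for five hypothetical participants.
blood_self = [1.0, 1.5, 1.75, 2.25, 3.0]
blink_count = [12, 15, 14, 20, 26]
print(round(pearson_r(blood_self, blink_count), 2))
```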

Figure 3.

The perceived level of gore (#o: original version of Clip #, #b: blurred version of Clip #).

5. Discussion

5.1. Effect of Segmentation-Based Blood Blurring on Perceived Gore (RQ1)

We first investigated the extent to which segmentation-based blood blurring reduces viewers’ perceived gore. Our findings indicate that this approach significantly lowered perceived gore across all five video clips. Even when separating participants into original-first or blurred-first viewing groups, the blurred versions consistently produced lower gore ratings in most clips.

To deepen our understanding of these results, we identified multiple elements influencing perceived gore, including Bleeding, Severity of Injury, Violent Behavior, Victim’s Reaction, Realism, and Sound. Although our blurring technique did not address non-blood elements such as victim reactions or sound, we found that obscuring blood regions substantially reduced participants’ perceived gore. These observations align with prior research highlighting the importance of blood color, sound effects, and the overall realism of injury [36], as well as the pivotal role blood quantity plays in provoking aggressive thoughts and physiological arousal [11]. Future work could consider designing adaptive blurring strategies that vary according to the severity or volume of blood, potentially achieving greater reductions in perceived gore. Moreover, other factors that can heighten the sense of brutality—such as blood color, verbal tone, and lighting—have also been proposed [67], suggesting that a more comprehensive content moderation system might combine multiple forms of obfuscation or sensory modifications to fully address the diverse elements that contribute to perceived gore.

5.2. Physiological Reactions During Gory Video Viewing (RQ2)

Our study revealed that physiological reactions (i.e., eye openness ratio and blink frequency) differ significantly depending on the level of gore in the video clips. Most previous research has compared physiological responses to highly disturbing content with responses to entirely neutral material. We addressed this gap by examining user responses to the same clips in both blurred and unblurred formats.

When viewing gorier clips, participants tended to close their eyes more and blink more. In addition, they looked at the blood area for longer in the blurred versions of the clips than in the original versions. The differences were clearest for Clips 2 and 3, which had the two highest levels of gore. However, no significant differences were observed for Clip 4, which had the lowest level of gore, even though it still depicts a gory scene rather than a neutral one.

The physiological responses reported in our study are in line with previous findings on reactions to violent, frightening, or disturbing stimuli. Researchers have noted that individuals display heightened facial tension—such as eye squeezing—when confronted with fear-inducing content, and relax their facial muscles when viewing neutral or nonthreatening material [25,29]. Increased blink frequency has also been observed as part of the startle response when the body recognizes and responds to perceived threats, with aggressive or shocking imagery further amplifying this reflex [31]. In addition, extreme gore can trigger discomfort and emotional avoidance, resulting in higher spontaneous blink rates (SBRs) [24]. These diverse mechanisms—ranging from facial muscle tension to reflexive blinking—underscore how different aspects of harmful content (e.g., severity, aggression, disgust) can elicit a range of involuntary reactions aimed at regulating emotional and attentional states.

5.3. Blurring and Gaze Engagement: The Role of Curiosity (RQ2)

Our study revealed that eye gaze patterns vary significantly with the level of gore in the video clips. Notably, participants fixated on blood regions longer when these areas were blurred rather than left unaltered—an outcome that contrasts with prior findings suggesting that viewers typically exhibit reduced fixation times and a quicker tendency to avoid frightening or disturbing stimuli [25,28]. This result indicates that blurring may mitigate viewers’ instinctive avoidance of gory scenes, allowing them to stay more engaged and gather additional contextual information.

Such a phenomenon can be interpreted through the lens of Information Gap Theory [68] and Cognitive Curiosity [69]. According to these theories, curiosity is triggered when individuals recognize a gap between what they already know and what they desire to know. In the context of gory scenes, viewers may want to understand the full narrative but feel deterred by explicit depictions of blood, which can prompt them to look away and lose crucial context. Visual avoidance is a common emotion regulation strategy aimed at reducing negative responses such as disgust [70] or fear [71]; by turning away, viewers attempt to down-regulate these negative feelings via behavioral responses [72].

By applying segmentation-based blurring, our approach selectively obscures only the most graphic elements while preserving other visual information. This strategy reduces emotional or attentional barriers, enabling viewers to satisfy their curiosity about the scene without the heightened discomfort that can stem from explicit imagery. As a result, blurring potentially closes the information gap, encouraging viewers to maintain visual engagement and better understand the scene’s context.

5.4. Contrast and Order Effects in Gory Content Viewing (RQ2)

Our findings align with prior research on contrast and order effects, demonstrating that the perceived intensity of a new stimulus is influenced by the severity of previously viewed content. Participants who first watched the unblurred, highly gory clip subsequently rated the blurred version as less disturbing, illustrating a contrast effect in which an intense stimulus overshadows a milder one that follows [73,74,75,76]. Conversely, those who began with the blurred clip tended to perceive the unblurred one as more horrifying, reflecting an order effect in which an initial, less intense experience increases expectations for the next [77,78].

In practical terms, continuous viewing on platforms like YouTube Shorts, TikTok, or Reels may magnify negative reactions when a sudden transition occurs from mildly to extremely violent content, or normalize violence if several gory clips are watched consecutively. Our results highlight the need for careful consideration of such contrast effects and ordering in designing streaming services that present diverse, rapidly shifting videos.

5.5. Empathy and Physiological Responses to Gory Scenes (RQ3)

To examine how an individual’s fear level (i.e., blood- or injury-related phobias) is associated with the experience of viewing gory videos, we measured participants’ blood- and injury-related phobias using three MBPI subscales [46]: Blood-Self, Injury, and Blood/Injury-Others. We then used Pearson’s correlation to relate these scores to the sensor data (Eye Openness Ratio, Blink Frequency, and Eye Fixations). Blood-Self exhibited moderate correlations with Blink Frequency, meaning that participants with higher Blood-Self scores blinked more, particularly when viewing clips with relatively higher levels of gore (i.e., Clips 2, 3, and 5). Notably, Blink Frequency correlated more clearly with Blood-Self than with Blood/Injury-Others, even though participants were watching someone else bleed in the clips rather than themselves.

One possible explanation involves empathy, which refers to the capacity to recognize and share another person’s feelings or experiences. This concept includes pain empathy [79], physical empathy [80], cognitive empathy [81], sensory sharing [82], and vicarious pain [83]. For instance, one study demonstrated that when participants were shown images of individuals experiencing various forms of physical pain, they reported empathic distress similar to the emotions associated with feeling pain themselves [84]. Such empathic responses can promote greater immersion, yet viewers may need to guard against overidentification—particularly in cases of extreme gore—in order to recognize the fictional context and prevent excessively negative viewing experiences [80].

In this regard, our blurring technique may serve as a protective mechanism by lowering the intensity of graphic imagery. For example, repeated or extended exposure to violent, lengthy videos has been shown to desensitize viewers to real-life violence [85,86] and others’ suffering, ultimately reducing prosocial behavior [87]. Moreover, young people frequently exposed to violent media can exhibit increased physical aggression and diminished empathy [88]. By mitigating the visual impact of blood and gore, our approach helps prevent viewers from becoming overly distressed during violent or bloody scenes.

5.6. Design Implications

Based on our findings, we propose two design implications for online video streaming services aimed at enhancing users’ viewing experiences. These suggestions illustrate how segment-based blood blurring and responsive moderation could be practically implemented in light of our study’s results.

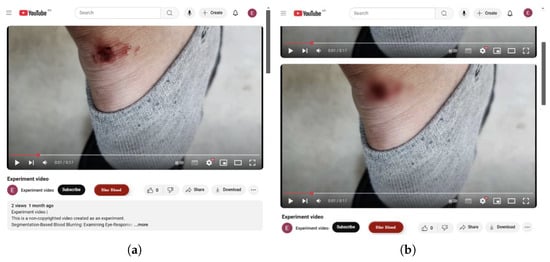

5.6.1. Implication for the Segment-Based Blood Blurring on Video Streaming Service

To explore real-world applicability, we developed a Chrome browser extension that enables users to watch a blood-blurred version of any YouTube video (see Figure 4). When a user visits a YouTube video page, the extension automatically adds a “Blur Blood” button beneath the video title. If the user anticipates disturbing or violent content, clicking the button prompts the extension to download the video, using the yt-dlp (v2024.10.22) library [89], to a server where it is processed through our YOLO-based blurring model. The resulting blurred video is then inserted below the original video player, allowing viewers to watch it in a more comfortable manner while still following the narrative context.

Figure 4.

A Chrome browser extension enabling segment-based blood blurring on YouTube. (a) A standard YouTube video page with the “Blur Blood” button added below the title. (b) The page after generating a blurred version of the video, displayed below the original player.

This prototype demonstrates the technical feasibility of offering a blurred viewing experience for users who prefer to avoid graphic imagery. Future work can focus on user-centered refinements, such as giving viewers control over the blur intensity or offering additional personalization options. These enhancements could promote a more inclusive viewing environment, accommodating individual differences in gore tolerance and further reducing unintentional exposure to violent or bloody scenes.
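To make the idea of mask-limited blurring with adjustable intensity concrete, the following sketch applies a simple box blur only to pixels flagged by a mask and leaves all other pixels untouched. It is not the YOLO-based model used in the prototype: the mask is assumed to come from some segmentation step, the image is a small grayscale grid stored as nested lists, and `blur_masked` and its `radius` parameter are hypothetical names.

```python
def blur_masked(image, mask, radius=1):
    """Box-blur only the pixels where mask is True.

    image:  list of rows of grayscale values.
    mask:   list of rows of booleans with the same shape as image.
    radius: neighborhood half-width; larger values blur more strongly.
    """
    height = len(image)
    width = len(image[0])
    out = [row[:] for row in image]  # unmasked pixels are copied unchanged
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue
            total = 0
            count = 0
            # Average over the neighborhood, clipped at the image border.
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += image[ny][nx]
                    count += 1
            out[y][x] = total / count
    return out


# A single bright pixel in the center, flagged by the mask.
image = [[0, 0, 0], [0, 90, 0], [0, 0, 0]]
mask = [[False, False, False], [False, True, False], [False, False, False]]
print(blur_masked(image, mask)[1][1])  # 10.0
```

Exposing the radius as a parameter is one simple way a viewer-controlled blur intensity could be offered; a production system would more likely rely on an optimized image-processing library.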

5.6.2. Camera-Based Detection of Discomfort and Responsive Blurring on Smartphones

Our study showed that participants’ physiological and gaze responses (e.g., eye openness ratio and blink frequency) varied significantly according to the clip’s gore level. This finding suggests the possibility of dynamically predicting a viewer’s discomfort through camera-based sensors (e.g., built-in webcams or eye trackers) and applying a corresponding level of blur. Many users watch online videos on mobile devices equipped with front-facing cameras, which could facilitate such real-time monitoring. A responsive blur system could, for instance, automatically increase the blurring intensity when it detects that the viewer’s discomfort is rising, helping them remain engaged with the content without feeling excessively disturbed.

However, this idea raises privacy concerns that must be carefully addressed, particularly regarding the continuous monitoring of users’ facial expressions or gaze. If implemented responsibly, this adaptive approach could empower viewers to experience less distressing but still informative versions of violent or gory videos. It would also open avenues for personalized moderation strategies that prioritize user comfort and well-being.
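One possible shape for such a responsive rule is sketched below. Every threshold in it is a placeholder chosen for illustration (only the baseline of roughly 17 blinks per minute echoes the reference rate cited earlier), and the function name `next_blur_level` and the discrete blur levels are hypothetical; the study did not implement or evaluate this logic.

```python
def next_blur_level(current_level, blink_rate_per_min, eye_openness,
                    baseline_blink_rate=17.0, openness_floor=0.7,
                    max_level=3):
    """Step the blur level up or down based on two discomfort signals.

    blink_rate_per_min: recent blink rate estimated from the camera.
    eye_openness:       recent mean Eye Openness Ratio in [0, 1].
    All thresholds are illustrative placeholders, not validated values.
    """
    elevated_blinking = blink_rate_per_min > 1.5 * baseline_blink_rate
    narrowed_eyes = eye_openness < openness_floor
    if elevated_blinking or narrowed_eyes:
        # Signs of discomfort: increase blur, capped at max_level.
        return min(max_level, current_level + 1)
    # No signs of discomfort: relax the blur gradually.
    return max(0, current_level - 1)


print(next_blur_level(1, blink_rate_per_min=30, eye_openness=0.9))  # 2
```

Changing the level by one step at a time avoids abrupt visual jumps, though any real deployment would need per-user calibration and explicit consent for camera use.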

5.7. Limitations and Future Work

Sample size and composition: One limitation of this study involves the sample’s size and composition. The overall number of participants was relatively small (N = 37), which becomes more pronounced when comparing the original-first and blurred-first groups. Furthermore, all participants were young adults from South Korea, and potential gender- and cultural-related differences were not examined. Specifically, previous research has noted that men and women may differ in their responses to violent or graphic media [34,90], suggesting a need for broader demographic sampling in future work to enhance generalizability. Additionally, for the Eye Openness Ratio analysis, 13 participants had to be excluded due to a coding error that invalidated their EAR measurements. Although we compared demographics and other key measures for the excluded participants versus the remaining 24 and found no significant differences, the final subset was smaller than intended. This reduction potentially limits the statistical power and scope of our findings related to eye openness. Future studies should employ more robust methods for data collection and validation to minimize such exclusions, as well as explore a wider demographic range to improve representativeness.

Familiarity with the stimulus clips: In addition, we did not account for participants’ prior familiarity with the video materials. Notably, 30 out of the 37 participants had already watched the film Parasite (Clip 1), whereas very few participants had seen any of the other four clips beforehand. This familiarity may have influenced how individuals perceived the gore or engaged with the content. Future research should therefore incorporate measures of prior viewing experience, especially for well-known films, to better understand how familiarity mediates responses to graphic or blurred content.

Differences in clip durations: We also acknowledge that the selected clips differ in runtime. Our priority was to preserve each clip’s natural context and flow, rather than artificially trimming or extending the footage to achieve a uniform length. Although this approach strengthened ecological validity, it means runtime consistency was not strictly controlled. We employed a repeated-measures design so that each participant served as their own control for both the original and blurred versions of the same clip. However, future studies may wish to investigate systematically matching or adjusting clip lengths, while retaining essential context, to further clarify how viewing time intersects with physiological responses and perceived gore.

Evaluating the blurring model and future improvements: The accuracy of the blurring process itself may have influenced viewer reactions. Although participants generally felt the blurring was well applied, numerical assessments revealed that the method was not perfectly accurate. In addition, we did not measure how much segmentation-based blurring helped viewers understand contextual details. The effectiveness of a segmentation-based approach, compared to box-based blurring, was not formally evaluated, even though participant feedback (e.g., “I could simply recognize ‘Oh, there’s rebar there!’ without dwelling on the graphic aspects”, from P29) suggests that preserving contextual information can be advantageous. Under a box-based method, the entire area might have been obscured, hindering the recognition of crucial details. In future work, researchers could refine or develop more advanced algorithms to optimize both detection precision and viewer comfort, ensuring that observed outcomes truly result from the blurring intervention rather than technology-related shortcomings. We also believe that systematically examining the added benefits of segmentation-based blurring, particularly its ability to mitigate perceived gore while preserving context, is vital for enhancing the overall viewing experience of gory videos and expanding the contributions of this research.

Accessibility concerns: In our study, we focused on blurring blood. Although individuals with color insensitivity may not perceive redness as vividly, we believe that blurring can still reduce perceived gore by de-emphasizing blood flow, since the absence of full color perception does not necessarily eliminate awareness of the amount or motion of bleeding. However, its effectiveness may differ for color-insensitive viewers. To ensure our system benefits a broader range of users, future work should address accessibility concerns, similar to how some webtoon designs are adapted for color-insensitive readers [91].

6. Conclusions

In this study, we examined three research questions: (1) the extent to which segmentation-based blood blurring reduces viewers’ perceived gore, (2) how different levels of gore influence the viewing experience (including eye-based physiological responses and gaze behavior), and (3) in what ways an individual’s fear level correlates with watching gory videos. To achieve these objectives, we developed and evaluated a segmentation-based blood-blurring model designed to reduce the perceived gore of violent scenes without entirely removing important contextual information. Our experiment, involving 37 participants, revealed that partial blurring significantly lowered viewers’ perceived gore, particularly for clips with higher levels of brutality. Moreover, physiological and eye-gaze data indicated distinct patterns of eye openness, blink frequency, and fixation behaviors that corresponded to the intensity of gory content. Individual differences, including self-reported fear of blood, further influenced responses such as blink rate, demonstrating the applicability of personalized content moderation. By combining user-centered experimentation with deep learning-driven video processing, this work offers practical insights for designing and implementing more nuanced content-filtering systems. Future research may explore adaptive blurring that responds to real-time physiological signals, investigate additional factors (e.g., audio cues, context of violence), and further refine approaches to safeguard vulnerable viewers while preserving narrative coherence.

Author Contributions

Conceptualization, J.S. and S.P.; methodology, J.S. and M.C.; software, J.S. and M.C.; formal analysis, M.C.; visualization, M.C.; supervision, S.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) under the metaverse support program, grant funded by the Korea government (MSIT) (IITP-RS-2024(2025)-00425383).

Institutional Review Board Statement

This study was conducted after obtaining approval from the Institutional Review Board (IRB) of Kyung Hee University (approval number: KHGIRB-24-701).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the participants to publish this paper.

Data Availability Statement

Our dataset is available at https://doi.org/10.5281/zenodo.14869369 and includes participants’ perceived gore ratings, eye openness ratio, blink frequency, eye fixation metrics, and MBPI subscale responses.

Acknowledgments

The authors gratefully acknowledge Seungjae Oh for his valuable advice. His support and guidance substantially contributed to the success of this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- McKetta, I. Despite All Odds, Global Internet Speeds Continue Impressive Increase. Technical Report, Ookla. 2021. Available online: https://www.ookla.com/articles/world-internet-speeds-july-2021 (accessed on 19 March 2025).

- Palmer, C. Internet Statistics 2025. Technical Report, HighSpeedInternet. 2025. Available online: https://www.highspeedinternet.com/resources/internet-facts-statistics (accessed on 19 March 2025).

- Ceci, L. Mobile Video Worldwide - Statistics & Facts. Technical Report, Statista. 2024. Available online: https://www.statista.com/topics/8248/mobile-video-worldwide/#topicOverview (accessed on 19 March 2025).

- Jonathan David Haidt, Z.R. It’s Time to Make the Internet Safer for Kids. Technical Report, WIRED. 2024. Available online: https://www.wired.com/story/digital-social-media-safeguards-children-policy/ (accessed on 19 March 2025).

- Tidy, J. TikTok Loophole Sees Users Post Pornographic and Violent Videos. Technical Report, BBC. 2021. Available online: https://bbc.com/news/technology-56821882 (accessed on 19 March 2025).

- Jesse McCrosky, B.G. YouTube Regrets. Technical Report, Mozilla. 2021. Available online: https://foundation.mozilla.org/en/youtube/findings/ (accessed on 19 March 2025).

- Averill, J.R.; Malmstrom, E.J.; Koriat, A.; Lazarus, R.S. Habituation to complex emotional stimuli. J. Abnorm. Psychol. 1972, 80, 20.

- Balat, M.; Gabr, M.; Bakr, H.; Zaky, A.B. TikGuard: A Deep Learning Transformer-Based Solution for Detecting Unsuitable TikTok Content for Kids. In Proceedings of the 2024 6th Novel Intelligent and Leading Emerging Sciences Conference (NILES), Giza, Egypt, 19–21 October 2024; pp. 337–340.

- Yousaf, K.; Nawaz, T. A Deep Learning-Based Approach for Inappropriate Content Detection and Classification of YouTube Videos. IEEE Access 2022, 10, 16283–16298.

- Farrar, K.M.; Krcmar, M.; Nowak, K.L. Contextual features of violent video games, mental models, and aggression. J. Commun. 2006, 56, 387–405.

- Barlett, C.P.; Harris, R.J.; Bruey, C. The effect of the amount of blood in a violent video game on aggression, hostility, and arousal. J. Exp. Soc. Psychol. 2008, 44, 539–546.

- Marks, I.M.; Nesse, R.M. Fear and fitness: An evolutionary analysis of anxiety disorders. Ethol. Sociobiol. 1994, 15, 247–261.

- Constantin, M.G.; Ştefan, L.D.; Ionescu, B.; Demarty, C.H.; Sjöberg, M.; Schedl, M.; Gravier, G. Affect in Multimedia: Benchmarking Violent Scenes Detection. IEEE Trans. Affect. Comput. 2022, 13, 347–366.

- Qamar Bhatti, A.; Umer, M.; Adil, S.H.; Ebrahim, M.; Nawaz, D.; Ahmed, F. Explicit Content Detection System: An Approach towards a Safe and Ethical Environment. Appl. Comput. Intell. Soft Comput. 2018, 2018, 1463546.

- Serra, A.C.; Mendes, P.R.C.; de Freitas, P.V.A.; Busson, A.J.G.; Guedes, A.L.V.; Colcher, S. Should I See or Should I Go: Automatic Detection of Sensitive Media in Messaging Apps. In Proceedings of the Brazilian Symposium on Multimedia and the Web, New York, NY, USA, 5–12 November 2021; WebMedia ’21. pp. 229–236.

- Khan, S.U.; Haq, I.U.; Rho, S.; Baik, S.W.; Lee, M.Y. Cover the Violence: A Novel Deep-Learning-Based Approach Towards Violence-Detection in Movies. Appl. Sci. 2019, 9, 4963.

- de Freitas, P.V.; Mendes, P.R.; dos Santos, G.N.; Busson, A.J.G.; Guedes, Á.L.; Colcher, S.; Milidiú, R.L. A multimodal CNN-based tool to censure inappropriate video scenes. arXiv 2019, arXiv:1911.03974.

- Verma, Y.; Bhatia, M.; Tanwar, P.; Bhatia, S.; Batra, M. Automatic video censoring system using deep learning. Int. J. Electr. Comput. Eng. (IJECE) 2022, 12, 6744–6755.

- Larocque, W. Gore classification and censoring in images. Ph.D. Thesis, Université d’Ottawa/University of Ottawa, Ottawa, ON, Canada, 2021.

- Maheswaran, S.; Sathesh, S.; Ajith Kumar, P.; Hariharan, R.S.; Chandra Sekar, P.; Ridhish, R.; Gomathi, R.D. YOLO based Efficient Vigorous Scene Detection And Blurring for Harmful Content Management to Avoid Children’s Destruction. In Proceedings of the 2022 3rd International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 17–19 August 2022; pp. 1063–1073.

- Bethany, M.; Wherry, B.; Vishwamitra, N.; Najafirad, P. Image Safeguarding: Reasoning with Conditional Vision Language Model and Obfuscating Unsafe Content Counterfactually. Proc. AAAI Conf. Artif. Intell. 2024, 38, 774–782.

- Hamm, A.O.; Cuthbert, B.N.; Globisch, J.; Vaitl, D. Fear and the startle reflex: Blink modulation and autonomic response patterns in animal and mutilation fearful subjects. Psychophysiology 1997, 34, 97–107.

- Lang, P.J.; Davis, M.; Öhman, A. Fear and anxiety: Animal models and human cognitive psychophysiology. J. Affect. Disord. 2000, 61, 137–159.

- Maffei, A.; Angrilli, A. Spontaneous blink rate as an index of attention and emotion during film clips viewing. Physiol. Behav. 2019, 204, 256–263.

- Fink-Lamotte, J.; Svensson, F.; Schmitz, J.; Exner, C. Are You Looking or Looking Away? Visual Exploration and Avoidance of Disgust- and Fear-Stimuli: An Eye-Tracking Study. Emotion 2021, 22, 1909–1918.

- Koch, M.D.; O’Neill, H.K.; Sawchuk, C.N.; Connolly, K. Domain-specific and generalized disgust sensitivity in blood-injection-injury phobia: The application of behavioral approach/avoidance tasks. J. Anxiety Disord. 2002, 16, 511–527.

- Lumley, M.A.; Melamed, B.G. Blood phobics and nonphobics: Psychological differences and affect during exposure. Behav. Res. Ther. 1992, 30, 425–434.

- Griffith, O.J. To See and Fear: A Pilot Study on Gaze Behaviour in Horror Gaming. 2024. Available online: https://osf.io/preprints/psyarxiv/pszxg_v1 (accessed on 19 March 2025).

- Dimberg, U. Facial reactions to fear-relevant and fear-irrelevant stimuli. Biol. Psychol. 1986, 23, 153–161.

- Vaidyanathan, U.; Patrick, C.J.; Bernat, E.M. Startle reflex potentiation during aversive picture viewing as an indicator of trait fear. Psychophysiology 2009, 46, 75–85.

- Karamova, E. Immediate Changes in Aggression Levels and Startle Response Modulation. Master’s Thesis, Webster University, Webster Groves, MO, USA, 2021.

- Kreibig, S.D.; Wilhelm, F.H.; Roth, W.T.; Gross, J.J. Cardiovascular, electrodermal, and respiratory response patterns to fear- and sadness-inducing films. Psychophysiology 2007, 44, 787–806.

- Jang, E.H.; Park, B.J.; Kim, S.H.; Chung, M.A.; Sohn, J.H. Correlation between Psychological and Physiological Responses during Fear. In Proceedings of the International Joint Conference on Biomedical Engineering Systems and Technologies, Angers, France, 3–6 March 2014; Volume 4, pp. 104–109.

- Qadir, M.; Asif, M. Does normal pulse rate correlate with watching horror movies. J. Cardiol. Curr. Res. 2019, 12, 67–68.

- Fu, X. Horror Movie Aesthetics: How Color, Time, Space and Sound Elicit Fear in an Audience. Master’s Thesis, Northeastern University, Boston, MA, USA, 2016.

- Jeong, E.J.; Biocca, F.A.; Bohil, C.J. Sensory realism and mediated aggression in video games. Comput. Hum. Behav. 2012, 28, 1840–1848.

- Wolpe, J.; Lang, P.J. A fear survey schedule for use in behaviour therapy. Behav. Res. Ther. 1964, 2, 27–30.

- McNeil, D.W.; Rainwater, A.J. Development of the fear of pain questionnaire-III. J. Behav. Med. 1998, 21, 389–410.

- Marks, I.M.; Mathews, A.M. Brief standard self-rating for phobic patients. Behav. Res. Ther. 1979, 17, 263–267.

- Bos, J. Blood Segmentation y0ygo Dataset. 2023. Available online: https://universe.roboflow.com/joost-bos-rfw3p/blood-segmentation-y0ygo (accessed on 19 March 2025).

- Blood. Blood Segmentation Dataset. 2023. Available online: https://universe.roboflow.com/blood-3pyjx/blood_segmentation (accessed on 19 March 2025).

- backupgeneral. Blood Segmentation-2 Dataset. 2023. Available online: https://universe.roboflow.com/backupgeneral/blood_segmentation_2 (accessed on 19 March 2025).

- Ha, E.; Kim, H.; Hong, S.C.; Na, D. HOD: A Benchmark Dataset for Harmful Object Detection. arXiv 2023, arXiv:2310.05192.

- FFmpeg Developers. FFmpeg. 2025. Available online: https://www.ffmpeg.org/ (accessed on 14 February 2025).

- OpenCV Team. OpenCV-Python. 2025. Available online: https://github.com/opencv/opencv-python (accessed on 14 February 2025).

- Wenzel, A.; Holt, C.S. Validation of the multidimensional blood/injury phobia inventory: Evidence for a unitary construct. J. Psychopathol. Behav. Assess. 2003, 25, 203–211.

- YouTube. Parasite Party Murder Scene. 2022. Available online: https://www.youtube.com/watch?v=qa1Zhnt9sZA (accessed on 14 February 2025).

- YouTube. The Legendary Crazy Whale, the Queen of the Index Finger (Dr. Romantic) SBS DRAMA. 2020. Available online: https://www.youtube.com/watch?v=uEBISBfBc8I (accessed on 14 February 2025).

- YouTube. The Process of a Tip of the Nose Surgery. 2013. Available online: https://www.youtube.com/watch?v=oPn9jsDJHQg (accessed on 14 February 2025).

- Naver Series On. The Witch: Part 2. 2022. Available online: https://serieson.naver.com (accessed on 14 February 2025).

- Soukupova, T.; Cech, J. Eye blink detection using facial landmarks. In Proceedings of the 21st Computer Vision Winter Workshop, Rimske Toplice, Slovenia, 3–5 February 2016; Volume 2.

- King, D.E. dlib: A Toolkit for Machine Learning and Computer Vision. 2025. Available online: https://github.com/davisking/dlib (accessed on 14 February 2025).

- Mehta, S.; Dadhich, S.; Gumber, S.; Jadhav Bhatt, A. Real-time driver drowsiness detection system using eye aspect ratio and eye closure ratio. In Proceedings of the International Conference on Sustainable Computing in Science, Technology and Management (SUSCOM), Amity University Rajasthan, Jaipur, India, 26–28 February 2019.

- Singh, P.; Mahim, S.; Prakash, S. Real-Time EAR Based Drowsiness Detection Model for Driver Assistant System. In Proceedings of the 2022 International Conference on Signal and Information Processing (IConSIP), Pune, India, 26–27 August 2022; pp. 1–4.

- Prasitsupparote, A.; Pasitsuparoad, P.; Itsarapongpukdee, S. Real-Time Driver Drowsiness Alert System for Product Distribution Businesses in Phuket Using Android Devices. In Proceedings of the 2023 20th International Joint Conference on Computer Science and Software Engineering (JCSSE), Phitsanulok, Thailand, 28 June–1 July 2023; pp. 351–355.

- Hossain, M.Y.; George, F.P. IOT Based Real-Time Drowsy Driving Detection System for the Prevention of Road Accidents. In Proceedings of the 2018 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Bangkok, Thailand, 21–28 October 2018; Volume 3, pp. 190–195.

- Sathasivam, S.; Mahamad, A.K.; Saon, S.; Sidek, A.; Som, M.M.; Ameen, H.A. Drowsiness Detection System using Eye Aspect Ratio Technique. In Proceedings of the 2020 IEEE Student Conference on Research and Development (SCOReD), Batu Pahat, Malaysia, 27–29 September 2020; pp. 448–452.

- Biswal, A.K.; Singh, D.; Pattanayak, B.K.; Samanta, D.; Yang, M.H. IoT-based smart alert system for drowsy driver detection. Wirel. Commun. Mob. Comput. 2021, 2021, 6627217.

- Housholder, A.; Reaban, J.; Peregrino, A.; Votta, G.; Mohd, T.K. Evaluating Accuracy of the Tobii Eye Tracker 5. In Proceedings of the Intelligent Human Computer Interaction, Tashkent, Uzbekistan, 20–22 October 2022; Kim, J.H., Singh, M., Khan, J., Tiwary, U.S., Sur, M., Singh, D., Eds.; Springer: Cham, Switzerland, 2022; pp. 379–390.

- Zeng, H.; Shen, Y.; Sun, D.; Hu, X.; Wen, P.; Song, A. Extended Control With Hybrid Gaze-BCI for Multi-Robot System Under Hands-Occupied Dual-Tasking. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 829–840.

- Smith, P.; Dombrowski, M.; Macdonald, C.; Williams, C.; Pradeep, M.; Barnum, E.; Rivera, V.P.; Sparkman, J.; Manero, A. Initial Evaluation of a Hybrid eye tracking and Electromyography Training Game for Hands-Free Wheelchair Use. In Proceedings of the 2024 Symposium on Eye Tracking Research and Applications, New York, NY, USA, 4–7 June 2024. ETRA ’24.

- Chen, L.; Zhang, Y.; Zhang, X.; Zhang, Y. Immersive Teleoperation Platform Based on Eye Tracker. In Proceedings of the 2024 6th International Symposium on Robotics & Intelligent Manufacturing Technology (ISRIMT), Online, 12–14 September 2024; pp. 282–286.

- Bature, Z.A.; Abdullahi, S.B.; Chiracharit, W.; Chamnongthai, K. Translated Pattern-Based Eye-Writing Recognition Using Dilated Causal Convolution Network. IEEE Access 2024, 12, 59079–59092.

- Bartneck, C.; Keijsers, M. The morality of abusing a robot. Paladyn J. Behav. Robot. 2020, 11, 271–283.

- Krahé, B.; Möller, I.; Huesmann, L.R.; Kirwil, L.; Felber, J.; Berger, A. Desensitization to media violence: Links with habitual media violence exposure, aggressive cognitions, and aggressive behavior. J. Personal. Soc. Psychol. 2011, 100, 630.

- Bentivoglio, A.R.; Bressman, S.B.; Cassetta, E.; Carretta, D.; Tonali, P.; Albanese, A. Analysis of blink rate patterns in normal subjects. Mov. Disord. 1997, 12, 1028–1034.

- Wang, S.; Ji, Q. Video Affective Content Analysis: A Survey of State-of-the-Art Methods. IEEE Trans. Affect. Comput. 2015, 6, 410–430.

- Loewenstein, G. The psychology of curiosity: A review and reinterpretation. Psychol. Bull. 1994, 116, 75.

- Berlyne, D.E. Conflict, Arousal, and Curiosity; McGraw-Hill Book Company: New York, NY, USA, 1960.

- Otero, M.C.; Levenson, R.W. Emotion regulation via visual avoidance: Insights from neurological patients. Neuropsychologia 2019, 131, 91–101.

- Aue, T.; Hoeppli, M.E.; Piguet, C.; Sterpenich, V.; Vuilleumier, P. Visual avoidance in phobia: Particularities in neural activity, autonomic responding, and cognitive risk evaluations. Front. Hum. Neurosci. 2013, 7, 194.

- Otero, M.C. An Examination of Visual Avoidance as an Emotion Regulation Strategy in Neurodegenerative Disease. Ph.D. Thesis, University of California, Berkeley, CA, USA, 2019.

- Manstead, A.S.R.; Wagner, H.L.; MacDonald, C.J. A contrast effect in judgments of own emotional state. Motiv. Emot. 1983, 7, 279–290.

- Harris, A.J. An experiment on affective contrast. Am. J. Psychol. 1929, 41, 617–624.

- Kenrick, D.T.; Gutierres, S.E. Contrast effects and judgments of physical attractiveness: When beauty becomes a social problem. J. Personal. Soc. Psychol. 1980, 38, 131.

- Larsen, J.T.; McGraw, A.P.; Gilovich, T. Can contrast effects regulate emotions? A follow-up study of vital loss decisions. PLoS ONE 2012, 7, e42010.

- Kramer, R.S.; Pustelnik, L.R. Sequential effects in facial attractiveness judgments: Separating perceptual and response biases. Vis. Cogn. 2021, 29, 679–688.

- Geers, A.L.; Lassiter, G.D. Affective assimilation and contrast: Effects of expectations and prior stimulus exposure. Basic Appl. Soc. Psychol. 2005, 27, 143–154.

- Zhou, F.; Li, J.; Zhao, W.; Xu, L.; Zheng, X.; Fu, M.; Yao, S.; Kendrick, K.M.; Wager, T.D.; Becker, B. Empathic pain evoked by sensory and emotional-communicative cues share common and process-specific neural representations. eLife 2020, 9, e56929.

- Morse, J.M.; Mitcham, C.; van Der Steen, W.J. Compathy or physical empathy: Implications for the caregiver relationship. J. Med. Humanit. 1998, 19, 51–65.

- Tremblay, M.P.B.; Meugnot, A.; Jackson, P.L. The Neural Signature of Empathy for Physical Pain… Not Quite There Yet! In Social and Interpersonal Dynamics in Pain: We Don’t Suffer Alone; Springer: Berlin/Heidelberg, Germany, 2018; pp. 149–172.

- Giummarra, M.J.; Fitzgibbon, B.; Georgiou-Karistianis, N.; Beukelman, M.; Verdejo-Garcia, A.; Blumberg, Z.; Chou, M.; Gibson, S.J. Affective, sensory and empathic sharing of another’s pain: The Empathy for Pain Scale. Eur. J. Pain 2015, 19, 807–816.

- Decety, J.; Ickes, W. The Social Neuroscience of Empathy; MIT Press: Cambridge, MA, USA, 2011.

- Goubert, L.; Craig, K.D.; Vervoort, T.; Morley, S.; Sullivan, M.J.; Williams, A.C.d.C.; Cano, A.; Crombez, G. Facing others in pain: The effects of empathy. Pain 2005, 118, 285–288.

- Drabman, R.S.; Thomas, M.H. Exposure to Filmed Violence and Children’s Tolerance of Real Life Aggression. Personal. Soc. Psychol. Bull. 1974, 1, 198–199.

- Thomas, M.H.; Drabman, R.S. Toleration of real life aggression as a function of exposure to televised violence and age of subject. Merrill-Palmer Q. Behav. Dev. 1975, 21, 227–232.

- Bushman, B.J.; Anderson, C.A. Comfortably numb: Desensitizing effects of violent media on helping others. Psychol. Sci. 2009, 20, 273–277.

- Krahé, B.; Möller, I. Longitudinal effects of media violence on aggression and empathy among German adolescents. J. Appl. Dev. Psychol. 2010, 31, 401–409.

- yt-dlp Developers. yt-dlp: A youtube-dl Fork with Additional Features and Fixes. 2025. Available online: https://github.com/yt-dlp/yt-dlp (accessed on 14 February 2025).

- Berry, M.; Gray, T.; Donnerstein, E. Cutting film violence: Effects on perceptions, enjoyment, and arousal. J. Soc. Psychol. 1999, 139, 567–582.

- Ha, S.; Kim, J.; Kim, S.; Moon, G.; Kim, S.B.; Kim, J.; Kim, S. Improving webtoon accessibility for color vision deficiency in South Korea using deep learning. Univers. Access Inf. Soc. 2024, 1–18.