Deep Reinforcement Learning-Enabled Computation Offloading: A Novel Framework to Energy Optimization and Security-Aware in Vehicular Edge-Cloud Computing Networks

Abstract

1. Introduction

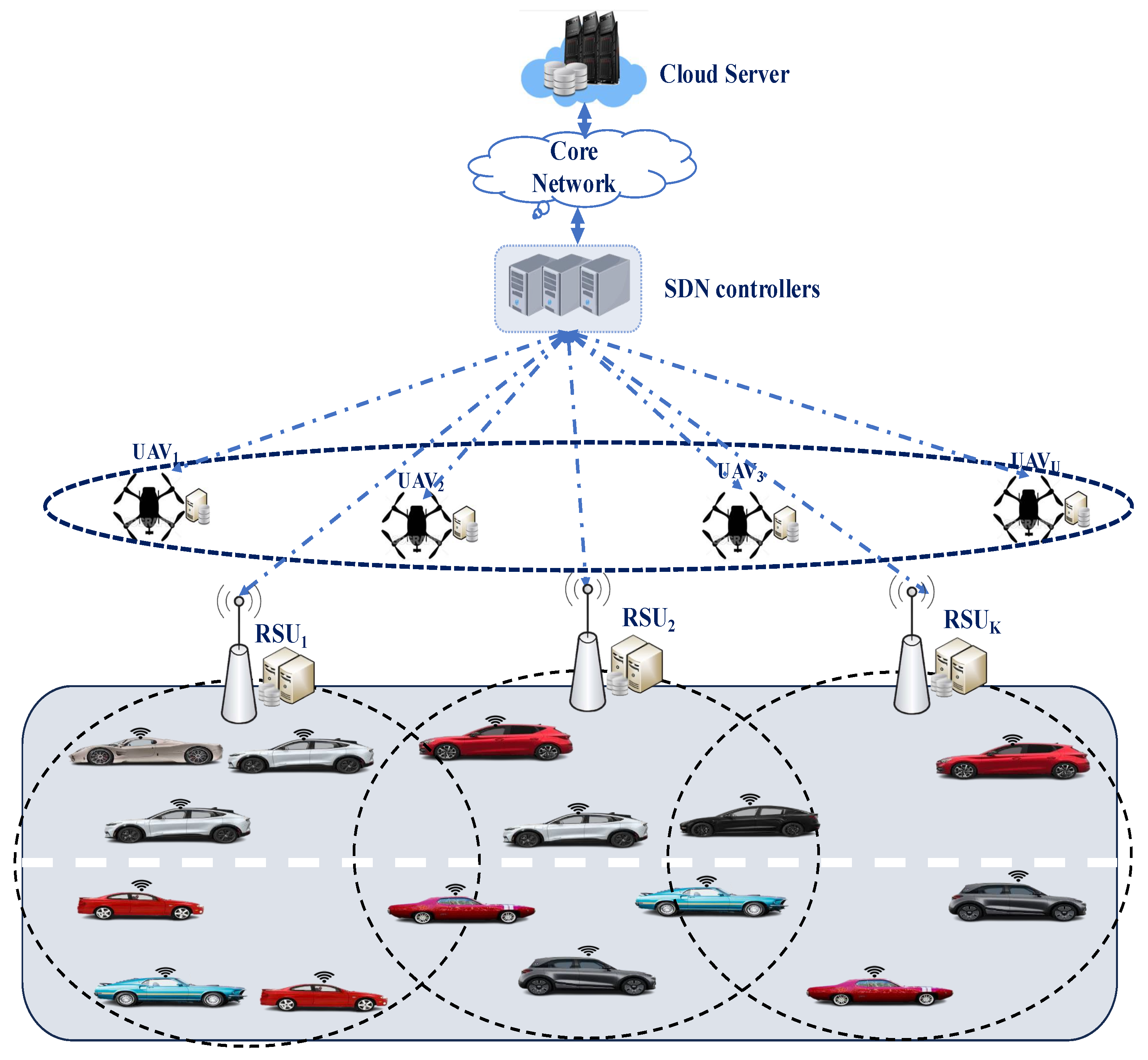

- An optimized load-balancing technique is presented to improve load distribution among RSUs: vehicles are reassigned to the most suitable RSUs according to their task size, location, and required CPU cycles. In addition, UAVs are deployed alongside edge servers to provide computation and communication resources by hovering over congested areas where the RSU servers are overloaded.

- A robust security layer is developed that combines the Advanced Encryption Standard (AES) with dynamic one-time encryption key generation to secure data transfers and protect critical information during offloading.

- A new caching technique is employed at the edge servers that selectively caches application code and task-specific data to reduce energy consumption while satisfying latency requirements. The caching strategy accounts for server capacity, task popularity, and data size.

- A comprehensive model is developed that integrates computation offloading, security, load balancing, and task caching, with the aim of minimizing vehicle energy consumption while meeting latency requirements.

- Because the problem is NP-hard, the paper develops a deep learning-based algorithm and an equivalent reinforcement learning model to solve it efficiently, enabling effective decision-making in dynamic environments.

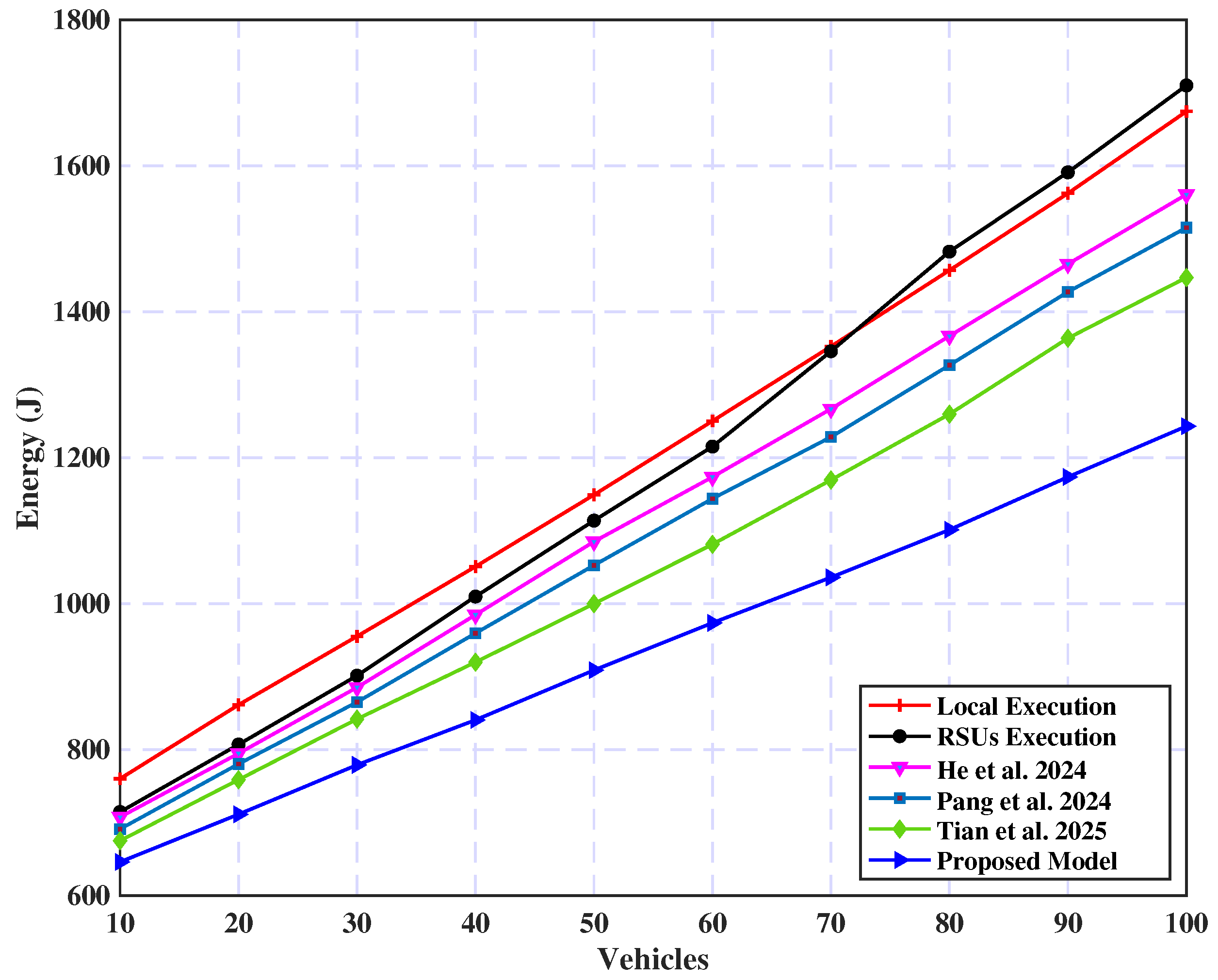

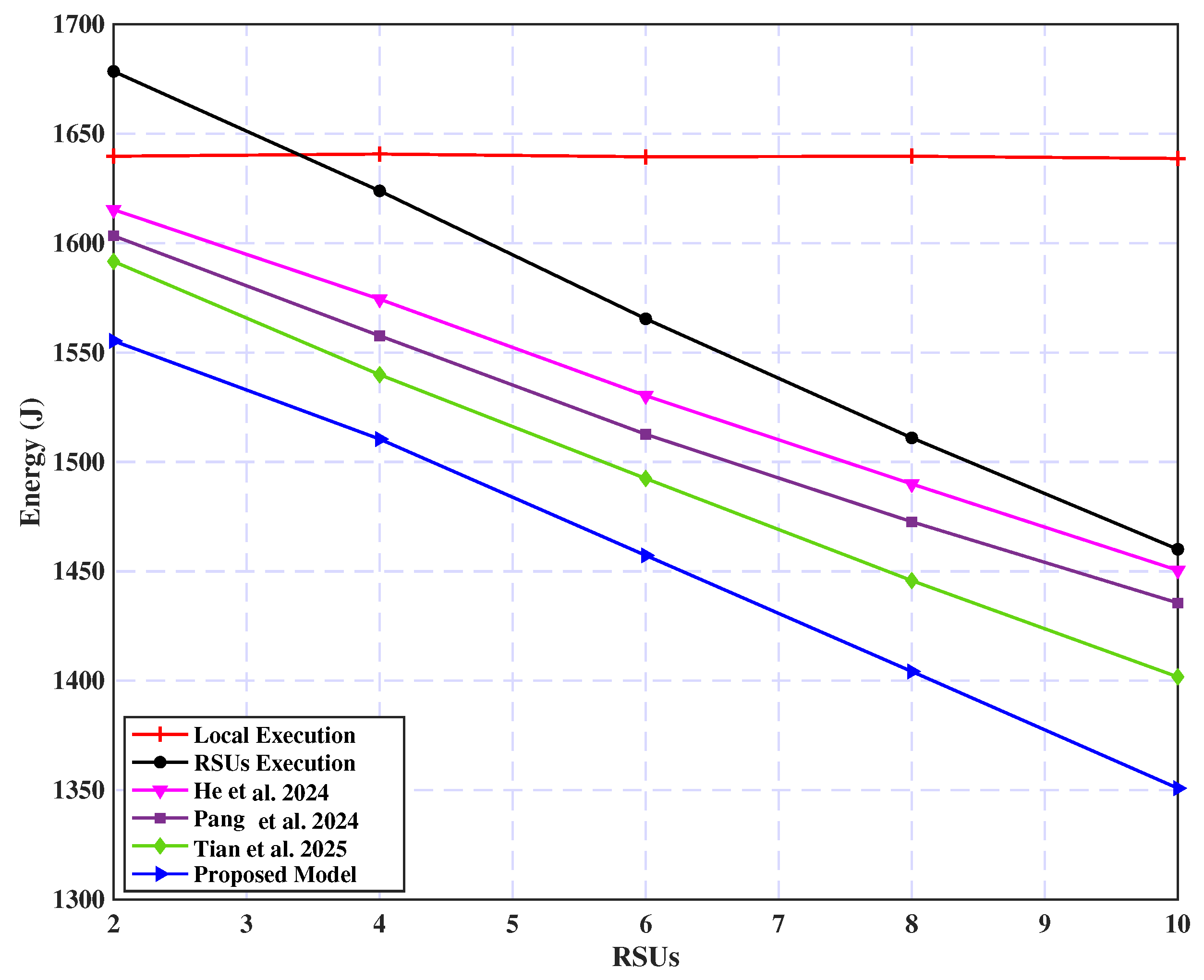

- Simulation results show that the proposed model converges quickly and significantly outperforms existing benchmark techniques in reducing system energy consumption.

2. Related Work

2.1. Traditional-Based Techniques

2.2. Deep Learning-Based Techniques

3. System Model

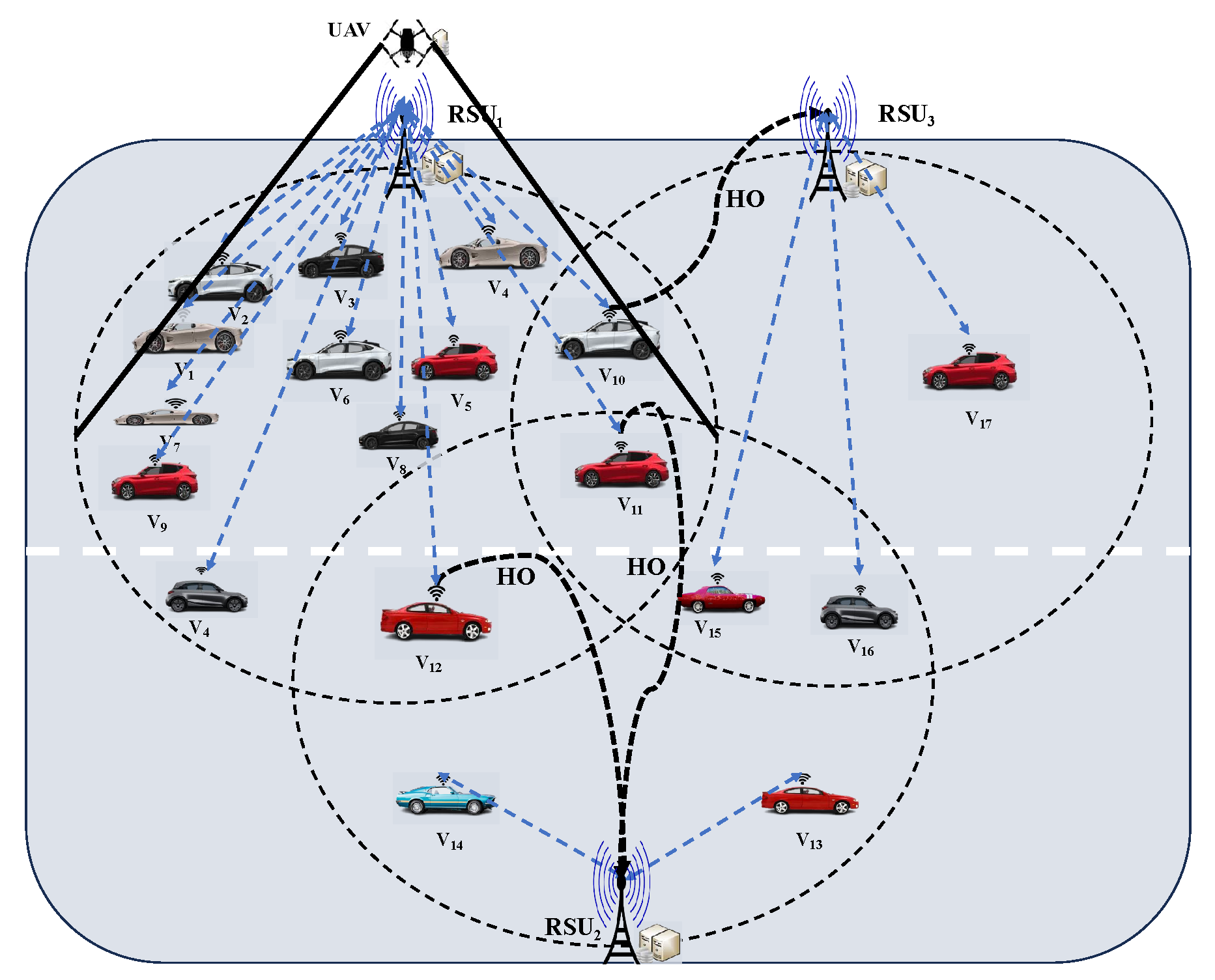

3.1. Network Model

3.2. Load Balancing

Algorithm 1: Optimizing RSUs’ Load Distribution
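The reassignment rule stated in the contributions (each vehicle moved to the most suitable RSU based on task size, location, and CPU cycles), together with the per-vehicle table that splits estimated processing time into transmission and computation time, can be illustrated with a greedy sketch. The steps of Algorithm 1 are not reproduced in this text, so everything below is an assumption for illustration: the hypothetical `RSU` and `Vehicle` classes, a uniform uplink rate per RSU, a computation delay that waits behind cycles already queued at the RSU, and a heaviest-task-first ordering.

```python
from dataclasses import dataclass


@dataclass
class RSU:
    name: str
    cpu_hz: float               # RSU processing speed in CPU cycles per second (assumed)
    rate_bps: float             # uplink rate from vehicles in coverage, bit/s (assumed uniform)
    queued_cycles: float = 0.0  # cycles already assigned to this RSU


@dataclass
class Vehicle:
    name: str
    input_bits: float           # task input size
    cpu_cycles: float           # CPU cycles required by the task
    in_range: list[str]         # RSUs whose coverage includes the vehicle's location


def estimated_time(v: Vehicle, r: RSU) -> float:
    """Estimated processing time = transmission time + computation time,
    where computation waits behind the cycles already queued at the RSU."""
    transmission = v.input_bits / r.rate_bps
    computation = (r.queued_cycles + v.cpu_cycles) / r.cpu_hz
    return transmission + computation


def assign_vehicles(vehicles: list[Vehicle], rsus: list[RSU]) -> dict[str, str]:
    """Greedy, load-aware assignment of each vehicle to its best reachable RSU."""
    by_name = {r.name: r for r in rsus}
    plan: dict[str, str] = {}
    # Heaviest tasks choose first (a heuristic ordering, not taken from the paper).
    for v in sorted(vehicles, key=lambda veh: veh.cpu_cycles, reverse=True):
        candidates = [by_name[n] for n in v.in_range]
        best = min(candidates, key=lambda r: estimated_time(v, r))
        best.queued_cycles += v.cpu_cycles  # update load so later choices see it
        plan[v.name] = best.name
    return plan


if __name__ == "__main__":
    rsus = [RSU("RSU1", cpu_hz=10.0, rate_bps=1.0), RSU("RSU2", cpu_hz=10.0, rate_bps=1.0)]
    vehicles = [
        Vehicle("V1", input_bits=1.0, cpu_cycles=20.0, in_range=["RSU1", "RSU2"]),
        Vehicle("V2", input_bits=1.0, cpu_cycles=10.0, in_range=["RSU1", "RSU2"]),
    ]
    print(assign_vehicles(vehicles, rsus))  # {'V1': 'RSU1', 'V2': 'RSU2'}
```

In the usage example both RSUs are initially identical, so V1 takes RSU1; once RSU1's queue holds V1's 20 cycles, V2's estimated time there (4.0) exceeds that of RSU2 (2.0), so V2 is sent to RSU2. Updating the queue after every assignment is what turns a nearest-best choice into a load-balancing one.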

3.3. Communication Model

3.4. Computation Model

3.4.1. Local Processing

3.4.2. Remote Processing

3.5. Security
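The security layer described in the contributions pairs AES with dynamic one-time key generation. The sketch below illustrates only the one-time key discipline: a fresh random AES-256 key per offloaded task, handed out once and then discarded. It is a hypothetical helper, not the paper's scheme; the in-memory dictionary stands in for whatever secure key-sharing mechanism the vehicle and edge server actually use, and the AES encryption itself would be performed with a vetted cryptographic library (omitted here to keep the example standard-library only).

```python
import secrets


class OneTimeKeyStore:
    """Hypothetical helper: one fresh AES key per offloaded task, usable once."""

    def __init__(self, key_bytes: int = 32):  # 32 bytes = 256-bit AES key
        if key_bytes not in (16, 24, 32):
            raise ValueError("AES keys must be 128, 192, or 256 bits")
        self.key_bytes = key_bytes
        self._pending: dict[str, bytes] = {}  # issued but not yet used
        self._seen: set[str] = set()          # every task ID that ever received a key

    def issue(self, task_id: str) -> bytes:
        """Generate a fresh random key for a new task (sender side)."""
        if task_id in self._seen:
            raise ValueError(f"task {task_id!r} already received a key")
        # Cryptographically secure randomness; never derived from earlier keys.
        key = secrets.token_bytes(self.key_bytes)
        self._seen.add(task_id)
        self._pending[task_id] = key
        return key

    def consume(self, task_id: str) -> bytes:
        """Return the key for decryption and discard it (receiver side).
        A second call for the same task raises KeyError."""
        return self._pending.pop(task_id)
```

Tracking issued task IDs separately from pending keys ensures that a task ID can never be reissued, even after its key has been consumed, which is the property that makes each key one-time.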

3.6. Task Caching
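The contributions state that the caching strategy selectively caches application code and task-specific data while accounting for server capacity, task popularity, and data size. One simple way to combine those three factors is a greedy knapsack heuristic: rank items by expected energy saved per megabyte and fill the server until capacity runs out. This is a sketch under stated assumptions, not the paper's caching rule; the `CacheItem` fields, the per-hit energy saving, and the density score are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheItem:
    name: str
    size_mb: float         # storage needed on the edge server (assumed > 0)
    popularity: float      # request probability of the task (assumed known)
    energy_saved_j: float  # energy saved per cache hit, e.g. avoided upload (assumed)


def select_cache(items: list[CacheItem], capacity_mb: float) -> list[str]:
    """Greedy selection by expected energy saved per MB, within server capacity."""

    def density(it: CacheItem) -> float:
        return it.popularity * it.energy_saved_j / it.size_mb

    chosen: list[str] = []
    used = 0.0
    for it in sorted(items, key=density, reverse=True):
        if used + it.size_mb <= capacity_mb:  # skip items that no longer fit
            chosen.append(it.name)
            used += it.size_mb
    return chosen


if __name__ == "__main__":
    items = [
        CacheItem("A", size_mb=4.0, popularity=0.5, energy_saved_j=8.0),   # density 1.0
        CacheItem("B", size_mb=2.0, popularity=0.4, energy_saved_j=10.0),  # density 2.0
        CacheItem("C", size_mb=5.0, popularity=0.9, energy_saved_j=10.0),  # density 1.8
    ]
    print(select_cache(items, capacity_mb=7.0))  # ['B', 'C']
```

Dividing by size favors small, popular items, so a large but rarely requested item is cached only if spare capacity remains; an exact knapsack solver could replace the greedy loop when item counts are small.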

3.7. Problem Formulation

4. Proposed Distributed Deep Learning Algorithm

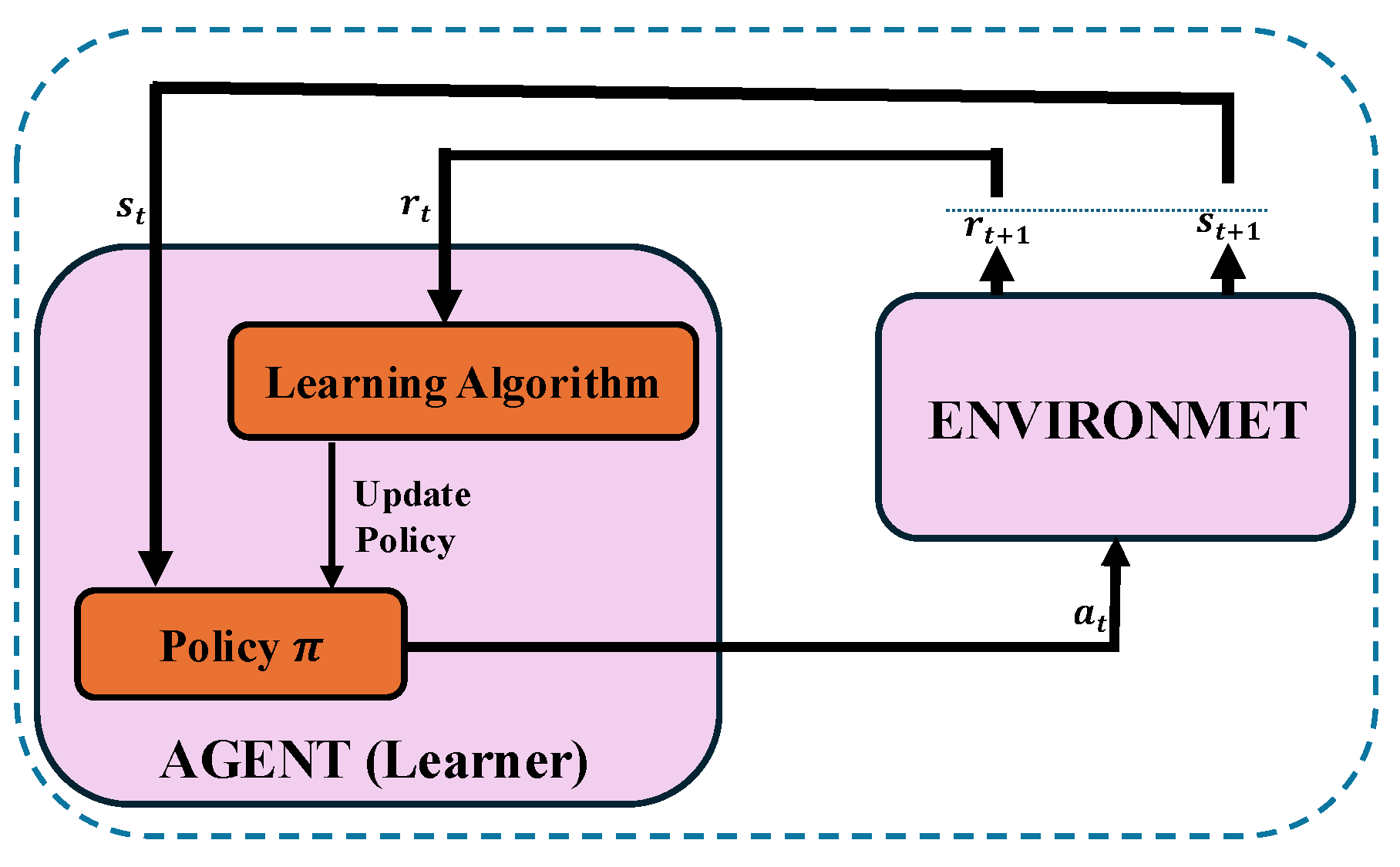

4.1. An Introduction to the Principles of Reinforcement Learning

4.2. Essential Elements of the Reinforcement Learning Framework

- State: The state space, denoted by S, is defined by the essential task-specific attributes and, formally, at time t, can be represented as .

- Action: The action space, denoted by A, encompasses the caching and offloading decisions made by the system and, formally, at time t, can be represented as , where the selection of each action is governed by a policy .

- Reward: In this study, the objective function defined in Equation (24) is directly mapped to the reward function within the RL framework. Specifically, the reward , at time t, is determined by evaluating the current state and the selected action according to the policy . This process is repeated iteratively over time, where the cumulative reward is minimized according to the policy , to optimize system performance through the formula , where indicates the system energy in Equation (24).
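Because the reward is the system energy from Equation (24) and the cumulative value is minimized, the framework can equivalently be read as maximizing a reward of negative energy. The sketch below shows two generic pieces of that loop: the discounted cumulative energy over an episode and an epsilon-greedy choice among joint caching and offloading actions using predicted energy costs. It is a generic RL illustration, not the paper's Algorithm 2; the action encoding (`TARGETS`, `ACTIONS`), the discount factor `gamma`, and the exploration rate `epsilon` are assumptions.

```python
import itertools
import random

# Hypothetical action encoding: (cache the task's code/data?, where to execute).
TARGETS = ("local", "rsu", "uav", "cloud")
ACTIONS = list(itertools.product((0, 1), TARGETS))


def discounted_energy(energies, gamma=0.9):
    """Cumulative discounted system energy, sum_t gamma^t * E_t (to be minimized)."""
    return sum((gamma ** t) * e for t, e in enumerate(energies))


def select_action(predicted_energy, epsilon, rng=None):
    """Epsilon-greedy choice over predicted energy costs: explore with
    probability epsilon, otherwise return the index of the lowest-energy action."""
    if rng is None:
        rng = random.Random()
    if rng.random() < epsilon:
        return rng.randrange(len(predicted_energy))
    return min(range(len(predicted_energy)), key=predicted_energy.__getitem__)


if __name__ == "__main__":
    print(len(ACTIONS))                                   # 8
    print(discounted_energy([1.0, 1.0], gamma=0.5))       # 1.5
    print(select_action([3.0, 1.0, 2.0], 0.0, random.Random(0)))  # 1
```

In a deep RL agent the `predicted_energy` list would come from a neural network evaluated on the current state; here it is passed in directly so the selection rule can be seen in isolation.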

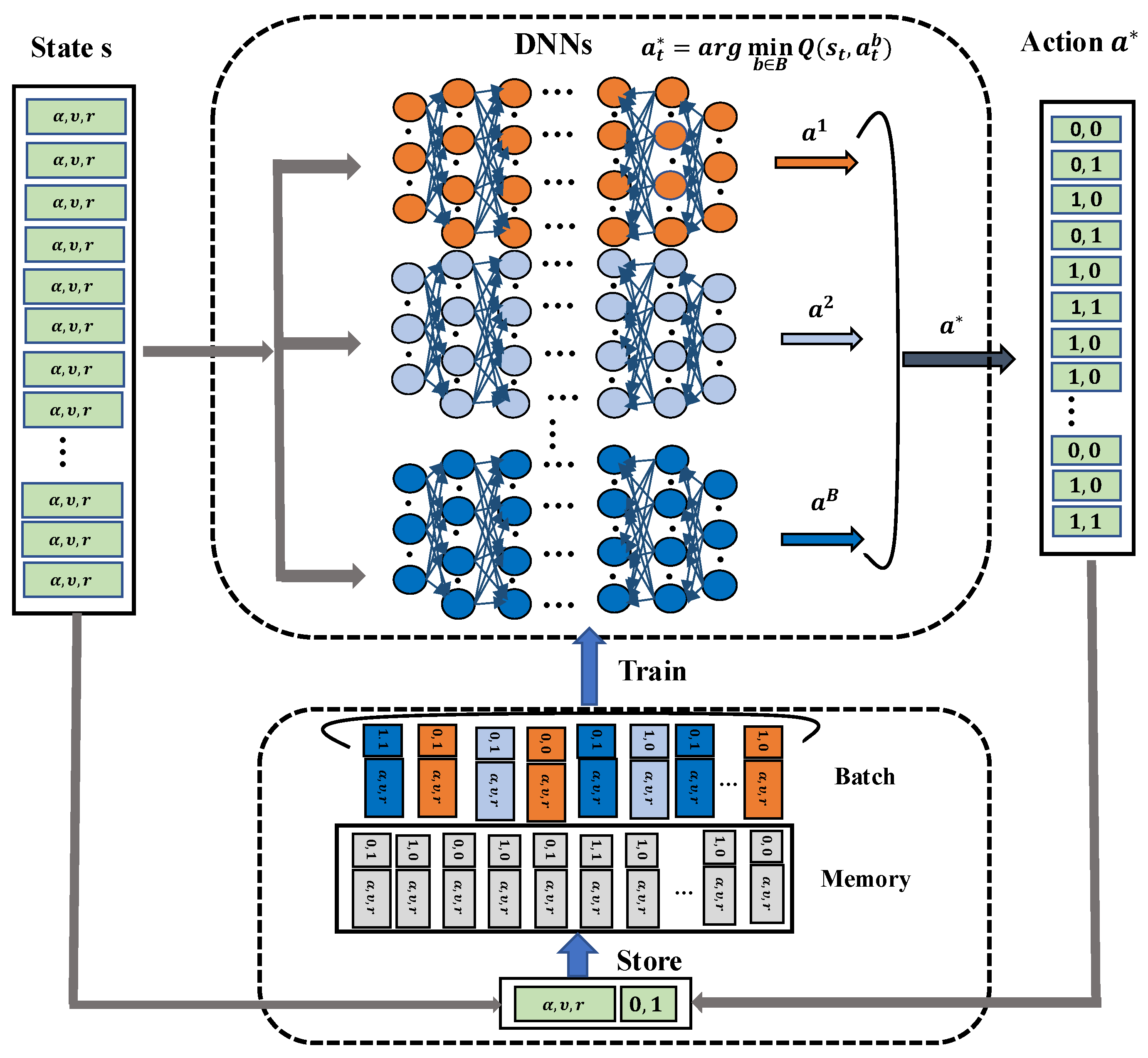

4.3. Robust Distributed Deep Reinforcement Learning

Algorithm 2: Secured and Optimized DDRL Algorithm

5. Evaluation and Discussion of Simulation Results

5.1. Simulation Setup

5.2. Experimental Results and Discussions

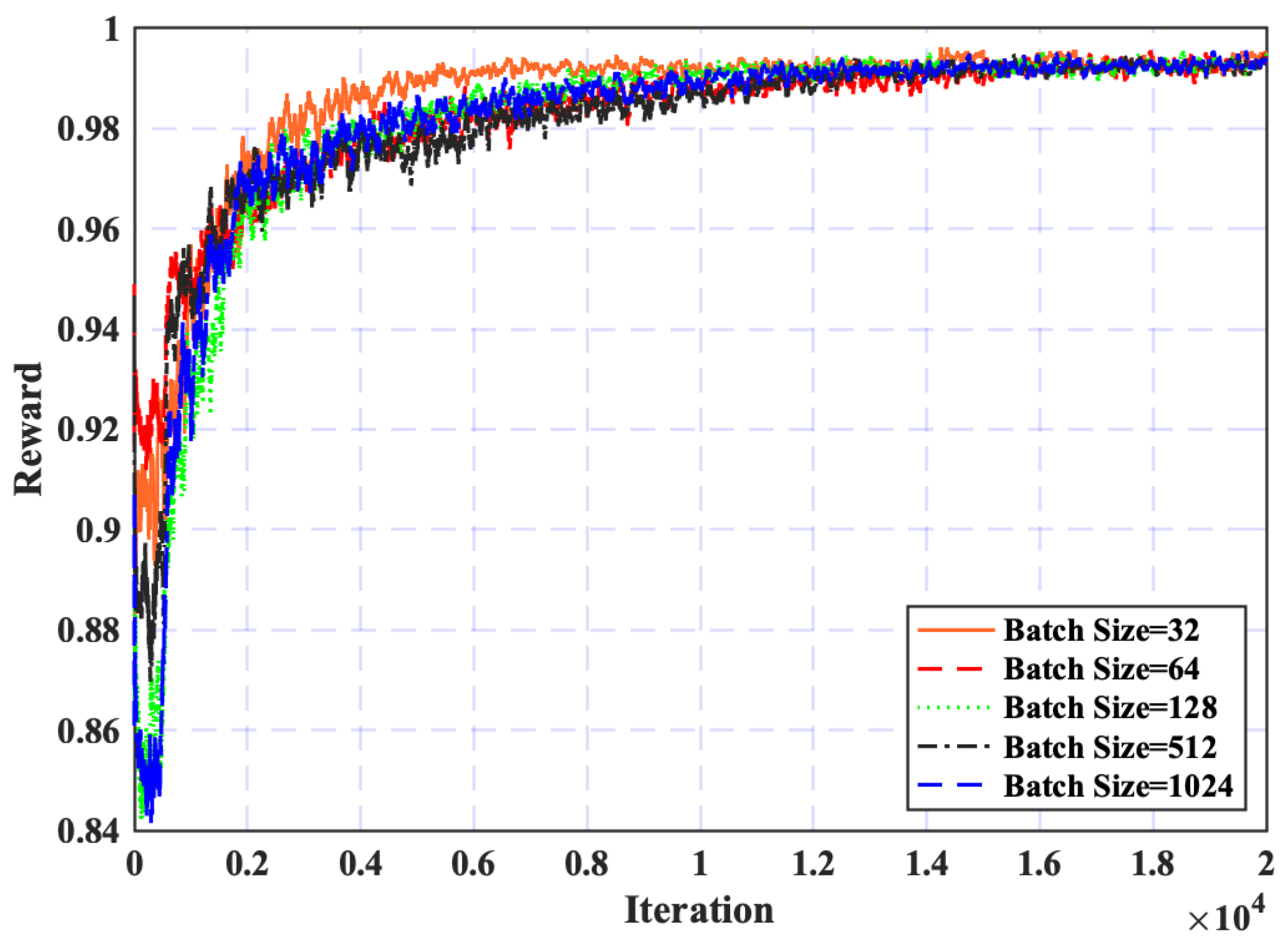

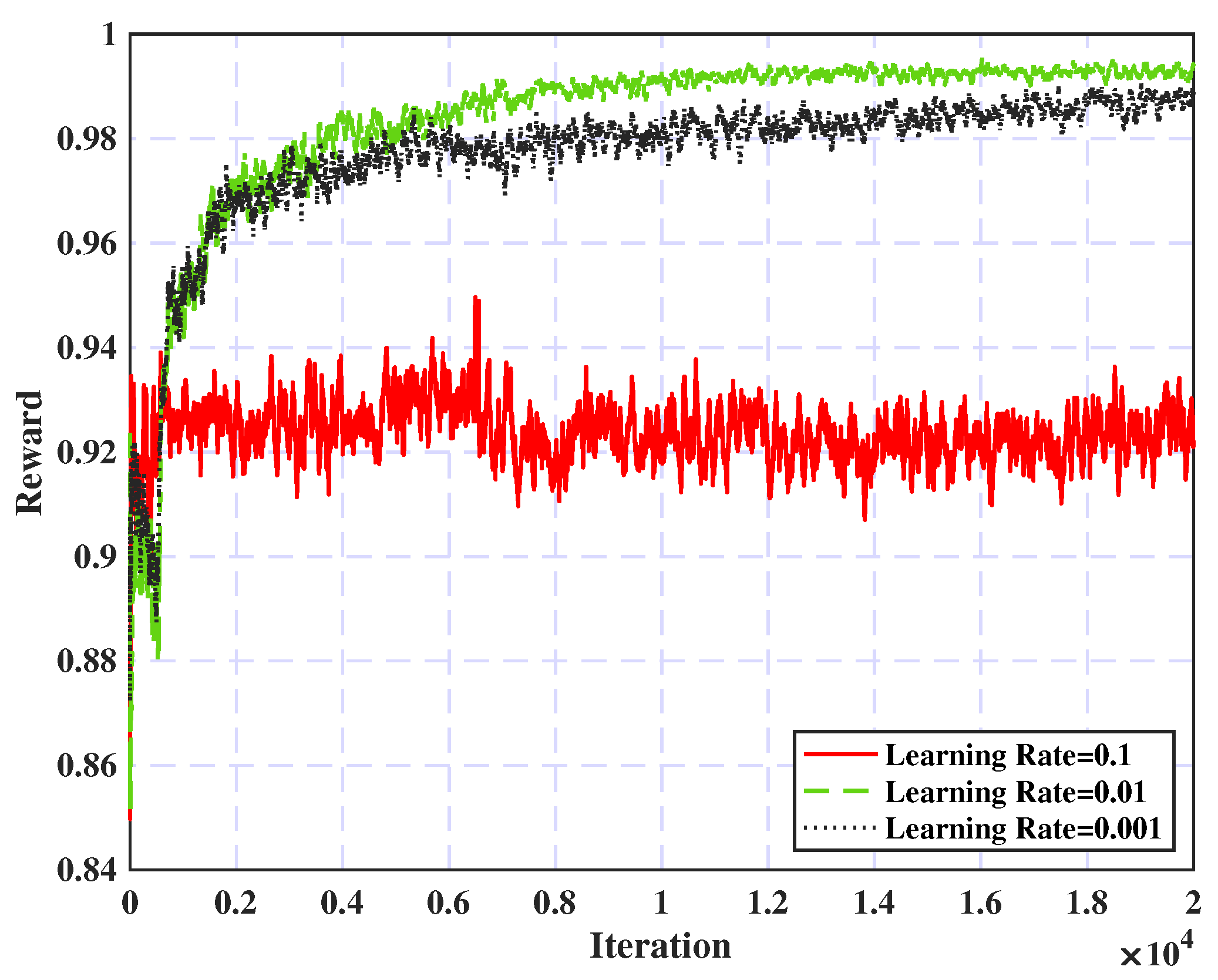

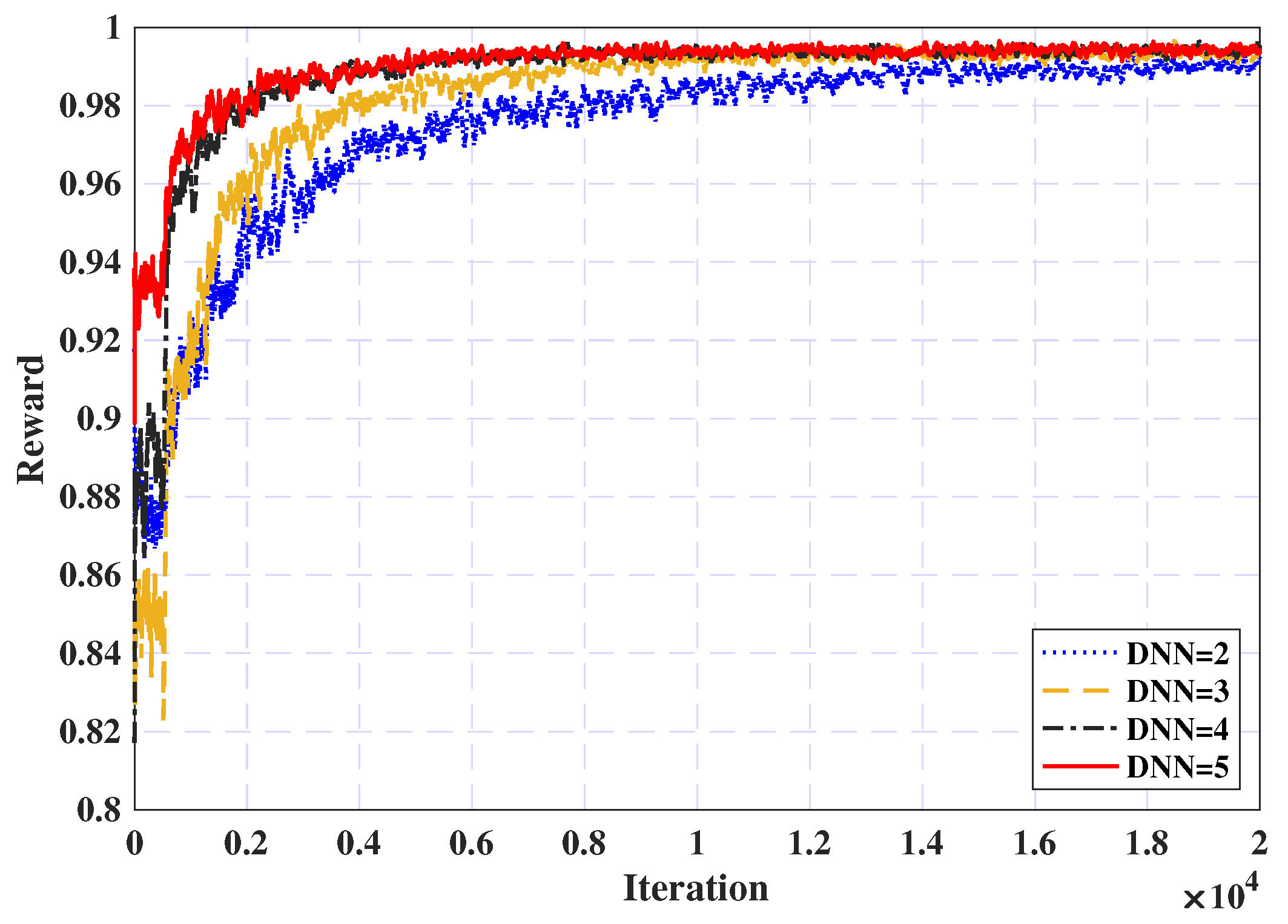

5.2.1. Convergence Analysis

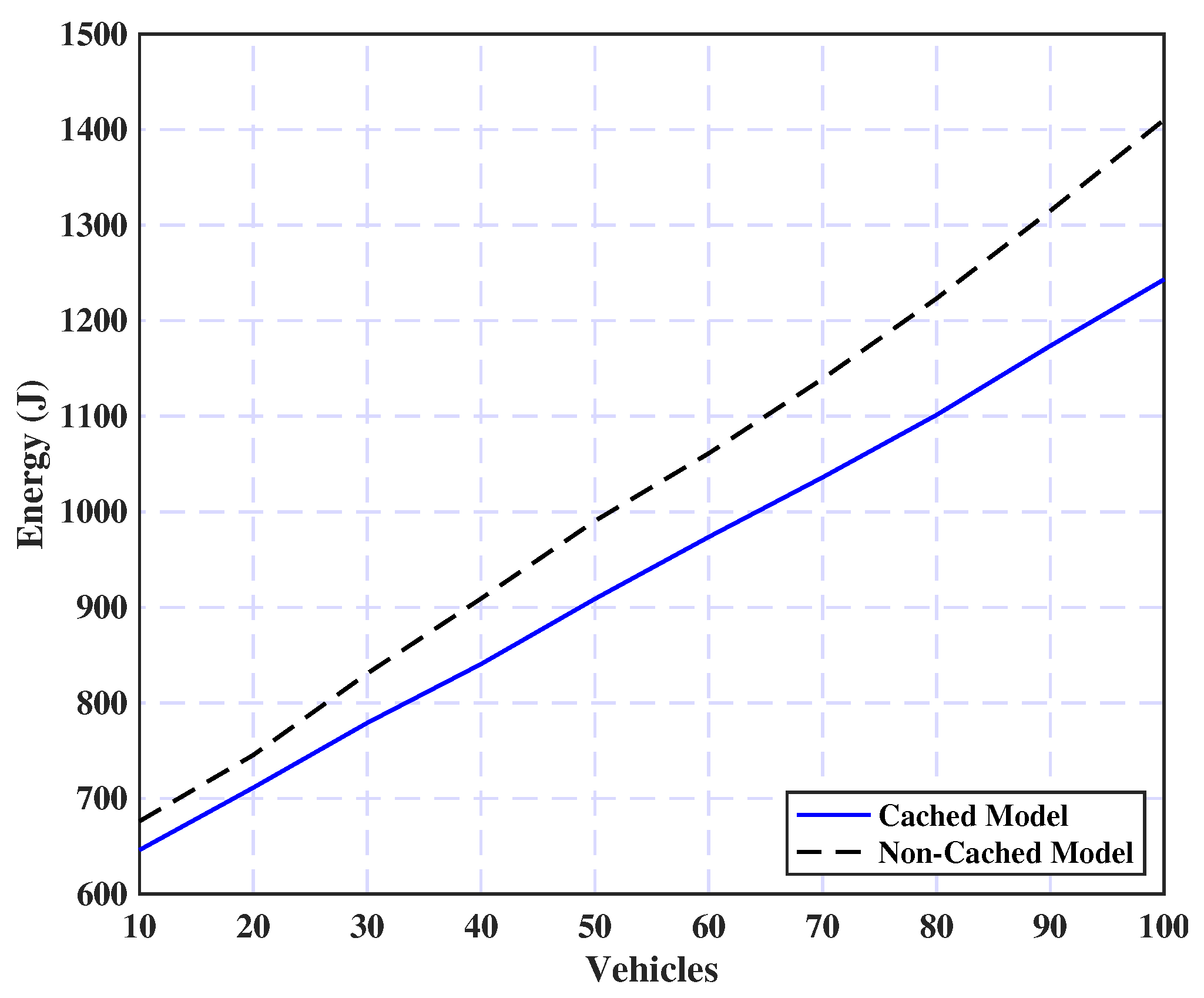

5.2.2. Task Caching Effect

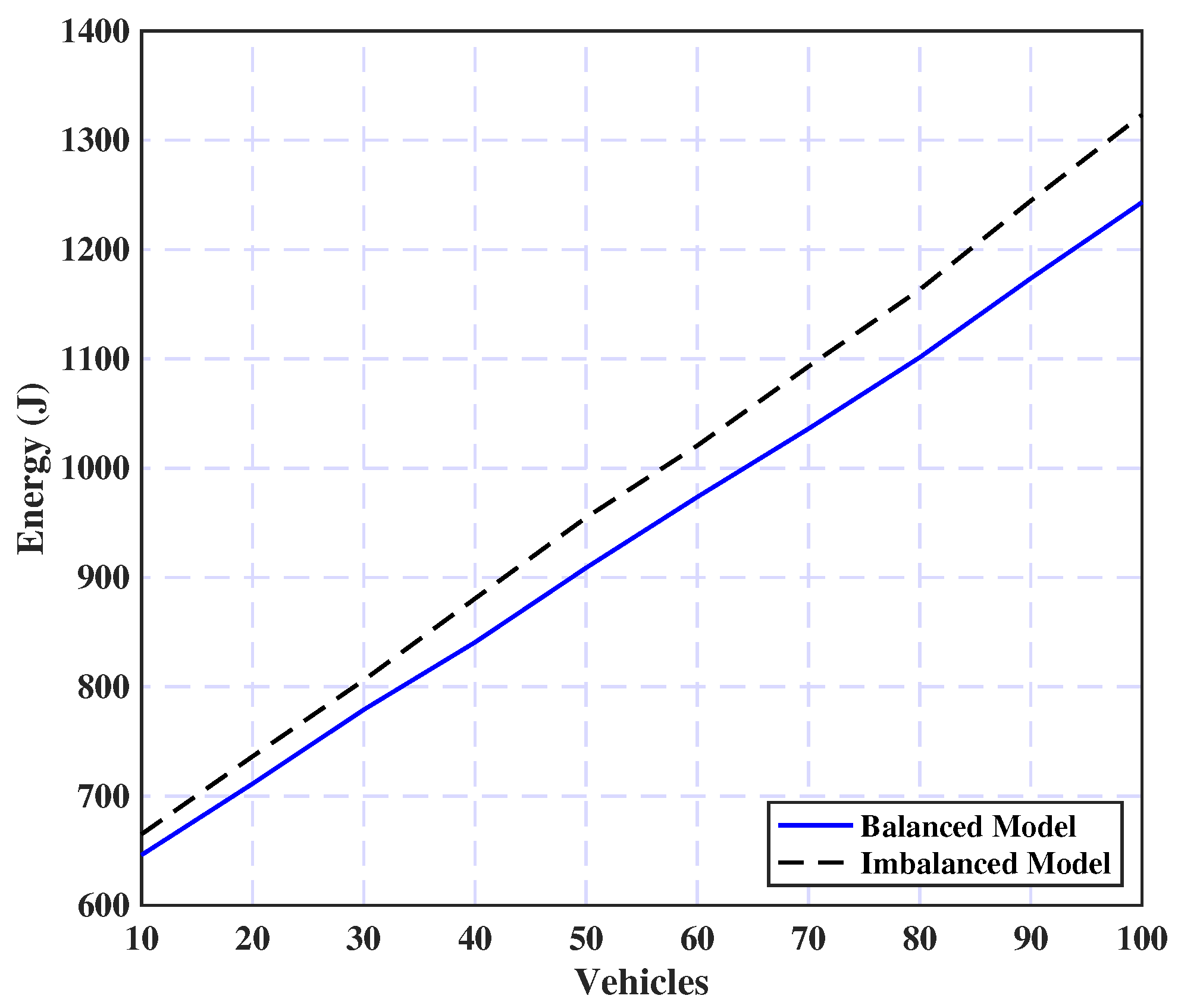

5.2.3. Load Balancing Effect

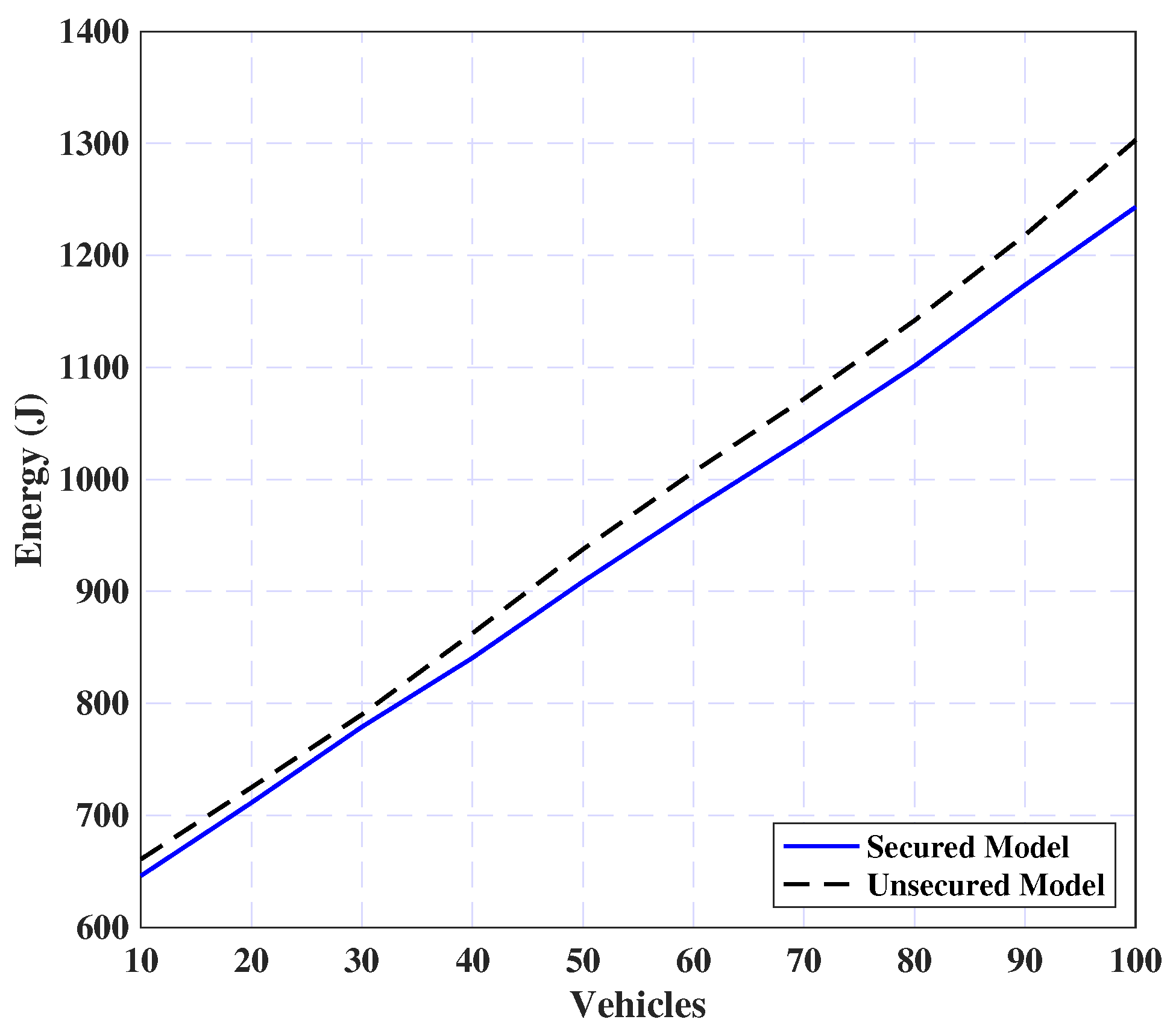

5.2.4. Security Effect

5.2.5. System Performance

- Local Execution: All computational tasks are executed on the vehicles themselves; no task is offloaded to external resources.

- RSU Execution: All tasks are offloaded to nearby RSUs for remote processing, relying entirely on their computing resources.
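To make the two baselines concrete, the sketch below computes the vehicle-side energy of each under two widely used textbook models: dynamic CPU energy κf²C for local execution and transmit power times upload time (pD/r) for offloading. These models and the value κ = 1e-27 are assumptions for illustration; the paper's own energy terms (Equation (24)) are not reproduced in this text and may include further components, such as result download, encryption overhead, or caching effects.

```python
def local_energy(cycles, f_hz, kappa=1e-27):
    """Dynamic CPU energy kappa * f^2 * C for executing C cycles at frequency f."""
    return kappa * f_hz ** 2 * cycles


def offload_energy(bits, p_tx_w, rate_bps):
    """Vehicle-side energy to upload `bits` at `rate_bps` with transmit power p (W)."""
    return p_tx_w * bits / rate_bps


def baseline_energy(tasks, f_local_hz, p_tx_w, rate_bps, kappa=1e-27):
    """Total vehicle energy for the Local Execution and RSU Execution baselines.
    `tasks` is a list of (cpu_cycles, input_bits) pairs (hypothetical format)."""
    local = sum(local_energy(c, f_local_hz, kappa) for c, _ in tasks)
    rsu = sum(offload_energy(b, p_tx_w, rate_bps) for _, b in tasks)
    return {"local": local, "rsu": rsu}


if __name__ == "__main__":
    tasks = [(1e9, 1e6), (5e8, 2e6)]  # (cycles, bits)
    print(baseline_energy(tasks, f_local_hz=1e9, p_tx_w=0.5, rate_bps=1e7))
    # approximately {'local': 1.5, 'rsu': 0.15}
```

Under these assumptions the local baseline grows with the square of the CPU frequency, while the RSU baseline depends only on data size and uplink rate, which is why neither extreme is uniformly best and a per-task offloading decision is needed.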

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Liu, L.; Lu, S.; Zhong, R.; Wu, B.; Yao, Y.; Zhang, Q.; Shi, W. Computing systems for autonomous driving: State of the art and challenges. IEEE Internet Things J. 2020, 8, 6469–6486.

2. Zhao, J.; Zhao, W.; Deng, B.; Wang, Z.; Zhang, F.; Zheng, W.; Cao, W.; Nan, J.; Lian, Y.; Burke, A.F. Autonomous driving system: A comprehensive survey. Expert Syst. Appl. 2024, 242, 122836.

3. Gugan, G.; Haque, A. Path planning for autonomous drones: Challenges and future directions. Drones 2023, 7, 169.

4. Alvarez-Diazcomas, A.; Estévez-Bén, A.A.; Rodríguez-Reséndiz, J.; Carrillo-Serrano, R.V.; Álvarez-Alvarado, J.M. A high-efficiency capacitor-based battery equalizer for electric vehicles. Sensors 2023, 23, 5009.

5. Fadhel, M.A.; Duhaim, A.M.; Saihood, A.; Sewify, A.; Al-Hamadani, M.N.; Albahri, A.; Alzubaidi, L.; Gupta, A.; Mirjalili, S.; Gu, Y. Comprehensive systematic review of information fusion methods in smart cities and urban environments. Inf. Fusion 2024, 107, 102317.

6. Baccour, E.; Mhaisen, N.; Abdellatif, A.A.; Erbad, A.; Mohamed, A.; Hamdi, M.; Guizani, M. Pervasive AI for IoT applications: A survey on resource-efficient distributed artificial intelligence. IEEE Commun. Surv. Tutor. 2022, 24, 2366–2418.

7. Yusuf, S.A.; Khan, A.; Souissi, R. Vehicle-to-everything (V2X) in the autonomous vehicles domain–A technical review of communication, sensor, and AI technologies for road user safety. Transp. Res. Interdiscip. Perspect. 2024, 23, 100980.

8. Al-Ansi, A.; Al-Ansi, A.M.; Muthanna, A.; Elgendy, I.A.; Koucheryavy, A. Survey on intelligence edge computing in 6G: Characteristics, challenges, potential use cases, and market drivers. Future Internet 2021, 13, 118.

9. Elgendy, I.A.; Yadav, R. Survey on mobile edge-cloud computing: A taxonomy on computation offloading approaches. In Security and Privacy Preserving for IoT and 5G Networks: Techniques, Challenges, and New Directions; Springer: Berlin/Heidelberg, Germany, 2022; pp. 117–158.

10. Wang, Y.; Yang, C.; Lan, S.; Zhu, L.; Zhang, Y. End-edge-cloud collaborative computing for deep learning: A comprehensive survey. IEEE Commun. Surv. Tutor. 2024, 26, 2647–2683.

11. Whaiduzzaman, M.; Sookhak, M.; Gani, A.; Buyya, R. A survey on vehicular cloud computing. J. Netw. Comput. Appl. 2014, 40, 325–344.

12. Masood, A.; Lakew, D.S.; Cho, S. Security and privacy challenges in connected vehicular cloud computing. IEEE Commun. Surv. Tutor. 2020, 22, 2725–2764.

13. Hasan, M.K.; Jahan, N.; Nazri, M.Z.A.; Islam, S.; Khan, M.A.; Alzahrani, A.I.; Alalwan, N.; Nam, Y. Federated learning for computational offloading and resource management of vehicular edge computing in 6G-V2X network. IEEE Trans. Consum. Electron. 2024, 70, 3827–3847.

14. Yan, G.; Liu, K.; Liu, C.; Zhang, J. Edge Intelligence for Internet of Vehicles: A Survey. IEEE Trans. Consum. Electron. 2024, 70, 4858–4877.

15. Narayanasamy, I.; Rajamanickam, V. A Cascaded Multi-Agent Reinforcement Learning-Based Resource Allocation for Cellular-V2X Vehicular Platooning Networks. Sensors 2024, 24, 5658.

16. Dong, S.; Tang, J.; Abbas, K.; Hou, R.; Kamruzzaman, J.; Rutkowski, L.; Buyya, R. Task offloading strategies for mobile edge computing: A survey. Comput. Netw. 2024, 254, 110791.

17. Sun, G.; Wang, Y.; Sun, Z.; Wu, Q.; Kang, J.; Niyato, D.; Leung, V.C. Multi-objective optimization for multi-UAV-assisted mobile edge computing. IEEE Trans. Mob. Comput. 2024, 23, 14803–14820.

18. Alhelaly, S.; Muthanna, A.; Elgendy, I.A. Optimizing task offloading energy in multi-user multi-UAV-enabled mobile edge-cloud computing systems. Appl. Sci. 2022, 12, 6566.

19. Mach, P.; Becvar, Z. Mobile edge computing: A survey on architecture and computation offloading. IEEE Commun. Surv. Tutor. 2017, 19, 1628–1656.

20. Asghari, A.; Sohrabi, M.K. Server placement in mobile cloud computing: A comprehensive survey for edge computing, fog computing and cloudlet. Comput. Sci. Rev. 2024, 51, 100616.

21. Xiao, Y.; Jia, Y.; Liu, C.; Cheng, X.; Yu, J.; Lv, W. Edge computing security: State of the art and challenges. Proc. IEEE 2019, 107, 1608–1631.

22. Choudhury, A.; Ghose, M.; Islam, A.; Yogita. Machine learning-based computation offloading in multi-access edge computing: A survey. J. Syst. Archit. 2024, 148, 103090.

23. Gill, S.S.; Golec, M.; Hu, J.; Xu, M.; Du, J.; Wu, H.; Walia, G.K.; Murugesan, S.S.; Ali, B.; Kumar, M.; et al. Edge AI: A taxonomy, systematic review and future directions. Clust. Comput. 2025, 28, 18.

24. Wu, X.; Dong, S.; Hu, J.; Huang, Z. An efficient many-objective optimization algorithm for computation offloading in heterogeneous vehicular edge computing network. Simul. Model. Pract. Theory 2024, 131, 102870.

25. Elgendy, I.A.; Zhang, W.Z.; Zeng, Y.; He, H.; Tian, Y.C.; Yang, Y. Efficient and secure multi-user multi-task computation offloading for mobile-edge computing in mobile IoT networks. IEEE Trans. Netw. Serv. Manag. 2020, 17, 2410–2422.

26. Dai, X.; Xiao, Z.; Jiang, H.; Lui, J.C. UAV-assisted task offloading in vehicular edge computing networks. IEEE Trans. Mob. Comput. 2023, 23, 2520–2534.

27. Almuseelem, W. Energy-efficient and security-aware task offloading for multi-tier edge-cloud computing systems. IEEE Access 2023, 11, 66428–66439.

28. Yuan, H.; Wang, M.; Bi, J.; Shi, S.; Yang, J.; Zhang, J.; Zhou, M.; Buyya, R. Cost-efficient Task Offloading in Mobile Edge Computing with Layered Unmanned Aerial Vehicles. IEEE Internet Things J. 2024, 11, 30496–30509.

29. Geng, L.; Zhao, H.; Wang, J.; Kaushik, A.; Yuan, S.; Feng, W. Deep-reinforcement-learning-based distributed computation offloading in vehicular edge computing networks. IEEE Internet Things J. 2023, 10, 12416–12433.

30. Zhao, L.; Zhao, Z.; Zhang, E.; Hawbani, A.; Al-Dubai, A.Y.; Tan, Z.; Hussain, A. A digital twin-assisted intelligent partial offloading approach for vehicular edge computing. IEEE J. Sel. Areas Commun. 2023, 41, 3386–3400.

31. Liu, X.; Zheng, J.; Zhang, M.; Li, Y.; Wang, R.; He, Y. Multi-User Computation Offloading and Resource Allocation Algorithm in a Vehicular Edge Network. Sensors 2024, 24, 2205.

32. Lu, H.; He, X.; Zhang, D. Security-Aware Task Offloading Using Deep Reinforcement Learning in Mobile Edge Computing Systems. Electronics 2024, 13, 2933.

33. Tian, H.; Zhu, L.; Tan, L. A joint task caching and computation offloading scheme based on deep reinforcement learning. Peer-to-Peer Netw. Appl. 2025, 18, 57.

34. Tang, X.; Tang, T.; Shen, Z.; Zheng, H.; Ding, W. Double deep Q-network-based dynamic offloading decision-making for mobile edge computing with regular hexagonal deployment structure of servers. Appl. Soft Comput. 2025, 169, 112594.

35. Xue, J.; Wang, L.; Yu, Q.; Mao, P. Multi-Agent Deep Reinforcement Learning-based Partial Offloading and Resource Allocation in Vehicular Edge Computing Networks. Comput. Commun. 2025, 234, 108081.

36. Zhu, L.; Li, B.; Tan, L. A vehicular edge computing offloading and task caching solution based on spatiotemporal prediction. Future Gener. Comput. Syst. 2025, 166, 107679.

37. Yang, J.; Yang, K.; Dai, X.; Xiao, Z.; Jiang, H.; Zeng, F.; Li, B. Service-Aware Computation Offloading for Parallel Tasks in VEC Networks. IEEE Internet Things J. 2024, 12, 2979–2993.

38. Min, H.; Rahmani, A.M.; Ghaderkourehpaz, P.; Moghaddasi, K.; Hosseinzadeh, M. A joint optimization of resource allocation management and multi-task offloading in high-mobility vehicular multi-access edge computing networks. Ad Hoc Netw. 2025, 166, 103656.

39. Devarajan, G.G.; Thangam, S.; Alenazi, M.J.; Kumaran, U.; Chandran, G.; Bashir, A.K. Federated Learning and Blockchain-Enabled Framework for Traffic Rerouting and Task Offloading in the Internet of Vehicles (IoV). IEEE Trans. Consum. Electron. 2025.

40. Shakkeera, L.; Matheen, F.G. Efficient task scheduling and computational offloading optimization with federated learning and blockchain in mobile cloud computing. Results Control Optim. 2025, 18, 100524.

41. Dong, S.; Wang, P.; Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 2021, 40, 100379.

42. Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Al Sallab, A.A.; Yogamani, S.; Pérez, P. Deep reinforcement learning for autonomous driving: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 23, 4909–4926.

43. He, H.; Meng, X.; Wang, Y.; Khajepour, A.; An, X.; Wang, R.; Sun, F. Deep reinforcement learning based energy management strategies for electrified vehicles: Recent advances and perspectives. Renew. Sustain. Energy Rev. 2024, 192, 114248.

44. Elgendy, I.A.; Zhang, W.; Tian, Y.C.; Li, K. Resource allocation and computation offloading with data security for mobile edge computing. Future Gener. Comput. Syst. 2019, 100, 531–541.

45. Chen, X.; Jiao, L.; Li, W.; Fu, X. Efficient multi-user computation offloading for mobile-edge cloud computing. IEEE/ACM Trans. Netw. 2015, 24, 2795–2808.

46. Zhang, W.Z.; Elgendy, I.A.; Hammad, M.; Iliyasu, A.M.; Du, X.; Guizani, M.; Abd El-Latif, A.A. Secure and optimized load balancing for multitier IoT and edge-cloud computing systems. IEEE Internet Things J. 2020, 8, 8119–8132.

47. Deb, S.; Monogioudis, P. Learning-based uplink interference management in 4G LTE cellular systems. IEEE/ACM Trans. Netw. 2014, 23, 398–411.

48. Selent, D. Advanced encryption standard. Rivier Acad. J. 2010, 6, 1–14.

49. Li, G.; Zhang, Z.; Zhang, J.; Hu, A. Encrypting wireless communications on the fly using one-time pad and key generation. IEEE Internet Things J. 2020, 8, 357–369.

50. Hao, Y.; Chen, M.; Hu, L.; Hossain, M.S.; Ghoneim, A. Energy efficient task caching and offloading for mobile edge computing. IEEE Access 2018, 6, 11365–11373.

51. Xing, H.; Liu, L.; Xu, J.; Nallanathan, A. Joint task assignment and resource allocation for D2D-enabled mobile-edge computing. IEEE Trans. Commun. 2019, 67, 4193–4207.

52. Ernst, D.; Louette, A. Introduction to Reinforcement Learning; Feuerriegel, S., Hartmann, J., Janiesch, C., Zschech, P., Eds.; Springer: Singapore, 2024; pp. 111–126.

53. Yin, Q.; Yu, T.; Shen, S.; Yang, J.; Zhao, M.; Ni, W.; Huang, K.; Liang, B.; Wang, L. Distributed deep reinforcement learning: A survey and a multi-player multi-agent learning toolbox. Mach. Intell. Res. 2024, 21, 411–430.

54. Huang, L.; Feng, X.; Zhang, L.; Qian, L.; Wu, Y. Multi-server multi-user multi-task computation offloading for mobile edge computing networks. Sensors 2019, 19, 1446.

55. He, X.; Cen, Y.; Liao, Y.; Chen, X.; Yang, C. Optimal Task Offloading Strategy for Vehicular Networks in Mixed Coverage Scenarios. Appl. Sci. 2024, 14, 10787.

56. Pang, S.; Hou, L.; Gui, H.; He, X.; Wang, T.; Zhao, Y. Multi-mobile vehicles task offloading for vehicle-edge-cloud collaboration: A dependency-aware and deep reinforcement learning approach. Comput. Commun. 2024, 213, 359–371.

| Ref. | Objective | Methodology | Security | Limitations |
|---|---|---|---|---|
| [24] | Optimize task completion time, energy consumption, resource costs, and load balance. | Many-objective optimization algorithm for task offloading. | | Data security and privacy are not considered. |
| [25] | Optimize secure multi-user multi-task offloading in mobile edge computing for IoT. | Utilize integrated optimization of resource allocation, compression, and security for efficient MEC offloading. | ✓ | Lacks efficient load balancing among edge nodes. Task caching is not considered. |
| [26] | Minimize vehicular task delay under a long-term UAV energy constraint. | Utilize Lyapunov optimization and Markov approximation for real-time UAV-assisted vehicular task offloading optimization. | | Resource distribution lacks task specificity. Data security and privacy are not considered. |
| [27] | Minimize energy consumption while ensuring secure task offloading. | Utilize AES encryption, fingerprint authentication, and load-balancing algorithms for secure and energy-efficient task offloading. | ✓ | Inefficient load balancing for non-intersection vehicles. Task caching is ignored. |
| [28] | Minimize system cost. | An AGSP algorithm integrating PSO, GA, and SA for optimized UAV task offloading. | | Lacks security considerations, real-time adaptability, and scalability for large UAV networks. |
| [29] | Minimize system cost. | Utilize multi-agent DRL with actor-critic networks for distributed computation offloading in vehicular edge networks. | | Training is limited by the large state space and time variability. Mobility is not considered. Data security and privacy are not considered. |
| [30] | Minimize the total system computational delay. | An intelligent scheme that uses digital twins and clustering to optimize partial offloading. | | Data security and privacy are not considered. Task caching is not considered. |
| [31] | Minimize total system delay. | A DDPG-based reinforcement learning algorithm for multi-user task offloading and resource allocation optimization. | | Energy consumption is ignored. Data security and privacy are not considered. |
| [32] | Optimize secure task offloading while minimizing latency and energy consumption. | Utilize Proximal Policy Optimization (PPO)-based deep reinforcement learning for secure and efficient task offloading in MEC. | ✓ | Lacks efficient load balancing among edge nodes. Task caching is not considered. |
| [33] | Minimize the long-term computation overhead and energy consumption. | Employ deep reinforcement learning to optimize task caching and computation offloading in VECC. | | Lacks efficient load balancing among edge nodes. Data security and privacy are not considered. |
| [34] | Minimize task service latency. | A double deep Q-network-based approach with dynamic offloading. | | Data security and privacy are not considered. |
| [35] | Minimize long-term computation overhead. | A multi-agent deep reinforcement learning framework with task migration for continuous, efficient vehicular service. | | Lacks efficient load balancing among edge nodes. Data security and privacy are not considered. Task caching is not considered. |
| [36] | Optimize task offloading and caching using spatiotemporal prediction in vehicular networks. | Utilize spatiotemporal prediction, deep reinforcement learning, and digital twin technology for efficient task offloading and caching. | | Lacks efficient load balancing among edge nodes. Data security and privacy are not considered. |
| [37] | Minimize vehicular task delay. | A service-aware offloading strategy that utilizes real-world vehicular data for dynamic service prediction. | | Lacks efficient load balancing among edge nodes. Data security and privacy are not considered. Task caching is not considered. |
| [38] | Optimize resource allocation and task offloading in high-mobility vehicular edge networks. | Utilize a Double Deep Q-Network (DDQN) with multi-agent collaboration for dynamic task offloading and resource allocation. | | Lacks efficient load balancing and data security and privacy measures. Task caching is not considered. |
| [39] | Optimize traffic rerouting and task offloading using federated learning and blockchain in IoV. | Utilize federated learning, blockchain, and hybrid ACO-DRL for secure task offloading and dynamic traffic rerouting. | ✓ | Load balancing and task caching among edge nodes are not considered. |
| [40] | Optimize task scheduling and offloading using federated learning and blockchain. | Utilize federated learning with blockchain for secure task scheduling and computational offloading in mobile cloud computing. | ✓ | Increased latency due to blockchain verification and consensus mechanisms. Scalability challenges in managing large user bases and diverse tasks. High energy consumption from frequent model updates and blockchain processing. |
| Proposed | Minimize energy consumption under latency constraints. | An energy optimization and security-aware deep reinforcement learning-enabled task offloading framework for multi-tier VECC networks. | ✓ | Vehicle mobility is not considered. |

| Vehicles | Input Size | CPU Cycles | Estimated Processing Time: Transmission Time | Estimated Processing Time: Computation Time | Best RSU |
|---|---|---|---|---|---|
| | 15 | 15 | RSU | RSU | |
| | | | RSU | RSU | |
| | 20 | 10 | RSU | RSU | |
| | | | RSU | RSU | |
| | | | RSU | RSU | |
| | 15 | 20 | RSU | RSU | |
| | | | RSU | RSU | |
| | 10 | 15 | RSU | RSU | |
| | | | RSU | RSU | |
| | 15 | 25 | RSU | RSU | |
| | | | RSU | RSU | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Almuseelem, W. Deep Reinforcement Learning-Enabled Computation Offloading: A Novel Framework to Energy Optimization and Security-Aware in Vehicular Edge-Cloud Computing Networks. Sensors 2025, 25, 2039. https://doi.org/10.3390/s25072039
