A Multiscale Gradient Fusion Method for Color Image Edge Detection Using CBM3D Filtering

Abstract

1. Introduction

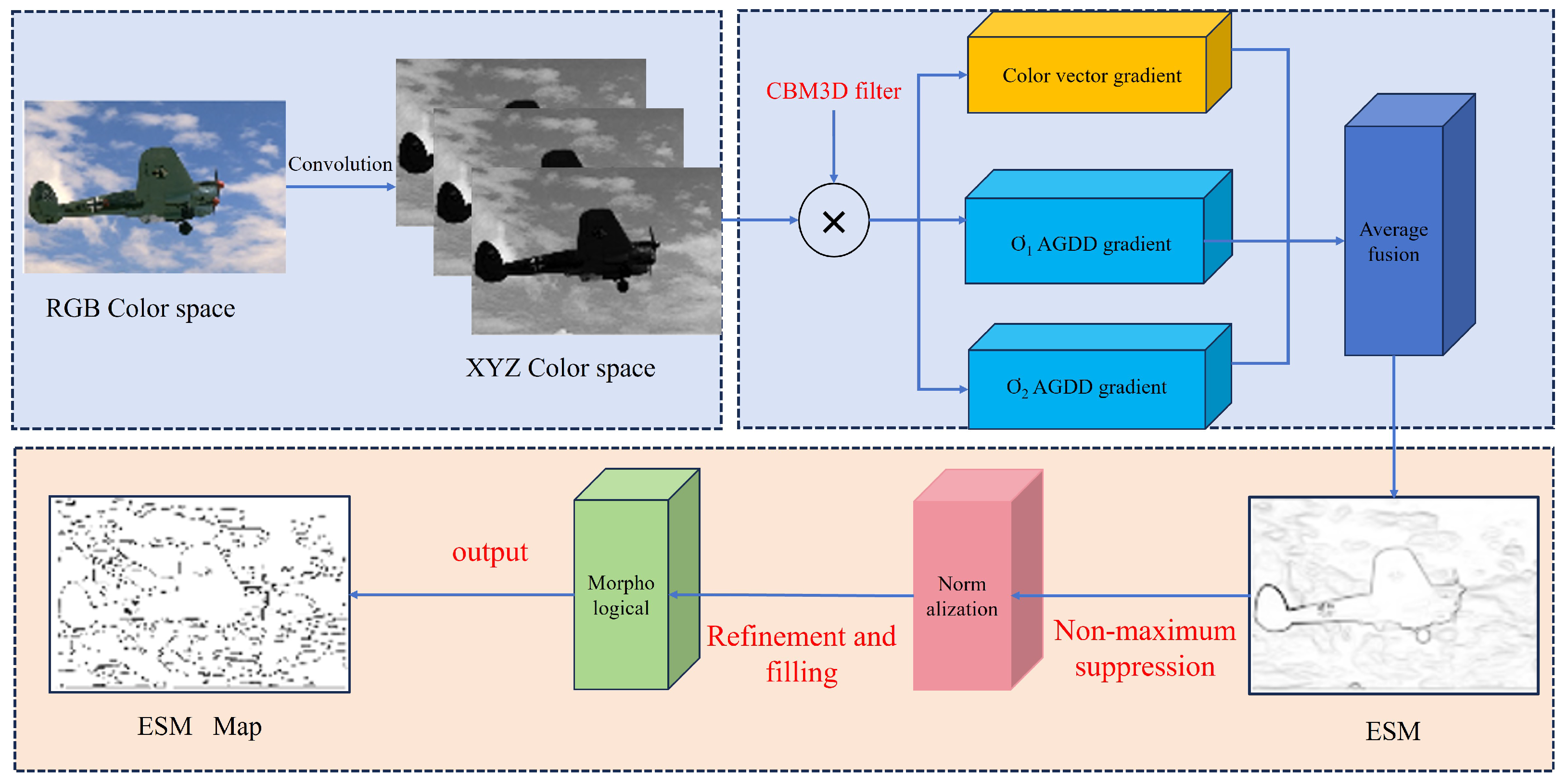

- The proposed algorithm combines multiscale gradient fusion with color BM3D filters for edge detection, harnessing the benefits of sparse coding and image denoising to significantly improve edge quality.

- By integrating the XYZ color space with anisotropic directional derivatives at multiple scales, a novel edge strength map (ESM) is generated, enhancing both the accuracy and robustness of edge detection.

- The ESM is optimized through pixel-average fusion and morphological filling techniques, effectively balancing large-scale edge elongation with small-scale resolution accuracy to achieve improved edge detection performance.

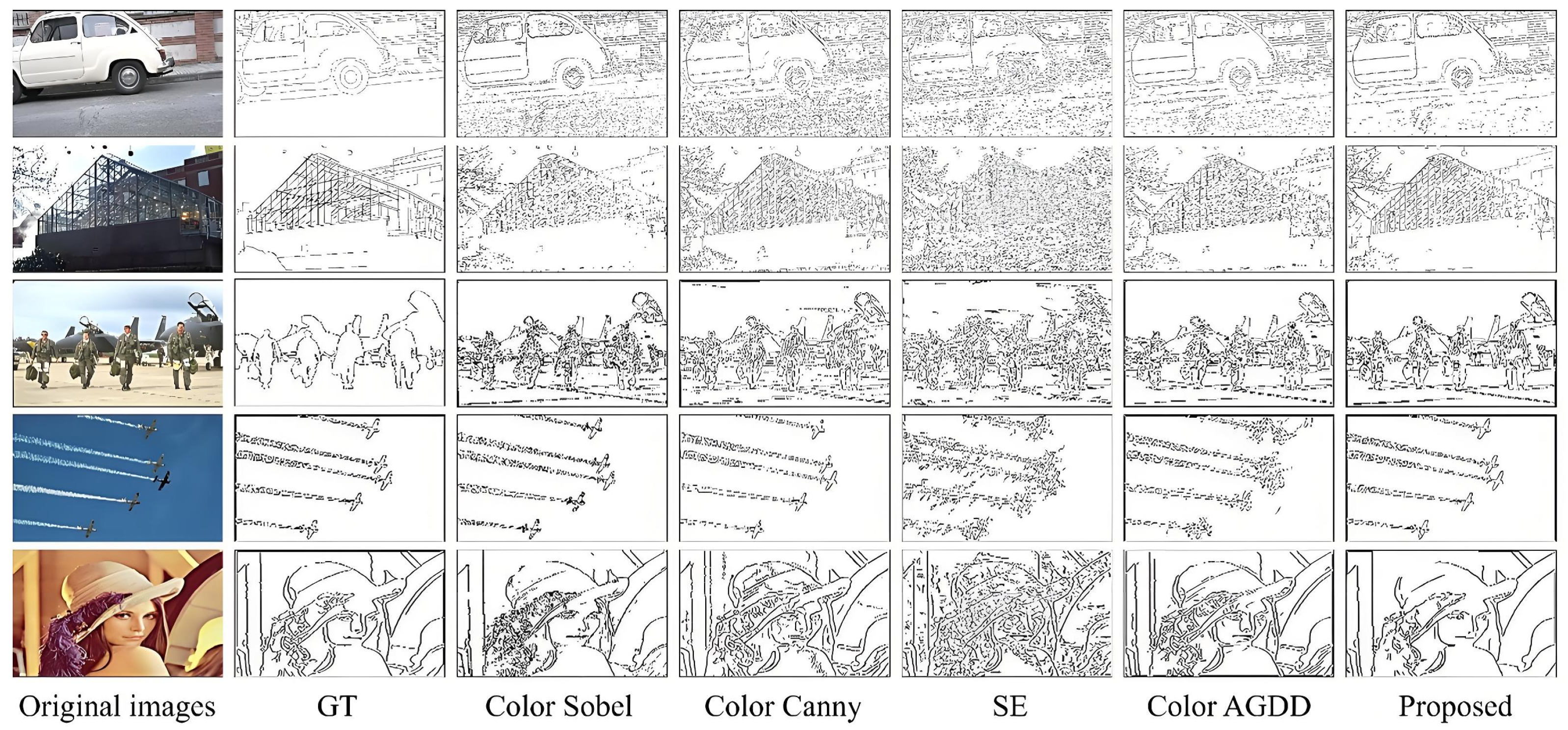

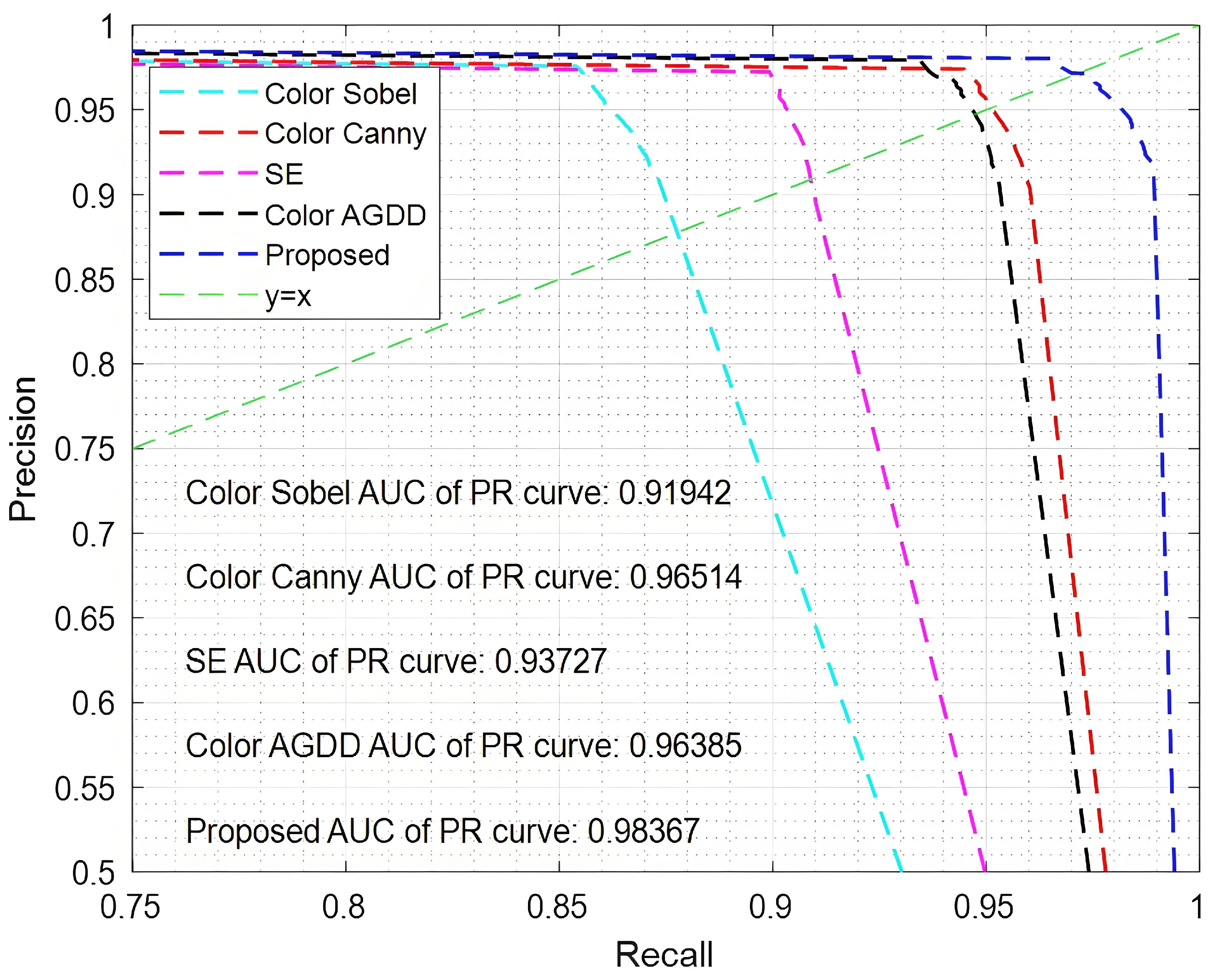

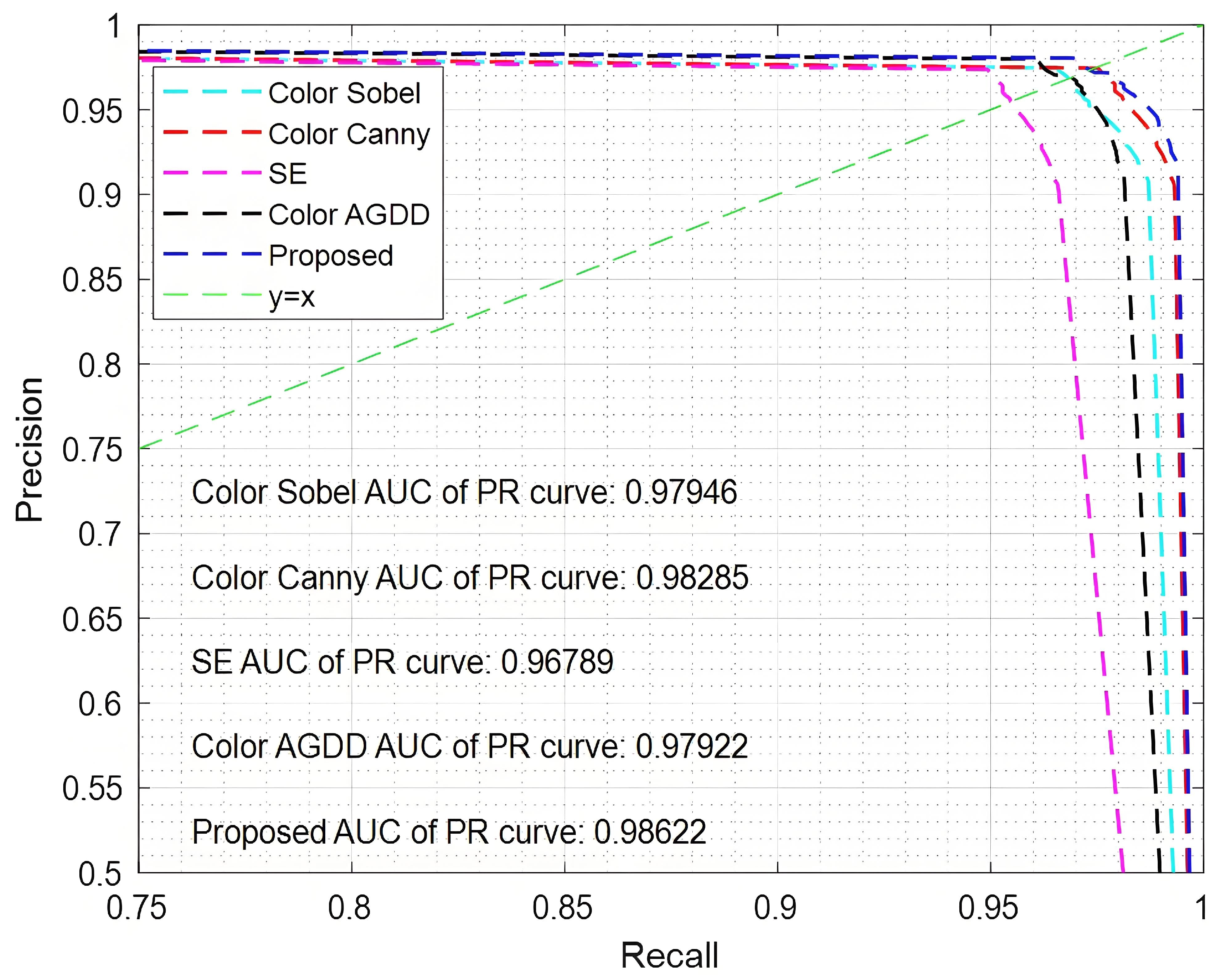

- The proposed algorithm is evaluated using standard metrics (PR curve, AUC, PSNR, MSE, FOM) and compared with leading edge detection methods, including Color Sobel, Color Canny, SE, and Color AGDD, showcasing its superior performance on both edge and non-edge detection datasets.
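The contributions listed above can be illustrated with a minimal sketch of the edge strength map (ESM) stage: convert to XYZ, compute gradient magnitudes at several scales, and fuse the scales by pixel-wise averaging. This is not the authors' implementation. It assumes linear sRGB input with a D65 conversion matrix, substitutes plain isotropic Gaussian derivatives for the anisotropic directional derivatives, combines channels by taking the maximum response, and omits the CBM3D denoising and morphological filling steps. The function names and the `sigmas` values are hypothetical.

```python
import numpy as np

# Linear-RGB (sRGB primaries, D65) to CIE XYZ. The conversion actually used
# in the paper is not shown in this section, so this matrix is an assumption.
RGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])


def rgb_to_xyz(rgb):
    """Map an (H, W, 3) linear-RGB image with values in [0, 1] to XYZ."""
    return rgb @ RGB_TO_XYZ.T


def gaussian_kernels(sigma):
    """Return a 1-D Gaussian and its first derivative, sampled out to 3 sigma."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    g /= g.sum()
    dg = -x / sigma**2 * g
    return g, dg


def filter_axis(img, kernel, axis):
    """Convolve a 2-D image with a 1-D kernel along one axis (edge-padded)."""
    radius = len(kernel) // 2
    pad = [(0, 0), (0, 0)]
    pad[axis] = (radius, radius)
    padded = np.pad(img, pad, mode="edge")
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="valid"), axis, padded
    )


def gradient_magnitude(channel, sigma):
    """Gaussian-derivative gradient magnitude of one channel at one scale."""
    g, dg = gaussian_kernels(sigma)
    gx = filter_axis(filter_axis(channel, dg, axis=1), g, axis=0)
    gy = filter_axis(filter_axis(channel, dg, axis=0), g, axis=1)
    return np.hypot(gx, gy)


def edge_strength_map(rgb, sigmas=(1.0, 2.0, 4.0)):
    """Fuse per-scale edge strength maps by pixel-wise averaging."""
    xyz = rgb_to_xyz(rgb)
    per_scale = []
    for sigma in sigmas:
        # Channel combination by maximum response is an assumption.
        mags = [gradient_magnitude(xyz[..., c], sigma) for c in range(3)]
        esm = np.max(mags, axis=0)
        peak = esm.max()
        per_scale.append(esm / peak if peak > 0 else esm)
    return np.mean(per_scale, axis=0)


# Usage: a synthetic image with one vertical step edge.
image = np.zeros((32, 64, 3))
image[:, 32:, :] = 1.0
esm = edge_strength_map(image)
```

Normalizing each scale before averaging keeps the large-scale maps, whose raw derivative responses are weaker, from being dominated by the small-scale ones. Whether the paper weights the scales this way is not stated in this section.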

2. Related Work

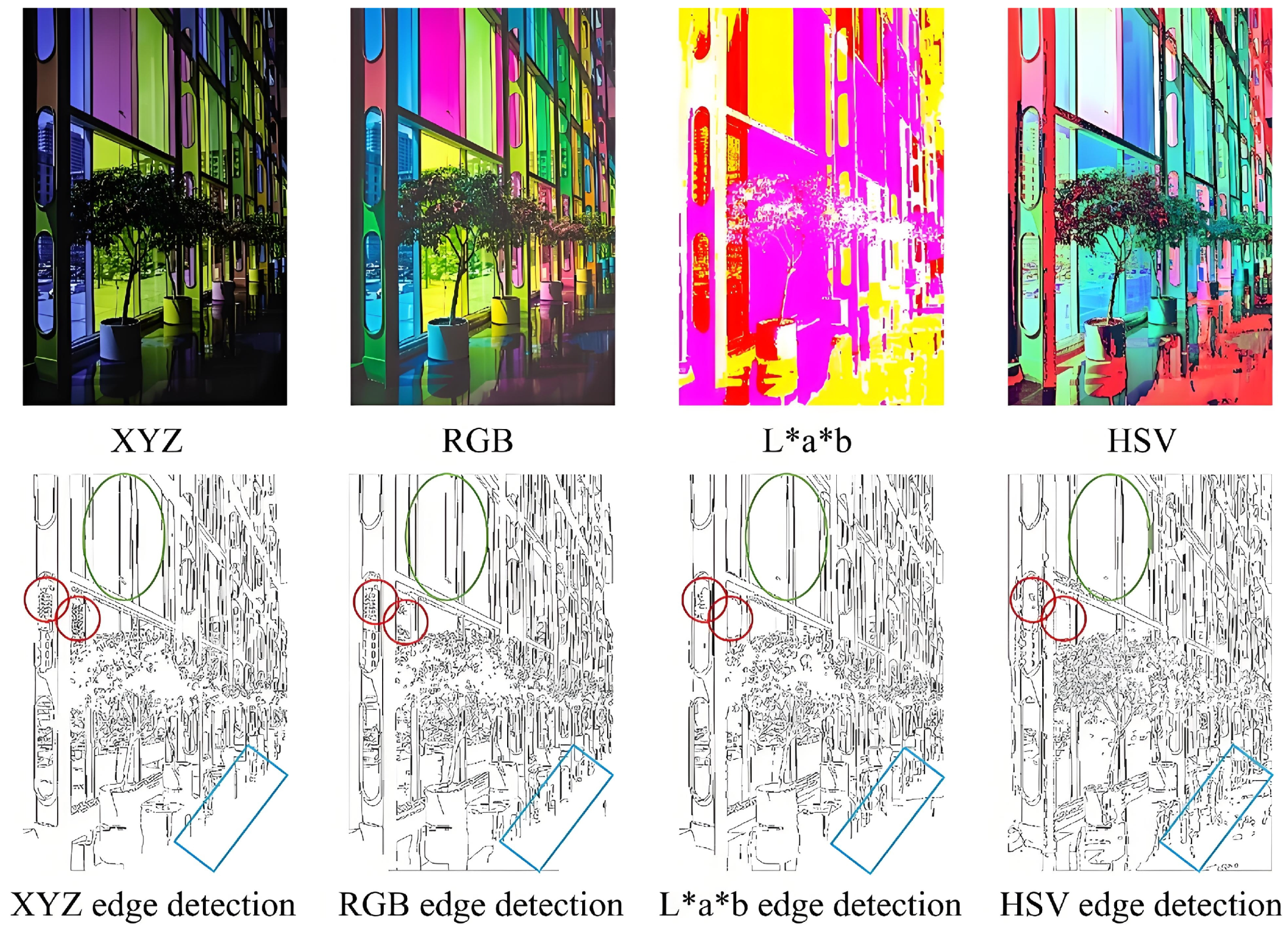

2.1. CIE XYZ Color Space

2.2. The Color Block-Matching and 3D Filter (CBM3D)

3. A New Color Image Edge Detection Method

3.1. Multiscale Gradient Fusion Approach

3.2. Color Image Edge Detection Process

4. Experimental Results and Performance Analysis

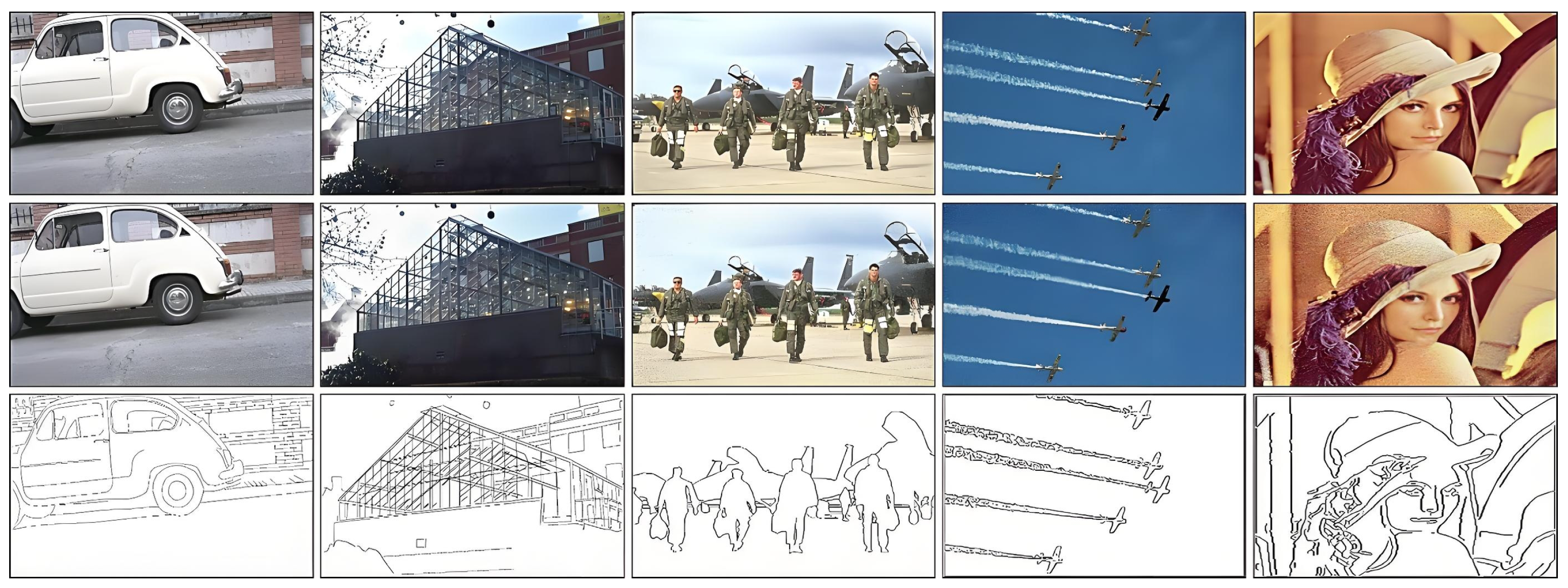

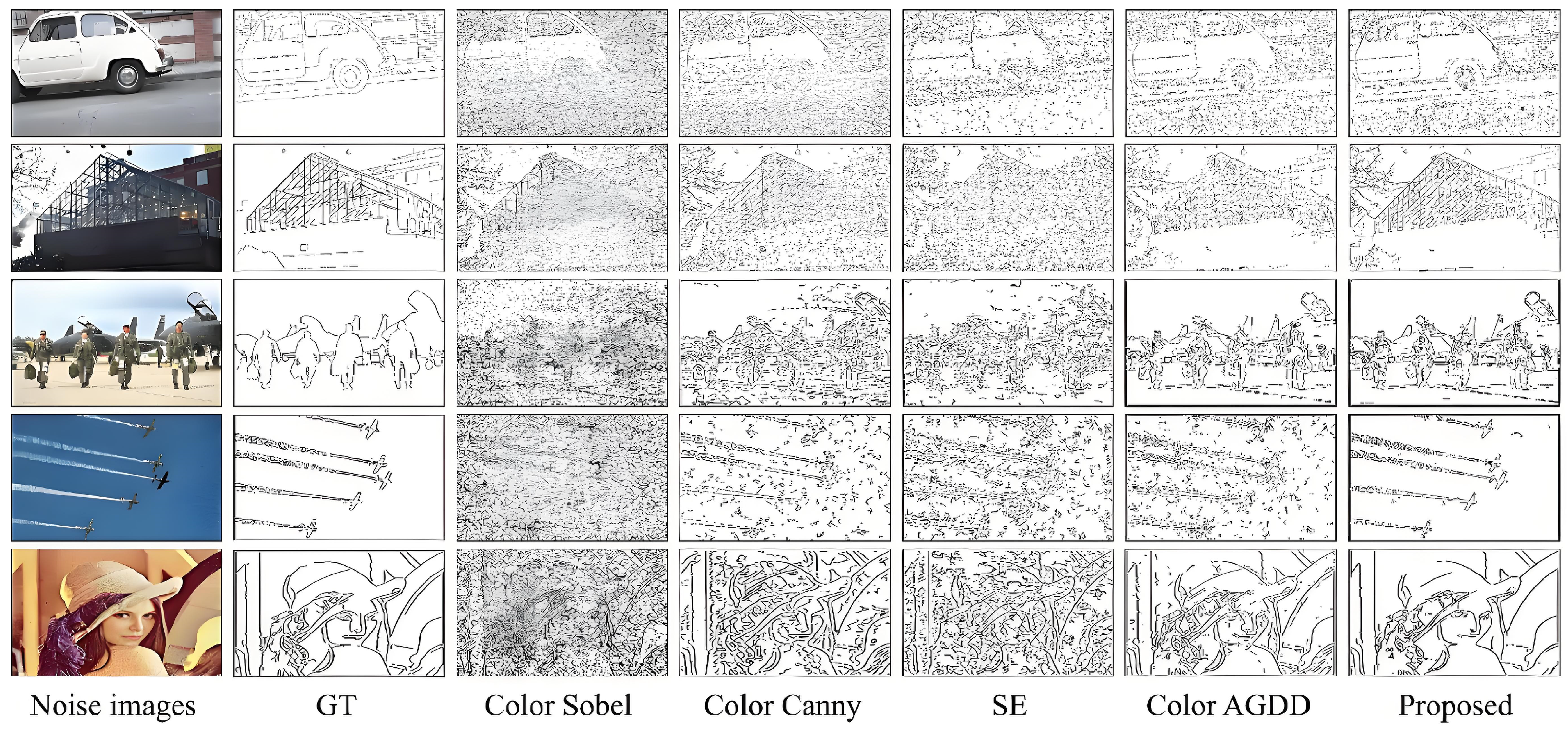

4.1. Experimental Results

4.2. Performance Analysis

4.3. Analysis of the Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Method (PSNR/MSE) | Car | House | Airman | Aircraft | Lena |
|---|---|---|---|---|---|
| Color Sobel | 58.3943/0.0949 | 57.9004/0.1063 | 57.8936/0.1064 | 59.4183/0.0749 | 56.6504/0.1417 |
| Color Canny | 57.7694/0.1095 | 57.3236/0.1214 | 58.4272/0.0941 | 59.7929/0.0687 | 56.5378/0.1454 |
| SE | 58.0964/0.1016 | 55.8104/0.1720 | 57.7789/0.1093 | 58.4296/0.0941 | 55.4221/0.1880 |
| Color AGDD | 59.3272/0.0765 | 57.6517/0.1125 | 58.5668/0.0912 | 59.7459/0.0696 | 57.5576/0.1150 |
| Proposed | 59.7834/0.0689 | 58.0976/0.1016 | 59.0649/0.0813 | 60.5842/0.0573 | 57.6156/0.1135 |

| Method (PSNR/MSE) | Car | House | Airman | Aircraft | Lena |
|---|---|---|---|---|---|
| Color Sobel | 54.4199/0.2369 | 54.5217/0.2314 | 54.7027/0.2219 | 54.0975/0.2551 | 53.5959/0.2470 |
| Color Canny | 56.4167/0.1496 | 56.6361/0.1422 | 57.5770/0.1145 | 58.2821/0.0973 | 55.7158/0.1445 |
| SE | 58.7171/0.1584 | 56.1659/0.1584 | 57.8254/0.1081 | 56.5962/0.1435 | 55.0543/0.1443 |
| Color AGDD | 57.8259/0.1081 | 57.6514/0.1014 | 58.9112/0.0842 | 58.3481/0.0959 | 57.2148/0.1435 |
| Proposed | 59.1469/0.0798 | 58.0410/0.1029 | 59.1629/0.0795 | 60.2004/0.0626 | 57.4664/0.1174 |
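For readers who want to compute PSNR/MSE pairs like those tabulated above, the standard definitions can be sketched as follows. The peak value, the choice of reference image, and any preprocessing used in the paper are not given in this section, so `peak=255.0` and the function names are assumptions, and this sketch is not expected to reproduce the tabulated numbers exactly.

```python
import numpy as np


def mse(reference, estimate):
    """Mean squared error between two arrays of equal shape."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    return float(np.mean((reference - estimate) ** 2))


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB; `peak` is an assumed dynamic range."""
    err = mse(reference, estimate)
    if err == 0:
        return float("inf")  # identical inputs
    return float(10.0 * np.log10(peak**2 / err))


# Usage: every pixel differs by exactly one grey level.
reference = np.zeros((4, 4))
estimate = np.ones((4, 4))
error = mse(reference, estimate)
quality = psnr(reference, estimate)
```

Because PSNR is a monotone decreasing function of MSE for a fixed peak, the two numbers in each table cell carry the same ranking information; higher PSNR and lower MSE both indicate a closer match to the reference.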

| Method | Car | House | Airman | Aircraft | Lena |
|---|---|---|---|---|---|
| Color Sobel | 0.9369/845722 | 0.9774/862113 | 0.9259/139695 | 0.9835/106130 | 0.9803/509976 |
| Color Canny | 0.9264/836760 | 0.9927/845996 | 0.9407/141995 | 0.9851/127551 | 0.9805/588674 |
| SE | 0.9321/841622 | 0.9027/796362 | 0.9324/139614 | 0.9847/158406 | 0.9447/566613 |
| Color AGDD | 0.9600/866915 | 0.9703/855833 | 0.9424/142501 | 0.9867/159053 | 0.9803/593848 |
| Proposed | 0.9660/872163 | 0.9832/867293 | 0.9541/143970 | 0.9873/160691 | 0.9827/607784 |

| Method | Car | House | Airman | Aircraft | Lena |
|---|---|---|---|---|---|
| Color Sobel | 0.7885/712008 | 0.8314/733244 | 0.8048/124160 | 0.9002/142312 | 0.8089/457610 |
| Color Canny | 0.8851/799586 | 0.9353/824910 | 0.9193/138744 | 0.9487/175579 | 0.9479/567214 |
| SE | 0.9472/855264 | 0.9137/809600 | 0.9259/139772 | 0.9484/150229 | 0.9524/522938 |
| Color AGDD | 0.9343/843733 | 0.9254/816180 | 0.9519/143666 | 0.9509/153138 | 0.9185/592720 |
| Proposed | 0.9546/861797 | 0.9814/865615 | 0.9558/144224 | 0.9871/179133 | 0.9861/599494 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Feng, Z.; Shi, R.; Jiang, Y.; Han, Y.; Ma, Z.; Ren, Y. A Multiscale Gradient Fusion Method for Color Image Edge Detection Using CBM3D Filtering. Sensors 2025, 25, 2031. https://doi.org/10.3390/s25072031