1. Introduction

In aerospace engineering and robotics, the estimation of attitude and position is essential for precise autonomous operations [1,2,3,4,5]. Attitude estimation determines the vehicle’s orientation in three-dimensional space, while position estimation determines the vehicle’s translational coordinates. Modern methods integrate optimal filtering techniques by fusing measurements from local or global sensors with knowledge of the vehicle’s motion dynamics. Traditional methods often involve sensor fusion techniques combining visual, inertial, and GPS data. Visual odometry and simultaneous localization and mapping (SLAM) have been widely adopted for pose estimation using cameras and other imaging sensors, while inertial measurement units (IMUs) are often used to provide complementary motion information [6]. Techniques such as the Kalman filter, Extended Kalman Filter (EKF), and Unscented Kalman Filter (UKF) have been extensively applied. For instance, Zhang et al. [7] demonstrated the effectiveness of the EKF in fusing data from IMUs and global navigation satellite systems (GNSSs) to improve the robustness of UAV positioning in urban environments. Similarly, Liu et al. [8] explored the use of particle filters for attitude estimation in multi-sensor setups, highlighting their resilience to sensor noise and dynamic changes. One significant challenge in these methods is dealing with sensor noise and environmental conditions [9] that affect sensor performance, such as poor lighting or featureless scenes. Several researchers have focused on enhancing these techniques through the integration of LiDAR and deep learning-based approaches [10] to improve robustness and real-time performance, particularly in GPS-denied environments. Another challenge is handling the coupled dynamics when position and attitude are estimated simultaneously [11]. Therefore, this research develops a generalized formulation that combines the estimation of position and attitude based on the well-known q-method framework [12].

The q-method for quaternion-based attitude estimation was developed by Paul Davenport [12,13] as a compact solution for optimal estimation of the rotation of a body relative to an Inertial frame. Quaternions are widely used because of their efficiency and computational advantages [14,15] over three-parameter attitude representations, such as Euler angles [16,17]. They avoid singularities, such as gimbal lock, which can occur when using Euler angles during large rotations [18]. Quaternions are also computationally lighter and more stable in dynamic conditions, which makes them popular in real-time applications [19,20]. The integration of quaternion-based algorithms with the Kalman filter has been widely applied for real-time attitude estimation in aerospace applications [21,22]. Further progress has led to more advanced algorithms, such as Unscented Kalman Filters (UKFs) and particle filters, which demonstrate enhanced performance in handling non-linear systems [23]. Additionally, recent advancements have incorporated deep learning approaches to enhance the pose estimation process. For example, methods that fuse quaternions with convolutional neural networks (CNNs) have demonstrated high levels of accuracy in both orientation and position estimation [24]. These developments address uncoupled attitude estimation, without integrating the translational dynamics. Solutions that integrate the coupled dynamics are essential for many motion-based proximity applications.

This paper generalizes Davenport’s q-method to solve for the relative pose (the coupled state of attitude and translation) of UAVs using onboard measurements. It first provides an overview of the mathematical preliminaries and Davenport’s q-method for attitude estimation, including background on the Wahba problem [25], laying a foundation for the subsequent developments. The development of the quaternion-based approach is explained, highlighting its advantages over traditional methods in terms of simplicity and reduced computational overhead. Following this, the q-method pose estimation model is formulated as a novel, more generalized approach that optimally estimates a body’s position and orientation. This approach requires two sets of measurements for reference points, enabling the calculation of the optimal relative pose from an Inertial frame. This framework eliminates various assumptions inherent to the original technique for spacecraft application and provides a precise method for aircraft and spacecraft pose estimation when given known inertial reference data and body-generated measurements. The derivation and methodology are documented in full. Subsequently, a simulation is constructed to corroborate the practical application of the newly devised pose estimation method in a controlled environment. Numerical methods are employed to solve the newly formed six degrees of freedom (6DoF) estimation equations to minimize the system error. This analysis provides empirical evidence of the technique’s effectiveness in real-world settings.

By unifying the models for attitude and position estimation, the generalized q-method offers a comprehensive solution for the relative pose estimation problem, bridging the gap between quaternion-based rotation estimation and full pose determination. Through rigorous derivation of the governing equations and models, we provide a cohesive framework that extends the reach of the q-method, setting the stage for its practical application in aerospace systems and beyond. The main contribution is summarized as the development of a generalized q-method for pose estimation. This method is based on formulating the coupled dynamics of 6DoF motion in a singularity-free manner. The generalized q-method was tested using relative pose environments in two different attitude configurations with realistically acquired data and an experimental setup in the SPACE lab. The performance of the method was validated against ground truth data obtained from a Vicon system, which provides sub-millimeter accuracy.

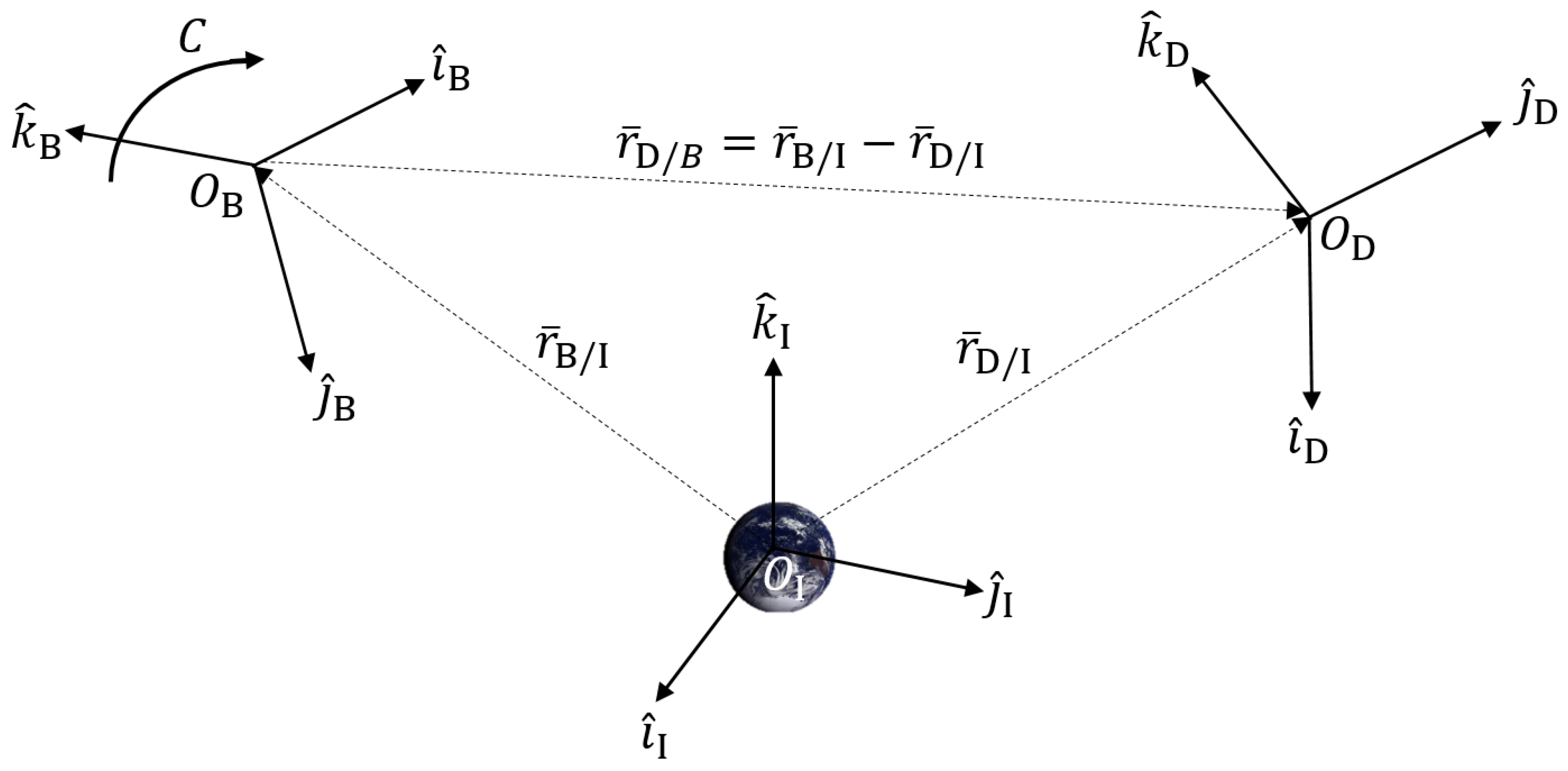

3. q-Method Pose Estimation Derivation

This section will now shift focus onto the newly developed q-method for pose estimation. Prior use of the q-method focused on spacecraft applications for attitude estimation, whereas this more generalized approach eliminates both the unit vector direction and

≈

assumptions for the ’known’ reference values of

. This indeed complicates the problem and makes it more difficult to estimate, but also incorporates inertial position

into the estimation; the final model shows promising compatibility for 6DoF drone pose estimation.

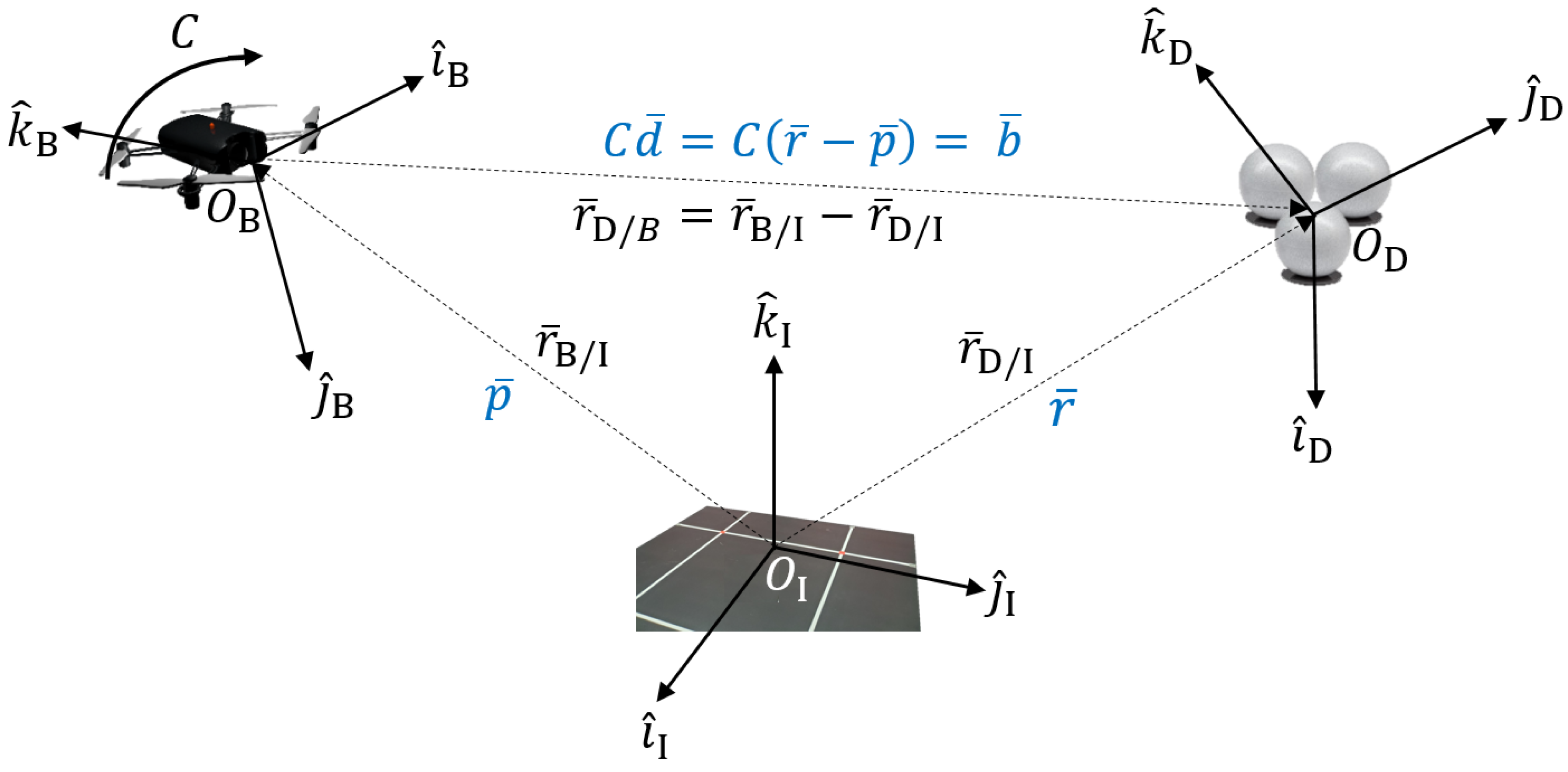

Figure 2 illustrates how the q-method pose estimation relates back to the global system definitions in Figure 1, differing from the original q-method. The objective is to estimate the attitude, C, as well as the body position, in order to estimate the pose of the air vehicle. A list of variables is provided:

—Body position from Inertial Reference (Earth), to be estimated, .

—Known reference position from Inertial frame (Earth), .

—Measured position vectors in the Body frame, with error, .

—Unknown. Essentially requires prior knowledge. If we had this, position estimation would be easy. Let it be known as . We will use this to estimate given that by following the vector addition for the system definition.

We are able to take advantage of a simple substitution for

by virtue of system definition commonality. The q-method takes

. For the q-method pose derivation, let

. The relation for the drone body measurements with error continues as

. Substituting this equivalence into Equation (

1), a slight modification is made to the original Wahba problem. This straightforward substitution unifies the estimation derivations, allowing for a similar system of equations. Throughout this derivation, all aforementioned variables will be kept identical, including the weighting factor

. Other research has also extended the Wahba problem to incorporate position [33,34], but not for the quaternion-based purposes used here.

Further expanding the minimization cost function

, and then grouping terms, gives

Note that (

17) possesses two terms that Equation (

3) combines into one, due to unit vector measurements. Equation (

3) also converts the problem into a maximization problem for simplicity, but the above form continues with the form

and

, opting to keep the third term negative. Also, note the dimensions of

q (4 × 1) and

(3 × 1); the following equations make use of the aforementioned ’quaternionized’ vector format in Equation (

8) when required.
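As a sketch of the structure of this expansion, assume a weighted least-squares cost over $N$ reference points in which $\mathbf{v}_i$ is the known inertial reference position, $\mathbf{r}$ is the body position to be estimated, $\tilde{\mathbf{b}}_i$ is the body-frame measurement, $w_i$ is the weighting factor, and $C$ is the attitude matrix. These symbols are illustrative assumptions and may not match the notation of the numbered equations.

```latex
\begin{align}
J(C,\mathbf{r})
  &= \frac{1}{2}\sum_{i=1}^{N} w_i
     \left\| \tilde{\mathbf{b}}_i - C\,(\mathbf{v}_i - \mathbf{r}) \right\|^2 \\
  &= \frac{1}{2}\sum_{i=1}^{N} w_i\, \tilde{\mathbf{b}}_i^{T}\tilde{\mathbf{b}}_i
   + \frac{1}{2}\sum_{i=1}^{N} w_i\, (\mathbf{v}_i - \mathbf{r})^{T}(\mathbf{v}_i - \mathbf{r})
   - \sum_{i=1}^{N} w_i\, \tilde{\mathbf{b}}_i^{T} C\,(\mathbf{v}_i - \mathbf{r}),
\end{align}
% The second line uses C^T C = I.
% Third term in trace form:
\begin{equation}
\sum_{i=1}^{N} w_i\, \tilde{\mathbf{b}}_i^{T} C\,(\mathbf{v}_i - \mathbf{r})
  = \operatorname{tr}\!\left( C\, B(\mathbf{r})^{T} \right),
\qquad
B(\mathbf{r}) = \sum_{i=1}^{N} w_i\, \tilde{\mathbf{b}}_i\, (\mathbf{v}_i - \mathbf{r})^{T}.
\end{equation}
```

Under this assumed form, unit-vector measurements would make the first two sums each equal to $\tfrac{1}{2}\sum_i w_i$, which is why they collapse into a single constant in the attitude-only problem, whereas here the second sum depends on $\mathbf{r}$ and must be kept.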

The third term also still resembles that in (

3), and subsequently will be equivalent to

. Like

B in Equation (

5),

is defined as

Similarly, the cost function is put into terms of

using Equation (

10). Only the third term is a function of

q (or

C), and substituting

for

allows for the use of (

11), as derived by Davenport. The cost function then becomes

where the third term

is expressed as

With the cost function

now in terms of the variables

of interest, the quaternion norm constraint

is imposed as an equality constraint

. The Lagrangian function [

35] is applied to obtain the optimal solution that minimizes the objective function

(minimizing the error), therefore obtaining the optimal solution for

q and

. The Lagrangian function is defined as

.

The necessary condition is implemented to minimize the system such that

and

. The partial differential equations used to solve the system are shown below.

Equations (

22) and (

23) can now be used to solve for the optimal position estimation

and optimal quaternion to describe the rotation

.
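To make the necessary conditions concrete, the following sketch assumes the cost can be written as $J(\mathbf{q},\mathbf{r}) = c(\mathbf{r}) - \mathbf{q}^{T}K(\mathbf{r})\,\mathbf{q}$, where $K(\mathbf{r})$ is Davenport's symmetric $4\times4$ matrix built from $B(\mathbf{r}) = \sum_i w_i \tilde{\mathbf{b}}_i(\mathbf{v}_i-\mathbf{r})^{T}$ and $c(\mathbf{r})$ collects the terms that do not depend on $\mathbf{q}$. The symbols, and the way the multiplier $\lambda$ enters, are assumptions for illustration rather than a transcription of the numbered equations.

```latex
\begin{align}
\mathcal{L}(\mathbf{q},\mathbf{r},\lambda)
  &= J(\mathbf{q},\mathbf{r}) + \lambda\left(\mathbf{q}^{T}\mathbf{q} - 1\right), \\
\frac{\partial \mathcal{L}}{\partial \mathbf{q}}
  &= -2\,K(\mathbf{r})\,\mathbf{q} + 2\lambda\,\mathbf{q} = \mathbf{0}
  \;\;\Longrightarrow\;\; K(\mathbf{r})\,\mathbf{q} = \lambda\,\mathbf{q}, \\
\frac{\partial \mathcal{L}}{\partial \mathbf{r}}
  &= \sum_{i=1}^{N} w_i \left[ C(\mathbf{q})^{T}\tilde{\mathbf{b}}_i - (\mathbf{v}_i - \mathbf{r}) \right] = \mathbf{0}
  \;\;\Longrightarrow\;\;
  \mathbf{r} = \frac{\sum_{i} w_i \left( \mathbf{v}_i - C(\mathbf{q})^{T}\tilde{\mathbf{b}}_i \right)}{\sum_{i} w_i}.
\end{align}
```

At a stationary point $J = c(\mathbf{r}) - \lambda$, so minimizing the cost corresponds to selecting the largest eigenvalue of $K(\mathbf{r})$. The position condition is written here through $C(\mathbf{q})$ rather than through the derivative of $K$ with respect to $\mathbf{r}$, and it takes the form of a weighted average.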

fortunately gives a solution comparable to the q-method, making use of

shown in (

20) to find

as the eigenvector for the corresponding maximum real eigenvalue. E.g., (

22) will substitute

so that

. The end set of equations for q-method pose estimation are hereby formed.

One last simplification is made due to the term

within Equation (

24). The partial differential of

w.r.t. a vector

yields a 4 × 4 × 3 higher-order tensor. The evaluation of this term, as well as validation of q-method pose estimation for simulated spacecraft applications, can be found in a prior publication [36]. Thus, an intuitive equation for

exists by again referencing the system definition and taking a weighted average for

=

. By definition,

, where

is the quaternion conjugate.

To summarize, the goal of q-method pose estimation is to find and . In order to do so, one must first formulate , making use of , which makes use of . If one of the optimal values ( or ) is known, the other can then easily be calculated. However, a solution can be found using any valid numerical solving method. The next sections will investigate the results using a numerical solving scheme in parallel with physical sensors in a controlled lab environment.
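The statement that either optimal value follows easily once the other is known can be illustrated with a minimal Python sketch. It is not the authors' MATLAB implementation: it assumes a scalar-last quaternion, an attitude matrix that maps inertial vectors into the Body frame, and the hypothetical names `b` (body measurements), `v` (inertial reference positions), `w` (weights), and `r` (body position). The beacon coordinates are made up, and the data are noise-free so that the two relations can be checked exactly.

```python
import numpy as np


def attitude_matrix(q):
    """Attitude matrix C(q) for a unit quaternion q = [q1, q2, q3, q4] (scalar last)."""
    rho, q4 = q[:3], q[3]
    rho_cross = np.array([[0.0, -rho[2], rho[1]],
                          [rho[2], 0.0, -rho[0]],
                          [-rho[1], rho[0], 0.0]])
    return (q4**2 - rho @ rho) * np.eye(3) + 2.0 * np.outer(rho, rho) - 2.0 * q4 * rho_cross


def davenport_K(B):
    """Davenport's symmetric 4x4 matrix, so that trace(C B^T) = q^T K q."""
    sigma = np.trace(B)
    z = np.array([B[1, 2] - B[2, 1], B[2, 0] - B[0, 2], B[0, 1] - B[1, 0]])
    K = np.empty((4, 4))
    K[:3, :3] = B + B.T - sigma * np.eye(3)
    K[:3, 3] = z
    K[3, :3] = z
    K[3, 3] = sigma
    return K


def quaternion_given_position(b, v, w, r):
    """Optimal quaternion when the body position r is known (assumed cost form)."""
    B = (w[:, None] * b).T @ (v - r)          # sum_i w_i b_i (v_i - r)^T
    eigenvalues, eigenvectors = np.linalg.eigh(davenport_K(B))
    return eigenvectors[:, -1]                # eigh sorts eigenvalues in ascending order


def position_given_quaternion(b, v, w, q):
    """Weighted-average body position when the quaternion q is known."""
    rotated = b @ attitude_matrix(q)          # row i holds C^T b_i
    return (w[:, None] * (v - rotated)).sum(axis=0) / w.sum()


# Noise-free synthetic check with five made-up beacons (illustrative values only).
q_true = np.array([0.1, -0.2, 0.3, 0.9])
q_true /= np.linalg.norm(q_true)
r_true = np.array([1.0, -0.5, 1.2])
v = np.array([[3.0, 0.0, 1.0], [3.2, 0.8, 1.5], [2.9, -0.7, 0.6],
              [3.5, 0.3, 2.0], [3.1, -0.2, 0.2]])
w = np.ones(len(v))
b = (v - r_true) @ attitude_matrix(q_true).T  # row i holds C (v_i - r)

q_est = quaternion_given_position(b, v, w, r_true)
r_est = position_given_quaternion(b, v, w, q_true)
print("quaternion agreement |q_est . q_true|:", abs(q_est @ q_true))
print("position error norm:", np.linalg.norm(r_est - r_true))
```

The eigenvector is only defined up to sign, so agreement is checked through the absolute value of the dot product. When neither quantity is known, the two relations are coupled and must be solved together, which is where a numerical solver comes in.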

4. Experimental Setup and Methodology

4.1. Experimental Overview and Setup

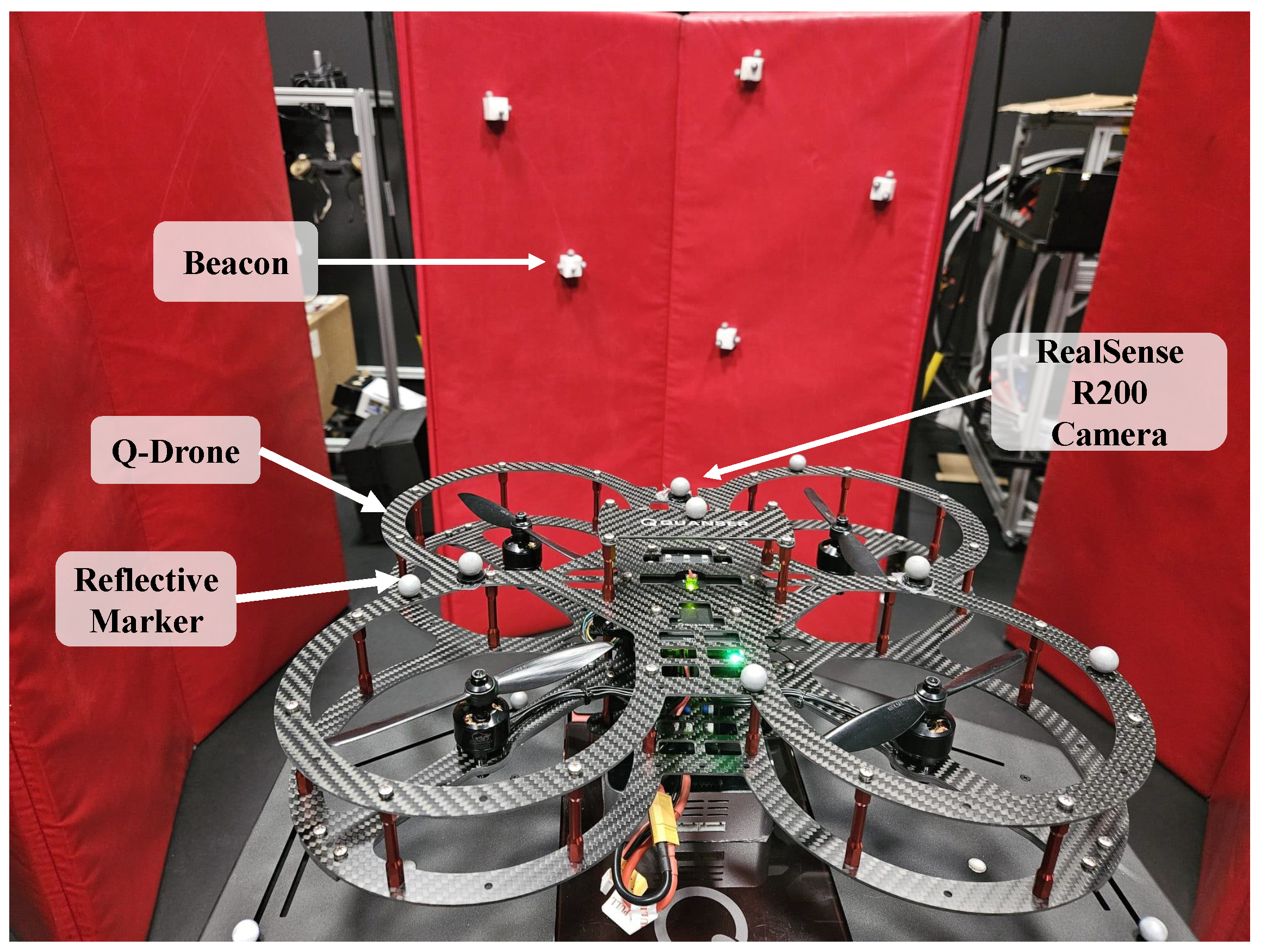

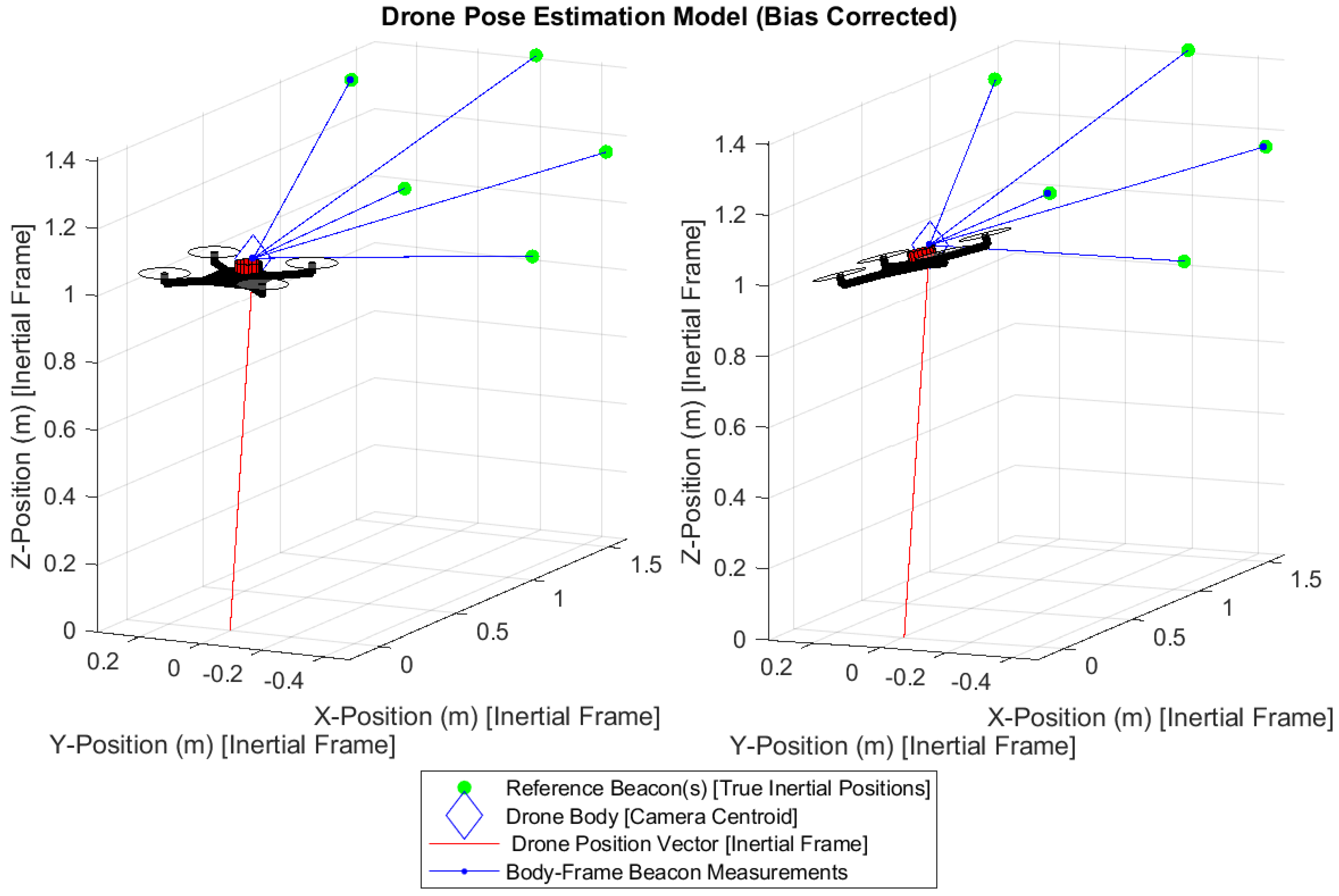

To further verify the functionality of the system of equations, the q-method pose estimation is incorporated with onboard measurements obtained from drone-camera experiments.

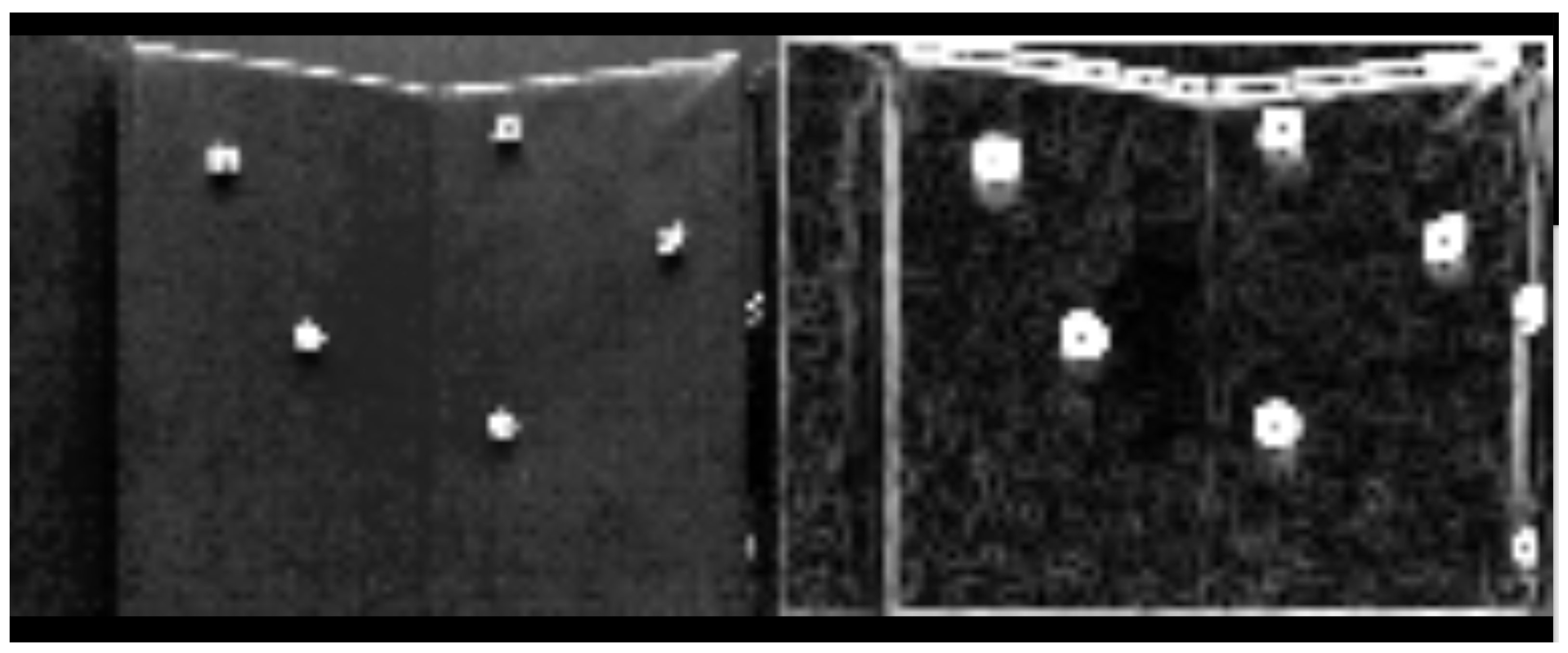

Figure 3 illustrates how a Quanser drone used an Intel RealSense (R200) camera to measure the locations of five objects/beacons in 3D space. The beacons are placed at varying locations and depths upon a curved surface. The camera’s depth-sensing feature was used to determine the location of each beacon in space relative to the camera/drone frame. Camera detection of each beacon is shown in Figure 4 and Figure 5. These detections provide positional estimates in the camera frame, which are then converted into the body-centered drone frame.

To validate the estimates, the results were compared against true reference data. Vicon’s motion capture system defined the locations of the five beacons as well as the drone. The Vicon system consists of eight high-resolution cameras that capture moving objects at a rate of 120 Hz. Each beacon and the drone were defined as rigid bodies (objects) in three dimensions using reflective markers.

Figure 4 shows two of the eight Vicon cameras mounted across the lab and test setup, forming the Inertial frame. This provides a full overview of the hardware used for the experimental setup across the Body and Inertial frames.

To test the method for orientation and position estimation in a physical setting, the drone operated on a static mount while detecting the beacons to obtain reference measurements. The first experiment used a stabilized, nominally oriented (no-tilt) drone to measure beacons, as shown in Figure 5 with the drone camera. A second experiment was performed where the drone was statically tilted at a roll angle of −15 degrees in the Body frame, as shown in Figure 6, also with the onboard camera.

Two corrections to the experimental setup were required while post-processing the data. First, the mounting position of the camera on the drone needed to be taken into account, as it was 15 cm ahead of the Vicon-defined drone centroid. This was corrected by adding a +15 cm bias to the Vicon positional truth data during the pose estimation analysis and validation. Second, the camera measurements contained a constant bias, separate from the camera offset, that needed to be considered. This was addressed by performing a static calibration in the same position as the experiment for both drone orientations; the mean bias of the camera for each individual reference marker was then subtracted. Further details are provided in the following section after the inputs.

4.2. Initial Conditions and Inputs

The two experiments were performed and the resulting output data were fed into MATLAB 2018a for post-processing, algorithm implementation, and analysis.

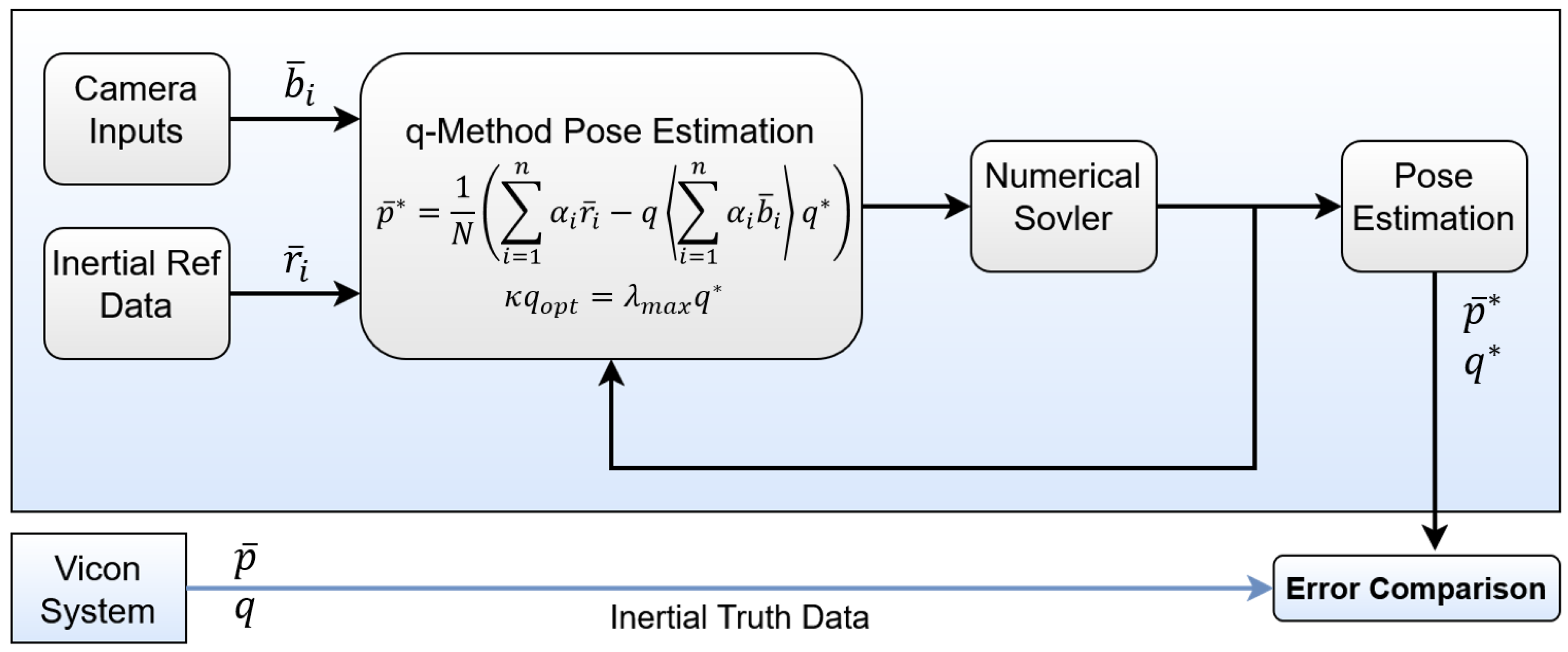

Figure 7 illustrates, in pseudo-code form, the validation process for both configurations.

Figure 8 provides a visual representation of the true data within MATLAB using the Vicon system. A correction factor of +15 cm is added to account for the position of the camera on the drone with respect to the Body frame. This offset can be seen in the figure, as the camera centroid is offset from the drone body itself. Additionally, several hundred measurements were taken to characterize the average measurement bias of the camera sensor and correct the input reference measurements. The estimation algorithm and results come from a single instantaneous state, one each for the 0° roll and −15° roll configurations.

The pose estimation using the previously outlined system of equations was accomplished with the built-in MATLAB numerical equation solver fsolve for this multi-variable system. The function uses a Trust-Region Dogleg method to find the solution that minimizes the residual error of the system. The following is the exact input used to calculate the residual within the solver, based on Equations (25) and (26).

The second row of is multiplied by in order to better condition the system. Equating both sides of the equation to 0, this multiplication scalar does not make a difference to the solution. is included for the purpose of solving the system numerically. Thus, where to minimize the system.

An initial guess is required to iteratively solve the function via numerical methods. As such, a nearby initial guess is arbitrarily selected for the quaternion , position , and of the system. For this experiment, let m, , and . This formulation for is obtained through empirical observations of what generally works well while using this estimation model in conjunction with a numerical equation solver.

Table 2 provides the value of each parameter to initialize the system. The results generated hereafter make use of these provided inputs. Given N known reference vectors and N body measurements with measurement error, the objective is to estimate the position and quaternion to be as close as possible to their true values.

Measurement errors and correction factors across each roll condition are all provided in Table 2 for transparency. Starting with the reference measurement error, a scalar percent error value is given for each on-board measurement of each reference vector in the Body frame.

is computed using the experimental and true positional values of the reference beacons with respect to the camera given as

. Mean measurement error

is the average error across all N measurements used in this procedure. Although it carries no directional information, this measurement error is a simplified way to partially quantify the error in the system when comparing the accompanying algorithm results.

In addition, the average sensor error is the average error across time (700 data points) of the camera sensor for each reference beacon. A static collection of data identical to is performed to verify that the snapshot state obtained in is not drastically different from the average of the camera’s ordinary noisy measurements. The same static collection of data was also used to detect the sensor’s average experimental bias to correct the raw measurement vectors . Thus, the bias correction is made such that . Information for methods and error are disclosed, but procedures for absolute correction and minimization of sensor error are intentionally not incorporated here due to the desire to understand estimation performance with some degree of measurement error.
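The static bias calibration described above can be sketched in a few lines of Python. The function names, the sample values, and in particular the percent-error definition (norm of the difference divided by the norm of the true vector) are assumptions for illustration, not the paper's exact expressions; the experiment used about 700 static samples per beacon, whereas the sketch uses four.

```python
import numpy as np


def mean_sensor_bias(static_samples, truth):
    """Mean offset of repeated static measurements of one beacon from its true position."""
    return np.asarray(static_samples, dtype=float).mean(axis=0) - np.asarray(truth, dtype=float)


def correct_measurement(raw, bias):
    """Subtract the calibrated mean bias from a raw body-frame measurement."""
    return np.asarray(raw, dtype=float) - bias


def percent_error(measured, truth):
    """Scalar percent error (assumed definition): |measured - truth| / |truth| * 100."""
    measured = np.asarray(measured, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return 100.0 * np.linalg.norm(measured - truth) / np.linalg.norm(truth)


# Made-up numbers: one beacon, a constant bias, and small zero-mean jitter.
truth = np.array([2.0, 0.0, 0.5])
bias_true = np.array([0.02, -0.01, 0.03])
jitter = 0.001 * np.array([[1, -1, 1], [-1, 1, -1], [1, 1, -1], [-1, -1, 1]])
static_samples = truth + bias_true + jitter

bias = mean_sensor_bias(static_samples, truth)
snapshot = static_samples[0]                   # a single instantaneous measurement
corrected = correct_measurement(snapshot, bias)
print("estimated bias:", bias)
print("percent error before/after:", percent_error(snapshot, truth), percent_error(corrected, truth))
```

Subtracting the mean bias removes only the constant offset; the sample-to-sample noise in the snapshot remains, consistent with the intent of evaluating the estimator with some measurement error left in.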

5. Results

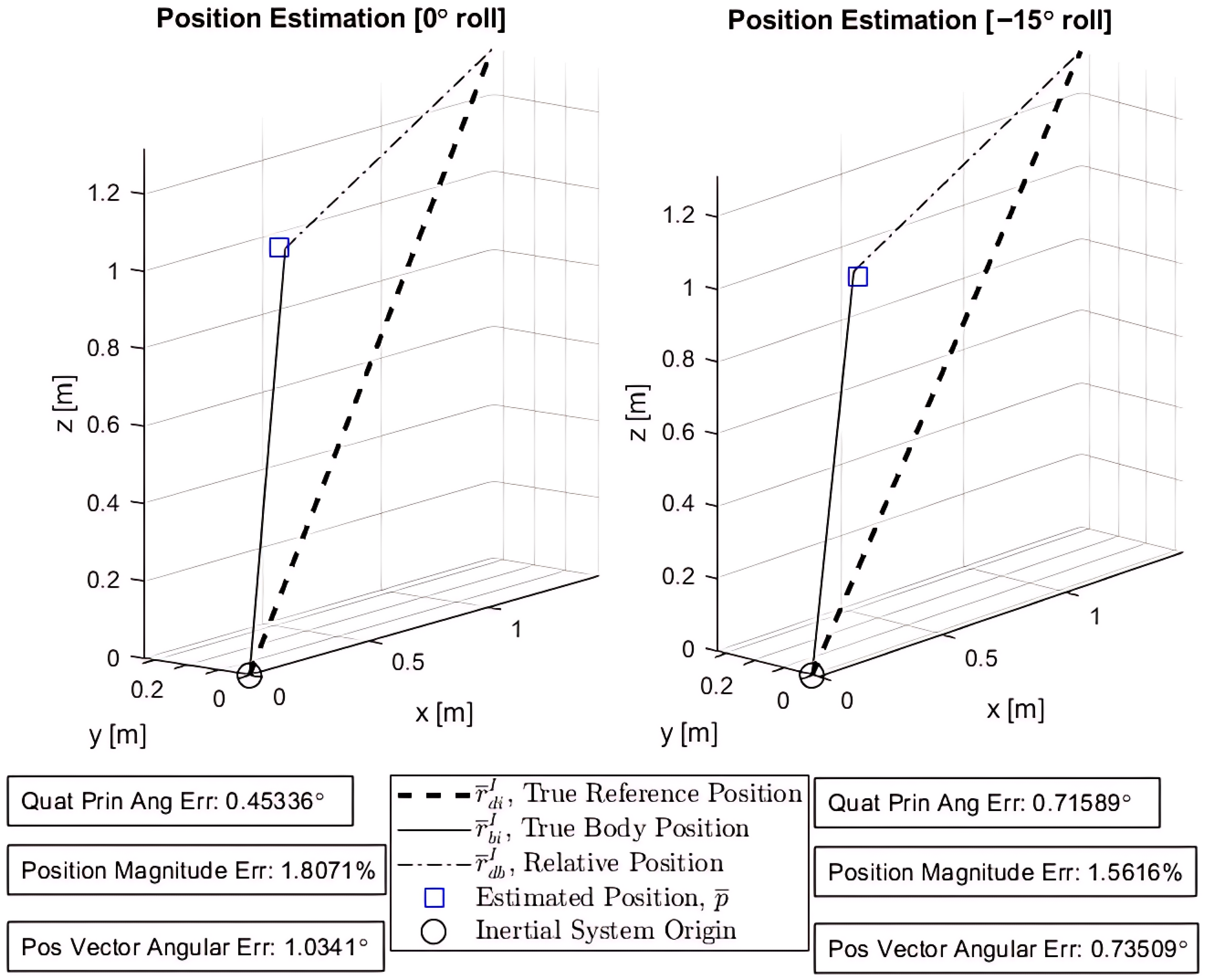

The generalized q-method pose estimation formulation successfully estimated both the position and orientation of the drone for both attitude configurations. Both estimates are the optimal pose values that minimize the error of the system given the drone camera measurements with sensor noise.

Figure 9 is designed to mirror the system definition (Figure 2). It overlays the estimated position onto the true body position vector. Here, all inertial and truth data obtained from the Vicon system are used to validate the results of the pose estimation. The inertial system origin is the center floor of the laboratory in which the experiment is performed. The true reference position vectors, also obtained by Vicon, are essential prior knowledge of the Inertial frame or system, or of wherever the drone intends to operate.

The position error (

and

) is calculated as

, and the position angular error is defined as

to represent the angular error between the true vector and estimated position. The quaternion attitude error is not as intuitive to represent, but is found by the principal angle

within the quaternion product

[

15] where

is the conjugate of the estimated quaternion. The equation for

is given by

from the prior quaternion definition. Observe that the quaternion error (principal angle error,

) for both test cases is

°and

° respectively, between the true and estimated quaternion. The small positional error and small quaternion error demonstrate the reliability and accuracy of the q-method for pose estimation despite the relatively few reference measurements.
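The error metrics discussed above can be sketched as follows. The expressions are assumed forms consistent with the description: the position error as a Euclidean norm, the position angular error as the angle between the true and estimated position vectors, and the attitude error as the principal angle recovered from the scalar part of the error quaternion. The scalar-last convention, the function names, and the use of an absolute value to treat q and −q as the same rotation are all assumptions.

```python
import numpy as np


def position_error(r_true, r_est):
    """Euclidean norm of the difference between estimated and true positions."""
    return float(np.linalg.norm(np.asarray(r_est, dtype=float) - np.asarray(r_true, dtype=float)))


def position_angular_error_deg(r_true, r_est):
    """Angle in degrees between the true and estimated position vectors."""
    r_true = np.asarray(r_true, dtype=float)
    r_est = np.asarray(r_est, dtype=float)
    cosine = r_true @ r_est / (np.linalg.norm(r_true) * np.linalg.norm(r_est))
    return float(np.degrees(np.arccos(np.clip(cosine, -1.0, 1.0))))


def principal_angle_error_deg(q_true, q_est):
    """Principal angle in degrees of the error quaternion between two unit quaternions."""
    # For unit quaternions, the scalar part of q_true (x) conj(q_est) equals their dot product.
    scalar = abs(float(np.dot(q_true, q_est)))  # abs() treats q and -q as the same rotation
    return float(np.degrees(2.0 * np.arccos(min(1.0, scalar))))


# Illustrative check: a 10 degree rotation about the x axis relative to identity.
half_angle = np.radians(5.0)
q_identity = np.array([0.0, 0.0, 0.0, 1.0])
q_rotated = np.array([np.sin(half_angle), 0.0, 0.0, np.cos(half_angle)])
print("principal angle error [deg]:", principal_angle_error_deg(q_identity, q_rotated))
print("position error:", position_error([1.0, 0.0, 0.0], [1.0, 1.0, 0.0]))
print("position angular error [deg]:", position_angular_error_deg([1.0, 0.0, 0.0], [1.0, 1.0, 0.0]))
```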

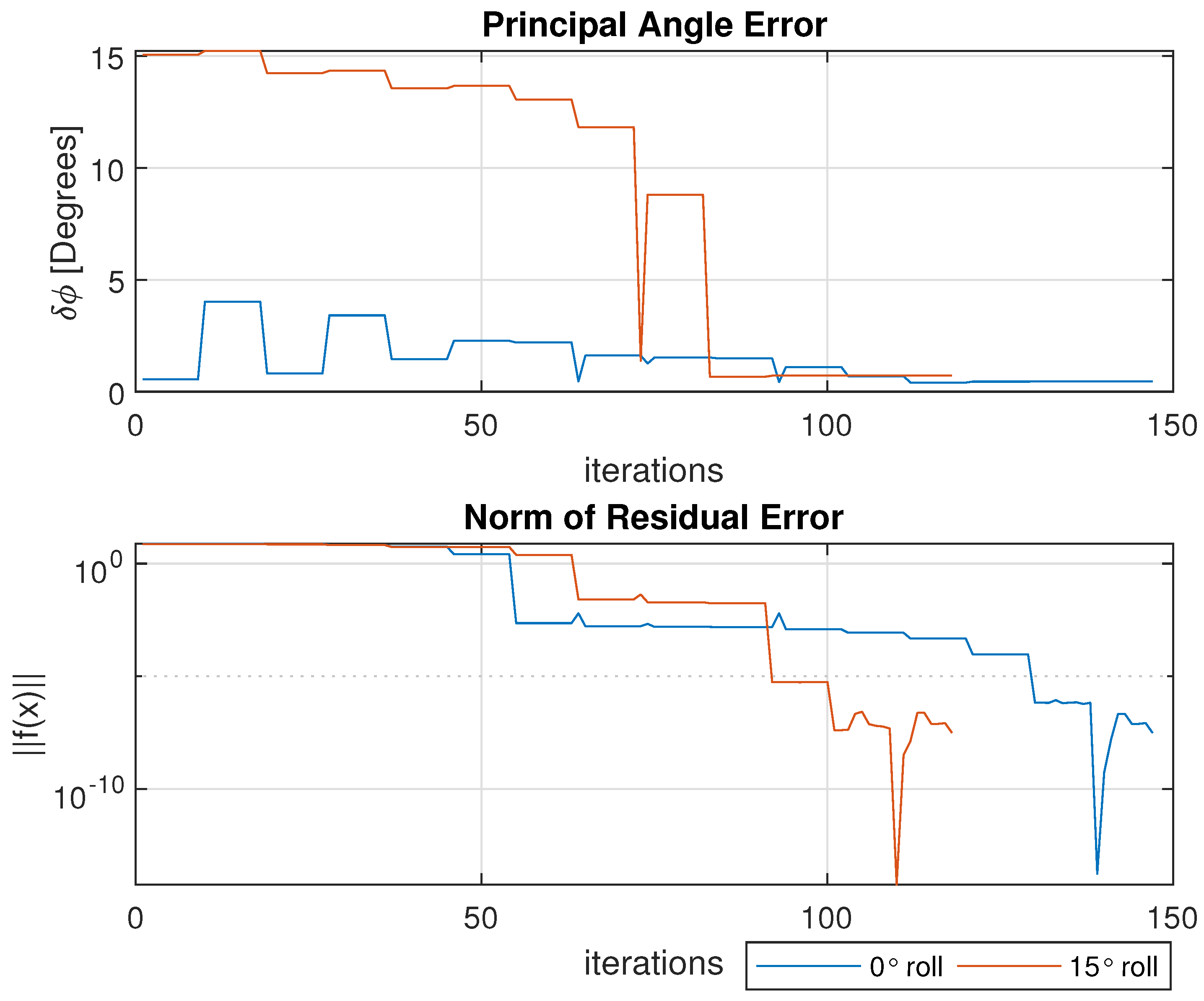

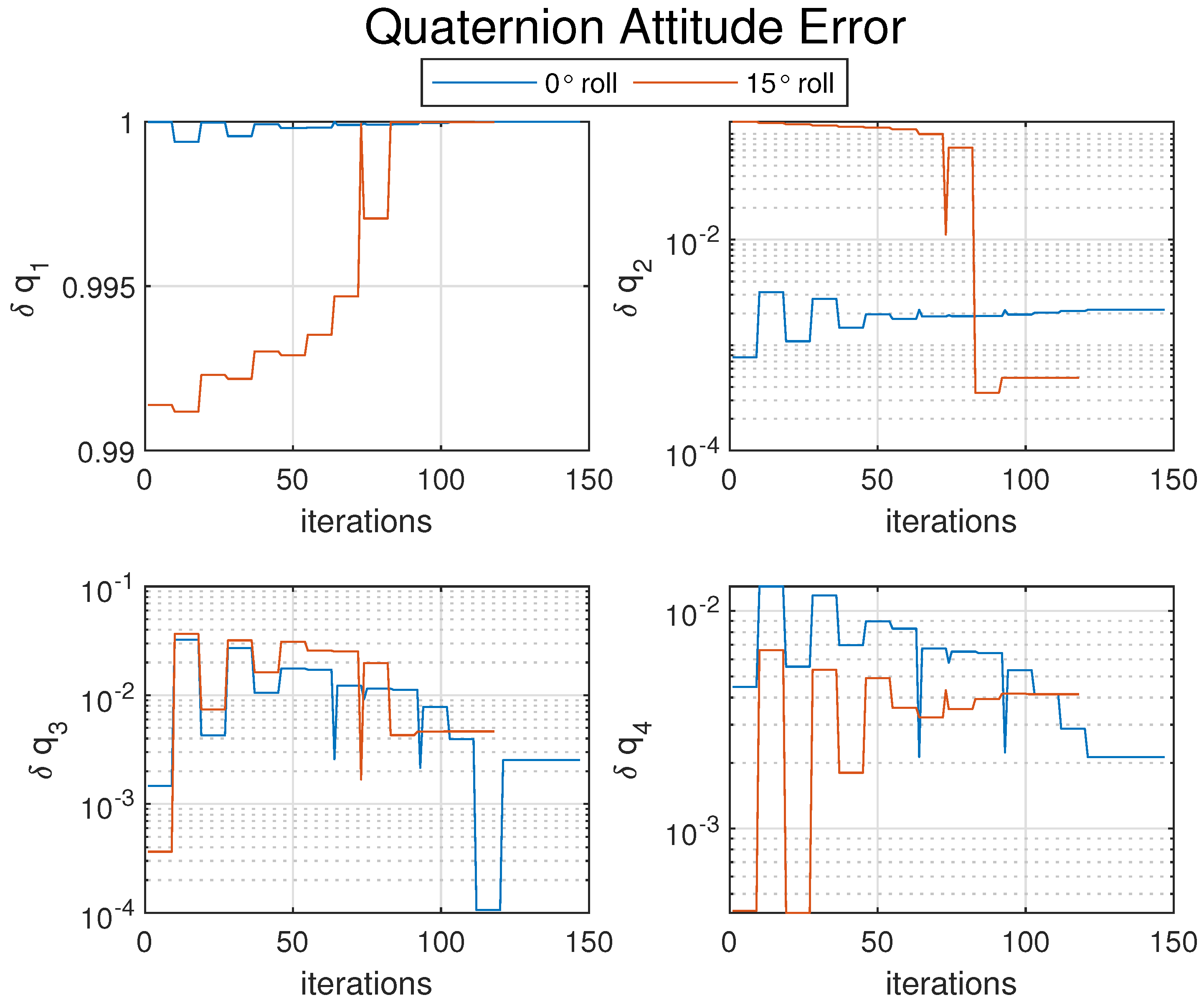

The remaining figures detail the results of interest across each iteration of the numerical solver until convergence is reached. Iterative results for both the 0° roll and −15° roll cases are overlaid within Figure 10, Figure 11 and Figure 12 for ease of presentation.

Figure 10 displays the principal angle error for an understanding of the aggregate attitude error. The bottom plot of the same figure shows the norm of the residual error as the function fsolve converges to a stopping condition. Figure 11 itemizes each element of the attitude error between the true quaternion and the estimated quaternion. Lastly, Figure 12 shows the error for each element between the estimated and true positions. The deltas are not expected to be exactly zero; the expectation is not to find the exact true value, but rather to find the optimal estimate that minimizes the error of the system (introduced by the noisy measurements). Performance could be improved further by adding more reference points; for an estimation model like this, additional measurements translate directly into a better estimate.

All figures and results presented show the advantage of the pose estimation q-method in its ability to solve for the state of the system with minimal error. In each instance, the numerical equation solver converged and obtained a sufficient solution for the UAV. When compared to the inertial Vicon truth data, the estimated position and orientation agree closely despite the error embedded in the camera measurements of the reference vectors.

Method Comparison and Evaluation

The generalization of the q-method focuses on adopting a solution to estimate both orientation and position simultaneously. The main aim of this paper is not to assess estimation performance or related metrics. Since the proposed algorithm solves the algebraic equation using an eigenvalue approach, it is not entirely fair to compare it with other methods that may involve additional computational steps beyond the scope of this approach. Nevertheless, tests were conducted to compare this method with the q-method (for orientation only) and the modified Optimal Linear Attitude Estimator, OLAE (for orientation and position) [37], showing nearly identical performance. The same sensor data and initial conditions were used for the comparison.

OLAE is a single-point real-time estimator that utilizes the Rodrigues (or Gibbs) vector, a minimal-element attitude parameterization. The optimality criterion, which differs from Wahba’s constrained criterion, is strictly quadratic and unconstrained. This method estimates both position and orientation based on vector observations obtained through vision-based camera technology. The generalized q-method also estimates the translation vector with the same accuracy and rate.

Table 3 summarizes the results.

The results show that both the generalized q-method and OLAE perform similarly in terms of position and orientation errors. At 0° roll angle, OLAE has a slightly smaller position error but a slightly higher orientation error. At 15° roll angle, the position errors for both methods are nearly identical, with OLAE having a marginally larger error. Both methods show an increase in orientation error at 15°, with the generalized q-method maintaining a slight advantage in orientation accuracy. Overall, the differences are small, with both methods offering comparable performance depending on the roll angle.

6. Conclusions

The q-method for pose estimation was designed to take body-frame positional reference measurements and compare them to known positions relative to an Inertial frame. In doing so, both the orientation and position of the body may be estimated so as to minimize the error introduced into the system via the measurements. This paper first provided a summary of Davenport’s q-method for attitude estimation and then built on the existing model, while also acknowledging the assumptions and differences that went into the original model. The pose estimation model presented here keeps a consistent system relation between the Inertial, Body, and reference frames in a way that mirrors the q-method. It is via this common system definition that the pose estimation equations were derived, following the original q-method derivation. With this newly developed model, the pose estimation results provided an accurate solution with relatively simple equations. The computational cost of the numerical methods could be a limitation of this method, but further work could be performed to develop closed-form solutions for this system of equations. Nevertheless, with ample processing power and computing speed, any conventional numerical method used in conjunction with the q-method for pose estimation can provide reliable results.

While results within this paper are promising, the experiment performed was for a single steady-state estimation. Additional estimation approaches like these offer an extra layer of redundancy and dependability for any autonomous aircraft or spacecraft. Further integration into a dynamic system would be required with parallel use of filters, GPS, dynamic models, and/or accelerometer and gyroscopic sensors. Any hardware or communication failures for these flight-essential features would require backup methods for state estimation; the generalized q-method pose estimation provides a backup measure preventing loss of the vehicle, provided prior environmental reference knowledge is utilized.

The estimation error itself is determined by the optimality of the Wahba problem, which is formulated based on the measurement noise. This paper does not aim to reduce the estimation error further, but rather focuses on generalizing the classical q-method to provide pose estimation solutions for coupled vehicle dynamics. Certain validation steps of this pose estimation method were omitted due to redundancy, but additional validation was performed to verify equivalency between the generalized q-method and the classical q-method. Noise reduction and elimination can be further investigated according to the estimation tolerance defined by the problem itself. Prior work has also been performed as part of the validation of the q-method pose estimation by investigating error sensitivity to initial conditions and numerical convergence through a Monte Carlo analysis [36].

Unlike Davenport’s q-method, which will often make the far-away star assumption and use a star catalogue with respect to Earth, this pose estimation method generalizes the model to be more mathematically intuitive for general applications. The pose estimation model is not restricted to satellite attitude estimation, as demonstrated in this paper using a drone/UAV. This itself is a benefit. In addition, if the position is already known, these equations will function identically to the q-method. In essence, the integration of pose estimation methodologies with the q-method represents a significant advancement, promising more robust and adaptable solutions for spatial perception and analysis across various domains.