Abstract

Natural sand-dust weather is complex, and synthetic sand-dust datasets cannot accurately reflect the properties of real sand-dust images. Existing enhancement-based, prior-based, and data-driven methods for sand-dust image enhancement and restoration may not perform well in some scenes. Therefore, it is important to develop a robust sand-dust image restoration method to improve the information processing ability of computer vision systems. In this paper, we propose a new zero-shot learning method based on the atmospheric scattering physical model to restore sand-dust images. The method has two advantages. First, because it is unsupervised, the model can be trained without any prior knowledge or image pairs. Second, it obtains the transmission map and atmospheric light by learning and inference from a single real sand-dust image. Extensive experiments are performed and evaluated both qualitatively and quantitatively. The results show that the proposed method outperforms state-of-the-art algorithms for sand-dust image enhancement and restoration.

1. Introduction

Images captured under adverse conditions, such as snow [1], rain [2], haze [3], underwater environments [4], and sandstorms, often exhibit poor visual quality. Light absorption by turbid media reduces image contrast and obscures details. In sand-dust weather in particular, the wavelength-dependent absorption and scattering of light by sand-dust particles can introduce color casts into the captured images [5,6]. The poor visibility of images captured in sand-dust conditions can significantly degrade the performance of various vision tasks, including object recognition, intelligent transportation, surveillance, and oil pipeline inspection. Robust image enhancement and restoration techniques are therefore essential for improving visual perception.

Generally, the degradation of sand-dust images is mainly caused by light absorption and scattering, as well as by the imaging equipment [7,8,9,10]. Specifically, it can be attributed to the following factors: (1) Visible light is scattered by the turbid medium in the environment, so the ambient light comprises both atmospheric light and scattered light. (2) The imaging process relies on the radiance of light from the object; however, absorption of visible light by the atmospheric medium reduces the reflected intensity, and the attenuation of light depends on the distance between the target and the imaging equipment. (3) Image quality is also degraded by the resource limitations of the imaging equipment, such as storage and compression. From the perspective of the imaging process, degraded sand-dust images therefore typically exhibit color casts, low contrast, and blurring.

Many sand-dust image enhancement and restoration algorithms have been proposed, and they can be broadly divided into three categories: (1) image enhancement methods, (2) image restoration methods, and (3) deep learning-based approaches. Image enhancement methods include color space transformation and enhancement [11,12,13,14], improved histogram equalization techniques [8,9,10,13,15], white balance and filtering approaches [15,16], singular value decomposition methods [7,17], and tensor least squares optimization methods [18]. Methods based on improved retinex models [19,20] and on various image fusion techniques [21,22,23,24] have also been proposed. Although these enhancement methods have markedly improved contrast and tone, they rely on various hypotheses and priors, and their performance varies with the degree of degradation in sand-dust images. Moreover, they cannot adequately remove the color casts and sand-dust from the degraded images.

To enhance image details more effectively, numerous image restoration methods based on atmospheric scattering models have been proposed. These methods employ various strategies to optimize the transmission and atmospheric light, such as imposing information loss constraints [25], utilizing optimization algorithms (e.g., genetic algorithms) [26,27], and accounting for ambient light differences [28] and light compensation [29]. In addition, to solve the ill-posed atmospheric scattering model, several improved methods based on the dark channel prior have been developed to enhance the restoration quality of sand-dust images, including blue channel inversion [6], adaptive techniques [30,31], gamma correction [32], Laplacian distribution models [31], regression analysis [5], and the rank-one prior [33]. These methods can restore sand-dust images accurately, but they rely on prior knowledge to calculate the transmission and atmospheric light in the model, which limits their robustness and generalizability.

With the development of deep learning, learning-based methods have also been applied to sand-dust image processing [34,35,36,37,38,39,40]. However, real sand-dust images are highly complex, and synthetic sand-dust images fail to capture their characteristics accurately. Consequently, methods trained on synthetic sand-dust data often perform poorly on real sand-dust images.

To address the aforementioned issues, this paper proposes a zero-shot realistic sand-dust image restoration method. We view the degraded image as a combination of a clear image, transmission map, and atmospheric light. Based on this concept, we design a specific network model to estimate the parameters in the atmospheric scattering physical model. Subsequently, we restore clear images using the inverse model of the atmospheric scattering model. The proposed method is markedly different from existing deep learning-based methods and zero-shot learning methods, primarily in the following aspects: First, unlike data-driven deep learning methods, the proposed method employs an unsupervised and zero-shot learning approach, requiring only a degraded image as input to produce a corresponding clear image. Second, compared with existing zero-shot learning methods, the proposed method relies entirely on the atmospheric scattering model for network design and does not require any prior knowledge or controlled parameters for model training.

The main contributions of this study are as follows:

- To the best of our knowledge, this paper is the first to propose a zero-shot learning method for sand-dust image restoration. Despite being a zero-shot learning method, the proposed method achieves superior performance in restoring real sand-dust images.

- We propose a new joint-learning network whose design is based entirely on the imaging principles of the atmospheric scattering physical model. A modified U-Net is used to obtain the clear image and the transmission map. Considering the relationship among the input image, the clear image, and the transmission map in the atmospheric scattering model, a convolutional network with fully connected layers is designed to estimate the atmospheric light.

2. Related Work

2.1. Sand-Dust Image Enhancement and Restoration Methods

In recent years, many researchers have conducted extensive research on image enhancement and restoration and have made significant progress. While many have focused on recovering images captured under specific weather conditions such as rain, snow, and fog, the enhancement and restoration of sand-dust images has also become an active research topic. Several traditional image enhancement methods have been employed to improve the quality of sand-dust images [7,8,9,10,11,12,13,14,15,16,18,41,42]. For example, Shi [9] proposed an adaptive histogram equalization method based on the normalized gamma transform. This method only enhances visual quality without removing dust haze from sand-dust images, and it fails in extreme cases. Cheng [16] proposed a method involving blue channel compensation and guided filtering to enhance sand-dust images. Since the blue channel of sand-dust images decays the fastest, blue channel compensation is applied first. White balance is then used to adjust the color cast, and finally, guided filtering is employed to enhance contrast and detail. However, this method relies on the quality of the guidance image and on the compensation coefficient. Park [10] proposed a successive color balance method based on coincident histograms. First, color correction is performed using mean and variance statistics. Then, the image is normalized using green channel mean preservation. Finally, the maximum histogram overlap method is employed to remove color casts. However, this algorithm depends on the number of channel histogram offset steps. Xu [18] found that a key intrinsic characteristic of sandstorm-free outdoor scenes is the rough similarity of the contours of the RGB channels. Based on this finding, Xu proposed a tensor least squares optimization method to enhance sand-dust images. This method improves contrast and reduces chromatic aberration to some extent, but it also amplifies image noise during enhancement.

To enhance image details, some researchers introduced fusion techniques [21,22,23,24]. For example, Fu [21] proposed an approach that first employs an adaptive statistical strategy to correct color, then applies two gamma coefficients to enhance the image, and finally fuses the results with a weighted fusion strategy. However, this method relies on manually set parameters. Although the aforementioned methods have achieved satisfactory results in enhancing sand-dust images, their restoration performance is contingent upon the settings of certain hyperparameters.

To restore image details while enhancing the image, methods based on the atmospheric scattering physical model and on retinex theory have been employed to restore and enhance sand-dust images [5,6,17,25,26,27,28,29,30,31,32,33,43,44,45]. These physics-based methods have achieved marked progress. In particular, dark channel prior (DCP) methods, which are based on the atmospheric scattering model, have achieved significant results in image dehazing and have been adapted and improved for processing sand-dust images. For example, Peng [5] conducted regression analysis on the input image to obtain scene depth information and calculated the ambient light difference to estimate the transmission map; adaptive gamma correction was then employed to eliminate color casts. However, this method relies on scene depth estimation and fails when the sand-dust image contains lights at different depths. Shi [43] proposed a sand-dust image enhancement method based on the halo-reduced dark channel prior, which includes color correction based on the gray world theory, sand-dust removal, and gamma correction. Gao [6] proposed a reversed blue channel prior for restoring sand-dust images. Because this method relies on adjusting the blue channel, it may introduce a new blue color cast into the restored image. Liu [33] introduced a rank-one matrix prior to estimate the scattering map, but this method cannot completely remove sand-dust from the image. In addition, retinex-based methods have also been applied to enhance sand-dust images [19,20]. For example, Li [19] proposed an improved retinex method that focuses on a noise map and introduces an L1 regularization term to optimize illumination and reflectance. Kenk [20] used white balance and gamma correction to enhance contrast and a Laplacian pyramid filter to enhance the details of the reflectance component.
Although model-based methods have achieved significant progress, their effectiveness depends on prior assumptions and on the distribution of the real data. If the prior knowledge does not apply to certain scenes, the desired results may not be achieved.

Currently, many deep learning-based methods have achieved significant results in dehazing, rain removal, and snow removal. However, due to the lack of large-scale datasets of paired real degraded images, most learning-based methods rely on synthetic degraded images to train network models. Because paired real sand-dust image data are difficult to obtain, Si [34] proposed a sand-dust image synthesis algorithm and attempted to recover sand-dust images using a deep learning approach. To obtain better results, Si et al. [35] proposed a fusion algorithm combining color correction with deep learning. Shi [36] proposed a convolutional neural network incorporating color recovery to enhance sand-dust images. Gao [38] proposed a two-in-one low-visibility enhancement network that can enhance both hazy and sand-dust images. However, these methods struggle to achieve satisfactory results on real sand-dust images. As reported by Golts [46], synthetic data cannot describe the characteristics of real degraded images, which leads to a domain shift. To reduce the impact of synthetic data on the model, Ding [37] proposed a single-image sand-dust restoration algorithm based on style transfer and unsupervised adversarial learning. Gao [39] proposed a two-step unsupervised approach for enhancing sand-dust images: first, an adaptive color cast correction factor is applied; then, an unsupervised generative adversarial network (GAN) further enhances the images. However, this method may introduce color distortion and halos in some sand-dust scenes. Meng [40] proposed a retinex-based unpaired GAN method for image dedusting, but it fails in high-concentration and complex scenes. Despite their effectiveness in dedusting, deep learning-based methods still rely on artificial priors and synthetic data, which can limit the quality of image restoration.
To address the lack of paired real sand-dust samples and reduce the dependence on prior knowledge, inspired by atmospheric scattering models and zero-shot learning, we propose a zero-shot sand-dust image enhancement method.

2.2. U-Net Architecture

Ronneberger [47] proposed the U-Net architecture for biomedical image segmentation. It uses an encoder–decoder framework with skip connections that fuse shallow detail with deep semantic information, achieving remarkable performance even with limited training data. Several improved U-Net frameworks have since been proposed for various scenarios. Zhou [48] proposed UNet++, a medical image segmentation architecture that introduces dense convolutional blocks between the encoder and decoder to gradually fuse multi-level features, effectively addressing the loss of detail in complex backgrounds. Chen [49] combined the global context modeling capability of the Transformer with the local detail capturing ability of U-Net and proposed TransUNet for medical image segmentation, achieving excellent results in segmenting small organs and complex boundaries. Cao [50] constructed a U-shaped network from Swin Transformer blocks, named Swin-Unet, which significantly improved medical image segmentation accuracy and edge prediction. Liao [51] proposed a distortion rectification generative adversarial network whose generator is based on U-Net. This method directly learns an end-to-end mapping from distorted to undistorted images and performs well on both synthetic and real distorted images. Li [52] proposed a deep learning framework based on residual blocks that handles multiple types of distortion with a single model; it includes single-model and multi-model distortion estimation networks and combines the displacement field prediction of a convolutional neural network with model-fitting optimization to achieve general correction for multiple distortion types.
Liao [53] introduced MOWA, a U-shaped model that decouples motion estimation at the region and pixel levels and performs dynamic task-aware prompt learning, allowing it to address multiple image warping tasks within a single model. However, its control point prediction may fail in scenarios with complex boundaries. Our network adopts a U-Net architecture designed on the basis of the atmospheric scattering model and achieves more accurate results on sand-dust images.

3. Proposed Zero-Shot Method

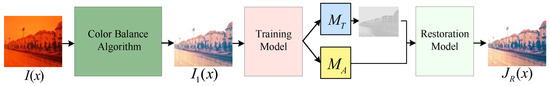

In this section, we introduce the proposed image restoration framework based on the atmospheric scattering model (see Figure 1). The framework consists of three parts: an adaptive color cast balance method for sand-dust images, a zero-shot neural network that jointly learns the sharp image, the transmission map, and the atmospheric light, and the image restoration process. The following sections describe each part in detail.

Figure 1.

Proposed image restoration framework based on the atmospheric scattering model.

Above, represents the input image, indicates the result after color balance algorithm processing, and denote the atmospheric light and transmission map estimation networks, respectively, and stands for the restored image.

3.1. Image Color Balance

Many methods have been used to correct the color casts of sand-dust images, such as the gray world hypothesis [6,32,43], adaptive statistical strategies [21], white balance [15,16], color channel compensation [14,23], and histogram equalization [8,10,13]. Because sand-dust images captured under different weather conditions have different color casts, it is particularly difficult to design an effective method that adaptively corrects all of them. In this paper, we propose a simple and effective adaptive color cast adjustment algorithm based on the wavelength-dependent attenuation of light. First, Equation (1) is used for color fine-tuning; this step is particularly important for images captured in strong or extremely strong sandstorm environments. Then, the color casts are removed by Equation (3).

where represents the image after color cast removal, represents the input color image pixel value, c is the color channel, denotes the green channel pixel values of a color image, and is the weight of the reserved green channel pixel value, which is expressed as Equation (2):

where is the mean value of the corresponding channels of the color image, c is the color channel, g is the green channel, and is the mean value of the three channels of the RGB color image.

To better handle diverse color deviations, and based on the statistical observation that in sand-dust images the blue channel decays the fastest and the red channel the slowest, this paper proposes a compensation scheme that preserves the maximum green mean value and compensates using the red channel, the inverted blue channel, and the inverted green channel, as expressed in Equation (3):

where represents the image after color cast correction, and “max” and “min” represent the operations of finding the maximum value and the minimum value, respectively. is the RGB channel compensation factor, which consists of the following three items. is the maximum green mean preservation compensation coefficient, is the compensation coefficient of the red channel’s mean value and the reversed blue channel’s mean value, and is the compensation coefficient of the inverted green channel’s mean value. Figure 2 shows the color correction results using the color balance algorithm that is proposed in this paper.

Figure 2.

Results of the proposed color balance method. (a) Sand-dust image. (b) Color balance result by proposed method.
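Equations (1) to (3) are not recoverable from this text, so the snippet below does not reproduce them. It is only a simplified, hypothetical illustration of the idea of green-mean preservation: it assumes NumPy, an RGB float image in [0, 1], and a plain additive shift that moves the red and blue channel means onto the green channel mean while leaving green untouched. The function name `match_channel_means_to_green` is ours.

```python
import numpy as np

def match_channel_means_to_green(img):
    """Hypothetical illustration (not Eq. (3)): shift R and B so their means equal the G mean.

    img: float array of shape (H, W, 3), RGB order, values in [0, 1].
    """
    means = img.reshape(-1, 3).mean(axis=0)   # per-channel means (R, G, B)
    offsets = means[1] - means                # 0 for green, so the green channel is preserved
    return np.clip(img + offsets, 0.0, 1.0)   # broadcast the per-channel offsets over all pixels

# Synthetic yellowish cast: red strongest, blue weakest, as is typical of sand-dust images.
rng = np.random.default_rng(0)
cast = np.array([0.60, 0.50, 0.30])
img = np.clip(cast + rng.uniform(-0.05, 0.05, size=(8, 8, 3)), 0.0, 1.0)
balanced = match_channel_means_to_green(img)
print(balanced.reshape(-1, 3).mean(axis=0))  # all three means equal the original green mean
```

A purely additive shift cannot fix the contrast loss of the strongly attenuated blue channel, which is one reason the paper's Equation (3) also uses inverted-channel compensation terms.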

3.2. Proposed Method Framework

The atmospheric scattering model [54] is commonly used to describe the image degradation process and is expressed as

$$I(x) = J(x)\,t(x) + A\bigl(1 - t(x)\bigr), \tag{4}$$

where $x$ is the position of a pixel in the image, $I(x)$ is the captured degraded image, $J(x)$ is the sharp image, $A$ is the atmospheric light, and $t(x)$ is the medium transmission. The transmission can be expressed as $t(x) = e^{-\beta d(x)}$, where $d(x)$ is the scene depth between the camera and the object and $\beta$ is the atmospheric scattering coefficient. By inverting Equation (4), the sharp image can be obtained according to Equation (5):

$$J(x) = \frac{I(x) - A}{t(x)} + A. \tag{5}$$

Equation (4) shows that the degraded image $I(x)$ is formed by the interaction of the sharp image $J(x)$, the transmission map $t(x)$, and the atmospheric light $A$. Therefore, following the principle of atmospheric imaging, a deep network that models the interrelation of $J(x)$, $t(x)$, and $A$ can be designed and trained on a single sand-dust image to obtain the transmission map $t(x)$ and the atmospheric light $A$; a sharp image is then recovered by Equation (5). Although the model includes a network that generates sharp images, producing high-quality clear images directly is challenging because the input images are severely degraded. Therefore, in the proposed method, the clear image is used only to constrain model training. In addition, unlike other degraded images such as hazy images, sand-dust images exhibit varying degrees of color cast due to the absorption and scattering of light by sand-dust particles. To mitigate the impact of color casts on model training, an effective color cast correction algorithm is proposed to preprocess the input sand-dust images.
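The forward model $I = J t + A(1 - t)$ with $t = e^{-\beta d}$ and its inverse $J = (I - A)/t + A$ can be checked numerically. The sketch below assumes NumPy, float images in [0, 1], a per-channel atmospheric light vector, and a randomly generated depth map chosen purely for illustration; the lower bound `t_min` is a common dehazing safeguard that we add as an assumption, not a parameter from this paper.

```python
import numpy as np

def transmission_from_depth(depth, beta):
    """t(x) = exp(-beta * d(x)) for a depth map d and scattering coefficient beta."""
    return np.exp(-beta * depth)

def degrade(J, t, A):
    """Forward model, Eq. (4): I(x) = J(x) t(x) + A (1 - t(x)).

    J: (H, W, 3) sharp image; t: (H, W) transmission; A: (3,) atmospheric light.
    """
    t3 = t[..., None]                      # add a channel axis so t broadcasts over RGB
    return J * t3 + A * (1.0 - t3)

def restore(I, t, A, t_min=0.1):
    """Inverse model, Eq. (5): J(x) = (I(x) - A) / t(x) + A.

    t_min is a hypothetical lower bound (not from the paper) that keeps the
    division stable where the transmission is close to zero.
    """
    t3 = np.maximum(t, t_min)[..., None]
    return (I - A) / t3 + A

rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(4, 5, 3))
depth = rng.uniform(0.0, 2.0, size=(4, 5))
t = transmission_from_depth(depth, beta=0.8)   # t >= exp(-1.6), about 0.2, above t_min
A = np.array([0.8, 0.7, 0.5])                  # yellowish airlight (illustrative values)
I = degrade(J, t, A)
J_hat = restore(I, t, A)
print(np.abs(J - J_hat).max())                 # round-trip error is at floating-point level
```

The exact round trip only holds when $t$ and $A$ are known; in practice both must be estimated, which is what the zero-shot network is designed to do.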

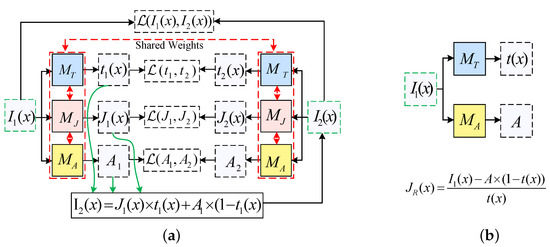

Inspired by the atmospheric scattering model, this paper proposes a zero-shot learning method for sand-dust image restoration. The framework is shown in Figure 3. Figure 3a shows the zero-shot training model, which is designed on the basis of the atmospheric scattering model and jointly estimates the sharp image, the transmission map, and the atmospheric light A from the color-balanced input. More specifically, starting from a color-corrected input sand-dust image, the sharp image, the transmission map, and A are first estimated by the designed feature-sharing network. Then, a reconstructed image is generated by the atmospheric scattering physical model and used as input to train the model; training constrains the pairs of atmospheric light and transmission map estimates, as well as the reconstructed image against the input image, so that A and the transmission map are estimated accurately. The restoration model is shown in Figure 3b, in which a sharp image is restored according to Equation (5).

Figure 3.

Proposed zero-shot learning framework for sand-dust images restoration. (a) Training model. (b) Restoration model.

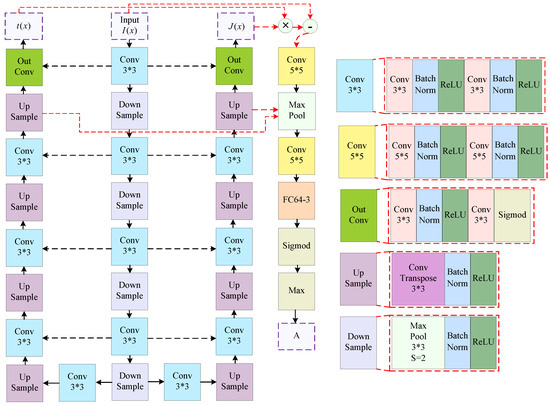

3.3. Zero-Shot Network Architecture

In this section, we introduce the network architecture and its implementation. Figure 3a shows the proposed zero-shot learning framework. Because the model is designed entirely on the basis of the atmospheric scattering physical model, we treat the three estimation networks (for the sharp image, the transmission map, and the atmospheric light) as interrelated. Inspired by U-Net [47], we propose the new architecture shown in Figure 4, in which these three networks share weights. The transmission map estimation network is located on the left side of the overall network, while the sharp image estimation network is another U-shaped network on the right side. The atmospheric light estimation network is designed based on Equation (4): it performs a joint estimation by integrating the outputs of the transmission map and sharp image estimation networks with the input image. In Figure 4, FCM64-3 denotes the conversion of a 64-channel feature map into a 3-channel image through fully connected layers, and * denotes the convolution operation.

Figure 4.

Architecture of our proposed method.
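Why the input image together with the sharp image and transmission map is enough to determine the atmospheric light can be seen by solving Equation (4) for $A$ at every pixel: $A = (I - J t)/(1 - t)$. The sketch below is an analytical counterpart, not the paper's learned convolutional and fully connected estimator. It assumes NumPy, an (H, W, 3) image, an (H, W) transmission map, a per-channel median for robustness, and a hypothetical threshold `t_max` that skips pixels with almost no airlight contribution.

```python
import numpy as np

def atmospheric_light_closed_form(I, J, t, t_max=0.95):
    """Solve Eq. (4) for A at every pixel and take the per-channel median.

    From I = J t + A (1 - t) it follows that A = (I - J t) / (1 - t).
    Pixels with t near 1 carry almost no airlight, so they are skipped
    (t_max is a hypothetical threshold).
    """
    mask = t < t_max
    if not mask.any():
        raise ValueError("every pixel has t >= t_max; A cannot be recovered")
    t_sel = t[mask][:, None]                                   # (N, 1)
    A_per_pixel = (I[mask] - J[mask] * t_sel) / (1.0 - t_sel)  # (N, 3)
    return np.median(A_per_pixel, axis=0)                      # robust per-channel estimate

rng = np.random.default_rng(1)
J = rng.uniform(0.0, 1.0, size=(16, 16, 3))
t = rng.uniform(0.2, 0.9, size=(16, 16))
A_true = np.array([0.8, 0.7, 0.5])
I = J * t[..., None] + A_true * (1.0 - t[..., None])          # Eq. (4)
A_est = atmospheric_light_closed_form(I, J, t)
print(A_est)                                                   # close to A_true
```

With noisy network estimates of $J$ and $t$, the per-pixel solutions scatter, which motivates learning the aggregation (as the paper does) or at least using a robust statistic such as the median.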

3.4. Loss Function

The loss design follows from the widely used atmospheric scattering physical model of image degradation, expressed as Equation (4). Because no real clear images or additional information are available, the sharp image, the transmission map, and the atmospheric light A must be estimated from the feature information of a single image. To this end, we define Equation (6) as the total loss function for jointly training the neural network model:

where is the gradient loss of the reconstructed clear image and the model-generated clear image ; is defined as the atmospheric light loss between and ; is the transmission loss of the sharp image generated by the model; and is the image saturation-penalized loss function.

Specifically, the gradient loss ensures neighborhood consistency in the restored image and thus reduces local variation. It is defined as

where x is the position of pixels in the image, c represents the color channel of the color image, denotes the reconstructed clear image and the model-generated clear image , respectively, and and represent the horizontal and vertical gradient operators, respectively.
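Equation (7) itself is not recoverable from this text. As a hedged sketch only, the snippet below assumes an L1, total-variation-style form: the mean absolute horizontal and vertical forward differences of an image, which is one common way to penalize local variation. It assumes NumPy and (H, W, C) arrays; the function name `gradient_loss` is ours.

```python
import numpy as np

def gradient_loss(img):
    """Mean absolute horizontal plus vertical forward differences of an (H, W, C) image.

    Assumed L1 (total-variation-style) form of the gradient loss; Eq. (7) may differ.
    """
    d_h = np.abs(img[:, 1:, :] - img[:, :-1, :])   # horizontal gradient, along the width
    d_v = np.abs(img[1:, :, :] - img[:-1, :, :])   # vertical gradient, along the height
    return float(d_h.mean() + d_v.mean())

flat = np.full((3, 4, 3), 0.5)
ramp = np.tile(np.arange(4) * 0.1, (3, 1))[..., None]    # (3, 4, 1), +0.1 per column
print(gradient_loss(flat), gradient_loss(ramp))          # 0.0 and approximately 0.1
```

Since the text mentions both the reconstructed clear image and the model-generated clear image, such a term could be evaluated on each image and summed; how the paper actually combines them is not stated here.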

The transmission loss and the atmospheric light loss are two important loss functions, which primarily keep equal to and equal to . The transmission loss and atmospheric light loss are defined as Equations (8) and (9), respectively.

where x represents image pixels; , , , and ; and and are the atmospheric light and transmission estimation networks proposed in this paper. We also include the saturation penalty loss from [55].
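The transmission and atmospheric light losses keep two estimates of the same quantity consistent. The sketch below assumes an L1 penalty and assumes, based on the framework description in which the reconstructed image is fed back as input, that one estimate comes from the color-balanced input and the other from the reconstructed image. The variable names (`t_in`, `t_rec`, `A_in`, `A_rec`) and the numbers are hypothetical, and Equations (8) and (9) may use a different norm.

```python
import numpy as np

def consistency_l1(first, second):
    """Mean absolute difference between two estimates of the same quantity.

    Assumed L1 form for the transmission and atmospheric light losses; Eqs. (8)-(9) may differ.
    """
    first = np.asarray(first, dtype=float)
    second = np.asarray(second, dtype=float)
    return float(np.mean(np.abs(first - second)))

# Hypothetical estimates: *_in from the color-balanced input, *_rec from the reconstructed image.
t_in = np.array([[0.5, 0.6], [0.7, 0.8]])
t_rec = np.array([[0.5, 0.6], [0.7, 0.6]])
A_in = np.array([0.8, 0.7, 0.5])
A_rec = np.array([0.8, 0.7, 0.5])
loss_t = consistency_l1(t_in, t_rec)   # one pixel differs by 0.2, so the mean is 0.05
loss_A = consistency_l1(A_in, A_rec)   # identical estimates give 0.0
print(loss_t, loss_A)
```

An L1 penalty is less sensitive than a squared error to a few badly estimated pixels, which is one reason it is a frequent default for such consistency terms.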

4. Experiments

To verify the effectiveness of the proposed algorithm, we conducted experiments using numerous images collected under various sand-dust weather conditions and compared the results with those of 12 state-of-the-art sand-dust image enhancement and restoration algorithms using six metrics. The following sections will introduce the experimental configuration and the qualitative and quantitative evaluation results.

4.1. Experimental Configurations

In this section, we describe the dataset, comparison methods, evaluation metrics, and training procedure used in the experiments.

(1) Dataset: Due to the lack of a unified public dataset for sand-dust images, we collected 1070 real images under various sand-dust weather conditions from the Internet to more thoroughly validate the algorithm. Additionally, we captured 460 high-resolution real images under sand-dust weather conditions in Xinjiang for this study. All images were cropped to a size of 640 × 480.

(2) Comparative Methods: For a comprehensive comparison, the proposed method is compared with 12 state-of-the-art sand-dust image processing algorithms, including the generalized dark channel prior method (GDCP) [5], reverse blue channel prior method (RBCP) [6], color balance method based on maximum histogram overlap (SCBCH) [10], adaptive histogram equalization method based on normalized gamma transform (NGT) [9], rank-one matrix prior (ROP) [33], blue channel compensation and guided filtering technique (BCGF) [16], tensor least squares optimization method (TLS) [18], image fusion-based approach (FBE) [21], two-in-one low-visibility enhancement network (TOENet) [38], dark channel prior method based on halo elimination (HDCP) [43], unpaired GAN method for image dedusting (DedustGAN) [40], and unsupervised generative adversarial network for sand-dust image enhancement (SIENet) [39].

(3) Evaluation Metrics: Because no corresponding sharp images are available, no-reference metrics are used to assess the performance of the algorithms. Six metrics are adopted for a comprehensive assessment: the distortion identification-based evaluation of image integrity and authenticity (DIIVINE) [56], the blind image quality metric based on scene statistics and perceptual features (NPQI) [57], the natural image quality evaluator based on a multivariate Gaussian model (NIQE) [58], and, as suggested in [59], the visible edge restoration percentage $e$, the black and white pixel saturation percentage $\sigma$, and the contrast recovery percentage $\bar{r}$. A smaller DIIVINE value indicates lower distortion and higher image quality; smaller NPQI and NIQE values indicate better quality; a larger $e$ and a $\sigma$ closer to 0 indicate better quality; and a larger $\bar{r}$ indicates higher contrast and better image quality.

(4) Training Procedure: The loss function is given in Equation (6), where the coefficients are set as follows: , . During training, the input image is augmented by rotation and mirroring, which has been shown to be useful in unsupervised learning [55,60]. The model weights are initialized from a normal distribution with a mean of 0 and a standard deviation of 0.001. Optimization uses the Adam optimizer [61] with its default settings, and the learning rate is set to 0.001. The model is trained for 1000 iterations on an NVIDIA GeForce RTX 3090 Ti GPU (NVIDIA, Santa Clara, CA, USA). All images used in the experiments were cropped to 640 × 480.
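The augmentation and initialization settings above can be sketched as follows. This assumes NumPy, an (H, W, C) array, and that "rotation and mirroring" means the eight combinations of 90-degree rotations with an optional left-right mirror; the exact set of transforms used in the paper is not specified. Only the N(0, 0.001²) initialization follows directly from the stated settings; the helper names and the weight shape are hypothetical.

```python
import numpy as np

def rotate_mirror_variants(img):
    """All 8 combinations of 90-degree rotations and a left-right mirror of an (H, W, C) image.

    Rotating by 90 or 270 degrees swaps H and W (a 480 x 640 array becomes 640 x 480).
    """
    variants = []
    for k in range(4):
        rotated = np.rot90(img, k=k, axes=(0, 1))
        variants.append(rotated)
        variants.append(np.flip(rotated, axis=1))   # mirror left-right
    return variants

def random_augment(img, rng):
    """Pick one variant uniformly at random, e.g. once per training iteration."""
    variants = rotate_mirror_variants(img)
    return variants[rng.integers(len(variants))]

def init_weights(shape, rng, std=0.001):
    """Draw weights from a normal distribution with mean 0 and standard deviation std."""
    return rng.normal(loc=0.0, scale=std, size=shape)

rng = np.random.default_rng(0)
img = rng.uniform(0.0, 1.0, size=(480, 640, 3))     # H x W x C for a 640 x 480 image
variants = rotate_mirror_variants(img)
augmented = random_augment(img, rng)
weights = init_weights((64, 3, 3, 3), rng)          # hypothetical conv kernel shape
print(len(variants), variants[1].shape, variants[2].shape, weights.std())
```

Because a fully convolutional network accepts either orientation, the swapped height and width of the rotated variants need no special handling; the restored output can be rotated back with the inverse transform if needed.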

4.2. Qualitative Evaluation

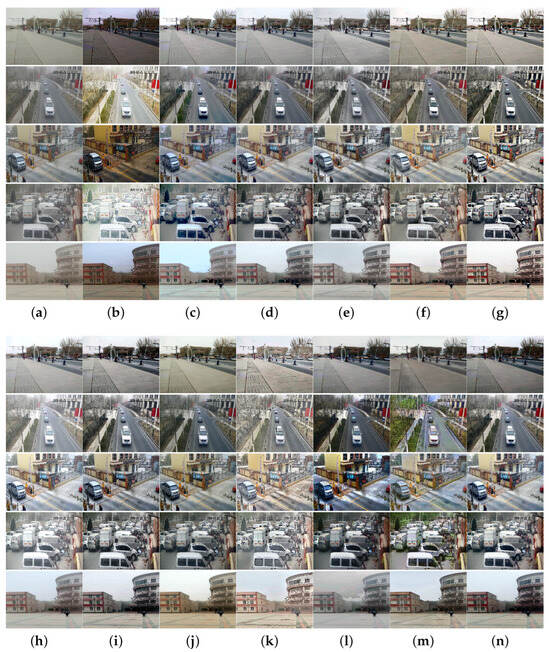

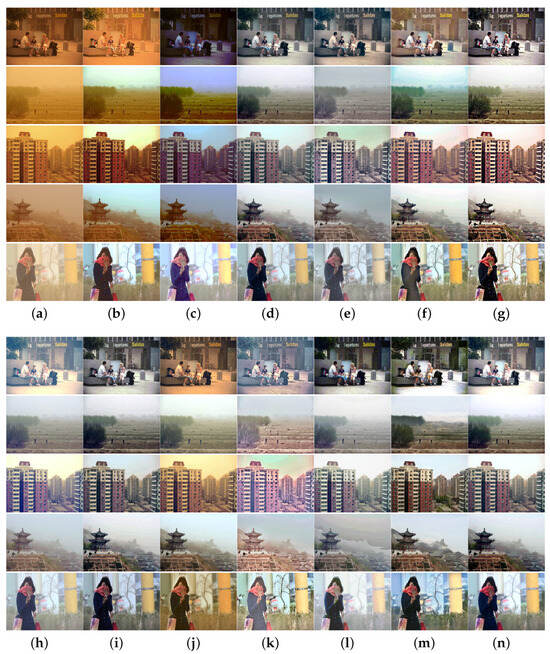

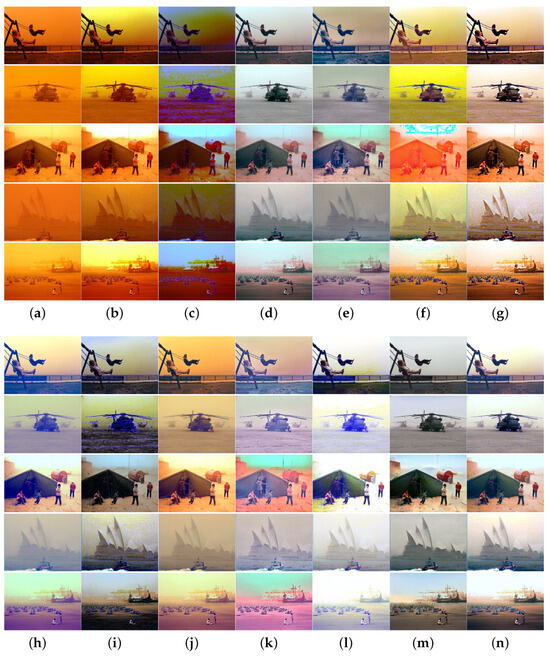

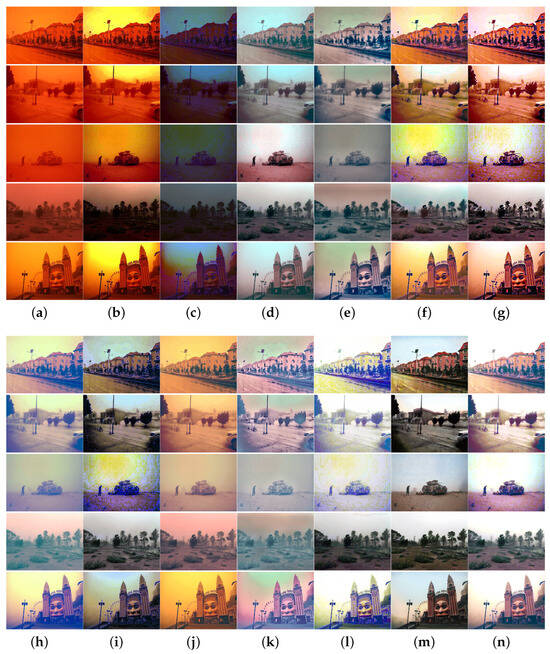

To more thoroughly verify the algorithm performance, we collected sand-dust images captured under five types of sand-dust weather conditions: floating dust, sand, sandstorms, strong sandstorms, and extremely strong sandstorms. Extensive comparison experiments were conducted using these sand-dust images, and the experimental results are shown in Figure 5, Figure 6, Figure 7, Figure 8 and Figure 9.

Figure 5.

Visual comparisons in real floating dust scenes. (a) Sand-dust images. (b) GDCP [5]. (c) RBCP [6]. (d) SCBCH [10]. (e) NGT [9]. (f) ROP [33]. (g) BCGF [16]. (h) TLS [18]. (i) FBE [21]. (j) TOENet [38]. (k) HDCP [43]. (l) DedustGAN [40]. (m) SIENet [39]. (n) Ours.

Figure 6.

Visual comparisons of real sand-dust images with color cast. (a) Sand-dust images. (b) GDCP [5]. (c) RBCP [6]. (d) SCBCH [10]. (e) NGT [9]. (f) ROP [33]. (g) BCGF [16]. (h) TLS [18]. (i) FBE [21]. (j) TOENet [38]. (k) HDCP [43]. (l) DedustGAN [40]. (m) SIENet [39]. (n) Ours.

Figure 7.

Visual comparisons in sandstorm scenes. (a) Sand-dust images. (b) GDCP [5]. (c) RBCP [6]. (d) SCBCH [10]. (e) NGT [9]. (f) ROP [33]. (g) BCGF [16]. (h) TLS [18]. (i) FBE [21]. (j) TOENet [38]. (k) HDCP [43]. (l) DedustGAN [40]. (m) SIENet [39]. (n) Ours.

Figure 8.

Visual comparisons in strong sandstorm scenes. (a) Sand-dust images. (b) GDCP [5]. (c) RBCP [6]. (d) SCBCH [10]. (e) NGT [9]. (f) ROP [33]. (g) BCGF [16]. (h) TLS [18]. (i) FBE [21]. (j) TOENet [38]. (k) HDCP [43]. (l) DedustGAN [40]. (m) SIENet [39]. (n) Ours.

Figure 9.

Visual comparisons in extremely strong sandstorm scenes. (a) Sand-dust images. (b) GDCP [5]. (c) RBCP [6]. (d) SCBCH [10]. (e) NGT [9]. (f) ROP [33]. (g) BCGF [16]. (h) TLS [18]. (i) FBE [21]. (j) TOENet [38]. (k) HDCP [43]. (l) DedustGAN [40]. (m) SIENet [39]. (n) Ours.

Figure 5 shows the results of the various algorithms on floating dust images captured in Xinjiang. Images captured in this scenario resemble haze images: because the sand-dust particles in the air are small, they absorb and scatter little light, so the images have almost no color cast and only a thin dust haze. As Figure 5 shows, all algorithms achieve some improvement on floating dust images. However, GDCP [5], TLS [18], ROP [33], TOENet [38], and HDCP [43] cannot remove the dust haze from some images, such as the fourth image. Color distortion appears in the images processed by RBCP [6] and SIENet [39]. SCBCH [10], NGT [9], BCGF [16], FBE [21], and our method produce better visual results with improved contrast. The image restored by DedustGAN [40] is distorted, as is evident in the sky region of the fifth image.

Figure 6 shows the results of the various methods on sand-dust images with weak color casts captured in Xinjiang. As shown in Figure 6, FBE [21] obtains good results with natural image tones. GDCP [5] and TOENet [38] cannot remove the color cast. The images recovered by RBCP [6], BCGF [16], and DedustGAN [40] show local darkness and color distortion. SCBCH [10], ROP [33], NGT [9], and TLS [18] cannot remove the dust haze but do improve the contrast of the restored images. Images restored by SIENet [39] and HDCP [43] show enhanced details but also amplified noise and distortion. Because they depend on prior knowledge or on models trained with synthetic datasets, some algorithms perform poorly on sand-dust images with slight color casts, mainly because their estimated parameters are inaccurate.

Figure 7 shows the results on images captured under sandstorm weather. SCBCH [10] and FBE [21] remove color casts more effectively than the other methods, whereas GDCP [5] and TOENet [38] cannot remove them. The images restored by other comparison algorithms, such as SIENet [39] and RBCP [6], retain varying degrees of color cast. NGT [9], DedustGAN [40], and SIENet [39] fail to restore these images effectively because they cannot adequately correct the image distortion.

Figure 8 and Figure 9 show the results on images captured under severe and extremely strong sandstorm conditions, respectively. Although the comparison algorithms achieve satisfactory results on floating-dust images and sand-dust images with weak color casts, they fail to recover images taken under strong sandstorm conditions with significant color shifts. SCBCH [10] and SIENet [39] eliminate color casts effectively but do not enhance the image. The other comparison algorithms, namely GDCP [5], RBCP [6], NGT [9], ROP [33], BCGF [16], TLS [18], FBE [21], TOENet [38], HDCP [43], and DedustGAN [40], not only fail to remove the color deviation but also introduce new color deviations and distortions into the recovered images. In contrast, the proposed algorithm consistently yields superior results across the various sand-dust scenarios.

In general, the subjective comparison results of various sand-dust images demonstrate that the proposed algorithm outperforms the state-of-the-art methods. The proposed algorithm effectively restores images captured in diverse sand-dust scenarios, achieving superior visual effects. Specifically, the contrast of the restored images is enhanced, and the details of objects within the images are more clearly delineated.

4.3. Quantitative Evaluation

To comprehensively evaluate the proposed algorithm, we used the quantitative metrics $e$, $\bar{r}$, $\sigma$, DIIVINE, NPQI, and NIQE, as detailed in Section 4.1, to compare it against the 12 state-of-the-art comparison algorithms.
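Assuming the contrast indicators are those of the blind contrast-enhancement assessment by Hautière et al. (cited in the reference list), they are defined as follows: $e = (n_r - n_o)/n_o$ is the rate of newly visible edges, where $n_o$ and $n_r$ count the visible edges before and after restoration; $\bar{r}$ is the geometric mean of the gradient ratios $\nabla R_i / \nabla I_i$ at the visible edges of the restored image; and $\sigma$ is the fraction of pixels that become completely black or white only after restoration. The Python sketch below illustrates these definitions under a simplifying assumption: a pixel counts as a visible edge when its Sobel gradient magnitude exceeds an arbitrary threshold (the hypothetical `threshold=0.05`), instead of the original visible-edge segmentation. The function names are illustrative, and the values will not reproduce Table 1.

```python
import numpy as np


def sobel_magnitude(img):
    """Sobel gradient magnitude of a 2-D grayscale image (borders replicated)."""
    p = np.pad(img, 1, mode="edge")
    # Horizontal derivative: right column minus left column, weights 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical derivative: bottom row minus top row, weights 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


def contrast_indicators(original, restored, threshold=0.05, eps=1e-6):
    """Simplified (e, r_bar, sigma) for grayscale images with values in [0, 1].

    Visible edges are approximated by thresholding the Sobel magnitude
    (an assumption, not the original visible-edge segmentation).
    """
    g_o, g_r = sobel_magnitude(original), sobel_magnitude(restored)
    vis_o, vis_r = g_o > threshold, g_r > threshold
    n_o, n_r = int(vis_o.sum()), int(vis_r.sum())

    # e: relative change in the number of visible edges (undefined if n_o == 0).
    e = (n_r - n_o) / n_o if n_o > 0 else float("nan")

    # r_bar: geometric mean of gradient ratios at the restored image's visible edges.
    if n_r > 0:
        ratios = (g_r[vis_r] + eps) / (g_o[vis_r] + eps)
        r_bar = float(np.exp(np.mean(np.log(ratios))))
    else:
        r_bar = float("nan")

    # sigma: fraction of pixels that are pure black/white only after restoration.
    sat_o = (original <= 0.0) | (original >= 1.0)
    sat_r = (restored <= 0.0) | (restored >= 1.0)
    sigma = float(np.mean(sat_r & ~sat_o))
    return e, r_bar, sigma
```

Under these definitions, larger $e$ and $\bar{r}$ and a smaller $\sigma$ are read as better contrast restoration, which is the direction used when discussing Table 1.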

Table 1 shows the average evaluation results for the 25 images in Figure 5, Figure 6, Figure 7, Figure 8 and Figure 9. As shown in Table 1, the proposed algorithm obtains larger $e$ and $\bar{r}$ values and a smaller $\sigma$ value, and it consistently ranks among the top-performing algorithms on the other metrics. Its lowest NIQE and NPQI values suggest that the restored images are of high quality and retain rich natural scene statistics. Although RBCP, SCBCH, and TLS consistently obtain better DIIVINE scores than the proposed method, their subjective results are unsatisfactory: the restored images exhibit low brightness and residual sand-dust haze. These favorable DIIVINE scores may be attributed to the metric's feature extraction, which relies on statistical models and a distortion-classification mechanism that do not fully capture the diversity of complex visual perception. Haze and low-light conditions may “deceive” the metric, making the statistical features of such images align more closely with the high-scoring patterns in its training data.
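NIQE (Mittal et al.) builds its features from the natural scene statistics of mean-subtracted contrast-normalized (MSCN) coefficients, $\hat{I} = (I - \mu)/(\sigma + C)$, where $\mu$ and $\sigma$ are the Gaussian-weighted local mean and standard deviation. It then measures the distance between a multivariate Gaussian fitted to features of the test image and one fitted to pristine natural images, so a lower NIQE indicates statistics closer to natural images. The sketch below computes only the MSCN step, assuming 8-bit intensities with $C = 1$ and a 7×7 Gaussian window with standard deviation 7/6, as in common NIQE/BRISQUE implementations. It is not a full NIQE implementation and does not describe how NPQI or DIIVINE are computed.

```python
import numpy as np


def gaussian_kernel_1d(sigma=7 / 6, radius=3):
    """Normalized 1-D Gaussian kernel with 2 * radius + 1 taps (7 by default)."""
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img, sigma=7 / 6, radius=3):
    """Separable Gaussian filter with reflect padding; output keeps img's shape."""
    k = gaussian_kernel_1d(sigma, radius)
    p = np.pad(img, radius, mode="reflect")
    # 'valid' convolution along each axis strips the padding again.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, p)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def mscn(img, C=1.0):
    """Mean-subtracted contrast-normalized coefficients of a grayscale image."""
    img = np.asarray(img, dtype=float)
    mu = gaussian_blur(img)
    var = gaussian_blur(img * img) - mu * mu
    sigma = np.sqrt(np.maximum(var, 0.0))  # clamp tiny negative values from rounding
    return (img - mu) / (sigma + C)
```

For natural, undistorted images, the MSCN coefficients tend toward a unit-variance, near-Gaussian distribution. Haze, blur, or over-enhancement alter that distribution, which is what NIQE-style metrics detect.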

Table 1.

Average results of 25 sand-dust images.

To further evaluate the performance of the proposed algorithm, we collected a large number of real sand-dust images. Table 2 presents the average results for 1070 sand-dust images with various color casts collected from the Internet, and Table 3 presents the average results for 460 images captured under real sand-dust weather conditions. The $e$ values in Table 2 could not be calculated because the compressed sand-dust images collected from the Internet are heavily distorted. Although HDCP [43] achieves the best value on one of the contrast metrics, its actual restoration performance is poor, as shown in Figure 8 and Figure 9. In addition, the proposed algorithm achieves the best NIQE and NPQI scores and superior DIIVINE values, indicating that it produces high-quality restored images with minimal distortion and rich natural scene statistics across various distorted sand-dust images.

Table 2.

Average results of 1070 sand-dust images with various color casts.

Table 3.

Average results of 460 real sand-dust images captured in Xinjiang.

To mitigate the impact of image distortion on the evaluation, we collected 460 high-resolution sand-dust images from real-world scenes and used them to measure the algorithm’s performance comprehensively. The results in Table 3 also show that the overall performance of the proposed method is better than that of the other state-of-the-art algorithms. Taken together, the qualitative analyses in Figure 5, Figure 6, Figure 7, Figure 8 and Figure 9 and the quantitative analyses in Table 1, Table 2 and Table 3 show that the sand-dust images recovered by the proposed algorithm have better visual quality. We attribute this mainly to the designed U-Net structure, which extracts features more effectively.

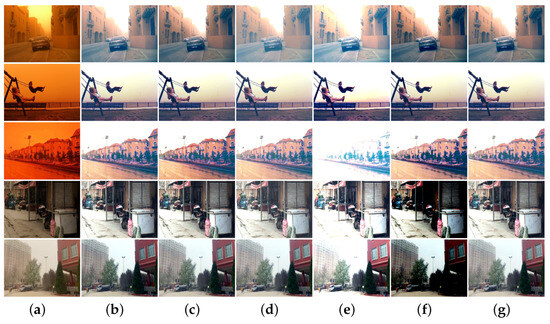

4.4. Ablation Study

To verify the effectiveness of the loss functions used for model training, this section presents ablation experiments on the individual loss terms. Figure 10 shows the results. As shown in Figure 10c, without the atmospheric light loss, the restored image is darker overall. As shown in Figure 10d, without the transmission loss, the dust haze in the recovered image is not completely removed. Figure 10e,f show that without the saturation-penalized losses for bright and dark pixels, the reconstructed image becomes overly bright or overly dark. As shown in Figure 10g, the gradient loss mainly improves the fidelity of the recovered image.
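Under the atmospheric scattering (Koschmieder) model $I = J\,t + A\,(1 - t)$, the scene radiance is recovered as $J = (I - A)/t + A$. The small Python sketch below makes the role of the two estimated quantities concrete. The lower bound `t_min = 0.1` on the transmission is an assumption borrowed from common dehazing practice, not a value stated in this paper. Because $\partial J/\partial A = 1 - 1/t < 0$ whenever $t < 1$, an overestimated atmospheric light darkens $J$, and a transmission left close to 1 returns $J \approx I$, so the haze remains. This is one plausible reading of Figure 10c,d, not an analysis of the trained model.

```python
import numpy as np


def recover_radiance(I, t, A, t_min=0.1):
    """Invert I = J * t + A * (1 - t) for the scene radiance J.

    I: observed image, e.g. (H, W, 3), or a scalar; t: transmission broadcastable
    to I, e.g. (H, W, 1); A: atmospheric light per channel (length 3) or a scalar.
    The transmission is clipped from below (assumed t_min) to avoid blow-up.
    """
    t = np.maximum(t, t_min)
    return (I - A) / t + A


# Toy single-pixel example (illustrative values only).
I, t = 0.6, 0.5
print(recover_radiance(I, t, A=0.8))    # reference estimate
print(recover_radiance(I, t, A=0.9))    # overestimated A gives a darker J
print(recover_radiance(I, 1.0, A=0.8))  # t = 1 gives J equal to I (up to rounding)
```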

Figure 10.

Ablation study of the loss functions. (a) Sand-dust images. (b) The proposed method. (c) Without the atmospheric light loss. (d) Without the transmission loss. (e) Without the bright-pixel saturation-penalized loss. (f) Without the dark-pixel saturation-penalized loss. (g) Without the gradient loss.

To further evaluate the contribution of each loss function, we conducted a quantitative ablation on 1530 real sand-dust images. The results in Table 4 show that combining all loss functions yields a significant improvement over the ablated variants.
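An ablation such as Table 4 amounts to switching individual terms of a weighted training objective on or off. The exact loss formulas are not reproduced in this section, so the sketch below uses hypothetical stand-ins of a common form: hinge penalties on recovered intensities above 1 or below 0 for the bright- and dark-pixel saturation terms, a mean L1 difference of finite-difference gradients for the gradient term, and a weighted sum in which removing a term corresponds to setting its weight to zero. All names, weights, and formulas here are illustrative assumptions, not the authors' losses.

```python
import numpy as np


def bright_saturation_penalty(J, upper=1.0):
    """Hypothetical hinge penalty: mean excess of pixel values above `upper`."""
    return float(np.mean(np.maximum(J - upper, 0.0)))


def dark_saturation_penalty(J, lower=0.0):
    """Hypothetical hinge penalty: mean shortfall of pixel values below `lower`."""
    return float(np.mean(np.maximum(lower - J, 0.0)))


def _finite_differences(x):
    """Horizontal and vertical forward differences of an (H, W) or (H, W, C) array."""
    return x[:, 1:] - x[:, :-1], x[1:, :] - x[:-1, :]


def gradient_loss(a, b):
    """Mean absolute difference between the gradients of images a and b."""
    ax, ay = _finite_differences(a)
    bx, by = _finite_differences(b)
    return float(np.mean(np.abs(ax - bx)) + np.mean(np.abs(ay - by)))


def total_loss(terms, weights):
    """Weighted sum of named loss terms; a zero weight ablates that term."""
    return sum(weights[name] * value for name, value in terms.items())


J = np.array([[1.2, 0.5], [-0.2, 0.9]])  # toy "recovered" image with out-of-range pixels
terms = {
    "bright": bright_saturation_penalty(J),
    "dark": dark_saturation_penalty(J),
    "gradient": gradient_loss(J, np.clip(J, 0.0, 1.0)),
}
full = total_loss(terms, {"bright": 1.0, "dark": 1.0, "gradient": 1.0})
no_bright = total_loss(terms, {"bright": 0.0, "dark": 1.0, "gradient": 1.0})
```

Setting one weight to zero, as in `no_bright`, mirrors a single ablation setting; the toy numbers carry no meaning for Table 4.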

Table 4.

Ablation results of 1530 real sand-dust images with different loss functions.

5. Conclusions

In this paper, we propose a zero-shot sand-dust image restoration method based on the atmospheric scattering model that relies neither on image priors nor on hyperparameter settings. The model is trained using only the single input sand-dust image, and the clear image is then recovered through the atmospheric scattering model. We conducted experiments on many real sand-dust images and performed qualitative and quantitative analyses. The experimental results show that the proposed algorithm restores images under various sand-dust weather conditions and removes color casts and enhances image contrast and details more effectively than existing algorithms, with better robustness and performance than state-of-the-art methods. In the proposed framework, color casts are removed in a preprocessing step before model training. In future work, we plan to design a model that removes color casts adaptively and restores sand-dust images accurately.
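The color-cast preprocessing is not specified in this section. As a generic stand-in only, the classic gray-world assumption scales each channel so that the channel means become equal. The sketch below assumes an RGB float image in [0, 1] and is not the authors' preprocessing. For strong sandstorm images, the attenuated blue channel receives a large gain, which can also amplify noise.

```python
import numpy as np


def gray_world_balance(img, eps=1e-6):
    """Gray-world color balance for an (H, W, 3) float image in [0, 1].

    Each channel is multiplied by (mean of the channel means) / (its own mean),
    and the result is clipped back to [0, 1].
    """
    img = np.asarray(img, dtype=float)
    channel_means = img.reshape(-1, img.shape[-1]).mean(axis=0)
    gains = channel_means.mean() / (channel_means + eps)
    return np.clip(img * gains, 0.0, 1.0)


# Toy "sand-dust" image: strong red, weak blue (illustrative values only).
dusty = np.empty((4, 4, 3))
dusty[..., 0], dusty[..., 1], dusty[..., 2] = 0.6, 0.4, 0.2
balanced = gray_world_balance(dusty)
```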

Author Contributions

Conceptualization, F.S. and Z.J.; methodology, F.S. and Z.J.; software, F.S. and Y.Z.; validation, F.S. and Y.Z.; formal analysis, F.S. and Z.J.; investigation, F.S. and Y.Z.; resources, F.S.; data curation, F.S. and Y.Z.; writing—original draft preparation, F.S.; writing—review and editing, F.S., Y.Z., and Z.J.; visualization, F.S.; supervision, Z.J.; project administration, F.S., Y.Z. and Z.J.; funding acquisition, F.S. and Z.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Xinjiang Province under Grant 2022D01C58, the National Natural Science Foundation of China under Grant 62261053, the Tianshan Talent Training Project–Xinjiang Science and Technology Innovation Team Program (2023TSYCTD0012), and the Tianshan Innovation Team Program of Xinjiang Uygur Autonomous Region of China (2023D14012).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available because the data were collected by the authors themselves.

Acknowledgments

We sincerely thank the editors and reviewers for taking the time to review our manuscript.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| NGT | Normalized gamma transformation |
| SCBCH | Successive color balance with coincident chromatic histogram |
| BCGF | Blue channel compensation and guided image filtering |
| TLS | Tensor least squares optimization |
| DCP | Dark channel prior |
| GDCP | Generalization of the dark channel prior |
| HDCP | Halo-reduced dark channel prior |
| RBCP | Reversing the blue channel prior |
| FBE | Fusion-based enhancement |
| NPQI | Natural scene statistics and perceptual characteristics-based quality index |
| NIQE | Natural image quality evaluator |

References

- Quan, Y.; Tan, X.; Huang, Y.; Xu, Y.; Ji, H. Image desnowing via deep invertible separation. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3133–3144.

- Li, Y.; Lu, J.; Chen, H.; Wu, X.; Chen, X. Dilated Convolutional Transformer for High-Quality Image Deraining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Vancouver, BC, Canada, 17–24 June 2023; pp. 4199–4207.

- Song, Y.; He, Z.; Qian, H.; Du, X. Vision transformers for single image dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

- Wang, H.; Zhang, W.; Bai, L.; Ren, P. Metalantis: A comprehensive underwater image enhancement framework. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–19.

- Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

- Gao, G.; Lai, H.; Jia, Z.; Liu, Y.; Wang, Y. Sand-dust image restoration based on reversing the blue channel prior. IEEE Photonics J. 2020, 12, 1–16.

- Ning, Z.; Shanjun, M.; Mei, L. Visibility restoration algorithm of dust-degraded images. J. Image Graph. 2016, 21, 1585–1592.

- Yan, T.; Wang, L.; Wang, J. Method to Enhance Degraded Image in Dust Environment. J. Softw. 2014, 9, 2672–2677.

- Shi, Z.; Feng, Y.; Zhao, M.; Zhang, E.; He, L. Normalised gamma transformation-based contrast-limited adaptive histogram equalisation with colour correction for sand-dust image enhancement. IET Image Process. 2020, 14, 747–756.

- Park, T.H.; Eom, I.K. Sand-dust image enhancement using successive color balance with coincident chromatic histogram. IEEE Access 2021, 9, 19749–19760.

- Alruwaili, M.; Gupta, L. A statistical adaptive algorithm for dust image enhancement and restoration. In Proceedings of the 2015 IEEE International Conference on Electro/Information Technology (EIT), DeKalb, IL, USA, 21–23 May 2015; pp. 286–289.

- Wang, J.; Pang, Y.; He, Y.; Liu, C. Enhancement for dust-sand storm images. In MultiMedia Modeling, Proceedings of the MultiMedia Modeling: 22nd International Conference, MMM 2016, Miami, FL, USA, 4–6 January 2016; Proceedings, Part I 22; Springer: Cham, Switzerland, 2016; pp. 842–849.

- Gao, G.; Lai, H.; Liu, Y.; Wang, L.; Jia, Z. Sandstorm image enhancement based on YUV space. Optik 2021, 226, 165659.

- Gao, G.; Lai, H.; Wang, L.; Jia, Z. Color balance and sand-dust image enhancement in lab space. Multimed. Tools Appl. 2022, 81, 15349–15365.

- Hua, Z.; Nie, B. Enhancement Algorithm for Sandstorm Images. In Proceedings of the 2021 2nd International Conference on Control, Robotics and Intelligent System, Qingdao, China, 20–22 August 2021; pp. 273–278.

- Cheng, Y.; Jia, Z.; Lai, H.; Yang, J.; Kasabov, N.K. A fast sand-dust image enhancement algorithm by blue channel compensation and guided image filtering. IEEE Access 2020, 8, 196690–196699.

- Lee, H.S. Efficient Color Correction Using Normalized Singular Value for Duststorm Image Enhancement. J 2022, 5, 15–34.

- Xu, G.; Wang, X.; Xu, X. Single image enhancement in sandstorm weather via tensor least square. IEEE/CAA J. Autom. Sin. 2020, 7, 1649–1661.

- Li, M.; Liu, J.; Yang, W.; Sun, X.; Guo, Z. Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans. Image Process. 2018, 27, 2828–2841.

- Kenk, M.A.; Hassaballah, M.; Hameed, M.A.; Bekhet, S. Visibility Enhancer: Adaptable for Distorted Traffic Scenes by Dusty Weather. In Proceedings of the 2020 2nd Novel Intelligent and Leading Emerging Sciences Conference (NILES), Giza, Egypt, 24–26 October 2020; pp. 213–218.

- Fu, X.; Huang, Y.; Zeng, D.; Zhang, X.P.; Ding, X. A fusion-based enhancing approach for single sandstorm image. In Proceedings of the 2014 IEEE 16th International Workshop on Multimedia Signal Processing (MMSP), Jakarta, Indonesia, 22–24 September 2014; pp. 1–5.

- Cheng, Y.; Jia, Z.; Lai, H.; Yang, J.; Kasabov, N.K. Blue channel and fusion for sandstorm image enhancement. IEEE Access 2020, 8, 66931–66940.

- Wang, B.; Wei, B.; Kang, Z.; Hu, L.; Li, C. Fast color balance and multi-path fusion for sandstorm image enhancement. Signal Image Video Process. 2021, 15, 637–644.

- Hao, C.; Huicheng, L.; Guxue, G.; Hao, W.; Xuze, Q. Sand-dust Image Enhancement Based on Multi-exposure Image Fusion. Acta Photonica Sin. 2021, 50, 0910003.

- Yu, S.; Zhu, H.; Wang, J.; Fu, Z.; Xue, S.; Shi, H. Single sand-dust image restoration using information loss constraint. J. Mod. Opt. 2016, 63, 2121–2130.

- Wang, Y.; Li, Y.; Zhang, T. The method of image restoration in the environments of dust. In Proceedings of the 2010 IEEE International Conference on Mechatronics and Automation, Xi’an, China, 4–7 August 2010; pp. 294–298.

- Wang, Y.; Li, Y.; Zhang, T. Study on the Method of Image Restoration in the Environment of Dust. In Proceedings of the 2010 6th International Conference on Wireless Communications Networking and Mobile Computing (WiCOM), Chengdu, China, 23–25 September 2010; pp. 1–4.

- Peng, Y.T.; Cosman, P.C. Single image restoration using scene ambient light differential. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 1953–1957.

- Yan, Y.; Chen, G.K. Single image visibility restoration using optical compensation and pixel-by-pixel transmission estimation. J. Commun. 2017, 38, 48.

- Lee, H.S. Efficient Sandstorm Image Enhancement Using the Normalized Eigenvalue and Adaptive Dark Channel Prior. Technologies 2021, 9, 101.

- Huang, S.C.; Ye, J.H.; Chen, B.H. An advanced single-image visibility restoration algorithm for real-world hazy scenes. IEEE Trans. Ind. Electron. 2014, 62, 2962–2972.

- Huang, S.C.; Chen, B.H.; Wang, W.J. Visibility restoration of single hazy images captured in real-world weather conditions. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 1814–1824.

- Liu, J.; Liu, R.W.; Sun, J.; Zeng, T. Rank-One Prior: Real-Time Scene Recovery. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 8845–8860.

- Si, Y.; Yang, F.; Guo, Y.; Zhang, W.; Yang, Y. A comprehensive benchmark analysis for sand dust image reconstruction. J. Vis. Commun. Image Represent. 2022, 89, 103638.

- Si, Y.; Yang, F.; Liu, Z. Sand dust image visibility enhancement algorithm via fusion strategy. Sci. Rep. 2022, 12, 13226.

- Shi, Z.; Liu, C.; Ren, W.; Shuangli, D.; Zhao, M. Convolutional neural networks for sand dust image color restoration and visibility enhancement. J. Image Graph. 2022, 27, 1493–1508.

- Ding, B.; Chen, H.; Xu, L.; Zhang, R. Restoration of single sand-dust image based on style transformation and unsupervised adversarial learning. IEEE Access 2022, 10, 90092–90100.

- Gao, Y.; Xu, W.; Lu, Y. Let you see in haze and sandstorm: Two-in-one low-visibility enhancement network. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

- Gao, G.; Lai, H.; Jia, Z. Two-Step Unsupervised Approach for Sand-Dust Image Enhancement. Int. J. Intell. Syst. 2023, 2023, 4506331.

- Meng, X.; Huang, J.; Li, Z.; Wang, C.; Teng, S.; Grau, A. DedustGAN: Unpaired learning for image dedusting based on Retinex with GANs. Expert Syst. Appl. 2024, 243, 122844.

- Al-Ameen, Z. Visibility enhancement for images captured in dusty weather via tuned tri-threshold fuzzy intensification operators. Int. J. Intell. Syst. Appl. 2016, 8, 10.

- Li, H.C.; Liu, X.L.C.; Pan, Z.R. Enhancement Algorithm of Dust Image Based on Multi-perceptual Feature Calculation. J. Lanzhou Univ. Technol. 2018, 4, 90–95.

- Shi, Z.; Feng, Y.; Zhao, M.; Zhang, E.; He, L. Let you see in sand dust weather: A method based on halo-reduced dark channel prior dehazing for sand-dust image enhancement. IEEE Access 2019, 7, 116722–116733.

- Chen, B.H.; Huang, S.C. Improved visibility of single hazy images captured in inclement weather conditions. In Proceedings of the 2013 IEEE International Symposium on Multimedia, Anaheim, CA, USA, 9–11 December 2013; pp. 267–270.

- Zhang, X.S.; Yang, K.F.; Li, Y.J. Haze removal with channel-wise scattering coefficient awareness based on grey pixels. Opt. Express 2021, 29, 16619–16638.

- Golts, A.; Freedman, D.; Elad, M. Unsupervised single image dehazing using dark channel prior loss. IEEE Trans. Image Process. 2019, 29, 2692–2701.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention, Proceedings of the MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2018, Granada, Spain, 20 September 2018; Springer: Cham, Switzerland, 2018; Volume 11073, pp. 3–11.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. IEEE Trans. Med. Imaging 2022, 71, 4189–4198.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. In Computer Vision–ECCV 2022 Workshops, Proceedings of the ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; Volume 13435, pp. 205–218.

- Liao, K.; Lin, C.; Zhao, Y.; Gabbouj, M. DR-GAN: Automatic radial distortion rectification using conditional GAN in real-time. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 725–733.

- Li, X.; Zhang, B.; Sander, P.V.; Liao, J. Blind geometric distortion correction on images through deep learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4855–4864.

- Liao, K.; Yue, Z.; Wu, Z.; Loy, C.C. MOWA: Multiple-in-One Image Warping Model. arXiv 2024, arXiv:2404.10716.

- Koschmieder, H. Theorie der horizontalen Sichtweite. In Beiträge zur Physik der freien Atmosphäre; Keim & Nemnich: Munich, Germany, 1924; pp. 33–53.

- Kar, A.; Dhara, S.K.; Sen, D.; Biswas, P.K. Zero-Shot Single Image Restoration Through Controlled Perturbation of Koschmieder’s Model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16205–16215.

- Moorthy, A.K.; Bovik, A.C. Blind image quality assessment: From natural scene statistics to perceptual quality. IEEE Trans. Image Process. 2011, 20, 3350–3364.

- Liu, Y.; Gu, K.; Li, X.; Zhang, Y. Blind image quality assessment by natural scene statistics and perceptual characteristics. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2020, 16, 1–91.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

- Hautière, N.; Tarel, J.P.; Aubert, D.; Dumont, E. Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal. Stereol. 2008, 27, 87–95.

- Shocher, A.; Cohen, N.; Irani, M. “zero-shot” super-resolution using deep internal learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3118–3126.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).