1. Introduction

Water level measurement is fundamental to the hydrological monitoring of rivers, lakes, and reservoirs, and timely and accurate water level measurements are crucial for water resource management and the early warning and forecasting of water disasters [1]. In the field of hydrological monitoring, existing water level measurement techniques are primarily divided into two categories: contact and non-contact [2].

Contact water level meters primarily include the following: (1) float-type water level meters [3,4,5,6,7], which offer good stability and high reliability and are generally applicable within a 40 m range of water level variation, but require stilling wells, resulting in high construction, operation, and maintenance costs; they are also unsuitable for rivers with severe siltation or for gently sloping cross-sections, where siting a well is difficult; (2) pressure-type water level meters, which have a simple structure, are inexpensive, and are typically used within a 20 m range of water level variation; however, they are not suitable for water bodies with high sediment content, for estuaries and other areas where seawater changes the water density, or for measurement points with severe siltation; (3) liquid-mediated ultrasonic water level meters, which suit measurement points where a stilling well cannot be constructed. They usually require installation at least 0.5 m below the lowest water level and are unsuitable for silted channels. They also suffer from temperature and time drift [4].

Non-contact water level meters primarily include the following. (1) Gas-mediated ultrasonic water level meters [5] have a simple principle and are cost-effective, and are suitable for installation at least 0.5 m above the highest water level. However, the transducer's beam must remain unobstructed all the way to the reflecting water surface, and they are not suitable for measurements on gentle slopes. Additionally, they experience more significant temperature and time drift. (2) Laser water level meters offer high ranging accuracy, up to the millimeter level, but require a stilling well [5]. (3) Radar water level meters [8,9,10,11] have high measurement accuracy, reaching the centimeter level, and exhibit no significant temperature or time drift. However, they must be installed vertically above the measured water body and are not suitable for measurements on gentle bank slopes. Additionally, the radar beam must be unobstructed, and rain or snow may interrupt the measurement [6]. (4) Visual water level meters work by recognizing the waterline on a staff gauge (water ruler) or other reference and converting the reading into a water level. In principle, they exhibit no temperature or time drift and can perform tilt detection, providing intuitive results that facilitate visual calibration. However, this method is highly sensitive to complex lighting conditions (such as reflection and flare) [12], adverse weather (such as fog and heavy rain), and disturbances on the water surface (including waves and floating objects). Entanglement of the staff gauge by floating objects is also a significant concern, as it can lead to measurement failure or even damage to the gauge, requiring manual maintenance. Consequently, visual water level meters are typically limited to scenarios with vertical slopes or bridge abutments, as well as other stable installation settings.

Binocular stereo vision technology [13], known for its non-contact operation and simple structure, is widely used in fields such as industrial precision measurement, autonomous driving, robot navigation, and medical diagnosis [14,15,16], making it one of the most prevalent methods for three-dimensional information perception. Binocular stereo vision places two vision sensors at different positions and observes the same scene from different perspectives at the same time to obtain a set of stereo image pairs containing scene depth information, and then calculates the depth information using an appropriate algorithm.

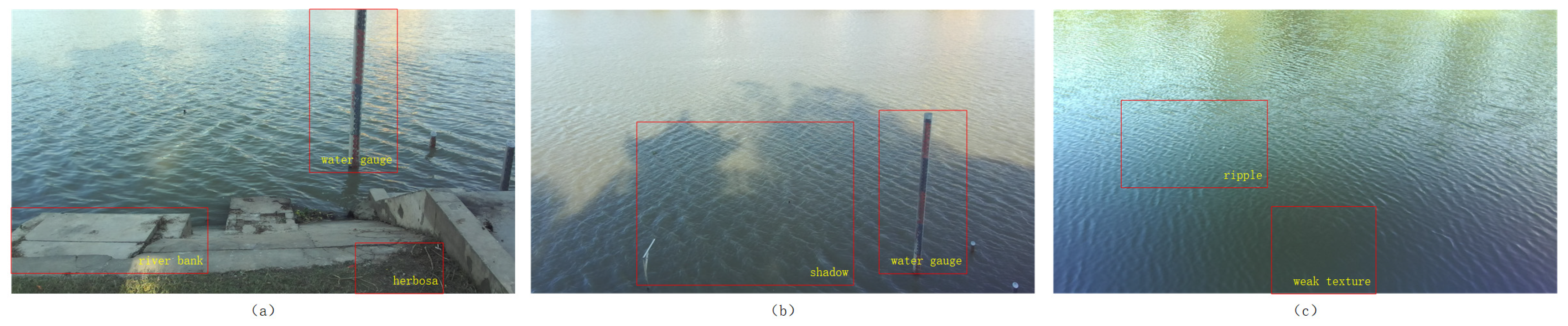

In stereo vision applications, the baseline length of the binocular camera directly influences the measurement range and accuracy [17]; thus, the appropriate baseline length should be determined based on the camera's focal length and the actual detection distance. The technical core of the water level measurement method based on binocular stereo vision is obtaining accurate parallax maps from the left and right images and using the parallax principle to obtain 3D point cloud data. Currently, parallax map acquisition methods can be divided into two categories: traditional stereo matching and deep learning stereo matching. Traditional stereo matching algorithms include BM (block matching) [18], SGBM (semi-global block matching) [19], Census [20], and others. Water surface images are prone to specular reflection, shadows, flare, ripples, and other optical noise, which complicates feature extraction and matching in areas with weak and repeated textures. Moreover, bank slopes, vegetation, and floating objects can obstruct the water surface, leading to holes in the parallax map or to values that do not represent the real water surface. Consequently, converting the parallax map into three-dimensional point cloud data and fitting a plane to the point cloud are essential steps in acquiring the water level value. Classical plane fitting methods [21,22,23,24] include least squares methods and eigenvalue methods, among others. However, these methods cannot eliminate the influence of outliers on plane fitting, leading to low accuracy and poor robustness in horizontal plane fitting.

Deep learning methods can extract complex global features and contextual information and offer greater advantages in handling water surface environments with specular reflection and weakly textured or even untextured regions. However, no high-precision parallax map datasets are available for real water surface environments in the stereo vision research field [25,26,27,28]. Consequently, deep learning stereo matching models trained directly on existing terrestrial and synthetic datasets often lack sufficient generalization ability.

The proposed method of using binocular stereo vision for water surface height measurement represents a significant advancement compared to existing techniques. Unlike traditional contact methods, which require physical contact with the water and are susceptible to environmental interference, our approach is entirely non-contact. This eliminates the need for costly and complex infrastructure such as stilling wells, making it more practical for a wide range of hydrological monitoring scenarios. Additionally, compared to other non-contact methods, binocular stereo vision offers several unique advantages. For instance, it does not require the installation of additional reference markers or devices, such as staff gauges or laser targets, which are often necessary for visual water level meters or laser-based systems. This reduces the complexity and potential for errors associated with these methods.

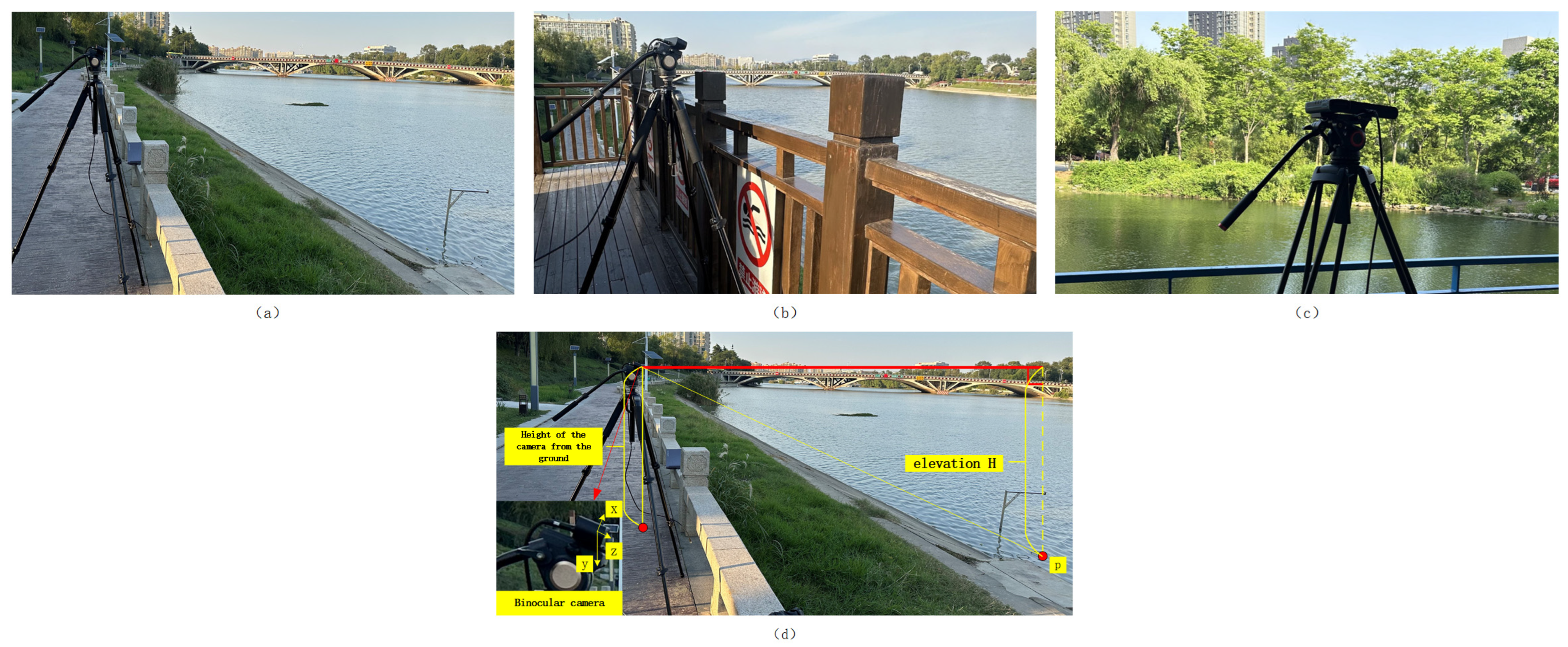

In this context, this study first examines the measurement accuracy of the binocular system for the actual measurement scenarios using the binocular stereo vision method. Subsequently, a sequence of grayscale water surface images is synchronously captured using binocular cameras, and stereo matching algorithms are employed to obtain the parallax values of the left and right images, thereby reconstructing a three-dimensional water surface point cloud. The water surface point cloud is then fitted to a plane to obtain the elevation of the camera above the fitted horizontal plane, and the acquired elevation data are verified against laser rangefinder measurements.

2. Materials and Methods

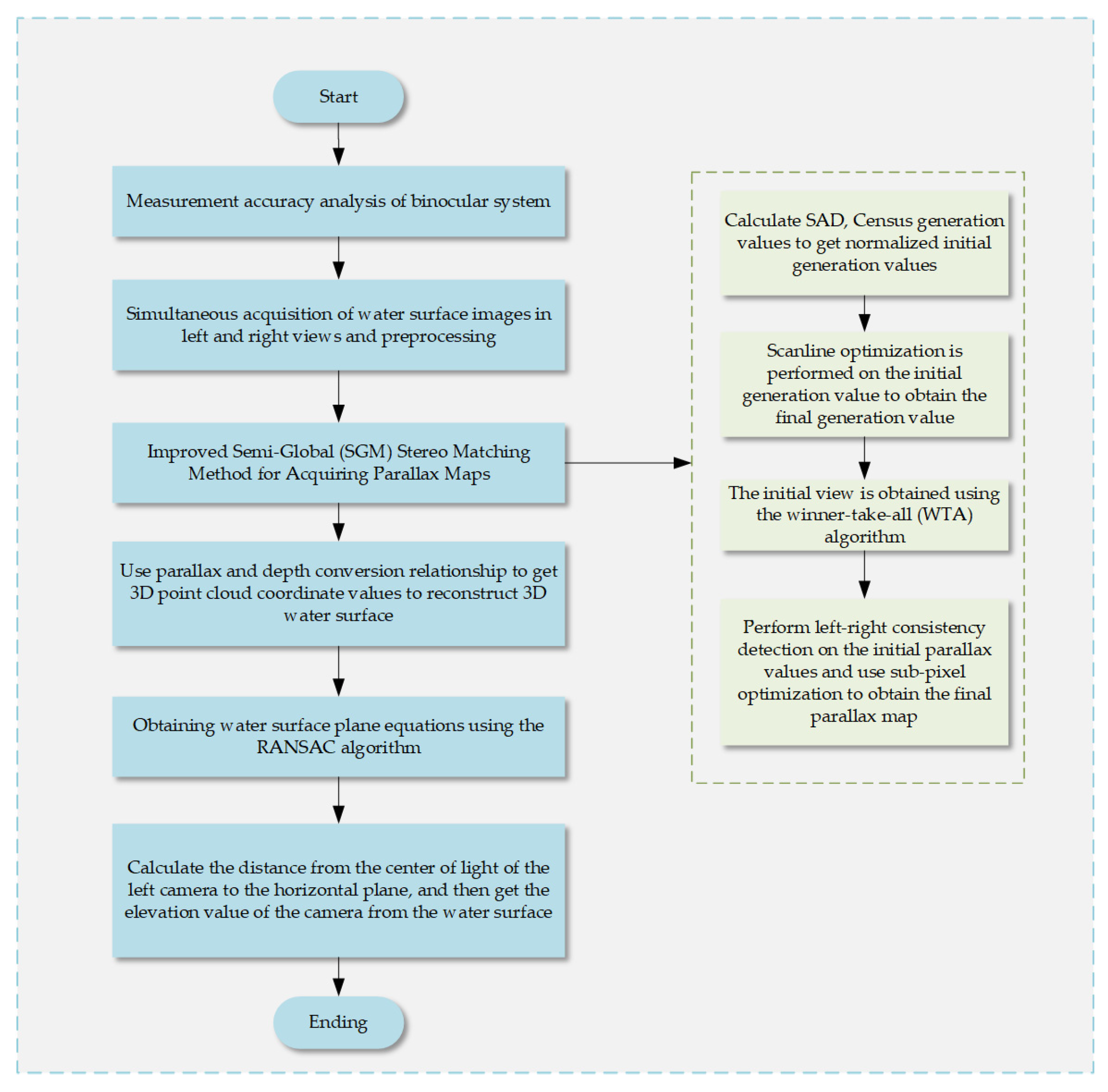

The implementation process of the water level measurement system, depicted in Figure 1, comprises five steps: selecting the binocular camera; synchronously acquiring and preprocessing water surface images; performing stereo matching; obtaining 3D point cloud coordinate values; and fitting a horizontal plane to acquire the elevation value. First, the stereo-rectified initial image of the water surface, synchronously acquired by the binocular camera, is converted to grayscale and preprocessed. Then, the SAD-Census algorithm calculates the matching cost on a pixel-by-pixel basis, and an iterative SGM (semi-global matching) algorithm is employed for matching cost aggregation to generate the parallax map. Next, based on the binocular camera's calibration results, the relationship between parallax and depth is used to obtain the 3D point cloud coordinate values of the water surface image. The point cloud is fitted to a plane using the RANSAC (random sample consensus) algorithm [29,30,31] to generate the horizontal plane equation. Finally, the distance from the optical center of the left camera to the horizontal plane is calculated to obtain the elevation of the camera above the fitted horizontal plane.

2.1. Principle of Binocular Vision

When a spatial point is projected onto the two cameras of the binocular vision system via pinhole imaging, the two image points and the target point form a triangle. Using the parallax principle and the camera's intrinsic and extrinsic parameters, the 3D coordinates are determined. This process employs the principle of triangle similarity, thereby obtaining the spatial position of the point. Assuming that the spatial target point has the coordinates $(u, v)$ in the pixel coordinate system, $(x, y)$ in the image coordinate system, $(X_c, Y_c, Z_c)$ in the camera coordinate system, and $(X_w, Y_w, Z_w)$ in the world coordinate system, the following is obtained according to the pinhole camera imaging principle [32]:

$$
Z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= \begin{bmatrix} f_x & 0 & u_0 & 0 \\ 0 & f_y & v_0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}
\begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix}
= K \begin{bmatrix} R & T \end{bmatrix}
\begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}
\tag{1}
$$

where $f_x$ and $f_y$ are the focal lengths of the camera (in pixels) along the $u$ and $v$ directions of the image; $u_0$ and $v_0$ are the coordinates of the principal point (the optical center of the image); $[u, v, 1]^T$ is the homogeneous coordinate of the measurement point in the image (pixel) coordinate system; $[X_c, Y_c, Z_c, 1]^T$ is the homogeneous coordinate of the measurement point in the camera coordinate system; $K$ is the camera's intrinsic matrix (the left $3 \times 3$ block of the first matrix); and $R$ and $T$ denote the rotation and translation matrices between the camera coordinate system and the world coordinate system, respectively, with the origin of the world coordinate system being the left camera's optical center.

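Based on the principle of triangulation [33], the expression for depth $Z$ can be deduced as the following:

$$
Z = \frac{f\,b}{u_l - u_r} = \frac{f\,b}{d}
\tag{2}
$$

where $f$ is the focal length (in pixels) and $b$ is the baseline length; $u_l$ and $u_r$ are the coordinates, in the $u$ direction, of the intersections of the projection rays of the measurement point $P$ with the left and right imaging planes; and $d = u_l - u_r$ is the difference in u-direction coordinates of the corresponding (homonymous) points, also known as parallax.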
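The parallax-to-depth relation can be illustrated with a short sketch. It assumes a rectified stereo pair, the standard relation $Z = f b / d$, and hypothetical values for the focal length and principal point; the function names are illustrative and are not taken from the study.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth Z = f * b / d for a rectified stereo pair (f in pixels, b in metres)."""
    if disparity_px <= 0:
        raise ValueError("parallax must be positive to give a finite depth")
    return focal_px * baseline_m / disparity_px


def point_from_pixel(u, v, disparity_px, focal_px, baseline_m, u0, v0):
    """Back-project pixel (u, v) into left-camera coordinates (X, Y, Z)."""
    z = depth_from_disparity(focal_px, baseline_m, disparity_px)
    x = (u - u0) * z / focal_px
    y = (v - v0) * z / focal_px
    return x, y, z


# Hypothetical numbers: f = 1000 px, b = 0.12 m, principal point (640, 360).
print(point_from_pixel(740, 360, 40.0, 1000.0, 0.12, 640.0, 360.0))
```

Applying `point_from_pixel` to every valid pixel of a parallax map is one way to obtain the kind of 3D point cloud used in the later plane-fitting step.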

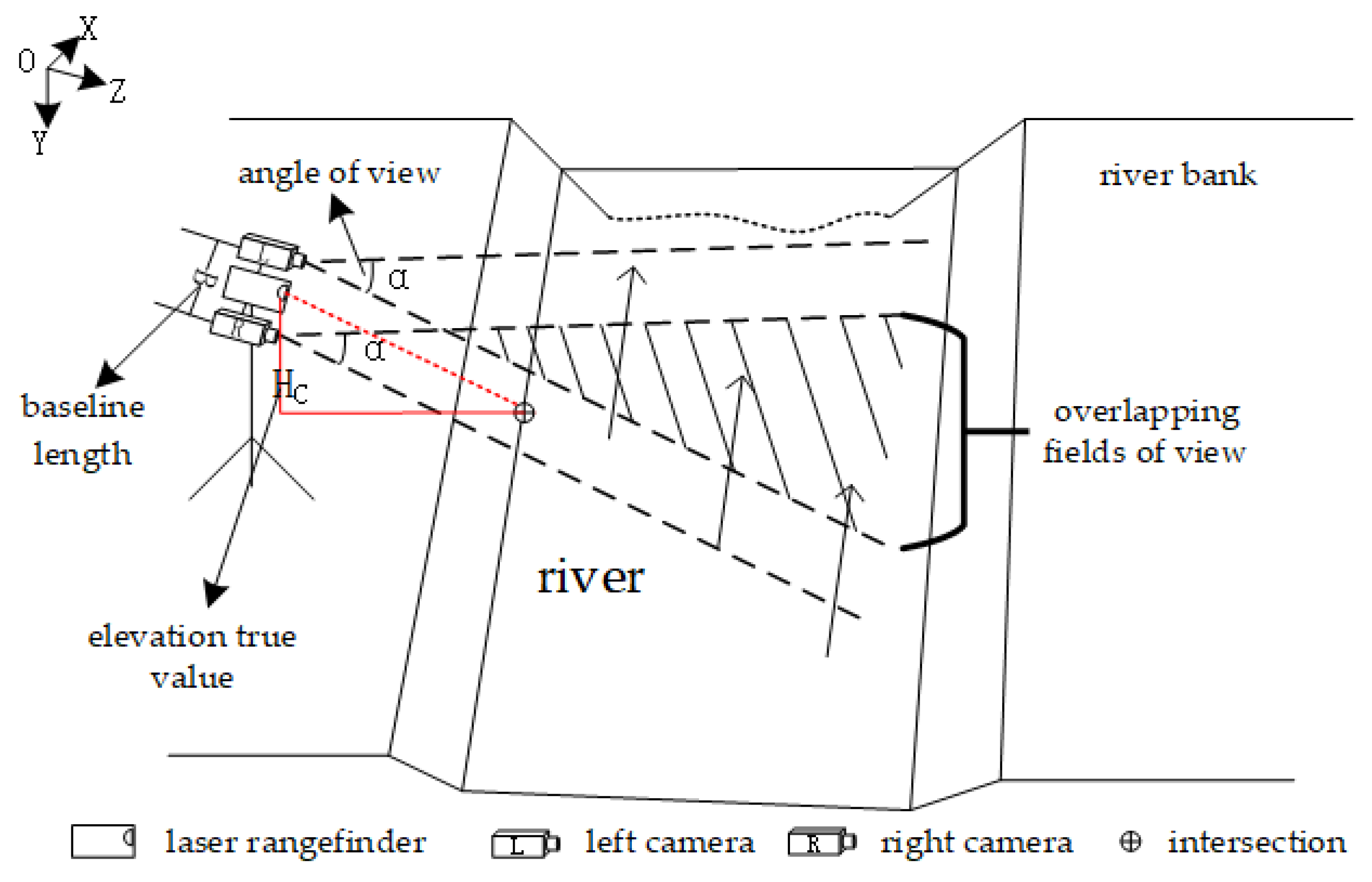

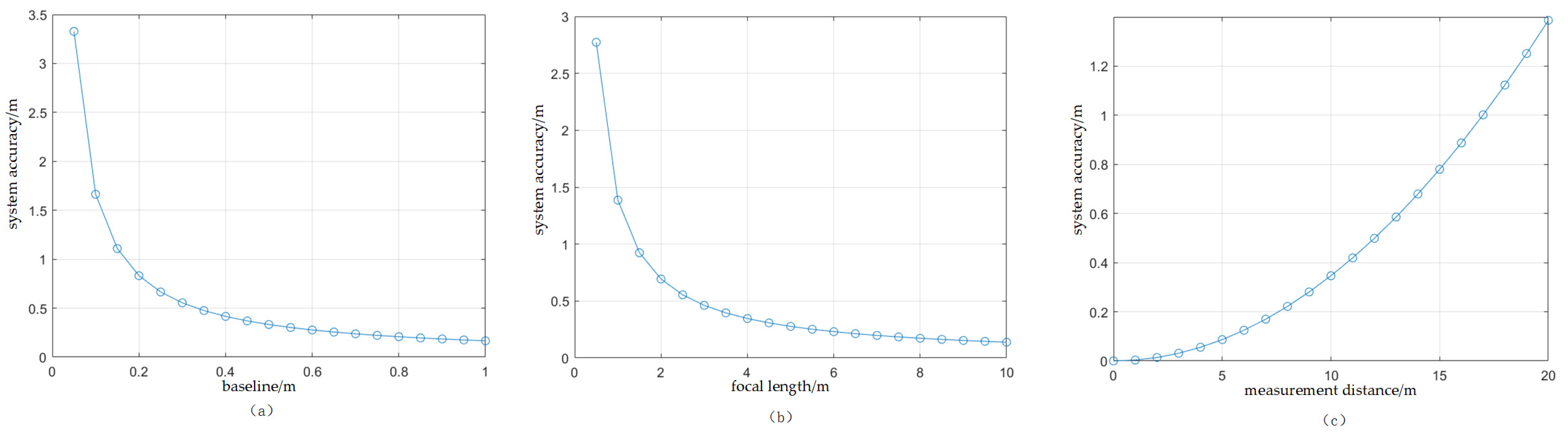

2.2. Measurement Accuracy Analysis of Binocular System

This study examines the accuracy of binocular stereo vision in real-world measurement scenarios. To ensure that the common field of view of the binocular camera covers at least two thirds of the field of view of either the left or right camera during measurement, I analyzed how the field-of-view angle and baseline length of the binocular camera affect the depth measurement distance, as per Equation (2). Equation (3) is derived from Figure 2:

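For the depth $Z = f b / d$, the parallax $d$ is the independent variable, and a partial derivative is applied with respect to $d$:

$$
\frac{\partial Z}{\partial d} = -\frac{f\,b}{d^{2}} = -\frac{Z^{2}}{f\,b}
\tag{4}
$$

By multiplying Equation (4) by the parallax accuracy $\Delta d$ and taking the magnitude, I derived Equation (5):

$$
\Delta Z = \frac{Z^{2}}{f\,b}\,\Delta d
\tag{5}
$$

It is evident that the focal length $f$, baseline length $b$, and measurement distance $Z$ significantly impact the system accuracy $\Delta Z$, particularly when the parallax accuracy $\Delta d$ is one pixel.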
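To make the dependence on distance concrete, the following sketch evaluates the standard stereo depth-error relation $\Delta Z = Z^2 \Delta d / (f b)$. The 0.12 m baseline is the ZED 2i value quoted in the Discussion; the focal length of 1000 px is a purely hypothetical value chosen for illustration, so the printed numbers are not results of this study.

```python
def depth_error(distance_m, focal_px, baseline_m, disparity_error_px=1.0):
    """First-order depth uncertainty dZ = Z^2 / (f * b) * dd."""
    return distance_m ** 2 / (focal_px * baseline_m) * disparity_error_px


FOCAL_PX = 1000.0   # hypothetical focal length in pixels (assumption)
BASELINE_M = 0.12   # baseline quoted for the ZED 2i in the Discussion

for distance in (1.0, 2.0, 5.0, 10.0):
    whole_pixel = depth_error(distance, FOCAL_PX, BASELINE_M, 1.0)
    tenth_pixel = depth_error(distance, FOCAL_PX, BASELINE_M, 0.1)
    print(f"Z = {distance:4.1f} m  dZ(1 px) = {whole_pixel:.4f} m  "
          f"dZ(0.1 px) = {tenth_pixel:.4f} m")
```

Because the error grows with the square of the distance, doubling the range quadruples the depth uncertainty for the same parallax accuracy, which is why sub-pixel refinement and baseline choice matter at longer ranges.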

2.3. Parallax Map Acquisition Methods

In this study, I employ an iterative optimization-based SGM stereo matching algorithm, considering that the matching cost at a single pixel is often influenced by image noise, uneven illumination, and other factors. Initially, I use the SAD and Census algorithms to compute the initial matching cost for each pixel in the water surface image, leveraging neighborhood pixel information to mitigate brightness anomalies caused by water surface reflection. Then, an iterative scanline optimization algorithm optimizes the aggregation of the initial costs, and for each pixel, the parallax value corresponding to the smallest optimized cost is selected to form the initial parallax map. Finally, leveraging the parallax consistency constraints, the initial parallax values are optimized through consistency detection and sub-pixel optimization methods to generate the final parallax map.

2.3.1. Cost Calculation

The fundamental principle of the SAD (sum of absolute differences) algorithm involves using a fixed window size. This window moves horizontally from left to right across the image, and the absolute differences of the gray values between corresponding pixels in the left and right images are summed within the window. The SAD cost calculation function is expressed as the following:

$$
C_{SAD}(x, y, d) = \sum_{(i, j) \in W} \left| I_L(x + i,\, y + j) - I_R(x + i - d,\, y + j) \right|
\tag{6}
$$

where $I_L$ and $I_R$ are the grayscale values of the left and right views; $d$ is any value between the maximum parallax $d_{max}$ and the minimum parallax $d_{min}$; and $W$ is the computational window centered on $(x, y)$, with $m$ and $n$ being its length and width.

The Census transform generates a bit string by comparing the gray value of the center pixel with those of the surrounding pixels, and uses the comparison result in the following formula:

$$
C_s(p) = \bigotimes_{q \in N_p} \xi\big(I(p), I(q)\big), \qquad
\xi\big(I(p), I(q)\big) =
\begin{cases}
1, & I(q) < I(p) \\
0, & \text{otherwise}
\end{cases}
\tag{7}
$$

where $I(p)$, $I(q)$ are the gray values at pixel points $p$, $q$; $\bigotimes$ denotes a bitwise concatenation; $N_p$ denotes the neighborhood of the pixel $p$; and $C_s(p)$ is the binary code obtained by comparing the gray values at any point $p$ of the left view. Based on the above equation, a bit string is obtained, and the Hamming distance between the bit strings of corresponding pixels in the left and right views is calculated to obtain the matching cost. The Census cost calculation function is thus derived:

$$
C_{Census}(p, d) = \mathrm{Hamming}\big(C_{sL}(p),\, C_{sR}(p - d)\big)
\tag{8}
$$

where $C_{sL}(p)$ is the bit string corresponding to the pixel point in the left view; $C_{sR}(p - d)$ is the bit string corresponding to the pixel point in the right view; and $\mathrm{Hamming}(\cdot)$ is the Hamming distance.

The SAD and Census costs are normalized and combined to obtain the initial matching cost, as shown in Equation (9):
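The two cost terms can be sketched in plain Python on a small synthetic image pair. The SAD and Census functions follow the descriptions above; the way the two are normalized and combined here, $\rho(c, \lambda) = 1 - e^{-c/\lambda}$ summed over both terms as in AD-Census-style methods, is an assumption made for illustration, as are the function names and the $\lambda$ values.

```python
import math


def sad_cost(left, right, x, y, d, half=1):
    """Sum of absolute gray-level differences over a (2*half+1)^2 window."""
    total = 0
    for j in range(-half, half + 1):
        for i in range(-half, half + 1):
            total += abs(left[y + j][x + i] - right[y + j][x + i - d])
    return total


def census_bits(img, x, y, half=1):
    """Bit string with a 1 wherever a neighbour is darker than the centre."""
    centre = img[y][x]
    bits = 0
    for j in range(-half, half + 1):
        for i in range(-half, half + 1):
            if i == 0 and j == 0:
                continue
            bits = (bits << 1) | int(img[y + j][x + i] < centre)
    return bits


def census_cost(left, right, x, y, d, half=1):
    """Hamming distance between the left and right Census bit strings."""
    diff = census_bits(left, x, y, half) ^ census_bits(right, x - d, y, half)
    return bin(diff).count("1")


def combined_cost(left, right, x, y, d, lam_sad=10.0, lam_census=30.0):
    """ASSUMED normalisation: rho(c, lam) = 1 - exp(-c / lam), then summed."""
    rho_sad = 1.0 - math.exp(-sad_cost(left, right, x, y, d) / lam_sad)
    rho_census = 1.0 - math.exp(-census_cost(left, right, x, y, d) / lam_census)
    return rho_sad + rho_census


# Synthetic pair: the right view is the left view shifted by 2 pixels.
left = [[(7 * x * x + 13 * y) % 50 for x in range(10)] for y in range(5)]
right = [[row[min(x + 2, 9)] for x in range(10)] for row in left]
best = min(range(4), key=lambda d: combined_cost(left, right, 5, 2, d))
print(best)  # prints 2, the shift used to build the pair
```

The exponential mapping keeps each term in the range [0, 1), so neither the gray-level term nor the bit-string term dominates the sum.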

2.3.2. Cost Aggregation

After the initial matching cost is obtained, it is propagated from high-SNR regions to low-SNR regions by multipath cost aggregation. Based on the smoothness and continuity of the water surface, the cost in weakly textured regions is extrapolated from the cost in richly textured regions, yielding the optimized costs of the left and right water surface images and improving the matching accuracy in weakly textured regions. In this paper, I use 8-path cost aggregation with multiple iterations, and the calculation formula is as follows:

$$
L_r(p, d) = C(p, d) + \min\Big\{ L_r(p - r, d),\; L_r(p - r, d \pm 1) + P_1,\; \min_{i} L_r(p - r, i) + P_2 \Big\} - \min_{k} L_r(p - r, k)
\tag{10}
$$

where $C(p, d)$ is the matching cost of the pixel $p$ at parallax $d$, and $P_1$ and $P_2$ are penalty coefficients. The second term is a smoothing term: $L_r(p - r, d)$ refers to the aggregated cost of the previous pixel along the aggregation direction $r$ at the same parallax; $L_r(p - r, d \pm 1)$ refers to the aggregated costs of the previous pixel whose parallax differs by one pixel; and $\min_{i} L_r(p - r, i)$ refers to the minimum of all costs where the parallax differs by more than one pixel. The third term, $\min_{k} L_r(p - r, k)$, ensures that the new path cost does not exceed a certain value. Ultimately, the aggregated cost $S(p, d)$ of pixel $p$ at parallax $d$ is as follows:

$$
S(p, d) = \sum_{r} L_r(p, d)
\tag{11}
$$

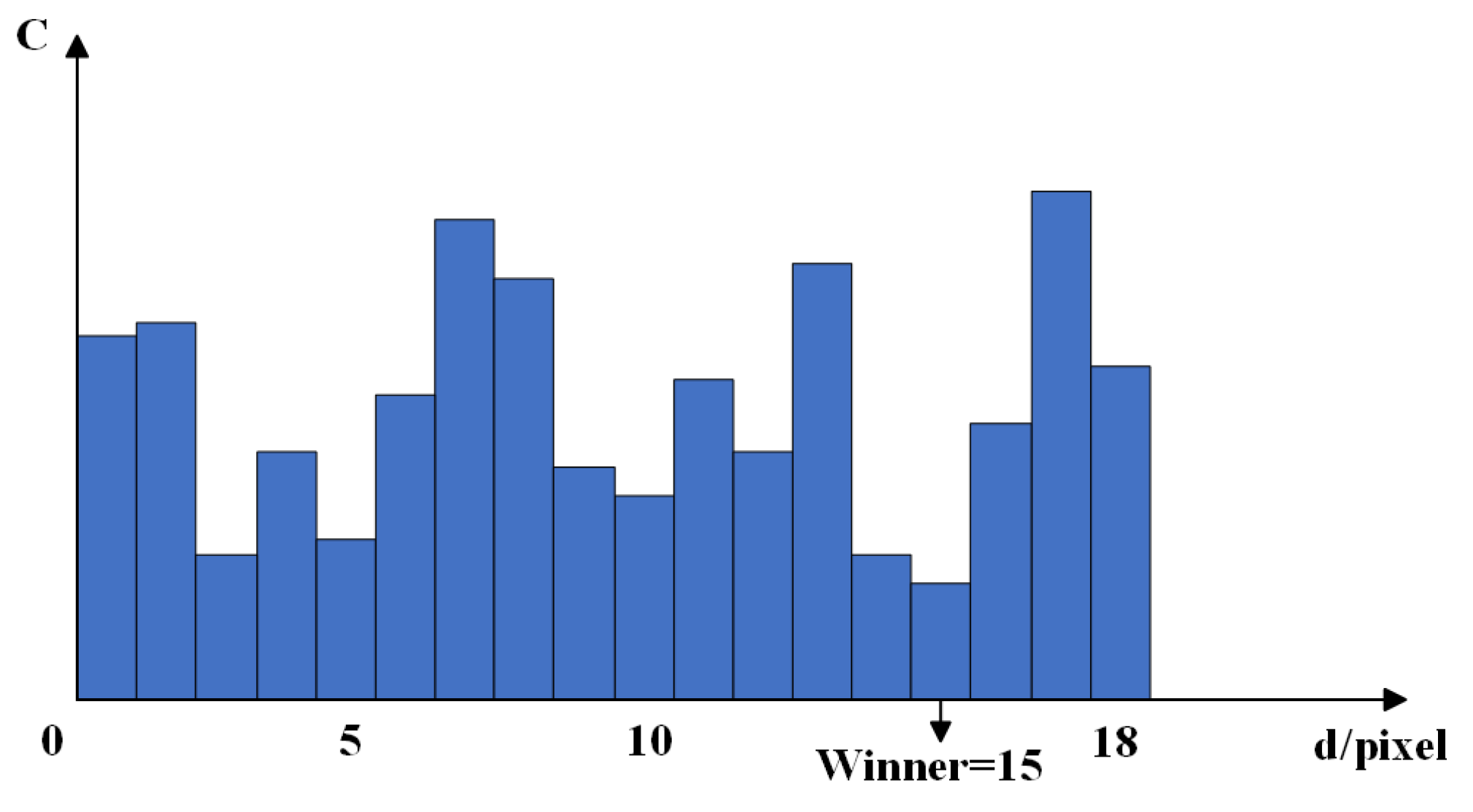
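A single aggregation path can be sketched as follows, using the standard semi-global matching recurrence: the path cost at each pixel is its own matching cost plus the cheapest transition from the previous pixel (same parallax, a one-pixel change penalized by `p1`, or a larger jump penalized by `p2`), minus the previous minimum to keep values bounded. The penalty values and function names are hypothetical, and only two of the eight directions are shown.

```python
def aggregate_path(costs, p1=1.0, p2=8.0):
    """Aggregate along one path; costs[i][d] is the cost of the i-th pixel
    on the path at parallax d. Returns path costs of the same shape."""
    n_disp = len(costs[0])
    prev = list(costs[0])           # the first pixel has no predecessor
    out = [prev]
    for cost in costs[1:]:
        prev_min = min(prev)
        cur = []
        for d in range(n_disp):
            candidates = [prev[d], prev_min + p2]
            if d > 0:
                candidates.append(prev[d - 1] + p1)
            if d < n_disp - 1:
                candidates.append(prev[d + 1] + p1)
            cur.append(cost[d] + min(candidates) - prev_min)
        out.append(cur)
        prev = cur
    return out


def aggregate_scanline(costs, p1=1.0, p2=8.0):
    """Sum of the left-to-right and right-to-left paths on one image row."""
    forward = aggregate_path(costs, p1, p2)
    backward = aggregate_path(costs[::-1], p1, p2)[::-1]
    return [[f + b for f, b in zip(f_row, b_row)]
            for f_row, b_row in zip(forward, backward)]


# The middle pixel is ambiguous on its own (flat cost), as in weak texture.
row_costs = [[0, 5, 5], [3, 3, 3], [0, 5, 5]]
print(aggregate_scanline(row_costs)[1])
```

In this toy row, the textureless middle pixel inherits a preference for parallax 0 from its well-textured neighbours, which is the propagation effect described above for weakly textured water regions.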

2.3.3. Parallax Calculation and Optimization

Parallax calculations for the aggregated costs are performed using the winner-take-all (WTA) algorithm. That is, for each pixel, the parallax value corresponding to the smallest aggregated cost is selected as the initial parallax. The algorithm is schematically illustrated in Figure 3.

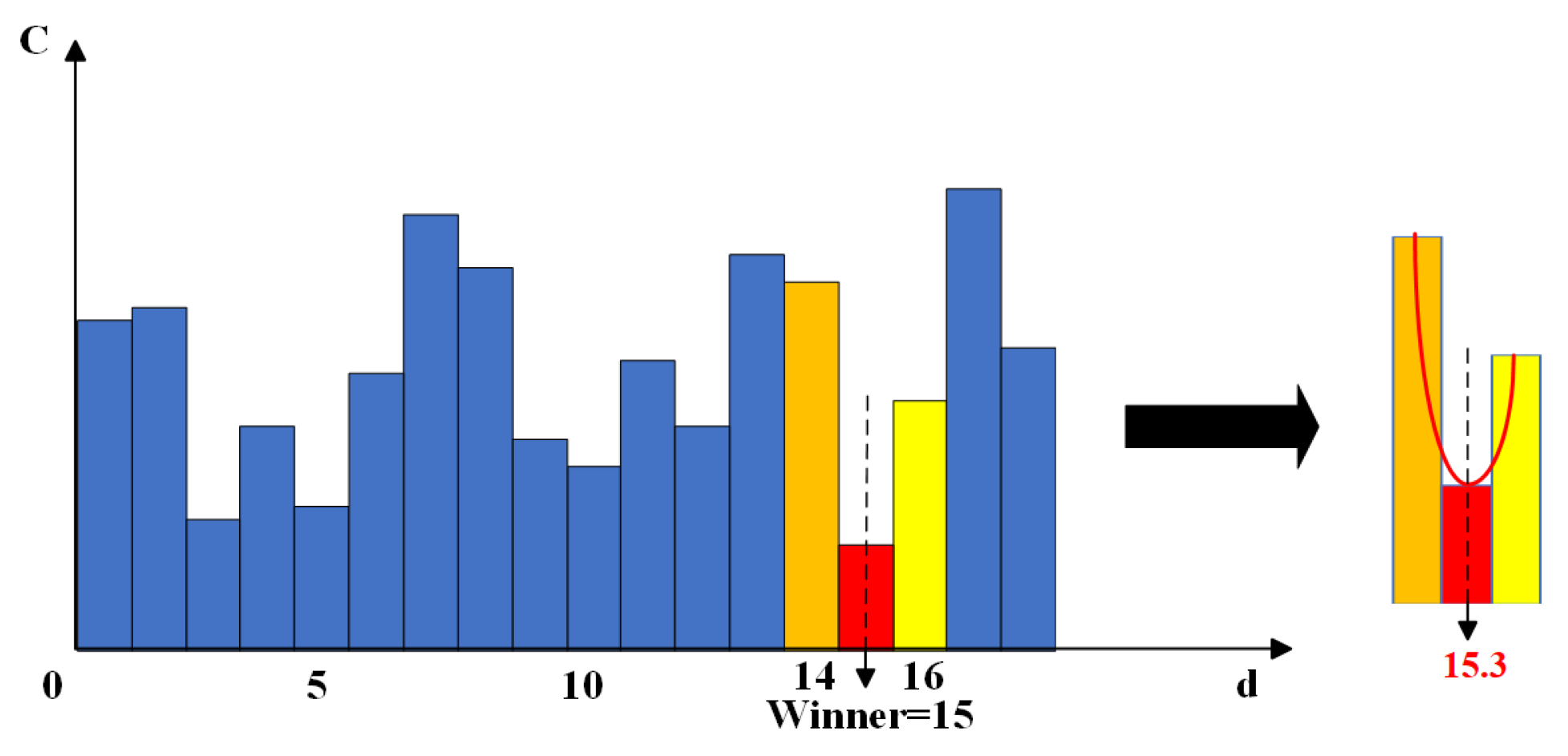

To mitigate the high false matching rate attributed to brightness disparities between the left and right images, I applied the parallax consistency constraint, conducted a consistency test on the initial parallax map, and eliminated invalid pixel points. Following the consistency check, the sub-pixel optimization technique was employed to enhance parallax accuracy. This technique refines the pixel-level result by fitting a parabola through the cost at the optimal parallax value and the costs at its adjacent left and right parallax values, and then taking the parallax at the minimum of the parabola in place of the previously calculated integer optimum to achieve sub-pixel level parallax optimization. The calculation function is presented in Equation (12). The principle is illustrated in Figure 4.

$$
d_{sub} = d + \frac{c_1 - c_2}{2\,(c_1 + c_2 - 2 c_0)}
\tag{12}
$$

where $d_{sub}$ is the optimized parallax value; $d$ is the parallax value of the pixel $p$; $c_0$ is the aggregation cost of $p$ at parallax $d$; and $c_1$ and $c_2$ are the aggregation costs of $p$ at the parallaxes of $d - 1$ and $d + 1$, respectively.
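The winner-take-all selection and the parabola-based sub-pixel refinement can be sketched together for a single pixel. The refinement uses the standard three-point parabola vertex, $d + (c_{-} - c_{+}) / \big(2 (c_{-} - 2 c_0 + c_{+})\big)$, where $c_{-}$, $c_0$, and $c_{+}$ are the costs at $d - 1$, $d$, and $d + 1$; the function names and the synthetic cost curve are illustrative assumptions.

```python
def winner_take_all(costs):
    """Index of the smallest aggregated cost for one pixel."""
    return min(range(len(costs)), key=costs.__getitem__)


def subpixel_refine(costs, d):
    """Vertex of the parabola through the costs at d - 1, d, and d + 1."""
    if d == 0 or d == len(costs) - 1:
        return float(d)             # no neighbour on one side: keep integer value
    c_minus, c_zero, c_plus = costs[d - 1], costs[d], costs[d + 1]
    curvature = c_minus - 2 * c_zero + c_plus
    if curvature <= 0:
        return float(d)             # flat or degenerate cost curve
    return d + (c_minus - c_plus) / (2 * curvature)


# Synthetic cost curve whose true minimum lies at a parallax of 2.3 pixels.
pixel_costs = [(candidate - 2.3) ** 2 for candidate in range(5)]
d_int = winner_take_all(pixel_costs)
d_sub = subpixel_refine(pixel_costs, d_int)
print(d_int, round(d_sub, 3))
```

A left-right consistency check would normally run between these two steps, discarding pixels whose left-view and right-view parallax values disagree by more than a chosen tolerance.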

2.4. Methods for Obtaining Water Level Values

Utilizing the dense parallax map obtained, I initially acquired the 3D point cloud coordinates for each pixel in the water surface image using Equation (2) to reconstruct the water surface in three dimensions. Subsequently, employing the RANSAC algorithm, I processed the 3D point cloud to fit a plane and generated the equation of the horizontal plane. Lastly, I calculated the distance from the optical center of the left camera to the horizontal plane to determine the elevation of the water surface relative to the binocular camera.

The fundamental principle of the RANSAC algorithm for fitting a horizontal plane model is as follows:

- (1) Three non-collinear points are randomly selected from the water surface 3D point cloud dataset to determine the preliminary plane model $Ax + By + Cz + D = 0$, where $A$, $B$, $C$, and $D$ are the coefficients of the model;

- (2) The distance $\delta_i$ of every other data point $P_i(x_i, y_i, z_i)$ to this plane is calculated according to Equation (13), and a threshold $\tau$ is set. If $\delta_i \le \tau$, then $P_i$ is an inlier, and the number of inliers is denoted as $N$:

$$
\delta_i = \frac{\left| A x_i + B y_i + C z_i + D \right|}{\sqrt{A^2 + B^2 + C^2}}
\tag{13}
$$

$$
N = \sum_{i=1}^{M} \mathbb{1}\left( \delta_i \le \tau \right)
\tag{14}
$$

where $\mathbb{1}(\cdot)$ is an indicator function that takes the value 1 when the condition $\delta_i \le \tau$ holds and 0 otherwise, and $M$ is the total number of data points in the dataset $P$;

- (3) According to Equation (14), if the number of inliers $N$ of the current model exceeds the previous maximum number of inliers $N_{max}$, then the optimal model parameters are updated to $(A, B, C, D)$;

- (4) The above steps are repeated until a preset number of iterations (1000) is reached, and the model with the highest number of inliers is selected as the final result.

Because the origin of the coordinate system is the optical center of the left camera, the camera elevation value $H$ above the water surface is calculated as follows:

$$
H = \frac{\left| D \right|}{\sqrt{A^2 + B^2 + C^2}}
\tag{15}
$$
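The four RANSAC steps and the final elevation calculation can be sketched with the standard library only. The sketch assumes points expressed in left-camera coordinates with the optical center at the origin, so the elevation is the distance from the origin to the plane $Ax + By + Cz + D = 0$, that is, $|D| / \sqrt{A^2 + B^2 + C^2}$. The 1000 iterations follow the text; the threshold, the fixed seed, and all function names are assumptions for illustration.

```python
import math
import random


def plane_from_points(p1, p2, p3):
    """Plane (A, B, C, D) with unit normal through three points, or None if collinear."""
    ux, uy, uz = (p2[k] - p1[k] for k in range(3))
    vx, vy, vz = (p3[k] - p1[k] for k in range(3))
    a, b, c = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = math.sqrt(a * a + b * b + c * c)
    if norm < 1e-12:
        return None
    a, b, c = a / norm, b / norm, c / norm
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def point_plane_distance(plane, point):
    """Perpendicular distance from a point to the plane."""
    a, b, c, d = plane
    return abs(a * point[0] + b * point[1] + c * point[2] + d) / math.sqrt(a * a + b * b + c * c)


def ransac_plane(points, threshold=0.01, iterations=1000, seed=0):
    """Return the plane with the most inliers and its inlier count."""
    rng = random.Random(seed)       # fixed seed only to make the sketch repeatable
    best_plane, best_count = None, -1
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue                # collinear sample, draw again
        count = sum(1 for p in points if point_plane_distance(plane, p) <= threshold)
        if count > best_count:
            best_plane, best_count = plane, count
    return best_plane, best_count


def camera_elevation(plane):
    """Distance from the origin (left optical centre) to the plane."""
    a, b, c, d = plane
    return abs(d) / math.sqrt(a * a + b * b + c * c)
```

A least-squares refit over the final inlier set is a common follow-up step, although the text above does not state whether the study applies one.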

4. Discussion

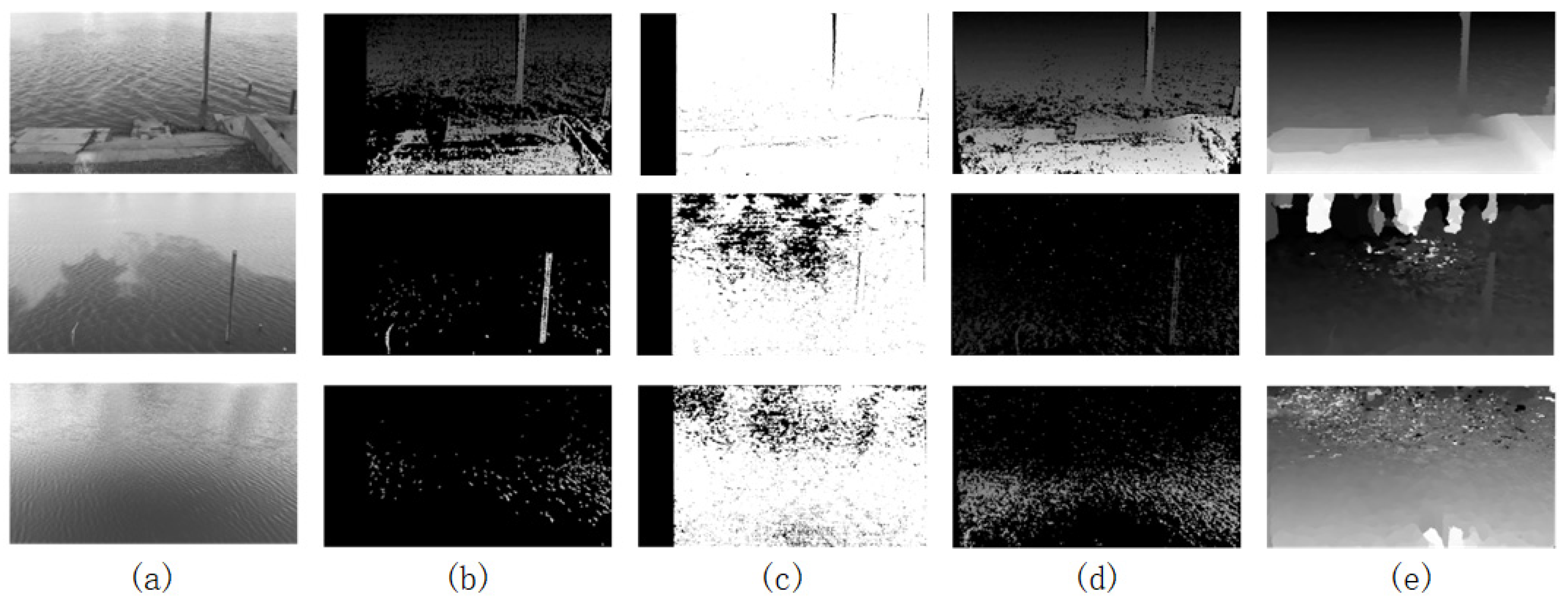

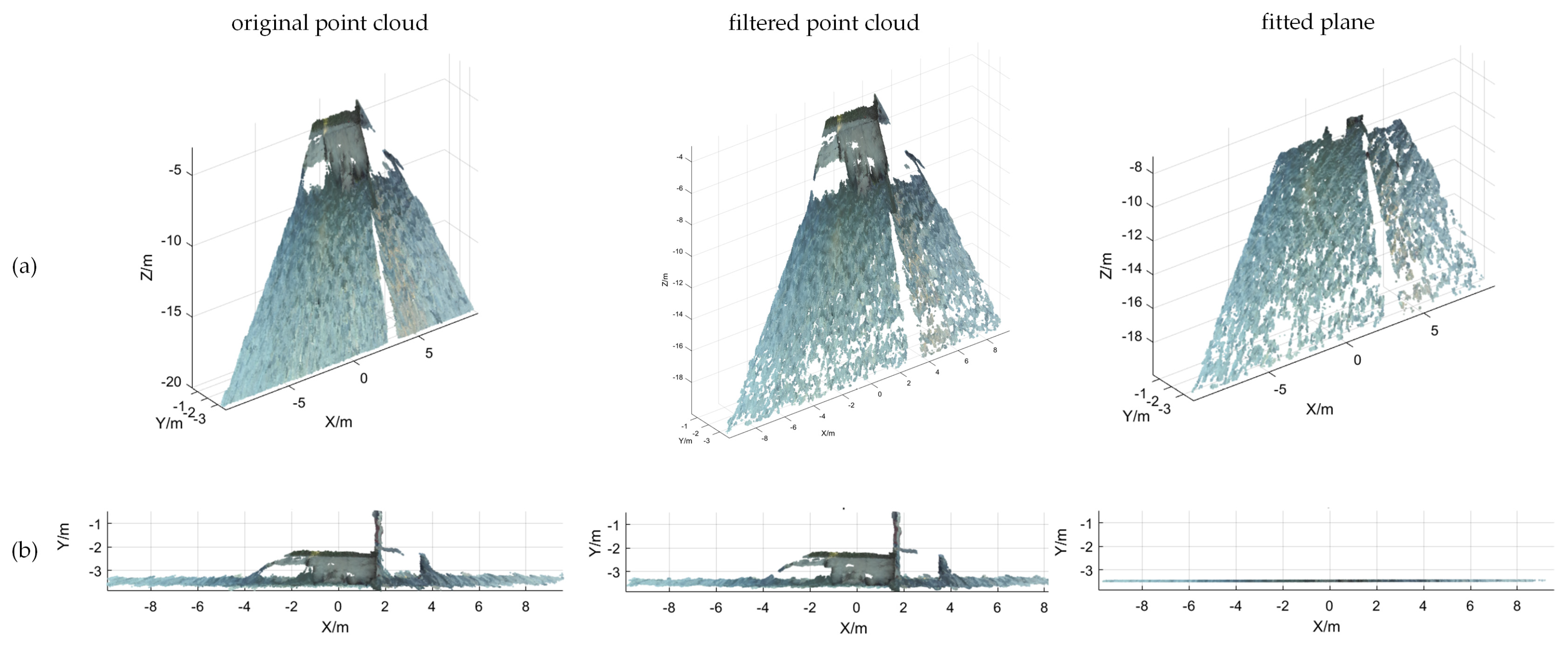

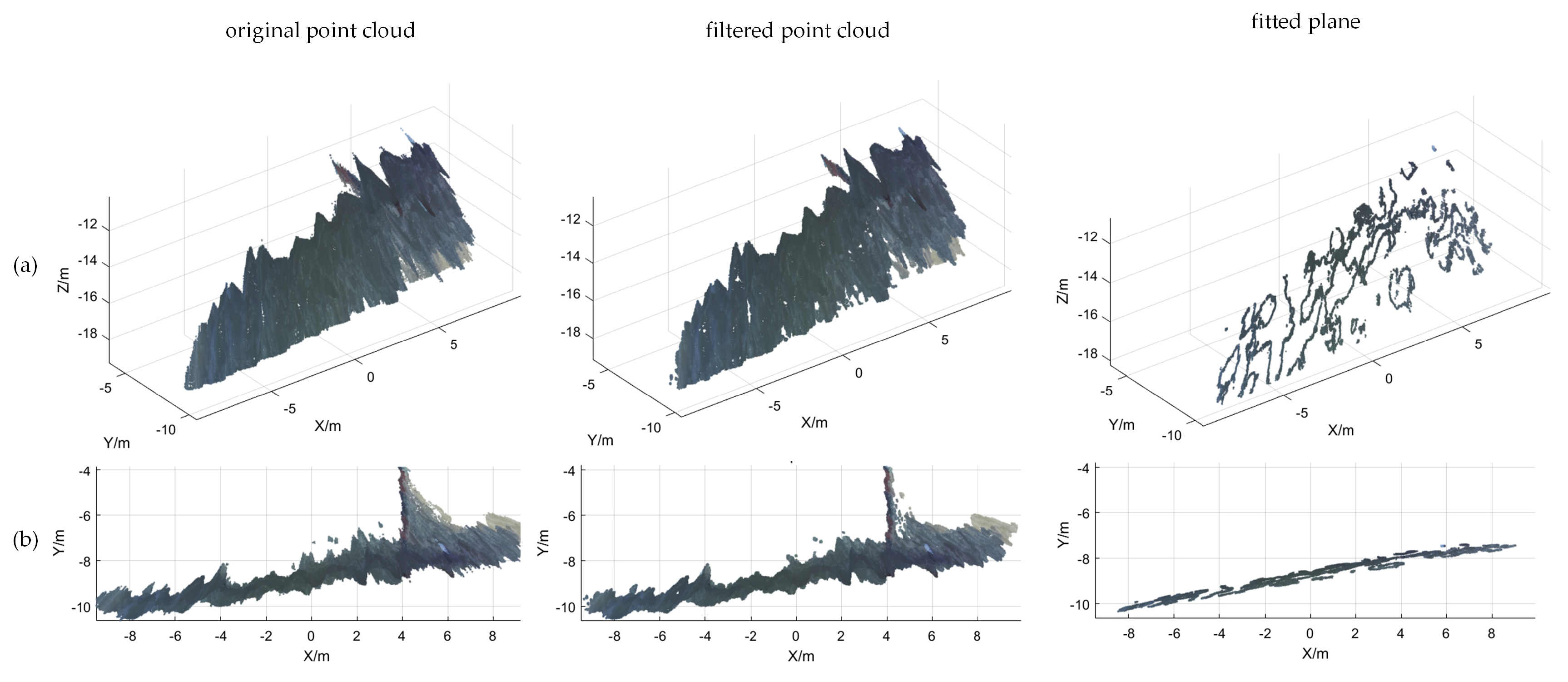

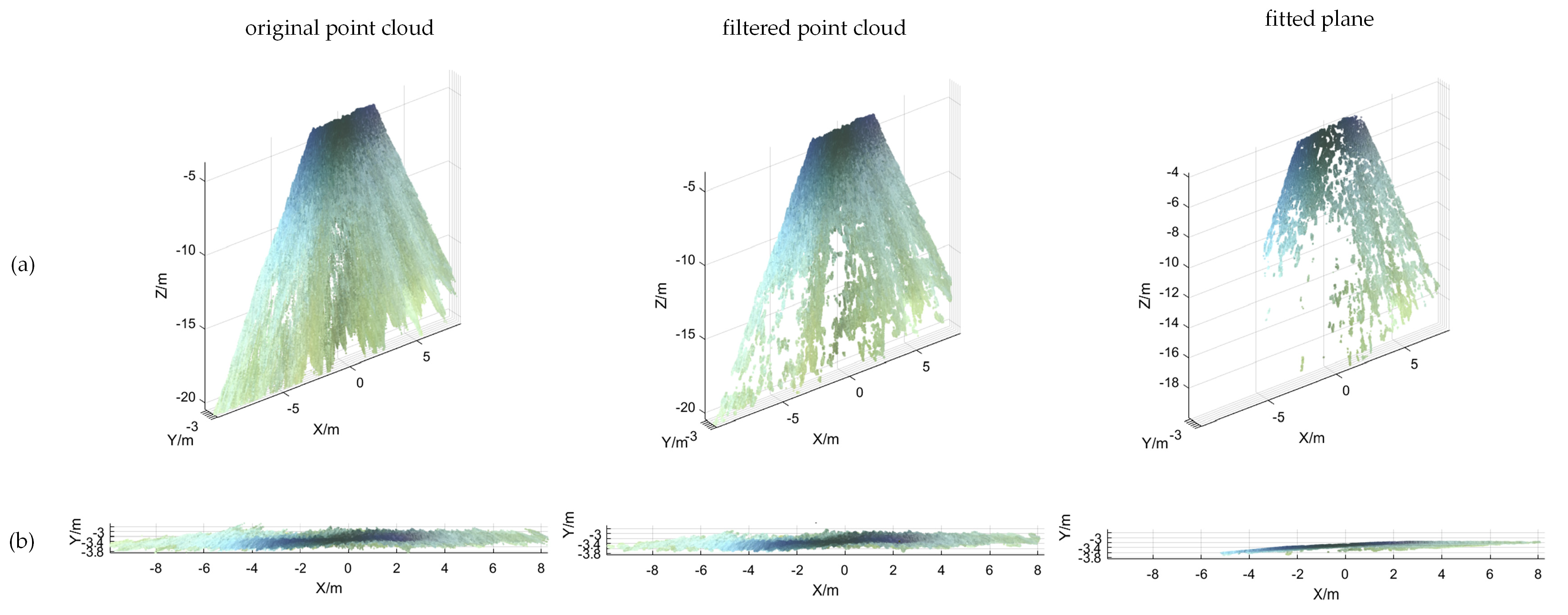

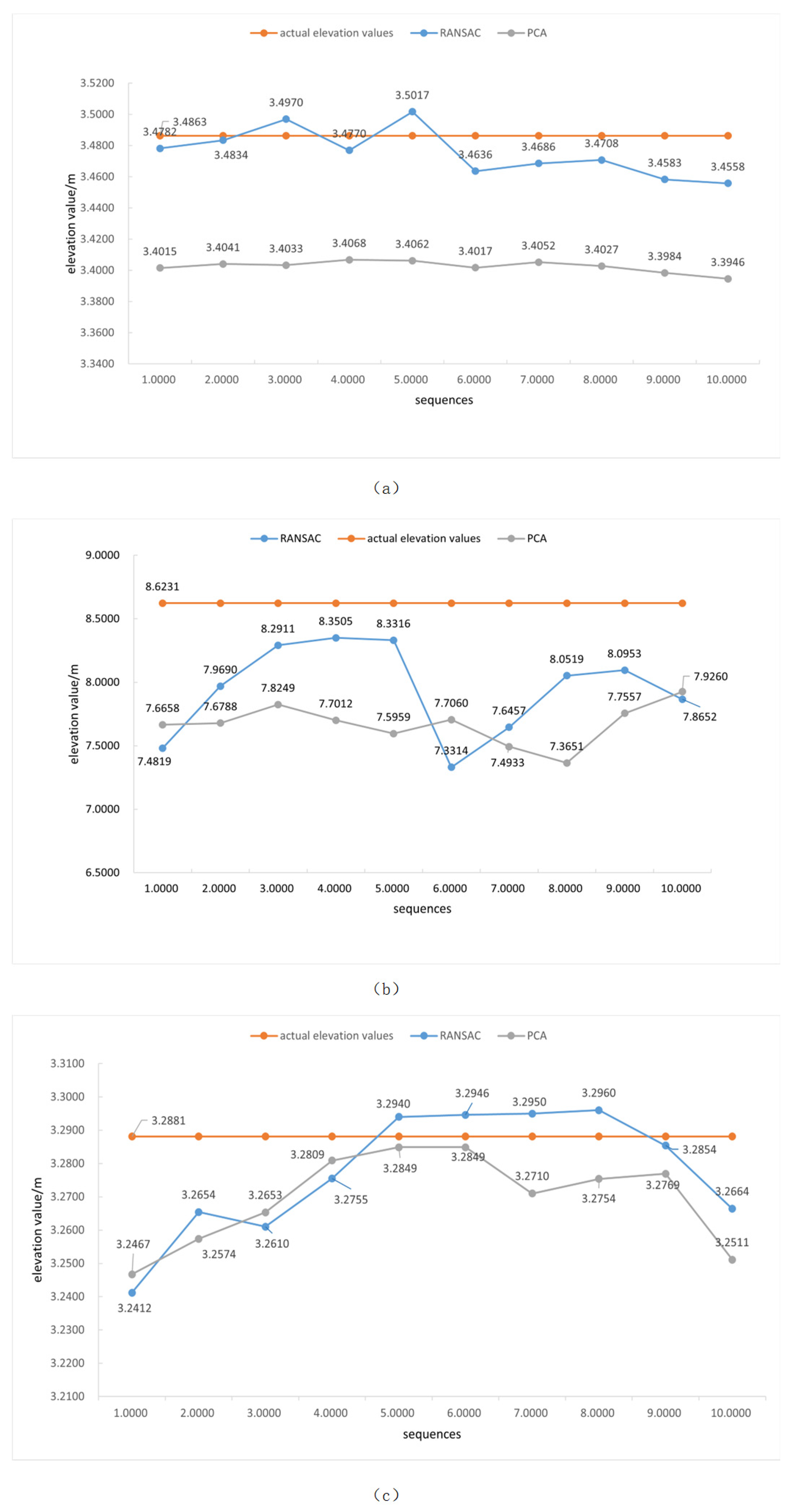

From Figure 8, it is evident that the BM and SGBM algorithms obtain less effective information in environments affected by water surface shadows; the generated parallax maps are sparse and reflect only the contour of the staff gauge. Their parallax maps provide richer depth information in strongly textured regions, such as riverbanks, but still contain many holes in the water surface area and fail to reflect the structural characteristics of the water surface. The water surface parallax map generated by the traditional SGM algorithm recovers clearer water surface ripples than the previous two algorithms in regions with obvious water surface texture. However, it still struggles to handle large areas with weak texture effectively. The algorithm proposed herein acquires more effective information.

As shown in Figure 8e, in the first scene, the regions around the staff gauge and near the shore show clearer water surface ripples than with the other three methods, which lack obvious depth information there. In the second scenario, the algorithm presented herein obtains an extremely dense parallax map, with obvious water surface ripples and rich depth information for the riverbank, demonstrating its effectiveness. In the third scenario, the algorithm obtains more water surface information than the other three methods, but the parallax map still exhibits many holes, with no depth information for the far shore or the highly reflective water surface. These gains are achieved through the enhanced cost calculation and the iterative optimization of cost aggregation, which yield relatively clear water surface structural features in complex water surface scenes while still producing more accurate parallax values in large, texture-free areas. Moreover, in terms of execution time, the algorithm presented herein is slower only than BM among the traditional methods compared, indicating good real-time performance in all three scenarios.

The maximum effective distance of the ZED 2i binocular camera used in this study for water level measurement is primarily constrained by the camera’s parallax range and measurement accuracy. In my experiments, the measurement error of this method was found to be less than 2 cm within a 5 m elevation range. However, as the distance increases, the parallax value decreases, leading to reduced measurement accuracy. In practice, it is recommended to use this method within 5 m to ensure high measurement accuracy. The baseline distance of the stereo camera (i.e., the distance between the two camera lenses) has a significant effect on measurement accuracy. Longer baseline distances enhance parallax resolution, thereby improving measurement accuracy, but they also increase the risk of occlusion. In this study, the ZED 2i camera features a baseline distance of 12 cm, which has been experimentally verified to provide a good balance between accuracy and occlusion within the current measurement range. If longer distances need to be measured or higher accuracy is required, a binocular camera with a larger baseline distance should be considered, while the matching algorithm must be optimized simultaneously to minimize errors caused by occlusions.

Highly reflective or uniform water surfaces, such as calm water, pose a challenge for binocular stereo vision measurements. As these surfaces lack sufficient textural features, stereo matching becomes significantly more challenging. Although grayscale and gradient information were combined to improve matching accuracy in this study, and the images were captured under uniform lighting conditions whenever possible, significant challenges remain in extreme conditions, such as very calm water.

5. Conclusions

A shore-based water level measurement system was constructed using the principles of binocular stereo vision and point cloud processing. First, the binocular camera was selected. It was used to synchronously acquire the left and right eye water surface images. Then, the iterative SGM stereo matching algorithm was applied to obtain the water surface parallax map. Finally, the water level value was determined based on the reconstructed water surface three-dimensional data. The feasibility of the method for water level measurement was verified by analyzing the measurement results.

With the advancement of smart water resources and applications of binocular stereo vision, the following directions can be considered for future research to further enhance measurement accuracy: first, optimizing the stereo matching algorithm to improve matching accuracy under varying texture conditions; next, adjusting camera parameters, such as baseline distance and focal length, to accommodate measurements at different distances; additionally, exploring the use of environmental features, such as rippling water, to enhance the three-dimensional matching effect; and finally, investigating how to minimize the effects of viewing angle and lighting conditions on stereo matching accuracy during remote measurement. Through the combined application of these methods, it is anticipated that more accurate 3D measurement techniques will be developed in the future.