Abstract

Brain Tumor Segmentation (BraTS) challenges have significantly advanced research in brain tumor segmentation and related medical imaging tasks. This paper provides a comprehensive review of the BraTS datasets from 2012 to 2024, examining their evolution, challenges, and contributions to MRI-based brain tumor segmentation. Over the years, the datasets have grown in size, complexity, and scope, incorporating refined pre-processing and annotation protocols. By synthesizing insights from over a decade of BraTS challenges, this review elucidates the progression of dataset curation, highlights its impact on state-of-the-art segmentation approaches, and identifies persisting limitations and future directions. Crucially, it provides researchers, clinicians, and industry stakeholders with a single, in-depth resource on the evolution and practical utility of BraTS datasets, demonstrating year-by-year improvements in the field and discussing their potential for enabling robust, clinically relevant segmentation methods that can further advance precision medicine. Additionally, an overview of the upcoming BraTS 2025 Challenge, currently in planning, is presented, highlighting its expanded focus on additional clinical needs.

1. Introduction

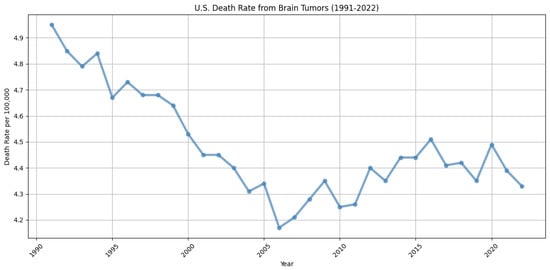

Brain tumors are among the most lethal forms of cancer. Notably, glioblastoma and diffuse astrocytic glioma with molecular features of glioblastoma (WHO Grade 4 astrocytoma) stand out as the most prevalent and aggressive malignant primary tumors of the central nervous system in adults. These tumors show profound intrinsic heterogeneity in appearance, shape, and histology, and patients typically have a median survival of about 15 months. The diagnosis and treatment of brain tumors are complex and challenging. These tumors are notably resistant to standard therapies, partly because of the difficulty of delivering drugs to the brain and the extensive heterogeneity of their radiographic, morphological, and molecular profiles. Decades of intensive research have resulted in a 12.5% reduction in brain and other nervous system cancer mortality rates in the U.S. from 1991 to 2022 [1]; nevertheless, further advancements in the diagnosis, characterization, and treatment of these conditions remain critically needed. Figure 1 illustrates the trend in death rates over the past three decades: a marked decline during the 1990s followed by relative stability in more recent years. This plateau underscores the need for continued research and innovation to further improve the diagnosis and treatment of brain tumors.

Figure 1.

Trends in brain tumor and other nervous system cancer mortality rates in the U.S. from 1991 to 2022, derived from data in [1]. The figure shows a generally declining trend throughout the 1990s, followed by fluctuations and a near plateau in more recent years, indicating some progress, yet highlighting the continued need for innovations in diagnosis and treatment to further reduce mortality.

Brain tumor segmentation plays a crucial role in medical imaging, serving as a cornerstone for accurate diagnosis, effective treatment planning, and continuous monitoring of patients with brain tumors. Magnetic resonance imaging (MRI), as a non-invasive and highly detailed imaging modality, has become the gold standard for visualizing brain structures and pathologies. Its ability to provide high-resolution, multi-contrast images of soft tissues makes it uniquely suited for identifying and delineating abnormalities, including brain tumors. However, the complexity and heterogeneity of the appearance of brain tumors, coupled with the intricate structure of the human brain, present significant challenges for manual segmentation. In addition, manually segmenting brain tumors from magnetic resonance (MR) images is an extremely time-consuming process. Moreover, human subjectivity and artifacts introduced during the imaging process can further complicate the interpretation. As a result, manual brain MRI segmentation is susceptible to both inter- and intra-observer variability.

One of the key advancements in brain tumor segmentation is the ability to delineate tumors into specific areas such as the necrotic core (NCR), enhancing tumor (ET), and peritumoral edema (ED). This fine-grained approach is critical for understanding the biological behavior of tumors, guiding surgical resections, and tailoring radiotherapy and chemotherapy protocols. The Brain Tumor Segmentation (BraTS) challenges have played a crucial role in standardizing the segmentation of these sub-regions. This standardization has facilitated the development and benchmarking of automated algorithms, specifically designed to tackle the unique complexities of gliomas and other brain tumors. Machine learning and deep learning methods have revolutionized the field, enabling automated and precise delineation of these sub-regions. The success of these methods hinges on the availability of high-quality datasets that reflect the complexity and diversity of real-world clinical scenarios. Datasets serve as the foundation for developing, training, and benchmarking segmentation algorithms. In the context of MRI-based brain tumor analysis, these datasets must offer rich, annotated data that capture the variability in tumor size, shape, and location, as well as differences in scanner types [2,3].

This paper provides a comprehensive review of the Brain Tumor Segmentation (BraTS) challenges and their datasets from 2012 to 2024, and offers an overview of the planned BraTS 2025 challenge. By examining the evolution of these datasets, we aim to highlight their unique features, such as expert-annotated ground truths, standardized imaging protocols, and the incorporation of diverse clinical contexts, including post-treatment scenarios and underrepresented populations. Unlike previous works that focused on either a single challenge year or a narrower scope, this review covers all publicly available BraTS datasets from the first edition to the most recent one, providing a uniquely comprehensive perspective on both dataset progression and the algorithmic milestones achieved over time. Crucially, this review examines how iterative changes in dataset size, annotation standards, and inclusion criteria are shaping the latest approaches to clinically oriented segmentation tasks, thereby guiding future research toward more robust and generalizable MRI-based segmentation methodologies. It also highlights the transformative impact of the BraTS challenges, emphasizing how the substantial evolution of these datasets has driven advancements in segmentation algorithms and contributed to applications with significant potential in clinical practice. By articulating these contributions and identifying gaps that remain to be explored, we position this review as a key resource for researchers aiming to push the boundaries of precision medicine through high-quality, large-scale MRI datasets.

2. MRI Technology: A Critical Tool for Brain Tumor Segmentation

MRI stands out among imaging modalities for the comprehensive and detailed visualization it offers for brain tumor segmentation. Its ability to provide high-contrast images of soft tissues makes it indispensable in neuro-oncology. In particular, its four primary modalities, namely T1, T2, T1 with contrast (T1C), and fluid-attenuated inversion recovery (FLAIR), are essential for effective segmentation and analysis of brain tumors. Each modality offers distinct information about the brain’s anatomy and pathology, improving the accuracy of tumor detection and characterization.

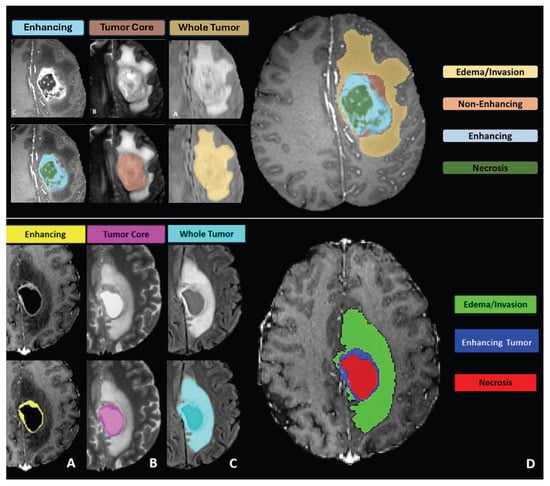

T1-weighted images help define the anatomical structure of the brain, T2-weighted images highlight fluid and edema, and T1C enhances the visualization of active tumor regions by highlighting areas where the blood–brain barrier is disrupted. FLAIR images are particularly useful in suppressing the effects of cerebrospinal fluid in the brain to better delineate the peritumoral edema and infiltrative tumor edges. As illustrated in Figure 2, these four modalities and their corresponding ground truth (GT) segmentations reveal distinct, yet complementary tumor features, providing a multi-faceted perspective. These modalities collectively provide a comprehensive view that is critical for diagnosing brain tumors, planning treatment, and monitoring progression or response to therapy.

Figure 2.

Image illustrating the four MRI modalities (T1, T2, T1C, FLAIR) alongside their corresponding ground truth (GT) segmentations. T1 emphasizes structural detail, T2 highlights fluid and edema, T1C reveals enhancing tumor regions by showing areas where the blood–brain barrier is disrupted, and FLAIR better delineates peritumoral edema by suppressing cerebrospinal fluid signals. Together, these modalities provide a comprehensive view of the tumor’s morphology and pathology, underscoring their critical role in accurate segmentation and informed treatment planning. Figure adapted from [4], licensed under the CC BY 4.0: https://creativecommons.org/licenses/by/4.0/ (accessed on 15 November 2024).

The integration of these MRI modalities into segmentation tasks enables the development of algorithms that can accurately reflect the complex reality of brain tumor pathology, thus supporting advanced diagnostic and therapeutic strategies in clinical practice. The precision and depth of MRI data directly support the goals of precision medicine by enabling tailored treatment plans based on detailed, individualized tumor characteristics [5,6].

Evolution of MRI Extraction Technologies from 2012 to Present

The evolution of MRI extraction technologies over the last decade has been marked by significant technological advancements that have expanded both the capabilities and the clinical utility of MRI systems. Since 2012, there have been transformative changes in how MRI data are acquired, processed, and utilized, driven by advancements in both hardware and software technologies.

Early MRI systems were primarily focused on structural imaging, with a limited ability to capture the dynamic processes of the human body. Recent years, however, have seen substantial improvements in both the spatial and temporal resolution of MRI scans, largely due to high-density phased array coils and parallel imaging techniques, which have drastically reduced scan times and improved image quality. These advances have been crucial for advancing functional MRI (fMRI) and diffusion tensor imaging (DTI), which provide insights into brain activity and neural connectivity.

Moreover, machine learning, particularly deep learning, has revolutionized MRI data processing. These methods enable faster and more accurate image reconstruction and have been pivotal in applications such as real-time imaging and automated anomaly detection. The use of artificial intelligence in MRI systems not only enhances the detection and characterization of pathologies but also optimizes the MRI workflow, reducing the dependency on operator expertise and improving diagnostic accuracy. The adoption of transformers in medical imaging has further enhanced these capabilities, particularly in feature extraction: their attention mechanisms weigh the relevance of every part of the input (for example, every image patch) against every other part, allowing the model to focus on the most informative regions, which can support more precise and efficient diagnosis.
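The attention mechanism described above can be made concrete with a minimal NumPy sketch of single-head scaled dot-product attention, the core operation of transformers. It is illustrative only: the 16 tokens, their dimension, and the random inputs are hypothetical stand-ins for image-patch embeddings, and real models add learned query/key/value projections, multiple heads, and positional information.

```python
import numpy as np

def scaled_dot_product_attention(q, k, v):
    """Single-head attention: each query attends to all keys.

    q: (n_queries, d), k: (n_keys, d), v: (n_keys, d_v)
    Returns the attended values (n_queries, d_v) and the attention weights.
    """
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)                   # similarity of every query to every key
    scores -= scores.max(axis=-1, keepdims=True)    # numerical stability before exp
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over keys: each row sums to 1
    return weights @ v, weights

# Hypothetical example: 16 "patch tokens" of dimension 8 (e.g. embedded MRI patches)
rng = np.random.default_rng(0)
tokens = rng.normal(size=(16, 8))
out, w = scaled_dot_product_attention(tokens, tokens, tokens)
print(out.shape, w.shape)  # (16, 8) (16, 16)
```

Each row of `w` states how strongly one token draws on every other token; this all-pairs comparison is also why the cost of attention grows quadratically with the number of tokens.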

Another notable advancement has been in the field of ultra-high-field MRI systems, which operate at field strengths of 7 Tesla or higher. These systems offer unparalleled image resolution and contrast, making them exceptionally useful for detailed anatomical studies and complex clinical research projects. The increased signal-to-noise ratio provided by these high-field systems allows for more detailed visualization of minute anatomical structures and pathological changes, which is critical for early diagnosis and treatment planning.

The period from 2012 to the present has also seen efforts to standardize MRI protocols and techniques across different centers and platforms. This standardization is crucial for multi-center studies and broad clinical applications, ensuring that MRI results are consistent and comparable regardless of where or how the data were obtained.

Recent developments hint at a future in which the Mamba architecture could play a pivotal role, potentially overtaking transformers as the state of the art thanks to its approach to handling sequential data. Mamba is a selective state space model whose computational cost grows linearly with sequence length, rather than quadratically as in transformer self-attention, which makes long sequences more tractable. This is relevant in medical imaging, where 3D volumes can be processed as long token sequences and where changes over time can be critical to accurate diagnoses. Its input-dependent (selective) state updates let the model decide, at each step, which information to retain or discard, helping it capture long-range dependencies and variations within the data. Furthermore, Mamba’s potential to integrate with existing deep learning frameworks offers a promising avenue for refining diagnostic tools and treatment planning systems, thereby pushing the boundaries of medical imaging technology with more precise and actionable insights [7].
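To illustrate what a selective state update means, the sketch below implements a simplified, purely sequential version of the selective state space recurrence that Mamba builds on. It assumes a diagonal state matrix per channel and input-dependent step size and input/output matrices obtained from hypothetical linear projections (`W_delta`, `W_B`, `W_C`); it omits the convolution, gating, hardware-aware parallel scan, and block structure of the actual architecture, so it is a teaching sketch rather than the Mamba implementation.

```python
import numpy as np

def softplus(z):
    # Numerically stable log(1 + exp(z))
    return np.log1p(np.exp(-np.abs(z))) + np.maximum(z, 0.0)

def selective_scan(x, A, W_delta, b_delta, W_B, W_C):
    """Sequential selective state space scan (simplified, non-parallel).

    x: (L, D) input sequence; A: (D, N) negative diagonal state matrix per channel;
    W_delta: (D, D), b_delta: (D,), W_B: (D, N), W_C: (D, N) hypothetical projections.
    """
    L, D = x.shape
    N = A.shape[1]
    h = np.zeros((D, N))                          # hidden state: one N-vector per channel
    y = np.empty((L, D))
    for t in range(L):
        delta = softplus(x[t] @ W_delta + b_delta)  # (D,) input-dependent step size
        B_t = x[t] @ W_B                            # (N,) input-dependent input matrix
        C_t = x[t] @ W_C                            # (N,) input-dependent output matrix
        A_bar = np.exp(delta[:, None] * A)          # (D, N) zero-order-hold discretization of A
        B_bar = delta[:, None] * B_t[None, :]       # (D, N) simplified (Euler) discretization of B
        h = A_bar * h + B_bar * x[t][:, None]       # linear recurrence, one update per step
        y[t] = h @ C_t                              # (D,) readout for this time step
    return y

rng = np.random.default_rng(0)
L, D, N = 32, 4, 8
x = rng.normal(size=(L, D))
A = -np.exp(rng.normal(size=(D, N)))              # negative entries keep the recurrence stable
W_delta = 0.1 * rng.normal(size=(D, D))
b_delta = np.zeros(D)
W_B = 0.1 * rng.normal(size=(D, N))
W_C = 0.1 * rng.normal(size=(D, N))
y = selective_scan(x, A, W_delta, b_delta, W_B, W_C)
print(y.shape)  # (32, 4)
```

Because the state is updated once per step, the cost grows linearly with sequence length, and each output depends only on current and earlier inputs; the actual Mamba implementation computes this kind of recurrence with a hardware-aware parallel scan.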

In conclusion, the evolution of MRI extraction technologies over the past decade has not only enhanced the technical capabilities of MRI systems but also significantly broadened their clinical applications, making MRI an indispensable tool in modern medicine. These advancements underscore the importance of continual investment in MRI technology development, aiming for even greater accuracy, efficiency, and applicability in the years to come [8,9].

3. Overview of BraTS Challenges

Precise delineation of brain tumor sub-regions in MRI scans is critically important for numerous clinical practices, including planning surgical treatments, guiding image-based interventions, tracking tumor progression, and creating maps for radiotherapy [3]. Additionally, accurate segmentation of these tumor sub-regions is increasingly recognized as a foundation for quantitative image analysis that can predict overall patient survival [2].

However, manual identification and delineation of tumor sub-regions is a labor-intensive, time-consuming, and subjective task. In clinical settings, radiologists typically perform this process through qualitative visual assessment, which makes it impractical for large patient volumes. This underscores the pressing need for automated, reliable segmentation solutions to streamline and accelerate the process [3].

Since its launch in 2012, the Brain Tumor Segmentation (BraTS) challenge has been dedicated to assessing advanced methods for segmenting brain tumors from multi-parametric MRI (mpMRI) scans. Initially, BraTS aimed to provide a public dataset and establish a community benchmark. It primarily used pre-operative mpMRI scans from various institutions and focused on the segmentation of brain tumors, especially gliomas, which exhibit extensive variability in appearance, shape, and histology. Between 2017 and 2020, BraTS expanded its scope to include the prediction of patient overall survival (OS) for glioblastoma cases following gross total resection. This expansion allowed the integration of radiomic feature analysis and machine learning algorithms, highlighting the clinical importance of the segmentation task [2,3].

In the 2021–2022 iterations, BraTS continued to concentrate on segmenting glioma sub-regions, supported by a substantially increased dataset. The 2021 challenge also introduced the critical clinical task of determining the methylation status of the O6-methylguanine-DNA methyltransferase (MGMT) promoter in tumors, classifying them as either methylated or unmethylated [3].

BraTS 2023 further refined its benchmarking framework and expanded its dataset to encompass a wider patient demographic, including regions like sub-Saharan Africa, various tumor types such as meningiomas, and new clinical challenges like handling incomplete data. This edition introduced several sub-challenges addressing specific clinical needs and technical issues, such as data augmentation [10].

The 2024 edition of BraTS further extended its research scope by introducing new sub-challenges, including one focused on the segmentation of post-treatment gliomas. The Pathology challenge specifically aims to develop deep learning models capable of identifying distinct histological features within tumor sub-regions, thereby improving the accuracy of brain tumor diagnosis and grading [11].

Alongside the data, the segmentation classes have also changed significantly, both to better capture the nuances of tumor behavior and to support more effective algorithm training. Initially, through 2016, tumor sub-regions were classified into four distinct categories; from 2017 onward, segmentation was streamlined to three. The 2023 and 2024 editions further diversified the annotations and classes, tailoring them to specific datasets and tasks and thereby adding layers of complexity to the segmentation challenges [2].

4. Evolution of BraTS Datasets (2012–2025)

This section explores the development and diversification of the Brain Tumor Segmentation (BraTS) challenge datasets from its inception in 2012 up to 2025. Initially focused on the segmentation of glioma sub-regions, the scope of the BraTS challenges has significantly expanded to include various brain conditions and an increased complexity in the tasks. We will examine how these datasets have evolved over time, with a particular focus on the notable shift in the variety of the challenges introduced in recent years. This evolution is documented in detail in Table 1, which provides an overview of the datasets employed each year and the specific tasks associated with them. Notably, the table shows how each BraTS edition gradually increased dataset size and diversity, culminating in more advanced tasks from 2023 onward (e.g., post-treatment imaging, synthesis challenges, meningioma segmentation). By capturing these incremental changes in case numbers, task complexity, and clinical timepoints, it provides researchers with valuable insights into the evolution of the datasets—informing the design of future segmentation models and guiding research strategies in neuro-oncology. The recent expansions reflect broader clinical applications and the integration of advanced computational techniques in the medical imaging field, necessitating more elaborate segmentation models. The latest contests are not only more complex but also occupy a significantly larger portion of the discussion due to their increased relevance and the broadened scope of the challenge.

Table 1.

Summary of BraTS Challenge data distribution across training, validation, and test cohorts from 2012 to 2024, along with associated tasks and clinical timepoints. By tracking changes in dataset size, variety of tasks, and targeted clinical settings, this table underscores the growing complexity of BraTS challenges and illustrates how each year’s iteration provides a foundation for new research directions in MRI-based brain tumor segmentation. The data for the table were collected through extensive research of the challenges’ websites and related manuscripts.

4.1. Dataset Review: BraTS Challenge 2012

- Number of tasks: 1: Multimodal Brain Tumor Image Segmentation—segmentation of gliomas in pre-operative scans.

- Initial number of classes: two class labels:

- Label 1—ED: edema;

- Label 2—TC: tumor core.

- Final number of classes: four class labels:

- Label 1—NCR: necrotic tumor;

- Label 2—ED: peritumoral edema;

- Label 3—NET: non-enhancing tumor;

- Label 4—ET: enhancing tumor;

- Label 0: everything else.

- MRI modalities: All four modalities were available: T1-weighted, native image (T1); T1-weighted, contrast-enhanced (Gadolinium) image (T1c); T2-weighted image (T2); and T2-weighted FLAIR image (FLAIR). In subsequent BraTS challenges, these four modalities were consistently employed unless noted otherwise in the challenge description.

Challenge Data: The BraTS 2012 Challenge on Multimodal Brain Tumor Image Segmentation was designed to advance state-of-the-art methods for segmenting brain tumors, specifically gliomas, from multimodal MRI images. The goal was to create a benchmark and to encourage the development of innovative segmentation algorithms that could handle the complexities of tumor imaging. By offering a platform for detailed comparison on a standardized collection of both real and synthetic MRI scans, the organizers sought to improve the precision and clinical utility of these technologies in neuro-oncology.

All participants received training data consisting of multi-contrast MRI scans from 10 low-grade and 20 high-grade glioma patients, originally manually annotated with two tumor labels—“Edema” and “Core”—by a trained expert. Additionally, the training set included simulated images for 25 high-grade and 25 low-grade glioma subjects labeled with the same two ground truth categories. The test set included 11 high-grade and 4 low-grade real cases, as well as 10 high-grade and 5 low-grade simulated cases [12,13].

Subsequent discussions made clear that dividing tumor sub-regions into two types was overly simplistic; specifically, the “core” category was found to include substructures that appear differently across imaging modalities. The organizers therefore had the training data re-annotated with four distinct tumor labels, as illustrated in Figure 3: the initial two classes, edema (ED) and tumor core (TC), were refined into edema (ED), necrotic tumor (NCR), non-enhancing tumor (NET), and enhancing tumor (ET). The 30 training cases were re-labeled by four different raters, and the 2012 test set was annotated by three. For datasets with multiple annotations, the label maps were fused by assuming increasing ‘severity’ of the disease from edema to non-enhancing (solid) core to necrotic (or fluid-filled) core to enhancing core, using a hierarchical majority voting scheme that assigns each voxel to the highest class on which at least half of the raters agree. The synthetic images were generated with the TumorSim software, which uses physical and statistical models to produce MRI images that emulate real tumor scenarios. These simulations used the same modalities and 1 mm resolution as the real data and applied a tumor growth model to simulate infiltrating edema, local tissue distortion, and central contrast enhancement, effectively mimicking the texture of actual MRI scans.
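The hierarchical fusion rule can be sketched as follows, using the BraTS 2012 label values listed above. This is one possible reading of the rule, not the organizers' code: it assumes that a rater's label implies every less severe class (so votes accumulate from the most severe class downward) and that "at least half" includes exact ties.

```python
import numpy as np

# Severity order from the text: edema < non-enhancing core < necrotic core < enhancing core.
# LABEL_TO_RANK[label] gives the severity rank of a BraTS 2012 label (0 = background).
LABEL_TO_RANK = np.array([0, 3, 1, 2, 4])   # labels 0, 1 (NCR), 2 (ED), 3 (NET), 4 (ET)
# RANK_TO_LABEL[rank] converts a severity rank back to its label value.
RANK_TO_LABEL = np.array([0, 2, 3, 1, 4])

def hierarchical_majority_vote(rater_maps):
    """Assign each voxel the most severe class that at least half of the raters
    place it in, counting a rater's label as implying every less severe class."""
    ranks = LABEL_TO_RANK[np.stack(rater_maps)]   # (n_raters, *volume_shape)
    n_raters = ranks.shape[0]
    fused = np.zeros(ranks.shape[1:], dtype=int)
    for rank in range(1, 5):                       # from least to most severe
        votes = (ranks >= rank).sum(axis=0)        # raters at or above this severity
        fused[2 * votes >= n_raters] = rank        # more severe ranks overwrite earlier ones
    return RANK_TO_LABEL[fused]

# Three hypothetical raters labeling the same four voxels
r1 = np.array([0, 2, 4, 1])
r2 = np.array([0, 2, 1, 1])
r3 = np.array([2, 3, 4, 4])
print(hierarchical_majority_vote([r1, r2, r3]))  # [0 2 4 1]
```

In the third voxel, two of three raters chose the enhancing core, so it wins even though the remaining rater chose necrosis; in the fourth, only one rater chose the enhancing core, so the voxel falls back to the necrotic core that all three raters reached.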

Figure 3.

This figure illustrates the segmentation labeling used in BraTS 2012. The (left image) shows the initial two classes for the challenge—edema (ED) and tumor core (TC)—while the (right image) demonstrates the subsequent relabeling into four more specific classes: edema (ED), necrotic tumor (NCR), non-enhancing tumor (NET), and enhancing tumor (ET). By refining the broad “core” label, this updated annotation better reflects the distinct substructures observed in different MRI modalities, ultimately improving the clinical relevance. Figure adapted and modified from [14,15].

For evaluating the performance of segmentation algorithms, however, the organizers grouped the different structures into three mutually inclusive (nested) tumor regions that better reflect clinical tasks such as tumor volumetry [13]:

- Whole tumor region (WT): Includes all four tumor structures, corresponding to labels 1 + 2 + 3 + 4.

- Tumor core region (TC): Contains all tumor structures except for “edema”, corresponding to labels 1 + 3 + 4.

- Active tumor region (AT): Includes only the “enhancing core” structures that are unique to high-grade cases, corresponding to label 4.
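The nested evaluation regions can be derived directly from a label map, as in the sketch below. It takes the active tumor region to be the enhancing core (label 4) alone, as the region's description states, and uses the Dice overlap score as an example metric; the metric choice and the convention for empty masks are assumptions, since this section does not specify them.

```python
import numpy as np

# Nested evaluation regions built from the four-class BraTS 2012 labels.
REGIONS = {
    "WT": (1, 2, 3, 4),   # whole tumor: all four tumor structures
    "TC": (1, 3, 4),      # tumor core: everything except edema
    "AT": (4,),           # active tumor: enhancing core only
}

def region_mask(labels, region):
    """Boolean mask of the voxels belonging to one evaluation region."""
    return np.isin(labels, REGIONS[region])

def dice(pred_mask, gt_mask):
    """Dice = 2|P & G| / (|P| + |G|); defined here as 1.0 when both masks are empty."""
    denom = pred_mask.sum() + gt_mask.sum()
    if denom == 0:
        return 1.0
    return 2.0 * np.logical_and(pred_mask, gt_mask).sum() / denom

def evaluate(pred_labels, gt_labels):
    """Dice score per region for a predicted and a ground-truth label map."""
    return {r: dice(region_mask(pred_labels, r), region_mask(gt_labels, r)) for r in REGIONS}

# Hypothetical 1D "volume" of eight voxels
gt = np.array([0, 2, 2, 1, 4, 4, 3, 0])
pred = np.array([0, 2, 1, 1, 4, 0, 3, 0])
scores = evaluate(pred, gt)
```

Here a voxel of edema mislabeled as necrosis still counts as correct for the whole tumor region but as an error for the tumor core, which is one reason region-based scores differ from per-label scores.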

- Pre-Processing Data Protocol: To ensure uniformity across the dataset, the volumetric images of each subject were rigidly aligned to the subject’s T1c MRI scan. The images were then resampled to an isotropic resolution of 1 mm (1 mm³ voxels) using linear interpolation and reoriented to a consistent axial plane. Alignment used a rigid registration model with mutual information as the similarity measure, specifically the “VersorRigid3DTransform” with the “MattesMutualInformation” metric, implemented with three multi-resolution levels in the Insight Toolkit (ITK). No attempt was made to align different patients within a common reference space. Additionally, all images were skull-stripped to anonymize patient data [13].
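Mutual information suits this multi-contrast alignment because it rewards a consistent statistical relationship between the intensities of two images rather than identical intensities. The following is a minimal NumPy sketch that estimates MI from a plain joint histogram. It is a simplification, not the BraTS pipeline: ITK's Mattes metric estimates the histograms with B-spline Parzen windowing on sampled voxels, a full registration also needs a transform and an optimizer, and the bin count and test volumes here are arbitrary choices.

```python
import numpy as np

def mutual_information(fixed, moving, bins=32):
    """Histogram-based mutual information between two equally shaped images.

    MI = sum p(a,b) * log(p(a,b) / (p(a) p(b))), estimated from a joint intensity histogram.
    """
    joint, _, _ = np.histogram2d(fixed.ravel(), moving.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    p_a = p_ab.sum(axis=1, keepdims=True)     # marginal distribution of the fixed image
    p_b = p_ab.sum(axis=0, keepdims=True)     # marginal distribution of the moving image
    nz = p_ab > 0                             # skip empty bins (0 * log 0 = 0)
    return float(np.sum(p_ab[nz] * np.log(p_ab[nz] / (p_a @ p_b)[nz])))

rng = np.random.default_rng(0)
image = rng.normal(size=(32, 32, 32))
mi_self = mutual_information(image, image)
mi_inverted = mutual_information(image, -image)                     # different "contrast", same structure
mi_shifted = mutual_information(image, np.roll(image, 3, axis=0))  # misaligned copy
```

The inverted image, a crude stand-in for a different MRI contrast, keeps nearly the same MI as the original, while the shifted (misaligned) copy loses almost all of it; registration searches for the transform that maximizes this value.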

- Annotation Method: The simulated images were provided with predefined “ground truth” data for the location of various tumor structures, whereas the clinical images were labeled manually. They established four categories of tumor sub-regions: “edema”, “non-enhancing (solid) core”, “necrotic (or fluid-filled) core”, and “enhancing core”. The annotation protocol for identifying these visual structures in both low- and high-grade cases was as follows:

- The “edema” was primarily segmented from T2-weighted images. FLAIR sequences were used to verify the extent of the edema and to differentiate it from ventricles and other fluid-filled structures. Initial segmentation in T2 and FLAIR included the core structures, which were then reclassified in subsequent steps.

- The gross tumor core, encompassing both enhancing and non-enhancing structures, was initially segmented by assessing hyper-intensities on T1c images (for high-grade tumors) along with inhomogeneous components of the hyper-intense lesion evident in T1 and the hypo-intense areas seen in T1.

- The “enhancing core” of the tumor was subsequently segmented by thresholding T1c intensities within the resulting gross tumor core. This segmentation included the Gadolinium-enhancing tumor rim while excluding the necrotic center and blood vessels. The intensity threshold for segmentation was determined visually for each case.

- The “necrotic (or fluid-filled) core” was identified as irregular, low-intensity necrotic areas within the enhancing rim on T1c images. This label was also applied to the occasional hemorrhages observed in the BraTS dataset.

- The “non-enhancing (solid) core” was characterized as the part of the gross tumor core remaining after the exclusion of the “enhancing core” and the “necrotic (or fluid-filled) core”.
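The labeling order above can be sketched as a sequence of overwrites on a label map. This is a hypothetical simplification, assuming the lesion and gross-core masks are already given and using fixed per-case T1c thresholds; it ignores the spatial requirement that necrosis lie within the enhancing rim and the exclusion of blood vessels, both of which the raters judged visually.

```python
import numpy as np

def compose_labels(t1c, lesion_mask, core_mask, enhancing_threshold, necrosis_threshold):
    """Hypothetical simplification of the BraTS 2012 annotation order.

    lesion_mask: T2/FLAIR abnormality (initially includes the core);
    core_mask:   gross tumor core (a subset of lesion_mask);
    thresholds:  per-case T1c intensity cut-offs (chosen visually in the real protocol).
    """
    labels = np.zeros(t1c.shape, dtype=np.uint8)
    labels[lesion_mask] = 2                                        # edema: whole T2/FLAIR abnormality
    labels[core_mask] = 3                                          # core reclassified, default non-enhancing
    enhancing = core_mask & (t1c >= enhancing_threshold)           # enhancing core by T1c threshold
    necrotic = core_mask & ~enhancing & (t1c < necrosis_threshold) # low-intensity core voxels
    labels[necrotic] = 1
    labels[enhancing] = 4
    return labels

t1c = np.array([0.2, 0.5, 0.9, 0.1, 0.6, 0.3])
lesion = np.array([False, True, True, True, True, True])
core = np.array([False, False, True, True, True, False])
print(compose_labels(t1c, lesion, core, enhancing_threshold=0.8, necrosis_threshold=0.2))
# [0 2 4 1 3 2]
```

The non-enhancing core falls out as whatever remains of the gross core after the enhancing and necrotic parts are removed, mirroring the last step of the protocol.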

Following this method, MRI scans were annotated by a combined team of seven radiologists and radiographers based in Bern, Debrecen, and Boston. They delineated structures on every third axial slice, interpolated between slices using morphological operators (e.g., region growing), and visually inspected the results to make any necessary manual corrections. All segmentations were performed in the 3D Slicer software, taking approximately 60 min per subject.

- Multi-Center Imaging Data Acquisition Details: The data were collected from four distinct institutions: Bern University, Debrecen University, Heidelberg University, and Massachusetts General Hospital. These images were gathered over several years, utilizing MRI scanners from various manufacturers, featuring varying magnetic field strengths (1.5 T and 3 T) and different implementations of imaging protocols (such as 2D or 3D) [12,13].

4.2. Dataset Review: BraTS Challenge 2013

- Number of tasks: 1: Multimodal Brain Tumor Image Segmentation—segmentation of gliomas in pre-operative scans.

- Number of classes: four class labels:

- Label 1—NCR: necrotic tumor;

- Label 2—ED: peritumoral edema;

- Label 3—NET: non-enhancing tumor;

- Label 4—ET: enhancing tumor;

- Label 0: everything else.

- Challenge Data: BraTS 2013 had the same objective as BraTS 2012. The training data for BraTS 2013 were identical to the real training data of the 2012 challenge but with the updated four-class labels. No synthetic cases were evaluated in 2013; therefore, no synthetic training data were provided. In addition, 10 new cases were added to the test set. In summary, the training data consisted of 30 multi-contrast MRI scans of 10 low- and 20 high-grade glioma patients, while the test set consisted of 25 MRI scans: the 11 high- and 4 low-grade real cases from BraTS 2012, plus 10 new high-grade real cases. The pre-processing data protocol, the annotation method, and the contributing institutions (multi-center imaging data acquisition details) are the same as in BraTS 2012 [13].

4.3. Dataset Review: BraTS Challenge 2014–2016

- Number of tasks: 2:

- Multimodal Brain Tumor Image Segmentation—segmentation of gliomas in pre-operative scans;

- Disease progression assessment.

- Number of classes: four class labels:

- Label 1—NCR: necrotic tumor;

- Label 2—ED: peritumoral edema;

- Label 3—NET: non-enhancing tumor;

- Label 4—ET: enhancing tumor;

- Label 0: everything else.

- Challenge Data: BraTS has predominantly focused on the segmentation of brain tumor sub-regions. After its initial editions in 2012–2013, however, the challenge’s potential for clinical impact became evident, and BraTS introduced secondary tasks that leverage the outputs of segmentation algorithms for further analyses. In particular, to highlight the clinical relevance of the segmentation task, the BraTS challenges from 2014 to 2016 incorporated longitudinal scans into the datasets, aiming to assess the efficacy and potential of automated tumor volumetry for monitoring disease progression [2].

For 2014 to 2016, the dataset was an expanded version of the one used in the 2013 challenge, comprising 300 high-grade (HG) and low-grade (LG) glioma brain scans from the NIH Cancer Imaging Archive (TCIA). Segmentation was performed both manually and algorithmically, following the same classification system as in previous years. The ground truth annotation was derived by fusing the segmentation results of top-performing algorithms from the 2012 and 2013 BraTS challenges, which were then validated through visual inspection by experienced raters. Moreover, the 2014 challenge introduced a dataset of longitudinal scan series for individual patients, aimed at assessing disease progression; it included 25 cases, each imaged at 3 to 10 different time points. While training annotations in some challenges were automated, the test dataset was always annotated manually by expert raters in all BraTS challenges [16]. Variations among the BraTS 2014, 2015, and 2016 datasets are detailed in Table 2. Because their ground truth (GT) was generated algorithmically, the datasets from these years were subsequently discarded and manually re-annotated by experts in the following years.
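Automated tumor volumetry, as assessed on the longitudinal data, reduces to counting segmented voxels and scaling by the voxel volume. The sketch below assumes 1 mm isotropic voxels by default and uses percent change from the first scan as a hypothetical progression measure; neither is taken from the BraTS evaluation protocol, and the three label maps are synthetic.

```python
import numpy as np

def tumor_volume_ml(labels, spacing_mm=(1.0, 1.0, 1.0), tumor_labels=(1, 2, 3, 4)):
    """Volume of the selected labels in millilitres (1 mL = 1000 mm^3)."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return float(np.isin(labels, tumor_labels).sum() * voxel_mm3 / 1000.0)

def percent_change_from_baseline(volumes):
    """Percent change of each time point relative to the first (baseline) scan."""
    baseline = volumes[0]
    return [100.0 * (v - baseline) / baseline for v in volumes]

# Hypothetical longitudinal series: three synthetic label maps with 8000, 10000, and 6000 tumor voxels.
series = []
for depth in (20, 25, 15):
    labels = np.zeros((40, 40, 40), dtype=np.uint8)
    labels[:depth, :20, :20] = 2
    series.append(labels)

vols = [tumor_volume_ml(lab) for lab in series]
changes = percent_change_from_baseline(vols)
print(vols, changes)  # [8.0, 10.0, 6.0] [0.0, 25.0, -25.0]
```

For scans with anisotropic voxels, `spacing_mm` would be read from the image header rather than assumed.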

Table 2.

Summary of dataset composition across BraTS Challenges from 2014 to 2016. Although these datasets introduced longitudinal data and expanded upon earlier challenges, they were later discarded (from 2017 onward) and manually re-annotated by experts due to their reliance on algorithmic ground truth (GT) fusion. Table adapted and modified from [16].

- Annotation Method: The annotation was accomplished by fusing results of high-ranked segmentation algorithms in BraTS 2012 and BraTS 2013 challenges. These annotations were then approved by visual inspection of experienced raters [16].

4.4. Dataset Review: BraTS Challenge 2017

- Number of tasks: 2:

- Multimodal Brain Tumor Image Segmentation—segmentation of gliomas in pre-operative scans;

- Prediction of patient overall survival (OS) from pre-operative scans.

- Number of classes: three class labels:

- Label 1—NCR/NET: necrotic and non-enhancing tumor;

- Label 2—ED: the peritumoral edema;

- Label 4—ET: the GD-enhancing tumor;

- Label 0: everything else.

Experts noted that the NET (non-enhancing tumor, labeled as ‘Label 3’) can sometimes be overestimated by annotators, with limited evidence available in the imaging data to clearly define this sub-region. Figure 4 illustrates a sample slice where the non-enhancing tumor region is ambiguous; the overlaid ground truth segmentation reveals how such labeling can be influenced by minimal or uncertain imaging signals. Imposing labels where the imaging data do not clearly support them can lead to inconsistencies, resulting in ground truth labels that vary widely among different institutions. Such discrepancies could influence the rankings of BraTS participants, skewing results in favor of an annotator’s interpretation over the actual efficacy of the segmentation algorithms. To solve this problem, starting in 2017, the BraTS challenge removed the NET label, combining it with the necrotic core (NCR, labeled as ‘Label 1’). This adjustment was made to simplify annotations and enhance the consistency of algorithm evaluations across different datasets.

Figure 4.

Example highlighting the limited imaging evidence for Label 3 (non-enhancing tumor), as seen in the overlaid ground-truth segmentation. The subtlety of the non-enhancing region can lead to potential overestimation and variability in annotations across different institutions. Figure adapted and modified from https://academictorrents.com/details/c4f39a0a8e46e8d2174b8a8a81b9887150f44d50. Originally published under the CC BY-NC-SA 3.0: https://creativecommons.org/licenses/by-nc-sa/3.0/it/deed.en (accessed on 15 September 2024).

Additionally, areas of T2-FLAIR hyper-intensity in contralateral and periventricular regions were no longer included in the edema (ED) region, unless directly adjacent to peritumoral ED. These areas are often indicative of chronic microvascular alterations or age-related demyelination rather than active tumor infiltration [2].

- Challenge Data: Task 1 involved developing methodologies for segmenting gliomas on pre-operative scans, utilizing clinically-acquired training data. The analyzed sub-regions included: (1) the enhancing tumor (ET), (2) the tumor core (TC), and (3) the whole tumor (WT). Specifically, WT is defined as the combination of labels 1, 2, and 4; TC comprises labels 1 and 4; and ET corresponds solely to label 4. Figure 5 illustrates how the previous four-class labeling (top panel) was refined in 2017 by omitting the non-enhancing tumor label (NET) and merging it with the necrotic core (NCR). This shift simplified annotations and ensured greater consistency in segmentation tasks across diverse data sources.
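A minimal sketch of these region definitions, assuming each segmentation is an integer NumPy array that uses the 2017 label values (0 = background, 1 = NCR/NET, 2 = ED, 4 = ET): each evaluated region is a union of labels, and per-region overlap is scored here with the Dice coefficient. The helper names are illustrative, not part of any official BraTS toolkit, and returning 1.0 when both masks are empty is an assumed convention.

```python
import numpy as np

# Label values used from BraTS 2017 until 2022 (ET was renumbered to 3 in 2023).
NCR_NET, ED, ET = 1, 2, 4

# Each evaluated region is the union of one or more labels.
REGIONS = {
    "WT": (NCR_NET, ED, ET),  # whole tumor: labels 1, 2, 4
    "TC": (NCR_NET, ET),      # tumor core: labels 1, 4
    "ET": (ET,),              # enhancing tumor: label 4 only
}


def region_masks(seg: np.ndarray) -> dict:
    """Convert a label map into one boolean mask per evaluated region."""
    return {name: np.isin(seg, labels) for name, labels in REGIONS.items()}


def dice(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice = 2|A & B| / (|A| + |B|); 1.0 if both masks are empty (assumed convention)."""
    total = pred.sum() + ref.sum()
    if total == 0:
        return 1.0
    return float(2.0 * np.logical_and(pred, ref).sum() / total)


# Toy 1-D "volume" standing in for a 3-D label map.
ref = np.array([0, 2, 2, 1, 4, 4, 0])
pred = np.array([0, 2, 1, 1, 4, 0, 0])
ref_m, pred_m = region_masks(ref), region_masks(pred)
scores = {name: dice(pred_m[name], ref_m[name]) for name in REGIONS}
print(scores)
```

Because WT contains TC and TC contains ET, the regions are nested rather than mutually exclusive, so each one is scored as a separate binary mask.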

Figure 5. At the top, the image presents the four-class labeling system used in BraTS challenges before 2017, comprising edema (ED), necrotic tumor (NCR), non-enhancing tumor (NET), and enhancing tumor (ET). Below, the image shows the revised three-class labeling system introduced in 2017, which merges NET with NCR to reduce annotation ambiguity and improve consistency. Both the top and bottom images depict the labeling system from left to right: Panel (A) shows the enhancing tumor structures visible in a T1c scan surrounding the cystic/necrotic components of the core; Panel (B) displays the tumor core visible in a T2 scan; Panel (C) illustrates the whole tumor visible in a FLAIR scan; and Panel (D) depicts the combined segmentations generating the final tumor sub-region labels. These panels apply to both the original four-class and the revised three-class labeling systems shown in the image. This streamlined labeling approach underpinned the BraTS 2017 segmentation tasks and facilitated more reliable comparisons of algorithmic performance. Figure adapted and modified from [3], licensed under the CC BY 4.0: https://creativecommons.org/licenses/by/4.0/, and [13], licensed under the CC BY-NC-SA 4.0: https://creativecommons.org/licenses/by-nc-sa/4.0/ (accessed on 15 September 2024).

After generating the segmentation labels on the pre-operative scans for Task 1, participants proceeded to Task 2. Here, they used these labels together with the provided multimodal MRI data to identify and analyze appropriate imaging/radiomic features with machine learning algorithms, aiming to predict patient overall survival (OS).

The dataset provided in BraTS 2017 differed significantly from the datasets used in the 2014 to 2016 challenges. The data from those years, sourced from TCIA and containing a mix of pre- and post-operative scans, were discarded partly because they included post-operative cases, but mainly because of concerns about the reliability of their ground truth labels. These labels had been generated by fusing the outputs of algorithms that performed well in the BraTS 2012 and 2013 challenges rather than by manual expert annotation, which raised questions about their consistency and accuracy. For BraTS 2017 and subsequent challenges, only the BraTS 2012–2013 data, which had been manually annotated by clinical experts, were retained, so that all ground truth labels used going forward derived from expert manual annotations. All pre-operative scans from the TCIA collections (TCGA-GBM, n = 262 and TCGA-LGG, n = 199) were reassessed by board-certified neuroradiologists, who then annotated the identified pre-operative scans (135 GBM and 108 LGG) for the various glioma sub-regions [17]. Detailed procedures for these annotations are outlined in [18].

In 2017, BraTS introduced the new challenge of predicting patient overall survival (OS) based on pre-operative MRI scans from patients who had undergone gross total resection. For this task, the survival data (in days) for 163 training cases were provided. Participants used their segmentation predictions to extract relevant radiomic features from the MRI scans, which were then processed through machine-learning models to predict OS [16].
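The feature-extraction step can be illustrated with simple volumetric features computed from a predicted label map. This is a hedged sketch, not any participant's method: the feature names are hypothetical, it assumes 2017-style labels and the 1 mm isotropic resampling of the BraTS pre-processing protocol (so one voxel is 1 mm³, or 0.001 mL), and the downstream survival model is left open because the text does not prescribe one.

```python
import numpy as np

VOXEL_VOLUME_ML = 0.001  # 1 mm^3 voxel = 0.001 mL (assumes 1 mm isotropic data)


def volumetric_features(seg: np.ndarray) -> dict:
    """Hypothetical volume-based features from a BraTS 2017-style label map."""
    ncr = np.count_nonzero(seg == 1)  # necrotic / non-enhancing tumor
    ed = np.count_nonzero(seg == 2)   # peritumoral edema
    et = np.count_nonzero(seg == 4)   # enhancing tumor
    wt = ncr + ed + et
    tc = ncr + et
    return {
        "vol_wt_ml": wt * VOXEL_VOLUME_ML,
        "vol_tc_ml": tc * VOXEL_VOLUME_ML,
        "vol_et_ml": et * VOXEL_VOLUME_ML,
        # Fraction of the core that enhances; guards against an empty core.
        "et_fraction_of_tc": et / tc if tc else 0.0,
    }


seg = np.zeros((20, 20, 20), dtype=np.uint8)
seg[5:15, 5:15, 5:15] = 2  # 10x10x10 = 1000-voxel lesion, initially all edema
seg[8:12, 8:12, 8:12] = 4  # 4x4x4 = 64-voxel core, initially all ET
seg[9:11, 9:11, 9:11] = 1  # 2x2x2 = 8 voxels of necrosis inside the core
print(volumetric_features(seg))
```

A feature vector like this, one per patient, would then be paired with the provided survival times (in days) to train whatever regression or classification model a team chose.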

- Pre-Processing Data Protocol: A consistent pre-processing protocol has been applied to all BraTS mpMRI scans. This protocol involves converting DICOM files to the NIfTI format, co-registering images to a standard anatomical template (SRI24), resampling them to a uniform isotropic resolution of 1 mm³, and performing skull-stripping. Detailed information on the entire pre-processing pipeline is available through the Cancer Imaging Phenomics Toolkit (CaPTk) and the Federated Tumor Segmentation (FeTS) tool. Converting to NIfTI removes the metadata accompanying the original DICOM images, effectively stripping all Protected Health Information (PHI) contained in the DICOM headers. Additionally, skull-stripping helps prevent potential facial reconstruction and patient identification. Unless otherwise specified in a challenge description, this standardized pre-processing protocol was employed consistently in all subsequent challenges.

- Annotation Method: All ground truth labels from BraTS 2016 were meticulously revised by expert board-certified neuroradiologists. Each imaging dataset underwent manual segmentation by one to four raters, adhering to a consistent annotation protocol. These annotations, which include the GD-enhancing tumor (ET—label 4), the peritumoral edema (ED—label 2), and the necrotic and non-enhancing tumor (NCR/NET—label 1), received approval from experienced neuroradiologists. For further details on the pre-processing and annotation protocols, refer to the following papers: [2,13,18].

- Multi-Center Imaging Data Acquisition Details: The multimodal MRI data were acquired with different clinical protocols and various scanners from multiple (n = 19) institutions. The data contributors are located in the following countries: United States, Switzerland, Hungary, and Germany.
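The resampling step of the pre-processing protocol described above changes the voxel grid while preserving the physical field of view. The plain-Python sketch below shows only that arithmetic; it is not the CaPTk or FeTS implementation, rounding to the nearest integer is an assumption, and in practice co-registration to the SRI24 template also fixes the output grid.

```python
def resampled_shape(shape, spacing_mm, target_mm=(1.0, 1.0, 1.0)):
    """Grid size that keeps the physical extent (voxels x spacing) roughly constant."""
    return tuple(
        max(1, round(n * s / t)) for n, s, t in zip(shape, spacing_mm, target_mm)
    )


# A hypothetical axial acquisition: 256 x 256 in-plane at 0.9375 mm, 30 slices at 5 mm.
print(resampled_shape((256, 256, 30), (0.9375, 0.9375, 5.0)))
```

Here the 5 mm slice direction gains many interpolated slices (30 becomes 150), which is one reason thick-slice clinical acquisitions look blurred along that axis after isotropic resampling.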

4.5. Dataset Review: BraTS Challenge 2018

- Number of tasks: 2:

- Multimodal Brain Tumor Image Segmentation—segmentation of gliomas in pre-operative scans;

- Prediction of patient overall survival (OS) from pre-operative scans.

- Number of classes: three class labels:

- Label 1—NCR/NET: necrotic and non-enhancing tumor;

- Label 2—ED: the peritumoral edema;

- Label 4—ET: the GD-enhancing tumor;

- Label 0: everything else.

- Challenge Data: The tasks for BraTS 2018 remained unchanged from BraTS 2017: participants were required to develop methods for generating segmentation labels for various glioma sub-regions and to extract imaging/radiomic features to predict patient overall survival (OS) using machine learning techniques. The dataset for BraTS 2018 utilized the same training set as in 2017, but featured different validation and test sets. All ground truth labels were carefully revised by expert board-certified neuroradiologists, and the validation set’s ground truth was not disclosed to participants [16,19].

- It is important to note that only patients who underwent Gross Total Resection (GTR) were considered for the OS prediction analyses [19].

- Annotation Method: All the imaging datasets underwent manual segmentation by one to four raters, following a consistent annotation protocol. All annotations received approval from experienced neuroradiologists. For additional information on the pre-processing and annotation protocols, refer to these papers: [2,13,18].

- Multi-Center Imaging Data Acquisition Details: All BraTS multimodal scans were acquired with different clinical protocols and various scanners from multiple (n = 19) institutions. The institutions contributing to the data are located in the following countries: United States, Switzerland, Hungary, Germany, and India.

4.6. Dataset Review: BraTS Challenge 2019

- Number of tasks: 3:

- Multimodal Brain Tumor Image Segmentation—segmentation of gliomas in pre-operative scans;

- Prediction of patient overall survival (OS) from pre-operative scans;

- Quantification of Uncertainty in Segmentation.

- Number of classes: three class labels:

- Label 1—NCR/NET: necrotic and non-enhancing tumor;

- Label 2—ED: the peritumoral edema;

- Label 4—ET: the GD-enhancing tumor;

- Label 0: everything else.

- Challenge Data: In addition to Tasks 1 and 2 from BraTS 2018 and 2017, BraTS 2019 introduced a new challenge focused on evaluating uncertainty measures in glioma segmentation. This task aimed to encourage methods that yield high confidence when predictions are accurate and low confidence when they are not. Participants were required to submit three separate uncertainty maps—one for each voxel label corresponding to: (1) enhancing tumor (ET), (2) tumor core (TC), and (3) whole tumor (WT). These maps were to be evaluated in conjunction with the established BraTS Dice metric.

- The BraTS 2019 challenge provided participants with multi-institutional, routinely acquired pre-operative multimodal MRI scans of high-grade glioblastoma (GBM/HGG) and lower-grade glioma (LGG), all with a pathologically confirmed diagnosis and available OS data. The dataset was expanded relative to BraTS 2018 with additional routinely acquired 3T multimodal MRI scans, each annotated with ground truth labels by expert board-certified neuroradiologists. In this challenge, as in all subsequent ones, the ground truth labels for the validation data were not released to participants. Consistent with prior iterations, only patients who had undergone gross total resection (GTR) were eligible for the OS prediction assessment [20].

- Annotation Method: All the imaging datasets underwent manual segmentation by one to four raters, following a consistent annotation protocol. All annotations received approval from experienced neuroradiologists. For additional information on the pre-processing and annotation protocol, refer to these papers: [2,13,18].

- Multi-Center Imaging Data Acquisition Details: All BraTS multimodal MRI data were acquired using different clinical protocols and various scanners from multiple (n = 19) institutions. The institutions contributing to the data are located in the following countries: United States, Switzerland, Hungary, Germany, and India.
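For the uncertainty task introduced in BraTS 2019, one way to assess an uncertainty map in conjunction with Dice is to discard voxels whose uncertainty exceeds a threshold and recompute Dice on the voxels that remain: a useful map should raise Dice as uncertain, and often wrong, voxels are removed. The sketch below assumes uncertainty values on a 0 to 100 scale and thresholds of 100, 75, 50, and 25; both are illustrative assumptions, and the official scoring may include further terms (for example, penalties for filtering out correct voxels) that are not reproduced here.

```python
import numpy as np


def dice(pred, ref):
    """Dice coefficient of two boolean masks; 1.0 if both are empty (assumed convention)."""
    total = pred.sum() + ref.sum()
    return 1.0 if total == 0 else float(2.0 * np.logical_and(pred, ref).sum() / total)


def filtered_dice_curve(pred, ref, uncertainty, thresholds=(100, 75, 50, 25)):
    """Dice restricted to voxels whose uncertainty is <= each threshold (0-100 scale assumed)."""
    curve = {}
    for tau in thresholds:
        keep = uncertainty <= tau  # voxels the method is confident enough about
        curve[tau] = dice(pred[keep], ref[keep])
    return curve


# Toy masks for one region (e.g. WT): the two wrong voxels are flagged as uncertain.
ref = np.array([1, 1, 1, 0, 0, 0], dtype=bool)
pred = np.array([1, 1, 0, 1, 0, 0], dtype=bool)
unc = np.array([5, 10, 90, 80, 5, 0])
print(filtered_dice_curve(pred, ref, unc))
```

In this toy case Dice rises from about 0.67 with no filtering to 1.0 once the two high-uncertainty errors are excluded, which is the behavior the task was designed to reward.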

4.7. Dataset Review: BraTS Challenge 2020

- Number of tasks: 3:

- Multimodal Brain Tumor Image Segmentation—segmentation of gliomas in pre-operative scans;

- Prediction of patient overall survival (OS) from pre-operative scans;

- Quantification of Uncertainty in Segmentation.

- Number of classes: three class labels:

- Label 1—NCR/NET: necrotic and non-enhancing tumor;

- Label 2—ED: peritumoral edema;

- Label 4—ET: GD-enhancing tumor;

- Label 0: everything else.

- Challenge Data: For BraTS 2020, the dataset was expanded with additional routinely acquired 3T multimodal MRI scans, all annotated with ground truth labels by expert board-certified neuroradiologists. The format of the overall survival (OS) data remained consistent with previous years. Moreover, to support broader research efforts, the organizers provided a naming convention and a direct filename mapping between the data from BraTS 2017 through 2020 and the TCGA-GBM and TCGA-LGG collections hosted by The Cancer Imaging Archive (TCIA), thereby supporting studies that extend beyond the immediate scope of the BraTS tasks [21].

- Annotation Method: All datasets underwent manual segmentation by one to four raters, adhering to a consistent annotation protocol. All annotations received approval from experienced neuroradiologists. For additional information on the pre-processing and annotation protocols, refer to these papers: [2,13,18].

- Multi-Center Imaging Data Acquisition Details: All BraTS multimodal MRI data were acquired with different clinical protocols and various scanners from multiple (n = 19) institutions. The institutions contributing to the data are located in the following countries: United States, Switzerland, Hungary, Germany, and India.

4.8. Dataset Review: BraTS Challenge 2021

- Number of tasks: 2:

- Multimodal Brain Tumor Image Segmentation—segmentation of gliomas in pre-operative scans;

- Radiogenomic Classification—evaluation of classification methods to predict the MGMT promoter methylation status at pre-operative baseline scans.

- Number of classes: three class labels:

- Label 1—NCR: necrotic tumor;

- Label 2—ED: peritumoral edema;

- Label 4—ET: GD-enhancing tumor;

- Label 0: everything else.

- Challenge Data: The BraTS Challenge 2021 was hosted by the Radiological Society of North America (RSNA), the American Society of Neuroradiology (ASNR), and the Medical Image Computing and Computer-Assisted Interventions (MICCAI) Society. The RSNA-ASNR-MICCAI BraTS 2021 challenge utilized multi-institutional multi-parametric magnetic resonance imaging (mpMRI) scans, continuing to focus on Task 1: assessing advanced methods for segmenting brain glioblastoma sub-regions in mpMRI scans.

- The 2021 revision of the World Health Organization (WHO) classification of CNS tumors underscored the importance of integrated diagnostics, transitioning from solely morphologic–histopathologic classifications to incorporating molecular–cytogenetic features. One such feature, the methylation status of the O6-methylguanine-DNA methyltransferase (MGMT) promoter in newly diagnosed GBM, was recognized as a significant prognostic factor and predictor of chemotherapy response. Consequently, determining the MGMT promoter methylation status in newly diagnosed GBM became crucial for guiding treatment decisions. In response, BraTS 2021 introduced Task 2, which evaluated classification methods for predicting the MGMT promoter methylation status from pre-operative baseline scans, specifically distinguishing between methylated (MGMT+) and unmethylated (MGMT-) tumors.

- The RSNA-ASNR-MICCAI BraTS 2021 Challenge released the largest and most diverse retrospective cohort of glioma patients to date. The impact of this challenge is underscored by the dramatic increase in the number of participating teams, which rose from 78 in 2020 to over 2300 in 2021. The datasets for this year’s challenge were notably expanded since BraTS 2020 with a significant increase in routine clinically-acquired mpMRI scans, raising the total number of cases from 660 to 2040 and thereby broadening the demographic diversity of the patient population represented. Ground truth annotations of the tumor sub-regions were all verified by expert neuroradiologists for Task 1, while the MGMT methylation status was determined based on laboratory assessments of the surgical brain tumor specimens [3,22].

- Pre-Processing Data Protocol: For Task 1, which involves the segmentation of tumor sub-regions, the standardized pre-processing protocol established by BraTS has been applied to all mpMRI scans. For Task 2, focusing on radiogenomic classification, all imaging volumes were initially processed as in Task 1 to produce skull-stripped volumes. These were then reconverted from NIfTI back to the DICOM format. Once the mpMRI sequences were reverted to DICOM, further de-identification was implemented through a two-step process. For more details on the pre-processing protocols employed for both tasks, refer to this paper [3].

- Annotation Protocol: In earlier BraTS challenges, specifically for Task 1, the annotation process typically began with manual delineation of the abnormal signal on T2-weighted images to define the WT, followed by the TC, and ultimately addressing the enhancing and non-enhancing/necrotic core, often employing semi-automatic tools. For BraTS 2021, to streamline the annotation process, initial automated segmentations were produced by combining methods from previously top-performing algorithms at BraTS. These included DeepMedic, DeepScan, and nnU-Net, all of which were trained on the BraTS 2020 dataset. The STAPLE label fusion technique was utilized to merge the segmentations from these individual methods, helping to address systematic errors from each. This entire segmentation process, including the automated fusion technique, has been made accessible on the Federated Tumor Segmentation (FeTS) platform.

- Volunteer expert neuroradiology annotators were provided with all four mpMRI modalities alongside the automated fused segmentation volumes to begin manual adjustments. ITK-SNAP software was employed for these refinements. After the initial automated segmentations were refined, they were reviewed by two senior attending board-certified neuroradiologists, each with over 15 years of experience. Based on their evaluation, these segmentations were either approved or sent back to the annotators for further adjustments. This iterative process continued until the refined tumor sub-region segmentations were deemed satisfactory for public release and use in the challenge. The tumor sub-regions were delineated according to established radiological observations (VASARI features) and consist of the Gd-enhancing tumor (ET—label 4), the peritumoral edematous or invaded tissue (ED—label 2), and the necrotic tumor core (NCR—label 1) [3]. The significant annotation contributions to the dataset were made possible by over forty volunteer neuroradiology experts from around the world [22].

- The determination of the MGMT promoter methylation status in the BraTS 2021 dataset at each hosting institution was performed using various methods, including pyrosequencing and next-generation quantitative bisulfite sequencing of promoter CpG sites. Adequate tumor tissue collected at the time of surgery was required for these assessments.

- Multi-Center Imaging Data Acquisition Details: The dataset comprises brain tumor mpMRI scans acquired at multiple institutions under standard clinical conditions but with different equipment and imaging protocols, resulting in highly heterogeneous image quality that reflects diverse clinical practice across institutions [3]. The multimodal scans were collected from fourteen distinct institutions located in the following countries: United States, Germany, Switzerland, Canada, Hungary, and India.
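The STAPLE fusion step described in the BraTS 2021 annotation protocol can be illustrated with a simplified binary version. STAPLE (Simultaneous Truth and Performance Level Estimation) treats the true mask as hidden and uses expectation-maximization to estimate a per-voxel probability of tumor together with each input's sensitivity and specificity. This NumPy sketch handles a single region with a fixed global prior; the multi-label variant, the initial values, and the iteration count are assumptions, and it does not reproduce the FeTS pipeline. The three input masks are hypothetical placeholders, not outputs of DeepMedic, DeepScan, or nnU-Net.

```python
import numpy as np


def staple_binary(decisions, n_iter=50, eps=1e-6):
    """Simplified binary STAPLE (expectation-maximization).

    decisions: array of shape (n_raters, n_voxels) holding 0/1 masks of one region.
    Returns (w, p, q): posterior foreground probability per voxel, and each
    rater's estimated sensitivity p and specificity q.
    """
    d = np.asarray(decisions, dtype=float)
    n_raters = d.shape[0]
    prior = float(np.clip(d.mean(), eps, 1 - eps))  # fixed global foreground prior (assumption)
    p = np.full(n_raters, 0.9)  # initial sensitivities (assumption)
    q = np.full(n_raters, 0.9)  # initial specificities (assumption)
    for _ in range(n_iter):
        # E-step: log-likelihood of each voxel's votes if the true label is 1 or 0.
        log_a = np.log(prior) + (d * np.log(p)[:, None]
                                 + (1 - d) * np.log(1 - p)[:, None]).sum(axis=0)
        log_b = np.log(1 - prior) + ((1 - d) * np.log(q)[:, None]
                                     + d * np.log(1 - q)[:, None]).sum(axis=0)
        w = np.exp(log_a - np.logaddexp(log_a, log_b))  # P(true label = 1 | votes)
        # M-step: re-estimate each rater's performance against the soft truth w.
        p = np.clip((d * w).sum(axis=1) / max(w.sum(), eps), eps, 1 - eps)
        q = np.clip(((1 - d) * (1 - w)).sum(axis=1) / max((1 - w).sum(), eps), eps, 1 - eps)
    return w, p, q


# Three hypothetical automated masks of the same six voxels.
votes = np.array([
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [1, 1, 1, 1, 0, 0],
])
w, p, q = staple_binary(votes)
fused = (w >= 0.5).astype(int)
print(fused, np.round(p, 3), np.round(q, 3))
```

Unlike a plain majority vote, STAPLE weights each input by its estimated reliability, which is how it can compensate for a method that systematically over- or under-segments.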

4.9. Dataset Review: BraTS Challenge 2022

The BraTS 2022 Challenge continued the evaluation of Task 1 from BraTS 2021. Participating algorithms were trained on the 2021 dataset and tested on three distinct datasets: (i) the 2021 testing dataset of adult-type diffuse gliomas (n = 570), (ii) an independent multi-institutional dataset (Africa-BraTS) representing underrepresented Sub-Saharan African (SSA) patient populations with adult-type diffuse gliomas (n = 150), and (iii) an independent dataset of pediatric-type diffuse glioma cases (n = 95). The latter two test sets were added to evaluate the generalizability of the algorithms [23,24].

4.10. Dataset Review: BraTS Challenge 2023

- Number of tasks: 8:

- Segmentation—adult glioma: RSNA-ASNR-MICCAI BraTS Continuous Evaluation Challenge;

- Segmentation—BraTS-Africa: BraTS Challenge on Sub-Sahara-Africa Adult Glioma;

- Segmentation—meningioma: ASNR-MICCAI BraTS Intracranial Meningioma Challenge;

- Segmentation—brain metastases: ASNR-MICCAI BraTS Brain Metastasis Challenge;

- Segmentation—pediatric tumors: ASNR-MICCAI BraTS Pediatrics Tumor Challenge;

- Synthesis (Global)—missing MRI: ASNR-MICCAI BraTS MRI Synthesis Challenge (BraSyn);

- Synthesis (Local)—inpainting: ASNR-MICCAI BraTS Local Synthesis of Tissue via Inpainting;

- Evaluating Augmentations for BraTS: BraTS Challenge on Relevant Augmentation Techniques.

- Number of classes for tasks 1–8 (unless otherwise specified below): three class labels:

- Label 1—NCR: necrotic tumor core;

- Label 2—ED: peritumoral edematous/invaded tissue;

- Label 3—ET: GD-enhancing tumor;

- Label 0: everything else.

It is important to note that starting this year, the label value for enhancing tumor (ET) has been updated to “3”, replacing the previous value of “4” used in earlier BraTS Challenges.
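Label maps from earlier releases therefore need their ET value renumbered before they can be compared with 2023-style outputs. A minimal sketch, assuming NumPy label arrays and a hypothetical helper name. It is only safe for 2017 to 2022 data, where label 3 is unused; in the pre-2017 scheme label 3 denoted NET, so the function refuses inputs that already contain label 3 rather than silently merging classes.

```python
import numpy as np

LEGACY_ET, NEW_ET = 4, 3


def legacy_to_2023_labels(seg: np.ndarray) -> np.ndarray:
    """Renumber ET from 4 (BraTS 2017-2022) to 3 (BraTS 2023); other labels are unchanged."""
    if np.any(seg == NEW_ET):
        # Label 3 should not occur in 2017-2022 data; refuse to silently merge classes.
        raise ValueError("input already contains label 3; is it a pre-2017 or 2023 map?")
    out = seg.copy()
    out[seg == LEGACY_ET] = NEW_ET
    return out


print(legacy_to_2023_labels(np.array([0, 1, 2, 4, 4])))
```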

- Number of classes for tasks 2–4: three class labels:

- Label 1—NETC: non-enhancing tumor core;

- Label 2—SNFH: surrounding non-enhancing FLAIR hyperintensity;

- Label 3—ET: enhancing tumor;

- Label 0: everything else.

- Number of classes for task 5: three class labels:

- Label 1—NC: non-enhancing component (a combination of non-enhancing tumor, cystic component, and necrosis);

- Label 2—ED: peritumoral edematous area;

- Label 3—ET: enhancing tumor;

- Label 0: everything else.

- MRI modalities: All four modalities were available: T1-weighted, native image (T1); T1-weighted, contrast-enhanced (Gadolinium) image (T1c); T2-weighted image (T2), and T2-weighted FLAIR image (FLAIR).

- Primarily due to computational constraints, the synthesis (local)–inpainting task exclusively utilized T1 scans.

- Challenge Data:

- Segmentation—adult glioma: RSNA-ASNR-MICCAI BraTS Continuous Evaluation Challenge: The BraTS Continuous Challenge is a continuation of the 2021 challenge. Its training and validation datasets, identical to those used for the RSNA-ASNR-MICCAI BraTS 2021 Challenge, encompass a total of 5880 MRI scans from 1470 patients with diffuse gliomas of the brain. While the training and validation data remained unchanged from BraTS 2021, the testing dataset for this year’s challenge was significantly expanded with a larger number of routine clinically-acquired mpMRI scans [10].

- Segmentation—BraTS-Africa: BraTS Challenge on Sub-Sahara-Africa Adult Glioma: The BraTS-Africa Challenge focuses on addressing the disparity in glioma treatment outcomes between high-income regions and Sub-Saharan Africa (SSA), where survival rates have not improved significantly due to factors such as the use of lower-quality MRI technology (see Figure 6), late-stage disease presentation, and unique glioma characteristics. Brain MRI scans from SSA typically exhibit reduced contrast and resolution, as demonstrated in Figure 6, which underscores the need for advanced pre-processing to enhance image quality prior to ML-based segmentation. This challenge is part of a broader effort to adapt and evaluate computer-aided diagnostic (CAD) tools for glioma detection in resource-limited settings, aiming to bridge the gap between research and clinical practice.

Figure 6. Typical clinical brain MRI obtained in Sub-Saharan Africa (left) compared to a representative case from the 2021 BraTS challenge (right). The images display notably lower resolution—especially in the sagittal plane—when compared to standard clinical imaging from high-income regions. These variations in image quality and acquisition parameters can pose additional challenges for automated algorithms. Figure adapted from [25], licensed under CC BY 4.0: https://creativecommons.org/licenses/by/4.0/ (accessed on 25 September 2024).

The MICCAI-CAMERA-Lacuna Fund BraTS-Africa 2023 Challenge has assembled the largest publicly available retrospective cohort of pre-operative glioma MRI scans from adult Africans, including both low-grade glioma (LGG) and glioblastoma/high-grade glioma (GBM/HGG). The BraTS-Africa challenge involves developing machine learning algorithms to automatically segment intracranial gliomas into three distinct classes using a new 3-label system. The sub-regions for evaluation are enhancing tumor (ET), non-enhancing tumor core (NETC), and surrounding non-enhancing FLAIR hyperintensity (SNFH), which are crucial for enhancing diagnostic accuracy and treatment planning in these underserved populations [10,25].

- Segmentation—meningioma: ASNR-MICCAI BraTS Intracranial Meningioma Challenge: Meningioma is the most common primary intracranial tumor in adults and often causes significant morbidity. While about 80% of these tumors are benign WHO grade 1 meningiomas, which can be managed effectively through observation or therapy, the more aggressive grade 2 and 3 meningiomas pose greater risks and frequently recur despite comprehensive treatment. Currently, there is no effective noninvasive technique for determining the grade or aggressiveness of a meningioma, or for predicting outcomes.

Automated brain MRI tumor segmentation has evolved into a clinically useful tool that provides precise measurements of tumor volume, aiding surgical and radiotherapy planning and the monitoring of treatment response. Yet most segmentation research has focused on gliomas. Meningiomas present unique segmentation challenges because of their extra-axial location and frequent involvement of the skull base. Moreover, since meningiomas are often diagnosed through imaging alone, accurate MRI analysis is crucial for effective treatment planning.

The aim of the BraTS 2023 Meningioma Challenge was to develop an automated multi-compartment brain MRI segmentation algorithm specifically for meningiomas. This tool was intended not only to assist in accurate surgical and radiotherapy planning but also to pave the way for future research into meningioma classification, aggressiveness evaluation, and recurrence prediction based solely on MRI. All meningioma MRI scans in this challenge were acquired pre-operatively and before any treatment. The objective was to automate the segmentation of these tumors using a three-label system: enhancing tumor (ET), non-enhancing tumor core (NETC), and surrounding non-enhancing FLAIR hyperintensity (SNFH) [10].

- Segmentation—brain metastases: ASNR-MICCAI BraTS Brain Metastasis Challenge: Brain metastases are the most prevalent type of CNS malignancy in adults and pose significant challenges for clinical assessment, mainly because a single patient commonly has multiple, often small, metastases. In addition, the time required to analyze multiple lesions meticulously across consecutive scans makes detailed evaluations difficult. Automated segmentation tools for brain metastases are therefore vital for improving patient care. Precisely detecting small metastatic lesions, especially those under 5 mm, is crucial for patient prognosis, as overlooking even a single lesion can result in treatment delays and repeated medical procedures. Moreover, the total volume of brain metastases is a critical indicator of patient outcomes, yet it remains unmeasured in routine clinical settings owing to the lack of effective volumetric segmentation tools.

Addressing this requires segmentation algorithms capable of detecting all lesions and accurately quantifying their volume. While some algorithms perform well on larger metastases, they often fail to detect or accurately segment smaller ones. The inclusion criteria for the BraTS 2023 Brain Metastases challenge were MRI scans showing untreated brain metastases with all four MRI modalities available. Exclusion criteria were prior treatment effects, missing required MRI sequences, and poor image quality due to motion or other significant artifacts. Cases with post-treatment changes were deferred to BraTS-METS 2024.

The dataset comprises treatment-naive brain metastasis mpMRI scans from various institutions, acquired under standard clinical protocols. A total of 1303 studies were annotated, of which 402 studies comprising 3076 lesions were made publicly available for the competition. Additionally, 31 studies with 139 lesions were set aside for validation, and 59 studies with 218 lesions were designated for testing. Notably, the Stanford University dataset, although publicly accessible, was excluded from the primary dataset because it lacks T2 sequences, but it was available for optional additional training [10,26].

- Segmentation—pediatric tumors: ASNR-MICCAI BraTS Pediatrics Tumor Challenge: The ASNR-MICCAI BraTS Pediatrics Tumor 2023 Challenge was the first in the BraTS series to focus on pediatric brain tumors, an area of significant concern because pediatric central nervous system tumors are the leading cause of cancer-related mortality in children. The five-year survival rate for children with high-grade gliomas is less than 20%. Furthermore, because these tumors are rare, diagnosis is often delayed, treatment relies on historical methods, and clinical trials require collaboration across multiple institutions. The 2023 Pediatrics Tumor Challenge drew on a comprehensive international dataset gathered from consortia dedicated to pediatric neuro-oncology and provided the largest annotated public retrospective cohort of high-grade pediatric glioma cases. The BraTS-PEDs 2023 dataset consisted of a multi-institutional cohort of standard clinical MRI scans; variations in imaging protocols and equipment across institutions contributed to the diversity in image quality. Inclusion criteria were pediatric patients with histologically confirmed high-grade gliomas and all four mpMRI sequences available on treatment-naive scans. Exclusion criteria were images of poor quality or with artifacts that hindered reliable tumor segmentation, and patients younger than one month. Data for a total of 228 pediatric patients with high-grade gliomas were sourced from CBTN, Boston Children’s Hospital, and Yale University, and the cohort was divided into training (99 cases), validation (45 cases), and testing (84 cases) subsets [27].

- Synthesis (Global)—missing MRI: ASNR-MICCAI BraTS MRI Synthesis Challenge (BraSyn). Most segmentation algorithms depend on the availability of four standard MRI modalities: T1-weighted images with and without contrast, T2-weighted images, and FLAIR images. In clinical settings, however, some sequences may be missing because of time constraints, or may be unusable because patient movement introduced artifacts. Methods that can substitute missing modalities while preserving segmentation accuracy are therefore crucial if these algorithms are to be widely used in clinical routine. To address this, BraTS 2023 launched the Brain MR Image Synthesis (BraSyn) Challenge to evaluate methods that can realistically synthesize an absent MRI modality from the available images. The BraSyn-2023 dataset is based on the RSNA-ASNR-MICCAI BraTS 2021 dataset, comprising 1251 training cases, 219 validation cases, and 570 testing cases. For each subject in the evaluation sets, one of the four modalities was randomly withheld (‘dropout’), leaving only three available, and participants’ algorithms were expected to generate a plausible image for the missing modality. Figure 7 illustrates this design: because one modality is dropped during the validation and test phases, participants must synthesize it and still enable accurate segmentation. The synthesized images were evaluated on three criteria: their overall quality, the accuracy of segmentation within the generated images, and the performance of a subsequent tumor segmentation algorithm applied to the completed image set [28].
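
The dropout protocol can be mimicked locally when developing a synthesis model. The following is a minimal sketch under stated assumptions: the modality keys `t1n`, `t1c`, `t2w`, `t2f` are placeholders for T1, T1Gd, T2 and FLAIR, and `drop_one_modality` is a hypothetical helper, not part of any official BraSyn toolkit.

```python
import random

# Placeholder keys for T1, T1Gd, T2 and FLAIR; real file names differ per release.
MODALITIES = ("t1n", "t1c", "t2w", "t2f")


def drop_one_modality(case, rng):
    """Hide one randomly chosen modality, mimicking the BraSyn 'dropout' setup.

    Returns (available, missing): the three remaining modalities and the name
    of the one a synthesis model would be asked to generate.
    """
    if set(case) != set(MODALITIES):
        raise ValueError("expected exactly the four standard modalities")
    missing = rng.choice(MODALITIES)
    available = {name: image for name, image in case.items() if name != missing}
    return available, missing


# Example: a seeded generator makes the dropped modality reproducible per case.
rng = random.Random(2023)
case = {name: f"case0001-{name}.nii.gz" for name in MODALITIES}
available, missing = drop_one_modality(case, rng)
```

Seeding the generator keeps the simulated missing modality fixed across runs, so validation scores remain comparable between model versions.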

Figure 7. Structure of the BraSyn-2023 training, validation, and test sets. During the validation and test phases, one of the four modalities will be randomly excluded (referred to as ‘dropout’). This setup compels participants to synthesize the missing modality and maintain robust segmentation performance, reflecting real-world scenarios where complete imaging data may not always be available. Figure adapted from [28], licensed under the Creative Commons Attribution 4.0 International License: https://creativecommons.org/licenses/by/4.0/ (accessed on 25 September 2024).

- Synthesis (Local)—inpainting: ASNR-MICCAI BraTS Local Synthesis of Tissue via Inpainting: In the BraTS 2023 inpainting challenge, participants were required to develop algorithms that synthesize 3D healthy brain tissue in regions affected by glioma. The challenge arose from the need for better tools to support clinical decision-making and care, because most existing brain MR image analysis algorithms are optimized for healthy brains and may underperform on pathological images. These algorithms include, but are not limited to, brain anatomy parcellation, tissue segmentation, and brain extraction. The inpainting challenge addressed this issue by tasking participants with filling pathological brain areas with synthesized healthy tissue, using the provided images and inpainting masks (see Figure 8). Because no ground-truth healthy tissue exists within tumor regions, surrogate inpainting masks were drawn from healthy portions of the brain according to a specific protocol and provided to participants for training. During training, participants received T1 images with voided regions along with the corresponding void mask, the original T1 scans, and two additional masks: one for the tumor and one for the healthy area. 
This challenge exclusively utilized T1 scans for two main reasons: to reduce the computational demands on participants and to develop algorithms that could be generalized to other brain pathologies, as T1 scans are commonly included in MRI protocols for various conditions [29].
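
The training setup described above, voiding a surrogate healthy region and using the original T1 intensities there as the target, can be sketched as follows. This is an illustrative sketch only: `make_inpainting_sample` and `masked_mse` are hypothetical names, the surrogate mask is assumed to be given, and the challenge's actual mask-generation protocol and evaluation metrics are not reproduced here.

```python
import numpy as np


def make_inpainting_sample(t1, healthy_mask, tumor_mask):
    """Build one illustrative training pair for healthy-tissue inpainting.

    The voided region is a surrogate mask drawn from healthy tissue, so the
    original T1 intensities inside it can serve as the synthesis target.
    """
    healthy = healthy_mask.astype(bool)
    if np.any(healthy & tumor_mask.astype(bool)):
        raise ValueError("surrogate mask must not overlap the tumor")
    voided = t1.astype(np.float32)  # astype returns a copy by default
    voided[healthy] = 0.0            # blank out the region to be synthesized
    return voided, healthy


def masked_mse(pred, target, mask):
    """Mean squared error restricted to the voided voxels."""
    m = mask.astype(bool)
    return float(np.mean((pred[m].astype(np.float64) - target[m]) ** 2))
```

Restricting the loss to the void mask means the model is scored only on voxels it had to synthesize, which mirrors the idea that surrogate healthy regions provide the only available ground truth.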

Figure 8. The participant’s task is to create models that synthesize healthy brain tissue within voided regions, guided by an inpainting mask, thereby reconstructing missing anatomy. This approach enables standard analysis tools to operate on tumor-affected brains by replacing pathological regions with plausible healthy tissue. Figure adapted from [29], licensed under the non-exclusive license to distribute: https://arxiv.org/licenses/nonexclusive-distrib/1.0/license.html (accessed on 25 September 2024).

In addition to allowing standard brain image segmentation algorithms to be applied to tumor-affected brains, local inpainting also allows tumor areas to be synthetically removed from images. This could deepen understanding of the interplay between different brain regions and abnormal brain tissue, and it is crucial for tasks such as brain tumor modeling. Moreover, inpainting can help manage local artifacts such as B-field inhomogeneities, which sometimes impair the quality of brain tumor images.

- Evaluating Augmentations for BraTS: BraTS Challenge on Relevant Augmentation Techniques: The concept of data-centric machine learning focuses on improving model performance through high-quality, meaningful training data. Although data augmentation is known to improve the robustness of machine learning models, which types of augmentation benefit biomedical imaging remains uncertain. Participants were asked to develop methods for augmenting training data that improve the robustness of a baseline model on newly introduced data. The challenge is relevant to Software as a Medical Device (SaMD), which the International Medical Device Regulators Forum (IMDRF) defines as software intended for medical purposes that performs those purposes without being part of a hardware medical device. It directly addressed concerns about algorithmic bias, where model predictions could be unfairly skewed against certain groups, and sought to improve algorithm robustness. 
Specifically, participants created computational methods that augmented a dataset of medical images such that retraining a baseline AI/ML model on the augmented dataset improved accuracy and robustness on independent test data the model had not previously seen. This approach aims to help ensure that AI/ML models used in SaMD, particularly in imaging-focused applications, are both effective and reliable. The challenge used the RSNA-ASNR-MICCAI BraTS 2021 dataset for developing and testing augmentation techniques, with the goal of improving a segmentation model through enhanced training data [10].
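
To make the idea of a training-data augmentation concrete, the sketch below applies two generic transforms to one multi-modal case: a random flip along the first spatial axis and a per-modality intensity rescaling. It is a hypothetical example, not the challenge's baseline model or any submitted method; the array layout `(C, X, Y, Z)` and the 0.9 to 1.1 scaling range are assumptions chosen for illustration.

```python
import numpy as np


def augment(image, label, rng):
    """Randomly flip and rescale intensities of one multi-modal case.

    image: array of shape (C, X, Y, Z); label: integer mask of shape (X, Y, Z).
    Spatial transforms are applied to image and label together so they stay
    aligned; intensity transforms change only the image.
    """
    if rng.random() < 0.5:
        image = np.flip(image, axis=1)  # first spatial axis of the image
        label = np.flip(label, axis=0)  # the same spatial axis of the label
    # Independent scale factor per modality, broadcast over the spatial axes.
    scale = rng.uniform(0.9, 1.1, size=(image.shape[0], 1, 1, 1))
    image = image * scale
    return np.ascontiguousarray(image), np.ascontiguousarray(label)


# Usage: rng = np.random.default_rng(0); img_aug, lab_aug = augment(img, lab, rng)
```

The key design constraint is that any geometric change must be applied identically to the image and its segmentation, whereas intensity changes must never touch the integer label map.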

- Pre-Processing Data Protocol: The standardized pre-processing protocol established by BraTS has been applied to all mpMRI scans.
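
In releases that follow the standard BraTS protocol, scans are co-registered to a common anatomical template (SRI24), resampled to 1 mm isotropic resolution, and skull-stripped, which typically yields volumes of 240 × 240 × 155 voxels. A quick sanity check for that geometry can be sketched as below; the function name and tolerance are assumptions, and individual sub-challenges should be checked against their own documentation.

```python
# Geometry commonly produced by the BraTS protocol (1 mm isotropic, SRI24 grid).
BRATS_SHAPE = (240, 240, 155)
BRATS_SPACING_MM = (1.0, 1.0, 1.0)


def conforms_to_brats_geometry(shape, spacing_mm, tol=1e-3):
    """Return True if a 3D volume matches the usual BraTS grid and voxel size."""
    if tuple(shape) != BRATS_SHAPE or len(spacing_mm) != 3:
        return False
    return all(abs(s - ref) <= tol for s, ref in zip(spacing_mm, BRATS_SPACING_MM))
```

With nibabel, for instance, the inputs can come from `img = nibabel.load(path)` as `img.shape` and `img.header.get_zooms()`.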

- Annotation Method:

- Segmentation—Adult Glioma Dataset: The BraTS Adult Glioma training and validation data were identical to the RSNA-ASNR-MICCAI BraTS 2021 dataset and therefore followed the same annotation protocol. The only change, introduced in 2023, concerned the label values: the ET label was renumbered from ‘4’ to ‘3’. The annotations comprise the Gd-enhancing tumor (ET, label 3), the peritumoral edematous/invaded tissue (ED, label 2), and the necrotic tumor core (NCR, label 1) [10].
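
Because the 2023 data differ from the 2021 release only in the ET label value, masks from the older convention can be converted with a one-line remapping. The helper below is a hypothetical sketch; it refuses masks that already contain both values 3 and 4, since such a mask cannot be interpreted unambiguously.

```python
import numpy as np


def relabel_et_4_to_3(seg):
    """Map a pre-2023 BraTS glioma mask (ET = 4) to the 2023 convention (ET = 3).

    NCR (1) and ED (2) keep their values; background stays 0. The input is not
    modified.
    """
    present = set(np.unique(seg).tolist())
    if 3 in present and 4 in present:
        raise ValueError("mask appears to mix the old and new ET conventions")
    out = seg.copy()
    out[seg == 4] = 3
    return out
```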

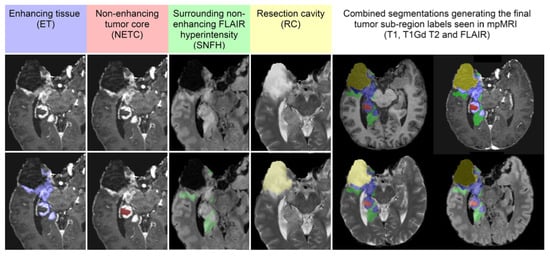

- Segmentation—BraTS-Africa Dataset: All imaging data were reviewed and manually annotated by board-certified radiologists specializing in neuro-oncology, following the BraTS pre-processing and annotation protocols. Figure 9 demonstrates the new segmentation labeling introduced in 2023, which is also used in the BraTS-Meningioma and BraTS-Metastasis 2023 challenges. This labeling system delineates three tumor sub-regions:

Figure 9. The image panels (A–C) identify the regions used for evaluating the performance of participating algorithms in several BraTS 2023 challenges, including BraTS-Africa, BraTS-Meningioma, and BraTS-Metastasis. Specifically, panel (A) shows the enhancing tumor (ET, blue) in a T1Gd scan; panel (B) displays the non-enhancing tumor core (NETC, red), also in a T1Gd scan; and panel (C) highlights the surrounding non-enhancing FLAIR hyperintensity (SNFH, green) in a T2-FLAIR scan. Panel (D) illustrates the combined segmentations that generate the final tumor sub-region labels introduced in the new segmentation labeling: enhancing tumor (ET, blue), non-enhancing tumor core (NETC, red), and edema (SNFH, green), as provided to the challenge participants. Figure adapted from [30], licensed under the non-exclusive license to distribute: https://arxiv.org/licenses/nonexclusive-distrib/1.0/license.html (accessed on 25 September 2024).

- (a)

- Enhancing tumor (ET): represents all tumor portions with a noticeable increase in T1 signal on post-contrast images compared to pre-contrast images, excluding adjacent blood vessels, intrinsic T1 hyperintensity, or abnormal signal in non-tumor tissues.

- (b)

- Non-enhancing tumor core (NETC): includes all non-enhancing tumor core areas, such as necrosis, cystic changes, calcification, and other non-enhancing components. Intrinsic T1 hyperintensity (e.g., intratumoral hemorrhage or fat) is also included.

- (c)

- Surrounding non-enhancing FLAIR hyperintensity (SNFH): covers the full extent of FLAIR signal abnormality surrounding the tumor that is not part of the tumor core. For meningiomas, this corresponds to “vasogenic edema”, excluding non-tumor-related FLAIR abnormalities such as prior infarcts or microvascular ischemic changes [10].
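
Performance on these sub-regions is usually reported on nested evaluation regions: enhancing tumor (ET), tumor core (TC = ET ∪ NETC), and whole tumor (WT = TC ∪ SNFH), scored with the Dice coefficient 2|A ∩ B| / (|A| + |B|). The sketch below assumes the integer values NETC = 1, SNFH = 2 and ET = 3 (mirroring the adult glioma numbering) and treats two empty masks as perfect agreement; both are assumptions to verify against each dataset's documentation, and this is not the official BraTS evaluation code.

```python
import numpy as np

# Assumed integer values for the sub-region labels (verify per dataset).
NETC, SNFH, ET = 1, 2, 3

REGIONS = {
    "ET": (ET,),             # enhancing tumor
    "TC": (ET, NETC),        # tumor core = ET + NETC
    "WT": (ET, NETC, SNFH),  # whole tumor = TC + SNFH
}


def region_mask(seg, region):
    """Binary mask of all voxels whose label belongs to the given region."""
    return np.isin(seg, REGIONS[region])


def dice(pred, ref):
    """Dice overlap between two binary masks; 1.0 if both are empty."""
    pred, ref = pred.astype(bool), ref.astype(bool)
    denom = pred.sum() + ref.sum()
    if denom == 0:
        return 1.0  # both empty: treated as perfect agreement in this sketch
    return float(2.0 * np.logical_and(pred, ref).sum() / denom)


def region_dice(pred_seg, ref_seg):
    """Dice score for each nested evaluation region."""
    return {r: dice(region_mask(pred_seg, r), region_mask(ref_seg, r)) for r in REGIONS}
```

Nesting the regions means a voxel mislabeled as SNFH instead of NETC is penalized in TC but not in WT, which is why results are typically reported per region rather than per raw label.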