Continuous Monitoring of Recruits During Military Basic Training to Mitigate Attrition

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Wearable Selection

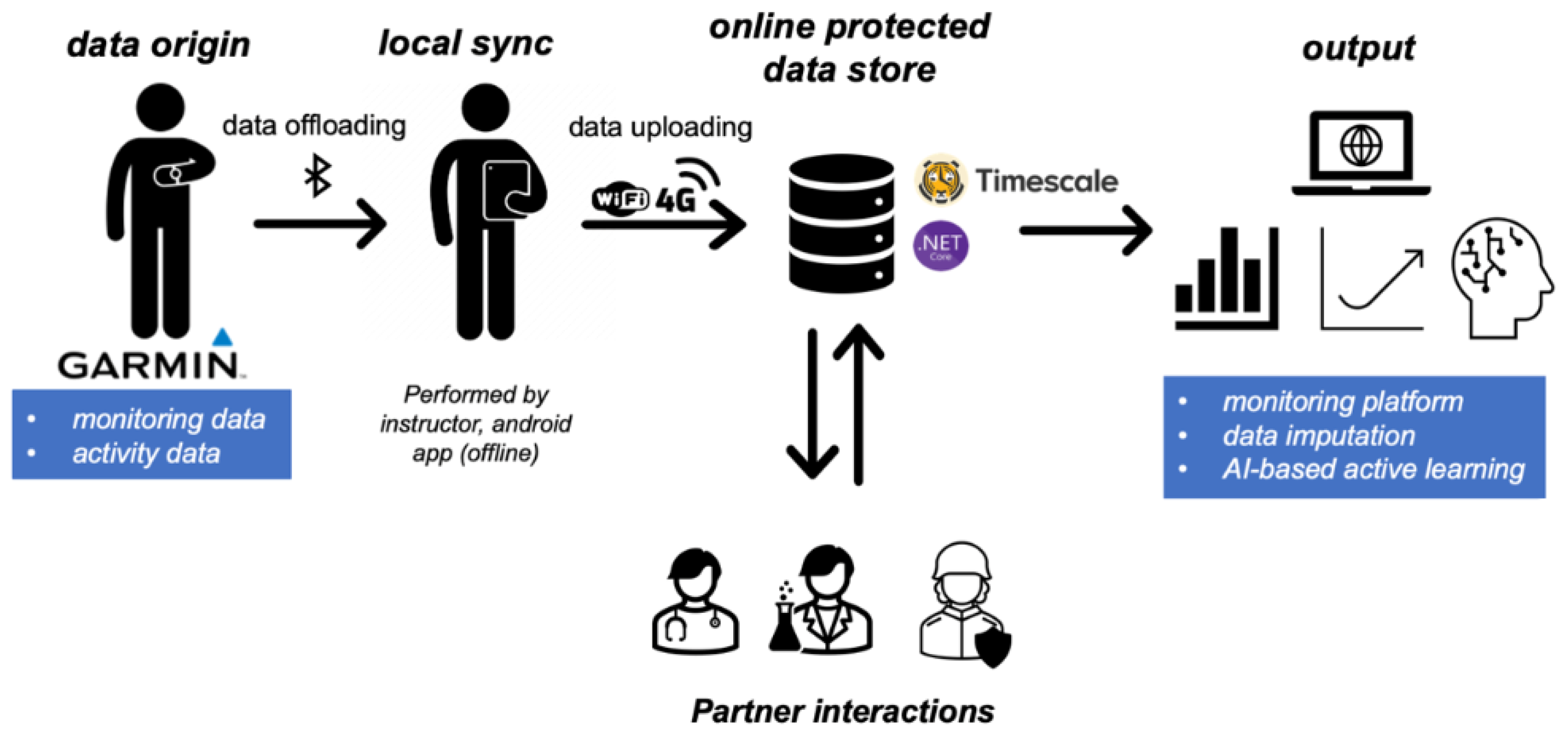

3.2. Wearable Management and Data Synchronization

3.2.1. Integrating the Garmin Health SDK

3.2.2. Offloading and Processing of the Wearable Data

3.3. Data Processing

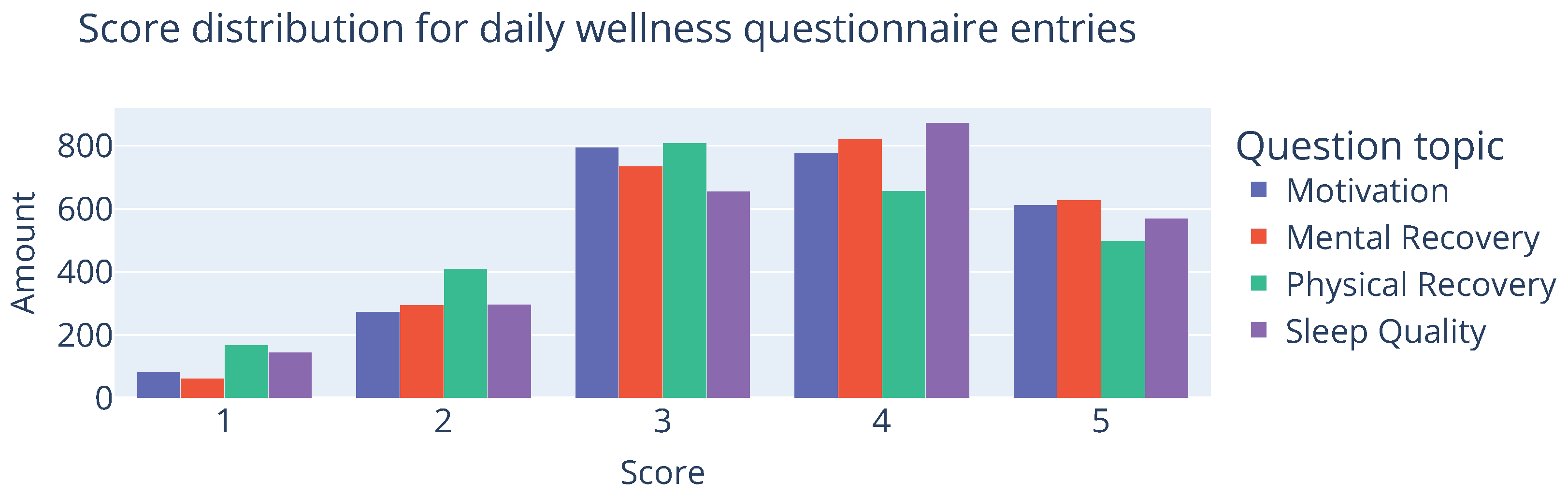

4. Dataset

5. Results and Discussion

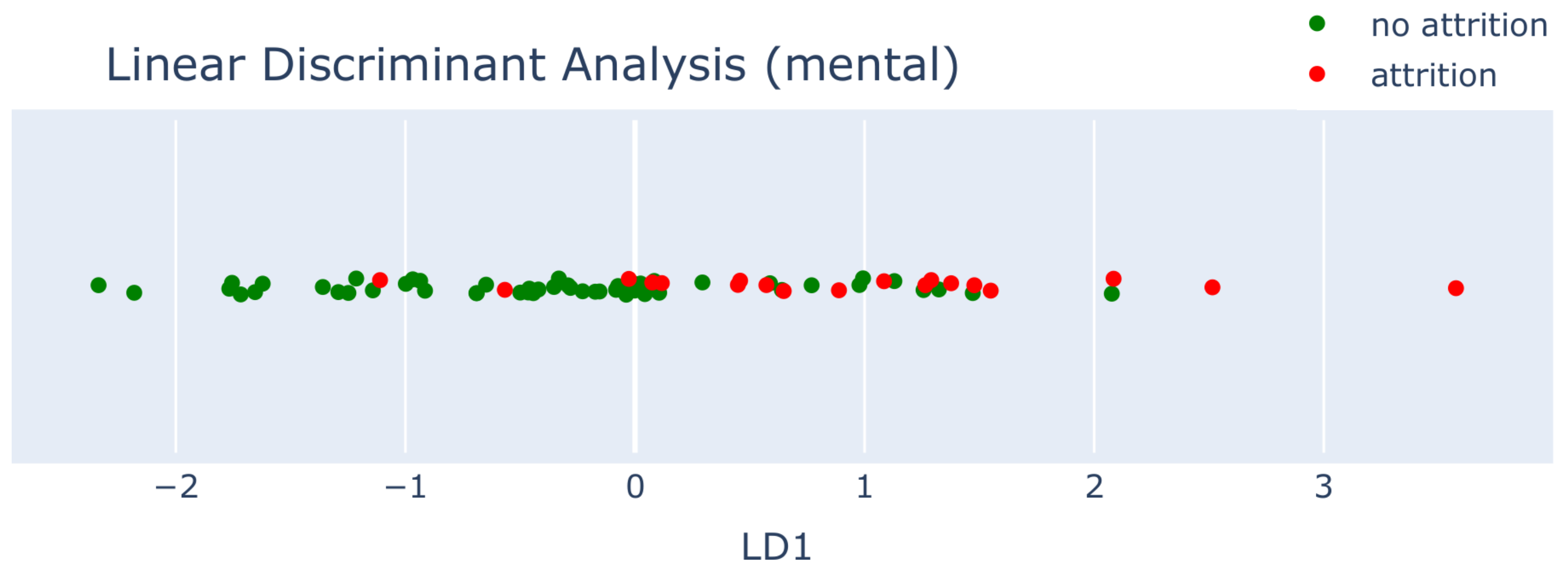

5.1. Baseline Classification

Mental and Physical Baseline

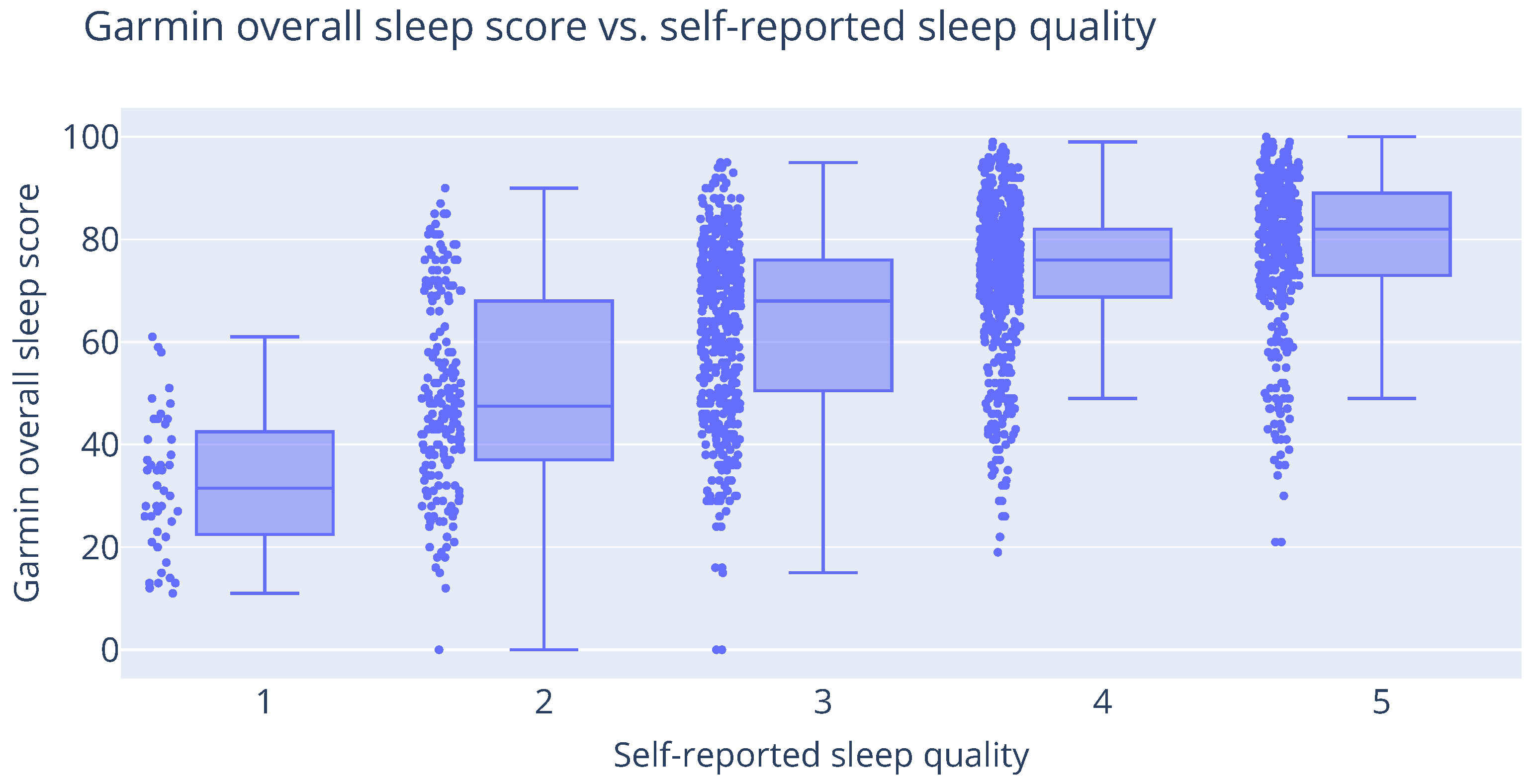

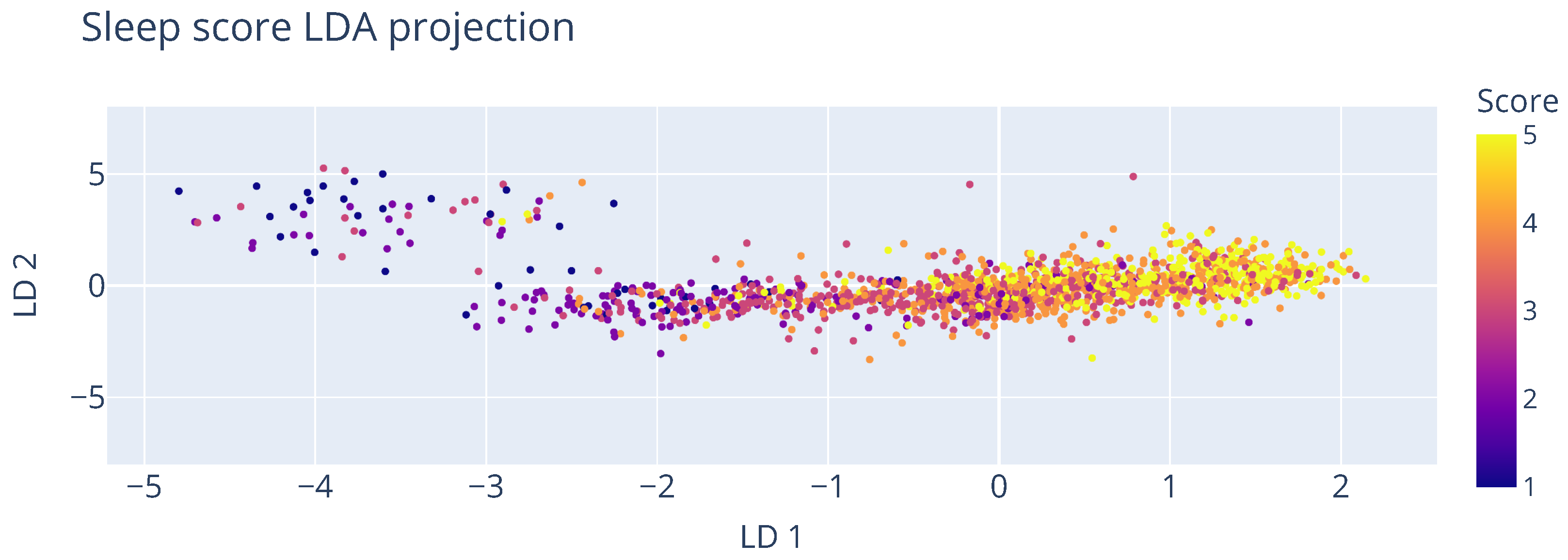

5.2. Predicting Self-Reported Sleep Scores

5.3. Garmin Fenix 7 Synchronization Benchmark

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Vrijkotte, S.; Roelands, B.; Pattyn, N.; Meeusen, R. The overtraining syndrome in soldiers: Insights from the sports domain. Mil. Med. 2019, 184, e192–e200.

2. Schram, B.; Pope, R.; Orr, R. Injuries in Australian Army full-time and part-time personnel undertaking basic training. BMC Musculoskelet. Disord. 2019, 20, 6.

3. Hauschild, V.D.; Lee, T.; Barnes, S.; Forrest, L.; Hauret, K.; Jones, B.H. The etiology of injuries in US Army initial entry training. US Army Med. Dep. J. 2018, 22–29.

4. Jat, A.S.; Grønli, T.M. Smart Watch for Smart Health Monitoring: A Literature Review. In Proceedings of the Bioinformatics and Biomedical Engineering; Rojas, I., Valenzuela, O., Rojas, F., Herrera, L.J., Ortuño, F., Eds.; Springer: Cham, Switzerland, 2022; pp. 256–268.

5. Krizea, M.; Gialelis, J.; Protopsaltis, G.; Mountzouris, C.; Theodorou, G. Empowering People with a User-Friendly Wearable Platform for Unobtrusive Monitoring of Vital Physiological Parameters. Sensors 2022, 22, 5226.

6. Wolfe, J.; Turner, K.; Caulfield, M.; Newton, T.L.; Melia, K.; Martin, J.; Goldstein, J. Gender and Trauma as Predictors of Military Attrition: A Study of Marine Corps Recruits. Mil. Med. 2005, 170, 1037–1043.

7. Molloy, J.M.; Feltwell, D.N.; Scott, S.J.; Niebuhr, D.W. Physical training injuries and interventions for military recruits. Mil. Med. 2012, 177, 553–558.

8. Huijzer, R.; de Jonge, P.; Blaauw, F.J.; Baatenburg de Jong, M.; de Wit, A.; Den Hartigh, R.J.R. Predicting special forces dropout via explainable machine learning. Eur. J. Sport Sci. 2024, 11, 1564–1572.

9. den Hartigh, R.J.R.; Huijzer, R.; Blaauw, F.J.; de Wit, A.; de Jonge, P. Early identification of dropouts during the special forces selection program. Sci. Rep. 2025, 15, 3242.

10. Kubala, A.G.; Roma, P.G.; Jameson, J.T.; Sessoms, P.H.; Chinoy, E.D.; Rosado, L.R.; Viboch, T.B.; Schrom, B.J.; Rizeq, H.N.; Gordy, P.S.; et al. Advancing a U.S. navy shipboard infrastructure for sleep monitoring with wearable technology. Appl. Ergon. 2024, 117, 104225.

11. Abuwarda, Z.; Hegazy, T.; Oetomo, A.; Morita, P.P. Using wearables to monitor and mitigate workers’ fatigue. In Proceedings of the Canadian Society of Civil Engineering Annual Conference; Springer: Berlin/Heidelberg, Germany, 2021; pp. 587–597.

12. Stephenson, M.D.; Thompson, A.G.; Merrigan, J.J.; Stone, J.D.; Hagen, J.A. Applying heart rate variability to monitor health and performance in tactical personnel: A narrative review. Int. J. Environ. Res. Public Health 2021, 18, 8143.

13. Addleman, J.S.; Lackey, N.S.; DeBlauw, J.A.; Hajduczok, A.G. Heart Rate Variability Applications in Strength and Conditioning: A Narrative Review. J. Funct. Morphol. Kinesiol. 2024, 9, 93.

14. Kutilek, P.; Volf, P.; Viteckova, S.; Smrcka, P.; Krivanek, V.; Lhotska, L.; Hana, K.; Doskocil, R.; Navratil, L.; Hon, Z.; et al. Wearable systems for monitoring the health condition of soldiers: Review and application. In Proceedings of the 2017 International Conference on Military Technologies (ICMT), Brno, Czech Republic, 31 May–2 June 2017; pp. 748–752.

15. Saxon, L.; DiPaula, B.; Fox, G.R.; Ebert, R.; Duhaime, J.; Nocera, L.; Tran, L.; Sobhani, M. Continuous Measurement of Reconnaissance Marines in Training With Custom Smartphone App and Watch: Observational Cohort Study. JMIR Mhealth Uhealth 2020, 8, e14116.

16. Grandner, M.A.; Lujan, M.R.; Ghani, S.B. Sleep-tracking technology in scientific research: Looking to the future. Sleep 2021, 44, zsab071.

17. Chinoy, E.D.; Cuellar, J.A.; Huwa, K.E.; Jameson, J.T.; Watson, C.H.; Bessman, S.C.; Hirsch, D.A.; Cooper, A.D.; Drummond, S.P.A.; Markwald, R.R. Performance of seven consumer sleep-tracking devices compared with polysomnography. Sleep 2020, 44, zsaa291.

18. Garmin. Fenix 7 Standard/Solar/Pro Series Owner’s Manual. Available online: https://www8.garmin.com/manuals/webhelp/GUID-C001C335-A8EC-4A41-AB0E-BAC434259F92/EN-US/fenix_7_Series_OM_EN-US.pdf (accessed on 22 August 2024).

19. Fitbit. Charge 5 User Manual. Available online: https://help.fitbit.com/manuals/charge_5/Content/manuals/Topics/Basics/Adjust%20settings.htm (accessed on 22 August 2024).

20. Movesense. MOVESENSE MD User Guide. Available online: https://www.movesense.com/wp-content/uploads/2021/02/Movesense-MD-User-Guide-R78-Public.pdf (accessed on 22 August 2024).

21. Equivital. Eqlifemonitor: World-Class Physiological Data Monitoring That Makes a Difference. Available online: https://equivital.com/products/eq02-lifemonitor (accessed on 22 August 2024).

22. Axiamo. Axiamo PADIS 2.0 Long Term Activity Monitoring for Soldiers. Available online: https://www.axiamo.com/axiamo-padis-2-0/ (accessed on 12 March 2025).

23. Garmin. Fenix 7 Standard/Solar/Pro Series Owner’s Manual-Battery Information. Available online: https://www8.garmin.com/manuals/webhelp/GUID-C001C335-A8EC-4A41-AB0E-BAC434259F92/EN-US/GUID-694C4E14-D875-479F-AFB1-2A6A582FF506.html (accessed on 22 August 2024).

24. Garmin. Take Control of Garmin Wearables and Stream Real-Time Data. Available online: https://developer.garmin.com/health-sdk/overview/ (accessed on 12 March 2025).

25. Timescale Inc. TimescaleDB: SQL Made Scalable for Time-Series Data. 2017. Available online: https://caxapa.ru/thumbs/880838/timescaledb.pdf (accessed on 12 June 2024).

26. Fernández, A.P.; Leenders, C.; Aerts, J.M.; Berckmans, D. Emotional States versus Mental Heart Rate Component Monitored via Wearables. Appl. Sci. 2023, 13, 807.

27. Bourgois, J.G.; Bourgois, G.; Boone, J. Perspectives and Determinants for Training-Intensity Distribution in Elite Endurance Athletes. Int. J. Sport. Physiol. Perform. 2019, 14, 1151–1156.

28. Mullie, P. Impact of a Special Operations Forces Basic Training on Body Composition and Energy Expenditure. Mil. Med. 2024, 189, e1588–e1592.

29. Xuan, X.; Pérez-Ràfols, C.; Chen, C.; Cuartero, M.; Crespo, G.A. Lactate Biosensing for Reliable On-Body Sweat Analysis. ACS Sens. 2021, 6, 2763–2771.

30. Connor, K.M.; Davidson, J.R. Development of a new resilience scale: The Connor-Davidson resilience scale (CD-RISC). Depress. Anxiety 2003, 18, 76–82.

31. Crum, A.J.; Salovey, P.; Achor, S. Rethinking stress: The role of mindsets in determining the stress response. J. Personal. Soc. Psychol. 2013, 104, 716.

32. Oginska, H.; Mojsa-Kaja, J.; Mairesse, O. Chronotype description: In search of a solid subjective amplitude scale. Chronobiol. Int. 2017, 34, 1388–1400.

33. Duckworth, A.L.; Quinn, P.D. Development and validation of the Short Grit Scale (GRIT–S). J. Personal. Assess. 2009, 91, 166–174.

34. Niebuhr, D.W.; Gubata, M.E.; Oetting, A.A.; Weber, N.S.; Feng, X.; Cowan, D.N. Personality Assessment Questionnaire as a pre-accession screen for risk of mental disorders and early attrition in U.S. Army recruits. Psychol. Serv. 2013, 10, 378–385.

35. Mobley, W.; Hand, H.H.; Baker, R.M.L.; Meglino, B.M. Conceptual and empirical analysis of military recruit training attrition. J. Appl. Psychol. 1979, 64, 10–18.

36. Taylor, D.J.; Straud, C.; Hale, W.; Grieser, E.; Garb, L.; Garb, H. Sleep difficulties as a predictor of attrition in United States Air Force recruits. Sleep Health 2020, 6, 338–343.

37. Bulmer, S.; Aisbett, B.; Drain, J.R.; Roberts, S.; Gastin, P.B.; Tait, J.; Main, L.C. Sleep of recruits throughout basic military training and its relationships with stress, recovery, and fatigue. Int. Arch. Occup. Environ. Health 2022, 95, 1331–1342.

38. Van Der Donckt, J.; Van Der Donckt, J.; Deprost, E.; Van Hoecke, S. tsflex: Flexible time series processing & feature extraction. SoftwareX 2021.

39. Garmin. What is the Sleep Score and Insights Feature on My Garmin® Watch? | Garmin Customer Support. Available online: https://support.garmin.com/en-US/?faq=DWcdBazhr097VgqFufsTk8 (accessed on 12 June 2024).

40. Garmin. What Is Advanced Sleep Monitoring in Garmin Connect? | Garmin Customer Support. Available online: https://support.garmin.com/en-US/?faq=mBRMf4ks7XAQ03qtsbI8J6 (accessed on 12 June 2024).

41. Garmin. Garmin Technology: Sleep Tracking. Available online: https://www.garmin.com/en-US/garmin-technology/health-science/sleep-tracking/ (accessed on 12 June 2024).

42. Hirshkowitz, M.; Whiton, K.; Albert, S.M.; Alessi, C.; Bruni, O.; DonCarlos, L.; Hazen, N.; Herman, J.; Adams Hillard, P.J.; Katz, E.S.; et al. National Sleep Foundation’s updated sleep duration recommendations: Final report. Sleep Health 2015, 1, 233–243.

43. Ohayon, M.; Wickwire, E.M.; Hirshkowitz, M.; Albert, S.M.; Avidan, A.; Daly, F.J.; Dauvilliers, Y.; Ferri, R.; Fung, C.; Gozal, D.; et al. National Sleep Foundation’s sleep quality recommendations: First report. Sleep Health 2017, 3, 6–19.

44. Chen, C.; Liaw, A.; Breiman, L. Using Random Forest to Learn Imbalanced Data. Department of Statistics, UC Berkeley, July 1, 2004. Available online: https://statistics.berkeley.edu/sites/default/files/tech-reports/666.pdf (accessed on 12 March 2025).

| | Garmin Fenix 7 [18] | Fitbit Charge 5 [19] | Movesense [20] | eqLifemonitor [21] | Axiamo PADIS [22] |
|---|---|---|---|---|---|
| Device type | Smartwatch | Smartwatch | Wearable sensor | Wearable sensor | Wearable sensor |
| Body position | Wrist | Wrist | Upper arm/chest | Chest | Wrist, belt, backpack |
| Price | EUR 599.99 | EUR 150 | EUR 104 | EUR 1650 | EUR 800 |
| Battery life | 18 days | 4 days | 16 days (HR), 7 days (ECG) | 2 days | 7 days |
| Charging type | Cable | Cable | Replaceable battery | Charging case | Charging case |
| Heart rate | Yes | Yes | Yes | Yes | Yes |
| Raw accel. | Yes * | No | Yes | Yes | Yes |
| Raw HRV | Yes * | Only during sleep | Yes | Yes | No |
| ECG | No | External app | Yes | Yes | No |
| GPS support | Yes | Yes | No | Yes | No |
| Synchronization | WiFi/ANT+/BLE/USB | BLE/USB | BLE ‡ | BLE/USB | BLE/USB |
| Cloud storage | Yes | Yes | No | Yes † | Yes |
| On-premise | Yes * | No | No | Yes | Yes |

(a)

| Study | Start date | End date | # recruits |
|---|---|---|---|
| Test phase 1 | 31 August 2023 | 27 October 2023 | 17 |
| Test phase 2 | 30 January 2024 | 31 May 2024 | 46 |

(b)

| Reason | Test phase 1 | Test phase 2 |
|---|---|---|
| Drop-On-Request | 3 | 7 |
| Medical | 0 | 4 |
| Safety | 0 | 0 |
| Performance | 0 | 0 |
| Total | 3 | 11 |

| Variable | Questionnaire | Reference |
|---|---|---|
| Resilience | CD-RISC-25 | [30] |
| Stress mindset | Stress mindset measure | [31] |
| Sleep | CHR-NL 1–SIF–SIC | [32] |
| Grit | Short Grit Scale | [33] |
| Motivation, self-reported health, injury, and sports history | Custom | |

| Measurement | Description |
|---|---|
| Sleep duration score | How long the recruit slept compared to globally accepted age-based recommendations [42]. |
| Awakenings count score | A high score corresponds to continuous sleep through the night, with few to no stretches of awake time. |
| Awake time score | Score based on the total time spent awake during the recorded sleep interval. |
| Interruptions score | Score based on the number of times you are awake for longer than 5 min. |
| Light sleep score | Score based on time spent in the first stage of sleep. Eye movements and muscle activity slow during light sleep as your body gets ready for deep sleep. |
| Deep sleep score | Score based on time spent in the deep sleep stage. Eye and muscle movements stop completely. Your heart rate and breathing slow. This stage can be referred to as restoration mode, where the body will recover, building bone and muscle, and boosting your immune system. |
| REM sleep score | Score based on time spent in the REM sleep stage. Brain activity is almost as high as when you are awake. |
| Sleep quality score | Quality aspects of the sleep score come from a combination of sleep architecture, stress data, interruptions during the night, and other factors [43]. |
| Sleep recovery score | N/A; Garmin provides no description of this score. |
| Sleep restlessness score | This feature indicates sudden movement, typically detected in light sleep. |
| Overall sleep score | Calculated based on a blend of how long you slept, how well you slept, and evidence of recovery activity occurring in your autonomic nervous system derived from heart rate variability data. This score is calculated on a scale of 0–100 (Excellent: 90–100, Good: 80–89, Fair: 60–79, Poor: Below 60). |
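
The category bands listed for the overall sleep score can be expressed as a small lookup. The following is a minimal sketch, not Garmin's implementation: the function name `sleep_score_category` is hypothetical, and the handling of non-integer scores (for example, 89.5 falling into "Good") is an assumption, since the table only lists integer ranges.

```python
def sleep_score_category(score: float) -> str:
    """Map an overall sleep score (0-100) to the bands listed in the table.

    Bands: Excellent 90-100, Good 80-89, Fair 60-79, Poor below 60.
    Assumption: fractional scores fall into the band of the next lower bound.
    """
    if not 0 <= score <= 100:
        raise ValueError(f"score must lie in [0, 100], got {score}")
    if score >= 90:
        return "Excellent"
    if score >= 80:
        return "Good"
    if score >= 60:
        return "Fair"
    return "Poor"


# Illustrative values only; these are not measurements from the study.
for value in (95, 84, 60, 42):
    print(value, sleep_score_category(value))
```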

| Split | Score 1 (train) | Score 1 (test) | Score 2 (train) | Score 2 (test) | Score 3 (train) | Score 3 (test) | Score 4 (train) | Score 4 (test) | Score 5 (train) | Score 5 (test) | Recruits (train) | Recruits (test) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Split 1 | 36 | 5 | 152 | 19 | 395 | 47 | 452 | 101 | 259 | 123 | 26 | 7 |
| Split 2 | 29 | 12 | 123 | 48 | 330 | 112 | 422 | 131 | 328 | 54 | 26 | 7 |
| Split 3 | 33 | 8 | 126 | 45 | 304 | 138 | 434 | 119 | 334 | 48 | 26 | 7 |
| Split 4 | 31 | 10 | 131 | 40 | 366 | 76 | 438 | 115 | 305 | 77 | 26 | 7 |
| Split 5 | 35 | 6 | 152 | 19 | 373 | 87 | 466 | 87 | 302 | 80 | 28 | 5 |
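
The recruit counts in the table (26/7 in four splits, 28/5 in the fifth) suggest that each recruit's nights are kept entirely in either the training or the test set. The sketch below shows one way such a recruit-wise split could be produced with the standard library only. It is an illustration under that assumption, not the authors' procedure: the function name `recruit_folds`, the shuffling seed, and the toy recruit identifiers are all hypothetical.

```python
import math
import random


def recruit_folds(recruit_ids, n_splits=5, seed=0):
    """Yield (train_rows, test_rows) index lists with each recruit on one side only.

    recruit_ids holds one entry per sample (e.g. per night), naming its recruit.
    Recruits are shuffled and cut into consecutive chunks of ceil(n / n_splits),
    so fewer than n_splits folds are produced when there are very few recruits.
    """
    recruits = sorted(set(recruit_ids))
    if not recruits:
        return
    random.Random(seed).shuffle(recruits)
    size = math.ceil(len(recruits) / n_splits)
    for start in range(0, len(recruits), size):
        held_out = set(recruits[start:start + size])
        train = [i for i, r in enumerate(recruit_ids) if r not in held_out]
        test = [i for i, r in enumerate(recruit_ids) if r in held_out]
        yield train, test


# Toy data: 33 hypothetical recruits with 3 nights each (not the study data).
ids = [f"r{n:02d}" for n in range(33) for _ in range(3)]
test_sizes = [len({ids[i] for i in test}) for _, test in recruit_folds(ids)]
print(test_sizes)  # [7, 7, 7, 7, 5]
```

With 33 recruits and five splits, this chunking yields test sets of 7, 7, 7, 7, and 5 recruits, the same sizes as in the table. The per-score sample counts will still differ from split to split because recruits contribute different numbers of nights and scores.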

| | Score 1 | Score 2 | Score 3 | Score 4 | Score 5 | Macro MAE |
|---|---|---|---|---|---|---|
| Split 1 | 0.00 | 0.53 | 1.00 | 1.01 | 1.21 | 0.75 ± 0.44 |
| Split 2 | 0.67 | 0.94 | 0.92 | 0.76 | 0.61 | 0.79 ± 0.13 |
| Split 3 | 0.75 | 1.02 | 0.93 | 0.79 | 0.48 | 0.79 ± 0.18 |
| Split 4 | 0.50 | 0.90 | 0.87 | 0.74 | 0.73 | 0.75 ± 0.14 |
| Split 5 | 0.17 | 0.90 | 0.87 | 0.76 | 0.53 | 0.64 ± 0.27 |
| Classwise MAE | 0.42 ± 0.29 | 0.86 ± 0.17 | 0.92 ± 0.05 | 0.81 ± 0.10 | 0.71 ± 0.26 | |
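
The macro MAE column can be read as the unweighted mean of the five per-score MAE values, so that rare scores count as much as frequent ones. The sketch below computes per-class MAE from predictions and then averages it. The helper names are hypothetical, and the use of the population standard deviation for the "±" term is an assumption that happens to reproduce the Split 1 entry from the rounded values in the table.

```python
from collections import defaultdict
from statistics import fmean, pstdev


def classwise_mae(y_true, y_pred):
    """Mean absolute error computed separately for each true class."""
    errors = defaultdict(list)
    for truth, pred in zip(y_true, y_pred):
        errors[truth].append(abs(truth - pred))
    return {label: fmean(errs) for label, errs in sorted(errors.items())}


def macro_mae(per_class):
    """Unweighted mean and population std of the per-class MAE values."""
    values = list(per_class)
    return fmean(values), pstdev(values)


# Per-class MAE values for Split 1, copied from the table above.
split_1 = [0.00, 0.53, 1.00, 1.01, 1.21]
mean, spread = macro_mae(split_1)
print(f"{mean:.2f} ± {spread:.2f}")  # 0.75 ± 0.44

# Toy predictions (invented for illustration) showing the per-class step.
print(classwise_mae([1, 1, 5], [1, 2, 3]))  # {1: 0.5, 5: 2.0}
```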

| # Watches | Round 1 Ind. | Round 1 Agg. | Round 2 Ind. | Round 2 Agg. | Round 3 Ind. | Round 3 Agg. | Round 4 Ind. | Round 4 Agg. | Round 5 Ind. | Round 5 Agg. | Mean Agg. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 109 | - | 128 | - | 156 | - | 125 | - | 123 | - | 128 ± 15 |
| 2 | 318 ± 7 | 324 | 343 ± 15 | 354 | 217 ± 6 | 234 | 271 ± 12 | 290 | 276 ± 36 | 302 | 300 ± 40 |
| 3 | 346 ± 52 | 391 | 459 ± 31 | 492 | 217 ± 69 | 369 | 414 ± 18 | 454 | 401 ± 41 | 458 | 425 ± 45 |
| 4 | 476 ± 181 | 631 | 549 ± 31 | 600 | 434 ± 184 | 579 | 451 ± 162 | 585 | 521 ± 51 | 598 | 599 ± 18 |
| 5 | 514 ± 216 | 730 | 528 ± 200 | 719 | 557 ± 151 | 701 | 579 ± 290 | 818 | 615 ± 161 | 755 | 744 ± 41 |
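
To get a rough feel for how the aggregate synchronization time grows with the number of watches, one can fit a straight line through the "Mean Agg." column. The sketch below does this with an ordinary least-squares fit written out by hand. It is an illustrative calculation on the reported means only, it is not an analysis from the paper, and the function name `fit_line` is hypothetical; times are in whatever unit the table uses.

```python
from statistics import fmean


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    slope = s_xy / s_xx
    return slope, y_bar - slope * x_bar


# Number of watches and the corresponding "Mean Agg." values from the table.
watches = [1, 2, 3, 4, 5]
mean_agg = [128, 300, 425, 599, 744]

slope, intercept = fit_line(watches, mean_agg)
print(f"slope ≈ {slope:.1f} per watch, intercept ≈ {intercept:.1f}")
```

On these five points the fitted slope is about 153 time units per additional watch. With so few points and no account of the per-round variance, the fit is a descriptive summary rather than a scaling law.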

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Decorte, R.; Vanhaeverbeke, J.; VanDen Berghe, S.; Slembrouck, M.; Verstockt, S. Continuous Monitoring of Recruits During Military Basic Training to Mitigate Attrition. Sensors 2025, 25, 1828. https://doi.org/10.3390/s25061828