Method for Using Functional Near-Infrared Spectroscopy (fNIRS) to Explore Music-Induced Brain Activation in Orchestral Musicians in Concert

Abstract

1. Introduction

- How will a 3D model representation of the fNIRS hemodynamic changes associated with performing the violin in a symphony orchestra change in real time?

- Will a method for studying and averaging the hemodynamic changes over recordings that span the full duration of each piece reveal systematic changes related to each performer?

- Will a method for estimating the sources responsible for the hemodynamic changes show alterations in the most active brain regions throughout the seven concerts?

- According to the Predictive Coding of Music (PCM) model, the brain regions that show characteristic hemodynamic changes should change with experience. Will this technological setup corroborate this prediction?

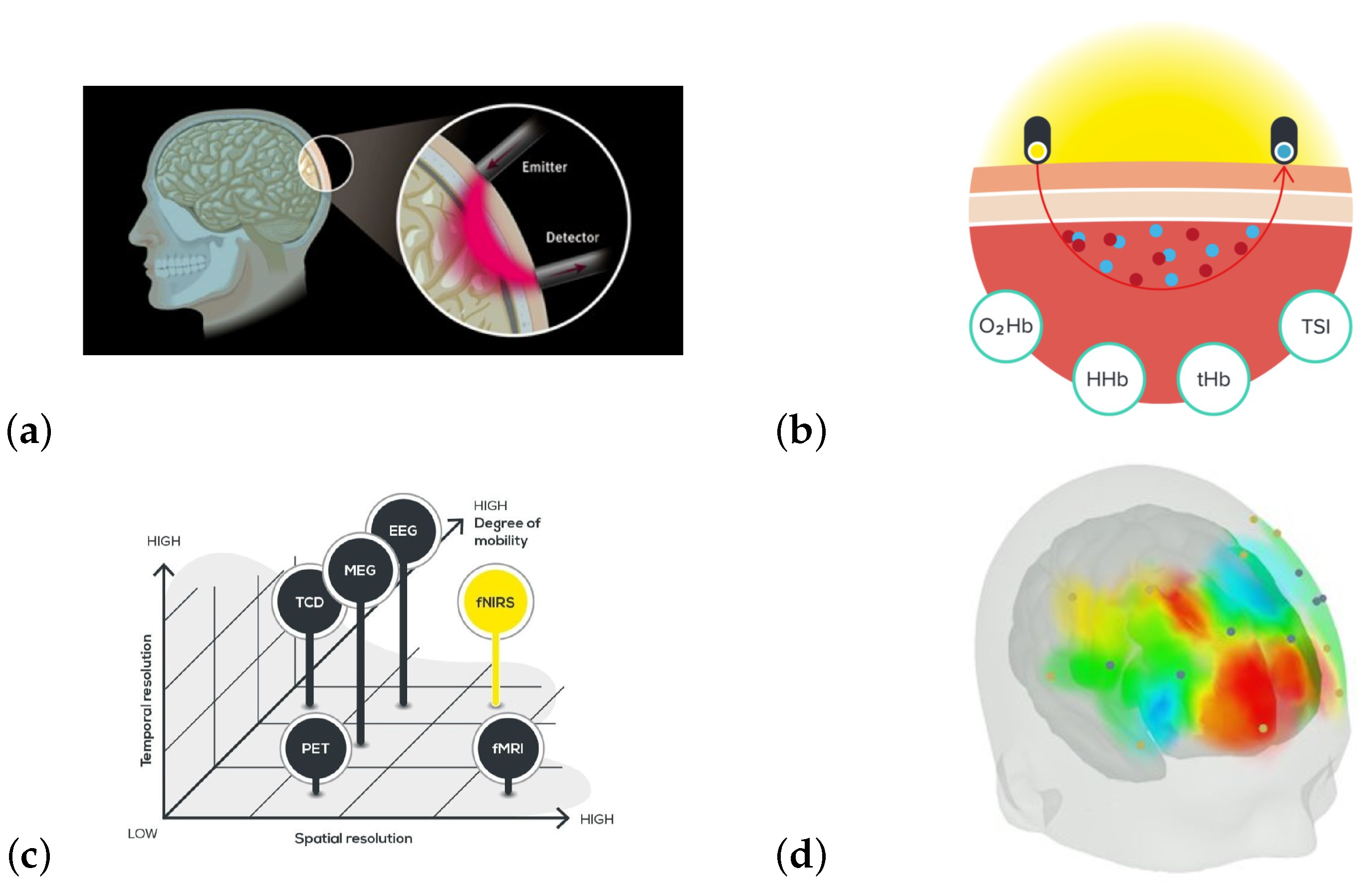

Measuring Music-Induced Brain Activation

2. Materials and Methods

2.1. Motor Paradigm, Live Concerts

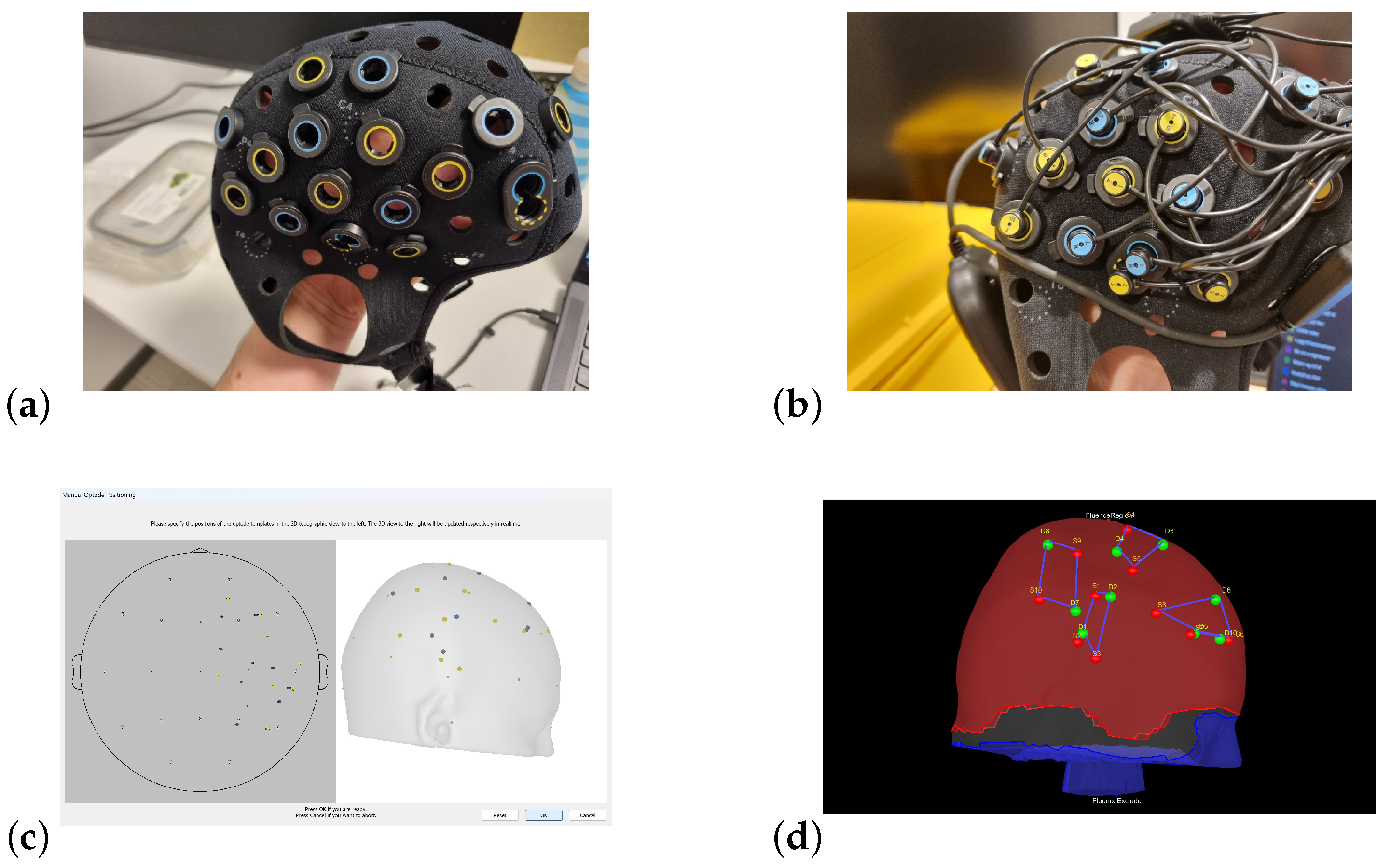

2.2. fNIRS Acquisition Protocol

2.3. fNIRS Real-Time Data Visualization

2.4. fNIRS Preprocessing

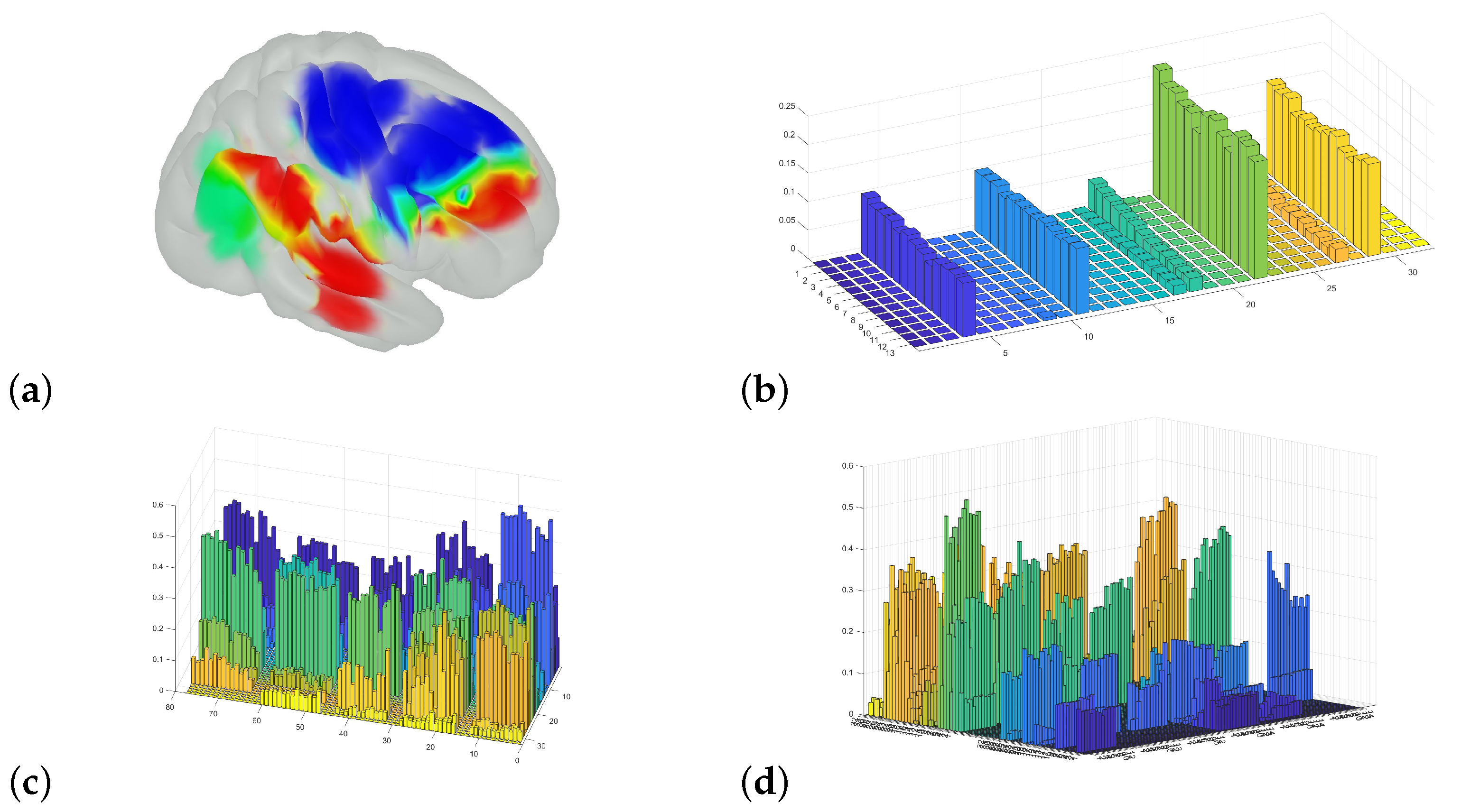

2.5. Estimating Sources of Hemodynamic Change
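Estimating sources from channel data is an inverse problem: a forward (sensitivity) matrix G links cortical sources x to channel measurements y, and x must be recovered from relatively few channels. The NIRSTORM plugin for Brainstorm provides minimum-norm estimation (MNE) for this purpose. The sketch below illustrates the generic Tikhonov-regularized minimum-norm estimate, x = G^T (G G^T + λI)^(-1) y, in plain Python. It is a simplified illustration under stated assumptions, not the reconstruction used in this study: the function names, the toy matrix and the value of λ (`lam`) are hypothetical, and in practice G would be derived from the head model and the precomputed light fluences.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented matrix [a | b]; copied so the caller's lists are not modified.
    m = [list(map(float, row)) + [float(bi)] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular; increase the regularization")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def minimum_norm_estimate(g, y, lam):
    """Tikhonov-regularized minimum-norm estimate x = G^T (G G^T + lam*I)^-1 y.

    g   -- hypothetical forward/sensitivity matrix, n_channels rows by n_sources columns
    y   -- one hemodynamic value per channel (e.g. a change in HbO)
    lam -- regularization parameter (illustrative value chosen by the user)
    """
    n_ch, n_src = len(g), len(g[0])
    # Channel-space Gram matrix G G^T with lam added on the diagonal.
    gram = [
        [sum(g[i][k] * g[j][k] for k in range(n_src)) + (lam if i == j else 0.0)
         for j in range(n_ch)]
        for i in range(n_ch)
    ]
    w = solve(gram, y)
    # Map the channel-space weights back to source space: x = G^T w.
    return [sum(g[i][s] * w[i] for i in range(n_ch)) for s in range(n_src)]


# Toy example: one channel equally sensitive to two sources.
print(minimum_norm_estimate([[1.0, 1.0]], [2.0], 0.0))  # [1.0, 1.0]
```

With one channel equally sensitive to two sources, the estimate splits the signal evenly between them, which is the spatial smoothing characteristic of minimum-norm solutions; larger values of `lam` shrink the estimate toward zero.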

2.5.1. Overall Channel Average

2.5.2. Changes, Initial Sections

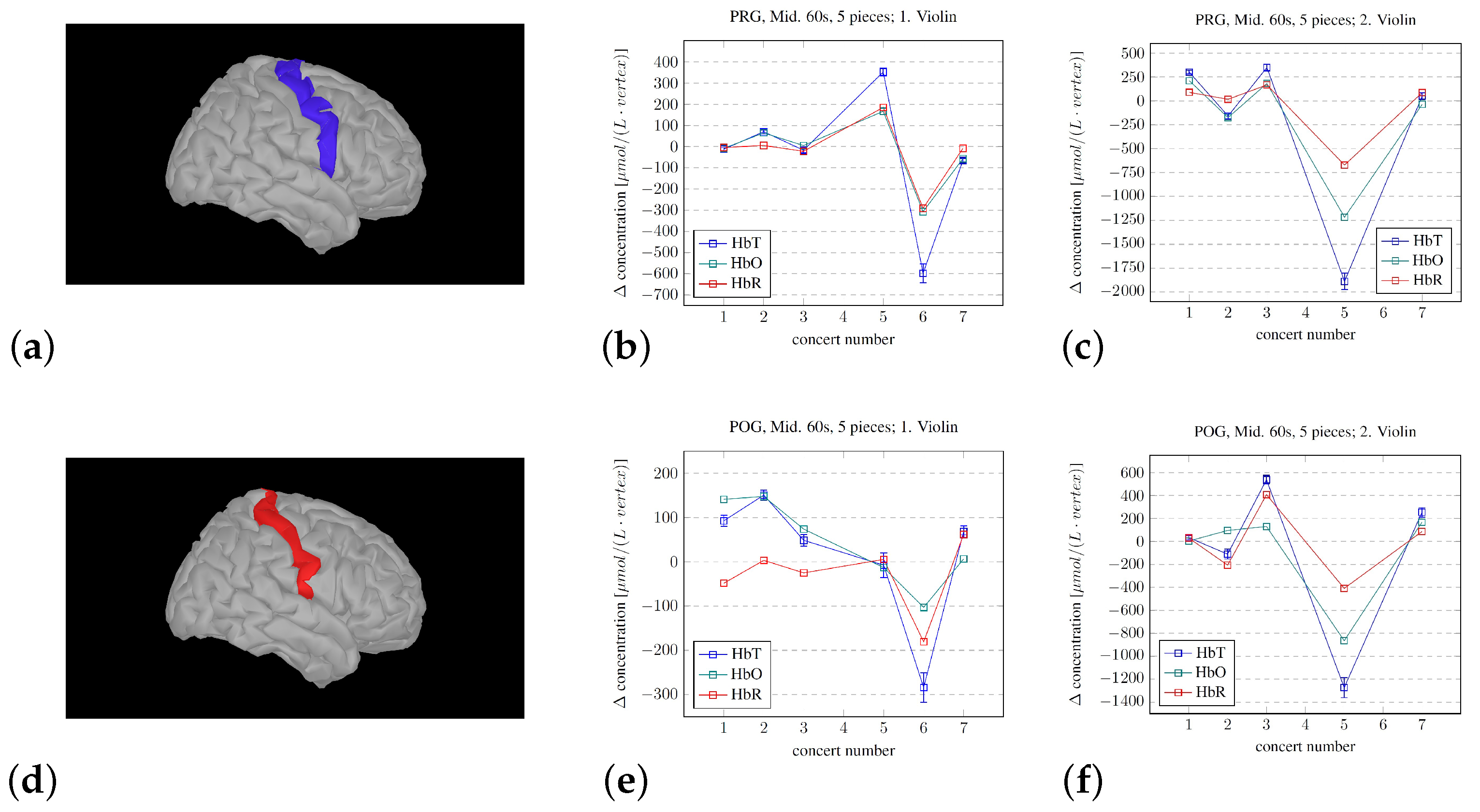

2.5.3. Changes, Mid Sections

2.6. Averaging and Confidence Intervals
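As a minimal sketch of the averaging and confidence-interval step, assume that each channel's hemodynamic change is first averaged over the full duration of a piece and that the per-channel averages are then combined into an overall mean with a 95% confidence interval based on the normal approximation. The channel names, sample values and function names are hypothetical, and the exact averaging and interval procedure used in the study may differ (with few values, a t-based interval would be wider).

```python
from math import sqrt
from statistics import NormalDist, fmean, stdev


def piece_average(samples):
    """Average hemodynamic change (e.g. change in HbO) of one channel over a whole piece."""
    return fmean(samples)


def mean_with_ci(values, level=0.95):
    """Mean of `values` with a normal-approximation confidence interval.

    Returns (mean, lower, upper).
    """
    if len(values) < 2:
        raise ValueError("need at least two values for a confidence interval")
    m = fmean(values)
    sem = stdev(values) / sqrt(len(values))   # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level=0.95
    return m, m - z * sem, m + z * sem


# Hypothetical per-channel samples recorded during one piece.
channels = {
    "ch1": [0.10, 0.30, 0.20],
    "ch2": [0.40, 0.20, 0.30],
    "ch3": [0.25, 0.35, 0.30],
}
per_channel = [piece_average(s) for s in channels.values()]
print(mean_with_ci(per_channel))
```

Averaging within each piece first and only then across channels keeps strongly autocorrelated time samples from being treated as independent observations when the interval is computed.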

3. Findings

4. Discussion

- We developed a protocol for displaying, in real time, changes in the brain that reflect the experience of performing the violin in a symphony orchestra.

- The procedure for averaging the hemodynamic changes over the full duration of each piece did reveal systematic changes. The observed difference between the musicians in this pilot study may corroborate the results of [38], who found that violinists experienced leader and follower roles while playing first and second violin, respectively. Regardless of role, however, activity in the measured regions of the right hemisphere appeared to decrease. We cannot rule out interference from hair: the musician with the most hair had a very high average short-separation channel (SSC) signal, which became the dominant signal for most channels.
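Short-separation channels (SSCs) mainly record extracerebral (scalp) hemodynamics, and a common way to use them is to regress the SSC signal out of each long channel. The sketch below assumes ordinary least-squares regression of one long channel on one SSC and uses hypothetical function names and signals; it also reports the fraction of the long channel's variance explained by the SSC (r²), where a value close to 1 corresponds to the SSC dominating the channel. It is not necessarily the regression applied in this study.

```python
from statistics import fmean


def regress_out_ssc(long_ch, ssc):
    """Remove the least-squares fit of a short-separation channel (SSC).

    Fits long = beta * ssc + intercept and returns (cleaned, beta, r2), where
    cleaned is the mean-centred residual and r2 is the fraction of the long
    channel's variance explained by the SSC.
    """
    if len(long_ch) != len(ssc):
        raise ValueError("channels must have the same number of samples")
    ml, ms = fmean(long_ch), fmean(ssc)
    d_long = [v - ml for v in long_ch]
    d_ssc = [v - ms for v in ssc]
    var_ssc = sum(d * d for d in d_ssc)
    var_long = sum(d * d for d in d_long)
    if var_ssc == 0 or var_long == 0:
        raise ValueError("a constant channel cannot be regressed")
    beta = sum(a * b for a, b in zip(d_long, d_ssc)) / var_ssc
    cleaned = [a - beta * b for a, b in zip(d_long, d_ssc)]
    r2 = beta * beta * var_ssc / var_long
    return cleaned, beta, r2


# Hypothetical signals: this long channel is entirely scaled scalp signal.
ssc = [0.0, 1.0, 2.0, 3.0, 4.0]
long_ch = [1.0, 3.0, 5.0, 7.0, 9.0]
cleaned, beta, r2 = regress_out_ssc(long_ch, ssc)
print(beta, r2)  # 2.0 1.0
```

Reporting r² alongside the cleaned signal makes it possible to flag channels in which almost nothing remains after the scalp component is removed.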

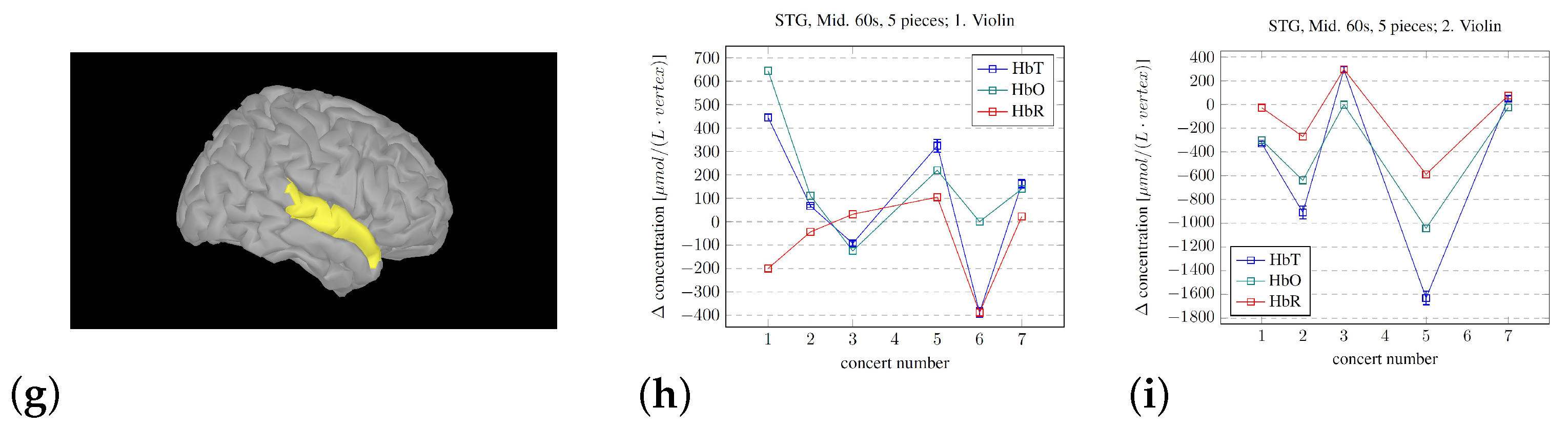

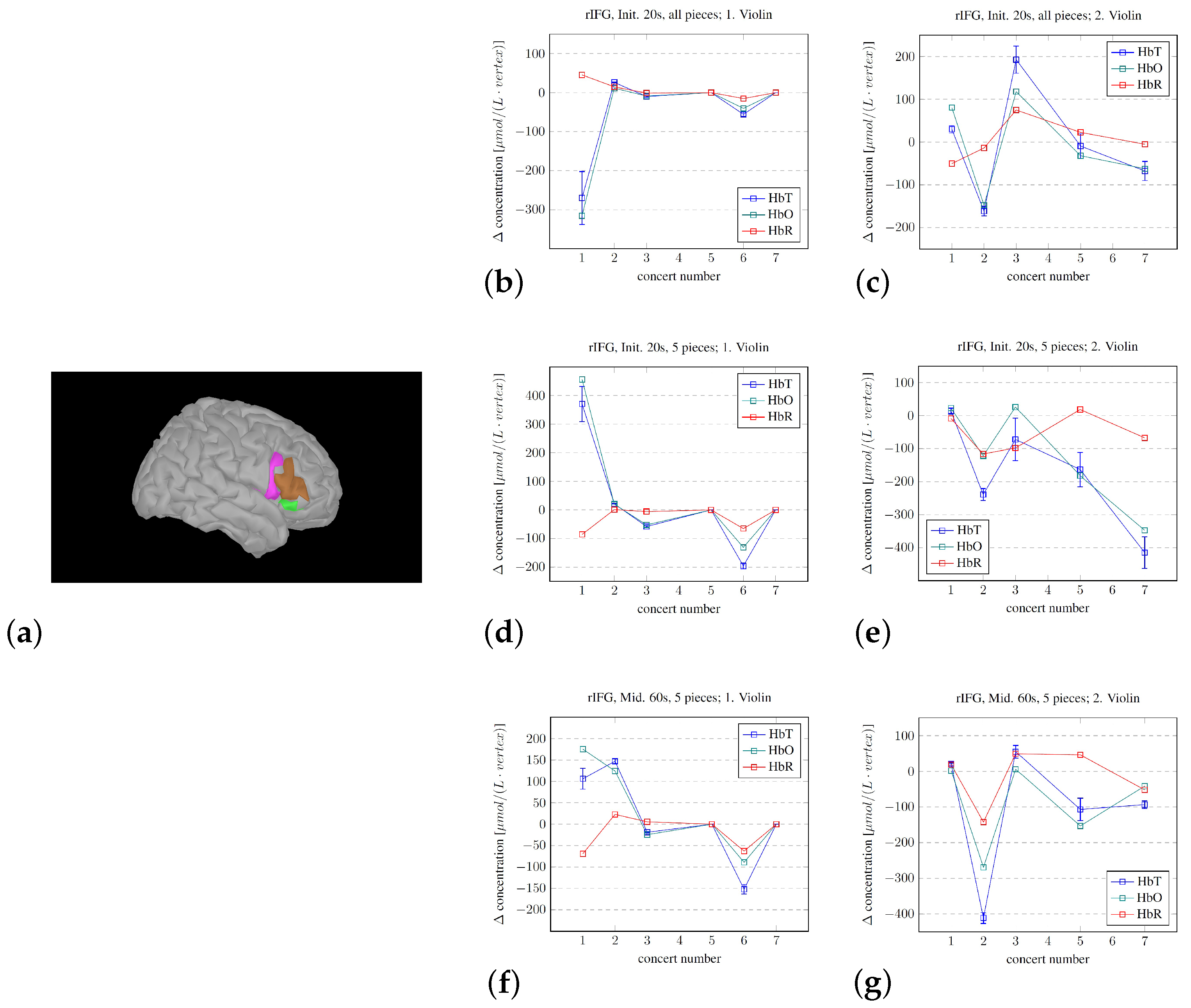

- Source modeling of the fNIRS signals in Brainstorm indicates relative changes in activity among the subcomponents across the concert sequence. Such modeling may be used to identify the brain regions that are most active or most important for the overall experience.

- Our approach has several limitations, but, at least as a proof of principle, we showed that the procedures we developed may be used to track the developing experience of professional musicians. However, the very fact that the volunteers were professionals likely reduced both the magnitude and the variability of the changes in the fNIRS signals measured in the chosen regions of the right hemisphere during the concerts. If we assume that attention is required for updating hypotheses, the decrease in the variability of activity in the right inferior frontal gyrus (rIFG) across the concerts implies that less attention was needed over time. This in turn implies that the hypotheses improved through practice and exposure, which is in line with the PCM model.
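The argument above rests on a decrease in the variability of rIFG activity across the seven concerts. As a minimal sketch, assume that variability is measured as the standard deviation of one rIFG-related signal within each concert and that the trend is summarised by an ordinary least-squares slope over concert number. Both operationalizations, the function names and the example recordings are illustrative assumptions; a negative slope corresponds to decreasing variability.

```python
from statistics import fmean, pstdev


def per_concert_variability(recordings):
    """Standard deviation of the signal within each concert recording."""
    return [pstdev(r) for r in recordings]


def trend_slope(values):
    """Ordinary least-squares slope of `values` against concert number 1..n."""
    if len(values) < 2:
        raise ValueError("need at least two concerts to estimate a trend")
    x = list(range(1, len(values) + 1))
    mx, my = fmean(x), fmean(values)
    num = sum((a - mx) * (b - my) for a, b in zip(x, values))
    den = sum((a - mx) ** 2 for a in x)
    return num / den


# Hypothetical recordings whose spread shrinks from concert to concert.
recordings = [[-3.0, 3.0], [-2.0, 2.0], [-1.0, 1.0]]
sds = per_concert_variability(recordings)
print(sds, trend_slope(sds))  # [3.0, 2.0, 1.0] -1.0
```

With only seven concerts, such a slope is descriptive; it does not by itself establish that the decrease is reliable.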

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- García-Casares, N.; Martín-Colom, J.E.; García-Arnés, J.A. Music Therapy in Parkinson’s Disease. J. Am. Med. Dir. Assoc. 2018, 19, 12.

- Sánchez, V.G.; Żelechowska, A.; Jensenius, A.R. Analysis of the Movement-Inducing Effects of Music through the Fractality of Head Sway during Standstill. J. Mot. Behav. 2020, 52, 6.

- Jensenius, A.R. Sound Actions; The MIT Press: Oslo, Norway, 2022.

- Peters, V.; Bissonnette, J.; Nadeau, D.; Gauthier-Légaré, A.; Noël, M.A. The impact of musicking on emotion regulation: A systematic review and meta-analysis. Psychol. Music. 2023, 52, 548–568.

- Pinti, P.; Tachtsidis, I.; Hamilton, A.; Hirsch, J.; Aichelburg, C.; Gilbert, S.; Burgess, P.W. The present and future use of functional near-infrared spectroscopy (fNIRS) for cognitive neuroscience. Ann. N. Y. Acad. Sci. 2020, 1464, 5–29.

- Curzel, F.; Tillmann, B.; Ferreri, L. Lights on music cognition: A systematic and critical review of fNIRS applications and future perspectives. Brain Cogn. 2024, 180, 106200.

- Ding, K.; Li, J.; Li, X.; Li, H. Understanding the Effect of Listening to Music, Playing Music, and Singing on Brain Function: A Scoping Review of fNIRS Studies. Brain Sci. 2024, 14, 751.

- Vuust, P.; Heggli, O.A.; Friston, K.J.; Kringelbach, M.L. Music in the brain. Nat. Rev. Neurosci. 2022, 23, 287–305.

- Dura, M.T. The Phenomenology of the Music-listening Experience. Arts Educ. Policy Rev. 2006, 107, 25–32.

- Schäfer, T.; Sedlmeier, P.; Städtler, C.; Huron, D. The psychological functions of music listening. Front. Psychol. 2013, 4, 511.

- Finn, S.; Fancourt, D. Chapter 9—The biological impact of listening to music in clinical and nonclinical settings: A systematic review. Prog. Brain Res. 2018, 237, 173–200.

- Davidson, J.W.; Correia, J.S. Meaningful musical performance: A bodily experience. Res. Stud. Music. Educ. 2001, 17, 70–83.

- Wrigley, W.J.; Emmerson, S.B. The experience of the flow state in live music performance. Psychol. Music. 2011, 41, 292–305.

- Volta, E.; Di Stefano, N. Using Wearable Sensors to Study Musical Experience: A Systematic Review. Sensors 2024, 24, 5783.

- Moura, N.; Dias, P.; Veríssimo, L.; Oliveira-Silva, P.; Serra, S. Solo music performance assessment criteria: A systematic review. Front. Psychol. 2024, 15, 1467434.

- Jentschke, S.; Koelsch, S. Musical training modulates the development of syntax processing in children. Neuroimage 2009, 47, 735–744.

- Fischer, C.E.; Churchill, N.; Leggieri, M.; Vuong, V.; Tau, M.; Fornazzari, L.R.; Thaut, M.H.; Schweizer, T.A. Long-Known Music Exposure Effects on Brain Imaging and Cognition in Early-Stage Cognitive Decline: A Pilot Study. J. Alzheimer’s Dis. 2021, 84, 819–833.

- Brattico, E.; Bogert, B.; Jacobsen, T. Toward a neural chronometry for the aesthetic experience of music. Front. Psychol. 2013, 4, 206.

- Koelsch, S. Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 2014, 15, 170–180.

- Zhang, H.; Zhou, Q.; Chen, H.; Hu, X.; Li, W.; Bai, Y.; Han, J.; Wang, Y.; Liang, Z.; Chen, D.; et al. The applied principles of EEG analysis methods in neuroscience and clinical neurology. Mil. Med. Res. 2023, 10, 67.

- Poliakova, E.; Conrad, A.L.; Schieltz, K.M.; O’Brien, M.J. Using fNIRS to evaluate ADHD medication effects on neuronal activity: A systematic literature review. Front. Neuroimaging 2023, 2, 1083036.

- Lv, H.; Wang, Z.; Tong, E.; Williams, L.M.; Zaharchuk, G.; Zeineh, M.; Goldstein-Piekarski, A.N.; Ball, T.M.; Liao, C.; Wintermark, M. Resting-State Functional MRI: Everything That Nonexperts Have Always Wanted to Know. AJNR Am. J. Neuroradiol. 2018, 39, 1390–1399.

- Zhang, S.; Li, X.; Lv, J.; Jiang, X.; Guo, L.; Liu, T. Characterizing and Differentiating Task-based and Resting State FMRI Signals via Two-stage Sparse Representations. Brain Imaging Behav. 2016, 10, 21–32.

- Liu, Y.; Wang, J.; Zhang, J.; Wen, H.; Zhang, Y.; Kang, H.; Wang, X.; Li, W.; He, H.; Peng, Y. Altered Spontaneous Brain Activity in Children with Early Tourette Syndrome: A Resting-state fMRI Study. Sci. Rep. 2017, 7, 4808.

- Zhang, L.; Li, W.; Wang, L.; Bai, T.; Ji, G.-J.; Wang, K.; Tian, Y. Altered functional connectivity of right inferior frontal gyrus subregions in bipolar disorder: A resting state fMRI study. J. Affect. Disord. 2018, 272, 58–65.

- Bolstad, I.; Andreassen, O.A.; Groote, I.; Server, A.; Sjaastad, I.; Kapur, S.; Jensen, J. Effects of haloperidol and aripiprazole on the human mesolimbic motivational system: A pharmacological fMRI study. Eur. Neuropsychopharmacol. 2015, 25, 2252–2261.

- Kim, H.-C.; Tegethoff, M.; Meinlschmidt, G.; Stalujanis, E.; Belardi, A.; Jo, S.; Lee, J.; Kim, D.-Y.; Yoo, S.-S.; Lee, J.-H. Mediation analysis of triple networks revealed functional feature of mindfulness from real-time fMRI neurofeedback. NeuroImage 2019, 195, 409–432.

- Xu, Z.; Liu, C.; Fan, W.; Li, S.; Li, Y. Effect of music therapy on anxiety and depression in breast cancer patients: Systematic review and meta-analysis. Sci. Rep. 2024, 14, 16532.

- Giordano, F.; Losurdo, A.; Quaranta, V.N.; Campobasso, N.; Daleno, A.; Carpagnano, E.; Gesualdo, L.; Moschetta, A.; Brienza, N. Effect of single session receptive music therapy on anxiety and vital parameters in hospitalized COVID-19 patients: A randomized controlled trial. Sci. Rep. 2022, 12, 3154.

- Zhang, Y.; Cai, J.; Zhang, Y.; Ren, T.; Zhao, M.; Zhao, Q. Improvement in Stroke-induced Motor Dysfunction by Music-supported Therapy: A Systematic Review and Meta-analysis. Sci. Rep. 2016, 6, 38521.

- Koelsch, S. Toward a Neural Basis of Music Perception—A Review and Updated Model. Front. Psychol. 2011, 2, 110.

- Brodal, H.P.; Osnes, B.; Specht, K. Listening to Rhythmic Music Reduces Connectivity within the Basal Ganglia and the Reward System. Front. Neurosci. 2017, 11, 153.

- Rajakumar, K.D.; Mohan, J. A systematic review on effect of music intervention on cognitive impairment using EEG, fMRI, and cognitive assessment modalities. Res. Eng. 2024, 22, 102224.

- Yücel, M.A.; Selb, J.J.; Huppert, T.J.; Franceschini, M.A.; Boas, D.A. Functional Near Infrared Spectroscopy: Enabling routine functional brain imaging. Curr. Opin. Biomed. Eng. 2017, 4, 78–86.

- Pereira, J.; Direito, B.; Lührs, M.; Castelo-Branco, M.; Sousa, T. Multimodal assessment of the spatial correspondence between fNIRS and fMRI hemodynamic responses in motor tasks. Sci. Rep. 2023, 13, 2244.

- Klein, F.; Debener, S.; Witt, K.; Kranczioch, C. fMRI based validation of continuous wave fNIRS of supplementary motor area activation during motor execution and motor imagery. Sci. Rep. 2022, 12, 3570.

- Chen, W.-L.; Wagner, J.; Heugel, N.; Sugar, J.; Lee, Y.-W.; Conant, L.; Malloy, M.; Heffernan, J.; Quirk, B.; Zinos, A.; et al. Functional Near-Infrared Spectroscopy and Its Clinical Application in the Field of Neuroscience: Advances and Future Directions. Front. Neurosci. 2020, 14, 724.

- Vanzella, P.; Balardin, J.B.; Furucho, R.A.; Morais, G.A.Z.; Janzen, T.B.; Sammler, D.; Sato, J.R. fNIRS Responses in Professional Violinists While Playing Duets: Evidence for Distinct Leader and Follower Roles at the Brain Level. Front. Psychol. 2019, 10, 164.

- Babiloni, F.; Astolfi, L. Social neuroscience and hyperscanning techniques: Past, present and future. Neurosci. Biobehav. Rev. 2014, 44, 76–93.

- Koelsch, S.; Vuust, P.; Friston, K. Predictive Processes and the Peculiar Case of Music. Trends Cogn. Sci. 2019, 23, 63–77.

- Banker, L.; Tadi, P. Neuroanatomy, Precentral Gyrus; StatPearls Publishing: Treasure Island, FL, USA, 2023; Volume 24. Available online: https://www.ncbi.nlm.nih.gov/books/NBK544218/ (accessed on 2 January 2025).

- DiGuiseppi, J.; Tadi, P. Neuroanatomy, Postcentral Gyrus; StatPearls Publishing: Treasure Island, FL, USA, 2023; Volume 24. Available online: https://www.ncbi.nlm.nih.gov/books/NBK549825/ (accessed on 2 January 2025).

- Purves, D.; Augustine, G.J.; Fitzpatrick, D.; Katz, L.C.; LaMantia, A.-S.; McNamara, J.O.; Williams, S.M. Neuroscience, 2nd ed.; Sinauer Associates: Sunderland, MA, USA, 2001; chapter The Auditory Cortex.

- Etchell, A.; Adhikari, A.; Weinberg, L.S.; Choo, A.L.; Garnett, E.O.; Chow, H.M.; Chang, S.-E. A systematic literature review of sex differences in childhood language and brain development. Neuropsychologia 2018, 114, 19–31.

- Hartwigsen, G.; Neef, N.E.; Camilleri, J.A.; Margulies, D.S.; Eickhoff, S.B. Functional Segregation of the Right Inferior Frontal Gyrus: Evidence From Coactivation-Based Parcellation. Cereb. Cortex 2019, 29, 1532–1546.

- Destrieux, C.; Fischl, B.; Dale, A.; Halgren, E. Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. NeuroImage 2010, 53, 1–15.

- Cheng, S.; Wang, J.; Luo, R.; Hao, N. Brain to brain musical interaction: A systematic review of neural synchrony in musical activities. Neurosci. Biobehav. Rev. 2024, 164, 105812.

- Hult, M. Stavanger Symfoniorkester. Available online: https://sso.no/om-oss/ (accessed on 25 October 2024).

- Artinis. Brite MKII. Available online: https://www.artinis.com/blogpost-all/2020/packed-with-new-features-and-remarkable-improvements-the-brite-mkii-makes-optical-imaging-easier-than-ever-7fg2r (accessed on 25 October 2024).

- ISS. fNIRS, Imagent. Available online: https://iss.com/biomedical/imagent (accessed on 27 November 2024).

- Artinis Medical Systems. Theory of NIRS. Available online: https://www.artinis.com/theory-of-nirs (accessed on 18 August 2024).

- Artinis Medical Systems. 3D Digitization and Co-Registration to the MNI Brain Template Using OxySoft. Available online: https://www.artinis.com/blogpost-all/2017/5/30/3d-digitization-and-co-registration-to-the-mni-brain-template-using-oxysoft (accessed on 21 October 2024).

- Zhou, X.; Sobczak, G.; McKay, C.M.; Litovsky, R.Y. Comparing fNIRS signal qualities between approaches with and without short channels. PLoS ONE 2024, 15, e0244186.

- Kothe, C. Lab Streaming Layer. Available online: https://labstreaminglayer.org/#/ (accessed on 25 October 2024).

- Artinis Medical Systems. OxySoft. Available online: https://www.artinis.com/oxysoft (accessed on 4 August 2024).

- The MathWorks Inc. MATLAB, version: 9.14.0.2489007 (R2023a); The MathWorks Inc.: Natick, MA, USA, 2024.

- David Boas. Homer2. Available online: https://se.mathworks.com/matlabcentral/fileexchange/54803-homer2 (accessed on 25 October 2024).

- David Boas. Homer3. Available online: https://se.mathworks.com/matlabcentral/fileexchange/97402-homer3 (accessed on 25 October 2024).

- Delaire, É.; Vincent, T.; Cai, Z.; Stojan, R.; Machado, A. NIRSTORM: A Brainstorm Plugin Specialized in the Analysis of fNIRS Data. Available online: https://github.com/Nirstorm/nirstorm (accessed on 28 September 2024).

- Delaire, É.; Vincent, T.; Cai, Z.; Grova, C. Workshop fNIRS 2022. Available online: https://github.com/Nirstorm/nirstorm/wiki/Workshop-fNIRS-2022#nirstorm (accessed on 28 August 2024).

- Vincent, T.; Cai, Z.; Machado, A.; Bherer, L.; Lina, J.-M.; Grova, C. Workshop fNIRS 2018. Available online: https://github.com/Nirstorm/nirstorm/wiki/Workshop-PERFORM-Week-2018 (accessed on 29 December 2024).

- Huang, M.X.; Mosher, J.C.; Leahy, R.M. A sensor-weighted overlapping-sphere head model and exhaustive head model comparison for MEG. Phys. Med. Biol. 1999, 44, 423.

- Tadel, F.; Bock, E.; Mosher, J.C.; Leahy, R.; Baillet, S. Brainstorm, Tutorial 20: Head Modeling. Available online: https://neuroimage.usc.edu/brainstorm/Tutorials/HeadModel (accessed on 1 January 2025).

- Baillet, S.; Mosher, J.C.; Leahy, R.M. Electromagnetic Brain Mapping. IEEE Signal Process. Mag. 2001, 18, 14–30.

- Tadel, F.; Bock, E.; Ramirez, R.R.; Mosher, J.C.; Leahy, R.M.; Baillet, S. Tutorial 22: Source Estimation. Available online: https://neuroimage.usc.edu/brainstorm/Tutorials/SourceEstimation (accessed on 29 December 2024).

- Tadel, F.; Baillet, S.; Mosher, J.C.; Pantazis, D.; Leahy, R.M. Brainstorm: A User-Friendly Application for MEG/EEG Analysis. Comput. Intell. Neurosci. 2011, 13, 879716.

- Strangman, G.E.; Li, Z.; Zhang, Q. Depth Sensitivity and Source-Detector Separations for Near Infrared Spectroscopy Based on the Colin27 Brain Template. PLoS ONE 2013, 8, e66319.

- Vincent, T. fNIRS Fluences. Available online: https://thomasvincent.xyz/nst_data/ (accessed on 20 September 2024).

- Gaser, C.; Dahnke, R.; Thompson, P.M.; Kurth, F.; Luders, E.; The Alzheimer’s Disease Neuroimaging Initiative. CAT: A computational anatomy toolbox for the analysis of structural MRI data. GigaScience 2024, 13, giae049.

- Artinis Medical Systems. oxysoft2matlab. Available online: https://github.com/jayd1860/oxysoft2matlab (accessed on 25 October 2024).

- Rubia, K.; Alegria, A.A.; Cubillo, A.I.; Smith, A.B.; Brammer, M.J.; Radua, J. Effects of Stimulants on Brain Function in Attention-Deficit/Hyperactivity Disorder: A Systematic Review and Meta-Analysis. Biol. Psychiatry 2014, 76, 616–628.

- Hart, H.; Radua, J.; Nakao, T.; Mataix-Cols, D.; Rubia, K. Meta-analysis of Functional Magnetic Resonance Imaging Studies of Inhibition and Attention in Attention-deficit/Hyperactivity Disorder. JAMA Psychiatry 2013, 70, 185–198.

- Monden, Y.; Dan, H.; Nagashima, M.; Dan, I.; Kyutoku, Y.; Okamoto, M.; Yamagata, T.; Momoi, M.Y.; Watanabe, E. Clinically-oriented monitoring of acute effects of methylphenidate on cerebral hemodynamics in ADHD children using fNIRS. Clin. Neurophysiol. 2012, 123, 1147–1157.

- Alegria, A.A.; Wulff, M.; Brinson, H.; Barker, G.J.; Norman, L.J.; Brandeis, D.; Stahl, D.; David, A.S.; Taylor, E.; Giampietro, V.; et al. Real-Time fMRI Neurofeedback in Adolescents with Attention Deficit Hyperactivity Disorder. Hum. Brain Mapp. 2017, 38, 3190–3209.

- Lam, S.L.; Criaud, M.; Lukito, S.; Westwood, S.J.; Agbedjro, D.; Kowalczyk, O.S.; Curran, S.; Barret, N.; Abbott, C.; Liang, H.; et al. Double-blind, sham-controlled randomized trial testing the efficacy of fMRI neurofeedback on clinical and cognitive measures in children with ADHD. Am. J. Psychiatry 2022, 179, 947–958.

- Gu, Y.; Yang, L.; Chen, H.; Liu, W.; Liang, Z. Improving Attention through Individualized fNIRS Neurofeedback Training: A Pilot Study. Brain Sci. 2022, 12, 862.

- Fagerland, S.M.; Berntsen, H.R.; Fredriksen, M.; Endestad, T.; Skouras, S.; Rootwelt-Revheim, M.E.; Undseth, R.M. Exploring protocol development: Implementing systematic contextual memory to enhance real-time fMRI neurofeedback. J. Electr. Bioimpedance 2024, 15, 41.

- Zinos, A.; Wagner, J.C.; Beardsley, S.A.; Chen, W.-L.; Conant, L.; Malloy, M.; Heffernan, J.; Quirk, B.; Prost, R.; Maheshwari, M.; et al. Spatial correspondence of cortical activity measured with whole head fNIRS and fMRI: Toward clinical use within subject. NeuroImage 2024, 290, 120569.

- Matziorinis, A.M.; Koelsch, S. The promise of music therapy for Alzheimer’s disease: A review. Ann. N. Y. Acad. Sci. 2022, 1516, 11–17.

- Matziorinis, A.M.; Flo, B.K.; Skouras, S.; Dahle, K.; Henriksen, A.; Hausmann, F.; Sudmann, T.T.; Gold, C.; Koelsch, S. A 12-month randomised pilot trial of the Alzheimer’s and music therapy study: A feasibility assessment of music therapy and physical activity in patients with mild-to-moderate Alzheimer’s disease. Pilot Feasibility Stud. 2023, 9, 61.

- Balinisteanu, T.; Priest, K. Neuroaesthetics: A Methods-Based Introduction; Palgrave Macmillan: Cham, Switzerland, 2024.

- Tachibana, A.; Noah, J.A.; Ono, Y.; Irie, S.; Tatsumoto, M.; Taguchi, D.; Tokuda, N.; Ueda, S. Rock music improvisation shows increased activity in Broca’s area and its right hemisphere homologue related to spontaneous creativity. BMC Res. Notes 2024, 17, 61.

- Høffding, S.; Nielsen, N.; Laeng, B. Mind surfing: Attention in musical absorption. Cogn. Syst. Res. 2024, 83, 101180.

- Klein, F. Optimizing spatial specificity and signal quality in fNIRS: An overview of potential challenges and possible options for improving the reliability of real-time applications. Front. Neuroergonomics 2024, 5, 1286586.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fagerland, S.M.; Løve, A.; Helliesen, T.K.; Martinsen, Ø.G.; Revheim, M.-E.; Endestad, T. Method for Using Functional Near-Infrared Spectroscopy (fNIRS) to Explore Music-Induced Brain Activation in Orchestral Musicians in Concert. Sensors 2025, 25, 1807. https://doi.org/10.3390/s25061807
