4.2. Evaluation Metrics

We used four common evaluation metrics, namely Mean Intersection over Union (MIoU), Mean Precision (MP), Mean Pixel Accuracy (MPA), and the Dice coefficient, to assess the model’s performance on the leaf lesion segmentation task. The definitions of these metrics are given below.

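Mean Intersection over Union (MIoU): MIoU is a standard measure of segmentation accuracy. For each class, it computes the ratio of the intersection to the union of the ground-truth and predicted regions, and then averages this ratio over all classes. It is defined as

$$
\mathrm{MIoU}=\frac{1}{k+1}\sum_{i=0}^{k}\frac{p_{ii}}{\sum_{j=0}^{k}p_{ij}+\sum_{j=0}^{k}p_{ji}-p_{ii}}
$$

where k denotes the number of disease categories (classes are indexed 0 to k, so the background counts as one additional class), i denotes the ground-truth class, j denotes the predicted class, and $p_{ij}$ is the number of pixels of class i predicted as class j. Thus $p_{ii}$ is the number of true positives for class i, while the off-diagonal terms $p_{ij}$ and $p_{ji}$ ($j \neq i$) are its false negatives and false positives, respectively; the denominator is therefore TP + FN + FP for class i.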
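To make the definition concrete, the following minimal NumPy sketch (not the authors’ evaluation code) builds the confusion matrix $p_{ij}$ from label maps and computes MIoU from it. The function names are illustrative, and skipping classes whose union is empty (via `np.nanmean`) is our assumption, since the text does not say how absent classes are handled.

```python
import numpy as np


def confusion_matrix(gt: np.ndarray, pred: np.ndarray, num_classes: int) -> np.ndarray:
    """Return p with p[i, j] = number of pixels of ground-truth class i predicted as class j."""
    gt = gt.ravel().astype(np.int64)
    pred = pred.ravel().astype(np.int64)
    # Encode each (i, j) pair as a single index and count occurrences.
    counts = np.bincount(gt * num_classes + pred, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(p: np.ndarray) -> float:
    """MIoU = mean over classes of p_ii / (sum_j p_ij + sum_j p_ji - p_ii)."""
    tp = np.diag(p).astype(float)                   # p_ii: true positives
    union = p.sum(axis=1) + p.sum(axis=0) - tp      # TP + FN + FP for each class
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = tp / union                            # 0/0 -> nan for classes absent from both maps
    return float(np.nanmean(iou))


# Toy 2 x 3 label maps with three classes (0 = background).
gt = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])
p = confusion_matrix(gt, pred, num_classes=3)
print(mean_iou(p))  # per-class IoU is 1/3, 2/3, 1/2
```

Rows of `p` index the ground truth and columns the prediction, matching the convention used for $p_{ij}$ in the equation.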

Mean Pixel Accuracy (MPA) evaluates pixel-level prediction accuracy: for each class, it computes the proportion of that class’s pixels that are correctly classified, and then averages these proportions over all classes. It is defined as

$$
\mathrm{MPA}=\frac{1}{N}\sum_{i=1}^{N}\frac{TP_i}{TP_i+FN_i}
$$

where N represents the number of disease categories detected, and $TP_i$ and $FN_i$ are the numbers of true positives and false negatives for class i.
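With the same convention as above ($p_{ij}$ counts pixels of class i predicted as class j), each row sum of the confusion matrix equals $TP_i + FN_i$, so MPA is the mean of the diagonal divided by the row sums. A minimal NumPy sketch, with an illustrative function name and a toy matrix:

```python
import numpy as np


def mean_pixel_accuracy(p: np.ndarray) -> float:
    """MPA = (1/N) * sum_i TP_i / (TP_i + FN_i), with p[i, j] = pixels of class i predicted as j."""
    tp = np.diag(p).astype(float)      # TP_i
    tp_plus_fn = p.sum(axis=1)         # every ground-truth pixel of class i
    with np.errstate(divide="ignore", invalid="ignore"):
        per_class = tp / tp_plus_fn    # nan for classes absent from the ground truth
    return float(np.nanmean(per_class))


p = np.array([[1, 1, 0],
              [0, 2, 0],
              [1, 0, 1]])
print(mean_pixel_accuracy(p))  # (1/2 + 2/2 + 1/2) / 3
```

Averaging per class, rather than dividing the total of the diagonal by the total pixel count, keeps large classes such as the background from dominating the score.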

The Dice coefficient measures the similarity between the predicted result and the ground truth. It is defined as

$$
\mathrm{Dice}=\frac{2\,|A\cap B|}{|A|+|B|}
$$

where A represents the predicted region and B represents the ground-truth region.
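For binary masks, the definition translates directly into a few lines of NumPy. The sketch below is illustrative; the small `eps` term is our assumption, added so that two empty masks score 1 instead of producing 0/0.

```python
import numpy as np


def dice(pred_mask, gt_mask, eps: float = 1e-7) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks A (prediction) and B (ground truth)."""
    a = np.asarray(pred_mask, dtype=bool)
    b = np.asarray(gt_mask, dtype=bool)
    intersection = np.logical_and(a, b).sum()
    return float((2.0 * intersection + eps) / (a.sum() + b.sum() + eps))


pred = [[1, 1, 0], [0, 1, 0]]
gt = [[1, 0, 0], [0, 1, 1]]
print(dice(pred, gt))  # |A ∩ B| = 2 and |A| = |B| = 3, so Dice is about 4/6
```

For a single class, Dice and IoU are monotonically related, Dice = 2·IoU / (1 + IoU); in this example IoU = 2/4 = 0.5, which gives Dice = 2/3.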

In addition to these performance metrics, we also evaluated the model’s parameter count and frames per second (FPS) to assess the computational demand and efficiency of the model.
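The section does not state how FPS was measured. A common approach, sketched here as an assumption, is to time repeated single-image forward passes after a few warm-up iterations; `measure_fps` and its defaults are hypothetical.

```python
import time
from typing import Any, Callable


def measure_fps(infer: Callable[[Any], Any], sample: Any,
                warmup: int = 10, runs: int = 100) -> float:
    """Frames per second of `infer` on one input, averaged over `runs` timed calls."""
    for _ in range(warmup):          # exclude one-off costs such as caching or lazy initialization
        infer(sample)
    start = time.perf_counter()
    for _ in range(runs):
        infer(sample)
    elapsed = time.perf_counter() - start
    return runs / elapsed


# Stand-in workload; a real measurement would wrap the segmentation model's forward pass.
fps = measure_fps(lambda x: sorted(x), list(range(10_000)), warmup=2, runs=20)
print(f"{fps:.1f} FPS, {1000.0 / fps:.2f} ms per frame")
```

On a GPU, PyTorch launches kernels asynchronously, so `torch.cuda.synchronize()` should be called before each clock read; the parameter count of a PyTorch model is typically obtained as `sum(p.numel() for p in model.parameters())`. As a reference point, the 24.75 FPS reported in Section 4.5 corresponds to about 40.4 ms per image.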

4.3. Comparison of Input Resolutions and Base Channels

Table 3 details the impact of different input image resolutions on the segmentation performance of FFAE-UNet. We compared several resolution settings to evaluate the trade-off between segmentation accuracy and computational burden. The results show that the input resolution directly affects segmentation accuracy, particularly the recognition of edge details and small diseased areas.

For example, the model’s MIoU values at input resolutions of 128 × 128, 224 × 224, and 256 × 256 are 82.03%, 83.64%, and 84.35%, respectively. Although lower resolutions substantially reduce computational cost, segmentation performance declines, possibly because detail is lost when the input is downsampled. At medium and high resolutions (e.g., 384 × 384 and 448 × 448), the model achieves better segmentation results at an acceptable computational cost, capturing details accurately while keeping inference time under control.

These findings indicate that appropriately increasing the input resolution can significantly improve the model’s segmentation performance, especially the refined segmentation of boundary regions and small diseased areas.
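As a rough guide to the cost side of this trade-off: for convolutional layers with fixed channel widths, FLOPs and activation memory grow in proportion to the number of input pixels. The plain-Python sketch below (illustrative names) compares the pixel counts of the resolutions discussed above against 256 × 256; it ignores fixed per-image overheads, so measured inference times will not scale exactly this way.

```python
RESOLUTIONS = [128, 224, 256, 384, 448]   # square input sizes discussed in this section


def relative_pixels(side: int, base: int = 256) -> float:
    """Pixel count of a side x side input relative to a base x base input."""
    return (side * side) / (base * base)


for s in RESOLUTIONS:
    print(f"{s} x {s}: {s * s:>7d} pixels, {relative_pixels(s):.2f}x the 256 x 256 pixel count")
```

For example, moving from 224 × 224 to 448 × 448 quadruples the pixel count, so the convolutional cost grows roughly fourfold.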

As shown in Table 4, we configured several channel numbers for the feature map produced by the input convolution to evaluate how the initial channel number affects the segmentation performance of FFAE-UNet. The experiments show that changing this channel number significantly influences the model’s feature extraction capability, segmentation accuracy, and computational cost.

Lower channel numbers reduce computational cost but may yield insufficient feature representations, which degrades segmentation performance. Conversely, higher channel numbers enable richer feature representations and perform particularly well on complex diseased areas. The experimental results demonstrate that an appropriate initial channel number achieves a good balance between accuracy and efficiency, enabling FFAE-UNet to perform more effectively in pear leaf disease segmentation.
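To illustrate why the initial channel number drives cost so strongly, the sketch below counts the convolution parameters of a textbook UNet: two 3 × 3 convolutions per stage, channel widths c, 2c, 4c, 8c, 16c, 2 × 2 transposed convolutions for upsampling, and no batch normalization. This reference architecture, the RGB input, and the four output classes (background plus the three diseases in Figure 7) are assumptions; the sketch does not reproduce FFAE-UNet’s parameter count.

```python
def conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights plus biases of a k x k (transposed) convolution."""
    return c_in * c_out * k * k + c_out


def unet_params(base: int, in_ch: int = 3, num_classes: int = 4) -> int:
    """Parameter count of a hypothetical textbook UNet with widths base, 2*base, ..., 16*base."""
    widths = [base * 2 ** i for i in range(5)]
    total, prev = 0, in_ch
    # Encoder stages and bottleneck: two 3 x 3 convolutions each.
    for w in widths:
        total += conv_params(prev, w) + conv_params(w, w)
        prev = w
    # Decoder: 2 x 2 transposed conv, concatenate the skip (2w channels), two 3 x 3 convs.
    for w in reversed(widths[:-1]):
        total += conv_params(prev, w, k=2)
        total += conv_params(2 * w, w) + conv_params(w, w)
        prev = w
    # 1 x 1 classifier producing one logit map per class.
    return total + conv_params(prev, num_classes, k=1)


for c in (16, 32, 64):
    print(f"base channels {c:>2}: {unet_params(c) / 1e6:.2f} M parameters")
```

Because almost every layer maps a width proportional to c onto another width proportional to c, the count grows roughly quadratically: in this sketch, halving c from 64 to 32 reduces the parameters from about 31.03 M to about 7.76 M, very nearly a factor of four.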

Overall, the choice of input resolution and initial channel number significantly affects the model’s computational cost and efficiency. In particular, higher resolutions increase inference time, which is critical for large-scale datasets or real-time applications. These comparative results provide guidance for selecting the input resolution in practical applications to balance accuracy and efficiency.

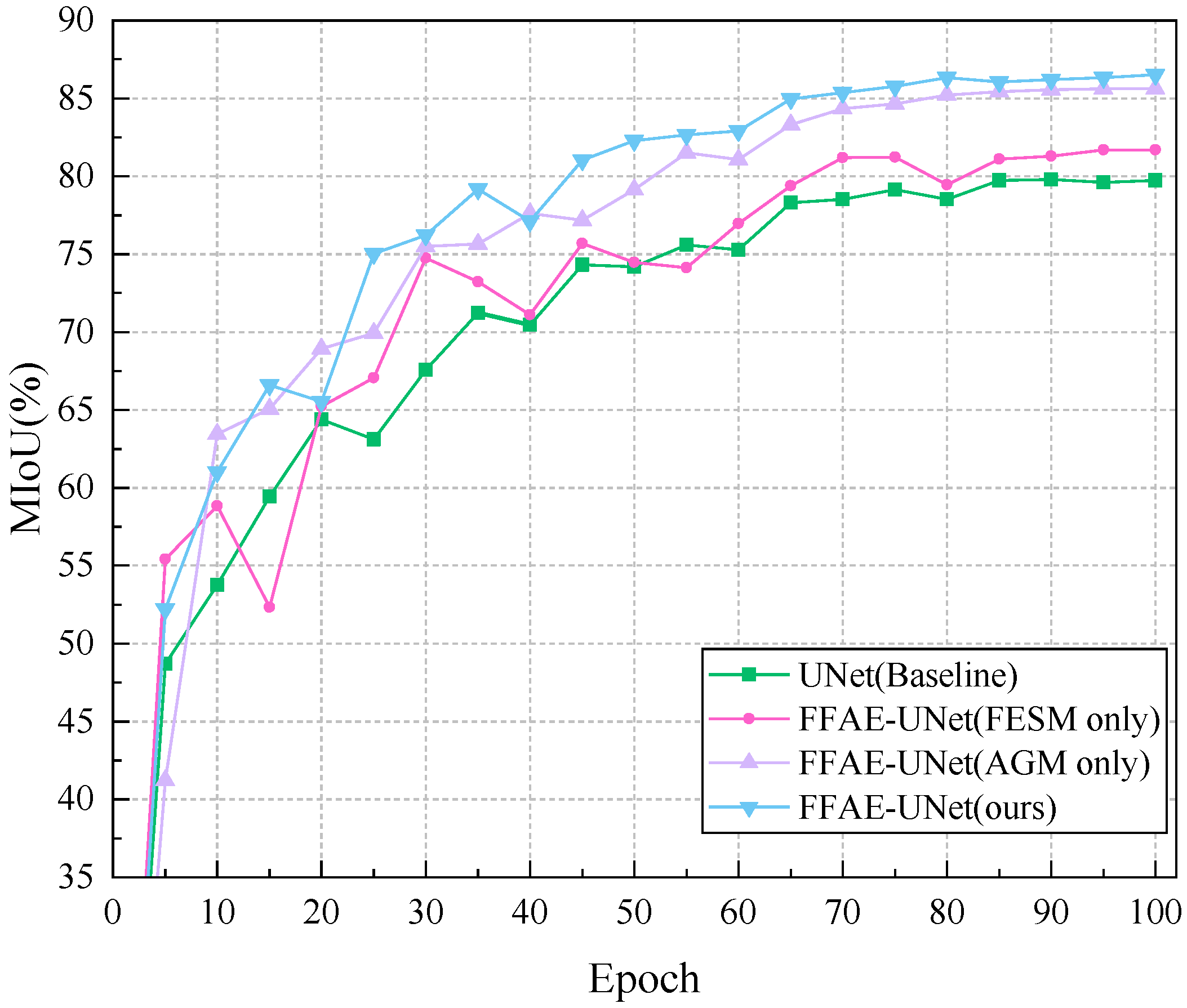

4.4. Effectiveness Analysis of Individual Modules

In this section, we analyze the impact of specific module adjustments within the model on segmentation performance. The primary evaluation metrics selected are MIoU, MPA, and Dice coefficient.

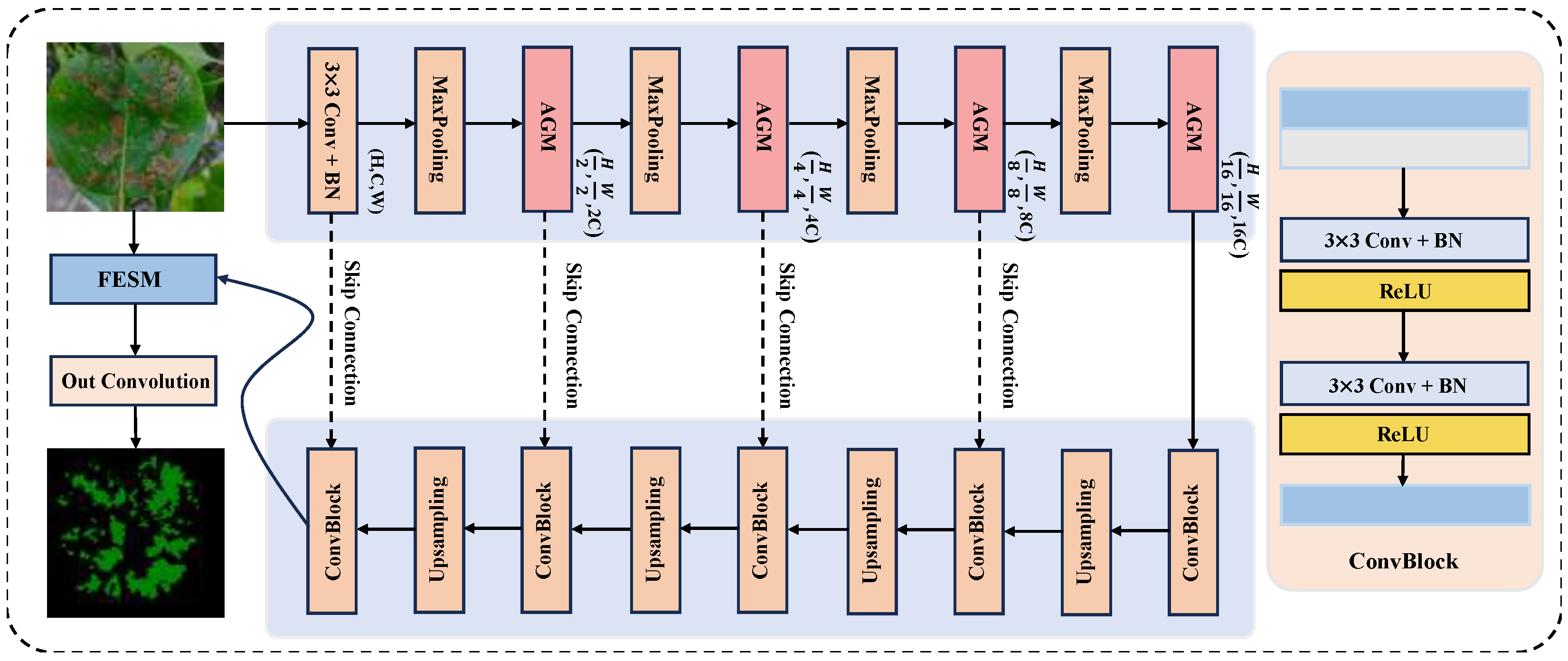

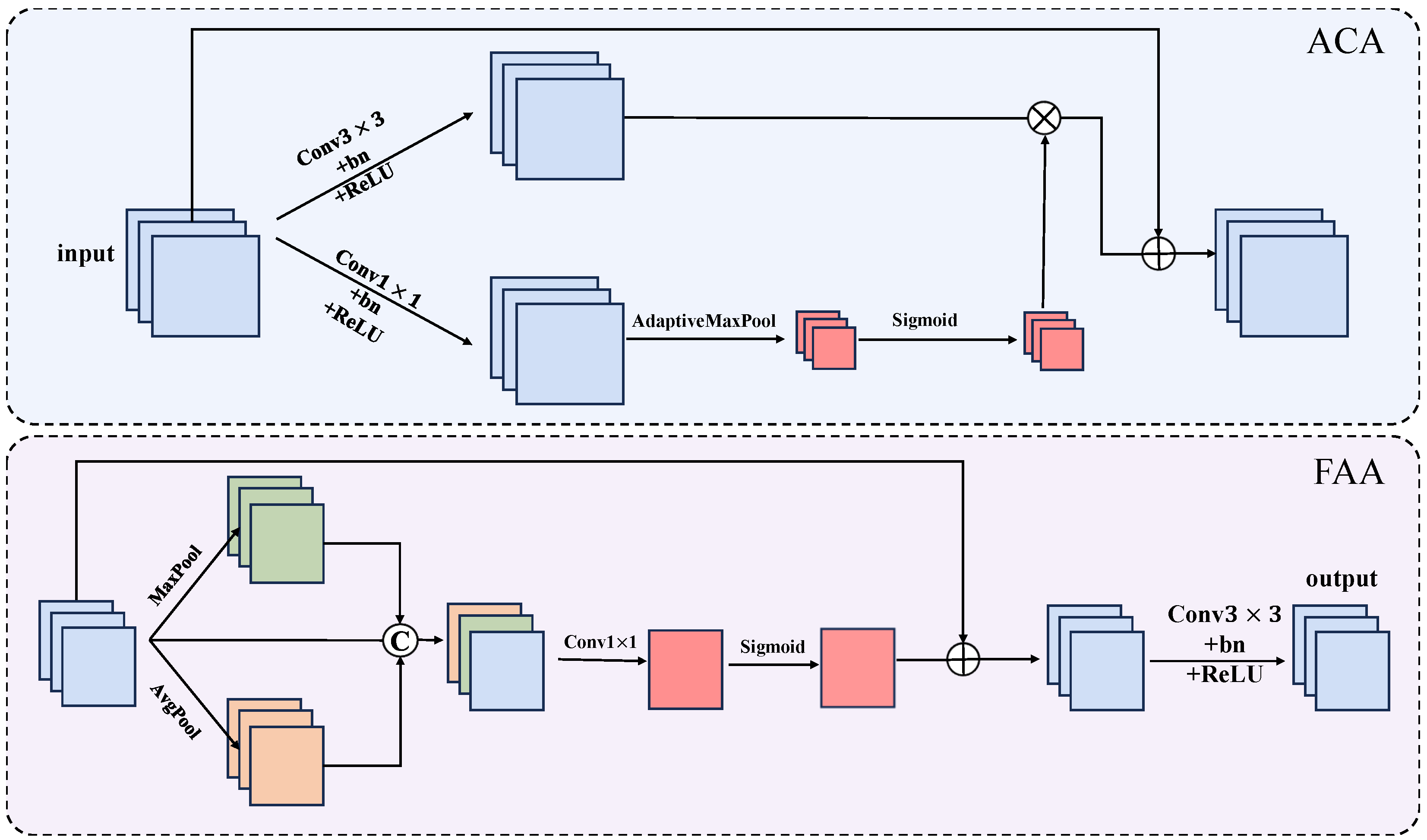

To validate the effectiveness of the proposed AGM module, we replaced it in FFAE-UNet with several commonly used attention mechanisms and conducted the comparative experiments reported in Table 5. In the first set of experiments, AGM was replaced with the ECA module; in the second, with the BAM module; in the third, with the SE module; in the fourth, with the GAM module; and in the last, with the CBAM module.

In each replacement experiment, we evaluated the feature extraction performance of the substituted module using metrics such as MIoU, cross-entropy loss, and the Dice coefficient, and compared it with the FESM module. The replacement experiments confirm the effectiveness of the AGM module in FFAE-UNet: AGM outperformed the other attention modules in handling complex backgrounds and detailed features. With an MIoU of 86.60%, an MPA of 91.85%, and a Dice coefficient of 92.58%, it strengthens the model’s focus on diseased areas and improves segmentation accuracy.

Figure 5 presents a Grad-CAM [27] heatmap analysis of the models from the replacement experiments, compared with FFAE-UNet. Deeper red areas in the heatmaps indicate regions to which the model attends more strongly when identifying disease. In the smaller or more complex disease regions marked by red boxes, FFAE-UNet produces better segmentation results than the other models, which show missed detections or weaker responses. For more prominent disease areas, FFAE-UNet attends to the disease regions considerably better than the variants that use CBAM, ECA, or SE as replacements.
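Grad-CAM [27] weights each feature map $A^k$ of a chosen layer by the spatially averaged gradient of a target score $y$ with respect to it, $\alpha_k = \frac{1}{HW}\sum_{u,v} \partial y / \partial A^k_{uv}$, and keeps the positive part of $\sum_k \alpha_k A^k$. The NumPy sketch below implements only this combination step and assumes the activations and gradients have already been obtained from an autograd framework. The layer and target score used for Figure 5 are not stated; for segmentation, a common choice (our assumption) is the sum of the disease-class logits over the pixels of interest. Upsampling the map to the input size for overlay is omitted.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map from feature maps A (K, H, W) and gradients dy/dA (K, H, W).

    Returns an (H, W) map scaled to [0, 1]; larger values mark regions whose
    features increase the target score y.
    """
    weights = gradients.mean(axis=(1, 2))              # alpha_k: global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=1)   # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                         # ReLU keeps positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam             # normalize for display as a heatmap


# Toy example: channel 0 supports the target (positive gradient), channel 1 opposes it.
A = np.zeros((2, 2, 2))
A[0, 0, 0] = 1.0
A[1, 1, 1] = 1.0
G = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
print(grad_cam(A, G))
```

In the toy example, only the location activated by the positively weighted channel survives the ReLU.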

The above experiments demonstrate that incorporating the AGM module leads to better performance in disease boundary localization and handling detailed features. This validates the effectiveness of the proposed module in accurately capturing key features for the pear leaf disease segmentation task.

4.5. Comparison with Other Models

To verify the effectiveness of the proposed FFAE-UNet, we compared it with several widely used, high-performing network architectures, as well as with other UNet-based architectures. To rule out confounding factors, all experiments were conducted under the same benchmark settings and evaluation metrics. In these comparisons, our model shows clear advantages in MIoU, MPA, and the Dice coefficient. The detailed results are shown in Table 6.

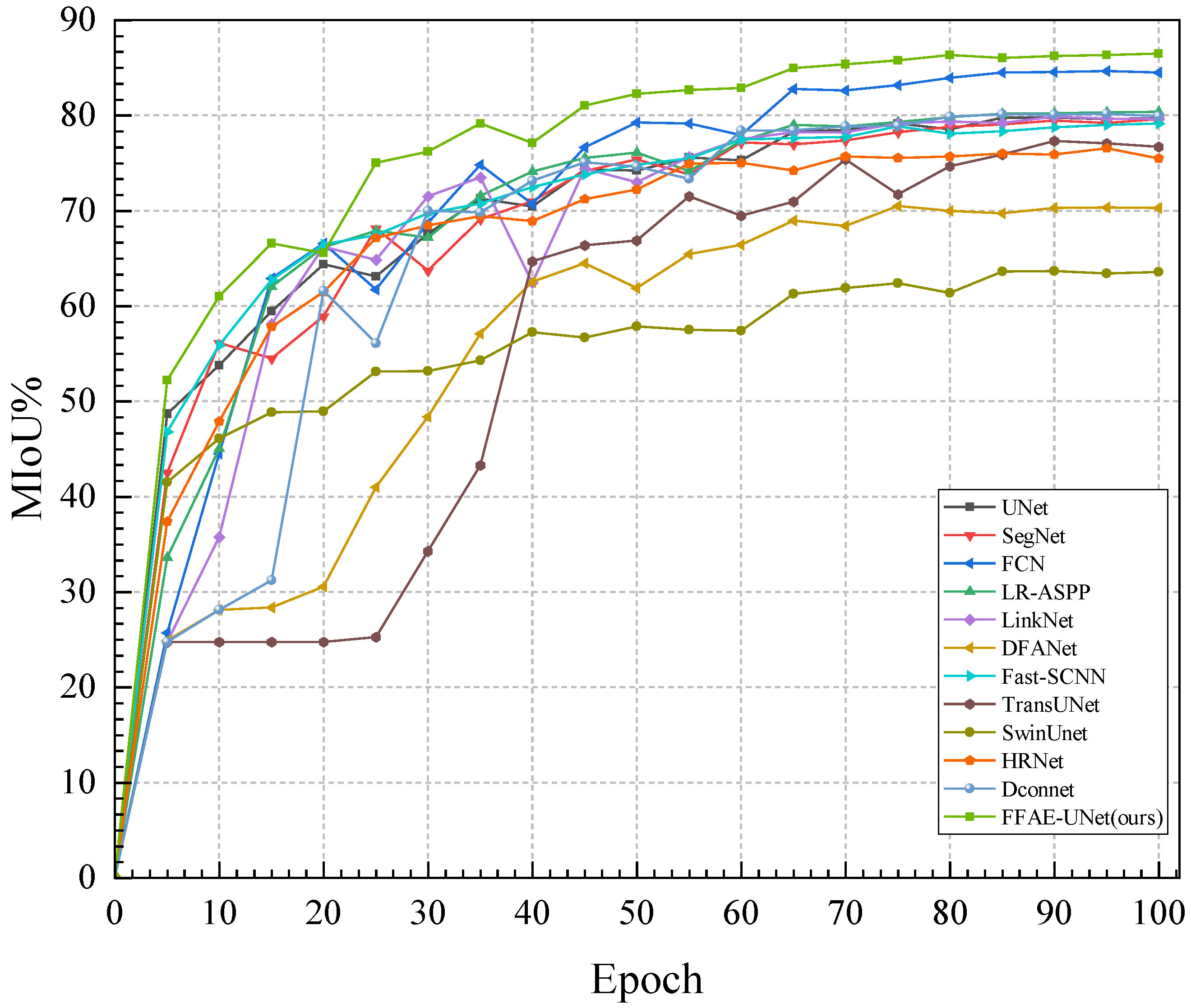

Specifically, on this dataset, the MIoU index of FFAE-UNet reaches 86.50%, which shows that FFAE-UNet has significantly improved the segmentation effect. Compared with other comparative segmentation methods in the experiments, FFAE-UNet has better segmentation performance in almost all other indicators. As shown in

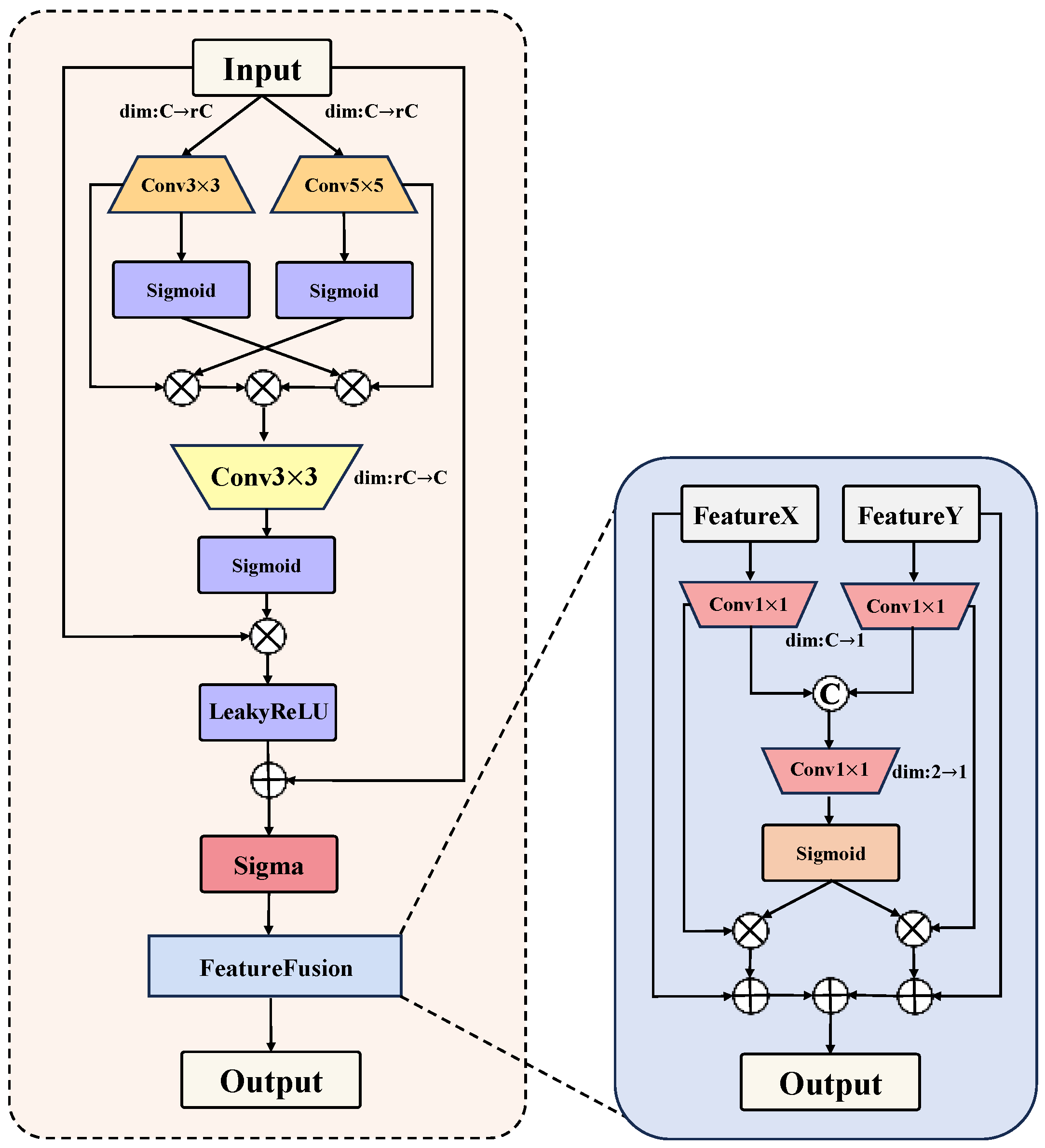

Figure 6. The MIoU of Unet, SegNet, FCN, LR-ASPP, LinkNet, DFANet, and Fast-SCNN based on CNN methods are 79.73%, 79.49%, 84.63%, 80.37%, 79.61%, 70.47%, and 78.20%, respectively. The MIoU of TransUNet and SwinUnet based on the Transformer method are 77.43% and 63.57%, respectively. The MIoU of HRNet and Dconnet based on the existing hybrid method are 76.53% and 80.28%, respectively. The improvement in MIoU is primarily due to the attention mechanism introduced in our model, which effectively captures the required features while ignoring irrelevant information. Additionally, the introduced feature enhancement module combines multi-scale convolutions and performs channel aggregation, effectively enhancing detailed features and integrating them with the upsampling results to compensate for the information loss caused by the encoding–decoding process.
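The feature enhancement module is described here only at a high level, so the following NumPy sketch shows one generic way to combine multi-scale convolutions with channel aggregation; it is not the module’s actual design. The kernel sizes (1, 3, 5), concatenation along the channel axis as the aggregation step, the 1 × 1 fusion convolution, and the random weights are all assumptions for illustration.

```python
import numpy as np


def conv2d_same(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Stride-1, zero-padded 'same' convolution (cross-correlation, as in CNN frameworks).

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, wd = x.shape[1:]
    out = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            # Accumulate the contribution of kernel tap (i, j) over all input channels.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out


def multi_scale_block(x: np.ndarray, rng: np.random.Generator,
                      kernel_sizes=(1, 3, 5), branch_ch: int = 4) -> np.ndarray:
    """Parallel convolutions with different receptive fields, concatenated, then fused by a 1 x 1 conv."""
    c_in = x.shape[0]
    branches = [np.maximum(conv2d_same(x, 0.1 * rng.standard_normal((branch_ch, c_in, k, k))), 0.0)
                for k in kernel_sizes]                  # one ReLU-activated branch per kernel size
    aggregated = np.concatenate(branches, axis=0)       # channel aggregation: stack all scales
    fuse = 0.1 * rng.standard_normal((c_in, aggregated.shape[0], 1, 1))
    return conv2d_same(aggregated, fuse)                # mix the scales back to c_in channels


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))
y = multi_scale_block(x, rng)
print(y.shape)
```

Because every output channel of the 1 × 1 fusion sees all branches, the block can weight fine (1 × 1, 3 × 3) and coarser (5 × 5) context separately for each channel before the result is fused with the upsampled decoder features.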

The strong Mean Pixel Accuracy (MPA) and Dice results of FFAE-UNet show that the proposed model can effectively locate and accurately identify the boundary areas of pear leaf diseases, whereas the other models segment this dataset less effectively. For example, although DconnNet, LR-ASPP, and TransUNet achieve high MIoU values within their respective network types, they lag behind in MPA and Dice: their MPA values are 88.96%, 88.53%, and 85.33%, and their Dice coefficients are 88.65%, 88.63%, and 86.58%, respectively. In contrast, FFAE-UNet reaches an MPA of 91.85% and a Dice coefficient of 92.58%, a significant improvement. These experimental results support the superior performance of FFAE-UNet in plant disease segmentation tasks.

FFAE-UNet has 22.68 M parameters and runs at 24.75 FPS, both within an acceptable range; its parameter count is much smaller than that of SwinUnet and several other models. These experiments show that FFAE-UNet achieves accurate and stable segmentation of pear leaf diseases against complex backgrounds. It also uses computational resources efficiently, which matters when handling large-scale, complex tasks.

To comprehensively evaluate the proposed model on pear leaf disease segmentation, Figure 7 shows the segmentation results of the various models for three different diseases. Yellow marks the segmentation results for Curl disease, red marks those for Rust disease, and green marks those for Slug disease.

The segmentation results show that some networks (e.g., UNet, SegNet, FCN, and DconnNet) mis-segment pear leaf disease regions; their disease feature extraction is especially weak around veins, shadows, and junctions with the background. In contrast, FFAE-UNet segments disease regions more accurately, and its results are largely consistent with the actual disease distribution. Compared with networks such as UNet, FFAE-UNet better distinguishes disease edges from shadows and segments accurately even against complex backgrounds.

In addition, when dealing with uncontrollable natural factors such as leaf overlap, occlusion, and illumination changes from different angles, FFAE-UNet retains semantic information through multi-scale feature extraction, better captures detailed features, and enriches the network’s feature information. The experimental results show that FFAE-UNet focuses effectively on disease areas by enhancing features along both the channel and the spatial dimensions. Multi-scale feature capture further improves the model’s perception of the target, especially the segmentation accuracy of details and edge regions.
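Channel-then-spatial feature enhancement of this kind can be illustrated with a CBAM-style NumPy sketch. It is explicitly a stand-in: CBAM is one of the baselines in Table 5, and the internal design of AGM is not described in this section. The shared two-layer MLP, the average- and max-pooled descriptors, the reduction ratio, the 7 × 7 spatial kernel, and the random weights are assumptions for illustration.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Reweight the channels of x (C, H, W) with a shared MLP on pooled channel descriptors."""
    def mlp(v: np.ndarray) -> np.ndarray:
        return w2 @ np.maximum(w1 @ v, 0.0)             # C -> C/r -> C
    scale = sigmoid(mlp(x.mean(axis=(1, 2))) + mlp(x.max(axis=(1, 2))))
    return x * scale[:, None, None]                     # one weight in (0, 1) per channel


def spatial_attention(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Reweight the positions of x (C, H, W) with a conv over channel-pooled maps; kernel: (2, k, k)."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])    # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    dp = np.pad(desc, ((0, 0), (pad, pad), (pad, pad)))
    h, w = x.shape[1:]
    logits = np.zeros((h, w))
    for i in range(k):
        for j in range(k):
            logits += np.tensordot(kernel[:, i, j], dp[:, i:i + h, j:j + w], axes=1)
    return x * sigmoid(logits)[None]                    # one weight in (0, 1) per pixel


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))
w1 = rng.standard_normal((2, 8))   # reduction ratio r = 4 (assumed)
w2 = rng.standard_normal((8, 2))
y = spatial_attention(channel_attention(x, w1, w2), rng.standard_normal((2, 7, 7)))
print(y.shape)
```

Both stages only rescale the input by factors between 0 and 1, so they can suppress background channels and positions but never amplify a feature beyond its original magnitude.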

To evaluate the model more clearly, we used Grad-CAM to visualize the model’s attention to diseased areas and generated the corresponding heatmaps. In Figure 8, we compare FFAE-UNet with the networks that achieve higher Dice coefficients. These visualizations show that FFAE-UNet identifies and precisely locates pear leaf disease features.

The model shows high accuracy and coverage across disease types. For the small, dense spots formed by rust disease in particular, FFAE-UNet locates the lesions more accurately; we attribute its high sensitivity to diseased areas in complex backgrounds to the channel aggregation and multi-scale convolution module. In the curled leaf-edge regions caused by leaf curl disease, the advantage of FFAE-UNet is more pronounced: it accurately highlights the diseased area and effectively reduces interference from background noise. For large disease spots, FFAE-UNet still focuses accurately on the diseased site, which we attribute to its adaptive channel and spatial attention mechanism, as it highlights the diseased area while reducing attention to background noise.

Taken together, the heatmap analysis shows that the design advantage of FFAE-UNet lies in its strong attention to, and accurate localization of, disease areas, which gives it higher robustness and better detail handling in pear leaf disease detection tasks.