Abstract

Laser space networks are an important development direction for inter-satellite communication. Detecting the angle of arrival (AOA) of multiple satellites in a wide field of view (FOV) is the key to realize inter-satellite laser communication networking. The traditional AOA detection method based on the lens system has a limited FOV. In this paper, we demonstrate a system that uses a spatially distributed sensor array to detect the AOA in a wide FOV. The basic concept is to detect AOA using the signal strength of each sensor at different spatial angles. An AOA detection model was developed, and the relationship of key structural parameters of the spatially distributed sensor array on the FOV and angular resolution was analyzed. Furthermore, a spatially distributed sensor array prototype consisting of 5 InGaAs PIN photodiodes distributed on a 3D-printed structure with an inclination angle of 30° was developed. In order to improve the angle calculation accuracy, a multi-sensor data fusion algorithm is proposed. The experimental results show that the prototype’s maximum FOV is 110°. The root mean square error (RMSE) for azimuth is 0.6° within a 60° FOV, whereas the RMSE for elevation is 0.67°. The RMSE increases to 1.1° for azimuth and 1.7° for elevation when the FOV expands to 110°. The designed spatially distributed sensor array has the advantages of a wide FOV and low size, weight, and power (SWaP), presenting great potential for multi-satellite laser communication applications.

1. Introduction

In recent years, low-earth-orbit satellite (LEO) constellations have been developing rapidly. Through inter-satellite laser links, satellites can achieve high-speed data exchange, significantly enhancing global coverage and low-latency communication performance [1,2,3,4]. However, current LEO satellite laser communication terminals mainly operate in a point-to-point configuration, requiring multiple communication terminals for inter-satellite connectivity. This approach significantly increases the satellite’s size, weight, and power (SWaP), as well as system complexity. To address this problem, a laser terminal using a low-switching wide field of view (FOV) transceiver is proposed to achieve one-to-many communication [5,6,7,8]. Through distributed multi-transmitting units, these optical terminals facilitate flexible transmission across multiple beams and angles. Furthermore, the laser communication terminal must possess wide field of view detection capabilities to obtain the approximate position information of target satellites for the requirements of multi-target positioning and tracking, which is used for the multi-beam coarse alignment. With the integrated precise tracking module, the terminal can achieve rapid switching between one-to-many links, ensuring efficient and reliable inter-satellite communication.

The popular approach for angle of arrival (AOA) wide field of view detection involves utilizing a lens system to focus the incident light onto a sensor array or CCD. The position of the beam is calculated by the change of the centroid of the light spot. In 2017, Williams AJ et al. used a 4 × 4 silicon photomultiplier (SiPM) array with a 20° FOV [9]. In 2022, Zhang et al. proposed a detection system that consisted of a fisheye lens and a CCD with a resolution of 640 pixels × 512 pixels. The system’s measurement FOV was 120° × 96°, and the angle error was 0.5° [10]. The lens-based AOA detection has limitations such as a restricted field of view, optical distortions, and large SWaP consumption, which decrease its applicability in multi-satellite laser communication applications [11,12].

Another approach is to detect the AOA using the signal strength of the sensor array at different spatial angles. It has no lens system and consists of multiple photodiode sensors, which ha the advantages of a wide field of view and low-SWaP. In 2017, Eren F et al. designed a hemispherical 5 × 5 photodetector array and evaluated it using Monte Carlo simulations, showing an estimation error within 5° for elevation and azimuth [13]. In 2018, Jakubaszek M et al. proposed a system that used five sensors distributed on the side panels of a truncated pyramid to detect the AOA. Within a 120° field of view, the estimated errors of elevation and azimuth angles were mostly less than 6° [14]. In 2020, Velazco JE et al. proposed an omnidirectional optical communication/navigation device based on a truncated icosahedron with a measurement field of view of 4π and an RMS of 0.076° [15,16]. In 2024, Patel VH et al. designed a deployable optical receiving aperture consisting of five sensors and tested it for six angles. The maximum angle error exceeded 5°, and no two-dimensional test was performed [17,18].

These research institutions have clarified the AOA detection principle of spatially distributed sensor arrays, developed prototypes, and conducted verification experiments, but the impact and optimization design of key parameters of such arrays, data processing methods, and error analysis are not in-depth enough. There is also a lack of systematic design methods for spatially distributed sensor arrays.

In this paper, we first present the principle of AOA detection using the spatially distributed sensor array. Subsequently, a detailed analysis is conducted to evaluate the influence of the structure on the field of view and angular resolution, leading to the optimization of the spatially distributed sensor array design. A multi-sensor data fusion algorithm is proposed to enhance measurement accuracy. Finally, the primary sources of measurement errors in the spatially distributed sensor array were discussed, and error calibration was implemented. The two-dimensional angle of arrival was also tested, and the test results verified the effectiveness of the proposed multi-sensor data fusion algorithm.

2. Methods and Design

2.1. Detection Principle

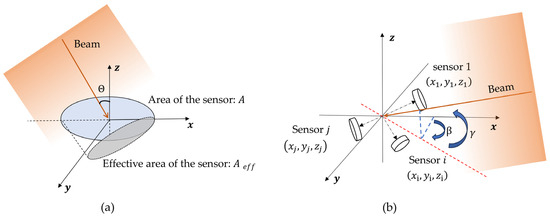

The spatially distributed sensor array is a polyhedral structure, with each face equipped with a sensor that has identical spectral responsivity and photosensitive area. The output of the optical sensor has an angular dependence on the incident light [14], as shown in Figure 1a. The effective area of the detector can be expressed as:

where is the effective area of the sensor, is the area of the sensor, and is the angle between the incident light and the sensor normal vector.

Figure 1.

(a) Schematic diagram of the relationship between sensor effective area and incident light. (b) Schematic diagram of sensor position and AOA.

The incident light generates photocurrent proportional to the irradiance and the effective area. It can be expressed by the following equation:

where is the voltage signal on the load resistor , is the responsivity of the photodetector at a wavelength of , and is the light flux received by the sensor from normal incident light. AOA is calculated by combining the optical power received by each sensor at different positions in space. The position of each sensor in the spatially distributed sensor array is shown in Figure 1b. The matrix , composed of the coordinate matrices of the sensor normal vectors, can be expressed as:

where represents the position coordinate vector of each sensor, which is expressed as:

The optical power incident on each sensor is given by:

where is the energy flux density vector of the incident light, which can be expressed in the Cartesian coordinate system as follows:

where is the total energy flux density of the incident light, is the azimuth angle, and is the elevation angle. The output matrix of the spatially distributed sensor array is expressed as:

According to Equation (6), there are three parameters to be measured: and . Therefore, at least three sensor output powers with uncorrelated normal vectors should be selected from to calculate the AOA. Matrix is extracted as a block from matrix . The rank of matrix satisfies and its corresponding power output matrix is . The vector can be calculated by:

Thus the and for the angle of arrival are given by: [18]:

2.2. Structural Design of the Spatially Distributed Sensor Array

The number and location of sensors have a direct relationship with the field of view and measurement accuracy of the spatially distributed sensor array. We developed a support structure consisting of one top panel and side panels, where the angle between the top panel and the side panels is , and each panel is equipped with the same sensor. According to Equations (7) and (8), the rank of matrix should satisfy:

It indicates that the minimum number of is 2. The side panels are evenly distributed around the top panel, which is beneficial for analyzing the sensor field of view and error. The field of view of a single sensor is denoted as . In practical applications, the sensor is encapsulated, resulting in . The number of side panels should satisfy:

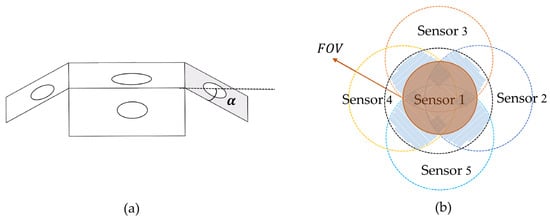

The commercially packaged photodiodes have a field of view of 120°, so we choose to design the spatially distributed sensor array and improve the redundancy of the sensors. Figure 2a shows a schematic diagram of a spatially distributed sensor array when . At this time, the matrix can be expressed as:

Figure 2.

(a) Schematic diagram of a spatially distributed sensor array when . (b) Field of view of spatially distributed sensor array when .

Substituting into Equation (7), the output power of each sensor can be expressed as:

The output power in the field of view of each sensor should be satisfied:

The top view of the field of view of the spatially distributed sensor array when M = 4 is shown in Figure 2b. It can be seen that the field of view of the spatially distributed sensor array is not a regular area. The solid angle formed by the intersection of the fields of view of Sensor 3 and Sensor 5 with those of Sensor 2 and Sensor 4 is defined as the field of view of the spatially distributed sensor array in this paper. Substituting and Equation (15) into Formula (18), the field of view of the spatially distributed sensor array can be expressed as:

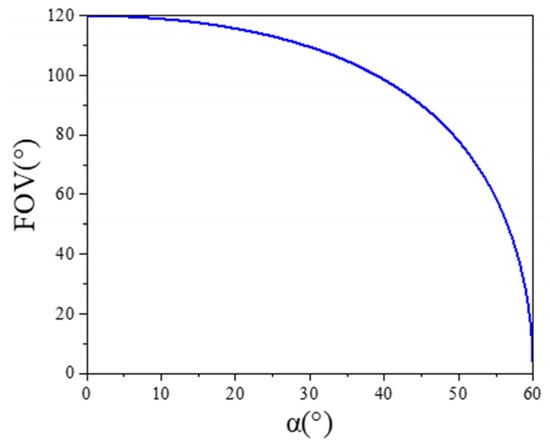

The relationship between the FOV of the spatially distributed sensor array and of the spatially distributed sensor array is shown in the Figure 3. As increases, the field of view of a spatially distributed sensor array decreases.

Figure 3.

Schematic diagram of the relationship between the FOV of the spatially distributed sensor array and .

From Equation (8), we can see that the sensor combination matrix involved in the AOA solution needs to satisfy . The spatially distributed sensor is a symmetrical structure. Therefore, Sensors 1, 2, and 3 are selected to analyze the impact of on sensor measurement resolution. The azimuth and elevation angles can be expressed as:

The resolution of a spatially distributed sensor array can be expressed as:

where , , and represent the changes in sensor output power caused by changes in the incident angle. The resolution of the spatially distributed sensor array is determined by , , and . However, according to Equation (2), the sensor output is proportional to the cosine of the incident angle. When and , small changes in the azimuth angle result in negligible changes in the output power of Sensors 1 and 3. Similarly, small changes in the elevation angle result in negligible changes in the output power of Sensors 1 and 2. In this case, the resolution of the spatially distributed sensor array is determined by a single sensor, leading to a relatively poor resolution, which can be expressed as:

Substituting Equations (13)–(15) into Equations (24) and (25), the elevation and azimuth resolutions can be expressed as:

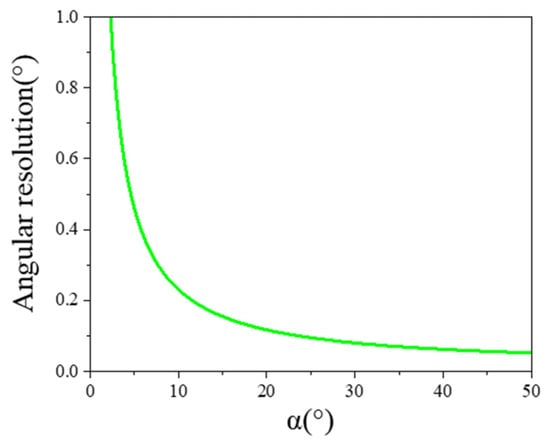

and represent the minimum resolvable power provided by the sensors, and they are equal. When and , based on Equations (26) and (27), both the elevation and azimuth angles exhibit the same resolution. The relationship between angular resolution and is shown in Figure 4. As increases, the resolution of the spatially distributed sensor array improves.

Figure 4.

Schematic diagram of the relationship between angular resolution of the spatial distribution sensor and .

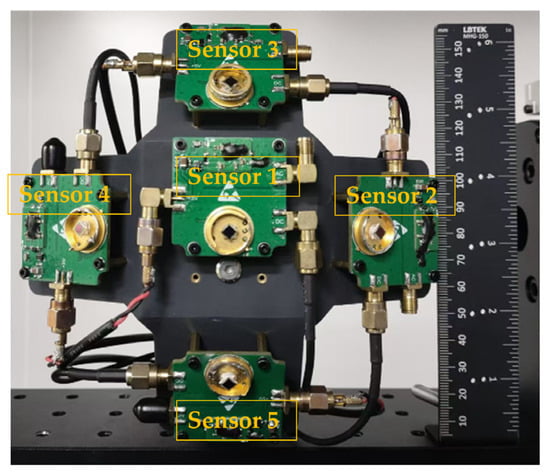

Based on the above analysis, as increases, the field of view decreases while the resolution improves. Conversely, as decreases, the field of view increases and the resolution decreases. Considering both the field of view and angular resolution, this paper selects to achieve a field of view of 110° with a detection accuracy better than 1°. The spatially distributed sensor array designed in this paper is shown in Figure 5. The five sensors are placed on a 3D-printed panel with a size of 150 × 150 mm, and the total weight is only 140 g. The 3D printed panel consists of a top panel and four side panels, with α being 30°. After actual measurements, the error in α is within 0.1°.

Figure 5.

Image of the spatially distributed sensor array.

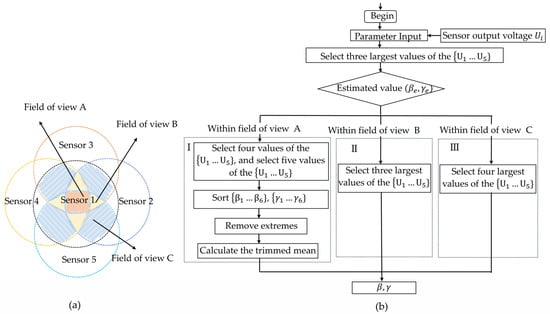

2.3. Multi-Sensor Data Fusion Algorithm for the Spatially Distributed Sensor Array

Fluctuations in power density during beam propagation and interference from background light can introduce noise, leading to inaccuracies in the sensor output. When angle calculations are performed using only three sensors, significant angular errors may occur. In fact, the spatially distributed sensor array has sensor redundancy. As shown in Figure 6a, within field of view A and field of view B, data from multiple sensors can be utilized to conduct linear estimation of the AOA, thereby reducing errors caused by noise and improving the accuracy of the sensor output. Therefore, we propose a multi-sensor data fusion algorithm. Among the outputs of five sensors, the three maximum values are selected to calculate the AOA and estimate the direction of the incident beam. Different solution strategies are applied depending on the field of view in which the AOA is located. The flowchart of the multi-sensor data fusion algorithm is shown in Figure 6b.

Figure 6.

(a) Schematic diagram of the field of view division of a spatially distributed sensor array. (b) Multi-sensor data fusion algorithm flow chart.

Initially, the three maximum values in are substituted into Equations (8) and (9) to estimate AOA.Then, the following algorithm is employed based on the range of the estimated AOA.

I: If estimating the AOA within field of view A, any four power values can be selected from the five power values derived from the outputs of the five sensors, yielding five combinations. Each combination is substituted into Equations (8) and (9) to obtain five AOA solutions. By substituting all five power values into Equations (8) and (9), one AOA solution is obtained. The azimuth and elevation angles of the six solutions are then sorted in ascending order, with the maximum and minimum values removed, and the trimmed mean is calculated as and .

II: If the estimated AOA is in the field of view B, the four maximum values in are substituted into Equations (8) and (9) to calculate and .

III: If the estimated AOA is in the field of view C, the three maximum values in are substituted into Equations (8) and (9) to calculate and .

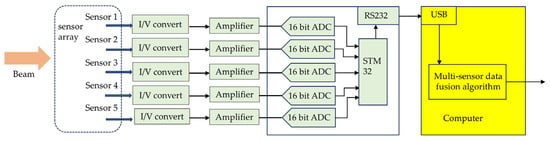

3. Digital Processing of Spatially Distributed Sensor Array

The signal acquisition and data processing module of the AOA detection system using a spatially distributed sensor array are shown in Figure 7. A spatially distributed sensor array outputs five currents independently, which are converted into voltage signals. After amplification, they are sent to the A/D converter (ADC) and converted into digital signals. The microcontroller reads values from five channels and transmits them to the computer via a USB port, where they are processed using a multi-sensor data fusion algorithm to calculate the AOA. In future research, we plan to use FPGA boards for AOA calculation to improve the output bandwidth of the spatially distributed sensor array.

Figure 7.

Schematic circuit diagram of digital processing.

4. Experiment Results

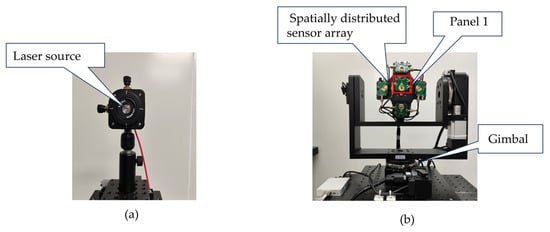

In order to analyze the angular measurement performance of the developed spatially distributed sensor array, we constructed an indoor angle of arrival testing platform. The experimental system uses a 1550 nm fiber laser as the light source, as shown in Figure 8a. The divergence angle of the beam is 5°, and the distance to the spatially distributed sensor array is 6.5 m, with the radius of the light spot reaching the sensor array being approximately 570 mm. The ADC output data width is 16 bits. The spatially distributed sensor array is mounted on a two-dimensional gimbal with an accuracy of 0.001°. By adjusting the angles of the gimbal, the orientation of the sensor can be altered, enabling the measurement of the incident angle of the beam, as shown in Figure 8b.

Figure 8.

The experimental platform. (a) Laser source. (b) Spatially distributed sensor array and two-dimensional gimbal.

Before the testing, the primary sources of errors in the angle of arrival detection system of the spatially distributed sensor array were analyzed, and the error calibration was implemented. Firstly, this work uses five identical photodetectors and photoelectric conversion circuit modules. However, sensor response non-uniformity and differences in circuit signal gains can affect the sensor outputs, thus reducing the measurement accuracy of the AOA. Secondly, the sensor installation error arising from the fixation of the photoelectric conversion circuit module on the 3D-printed panel, along with the error in , can result in sensor position error.

The spatially distributed sensor array is installed in a darkroom without light. The output voltage of each sensor is generated by dark current. The dark current noise in each sensor can be considered uniform, so the difference in circuit signal gain of each sensor circuit can be calibrated to obtain the gain correction coefficient of each sensor. The laser light source is turned on, and five sensors are placed one after another at the position of panel 1 in Figure 8b. Each sensor is irradiated by a light source with the same energy. By varying the intensity of the incident light, the actual optical response of each sensor is calibrated based on the changes in the output voltage.

Taking the position of sensor 1 as a reference, by comparing the angle difference between the actual output and theoretical output of each sensor at different incident angles, the position errors of the five sensors in the azimuth and elevation directions are analyzed. The normal vector coordinate matrix of the spatially distributed sensor array after correction is expressed as:

where is the angle error in the azimuth direction for each sensor, and is the angle error in the elevation direction.

Due to the long propagation distance of light in free space and the beam diameter being much larger than the diameter of the spatial distribution sensor, the AOA detection algorithm for the spatial distribution sensor array is based on the assumption of a uniform energy distribution of the light spot. However, in this experiment, where the distance between the light source and the spatially distributed sensor array is relatively short, it is necessary to correct the sensor output based on the intensity distribution of the Gaussian beam.

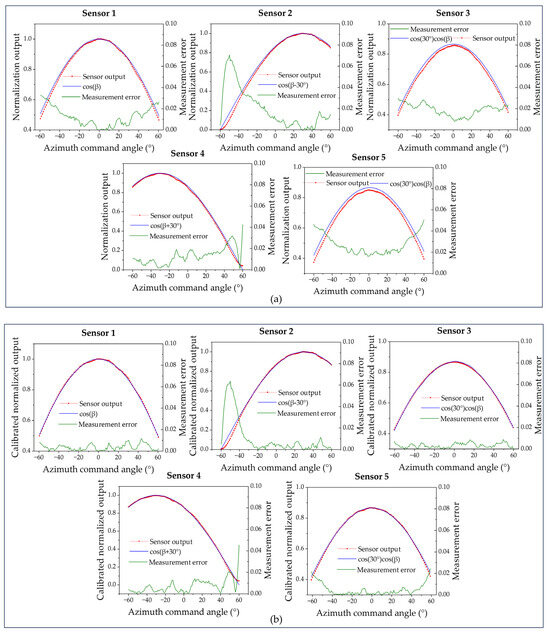

The normalized output of each sensor is shown in Figure 9a, with the elevation angle set to 0° and the azimuth angle moving from −60° to 60° in 1° intervals, and Figure 9b demonstrates the normalized output of five sensors after calibration, which significantly improves the measurement accuracy of the sensors.

Figure 9.

(a) Normalized output of five sensors. (b) Normalized output of five sensors after error calibration.

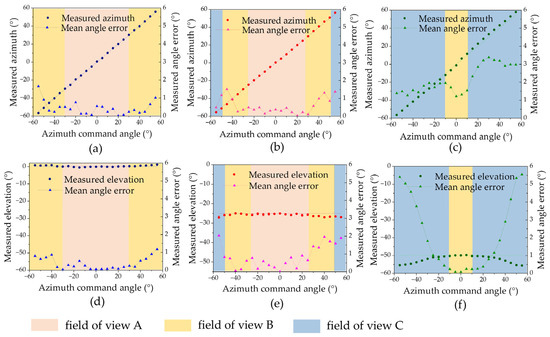

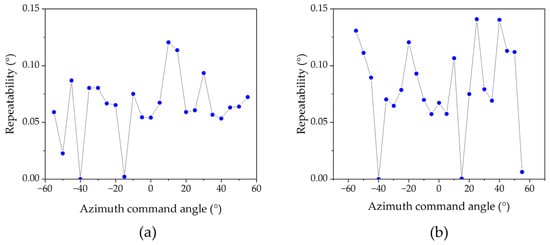

After correcting the systematic errors of the spatially distributed sensor array, the multi-sensor data fusion algorithm was used to calculate the azimuth and elevation angles within a 110° field of view. Figure 10 shows the calculated AOA results of five measurements at 5° intervals of azimuth angle from −55° to 55° at elevation angles of 0°, 25°, and 50°. The results show that they are close to the actual values, with mean angle errors of 0.44°, 0.58°, and 2.15° for azimuth and 0.4°, 0.85°, and 2.14° for elevation. In the fields of view A and B, higher measurement accuracy is achieved by fusing the outputs of multiple sensors. Repeatability results with the elevation angle set to 0° and the azimuth angle moving from −50° to 50° in 5° intervals are shown in Figure 11, which is better than 0.15°.

Figure 10.

AOA detection results at different elevation angles after error correction. (a) The calculated azimuth coordinate curves when . (b) The calculated azimuth coordinate curves when . (c) The calculated azimuth coordinate curves when . (d) The calculated elevation coordinate curves when . (e) The calculated elevation coordinate curves when . (f) The calculated elevation coordinate curves when .

Figure 11.

Repeatability results with the elevation angle set to 0° and the azimuth angle moving from −50° to 50° in 5° intervals. (a) Repeatability results for azimuth. (b) Repeatability results for elevation.

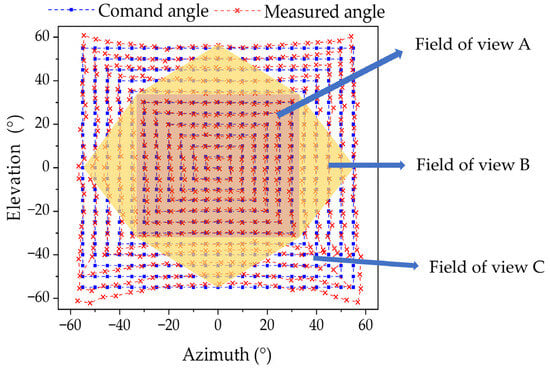

The 2D AOA detection performance of the system is measured, simultaneously verifying the effectiveness of the multi-sensor data fusion algorithm. The test results are shown in Figure 12. After using the data fusion algorithm, the maximum errors of azimuth and elevation angle in field of view A are 1.59° and 1.7°, respectively, and the RMSE is 0.6° and 0.67°. The maximum measurements of azimuth and elevation angle in the 110° field of view are 4.3° and 6.7°, respectively, with RMSE of 1.1° and 1.7°. In the fields of view A and B, higher measurement accuracy is achieved by fusing the outputs of multiple sensors. The 2D experimental results demonstrate that the spatially distributed sensor array exhibits high accuracy within a large field of view.

Figure 12.

Two-dimensional experimental AOA results with the spatially distributed sensor array.

However, conventional spot position detection systems often require complex optical components, such as fisheye lenses, high-resolution CCDs, and multi-unit sensor arrays, to achieve a wide field of view [9,10]. The fisheye lens, composed of multiple optical elements, contributes significantly to the system’s overall volume and weight. Furthermore, the associated processing circuitry tends to be more complex, resulting in higher power consumption. In contrast, the spatially distributed sensor array proposed in this study positions the sensors on a 150 mm × 150 mm panel, with a total mass of merely 140 g. This design not only simplifies the circuitry but also substantially reduces power consumption, presenting a more compact, lightweight, and energy-efficient solution.

5. Conclusions

This paper develops an AOA detection model for the spatially distributed sensor array and analyzes the impact of structural design on the field of view and detection accuracy. Based on it, a spatially distributed sensor array was designed, consisting of five InGaAs PIN photodiodes mounted on a 3D-printed panel with a 30° angle between the top panel and the side panels. A multi-sensor data fusion algorithm is proposed to mitigate measurement errors caused by the random variations in power density during the beam propagation process. The accuracy and repeatability of two-dimensional AOA detection were evaluated by indoor experiments. The results demonstrate that within a 60° field of view, the measurement accuracy of AOA is significantly improved, with a maximum angular error of 1.7° and an RMSE of 0.67°, validating the effectiveness of the multi-sensor data fusion algorithm. Compared to the systems that detect the AOA based on light spot position, the proposed design significantly reduces the SWaP, which has broad application prospects in future multi-satellite laser communication.

Furthermore, we will conduct multi-environment optimization design and testing. By encoding the received beam and designing filters to improve the signal-to-noise ratio (SNR), the performance of the sensor will be further enhanced.

Author Contributions

Conceptualization, X.W. (Xiaodong Wang) and L.Z.; methodology, S.Z., X.W. (Xiaodong Wang) and L.Z.; software, S.Z. and X.W. (Xiangyu Wang); validation, S.Z. and S.S.; formal analysis, S.Z. and S.S.; investigation, S.Z. and X.W. (Xiaodong Wang); resources, L.Z. and X.W. (Xiaodong Wang); data curation, S.Z., L.C. and H.D.; writing—original draft preparation, S.Z.; writing—review and editing, S.Z., X.W. (Xiaodong Wang), L.Z. and X.W. (Xiangyu Wang); visualization, S.Z. and X.W. (Xiangyu Wang); supervision, L.Z. and X.W. (Xiaodong Wang); project administration, L.Z. and X.W. (Xiaodong Wang); funding acquisition, L.Z. and X.W. (Xiaodong Wang). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

Thanks to the School of Optoelectronic Engineering, Chongqing University of Posts and Telecommunications for scientific inspiration and advice. Thanks Chongqing Institute of Green and Intelligent Technology, Chinese Academy of Sciences, for assistance with the instrument and equipment.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chaudhry, A.U.; Yanikomeroglu, H. Laser intersatellite links in a starlink constellation: A classification and analysis. IEEE Veh. Technol. Mag. 2021, 16, 48–56. [Google Scholar] [CrossRef]

- Gao, X.Z.; Xie, Z.; Ma, R.; Wang, W.; Bai, Z.F. Development current status and trend analysis of satellite laser communication. Acta Photonica Sin. 2021, 50, 9–29. [Google Scholar]

- Wang, G.; Yang, F.; Song, J.; Han, Z. Free Space Optical Communication for Inter-Satellite Link: Architecture, Potentials and Trends. IEEE Commun. Mag. 2024, 62, 110–116. [Google Scholar] [CrossRef]

- Li, R.; Lin, B.; Liu, Y.; Dong, M.; Zhao, S. A Survey on Laser Space Network: Terminals, Links, and Architectures. IEEE Access 2022, 10, 34815–34834. [Google Scholar] [CrossRef]

- Talamante, A.; Bowman, J.D.; Jacobs, D.C.; Hoffman, Z.; Horne, M.; McCormick, C.; Velazco, J.E.; Arnold, J.; Cornish, S.E.; Escobar, U.S.; et al. Deployable optical receiver array cubesat. In Proceedings of the 2021 Small Satellite Conference, Logan, UT, USA, 7–9 August 2021. [Google Scholar]

- Wong, Y.; Velazco, J. Distributed Spacecraft Mission Optical Multiple Access Inter-Satellite Communication Solution. In Proceedings of the 2024 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2024. [Google Scholar]

- Zaman, I.U.; Torum, R.; Janzen, A.; Peng, M.Y.; Velazco, J.; Boyraz, O. Design tradeoffs and challenges of omnidirectional optical antenna for high speed, long range inter CubeSat data communication. In Proceedings of the 32nd Annual AIAA/USU Conference on Small Satellites, Logan, UT, USA, 4 August 2018. [Google Scholar]

- Carrasco-Casado, A.; Shiratama, K.; Trinh, P.V.; Kolev, D.; Munemasa, Y.; Fuse, T.; Tsuji, H.; Toyoshima, M. Development of a miniaturized laser-communication terminal for small satellites. Acta Astronaut. 2022, 197, 1–5. [Google Scholar] [CrossRef]

- Williams, A.J.; Laycock, L.L.; Griffith, M.S.; McCarthy, A.G.; Rowe, D.P. Acquisition and tracking for underwater optical communications. In Advanced Free-Space Optical Communication Techniques and Applications III; SPIE: Bellingham, WA, USA, 2017; Volume 10437, pp. 44–54. [Google Scholar]

- Zhang, R.; Yang, X.; Shi, J.; Wu, Q.; Wang, Z.; Li, M. Integrated optical system design for large-field-of-view and broad-spectrum laser warning. Appl Opt. 2022, 61, 4187–4194. [Google Scholar] [CrossRef]

- Zhang, J.; Qian, W.; Gu, G.; Mao, C.; Ren, K.; Cai, G.; Liu, Z.; Yang, J.; Chen, Q. The effect of lens distortion in angle measurement based on four-quadrant detector. Infrared Phys. Technol. 2020, 104, 103060. [Google Scholar] [CrossRef]

- Pagano, T.S.; Norton, C.D.; Boyraz, O.; Velazco, J.E.; Peng, M.; Torun, R.; Janzen, A.W.; Zaman, I.U. Omnidirectional optical transceiver design techniques for multi-frequency full duplex CubeSat data communication. In CubeSats and NanoSats for Remote Sensing II; SPIE: Bellingham, WA, USA, 2018; Volume 10769, pp. 281–288. [Google Scholar]

- Eren, F.; Pe’eri, S.; Thein, M.W.; Rzhanov, Y.; Celikkol, B.; Swift, M.R. Position, orientation and velocity detection of unmanned underwater vehicles (UUVs) using an optical detector array. Sensors 2017, 17, 1741. [Google Scholar] [CrossRef] [PubMed]

- Jabczynski, J.K.; Romaniuk, R.S.; Jakubaszek, M.; Młodzianko, A.; Zygmunt, M.; Wojtanowski, J. Sensor of light incidence angle. In Laser Technology 2018: Progress and Applications of Lasers; SPIE: Bellingham, WA, USA, 2018; Volume 109740H, pp. 135–143. [Google Scholar]

- Velazco, J.E. Omnidirectional optical communicator. In Proceedings of the 2019 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2019; pp. 1–6. [Google Scholar]

- Velazco, J.E.; Aguilar, A.C.; Klaib, A.R.; Escobar, U.S.; Cornish, S.E.; Griffin, J.C. Development of Omnidirectional Optical Terminals for Swarm Communications and Navigation. In Interplanetary Network Progress Report; IPN PR 41-221; California Institute of Technology: Pasadena, CA, USA, 2020. [Google Scholar]

- Escobar, U.S.; Velazco, J.E.; Cornish, S.E.; Klaib, A.R.; Arnold, J.M.; Jacobs, D.; Bowmen, J.; Yi, L. Development of a deployable optical receive aperture. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; pp. 1–8. [Google Scholar]

- Patel, V.H.; Escobar, U.; Klaib, A.; Montanez, S.; Yi, L.; Jacobs, D.; Bowman, J. Ground Terminal Evaluation for Deployable Optical Receiver Aperture (DORA). In Proceedings of the 2024 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2024; pp. 1–11. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).