Artificial Vision Systems for Fruit Inspection and Classification: Systematic Literature Review

Abstract

1. Introduction

2. Objectives

- RQ1: In which application fields are artificial vision systems used to classify and inspect fruit?

- RQ2: What are the typical hardware configurations of the machine vision systems used for image acquisition in fruit classification and inspection?

- RQ3: What are the most commonly used image-processing algorithms and techniques in fruit classification and inspection?

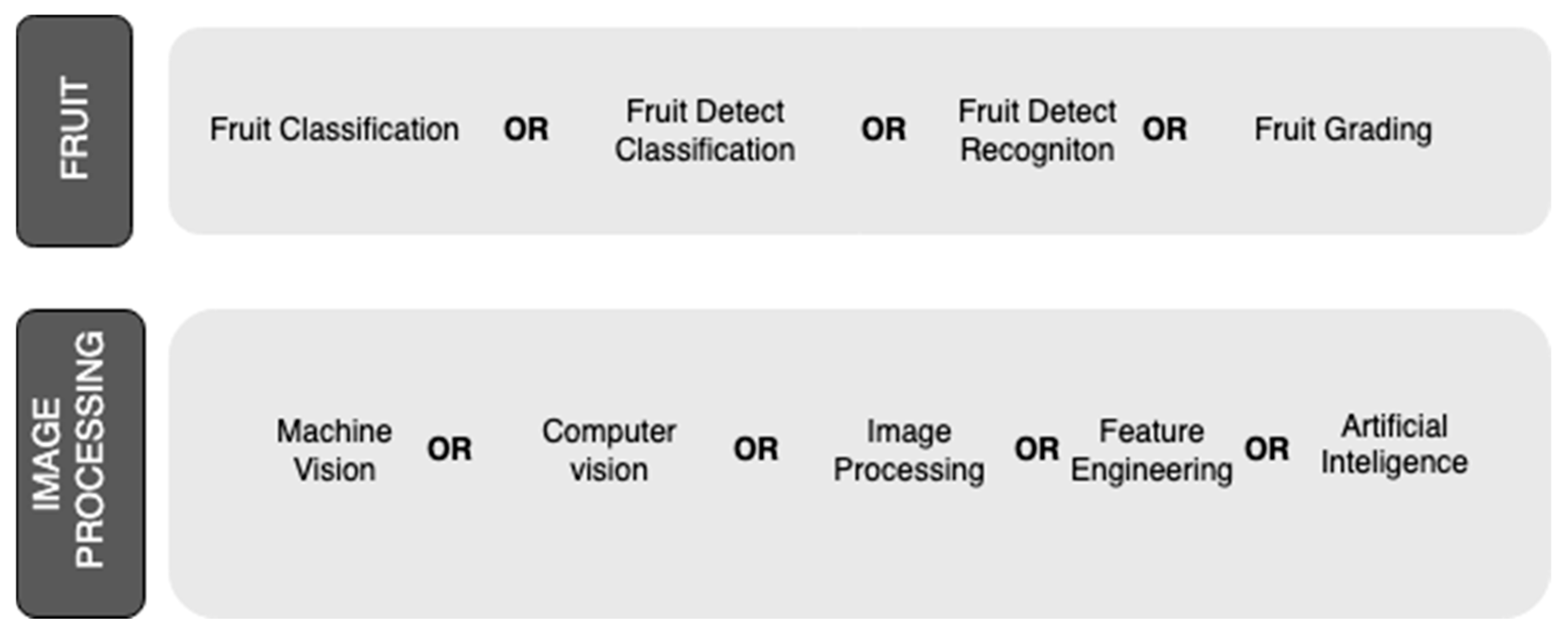

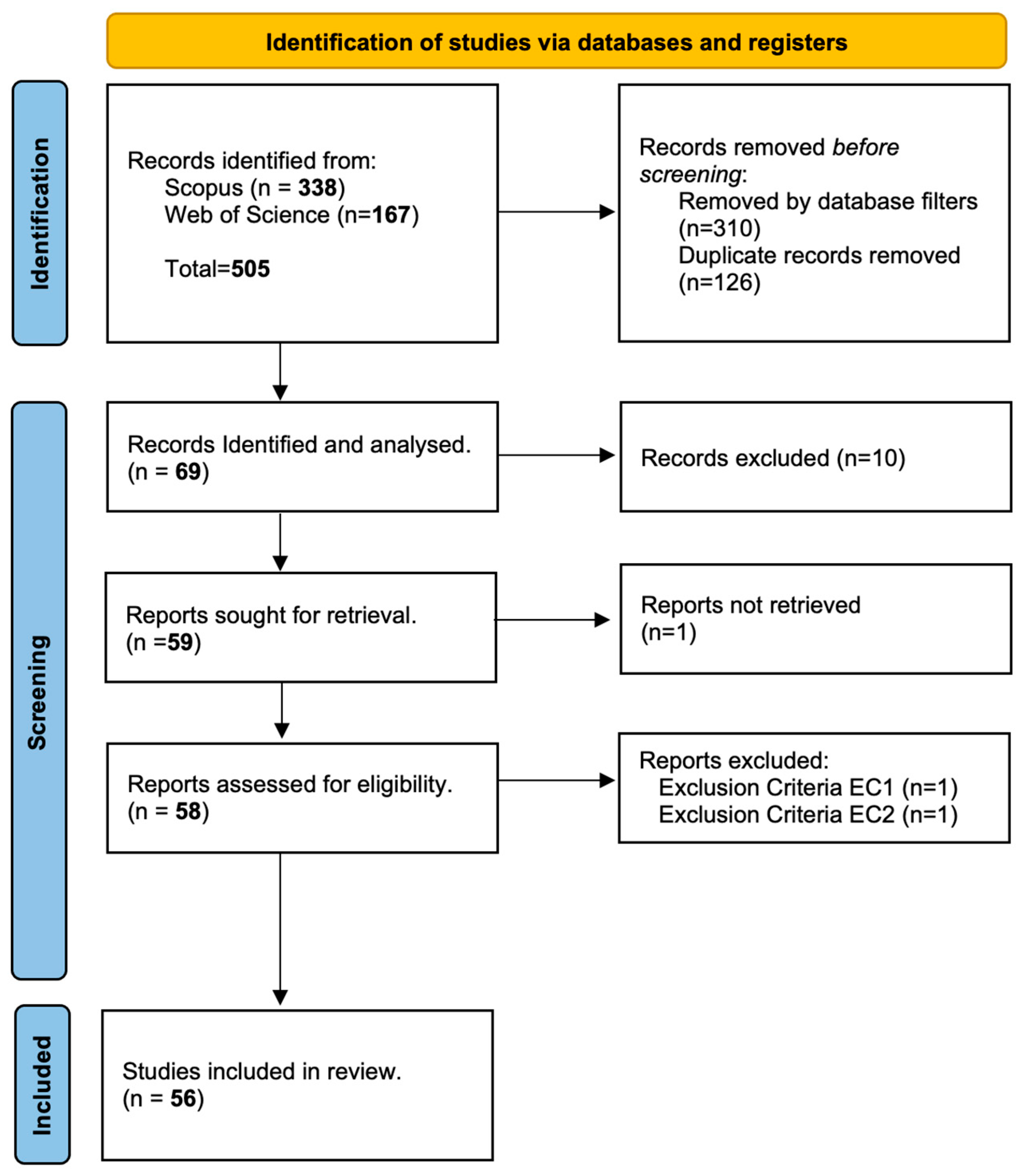

3. Methodology

4. Results

4.1. Data Synthesis

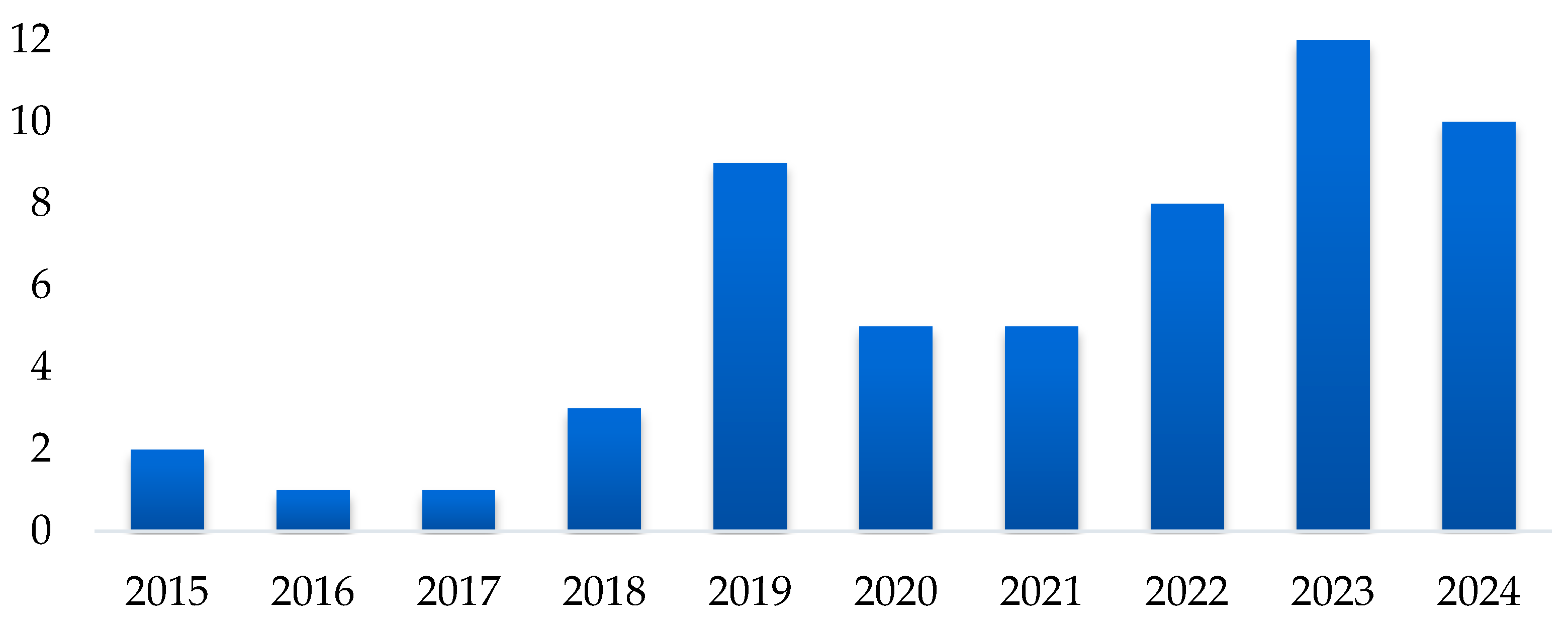

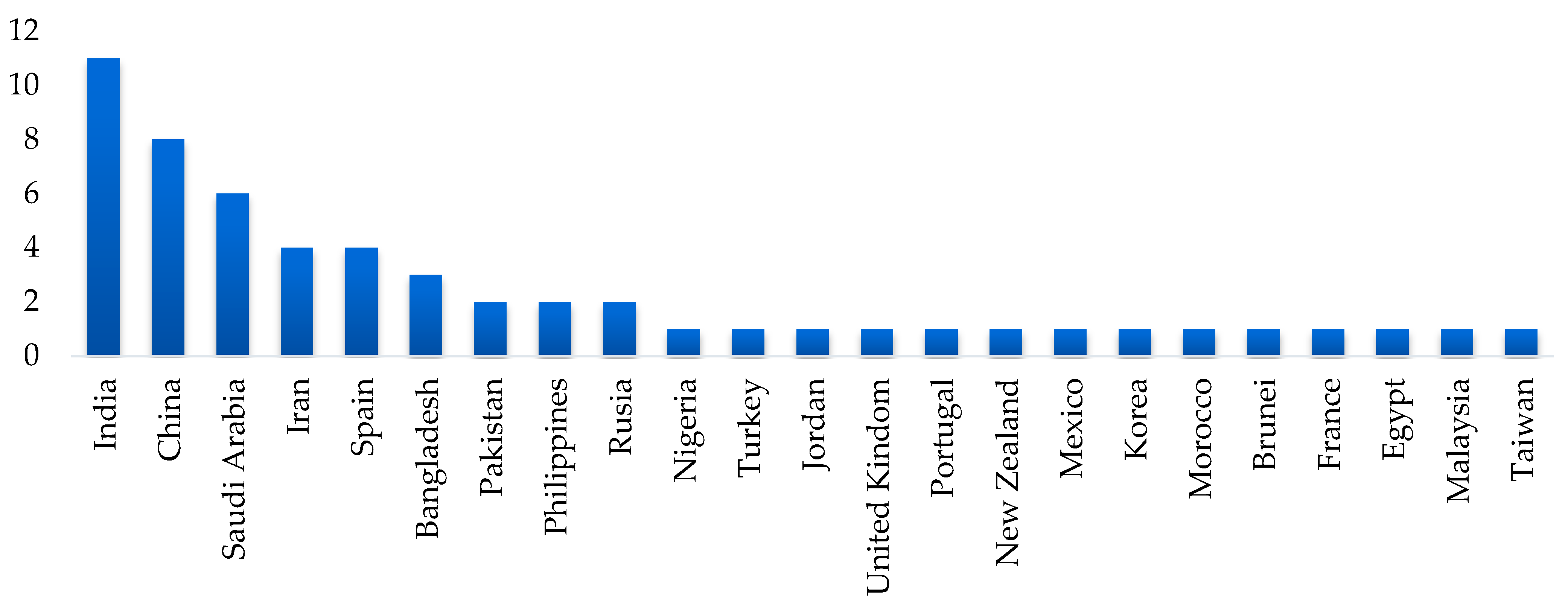

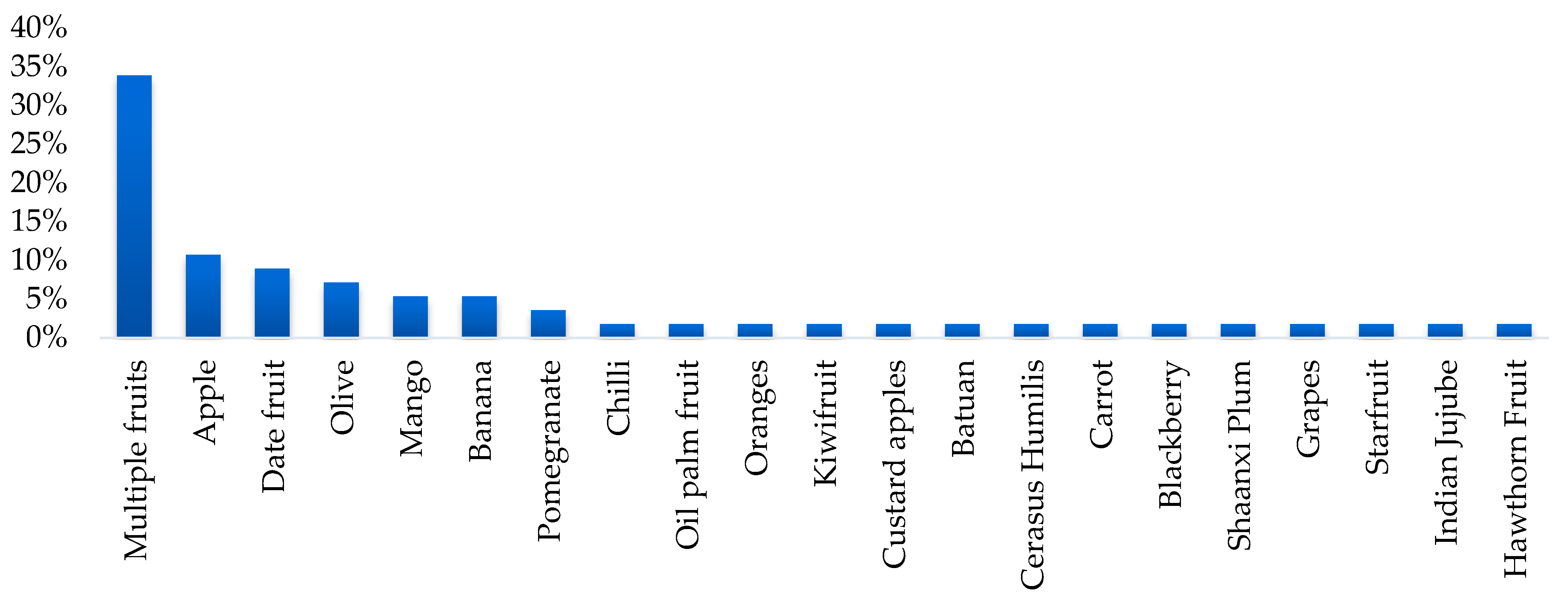

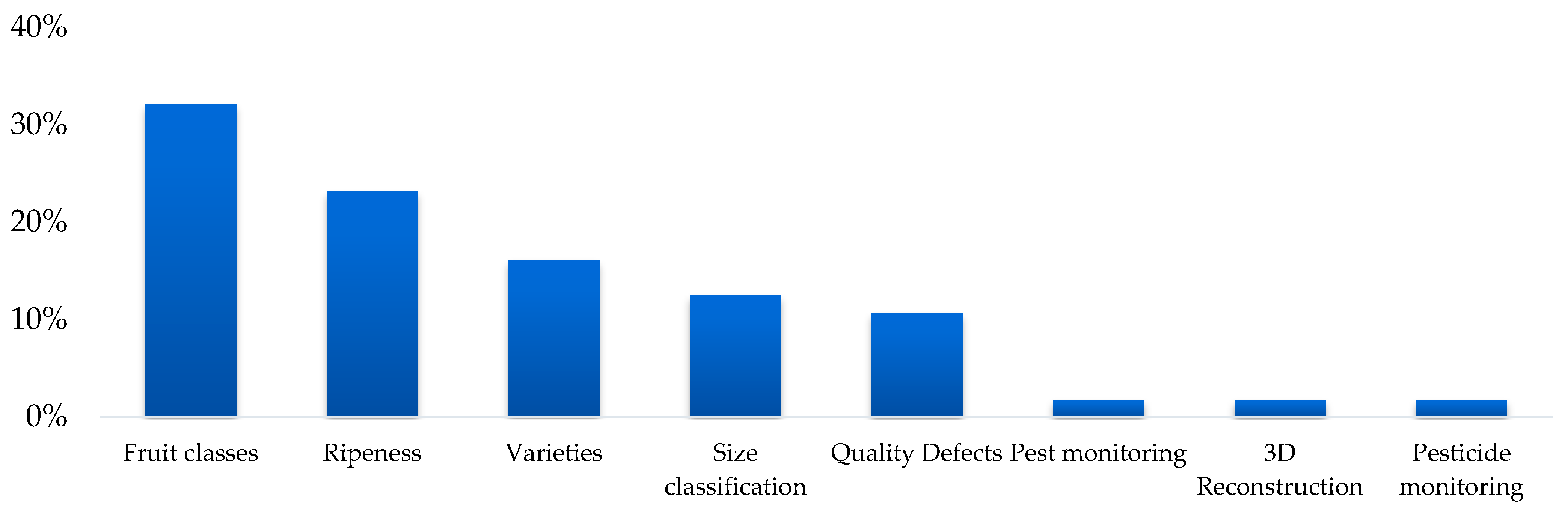

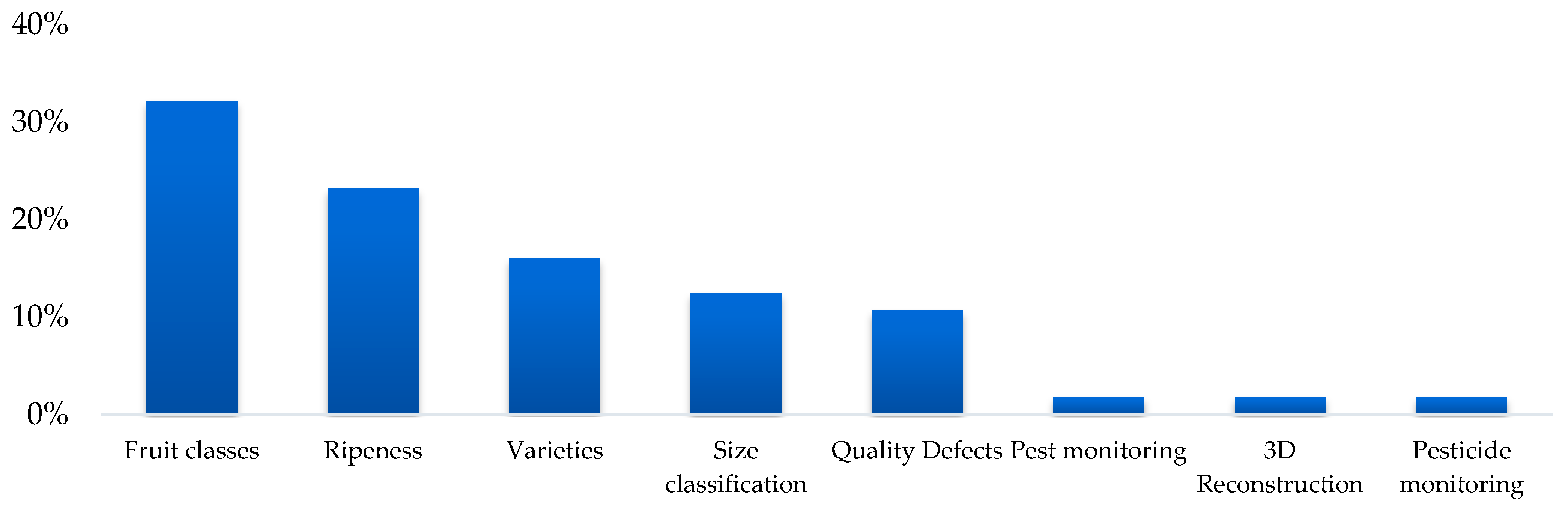

4.2. General Characteristics of the Articles

4.3. Answering the Research Questions

- A. Orchard: At this stage, machine vision systems are used to automate the harvesting and sorting of fruit directly in the field. These systems are useful for identifying fruit classes [32,38,43], assessing maturity levels [23,24,28,30,51], monitoring pests [49], and monitoring pesticides [65]. Their deployment also helps address labor shortages in agricultural activities.

- B. Fruit-processing industries: In industrial processing lines, machine vision is used for variety detection [14,20,50,54,64,70], fruit-class identification [18,19,35], ripeness-level assessment [21,25,27,55,57,66], size classification [22,26,29,33,36,60,63], and sorting by quality defects [34,42,52,56,62,67]. Its main advantages are reduced human error, faster inspection, and more consistent product quality.

- C. Retail or Final Consumption Points: In this emerging area, artificial vision systems help consumers and distribution chains assess freshness [48,53], identify varieties [17,58,61], and detect the type of fruit [15,16,31,37,39,40,41,44,45,46,59,69]. Recent advances have enabled smartphone-integrated systems that classify fruit in real time, supporting informed purchasing decisions.

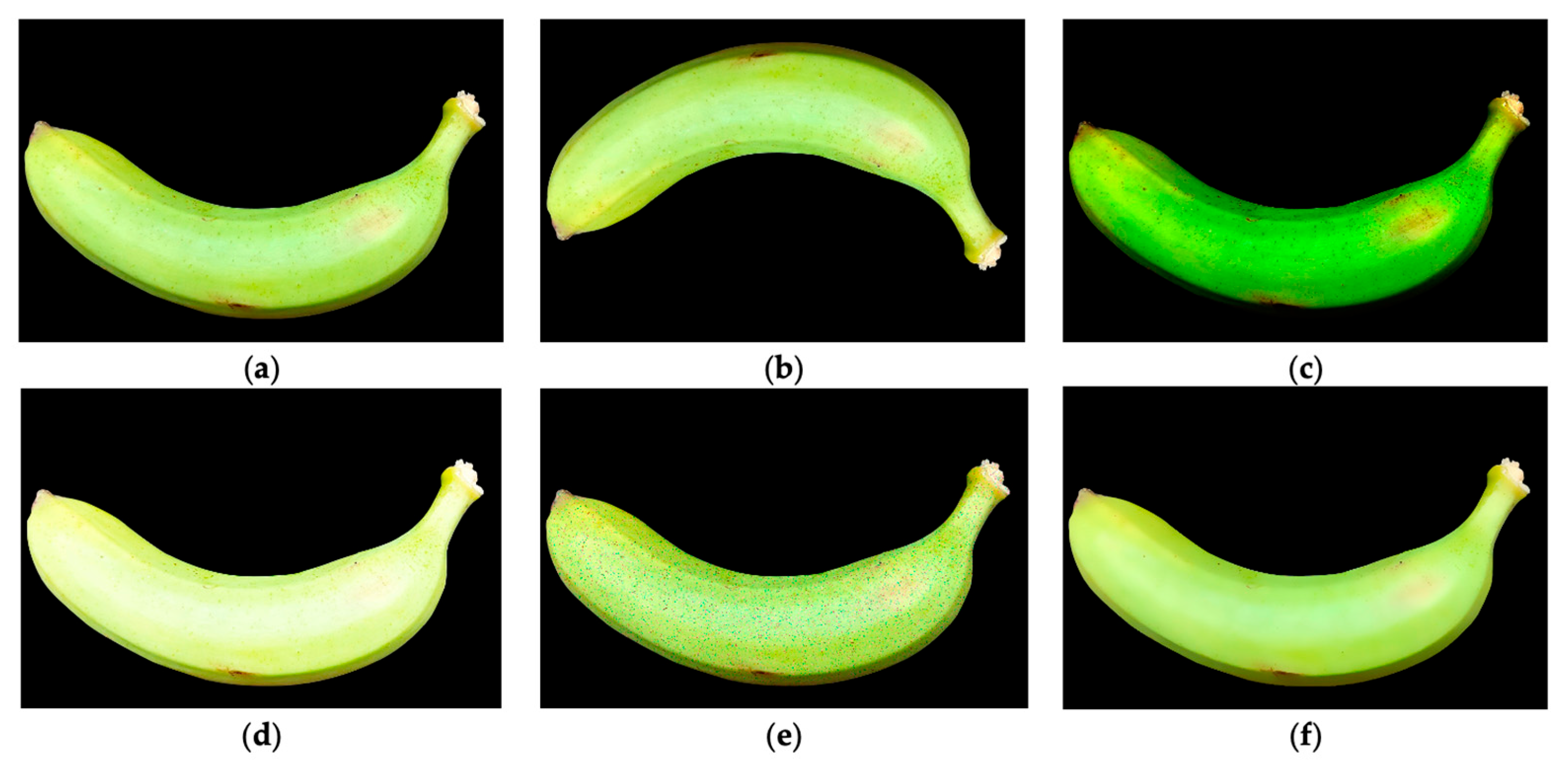

- A. Image capture:

- B. Acquisition conditions:

- C. Capture speed:

- A. Preprocessing:

- Image filtering and enhancement

- Color adjustment and lighting correction

- Geometric transformations

- A. Segmentation:

- Segmentation algorithms

- Sobel Filter [17,27]: This edge detection technique approximates the first derivative of pixel intensity in the horizontal and vertical directions, highlighting areas where intensity changes sharply. It uses two 3 × 3 convolution kernels, one per direction, and combines their responses (usually as the gradient magnitude) into a gradient image. It is useful for identifying contours in images with sharp, well-defined edges, providing key information for segmenting objects such as fruit. Although it is computationally efficient, it is sensitive to noise, so it is often combined with pre-filtering (smoothing) to improve the results.
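As a rough illustration of the two-kernel scheme described above, the following NumPy sketch computes a Sobel gradient-magnitude image for a 2-D grayscale array. It assumes edge-replicated borders, and the function names `filter3x3` and `sobel_magnitude` are hypothetical; production systems would normally call an optimized library routine instead.

```python
import numpy as np

# Sobel kernels: KX responds to horizontal intensity changes (vertical edges),
# KY (its transpose) to vertical intensity changes (horizontal edges).
KX = np.array([[-1, 0, 1],
               [-2, 0, 2],
               [-1, 0, 1]], dtype=float)
KY = KX.T


def filter3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Correlate a 2-D image with a 3x3 kernel, replicating border pixels.

    Correlation instead of convolution only flips the sign of the Sobel
    response, which does not affect the gradient magnitude.
    """
    padded = np.pad(img.astype(float), 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def sobel_magnitude(img: np.ndarray) -> np.ndarray:
    """Combine the horizontal and vertical responses into a gradient image."""
    gx = filter3x3(img, KX)
    gy = filter3x3(img, KY)
    return np.hypot(gx, gy)
```

On a synthetic step image (dark left half, bright right half), the response is nonzero only in the two columns adjacent to the intensity jump, which is the contour information later used for segmentation.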

- Canny Filter [22,33,57]: This multi-stage edge detector is more elaborate than the Sobel filter. First, it applies a Gaussian filter to smooth the image and reduce noise; then, it computes intensity gradients to locate pronounced changes. Next, non-maximum suppression thins the detected edges by keeping only pixels that are local maxima along the gradient direction, which removes spurious responses. Finally, a double threshold classifies edges as strong or weak, and weak edges are kept only if they are connected to strong ones (hysteresis). The Canny filter is especially effective on complex images, as it generally yields thinner and better-localized edges than simple gradient operators.
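The double-threshold and hysteresis stage is the step most specific to the Canny detector, so the sketch below shows only that stage. It assumes the gradient magnitude has already been smoothed, computed, and thinned by non-maximum suppression; the helper name `hysteresis` and the thresholds `low` and `high` are illustrative assumptions, not values reported by the reviewed studies. Library implementations such as OpenCV's `cv2.Canny` perform all four stages.

```python
from collections import deque

import numpy as np


def hysteresis(mag: np.ndarray, low: float, high: float) -> np.ndarray:
    """Final Canny stage: double threshold plus hysteresis edge tracking.

    Pixels with mag >= high are strong edges. Pixels with low <= mag < high are
    weak edges and survive only if they are 8-connected to a strong edge,
    directly or through a chain of other weak pixels.
    """
    strong = mag >= high
    weak = (mag >= low) & ~strong
    edges = strong.copy()
    queue = deque(zip(*np.nonzero(strong)))  # start tracking from every strong pixel
    h, w = mag.shape
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and weak[ny, nx] and not edges[ny, nx]:
                    edges[ny, nx] = True      # weak pixel connected to an edge: keep it
                    queue.append((ny, nx))
    return edges
```

In this formulation an isolated weak response, such as a noise speck, is discarded, while a weak segment that continues a strong contour is retained.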

- Otsu Thresholding [42,46,52,55,64]: Otsu is a threshold-based segmentation technique used to binarize images. It automatically selects the threshold that minimizes the weighted intra-class variance, which is equivalent to maximizing the inter-class variance. In practical terms, it searches for the cut-off point that best separates the pixels into two groups: background and object. It works best when the image histogram is bimodal, that is, when there are two distinct classes (for example, a fruit and its background). Otsu is commonly used on images with uniform illumination and suits applications that require fast, automatic segmentation.
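The exhaustive threshold search can be written compactly from the image histogram. The sketch below assumes an 8-bit grayscale image (values 0 to 255) and uses the between-class-variance formulation; `otsu_threshold` is a hypothetical helper, and libraries such as OpenCV (`cv2.threshold` with `cv2.THRESH_OTSU`) and scikit-image (`skimage.filters.threshold_otsu`) provide equivalent routines.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the Otsu threshold t of an 8-bit image; pixels > t are foreground."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()              # normalized histogram p(i)
    levels = np.arange(256)
    omega = np.cumsum(prob)               # omega(t): probability of class 0 (values <= t)
    mu = np.cumsum(prob * levels)         # mu(t): cumulative first moment up to t
    mu_total = mu[-1]                     # global mean intensity
    # Between-class variance: sigma_B^2(t) = (mu_T * omega(t) - mu(t))^2 / (omega(t) * (1 - omega(t)))
    numerator = (mu_total * omega - mu) ** 2
    denominator = omega * (1.0 - omega)
    sigma_b2 = np.divide(numerator, denominator,
                         out=np.zeros_like(numerator), where=denominator > 0)
    return int(np.argmax(sigma_b2))      # first threshold with maximal separation
```

A binary fruit mask then follows as `gray > otsu_threshold(gray)`, assuming the fruit is brighter than the background; for a dark fruit on a light background the comparison is reversed.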

- Mean Shift Clustering [66]: This method groups pixels according to their similarity in features such as color or intensity. It iteratively moves a kernel window toward the mean of the samples it covers, so that each estimate climbs toward a local maximum (mode) of the feature-space density; pixels that converge to the same mode form one cluster. It is particularly useful for segmenting images with homogeneous color regions, such as fruit on uniform backgrounds, because it does not require the number of clusters to be fixed in advance (although a kernel bandwidth must be chosen).
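To make the mode-seeking procedure concrete, the sketch below runs flat-kernel mean shift on a small set of color vectors (for example, RGB values of sampled pixels). The helper name `mean_shift`, the bandwidth, the convergence tolerance, and the rule that merges modes closer than half a bandwidth are illustrative assumptions. The all-pairs distance computation is quadratic in the number of samples, so for full images one would subsample pixels or use an optimized implementation such as scikit-learn's `MeanShift`.

```python
import numpy as np


def mean_shift(points, bandwidth, max_iter=100, tol=1e-3):
    """Flat-kernel mean shift clustering; returns (labels, modes).

    points: (N, D) feature vectors, e.g. RGB or L*a*b* values of pixels.
    bandwidth: radius of the flat kernel in feature units (assumed parameter).
    """
    points = np.asarray(points, dtype=float)
    shifted = points.copy()
    for _ in range(max_iter):
        max_move = 0.0
        for i in range(len(shifted)):
            # Mean of all original samples inside the window centred on the current estimate.
            dist = np.linalg.norm(points - shifted[i], axis=1)
            new_pos = points[dist <= bandwidth].mean(axis=0)
            max_move = max(max_move, float(np.linalg.norm(new_pos - shifted[i])))
            shifted[i] = new_pos
        if max_move < tol:  # every estimate has settled on a density peak
            break
    # Estimates that converged to (nearly) the same peak share a cluster label.
    modes = []
    labels = np.empty(len(points), dtype=int)
    for i, pos in enumerate(shifted):
        for k, mode in enumerate(modes):
            if np.linalg.norm(pos - mode) < bandwidth / 2:
                labels[i] = k
                break
        else:
            modes.append(pos)
            labels[i] = len(modes) - 1
    return labels, np.array(modes)
```

Note that the number of clusters is not an input: it emerges from the data and the chosen bandwidth, which is the property highlighted above.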

- Watershed Segmentation [20,66,70]: This method interprets pixel intensities as a topographic surface in which low values are valleys and high values are ridges. The valleys are flooded with “water” starting from marker points, and the lines where floods from different markers meet become the region boundaries. It is well suited to segmenting objects that overlap or touch, such as stacked fruit, and can yield precise contours in complex images. To avoid over-segmentation, it is often combined with preprocessing techniques such as smoothing and edge detection.
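The flooding process can be sketched with a priority queue that always expands the lowest unflooded pixel adjacent to an already labeled basin, a simplified form of Meyer's flooding algorithm. In this sketch, which does not produce explicit watershed-line pixels, the hypothetical `watershed` helper takes `surface` as the topographic image and `markers` with one positive label per seed. For touching fruit, a common choice is to use the negated distance transform of a binary mask as the surface and its local maxima as markers; library versions include `skimage.segmentation.watershed` and `cv2.watershed`.

```python
import heapq

import numpy as np


def watershed(surface: np.ndarray, markers: np.ndarray) -> np.ndarray:
    """Marker-controlled watershed by priority flooding (simplified Meyer algorithm).

    surface: 2-D topography (low values = valleys).
    markers: int array of the same shape; 0 = unlabeled, >0 = seed label.
    Every pixel connected to a seed receives the label of the basin that reaches
    it first; no separate watershed-line label is produced in this sketch.
    """
    labels = markers.copy()
    h, w = surface.shape
    heap = []
    order = 0  # insertion counter: breaks ties between equal heights in FIFO order
    for y, x in zip(*np.nonzero(markers)):
        heapq.heappush(heap, (surface[y, x], order, y, x))
        order += 1
    while heap:
        _, _, y, x = heapq.heappop(heap)  # lowest pixel on the current flood front
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= ny < h and 0 <= nx < w and labels[ny, nx] == 0:
                labels[ny, nx] = labels[y, x]  # the flood from this basin claims the pixel
                heapq.heappush(heap, (surface[ny, nx], order, ny, nx))
                order += 1
    return labels
```

With two valleys separated by a ridge and one marker per valley, each valley is assigned to its own region and the split falls on the ridge, which is how two touching fruits are separated.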

- Combined Applications: The reviewed studies often apply segmentation techniques in combination to improve accuracy. The Sobel filter is used to detect initial contours, which are then refined with the Canny algorithm. Mean Shift Clustering is used to group pixels before Watershed is applied [66], which reduces noise and improves object separation. In complex or noisy images, these techniques are combined with transformations to color spaces such as CIE L*a*b* or HSV [27], where chromatic differences between the object and the background are more pronounced, which facilitates segmentation. Binarized masks not only facilitate background removal but also enable the analysis of physical features, such as measuring the longitudinal and transverse axes, calculating area, or identifying specific shapes [26].
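As an example of the physical measurements that a binarized mask enables, the sketch below computes the area and approximate longitudinal and transverse axis lengths of a single fruit from its mask. The hypothetical helper `mask_measurements` takes the axes as the principal directions of the foreground pixel coordinates; this is an assumption made for illustration, and the study cited above [26] may measure the axes differently. All values are in pixels, so converting them to millimeters would require a camera calibration factor.

```python
import numpy as np


def mask_measurements(mask: np.ndarray) -> dict:
    """Area and longitudinal/transverse axis lengths (pixels) of a binary fruit mask.

    The axes are the principal directions (PCA) of the foreground pixel
    coordinates; each length is the extent of the mask projected onto that
    direction plus one pixel, so a one-pixel-wide object has length 1.
    """
    coords = np.argwhere(mask).astype(float)  # (row, col) of every foreground pixel
    if len(coords) < 2:
        raise ValueError("mask needs at least two foreground pixels")
    centered = coords - coords.mean(axis=0)
    _, eigvecs = np.linalg.eigh(np.cov(centered, rowvar=False))
    major_dir, minor_dir = eigvecs[:, 1], eigvecs[:, 0]  # eigh sorts eigenvalues ascending

    def extent(direction: np.ndarray) -> float:
        proj = centered @ direction
        return float(proj.max() - proj.min() + 1)

    return {
        "area_px": int(mask.sum()),
        "longitudinal_px": extent(major_dir),
        "transverse_px": extent(minor_dir),
    }
```

For rotated or irregular fruit the projected extents are approximations of the true axis lengths, but they are invariant to the fruit's orientation in the image.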

- Discontinuity-based: These methods identify abrupt changes in pixel intensity, such as edges or lines; the Sobel and Canny filters are examples. This approach is useful for detecting contours and separating regions with well-defined boundaries.

- Similarity-based: These methods group pixels into regions with homogeneous characteristics, such as color, intensity, or texture. Otsu thresholding is an example of this approach.

- Segmentation Objectives

- Object Detection: Identify and isolate the fruit from the background for subsequent analysis.

- Shape Analysis: Extract the geometry and dimensions of the fruit to evaluate its quality or classify it according to specific standards.

- Color Detection: Identify color shades that indicate the level of ripeness, freshness, or the presence of defects.

- Defect Detection: Highlight imperfections such as bruises, stains, or physical damage that affect the quality of the fruit.

- B. Feature extraction:

- 1. Type of extracted features

- 2. Feature extraction methods

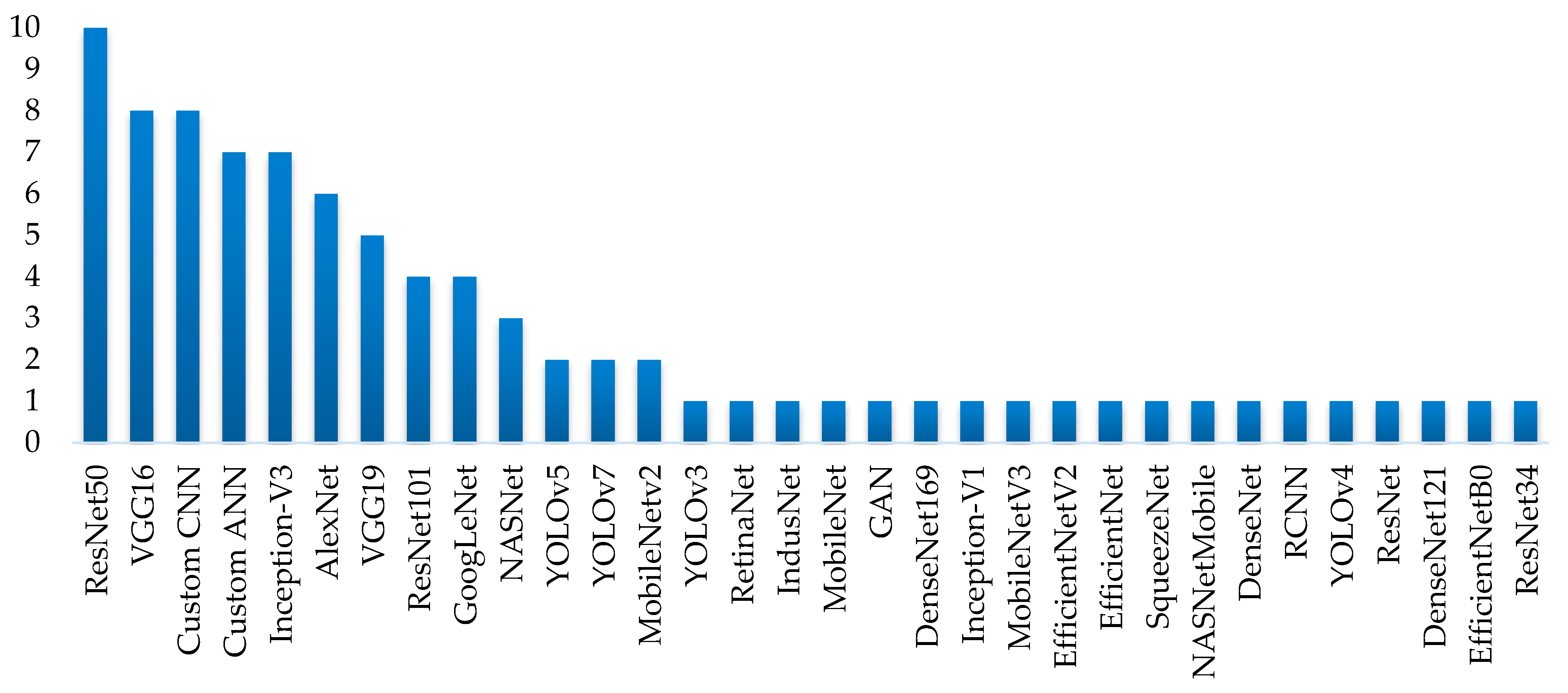

- Deep Learning-Based Approaches: Deep learning models such as VGG-16, ResNet, DenseNet, and YOLO are widely used for feature extraction. These models can capture complex patterns related to texture, color, and shape. In fruit classification, such features are essential for tasks such as defect detection, quality assessment, and classification by type or variety.

- Use of Color Spaces: The reviewed studies highlight the importance of alternative color spaces for feature extraction in fruit classification, since these provide more specific and discriminative representations than the standard RGB color space.
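A minimal example of color-space feature extraction is to convert the masked fruit pixels from RGB to HSV and summarize them as a hue histogram plus mean saturation and value. The helper name `hsv_color_features`, the bin count, and the choice of HSV (rather than, say, CIE L*a*b*) are illustrative assumptions; the per-pixel loop over Python's standard `colorsys.rgb_to_hsv` is chosen for clarity rather than speed.

```python
import colorsys

import numpy as np


def hsv_color_features(rgb: np.ndarray, mask: np.ndarray, hue_bins: int = 12) -> np.ndarray:
    """Normalized hue histogram plus mean saturation and value of the fruit pixels.

    rgb: (H, W, 3) uint8 image; mask: (H, W) boolean fruit mask.
    Returns a vector of length hue_bins + 2.
    """
    pixels = rgb[mask].astype(float) / 255.0                   # colorsys expects values in [0, 1]
    hsv = np.array([colorsys.rgb_to_hsv(*p) for p in pixels])  # each channel in [0, 1]
    hist, _ = np.histogram(hsv[:, 0], bins=hue_bins, range=(0.0, 1.0))
    hist = hist / hist.sum()                                   # fraction of fruit pixels per hue bin
    return np.concatenate([hist, [hsv[:, 1].mean(), hsv[:, 2].mean()]])
```

Because hue is separated from brightness, a ripeness change from green to red moves mass between hue bins, while a uniform change in illumination intensity mainly affects the value component. The resulting vector can be passed to any of the classifiers listed in the comparison table.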

- C.

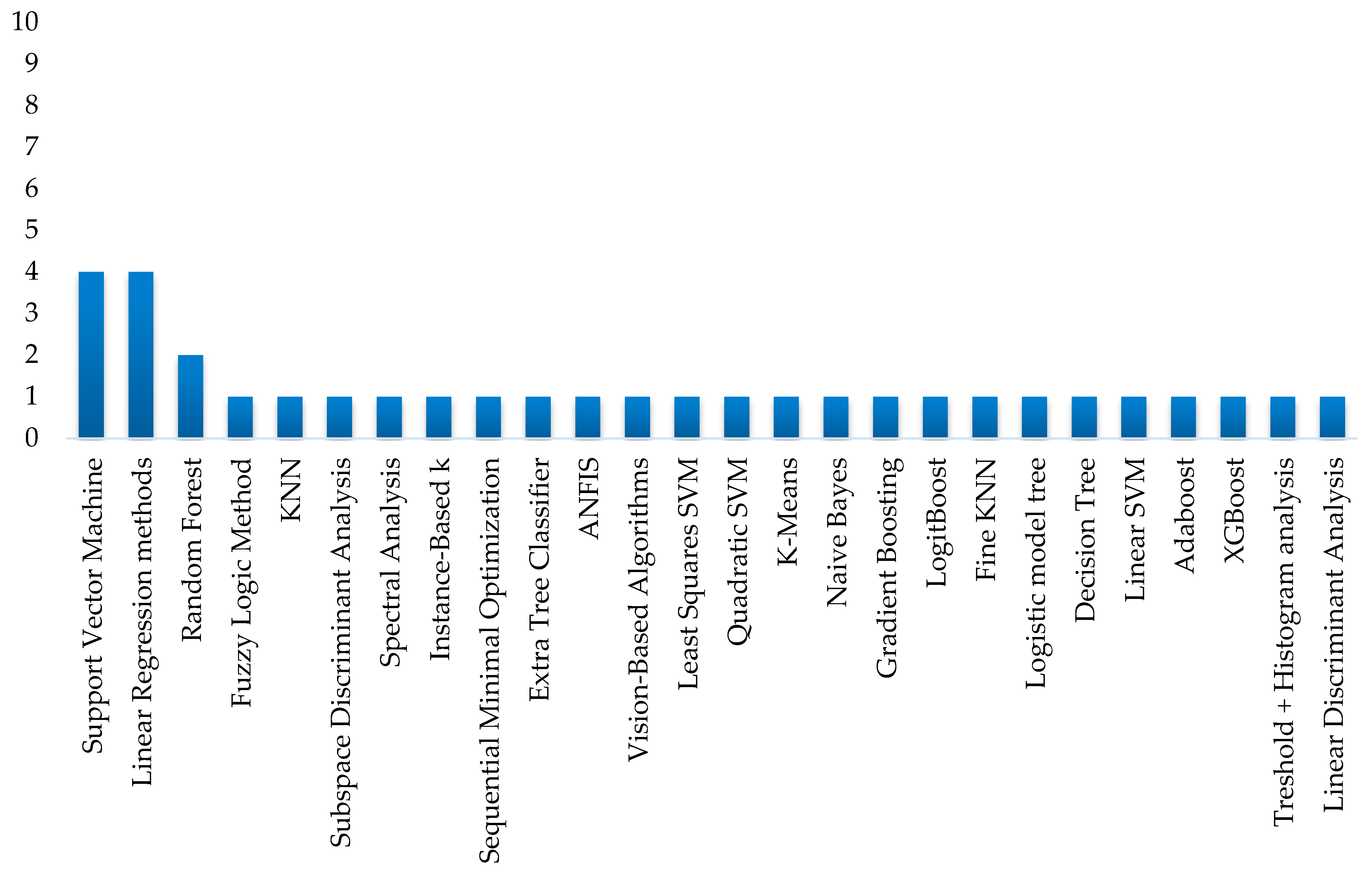

- Classification:

- Classification algorithms used

- 2.

- Model accuracy and performance

- 3.

- Classification Objective

- 4.

- Techniques and algorithms

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Economic Research Service U.S. Department of Agriculture. Ag and Food Sectors and the Economy. Available online: https://www.ers.usda.gov/data-products/ag-and-food-statistics-charting-the-essentials/ag-and-food-sectors-and-the-economy/ (accessed on 27 September 2024).

- U.S. Food & Drug Administration. Inspections to Protect the Food Supply. Available online: https://www.fda.gov/food/compliance-enforcement-food/inspections-protect-food-supply (accessed on 27 September 2024).

- FAO; WHO. Codex Alimentarius Commission Procedural Manual; Twenty-Eighth: Rome, Italy, 2023; Available online: https://openknowledge.fao.org/items/dfc93e42-67f3-4de9-9dad-b33fb1600b32 (accessed on 27 September 2024).

- Goyal, K.; Kumar, P.; Verma, K. AI-based fruit identification and quality detection system. Multimed. Tools Appl. 2022, 82, 24573–24604.

- Potdar, R.M.; Sahu, D. Defect Identification and Maturity Detection of Mango Fruits Using Image Analysis. Artic. Int. J. Artif. Intell. 2017, 1, 5–14.

- Albarrak, K.; Gulzar, Y.; Hamid, Y.; Mehmood, A.; Soomro, A.B. A Deep Learning-Based Model for Date Fruit Classification. Sustainability 2022, 14, 6339.

- Tianjing, Y.; Mhamed, M. Developments in Automated Harvesting Equipment for the Apple in the orchard: Review. Smart Agric. Technol. 2024, 9, 100491.

- Chhetri, K.B. Applications of Artificial Intelligence and Machine Learning in Food Quality Control and Safety Assessment. Food Eng. Rev. 2023, 16, 1–21.

- Yue, Z.; Miao, Z. Research on Fruit and Vegetable Classification and Recognition Method Based on Depth-Wise Separable Convolution. 2023. Available online: https://www.researchsquare.com/article/rs-3326048/v1 (accessed on 12 February 2025).

- Miranda, J.C.; Gené-Mola, J.; Zude-Sasse, M.; Tsoulias, N.; Escolà, A.; Arnó, J.; Rosell-Polo, J.R.; Sanz-Cortiella, R.; Martínez-Casasnovas, J.A.; Gregorio, E. Fruit sizing using AI: A review of methods and challenges. Postharvest Biol. Technol. 2023, 206, 112587.

- Sundaram, S.; Zeid, A. Artificial Intelligence-Based Smart Quality Inspection for Manufacturing. Micromachines 2023, 14, 570.

- Espinoza, S.; Aguilera, C.; Rojas, L.; Campos, P.G. Analysis of Fruit Images With Deep Learning: A Systematic Literature Review and Future Directions. IEEE Access 2023, 12, 3837–3859.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, 71.

- Sabzi, S.; Abbaspour-Gilandeh, Y.; García-Mateos, G. A new approach for visual identification of orange varieties using neural networks and metaheuristic algorithms. Inf. Process. Agric. 2018, 5, 162–172.

- Shankar, K.; Kumar, S.; Dutta, A.K.; Alkhayyat, A.; Jawad, A.J.M.; Abbas, A.H.; Yousif, Y.K. An Automated Hyperparameter Tuning Recurrent Neural Network Model for Fruit Classification. Mathematics 2022, 10, 2358.

- Murthy, T.S.; Kumar, K.V.; Alenezi, F.; Lydia, E.L.; Park, G.-C.; Song, H.-K.; Joshi, G.P.; Moon, H. Artificial Humming Bird Optimization with Siamese Convolutional Neural Network Based Fruit Classification Model. Comput. Syst. Sci. Eng. 2023, 47, 1633–1650.

- Ratha, A.K.; Barpanda, N.K.; Sethy, P.K.; Behera, S.K. Automated Classification of Indian Mango Varieties Using Machine Learning and MobileNet-v2 Deep Features. Trait. Du Signal 2024, 41, 669–679.

- Zhang, W. Automated Fruit Grading in Precise Agriculture using You Only Look Once Algorithm. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 1136–1144.

- Adigun, J.O.; Okikiola, F.M.; Aigbokhan, E.E.; Rufai, M.M. Automated System for Grading Apples using Convolutional Neural Network. Int. J. Innov. Technol. Explor. Eng. 2019, 9, 1458–1464.

- Ponce, J.M.; Aquino, A.; Millan, B.; Andujar, J.M. Automatic Counting and Individual Size and Mass Estimation of Olive-Fruits Through Computer Vision Techniques. IEEE Access 2019, 7, 59451–59465.

- Yang, L.; Cui, B.; Wu, J.; Xiao, X.; Luo, Y.; Peng, Q.; Zhang, Y. Automatic Detection of Banana Maturity—Application of Image Recognition in Agricultural Production. Processes 2024, 12, 799.

- Abbaspour-Gilandeh, S.S. Automatic grading of emperor apples based on image processing and ANFIS. J. Agric. Sci. Bilim. Derg. 2015, 21, 326–336.

- Morales-Vargas, E.; Fuentes-Aguilar, R.Q.; De-La-Cruz-Espinosa, E.; Hernández-Melgarejo, G. Blackberry Fruit Classification in Underexposed Images Combining Deep Learning and Image Fusion Methods. Sensors 2023, 23, 9543.

- Patkar, G.S.; Anjaneyulu, G.S.G.N.; Mouli, P.V.S.S.R.C. Challenging Issues in Automated Oil Palm Fruit Grading. IAES Int. J. Artif. Intell. (IJ-AI) 2018, 7, 111–118.

- Hanafi, M.; Shafie, S.M.; Ibrahim, Z. Classification of C. annuum and C. frutescens Ripening Stages: How Well Does Deep Learning Perform? IIUM Eng. J. 2024, 25, 167–178.

- Fu, L.; Sun, S.; Li, R.; Wang, S. Classification of Kiwifruit Grades Based on Fruit Shape Using a Single Camera. Sensors 2016, 16, 1012.

- Saha, K.K.; Rahman, A.; Moniruzzaman, M.; Syduzzaman, M.; Uddin, Z.; Rahman, M.; Ali, A.; al Riza, D.F.; Oliver, M.H. Classification of starfruit maturity using smartphone-image and multivariate analysis. J. Agric. Food Res. 2022, 11, 100473.

- Altaheri, H.; Alsulaiman, M.; Muhammad, G. Date Fruit Classification for Robotic Harvesting in a Natural Environment Using Deep Learning. IEEE Access 2019, 7, 117115–117133.

- Alturki, A.S.; Islam, M.; Alsharekh, M.F.; Almanee, M.S.; Ibrahim, A.H. Date Fruits Grading and Sorting Classification Algoritham Using Colors and Shape Features. Int. J. Eng. Res. Technol. 2020, 13, 1917–1920.

- Faisal, M.; Albogamy, F.; Elgibreen, H.; Algabri, M.; Alqershi, F.A. Deep Learning and Computer Vision for Estimating Date Fruits Type, Maturity Level, and Weight. IEEE Access 2020, 8, 206770–206782.

- Nasir, I.M.; Bibi, A.; Shah, J.H.; Khan, M.A.; Sharif, M.; Iqbal, K.; Nam, Y.; Kadry, S. Deep Learning-based Classification of Fruit Diseases: An Application for Precision Agriculture. Comput. Mater. Contin. 2021, 66, 1949–1962.

- Khalifa, N.E.M.; Wang, J.; Taha, M.H.N.; Zhang, Y. DeepDate: A deep fusion model based on whale optimization and artificial neural network for Arabian date classification. PLoS ONE 2024, 19, e0305292.

- Örnek, M.N.; Haciseferoğullari, H. Design of Real Time Image Processing Machine for Carrot Classification. Yuz. Yıl Univ. J. Agric. Sci. 2020, 30, 355–366.

- Wang, B.; Yang, H.; Zhang, S.; Li, L. Detection of Defective Features in Cerasus Humilis Fruit Based on Hyperspectral Imaging Technology. Appl. Sci. 2023, 13, 3279.

- Liu, H.; He, J.; Fan, X.; Liu, B. Detection of variety and wax bloom of Shaanxi plum during post-harvest handling. Chemom. Intell. Lab. Syst. 2024, 246, 105066.

- Tai, N.D.; Lin, W.C.; Trieu, N.M.; Thinh, N.T. Development of a Mango-Grading and -Sorting System Based on External Features, Using Machine Learning Algorithms. Agronomy 2024, 14, 831.

- Yogesh; Dubey, A.K.; Ratan, R. Development of Feature Based Classification of Fruit using Deep Learning. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 3285–3290.

- Gill, H.S.; Khehra, B.S. Efficient image classification technique for weather degraded fruit images. IET Image Process. 2020, 14, 3463–3470.

- Wang, S.; Zhang, Y.; Ji, G.; Yang, J.; Wu, J.; Wei, L. Fruit Classification by Wavelet-Entropy and Feedforward Neural Network Trained by Fitness-Scaled Chaotic ABC and Biogeography-Based Optimization. Entropy 2015, 17, 5711–5728.

- Chen, Y.; Sun, H.; Zhou, G.; Peng, B. Fruit Classification Model Based on Residual Filtering Network for Smart Community Robot. Wirel. Commun. Mob. Comput. 2021, 2021, 5541665.

- Gom-Os, D.F.K. Fruit Classification using Colorized Depth Images. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 01405106.

- Militante, S.V. Fruit Grading of Garcinia Binucao (Batuan) using Image Processing. Int. J. Recent Technol. Eng. 2019, 8, 1828–1832.

- Gill, H.S.; Khalaf, O.I.; Alotaibi, Y.; Alghamdi, S.; Alassery, F. Fruit Image Classification Using Deep Learning. Comput. Mater. Contin. 2022, 71, 5135–5150.

- Siddiqi, R. Fruit-classification model resilience under adversarial attack. SN Appl. Sci. 2022, 4, 1–22.

- Mimma, N.-E.; Ahmed, S.; Rahman, T.; Khan, R. Fruits Classification and Detection Application Using Deep Learning. Sci. Program. 2022, 2022, 1–16.

- Hayat, A.; Morgado-Dias, F.; Choudhury, T.; Singh, T.P.; Kotecha, K. FruitVision: A deep learning based automatic fruit grading system. Open Agric. 2024, 9, 20220276.

- Hartley, Z.K.; Jackson, A.S.; Pound, M.; French, A.P. GANana: Unsupervised Domain Adaptation for Volumetric Regression of Fruit. Plant Phenomics 2021, 2021, 9874597.

- Fu, Y.; Nguyen, M.; Yan, W.Q. Grading Methods for Fruit Freshness Based on Deep Learning. SN Comput. Sci. 2022, 3, 1–13.

- Hu, Y.; Chang, J.; Li, Y.; Zhang, W.; Lai, X.; Mu, Q. High Zoom Ratio Foveated Snapshot Hyperspectral Imaging for Fruit Pest Monitoring. J. Spectrosc. 2023, 2023, 1–13.

- Al-Saif, A.M.; Abdel-Sattar, M.; Aboukarima, A.M.; Eshra, D.H. Identification of Indian jujube varieties cultivated in Saudi Arabia using an artificial neural network. Saudi J. Biol. Sci. 2021, 28, 5765–5772.

- Faisal, M.; Alsulaiman, M.; Arafah, M.; Mekhtiche, M.A. IHDS: Intelligent Harvesting Decision System for Date Fruit Based on Maturity Stage Using Deep Learning and Computer Vision. IEEE Access 2020, 8, 167985–167997.

- Pushpavalli, M. Image Processing Technique for Fruit Grading. Int. J. Eng. Adv. Technol. 2019, 8, 3894–3897.

- Mukhiddinov, M.; Muminov, A.; Cho, J. Improved Classification Approach for Fruits and Vegetables Freshness Based on Deep Learning. Sensors 2022, 22, 8192.

- Noutfia, Y.; Ropelewska, E. Innovative Models Built Based on Image Textures Using Traditional Machine Learning Algorithms for Distinguishing Different Varieties of Moroccan Date Palm Fruit (Phoenix dactylifera L.). Agriculture 2022, 13, 26.

- Azadnia, R.; Fouladi, S.; Jahanbakhshi, A. Intelligent detection and waste control of hawthorn fruit based on ripening level using machine vision system and deep learning techniques. Results Eng. 2023, 17, 100891.

- Rezaei, P.; Hemmat, A.; Shahpari, N.; Mireei, S.A. Machine vision-based algorithms to detect sunburn pomegranate for use in a sorting machine. Measurement 2024, 232, 114682.

- Wakchaure, G.; Nikam, S.B.; Barge, K.R.; Kumar, S.; Meena, K.K.; Nagalkar, V.J.; Choudhari, J.; Kad, V.; Reddy, K. Maturity stages detection prototype device for classifying custard apple (Annona squamosa L.) fruit using image processing approach. Smart Agric. Technol. 2023, 7, 100394.

- Meshram, V.A.; Patil, K.; Ramteke, S.D. MNet: A Framework to Reduce Fruit Image Misclassification. Ing. Des Syst. D’Inf. 2021, 26, 159–170.

- Khan, R.; Debnath, R. Multi Class Fruit Classification Using Efficient Object Detection and Recognition Techniques. Int. J. Image Graph. Signal Process. 2019, 11, 1–18.

- Kumar, R.A.; Rajpurohit, V.S.; Bidari, K.Y. Multi Class Grading and Quality Assessment of Pomegranate Fruits Based on Physical and Visual Parameters. Int. J. Fruit Sci. 2018, 19, 372–396.

- Aldakhil, L.A.; Almutairi, A.A. Multi-Fruit Classification and Grading Using a Same-Domain Transfer Learning Approach. IEEE Access 2024, 12, 44960–44971.

- Shurygin, B.; Smirnov, I.; Chilikin, A.; Khort, D.; Kutyrev, A.; Zhukovskaya, S.; Solovchenko, A. Mutual Augmentation of Spectral Sensing and Machine Learning for Non-Invasive Detection of Apple Fruit Damages. Horticulturae 2022, 8, 1111.

- Ponce, J.M.; Aquino, A.; Millán, B.; Andújar, J.M. Olive-Fruit Mass and Size Estimation Using Image Analysis and Feature Modeling. Sensors 2018, 18, 2930.

- Ponce, J.M.; Aquino, A.; Andujar, J.M. Olive-Fruit Variety Classification by Means of Image Processing and Convolutional Neural Networks. IEEE Access 2019, 7, 147629–147641.

- Lopez-Ruiz, N.; Granados-Ortega, F.; Carvajal, M.A.; Martinez-Olmos, A. Portable multispectral imaging system based on Raspberry Pi. Sens. Rev. 2017, 37, 322–329.

- Ismail, N.; Malik, O.A. Real-time Visual Inspection System for Grading Fruits using Computer Vision and Deep Learning Techniques. Inf. Process. Agric. 2021, 9, 24–37.

- Sajitha, P.; Andrushia, A.D.; Mostafa, N.; Shdefat, A.Y.; Suni, S.; Anand, N. Smart farming application using knowledge embedded-graph convolutional neural network (KEGCNN) for banana quality detection. J. Agric. Food Res. 2023, 14, 100767.

- Ma, H.; Chen, M.; Zhang, J. Study on the Fruit Grading Recognition System Based on Machine Vision. Adv. J. Food Sci. Technol. 2015, 8, 777–780.

- Tran, V.L.; Doan, T.N.C.; Ferrero, F.; Le Huy, T.; Le-Thanh, N. The Novel Combination of Nano Vector Network Analyzer and Machine Learning for Fruit Identification and Ripeness Grading. Sensors 2023, 23, 952.

- Hayajneh, A.M.; Batayneh, S.; Alzoubi, E.; Alwedyan, M. TinyML Olive Fruit Variety Classification by Means of Convolutional Neural Networks on IoT Edge Devices. Agriengineering 2023, 5, 2266–2283.

- Rocha, A.; Hauagge, D.C.; Wainer, J.; Goldenstein, S. Automatic fruit and vegetable classification from images. Comput. Electron. Agric. 2009, 70, 96–104.

- Prabira, K.; Sethy, B.S.; Pandey, C. Mango Variety, 2023 [Online]. Available online: https://data.mendeley.com/datasets/tk6d98f87d/2 (accessed on 27 September 2024).

- Mureşan, H.; Oltean, M. Fruit recognition from images using deep learning. Acta Univ. Sapientiae Inform. 2018, 10, 26–42.

- Zarouit, Y.; Zekkouri, H.; Ouhda, M.; Aksasse, B. Date fruit detection dataset for automatic harvesting. Data Brief 2023, 52, 109876.

- Marko, S. Automatic Fruit Recognition Using Computer Vision. Mentor, Matej Kristan, Fakulteta Racunalnistvo Informatiko, Univerza Ljubljani, 2013. Available online: https://www.vicos.si/resources/fids30/ (accessed on 12 February 2025).

- Meshram, V.; Patil, K. FruitNet: Indian fruits image dataset with quality for machine learning applications. Data Brief 2021, 40, 107686.

- Li, G.; Holguin, G.; Park, J.; Lehman, B.; Hull, L.; Jones, V. The CASC IFW Database. Available online: https://engineering.purdue.edu/RVL/Database/IFW/index.html (accessed on 10 November 2024).

- Priya, P.S.; Jyoshna, N.; Amaraneni, S.; Swamy, J. Real time fruits quality detection with the help of artificial intelligence. Mater. Today Proc. 2020, 33, 4900–4906.

- Saldaña, E.; Siche, R.; Luján, M.; Quevedo, R. Review: Computer vision applied to the inspection and quality control of fruits and vegetables. Braz. J. Food Technol. 2013, 16, 254–272.

- Zhang, B.; Gu, B.; Tian, G.; Zhou, J.; Huang, J.; Xiong, Y. Challenges and solutions of optical-based nondestructive quality inspection for robotic fruit and vegetable grading systems: A technical review. Trends Food Sci. Technol. 2018, 81, 213–231.

- Naranjo-Torres, J.; Mora, M.; Hernández-García, R.; Barrientos, R.J.; Fredes, C.; Valenzuela, A. A Review of Convolutional Neural Network Applied to Fruit Image Processing. Appl. Sci. 2020, 10, 3443.

| Inclusion/Exclusion | Criterion | Description |
|---|---|---|
| Inclusion criteria | IC1 | Studies that address inspection, classification, or defect detection in fruit using artificial vision or image processing. |
| | IC2 | Open-access articles. |
| | IC3 | Articles published between 2015 and 2024. |
| | IC4 | Articles written in English. |
| | IC5 | Works published as scientific articles in journals (“journal articles”). |
| | IC6 | Empirical studies presenting algorithms, image-processing techniques, hardware configurations, or evaluations of characteristics relevant to fruit quality. |
| Exclusion criteria | EC1 | Studies that do not address fruit inspection, grading, or quality, or that focus on other agricultural applications with no direct relation to fruit quality control, even if the search terms appear in the title, abstract, or keywords. |
| | EC2 | Literature reviews, conference papers, abstracts, letters to the editor, theses, technical reports, patents, or other documents that are not original scientific articles. |
| | EC3 | Articles written in languages other than English. |
| | EC4 | Articles published before 2015. |
| | EC5 | Papers whose full text is not available for review. |

| Article | Year | Fruit Type | Objective | Camera Type/Lighting Source | Feature Type | Classification Method | Algorithms Used and Compared | Target |

|---|---|---|---|---|---|---|---|---|

| [14] | 2018 | Oranges | Varieties | GigE industrial camera /RGB/controlled artificial | Texture, Color, Shape | ANN and metaheuristic | Custom ANN | Fruit-processing industries |

| [15] | 2022 | Multiple fruits | Fruit classes | RGB/ambient lighting | Shape, Texture, Color | RNN | Adam with DenseNet169 | Retail |

| [16] | 2023 | Multiple fruits | Fruit classes | RGB/ambient lighting | Color, Shape, Texture | CNN | VGG-16 with Spiral Optimization | Retail |

| [17] | 2024 | Mango | Varieties | Smartphone/ambient lighting | Deep Features | Cubic SVM | MobileNet-v2 | Retail |

| [18] | 2023 | Multiple fruits | Fruit classes | RGB/ambient lighting | Size, Shape | CNN | YOLOv5 | Fruit-processing industries |

| [19] | 2019 | Apple | Fruit classes | RGB/ambient lighting | Color, Shape, Size | CNN | ResNet50 | Fruit-processing industries |

| [20] | 2019 | Olive | Varieties | 24Mpx CCD/HSV/controlled artificial | Size, Mass | - | Linear Regression methods | Fruit-processing industries |

| [21] | 2024 | Banana | Ripeness | RGB/ambient lighting | Color, Texture | CNN | ResNet 34, ResNet 101, VGG16, VGG19 | Fruit-processing industries |

| [22] | 2015 | Apple | Size classification | RGB/controlled artificial | Mass, Size | Fuzzy neural network | ANFIS + Linear Regression methods | Fruit-processing industries |

| [23] | 2023 | Blackberry | Ripeness | Multispectral/ambient lighting | Ripeness Stage | CNN | Custom CNN, ResNet50 | Orchard |

| [24] | 2018 | Oil palm fruit | Ripeness | RGB/ambient lighting | Color, Texture, Size | Fuzzy Logic Method | Fuzzy Logic Method | Orchard |

| [25] | 2024 | Chili | Ripeness | Smartphone/ambient lighting | Color, Texture, Size | CNN | EfficientNetB0, VGG16, ResNet50 | Fruit-processing industries |

| [26] | 2016 | Kiwifruit | Shape | RGB/ambient lighting | Shape, Size | - | Linear Regression methods | Fruit-processing industries |

| [27] | 2023 | Starfruit | Ripeness | Smartphone/controlled artificial | Color Space Model | LDA, KNN, SVM | Linear Discriminant Analysis (LDA), Linear Support Vector Machine, Quadratic SVM, Fine KNN, Subspace Discriminant Analysis | Fruit-processing industries |

| [28] | 2019 | Date fruit | Ripeness | RGB/ambient lighting | Color, Texture, Size | CNN | AlexNet, VGG16 | Orchard |

| [29] | 2020 | Date fruit | Shape | RGB/ambient lighting | Color, Shape | Vision-Based Algorithms | - | Fruit-processing industries |

| [30] | 2020 | Date fruit | Ripeness | RGB/ambient lighting | Texture, Color, Shape | CNN, SVM | ResNet, VGG-19, Inception-V3, NASNet, SVM | Orchard |

| [31] | 2021 | Multiple fruits | Fruit classes | RGB/ambient lighting | Color, Shape, Texture | CNN | VGG-19 + Pyramid histogram of oriented gradient (PHOG) | Retail |

| [32] | 2024 | Multiple fruits | Fruit classes | RGB/ambient lighting | Texture, Shape, Color | ANN, CNN | Custom ANN, AlexNet, Squeezenet, GoogLeNet, ResNet50 | Orchard |

| [33] | 2020 | Carrot | Shape | RGB/controlled artificial | Length, Diameter, Shape | Vision-Based Algorithms | - | Fruit-processing industries |

| [34] | 2023 | Cerasus Humilis | Quality Defects | Hyperspectral/Controlled artificial | Defects | LS-SVM | Least Squares–Support Vector Machine | Fruit-processing industries |

| [35] | 2024 | Shaanxi Plum | Fruit classes | RGB/ambient lighting | Variety, Wax Bloom | CNN | RetinaNet, Faster R-CNN, YOLOv3, YOLOv5, YOLOv7 | Fruit-processing industries |

| [36] | 2024 | Mango | Shape | HSV/controlled artificial | Shape, Surface Defects | KNN, DT, RF, ADB, XGB, GB, ET, SVM. | XGBoost, Random Forest, Extra Tree Classifier, Gradient Boosting, SVM, Adaboost, Decision Tree, KNN | Fruit-processing industries |

| [37] | 2019 | Multiple fruits | Fruit classes | RGB/ambient lighting | Shape, Texture | CNN | Alexnet, GoogLeNet | Retail |

| [38] | 2020 | Multiple fruits | Fruit classes | RGB/ambient lighting | Contrast Enhanced Features | CNN + RNN | Custom CNN | Orchard |

| [39] | 2015 | Multiple fruits | Fruit classes | RGB/ambient lighting | Wavelet-Entropy | FNN | Feed-Forward Neural Network | Retail |

| [40] | 2021 | Multiple fruits | Fruit classes | RGB/ambient lighting | Residual Features | SVM | SVM, DT, Forest, KNN. | Retail |

| [41] | 2023 | Multiple fruits | Fruit classes | RGB/controlled artificial | Adversarial Robust Features | CNN | AlexNet, GoogLeNet, ResNet101, VGG16 | Retail |

| [42] | 2019 | Batuan | Quality Defects | RGB/ambient lighting | Depth, Shape | SVM | SVM | Fruit-processing industries |

| [43] | 2022 | Apple | Fruit classes | RGB/ambient lighting | Color, Size | CNN + RNN + LSTM | CNN + RNN + LSTM | Orchard |

| [44] | 2022 | Multiple fruits | Fruit classes | RGB/ambient lighting | Enhanced Features | CNN | IndusNet, VGG16, MobileNet | Retail |

| [45] | 2022 | Multiple fruits | Fruit classes | RGB/ambient lighting | Shape, Color | CNN | YOLOv7, ResNet50, VGG16 | Retail |

| [46] | 2024 | Multiple fruits | Fruit classes | RGB/ambient lighting | Texture, Size, Color | CNN | FruitVision-(MobileNetV3, VGG19, ResNet50, Resnet101, DenseNet121, DenseNet201, InceptionV3, NASNetMobile) | Retail |

| [47] | 2021 | Banana | 3D Reconstruction | RGB/mixed | 3D Volumetric Features | GAN | GAN | Retail |

| [48] | 2022 | Multiple fruits | Ripeness | RGB/ambient lighting | Color, Texture | CNN | AlexNet, VGG, GoogLeNet, ResNet | Retail |

| [49] | 2023 | Grapes | Pest monitoring | Hyperspectral/Controlled artificial | Hyperspectral Features | Spectral Analysis | Spectral Analysis | Orchard |

| [50] | 2021 | Indian Jujube | Varieties | RGB/ambient lighting | Morphological, Color | ANN | Custom ANN | Fruit-processing industries |

| [51] | 2020 | Date fruit | Ripeness | RGB/ambient lighting | Maturity Indicators | CNN | VGG-19, NASNet, Inception-V3 | Orchard |

| [52] | 2019 | Mango | Quality Defects | RGB/Controlled artificial | Color, Size | KNN | KNN | Fruit-processing industries |

| [53] | 2022 | Multiple fruits | Ripeness | RGB/ambient lighting | Freshness Attributes | CNN | YOLOv4 | Retail |

| [54] | 2023 | Date fruit | Varieties | RGB/Controlled artificial | Texture Features | SMO, Naive Bayes, IBk, LogitBoost, LMT | SMO, Naive Bayes, IBk, LogitBoost, LMT | Fruit-processing industries |

| [55] | 2023 | Hawthorn Fruit | Ripeness | RGB/Controlled artificial | Color, Ripeness | CNN | Custom CNN, Inception-V3, ResNet50 | Fruit-processing industries |

| [56] | 2024 | Pomegranate | Quality Defects | RGB/Controlled artificial | Sunburn Features | ANN, SVM | ANN, SVM | Fruit-processing industries |

| [57] | 2024 | Custard apples | Ripeness | RGB/ambient lighting | Color, Areole Opening | SVM, KNN | SVM, K-Means | Fruit-processing industries |

| [58] | 2021 | Multiple fruits | Varieties | RGB/Mixed | Enhanced Features | CNN | Inception-V3 | Retail |

| [59] | 2019 | Multiple fruits | Object detection | RGB/Mixed | Shape, Color | CNN | Custom CNN | Retail |

| [60] | 2019 | Pomegranate | Size classification | RGB/ambient lighting | Weight, Size | ANN | Custom ANN | Fruit-processing industries |

| [61] | 2024 | Multiple fruits | Varieties | RGB/Mixed | Visual and Textural | CNN | EfficientNetV2 | Retail |

| [62] | 2022 | Apple | Quality Defects | Hyperspectral/Controlled Artificial | Spectral and Spatial | RF | Random Forest | Fruit-processing industries |

| [63] | 2018 | Olive | Size classification | RGB/Controlled Artificial | Size, Mass | - | Linear Regression methods | Fruit-processing industries |

| [64] | 2019 | Olive | Varieties | RGB/Controlled Artificial | Variety | CNN | Inception-ResNetV2 (AlexNet, InceptionV1, InceptionV3, ResNet-50, ResNet-101) | Fruit-processing industries |

| [65] | 2017 | Apple | Pesticide monitoring | Multispectral/Controlled Artificial | Spectral Features | Vision-Based Algorithms | Threshold + Histogram analysis | Orchard |

| [66] | 2022 | Apple | Ripeness | RGB/Controlled Artificial | Appearance, Freshness | CNN | ResNet, DenseNet, MobileNetV2, NASNet, EfficientNet | Fruit-processing industries |

| [67] | 2023 | Banana | Quality Defects | RGB/ambient lighting | Color, Texture, Size | CNN | KEGCNN (Knowledge Embedded-Graph CNN) | Fruit-processing industries |

| [68] | 2015 | Multiple fruits | Fruit classes | RGB/ambient lighting | Signal Parameters (S11, S21) | KNN, ANN | KNN, ANN | Fruit-processing industries |

| [69] | 2023 | Multiple fruits | Fruit classes | | Variety | CNN | Custom CNN | Retail |

| [70] | 2023 | Olive | Varieties | RGB/ambient lighting | Variety | CNN | TinyML approach | Fruit-processing industries |
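
The entry for [65] relies on a classical, non-learned pipeline ("Threshold + Histogram analysis") rather than a trained model. The table does not say which thresholding rule was used, so the sketch below swaps in Otsu's method as one common choice: it scans a grey-level histogram and keeps the threshold that maximises the between-class variance. The helper names and the synthetic bimodal data are hypothetical and serve only to illustrate the idea.

```python
from typing import List, Sequence

# Hypothetical helpers illustrating histogram-based thresholding (Otsu's method).


def histogram(pixels: Sequence[int], levels: int = 256) -> List[int]:
    """Count how many pixels fall at each grey level (0 .. levels-1)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    return hist


def otsu_threshold(hist: Sequence[int]) -> int:
    """Return the grey level t that maximises between-class variance.

    Pixels with value <= t form class 0 (background), the rest class 1.
    """
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    w0 = 0          # pixel count in class 0 so far
    sum0 = 0.0      # intensity sum of class 0 so far
    best_t, best_var = 0, -1.0
    for t, count in enumerate(hist):
        w0 += count
        sum0 += t * count
        w1 = total - w0
        if w0 == 0:
            continue        # class 0 is still empty
        if w1 == 0:
            break           # class 1 is empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance, up to the constant factor 1 / total**2
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def binarize(pixels: Sequence[int], t: int) -> List[int]:
    """Map each pixel to 1 (above the threshold) or 0 (at or below it)."""
    return [1 if p > t else 0 for p in pixels]


# Synthetic bimodal "image": a dark background and a brighter region.
pixels = [50] * 100 + [200] * 100
t = otsu_threshold(histogram(pixels))
mask = binarize(pixels, t)
print(t, sum(mask))  # prints: 50 100
```

The histogram step is what makes the method cheap: the threshold search runs over at most 256 grey levels, independent of image size, which suits simple inspection hardware.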

| Reference | Dataset Name | Images Used | Dataset Reference |
|---|---|---|---|
| [15,16] | Supermarket produce | 2633 | [71] |
| [17] | Mango Variety | 1853 | [72] |
| [19] | Fruits 360 | 8271 | [73] |
| [30] | Date fruit dataset for automated harvesting and visual yield estimation | 8079 | [74] |
| [31] | Fruits 360 | 65,429 | [73] |
| [32] | Fruits 360 | 8072 | [73] |
| [40] | Fruits 360 | 22,688 | [73] |
| [45] | FIDS-30 | 971 | [75] |
| [46] | FruitNet | 19,526 | [76] |
| [51] | Date fruit dataset for automated harvesting and visual yield estimation | 8079 | [74] |
| [61] | FruitNet | 14,700 | [76] |
| [66] | Internal feeding-worm database of the comprehensive automation for specialty crops | 8791 | [77] |

| Capture Device | Share of Studies | Reference |
|---|---|---|
| RGB Camera | 84% | [14,15,16,18,19,20,21,22,24,26,28,29,30,31,32,33,35,36,37,38,39,40,41,42,43,44,45,46,47,48,50,51,52,53,54,55,56,57,58,59,60,61,63,64,66,67,70] |
| Hyperspectral | 5% | [34,49,62] |
| Smartphone | 5% | [17,27] |
| Multispectral | 4% | [23,65] |
| Radio frequency | 2% | [69] |
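
Most studies capture RGB images, and colour appears among the features of many entries in the main table; one study ([36]) works in HSV. As a hedged illustration, not the method of any reviewed study, a colour feature such as the mean hue of a fruit region can be computed with Python's standard `colorsys` module. Because hue is an angle, the sketch averages it on the circle: for two reddish pixels at about 357.6° and 2.4°, a plain arithmetic mean would give 180° (cyan). The function names and pixel values are hypothetical.

```python
import colorsys
import math
from typing import Iterable, Tuple

# Hypothetical helpers: a circular mean-hue colour feature for RGB pixels.


def mean_hue_deg(pixels: Iterable[Tuple[int, int, int]]) -> float:
    """Circular mean hue, in degrees [0, 360), of 8-bit (R, G, B) pixels.

    Each pixel's hue is mapped to a unit vector on the colour wheel; the
    direction of the summed vector is the mean hue.
    """
    sx = sy = 0.0
    n = 0
    for r, g, b in pixels:
        h, _s, _v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)  # h in [0, 1)
        angle = 2 * math.pi * h
        sx += math.cos(angle)
        sy += math.sin(angle)
        n += 1
    if n == 0:
        raise ValueError("no pixels given")
    return math.degrees(math.atan2(sy, sx)) % 360


def hue_distance_deg(a: float, b: float) -> float:
    """Shortest angular distance between two hues, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


# Two reddish pixels on either side of 0 degrees (hypothetical data).
reds = [(255, 0, 10), (255, 10, 0)]
print(round(hue_distance_deg(mean_hue_deg(reds), 0.0), 3))  # prints: 0.0
```

Comparing hues with `hue_distance_deg` rather than plain subtraction keeps the wrap-around at 0°/360° from distorting ripeness or defect thresholds for red fruit.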

| Classification Model | Accuracy of Classification (%) | Precision of Validation (%) |
|---|---|---|
| Linear Discriminant Analysis (LDA) | 96.2 | 93.3 |
| Linear SVM | 88.7 | 86.7 |
| Quadratic SVM | 90.3 | 86.7 |
| Fine KNN | 94.3 | 93.3 |
| Subspace Discriminant Analysis (SDA) | 90.4 | 86.7 |
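
The classifier names above (Linear SVM, Quadratic SVM, Fine KNN, Subspace Discriminant) match the presets of MATLAB's Classification Learner app, where, as far as we know, Fine KNN is a nearest-neighbour classifier with a single neighbour and Euclidean distance. The table does not confirm that the study used this tool, so treat that as an assumption. The sketch below shows the underlying k-nearest-neighbour rule in plain Python; the mean-RGB features and the "ripe"/"unripe" labels are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# Hypothetical sample type: (feature vector, class label).
Sample = Tuple[Sequence[float], str]


def knn_predict(train: List[Sample], query: Sequence[float], k: int = 1) -> str:
    """Label `query` by majority vote among its k nearest training samples.

    Distance is Euclidean; k = 1 corresponds to a "fine" KNN setting.
    Vote ties go to the label of the nearest sample among those tied,
    because Counter.most_common keeps first-encountered order on ties.
    """
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of samples")
    nearest = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical mean (R, G, B) values of segmented fruit regions.
train = [
    ((200.0, 60.0, 40.0), "ripe"),
    ((190.0, 70.0, 50.0), "ripe"),
    ((90.0, 160.0, 60.0), "unripe"),
    ((100.0, 150.0, 70.0), "unripe"),
]
print(knn_predict(train, (185.0, 80.0, 45.0)))  # prints: ripe
```

With k = 1 the decision boundary follows individual training samples closely, which can fit small, clean datasets well but is sensitive to mislabelled or noisy samples; larger k smooths the boundary.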

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Specificity (%) |
|---|---|---|---|---|---|
| VGG19 | 97.54 ± 0.17 | 97.25 ± 0.34 | 96.85 ± 0.21 | 97.05 ± 0.48 | 96.12 ± 0.21 |
| ResNet50 | 96.21 ± 0.62 | 96.42 ± 0.27 | 96.05 ± 0.68 | 96.23 ± 0.30 | 95.74 ± 0.38 |
| ResNet101 | 98.30 ± 0.04 | 97.66 ± 0.31 | 96.94 ± 0.37 | 97.30 ± 0.49 | 95.76 ± 0.62 |
| DenseNet121 | 98.42 ± 0.33 | 97.21 ± 0.28 | 97.14 ± 0.24 | 97.17 ± 0.33 | 96.27 ± 0.84 |
| DenseNet201 | 98.84 ± 0.28 | 98.35 ± 0.45 | 97.51 ± 0.29 | 97.93 ± 0.67 | 97.10 ± 0.36 |
| MobileNetV3 | 97.24 ± 0.43 | 96.82 ± 0.36 | 96.83 ± 0.47 | 96.82 ± 0.31 | 96.88 ± 0.22 |
| InceptionV3 | 94.11 ± 0.57 | 94.21 ± 0.65 | 93.64 ± 0.62 | 93.92 ± 0.57 | 93.27 ± 0.26 |
| NASNetMobile | 96.74 ± 0.27 | 96.72 ± 0.38 | 96.25 ± 0.34 | 96.48 ± 0.63 | 96.38 ± 0.65 |
| FruitVision (Proposed) | 99.50 ± 0.20 | 99.19 ± 0.28 | 98.88 ± 0.74 | 99.03 ± 0.55 | 98.77 ± 0.34 |
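
The metric columns above follow the usual confusion-matrix definitions. The sketch below computes them for one class treated as positive (one-vs-rest); the table does not state how the multi-class scores were averaged, so this binary form is an assumption. It also checks that F1, the harmonic mean of precision and recall, reproduces the reported F1 from the mean precision and recall of two rows. Because the table reports values with ± spreads, the F1 of mean precision and mean recall need not equal the mean F1 exactly in general. The names `BinaryCounts`, `metrics`, and `f1_from_pr` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class BinaryCounts:
    """Confusion-matrix counts for one class treated as "positive"."""
    tp: int
    fp: int
    fn: int
    tn: int


def metrics(c: BinaryCounts) -> Dict[str, float]:
    """Accuracy, precision, recall, F1 and specificity as fractions in [0, 1].

    Assumes every denominator is non-zero.
    """
    total = c.tp + c.fp + c.fn + c.tn
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)  # also called sensitivity
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "specificity": c.tn / (c.tn + c.fp),
    }


def f1_from_pr(precision: float, recall: float) -> float:
    """F1 as the harmonic mean of precision and recall (same unit in and out)."""
    return 2 * precision * recall / (precision + recall)


# Consistency check against two rows of the table (mean values, in %).
print(round(f1_from_pr(99.19, 98.88), 2))  # prints 99.03; FruitVision reports 99.03
print(round(f1_from_pr(97.25, 96.85), 2))  # prints 97.05; VGG19 reports 97.05
```

Because F1 is a harmonic mean, it stays close to the smaller of precision and recall, so a model cannot reach a high F1 by maximising only one of the two.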

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rojas Santelices, I.; Cano, S.; Moreira, F.; Peña Fritz, Á. Artificial Vision Systems for Fruit Inspection and Classification: Systematic Literature Review. Sensors 2025, 25, 1524. https://doi.org/10.3390/s25051524
