Abstract

Existing unmanned aerial vehicle (UAV) highway distress detection (HDD) faces the dual challenges of accuracy and efficiency. This paper conducted a comparative study of the YOLO (You Only Look Once) series of algorithms in UAV-based HDD to provide a reference for model selection. YOLOv5-l and YOLOv9-c achieved the highest detection accuracy; YOLOv5-l performed well in mean and per-class detection precision and recall, while YOLOv9-c performed poorly in per-class precision and recall. In terms of detection efficiency, YOLOv10-n, YOLOv7-t, and YOLOv11-n achieved the highest levels, while YOLOv5-n, YOLOv8-n, and YOLOv10-n had the smallest model sizes. Notably, YOLOv11-n was the best-performing model in terms of combined detection efficiency, model size, and computational complexity, making it a promising candidate for embedded real-time HDD. YOLOv5-s and YOLOv11-s were found to balance detection accuracy and model lightweightness, although their efficiency was only average. When comparing the t/n and l/c versions, the changes in the backbone network of YOLOv9 had the greatest impact on detection accuracy, followed by the network depth_multiple and width_multiple of YOLOv5. The relative compression degrees of YOLOv5-n and YOLOv8-n were the highest, and YOLOv9-t achieved the greatest efficiency improvement in UAV HDD, followed by YOLOv10-n and YOLOv11-n.

1. Introduction

As a sensor-bearing platform, an unmanned aerial vehicle (UAV) offers flexible configurations and free shooting angles that can be adapted to the needs of different application scenarios [1]. The combined application of UAVs with deep learning algorithms has been a subject of considerable interest in infrastructure management. In the field of visual sensors for infrastructure anomaly detection, the YOLO (You Only Look Once) family has emerged as the most promising detection algorithm [2]. UAVs have been gradually applied to pavement inspection over the years [2,3,4,5,6,7,8,9] but have not been used on a large scale due to limits on flight range and battery life. In-vehicle cameras are still the mainstay of pavement distress and facility detection [10,11,12,13,14,15]. However, vehicle-mounted cameras often have problems such as a small field of view and the inability to cover the full cross-section, as shown in Figure 1. These problems may result in structural damage not being detected. Spatial intelligence technology based on UAV inspections provides practical technical support for the one-time detection of safety problems, including through-type cracks, large-area subsidence, and damage to retaining walls or slopes. However, UAV inspection still has problems such as restrictions on navigation routes [16], short working hours, and delays in data transmission, processing, and computational analysis.

Figure 1.

Comparison of image data shooting range. (a) Vehicle-mounted vision, self-collected; (b) UAV photography (15 m width), produced based on dataset [17].

The YOLO family of models has attracted considerable attention due to its real-time and efficient performance. Nano or small models can be deployed to end devices and applied to real-time detection. However, as the learning depth of the model decreases, both the detection accuracy and the complexity of the knowledge learned decrease significantly. Hou et al. [3] demonstrated that YOLOv3 exhibited superior detection efficiency and precision compared to Faster R-CNN. Ma et al. [4] enhanced the YOLOv3 algorithm with an accelerated module, resulting in a compact model that is 5–6 times faster than the original. The model was integrated with a smart inspection device and an automated UAV, allowing for the real-time detection and counting of cracks. Situ et al. [18] developed a 2.84 MB model by integrating the YOLOv5 model with transfer learning and channel pruning. Researchers have also made significant advances towards real-time target detection in the domain of fire detection. Huang et al. [19] proposed a YOLO-LNet model that surpasses the performance of YOLOv5s for forest fire detection. Computer-based tests demonstrated that the model exhibited acceptable detection accuracy, where the speed, computational quantity, and parameter size were 110 FPS (frames per second), 0.7 G FLOPs (floating point operations), and 0.26 M, respectively. The above model size and efficiency metrics for fire detection studies represent the requirements for real-time and embedded models for UAV aerial photography detection. Scenarios that require higher detection accuracy often cannot be balanced with efficiency. Guan et al. [20] proposed an enhanced YOLOv7-DRM model for UAV-based bridge defect detection and applied fine-tuning and knowledge distillation (KD) to reduce the model size. However, the model size was only reduced to 32.2 M, which is even larger than that of YOLOv5s.

For road distress detection, we proposed the YOLOv7-RDD model with a 6.04 M parameter size [13]; however, the model exhibited a high overhead in embedded scenarios, and its practical application was found to be challenging. The YOLOv7-RDD model was subsequently tested and compared using a publicly available UAV dataset containing four types of distress, achieving a higher level of performance than Faster R-CNN, YOLOv5s, and YOLOX-s [9]. The efficacy of PKD models is contingent upon the performance of the trained teacher model [21]. Zeng and Zhong [22] proposed YOLOv8-PD (pavement distress), achieving marginal improvements in accuracy and a significantly reduced parameter size and FLOPs. Zhang et al. proposed SMG-YOLOv8 [23], a model capable of precise pavement detection with a decreased model size, albeit at the cost of higher FLOPs. In a similar vein, Li et al. constructed an RDD-YOLOv8 [24] model based on YOLOv8, which exhibited enhanced detection accuracy and a marginal decline in model size and complexity. Zhu et al. [6] conducted a comprehensive and detailed analysis of the various parameters associated with UAV detection, including flight environment, flight altitude, image resolution, and shutter speed. With their dataset, UAPD (UAV asphalt pavement distress dataset), a mean average precision with an intersection over union above 0.5 (mAP@0.5) of 56.6% was achieved. However, the authors did not analyze the size or efficiency of the model. Moreover, they expanded the scope of UAV detection and constructed a dataset of multiple roadway anomalies (UAVROAD), achieving a mAP@0.5 of 61.9% and FLOPs of 7.9 G with an improved YOLOv8n [7].

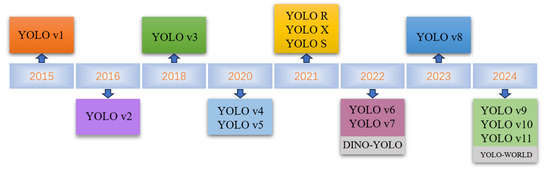

Since YOLOv1 [25], the YOLO series of object detection algorithms has been updated through to YOLOv11 [25,26,27,28,29,30,31,32,33,34,35], as shown in Figure 2. Model performance still faces significant challenges in few-sample target detection. The application of the YOLO series models to UAV highway inspection scenarios, and the role played by the improvement of each module, need to be systematically compared and discussed. Accordingly, this paper carried out comparative tests of YOLO series models of varying scales based on the UAV-PDD2023 dataset [17]. The improvements of each model version and its applicable scenarios were analyzed by systematically comparing the structure, detection mechanisms, and few-sample detection performance of the YOLO series.

Figure 2.

Evolution of the YOLO series of object detection algorithms.

2. Methods and Dataset

2.1. Comparison of YOLO Family

In terms of data augmentation and training strategies, the YOLO series models continue to innovate to improve detection capability. YOLOv3 [27] uses a multiscale prediction mechanism to improve the detection accuracy through different levels of feature fusion, but the model’s adaptability is weaker on low-shot data categories, which leads to a high missed detection rate. Both YOLOv5 [29] and YOLOv7 [30] employ a variety of advanced techniques in the training process, such as Mosaic data augmentation, adaptive anchor boxes, gradient clipping, and multi-scale training, to improve the training efficiency and detection accuracy of the models. In addition, YOLOv7 adopts mixed-precision training, which further reduces the training time and memory usage. Compared to YOLOv7, YOLOv8 [31] offers a wide range of pre-trained models and uses more advanced data augmentation techniques, for example, Mosaic 4.0 and MixUp 2.0. YOLOv9 [32] and YOLOv10 [33] both combine adaptive training strategies and online data augmentation techniques, which enable the model to better adapt to changing data distributions during training. YOLOv11 [34] combines dynamic data augmentation, distribution-balanced augmentation strategies, and semi-supervised learning strategies to further improve the model’s detection ability in complex scenes. YOLO-World [35] integrates a variety of state-of-the-art data augmentation methods, including Mosaic, MixUp, CutMix, random cropping, color jittering, and random rotation. These methods enhance the generalization ability of the model in different dimensions by diversely modifying the training samples.

For the backbone network, there are significant differences between different versions of the YOLO backbone network in the feature extraction and processing of complex scenes. YOLOv3 [27] uses Darknet−53 as the backbone network to capture multi-scale target features through deep convolutional layers, which is suitable for the detection task in simple scenes, but the performance is not good enough when dealing with low-shot data, and is prone to missing detection. YOLOv5 [29] adopts CSPDarknet, which is based on Darknet53 and improved by introducing the CSP (Cross Stage Partial connections) structure. The CSP structure enhances the feature representation by splitting the input feature map into two parts, one part is directly passed to the next layer, the other part undergoes convolution operation, and then the two parts of the feature map are added or spliced element by element. The YOLOv7’s [30] backbone network employs E-ELAN, an enhanced version of ELAN (Efficient Layer Aggregation Network) architecture. The primary function of E-ELAN is to enhance the efficacy and efficiency of the model by optimizing the aggregation of features and computational pathways. Moreover, YOLOv7 optimizes CSPNet based on YOLOv4 by reducing redundant computation and enhancing feature representation, which makes the model more adaptable when dealing with low-shot data and performs better in embedded and resource-constrained environments. YOLOv8 [31] employs the C2f (CSPDarknet53 to 2-Stage Feature Pyramid Network) module to enable more efficient residual learning and uses the Spatial Pyramid Pooling Fast (SPPF) module to facilitate the integration of key information from targets of various sizes by combining feature maps through pooling operations at different scales. YOLOv9 [32] employs the newly proposed Programmable Gradient Information PGI and the more efficient and versatile network Generalized ELAN, both of which together form a completely new network architecture. 
YOLOv10 [33] further optimizes the network architecture based on YOLOv9 by combining a lightweight convolutional neural network and a multi-scale feature fusion strategy, which enhances the accuracy and depth of the feature extraction, and results in a more robust and efficient detection of complex backgrounds and small-sample categories. YOLOv11 [34] adopts a feature extraction module based on Transformer, which can better capture globally dependent features than traditional convolutional neural networks and is especially suitable for handling complex scenes and long-distance-dependent features, which makes the model perform better in detecting low-shot data categories. YOLO-World [35] combines the visual Transformer and convolutional neural networks; through the hybrid feature extraction module, it achieves a more efficient feature expression capability when dealing with multi-targets and complex scenes, and especially performs well in low-shot data categories and small-target detection.

The improvement of the loss function is also an important factor in enhancing the detection performance of the YOLO series. YOLOv3 [27] uses the GIoU loss function to enhance the regression accuracy of the bounding box but suffers from the problem of inaccurate localization. YOLOv7 [30] introduces the CIoU loss function, which improves the localization accuracy by further taking into account the overlap of the bounding box, the distance from the centroid, and the scale consistency. YOLOv8 [31] uses the Wise-MPDIoU (Modified Panoptic Distance IoU) loss weighting mechanism, which dynamically adjusts the loss weights according to the importance of different targets and significantly improves the detection accuracy of the less-sample category. YOLOv9 [32] adopts an improved CIoU loss function and introduces a weighting strategy for the less-sample category to ensure that in the case of the bounding box, the model achieves stable detection results in the presence of imbalanced positioning accuracy and categories. Compared with the previous version, YOLOv9 has significantly improved the detection performance for rare targets. YOLOv10 [33] combines the GIoU and Focal loss functions to better balance the accuracy and stability in complex scenarios, especially when dealing with small targets and sparse categories. YOLOv11 [34] introduces an adaptive loss function mechanism, which dynamically adjusts the loss function according to the target features, enhancing the model’s performance in complex environments. YOLO-World [35] employs a hybrid loss function, including both IoU and classification loss, to ensure the accurate detection of complex scenarios and few-sample categories, while maintaining the robustness of the model.
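To make the difference between the IoU-based box losses named above concrete, the following is a minimal sketch of the standard IoU, GIoU, and CIoU definitions for two axis-aligned boxes. It is an illustration under the usual textbook formulas, not the implementation of any particular YOLO release; the function name `iou_family` and the `(x1, y1, x2, y2)` box layout are assumptions made for this example. The corresponding loss is one minus the returned value.

```python
import math


def iou_family(box_a, box_b):
    """Return (IoU, GIoU, CIoU) for two valid boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection and union areas.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union

    # Smallest box enclosing both inputs (used by GIoU and CIoU).
    enc_w = max(ax2, bx2) - min(ax1, bx1)
    enc_h = max(ay2, by2) - min(ay1, by1)
    enc_area = enc_w * enc_h
    giou = iou - (enc_area - union) / enc_area

    # CIoU adds a centre-distance term and an aspect-ratio term.
    centre_dist2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0
    enc_diag2 = enc_w ** 2 + enc_h ** 2
    v = (4.0 / math.pi ** 2) * (
        math.atan((bx2 - bx1) / (by2 - by1)) - math.atan((ax2 - ax1) / (ay2 - ay1))
    ) ** 2
    alpha = v / (1.0 - iou + v) if v > 0 else 0.0
    ciou = iou - centre_dist2 / enc_diag2 - alpha * v
    return iou, giou, ciou


iou, giou, ciou = iou_family((0, 0, 2, 2), (1, 1, 3, 3))
print(f"IoU={iou:.3f}  GIoU={giou:.3f}  CIoU={ciou:.3f}")
```

For two partially overlapping boxes, GIoU penalizes the empty area of the enclosing box, while CIoU penalizes the centre offset and any aspect-ratio mismatch, which is why the three values differ even though the overlap is the same.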

In terms of feature fusion and detection head processing, YOLOv3 generates multi-scale output by fusing features across layers. Instead of directly using a standard Feature Pyramid Network (FPN), it combines feature maps from different layers. The detection head consists of multiple convolutional layers and uses three convolutional layers of different scales to detect large, medium, and small objects. The neck structure of YOLOv5 mainly uses the FPN for feature fusion. It fuses feature maps of different scales through up-sampling and concatenation operations to enhance the detection of objects of different sizes. The main differences between the necks of YOLOv7 and YOLOv5 are that the SPPF is replaced with SPPCSP, the CSP module is replaced with the ELAN-W module, the downsampling becomes the MP-2 layer, and the Conv2d becomes the RepBlock + CBM. Both the YOLOv5 and YOLOv7 detection heads support a variety of improvement mechanisms. YOLOv8 fuses multi-scale feature map outputs from the backbone, using FPN and Path Aggregation Network (PAN) concepts to construct a feature pyramid. The head network of YOLOv8 employs decoupled detection heads, using two parallel convolution branches to compute the regression and classification losses separately. YOLOv9 is more flexible in feature fusion, and YOLOv10 and YOLOv11 introduce an enhanced feature fusion module and a multi-attention mechanism, respectively, which make the model perform more stably and efficiently in multi-target detection with few-sample categories and complex environments. YOLO-World, on the other hand, enables the model to better understand complex targets in complex environments through a global feature fusion strategy, especially for multi-target detection scenarios. The models and frameworks chosen for the comparison tests are shown in Table 1.

Table 1.

The framework composition for the comparison tests.

As illustrated in Table 1, certain frameworks (YOLOv5, YOLOv8, YOLOv11, and YOLO-world) compress their models predominantly through smaller depth and width multipliers, while the compressed versions of the other frameworks (YOLOv7, YOLOv9, and YOLOv10) also modify the backbone or neck network modules.
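As an illustration of compression by multipliers, the sketch below applies a depth multiplier to the repeat count of a block and a width multiplier to its channel count, following the scaling rule used in the public YOLOv5 code as far as we are aware (repeats rounded with a minimum of one, channels rounded up to a multiple of 8). The multiplier pairs are those of the public YOLOv5 n, s, and l configurations; the base layer of 9 repeats and 512 channels and the helper names are assumptions chosen for the example.

```python
import math


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scale_layer(base_repeats, base_channels, depth_multiple, width_multiple):
    """Scale one block's repeat count and output channels."""
    if base_repeats > 1:
        repeats = max(round(base_repeats * depth_multiple), 1)
    else:
        repeats = base_repeats
    channels = make_divisible(base_channels * width_multiple, 8)
    return repeats, channels


# (depth_multiple, width_multiple) for three YOLOv5 scales.
SCALES = {"n": (0.33, 0.25), "s": (0.33, 0.50), "l": (1.00, 1.00)}

for name, (depth, width) in SCALES.items():
    # Illustrative base layer: 9 repeats, 512 output channels.
    print(name, scale_layer(9, 512, depth, width))
```

Because the convolution cost grows roughly with the product of input and output channels, quartering the width alone reduces the parameters of such a layer by about a factor of 16, which is consistent with the large n-to-l size ratios discussed later.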

2.2. Dataset

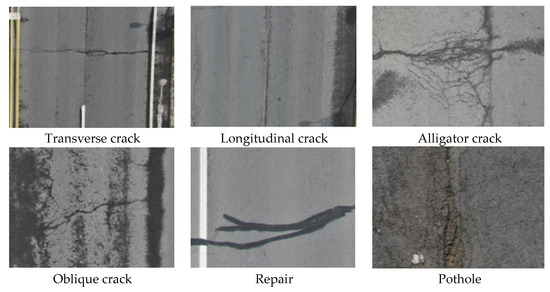

The UAV-PDD2023 [17] dataset consists of road pavement images taken during UAV inspections. It contains 2425 images in JPG format with corresponding annotation files in VOC format. The pavement distress images were collected during the day, and a wide variety of weather and lighting conditions were taken into account when capturing the images. The images are 2592 × 1944 pixels in size, derived from large-format slices of images taken with a 4K camera, with four images taken at each location (top–left, top–right, bottom–left, and bottom–right). Figure 3 illustrates samples of highway distress in the UAV-PDD2023 dataset. The dataset used in this paper includes six types of road distress: 603 alligator cracks (AC), 2992 longitudinal cracks (LC), 282 repairs (RP), 199 potholes (PH), 1686 oblique cracks (OC), and 5396 transverse cracks (TC), totaling 11,150 labels. The dataset is divided into training and validation sets in a ratio of 7:3. The validation set, which serves as the test set, includes 201 ACs, 852 LCs, 86 RPs, 60 PHs, 495 OCs, and 1627 TCs, totaling 3321 labels.
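The per-class label counts above can be used to check how evenly the 7:3 split distributes each distress type. The short sketch below computes, for each class, the share of labels that falls in the held-out set; the dictionary and function names are illustrative, and only the counts quoted in the text are used.

```python
# Label counts per class as quoted in the text.
TOTAL = {"AC": 603, "LC": 2992, "RP": 282, "PH": 199, "OC": 1686, "TC": 5396}
HELD_OUT = {"AC": 201, "LC": 852, "RP": 86, "PH": 60, "OC": 495, "TC": 1627}


def held_out_share(total, held_out):
    """Fraction of each class's labels that lies in the held-out set."""
    return {cls: held_out[cls] / total[cls] for cls in total}


shares = held_out_share(TOTAL, HELD_OUT)
for cls, share in shares.items():
    print(f"{cls}: {HELD_OUT[cls]:>4} of {TOTAL[cls]:>4} labels ({share:.1%})")
```

Every class stays close to the nominal 30% share, but the absolute numbers differ widely: potholes have only 60 held-out labels against 1627 for transverse cracks, so per-class metrics for the rare classes rest on far fewer samples.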

Figure 3.

Samples of highway distress in UAV-PDD 2023 dataset [17].

3. Experiment Setting and Evaluation Metrics

The experimental environment is based on Python 3.10.8, PyTorch 2.0.1 + cu117, and CUDA 11.7; the hardware comprises an AMD Ryzen 7 5800H processor with Radeon Graphics and an NVIDIA GeForce RTX 4090 GPU.

In this paper, the mean average precision (mAP) and F1 score were used to evaluate the model’s accuracy. Frames per second (FPS) was used to evaluate the detection efficiency. The number of parameters (Params) and the number of floating-point operations (FLOPs) were used to evaluate the model size and complexity. Moreover, the training time (TT) was used to assist in assessing the lightweight degree of the model. The related evaluation metrics are calculated according to Equations (1)–(5).
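In their standard form, and assuming that the usual definitions of precision (P), recall (R), F1, AP, and mAP are the ones intended here, these metrics can be written as:

```latex
\begin{align}
P   &= \frac{TP}{TP + FP} \\
R   &= \frac{TP}{TP + FN} \\
F1  &= \frac{2 \times P \times R}{P + R} \\
AP  &= \int_{0}^{1} P(R)\, \mathrm{d}R \\
mAP &= \frac{1}{N} \sum_{i=1}^{N} AP_i
\end{align}
```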

where TP denotes a positive sample correctly predicted as positive, FP denotes a negative sample wrongly predicted as positive, TN denotes a negative sample correctly predicted as negative, and FN denotes a positive sample wrongly predicted as negative; AP denotes the area under the precision–recall curve, and N denotes the number of detection categories; mAP@0.5 represents the mean AP over all detection classes when the IoU threshold is set to 0.5; mAP@0.5:0.95 represents the average of the mAP values computed at IoU thresholds from 0.5 to 0.95 in steps of 0.05.
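The accuracy metrics described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names are hypothetical, and the AP is computed with all-point interpolation of the precision–recall curve, which is one common convention; the individual YOLO frameworks may sample the curve differently.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


def average_precision(recalls, precisions):
    """Area under the precision-recall curve (all-point interpolation).

    recalls must be sorted in ascending order, with precisions aligned to it.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values (mAP)."""
    return sum(ap_per_class) / len(ap_per_class)


print(precision_recall_f1(tp=8, fp=2, fn=2))
print(average_precision([0.5, 1.0], [1.0, 0.5]))
```

To obtain mAP@0.5:0.95 under this sketch, the per-class AP would be recomputed with detections matched at each IoU threshold from 0.5 to 0.95 and the resulting mAP values averaged.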

The multidimensional evaluation metrics and their technical significance are summarized in Table 2.

Table 2.

Multidimensional evaluation metrics.

Moreover, the relative comparison metrics were calculated according to Equations (6)–(10).

4. Results and Discussion

4.1. Model Comparison Results

The performances of different scales and versions of the YOLO model on the UAV-PDD2023 dataset were compared. The evaluation metrics are shown in Table 3.

Table 3.

The evaluation results for the YOLO family.

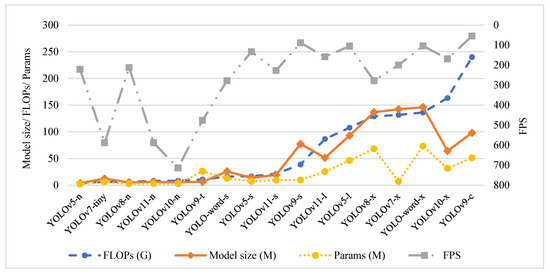

As can be seen from Table 3, YOLOv5-l and YOLOv9-c performed the best in detection accuracy: YOLOv5-l had a high mAP@0.5 of 89% and an F1 of 80.9%, while the second-placed YOLOv9-c had a mAP@0.5 of 82.2% and an F1 of 82%. Both models possessed a large number of parameters and high computational complexity. The inference efficiency of YOLOv9-c was the lowest among all models, followed by YOLOv9-s. In terms of model lightness, the YOLOv5-n, YOLOv8-n, and YOLOv10-n models demonstrated superior performance, with YOLOv10-n achieving the highest detection efficiency of up to 714 FPS, followed by YOLOv7-tiny and YOLOv11-n. It is particularly noteworthy that YOLOv5-s and YOLOv11-s presented some competitive advantages in terms of accuracy and lightweightness, indicating that they may be suitable as baseline models for compression and application in embedded platforms. However, they performed poorly in efficiency, with FPS values of 133.3 and 227.3, respectively. A line graph was plotted in order of computational size to further analyze the models’ ease of use and detection efficiency, as shown in Figure 4.
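For readers who think in terms of per-image latency rather than throughput, the FPS values quoted above convert directly, since latency in milliseconds is 1000 divided by FPS. The sketch below performs this conversion for three of the reported values; the function name is illustrative, and the figures refer to the desktop GPU used in this study, so embedded hardware would be expected to give different numbers.

```python
def latency_ms(fps):
    """Per-image inference time in milliseconds for a given throughput."""
    return 1000.0 / fps


# FPS values quoted in the text.
REPORTED_FPS = {"YOLOv10-n": 714.0, "YOLOv11-s": 227.3, "YOLOv5-s": 133.3}

for model, fps in REPORTED_FPS.items():
    print(f"{model}: {fps:6.1f} FPS = {latency_ms(fps):.1f} ms per image")
```

The reported values correspond to roughly 1.4 ms, 4.4 ms, and 7.5 ms per image, respectively.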

Figure 4.

Comparison of efficiency evaluation results.

As demonstrated in Figure 4, there was a strong correlation between the number of model parameters and the model size, with the notable exceptions of the small and nano-models of YOLOv7 and YOLOv9, which are due to the incorporation of specific modules. Models demonstrating both lightness and high efficiency included YOLOv10-n, YOLOv7-t, YOLOv11-n, and YOLOv9-t, which may be suitable for scenarios with embedded real-time monitoring requirements.

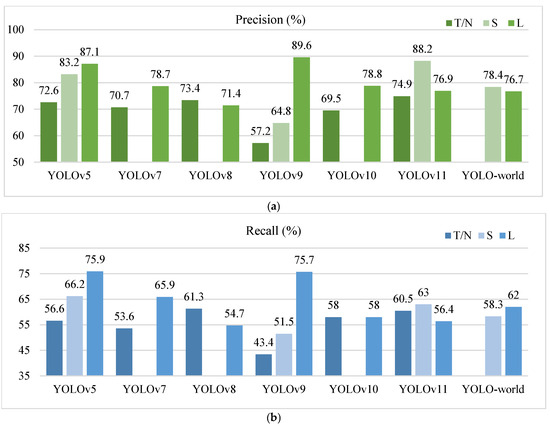

To further visually compare the performance of the models in terms of precision and recall, comparison graphs were plotted as shown in Figure 5.

Figure 5.

Comparison of precision and recall results. Note: nano and tiny (-n and -t) models are labelled as T/N; small-sized (-s) models are uniformly labelled as S, and large-sized (-c, -l, and -x) models are uniformly labelled as L.

As shown in Figure 5, YOLOv9-c and YOLOv5-l showed excellent performance in terms of precision and recall, indicating that both models had good detection capability and detection accuracy, and that these two frameworks can be prioritized for application scenarios pursuing high-precision detection. However, their corresponding T/N versions performed poorly. The high detection precision and detection rate of YOLOv11-s and YOLOv5-s, together with a certain degree of model compression, make them potentially amenable to further improvement and application in embedded scenarios for UAV inspections. YOLOv8-n and YOLOv11-n also achieved relatively high detection precision and recall which, coupled with their compact model sizes, makes them well suited to embedded applications that require high detection coverage. Among them, YOLOv11-n had the highest detection efficiency (FPS), which met the real-time requirements. Notably, YOLOv11-s exhibited superior detection accuracy and detection rate compared to YOLOv11-l, which may be because the targets in the dataset were either small or densely distributed. In such cases, the receptive field design of the -s version may be more appropriate. In contrast, the -l version might be overly complex to optimize, potentially affecting performance negatively.

4.2. Balance Evaluation and Application Scenarios Analysis

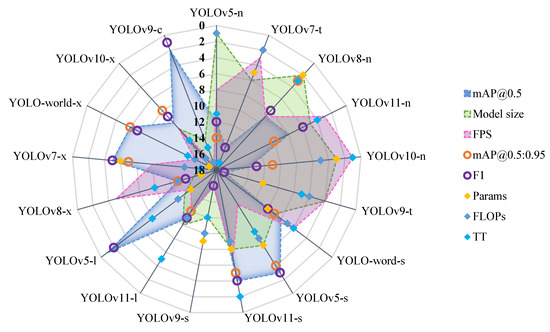

Based on the ranking of evaluation metrics (where 1 indicates optimal performance and 17 indicates the poorest performance), this study visualized model rankings through radar charts (Figure 6). Three core metrics were prioritized for comparative analysis: detection accuracy (mAP@0.5), detection efficiency (FPS), and model lightweight degree (model size).

Figure 6.

Comparison of efficiency evaluation results.

As shown in Figure 6, the models exhibited significant performance variations:

- (1) Single-Advantage Model Groups

YOLOv5-l, YOLOv9-c, YOLOv7-x, YOLOv5-s, and YOLO-world-x excelled in mAP@0.5 but lacked competitiveness in efficiency and lightweight metrics; YOLOv5-n and YOLOv8-n showed notable advantages in model size, while YOLOv8-n demonstrated moderate detection accuracy.

- (2) Dual-Advantage Balanced Group

YOLOv9-t, YOLOv11-n, and YOLOv7-t achieved a balance between model lightweightness and detection efficiency but exhibited relatively lower baseline accuracy.

- (3) Comprehensive Excellence Group

YOLOv11-s delivered outstanding performance across all three metrics: detection accuracy (mAP@0.5 = 73.6%), efficiency (FPS = 227.3), and lightweightness (model size = 19.2 M), achieving the best overall performance. YOLO-world-s showed significant advantages in detection efficiency (FPS = 277.8) and model compression (model size = 25.8 M), with its mAP@0.5 at 66%.

This visualization revealed the trade-offs in the “accuracy–efficiency–lightweight trilemma”, providing multidimensional decision-making insights for model selection in engineering applications. Based on the established minimum detection accuracy threshold (mAP@0.5 > 60%), the efficiency-optimized benchmarks YOLOv10-n and YOLOv11-n warrant comprehensive validation for real-time vehicular/UAV detection systems. Conversely, the high-precision models YOLOv5-l and YOLOv9-c demonstrated particular suitability for security surveillance and industrial quality inspection scenarios. A further investigation into cross-environment generalization and multi-scale detection capability needs to be conducted. Notably, YOLOv11-s emerged as a balanced solution with competitive accuracy, efficient throughput, and compact architecture. This configuration presents strong potential for embedded deployment, particularly in edge computing devices and mobile systems.
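The rank-based comparison behind the radar charts can be sketched as follows: each metric is converted to a rank, with 1 as the best, taking into account whether a higher or a lower value is better. The sketch uses only the values quoted above for the two models in the comprehensive excellence group; the function and variable names are illustrative, and ties are not handled.

```python
def rank(values, higher_is_better):
    """Map each model name to its rank for one metric (1 = best)."""
    ordered = sorted(values, key=values.get, reverse=higher_is_better)
    return {name: position + 1 for position, name in enumerate(ordered)}


# Values quoted in the text for two models.
MAP50 = {"YOLOv11-s": 73.6, "YOLO-world-s": 66.0}   # percent, higher is better
FPS = {"YOLOv11-s": 227.3, "YOLO-world-s": 277.8}   # higher is better
SIZE_M = {"YOLOv11-s": 19.2, "YOLO-world-s": 25.8}  # lower is better

ranks = {
    "mAP@0.5": rank(MAP50, higher_is_better=True),
    "FPS": rank(FPS, higher_is_better=True),
    "model size": rank(SIZE_M, higher_is_better=False),
}

for metric, by_model in ranks.items():
    print(metric, by_model)
```

Within this pair, YOLOv11-s ranks first on accuracy and model size and second on FPS. Extending the dictionaries to all 17 models would reproduce the 1 to 17 ranking scale used in the charts.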

4.3. Model Comparison Between Different Sized Models

A comparison was made between the performance metrics of the maximum (Max) model and the minimum (Min) model of each framework in Table 1. The comparison results are shown in Table 4.

Table 4.

The comparison results between the Max- and Min-sized models.

As there were no changes in the main modules of different sizes in YOLOv5, YOLOv8, YOLOv11, or YOLO-world, the four groups were discussed first as Group 1, and the others were Group 2. The comparison of models of differing sizes in Table 3 reveals the following conclusions.

Group 1: (1) The model size and FLOPs of YOLOv5 changed very significantly; those of the l-model were 24–27 times those of the n-model, and the detection accuracy also improved significantly with the increase in network depth and width. (2) YOLOv8-n exhibited no loss in accuracy, but rather a modest improvement, despite the significant compression of the model (the n-model was approximately 1/26 the size of the x-model) and a substantial reduction in computation (the FLOPs of the n-model were about 1/20 of those of the x-model). However, the FPS of YOLOv8-n decreased. (3) The YOLOv11-n model was compressed significantly, to approximately 1/8 the size of the l version, with the FLOPs reduced to about 1/11. Intriguingly, the s-version attained the highest road distress detection accuracy among the YOLOv11 versions (Table 3), which may be attributable to overfitting in the deeper and wider network of YOLOv11-l. (4) The two versions (x and s) of YOLO-world exhibited a minor disparity in detection accuracy, with the s-model compressed to about 1/6 of the size and about 1/8.5 of the FLOPs of the x-model.

Group 2: (1) The differences in YOLOv7 were mainly in the feature fusion and detection head parts. Both the compression degree and the change in accuracy of the YOLOv7 model were significant. The mAP@0.5 of the x-model was 13% higher than that of the t-model, its size was 11.6 times that of the t-model, and its FLOPs were 22.6 times those of the t-model. (2) There was a slight difference between the backbone components of the t/s and c versions of YOLOv9; the detection accuracy of YOLOv9-c was significantly higher than that of YOLOv9-t, with an increase of 36.3% in mAP@0.5; the FLOPs of YOLOv9-t were 1/22.4 of those of the c-model, and the model size was compressed to 1/16. Moreover, YOLOv9-t achieved the highest FPS increase. (3) Components of both the backbone and neck networks of YOLOv10-n and YOLOv10-x were different. Compared to YOLOv10-x, the YOLOv10-n model was compressed to approximately 1/12, and the FLOPs were reduced to approximately 1/20. However, the change in model accuracy in detecting highway distress using UAV data was not significant, with mAP@0.5 being reduced by 1.9%.

4.4. Comparison of Classification Detection Accuracy of Representative Models

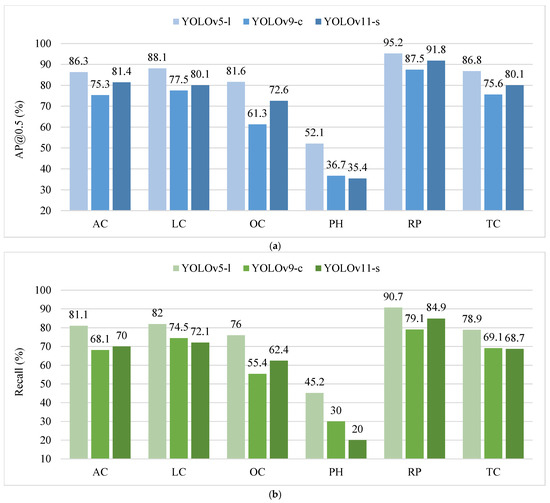

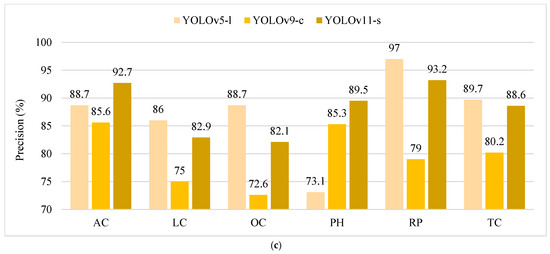

The classified detection accuracy results of representative models including YOLOv5-l, YOLOv9-c, and YOLOv11-s were compared, as shown in Figure 7.

Figure 7.

Comparison of classified detection accuracy. (a) AP@0.5; (b) Recall; (c) Precision.

From Figure 7, it can be seen that different models performed differently in terms of per-class detection accuracy, with potholes being the most difficult target for balancing detection rate and detection precision. As shown in Figure 7a, YOLOv5-l achieved the highest detection accuracy for all highway distress types; YOLOv11-s outperformed YOLOv9-c in most categories, highlighting the efficacy of the smaller model. As shown in Figure 7b, YOLOv5-l exhibited an excellent detection ability in all distress types, higher than the other two models, with its lowest detection rate being for pothole targets. The pothole detection rate of YOLOv11-s was extremely low, with a recall of 20%, and that of YOLOv9-c was as low as 30%, which reflects the difficulty in detecting small targets. From Figure 7c, it can be seen that the YOLOv5-l and YOLOv11-s models performed similarly in terms of precision, and neither had an absolute advantage, but YOLOv9-c performed relatively poorly, probably because of a higher probability of false detection. To further analyze the detection errors in different distress categories, confusion matrices were drawn, as shown in Figure 8.

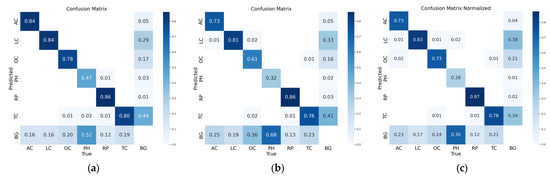

Figure 8.

Confusion matrices. (a) YOLOv5-l, (b) YOLOv9-c, (c) YOLOv11-s.

As shown in Figure 8, potholes were the most likely to be missed, followed by oblique cracks, while the errors for transverse and longitudinal cracks were mainly false detections on the background; YOLOv5-l had the best overall performance but presented the highest proportion of wrongly detected transverse cracks; no significant difference existed between YOLOv9-c and YOLOv11-s.

5. Conclusions

This paper conducted a comparative study on the comprehensive application of the YOLO series of algorithms in the UAV-based inspection of highway distress. The algorithms compared included YOLOv5-n, YOLOv5-s, YOLOv5-l, YOLOv7-tiny, YOLOv7-x, YOLOv8-n, YOLOv8-x, YOLOv9-t, YOLOv9-s, YOLOv9-c, YOLOv10-n, YOLOv10-x, YOLOv11-n, YOLOv11-s, YOLOv11-l, YOLO-world-s, and YOLO-world-x, and the main conclusions obtained are as follows.

- (1) YOLOv5-l and YOLOv9-c achieved the highest detection accuracy (mAP@0.5, mAP@0.5:0.95, F1) on UAV highway inspection data. YOLOv5-l performed well in mean and classification detection precision and recall, while YOLOv9-c performed poorly in classification precision and recall.

- (2) YOLOv10-n, YOLOv7-t, and YOLOv11-n achieved the highest detection efficiency; YOLOv5-n, YOLOv8-n, and YOLOv10-n had the smallest model sizes; and YOLOv11-n was the model with the best performance in terms of combined detection efficiency (FPS), model size, and computational complexity (FLOPs), which makes it a candidate for embedded real-time detection.

- (3) Both YOLOv5-s and YOLOv11-s achieved a balance between detection accuracy and the lightweight degree of the model; however, their efficiency was only average. These models may therefore be suitable for lightweight detection platforms with higher accuracy requirements.

- (4) Comparing the t/n and l/c versions, it was found that the change of the backbone network in YOLOv9 had the greatest impact on the model detection accuracy, followed by the network depth_multiple and width_multiple of YOLOv5; the relative compression degrees of YOLOv5-n and YOLOv8-n were the highest; and YOLOv9-t achieved the greatest efficiency improvement in UAV highway detection, followed by YOLOv10-n and YOLOv11-n.

The limitations of this study are as follows: (1) All tests were conducted on a single hardware platform, which precluded a comparative analysis of deployment on different platforms and limits the generalizability of the findings across hardware environments. (2) The study lacked validation on a diverse and extensive dataset, and the impact of data quality on the results was not sufficiently investigated. This may affect the robustness and applicability of the findings in broader contexts.

Suggestions for further research: (1) Investigate the stability of embedded terminal applications, particularly those utilizing preferred lightweight algorithms. This could provide insights into their reliability and performance in real-world scenarios. (2) Conduct studies aimed at improving the accuracy and generalization capabilities of high-precision detection applications. This would help enhance their applicability across various contexts and data types. (3) Test the performance of high-efficiency models in real-time monitoring scenarios. This could offer valuable insights into their feasibility and effectiveness for time-sensitive applications.

Author Contributions

Conceptualization, Z.Y. and H.W.; methodology, Z.Y.; software, Z.Y.; validation, X.L. and H.W.; formal analysis, Z.Y. and H.W.; investigation, Z.Y.; resources, H.W.; data curation, Z.Y. and X.L.; writing—original draft preparation, Z.Y. and H.W.; writing—review and editing, H.W.; visualization, Z.Y. and H.W.; supervision, H.W.; project administration, H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article are cited in the article and are available at https://zenodo.org/records/8429208 (accessed on 20 February 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hu, X.; Assaad, R.H. The use of unmanned ground vehicles (mobile robots) and unmanned aerial vehicles (drones) in the civil infrastructure asset management sector: Applications, robotic platforms, sensors, and algorithms. Expert Syst. Appl. 2023, 232, 120897.

- Chen, C.; Zheng, Z.; Xu, T.; Guo, S.; Feng, S.; Yao, W.; Lan, Y. YOLO-Based UAV Technology: A Review of the Research and Its Applications. Drones 2023, 7, 190.

- Hou, Y.; Dong, Y.; Zhang, Y.; Zhou, Z.; Tong, X.; Wu, Q.; Qian, Z.; Li, R. The Application of a Pavement Distress Detection Method Based on FS-Net. Sustainability 2022, 14, 2715.

- Ma, D.; Fang, H.; Wang, N.; Zhang, C.; Dong, J.; Hu, H. Automatic Detection and Counting System for Pavement Cracks Based on PCGAN and YOLO-MF. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22166–22178.

- Zhang, Y.; Zuo, Z.; Xu, X.; Wu, J.; Zhu, J.; Zhang, H.; Wang, J.; Tian, Y. Road damage detection using UAV images based on multi-level attention mechanism. Autom. Constr. 2022, 144, 104613.

- Zhu, J.; Zhong, J.; Ma, T.; Huang, X.; Zhang, W.; Zhou, Y. Pavement distress detection using convolutional neural networks with images captured via UAV. Autom. Constr. 2022, 133, 103991.

- Zhu, J.; Wu, Y.; Ma, T. Multi-Object Detection for Daily Road Maintenance Inspection with UAV Based on Improved YOLOv8. IEEE Trans. Intell. Transp. Syst. 2024, 25, 16548–16560.

- Zheng, L.; Xiao, J.; Wang, Y.; Wu, W.; Chen, Z.; Yuan, D.; Jiang, W. Deep learning-based intelligent detection of pavement distress. Autom. Constr. 2024, 168, 105772.

- Xu, F.; Wan, Y.; Ning, Z.; Wang, H. Comparative Study of Lightweight Target Detection Methods for Unmanned Aerial Vehicle-Based Road Distress Survey. Sensors 2024, 24, 6159.

- Yang, Y.; Wang, H.; Kang, J.; Xu, Z. A Method for Surveying Road Pavement Distress Based on Front-View Image Data Using a Lightweight Segmentation Approach. J. Comput. Civ. Eng. 2024, 38, 04024026.

- Li, L.; Fang, B.; Zhu, J. Performance Analysis of the YOLOv4 Algorithm for Pavement Damage Image Detection with Different Embedding Positions of CBAM Modules. Appl. Sci. 2022, 12, 10180.

- Ren, M.; Zhang, X.; Chen, X.; Zhou, B.; Feng, Z. YOLOv5s-M: A deep learning network model for road pavement damage detection from urban street-view imagery. Int. J. Appl. Earth Obs. Geoinf. 2023, 120, 103335.

- Ning, Z.; Wang, H.; Li, S.; Xu, Z. YOLOv7-RDD: A Lightweight Efficient Pavement Distress Detection Model. IEEE Trans. Intell. Transp. Syst. 2024, 25, 6994–7003.

- Miazzi, M.M.; Pasqualone, A.; Zammit-Mangion, M.; Savoia, M.A.; Fanelli, V.; Procino, S.; Gadaleta, S.; Aurelio, F.L.; Montemurro, C. A Glimpse into the Genetic Heritage of the Olive Tree in Malta. Agriculture 2024, 14, 495.

- Lu, L.; Wang, H.; Wan, Y.; Xu, F. A Detection Transformer-Based Intelligent Identification Method for Multiple Types of Road Traffic Safety Facilities. Sensors 2024, 24, 3252.

- Ažaltovič, V.; Škvareková, I.; Pecho, P.; Kandera, B. Calculation of the Ground Casualty Risk during Aerial Work of Unmanned Aerial Vehicles in the Urban Environment. Transp. Res. Procedia 2020, 44, 271–275.

- Yan, H.; Zhang, J. UAV-PDD2023: A Benchmark Dataset for Pavement Distress Detection Based on UAV Images; Zenodo: Brussel, Belgium, 2023.

- Situ, Z.; Teng, S.; Liao, X.; Chen, G.; Zhou, Q. Real-time sewer defect detection based on YOLO network, transfer learning, and channel pruning algorithm. J. Civ. Struct. Health Monit. 2024, 14, 41–57.

- Huang, L.; Ding, Z.; Zhang, C.; Ye, R.; Yan, B.; Zhou, X.; Xu, W.; Guo, J. YOLO-ULNet: Ultralightweight Network for Real-Time Detection of Forest Fire on Embedded Sensing Devices. IEEE Sens. J. 2024, 24, 25175–25185.

- Guan, B.; Li, J. Lightweight detection network for bridge defects based on model pruning and knowledge distillation. Structures 2024, 62, 106276.

- Jiang, S.; Wang, H.; Ning, Z.; Li, S. Lightweight pruning model for road distress detection using unmanned aerial vehicles. Autom. Constr. 2024, 168, 105789.

- Zeng, J.; Zhong, H. YOLOv8-PD: An improved road damage detection algorithm based on YOLOv8n model. Sci. Rep. 2024, 14, 12052.

- Zhang, S.; Bei, Z.; Ling, T.; Chen, Q.; Zhang, L. Research on high-precision recognition model for multi-scene asphalt pavement distresses based on deep learning. Sci. Rep. 2024, 14, 25416.

- Li, Y.; Yin, C.; Lei, Y.; Zhang, J.; Yan, Y. RDD-YOLO: Road Damage Detection Algorithm Based on Improved You Only Look Once Version 8. Appl. Sci. 2024, 14, 3360.

- Redmon, J. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Farhadi, A.; Redmon, J. Yolov3: An Incremental Improvement. In Computer Vision and Pattern Recognition; Springer: Berlin/Heidelberg, Germany, 2018; Volume 1804, pp. 1–6.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Montreal, QC, Canada, 11–17 October 2021; pp. 2778–2788.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOV7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO, Version 8.0.0, 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 2 August 2024).

- Wang, C.Y.; Yeh, I.H.; Liao, M.H.Y. Yolov9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the European Conference on Computer Vision, Paris, France, 26–27 March 2025; Springer: Cham, Switzerland, 2025; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Khanam, R.; Hussain, M. Yolov11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Cheng, T.; Song, L.; Ge, Y.; Liu, W.; Wang, X.; Shan, Y. Yolo-world: Real-time open-vocabulary object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA; 2024; pp. 16901–16911.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).