Abstract

With the increasing demand for road defect detection, existing deep learning methods have made significant progress in accuracy and speed. However, challenges remain, such as insufficient precision in road defect recognition and missed or false detections in complex backgrounds. These issues reduce detection reliability and hinder real-world deployment. To address these challenges, this paper proposes an improved YOLOv8-based model, RepGD-YOLOV8W. First, the C2f module in the gather-and-distribute (GD) mechanism is replaced with an improved C2f module based on RepViTBlock to construct the Rep-GD module. This improvement maintains high detection accuracy while significantly enhancing computational efficiency. The Rep-GD module then replaces the traditional neck of the model, improving multi-scale feature fusion, particularly for detecting small targets (e.g., cracks) and large targets (e.g., potholes) in complex backgrounds. Additionally, the Wise-IoU loss function is introduced to optimize bounding box regression, enhancing the model’s stability and generalization. Experimental results demonstrate that the improved RepGD-YOLOV8W model achieves a 2.4% increase in mAP50 on the RDD2022 dataset. Compared with other mainstream methods, the model exhibits greater robustness and flexibility in handling road defects of various scales.

1. Introduction

As China’s economy takes off and develops, infrastructure development has been steadily advancing and achieving remarkable results. In particular, great achievements have been made in the field of highways. By the end of 2023, the country’s highway mileage had reached 5,436,800 km, an increase of 82,000 km from the end of the previous year [1]. The increasing mileage of highways also raises the question of how to maintain them. As highways are used more and more, both asphalt and cement pavements will gradually develop defects due to the erosion of the natural environment and the influence of human activities. Early common defects are cracks and potholes [2]. As the road ages, these defects will become more severe, posing a serious threat to the safety of pedestrians and vehicles. The timely detection and repair of road defects is crucial to ensuring road safety and prolonging pavement lifespan.

Early defect detection techniques relied mainly on manual visual inspection [3]. Although this method is simple and direct, its accuracy is limited by the subjective judgement of the inspector, and it is inefficient and time-consuming. As road networks and traffic volumes expand, it is increasingly unable to meet the accuracy and efficiency requirements of modern inspection.

In contrast, multi-purpose inspection vehicles [4] based on integrated sensors such as GPS, cameras, laser profilers, ground-penetrating radar, etc., are more accurate and efficient. These vehicles are able to carry out inspections without interrupting normal traffic, greatly improving the convenience and accuracy of inspection work. Since the beginning of the 21st century, a number of countries have gradually introduced their own road defect detection vehicles, and Roadware has also developed a night inspection vehicle [5]. However, the high cost limits the popularity of these vehicles, especially in rural areas where the budget is limited and the relevant departments can hardly afford to use this kind of equipment, so the application of road inspection vehicles in rural areas is still limited. To overcome this problem, some scholars have tried to explore the use of 3D sensors for road defect detection, which has advantages in terms of accuracy and detail detection, but its equipment and application costs are still high and unsuitable for wider use [6].

In recent years, with the rapid progress of deep learning, target detection techniques have become increasingly mature, demonstrating remarkable prediction accuracy and detection speed. Most research results [7,8,9] indicate that, as long as the training data are sufficiently large and a well-designed algorithm is used, the performance of artificial intelligence can be nearly comparable to that of humans, making it capable of handling various detection tasks. Meanwhile, techniques such as knowledge distillation [10,11], transfer learning [12], and model resizing enable an effective balance between accuracy, speed, and computational resource consumption, leading to their widespread application [13,14,15,16] across various fields. In particular, the extensive use of convolutional neural networks (CNNs) [17] has significantly advanced road defect detection technology. Compared with traditional feature extraction methods, deep learning greatly enhances the accuracy and efficiency of defect detection by automatically learning image features [18].

Cao Jingang et al. [19] proposed a crack detection network (ACNet) based on the attention mechanism, using ResNet34 as the feature extraction backbone and introducing the attention feature module (AFM) to enhance the detection of multi-scale cracks. An attention-based decoding module (ADM) was designed to localize cracks accurately. Miao Ren et al. [20] proposed a diagonal IoU loss function for optimizing bounding box regression to enhance detection capability. They also improved the YOLOv5 model by combining the Generalized and Decoupled Head modules, which fuse high- and low-level features efficiently and thereby improve detection accuracy. Hu et al. [21] proposed YOLOM, a lightweight pavement defect detection method that fuses state-space models (SSM), with the aim of resolving the insufficient accuracy of existing detection methods. The study designed a visual Mamba layer with multiple scanning modes and sped up image feature extraction by adapting the data normalization method to small-batch training. In addition, parallel computing units were designed to accelerate network computation and reduce training time. In the study by Guo et al. [22], MobileNetV3 was introduced to replace the backbone network of YOLOv5, and the K-means clustering algorithm was used to optimize and filter the prior (anchor) boxes. This method improves detection accuracy while reducing the computational complexity of the model, making it more lightweight and more efficient.

The above studies show that, for defect detection in complex road scenes, accuracy and robustness can be significantly improved by improving the algorithm structure, optimizing feature extraction, and introducing scene-adaptive enhancement strategies. These methods not only reduce false and missed detections but also cope more effectively with the morphological diversity of defects and with complex backgrounds. However, road defect detection still faces the following challenges: in complex scenes, defect features are often small and easily overwhelmed by background information, and existing methods remain weak at detecting small targets; in real road inspection, dynamic environmental factors such as lighting changes, rain and snow, and interference from vehicles and pedestrians can significantly affect the stability and accuracy of detection; and the shapes, textures, and distributions of road defects are highly diverse, which makes it difficult to design robust feature extraction and classification models.

Based on YOLOv8n, this paper makes the following improvements to address the above problems: (1) a new Rep-GD module is designed by replacing the original C2f module in the GD mechanism with an improved C2f module built on RepViTBlock, which achieves higher feature expression capability at lower computational cost; (2) the neck of the YOLOv8 model is replaced with the Rep-GD module, which avoids the information loss of recursive transmission by gathering and distributing global information and improves the detection of road defects at different scales; and (3) the Wise-IoU loss function is introduced so that the model converges faster and reaches higher accuracy.

2. RepGD-YOLOV8W Algorithm Construction

2.1. GD

In traditional target detection tasks, FPN [23] is a commonly used feature fusion structure, but in practice it fuses feature information incompletely. FPN can only fuse the features of neighboring layers directly, while the features of non-neighboring layers can only be passed indirectly by recursion, which leads to a loss of information. To address this problem, this paper introduces the GD mechanism proposed by Gold-YOLO [24], which aggregates global information by fusing features from different levels and injecting the result back into each level, thus avoiding information loss in recursive transfer and significantly improving the ability of the model to detect road defects at different scales. The GD mechanism consists of three core modules: the feature alignment module (FAM), the information fusion module (IFM), and the information injection module (Inject). In the gather process, the FAM first extracts and aligns features from different layers, ensuring consistency in the spatial dimension and providing the basis for subsequent fusion. Next, the IFM integrates the aligned features to generate global information. Once the gather process is complete, the Inject module distributes the fused global features to each layer and combines them with the local features of that layer. Through a simple attention mechanism, the global features are fused with the local features, enhancing the feature expressiveness of each layer and improving the model’s recognition performance.

The GD mechanism is designed for multi-scale features with two branches, low-GD and high-GD, to cope with small and large target detection requirements, respectively.

2.1.1. Low-GD

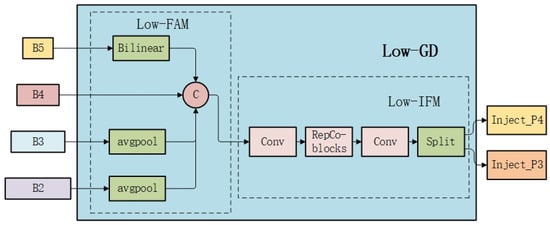

The low-GD module is mainly used to fuse the shallow feature information of the model to achieve efficient interaction of shallow features and distribution of global information through the steps of feature alignment, information fusion, and information injection. The exact process is shown in Figure 1.

Figure 1. Low-stage collection-distribution branches.

In the feature alignment phase, the multi-scale features [B2, B3, B4, B5] extracted from the backbone network are unified to the target size by the low-order feature alignment module (Low_FAM). Low_FAM uses an average pooling operation to downsample the input features and unify their sizes:

$$F_{align} = \text{Low\_FAM}([B_2, B_3, B_4, B_5])$$

This alignment not only preserves low-level information but also balances computational complexity and feature integrity, making subsequent information fusion more efficient.
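The pooling-based alignment described above can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: each feature map is a single-channel nested list, the input size is an integer multiple of the target size, and the names `avg_pool2d` and `low_fam` are hypothetical rather than taken from the authors' code.

```python
def avg_pool2d(feature, out_h, out_w):
    """Average-pool a 2D map (list of rows) down to out_h x out_w.

    Assumes the input height and width are integer multiples of the target.
    """
    in_h, in_w = len(feature), len(feature[0])
    kh, kw = in_h // out_h, in_w // out_w
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            window = [feature[i * kh + di][j * kw + dj]
                      for di in range(kh) for dj in range(kw)]
            row.append(sum(window) / len(window))
        pooled.append(row)
    return pooled


def low_fam(features, target_hw):
    """Align single-channel maps to target_hw and stack them as channels."""
    out_h, out_w = target_hw
    return [avg_pool2d(f, out_h, out_w) for f in features]


# Toy stand-ins for two backbone levels: an 8x8 map and a 4x4 map.
b_large = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
b_small = [[1.0] * 4 for _ in range(4)]
aligned = low_fam([b_large, b_small], (4, 4))
```

After alignment every map has the same spatial size, so the maps can be concatenated along the channel dimension before fusion.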

In the information fusion phase, the GD mechanism uses multi-layer reparameterized convolutional blocks (RepBlock) to process the aligned features $F_{align}$ and generate the fused features $F_{fuse}$. The fused features are then split along the channel dimension into the injected features $F_{inj\_P3}$ and $F_{inj\_P4}$, which feed the injection module:

$$F_{fuse} = \text{RepBlock}(F_{align})$$

$$F_{inj\_P3},\; F_{inj\_P4} = \text{Split}(F_{fuse})$$

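In the information injection phase, global information is injected into the corresponding local features through the attention mechanism. The injection module takes as input the local feature $F_{local}$ of the current layer and the global injected feature $F_{inj}$. For the global injected feature $F_{inj}$, $F_{global\_act}$ and $F_{global\_embed}$ are obtained using two independent convolution operations, and $F_{local\_embed}$ is extracted using a convolution operation on the local feature x of the current layer. Since the sizes of $F_{local}$ and $F_{inj}$ may be different, $F_{global\_act}$ and $F_{global\_embed}$ are resized to match using average pooling or bilinear interpolation. The final output features are further optimized with RepBlock. At the low stage, $F_{local}$ is equal to $B_i$, so the formulas are as follows:

$$F_{global\_act\_Pi} = \text{resize}(\text{Sigmoid}(\text{Conv}_{act}(F_{inj\_Pi})))$$

$$F_{global\_embed\_Pi} = \text{resize}(\text{Conv}_{global\_embed\_Pi}(F_{inj\_Pi}))$$

$$F_{att\_fuse\_Pi} = \text{Conv}_{local\_embed\_Pi}(B_i) \cdot F_{global\_act\_Pi} + F_{global\_embed\_Pi}$$

$$P_i = \text{RepBlock}(F_{att\_fuse\_Pi})$$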
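A minimal numeric sketch of the injection step may help. It assumes the gating form used in Gold-YOLO as we understand it: the local embedding is multiplied by a sigmoid gate computed from the global feature, and a global embedding is added. Each 1 × 1 convolution is reduced to a scalar weight on a single channel, the two maps are assumed to be the same size already, and the final RepBlock is omitted. The function name `inject` and its weights are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def inject(local, global_inj, w_local=1.0, w_act=1.0, w_embed=1.0):
    """Fuse a local map with a same-sized global map, element by element.

    Each 1x1 convolution is reduced to a scalar weight (one channel, no bias).
    """
    fused = []
    for row_l, row_g in zip(local, global_inj):
        fused.append([
            (w_local * l) * sigmoid(w_act * g) + w_embed * g
            for l, g in zip(row_l, row_g)
        ])
    return fused


local = [[2.0, 4.0], [6.0, 8.0]]
global_inj = [[0.0, 0.0], [0.0, 0.0]]
out = inject(local, global_inj)
```

With an all-zero global map the gate is 0.5 everywhere and the additive term vanishes, so the output is simply half of the local feature.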

2.1.2. High-GD

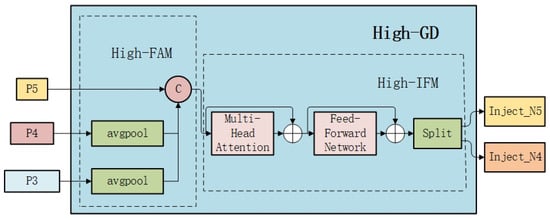

The high-GD module is mainly used to fuse the deep-level feature information of the model to optimize the detection performance of medium and large targets. The efficient integration of high-level information is realized by extracting and distributing the global semantic information of the deep-level features. The exact process is shown in Figure 2.

Figure 2. High-stage collection-distribution branches.

In the feature alignment stage, the multi-scale features [P3, P4, P5] generated by the low-GD module are selected and unified by the higher-order feature alignment module (High_FAM) to the smallest size in the group, $R_{P5}$. High_FAM uses an average pooling operation to reduce the feature size, thus reducing the subsequent computational requirements while preserving the global context information:

$$F_{align} = \text{High\_FAM}([P_3, P_4, P_5])$$

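In the information fusion stage, a transformer block is used instead of RepBlock to fully fuse the global information and produce $F_{fuse}$. Subsequently, the channel number of $F_{fuse}$ is reduced by a 1 × 1 convolution, and the result is divided into $F_{inj\_N4}$ and $F_{inj\_N5}$ along the channel dimension by a split operation:

$$F_{fuse} = \text{Transformer}(F_{align})$$

$$F_{inj\_N4},\; F_{inj\_N5} = \text{Split}(\text{Conv}_{1 \times 1}(F_{fuse}))$$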

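In the information injection stage, the high-GD module reuses the injection mechanism of low-GD: it combines global features with deep local features, completes the fusion through the attention mechanism, and extracts the final output features with RepBlock. At the high stage, $F_{local}$ is equal to $P_i$, so the formulas are as follows:

$$F_{global\_act\_Ni} = \text{resize}(\text{Sigmoid}(\text{Conv}_{act}(F_{inj\_Ni})))$$

$$F_{global\_embed\_Ni} = \text{resize}(\text{Conv}_{global\_embed\_Ni}(F_{inj\_Ni}))$$

$$F_{att\_fuse\_Ni} = \text{Conv}_{local\_embed\_Ni}(P_i) \cdot F_{global\_act\_Ni} + F_{global\_embed\_Ni}$$

$$N_i = \text{RepBlock}(F_{att\_fuse\_Ni})$$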

2.2. Introduction of RepViTBlock

In the GD mechanism, the C2f module serves as the core of multi-scale feature fusion, integrating feature maps of different resolutions to enhance target representation. However, the traditional C2f module, which relies on a standard convolutional structure, faces limitations when applied to complex road defect detection tasks. Due to its fixed computational mode, standard convolution restricts the feature extraction capability, making it challenging to effectively capture fine-grained details of small targets, such as road cracks. Additionally, its rigid computational structure limits the potential for optimizing inference efficiency, making it difficult for the model to maintain high detection accuracy while minimizing computational overhead.

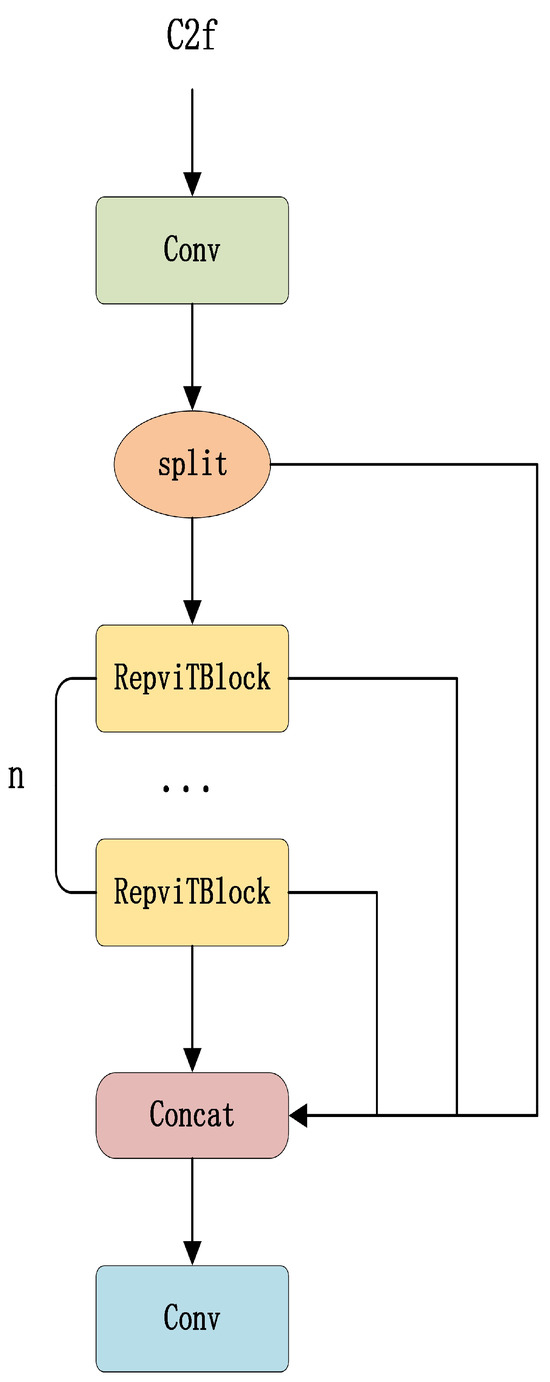

To address these issues, this paper introduces RepViTBlock [25] to enhance C2f, thereby constructing a new Rep-GD module (as shown in Figure 3). Compared to other lightweight models such as MobileNet and ShuffleNet, the Rep-GD module achieves a superior balance between computational efficiency and feature extraction capability. While MobileNet and ShuffleNet are designed with a strong emphasis on lightweight architecture, they often sacrifice feature representation, which is particularly limiting in complex tasks such as road defect detection. In contrast, the Rep-GD module, optimized with RepViTBlock, ensures high detection accuracy while significantly reducing computational cost during inference.

Figure 3. Improved C2f module diagram.

RepViTBlock incorporates the structural reparameterization (SR) strategy, employing a multi-branch structure during training to enhance feature learning. During inference, these multiple branches are fused into a single path, effectively reducing computational complexity while maintaining strong feature expressiveness. This optimization improves detection efficiency, making the model more suitable for real-time applications [26]. Additionally, to further enhance feature fusion, RepViTBlock integrates a lightweight token mixer and channel mixer. The token mixer, utilizing a 3 × 3 depth-wise convolution, extracts local spatial features to enhance the detection of small targets, while the channel mixer, leveraging a 1 × 1 convolution, facilitates inter-channel information interaction to improve global feature representation. This design not only preserves detection accuracy but also prevents a significant increase in parameter count, allowing the model to achieve stronger feature extraction while maintaining high computational efficiency.
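The branch fusion behind structural reparameterization can be checked numerically. The sketch below is a deliberately simplified, single-channel illustration and not the RepViTBlock implementation: it assumes three parallel branches (a 3 × 3 convolution, a 1 × 1 convolution, and an identity shortcut) and omits batch normalization, which in practice is folded into the kernels before merging. The helper names are hypothetical.

```python
def conv2d_same(x, kernel):
    """Single-channel 2D cross-correlation with zero padding and stride 1."""
    h, w = len(x), len(x[0])
    k = len(kernel)
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    ii, jj = i + di - pad, j + dj - pad
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += x[ii][jj] * kernel[di][dj]
            out[i][j] = acc
    return out


def merge_branches(k3, k1):
    """Fold a 1x1 branch and an identity branch into the centre of a 3x3 kernel."""
    merged = [row[:] for row in k3]
    merged[1][1] += k1 + 1.0  # 1x1 weight plus the identity shortcut
    return merged


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.1, 0.0, -0.1], [0.2, 0.5, -0.2], [0.1, 0.0, -0.1]]
k1 = 0.3

# Training-time form: three parallel branches summed.
y3 = conv2d_same(x, k3)
multi_branch = [[y3[i][j] + k1 * x[i][j] + x[i][j] for j in range(3)]
                for i in range(3)]

# Inference-time form: one 3x3 convolution with the merged kernel.
single_path = conv2d_same(x, merge_branches(k3, k1))
```

Because convolution is linear, the two forms give the same output, while the merged form needs only one convolution at inference time.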

Furthermore, RepViTBlock incorporates the squeeze-and-excitation (SE) attention mechanism [27], which dynamically adjusts the weights of different feature channels to enhance the model’s focus on critical features, such as crack edges. By selectively emphasizing key features, this mechanism effectively strengthens the model’s feature extraction capability, leading to improved accuracy in road defect detection. With this design, RepViTBlock achieves higher feature expressiveness and lower computational complexity, and demonstrates excellent performance in multiple vision tasks, especially in scenarios such as real-time target detection.
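The channel reweighting performed by squeeze-and-excitation can be sketched as follows. This is an illustrative plain-Python version with hand-picked weights, no biases, and a hypothetical function name `se_scale`; it follows the usual squeeze, excitation, and scale steps rather than the exact layer configuration inside RepViTBlock.

```python
import math


def se_scale(feature, w_reduce, w_expand):
    """Apply squeeze-and-excitation to a feature of shape [C][H][W] (nested lists)."""
    # Squeeze: global average pooling per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature]
    # Excitation: FC -> ReLU -> FC -> sigmoid (biases omitted).
    hidden = [max(0.0, sum(w * s for w, s in zip(w_row, squeezed)))
              for w_row in w_reduce]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(w_row, hidden))))
             for w_row in w_expand]
    # Scale: reweight each channel by its gate.
    scaled = [[[v * g for v in row] for row in ch]
              for ch, g in zip(feature, gates)]
    return scaled, gates


feature = [
    [[1.0, 1.0], [1.0, 1.0]],  # channel 0, mean 1
    [[4.0, 4.0], [4.0, 4.0]],  # channel 1, mean 4
]
w_reduce = [[1.0, 1.0]]        # 2 channels -> 1 hidden unit
w_expand = [[0.0], [1.0]]      # 1 hidden unit -> 2 channel gates
scaled, gates = se_scale(feature, w_reduce, w_expand)
```

In this toy setting the second channel receives a gate close to 1 and the first a gate of 0.5, which is the sense in which the mechanism emphasizes some channels over others.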

2.3. Wise-IoU Loss Function

In the YOLOv8 model, CIoU-Loss [28] is usually used as the bounding box regression loss function. CIoU-Loss takes into account the overlap area, center-point distance, and aspect-ratio consistency of the predicted box and the ground-truth box, which improves the regression accuracy of the target box to some extent. However, in the road defect detection task, the following problems can arise: for small targets such as road cracks, low-quality anchor boxes easily lead to insufficient gradients, which degrades detection accuracy; in complex pavement environments, low-quality anchor boxes introduce additional interference and reduce the generalization ability of the model; and CIoU-Loss allocates insufficient gradient to the center-point offset, which lowers regression efficiency.

To solve these problems, this paper adopts Wise-IoU v1 [29] instead of CIoU-Loss. A key feature of Wise-IoU v1 is its dynamic non-monotonic focusing mechanism. Traditional focusing mechanisms are usually static, using fixed strategies to adjust the sample weights, and these strategies may have limited effectiveness when dealing with low-quality anchor boxes. In contrast, the dynamic non-monotonic focusing mechanism adjusts the gradient contribution of anchor boxes based on their overlap (i.e., IoU) with target boxes. This mechanism mitigates the negative impact of low-quality anchor boxes on model training while enhancing the contribution of normal-quality anchor boxes, allowing the model to learn valid regression information more accurately.

Wise-IoU v1 also introduces an offset penalty and a weighting factor, which improves the assessment of anchor box quality and reduces the interference of low-quality anchor boxes in gradient computation. Through the weighting factor $R_{WIoU}$, the weights of anchor boxes are adjusted dynamically according to their quality, reducing the impact of low-quality anchor boxes on model training and thereby improving the model’s robustness in complex environments. This avoids the regression inefficiency and insufficient gradients that can occur with the traditional CIoU-Loss. Furthermore, Wise-IoU v1 enhances regression accuracy by penalizing the distance between the center points of the predicted and ground-truth boxes. The formula for Wise-IoU v1 is

$$L_{WIoUv1} = R_{WIoU} \, L_{IoU}$$

$$R_{WIoU} = \exp\left(\frac{(x - x_{gt})^2 + (y - y_{gt})^2}{(W_g^2 + H_g^2)^{*}}\right)$$

$$L_{IoU} = 1 - IoU$$

where $IoU$ is the traditional intersection over union, $R_{WIoU}$ is a weighting factor used to adjust the weights of anchor boxes of different quality, $(x, y)$ are the center coordinates of the predicted box, $(x_{gt}, y_{gt})$ are the center coordinates of the ground-truth box, $W_g$ and $H_g$ are the width and height of the minimum enclosing box, and the superscript ∗ indicates that $W_g$ and $H_g$ are detached from the computational graph so that they do not produce gradients that would hinder the convergence of the model.
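For concreteness, the Wise-IoU v1 loss for a single pair of boxes can be sketched in plain Python. The sketch assumes the published v1 form, in which the IoU loss (1 − IoU) is multiplied by an exponential penalty on the centre distance normalized by the squared size of the smallest enclosing box. Boxes are given as `(x1, y1, x2, y2)`, the function name `wiou_v1_loss` is hypothetical, and since there is no autograd here the detach step appears only as a comment.

```python
import math


def wiou_v1_loss(pred, target):
    """Wise-IoU v1 loss for one predicted box and one ground-truth box.

    Boxes are (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
    """
    # Plain IoU term.
    inter_w = max(0.0, min(pred[2], target[2]) - max(pred[0], target[0]))
    inter_h = max(0.0, min(pred[3], target[3]) - max(pred[1], target[1]))
    inter = inter_w * inter_h
    area_p = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_t = (target[2] - target[0]) * (target[3] - target[1])
    iou = inter / (area_p + area_t - inter)

    # Centre points of both boxes.
    cx, cy = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    cx_gt, cy_gt = (target[0] + target[2]) / 2, (target[1] + target[3]) / 2

    # Smallest enclosing box. In an autograd framework these two values
    # would be detached so that they contribute no gradient.
    wg = max(pred[2], target[2]) - min(pred[0], target[0])
    hg = max(pred[3], target[3]) - min(pred[1], target[1])

    r_wiou = math.exp(((cx - cx_gt) ** 2 + (cy - cy_gt) ** 2) / (wg ** 2 + hg ** 2))
    return r_wiou * (1.0 - iou)


perfect = wiou_v1_loss((0.0, 0.0, 2.0, 2.0), (0.0, 0.0, 2.0, 2.0))
shifted = wiou_v1_loss((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
```

A perfectly aligned prediction gives zero loss, while a shifted prediction is penalized both through its lower IoU and through the centre-distance factor, which is always at least 1.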

3. Experiments and Results

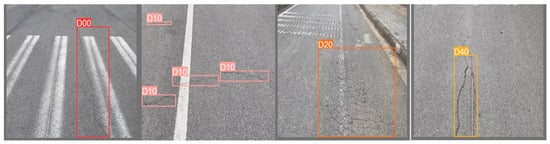

In order to adapt to the characteristics of urban roads in China, this paper selects the Chinese regional road damage data in the RDD-2022 (Road Damage Dataset 2022) [30] dataset for model training. This dataset contains two parts: the China_Drone image set and the china_motorcycle image set. The China_Drone image set contains a total of 2401 road damage images, covering 3068 instances of road damage, while the china_motorcycle image set contains 1977 road damage images, covering 4650 instances of damage. All images are labeled with four types of road defects, including longitudinal cracks (D00), transverse cracks (D10), composite cracks (D20), and potholes (D40). Figure 4 shows four typical damage types in RDD-2022. To ensure data quality, some unlabeled road images were excluded from this study. The processed dataset was then randomly split into a training set and a validation set at a 7:3 ratio, with the training set containing 5530 images and the validation set containing 2370 images. Additionally, an independent test set consisting of 500 images, which was not involved in training or validation, was separately allocated to ensure the fairness and objectivity of the evaluation.

Figure 4. Four typical damage types.
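The 7:3 random split of the training and validation data described above can be reproduced with a few lines of Python. The sketch assumes 7900 images remain after filtering (5530 + 2370, as reported); the file identifiers, the seed, and the function name `split_dataset` are placeholders rather than details from the paper.

```python
import random


def split_dataset(items, train_ratio=0.7, seed=0):
    """Shuffle items with a fixed seed and split them into train and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]


# Placeholder identifiers standing in for the filtered image files.
image_ids = [f"img_{i:05d}" for i in range(7900)]
train_ids, val_ids = split_dataset(image_ids)
```

Fixing the seed keeps the split reproducible across runs, which matters when several models are compared on the same validation set.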

3.1. Evaluation Criteria

In this paper, the evaluation criteria mainly include mean average precision (mAP), the number of parameters (Parameters), and the number of giga floating-point operations (GFLOPs). Together, these indicators reflect the detection accuracy and computational cost of the model. The metrics are calculated as follows:

Precision ($P$) indicates the proportion of detections that are correct, which is calculated by the following formula:

$$P = \frac{TP}{TP + FP}$$

where $TP$ is the number of positive samples correctly predicted by the model, and $FP$ is the number of samples incorrectly predicted as positive by the model.

Recall ($R$), on the other hand, indicates the proportion of actual targets in the dataset that are detected and is calculated as

$$R = \frac{TP}{TP + FN}$$

where $FN$ represents the number of actual targets that the model fails to detect.

Average precision ($AP$) is obtained by integrating the precision-recall curve and is used as a measure of the model’s precision at different recall rates, which is calculated as

$$AP = \int_0^1 P(R)\, dR$$

where $R$ is the recall rate, and $P(R)$ is the precision at that recall rate.

In order to evaluate the performance of the model on different categories, this paper calculates the mean average precision ($mAP$). $mAP$ is the average of the AP values of all categories, which is calculated as

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $N$ denotes the number of categories, and $AP_i$ denotes the average precision of the $i$th category.
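The definitions above translate directly into code. The following sketch computes precision, recall, AP, and mAP from ranked detections; it assumes each detection has already been marked as a true or false positive, and it approximates the integral of the precision-recall curve with the common all-point interpolation (a monotone precision envelope). The function names and the toy detections are illustrative only.

```python
def precision(tp, fp):
    return tp / (tp + fp)


def recall(tp, fn):
    return tp / (tp + fn)


def average_precision(detections, num_gt):
    """AP for one class.

    detections: list of (confidence, is_true_positive) pairs.
    num_gt: number of ground-truth objects of this class.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Replace each precision with the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas under the precision-recall envelope.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    return sum(ap_per_class) / len(ap_per_class)


dets = [(0.9, True), (0.8, False), (0.7, True)]
ap = average_precision(dets, num_gt=2)
m_ap = mean_average_precision([ap, 0.5])
```

Detection toolkits differ in the exact interpolation they use, so values from this sketch may not match a given framework's report to the last digit.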

In this paper, mAP50 is chosen as an evaluation metric, which represents the mean average precision ($mAP$) of all categories at an intersection over union ($IoU$) threshold of 0.5. $IoU$ measures the degree of overlap between the predicted bounding box and the ground-truth bounding box, and it is calculated using the following formula:

$$IoU = \frac{|A \cap B|}{|A \cup B|}$$

where $A$ is the area of the predicted bounding box, and $B$ is the area of the labeled bounding box. With these metrics, this paper is able to comprehensively evaluate the detection accuracy, model complexity, and inference efficiency of the proposed model.
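As a small illustration of the mAP50 criterion, the sketch below computes IoU for axis-aligned boxes in `(x1, y1, x2, y2)` form and checks whether a prediction would count as a true positive at the 0.5 threshold. Class matching and one-to-one assignment between predictions and labels are left out for brevity, and the function names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A prediction counts as correct when its IoU reaches the threshold."""
    return box_iou(pred_box, gt_box) >= threshold


loose = box_iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
tight = box_iou((0.0, 0.0, 2.0, 2.0), (0.0, 0.0, 2.0, 1.5))
```

Here the loosely overlapping pair has an IoU of 1/7 and would be rejected, while the tighter pair has an IoU of 0.75 and would be accepted.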

3.2. Ablation Experiments

In this study, ablation experiments are performed to verify the effectiveness of the proposed improved method. The GD mechanism, Rep-GD module, and the Wise-IoU loss function are added to the YOLOv8 model, respectively, and the experimental results of different module combinations are given. The experimental results are given in Table 1.

Table 1. Results of ablation experiments.

As shown in the table, the GD mechanism improves the ability of the model to detect targets of different sizes by improving the fusion of multi-scale features. The experimental results show that the addition of the GD mechanism improves the mAP50 by 0.8% and also improves the accuracy. Next, we introduced the RepViTBlock module on top of the GD mechanism, an improvement that further enhances the global feature extraction capability of the model by combining the properties of the C2f structure and the RepViTBlock. The experimental results show that compared to the model using only the GD mechanism, the improved GD mechanism (i.e., Rep-GD) improves the mAP50 by 0.7% while reducing the number of parameters by 6%. To further improve the model’s small target detection performance, we introduce the Wise-IoU loss function. This loss function improves the model’s sensitivity to edges and fine targets by optimizing the IoU calculation. Experimental results show that the addition of the Wise-IoU loss function improves the mAP50 by 1.4%.

The experimental results show that the combination of the three modules leads to a significant improvement in the overall performance of the model. Compared to the original YOLOv8 model, the improved model improves the mAP50 by 2.4% and the accuracy by 3%.

3.3. Comparative Experiments

To verify the performance advantages of the improved RepGD-YOLOV8W model in road defect detection, this paper presents a detailed comparison with several mainstream target detection algorithms. The comparison includes the traditional two-stage algorithm Faster R-CNN [31], the single-stage algorithm SSD [32], and the lightweight YOLO series algorithms YOLOv5n, YOLOv5s, YOLOv7-tiny [33], and YOLOv8n, along with two improved YOLOv8-based models, ML-YOLO and CA-YOLOv8. To ensure fairness and comparability, all experiments were conducted under the same hardware conditions and trained on the same road defect detection dataset.

As shown in Table 2, RepGD-YOLOV8W significantly outperforms other algorithms in terms of detection accuracy. Compared to the traditional two-stage algorithm Faster R-CNN and the single-stage algorithm SSD, RepGD-YOLOV8W shows improvements of 9.1 and 9.5 percentage points, respectively. Among the YOLO series algorithms, RepGD-YOLOV8W also exhibits significant accuracy advantages, with mAP50 values that are 4.7, 3.9, 3.1, and 2.8 percentage points higher than YOLOv5n, YOLOv5s, YOLOv7-tiny, and YOLOv8n, respectively, demonstrating its powerful detection capabilities in complex road damage scenarios.

Table 2. Comparative experimental results.

Faster R-CNN, as a classical two-stage target detection method, generates candidate boxes through the region proposal network (RPN) and then classifies and regresses each candidate. Although two-stage detectors are generally regarded as accurate, Faster R-CNN suffers from slow detection speed and poor real-time performance, which makes it unsuitable for tasks requiring efficient, real-time detection, such as road defect detection. Furthermore, it struggles with small targets and complex backgrounds, particularly when detecting small road defects like cracks, which often leads to missed or false detections. Faster R-CNN is therefore not practical for this application.

SSD is a single-stage target detection algorithm widely used in various applications due to its high speed and real-time performance. SSD can handle targets of different sizes by detecting them across multiple feature maps. However, in road defect detection, SSD has difficulty detecting small defects accurately because of the low contrast of small targets, such as cracks, and interference from complex backgrounds. Additionally, low-quality anchor boxes degrade the bounding boxes that SSD generates, which ultimately reduces detection accuracy.

Among the YOLO series algorithms, YOLOv5n and YOLOv5s perform well, especially YOLOv5n, which is lightweight and suitable for real-time detection tasks. However, their accuracy in detecting small targets in complex backgrounds is not as high as that of RepGD-YOLOV8W. YOLOv7-tiny offers higher accuracy, but compared to RepGD-YOLOV8W, it has slightly lower computational efficiency and higher model complexity, which affects its performance in resource-constrained environments. YOLOv8n has a larger parameter count than YOLOv5n (3.01 M versus 1.77 M), and RepGD-YOLOV8W still has clear advantages over it in accuracy, especially in complex road damage scenarios.

In the comparison between ML-YOLO [34] and CA-YOLOv8 [35], although ML-YOLO improves accuracy by adopting the convolutional block attention mechanism for better feature extraction, its larger model size and computational volume result in slower inference speed. On the other hand, CA-YOLOv8 achieves some improvements in channel feature convolution and the C2f module, but its accuracy and frame rate still do not reach the level of RepGD-YOLOV8W, especially in handling small targets and complex backgrounds.

In terms of model parameters, RepGD-YOLOV8W has 5.80 M parameters, more than YOLOv5n (1.77 M) and YOLOv8n (3.01 M), but the improvement in accuracy is significant, indicating a reasonable balance between performance and model size. In contrast, CA-YOLOv8 and ML-YOLO have larger parameter counts and fail to match the accuracy of RepGD-YOLOV8W.

Although RepGD-YOLOV8W performed well in several comparative experiments, there are still potential limitations to consider. In extreme road defect scenarios, such as heavily shaded or high-noise environments, the model’s performance may be challenged. Although the experimental results are based on the RDD2022 dataset, the coverage of this dataset is limited, failing to encompass all possible road defect types and environmental variables. Therefore, the model’s performance in other datasets or real-world scenarios still needs further validation. Future research should extend the dataset to improve the generalizability and stability of RepGD-YOLOV8W, ensuring its performance across a wider range of application scenarios.

In summary, the RepGD-YOLOV8W model significantly outperforms mainstream target detection algorithms, demonstrating its efficiency and practicality for road defect detection tasks.

3.4. Comparison of Test Results

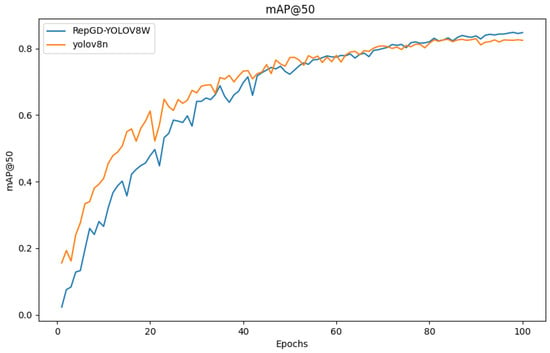

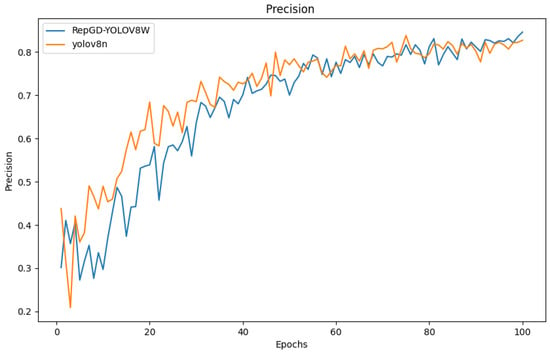

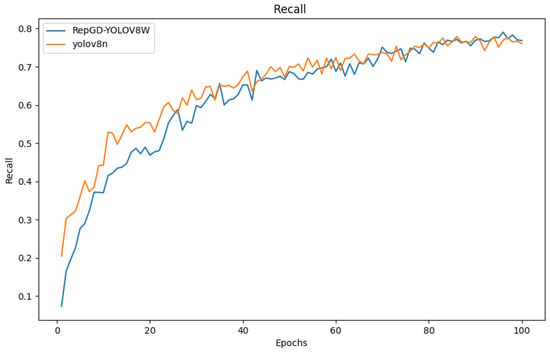

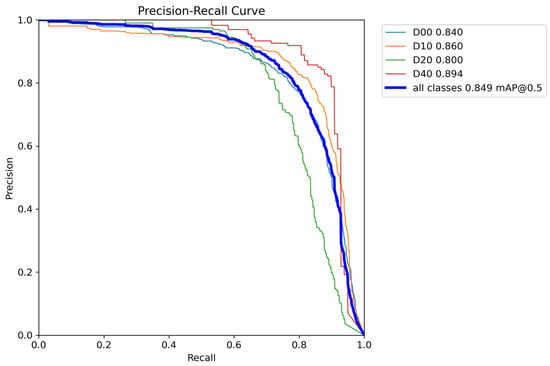

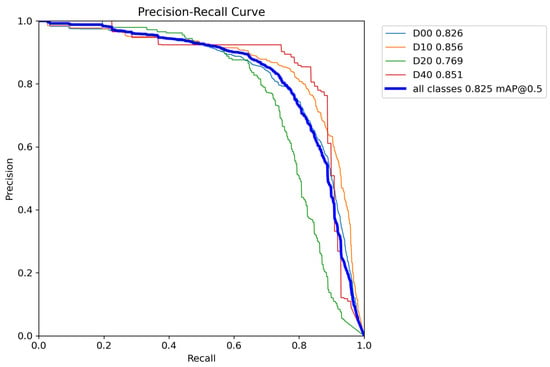

The quantitative results for RepGD-YOLOV8W and YOLOv8n in terms of mAP@50, precision, recall, and precision–recall (PR) curves are shown in Figures 5–11. YOLOv8n converges faster in the early stage of training. As training progresses, however, the mAP@50 of RepGD-YOLOV8W gradually surpasses that of YOLOv8n, holds the lead in the later stages, and finishes slightly higher, indicating better overall detection accuracy. Precision follows the same pattern: YOLOv8n is slightly ahead early in training, but the precision of RepGD-YOLOV8W rises with the number of epochs, overtakes YOLOv8n in the later stages, and then stabilizes. This suggests that RepGD-YOLOV8W produces fewer false positives. For recall, YOLOv8n again rises faster in the early stage, but RepGD-YOLOV8W improves steadily and ultimately surpasses it, which shows that RepGD-YOLOV8W detects more of the targets present and therefore misses fewer defects.

The PR curves show that RepGD-YOLOV8W outperforms YOLOv8n in overall mAP@50, reaching 0.849 compared with 0.825 for YOLOv8n, a gain of 2.4 percentage points. For longitudinal cracks (D00), transverse cracks (D10), composite cracks (D20), and potholes (D40), RepGD-YOLOV8W improves by 1.4%, 0.4%, 3.1%, and 4.3%, respectively. The gains are largest for D20 and D40, mainly because these targets are relatively large and have more distinct morphological features, so the model can make better use of multi-scale feature extraction and global information fusion, especially in complex backgrounds. For smaller targets such as D10, the model still improves, but the gain is small because the features are small and background interference is strong.
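To make the metrics discussed above concrete, the following is a minimal sketch of how precision, recall, and average precision (AP) can be derived from a ranked list of detections, with mAP taken as the mean of the per-class AP values. It is an illustration under stated assumptions, not the evaluation code used in the experiments: the function names (`pr_curve`, `average_precision`, `mean_ap`) and the toy detections are hypothetical, and the all-point interpolation used here may differ in detail from the interpolation applied by the YOLOv8 evaluator. Only the two overall mAP values at the end are taken from the text.

```python
from typing import List, Sequence, Tuple


def pr_curve(scores: Sequence[float], is_tp: Sequence[bool],
             num_gt: int) -> Tuple[List[float], List[float]]:
    """Precision and recall after each detection, ranked by confidence."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    return precisions, recalls


def average_precision(precisions: Sequence[float],
                      recalls: Sequence[float]) -> float:
    """Area under the PR curve using a monotone precision envelope."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left.
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[k] - mrec[k - 1]) * mpre[k] for k in range(1, len(mrec)))


def mean_ap(per_class_ap: Sequence[float]) -> float:
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Toy detections for a single class (illustrative values, not from the paper).
toy_scores = [0.9, 0.8, 0.7, 0.6]
toy_is_tp = [True, False, True, True]   # matched a ground truth at IoU >= 0.5
toy_ap = average_precision(*pr_curve(toy_scores, toy_is_tp, num_gt=4))
print(f"toy AP@50 = {toy_ap:.3f}")

# Overall mAP@50 values reported in the text, expressed as a gain in points.
gain_pp = round((0.849 - 0.825) * 100, 1)
print(f"mAP@50 gain = {gain_pp} percentage points")
```

In this sketch, a higher-ranked false positive lowers precision for every later recall level, which is why reducing false detections and reducing missed detections both raise AP.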

Figure 5.

mAP@50 comparison chart.

Figure 6.

Precision comparison chart.

Figure 7.

Recall comparison chart.

Figure 8.

RepGD-YOLOV8W PR curves.

Figure 9.

YOLOv8n PR curves.

Figure 10.

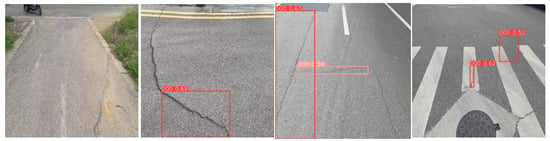

YOLOv8 detection results.

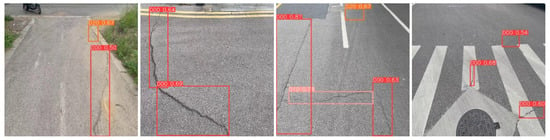

Figure 11.

RepGD-YOLOV8W test results.

To further verify the advantage of the improved RepGD-YOLOV8W model in road defect detection, this paper compares it with the original YOLOv8 model on images from different scenes. In the first group, complex background interference such as weeds makes detection noticeably harder; the original YOLOv8 model misses some defects, whereas RepGD-YOLOV8W, whose Rep-GD module strengthens multi-scale feature extraction, detects and labels all cracks accurately. In the second group, the scene contains larger cracks, but the original YOLOv8 model does not detect them completely and again misses some. This may be because its feature representation is not deep enough to capture the global features of large targets. In contrast, RepGD-YOLOV8W extracts crack features more comprehensively through the feature enhancement of RepViTBlock and detects all cracks. In the third group, the original YOLOv8 model fails to detect the composite crack next to the manhole cover in the upper right corner because the defect area is blurred, while the improved model captures the key features of the blurred target through its optimized feature extraction. The fourth group shows that in complex environments the improved model is more robust than the original: it reduces missed detections and improves both detection accuracy and confidence. These results demonstrate the practical potential and better performance of the improved model in road defect detection.
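The qualitative comparison above rests on counting missed detections (false negatives) and false detections (false positives) per image. A minimal sketch of how such counts can be obtained is given below, assuming axis-aligned boxes in `(x1, y1, x2, y2)` format, a single defect class, and greedy matching at an IoU threshold of 0.5. The helper names `iou` and `count_errors` and the toy boxes are hypothetical and are not taken from the experiments.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_errors(preds: Sequence[Tuple[Box, float]], gts: Sequence[Box],
                 iou_thr: float = 0.5) -> Tuple[int, int, int]:
    """Return (true positives, false positives, false negatives).

    Predictions are visited in order of decreasing confidence, and each
    ground-truth box can be matched by at most one prediction.
    """
    matched: set = set()
    tp = fp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_iou >= iou_thr:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1  # false detection: no unmatched ground truth overlaps enough
    fn = len(gts) - len(matched)  # missed detections
    return tp, fp, fn


# Toy image with two annotated defects (illustrative values only).
toy_gts: List[Box] = [(0, 0, 10, 10), (20, 20, 30, 30)]
toy_preds = [((1, 1, 10, 10), 0.9),     # overlaps the first defect
             ((50, 50, 60, 60), 0.6)]   # background, e.g. weeds
print(count_errors(toy_preds, toy_gts))
```

Here the second annotated defect is never matched, so it counts as a missed detection, while the background prediction counts as a false detection.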

4. Conclusions

This paper proposes RepGD-YOLOV8W, an improved model based on YOLOv8, to address missed and false detections of road defects. Introducing RepViTBlock improves the representation of multi-scale features while also improving inference efficiency. The Rep-GD mechanism improves feature interaction and fusion, which enhances detection of targets at different scales. In addition, the Wise-IoU loss function makes bounding box regression more accurate for both small and large targets. Experimental results on the RDD2022 dataset show that RepGD-YOLOV8W outperforms YOLOv8n, with a 2.4% improvement in mAP50, and meets real-time detection requirements. The model maintains a better balance between computational efficiency and detection accuracy and is therefore more practical. Future research will focus on further improving accuracy without increasing computational cost and on exploring the feasibility of the model in a wider range of application scenarios.

Author Contributions

Writing—original draft, S.L.; writing—review and editing, D.Z.; investigation, S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by Anhui Province Key Research Program Project (No. 202304a05020049).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| YOLO | You only look once |
| RepGD-YOLOV8W | RepViTBlock Gather-and-Distribute YOLOV8n Wise-IoU loss function |
| GD | Gather-and-Distribute |
| IoU | Intersection over union |
| AP | Average precision |
| mAP | Mean average precision |

References

- Transportation Ministry of China. 2023 Statistical Bulletin on the Development of the Transportation Industry (Highway Section), Commercial Vehicle; Transportation Ministry of China: Beijing, China, 2024; pp. 56–57.

- Fang, H. Discussion on main damage forms and causes of asphalt pavement. Real Estate 2022, 224–226.

- Mandal, V.; Mussah, A.R.; Adu-Gyamfi, Y. Deep learning frameworks for pavement distress classification: A comparative analysis. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5577–5583.

- Ma, J.; Zhao, X.; He, S.; Song, H.; Zhao, Y.; Song, H.; Cheng, L.; Wang, J.; Yuan, Z.; Huang, F.; et al. Review of pavement detection technology. J. Traffic Transp. Eng. 2017, 17, 121–137.

- Fan, L.; Zhao, H.; Li, Y. RAO-UNet: A residual attention and octave UNet for road crack detection via balance loss. IET Intell. Transp. Syst. 2022, 16, 332–343.

- Ma, N.; Fan, J.; Wang, W.; Wu, J.; Jiang, Y.; Xie, L.; Fan, R. Computer vision for road imaging and pothole detection: A state-of-the-art review of systems and algorithms. Transp. Saf. Environ. 2022, 4, 1–16.

- Lu, C.; Tang, X. Surpassing Human-Level Face Verification Performance on LFW with Gaussian Face. In Proceedings of the 29th AAAI National Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 3811–3819.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- McKinney, S.M.; Sieniek, M.; Godbole, V.; Godwin, J.; Antropova, N.; Ashrafian, H.; Back, T.; Chesus, M.; Corrado, G.S.; Darzi, A.; et al. International Evaluation of an AI System for Breast Cancer Screening. Nature 2020, 577, 89–94.

- Lu, J.W. Forklift Fatigue Driving Detection Algorithm Based on Knowledge Distillation. Agric. Equip. Intell. Technol. 2024, 3, 21–24.

- Gou, J.; Yu, B.; Maybank, S.J.; Tao, D. Knowledge Distillation: A Survey. Int. J. Comput. Vis. 2021, 129, 1789–1819.

- Sun, W.; Xu, W.; Wang, C. Intelligent Surgical Speech Recognition Based on YAMNet Transfer Learning. Mod. Inf. Technol. 2024, 8, 61–65.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked Autoencoders Are Scalable Vision Learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16000–16009.

- Guo, H.; Zheng, K.; Fan, X.; Yu, H.; Wang, S. Visual Attention Consistency Under Image Transforms for Multi-Label Image Classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 729–739.

- Guo, M.-H.; Xu, T.-X.; Liu, J.-J.; Liu, Z.-N.; Jiang, P.-T.; Mu, T.-J.; Zhang, S.-H.; Martin, R.R.; Cheng, M.-M.; Hu, S.-M. Attention Mechanisms in Computer Vision: A Survey. Comput. Vis. Media 2022, 8, 331–368.

- Guo, M.H.; Lu, C.Z.; Liu, Z.N.; Cheng, M.; Hu, S. Visual Attention Network. arXiv 2022, arXiv:2202.09741.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Cao, W.; Liu, Q.; He, Z. Review of pavement defect detection methods. IEEE Access 2020, 8, 14531–14544.

- Cao, J.G.; Yang, G.T.; Yang, X.Y. Deep learning-based pavement crack detection with attention mechanism. J. Comput.-Aided Des. Comput. Graph. 2020, 32, 1324–1333.

- Ren, M.; Zhang, X.; Chen, X.; Zhou, B.; Feng, Z. YOLOv5s-M: A deep learning network model for road pavement damage detection from urban street-view imagery. Int. J. Appl. Earth Obs. Geoinf. 2023, 120, 103335.

- Hu, X.W.; Yan, Y.X.; Wang, D.W.; Zhang, Y.H. Lightweight pavement defect detection method based on YOLOM algorithm. China J. Highw. Transp. 2024, 382–391.

- Guo, G.; Zhang, Z. Road damage detection algorithm for improved YOLOv5. Sci. Rep. 2022, 12, 1–12.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. In Proceedings of the Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 10–16 December 2023.

- Wang, A.; Chen, H.; Lin, Z.; Han, J.; Ding, G. RepViT: Revisiting mobile CNN from ViT perspective. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 15909–15920.

- Zheng, Q.; Saponara, S.; Tian, X.; Yu, Z.; Elhanashi, A.; Yu, R. A real-time constellation image classification method of wireless communication signals based on the lightweight network MobileViT. Cogn. Neurodyn. 2023, 18, 659–671.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12993–13000.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding box regression loss with dynamic focusing mechanism. arXiv 2023, arXiv:2301.10051.

- Arya, D.; Maeda, H.; Ghosh, S.K.; Toshniwal, D.; Omata, H.; Kashiyama, T.; Sekimoto, Y. Crowdsensing-based road damage detection challenge (CRDDC’2022). In Proceedings of the 2022 IEEE International Conference on Big Data (Big Data), Osaka, Japan, 17–20 December 2022; pp. 6378–6386.

- Seo, D.-M.; Woo, H.-J.; Kim, M.-S.; Hong, W.-H.; Kim, I.-H.; Baek, S.-C. Identification of asbestos slates in buildings based on faster region-based convolutional neural network (faster R-CNN) and drone-based aerial imagery. Drones 2022, 6, 194.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision – ECCV 2016, 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Li, T.; Li, G. Road Defect Identification and Location Method Based on an Improved ML-YOLO Algorithm. Sensors 2024, 24, 6783.

- Fu, J.Y.; Zhang, Z.J.; Sun, W.; Zou, K. Improved YOLOv8 Small Target Detection Algorithm in Aerial Images. Comput. Eng. Appl. 2024, 60, 100–109.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).