Abstract

Industrial railway monitoring systems require precise understanding of 3D scenes, typically achieved using deep learning models for 3D point cloud segmentation. However, real-world applications demand these models to rapidly adapt to infrastructure upgrades and diverse environmental conditions across regions. Conventional deep learning models, which rely on large-scale annotated datasets for training and are evaluated on test sets that are drawn independently and identically from the training distribution, often fail to account for such real-world changes, leading to overestimated model performance. Recent advancements in few-shot learning, which aim to develop generalizable models with minimal annotations, have shown promise. Motivated by this potential, the paper investigates the application of few-shot learning to railway monitoring by formalizing three types of distributional shifts that are commonly encountered in such systems: (a) in-domain shifts caused by sensor noise, (b) in-domain out-of-distribution shifts arising from infrastructure changes, and (c) cross-domain out-of-distribution shifts driven by geographical variations. A systematic evaluation of few-shot learning’s adaptability to these shifts is conducted using three performance metrics and a predictive uncertainty estimation metric. Extensive experimentation demonstrates that few-shot learning outperforms fine-tuning and maintains strong generalization under in-domain shifts with only ~1% performance deviation. However, it experiences a significant drop in performance under both in-domain and cross-domain out-of-distribution shifts, pronounced when dealing with previously unseen infrastructure classes. Additionally, we show that incorporating predictive uncertainty estimation enhances few-shot learning applicability by quantifying the model’s sensitivity to distributional shifts, offering valuable insights into the model’s reliability for safety-critical applications.

1. Introduction

Railways are among the busiest modes of global transportation and are essential for efficiently moving people, freight, and containers across regions. They frequently require rapid and consistent maintenance to ensure uninterrupted operations, resulting in substantial maintenance costs, time investment, and reliance on manual labor [1,2,3]. Automated monitoring systems can address these challenges by offering a viable and rapid solution while also reducing the reliance on manual labor and the associated costs. Such systems necessitate an accurate understanding of 3D scenes, which is typically achieved by utilizing deep learning models for point cloud segmentation [4,5]—a task that involves classifying each point into its corresponding category, thereby dividing the point cloud into semantically meaningful regions. Most of these models are constrained by the requirement of large-scale annotated data for training. Creating such annotations for railway point cloud data is particularly challenging as it involves cumbersome manual labor and is time-consuming, expensive, and error-prone due to the intricate geometrical structures of the objects involved. Furthermore, their performance is typically evaluated on a test set that is identically and independently distributed (i.i.d.) relative to the training set, often causing overestimation of their actual effectiveness.

The i.i.d. assumption is often violated in real-world applications, potentially leading to catastrophic outcomes in safety-critical systems such as railway monitoring, where accuracy in decision-making is of paramount importance. Gawlikowski et al. [6] attributed the failure of deep learning models in real-world deployment for safety-critical systems to their inability to distinguish between in-domain (ID) and out-of-distribution (OOD) samples, their sensitivity to distributional shifts, and their lack of reliable uncertainty estimation. Railway monitoring presents a complex and dynamic landscape, often resulting in distributional shifts. For example, maintenance activities can introduce new types of infrastructure as part of an upgrade process, leading to the emergence of novel classes not encountered during the model’s training phase. Additionally, timely detection and removal of vegetation are crucial for ensuring smooth operations, but vegetation characteristics vary significantly across regions. Furthermore, railway environments differ substantially based on geographical location, encompassing variations in infrastructure types, weather conditions, and environmental settings, such as rural versus urban areas. These scenarios, characterized by significant variations between training and test conditions [7,8,9], are referred to as OOD scenarios. A model designed for railway monitoring is expected to generalize effectively under such OOD conditions. However, in practice, this often results in unreliable predictions [6,10]. Consequently, assessing a model’s generalization capability—both in terms of performance and uncertainty in its decisions—becomes a cornerstone for model selection and their reliable deployment.

The scarcity of large-scale point-wise annotations for 3D point clouds and the critical need for effective generalization motivate this paper exploring few-shot learning (FSL) [11,12,13]—a relatively new deep learning paradigm that aims to develop models that are capable of generalizing to unseen novel classes using only a minimal amount of labeled data. In recent years, FSL has demonstrated significant potential in the image and text domains [14,15]. However, its application to 3D point clouds remains relatively limited. Although some works have explored FSL for point cloud segmentation in indoor environments [16,17,18,19,20,21,22,23,24,25,26,27], its use in railway environments is still largely under-explored. In contrast to controlled indoor environments with generally dense point clouds, railway environments present far more challenging scenarios: inherent environmental and sensor noises, unintentional occlusions, variations in lighting conditions, and significant variations in geometric shapes within the same class (e.g., vegetation). Long-range LiDAR scanners used for railway monitoring often collect data over extended distances, resulting in sparse points in many regions within the point cloud compared to the shorter-range detailed high-resolution scanning in indoor environments. Additionally, the railway environment has fewer features, but these features are much larger and more spread out compared to the smaller, more numerous objects found indoors.

This paper investigates the generalization capability of FSL under real-world distributional shifts encountered in railway monitoring systems, contributing to a deeper understanding of model effectiveness in decision-making for safety-critical applications. To achieve this, we formalize three types of distributional shifts: (a) ID shift, (b) in-domain OOD shift, and (c) cross-domain OOD shift, further detailed in Section 2.2. A systematic evaluation is conducted using three performance metrics, along with a predictive uncertainty estimation metric to assess model sensitivity by quantifying prediction uncertainties. As demonstrated through experimental validation, this evaluation provides valuable insights into model development, selection, and deployment for reliable railway monitoring systems. Although prior studies have separately explored distributional shifts and uncertainty estimation in point cloud segmentation, to the best of our knowledge, this is the first to comprehensively evaluate few-shot segmentation for railroad monitoring under real-world distributional shifts.

The structure of the paper is as follows: Section 2 presents a comprehensive review of the related works, providing background, context, and the problem setup. Section 3 outlines the materials and methods used in the study. In Section 4, we detail the experimental setup. Section 5 delves into an in-depth analysis of the results, examining the key findings. Section 6 offers a discussion of the implications and relevance of the study’s outcomes. In Section 8, the conclusion is presented.

2. Background

2.1. Related Work

Three-dimensional point cloud segmentation for railroad infrastructure. Earlier works focused on developing heuristic-based methods [28,29,30,31,32,33] to detect railway infrastructure by exploiting underlying geometrical or morphological patterns in 3D point clouds. Sánchez-Rodríguez et al. [34] integrated heuristics with an SVM classifier—a classical machine learning technique to detect rail tracks, cantilever arms, power line wires, and lining in railway tunnels. These methods are based on simple predefined rules derived from domain knowledge, making them computationally efficient and requiring minimal data. However, they heavily rely on handcrafted features designed by human experts, which restricts their application to simple detection tasks, typically involving regular, well-defined linear or circular objects. Furthermore, their adaptability is limited as they struggle to handle variations such as environmental changes, outliers, scaling, or noise.

The advancements in deep learning for point cloud segmentation prompted Soilán et al. [35] to explore two well-known point cloud segmentation networks, PointNet [36] and KP-Conv [37], for railway tunnel detection, achieving performance on par with the heuristic-based approach proposed by Sánchez-Rodríguez et al. [34]. PointNet, a pioneering work by Qi et al. [36], is the first network that directly inputs unstructured point clouds into a neural network, learning per-point features through shared multi-layer perceptrons and pooling functions. KP-Conv introduces a flexible and deformable convolutional operator that applies point convolutions to kernel points and their neighbors in Euclidean space. Chen et al. [38] applied a multi-scale Hierarchical Conditional Random Field (HiCRF) model to classify electrification assets in railway infrastructure by capturing spatial relationships. FarNet [39] introduced an attention module to aggregate spatial attention into feature information, learning from the spherical projection of point clouds. Ton et al. [40] adopted PointNet++ [41], SuperPoint Graph [42], and Point Transformer [43] for the semantic segmentation of railway infrastructure in catenary arches. PointNet++ extends PointNet by incorporating a grouping function to capture richer feature information, while SuperPoint Graph leverages object contextual relationships to partition point clouds into superpoint graphs, with each superpoint embedded via PointNet and processed by a graph convolutional network. Point Transformer incorporates self-attention layers for enhanced feature learning. However, it is computationally intensive, as is SuperPoint Graph. Kharroubi et al. [44] used KP-Conv, LightGBM, and Random Forest for semantic segmentation in railway environments. These models, trained in a supervised manner, address the limitations of heuristic-based approaches by automatically extracting meaningful features from data, enabling their application to complex high-dimensional railway segmentation problems, albeit with higher computational complexity and a significant requirement for labeled data. However, as typically evaluated on a test set drawn i.i.d. from the same distribution as the training data, these models do not account for the real-world variations encountered in railway environments, often resulting in an overestimation of model performance. Additionally, these works overlook uncertainty quantification in the model’s predictions, which is crucial for accurate decision-making, especially in identifying highly uncertain samples for further review by human counterparts.

Distributional shifts and OOD conditions in point clouds. Distributional shifts in point clouds can be categorized into ID shifts and OOD shifts. ID shifts arise from minor variations in the test set relative to the training set, characterized by positional, rotational, and scaling disturbances, along with jitter, outliers, sparsity, and corruptions. TriangleNet [45] addresses these shifts in point cloud classification by utilizing arbitrary SO(3) rotations, demonstrating high performance even with sparse point clouds using as few as 16 points sampled for each object. PointASNL [46] introduces adaptive sampling and a local–nonlocal module to handle inherent noise and outliers in point clouds. RobustPointSet [47] proposes a benchmark for evaluating point cloud classifiers on transformations, such as noise, rotation, sparsity, and translation. DUP-Net [48] and PointGuard [49] leverage adversarial point addition and deletion attacks by randomly adding and removing points in input data to design models that are robust to ID shifts. Additionally, Dong et al. [50] leveraged the relative positions of local features, and Sun et al. [51] emphasized learning local features through self-supervision.

In contrast to ID shifts, OOD shifts occur when the test data distribution diverges significantly from the training data, often involving previously unseen classes or environments. Veeramacheneni and Valdenegro-Toro [52] established a benchmark for semantic segmentation utilizing Semantic3D, which consists of outdoor scenes, and S3DIS, comprising indoor scenes. Bhardwaj et al. [53] employed knowledge distillation for object classification, while Riz et al. [54] applied online clustering and uncertainty quantification to generate prototypes for pseudo-labeling point clouds of previously unseen classes. The research on object detection [55,56,57], instance segmentation [58], and semantic segmentation [57] in outdoor environments for autonomous driving has focused on enhancing robustness against distributional shifts caused by previously unseen and unknown objects. The 3DOS benchmark [59] evaluates models, encompassing both in-domain scenarios (synthetic-to-synthetic and real-to-real) and cross-domain scenarios (synthetic-to-real). Research dedicated to OOD shifts using FSL for 3D point clouds remains limited. The BelHouse3D benchmark [60] examines FSL under such shifts in indoor scene segmentation, identifying inherent point cloud occlusion that alters the shapes and context of 3D household objects. Unlike prior works, our study focuses on investigating a wide range of shifts that are particularly encountered in railway environments.

2.2. Notation and Problem Setup

Assume a point cloud, , which is an unordered set of n points in Euclidean space. Each point is a vector representing the i-th point in three-dimensional space and corresponds to one of the l target labels, . Let denote a deep neural network with learnable parameters . We train the model on a labeled training set, , to evaluate its generalization capability on an evaluation (test) set, . Conditioned on , we formulate distributional shifts to represent the types of changes encountered by real-world railway monitoring systems as follows:

- ID shift: is constructed by sampling point clouds, drawn i.i.d. from the same distribution as the training set, , and then adding random noise, ,simulates sensor noise as represented by variations in object positions and orientations. We employ three types of noise in our transformations: jitter, mirroring, and rotation. Jitter adds Gaussian noise to the point cloud :where , , and represent a standard normal distribution sampled for each point in Mirroring randomly reflects the point cloud along the x-axis and y-axis. Rotation rotates the point cloud around the z-axis by an sampled from a uniform distribution,

- In-domain OOD shift: consists of samples from classes that were not included in the training phase, taking into account a dataset, D, which encompasses classes. The training set, , contains samples from j classes, referred to as base classes, denoted as , where In contrast, includes samples from the remaining classes, designated as novel classes, The base and novel classes are contextually distinct and mutually exclusive, ensuring that . This situation represents an OOD shift within the same domain, characterized by the condition . This shift is indicative of the emergence of new classes during upgrades to railway infrastructure.

- Cross-domain OOD shift: is constructed by sampling test samples from a dataset, , that is entirely distinct from , which was utilized to create the training set, The datasets, and , are sourced from different distributions, resulting in an OOD shift due to domain differences, expressed as For simplicity, our experimental setup assumed that and share the same set of classes, . This shift reflects variations arising from differences in railway environments across diverse geographical regions, such as Asia and Europe, or among various countries.

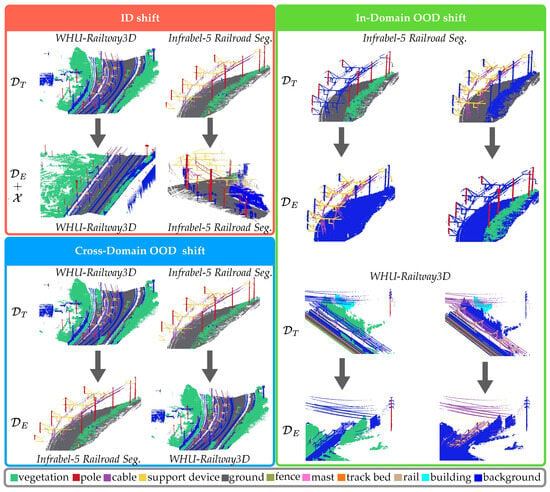

Figure 1 presents an overview of the distributional shifts, as captured by the datasets used in our experimental setup.

Figure 1.

The experimental setup illustrates three types of distributional shifts: (i) ID shift (red box), (ii) in-domain OOD shift (green box), and (iii) cross-domain OOD shift (blue box) using the Infrabel-5 Railroad Segmentation dataset and the WHU-Railway3D dataset. Class labels are shown in the gray box. Rows represent the training () and evaluation () sets for each shift type.

3. Materials and Methods

3.1. Few-Shot Learning

FSL is closely related to the concept of generalization over test tasks, where a learned model is expected to adapt to new tasks, provided with minimal supervision at test time. This concept mimics the learning capabilities inherent in human intelligence. For instance, a child can identify a zebra in a zoo after seeing only a few pictures of zebras in a book, even without direct prior exposure to the animal. Theories from cognitive psychology suggest that humans and animals learn by capturing the regularities and commonalities among categories of objects, forming internal representations, defined as prototypes [61,62,63,64,65,66,67,68]. These prototypes are then used to categorize an unseen object as belonging to a particular category (or not).

We define FSL on 3D point cloud segmentation tasks for railway environments under distributional shifts to study a model’s generalization capability at test time. Each task samples K labeled examples and q test examples from an example space to constitute a pair of support–query sets for N chosen classes, known as an N-way K-shot task. FSL aims to train the model from Section 2.2 over the distribution of tasks, , which samples pairs from during the meta-training phase. The goal is to generalize to a distribution of previously unseen tasks, , consisting of pairs sampled from the test domain. Since the problem essentially becomes a transfer learning task under OOD shifts, we assume that minimal supervision is available through a few labeled samples from the test domain, which provide prior knowledge about the domain. These labeled examples are used to construct for , while comprises the test samples to be evaluated. This is effectively equivalent to . Under this setting, we characterized the distributional shifts (see Section 2.2) as follows:

- ID shift: and are defined over C classes within a dataset D. is drawn i.i.d. from the same distribution as the with the inclusion of random noise. Consequently, and share the same underlying distribution, such that .

- In-domain OOD shift: and are defined over and classes within a dataset D, respectively. To ensure and are predefined rather than being chosen randomly.

- Cross-domain OOD shift: and are defined for C shared classes from two distinct datasets, and , respectively. The difference in underlying distributions ensures that .

To incorporate the prototypical view, we employ Prototypical Networks [69], a commonly used metric-based method for FSL. They utilize initialized with a pretrained weight to compute the feature representation for each point in ,

In an N-way K-shot task, the prototype for each foreground class is computed as the mean embedding of all points within that class given K examples from . The background prototype is calculated as the mean embedding of points that do not belong to any of the N classes.

Given a query point cloud, , the probability for each point to belong to a class, } is obtained by applying a softmax over the distances to each class prototype:

where represents the total number of classes, including N foreground classes and 1 background class, is the predicted class label for point , and denotes the similarity/distance function, which in our experiments is implemented as the cosine distance.

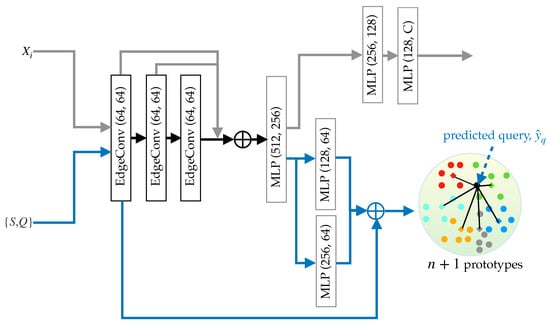

Figure 2 presents our pipeline for point cloud segmentation in railway monitoring, illustrating the pretraining process and FSL. We employ DGCNN [70], pretrained on a subset of WHU-Railway3D dataset, to extract support and query features using Equation (3). DGCNN constructs a local neighborhood graph for each point using k-Nearest Neighbors (k-NN) and applies a convolutional operation to the edges via EdgeConv layers to capture local geometric structures, crucial in railroad segmentation. The network aggregates features for each point by pooling the edge features from each subsequent EdgeConv layer. These aggregated features are then input into multi-layer perceptrons to produce global feature representations at different scales, which are combined to enhance the overall representation. The graph in DGCNN is dynamic as it is recomputed at every layer, enabling the network to adjust neighborhood relationships based on the learned features at each stage and facilitating learning complex and non-linear relationships within point clouds.

Figure 2.

Overview of our pipeline. The network is trained on input point clouds () using batches from a subset of the WHU-Railway3D dataset in a supervised manner (top part, connected via gray arrows). The trained network is then utilized as a feature extractor for FSL given a pair of support–query sets (bottom part, connected via blue arrows). Specifically, we illustrate a 5-way task, producing five foreground prototypes (depicted in red, green, blue, yellow, and sky blue) and one background prototype (depicted in gray). The query feature is then classified to the nearest prototype based on cosine distance.

We conducted experiments using Transformer-based models, specifically Point Transformers v1, to be used as the pretrained network. Observations indicate that the model tends to overfit on small datasets like those used in our experiments. Furthermore, recent work [71] comparing DGCNN with Transformer-based models, particularly Convolutional Point Transformer (CpT), reports closely comparable performance. This finding further reinforces our decision to prioritize the computationally efficient DGCNN architecture.

3.2. Datasets

Infrabel-5 Railroad Segmentation dataset. The Infrabel-5 Railway Segmentation dataset consists of data from 8 railway tracks, each containing 8 million raw points, collected across various cities in Belgium. The data collection process is administered by Infrabel (a Belgian government-owned public limited company responsible for the installation, upgrading, and maintenance of railway infrastructure). The point clouds, represented by x, y, and z coordinates, are captured using a Z+F 9012 LiDAR-based MMS system. The raw data were shared with the authors under a confidentiality agreement and were also used in our earlier work [72]. The data were initially filtered by removing outliers using the Statistical Outlier Removal (SOR) filter within the CloudCompare software (available at https://www.danielgm.net/cc/, (accessed on 20 December 2024)), followed by manual annotation using the software’s built-in annotation tool. The filtered dataset contains approximately 4 million points per railway track, classified into 5 object classes: cable, cable holder (support device), pole, vegetation, and ground. Any remaining points are categorized as clutter. Although this dataset accurately represents the highly imbalanced class scenarios common in real-world railway environments, it does not include RGB information.

WHU-Railway3D dataset. The WHU-Railway3D dataset [73] is a publicly available collection of 3D point clouds, represented by x, y, and z coordinates, capturing railroad infrastructure across urban, rural, and plateau environments. An Optech Lynx MMS system captured 675 million points over 10.7 km in urban areas. In rural environments, a HiScan-Z MMS system captured 2775 million points across 10.6 km, while a rMMS system collected 362 million points over 10.4 km in plateau areas. The maximum valid range for urban data is 250 m with an outlier tolerance of 0.2%, and the average point density is 2000 points/m2. The rural and plateau data have a maximum valid range of 119 m with an outlier tolerance of 0.1%. The average point density is 9000 points/m2 in rural areas and 500 points/m2 in plateau regions. Across all environments, the point position accuracy is 5 cm. The dataset spans over 30 km with 3.9 billion points, manually annotated into 10 classes: rail, track bed, mast, support device, overhead line (cable), fence, pole, vegetation, building, and ground. Notably, the dataset lacks RGB information.

4. Experiments

Our experiments evaluated the generalization capability of the FSL model under distributional shifts formulated in Section 2.2 for 3D point cloud segmentation in railroads. The experimental setup for distributional shifts, including data splits and class configurations for training and evaluation, is presented in Table 1.

Table 1.

Dataset settings and class configurations used in our experiments on distributional shifts. The experiments utilize the Infrabel-5 Railroad Segmentation (Infrabel-5) dataset from Belgium (BEL) and the WHU-Railway3D (WHU) dataset from China (CHN).

First, we assessed the model under a no-shift scenario, which replicates the ID shift scenario without introducing additional noise () to the evaluation set (. This scenario serves as the baseline for comparison with distributional shifts. For ID shifts, the trained model is evaluated on for both datasets, incorporating jitter, mirroring, and rotation (Equation (1)).

For the in-domain OOD shift, the model was evaluated on two class-based configurations: and for both datasets, while the remaining classes were used during the training phase. For the cross-domain OOD shift, the model was trained on the WHU-Railway3D dataset and evaluated on the Infrabel-5 Railroad Segmentation dataset and vice versa. Although both datasets share the same classes—pole, vegetation, ground, cable, and support device—in our experimental setup, they significantly differ in terms of distributions. We do not include the clutter class from both datasets in FSL training.

4.1. Evaluation Metrics

Performance metrics. Three metrics, mean Intersection over Union (mIoU), Overall Accuracy (OA), and Matthews Correlation Coefficient (MCC), are employed to assess the model’s performance. Given the four categories of the confusion matrix—true positive (), false positive (), true negative (), and false negative ()—these metrics are defined as follows:

- mIoU: mIoU measures how well each class is segmented by measuring the overlap between predicted and ground-truth points for each class.Note that we ignore the background class for our evaluation.

- OA: OA measures the ratio of correctly classified points over the total number of points, regardless of classes, thereby reflecting the overall correctness of the model’s predictions.

- MCC: In a class imbalance scenario, the OA can present overly optimistic model performance evaluations. In contrast, MCC provides a more equitable assessment of classification models. It yields a high score only when the predictions are accurate across all four categories of the confusion matrix—, , , and —while also considering the relative sizes of both positive and negative classes within the dataset. This makes the MCC a robust metric for performance evaluation, particularly in imbalanced datasets.This metric ranges from −1, indicating total disagreement, to 1, representing perfect prediction.

Uncertainty metric. Entropy quantifies the model’s performance in terms of probabilistic predictions, providing insights into the model’s uncertainty in its predictions. Given a point, entropy is defined as,

where denotes the probability that a point belongs to class, . We report the mean entropy (mH), which calculates the average entropy across all points in the evaluation set. A lower mean entropy value indicates higher model confidence in its predictions.

4.2. Implementation Details

During the pretraining phase, the model is trained for 200 epochs on the WHU-Railway3D dataset using the Adam optimizer with a learning rate of and L2 regularization of . The learning rate is reduced by a factor of 0.5 after 100 epochs. A batch size of 16 is used. For episodic training in FSL, 40K episodic tasks were sampled from . The Adam optimizer was employed with a learning rate of , which was halved every 5000 episodes. The trained model was evaluated on 1500 test tasks sampled from . An NVIDIA Tesla V100 GPU with 32 GB of memory was used for training. The code was implemented in PyTorch and, along with the pretrained model, is publicly available at https://gitlab.kuleuven.be/eavise/point-clouds/dist_shift_railroads_fsl.git, (accessed on 1 February 2025).

To efficiently utilize limited computational resources, we adopted the data preprocessing strategy from PointNet, which employs a 3D sliding cubic window of size to subdivide a large point cloud into smaller cubic blocks. This sampling approach has also been utilized in PointNet++, DGCNN, and many others. In our case, , , and z equals the maximum height (in meters) recorded in the point cloud. This process captures points within cubic blocks, from which we randomly sample 2048 points as input to the network. We also conducted preliminary experiments using 1024 and 4096 points. Using 2048 points enabled the model to capture more representative features compared to 1024. However, increasing the number of points to 4096 did not yield significant performance benefits. Thus, 2048 points provided a favorable trade-off between computational efficiency and model performance.

5. Experimental Results and Analysis

We conducted a comprehensive evaluation of our model across four scenarios: no-shift, ID shift, in-domain OOD shift, and cross-domain OOD shift, with no-shift serving as the baseline. The model performance is quantified using the following performance metrics: mIoU, OA, and MCC. Additionally, the model’s predictive uncertainty is assessed using mH. The average performance over 1500 test tasks is reported as the experimental results, implementing one-way twenty-shot tasks (i.e., ) for no-shift and ID-shift. For in-domain OOD shift and cross-domain OOD shift scenarios, the results are reported for one-way (i.e., ) tasks with five, ten, and twenty shots (i.e., ). Additionally, in the cross-domain OOD shift scenario, the results are also reported for a five-way task setting (i.e., ) with five, ten, and twenty shots. A one-way task corresponds to a binary segmentation problem involving a single foreground class, while a five-way task represents a multi-class segmentation problem involving five foreground classes.

5.1. Quantitative Results

Table 2 presents the experimental results for the ID shift using the Infrabel-5 Railroad Segmentation dataset and the WHU-Railway3D dataset compared against the baselines under the no-shift scenario. The results indicate that the baselines achieved the highest performance and the lowest predictive uncertainty, with mIoU values of 89.1% and 83% and OA values of 92.4% and 89% for the Infrabel-5 Railroad Segmentation dataset and the WHU-Railway3D dataset, respectively. Under the ID shift scenario, with the application of jitter, mirroring, and rotation, the model’s performance remained comparable to the baselines, deviating by ~1% across all the metrics. These findings emphasize the strong generalization capability of FSL under minimal variations in evaluation conditions.

Table 2.

Quantitative results under ID shift, where = {jitter, mirroring, rotation} applied to evaluation set. The first rows for both datasets with no (under no-shift scenario) serve as the baselines. Results are reported for 1-way 20-shot tasks. The best performance metrics are shown in bold, and the second-best are underlined. Only the model with the lowest predictive uncertainty is highlighted in bold.

Table 3 and Table 4 summarize the experimental results for the in-domain OOD shift using one-way tasks. Specifically, Table 3 reports the results for two evaluation settings with previously unseen classes from the Infrabel-5 Railroad Segmentation dataset: = {pole, vegetation} and = {cable, support device}. In the = {pole, vegetation} setting, the model achieved its best performance on twenty-shot tasks with 64.2% mIoU and 74.4% OA, representing ~7.5% mIoU and ~5.5% OA improvements over the second-best performance in ten-shot tasks. Conversely, in the = {cable, support device} setting, the model attained comparable performance across five- and twenty-shot tasks, with the highest performance observed in ten-shot tasks, ~1% better than in both other settings. The lowest predictive uncertainty was recorded in twenty-shot tasks for the = {pole, vegetation} and in five-shot tasks for = {cable, support device}.

Table 3.

Quantitative results for the in-domain OOD shift scenario based on two evaluation settings using the Infrabel-5 Railroad Segmentation dataset. Results are reported for . The highest performance metrics and the lowest predictive uncertainty for each setting are highlighted in bold. Higher values indicate better performance for mIoU, OA, and MCC (↑), while lower values indicate better performance for mH (↓).

Table 4.

Quantitative results for the in-domain OOD shift scenario based on two evaluation settings using the WHU-Railway3D dataset. Results are reported for . The highest performance metrics and the lowest predictive uncertainty for each setting are highlighted in bold.

Table 4 presents the results for the same evaluation settings as Table 3 but applied to the WHU-Railway3D dataset. In the = {pole, vegetation} setting, the model achieved its best performance on twenty-shot tasks with 76% mIoU and 78.4% OA, comparable to the results from ten- and twenty-shot tasks. For = {cable, support device}, the highest performance was achieved in twenty-shot tasks with 59.1% mIoU and 83.9% OA, showing ~2.5% mIoU improvement over the second-best result in ten-shot tasks. The lowest predictive uncertainty occurred in five-shot tasks for the first setting and in ten-shot tasks for the second.

Table 5 and Table 6 provide the experimental results for the cross-domain OOD shift, evaluated on the Infrabel-5 Railroad Segmentation dataset and the WHU-Railway3D dataset, respectively. In Table 5, the model achieved its best performance for the one-way setting in twenty-shot tasks, with 77.5% mIoU and 84.8% OA, comparable to the second-best performance in ten-shot tasks. The lowest predictive uncertainty is observed in the ten-shot tasks. For the five-way setting, the model attained its highest performance in twenty-shot tasks, achieving 50% mIoU and 70.6% OA, with ~3.2% improvements in mIoU over ten-shot tasks (second best) and ~4% OA improvements compared to five-shot tasks. The twenty-shot task setting generates the lowest predictive uncertainty.

Table 5.

Quantitative results under cross-domain OOD shift, with the WHU-Railway3D dataset as the training set and the Infrabel-5 Railroad Segmentation dataset as the evaluation set. The best performance metrics and lowest predictive uncertainty for are shown in bold.

Table 6.

Quantitative results under cross-domain OOD shift, with the Infrabel-5 Railroad Segmentation dataset as the training set and the WHU-Railway3D dataset as the evaluation set. The best performance metrics and lowest predictive uncertainty for are shown in bold.

In Table 6, the model achieved its best performance for the one-way setting in ten-shot tasks, with 71.7% mIoU and 82.4% OA, comparable to the results (<1%) for five-shot and twenty-shot tasks. The lowest predictive uncertainty is observed under the same shot setting. For the five-way setting, the model performed best in twenty-shot tasks, with 48% mIoU and 62.4% OA, showing ~2.5% mIoU and ~1.6% OA improvements compared to the second-best results achieved in ten-shot tasks. The lowest predictive uncertainty was observed in the twenty-shot tasks.

5.2. Qualitative Results

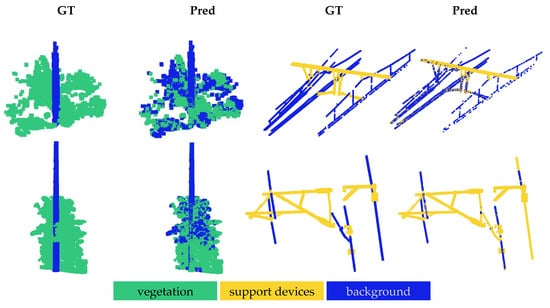

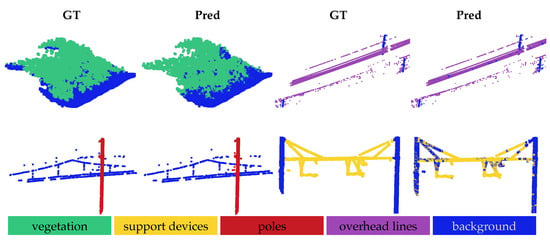

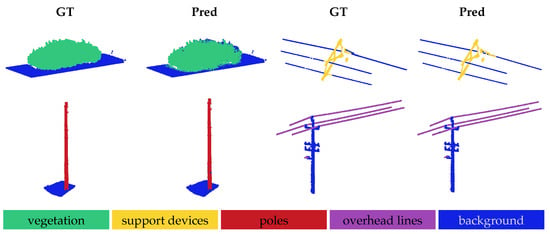

The qualitative results for the OOD shifts are illustrated in Figure 3, Figure 4 and Figure 5. In all the figures, the model was evaluated via a one-way five-shot setting, with smaller regions extracted from larger railroad areas for clear visualization. The ground truth is displayed in the ‘GT’ columns, while the model predictions are shown in the corresponding ‘Pred’ columns.

Figure 3.

Qualitative results for in-domain OOD shift. The first row shows results for the Infrabel-5 Segmentation dataset, while the second row corresponds to the WHU-Railway3D dataset. In each row, previously unseen foreground classes—vegetation and support device—are segmented from the background, illustrating the two evaluation settings.

Figure 4.

Qualitative results under cross-domain OOD shift, evaluated on previously unseen Infrabel-5 Railroad Segmentation dataset. Results are shown for the 1-way 5-shot setting, comparing each model prediction (Pred) to the ground truth (GT). From left to right in two rows, segmentation of four foreground object classes is illustrated: vegetation, overhead line, pole, and support device.

Figure 5.

Qualitative results under cross-domain OOD shift, evaluated on previously unseen WHU-Railway3D dataset. Results are shown for the 1-way 5-shot setting, comparing each model prediction (Pred) to the ground truth (GT). The first row illustrates two foreground object classes, vegetation and overhead line (from left to right), and the second row shows poles and support devices (from left to right).

Figure 3 illustrates the results for the in-domain OOD shift, showing the results for the Infrabel-5 Segmentation dataset in the first row and the WHU-Railway3D dataset in the second row. In the first two columns of each row, vegetation is segmented by the model that was trained on ground, cable, and support devices. In the last two columns, support devices are segmented using the model that was trained on ground, vegetation, and poles. The results indicate better segmentation of vegetation from poles in the WHU-Railway3D dataset compared to the Infrabel-5 dataset. Conversely, the model performed better at segmenting support devices from cables in the Infrabel-5 dataset, while some cables were misclassified as support devices in the WHU-Railway3D dataset. Overall, the figure highlights the model’s strong generalization to novel railway infrastructure classes despite receiving minimal supervision, with only five labeled support examples.

Figure 4 and Figure 5 present the qualitative results for the cross-domain OOD shift, evaluated on the Infrabel-5 Segmentation dataset and the WHU-Railway3D dataset, respectively, with the other dataset used for training. These datasets exhibit notable structural differences: the vegetation and poles are taller in the WHU-Railway3D dataset, while the support devices vary significantly in form. Both figures demonstrate that the model generalizes well to previously unseen distributions, although some challenges remain in segmenting vegetation (row 1, columns 1–2) and distinguishing support devices from cables (row 2, columns 3–4). Nonetheless, the model accurately segments poles and cables when presented against distinct backgrounds.

5.3. Ablation Study

Fine-tuning. Fine-tuning is often used to adapt a model to the test distribution when it significantly differs from the training distribution. We fine-tuned the pretrained model on the Infrabel-5 Railroad Segmentation dataset. The last two layers of the pretrained model were fine-tuned on the WHU-Railway3D dataset to better align the model with our selected evaluation classes. We employed the Adam optimizer with a learning rate of and weight decay of . Training and evaluation follow the same data splits as the ID shift (Table 1), with a batch size of 16. The training is conducted for 30 epochs.

Table 7 presents the fine-tuning results for our segmentation task. The results show that fine-tuning achieves more than 60% mIoU in both datasets, achieving 92.6% OA for the WHU-Railway3D dataset, which is 10% higher than the OA value for the Infrabel-5 Railroad Segmentation dataset.

Table 7.

Quantitative results on WHU-Railway3D dataset and Infrabel-5 Railroad Segmentation dataset using fully supervised setting.

6. Discussion

This work discusses the generalization of FSL under inevitable distributional shifts encountered in real-world railroad monitoring, arising from sensor noise (ID shift), infrastructure upgrades (in-domain OOD shift), and environmental variations across geographical regions (cross-domain OOD shift). The baseline performances achieved under the no-shift condition (Table 2), representing optimal similarity to the training conditions, provide an upper bound for comparison. The results for ID shift (Table 2), compared to the baselines, demonstrate minimal impact on model performance, with deviations of ~1%, highlighting FSL’s strong generalization ability under minor variations in evaluation conditions.

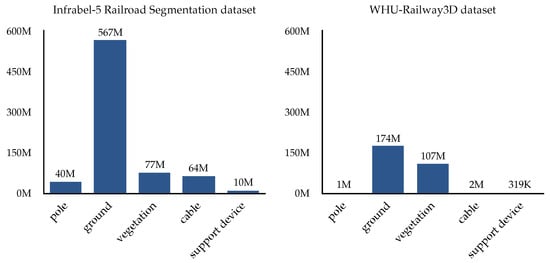

The in-domain OOD shift, which evaluated the model on novel, previously unseen classes from the same dataset as the training set, indicates a performance drop. For the Infrabel-5 Railroad Segmentation dataset, the {pole, vegetation} setting experienced a ~24.9% decrease in mIoU and 18.1% reduction in OA compared to the baseline (Table 3 vs. Table 2). In contrast, the {cable, support device} setting exhibited a 1.45% decline in mIoU with a comparable OA. For the WHU-Railway3D dataset (Table 4 vs. Table 2), the {pole, vegetation} setting demonstrates a ~7.4% mIoU and ~10.7% OA decrease, while the {cable, support device} setting shows a ~23.9% mIoU and ~5.1% OA decline. These performance differences across the datasets (Table 3 vs. Table 4) for the two evaluation settings are likely attributable to class imbalances between the datasets. Vegetation has higher representation in the WHU-Railway3D dataset, whereas cable and support device classes are more prevalent in the Infrabel-5 Segmentation dataset (Figure 6).

Figure 6.

Distribution of points per class, highlighting the highly imbalanced nature of our datasets: the Infrabel-5 Segmentation dataset (on the left) and the WHU-Railway3D dataset (on the right).

The cross-domain OOD shift with one-way tasks resulted in performance decreases of ~11.3–11.6% in mIoU and ~6.6–7.6% in OA when compared to the baselines (Table 5 and Table 6 vs. Table 2) for both datasets. Specifically, the mIoU and OA decreased by 11.6% and 7.65% on the Infrabel-5 Railroad Segmentation dataset and by 11.3% and 6.6% on the WHU-Railway3D dataset.

N-way K-shot tasks were examined for different N and K configurations. Under cross-domain OOD shifts, increasing to led to performance drops of 27.5% mIoU and ~14.2% OA on the Infrabel-5 Railroad Segmentation dataset, and ~23.7% mIoU and ~19.9% OA on the WHU-Railway3D dataset. These results align with existing FSL research, where the increased complexity of multi-class segmentation () tasks contributes to the observed performance disparity. Increasing K to 20 notably enhanced the model performance in some settings. For instance, in the in-domain OOD shift for the {pole, vegetation} setting on the Infrabel-5 Railroad Segmentation dataset, the mIoU and OA improved by ~12.1% and ~10%, respectively, compared to (Table 3). Similarly, for the {cable, support device} setting on the WHU-Railway3D dataset, the performance increased by ~2.5% in mIoU and ~1.6% in OA (Table 4). For cross-domain OOD shift using one-way tasks, improvements of over 4% in both mIoU (4.43) and OA (4.16) were observed compared to for the Infrabel-5 Segmentation dataset. Similar behavior was observed in the five-way tasks, where increasing K resulted in ~4-4.4% gains in both mIoU and OA across both the Infrabel-5 Segmentation dataset and WHU-Railway3D dataset (Table 5 and Table 6). However, in other scenarios, the number of support examples had a minimal effect on performance. While increasing K boosted the results, it occasionally introduced noise in some cases, leading to slight reductions in model performance.

This study underscores the importance of evaluating model performance using the MCC metric, which is particularly useful for identifying trivial majority classifiers. An MCC value of 0 indicates a model that predicts the majority class irrespective of input features [74]. Given the highly class-imbalanced nature of our datasets, MCC offers a more realistic assessment of model performance as it penalizes errors in minority classes more effectively than OA. The baseline results (Table 2) exhibit 82% agreement with the ground truth for the Infrabel-5 Segmentation dataset and 77% for the WHU-Railway3D dataset, while the corresponding OA values are higher, i.e., 92% and 89%, respectively. Similar trends are observed across all the experiments under distributional shifts. In those experiments involving one-way tasks, MCC consistently exceeded 60%, except in the in-domain OOD shift for the {pole, vegetation} setting, where it decreased to 47% for the Infrabel-5 Segmentation dataset and 39% for the WHU-Railway3D dataset, respectively.

The comparison between the FSL baselines under no-shift (Table 2) and fine-tuning (Table 7) shows that FSL achieved an 18.1% improvement in mIoU on the Infrabel-5 Railroad Segmentation dataset and a 21.3% improvement on the WHU-Railway3D dataset. Under cross-domain OOD shift with the one-way task setting (Table 5 and Table 6 vs. Table 7), FSL achieved 17.5% and 9.9% mIoU improvements on the Infrabel-5 Railroad Segmentation dataset and WHU-Railway3D dataset, respectively. However, such cross-domain OOD generalization with limited data is not inherent to fine-tuning as fine-tuning relies on the i.i.d. assumption and is evaluated on a test set drawn from the same distribution as the training data. Compared to fine-tuning, the FSL baselines (under no-shift) yielded a higher mH value on the WHU-Railway3D dataset. However, on the Infrabel-5 Railroad Segmentation dataset, mH was lower, suggesting that the model exhibits greater confidence when trained on a smaller dataset with FSL. Under cross-domain OOD shift, FSL resulted in higher mH values, reflecting increased uncertainty in unseen environments. Nevertheless, the model remains capable of generalizing beyond the i.i.d. assumption—an aspect where fine-tuning falls short. These findings further highlight the importance of incorporating predictive uncertainty measures, such as entropy, in the evaluation of FSL models.

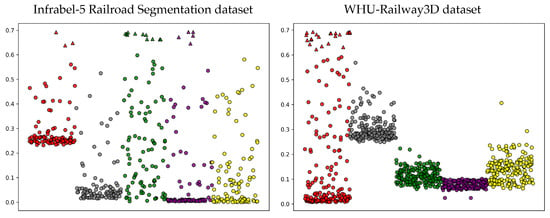

The entropy measure provides insights into the reliability of our model’s predictions by quantifying uncertainty, which is crucial for real-world applications such as railroad monitoring, where distributional shifts are inevitable. Since test data often lack ground truth for verification, quantifying predictive outcomes through entropy can support decision-making. When the model exhibits low confidence in its predictions, highly uncertain samples can be referred to human experts for further review. Figure 7 illustrates this for the Infrabel-5 Railroad Segmentation and WHU-Railway3D datasets under cross-domain OOD settings, highlighting highly uncertain samples for poles (red), ground (gray), vegetation (green), cables (purple), and support devices (yellow). For instance, three highly uncertain pole samples (red) in the Infrabel-5 Railroad Segmentation dataset could be flagged for human evaluation in critical decision-making scenarios. Moreover, in domain adaptation with FSL, mH provides essential insights: a high mH value indicates low model confidence under a new distribution, aiding in decisions such as model rejection, recalibration, or further fine-tuning.

Figure 7.

Sample-wise entropy plot for the first 100 samples of each class in the Infrabel-5 Railroad Segmentation dataset (left) and the WHU-Railway3D dataset (right) under cross-domain OOD shifts. Classes, poles, ground, vegetation, overhead lines, and support devices are represented in red, gray, green, purple, and yellow, respectively. Highly uncertain samples are represented as triangles.

7. Challenges and Future Prospects

Our work currently has certain limitations, primarily linked to the availability of only coordinates as features in our datasets. Segmenting vegetation from the ground solely based on x, y, z coordinates presents significant challenges as the most critical features often rely on height (z coordinates). In complex environments like railways, the height of the ground plane can vary, particularly between the track bed and its surroundings. Incorporating additional information, such as RGB color data, could enhance the model’s ability to learn more robust features. Furthermore, the development of more balanced training datasets is crucial for improving the effectiveness and generalization of machine learning models in railway monitoring. In the future, model calibration or active learning can be explored in conjunction with FSL for models under such distributional shifts.

8. Conclusions

Machine learning models are often evaluated under the i.i.d. assumption for training and test sets. However, this assumption does not hold true in dynamic real-world applications, particularly in complex railroad environments. In this work, we formulated distributional shifts that arise in railway environments due to sensor noise, infrastructure upgrades, or environmental variations across regions. We explored FSL for railway monitoring via the segmentation of 3D point clouds and evaluated its generalization capability under distributional shifts with minimal supervision at test time. We presented an extensive evaluation of the model performance, including an analysis and discussion, and assessed the model’s predictive confidence, emphasizing the crucial role of such evaluation in developing reliable models for real-world applications.

Author Contributions

Conceptualization, A.R.F.; methodology, A.R.F.; software, A.R.F. and M.L.; validation, A.R.F., M.L. and P.V.; formal analysis, A.R.F. and P.V.; investigation, A.R.F.; resources, P.V.; data curation, M.L.; writing—original draft preparation, A.R.F.; writing—review and editing, P.V.; visualization, A.R.F.; supervision, P.V.; project administration, P.V.; funding acquisition, P.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by VLAIO and KU Leuven Internal Fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The WHU-Railway3D dataset is publicly available from ‘WHU-Railway3D: A Diverse Dataset and Benchmark for Railway Point Cloud Semantic Segmentation’ at https://github.com/WHU-USI3DV/WHU-Railway3D/, (accessed on 5 January 2025). However, access to the Infrabel-5 dataset is restricted. This dataset was obtained from Infrabel, and access must be requested directly from them.

Acknowledgments

We sincerely thank Infrabel for providing the data.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Organisation for Economic Co-Operation and Development (OECD). Freight Transport, Indicator (Rail). 2022. Available online: https://www.oecd.org/en/data/indicators/freight-transport.html (accessed on 27 August 2024).

- Organisation for Economic Co-Operation and Development (OECD). Container Transport, Indicator (Rail). 2022. Available online: https://www.oecd.org/en/data/indicators/container-transport.html (accessed on 27 August 2024).

- Organisation for Economic Co-Operation and Development (OECD). Infrastructure Maintenance, Indicator (Rail). 2021. Available online: https://www.oecd.org/en/data/indicators/infrastructure-maintenance.html (accessed on 27 August 2024).

- Zhang, R.; Wu, Y.; Jin, W.; Meng, X. Deep-Learning-Based Point Cloud Semantic Segmentation: A Survey. Electronics 2023, 12, 3642. [Google Scholar] [CrossRef]

- Sarker, S.; Sarker, P.; Stone, G.; Gorman, R.; Tavakkoli, A.; Bebis, G.; Sattarvand, J. A comprehensive overview of deep learning techniques for 3D point cloud classification and semantic segmentation. Mach. Vis. Appl. 2024, 35, 67. [Google Scholar] [CrossRef]

- Gawlikowski, J.; Tassi, C.R.N.; Ali, M.; Lee, J.; Humt, M.; Feng, J.; Kruspe, A.; Triebel, R.; Jung, P.; Roscher, R.; et al. A survey of uncertainty in deep neural networks. Artif. Intell. Rev. 2023, 56, 1513–1589. [Google Scholar] [CrossRef]

- Bansak, K.C.; Paulson, E.; Rothenhäusler, D. Learning under random distributional shifts. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Palau de Congressos, Valencia, Spain, 2–4 May 2024; PMLR, 2024. pp. 3943–3951. [Google Scholar]

- Malinin, A.; Athanasopoulos, A.; Barakovic, M.; Cuadra, M.B.; Gales, M.J.; Granziera, C.; Graziani, M.; Kartashev, N.; Kyriakopoulos, K.; Lu, P.J.; et al. Shifts 2.0: Extending the dataset of real distributional shifts. arXiv 2022, arXiv:2206.15407. [Google Scholar]

- Data-Centric AI (DCAI), CSAIL, MIT. Class Imbalance, Outliers, and Distribution Shift. 2024. Available online: https://dcai.csail.mit.edu/2024/imbalance-outliers-shift/#:~:text=Distribution%20shift%20is%20a%20challenging,test%20(%20x%20%2C%20y%20)%20 (accessed on 28 August 2024).

- Fayjie, A.R.; Borah, J.; Carbone, F.; Tack, J.; Vandewalle, P. Predictive uncertainty estimation in deep learning for lung carcinoma classification in digital pathology under real dataset shifts. arXiv 2024, arXiv:2408.08432. [Google Scholar]

- Ravi, S.; Larochelle, H. Optimization as a model for few-shot learning. In Proceedings of the International Conference on Learning Representations, Palais des Congrès Neptune, Toulon, France, 24–26 April 2017. [Google Scholar]

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Wierstra, D. Matching networks for one shot learning. In Proceedings of the International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29, pp. 3637–3645. [Google Scholar]

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208. [Google Scholar]

- Song, Y.; Wang, T.; Cai, P.; Mondal, S.K.; Sahoo, J.P. A comprehensive survey of few-shot learning: Evolution, applications, challenges, and opportunities. ACM Comput. Surv. 2023, 55, 271. [Google Scholar] [CrossRef]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Proceedings of the International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Volume 33, pp. 1877–1901. [Google Scholar]

- Zhao, N.; Chua, T.S.; Lee, G.H. Few-shot 3D point cloud semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8873–8882. [Google Scholar]

- Chen, X.; Zhang, C.; Lin, G.; Han, J. Compositional prototype network with multi-view comparision for few-shot point cloud semantic segmentation. arXiv 2020, arXiv:2012.14255. [Google Scholar]

- Zhao, Z.; Wu, Z.; Wu, X.; Zhang, C.; Wang, S. Crossmodal few-shot 3D point cloud semantic segmentation. In Proceedings of the ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 4760–4768. [Google Scholar]

- Mao, Y.; Guo, Z.; Xiaonan, L.; Yuan, Z.; Guo, H. Bidirectional feature globalization for few-shot semantic segmentation of 3D point cloud scenes. In Proceedings of the International Conference on 3D Vision (3DV), Prague, Czech Republic, 12–15 September 2022; pp. 505–514. [Google Scholar]

- Wei, M. Few-shot 3D Point Cloud Semantic Segmentation with Prototype Alignment. In Proceedings of the International Conference on Machine Learning Technologies, Stockholm Sweden, 10–12 March 2023; pp. 195–200. [Google Scholar]

- Ning, Z.; Tian, Z.; Lu, G.; Pei, W. Boosting few-shot 3D point cloud segmentation via query-guided enhancement. In Proceedings of the ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 1895–1904. [Google Scholar]

- Guo, X.; Hu, H.; Yang, X.; Deng, Y. Enhancing Few-Shot 3D Point Cloud Semantic Segmentation through Bidirectional Prototype Learning. In Proceedings of the International Conference on Robotics and Artificial Intelligence, Singapore, 17–19 November 2023; pp. 7–16. [Google Scholar]

- Zhang, C.; Wu, Z.; Wu, X.; Zhao, Z.; Wang, S. Few-shot 3D point cloud semantic segmentation via stratified class-specific attention based transformer network. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 3410–3417. [Google Scholar]

- Xu, Y.; Hu, C.; Zhao, N.; Lee, G.H. Generalized few-shot point cloud segmentation via geometric words. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 21506–21515. [Google Scholar]

- He, S.; Jiang, X.; Jiang, W.; Ding, H. Prototype adaption and projection for few-and zero-shot 3D point cloud semantic segmentation. IEEE Trans. Image Process. 2023, 32, 3199–3211. [Google Scholar] [CrossRef] [PubMed]

- Xu, S.; Zhang, L.; Jiang, G.; Hua, Y.; Liu, Y. Part-Whole Relational Few-Shot 3D Point Cloud Semantic Segmentation. Comput. Mater. Contin. 2024, 78, 3021–3039. [Google Scholar] [CrossRef]

- Yang, S.; Ding, H.; Jiang, X. Generalized Few-Shot 3D Point Cloud Segmentation. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Singapore, 19–22 May 2024; pp. 1–5. [Google Scholar]

- Elberink, S.O.; Khoshelham, K.; Arastounia, K.; Benito, D.D. Rail track detection and modelling in mobile laser scanning data. In Proceedings of the ISPRS Workshop Laser Scanning 2013. International Society for Photogrammetry and Remote Sensing (ISPRS); pp. 223–228. Available online: https://isprs-annals.copernicus.org/articles/II-5-W2/223/2013/ (accessed on 28 August 2024).

- Yang, B.; Fang, L. Automated extraction of 3-D railway tracks from mobile laser scanning point clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4750–4761. [Google Scholar] [CrossRef]

- Zhu, L.; Hyyppa, J. The use of airborne and mobile laser scanning for modeling railway environments in 3D. Remote Sens. 2014, 6, 3075–3100. [Google Scholar] [CrossRef]

- Arastounia, M. Automated recognition of railroad infrastructure in rural areas from LiDAR data. Remote Sens. 2015, 7, 14916–14938. [Google Scholar] [CrossRef]

- Oude Elberink, S.; Khoshelham, K. Automatic extraction of railroad centerlines from mobile laser scanning data. Remote Sens. 2015, 7, 5565–5583. [Google Scholar] [CrossRef]

- Lamas, D.; Soilán, M.; Grandío, J.; Riveiro, B. Automatic point cloud semantic segmentation of complex railway environments. Remote Sens. 2021, 13, 2332. [Google Scholar] [CrossRef]

- Sánchez-Rodríguez, A.; Riveiro, B.; Soilán, M.; González-deSantos, L. Automated detection and decomposition of railway tunnels from Mobile Laser Scanning Datasets. Autom. Constr. 2018, 96, 171–179. [Google Scholar] [CrossRef]

- Soilán, M.; Nóvoa, A.; Sánchez-Rodríguez, A.; Riveiro, B.; Arias, P. Semantic segmentation of point clouds with pointnet and kpconv architectures applied to railway tunnels. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 2, 281–288. [Google Scholar] [CrossRef]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660. [Google Scholar]

- Thomas, H.; Qi, C.R.; Deschaud, J.E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. KPConv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6411–6420. [Google Scholar]

- Chen, L.; Jung, J.; Sohn, G. Multi-scale hierarchical CRF for railway electrification asset classification from mobile laser scanning data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3131–3148. [Google Scholar] [CrossRef]

- Wang, Z.; Yu, G.; Chen, P.; Zhou, B.; Yang, S. FarNet: An attention-aggregation network for long-range rail track point cloud segmentation. IEEE Trans. Intell. Transp. Syst. 2021, 23, 13118–13126. [Google Scholar] [CrossRef]

- Ton, B.; Ahmed, F.; Linssen, J. Semantic segmentation of terrestrial laser scans of railway catenary arches: A use case perspective. Sensors 2022, 23, 222. [Google Scholar] [CrossRef]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30. [Google Scholar]

- Landrieu, L.; Simonovsky, M. Large-scale point cloud semantic segmentation with superpoint graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4558–4567. [Google Scholar]

- Zhao, H.; Jiang, L.; Jia, J.; Torr, P.H.; Koltun, V. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 16259–16268. [Google Scholar]

- Kharroubi, A.; Ballouch, Z.; Hajji, R.; Yarroudh, A.; Billen, R. Multi-Context Point Cloud Dataset and Machine Learning for Railway Semantic Segmentation. Infrastructures 2024, 9, 71. [Google Scholar] [CrossRef]

- Xiao, C.; Wachs, J. Triangle-net: Towards robustness in point cloud learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 826–835. [Google Scholar]

- Yan, X.; Zheng, C.; Li, Z.; Wang, S.; Cui, S. PointASNL: Robust point clouds processing using nonlocal neural networks with adaptive sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5589–5598. [Google Scholar]

- Taghanaki, S.A.; Luo, J.; Zhang, R.; Wang, Y.; Jayaraman, P.K.; Jatavallabhula, K.M. Robustpointset: A dataset for benchmarking robustness of point cloud classifiers. arXiv 2020, arXiv:2011.11572. [Google Scholar]

- Zhou, H.; Chen, K.; Zhang, W.; Fang, H.; Zhou, W.; Yu, N. DUP-Net: Denoiser and upsampler network for 3D adversarial point clouds defense. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1961–1970. [Google Scholar]

- Liu, H.; Jia, J.; Gong, N.Z. PointGuard: Provably robust 3D point cloud classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6186–6195. [Google Scholar]

- Dong, X.; Chen, D.; Zhou, H.; Hua, G.; Zhang, W.; Yu, N. Self-robust 3D point recognition via gather-vector guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; 2020; pp. 11513–11521. [Google Scholar]

- Sun, J.; Cao, Y.; Choy, C.B.; Yu, Z.; Anandkumar, A.; Mao, Z.M.; Xiao, C. Adversarially robust 3D point cloud recognition using self-supervisions. In Proceedings of the International Conference on Neural Information Processing Systems, Virtual, 6–14 December 2021; Volume 34, pp. 15498–15512. [Google Scholar]

- Veeramacheneni, L.; Valdenegro-Toro, M. A Benchmark for Out of Distribution Detection in Point Cloud 3D Semantic Segmentation. arXiv 2022, arXiv:2211.06241. [Google Scholar]

- Bhardwaj, A.; Pimpale, S.; Kumar, S.; Banerjee, B. Empowering knowledge distillation via open set recognition for robust 3D point cloud classification. Pattern Recognit. Lett. 2021, 151, 172–179. [Google Scholar] [CrossRef]

- Riz, L.; Saltori, C.; Ricci, E.; Poiesi, F. Novel class discovery for 3D point cloud semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9393–9402. [Google Scholar]

- Huang, C.; Abdelzad, V.; Mannes, C.G.; Rowe, L.; Therien, B.; Salay, R.; Czarnecki, K. Out-of-distribution detection for LiDAR-based 3D object detection. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 4265–4271. [Google Scholar]

- Cen, J.; Yun, P.; Cai, J.; Wang, M.Y.; Liu, M. Open-set 3D object detection. In Proceedings of the International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 869–878. [Google Scholar]

- Kong, L.; Liu, Y.; Li, X.; Chen, R.; Zhang, W.; Ren, J.; Pan, L.; Chen, K.; Liu, Z. Robo3D: Towards robust and reliable 3D perception against corruptions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 19994–20006. [Google Scholar]

- Wong, K.; Wang, S.; Ren, M.; Liang, M.; Urtasun, R. Identifying unknown instances for autonomous driving. In Proceedings of the Conference on Robot Learning, PMLR, Virtual, 16–18 November 2020; pp. 384–393. [Google Scholar]

- Alliegro, A.; Cappio Borlino, F.; Tommasi, T. 3DOS: Towards 3D open set learning-benchmarking and understanding semantic novelty detection on point clouds. In Proceedings of the International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 21228–21240. [Google Scholar]

- Kumar, U.R.; Fayjie, A.R.; Hannaert, J.; Vandewalle, P. BelHouse3D: A Benchmark Dataset for Assessing Occlusion Robustness in 3D Point Cloud Semantic Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Milan, Italy, 29 September–4 October 2024. [Google Scholar]

- Homa, D.; Cultice, J.C. Role of feedback, category size, and stimulus distortion on the acquisition and utilization of ill-defined categories. J. Exp. Psychol. Learn. Mem. Cogn. 1984, 10, 83–94. [Google Scholar] [CrossRef]

- Donald, H.; Joseph, C.; Don, C.; David, G.; Steven, S. Prototype abstraction and classification of new instances as a function of number of instances defining the prototype. J. Exp. Psychol. 1973, 101, 116–122. [Google Scholar] [CrossRef]

- Minda, J.P.; Smith, J.D. Prototypes in category learning: The effects of category size, category structure, and stimulus complexity. J. Exp. Psychol. Learn. Mem. Cogn. 2001, 27, 775. [Google Scholar] [CrossRef] [PubMed]

- Minda, J.P.; Smith, J.D. Comparing prototype-based and exemplar-based accounts of category learning and attentional allocation. J. Exp. Psychol. Learn. Mem. Cogn. 2002, 28, 275–292. [Google Scholar] [CrossRef]

- Smith, J.D.; Minda, J.P. Prototypes in the mist: The early epochs of category learning. J. Exp. Psychol. Learn. Mem. Cogn. 1998, 24, 1411–1436. [Google Scholar] [CrossRef]

- Smith, J.D.; Redford, J.S.; Haas, S.M. Prototype abstraction by monkeys (Macaca mulatta). J. Exp. Psychol. Gen. 2008, 137, 390–401. [Google Scholar] [CrossRef] [PubMed]

- Posner, M.I.; Keele, S.W. On the genesis of abstract ideas. J. Exp. Psychol. 1968, 77, 353–363. [Google Scholar] [CrossRef] [PubMed]

- Minda, J.P.; Smith, J.D. Prototype models of categorization: Basic formulation, predictions, and limitations. In Formal Approaches in Categorization; Pothos, E.M., Wills, A.J., Eds.; Cambridge University Press: Cambridge, UK, 2011; pp. 40–64. [Google Scholar]

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30, pp. 4080–4090. [Google Scholar]

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph cnn for learning on point clouds. ACM Trans. Graph. (TOG) 2019, 38, 146. [Google Scholar] [CrossRef]

- Kaul, C.; Mitton, J.; Dai, H.; Murray-Smith, R. Convolutional point transformer. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 303–319. [Google Scholar]

- Fayjie, A.R.; Vandewalle, P. Few-shot learning on point clouds for railroad segmentation. Electron. Imaging 2023, 35, 1–5. [Google Scholar] [CrossRef]

- Urban Spatial Intelligence Research Group at LIESMARS, W.U. WHU-Railway3D: A Diverse Dataset and Benchmark for Railway Point Cloud Semantic Segmentation. 2023. Available online: https://github.com/WHU-USI3DV/WHU-Railway3D (accessed on 27 August 2024).

- Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).