1. Introduction

Research into sixth-generation (6G) networks has gained significant traction, particularly as the traffic continues its rapid evolution, reaching 5016 EB by 2030 toward more complex and demanding applications [

1]. Meanwhile, ongoing work on resource allocation in heterogeneous 5G networks emphasizes scalability, adaptive user provisioning, and emerging deployment challenges [

2], providing valuable insights into how future 6G systems can extend or refine these strategies. In parallel, aerospace integrated networks—often envisioned as a critical component for 6G—promise global connectivity through interconnected satellite constellations and airborne platforms [

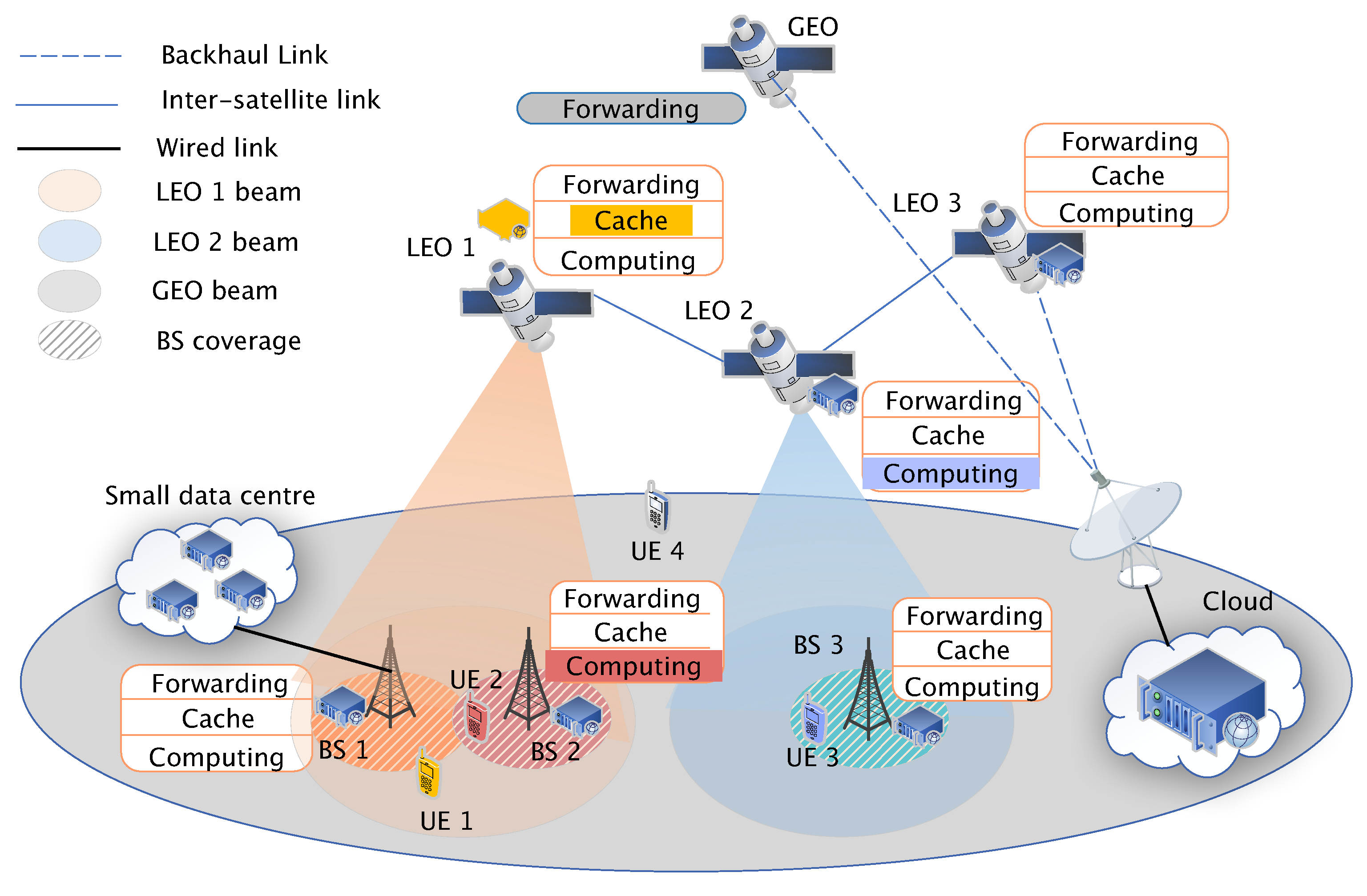

3]. LEO satellites not only provide computing and content retrieval services but are also capable of efficient data transmission, particularly in scenarios sensitive to time delays. In contrast, GEO satellites primarily function as data transmission relays, offering stable connectivity over wider areas. Building on these advances, our study presents a novel dual-layer satellite architecture designed to synchronize communication and computation resources across both LEO and GEO satellites, with the ultimate goal of empowering next-generation IoT services.

With the rapid advancement of science and technology, human activities are no longer limited to plains; they have expanded into deserts, oceans, the sky, and even outer space. However, these areas are characterized by wide distribution and high density, which makes information transmission challenging. Because of the broad spatial distribution, long transmission distances, and limited node capacity, traditional cellular systems can no longer meet the demand for real-time communication. Satellite communication, with its large capacity, long transmission distance, and independence from complex terrain, has gained increasing attention in recent years and offers new solutions to these issues [4]. Specifically, dual-layer satellite networks can further enhance the stability and reliability of satellite communication, as well as expand bandwidth and coverage. By integrating multi-layer satellite and ground networks, the geographical limitations of ground networks can be overcome, making heterogeneous satellite networks (HSN) a popular development direction in modern communication technology [5].

However, deploying HSN introduces critical challenges that require innovative solutions. Key issues include efficient resource allocation to meet diverse user demands, minimizing co-channel interference between LEO and GEO satellites in shared spectrum scenarios, and ensuring low-latency service delivery for applications such as video streaming. These challenges are further compounded by the complexity of integrating emerging technologies like Mobile Edge Computing (MEC), in-network caching, and Software-Defined Networking (SDN) into HSN architectures.

According to [6], the development of the industry has enriched short video content, significantly increasing user numbers and making it a primary source of mobile internet usage time and traffic. As of December 2023, the number of online video users in China had reached 1.067 billion. The ubiquity of video services presents a huge challenge for the design and operation of the next generation of mobile networks. One issue faced by video transmission services is the environmental limitations and resource scarcity of terrestrial cellular networks, which can cause disruptions and instability in video delivery. Given the characteristics of heterogeneous satellite networks discussed earlier, introducing them into video service transmission schemes opens new possibilities.

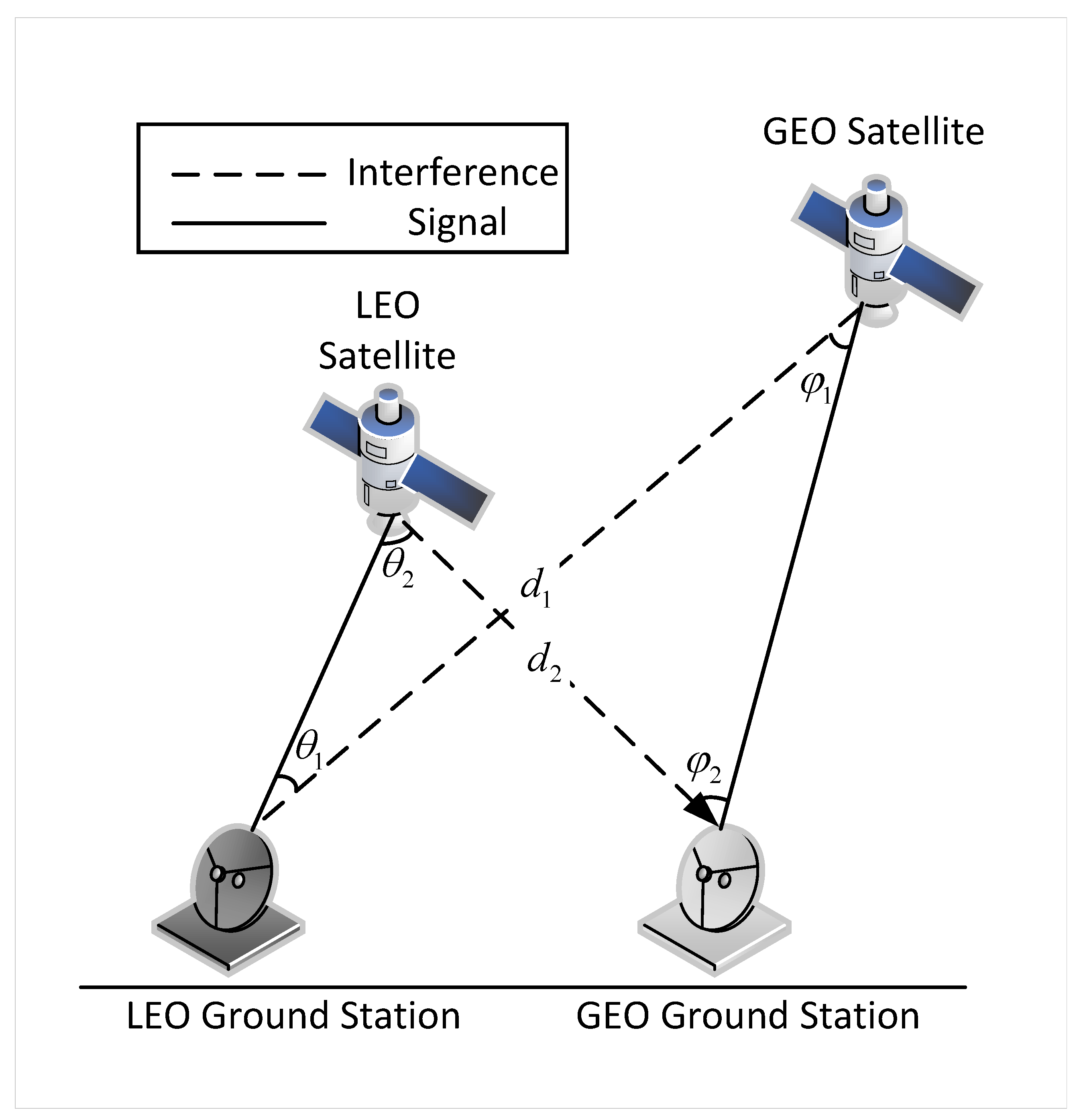

There are still several challenges in implementing video transmission services in heterogeneous satellite networks. First, although numerous innovations in wireless communication and networking over the past few years allow mobile users to access cloud data at extremely fast transmission rates, the current network architecture still suffers from significant propagation delays, which are unacceptable for latency-sensitive applications. For example, in video applications, retrieving video resources from the cloud can lead to long initial buffering times and playback stuttering. Second, while the emergence of heterogeneous satellite networks has expanded the network’s reach, it has also increased its complexity. Therefore, when transmitting video streams in heterogeneous satellite networks, it is necessary to measure and predict user traffic and to proactively allocate and plan network resources based on the network status, so as to meet users’ video quality demands and reduce the pressure on network operations and maintenance. Finally, the simultaneous presence of multiple types of networks leads to spectrum resource scarcity, severely limiting the future development of heterogeneous satellite networks. Given the non-renewable nature of spectrum resources, spectrum sharing is one of the inevitable solutions [7]. However, spectrum sharing also introduces new issues, such as co-channel interference between heterogeneous networks, especially the interference of LEO satellite networks with GEO satellite networks, because GEO satellites have absolute priority and their service quality must not be affected by LEO satellites [8,9]. Therefore, in heterogeneous satellite networks carrying video streams, resource allocation and interference coordination still require further research.

Compared to traditional terrestrial cellular networks, heterogeneous satellite networks that integrate MEC, in-network caching, and SDN can provide more efficient services to users. In this network, users can first request services from edge nodes equipped with caching and computing capabilities. If the edge nodes cannot meet the user’s needs, the user can still access cloud server resources through the satellite backhaul network. The entire network’s traffic planning and resource scheduling are uniformly controlled by SDN technology. However, due to the complexity of heterogeneous networks and the mix of resources, how to allocate resources to meet each user’s requests still requires further research. Additionally, the issue of co-channel interference in heterogeneous satellite networks also needs to be addressed. Thus, the paper proposes a heterogeneous satellite network integrating MEC, in-network caching, and SDN to enhance service efficiency over traditional networks. The main contributions are summarized as follows.

This paper proposes to tackle resource allocation and interference coordination challenges in dual-layer satellite networks combining LEO and GEO satellites.

A mathematical optimization problem is formulated to optimize resource allocation while minimizing co-channel interference. An ADMM-based distributed algorithm is proposed to solve the optimization problem efficiently.

The algorithm decomposes the problem into subproblems for users, service nodes, and the network, allowing distributed optimization. The incorporation of MEC (Mobile Edge Computing), in-network caching, and SDN (Software-Defined Networking) enhances service efficiency in the satellite network.

Simulation results show that the proposed algorithm improves network performance by efficiently managing resources and reducing interference.

The paper is structured as follows.

Section 2 reviews existing literature on heterogeneous networks, satellite communications, and resource allocation strategies, emphasizing the need for integrated network architectures.

Section 3 presents the formulation of the resource allocation and interference coordination problem within heterogeneous dual-layer satellite networks.

Section 4 introduces the algorithm designed to solve the formulated problem, detailing its methodology and theoretical underpinnings.

Section 5 describes the simulation setup and parameters used and analyzes the performance outcomes of the proposed algorithm.

Section 6 summarizes the key findings and discusses potential future research directions.

4. ADMM-Based Distributed Solution Strategy

In optimization problem , constraint indicates that the variables and must be binary, making the problem a mixed-integer nonlinear programming challenge. Moreover, there is a coupling of variables in constraints , , , and , adding complexity to finding a solution. To enhance efficiency, a more detailed analysis and refinement of the problem are necessary.

4.1. Problem Transformation

To elucidate the product relationship between the variables and in constraint , this section introduces a novel variable . This variable denotes whether user m selects a video with resolution level g and connects via access point j. Consequently, constraint is reformulated as follows:

Upon the introduction of a new variable , the numerical constraint of remains unaltered; however, the focus shifts from users selecting a single access base station to each user picking one access base station specifically for video streams at resolution level g. The constraint concerning variable within is thus reformulated as follows:

This adjustment, centered around the newly defined variable , modifies constraint further into:

Both variables are subsequently relaxed to take values in the interval [0, 1]. Consequently, constraint is updated to:

With these transformations, the original optimization problem is converted into a new problem, denoted as , expressed as:

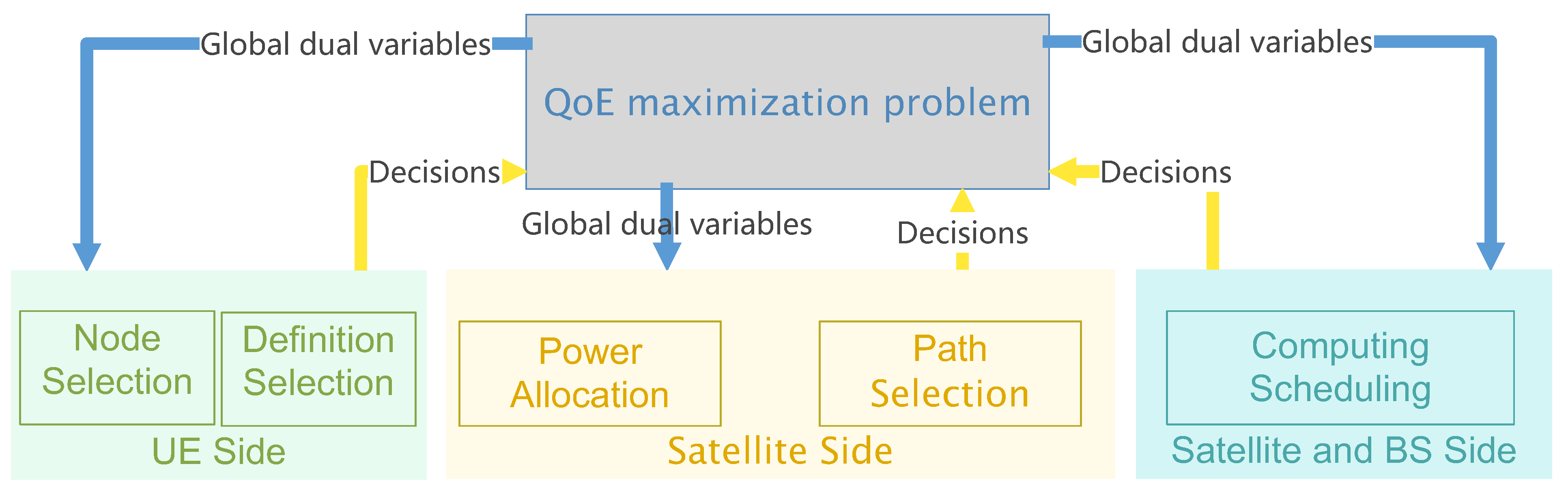

4.2. Problem Decomposition and Joint Optimization Algorithm

In this paper, the Alternating Direction Method of Multipliers (ADMM) [36] is used to handle the coupling between variables in the transformed problem. This approach breaks the problem down into subproblems for the user side, the service node side, and the network side, and solves each independently to improve efficiency. In detail, the user side selects video resolution levels and access points; the service node side allocates computational resources; and the network side plans transmission paths and allocates power for the wireless links.

To construct the augmented Lagrangian function for the problem , we first establish independent local feasible sets , , and for the variables in each sub-problem as given below:

To address the coupling constraints, we introduce dual variables , , and for the relaxation of constraints , , and , respectively. This yields the following augmented Lagrangian function:

where , , and act as penalty parameters, and , , and are specified by:

By utilizing ADMM, the problem is separated into three subproblems that are optimized in turn: video resolution and access node selection on the user side, computational scheduling on the service node side, and path planning and power allocation on the network side. In particular, when the user-side subproblem is solved, the variables related to the service node side and the network side are kept fixed, and the same approach is applied to the other subproblems. After the distributed optimization is complete, the centralized controller treats its outcomes as fixed values and addresses the overall problem from the controller’s perspective. The algorithm stops iterating once the stopping conditions are satisfied. This scheme is summarized in Figure 3.

The issue of video resolution and selection of an access node can be handled separately by each user on their own. Consequently, the individual user’s optimization problem is formulated as follows:

The user-side problem has a standard convex objective function and two linear equality constraints. Because of these characteristics, it can be solved effectively with convex optimization tools such as CVX [37].

Analogous to the user-side problem, the computational scheduling problem on the service node side can likewise be solved independently by each service node. The problem specific to each service node is expressed as:

The issue of path planning and power allocation on the network side is structured as follows:

In each iteration t, after the distributed subproblems are solved, the dual variables are updated according to the following expressions:

After decomposition, the problem can be viewed as having two parts: the user side and the network side. On the user side, video resolution levels are selected based on the resources provided by the network. On the network side, resources are allocated based on the users’ choices. If the video resolution level selected by a user remains unchanged, the demand on the network side does not change either. When the resource demand on the network side cannot be met, the network notifies the video user, through network pricing, to lower the requested resolution. Therefore, if the three subproblems can be solved in each iteration, the SDN controller can update the dual variables and pass them to the nodes and users, helping them find the optimal solutions to their subproblems. These subproblems can each be optimized independently, considering their own local constraints and dual variables. Specifically:

User-side optimization: Users select the suitable video resolution and access point from the available network resources.

Service node-side optimization: Service nodes distribute computational resources to users.

Network-side optimization: The network decides on transmission paths and administers power for the wireless links.

Once these subproblems are solved, the dual variables are updated, and the cycle is repeated until the system converges. The subsequent algorithm outlines the ADMM-based distributed approach for managing resource distribution and coordinating interference within the GEO–LEO satellite system.

After solving the problem, the user’s video resolution level and the new variable are converted back to binary variables based on the marginal benefits of the obtained linear solution. Here, considering that the new variable represents the selection of access base station j for the video file requested by user m at level g, it is necessary to first determine the video quality level g chosen by user m based on before obtaining the access node selection variable . Then, find the submatrix corresponding to based on parameters m and g, which is the set corresponding to the access node selection variable . The complete algorithm flow is shown in Algorithm 1.
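The two-stage conversion back to binary variables can be illustrated with a minimal sketch. The function name `round_relaxed_solution`, the array names, and the array shapes are assumptions made for illustration, and the rule used here (pick the largest relaxed value) is a simple stand-in for the marginal-benefit criterion mentioned above, not the authors' exact procedure.

```python
import numpy as np

def round_relaxed_solution(x_relaxed, z_relaxed):
    """Convert relaxed values in [0, 1] back to binary selections.

    x_relaxed: shape (M, G), relaxed resolution-level choice of each user m.
    z_relaxed: shape (M, G, J), relaxed joint choice of level g and access node j.
    Both names and shapes are hypothetical.
    """
    M, G = x_relaxed.shape
    J = z_relaxed.shape[2]
    x_bin = np.zeros((M, G), dtype=int)
    z_bin = np.zeros((M, G, J), dtype=int)
    for m in range(M):
        # Stage 1: fix the resolution level g chosen by user m.
        g = int(np.argmax(x_relaxed[m]))
        # Stage 2: choose the access node only within the (m, g) submatrix.
        j = int(np.argmax(z_relaxed[m, g]))
        x_bin[m, g] = 1
        z_bin[m, g, j] = 1
    return x_bin, z_bin
```

Fixing g first matters: entries of the joint variable that belong to a level the user did not select are ignored, even when their relaxed values are large.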

Algorithm 1 ADMM-based Resource Allocation and Interference Coordination

- 1: Input: network, user video demand f.
- 2: Initialization: initialize the primal variables (video resolution selection, access point selection, computational resource allocation, transmission paths, and power allocation) and the three dual variables. Set the stopping threshold and the maximum number of iterations T.
- 3: for each iteration t up to T do
- 4: Broadcast the dual variables from the SDN controller to users and service nodes.
- 5: Solve the user-side optimization problem for each user to obtain the updated video resolution and access point selection.
- 6: Solve the service node-side optimization problem to obtain the updated computational resource allocation.
- 7: Solve the network-side optimization problem to update the transmission paths and power allocation.
- 8: Update the dual variables using the results from steps 5–7.
- 9: if the difference in the objective function value between iterations t and t − 1 is less than the stopping threshold then
- 10: stop
- 11: end if
- 12: end for
- 13: Output: video resolution selection, access point selection, resource allocation, transmission paths, and power allocation.
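The loop structure of Algorithm 1 (local solves, a coordinating update, then a dual update) can be seen on a much smaller problem. The sketch below is a toy consensus problem, not the paper's formulation: each of several agents holds a private target `b[i]` and all must agree on a common value. The function name `consensus_admm`, the penalty `rho`, and the residual-based stopping rule are assumptions; Algorithm 1 instead stops on the change in objective value.

```python
import numpy as np

def consensus_admm(b, rho=1.0, tol=1e-8, max_iter=200):
    """Scaled-form ADMM for: minimize sum_i 0.5*(x_i - b_i)^2 subject to x_i = z."""
    b = np.asarray(b, dtype=float)
    x = np.zeros_like(b)   # local copies (analogous to user/node decisions)
    u = np.zeros_like(b)   # scaled dual variables, one per agent
    z = 0.0                # shared value kept by the coordinator
    for t in range(1, max_iter + 1):
        # Local step: each agent minimizes its own cost plus the penalty term.
        x = (b + rho * (z - u)) / (1.0 + rho)
        # Coordinator step: average the local proposals.
        z_old = z
        z = float(np.mean(x + u))
        # Dual step: accumulate the remaining disagreement.
        u = u + x - z
        primal_res = np.linalg.norm(x - z)
        dual_res = rho * np.sqrt(b.size) * abs(z - z_old)
        if primal_res < tol and dual_res < tol:
            break
    return z, x, t

z, x, iters = consensus_admm([1.0, 2.0, 6.0])
```

For this toy problem the agreed value is the mean of the targets, which gives a simple way to check that the three update steps are wired together correctly.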

To make the proposed scheme more accessible, consider a simplified dual-layer satellite scenario with two users (U1 and U2), one LEO satellite acting as a service node with limited computational and caching resources, one GEO satellite functioning purely as a communication relay, and one ground base station connected to a small data center. We assume:

Each user requests video service at one of two resolution levels (e.g., HD or SD).

The LEO satellite and data center can process and cache video content, while the GEO satellite primarily forwards traffic.

Spectrum resources must be shared among all links, and interference arises if both satellites transmit in the same frequency band simultaneously.

We can formulate a smaller version of our optimization model for this scenario as follows:

Decision variables: a binary access variable for each user, indicating whether the user is assigned to SLEO or BS for primary video access, and a resolution variable rUi representing the chosen resolution level (e.g., 1 for SD, 2 for HD).

Objective: Maximize overall video quality while minimizing interference and respecting power/bandwidth constraints at SLEO and BS.

Constraints: Each user must be served by either SLEO or BS (but not both). The LEO satellite has limited power and needs to avoid interfering with GEO satellite transmissions. When the user’s resolution (rUi) remains fixed, the network side must ensure sufficient resource allocation for that demand. If resources become constrained, the network (SDN controller) may signal one or both users to downgrade their resolutions to ensure stable service.

Although modest in scale, this example highlights how our scheme coordinates resource allocation among multi-layer satellite links and ground stations. By solving the user-side and network-side subproblems with ADMM, we iteratively assign service nodes, video resolution levels, and transmission parameters in a way that balances performance, interference mitigation, and resource limitations. Scaling up to more satellites, users, and base stations follows the same core logic while adding corresponding subproblems and constraints.
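Because the simplified scenario is so small, it can be solved by enumeration, which makes the trade-offs visible. The sketch below is an illustration under stated assumptions: the quality scores, computing demands, capacities, and the interference penalty `LEO_PENALTY` are hypothetical numbers, and exhaustive search replaces the ADMM procedure purely for clarity.

```python
from itertools import product

USERS = ["U1", "U2"]
NODES = ["SLEO", "BS"]
QUALITY = {1: 1.0, 2: 2.0}   # hypothetical quality score per level (1 = SD, 2 = HD)
DEMAND = {1: 1, 2: 2}        # hypothetical computing units needed per level
LEO_PENALTY = 0.3            # hypothetical interference cost per unit served by the LEO

def best_assignment(capacity):
    """Enumerate every (node, level) choice per user and keep the best feasible plan."""
    best_value, best_plan = float("-inf"), None
    choices = list(product(NODES, QUALITY))  # options available to one user
    for plan in product(choices, repeat=len(USERS)):
        load = {n: 0 for n in NODES}
        value = 0.0
        for node, level in plan:
            load[node] += DEMAND[level]
            value += QUALITY[level]
            if node == "SLEO":
                # LEO transmissions share spectrum with the GEO link.
                value -= LEO_PENALTY * DEMAND[level]
        feasible = all(load[n] <= capacity[n] for n in NODES)
        if feasible and value > best_value:
            best_value, best_plan = value, dict(zip(USERS, plan))
    return best_value, best_plan

ample_value, ample_plan = best_assignment({"SLEO": 2, "BS": 3})
tight_value, tight_plan = best_assignment({"SLEO": 1, "BS": 3})
```

With the ample capacities both users receive HD from different nodes; when the LEO capacity is tightened, the best plan serves both users from the base station and one of them drops to SD, mirroring the downgrade signal described above.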

4.3. Algorithm Performance Analysis

After relaxation, the problem is a convex optimization problem with a convex objective function and convex constraints, so any local optimum is also globally optimal. Moreover, because strong duality holds for this relaxed problem, the ADMM-based algorithm converges to its global optimum.

Regarding complexity analysis, utilizing centralized methods like primal-dual interior-point algorithms to solve would entail a complexity of , where denotes the number of iterations, M is the user count, N the number of service nodes, K the number of candidate paths, and L represents the number of links. However, by applying ADMM for problem decomposition, complexity is notably diminished, allowing for distributed solving at the level of each component (user, service node, and network).

The complexity for resolving the user-side sub-problem via a convex optimization tool is , where G stands for the number of video quality levels, and J the number of access points. The service node-side sub-problem has a complexity of . Lastly, solving the network-side sub-problem carries a complexity of .

Consequently, the overall complexity of each iteration of the proposed ADMM-based algorithm is . With iterations for achieving convergence, the total complexity becomes . This highlights that the ADMM algorithm can substantially reduce computational complexity while ensuring effective resource allocation and interference coordination in a GEO–LEO satellite network.

In addition to providing a detailed complexity analysis of our ADMM-based algorithm, we compared its computational efficiency with two commonly referenced methods in the literature.

Centralized Interior-Point Method. Classic interior-point solvers applied to similar optimization problems demonstrate a polynomial time complexity that grows significantly with the problem size per iteration. While these solvers can converge in relatively few iterations, they rely on a centralized structure, leading to high memory overhead and potentially long solution times for large-scale heterogeneous satellite networks.

Gradient-Based Distributed Approach. Distributed gradient descent or primal-dual methods operate without forming large Hessian matrices, reducing per-iteration costs. However, these methods often need more iterations to achieve convergence and may suffer from slow progress in the presence of highly coupled constraints, as found in dual-layer satellite networks.

By decomposing the resource allocation and interference coordination problem into subproblems (user side, service node side, and network side), the ADMM-based algorithm strikes a balance between per-iteration complexity and convergence speed. Consequently, the total complexity per iteration is lower than that of the centralized interior-point approach, while convergence is typically faster than standard gradient-based methods in scenarios with tightly coupled constraints. This efficiency is particularly advantageous for large-scale GEO–LEO satellite networks.

5. Simulation Results and Analysis

In this section, MATLAB is used to simulate and analyze the performance of the proposed ADMM-based resource allocation and interference coordination strategy. The simulated network consists of multiple edge nodes and backhaul networks, with edge networks composed of multiple users, access points, and small data centers. Users communicate with access points via wireless links, while access points and small data centers are connected via wired links. The backhaul network comprises multiple LEO and GEO satellites, where access points can request resources from the cloud via the satellite network or communicate with other access points.

The satellite-to-Earth propagation was modeled to reflect real-world communication conditions. The following factors were considered. Free-space path loss (FSPL), in dB, is calculated as follows:

$$\mathrm{FSPL} = 20\log_{10}(d) + 20\log_{10}(f) + 32.44$$

where d is the distance between the satellite and the ground station in kilometers, and f is the frequency in MHz. Atmospheric attenuation and rain attenuation are modeled using the ITU-R P.618-13 recommendation to account for signal degradation [38].
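As a quick numerical check of the path-loss model, the standard free-space formula for d in kilometers and f in MHz, FSPL = 20 log10(d) + 20 log10(f) + 32.44 dB, can be evaluated directly. The function name `fspl_db` and the sample link (a 1200 km path at 12 GHz, taking the slant range equal to the LEO altitude) are illustrative assumptions; atmospheric and rain attenuation are not included.

```python
import math

def fspl_db(distance_km, frequency_mhz):
    """Free-space path loss in dB for distance in km and frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(frequency_mhz) + 32.44

# Illustrative link: satellite directly overhead at 1200 km, 12 GHz carrier.
leo_loss_db = fspl_db(1200, 12_000)
```

Doubling either the distance or the frequency adds about 6 dB of loss, which is why the much longer GEO path needs a higher link budget than the LEO path at the same frequency.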

The network service nodes are pre-loaded with video resources from the cloud, cached within the network. Each user randomly requests a video file, and the parameters used for the simulation, based on ITU’s EPFD limits, are shown in Table 3. The bandwidth values for LEO and GEO satellites were chosen based on the frequency bands typically allocated for satellite communications, such as the Ku-band (12–18 GHz) and Ka-band (26.5–40 GHz), as per ITU-R recommendations. These bands are widely used in satellite networks for high-speed data transmission. The transmission power levels were selected to balance adequate signal strength against interference. The values align with power levels reported in recent studies on LEO-GEO coexistence.

In this paper, the specific values chosen for the satellite parameters (Table 3) reflect realistic operational thresholds and commonly referenced industry standards. For instance, the LEO orbit height of 1200 km represents a practical trade-off between reduced latency and wide coverage, aligning with typical commercial LEO constellations; the GEO orbit height of 35,786 km corresponds to the standard geostationary ring, ensuring minimal relative orbital motion from the ground perspective. Similarly, the inclination angle of 87° approximates the near-polar orbits often employed to achieve global coverage. Antenna gains for both LEO and GEO satellites (25 dBi) and ground stations (45 dBi for LEO, 30 dBi for GEO) are derived from typical engineering designs in the literature, balancing transmit power demands with hardware constraints. These selections ensure that our simulation environment is both realistic and representative of state-of-the-art satellite network deployments, thus strengthening the applicability of our resource allocation and interference coordination findings.
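The latency side of this trade-off follows from simple arithmetic on the two orbit heights. The sketch below assumes a satellite directly overhead, so the path length equals the altitude, and counts only the one-way propagation delay at the speed of light; the function name `one_way_delay_ms` is hypothetical.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458

def one_way_delay_ms(altitude_km):
    """One-way propagation delay in milliseconds for a satellite at zenith."""
    return altitude_km / SPEED_OF_LIGHT_KM_PER_S * 1000.0

leo_delay_ms = one_way_delay_ms(1200)     # LEO orbit height used in the simulation
geo_delay_ms = one_way_delay_ms(35_786)   # GEO orbit height used in the simulation
```

Under these assumptions the LEO hop costs roughly 4 ms and the GEO hop roughly 119 ms each way, about a thirtyfold difference before any queuing or processing delay is added.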

In this paper, we compare our proposed scheme with a baseline and the schemes in [11,39,40].

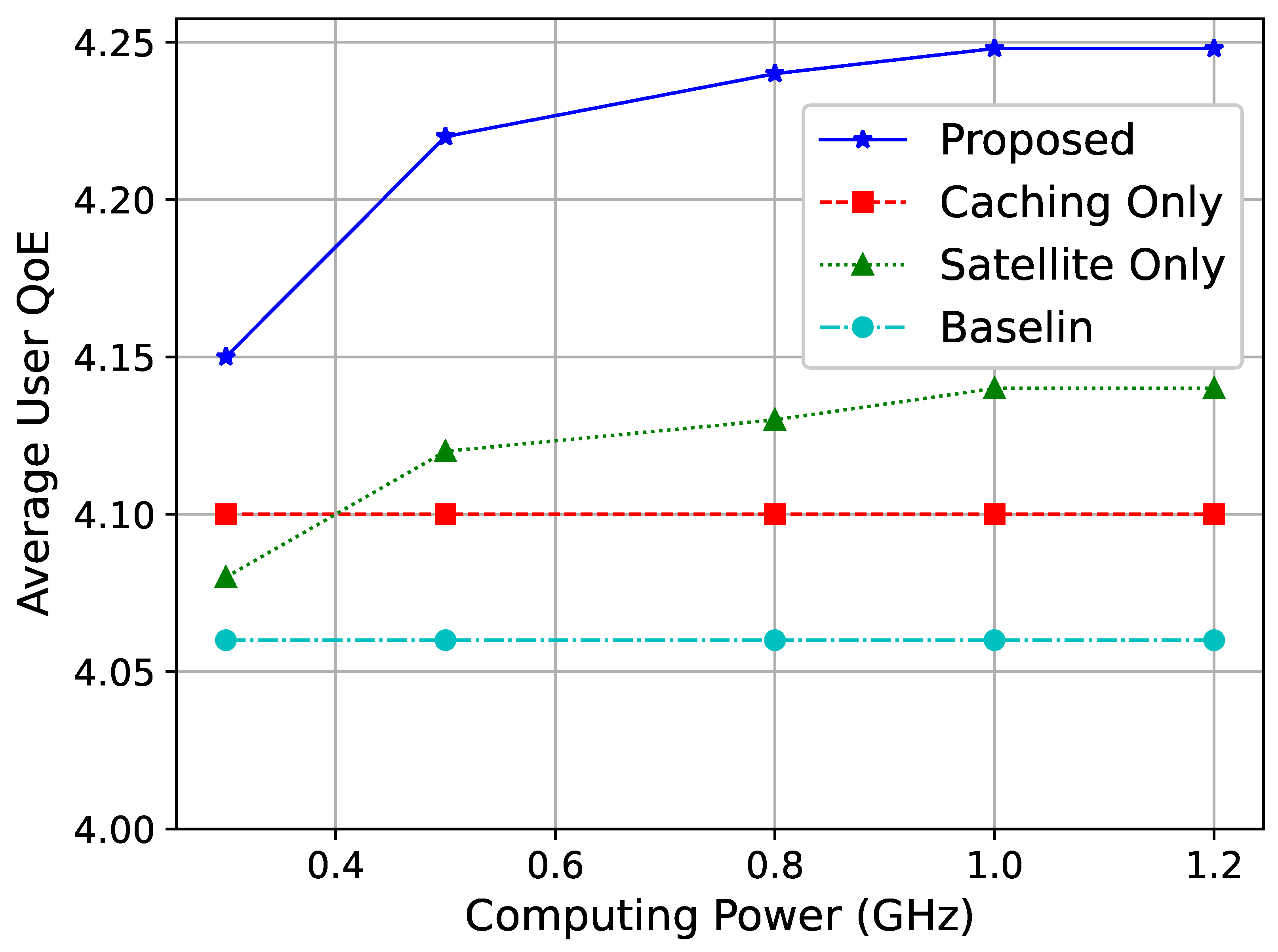

Figure 4 shows the average user vMOS under different normalized computing capacities. The normalized computing capacity refers to the ratio of the tested computing resource capacity to the default value. As seen in the figure, as the computing capacity increases, the overall performance of the network improves. This is because higher computing power enables the network nodes to handle more tasks, allowing more users to retrieve video resources nearby, thus improving network performance. Moreover, the observed QoE improvement is attributed to the efficient allocation of resources and effective interference coordination. By optimizing power control and frequency allocation, our algorithm minimizes co-channel interference between GEO and LEO satellites. This leads to enhanced spectral efficiency and higher data rates for users. Specifically, the dynamic adjustment of resource allocation in response to network conditions ensures that users experience consistent and improved service quality, even as the network scales.

When the computing capacity reaches a certain threshold, the performance of the proposed scheme begins to stabilize. This is due to other limiting factors in the network, such as cache hit rates and link resources, which prevent further improvement. The stabilization at higher computing capacities (as seen in Figure 4) indicates diminishing returns beyond that threshold. This insight can guide network planners in balancing computing capacity against other factors, such as caching and link bandwidth, to achieve cost-effective deployments.

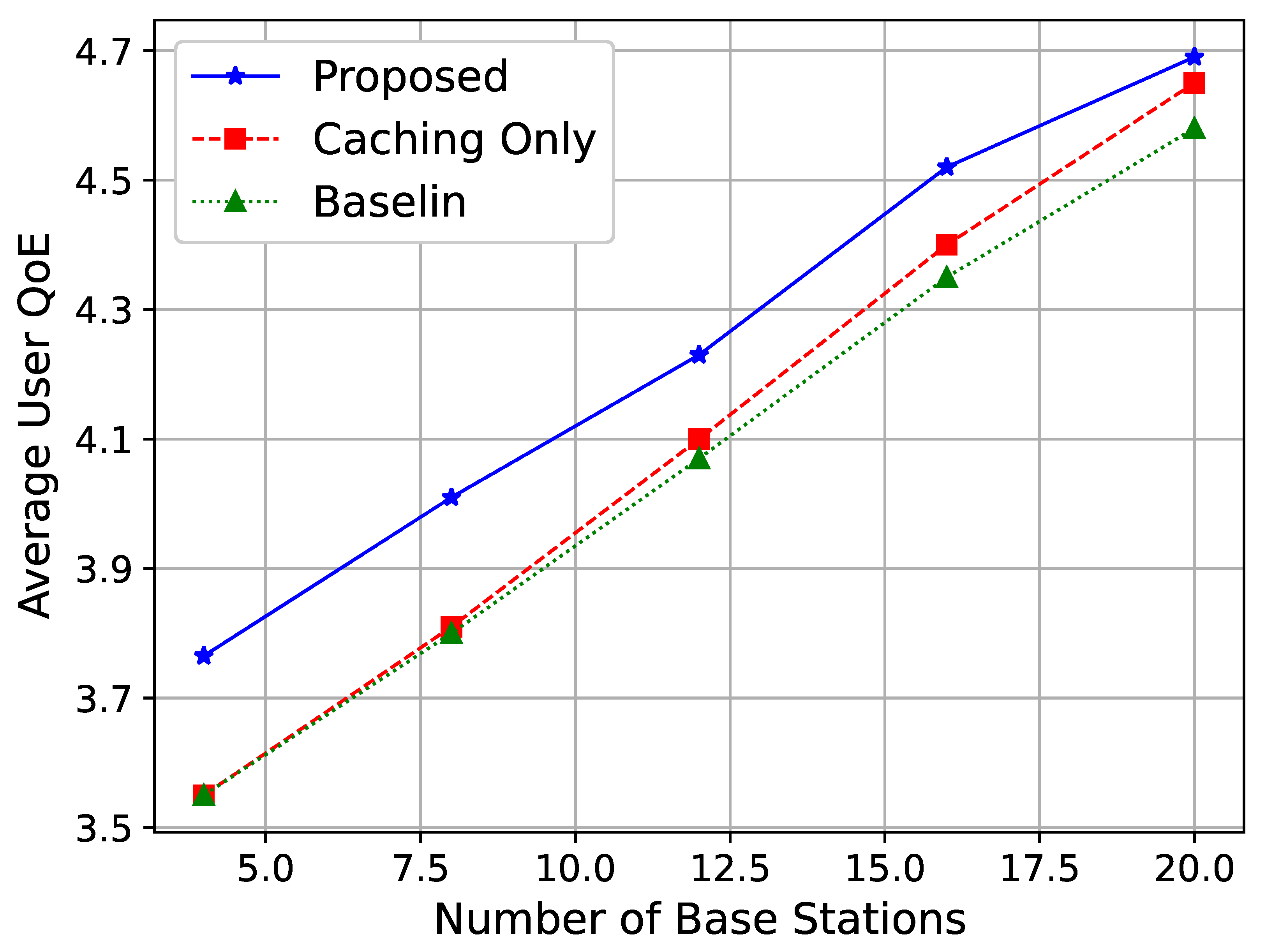

Figure 5 shows the relationship between the number of access points (APs) and the average vMOS of users. It can be observed that as the number of access points increases, the average vMOS of users improves. This is because an increase in the number of access points enhances the network’s service capacity by providing more resources and offering better access environments for users. Additionally, more access points improve network coverage and channel conditions, further enhancing user experience. Increasing the number of base stations reduces the number of users served by each base station. This alleviates traffic congestion, allowing each user to experience better service quality due to more available resources per user. More base stations enable the network to implement frequency reuse more effectively. By assigning the same frequency bands to non-adjacent cells, the network maximizes spectral efficiency while minimizing co-channel interference. This efficient use of spectrum resources contributes to higher data throughput and improved QoE.

Next,

Figure 6,

Figure 7 and

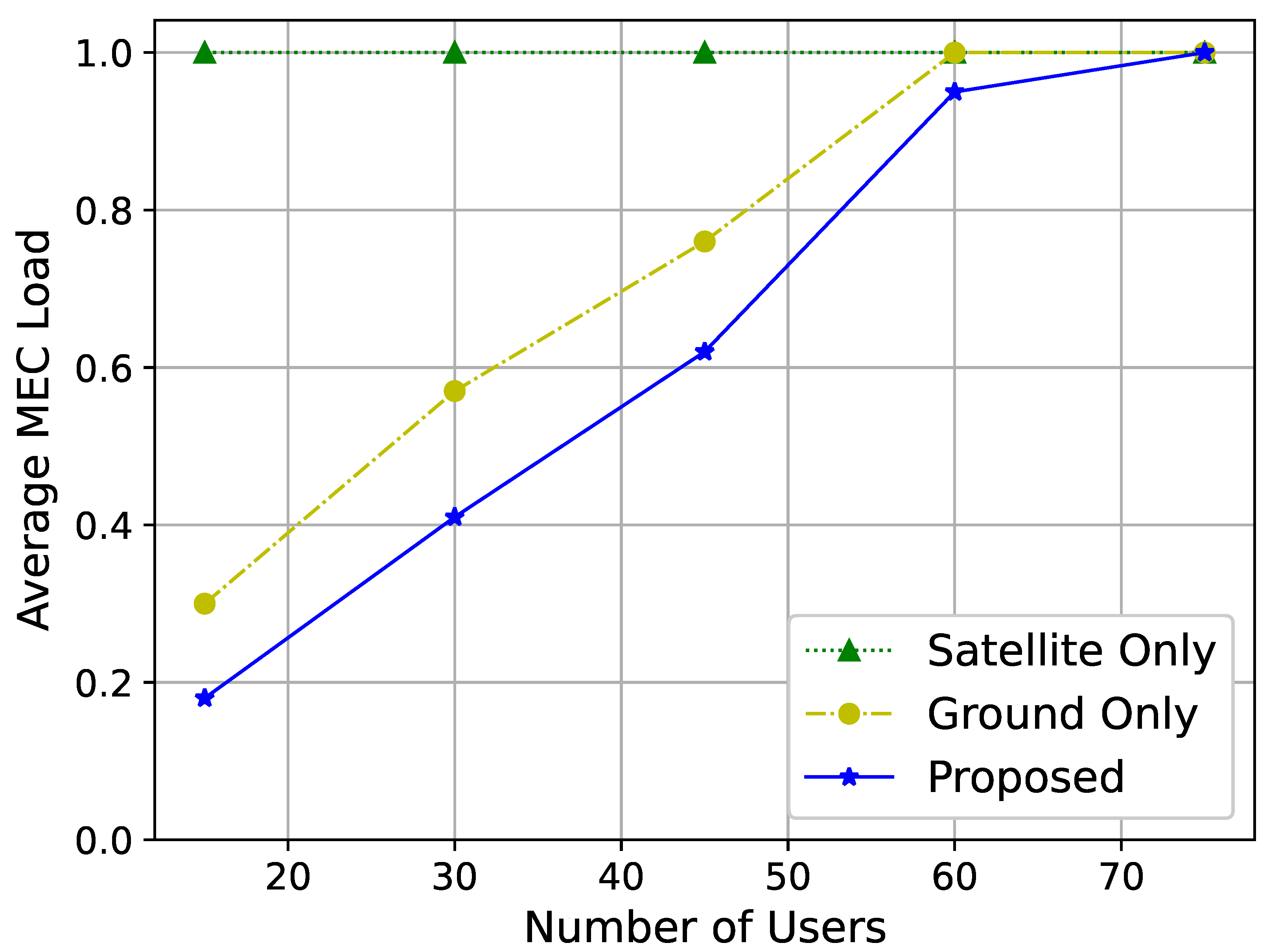

Figure 8 illustrate the average MEC server load under different network settings. To examine the impact of network architecture, we compare a solution where MEC servers are only deployed at terrestrial base stations and another where MEC servers are only deployed at satellites.

Figure 6 shows the relationship between the number of users and the average load on MEC servers. In the satellite service solution, the average load on MEC servers is consistently high due to the extensive coverage area of satellites. As the number of users increases, the MEC server load continues to rise because the network needs to handle additional tasks.

Moreover, Figure 5 and Figure 6 demonstrate the effectiveness of the proposed algorithm in minimizing co-channel interference between GEO and LEO satellites. This is particularly critical in heterogeneous satellite networks where spectrum sharing is inevitable. The results suggest that the proposed strategy can enable more efficient spectrum utilization while maintaining service quality. In practice, this means that satellite operators can adopt the proposed algorithm to support higher user densities and data rates without compromising network stability.

Figure 7 shows the relationship between the cache capacity of service nodes and the average load on MEC servers. As cache capacity increases, the MEC server load also increases. This is because service nodes with higher cache capacities store more video files, improving the hit rate and increasing the likelihood that users retrieve video resources from service nodes, thereby increasing the computation load on the MEC servers.

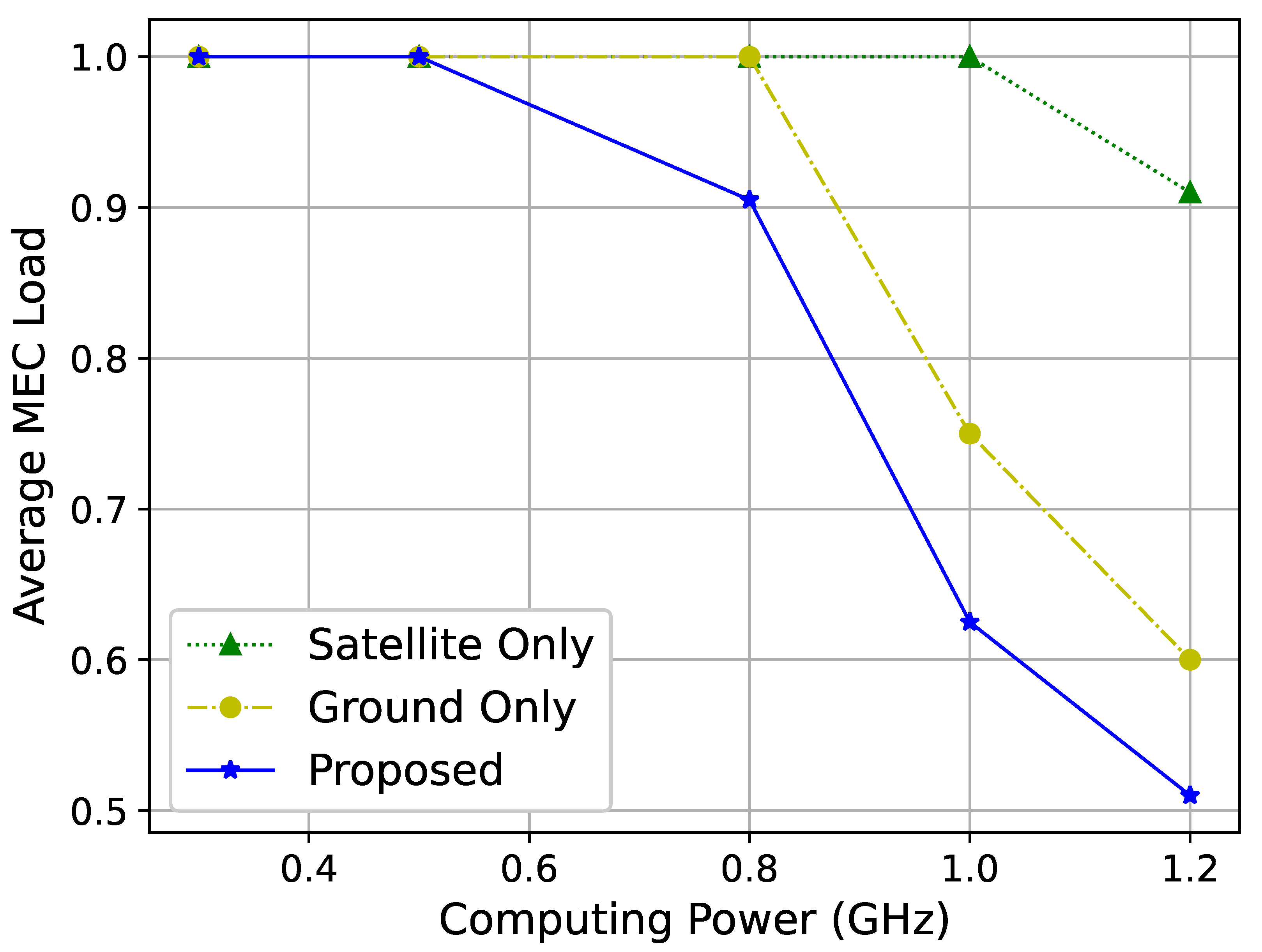

Figure 8 shows the relationship between the computational capacity of MEC servers and their average load. As the computing power increases, the load on MEC servers gradually decreases. This is because although more tasks can be handled with greater computational power, the number of users and their demand remains constant in this simulation. Hence, once the computational capacity surpasses the demand, the average load on MEC servers decreases.

The proposed interference coordination strategy is integral to the ADMM-based algorithm, as it mitigates co-channel interference among multiple satellites and user terminals. By coordinating resource usage, the algorithm keeps interference levels within acceptable limits, thereby enhancing overall network performance. Simulation results indicate that without effective interference coordination, the network experiences increased latency and reduced throughput, underscoring the necessity of this strategy in achieving optimal resource allocation.

The improvements in user QoE, as shown in Figure 7 and Figure 8, underscore the practical benefits of dynamic resource allocation and interference coordination. For instance, the ability to dynamically adjust resource allocation based on network conditions ensures consistent service quality, even under varying traffic loads. This is particularly relevant for applications such as live video streaming and remote sensing, where maintaining high QoE is critical for user satisfaction and operational success. The inherent advantages of LEO satellites, including their ability to transmit data with lower latency, make them well suited to applications such as live video streaming and real-time data processing.

The scalability of the proposed algorithm, as shown by its performance across different network sizes and configurations, indicates its potential for real-world deployment. By reducing computational complexity while ensuring effective resource allocation, the ADMM-based strategy can be implemented in large-scale satellite networks without incurring excessive computational overhead. This makes it a viable solution for next-generation satellite communication systems.

Although we have not explicitly graphed interference-related metrics (e.g., SINR, interference probability) in the outcome figures, the ADMM-based scheme inherently addresses co-channel interference by coordinating resource usage among multiple satellites and user terminals. The improved QoS metrics (notably throughput and latency) corroborate that our allocation decisions prevent severe interference conditions and maintain service quality. Specifically, by incorporating interference factors into our objective and constraints, the proposed algorithm actively confines interference levels to permissible ranges. Future research efforts could place additional emphasis on quantifying these effects through dedicated metrics, building on the foundational resource coordination framework presented here.

While our simulation results demonstrate the effectiveness of the proposed ADMM-based resource allocation and interference coordination strategy, conducting real-world experiments remains a challenge due to significant infrastructure, regulatory, and cost barriers. Future work will focus on exploring collaborations with industry partners to facilitate field trials, which would provide valuable insights into the practical implementation of our method and its performance in operational satellite networks.

6. Conclusions and Future Works

This paper has comprehensively addressed the joint dynamic task offloading and resource scheduling problem in low Earth orbit (LEO) satellite edge computing networks. Our findings underscore the importance of leveraging both LEO and GEO satellites in future network designs, as LEO satellites can provide both computational resources and efficient data transmission, enhancing overall network performance. The proposed system model incorporates both data service transmission and computational task offloading, framed as a long-term cost function minimization problem with constraints. Key contributions include the development of a priority-based policy adjustment algorithm to handle transmission scheduling conflicts and a DQN-based algorithm for dynamic task offloading and computation scheduling. These methods are integrated into a joint scheduling strategy that optimizes overall system performance. Simulation results demonstrate significant improvements in average system cost, queue length, energy consumption, and task completion rate compared to baseline strategies, highlighting the strategy’s effectiveness and efficiency. Future work will extend the framework to more complex network scenarios and explore the integration of machine learning with traditional optimization methods to further enhance performance.

In future research, we intend to investigate more advanced machine learning methods that can be combined with traditional optimization frameworks to further enhance resource allocation and interference management in heterogeneous satellite networks. For example, integrating deep reinforcement learning (DRL) techniques with our ADMM-based solution can help the network adapt to rapidly changing channel conditions and user demands by continuously learning optimal actions from environmental feedback. Similarly, multi-agent RL can be employed to coordinate decisions across multiple network entities (e.g., LEO, GEO satellites, and edge nodes), potentially accelerating convergence and improving overall system performance. Beyond RL, leveraging supervised or unsupervised learning methods for traffic prediction, node clustering, or link reliability assessment could also complement our optimization models, providing richer insights into network dynamics. These hybrid approaches—blending the reliability of mathematical optimization with the adaptability of machine learning—present promising avenues for future enhancement of resource management strategies in dual-layer satellite networks.