Meteorological Visibility Estimation Using Landmark Object Extraction and the ANN Method

Abstract

1. Introduction

2. Methodology

2.1. Past Approaches

2.2. Proposed System Structure

2.3. Collection of Visibility and Image Data

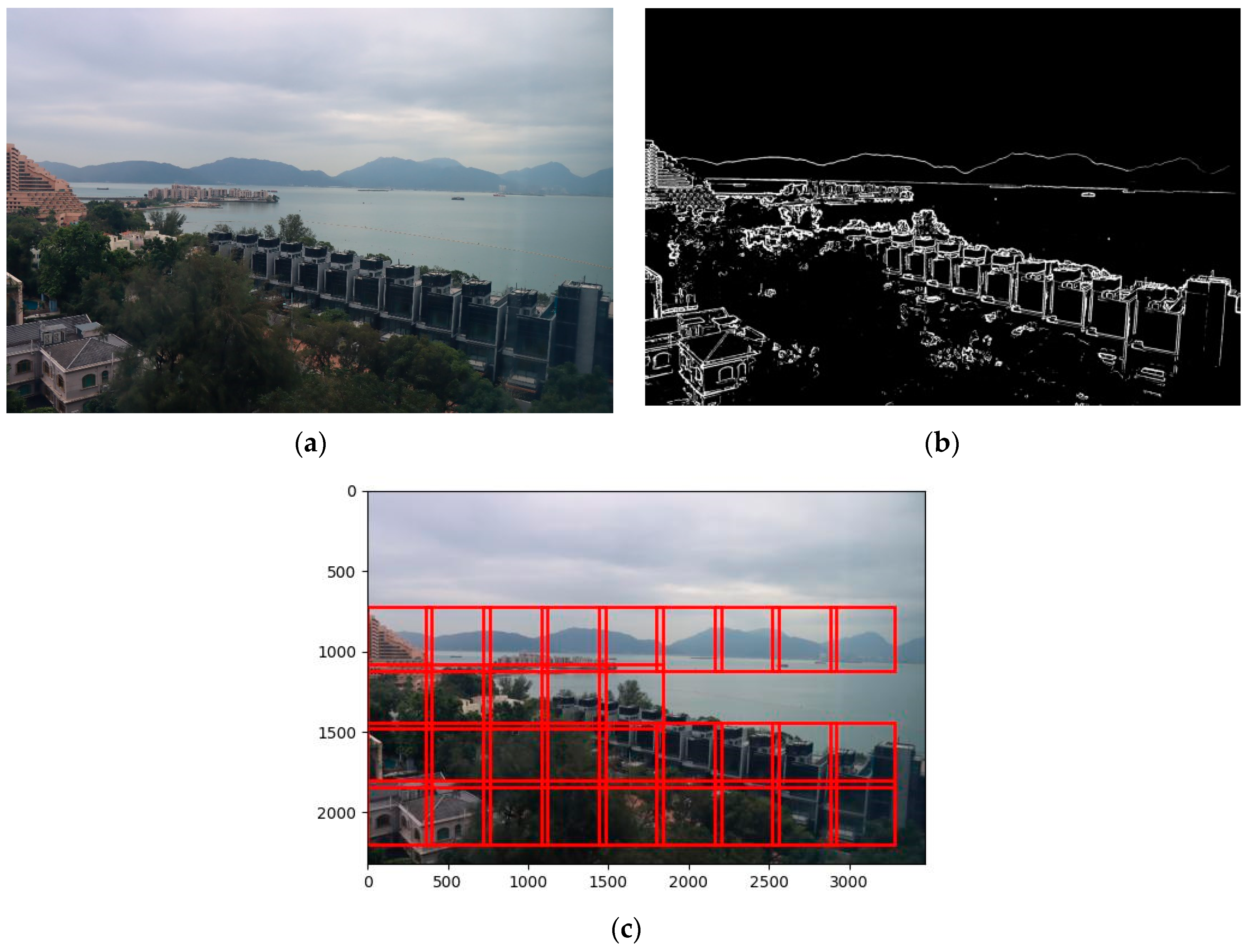

2.4. Identification of Landmark Static Objects in the Image Dataset
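
Step 1 of the procedure in Section 2.5.9 applies edge-averaging analysis to the image database, and step 2 runs LMO extraction on the resulting edge-averaging image. Presumably this works because a fixed camera sees static landmarks in every frame, so their edges survive averaging, while transient edges from clouds, vehicles, or noise are diluted. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation. It assumes a Sobel gradient-magnitude edge map and a hypothetical relative threshold `threshold_ratio`, because this section does not give the exact edge operator or threshold.

```python
import numpy as np


def sobel_magnitude(gray: np.ndarray) -> np.ndarray:
    """Sobel gradient magnitude of a 2-D grayscale image (borders edge-padded)."""
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    ky = kx.T  # vertical-gradient Sobel kernel
    h, w = gray.shape
    padded = np.pad(gray.astype(float), 1, mode="edge")
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    # Accumulate the 3x3 correlation one kernel tap at a time.
    for i in range(3):
        for j in range(3):
            window = padded[i:i + h, j:j + w]
            gx += kx[i, j] * window
            gy += ky[i, j] * window
    return np.hypot(gx, gy)


def edge_average(images, threshold_ratio: float = 0.5):
    """Average Sobel edge maps over frames from a fixed camera.

    Returns the averaged edge map and a boolean mask of persistent edges.
    threshold_ratio is a hypothetical parameter (fraction of the peak value).
    """
    avg = np.mean([sobel_magnitude(img) for img in images], axis=0)
    mask = avg >= threshold_ratio * avg.max()
    return avg, mask


# Usage: 20 synthetic frames share a static vertical edge; frame 0 also has a transient blob.
frames = []
for k in range(20):
    img = np.zeros((32, 32))
    img[:, 16:] = 100.0          # static "landmark" edge between columns 15 and 16
    if k == 0:
        img[5:8, 2:6] = 100.0    # transient object present in one frame only
    frames.append(img)
avg, mask = edge_average(frames)
```

In the usage example, only the two columns on either side of the static edge remain in `mask`. The blob's edges are averaged down to well below the threshold.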

2.5. LMO Extraction and Identification of Effective Visibility Ranges

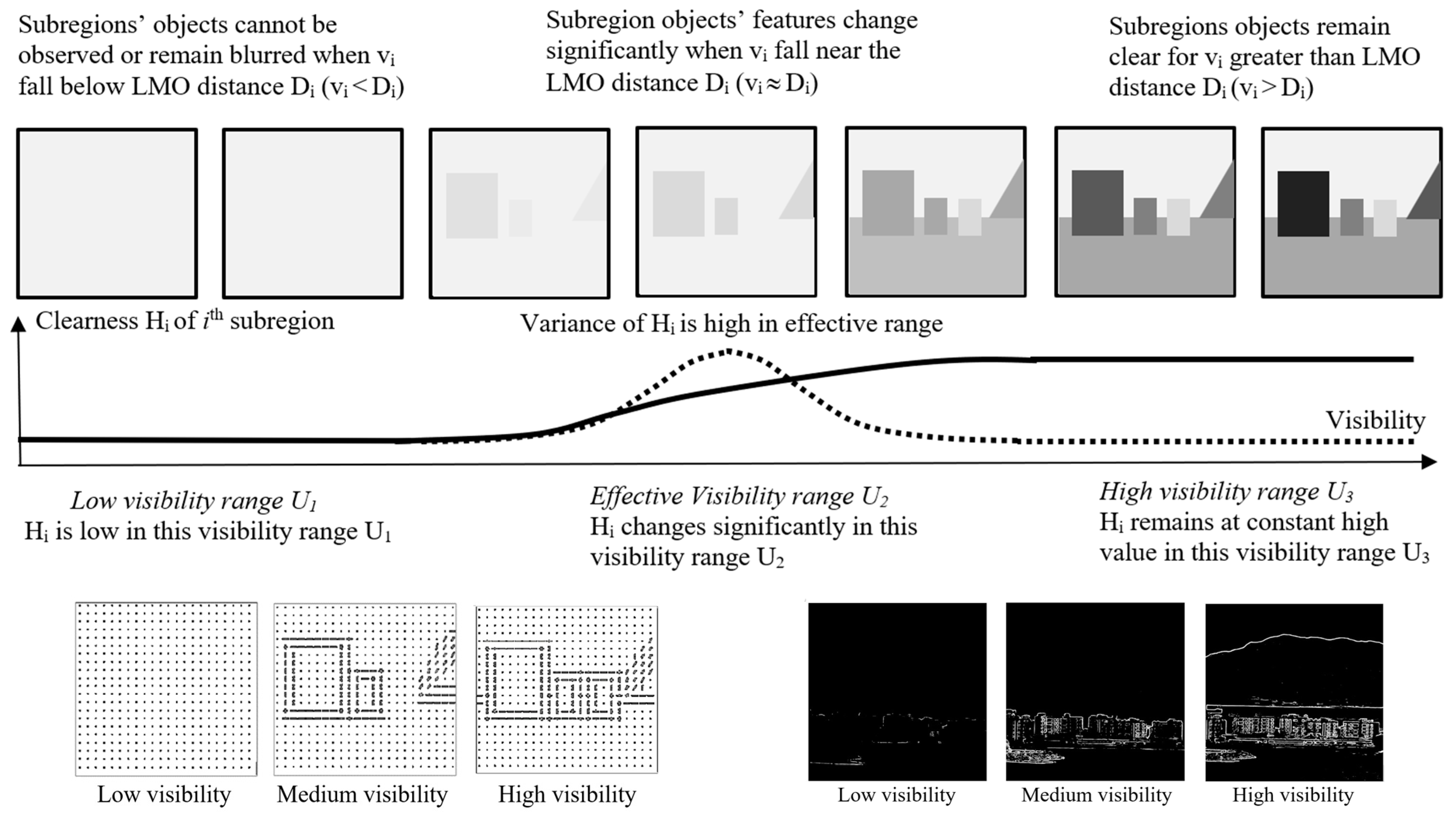

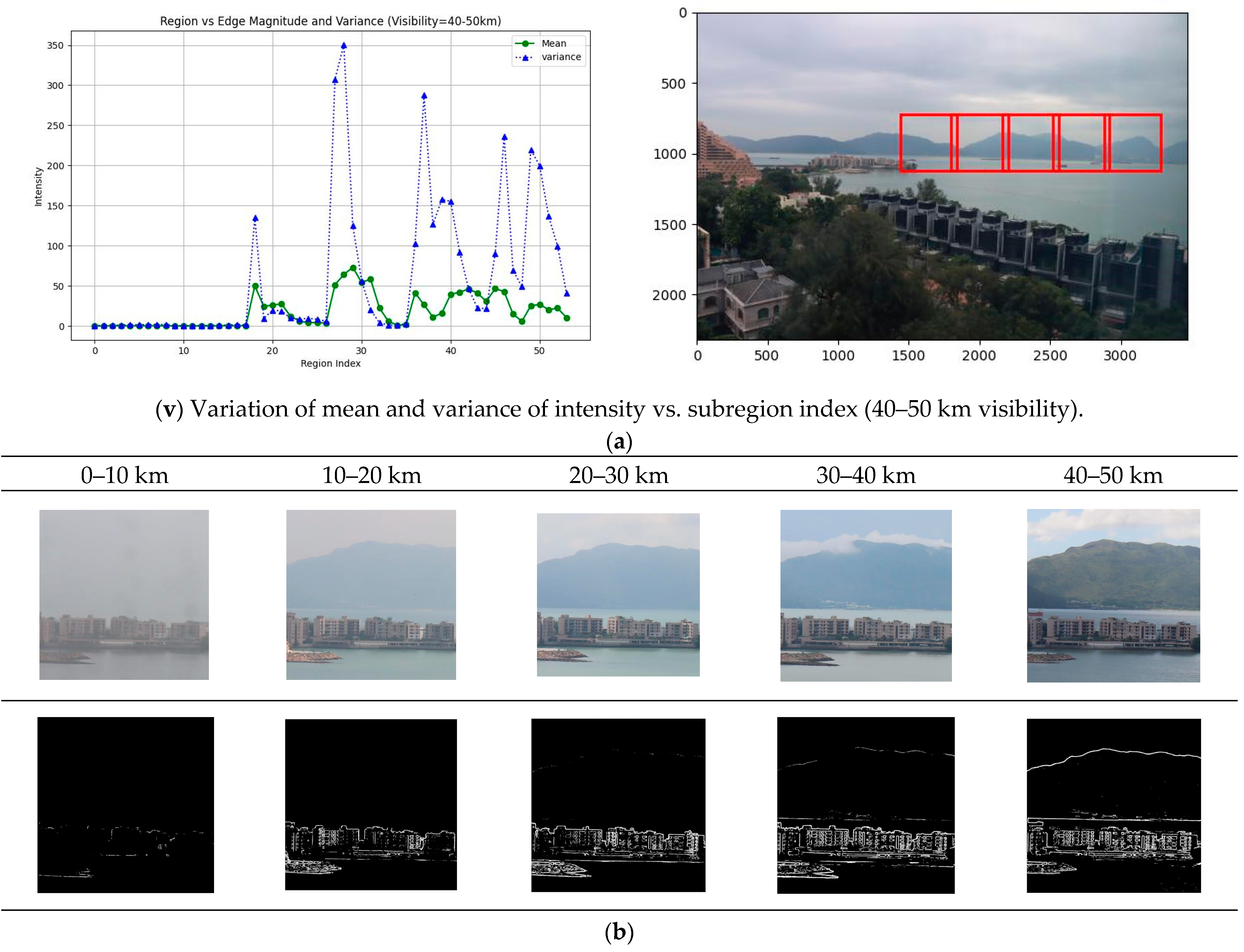

2.5.1. Indicators for the Subregion’s Effectiveness in Visibility Estimation

2.5.2. Derivation of an Effective Subregion Selection Matrix

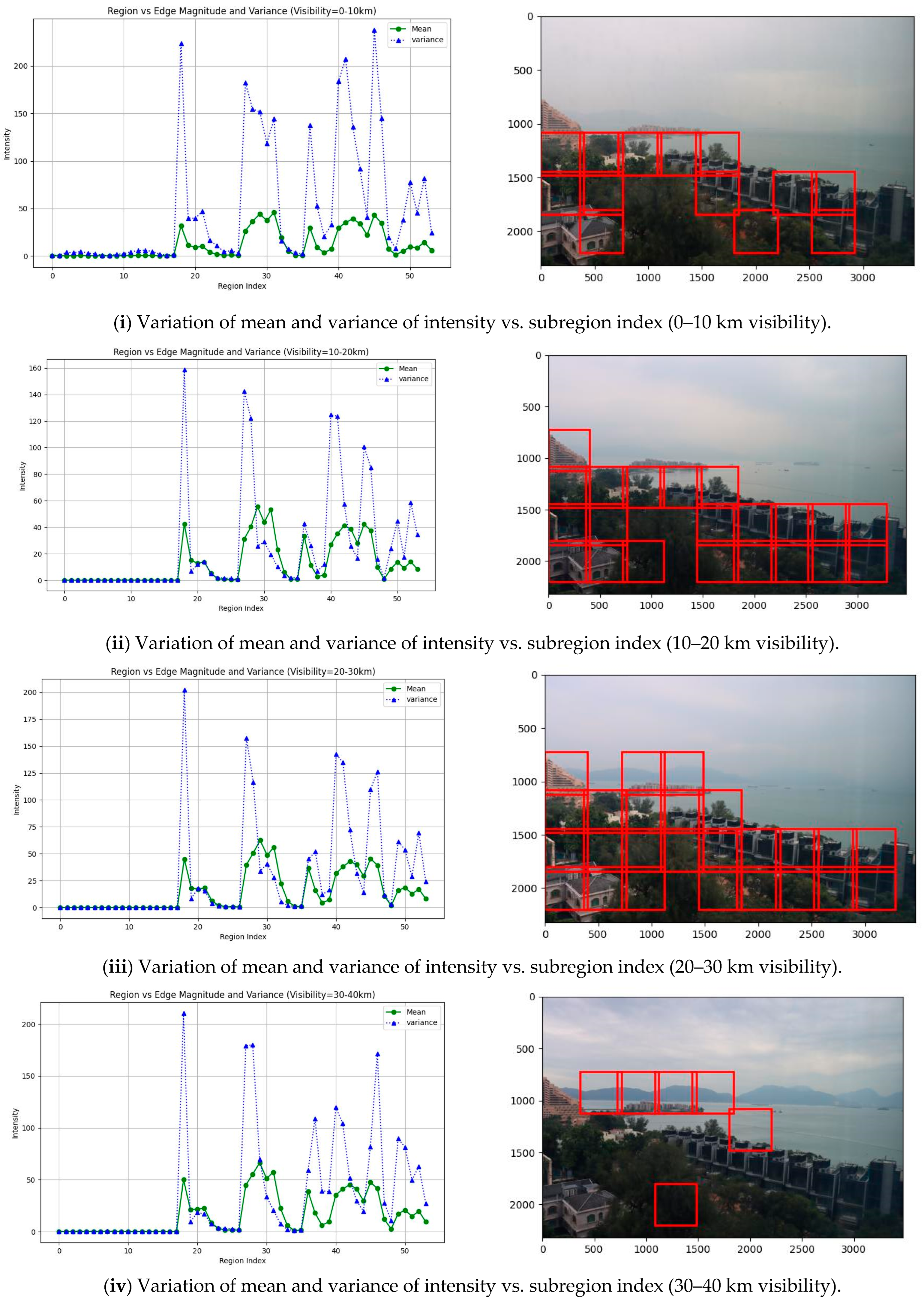

2.5.3. Selection of Effective Regions for Different Visibility Ranges

2.5.4. Image Feature Extraction and VRC

2.5.5. Formulation of the Effective Subregions’ Feature Vector

2.5.6. Multi-Class Models for Visibility Estimation

2.5.7. ANN Modeling

2.5.8. Visibility Estimation Algorithm Design

2.5.9. Step-by-Step Procedures

1. Digital images and the corresponding visibility readings covering different visibility ranges are collected with the camera at a fixed viewing angle, and the visibility image database is built. Edge-averaging analysis is then applied to the database.

2. The proposed LMO extraction algorithms are applied to the edge-averaging image to locate the subregions containing the different LMOs.

3. The mean and variance of the clearness index of each subregion are calculated for each visibility range. The developed subregion selection method is then applied to derive the effective subregion selection matrix Me.

4. A pre-trained ANN (e.g., ResNet) is used to extract the image features of each subregion. The subregions' image features are combined to form a composite feature vector F. The visibility values and F are used to train an ANN as the VRC.

5. The feature vectors F are applied to the VRC to determine the visibility range. The estimated visibility range and the effective subregion selection matrix Me are used to derive the effective subregions' feature vector Fs, which is used together with the visibility values vi to train an ANN as the visibility estimator for that visibility range. Step 5 is repeated for the other feature vectors F in the dataset to train the ANNs for the different visibility ranges. Finally, a multi-class ANN model is obtained for visibility estimation.

6. A test image is input to the visibility estimation system. The results of the pre-processing stage are used to extract the subregion images. The feature vector of each subregion is generated, the composite feature vector F is formed, and the VRC is used to find the visibility range.

7. The visibility range and the subregion selection matrix Me are used to select the set of effective subregions, and the effective subregions' feature vector Fs is formed.

8. Fs is applied to the multi-class visibility estimator for that visibility range to estimate the visibility.
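
Steps 6 to 8 describe a two-stage inference. The VRC first picks a visibility range from the composite vector F. The matching row of Me then selects which subregion features form Fs, and Fs is passed to that range's estimator. The sketch below is minimal and rests on several assumptions. The per-subregion features are already extracted (e.g., by a pre-trained ResNet). "Combined" is taken to mean concatenation. Me is stored as a binary array with one row per visibility range and one column per subregion. The classifier and estimators in the usage lines are hypothetical stand-ins for the trained ANNs.

```python
from typing import Callable, Mapping, Sequence, Tuple

import numpy as np


def composite_vector(sub_feats: Sequence[np.ndarray]) -> np.ndarray:
    """Form the composite vector F (assumed here: concatenation of subregion features)."""
    return np.concatenate(sub_feats)


def effective_vector(sub_feats: Sequence[np.ndarray], me_row: Sequence[int]) -> np.ndarray:
    """Form Fs from the subregions whose entry in the selected row of Me is 1."""
    chosen = [f for f, keep in zip(sub_feats, me_row) if keep]
    return np.concatenate(chosen)


def estimate_visibility(
    sub_feats: Sequence[np.ndarray],
    me: np.ndarray,
    vrc: Callable[[np.ndarray], int],
    estimators: Mapping[int, Callable[[np.ndarray], float]],
) -> Tuple[int, float]:
    """Classify the visibility range from F, then estimate visibility from Fs."""
    f = composite_vector(sub_feats)          # step 6: composite feature vector F
    k = vrc(f)                               # step 6: visibility range from the VRC
    fs = effective_vector(sub_feats, me[k])  # step 7: effective subregions' vector Fs
    return k, float(estimators[k](fs))       # step 8: range-specific estimator


# Hypothetical stand-ins: 2 ranges x 3 subregions, toy classifier and estimators.
me = np.array([[1, 0, 1],
               [0, 1, 1]])
vrc = lambda f: 0 if f.mean() < 0.5 else 1
estimators = {0: lambda fs: 5.0 + fs.sum(), 1: lambda fs: 30.0 + fs.sum()}
feats = [np.array([0.1, 0.2]), np.array([0.3, 0.4]), np.array([0.0, 0.1])]
k, v = estimate_visibility(feats, me, vrc, estimators)
print(k, round(v, 2))  # 0 5.4
```

Keeping Me as a lookup table means that only the estimator for the predicted range is evaluated. That estimator sees only the subregions found effective for its range.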

3. Experiment Results and Analysis

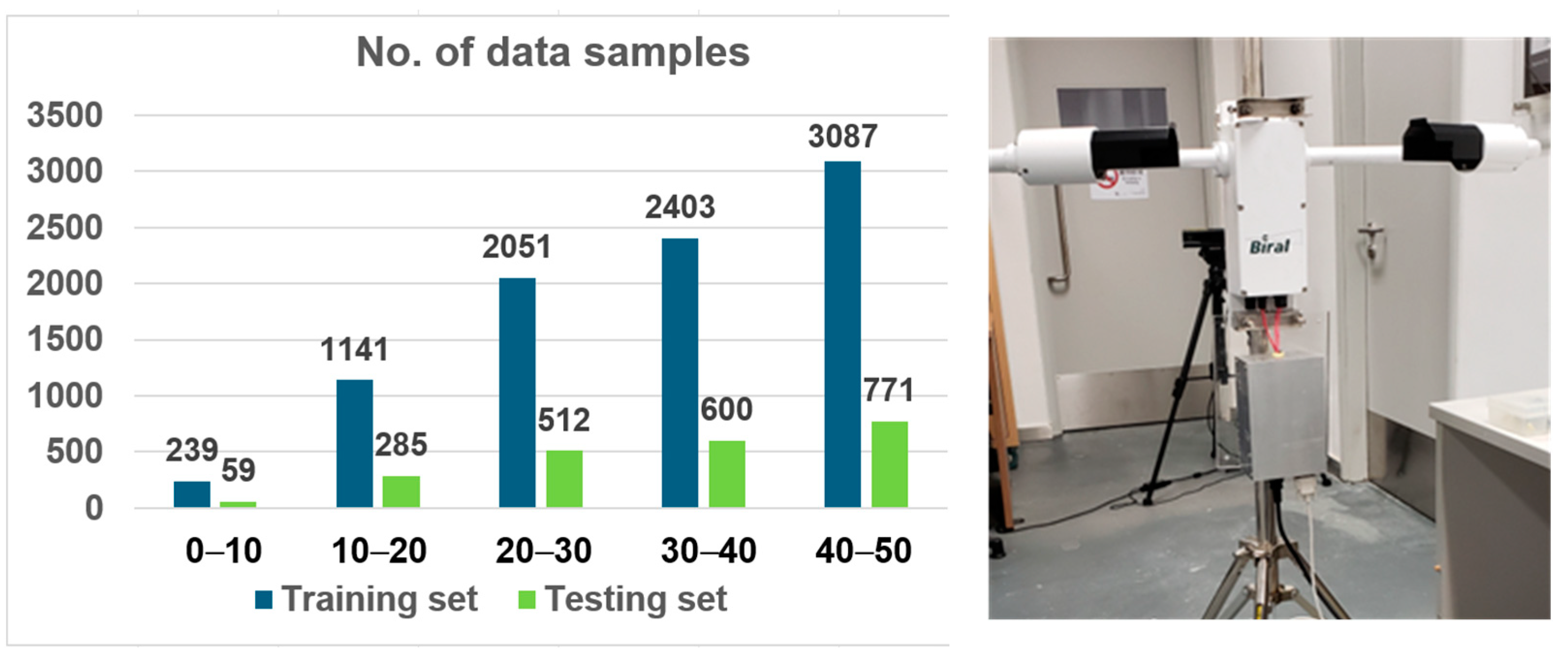

3.1. Data and Equipment

3.2. Result and Analysis

3.2.1. Detection of Static Regions

3.2.2. Selection of Effective Subregions

3.2.3. Visibility Range Classifications

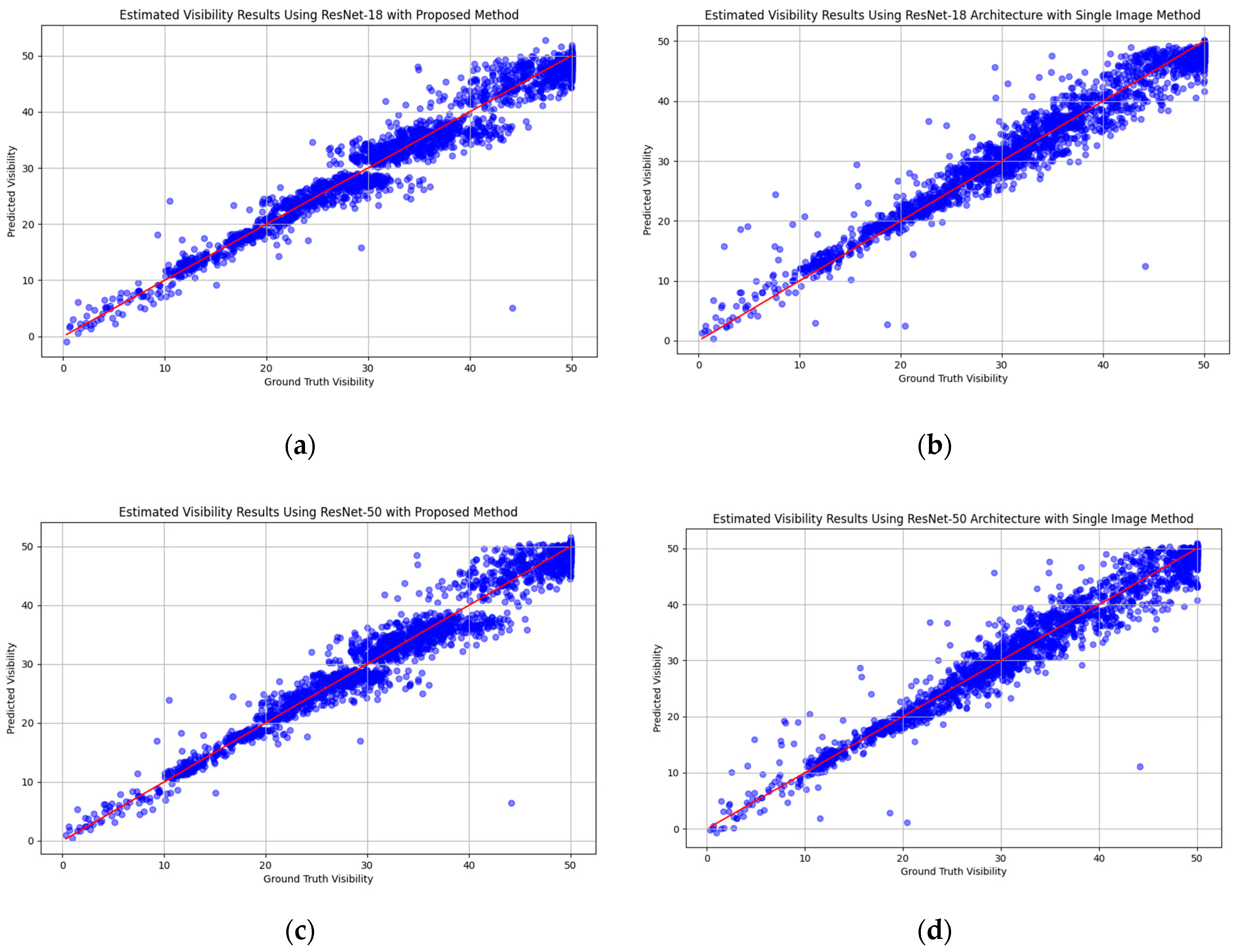

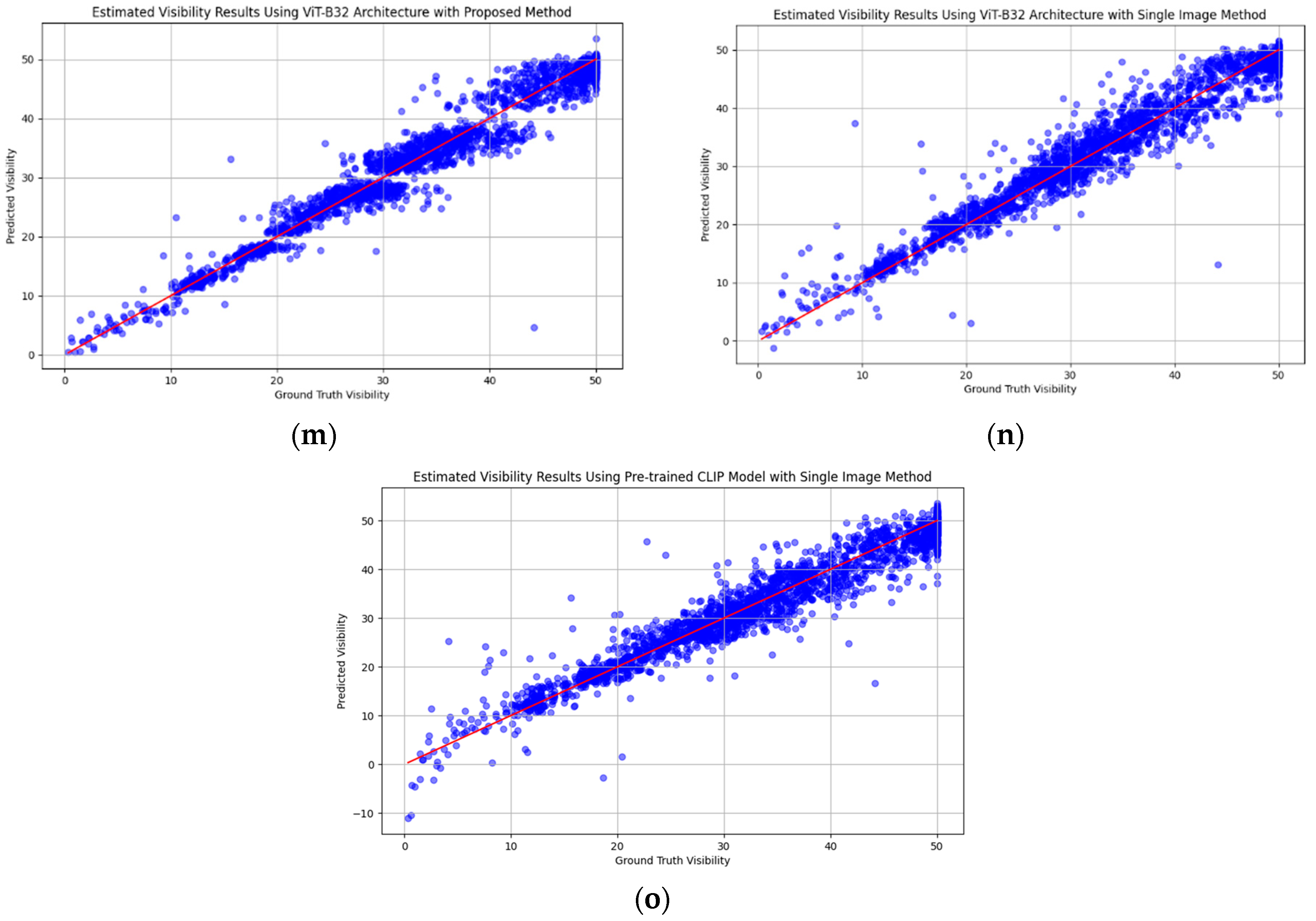

3.2.4. Visibility Estimation

4. Discussion and Summary

- Localized Information—Subregions of an image may contain more relevant and detailed information about the visibility conditions in those specific areas. By focusing on these regions, the model can make more accurate predictions.

- Noise Reduction—The whole image may include irrelevant or noisy data that can negatively impact the model’s performance. By targeting effective subregions, the model avoids these extraneous details and focuses on the parts of the image that matter most.

- Enhanced Feature Extraction—Different parts of an image may have varying visibility conditions. Using subregions allows the model to extract features that are specifically tailored to those conditions, improving the overall accuracy of the visibility estimation.

- Better Handling of Variability—Large images can have significant variability in visibility conditions across different areas. Using effective subregions, the model can better handle this variability and provide more accurate and context-specific predictions.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item | Configuration |
|---|---|
| Operating System | Linux |
| Memory Capacity | 256 GB |
| Central Processing Unit (CPU) | Intel(R) Xeon(R) Gold 6426Y |
| Graphics Processing Unit (GPU) | NVIDIA RTX 4090 |

| Visibility Range (km) | 0–10 | 10–20 | 20–30 | 30–40 | 40–50 |
|---|---|---|---|---|---|
| No. of training set sample images | 239 | 1141 | 2051 | 2403 | 3087 |
| No. of test set sample images | 59 | 285 | 512 | 600 | 771 |
| Total no. of sample images | 298 | 1426 | 2563 | 3003 | 3858 |
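
Each entry in the totals row is the sum of the training and test counts for that range. The check below uses only the counts in the table. It also shows that each visibility range was split roughly 80/20 between training and testing.

```python
RANGES_KM = ["0-10", "10-20", "20-30", "30-40", "40-50"]
TRAIN = [239, 1141, 2051, 2403, 3087]
TEST = [59, 285, 512, 600, 771]


def split_summary(train, test):
    """Return (total images, fraction held out for testing) for each visibility range."""
    return [(a + b, b / (a + b)) for a, b in zip(train, test)]


for label, (total, frac) in zip(RANGES_KM, split_summary(TRAIN, TEST)):
    print(f"{label} km: {total} images, {frac:.1%} in the test set")
```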

| Visibility Range (km) | 0–10 | 10–20 | 20–30 | 30–40 | 40–50 |
|---|---|---|---|---|---|
| Subregion method | 95% | 90% | 85% | 81% | 91% |
| YOLO11 | 88% | 90% | 85% | 82% | 91% |
| EfficientNet-B7 | 95% | 88% | 82% | 69% | 94% |
| CLIP (ViT-B/32) | 76% | 91% | 73% | 71% | 92% |
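
This section does not define the per-range percentages. The sketch below assumes that each one is the fraction of test images from that true visibility range that the classifier assigns to the correct range, i.e., per-class recall. It also assumes half-open 10 km bins for mapping readings to classes. Both are assumptions, and the helper names are hypothetical.

```python
import numpy as np


def range_index(visibility_km: float, width_km: float = 10.0, n_ranges: int = 5) -> int:
    """Map a reading to a range class: 0-10 km -> 0, ..., 40-50 km -> 4.

    Assumes half-open bins [0, 10), [10, 20), ...; readings of 50 km or more go to the last class.
    """
    return min(int(visibility_km // width_km), n_ranges - 1)


def per_range_accuracy(y_true, y_pred, n_ranges: int = 5) -> np.ndarray:
    """Fraction of test images from each true range that are assigned to that range."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    acc = np.full(n_ranges, np.nan)  # NaN marks ranges with no test images
    for k in range(n_ranges):
        in_range = y_true == k
        if in_range.any():
            acc[k] = np.mean(y_pred[in_range] == k)
    return acc


true_km = [4.0, 8.5, 12.0, 18.0, 27.0, 44.0]
y_true = [range_index(v) for v in true_km]  # [0, 0, 1, 1, 2, 4]
print(per_range_accuracy(y_true, [0, 1, 1, 1, 2, 4]))
```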

| Pre-trained ANN | Method | A (0–10 km) MSE | A (0–10 km) MAE | B (10–20 km) MSE | B (10–20 km) MAE | C (20–30 km) MSE | C (20–30 km) MAE | D (30–40 km) MSE | D (30–40 km) MAE | E (40–50 km) MSE | E (40–50 km) MAE | Overall MSE | Overall MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ResNet-18 | Proposed Method | 27.40 | 1.98 | 2.76 | 1.01 | 5.75 | 1.72 | 7.80 | 2.15 | 6.01 | 1.78 | 6.64 | 1.77 |
| ResNet-18 | Single Image Approach | 22.74 | 2.96 | 4.99 | 1.40 | 5.75 | 1.60 | 7.07 | 2.03 | 7.47 | 2.05 | 7.06 | 1.88 |
| ResNet-50 | Proposed Method | 25.50 | 1.84 | 2.24 | 0.87 | 5.46 | 1.66 | 7.66 | 2.15 | 5.91 | 1.69 | 6.38 | 1.71 |
| ResNet-50 | Single Image Approach | 14.79 | 2.45 | 3.94 | 1.04 | 6.27 | 1.69 | 7.20 | 2.09 | 7.89 | 1.78 | 7.01 | 1.77 |
| ResNet-101 | Proposed Method | 26.22 | 2.02 | 2.42 | 0.92 | 5.22 | 1.64 | 7.87 | 2.13 | 6.31 | 1.70 | 6.56 | 1.71 |
| ResNet-101 | Single Image Approach | 12.69 | 2.47 | 3.53 | 1.04 | 5.04 | 1.59 | 7.00 | 1.96 | 8.82 | 2.13 | 6.89 | 1.83 |
| EfficientNet-B0 | Proposed Method | 27.04 | 2.05 | 2.42 | 0.94 | 6.34 | 1.83 | 7.59 | 2.12 | 5.84 | 1.77 | 6.62 | 1.79 |
| EfficientNet-B0 | Single Image Approach | 8.66 | 2.42 | 6.17 | 1.80 | 7.20 | 1.96 | 6.90 | 2.00 | 7.13 | 1.84 | 7.00 | 1.92 |
| EfficientNet-B7 | Proposed Method | 26.45 | 1.94 | 2.28 | 0.89 | 5.82 | 1.76 | 7.70 | 2.13 | 5.95 | 1.71 | 6.53 | 1.74 |
| EfficientNet-B7 | Single Image Approach | 10.26 | 2.47 | 3.28 | 1.07 | 4.51 | 1.41 | 7.11 | 2.04 | 8.13 | 2.03 | 6.46 | 1.78 |
| ViT-B/16 | Proposed Method | 28.25 | 1.94 | 2.43 | 0.95 | 5.57 | 1.67 | 7.77 | 2.16 | 5.93 | 1.75 | 6.55 | 1.75 |
| ViT-B/16 | Single Image Approach | 38.63 | 3.50 | 4.00 | 1.30 | 6.74 | 1.79 | 8.52 | 2.22 | 9.06 | 2.09 | 8.52 | 1.99 |
| ViT-B/32 | Proposed Method | 28.17 | 2.00 | 2.28 | 0.94 | 5.41 | 1.66 | 7.97 | 2.14 | 5.98 | 1.73 | 6.56 | 1.73 |
| ViT-B/32 | Single Image Approach | 27.91 | 3.03 | 6.02 | 1.54 | 6.98 | 1.93 | 8.94 | 2.29 | 7.51 | 1.87 | 8.12 | 1.98 |
| CLIP (ViT-B/32) | Single Image Approach | 4.61 | 1.92 | 17.0 | 3.17 | 5.40 | 2.02 | 11.80 | 2.60 | 0.53 | 0.68 | 10.70 | 2.31 |
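
The estimation results are reported as mean squared error (MSE) and mean absolute error (MAE) for each visibility range (A to E) and overall. The minimal sketch below computes both metrics. It assumes visibility is given in km, so MSE is in km² and MAE is in km. It also assumes that test images are grouped by their true visibility range and that the overall figure pools all test images. This section does not state either choice, and the helper names are hypothetical.

```python
import numpy as np


def mse_mae(y_true, y_pred):
    """Return (MSE, MAE) of visibility estimates; with readings in km, MSE is km^2 and MAE is km."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return float(np.mean(err ** 2)), float(np.mean(np.abs(err)))


def metrics_by_range(y_true, y_pred, width_km=10.0, n_ranges=5):
    """MSE/MAE per true visibility range (A-E) plus an overall figure pooled over all images."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # Assumed half-open 10 km bins; readings of 50 km or more fall in the last range.
    classes = np.minimum((y_true // width_km).astype(int), n_ranges - 1)
    out = {}
    for k, name in enumerate("ABCDE"[:n_ranges]):
        sel = classes == k
        if sel.any():
            out[name] = mse_mae(y_true[sel], y_pred[sel])
    out["Overall"] = mse_mae(y_true, y_pred)
    return out


m = metrics_by_range([5.0, 15.0, 25.0], [6.0, 13.0, 25.0])
```

Because MSE squares each error, a few large misses dominate it. This is one reason a range can show a high MSE alongside a modest MAE, as in range A above.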

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lo, W.-L.; Wong, K.-W.; Hsung, R.T.-C.; Chung, H.S.-H.; Fu, H. Meteorological Visibility Estimation Using Landmark Object Extraction and the ANN Method. Sensors 2025, 25, 951. https://doi.org/10.3390/s25030951

Lo W-L, Wong K-W, Hsung RT-C, Chung HS-H, Fu H. Meteorological Visibility Estimation Using Landmark Object Extraction and the ANN Method. Sensors. 2025; 25(3):951. https://doi.org/10.3390/s25030951

Chicago/Turabian Style: Lo, Wai-Lun, Kwok-Wai Wong, Richard Tai-Chiu Hsung, Henry Shu-Hung Chung, and Hong Fu. 2025. "Meteorological Visibility Estimation Using Landmark Object Extraction and the ANN Method" Sensors 25, no. 3: 951. https://doi.org/10.3390/s25030951

APA Style: Lo, W.-L., Wong, K.-W., Hsung, R. T.-C., Chung, H. S.-H., & Fu, H. (2025). Meteorological Visibility Estimation Using Landmark Object Extraction and the ANN Method. Sensors, 25(3), 951. https://doi.org/10.3390/s25030951