Abstract

Monocular 3D object detection is rapidly emerging as a key research direction in autonomous driving, owing to its resource efficiency and ease of implementation. However, existing methods are limited in cross-dimensional feature attention and multi-order contextual information modeling, which constrains their detection performance in complex scenes. To address these limitations, we propose MonoAMP, an adaptive multi-order perceptual aggregation algorithm for monocular 3D object detection. First, we introduce triplet attention to enhance cross-dimensional feature interaction. Second, we design an adaptive multi-order perceptual aggregation module that dynamically captures multi-order contextual information and employs an adaptive aggregation strategy to enhance target perception. Finally, we propose an uncertainty-guided adaptive depth ensemble strategy that models the uncertainty distribution in depth estimation and effectively fuses multiple depth predictions. Experiments show that MonoAMP significantly improves performance on the KITTI dataset at the moderate difficulty level, achieving 16.80% and 24.47%. In addition, the ablation study shows a 3.78% improvement in detection accuracy over the baseline method. Compared with other advanced methods, MonoAMP demonstrates superior detection capability, especially in complex scenarios.

1. Introduction

Three-dimensional object detection plays an essential role in environmental understanding and trajectory forecasting for autonomous vehicles. Currently, the top-performing 3D detectors rely mainly on LiDAR sensors because they provide highly accurate depth measurements of the surroundings. However, LiDAR-based systems are prohibitively expensive, which hinders their widespread adoption. In contrast, monocular cameras have attracted increasing attention across application scenarios due to their low cost and ease of deployment.

Monocular 3D object detection faces several significant challenges. Although existing methods have improved feature extraction, feature fusion, and bounding box regression, several critical issues persist: (1) Limited receptive field: Monocular 3D object detection relies heavily on the feature extractor to capture spatial information. However, many existing methods [1,2,3,4] are constrained by the limited receptive field of their backbone networks. A small receptive field prevents the model from capturing long-range dependencies and contextual information, which matters most for distant or occluded objects. When objects are far away or partially hidden (e.g., in urban driving scenes), these methods struggle to capture global features, leading to inaccurate object localization and depth estimation. (2) Insufficient multi-scale feature capture: Driving scenes contain objects of widely varying sizes and distances, making multi-scale feature extraction essential. However, existing methods [5,6,7,8] often fail to capture features at different scales because they do not adequately model spatial correlations across resolutions. These methods typically rely on single-scale feature extractors or shallow architectures that cannot cover the full range of object sizes, which degrades performance in complex scenes with large scale variations. (3) Insufficient multi-order contextual information capture: In real-world environments, objects do not exist in isolation, and their semantic and spatial relationships are essential for accurate detection. Many current methods [9,10,11,12,13] fail to adequately capture multi-order contextual information, which includes both short-range and long-range dependencies between objects. Without modeling these relationships, the model becomes less robust and makes errors in object identification and spatial understanding.
(4) Insufficient integration of channel information: Modern deep learning models represent different aspects of the input data with multi-channel feature maps. However, many methods [14,15,16] struggle to aggregate this channel information effectively, leading to redundancy or the loss of critical features. They often fail to exploit inter-channel dependencies and overlook the rich semantic relationships between channels, which are crucial for accurately representing object features. (5) Reliance on a single depth estimate: Depth estimation from monocular images is inherently ambiguous, and relying on a single depth prediction method often generalizes poorly across diverse and dynamic environments. Many modern monocular 3D object detection methods [17,18,19] depend on a single depth estimation strategy, which lacks the robustness and generalization needed for complex, dynamic scenes. (6) Limitations of traditional attention mechanisms: Traditional attention mechanisms enhance feature representation by focusing on key regions of interest. However, existing methods [20,21] mainly process individual dimensions and fail to exploit the complementarity between features, which limits the representational power of feature semantics. Although CBAM [22] enhances feature expression by adding a spatial attention mechanism, it computes channel and spatial attention in separate sequential steps and therefore struggles to model semantic dependencies across dimensions.

Similar issues persist in more recent works. YOLOBU [23] progressively analyzes local geometric and semantic information to improve detection accuracy. PDR [24] proposes a progressive depth regularization strategy to improve depth estimation quality. MonoEdge [25] exploits local edge features to improve detection in regions with blurred edges. MonoRCNN++ [26] introduces a multivariate probabilistic framework to better model the diversity of objects. MonoNeRD [27] enhances detection performance through implicit geometric modeling and volumetric rendering. Keypoint3D [28] projects the geometric center of 3D objects onto the 2D image plane as keypoints and integrates a self-adaptive elliptical Gaussian filter. DL-VFFA [29] captures the relationship between local details and the global context by improving voxel processing. AEPF [30] integrates an attention mechanism into the feature fusion strategy to enhance detection accuracy. However, these methods overlook the critical role of cross-dimensional feature interaction and multi-order contextual information modeling, particularly in the absence of additional depth information. This limits their performance in complex scenes and prevents them from fully exploiting the latent relationships between features.

In summary, existing methods lack cross-dimensional feature interaction and multi-order contextual information modeling, which limits detection accuracy. To address these problems, we propose an adaptive multi-order perceptual aggregation framework, called MonoAMP, that explicitly incorporates both into detection. Evaluation on the KITTI [31] benchmark shows that our method achieves significant performance improvements. To assess the generalization ability and robustness of the model, we also conduct experiments on other datasets, where the model performs consistently well. The main contributions of this paper are summarized as follows:

- We introduce the triplet attention mechanism and integrate it into the backbone network to alleviate the issue of insufficient cross-dimensional feature interaction.

- We design an adaptive multi-order perceptual aggregation module, which dynamically models multi-order contextual information and effectively integrates channel information, addressing the issues of insufficient contextual information capture and inadequate channel information integration.

- To further improve the accuracy of depth estimation in the absence of additional data, we propose an uncertainty-guided depth ensemble strategy.

2. Related Work

2.1. Anchor-Based Monocular 3D Detector

Accurately predicting the 3D poses of targets remains a primary challenge in monocular 3D detection due to the lack of depth information. Inspired by anchor-based 2D object detection methods, researchers have regressed vehicle 3D pose parameters directly from images using convolutional neural networks. M3D-RPN [10] is a pioneering anchor-based framework that directly predicts the 3D position and dimensions of targets using 2D/3D geometric constraints and a 3D region proposal network. MonoPair [32] introduces foreground–foreground and foreground–background anchor pairing, enhancing the understanding of 3D spatial relationships through a parallelized pairing prediction network. Kinematic3D [33] further extends these works by reconstructing 3D scene dynamics from monocular video and improving 3D target localization in videos through stable object orientation decomposition and self-balanced 3D confidence estimation. MonoRCNN [8] generates dense anchors with a region proposal network (RPN), achieving end-to-end 3D localization through depth estimation and regional feature extraction. To address feature misalignment in anchor-based methods, M3DSSD [34] introduces a two-stage feature alignment strategy comprising target shape alignment and 2D/3D center alignment. However, these methods still rely on predefined anchors, which increase computational complexity and restrict model flexibility.

2.2. Center-Based Monocular 3D Detector

To reduce the reliance on anchors, researchers proposed the anchor-free object detection framework CenterNet [35] in 2019. This framework abstracts each target as the center of its 2D bounding box and treats it as a keypoint. Subsequent work extended this idea to monocular 3D detection. RTM3D [36] is a multi-scale feature pyramid 3D detection network that describes the 3D bounding box of a vehicle in image space using nine keypoints: the eight vertices of the 3D box and one center point. It then uses geometric relationships between the 3D and 2D views to determine the target's spatial attributes. SMOKE [18] adopts a simpler architecture with only two branches: keypoint estimation and 3D bounding box regression. Its multi-step disentangling approach to constructing 3D bounding boxes significantly improves network convergence and detection accuracy. MonoFlex [15] recovers the target's scale, position, and orientation by detecting the center point and incorporating geometric constraints. Building on this foundation, MonoDDE [37] further mines depth cues from the object's perspective. MonoDLE [11] shows that although 2D bounding box prediction could be removed, 2D boxes remain crucial for predicting 3D attributes, and it further identifies depth estimation error as the main factor limiting the accuracy of this task. Moreover, MonoCon [38] demonstrates that learning auxiliary information around the object can significantly improve the model's generalization.

2.3. Transformer-Based Monocular 3D Detector

Recently, Transformer-based monocular 3D detection methods have made significant progress in enhancing global perception. For example, MonoDTR [39] incorporates depth positional encoding to inject global depth information into the Transformer, which helps guide detection but relies on LiDAR for auxiliary supervision. In contrast, MonoDETR [40] uses foreground object annotations to generate depth maps that provide depth guidance for detection. To improve computational efficiency, MonoATT [41] employs an adaptive token transformer that allocates finer tokens to key areas. Despite these advances, Transformer-based monocular 3D detectors still suffer from high computational complexity and slow inference. These issues are particularly pronounced in real-world autonomous driving, where both the ability to integrate global information and low-latency responses are crucial. To date, no existing solution effectively balances these two requirements.

3. Approach

3.1. Overall Framework of MonoAMP Network

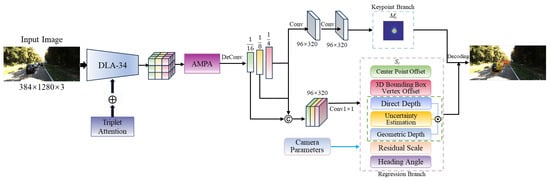

The overall framework of our proposed method is illustrated in Figure 1. The multi-task detection head comprises two main branches. The keypoint branch estimates the likelihood that a vehicle center is present at each location, producing a heatmap of vehicle center points. The regression branch predicts key geometric attributes of objects, including depth, dimensions, and orientation.

Figure 1.

Overall framework of MonoAMP. The pink dashed box denotes the regression branch, the green denotes the uncertainty-guided depth ensemble, and the blue represents the keypoint branch. The symbol ⊕ represents the fusion operation, and © denotes the feature map concatenation operation.

The backbone uses DLA-34 [42] to extract image features and incorporates triplet attention [43], which strengthens key feature extraction through interactions across different dimensions. The three-branch attention structure models cross-dimensional attention dependencies through rotation operations and residual mappings. It constructs attention maps on three orthogonal planes and computes adaptive attention weights for each decomposed dimension, which improves the discriminability of the features and yields efficient cross-dimensional attention interaction and fusion. The tail of the backbone is connected to an adaptive multi-order perceptual aggregation (AMPA) module, which aggregates multi-order contextual information, exploits inter-channel correlations, and enhances the model's feature selection capability.

Subsequently, a multi-scale feature hierarchy is constructed by progressively upsampling the AMPA output, yielding feature maps at 1/16, 1/8, and 1/4 of the input resolution. The multi-scale features are aligned to a unified representation and concatenated to form a joint semantic representation across scales. This fusion stage improves the detection of vehicles at different scales and mitigates blurred object boundaries. For the keypoint branch, the heatmap targets are generated by placing a two-dimensional Gaussian kernel at each ground-truth center point location, so that the ground-truth vehicle center on the heatmap corresponds to the pixel-level maximum response of the Gaussian kernel. For depth prediction in the regression branch, an uncertainty-guided depth ensemble adaptively fuses multiple depth estimates through weighted aggregation, improving the precision and robustness of depth estimation. The regression vector of the network mainly includes center point offsets, 3D box vertex offsets, vehicle residual scales, orientation angles, direct depth, uncertainty estimates, and geometric depth, among others.
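The heatmap target construction described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the MonoAMP code: the function name `draw_center_heatmap` is hypothetical, a fixed `sigma` is assumed (CenterNet-style detectors usually derive it from the box size), and overlapping objects are merged with an element-wise maximum so that every ground-truth center keeps a response of exactly 1. The 96 × 320 map size corresponds to 1/4 of the 384 × 1280 input.

```python
import numpy as np

def draw_center_heatmap(height, width, centers, sigma=2.0):
    """Return a (height, width) heatmap with a 2D Gaussian peak at each center.

    centers: list of (x, y) integer pixel coordinates on the output feature map.
    sigma:   Gaussian standard deviation in pixels (a fixed value is assumed here).
    Overlapping Gaussians are merged with an element-wise maximum.
    """
    heatmap = np.zeros((height, width), dtype=np.float64)
    ys, xs = np.mgrid[0:height, 0:width]  # pixel coordinate grids
    for cx, cy in centers:
        gaussian = np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2.0 * sigma ** 2))
        np.maximum(heatmap, gaussian, out=heatmap)  # keep the strongest response
    return heatmap

# Two nearby vehicles on a 1/4-resolution map of a 384 x 1280 image.
hm = draw_center_heatmap(96, 320, [(40, 30), (44, 31)], sigma=2.0)
print(hm[30, 40], hm[31, 44])  # both centers keep the peak value 1.0
```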

3.2. Triplet Attention Mechanism

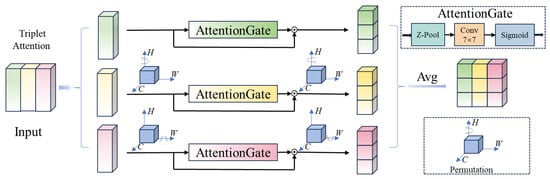

Existing attention mechanisms typically model feature relationships within a single dimension. SENet [44] reweights channels through channel attention, enhancing relevant features while suppressing irrelevant or redundant ones. However, SENet ignores spatial information and the correlations between spatial locations. CBAM [22] demonstrates the importance of capturing both channel and spatial attention, but it computes them separately and does not model cross-dimensional interactions. In monocular 3D object detection, the model must infer the spatial location, keypoints, pose, and size of objects from 2D images, so it needs to capture both local and global features during feature extraction. Compared with 2D detection, the task requires more refined feature representations, particularly for estimating depth, spatial pose, and scale variations. The key to improving performance therefore lies in effectively capturing the interaction between spatial and channel information. Triplet attention addresses cross-dimensional interaction with three parallel branches: two branches model the interaction between the channel dimension (C) and one spatial dimension (H or W), and the third computes spatial attention over H and W. Because the dimensions complement each other in this design, we expect triplet attention to be particularly beneficial for monocular 3D object detection, which requires fine-grained feature representations.

In this paper, we integrate triplet attention (TA) [43] into the backbone network to capture the mutual dependencies between the channel and spatial dimensions. Specifically, TA is inserted into the deeper levels of DLA-34, Level 4 and Level 5, which extract high-level semantic features, to model cross-dimensional information and provide the fine-grained feature interactions the task requires. As shown in Figure 2, TA strengthens cross-dimensional dependencies through parallel branches that combine rotation operations and residual transformations. In each branch, the features are compressed by Z-pool and a convolution, and a Sigmoid function computes attention weights that determine the importance of features along each dimension. This lets the backbone attend to features across multiple dimensions from a global perspective while preserving the original information of the input features. The added computational overhead is small, since each branch only adds a convolution over a two-channel pooled map.

Figure 2.

Illustration of TA.
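The three-branch computation described above can be sketched in NumPy for a single (C, H, W) feature map. This follows the published triplet attention design [43] as we understand it and is not the MonoAMP implementation: the helper names are hypothetical, batch normalization after each convolution is omitted, and the 7 × 7 kernels are random stand-ins for learned weights.

```python
import numpy as np

def z_pool(x):
    """Z-pool: stack the max and the mean over axis 0 -> shape (2, A, B)."""
    return np.stack([x.max(axis=0), x.mean(axis=0)], axis=0)

def conv2d_2to1(z, kernel):
    """'Same' 2D cross-correlation of a (2, A, B) map with a (2, k, k) kernel -> (A, B)."""
    k = kernel.shape[-1]
    pad = k // 2
    zp = np.pad(z, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps the size
    a, b = z.shape[1:]
    out = np.zeros((a, b))
    for i in range(k):
        for j in range(k):
            out += (zp[:, i:i + a, j:j + b] * kernel[:, i, j][:, None, None]).sum(axis=0)
    return out

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def attention_gate(x, kernel):
    """Pool over axis 0, convolve, squash with a sigmoid, and rescale x."""
    weights = sigmoid(conv2d_2to1(z_pool(x), kernel))  # (A, B), values in (0, 1)
    return x * weights[None, :, :]

def triplet_attention(x, kernels):
    """x: (C, H, W). kernels: three (2, k, k) arrays, one per branch."""
    # Branch 1: rotate to (H, C, W) so the gate models the C-W interaction.
    b1 = attention_gate(x.transpose(1, 0, 2), kernels[0]).transpose(1, 0, 2)
    # Branch 2: rotate to (W, H, C) so the gate models the H-C interaction.
    b2 = attention_gate(x.transpose(2, 1, 0), kernels[1]).transpose(2, 1, 0)
    # Branch 3: spatial attention over (H, W), pooling across channels.
    b3 = attention_gate(x, kernels[2])
    return (b1 + b2 + b3) / 3.0  # average the three branches

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 6, 10))
kernels = [0.1 * rng.standard_normal((2, 7, 7)) for _ in range(3)]
y = triplet_attention(x, kernels)
print(y.shape)
```

The rotations are plain axis permutations, so each branch reuses the same gate with a different axis playing the role of "channel"; this is why the extra cost stays at three small convolutions.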

3.3. The AMPA

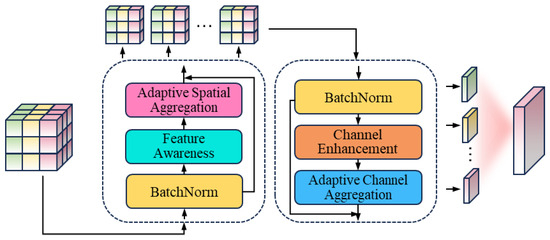

The proposed AMPA is designed to aggregate multi-order contextual and channel information; its structure is shown in Figure 3. It captures semantic correlations in contextual information and channel dependencies between features. AMPA consists of four core sub-modules: a feature awareness module, an adaptive spatial aggregation module, a channel enhancement module, and an adaptive channel aggregation module. The following subsections describe the construction and function of each component.

Figure 3.

Illustration of AMPA.

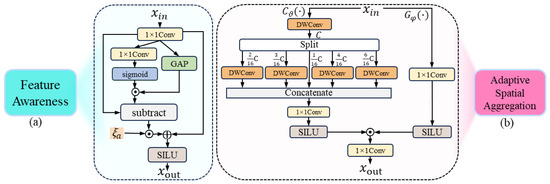

3.3.1. Feature Awareness Module

To balance the perception of local details and global semantic information, we design the feature awareness (FA) module, whose structure is shown in Figure 4a. Specifically, it computes the difference between the fine-grained features of the convolutional branch and dynamically weighted global features, highlighting the distinction between detailed and global information. The complete procedure is as follows:

where represents the local features Y extracted from using . denotes the global features. The weight generation component for dynamic weighting of the global features is represented as , where f denotes . Furthermore, is a learnable scaling factor, initialized to zero, for adjusting the weights of the difference term features.
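Because the FA equation is not reproduced here, the following NumPy sketch only illustrates the idea described in the text, under explicit assumptions: the global features are taken as a per-channel average of Y, the weight generator is a placeholder sigmoid (`_sigmoid` and `feature_awareness` are hypothetical names), and the difference term is scaled by a per-channel `gamma`. The one property taken directly from the text is that `gamma` starts at zero, so the module initially passes Y through unchanged.

```python
import numpy as np

def _sigmoid(v):
    """Placeholder weight generator (an assumption, not the paper's f)."""
    return 1.0 / (1.0 + np.exp(-v))

def feature_awareness(y, gamma, weight_fn=_sigmoid):
    """Hypothetical FA sketch.

    y:         (C, H, W) local features from the convolutional branch.
    gamma:     (C,) learnable scale for the difference term, initialized to zero.
    weight_fn: maps pooled global features (C,) to per-channel weights (C,).
    """
    g = y.mean(axis=(1, 2))                    # global features by average pooling, (C,)
    weighted_global = weight_fn(g) * g         # dynamically weighted global features
    diff = y - weighted_global[:, None, None]  # local detail relative to global context
    return y + gamma[:, None, None] * diff

rng = np.random.default_rng(1)
y = rng.standard_normal((4, 5, 6))
out_init = feature_awareness(y, np.zeros(4))   # identity at initialization
```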

Figure 4.

(a) The FA module. (b) The ASA module. The detailed structures corresponding to them are enclosed within their extended dashed boxes.

3.3.2. Adaptive Spatial Aggregation Module

Figure 4b shows the structure of the adaptive spatial aggregation (ASA) module. It consists of two pathways: a context pathway and a gating pathway.

The context pathway extracts multi-order contextual information. It applies depth-wise convolutions with different dilation factors, which enlarges the receptive field from local to global while keeping the computational cost low. The gating pathway adopts an adaptive gating mechanism. To adaptively aggregate the extracted multi-order features, we use the SiLU activation function [45], defined as $\mathrm{SiLU}(x) = x\,\sigma(x)$, where $\sigma(\cdot)$ denotes the sigmoid function. SiLU has smooth gradients, which contribute to more stable gradient updates during training. Its derivative is:

$$\frac{d}{dx}\,\mathrm{SiLU}(x) = \sigma(x)\bigl(1 + x\,(1 - \sigma(x))\bigr)$$

The input features are passed through SiLU to generate gating signals. The nonlinear transformation captures nonlinear dependencies among the input features, which makes the gating signals more discriminative. SiLU rescales the input features Y by multiplying them by $\sigma(Y)$: large positive inputs pass almost unchanged, while negative inputs are strongly attenuated rather than zeroed. Consequently, the gating signals adapt to the specific characteristics of the input features, and the importance of features at different orders is adjusted dynamically.
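The closed-form SiLU derivative can be checked numerically with a few lines of plain Python. This is an illustration of the formula only and is independent of the MonoAMP code; `numeric_grad` is a hypothetical helper used just for the check.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def silu(x):
    """SiLU(x) = x * sigmoid(x)."""
    return x * sigmoid(x)

def silu_grad(x):
    """Closed-form derivative: sigmoid(x) * (1 + x * (1 - sigmoid(x)))."""
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))

def numeric_grad(f, x, h=1e-6):
    """Central finite difference, used only to check silu_grad."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  silu={silu(x):+.4f}  "
          f"grad={silu_grad(x):.4f}  finite-diff={numeric_grad(silu, x):.4f}")
```

Note that SiLU is negative but small for negative inputs, which is the "attenuated rather than zeroed" behavior mentioned above.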

The outputs of the gating pathway and the context pathway are fused to produce the final output, which adaptively aggregates multi-order context features. The entire process is formulated as follows:

where f denotes . represents the input features of the gating pathway . represents the division of the input features into multiple channel subsets with different ratios for feature extraction, and .
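The two ASA pathways can be sketched in NumPy as follows. Every concrete choice here is an assumption for illustration, since the paper's values are not given in this text: channel split ratios (1, 3, 4), dilations (1, 2, 3), 3 × 3 depth-wise kernels with random stand-in weights, and an element-wise product as the fusion of the gating and context outputs. All function names are hypothetical.

```python
import numpy as np

def depthwise_dilated_conv(x, kernels, dilation):
    """'Same' depth-wise 2D convolution. x: (C, H, W); kernels: (C, k, k), k odd."""
    c, h, w = x.shape
    k = kernels.shape[-1]
    pad = dilation * (k // 2)                 # keeps the spatial size unchanged
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros_like(x)
    for i in range(k):
        for j in range(k):
            di, dj = i * dilation, j * dilation  # dilated tap offsets
            out += xp[:, di:di + h, dj:dj + w] * kernels[:, i, j][:, None, None]
    return out

def silu(v):
    return v / (1.0 + np.exp(-v))             # equals v * sigmoid(v)

def adaptive_spatial_aggregation(x, gate_input, ratios=(1, 3, 4),
                                 dilations=(1, 2, 3), k=3, seed=0):
    """Hypothetical ASA sketch: multi-order context pathway gated by SiLU."""
    rng = np.random.default_rng(seed)         # random stand-ins for learned weights
    c = x.shape[0]
    sizes = [c * r // sum(ratios) for r in ratios]
    sizes[-1] = c - sum(sizes[:-1])           # give any remainder to the last subset
    bounds = np.cumsum([0] + sizes)
    context = np.empty_like(x)
    # Context pathway: each channel subset gets its own dilation (its own "order").
    for (start, stop), d in zip(zip(bounds[:-1], bounds[1:]), dilations):
        kernels = 0.1 * rng.standard_normal((stop - start, k, k))
        context[start:stop] = depthwise_dilated_conv(x[start:stop], kernels, d)
    # Gating pathway: SiLU turns the gate input into input-dependent weights.
    return silu(gate_input) * context

rng = np.random.default_rng(2)
x = rng.standard_normal((8, 12, 16))
out = adaptive_spatial_aggregation(x, rng.standard_normal((8, 12, 16)))
```

With a k × k kernel and dilation d, each tap spans k + (k - 1)(d - 1) pixels, so the assumed dilations 1, 2, and 3 cover 3, 5, and 7 pixels at the same parameter cost, which is how the context pathway widens the receptive field cheaply.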

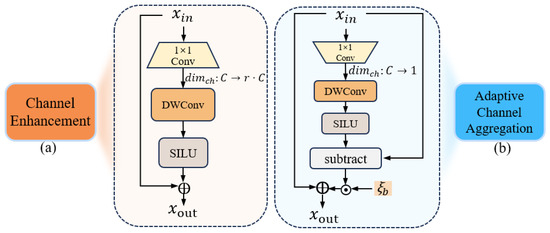

3.3.3. Channel Enhancement Module

The channel enhancement (CE) module improves the representational capability of the input features by generating high-dimensional channel features. The CE module is shown in Figure 5a. First, it expands the number of channels from the original dimension C to a higher dimension. The SiLU [45] activation function enhances discriminative feature representation through a non-linear mapping. The residual connection lets the module learn a residual mapping on top of the original features, and the output combines the input and transformed feature representations:

where is implemented by a channel-expanding projection using : .
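A 1 × 1 channel-expanding projection is simply a matrix product over the channel axis, which the following NumPy sketch shows. How the residual is combined when the channel count changes is not stated here, so the sketch assumes the residual is taken on the projected features (`y + SiLU(y)`); `channel_enhancement` and the expansion ratio of 4 are hypothetical.

```python
import numpy as np

def silu(v):
    return v / (1.0 + np.exp(-v))

def channel_enhancement(x, w_expand):
    """Hypothetical CE sketch.

    x:        (C, H, W) input features.
    w_expand: (rC, C) weights of a 1x1 channel-expanding projection.
    Returns (rC, H, W): projected features plus a SiLU residual branch (assumed form).
    """
    y = np.einsum("oc,chw->ohw", w_expand, x)  # 1x1 conv == matmul over channels
    return y + silu(y)

rng = np.random.default_rng(3)
x = rng.standard_normal((2, 3, 5))
out = channel_enhancement(x, rng.standard_normal((8, 2)))  # C = 2 -> rC = 8
print(out.shape)
```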

Figure 5.

(a) The CE module. (b) The ACA module. The detailed structures corresponding to them are enclosed within their extended dashed boxes. The symbol → denotes projection operations that reduce or expand channel dimensions.

3.3.4. Adaptive Channel Aggregation Module

The adaptive channel aggregation (ACA) module addresses insufficient channel dependency modeling and information redundancy in traditional methods. As shown in Figure 5b, it uses a channel compression and difference enhancement mechanism. First, the features are projected to a lower dimension along the channel axis, capturing global dependencies between channels. In addition, depth-wise convolutions are introduced to refine the channel feature representation. The weighted difference information is then fused with the original input features to produce richer output features:

where is implemented by a channel-reducing projection using : . is a channel-level learnable scaling factor initialized to zero, used to adjust the weight of the difference term.
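The compression-and-difference mechanism can be sketched as below. The assumptions are labeled: the channel-reducing projection is taken to compress all C channels to a single map (`w_reduce`), SiLU is applied to that map, and the difference between each channel and the compressed summary is scaled by a channel-wise `gamma` that, as stated in the text, starts at zero. The function name is hypothetical.

```python
import numpy as np

def silu(v):
    return v / (1.0 + np.exp(-v))

def adaptive_channel_aggregation(y, w_reduce, gamma):
    """Hypothetical ACA sketch.

    y:        (C, H, W) features (e.g., after depth-wise refinement).
    w_reduce: (C,) weights of a 1x1 projection compressing C channels to 1 (assumed).
    gamma:    (C,) channel-wise scale for the difference term, initialized to zero.
    """
    squeezed = silu(np.einsum("c,chw->hw", w_reduce, y))  # (H, W) cross-channel summary
    diff = y - squeezed[None, :, :]                        # what each channel adds to it
    return y + gamma[:, None, None] * diff

rng = np.random.default_rng(4)
y = rng.standard_normal((4, 3, 3))
out_init = adaptive_channel_aggregation(y, rng.standard_normal(4), np.zeros(4))
```

Initializing `gamma` at zero makes the module start as an identity mapping, so the difference term is only introduced as training finds it useful.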

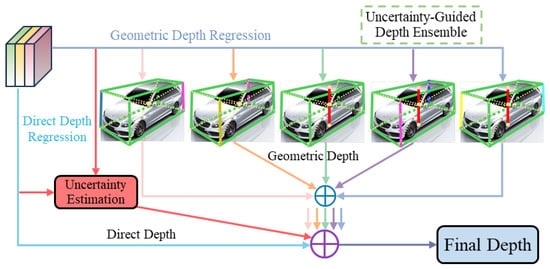

3.4. Uncertainty-Guided Depth Ensemble Strategy

For monocular 3D object detection, a single depth prediction method often struggles to handle the complexity of varied environments and scenarios. To enhance the robustness and accuracy of depth estimation, we propose an uncertainty-guided depth ensemble (UGDE). The depth estimates used in this paper include a directly regressed depth and multiple geometric depths derived from keypoints.

As shown in Figure 6, our depth ensemble strategy combines multiple depth estimation methods and adaptively weights different predictions based on uncertainty, generating more reliable depth estimates. Each depth prediction has a corresponding weight , which represents the model’s confidence in that prediction. Through uncertainty-based weighted averaging, predictions with higher confidence are given greater weight, dominating the depth ensemble. The computation formula for the ensemble is as follows:

where the weight term is defined as . Estimates with smaller uncertainty are given greater weights, while those with larger uncertainty are given lower weights.
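Since the weight definition is not reproduced here, the sketch below assumes inverse-uncertainty weights, w_i = 1/σ_i normalized over all estimates, which is the soft ensemble form used by MonoFlex [15]; MonoAMP's exact weighting may differ. The six inputs mirror the text: one direct depth plus five geometric depths. The depth and uncertainty values are made up for illustration.

```python
def uncertainty_weighted_depth(depths, sigmas):
    """Fuse K depth estimates with weights proportional to 1 / sigma (assumed form)."""
    if not depths or len(depths) != len(sigmas):
        raise ValueError("need exactly one positive uncertainty per depth estimate")
    weights = [1.0 / s for s in sigmas]            # smaller uncertainty -> larger weight
    total = sum(weights)
    return sum(w * d for w, d in zip(weights, depths)) / total

# Direct depth followed by five keypoint-based geometric depths (illustrative values).
depths = [21.0, 20.4, 20.9, 22.5, 20.1, 20.6]
sigmas = [0.8, 0.3, 0.5, 2.0, 0.4, 0.6]
print(uncertainty_weighted_depth(depths, sigmas))
```

The fused value always lies between the smallest and largest individual estimate, and the estimate with σ = 2.0 contributes least.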

Figure 6.

Illustration of the UGDE. In the geometric depth regression branch, we design five sets of geometric depths, including the vertical height of the vehicle center point and the heights of various diagonal combinations, and input them into the depth ensemble module.

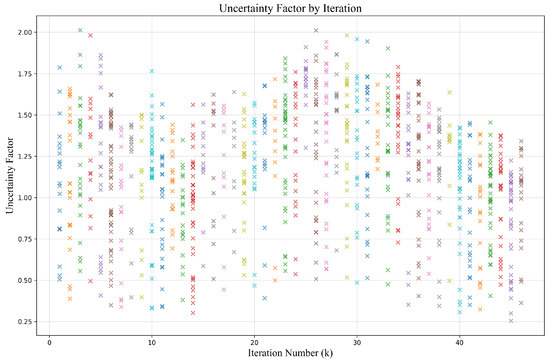

The uncertainty factor is computed from the model's regression feature maps, so it depends on both the input features and how well the model handles that input. During the forward pass, the regression feature maps contain the depth predictions together with other related attributes. We extract the features at the target locations (points of interest, POI) from these maps and obtain the regression results for each location via the corresponding indices. The uncertainty factor is stored in a dedicated channel of the regression feature map and indicates how reliable the model's depth prediction is at that location. In practice, the uncertainty factor is clamped to a predefined range so that it cannot exceed reasonable limits, keeping the uncertainty predictions stable and adjustable. Figure 7 visualizes the uncertainty factor during training. The uncertainty varies with the input image and scene conditions: when the training data include complex, occluded, or blurred scenes, the model may exhibit higher uncertainty. The model architecture and training process also affect the magnitude of the uncertainty factor.
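Reading the uncertainty at the POIs amounts to flattening the regression map and indexing it. The following NumPy sketch assumes a hypothetical layout: the dedicated channel stores a log-uncertainty, the POIs are given as flat indices y · W + x, and the clamp range (-4, 4) stands in for the paper's predefined range, which is not given here.

```python
import numpy as np

def gather_poi_uncertainty(reg_map, poi_indices, unc_channel, log_range=(-4.0, 4.0)):
    """Read a per-object uncertainty from a dedicated channel of a regression map.

    reg_map:     (C, H, W) regression feature map.
    poi_indices: flat indices (y * W + x) of the object centers (POIs).
    unc_channel: channel assumed to store log-uncertainty (hypothetical layout).
    log_range:   clamp range (placeholder for the paper's predefined range).
    """
    c, h, w = reg_map.shape
    flat = reg_map.reshape(c, h * w)                 # (C, H*W)
    poi_values = flat[:, poi_indices]                # (C, N): all outputs per POI
    log_sigma = np.clip(poi_values[unc_channel], *log_range)
    return np.exp(log_sigma)                         # positive uncertainty per object

reg = np.zeros((8, 4, 10))
reg[5, 2, 3] = 10.0    # an out-of-range value at (y=2, x=3), flat index 23
reg[5, 1, 7] = -1.0    # an in-range value at (y=1, x=7), flat index 17
sig = gather_poi_uncertainty(reg, np.array([23, 17]), unc_channel=5)
```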

Figure 7.

The uncertainty factor visualization. We present the values of the uncertainty factor at different iterations during the training process. We use different colors to more intuitively distinguish the uncertainty factor at different iterations.

3.5. Multi-Task Loss Function Design

Based on the designed network output, the loss function consists of seven components: center point classification loss , 3D bounding box vertex offset loss , center point offset loss , direct regression depth loss , geometric depth loss , vehicle 3D residual scale loss , and heading angle loss . The center point classification loss is computed using focal loss [46]:

where and are hyperparameters that adjust the weights of the positive and negative sample losses. N is the number of positive samples. represents the predicted response value at (i, j). represents the ground-truth response value at (i, j) given by the Gaussian kernel function , and is the standard deviation of the Gaussian distribution.
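The heatmap loss can be sketched in NumPy using the penalty-reduced focal loss formulation popularized by CenterNet [35]: positives (ground truth exactly 1) use a (1 - p)^α weighting, while negatives are down-weighted near object centers by (1 - y)^β. The defaults α = 2 and β = 4 follow CenterNet and are assumptions here, as is the function name.

```python
import numpy as np

def center_focal_loss(pred, gt, alpha=2.0, beta=4.0, eps=1e-12):
    """Penalty-reduced focal loss on a center heatmap (CenterNet-style).

    pred, gt: arrays of the same shape; gt is 1.0 at object centers and
    Gaussian-decayed elsewhere. alpha/beta defaults are assumed, not MonoAMP's.
    """
    pred = np.clip(pred, eps, 1.0 - eps)     # avoid log(0)
    pos = gt == 1.0
    pos_loss = ((1.0 - pred) ** alpha * np.log(pred))[pos].sum()
    neg_loss = ((1.0 - gt) ** beta * pred ** alpha * np.log(1.0 - pred))[~pos].sum()
    num_pos = max(int(pos.sum()), 1)         # N: number of positive samples
    return -(pos_loss + neg_loss) / num_pos

gt = np.zeros((5, 5))
gt[2, 2] = 1.0   # object center
gt[2, 3] = 0.6   # Gaussian-decayed neighbor
good = center_focal_loss(gt.copy(), gt)
bad = center_focal_loss(np.full((5, 5), 0.5), gt)
```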

The center point offset loss and the 3D bounding box vertex offset loss are computed with the L1 loss:

where P represents the ground-truth target point coordinates. R is the downsampling factor. denotes the true coordinate offset. denotes the floor operation. represents the predicted coordinate offset.
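The offset target is the sub-pixel remainder lost when a center P is mapped onto a grid with downsampling factor R, i.e., P/R minus its floor. The plain-Python sketch below computes it and the L1 loss for one object; the function names are hypothetical, and R = 4 is chosen to match the 1/4-resolution feature map.

```python
import math

def center_offset_target(px, py, R):
    """Sub-pixel offset (P / R - floor(P / R)) for a center on a stride-R grid."""
    sx, sy = px / R, py / R
    return (sx - math.floor(sx), sy - math.floor(sy))

def l1_loss(pred, target):
    """Mean absolute error over paired offset components."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)

target = center_offset_target(130.0, 57.0, R=4)  # (0.5, 0.25)
loss = l1_loss((0.4, 0.3), target)
print(target, loss)
```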

For the vehicle 3D residual scale , each regression quantity is evaluated using the Smooth L1 Loss [47]:

where h, w, and l represent the ground-truth height, width, and length of the vehicle, respectively. represents the numerical difference between the ground truth and predicted values.
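For reference, the Smooth L1 penalty [47] on a residual x is 0.5x²/β when |x| < β and |x| - 0.5β otherwise (β = 1 gives the original form). The plain-Python sketch below applies it to the height, width, and length residuals; `scale_loss` is a hypothetical name, and summing over the three dimensions is an assumption about how they are combined.

```python
def smooth_l1(x, beta=1.0):
    """Smooth L1 penalty: quadratic near zero, linear for large residuals."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta

def scale_loss(pred_hwl, gt_hwl):
    """Sum of Smooth L1 penalties over the height, width, and length residuals."""
    return sum(smooth_l1(g - p) for p, g in zip(pred_hwl, gt_hwl))

print(scale_loss((1.4, 1.6, 3.5), (1.5, 1.6, 3.9)))
```

The quadratic region keeps gradients small for nearly correct sizes, while the linear region limits the influence of outliers.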

The heading angle is estimated with the Multi-Bin [48] method. In our approach, we define four bins with centers at . The total loss for Multi-Bin orientation estimation is:

The confidence loss is the softmax cross-entropy loss over the confidences of the bins. The localization loss is computed as follows:

where denotes the number of intervals covering the true heading angle . represents the center angle of interval i. denotes the correction required for the center of interval i.
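The localization term of Multi-Bin [48] averages -cos(θ* - c_i - Δθ_i) over the bins that cover the true angle θ*. The plain-Python sketch below illustrates it. The four bin centers and the overlapping half-width of π/3 are hypothetical, because the centers used in MonoAMP are not given in this text; the confidence (softmax) term is omitted.

```python
import math

# Hypothetical bin centers and overlapping half-width (not MonoAMP's values).
BIN_CENTERS = [0.0, math.pi / 2, math.pi, -math.pi / 2]
HALF_WIDTH = math.pi / 3   # wider than pi/4, so neighboring bins overlap

def wrap(angle):
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi

def covering_bins(theta):
    """Indices of bins whose interval contains theta."""
    return [i for i, c in enumerate(BIN_CENTERS) if abs(wrap(theta - c)) <= HALF_WIDTH]

def multibin_loc_loss(theta, residuals):
    """L_loc = -(1/n) * sum over covering bins i of cos(theta - c_i - residual_i)."""
    bins = covering_bins(theta)
    return -sum(math.cos(theta - BIN_CENTERS[i] - residuals[i]) for i in bins) / len(bins)

theta = math.pi / 4                                   # lies in two overlapping bins
perfect = [wrap(theta - c) for c in BIN_CENTERS]      # exact per-bin corrections
print(covering_bins(theta), multibin_loc_loss(theta, perfect))
```

With exact corrections every cosine equals 1, so the loss reaches its minimum of -1; using the cosine makes the loss insensitive to 2π wrap-around.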

The direct regression depth loss is designed to quickly capture depth features by directly regressing the object depth information. The network output is first transformed into absolute depth using the inverse sigmoid function to reduce the influence of the output range on predictions.

where is defined as . The term represents a small constant that is introduced to ensure the stability of the direct depth calculation process. Meanwhile, by incorporating uncertainty modeling, the model can adaptively adjust the loss value when it has low confidence in depth predictions.

where denotes the predicted depth. denotes the ground truth depth. is used to prevent the model from increasing uncertainty to avoid loss. is the predicted depth uncertainty.
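The two steps above can be sketched in plain Python. The inverse-sigmoid transform d = 1/(σ(o) + ε) - 1 follows common practice in center-based detectors; the exact placement of ε is an assumption, as is predicting the uncertainty as log σ. The loss |d - d*|/σ + log σ shows why the log term is needed: without it, the model could shrink the first term simply by inflating σ.

```python
import math

def direct_depth(output, eps=1e-6):
    """Inverse-sigmoid depth transform: d = 1 / (sigmoid(o) + eps) - 1 (eps placement assumed)."""
    s = 1.0 / (1.0 + math.exp(-output))
    return 1.0 / (s + eps) - 1.0

def uncertainty_l1(pred, gt, log_sigma):
    """|pred - gt| / sigma + log(sigma), with sigma = exp(log_sigma) (assumed parameterization)."""
    return abs(pred - gt) * math.exp(-log_sigma) + log_sigma

# Smaller network outputs map to larger depths.
print(direct_depth(0.0), direct_depth(-3.0))
# For an error of 2 m, the loss is lowest when sigma is close to the error itself.
for ls in (0.0, math.log(2.0), math.log(4.0)):
    print(round(ls, 3), uncertainty_l1(22.0, 20.0, ls))
```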

The keypoint-based geometric depth loss estimates depth by leveraging the geometric relationships of the object’s keypoints. It computes the center depth and the depths of four 3D bounding box diagonals, , , , and . The loss function for geometric depth estimation also uses L1 Loss and incorporates uncertainty modeling:

where represents the depth calculated based on the geometric relationships of the keypoints. denotes the uncertainty of the depth. is an indicator function that determines whether the keypoints used for depth calculation are visible. When the keypoints are not visible, the model allows the impact of the loss to be reduced by assigning a larger uncertainty.
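Keypoint-based geometric depth rests on the pinhole relation z = f_y · H / h, where H is the 3D height and h is the projected vertical extent in pixels. The plain-Python sketch below shows that relation and one plausible reading of the visibility indicator, in which groups with invisible keypoints are simply excluded from the uncertainty-aware L1 loss. The function names, the masking choice, and the KITTI-like focal length are assumptions.

```python
import math

def depth_from_height(focal_y, height_3d, top_y, bottom_y, min_pixels=1e-3):
    """Pinhole relation z = f_y * H / h; h is the projected vertical extent in pixels."""
    h = max(bottom_y - top_y, min_pixels)   # guard against degenerate keypoints
    return focal_y * height_3d / h

def geometric_depth_loss(depths, gt_depth, sigmas, visible):
    """Uncertainty-aware L1 averaged over visible keypoint groups only (assumed masking).

    depths, sigmas, visible: one entry per group (e.g., center plus four diagonals).
    """
    terms = [abs(d - gt_depth) / s + math.log(s)
             for d, s, v in zip(depths, sigmas, visible) if v]
    return sum(terms) / max(len(terms), 1)

# A 1.5 m tall car spanning 54 px vertically, with f_y = 721.5 px (KITTI-like intrinsics).
print(depth_from_height(721.5, 1.5, 180.0, 234.0))
```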

Based on the above, the overall loss function of the network is formulated as follows:

where , , , , , , and are the balance factors between the individual loss functions, with .

4. Experiments

4.1. Dataset

KITTI. This study primarily employs the KITTI 3D Object dataset [31], a widely adopted benchmark in autonomous driving research, particularly for 3D object detection tasks. It provides 7481 training images and 7518 testing images, all captured from real-world driving scenarios and accompanied by detailed 3D annotations. Since the test image annotations are not publicly available, we follow the strategy described in [49], dividing the 7481 training images into 3712 for training and 3769 for validation. This split ensures the independence of the training and validation sets, thereby enhancing the reliability of the experimental outcomes. Based on the degree of truncation, bounding box height, and occlusion, the levels of difficulty are defined as easy, moderate, and hard. Table 1 presents the specific classification criteria.

Table 1.

Difficulty level classification criteria of the KITTI Dataset.
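For reference, the thresholds used by the official KITTI object evaluation can be encoded as below: easy requires a 2D box height of at least 40 px, full visibility, and at most 15% truncation; moderate requires at least 25 px, at most partial occlusion, and at most 30% truncation; hard requires at least 25 px, at most heavy occlusion, and at most 50% truncation. The function name is hypothetical. It returns the easiest level an object satisfies; in the official evaluation, harder levels also include the objects of easier ones.

```python
# (level, min 2D box height in px, max occlusion level, max truncation fraction)
KITTI_LEVELS = [
    ("easy", 40, 0, 0.15),
    ("moderate", 25, 1, 0.30),
    ("hard", 25, 2, 0.50),
]

def kitti_difficulty(box_height_px, occlusion, truncation):
    """Return the easiest KITTI level an object satisfies, or None if it is ignored.

    occlusion:  0 = fully visible, 1 = partly occluded, 2 = largely occluded, 3 = unknown.
    truncation: fraction in [0, 1] of the object extending beyond the image boundary.
    """
    for name, min_h, max_occ, max_trunc in KITTI_LEVELS:
        if box_height_px >= min_h and occlusion <= max_occ and truncation <= max_trunc:
            return name
    return None

print(kitti_difficulty(45, 0, 0.10), kitti_difficulty(30, 2, 0.40), kitti_difficulty(20, 0, 0.0))
```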

Waymo. Waymo [50] categorizes objects into Level 1 and Level 2 based on the count of LiDAR points contained within their 3D bounding boxes. Evaluations are performed across three distance ranges: , , and meters. Waymo employs the and metrics as standard evaluation measures. is an extension of that integrates heading angle information.

nuScenes. nuScenes [51] contains 28,130 training images and 6019 validation images recorded by the front-facing camera. To evaluate the model’s generalization capability, we use the frontal validation images for cross-dataset evaluation.

4.2. Implementation Details

The experimental setup is summarized in Table 2. The proposed approach is implemented in PyTorch 2.0.1. All input images are resized to 384 × 1280, and random horizontal flipping is the only data augmentation. The model is trained with the AdamW [52] optimizer at an initial learning rate of 0.0003 and a batch size of 8 on a single NVIDIA A800 GPU for a total of 46k iterations. The learning rate is reduced to one-tenth of its value at 37k and 42k iterations.
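The schedule and augmentation above can be sketched in plain Python. In PyTorch, the same step schedule would typically come from `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[37000, 42000], gamma=0.1)` stepped once per iteration. The section does not describe how labels are adjusted under flipping; the `flip_annotation` helper is hypothetical and assumes the camera principal point is mirrored along with the image, so the camera-frame x coordinate simply changes sign and the yaw becomes pi - ry.

```python
import math
from typing import Tuple

BASE_LR = 3e-4                 # initial AdamW learning rate
MILESTONES = (37_000, 42_000)  # iterations at which the LR is divided by 10 (46k total)


def learning_rate(iteration: int, base_lr: float = BASE_LR, gamma: float = 0.1) -> float:
    """Step schedule: multiply the LR by gamma once for each milestone already reached."""
    num_decays = sum(1 for m in MILESTONES if iteration >= m)
    return base_lr * gamma ** num_decays


def flip_annotation(u: float, x: float, ry: float, image_width: int) -> Tuple[float, float, float]:
    """Mirror one object's labels for a horizontal image flip (hypothetical helper).

    u: projected 3D center column (continuous pixel coordinates);
    x: lateral camera-frame position in meters; ry: yaw about the camera Y axis.
    """
    u_flipped = image_width - u
    x_flipped = -x
    # Negating x mirrors the heading vector (cos ry, -sin ry) to (-cos ry, -sin ry).
    ry_flipped = math.pi - ry
    ry_flipped = (ry_flipped + math.pi) % (2.0 * math.pi) - math.pi  # wrap into [-pi, pi)
    return u_flipped, x_flipped, ry_flipped
```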

Table 2.

Experimental configuration.

4.3. Evaluation Metrics

In line with current mainstream research, this study focuses on detection performance for the vehicle (car) category. Average Precision (AP) is the primary metric, computed over 40 recall positions (AP|R40) following MonoDIS [6]. The Intersection-over-Union (IoU) threshold, which measures the overlap between predicted and ground-truth boxes, is set to 0.7, a strict criterion that keeps the evaluation rigorous and consistent. Results are reported separately for the easy, moderate, and hard levels to reflect performance across varying detection difficulties.
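AP|R40 averages the interpolated precision at the 40 recall positions 1/40, 2/40, ..., 1, excluding recall 0; interpolated precision at recall r is the highest precision achieved at any recall of at least r. The sketch below assumes detections have already been matched to ground truth at IoU 0.7 to produce precision-recall points; it is a simplified illustration with a hypothetical function name, not the official KITTI evaluation code.

```python
from typing import Sequence


def ap_r40(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """AP averaged over 40 equally spaced recall positions (AP|R40).

    recalls, precisions: points of a precision-recall curve, in any order.
    """
    points = list(zip(recalls, precisions))
    total = 0.0
    for i in range(1, 41):
        r = i / 40.0
        # Interpolated precision: best precision among points with recall >= r.
        candidates = [p for rec, p in points if rec >= r]
        total += max(candidates) if candidates else 0.0
    return total / 40.0
```

For instance, a detector that reaches precision 1.0 only up to recall 0.5 and never recalls more scores 0.5, because the 20 recall positions above 0.5 contribute zero.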

4.4. Quantitative Results

Results on the KITTI test set. We compare our approach with leading methods from recent years on the widely used KITTI test set. MonoGRNet [9], MonoDIS [6], M3D-RPN [10], SMOKE [18], MonoDLE [11], MonoRCNN [8], MonoFlex [15], and MonoGround [53] are popular standard monocular 3D detectors that take only RGB images as input, without additional data (e.g., LiDAR or CAD models). In contrast, DDMP-3D [7], UR3D [54], Kinematic3D [33], AutoShape [55], MonoRUn [56], CaDDN [57], and MonoDTR [39] use auxiliary data to guide detector training.

As Table 3 shows, our method achieves significant improvements, with particularly strong accuracy at the moderate difficulty level, the level by which methods are ranked on the KITTI benchmark. Quantitatively, our method achieves AP_3D|R40 scores of 23.89%, 16.80%, and 14.06% and AP_BEV|R40 scores of 33.61%, 24.47%, and 21.08% on the easy, moderate, and hard levels, respectively. It outperforms several methods that do not use auxiliary data, such as M3D-RPN [10], SMOKE [18], MonoDLE [11], MonoRCNN [8], MonoFlex [15], MonoGround [53], and MonoJSG [4]. It also surpasses some methods that use auxiliary data, including DDMP-3D [7], Kinematic3D [33], AutoShape [55], CaDDN [57], and MonoDTR [39]. Compared with the advanced Transformer-based detector MonoDETR [40], our method performs better on most metrics while maintaining real-time inference.

Table 3.

Comparative performance of different methods. AP_3D|R40 and AP_BEV|R40 (%) for the car category on the KITTI test set, evaluated at an IoU threshold of 0.7. Methods are grouped by the extra data they use and, within each group, sorted by their results at the moderate difficulty level (Mod.). The best results are shown in bold, and the second-best results are underlined.

We attribute these improvements to the model’s enhanced ability to learn contextual relationships and spatial dependencies between objects. By targeting more precise depth estimation and refining the spatial interaction between features, the model better captures fine-grained information about object position and structure, which improves scene understanding and, in turn, localization and depth accuracy. Its effective fusion of multi-order contextual information allows it to represent complex scenes better, yielding consistent gains in both AP_3D and AP_BEV.

Results on the Waymo validation set. We evaluate the generalization ability of MonoAMP on the Waymo dataset. As Table 4 shows, MonoAMP surpasses state-of-the-art methods on most evaluation metrics and performs well across the evaluated thresholds, particularly for objects at moderate distances.

Table 4.

Evaluation results on the Waymo validation set.

Cross-dataset evaluation. To further assess the model’s generalization ability, we conduct cross-dataset experiments: the model trained for the KITTI validation split is used to measure the mean absolute error (MAE) of depth on the nuScenes [51] frontal validation images and the KITTI validation images. As shown in Table 5, MonoAMP outperforms GUPNet [17]. Its results are also very close to those of DEVIANT [60], whose network is designed to be equivariant to depth translations and is therefore robust to shifts in data distribution.
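The depth MAE reported in this comparison is the average absolute difference between predicted and ground-truth object depths. The sketch assumes predictions have already been matched one-to-one with ground-truth objects; the matching rule and any per-range breakdown are not specified here, and the function name is hypothetical.

```python
from typing import Sequence


def depth_mae(pred_depths: Sequence[float], gt_depths: Sequence[float]) -> float:
    """Mean absolute error (meters) between matched predicted and ground-truth depths."""
    if len(pred_depths) != len(gt_depths) or not pred_depths:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - g) for p, g in zip(pred_depths, gt_depths)) / len(pred_depths)
```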

Table 5.

Cross-dataset evaluation on nuScenes frontal validation and KITTI validation datasets.

Efficiency. Computational cost is critical for real-time applications, so we compare our model with existing state-of-the-art methods in terms of GFLOPs (giga floating-point operations), runtime (ms), and detection accuracy. Table 6 reports these figures for each method, including the widely used Transformer-based approaches MonoDTR [39] and MonoDETR [40]. MonoAMP achieves competitive detection performance while maintaining a lower computational cost.

Table 6.

Efficiency comparison of different methods.

4.5. Ablation Study

This section presents ablation studies that assess the contribution of each module of the proposed method to overall performance, using MonoDLE [11] as the baseline. We add the key modules to the baseline incrementally and evaluate the effect of each on the experimental metrics. All experiments are conducted under identical conditions to keep the results comparable. The results of the ablation study are presented in Table 7.

Table 7.

Ablation study results. The best results are emphasized in bold.

The ablation study shows that each proposed module improves the performance of the baseline model in monocular 3D object detection. Adding the TA module to the baseline markedly improves the model’s ability to focus attention on critical regions. The FA module strengthens the model’s perception of key spatial features through an adaptive feature enhancement mechanism. The ASA module captures multi-level contextual information, making the model more adaptable to complex environments. The CE module models inter-channel dependencies, helping the model exploit multi-channel features. The ACA module optimizes the aggregation of channel features, allowing the model to capture important channel information more accurately and further improving overall performance. Finally, the UGDE module adaptively fuses multiple depth predictions, making the model more robust and accurate in monocular 3D object detection.
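One common way to fuse several depth predictions according to their uncertainty is the inverse-uncertainty weighted average used by MonoFlex [15], where each estimate is weighted by 1/sigma_k so that confident predictions dominate. The sketch below shows that technique only as a plausible reading of "uncertainty-guided" fusion; it is not the paper’s UGDE formulation, and the function name and log-sigma inputs are assumptions.

```python
import math
from typing import Sequence


def fuse_depths(depths: Sequence[float], log_sigmas: Sequence[float]) -> float:
    """Inverse-uncertainty weighted average of several depth estimates.

    z_fused = sum_k (z_k / sigma_k) / sum_k (1 / sigma_k)
    """
    if len(depths) != len(log_sigmas) or not depths:
        raise ValueError("need one log-uncertainty per depth estimate")
    inv_sigmas = [math.exp(-s) for s in log_sigmas]  # 1 / sigma_k
    weighted = sum(w * z for w, z in zip(inv_sigmas, depths))
    return weighted / sum(inv_sigmas)
```

For example, fusing a direct depth of 20 m (sigma = 1) with a geometric depth of 30 m (sigma = 4) gives 22 m, pulled toward the more confident estimate. Weighting by 1/sigma^2 (inverse variance) is a common alternative when sigma is interpreted as a standard deviation.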

Quantitatively, compared with the baseline method, the proposed method improves AP_3D|R40 by 5.13, 3.78, and 3.06 percentage points and AP_BEV|R40 by 5.94, 4.42, and 4.14 percentage points on the easy, moderate, and hard levels, respectively. These results support the effectiveness and complementarity of the individual modules and show the clear advantages of the proposed method in detection performance and robustness.

Efficiency of each component. To further evaluate the key components of this study, we analyze each component’s contribution to overall performance together with its computational cost. As Table 8 shows, each component improves performance while adding relatively little computational overhead.

Table 8.

Efficiency of each component based on the baseline model.

4.6. Visualization Analysis

In this section, we visualize detection results in various scenarios, including heatmaps, 3D bounding boxes, and bird’s-eye views, to assess the proposed approach comprehensively. Figure 8 compares the predictions of the proposed approach with the ground truth in complex scenarios such as dense traffic, objects of varying sizes, occlusions, and truncated targets. Red boxes denote the ground truth, and green boxes denote the predicted bounding boxes.

Figure 8.

Visualization results of the MonoAMP experiment. We provide the detection results under various road conditions. The red arrows primarily highlight instances of vehicle truncation.

The heatmaps show clear responses in areas densely populated with vehicles, and our method still responds reliably to distant, occluded, or truncated objects. For nearby, unobstructed vehicles, the predicted 3D bounding boxes closely match the ground truth. For severely truncated vehicles, such as the one at the image edge marked by the red arrow, traditional methods often struggle to estimate depth or shape accurately; in contrast, the UGDE effectively mitigates this depth ambiguity, producing 3D bounding boxes that closely match the ground truth. The bird’s-eye view further confirms the accurate localization of severely truncated objects. Our method also detects small, distant vehicles that are not annotated in the dataset, as shown by the green 3D bounding boxes highlighted by the magnifying glass in the figure and in the corresponding bird’s-eye view, which indicates strong generalization and perception beyond the annotated objects. Overall, the proposed method shows high accuracy and robustness in complex traffic scenarios, including dense traffic, small distant objects, and occluded or truncated objects.

5. Conclusions

In this paper, we identify limitations of existing monocular 3D object detection methods, particularly in cross-dimensional feature attention and multi-order contextual information modeling. To address them, we propose MonoAMP, a framework built around an adaptive multi-order perceptual aggregation (AMPA) module. Cross-dimensional attention interactions let the model fully exploit both the spatial and channel information of the features, strengthening the representation of key features. The AMPA module adaptively aggregates multi-order contextual information, effectively alleviating the difficulty posed by objects at multiple scales. To obtain more stable and accurate depth predictions, we further propose an uncertainty-guided adaptive depth ensemble strategy that dynamically assigns weights to different depth estimation methods, so that high-confidence depth values contribute more to the fused result. Experiments on the KITTI benchmark show that MonoAMP performs well in complex scenarios, consistently delivering strong detection performance in high-density traffic and in challenging scenes with occlusion and truncation, which suggests its potential value for real-world applications. Nevertheless, its accuracy still falls short of the stringent demands of real-world autonomous driving. Future research will aim to improve both detection precision and computational efficiency, making the method more applicable to complex and dynamic environments and further advancing monocular 3D object detection.

Author Contributions

Conceptualization, X.H. and W.Z.; methodology, X.H.; software, X.H.; validation, X.H., W.Z. and G.J.; formal analysis, X.H.; investigation, X.H. and T.C.; resources, T.C. and W.Z.; data curation, X.H.; writing—original draft preparation, X.H.; writing—review and editing, T.C. and W.Z.; visualization, X.H. and G.J.; supervision, T.C.; project administration, T.C., W.Z. and H.J.; funding acquisition, T.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Scientific Research Projects of the Education Department of Shaanxi Province (21JK0570, 23JK0371), the Natural Science Basic Research Projects of Shaanxi Province (2024JC-YBQN-0725), the Graduate Innovation Fund Projects of Shaanxi University of Technology (SLGYCX2451), and the Scientific Research Projects of Shaanxi University of Technology (SLGRCQD2318).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data underlying this study’s findings are included in the article. The KITTI dataset is available at http://www.cvlibs.net/datasets/kitti/ (accessed on 25 January 2025).

Acknowledgments

We extend our appreciation to the broader research community, whose shared knowledge and collaborative spirit have significantly enriched our work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, X.; Kundu, K.; Zhang, Z.; Ma, H.; Fidler, S. Monocular 3d object detection for autonomous driving. In Proceedings of the 2016 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2147–2156. [Google Scholar]

- Ding, M.; Huo, Y.; Yi, H.; Wang, Z.; Shi, J.; Lu, Z.; Luo, P. Learning depth-guided convolutions for monocular 3d object detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1000–1001. [Google Scholar]

- Li, P.; Su, S.; Zhao, H. Rts3d: Real-time stereo 3d detection from 4d feature-consistency embedding space for autonomous driving. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21), Online, 2–9 February 2021; pp. 1930–1939. [Google Scholar]

- Lian, Q.; Li, P.; Chen, X. Monojsg: Joint semantic and geometric cost volume for monocular 3d object detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1019–1028. [Google Scholar]

- Li, B.; Ouyang, W.; Sheng, L.; Zeng, X.; Wang, X. Gs3d: An efficient 3d object detection framework for autonomous driving. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1930–1939. [Google Scholar]

- Simonelli, A.; Bulo, S.R.; Porzi, L.; López-Antequera, M.; Kontschieder, P. Disentangling monocular 3d object detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1991–1999. [Google Scholar]

- Wang, L.; Du, L.; Ye, X.; Fu, Y.; Guo, G.; Xue, X.; Feng, J.; Zhang, L. Depth-conditioned dynamic message propagation for monocular 3d object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 454–463. [Google Scholar]

- Shi, X.; Ye, Q.; Chen, X.; Chen, C.; Chen, Z.; Kim, T.-K. Geometry-based distance decomposition for monocular 3d object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 15172–15181. [Google Scholar]

- Qin, Z.; Wang, J.; Lu, Y. Monogrnet: A geometric reasoning network for monocular 3d object localization. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19), Honolulu, HI, USA, 27 January–1 February 2019; pp. 15172–15181. [Google Scholar]

- Brazil, G.; Liu, X. M3d-rpn: Monocular 3d region proposal network for object detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9287–9296. [Google Scholar]

- Ma, X.; Zhang, Y.; Xu, D.; Zhou, D.; Yi, S.; Li, H.; Ouyang, W. Delving into localization errors for monocular 3d object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 4721–4730. [Google Scholar]

- Wang, T.; Zhu, X.; Pang, J.; Lin, D. Fcos3d: Fully convolutional one-stage monocular 3d object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 913–922. [Google Scholar]

- Xu, Q.; Zhong, Y.; Neumann, U. Behind the curtain: Learning occluded shapes for 3d object detection. In Proceedings of the Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI-22), Online, 22 February–1 March 2022; pp. 2893–2901. [Google Scholar]

- Manhardt, F.; Kehl, W.; Gaidon, A. Roi-10d: Monocular lifting of 2d detection to 6d pose and metric shape. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2069–2078. [Google Scholar]

- Zhang, Y.; Lu, J.; Zhou, J. Objects are different: Flexible monocular 3d object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 3289–3298. [Google Scholar]

- Wu, X.; Peng, L.; Yang, H.; Xie, L.; Huang, C.; Deng, C.; Liu, H.; Cai, D. Sparse fuse dense: Towards high quality 3d detection with depth completion. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5418–5427. [Google Scholar]

- Lu, Y.; Ma, X.; Yang, L.; Zhang, T.; Liu, Y.; Chu, Q.; Yan, J.; Ouyang, W. Geometry uncertainty projection network for monocular 3d object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3111–3121. [Google Scholar]

- Liu, Z.; Wu, Z.; Tóth, R. Smoke: Single-stage monocular 3d object detection via keypoint estimation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 996–997. [Google Scholar]

- Zhou, Y.; He, Y.; Zhu, H.; Wang, C.; Li, H.; Jiang, Q. MonoEF: Extrinsic parameter free monocular 3D object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 10114–10128. [Google Scholar] [CrossRef] [PubMed]

- Ma, X.; Liu, S.; Xia, Z.; Zhang, H.; Zeng, X.; Ouyang, W. Rethinking pseudo-lidar representation. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 311–327. [Google Scholar]

- Wang, Y.; Guizilini, V.C.; Zhang, T.; Wang, Y.; Zhao, H.; Solomon, J. Detr3d: 3d object detection from multi-view images via 3d-to-2d queries. In Proceedings of the 5th Conference on Robot Learning (CoRL), London, UK, 8–11 November 2021; pp. 180–191. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Xiong, K.; Zhang, D.; Liang, D.; Liu, Z.; Yang, H.; Dikubab, W.; Cheng, J.; Bai, X. You Only Look Bottom-Up for Monocular 3D Object Detection. IEEE Robot. Autom. Lett. 2023, 8, 7464–7471. [Google Scholar] [CrossRef]

- Sheng, H.; Cai, S.; Zhao, N.; Deng, B.; Zhao, M.-J.; Lee, G.H. PDR: Progressive depth regularization for monocular 3D object detection. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 7591–7603. [Google Scholar] [CrossRef]

- Zhu, M.; Ge, L.; Wang, P.; Peng, H. Monoedge: Monocular 3d object detection using local perspectives. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 643–652. [Google Scholar]

- Shi, X.; Chen, Z.; Kim, T.-K. Multivariate probabilistic monocular 3D object detection. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 4281–4290. [Google Scholar]

- Xu, J.; Peng, L.; Cheng, H.; Li, H.; Qian, W.; Li, K.; Wang, W.; Cai, D. Mononerd: Nerf-like representations for monocular 3d object detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 6814–6824. [Google Scholar]

- Li, Z.; Gao, Y.; Hong, Q.; Du, Y.; Serikawa, S.; Zhang, L. Keypoint3D: Keypoint-Based and Anchor-Free 3D Object Detection for Autonomous Driving with Monocular Vision. Remote Sens. 2023, 15, 1210. [Google Scholar] [CrossRef]

- Jiang, H.; Ren, J.; Li, A. 3D Object Detection under Urban Road Traffic Scenarios Based on Dual-Layer Voxel Features Fusion Augmentation. Sensors 2024, 24, 3267. [Google Scholar] [CrossRef] [PubMed]

- Sharma, S.; Meyer, R.T.; Asher, Z.D. AEPF: Attention-Enabled Point Fusion for 3D Object Detection. Sensors 2024, 24, 5841. [Google Scholar] [CrossRef] [PubMed]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The kitti vision benchmark suite. In Proceedings of the 2012 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3354–3361. [Google Scholar]

- Chen, Y.; Tai, L.; Sun, K.; Li, M. Monopair: Monocular 3d object detection using pairwise spatial relationships. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12093–12102. [Google Scholar]

- Brazil, G.; Pons-Moll, G.; Liu, X.; Schiele, B. Kinematic 3d object detection in monocular video. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 135–152. [Google Scholar]

- Luo, S.; Dai, H.; Shao, L.; Ding, Y. M3dssd: Monocular 3d single stage object detector. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6145–6154. [Google Scholar]

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850. [Google Scholar]

- Li, P.; Zhao, H.; Liu, P.; Cao, F. Rtm3d: Real-time monocular 3d detection from object keypoints for autonomous driving. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 644–660. [Google Scholar]

- Li, Z.; Qu, Z.; Zhou, Y.; Liu, J.; Wang, H.; Jiang, L. Diversity matters: Fully exploiting depth clues for reliable monocular 3d object detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 2791–2800. [Google Scholar]

- Liu, X.; Xue, N.; Wu, T. Learning auxiliary monocular contexts helps monocular 3d object detection. In Proceedings of the Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI-22), Online, 22 February–1 March 2022; pp. 1810–1818. [Google Scholar]

- Huang, K.-C.; Wu, T.-H.; Su, H.-T.; Hsu, W.H. Monodtr: Monocular 3d object detection with depth-aware transformer. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4012–4021. [Google Scholar]

- Zhang, R.; Qiu, H.; Wang, T.; Guo, Z.; Cui, Z.; Qiao, Y.; Li, H.; Gao, P. MonoDETR: Depth-guided transformer for monocular 3D object detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 9155–9166. [Google Scholar]

- Zhou, Y.; Zhu, H.; Liu, Q.; Chang, S.; Guo, M. Monoatt: Online monocular 3d object detection with adaptive token transformer. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 17493–17503. [Google Scholar]

- Yu, F.; Wang, D.; Shelhamer, E.; Darrell, T. Deep layer aggregation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2403–2412. [Google Scholar]

- Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to attend: Convolutional triplet attention module. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Online, 3–8 January 2021; pp. 3139–3148. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141. [Google Scholar]

- Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11. [Google Scholar] [CrossRef] [PubMed]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2017, arXiv:1708.02002. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Mousavian, A.; Anguelov, D.; Flynn, J.; Kosecka, J. 3d bounding box estimation using deep learning and geometry. In Proceedings of the 2017 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7074–7082. [Google Scholar]

- Chen, X.; Kundu, K.; Zhu, Y.; Berneshawi, A.G.; Ma, H.; Fidler, S.; Urtasun, R. 3d object proposals for accurate object class detection. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 7–12 December 2015; Volume 28. [Google Scholar]

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454. [Google Scholar]

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuscenes: A multimodal dataset for autonomous driving. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11621–11631. [Google Scholar]

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101. [Google Scholar]

- Qin, Z.; Li, X. Monoground: Detecting monocular 3d objects from the ground. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 3793–3802. [Google Scholar]

- Shi, X.; Chen, Z.; Kim, T.-K. Distance-normalized unified representation for monocular 3d object detection. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 91–107. [Google Scholar]

- Liu, Z.; Zhou, D.; Lu, F.; Fang, J.; Zhang, L. Autoshape: Real-time shape-aware monocular 3d object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 15641–15650. [Google Scholar]

- Chen, H.; Huang, Y.; Tian, W.; Gao, Z.; Xiong, L. Monorun: Monocular 3d object detection by reconstruction and uncertainty propagation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10379–10388. [Google Scholar]

- Reading, C.; Harakeh, A.; Chae, J.; Waslander, S.L. Categorical depth distribution network for monocular 3d object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8555–8564. [Google Scholar]

- Jinrang, J.; Li, Z.; Shi, Y. MonoUNI: A unified vehicle and infrastructure-side monocular 3d object detection network with sufficient depth clues. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 10–16 December 2023; Volume 36. [Google Scholar]

- Wang, L.; Zhang, L.; Zhu, Y.; Zhang, Z.; He, T.; Li, M.; Xue, X. Progressive coordinate transforms for monocular 3d object detection. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–14 December 2021; pp. 13364–13377. [Google Scholar]

- Kumar, A.; Brazil, G.; Corona, E.; Parchami, A.; Liu, X. Deviant: Depth equivariant network for monocular 3d object detection. In Proceedings of the 17th European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 664–683. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).