Real-Time Sensor-Based and Self-Reported Emotional Perceptions of Urban Green-Blue Spaces: Exploring Gender Differences with FER and SAM

Abstract

1. Introduction

2. Materials and Methods

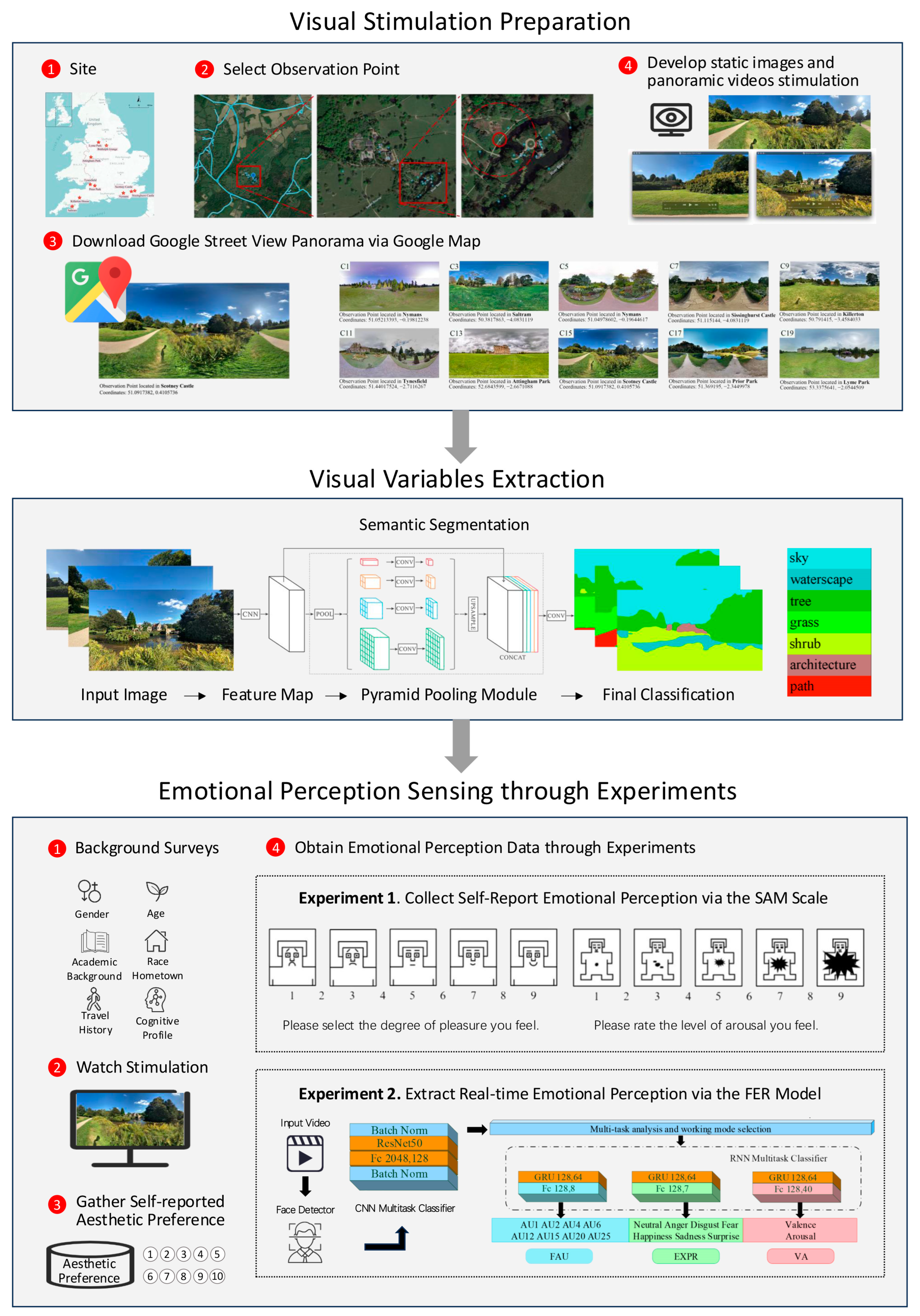

2.1. Research Framework

- Visual Stimulation Preparation: We used the Google Street View (GSV) platform (Google, CA, USA) to select and capture panoramic images at specific sites, which were then processed into both static images and panoramic videos. These visual stimuli were used in the subsequent self-report and facial expression experiments to elicit emotional responses.

- Visual Variables Extraction: We used a scene semantic segmentation model to extract key visual variables from the captured videos and photos.

- Emotional Perception Sensing through Experiments: A cohort of 108 participants viewed the static images and rated their emotional responses on the validated Self-Assessment Manikin (SAM) scale, providing a quantitative measure of their subjective emotional experience. A separate cohort of 20 participants viewed the panoramic videos while their facial expressions were captured and analyzed with a FER model, allowing an objective assessment of their real-time emotional reactions.

- Data Analysis and Comparative Evaluation: We integrated the extracted visual variables with the facial emotional perception data and compared the results with the self-reported emotional responses. This comparison aimed to clarify how visual variables contribute to gender-based differences in perception and to assess how effectively each method captures emotional responses to green spaces.
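The visual variables extraction step can be illustrated with a minimal sketch. It assumes that the segmentation model returns a per-pixel label map for each frame and that a visual variable is the percentage of pixels assigned to a class. The label ids, the `CLASS_NAMES` mapping, and the definition of the green view index (GVI) as the summed share of tree, grass, and shrub pixels are hypothetical choices made for illustration, not the configuration used in this study.

```python
from collections import Counter

# Hypothetical label ids; a real segmentation model defines its own mapping.
CLASS_NAMES = {
    0: "other",
    1: "tree",
    2: "grass",
    3: "shrub",
    4: "water",
    5: "sky",
    6: "building",
}

# Assumption: GVI is the combined share of these vegetation classes.
GREEN_CLASSES = ("tree", "grass", "shrub")


def visual_variable_shares(label_map):
    """Return the percentage of pixels assigned to each class in one frame."""
    counts = Counter(label for row in label_map for label in row)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("label_map is empty")
    return {
        name: 100.0 * counts.get(class_id, 0) / total
        for class_id, name in CLASS_NAMES.items()
    }


def green_view_index(shares):
    """Sum the shares of the vegetation classes (illustrative GVI definition)."""
    return sum(shares[name] for name in GREEN_CLASSES)


# A toy 4 x 4 label map standing in for one segmented frame.
frame = [
    [5, 5, 5, 5],
    [1, 1, 6, 6],
    [2, 2, 4, 4],
    [2, 3, 4, 0],
]

shares = visual_variable_shares(frame)
gvi = green_view_index(shares)
print(shares)
print(f"GVI = {gvi:.2f}%")
```

Applied to every frame of a video, a function of this kind would yield one row of visual variables per frame, which could then be aligned with the frame-level FER output.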

2.2. Visual Stimulation Preparation

2.3. Visual Variables Extraction

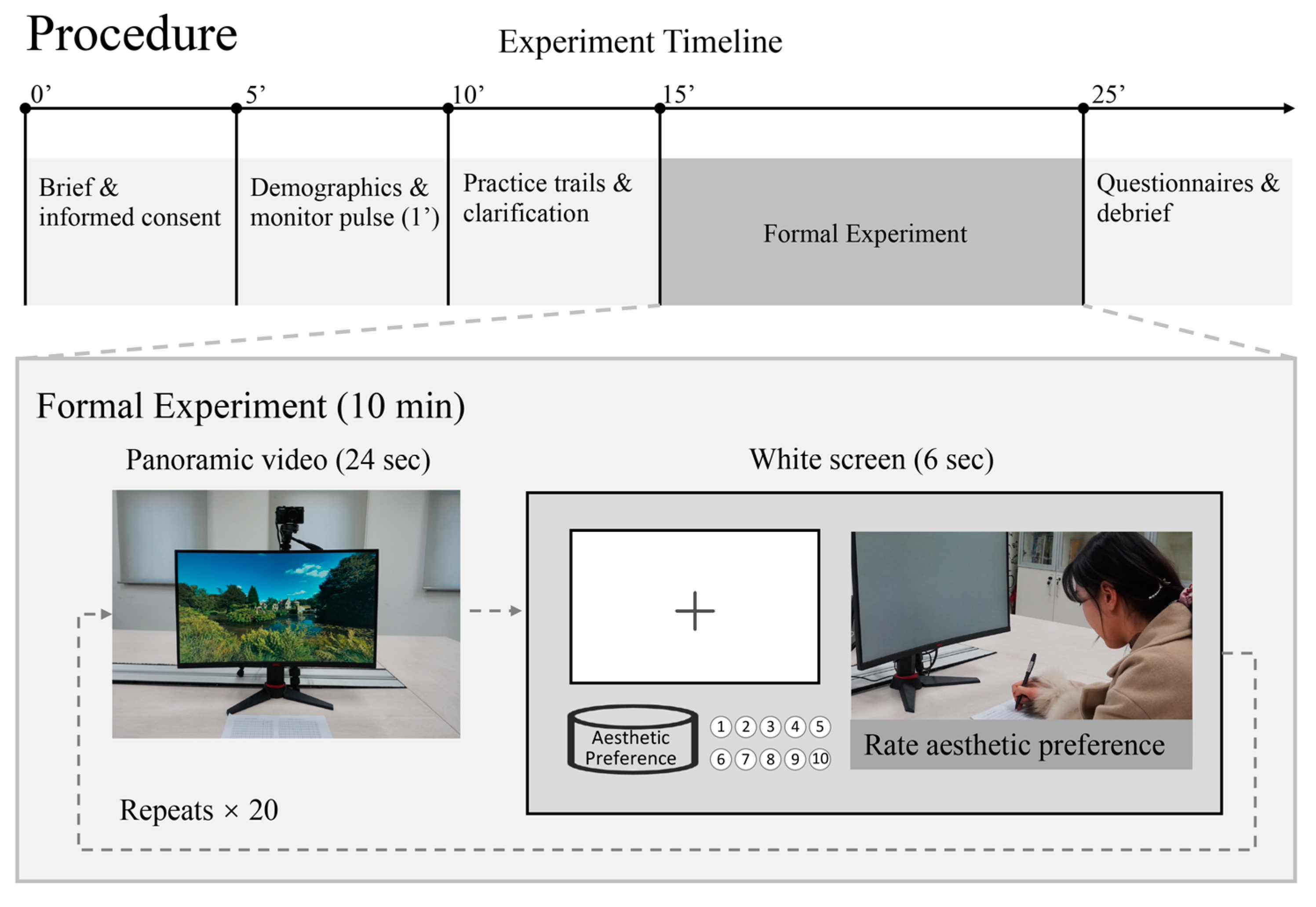

2.4. Emotional Perception Sensing Through Experiments

2.4.1. Emotional Self-Report Experiment via the SAM Scale

2.4.2. Real-Time Emotional Recognition Experiment via Facial Expression Analysis

2.5. Data Analysis and Comparison

3. Results

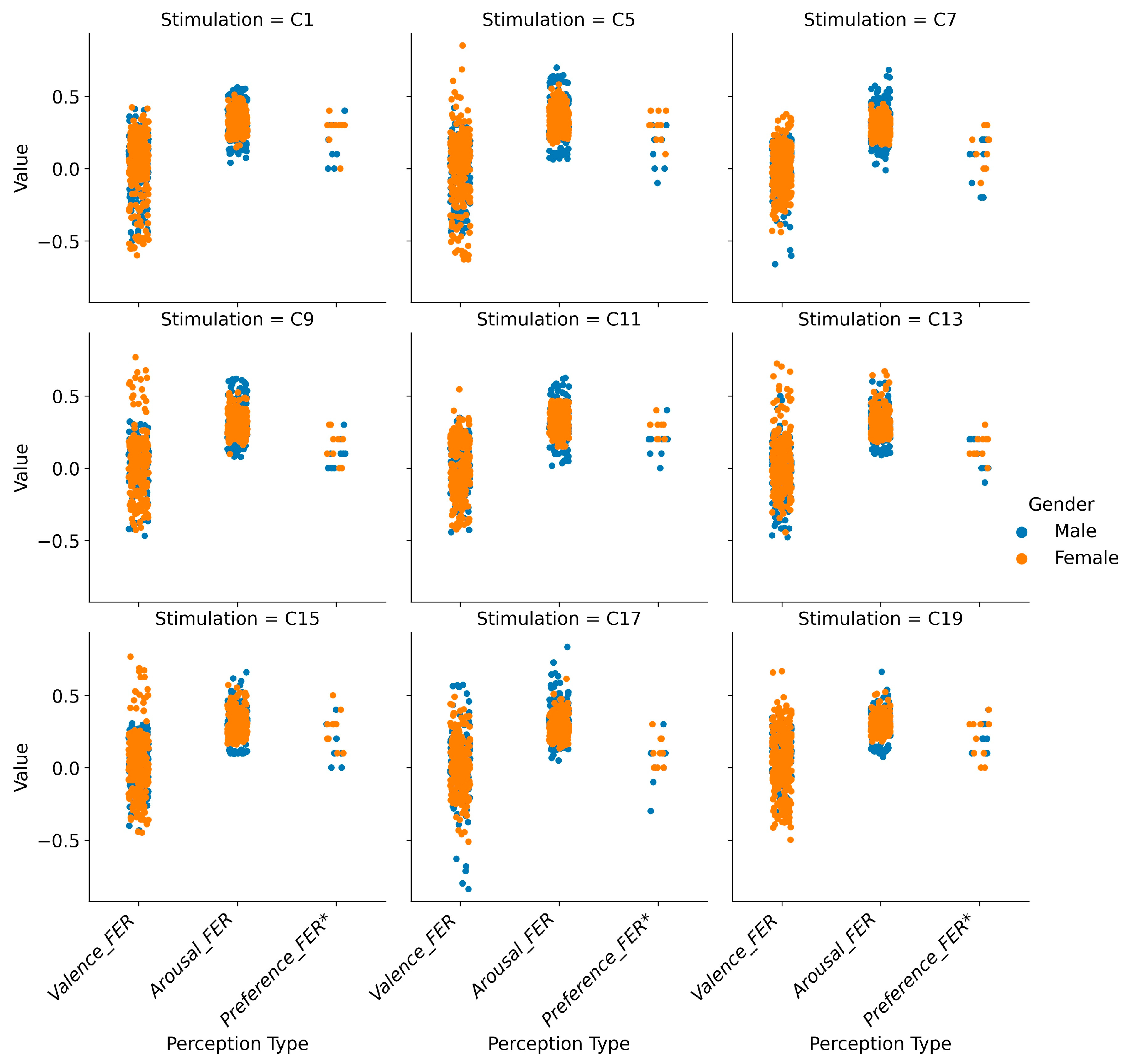

3.1. Gender Differences in Perception Measures

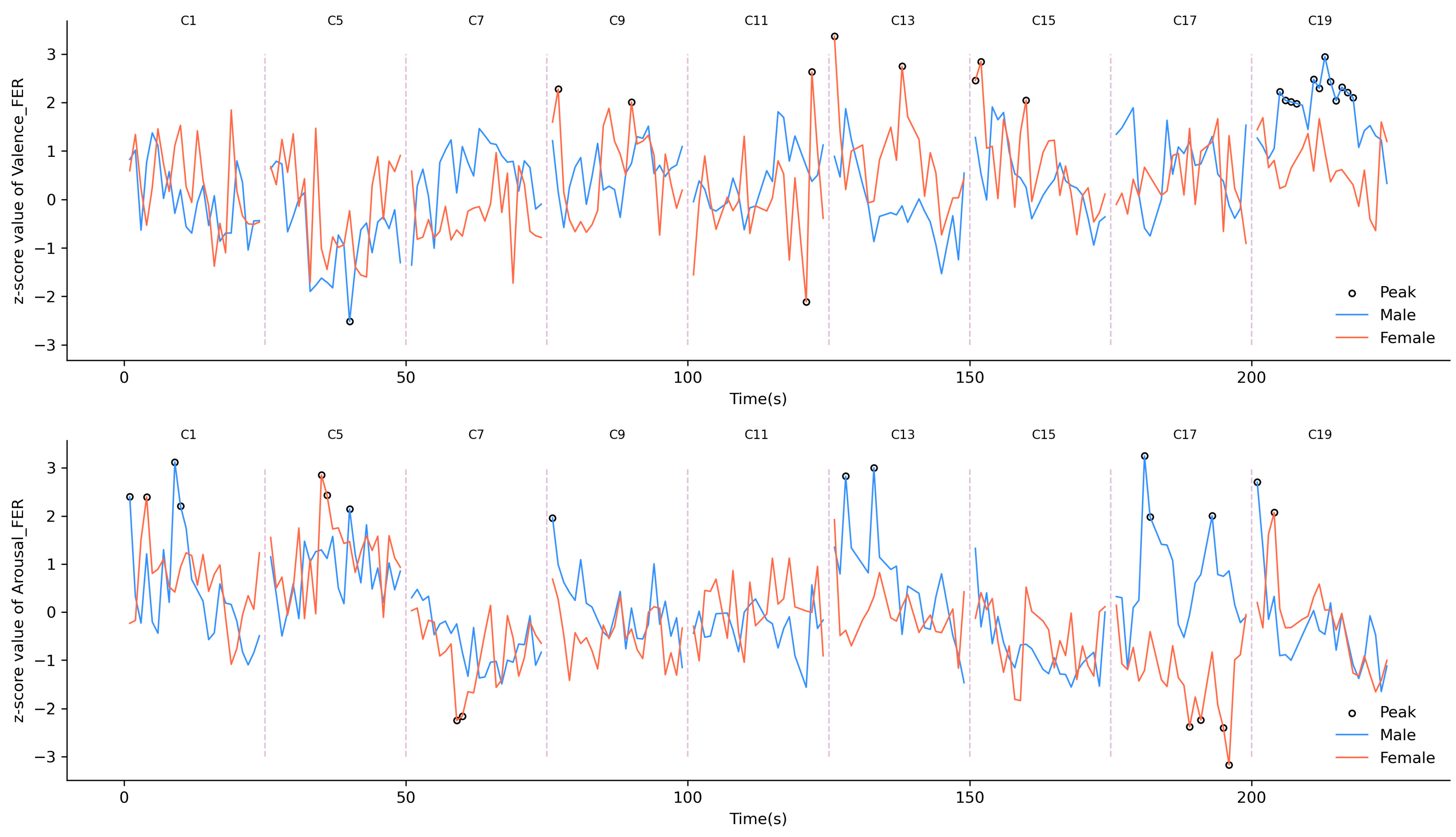

3.2. Gender Differences in Real-Time Emotional Trends and Peaks

3.3. Gender Differences in Correlations Between Emotional Perception and Visual Variables

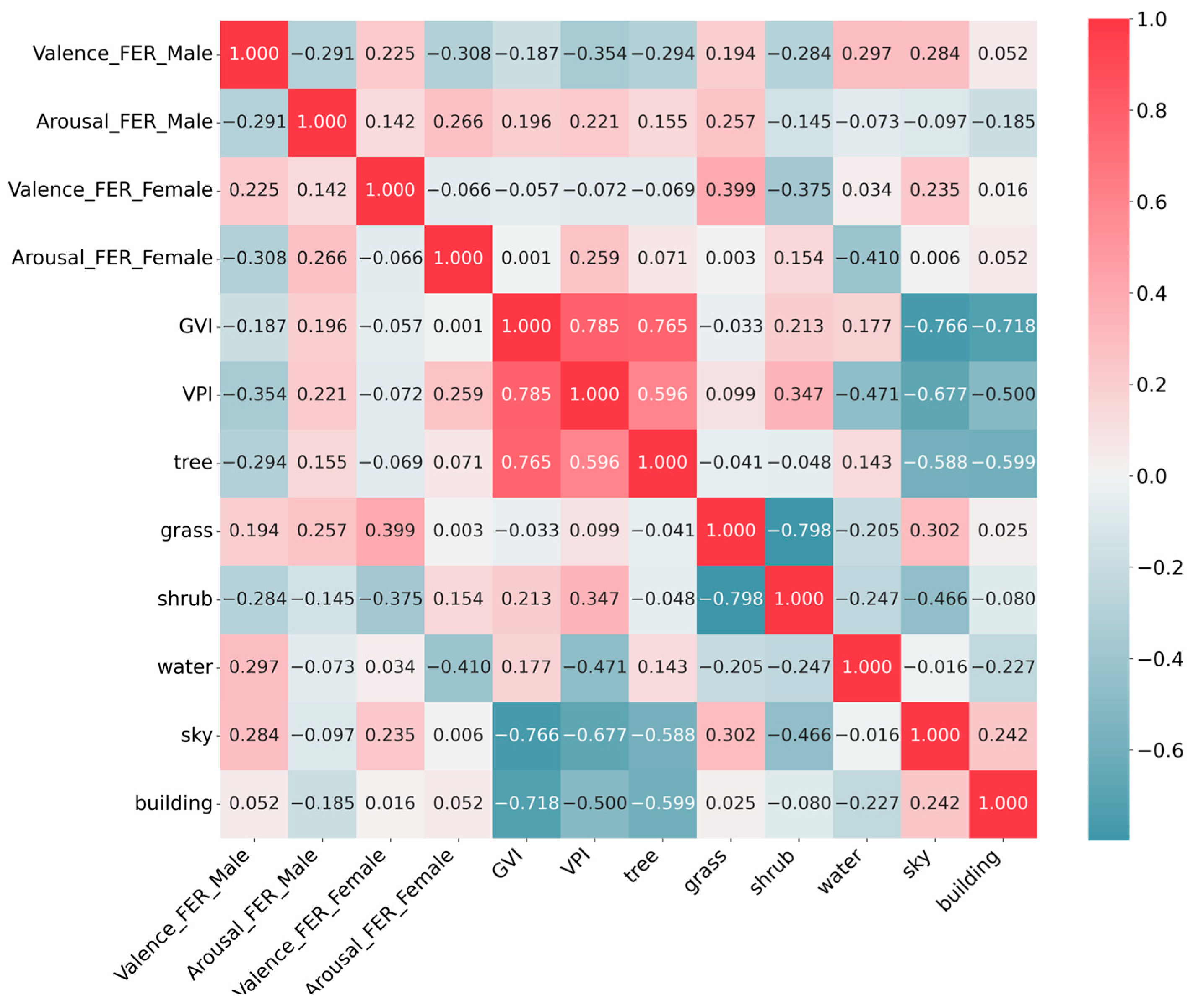

3.3.1. Gender Differences in Pearson Correlations

3.3.2. Gender Differences in FER Perception Prediction Models with Three Different Combinations

3.4. Gender Differences in the Interrelationship Between Aesthetic Preference and Perception Data

3.4.1. Gender Differences in the Interrelationship Between Aesthetic Preference and Self-Reported Perception Data

3.4.2. Gender Differences in the Relationship Between Aesthetic Preference and FER Perception Data

4. Discussion

4.1. Comparative Analysis of FER and Self-Reported Measures in Capturing Gender-Specific Emotional Perceptions

4.2. Gender-Specific Emotional Responses to UGBS Visual Variables

4.3. Implications for Future Urban Planning and Inclusive Urban Design

4.4. Limitations and Future Outlook

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Measures | Categories | Self-Report: Male | Self-Report: Female | Facial Recognition: Male | Facial Recognition: Female | Total: Male | Total: Female | Total: All |
|---|---|---|---|---|---|---|---|---|
| Amount | | 52 (40.6%) | 56 (43.8%) | 10 (7.8%) | 10 (7.8%) | 62 (48.4%) | 66 (51.6%) | 128 (100%) |
| Age | 16–20 | 7 (5.5%) | 11 (8.6%) | 0 (0.0%) | 1 (0.8%) | 7 (5.5%) | 12 (9.4%) | 19 (14.8%) |
| | 21–25 | 10 (7.8%) | 4 (3.1%) | 10 (7.8%) | 8 (6.3%) | 20 (15.6%) | 12 (9.4%) | 32 (25.0%) |
| | 26–30 | 4 (3.1%) | 8 (6.3%) | 0 (0.0%) | 1 (0.8%) | 4 (3.1%) | 9 (7.0%) | 13 (10.2%) |
| | 31–35 | 11 (8.6%) | 15 (11.7%) | 0 (0.0%) | 0 (0.0%) | 11 (8.6%) | 15 (11.7%) | 26 (20.3%) |
| | 36–40 | 11 (8.6%) | 15 (11.7%) | 0 (0.0%) | 0 (0.0%) | 11 (8.6%) | 15 (11.7%) | 26 (20.3%) |
| | 41–45 | 9 (7.9%) | 3 (2.3%) | 0 (0.0%) | 0 (0.0%) | 9 (7.9%) | 3 (2.3%) | 12 (9.4%) |
| | Mean | 31.46 | 30.50 | 23.90 | 23.10 | 30.10 | 29.36 | 29.72 |
| | S.D. | 8.55 | 7.86 | 0.74 | 1.45 | 8.42 | 7.77 | 8.07 |
| Race | Chinese | 52 (40.6%) | 56 (43.8%) | 10 (7.8%) | 10 (7.8%) | 62 (48.4%) | 66 (51.6%) | 128 (100%) |
| Country living in before 15 years old | China | 52 (40.6%) | 56 (43.8%) | 10 (7.8%) | 10 (7.8%) | 62 (48.4%) | 66 (51.6%) | 128 (100%) |
| Landscape/urban planning/architecture related field | Yes | 11 (8.6%) | 5 (3.9%) | 0 (0.0%) | 0 (0.0%) | 11 (8.6%) | 5 (3.9%) | 16 (12.5%) |
| | No | 41 (32.0%) | 49 (38.3%) | 10 (7.8%) | 10 (7.8%) | 51 (39.8%) | 61 (47.7%) | 112 (87.5%) |
| Have been to UK | Yes | 4 (3.1%) | 3 (2.3%) | 0 (0.0%) | 0 (0.0%) | 4 (3.1%) | 3 (2.3%) | 7 (5.5%) |
| | No | 48 (37.5%) | 53 (41.4%) | 10 (7.8%) | 10 (7.8%) | 58 (45.3%) | 63 (49.2%) | 121 (94.5%) |
| Have been to Japan | Yes | 3 (2.3%) | 0 (0.0%) | 0 (0.0%) | 0 (0.0%) | 3 (2.3%) | 0 (0.0%) | 3 (2.3%) |
| | No | 49 (38.3%) | 56 (43.8%) | 10 (7.8%) | 10 (7.8%) | 59 (46.1%) | 66 (51.6%) | 125 (97.7%) |

| Clip | Measures | Men: N | Men: Mean | Men: SD | Women: N | Women: Mean | Women: SD |
|---|---|---|---|---|---|---|---|
| C1 | Valence_FER | 240 | 0.03 | 0.18 | 240 | 0.01 | 0.23 |
| | Arousal_FER | 240 | 0.32 | 0.11 | 240 | 0.32 | 0.08 |
| | Preference_FER | 10 | 0.21 | 0.15 | 10 | 0.27 | 0.11 |
| | Valence_SAM | 52 | 0.14 | 0.41 | 56 | 0.16 | 0.47 |
| | Arousal_SAM | 52 | −0.01 | 0.48 | 56 | −0.06 | 0.49 |
| | Preference_SAM | 52 | 0.19 | 0.45 | 56 | 0.11 | 0.45 |
| C5 | Valence_FER | 240 | −0.01 | 0.18 | 240 | 0.00 | 0.25 |
| | Arousal_FER | 240 | 0.33 | 0.14 | 240 | 0.33 | 0.08 |
| | Preference_FER | 10 | 0.15 | 0.14 | 10 | 0.29 | 0.10 |
| | Valence_SAM | 52 | 0.20 | 0.45 | 56 | 0.14 | 0.48 |
| | Arousal_SAM | 52 | −0.01 | 0.45 | 56 | −0.05 | 0.45 |
| | Preference_SAM | 52 | 0.12 | 0.45 | 56 | 0.16 | 0.46 |
| C7 | Valence_FER | 240 | 0.05 | 0.15 | 240 | −0.01 | 0.16 |
| | Arousal_FER | 240 | 0.30 | 0.12 | 240 | 0.29 | 0.06 |
| | Preference_FER | 10 | 0.06 | 0.16 | 10 | 0.13 | 0.13 |
| | Valence_SAM | 52 | 0.12 | 0.47 | 56 | 0.11 | 0.44 |
| | Arousal_SAM | 52 | 0.04 | 0.45 | 56 | −0.07 | 0.42 |
| | Preference_SAM | 52 | 0.13 | 0.44 | 56 | 0.05 | 0.42 |
| C9 | Valence_FER | 240 | 0.05 | 0.15 | 240 | 0.03 | 0.23 |
| | Arousal_FER | 240 | 0.31 | 0.12 | 240 | 0.29 | 0.08 |
| | Preference_FER | 10 | 0.11 | 0.10 | 10 | 0.17 | 0.12 |
| | Valence_SAM | 52 | 0.21 | 0.48 | 56 | 0.23 | 0.46 |
| | Arousal_SAM | 52 | 0.00 | 0.47 | 56 | −0.02 | 0.45 |
| | Preference_SAM | 52 | 0.17 | 0.47 | 56 | 0.21 | 0.42 |
| C11 | Valence_FER | 240 | 0.04 | 0.14 | 240 | 0.00 | 0.19 |
| | Arousal_FER | 240 | 0.30 | 0.10 | 240 | 0.31 | 0.07 |
| | Preference_FER | 10 | 0.18 | 0.10 | 10 | 0.28 | 0.06 |
| | Valence_SAM | 52 | 0.21 | 0.39 | 56 | 0.23 | 0.47 |
| | Arousal_SAM | 52 | 0.09 | 0.43 | 56 | 0.05 | 0.48 |
| | Preference_SAM | 52 | 0.24 | 0.42 | 56 | 0.23 | 0.44 |
| C13 | Valence_FER | 240 | 0.02 | 0.17 | 240 | 0.04 | 0.20 |
| | Arousal_FER | 240 | 0.32 | 0.10 | 240 | 0.30 | 0.09 |
| | Preference_FER | 10 | 0.08 | 0.11 | 10 | 0.15 | 0.08 |
| | Valence_SAM | 52 | 0.25 | 0.40 | 56 | 0.20 | 0.48 |
| | Arousal_SAM | 52 | 0.04 | 0.47 | 56 | 0.02 | 0.46 |
| | Preference_SAM | 52 | 0.23 | 0.44 | 56 | 0.19 | 0.43 |
| C15 | Valence_FER | 240 | 0.04 | 0.15 | 240 | 0.03 | 0.23 |
| | Arousal_FER | 240 | 0.30 | 0.10 | 240 | 0.29 | 0.08 |
| | Preference_FER | 10 | 0.16 | 0.13 | 10 | 0.27 | 0.13 |
| | Valence_SAM | 52 | 0.21 | 0.40 | 56 | 0.15 | 0.42 |
| | Arousal_SAM | 52 | 0.05 | 0.44 | 56 | 0.02 | 0.45 |
| | Preference_SAM | 52 | 0.23 | 0.39 | 56 | 0.18 | 0.45 |
| C17 | Valence_FER | 240 | 0.06 | 0.20 | 240 | 0.02 | 0.18 |
| | Arousal_FER | 240 | 0.33 | 0.11 | 240 | 0.27 | 0.08 |
| | Preference_FER | 10 | 0.03 | 0.16 | 10 | 0.11 | 0.10 |
| | Valence_SAM | 52 | 0.23 | 0.40 | 56 | 0.17 | 0.47 |
| | Arousal_SAM | 52 | 0.11 | 0.43 | 56 | 0.04 | 0.46 |
| | Preference_SAM | 52 | 0.25 | 0.45 | 56 | 0.19 | 0.43 |
| C19 | Valence_FER | 240 | 0.10 | 0.12 | 240 | 0.03 | 0.22 |
| | Arousal_FER | 240 | 0.30 | 0.08 | 240 | 0.30 | 0.06 |
| | Preference_FER | 10 | 0.22 | 0.10 | 10 | 0.20 | 0.14 |
| | Valence_SAM | 52 | 0.23 | 0.38 | 56 | 0.10 | 0.44 |
| | Arousal_SAM | 52 | 0.05 | 0.42 | 56 | 0.00 | 0.45 |
| | Preference_SAM | 52 | 0.18 | 0.45 | 56 | 0.13 | 0.47 |
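As a reading aid for the descriptive statistics above, the following sketch computes a standardized mean difference (Cohen's d with a pooled standard deviation) from the N, mean, and SD of two groups. This is a descriptive illustration only and is not presented as the test used in this study; the function name `cohens_d` is hypothetical. Note also that the FER rows report N = 240 values per gender, which may be repeated measurements from the 10 participants in each group rather than independent observations, so the result should not be read as an inferential statistic.

```python
import math


def cohens_d(n1, mean1, sd1, n2, mean2, sd2):
    """Standardized mean difference (group 1 minus group 2) with a pooled SD."""
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    if pooled_var == 0:
        raise ValueError("pooled variance is zero")
    return (mean1 - mean2) / math.sqrt(pooled_var)


# Clip C19, Valence_FER: men (N, mean, SD) versus women (N, mean, SD),
# values copied from the table above.
d_c19_valence_fer = cohens_d(240, 0.10, 0.12, 240, 0.03, 0.22)
print(f"Cohen's d (men minus women) = {d_c19_valence_fer:.3f}")
```

A positive value indicates a higher mean for men than for women on that measure; the same call can be repeated for any other row of the table.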

| Visual Variables | Male | |||

| Valence_FER Adj. R2 = 0.076 | Arousal_FER Adj. R2 = 0.034 | |||

| t-Value | 95%CI | t-Value | 95%CI | |

| GVI | 2.923 ** | [0.010, 0.051] | ||

| Sky | 4.336 ** | [0.073, 0.193] | ||

| Building | ||||

| Constant | 0.460 | [−1.423, 2.288] | 43.759 ** | [28.050, 30.696] |

| Visual Variables | Female | |||

| Valence_FER Adj. R2 = 0.135 | Arousal_FER Adj. R2 = 0.000 | |||

| t-Value | 95%CI | t-Value | 95%CI | |

| GVI | 4.737 ** | [0.134, 0.324] | ||

| Sky | 5.961 ** | [0.207, 0.411] | ||

| Building | 3.700 ** | [0.104, 0.342] | ||

| Constant | −4.902 ** | [−31.952, −13.624] | 196.744 ** | [29.708, 30.310] |

| Visual Variables | Male | |||

| Valence_FER Adj. R2 = 0.164 | Arousal_FER Adj. R2 = 0.045 | |||

| t-Value | 95%CI | t-Value | 95%CI | |

| VPI | 3.322 ** | [0.013, 0.049] | ||

| Water | 4.834 ** | [0.080, 0.189] | ||

| Sky | 4.633 ** | [0.078, 0.193] | ||

| Building | ||||

| Constant | −0.227 | [−1.990, 1.579] | 52.377 ** | [28.371, 30.590] |

| Visual Variables | Female | |||

| Valence_FER Adj. R2 = 0.131 | Arousal_FER Adj. R2 = 0.178 | |||

| t-Value | 95%CI | t-Value | 95%CI | |

| VPI | 6.996 ** | [0.072, 0.128] | ||

| Water | 4.387 ** | [0.125, 0.329] | ||

| Sky | 5.915 ** | [0.206, 0.413] | 4.541 ** | [0.055, 0.138] |

| Building | 3.688 ** | [0.104, 0.342] | 4.035 ** | [0.047, 0.137] |

| Constant | −4.879 ** | [−32.069, −13.612] | 15.209 ** | [18.232, 23.662] |

| Visual Variables | Male | |||

| Valence_FER Adj. R2 = 0.258 | Arousal_FER Adj. R2 = 0.108 | |||

| t-Value | 95%CI | t-Value | 95%CI | |

| Tree | −6.101 ** | [−0.237, −0.121] | −1.865 * | [−0.088, 0.002] |

| Grass | ||||

| Shrub | −4.152 ** | [−0.080, −0.028] | −4.313 ** | [−0.060, −0.022] |

| Water | 4.097 ** | −3.073 ** | [−0.078, −0.017] | |

| Sky | [0.059, 0.169] | −3.089 ** | [−0.127, −0.028] | |

| Building | −2.377 * | [−0.195, −0.018] | −3.527 ** | [−0.144, −0.041] |

| Constant | 10.485 ** | [6.968, 10.195] | 27.773 ** | [33.079, 38.133] |

| Visual Variables | Female | |||

| Valence_FER Adj. R2 = 0.175 | Arousal_FER Adj. R2 = 0.245 | |||

| t-Value | 95%CI | t-Value | 95%CI | |

| Tree | 7.131 ** | [0.128, 0.226] | ||

| Grass | 5.813 ** | [0.049, 0.099] | 6.480 ** | [0.062, 0.117] |

| Shrub | 8.207 ** | [0.096, 0.157] | ||

| Water | 1.808 | [−0.004, 0.087] | ||

| Sky | 1.851 | [−0.003, 0.095] | 6.525 ** | [0.121, 0.226] |

| Building | 5.487 ** | [0.092, 0.195] | ||

| Constant | −2.318 * | [−3.177, −0.257] | 10.314 ** | [13.586, 20.007] |

| Aesthetic Preference | Male: Pearson’s r | Male: p Value | Female: Pearson’s r | Female: p Value |
|---|---|---|---|---|
| Valence_SAM | 0.801 ** | 0.000 | 0.702 ** | 0.000 |
| Arousal_SAM | 0.487 ** | 0.000 | 0.433 ** | 0.000 |

| Aesthetic Preference | Male: Pearson’s r | Male: p Value | Female: Pearson’s r | Female: p Value |
|---|---|---|---|---|
| Valence_FER_max | 0.249 * | 0.018 | 0.205 | 0.053 |
| Valence_FER_ave | 0.094 | 0.292 | 0.205 | 0.053 |
| Valence_FER_min | 0.112 | 0.292 | 0.205 | 0.053 |
| Arousal_FER_max | −0.037 | 0.732 | 0.205 | 0.053 |
| Arousal_FER_ave | 0.010 | 0.926 | 0.298 ** | 0.004 |
| Arousal_FER_min | 0.017 | 0.873 | 0.158 | 0.137 |
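The gender-wise correlations reported above can be illustrated with a minimal sketch that computes Pearson's r separately for each gender group. The record layout, the field names (`gender`, `preference`, `valence`), and the toy values are hypothetical and chosen only to make the example self-contained; they are not data from this study, and p values are not computed here.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


def r_by_group(records, x_key, y_key, group_key="gender"):
    """Split records by group and compute Pearson's r within each group."""
    groups = {}
    for record in records:
        groups.setdefault(record[group_key], []).append(record)
    return {
        group: pearson_r([r[x_key] for r in rows], [r[y_key] for r in rows])
        for group, rows in groups.items()
    }


# Toy records (hypothetical): one aesthetic preference score and one
# valence score per rating.
records = [
    {"gender": "male", "preference": 1.0, "valence": 2.0},
    {"gender": "male", "preference": 2.0, "valence": 4.0},
    {"gender": "male", "preference": 3.0, "valence": 6.0},
    {"gender": "female", "preference": 1.0, "valence": 3.0},
    {"gender": "female", "preference": 2.0, "valence": 2.0},
    {"gender": "female", "preference": 3.0, "valence": 1.0},
]

result = r_by_group(records, "preference", "valence")
print(result)
```

Computing the coefficient within each group, rather than on the pooled sample, is what allows the male and female columns to differ in both strength and significance.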

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Han, H.; Shen, G. Real-Time Sensor-Based and Self-Reported Emotional Perceptions of Urban Green-Blue Spaces: Exploring Gender Differences with FER and SAM. Sensors 2025, 25, 748. https://doi.org/10.3390/s25030748
