Abstract

This study presents a method for the active control of a follow-up lower extremity exoskeleton rehabilitation robot (LEERR) based on human motion intention recognition. Initially, to effectively support body weight and compensate for the vertical movement of the human center of mass, a vision-driven follow-and-track control strategy is proposed. Subsequently, an algorithm for recognizing human motion intentions based on machine learning is proposed for human-robot collaboration tasks. A muscle–machine interface is constructed using a bi-directional long short-term memory (BiLSTM) network, which decodes multichannel surface electromyography (sEMG) signals into flexion and extension angles of the hip and knee joints in the sagittal plane. The hyperparameters of the BiLSTM network are optimized using the quantum-behaved particle swarm optimization (QPSO) algorithm, resulting in a QPSO-BiLSTM hybrid model that enables continuous real-time estimation of human motion intentions. Further, to address the uncertain nonlinear dynamics of the wearer-exoskeleton robot system, a dual radial basis function neural network adaptive sliding mode Controller (DRBFNNASMC) is designed to generate control torques, thereby enabling the precise tracking of motion trajectories generated by the muscle–machine interface. Experimental results indicate that the follow-up-assisted frame can accurately track human motion trajectories. The QPSO-BiLSTM network outperforms traditional BiLSTM and PSO-BiLSTM networks in predicting continuous lower limb motion, while the DRBFNNASMC controller demonstrates superior gait tracking performance compared to the fuzzy compensated adaptive sliding mode control (FCASMC) algorithm and the traditional proportional–integral–derivative (PID) control algorithm.

1. Introduction

In recent years, stroke has emerged as a major cause of death and disability among adults [1,2]. Statistics indicate that Europe has approximately 1.4 million new stroke cases annually, while the United States reports over 800,000 new cases each year [3]. In China, there are 28.76 million current and former stroke patients, with 3.94 million new strokes and 2.19 million new deaths each year [4], with the incidence rate on the rise. Among these patients, around 65% require rehabilitation [5]. Traditional manual rehabilitation training faces many challenges, including a high patient volume and a limited number of rehabilitation specialists, which makes it difficult to achieve consistent training. Additionally, the costs of manual rehabilitation training are quite high [6]. In contrast, rehabilitation robots present notable benefits, such as providing consistent repetitive training and allowing for flexible and adjustable training intensity. They can deliver repetitive rehabilitation exercises for the legs, thereby enhancing patients’ physical ability [7]. This approach aids in promoting the compensation or reorganization of neural tissue function, remedying the functional deficits of damaged nerve cells, and ultimately facilitating the recovery of neural function to help patients restore their normal gait early recovery [8,9,10].

To realize the rehabilitation goals of stroke patients, the medical field typically integrates physiotherapy techniques (like electrical stimulation and massage) with treadmill-assisted exercise. Bodyweight-supported treadmill training (BWSTT) plays a dominant role in rehabilitation training. By adjusting the level of weight support, BWSTT can reduce the load on the lower limbs, providing safe and repeatable small-range gait training [11,12]. However, BWSTT is primarily conducted on a treadmill, lacking in real environments. Long-term reliance on BWSTT may affect the recovery of natural gait. To address the issue of abnormal gait in the BWSTT system, ground-walking exoskeleton systems have been introduced into rehabilitation training. These systems are typically equipped with active hip and knee joint devices [13], assisting patients in achieving ground-based walking. However, this type of exoskeleton faces challenges in maintaining balance, requiring users to exert significant effort using crutches or handrails for support. Additionally, they need to perform hip-lifting movements during walking to compensate for the vertical movement of the center of mass, which adds extra difficulty to gait training. To enhance patients’ active participation and improve the effectiveness of rehabilitation training, introducing human motion intention recognition technology is particularly important. This method can detect the patient’s movement state during rehabilitation exercises, ensuring both the safety and effectiveness of the rehabilitation process [14]. Human motion intention can be interpreted from two levels: dynamics and kinematics. At the dynamics level, the focus is on parameters related to force/torque in the human body, such as joint torque [15] and adaptive stiffness [16]. At the kinematics level, attention is paid to movement characteristics such as joint angles [17], joint angular velocity [18], and joint angular acceleration. This study primarily focuses on the kinematics level, identifying patients’ motion intentions during rehabilitation training by analyzing parameters such as joint angles, angular velocity, and angular acceleration. This approach enables a more precise capture of the patient’s movement characteristics, allowing for a more targeted rehabilitation training plan that promotes neurological recovery and gait reconstruction.

The sEMG signals are common physiological indicators of human motion intention. Through feature extraction algorithms, information highly correlated with the wearer’s motion intention can be extracted from biological signals to predict their motion intention [19,20]. There exist two main approaches for active gait control based on sEMG signals. The first method uses sEMG signals to identify different movement patterns, with a focus on designing classification algorithms with high recognition rates and multiple movement patterns [21,22]. However, sEMG signals with a fixed number of channels can only recognize a limited range of movement patterns, and the recognition results usually serve only as trigger signals to control the robot’s movement. This may affect the coordination between the exoskeleton and patients who still retain some lower limb muscle strength. The second method continuously estimates motion variables using sEMG signals, thereby achieving smooth motion control. To address the issue of delayed motion control in exoskeletons, Wang et al. proposed a lower limb continuous motion estimation method combining sEMG signals, deep belief networks, and random forest algorithms. This method enhances the accuracy and response speed of exoskeleton robot motion control by recognizing the wearer’s motion intentions [23]. Sun et al. introduced a feature-based convolutional neural network-bi-directional long-short term memory (CNN-BiLSTM) network model that integrates sEMG and inertial measurement unit (IMU) data for more accurate and real-time knee joint angle prediction. By optimizing feature selection using ensemble feature scorer (EFS) and profile likelihood maximization (PLM) algorithms, they significantly improved prediction performance [24]. Experimental results showed that this method outperformed traditional methods in both accuracy and real-time performance, thereby rendering it appropriate for lower limb rehabilitation training. Zeng et al. proposed a sEMG–Transformer-based continuous multi-joint angle motion prediction model for the lower limbs, which was applied to a lower extremity exoskeleton rehabilitation robot, successfully achieving more precise motion intention estimation and synchronized walking [25]. Experimental results indicated that this model outperformed existing methods such as convolutional neural networks (CNNs), back propagation (BP), and long short-term memory networks (LSTMs) in prediction performance. Although the above methods have been successfully validated on specific platforms, the complex modeling and calibration processes limit their broad application across individuals. To solve this problem, future research should focus on simplifying the model calibration process and improving its adaptability and generalization across different individuals, thereby promoting the widespread application of sEMG-based active gait control in rehabilitation training.

The motion control strategies for exoskeleton robots are closely relevant to intention recognition [26]. The control algorithm should follow the user’s motion intention as soon as human movement is recognized [27], making sure the robot moves in the same direction as the human body [28,29]. In the research on control algorithms for lower limb exoskeletons, iterative learning algorithms [30], adaptive algorithms [31], and fuzzy PID algorithms [32,33] are often used in embedded development. More researchers are integrating AI techniques as a result of the rapid development of computer technology and artificial intelligence—such as neural networks [34], machine learning [35], adaptive control [36], sliding mode control [37], and intelligent swarm algorithms [38,39]—with traditional control technologies to achieve precise tracking of human gait curves by exoskeletons. A humanoid neural network sliding mode controller based on human gait was proposed by Yu et al. With parameterized gait trajectories as targets, this controller creates a humanoid model based on human biomechanics and designs a humanoid control system for the robot. Given the errors in the dynamic model of the robot, neural networks are used to improve the precision of the model by compensating for the uncertain aspects. Furthermore, the system’s sliding mode control enhances stability, tracking efficiency, and response time [40]. Sun et al. introduced a compound position control strategy that employs secondary sliding mode control. This method utilizes bis-finite-time observers to monitor and compensate for two types of perturbations in real-time, and a super-twisting approach for the position control part to guarantee that the trajectory tracking error of the knee joint converges to zero in finite time [41]. Kenas et al. employed two radial basis function (RBF) neural networks to precisely estimate perturbations and uncertainty parameters. They integrated backstepping techniques with the super-twisting algorithm to reduce tracking errors, and the controller’s stability was validated using Lyapunov theory, ensuring precise tracking of the target trajectory [42]. In summary, the combination of RBF neural networks with sliding mode control can effectively compensate for and counteract external disturbances and friction, enabling precise tracking of gait trajectories in LEERR.

Existing LEERR systems have certain limitations in design and application, resulting in low levels of patient engagement in rehabilitation training and have consequently reduced rehabilitation efficacy. To address these issues, this study focuses on the following three key questions: (1) How can we combine the advantages of body-weight-supported and follow-up lower limb exoskeleton platforms to develop a new follow-up LEERR and propose a completed active control solution? (2) How can we leverage physiological information from the human body to achieve accurate and continuous motion intention estimation, enhancing the wearer’s active participation and thereby promoting more effective rehabilitation training? (3) How can we select an appropriate trajectory tracking controller to achieve precise gait trajectory tracking control for trajectories generated at the muscle–machine interface?

2. Methods

2.1. Overall System Framework

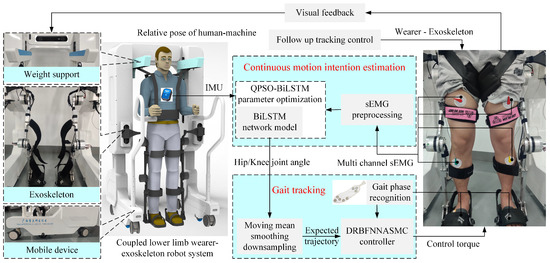

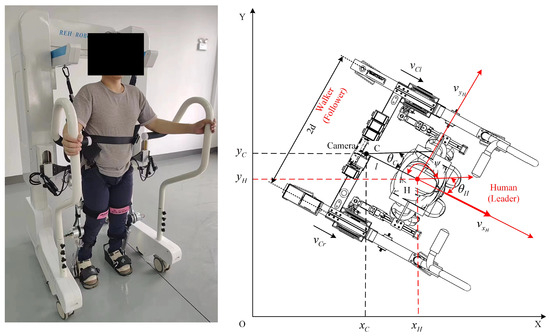

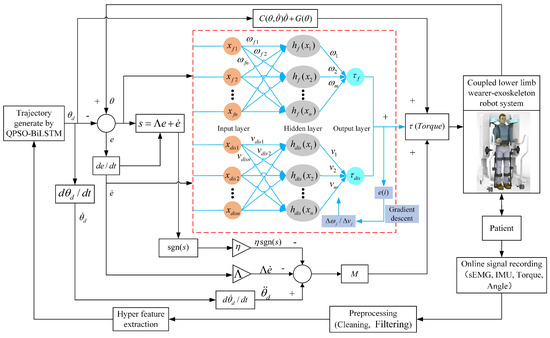

The framework of the proposed active control algorithm in this study is illustrated in Figure 1. The device mainly comprises three components: (1) Follow-up assistive frame: This component regulates the vertical motion of a weight-reducing cantilever by retracting and releasing a steel cable via a DC motor to provide follow-up body weight support. This design prevents potential falls during rehabilitation and compensates for the vertical movement of the center of mass during walking, correcting the improper hip-lifting gait post-stroke. (2) LEERR: The robot employs a bionic humanoid joint exoskeleton design to achieve high consistency between the exoskeleton and human joint movements, effectively simulating and supporting natural gait motion. (3) Sensor information system: This system includes a ZED stereo camera, IMU, and sEMG sensors. The ZED stereo camera captures depth information between the user and the follow-up assistive frame, while the IMU and sEMG sensors collect joint angles and sEMG signals to establish a mapping relationship between joint movements and myoelectric signals. The theoretical research also consists of three parts: (1) 3D human information extraction: Using the Python-OpenPose algorithm to detect the 2D shoulder information of the human body and combining it with binocular ranging principles to obtain depth information, a velocity controller is designed to achieve active tracking of the follow-up assistive frame. (2) Muscle–machine interface construction: A BiLSTM network is employed to construct the muscle–machine interface, mapping multi-channel sEMG signals in real-time to hip and knee joint movement angles. The QPSO algorithm is used for optimizing the hyperparameters of the BiLSTM network, forming a QPSO-BiLSTM model for continuous real-time estimation of human motion intentions. (3) Trajectory tracking controller design: A DRBFNNASMC controller is designed to generate control torques, enabling the robot to accurately track the trajectories generated by the muscle–machine interface.

Figure 1.

Active control framework of LEERR.

2.2. Vision-Driven Follow-Up Tracking Control

In this section, the Python-OpenPose algorithm is used for detecting the 2D message of the human shoulder, which is then combined with binocular vision to obtain depth information, enabling the perception of the relative 3D coordinates of the human during walking. Based on this information, a kinematic model of the follow-up assistive frame is established, and a velocity controller is designed to actively follow human motion.

2.2.1. Three-Dimensional Human Information Extraction Based on Python-OpenPose and Binocular Vision

In the process of achieving active tracking using the assistive frame, perceiving the relative 3D coordinate information of a human during walking is essential. Many researchers have conducted in-depth studies on this content. Hou et al. proposed a feature-matching method combining the scale-invariant feature transform and random sample consensus algorithms to provide 2D navigation information for mobile robots, achieving precise path tracking and navigation [43]. Quan et al. introduced a navigation algorithm that integrates deep reinforcement learning and recurrent neural networks. By optimizing path exploration with dual networks and recurrent neural modules, they improved pathfinding efficiency and reduced path length [44]. Efstathiou et al. presented a lightweight framework that combines CNNs with LSTM networks for detecting and identifying gait features from 2D laser sensor data, enhancing the accuracy and efficiency of online leg tracking and gait analysis for mobile assistive robots [45]. Wang et al. introduced a visual navigation approach that utilizes object-level topological semantic mapping in conjunction with heuristic search techniques. This methodology employs active visual perception and goal-oriented path segmentation, resulting in the generation of smooth trajectories through the application of Bernstein polynomials, thereby improving the navigation success rate and path efficiency of autonomous mobile robots [46].

Although the aforementioned methods can successfully detect human-related information, using multiple sensors to obtain navigation information is costly. Binocular vision, as a single sensor source, offers high robustness and completeness but relies on precise binocular feature matching. OpenPose, an open-source human pose estimation library, can accurately identify human skeletal points in 2D and 3D spaces, providing precise joint positions for improved tracking of human relative position and posture. Therefore, this study captures human motion posture using a ZED stereo camera, detects the 2D information of the human shoulder through the Python-OpenPose algorithm, and obtains depth information with binocular vision, thus avoiding the complexity of binocular feature matching.

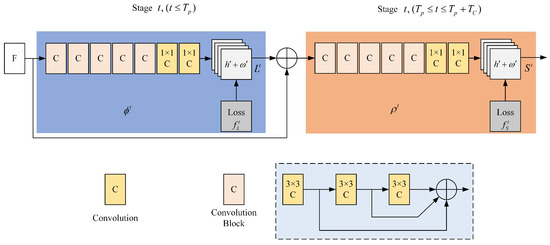

The network structure of OpenPose, illustrated in Figure 2, is primarily divided into two components: the left side is used for predicting the affinity field of key points, whereas the right side is used for predicting the confidence of key points. The input image is processed through a 10-layer Visual Geometry Group 19-layer network (VGG-19) network to extract image features F. Which are then used to generate predictions for the part affinity fields (PAF) and part confidence maps (PCM), respectively. Figure 2, and represent the CNNs used for predicting the PAF and PCM. The prediction formulas for the vector field and confidence are as follows:

Figure 2.

OpenPose network structure.

The network specifies the subsequent loss function for each stage t in each layer:

where represents a binary bit, when indicates that the position of point P is not labeled. and denote the network’s predicted value at point P, and and refer to the network’s actual values at point P. While training the confidence for key points, the confidence level for key points of the kth individual in the image exhibits akin to a Gaussian distribution centered around that position.

where k represents the kth individual, signifies the variance in the Gaussian function that regulates the range of actual joints. A higher value leads to an increase in , which in turn results in a decrease in prediction accuracy.

For the limb c of the kth individual in the image, the affinity vector field defined at pixel point P is as follows:

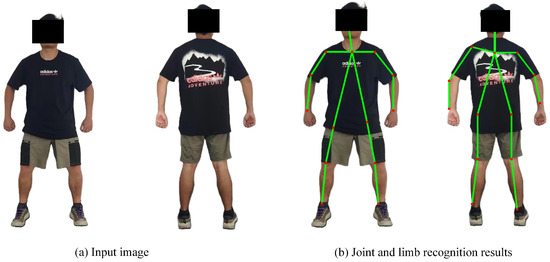

where is the unit vector that indicates the direction of the extremity, whereas and are the actual positions of and . When there is an overlap in the image, the average value of the affinity vector field at that point should be taken. By extracting the key points and their affinity vector fields, clustering analysis and optimization of the key points are performed, ultimately constructing the human skeleton(s) for single or multiple persons in the image. Figure 3 illustrates the outcomes of extracting the actual human skeleton using OpenPose, with the left and right corresponding to the input and output images of the ZED stereo camera, respectively, directly obtaining the 2D information of the main human joints. Considering the recognition accuracy of OpenPose and the placement of the ZED stereo camera on the follow-up assistive frame, this study primarily extracts the coordinates of both shoulders to guide tracking information for the follow-up assistive frame.

Figure 3.

Actual results of human joint and limb recognition based on Python-OpenPose. (The green lines represent the main skeleton of the human body, and the red dots represent the main key points of the human body).

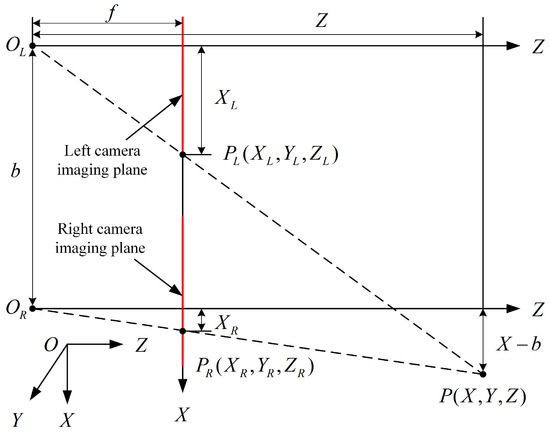

The above process only obtains the 2D shoulder coordinates of the human body, lacking depth information between the person and the camera. Since this study uses a stereo camera, depth information can be extracted using the principle of binocular vision. The principle of binocular vision is shown in Figure 4, where P represents the human shoulder. and are the optical centers of both cameras, whereas and are the image points on both sides. and denote the distances from the left and right image points to their corresponding optical centers, f is the focal length of the camera, b is the baseline distance of both camera centers, and Z is the depth information of P relative to optical centers. Based on the principle of similar triangles, the following relationship can be derived: . Thus, once the disparity is obtained, the depth information of the shoulder coordinates can be determined. Through the combination of Python-OpenPose and binocular vision, the 3D coordinates of both shoulders relative to the binocular center of the ZED stereo camera can be obtained, with the left shoulder coordinates denoted as and the right shoulder coordinates as .

Figure 4.

Principle of binocular ranging.

2.2.2. Follow-Up Assistive Frame Tracking Control

The kinematic model of the follow-up assistive frame is shown in Figure 5. The pose of the assistive frame in the coordinate system is represented by the vector , where and are the horizontal and vertical coordinates of the assistive frame in the X-Y plane. In this study, the coordinates of the assistive frame are set at the ZED stereo camera. The distance between the two drive wheels is , the radius of each drive wheel is r. is the orientation angle of the assistive frame, whereas and represent the linear and angular velocities of the assistive frame, respectively. In practical use, the assistive frame is equipped with anti-slip wheels, preventing lateral movement. Additionally, assuming that the centroid coincides with the geometric center, the frame must satisfy the pure rolling and no-slip conditions. Therefore, the following constraint equations apply:

Figure 5.

Human follow-up-assisted frame kinematic model.

The kinematic equations for the differential-drive follow-up assistive frame with two wheels are as follows:

During actual movement, the coordinates of the assistive frame can be obtained through encoders on the two drive wheels, and the coordinates of the person can be calculated using feedback parameters from the assistive frame and camera. The coordinates of the person can be represented as follows:

where l is the distance between the person and the camera, given by . is the orientation angle of the line connecting the person and the assistive frame. Since the assistive frame continuously moves in the direction of the person’s motion and the person holds the handrails on either side of the frame during movement, this geometric relationship can be approximated as .

During movement, the assistive frame needs to maintain a certain desired state relative to the person. Let the desired distance be and the desired orientation angle be . To achieve active following, the desired state of the assistive frame is defined by the person’s coordinates:

The tracking error of the assistive frame is defined as follows:

where can be fed back in real-time through the geometric relationship in double-shoulder coordinates. Consequently, the error differential matrix for the assistive frame is as follows:

A globally asymptotically stable velocity tracking controller has been designed according to the differential equations of the postural error, along with the method of inverse and Lyapunov stability theory. This controller utilizes the output linear velocity and angular velocity as assist control inputs to design the velocity controller for the follow-up-assisted frame:

where , and are all positive constants, and and are the desired linear and angular velocities, respectively. It can be obtained by taking the derivative of Equation (8) with respect to time.

2.3. Human Motion Intention Recognition

The methods for recognizing human motion intentions are typically categorized into discrete action classification [47] and continuous motion estimation [23,24,25]. Discrete action classification is limited to recognizing combinations of discrete joint movements and does not facilitate the continuous and unrestricted motion of a robot’s joints like human joints. In contrast, continuous motion estimation allows for smooth and continuous control of the robot’s joints by the patient, thus improving the coordination between stroke patients with partial autonomous movement ability and exoskeleton robots. From a kinematic perspective, human motion intention can be analyzed as joint angle trajectories, allowing for the establishment of a correlation between sEMG signals and joint angles. However, there is a complex nonlinear correlation exists between sEMG signals and joint angles. In this study, a BiLSTM network is used to build a muscle–machine interface that maps multi-channel sEMG signals into the motion angles of the lower limb joint in real time. Furthermore, the QPSO algorithm is used for optimizing the hyperparameters of the BiLSTM network, forming a QPSO-BiLSTM combined model to achieve continuous and real-time estimation of human motion intentions.

2.3.1. Quantum-Behaved Particle Swarm Optimization

QPSO is an advanced optimization algorithm that combines the concepts of quantum computing with traditional particle swarm optimization (PSO). In this algorithm, the state of a particle is determined by a wave function, meaning that the probability of a particle appearing in the search space depends solely on the amplitude of the wave function, unaffected by its previous trajectory. This characteristic enables particles to search the entire feasible space, increasing search diversity and helping to avoid local optima.

In QPSO, particles are updated through observation to obtain new individuals. Specifically, given the probability of observing a particle, its position can be determined. For each particle, multiple probabilities are randomly generated, and Monte Carlo methods are used to observe and obtain multiple individuals. The best individual among them is then selected, with the remaining individuals evaluated sequentially to ultimately obtain the next generation.

In QPSO, the average value is introduced to denote the historical best location of the particle swarm . The update steps for particles in the QPSO algorithm are as follows:

Step 1: Calculate the average best position [48].

where M represents the total count of particles, and indicates the of the ith particle in the iteration.

Step 2: Update the particle positions.

where is a random number between , denotes the current globally optimal particle, and is used to update the location of the ith particle.

The equation for updating the location of a particle is as follows:

where and u are random numbers between , taken with a probability of . represents the location of the ith particle, while denotes the contraction–expansion coefficient of the QPSO, which is used to control the rate of convergence. The most common way to adjust is to change linearly, and this is generally performed according to the following equation:

where denotes the max number of iterations, and and represent the max and min values of the parameter , respectively. Typically, .

2.3.2. BiLSTM Network

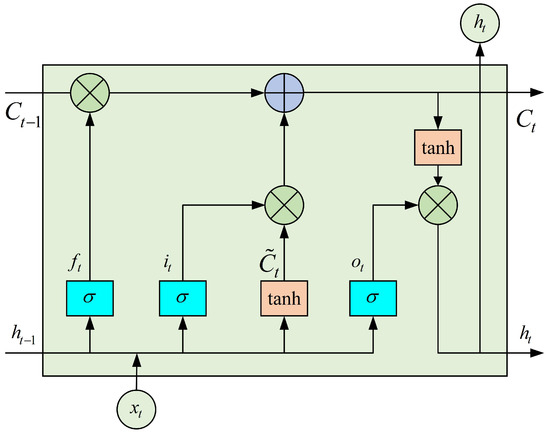

To address the issue of long-term dependency in recurrent neural networks (RNNs), researchers have proposed various new recurrent network architectures, such as LSTM networks, gated recursive units (GRUs) and BiLSTM networks. The LSTM network, by changing the recurrent structure of the RNN model, can effectively handle long-sequence data and offers enhanced long-term memory and modeling capabilities [49]. The architecture of LSTM is illustrated in Figure 6.

Figure 6.

LSTM unit structure.

Unlike traditional neural networks, the key parts of LSTM are the unit state and various gating mechanisms, which mainly include the forgetting gate, input gate, and output gate. Together, these determine the update of the unit state. These gating mechanisms use the sigmoid function to control the degree of information flow.

(1) Forget Gate: The first step in LSTM is to decide, via the forget gate, what information should be discarded from the unit state. It also extracts useful information from the external inputs and to incorporate into the internal state.

where and represent the weights and biases associated with the forgetting gate, respectively, while denotes the sigmoid function.

(2) Input Gate: The input gate is made up of two portions: a sigmoid layer that confirms which information will be renewed, and a tan h layer that produces a candidate vector containing the new information to be added.

where and represent the weights of the input gate and input node, respectively, whereas and denote the deviations, respectively.

By combining Equtions (16)–(18) the state information of the memory cell can be obtained, which updates by forgetting the part unit and renewing the memory unit.

(3) Output Gate: The output gate determines the portion of the unit state that will be utilized for generating the output.

where and are the output gate weights and biases, respectively.

In conclusion, the unit state undergoes processing through a tan h, which transforms the values to a range of −1∼1. Subsequently, this modified cell state is multiplied by the output from the output gate, thereby ensuring that only the designated cell state is produced as output.

where is the unit state and is the output gate.

Although LSTM addresses the issues of gradient vanishing or explosion in traditional RNNs [50], conventional LSTM networks can only encode information from one direction, meaning they process time-series data sequentially from the past to the future. In the LSTM model, exoskeleton gait trajectory parameters are trained as a time series from front to back. This unidirectional training method results in lower data utilization and fails to fully exploit intrinsic data features. Compared to traditional unidirectional LSTM networks, the BiLSTM network consists of two LSTM layers, forward and backward, which capture both past and future state information, thus improving prediction accuracy. By building a bidirectional RNN, BiLSTM effectively overcomes the limitations of LSTM in data information utilization [51,52].

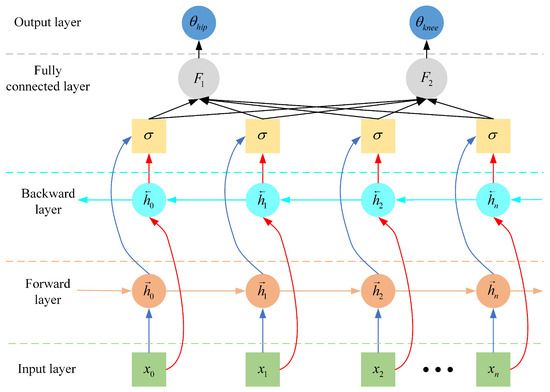

Therefore, we use a BiLSTM network to construct a muscle–machine interface to predict the lower limb joint angles. The structure of the BiLSTM model is shown in Figure 7, consisting mainly of an input layer, two LSTM layers (forward and backward), a fully connected layer, a forgetting layer, and an output layer. The input layer has six nodes, corresponding to the processed six-channel sEMG sequence signals. The fully connected layer integrates data features and performs classification, outputting results for two categories. The output layer consists of two nodes, corresponding to the flexion/extension joint angle values of the hip and knee joints. Through BiLSTM network training, a mapping between multi-channel sEMG signals and joint angles is achieved, generating the desired trajectories of the lower limb joints .

where and represent the forward and backward LSTM layers, respectively, whereas and denote the weight coefficients, respectively.

Figure 7.

BiLSTM model structure.

2.3.3. QPSO-BiLSTM Gait Trajectory Prediction

The QPSO algorithm is used for optimizing the parameters of the BiLSTM network, specifically the number of iterations, neurons, and the learning rate. After reading the time series, the dataset is partitioned into a training set and a test set, adhering to a 9:1 ratio. Once the BiLSTM network structure is determined, the neural network parameters are initialized, and QPSO optimizes the randomly initialized particle positions. The dataset is used to calculate the fitness value of each individual, which serves as one of the evaluation criteria. If the QPSO training criteria are met, the optimized parameters, such as the learning rate, are input into the BiLSTM network for training. Subsequently, the network undergoes validation utilizing the test set, resulting in the generation of predicted values. Following this, an anti-normalization is applied to obtain the ultima results. Eventually, the gait trajectory prediction performance is evaluated using mean absolute error (), mean absolute percentage error (), root mean square error (), and coefficient of determination (). The equations are delineated as follows:

where n stands for the number of predictions, refers to the real gait trajectory values, indicates the estimated values of gait trajectory, and represents the mean of the true values.

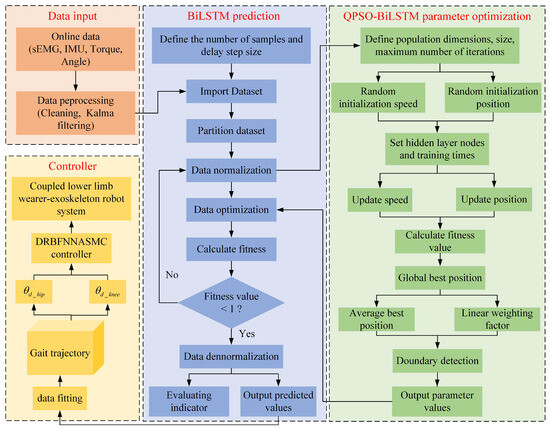

The gait trajectory prediction process includes four parts: data input, prediction, optimization, and control, as illustrated in Figure 8. The detailed steps are outlined below:

Step 1: The collected online data, including IMU and sEMG signals, are used as the model’s input.

Step 2: The sample data undergo normalization to a range of through the application of min–max normalization, according to Equation (27). Subsequently, the dataset is partitioned into training and testing samples in a ratio of 9:1.

where is the normalized data, whereas and are the min–max values of the sample, and x is the original sample data.

Step 3: Initialize the model’s hyperparameters, setting the number of hidden layers to m, learning rate to r, maximum iterations to , and population size to n.

Step 4: After randomly initializing the velocity and position, optimize the quantity of hidden layer nodes as well as the number of training iterations. Update the velocity and position, then calculate the fitness value and find the global optimal position.

Step 5: According to the global optimal position, introduce a linear weighting factor, calculate the average best position, perform boundary checking, and input it into the BiLSTM optimization module to recalculate particle fitness. If the fitness value is ≥1, recalculate; otherwise, proceed with metric evaluation and output the prediction results.

Step 6: Fit the predicted output values to obtain the hip and knee gait trajectories. Run the controller and apply it to the wearer–exoskeleton robot system.

Figure 8.

QPSO-BiLSTM gait trajectory prediction framework.

2.4. Control of Trajectory Tracking for LEERR Based on Motion Intent

This section designs the DRBFNNASMC controller for tracking the trajectories generated by the muscle–machine interface. The controller achieves global asymptotic tracking of joint trajectories in the presence of friction and external perturbation, demonstrating excellent control performance. The following will provide a detailed description of the controller design process and analyze the system’s state stability under the controller’s operation.

The 2-DOF LEERR dynamics model can be described as follows:

where represents the inertia matrix, denotes the centrifugal and Coriolis force matrix, stands for the gravity matrix, indicates the frictional force, refers to the external disturbances, and is the joint control torque input vector.

In actual rehabilitation training, uncertainties such as the friction between the exoskeleton insole and the ground and external disturbances are challenging to measure accurately. The RBF neural network is frequently employed for its superior approximation capabilities, particularly in modeling the nonlinear dynamics of controlled systems and minimizing approximation errors [53]. Therefore, this study adopts a dual RBF neural network to estimate the frictional force and external disturbances in Equation (25). Furthermore, by integrating the dual RBF neural network with sliding mode control, we propose the DRBFNNASMC control algorithm to track the gait trajectory predicted by the QPSO-BiLSTM. In a conventional RBF neural network, the correlation between the input and output vectors can be expressed as follows:

where x is the input of the neural network, j is the hidden layer, is the output of the Gaussian function, is the center vector of the Gaussian function, and is the width of the Gaussian function. and are the ideal weights, whereas and are the evaluated errors, and , . and are the ideal outputs.

Let the input to the neural network be defined as ; then, the output is given by the following:

where and are the Gaussian functions of the RBF neural network. For the RBF neural network, the gradient descent algorithm can be used to update the weights. The basic idea is to continuously adjust the values of and v in the direction of the negative gradient of until reaches a minimum. The approximation error criterion function for the network is defined as follows:

where represents the desired output vector, and denotes the real output vector. Following the gradient descent algorithm, the weight parameters can be modified in the following manner:

where . represents the learning rate, and denotes the momentum factor. As the RBF neural network learns online, , the weights and v will eventually stabilize, leading the system to achieve stability. The control objective for trajectory tracking is to make the actual angle value approach the ideal angle value continuously, i.e., , while is the ideal angle value, and is the real angle value.

Define the angle tracking error as . Taking the derivative of the error obtained , the sliding mode function is defined as follows:

Taking the derivative of Equation (36), we obtain the following:

The design of the trajectory tracking controller is as follows:

where and

Define the Lyapunov function for the closed-loop system as follows:

where .

The design of the adaptive rate is the following:

Substituting Equation (42) and Equation (43) into Equation (41), the following result can be obtained:

The approximation errors and of the neural network are positive real numbers that approach zero. Setting , we obtain . When , , and according to LaSalle’s invariance principle, the closed-loop system exhibits asymptotically stability. When , thus .

The designed DRBFNNASMC controller is illustrated in Figure 9. The inputs to this controller consist of the discrepancy between the target and actual angle values e, as well as the derivative of this error, represented as . The output is the motor torque . The motor torque acts on the coupled lower-limb wearer-exoskeleton robotic system, facilitating rehabilitation training for the patient. By recording sEMG, IMU, and motor encoder torque and angle data in real-time, feature data are extracted, and the QPSO-BiLSTM algorithm predicts trajectories to generate the patient’s required gait trajectory. By further integrating the dual RBF neural network with sliding mode control, the DRBFNNASMC controller is proposed to track the gait trajectory predicted by the QPSO-BiLSTM. Ultimately, the stability of the system is established through the application of the Lyapunov stability theory.

Figure 9.

DRBFNNASMC controller structure.

3. Results

To validate the effectiveness and accuracy of the proposed control scheme, a series of validation experiments were designed using a follow-up LEERR platform and its related systems, incorporating multiple submodules. The experimental results were then analyzed. Finally, through walking experiments with four participants, the subsystems were integrated to evaluate the overall control performance.

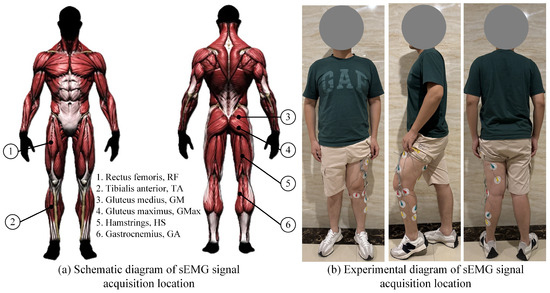

3.1. Data Collection and Preprocessing

In the experiment, sEMG signals, joint angles, and binocular vision data were primarily collected. The sEMG signals were acquired using a six-channel sEMG muscle electrical sensor module developed with Arduino, with a sampling rate of 600 kHz. The joint angles were calculated using the WitMotion IMU inertial navigation module with a sampling rate of 200 Hz. To eliminate high-frequency interference, a moving average filter was applied to both types of signals. Figure 10a,b show the schematic diagram and experimental setup of the six-channel sEMG signal acquisition positions, respectively, used to record the flexion/extension movement of the lower limb joints in the sagittal plane.

Figure 10.

Attachment positions of the six-channel sEMG sensors on the human body.

To process the sEMG signals, a Butterworth filter was applied. Considering the temporal mismatch between the high sampling rate of the sEMG signals and the low sampling rate of the posture angles, downsampling of the sEMG signals is required. The specific implementation is as follows:

where is the downsampled sEMG sequence, is the original sEMG sequence, n is the resampled data points, and M is the downsampling rate. To effectively extract the feature correspondence between the sEMG signal and joint angles, and to prevent the features from being overwhelmed by large volumes of data, both signals are normalized to express them in a dimensionless form. For visual information extraction, the chessboard method was used to calibrate the ZED stereo camera, obtaining the camera’s intrinsic, extrinsic, and distortion parameters for image correction.

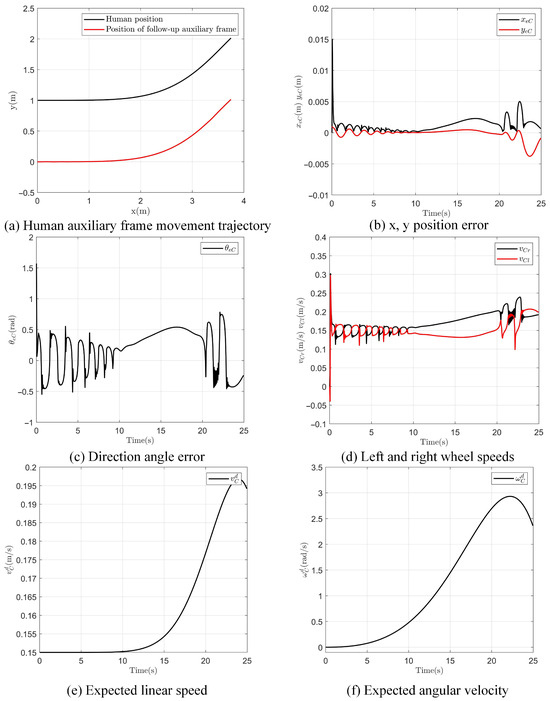

3.2. Follow-Up Tracking Control Experiment

To verify and assess the viability of the follow-up tracking control, a test experiment was conducted. In the experiment, the follow-up assistive frame, driven by vision-based control, generated control inputs for the two wheels using Equation (11) to achieve follow-up tracking of human walking. During the experiment, the controller’s control parameters were set as follows: 2 d = 0.4. The findings from the experiment are presented in Figure 11.

Figure 11.

Follow-up assistive frame and human follow-up tracking experiment results.

Figure 11a shows the movement trajectory of a person and the follow-up assistive frame in the x-y plane. Under the initial conditions, the person is 1 m in front of the follow-up assistive frame, which continuously maintains a following distance of 1 m. Figure 11b displays the position error in the x and y directions, with the results showing that the error is bounded, remaining between −0.005 m and 0.015 m. Figure 11c shows the directional angle error between the person and the follow-up assistive frame, with the error range between −0.6° and 1.6°. Figure 11d presents the left and right wheel speeds of the follow-up assistive frame, calculated using Equation (5) to control the frame’s movement. Figure 11e,f show the desired linear and angular velocities of the follow-up assistive frame, respectively, which are determined by the person’s posture relative to the frame.

From the above analysis, it is demonstrated that the follow-up assistive frame can achieve trajectory tracking of a person in the plane under vision-driven control. It should be noted that when the follow-up assistive frame is used in conjunction with the exoskeleton, since the exoskeleton only performs flexion/extension movements in the sagittal plane, the motion of the follow-up assistive frame is simplified to linear tracking in the forward and backward directions.

3.3. QPSO-BiLSTM Network Training and Gait Prediction

To build the training dataset for the QPSO-BiLSTM network, four participants (age: 24 ± 4 years, height: 168 ± 10 cm, weight: 72 ± 8 kg) were invited to participate in the experiment. After becoming fully familiar with the experimental procedures, the participants wore six-channel sEMG sensors, as illustrated in Figure 10, with sensors affixed to six muscles of the lower extremities to collect sEMG signals. Meanwhile, an IMU sensor was attached to the participant’s torso to collect posture angle signals. Combining these two types of signals, the angles of the lower limb joints in the sagittal plane were obtained.

During data collection, participants walked back and forth 15 times at a normal walking speed, with each walk lasting 2 min, followed by a 1-min break. During walking, each participant’s sEMG signals and joint angle signals were collected in real time to construct the training dataset. Since each participant has individualized muscle characteristics and joint motion models, a personalized continuous motion intention recognition model was trained for each participant. After processing the signals collected from a single participant, a total of 120,000 data points were generated, with six-channel sEMG signals as inputs and two-channel joint angles as outputs for training the QPSO-BiLSTM network. The entire input dataset was partitioned into two segments, with 90% allocated for model training and the remaining 10% designated for model testing. The network training was conducted using the MATLAB Deep Learning Toolbox, with model parameters set as illustrated in Table 1.

Table 1.

QPSO-BiLSTM network model training parameter settings.

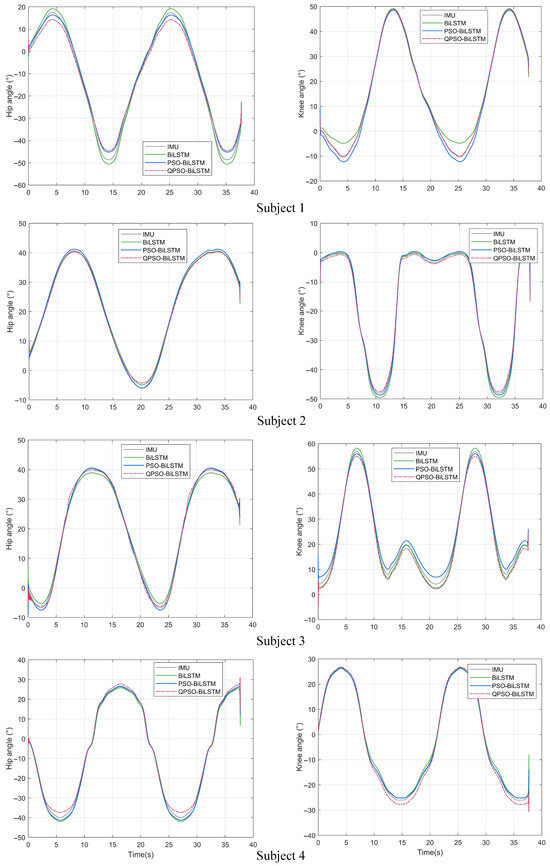

To evaluate the predictive performance of the QPSO-BiLSTM network, a comparative experiment was conducted. In the experiment, the participants walked at a normal speed at random, with their sEMG signals and joint angles collected. These signals were processed and used as test data. The test data were input into the trained QPSO-BiLSTM network to obtain the predicted joint angles, referred to as the QPSO-BiLSTM network predictions. The joint angles collected by the IMU sensor served as the true values, referred to as the IMU true values. To further compare the recognition performance, additional comparative experiments were conducted using the BiLSTM network and the PSO-BiLSTM network, with their respective predictions referred to as the BiLSTM network predictions and the PSO-BiLSTM network predictions. The findings of the experiment are presented in Figure 12.

Figure 12.

Comparison of joint angle predictions by three network models and IMU measured values. (IMU is an abbreviation for inertial measurement unit, BiLSTM stands for bi-directional long short-term memory network, PSO-BiLSTM denotes particle swarm optimization bi-directional long short-term memory network, and QPSO-BiLSTM refers to quantum-behaved particle swarm optimization bi-directional long short-term memory network).

To quantitatively analyze prediction accuracy, Equtions (23)–(26) were used to evaluate four aspects: , , , and . The findings are presented in Table 2. The analysis indicates that the predictions of all three models correlate closely with the true values. However, for the four participants, the prediction error associated with the BiLSTM network model was markedly higher than that of the PSO-BiLSTM network, while the QPSO-BiLSTM network exhibited the lowest prediction error. In summary, the QPSO-BiLSTM network can achieve continuous real-time prediction of joint angles and effectively recognize human motion intentions.

Table 2.

Quantitative analysis of joint angle predictions by three networks ( is an abbreviation for mean absolute error, denotes mean absolute percentage error, stands for root mean square error, and refers to coefficient of determination).

3.4. LEERR Trajectory Tracking Control Experiment Based on Motion Intention Recognition

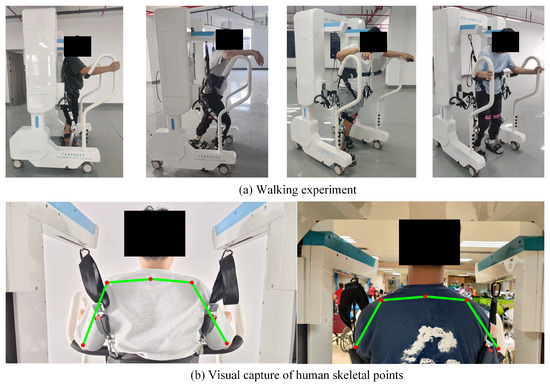

To validate the effectiveness of exoskeleton gait control based on motion intention recognition, a walking experiment was designed and conducted using the developed system. Four participants who contributed to BiLSTM network development were invited to participate in this experiment. They wore six-channel sEMG sensors for joint angle prediction. Meanwhile, a vision-driven follow-up assistive frame was used to provide weight support, helping the participants maintain balance while walking. During the experiment, the simulated hemiplegic patients moved their unaffected limb first, while the joint trajectories generated from motion intentions drove the exoskeleton to move the affected limb. The gait phase of both sides was monitored through feedback from foot pressure sensors. To address potential discrepancies between the actual initial position of the affected limb and the desired initial joint trajectory, a linear interpolation method was applied for transition adjustment. Figure 13 shows a schematic of the walking experiment, including the walking experiment setup (a) and the skeletal points captured visually during movement (b).

Figure 13.

Diagram of the walking experiment. (The green lines represent the main skeleton of the human body, and the red dots represent the main key points of the human body).

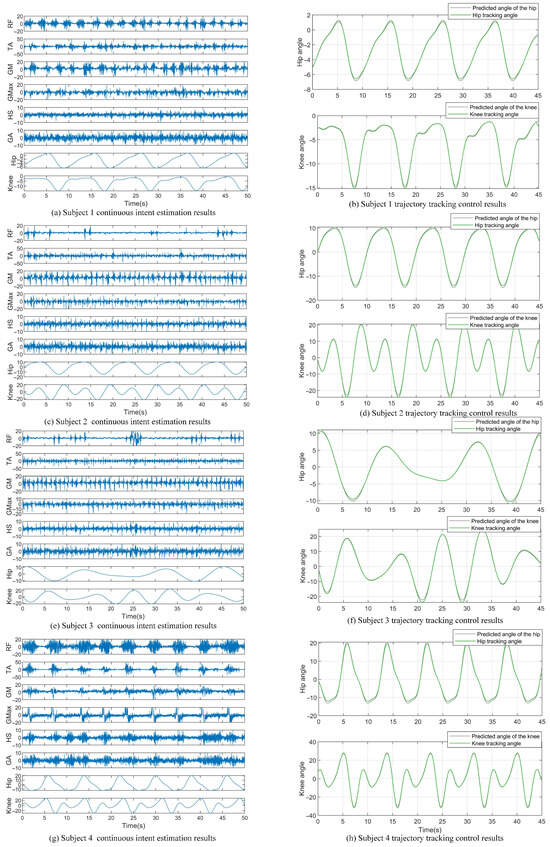

Figure 14 shows the real-time joint angle predictions and trajectory tracking results of four participants during walking, utilizing six-channel sEMG signals. In the experiment, the controller parameters were set as follows: , , and with the initial neural network weights set to 0.1. The generated trajectory was predicted in real time by the server to ensure both limbs maintained the same movement speed. Thus, the time reference point on the left graph represents the moment when the prediction side began movement, while the right graph’s time reference is set to when the control side started. The experimental results demonstrate that the trajectory predicted by the multi-channel sEMG signals combined with the QPSO-BiLSTM algorithm exhibited periodic changes, and the controller effectively tracked the generated gait trajectory. This indicates that the scheme of utilizing continuous motion intention recognition to enable the unaffected side to drive the affected side is feasible. Specifically, once the algorithm detects the movement intention, the subjects will be instructed to allow the robot to move autonomously, thereby guiding the movement of the affected limb. To minimize any inadvertent assistance, we suggest that future studies include trials where the recognition algorithm fails to assess the effect of voluntary muscle intention. Additionally, we will consider recruiting naive subjects who are unfamiliar with the study’s objectives to reduce potential bias.

Figure 14.

Trajectory tracking control results based on continuous motion intention estimation. RF stands for rectus femoris, TA refers to tibialis anterior, GM denotes gluteus medius, Gmax represents gluteus maximus, HS indicates hamstrings, and GA stands for gastrocnemius.

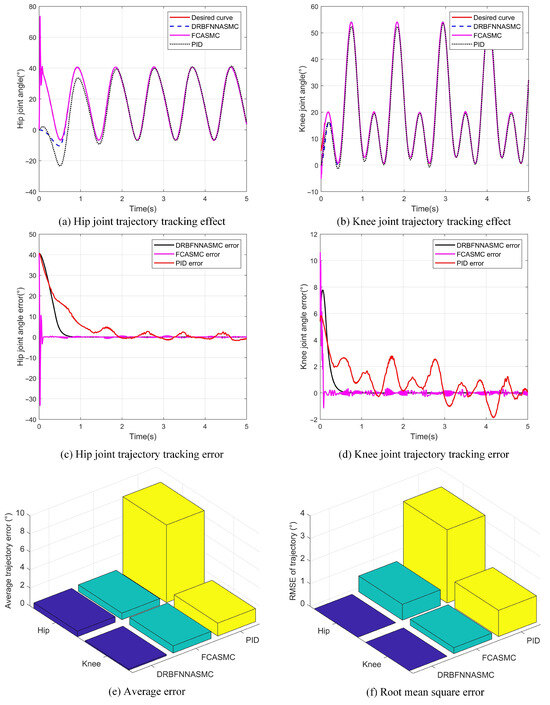

To evaluate the performance of the designed DRBFNNASMC controller, we designed and conducted a comparative experiment. The experiment was divided into three groups, employing the DRBFNNASMC trajectory tracking controller designed in this study, the FCASMC trajectory tracking controller, and the traditional PID trajectory tracking controller. In the experiment, the exoskeleton was used to track a segment of the gait trajectory, and the experimental results are illustrated in Figure 15.

Figure 15.

Comparison of the control effectiveness of three different controllers. (DRBFNNASMC stands for dual radial basis function neural network adaptive sliding mode controller, FCASMC refers to fuzzy compensated adaptive sliding mode controller, and PID denotes proportional–integral–derivative controller).

Figure 15a illustrates the tracking performance of the three controllers on the hip joint trajectory. As indicated by the black dashed line, the PID controller demonstrated significant error in the initial 2 s of the gait cycle and was unable to effectively respond to external disturbances, resulting in motor instability and failure to accurately track the desired gait trajectory. In contrast, the DRBFNNASMC and FCASMC controllers produced tracking errors only within the first 0.5 s and then stably tracked the desired gait trajectory. Figure 15b shows the tracking performance of the three controllers on the knee joint trajectory. As indicated by the black dashed line, the PID controller displayed significant oscillations throughout the gait cycle, with errors failing to converge. In comparison, the DRBFNNASMC and FCASMC controllers achieved sliding mode stability, with error convergence and stable tracking of the desired gait trajectory. Overall, the DRBFNNASMC and FCASMC controllers significantly outperform the PID controller in trajectory tracking.

Furthermore, Figure 15c,d show the display of the error curves for the hip and knee joints. The FCASMC controller’s error curve converged to zero within 0.2 s but then displayed periodic oscillations, failing to fully eliminate the effects of external disturbances and friction. This sustained high-frequency oscillation could adversely affect the motor and potentially lead to motor damage.

In conclusion, the trajectory tracking performance of the three controllers was evaluated based on average error and root mean square error (RMSE). Figure 15e shows the average tracking errors for the hip and knee joints under the three control schemes. The PID controller’s average errors for the hip and knee joints were 8.61° and 1.43°, respectively; for the FCASMC controller, they were 0.73° and 0.8°; and for the DRBFNNASMC controller, they were 0.62° and 0.13°. Specifically, the DRBFNNASMC controller’s average error for the hip joint was reduced by 15.1% and 92.8% compared to the FCASMC and PID controllers, respectively, while the average error for the knee joint was reduced by 83.75% and 90.9%. Figure 15f shows the RMSE for the hip and knee joint trajectory tracking under each control scheme. The RMSE values for the PID controller’s hip and knee joints were 3.24° and 1.157°, respectively; for the FCASMC controller, 0.699° and 0.265°; and for the DRBFNNASMC controller, 0.0014° and 0.000276°. Specifically, the DRBFNNASMC controller’s hip joint RMSE was reduced by 99.8% and 99.9% compared to the FCASMC and PID controllers, respectively, while the knee joint RMSE was reduced by 99.9% and 100%. In summary, the DRBFNNASMC controller demonstrated superior trajectory tracking capability in the exoskeleton robot system under external disturbances and friction.

4. Discussion

The vision-driven follow-up tracking control strategy can capture and respond to human motion in real time, ensuring that the rehabilitation robot’s movement trajectory closely aligns with the natural motion trajectory of the human body. This is critical for the effectiveness of rehabilitation training, as precise motion trajectory tracking can reduce unnatural or uncoordinated movements during the rehabilitation process, enhancing the effectiveness and safety of the training.

The results in Table 2 show that the proposed QPSO-BiLSTM algorithm successfully decodes the gait trajectories of the hip and knee joints during walking by utilizing QPSO-BiLSTM and sEMG signals to construct a muscle–machine interface. The experimental results further indicate that the BiLSTM network demonstrates significant advantages in multi-channel sEMG signal-based motion intention recognition, particularly in multi-feature and sequential prediction tasks. The application of the QPSO intelligent optimization algorithm further enhances the performance of the BiLSTM network model by optimizing parameters online, addressing shortcomings in real-time performance and adaptability. However, this approach also has some limitations. Currently, our algorithm is trained based on gait data from healthy individuals, and therefore may not accurately capture the gait intentions of stroke patients. To address this limitation, we plan to collect more sEMG data from stroke patients in future studies and optimize the existing algorithm for these data. We will also explore personalized training methods, adjusting the algorithm according to the specific conditions of the patients to improve its accuracy and applicability across different patient types. To better assess the algorithm’s applicability to stroke patients, future research will include diversified testing on different groups of stroke patients and attempt to combine other physiological signals (such as motion sensor data) to further enhance the accuracy of gait intention detection.

The average error and RMSE results in Figure 15e,f indicate that the DRBFNNASMC control algorithm significantly outperforms the FCASMC control algorithm and the traditional PID control algorithm in terms of error metrics. This demonstrates that the DRBFNNASMC control algorithm offers higher accuracy and stability in trajectory tracking.

Despite achieving significant results in several areas, there remain some issues that require further exploration. First, the generalization ability of the QPSO-BiLSTM model across different participants and movement tasks needs further validation. Second, the computational complexity and real-time performance of the DRBFNNASMC controller in complex nonlinear systems require further optimization. Additionally, the long-term effects and patient satisfaction with the follow-up assistive frame and rehabilitation robot system in practical clinical applications need further clinical validation.

5. Conclusions

This study proposes an active control method for a follow-up LEERR based on human motion intention recognition, and multiple experiments have verified its significant advantages. Initially, the experimental results indicate that the vision-driven follow-up tracking control strategy effectively supports body weight during walking, compensates for the vertical movement of the body’s center of mass, and achieves accurate tracking of the human movement trajectory. Additionally, by constructing a BiLSTM network and applying QPSO algorithm, the QPSO-BiLSTM combined model demonstrates outstanding performance in decoding multi-channel sEMG signals to predict lower limb joint angles. Compared to traditional BiLSTM and PSO-BiLSTM networks, the QPSO-BiLSTM network shows significant improvement in continuous motion prediction of the lower limbs. Furthermore, the designed DRBFNNASMC controller generates precise control torques to effectively drive the robot in tracking the trajectory generated by the muscle–machine interface, even in the face of uncertain nonlinear dynamics of the wearer-exoskeleton system. The experimental results show that the DRBFNNASMC controller outperforms the FCASMC control algorithm and traditional PID control algorithm in gait tracking. The proposed method holds great potential for clinical rehabilitation applications, providing a more intelligent, precise, and efficient solution for lower limb rehabilitation training. It enhances the intelligence level of the LEERR, achieving breakthroughs in trajectory tracking and motion intention recognition accuracy, and laying a foundation for future research and application of rehabilitation robots. Future research will continue to optimize and validate this method to promote its practical application in a broader range of rehabilitation scenarios.

Author Contributions

Data curation, Z.S. and P.Z.; formal analysis, Z.S. and X.W.; funding acquisition, X.G.; project administration, X.G. and R.Y.; resources, X.G. and R.Y.; writing—original draft, Z.S.; writing—review and editing, Z.S. and X.G. All authors contributed to the article and approved the final version submitted. All authors have read and agreed to the published version of the manuscript.

Funding

This research received funding from the Province Key R&D Program of Guangxi (AB22035006) and the Key Laboratory of Intelligent Control and Rehabilitation Technology of the Ministry of Civil Affairs (Grant no.102118170090010009004).

Institutional Review Board Statement

The studies involving human participants were reviewed and approved by Junlin Deng and Xueshan Gao; College of Mechanical and Marine Engineering, Beibu Gulf University. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Informed Consent Statement

Written informed consent has been obtained from the patient(s) to publish this study.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors state that they have no conflicts of interest.

References

- Wu, S.; Wu, B.; Liu, M.; Chen, Z.; Wang, W.; Anderson, C.S.; Sandercock, P.; Wang, Y.; Huang, Y.; Cui, L.; et al. Stroke in china: Advances and challenges in epidemiology, pre-vention, and management. Lancet Neurol. 2019, 18, 394–405. [Google Scholar] [CrossRef]

- Zhou, M.; Wang, H.; Zeng, X.; Yin, P.; Zhu, J.; Chen, W.; Li, X.; Wang, L.; Wang, L.; Liu, Y.; et al. Mortality, morbidity, and risk factors in China and its provinces, 1990–2017: A systematic analysis for the Global Burden of Disease Study 2017. Lancet 2019, 394, 1145–1158. [Google Scholar] [CrossRef] [PubMed]

- Paraskevas, K.I. Prevention and treatment of strokes associated with carotid artery stenosis: A research priority. Ann. Transl. Med. 2020, 8, 1260. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.-J.; Li, Z.-X.; Gu, H.-Q.; Zhai, Y.; Zhou, Q.; Jiang, Y.; Zhao, X.-Q.; Wang, Y.-L.; Yang, X.; Wang, C.-J.; et al. China Stroke Statistics: An update on the 2019 report from the National Center for Healthcare Quality Management in Neurological Diseases, China National Clinical Research Center for Neurological Diseases, the Chinese Stroke Association, National Center for Chronic and Non-communicable Disease Control and Prevention, Chinese Center for Disease Control and Prevention and Institute for Global Neuroscience and Stroke Collaborations. Stroke Vasc. Neurol. 2022, 7, 415–450. [Google Scholar] [PubMed]

- da Miao, M.; Gao, X.S.; Zhao, J.; Zhao, P. Rehabilitation robot following motion control algorithm based on human behavior intention. Appl. Intell. 2023, 53, 6324–6343. [Google Scholar] [CrossRef]

- Gittler, M.; Davis, A.M. Guidelines for adult stroke rehabilitation and recovery. JAMA 2018, 319, 820–821. [Google Scholar] [CrossRef]

- Ghonasgi, K.; Mirsky, R.; Bhargava, N.; Haith, A.M.; Stone, P.; Deshpande, A.D. Kinematic coordination captures learning during human–exoskeleton interaction. Sci. Rep. 2023, 13, 10322. [Google Scholar] [CrossRef]

- Bae, E.K.; Park, S.E.; Moon, Y.; Chun, I.T.; Choi, J. A robotic gait training system with a stair-cextremitying mode based on a unique exoskeleton structure with active foot plates. Int. J. Control Autom. Syst. 2020, 18, 196–205. [Google Scholar] [CrossRef]

- Ling, W.; Yu, G.; Li, Z. Lower extremity exercise rehabilitation assessment based on artificial intelligence and medical big data. IEEE Access 2019, 7, 126787–126798. [Google Scholar] [CrossRef]

- Yang, T.; Gao, X. Adaptive neural sliding-mode controller for alternative control strategies in lower extremity rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 28, 238–247. [Google Scholar] [CrossRef]

- Pisla, D.; Nadas, I.; Tucan, P.; Albert, S.; Carbone, G.; Antal, T.; Banica, A.; Gherman, B. Development of a control system and functional validation of a parallel robot for lower extremity rehabilitation. Actuators 2021, 10, 277. [Google Scholar] [CrossRef]

- Chen, C.; Zhang, S.; Zhu, X.; Shen, J.; Xu, Z. Disturbance observer-based patient-cooperative control of a lower extremity rehabilitation exoskeleton. Int. J. Precis. Eng. Manuf. 2020, 21, 957–968. [Google Scholar] [CrossRef]

- He, Y.; Xu, Y.; Hai, M.; Feng, Y.; Liu, P.; Chen, Z.; Duan, W. Exoskeleton-assisted rehabilitation and neuroplasticity in spinal cord injury. World Neurosurg. 2024, 185, 45–54. [Google Scholar] [CrossRef]

- Li, K.; Zhang, J.; Wang, L.; Zhang, M.; Li, J.; Bao, S. A review of the key technologies for sEMG-based human-robot interaction systems. Biomed. Signal Process. Control 2020, 62, 102074. [Google Scholar] [CrossRef]

- Liang, C.; Hsiao, T. Admittance control of powered exoskeletons based on joint torque estimation. IEEE Access 2020, 8, 94404–94414. [Google Scholar] [CrossRef]

- Li, Z.; Huang, Z.; He, W.; Su, C.Y. Adaptive impedance control for an upper extremity robotic exoskeleton using biological signals. IEEE Trans. Ind. Electron. 2016, 64, 1664–1674. [Google Scholar] [CrossRef]

- Wang, W.; Qin, L.; Yuan, X.; Ming, X.; Sun, T.; Liu, Y. Bionic control of exoskeleton robot based on motion intention for rehabilitation training. Adv. Robot. 2019, 33, 590–601. [Google Scholar] [CrossRef]

- Foroutannia, A.; Akbarzadeh-T, M.-R.; Akbarzadeh, A.; Tahamipour-Z, S.M. Adaptive fuzzy impedance control of exoskeleton robots with electromyography-based convolutional neural networks for human intended trajectory estimation. Mechatronics 2023, 91, 102952. [Google Scholar] [CrossRef]

- Feng, Y.; Yu, L.; Dong, F.; Zhong, M.; Pop, A.A.; Tang, M.; Vladareanu, L. Research on the method of identifying upper and lower extremity coordinated movement intentions based on surface EMG signals. Front. Bioeng. Biotechnol. 2024, 11, 1349372. [Google Scholar] [CrossRef]

- Luo, S.; Meng, Q.; Li, S.; Yu, H. Research of intent recognition in rehabilitation robots: A systematic review. Disabil. Rehabil. Assist. Technol. 2024, 19, 1307–1318. [Google Scholar] [CrossRef]

- Iqbal, N.; Khan, T.; Khan, M.; Hussain, T.; Hameed, T.; Bukhari, S.A. Neuromechanical signal-based parallel and scalable model for lower extremity movement recognition. IEEE Sensors J. 2021, 21, 16213–16221. [Google Scholar] [CrossRef]

- Li, C.; Chen, X.; Zhang, X.; Wu, D. Research on electromyography-based pattern recognition for inter-extremity coordination in human crawling motion. Front. Neurosci. 2024, 18, 1349347. [Google Scholar]

- Wang, F.; Lu, J.; Fan, Z.; Ren, C.; Geng, X. Continuous motion estimation of lower extremitys based on deep belief networks and random forest. Rev. Sci. Instruments 2022, 93, 044106. [Google Scholar] [CrossRef]

- Sun, N.; Cao, M.; Chen, Y.; Chen, Y.; Wang, J.; Wang, Q.; Chen, X.; Liu, T. Continuous estimation of human knee joint angles by fusing kinematic and myoelectric signals. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2446–2455. [Google Scholar] [CrossRef]

- Zeng, M.; Gu, J.; Feng, Y. Motion Prediction Based on sEMG-Transformer for Lower Extremity Exoskeleton Robot Control. In Proceedings of the 2023 International Conference on Advanced Robotics and Mechatronics (ICARM), Sanya, China, 8–10 July 2023; pp. 864–869. [Google Scholar]

- Wu, Q.; Chen, Y. Development of an intention-based adaptive neural cooperative control strategy for upper-extremity robotic rehabilitation. IEEE Robot. Autom. Lett. 2020, 6, 335–342. [Google Scholar] [CrossRef]

- Mehr, J.K.; Sharifi, M.; Mushahwar, V.K.; Tavakoli, M. Intelligent locomotion planning with enhanced postural stability for lower-extremity exoskeletons. IEEE Robot. Autom. Lett. 2021, 6, 7588–7595. [Google Scholar] [CrossRef]

- Khairuddin, I.M.; Sidek, S.N.; Majeed, A.P.A.; Razman, M.A.M.; Puzi, A.A.; Yusof, H.M. The classification of movement intention through machine learning models: The identification of significant time-domain EMG features. PeerJ Comput. Sci. 2021, 7, e379. [Google Scholar] [CrossRef]

- Shi, Q.; Ying, W.; Lv, L.; Xie, J. Deep reinforcement learning-based attitude motion control for humanoid robots with stability constraints. Ind. Robot Int. J. Robot. Res. Appl. 2020, 47, 335–347. [Google Scholar] [CrossRef]

- Guan, W.; Zhou, L.; Cao, Y.S. Joint motion control for lower extremity rehabilitation based on iterative learning control (ILC) algorithm. Complexity 2021, 2021, 6651495. [Google Scholar] [CrossRef]

- Hasan, S.K.; Dhingra, A.K. An adaptive controller for human lower extremity exoskeleton robot. Microsyst. Technol. 2021, 27, 2829–2846. [Google Scholar] [CrossRef]

- Kunyou, H.; Lumin, C. Research of fuzzy PID control for lower extremity wearable exoskeleton robot. In Proceedings of the 2021 4th International Conference on Intelligent Autonomous Systems (ICoIAS), Wuhan, China, 14–16 May 2021; pp. 385–389. [Google Scholar]

- Yang, Y. Improvement with fuzzy PID control method control system for flexible exoskeleton. In Proceedings of the AIP Conference Proceedings; AIP Publishing: Melville, NY, USA, 2024; Volume 3144. [Google Scholar]

- Lin, C.J.; Sie, T.Y. Design and experimental characterization of an artificial neural network controller for a lower extremity robotic exoskeleton. Actuators 2023, 12, 55. [Google Scholar] [CrossRef]

- Sun, Y.; Tang, Y.; Zheng, J.; Dong, D.; Chen, X.; Bai, L. From sensing to control of lower extremity exoskeleton: A systematic review. Annu. Rev. Control 2022, 53, 83–96. [Google Scholar] [CrossRef]

- Sun, J.; Wang, J.; Yang, P.; Zhang, Y.; Chen, L. Adaptive finite-time control for wearable exoskeletons based on ultra-local model and radial basis function neural network. Int. J. Control Autom. Syst. 2021, 19, 889–899. [Google Scholar] [CrossRef]

- Wang, J.; Liu, J.; Zhang, G.; Guo, S. Periodic event-triggered sliding mode control for lower extremity exoskeleton based on human-robot cooperation. ISA Trans. 2022, 123, 87–97. [Google Scholar] [CrossRef]

- Soleimani Amiri, M.; Ramli, R.; Ibrahim, M.F.; Abd Wahab, D.; Aliman, N. Adaptive particle swarm optimization of pid gain tuning for lower-extremity human exoskeleton in a virtual environment. Mathematics 2020, 8, 2040. [Google Scholar] [CrossRef]

- Zhang, P.; Zhang, J. Lower extremity exoskeleton robots’ dynamics parameters identification based on improved beetle swarm optimization algorithm. Robotica 2022, 40, 2716–2731. [Google Scholar] [CrossRef]

- Yu, J.; Zhang, S.; Wang, A.; Li, W.; Ma, Z.; Yue, X. Humanoid control of lower extremity exoskeleton robot based on human gait data with sliding mode neural network. CAAI Trans. Intell. Technol. 2022, 7, 606–616. [Google Scholar] [CrossRef]

- Sun, Z.; Qiu, J.; Zhu, J.; Li, S. A composite position control of flexible lower extremity exoskeleton based on second-order sliding mode. Nonlinear Dyn. 2023, 111, 1657–1666. [Google Scholar] [CrossRef]

- Kenas, F.; Saadia, N.; Ababou, A.; Ababou, N. Model-free based adaptive BackSteping-Super Twisting-RBF neural network control with α-variable for 10 DOF lower extremity exoskeleton. Int. J. Intell. Robot. Appl. 2024, 8, 122–148. [Google Scholar] [CrossRef]

- Hou, X.; Li, W.; Han, Y.; Wang, A.; Yang, Y.; Liu, L.; Tian, Y. A novel mobile robot navigation method based on hand-drawn paths. IEEE Sensors J. 2020, 20, 11660–11673. [Google Scholar] [CrossRef]

- Quan, H.; Li, Y.; Zhang, Y. A novel mobile robot navigation method based on deep reinforcement learning. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420921672. [Google Scholar] [CrossRef]

- Efstathiou, D.; Chalvatzaki, G.; Dometios, A.; Spiliopoulos, D.; Tzafestas, C.S. Deep leg tracking by detection and gait analysis in 2D range data for intelligent robotic assistants. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 2657–2662. [Google Scholar]

- Wang, F.; Zhang, C.; Zhang, W.; Fang, C.; Xia, Y.; Liu, Y.; Dong, H. Object-based reliable visual navigation for mobile robot. Sensors 2022, 22, 2387. [Google Scholar] [CrossRef] [PubMed]

- Zhang, P.; Gao, X.; Miao, M.; Zhao, P. Design and control of a lower limb rehabilitation robot based on human motion intention recognition with multi-source sensor information. Machines 2022, 10, 1125. [Google Scholar] [CrossRef]

- Zhao, L.; Cao, N.; Yang, H. Forecasting regional short-term freight volume using QPSO-LSTM algorithm from the perspective of the importance of spatial information. Math. Biosci. Eng. 2023, 20, 2609–2627. [Google Scholar] [CrossRef]

- Xiang, Z.; Yan, J.; Demir, I. A rainfall-runoff model with LSTM-based sequence-to-sequence learning. Water Resour. Res. 2020, 56, e2019WR025326. [Google Scholar] [CrossRef]

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270. [Google Scholar] [CrossRef]

- Pirani, M.; Thakkar, P.; Jivrani, P.; Bohara, M.H.; Garg, D. A comparative analysis of ARIMA, GRU, LSTM and BiLSTM on financial time series forecasting. In Proceedings of the 2022 IEEE International Conference on Distributed Computing and Electrical Circuits and Electronics (ICDCECE), Ballari, India, 23–24 April 2022; pp. 1–6. [Google Scholar]

- Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The performance of LSTM and BiLSTM in forecasting time series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Angeles, CA, USA, 9–12 December 2019; pp. 3285–3292. [Google Scholar]

- Chu, Y.; Fei, J.; Hou, S. Adaptive global sliding-mode control for dynamic systems using double hidden layer recurrent neural network structure. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 1297–1309. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).