1. Introduction

Water scarcity is rapidly emerging as one of the most pressing global challenges of the 21st century. As emphasized by the United Nations Sustainable Development Goals, particularly SDG 6 on clean water and sanitation, the sustainable management of freshwater resources is essential for human well-being, food security, and environmental resilience [1]. Although in some regions water is still perceived as abundant and readily available, this perception is increasingly outdated due to the impacts of climate change, population growth, and expanding agricultural demand. Agriculture alone accounts for over 70% of global freshwater withdrawals, making irrigation a critical area for strategic intervention [2].

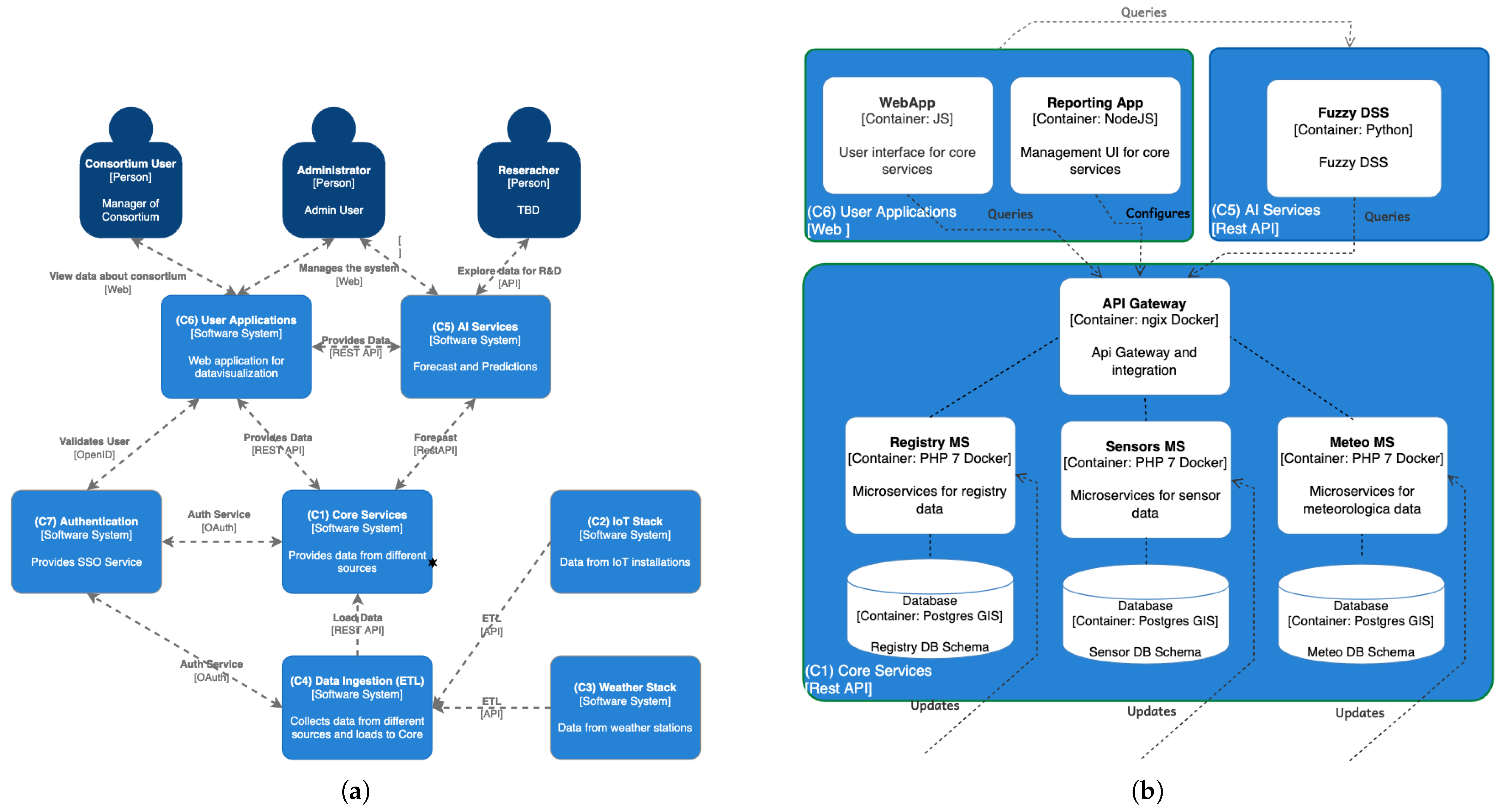

Recent advances in remote sensing, variable-rate application systems, autonomous machinery, and Internet of Things (IoT) networks are generating unprecedented volumes of field data [3,4]. When combined with AI-driven analytics, these technologies can support real-time monitoring and adaptive control, reducing water consumption while sustaining yields [5]. Unlike fully autonomous Industry 4.0 settings, many precision irrigation scenarios can operate with low-cost sensors and actuators, offloading computationally intensive tasks to intermediate gateways or the cloud [6]. Such architectures lower field-level costs while enabling advanced processing and decision-support capabilities. Despite this potential, irrigation management faces persistent technical and economic barriers: models must remain accurate across heterogeneous microclimates, recommendations must be interpretable to build farmer trust [7], and solutions must be viable within the narrow profit margins of farming. Local environmental variability, even within a single field, complicates decision-making, and the growing frequency of extreme weather events demands systems that adapt rapidly to changing conditions. These needs point toward hybrid decision-making approaches that combine ultra-local sensing with short-term forecasts, filtered and aggregated at the edge or in the cloud [6], to deliver timely, context-aware irrigation recommendations. In this work, ultra-local sensing refers to very high-resolution measurements collected directly at the sensor location (e.g., a soil tensiometer installed at a precisely georeferenced position) rather than obtained through spatial interpolation.

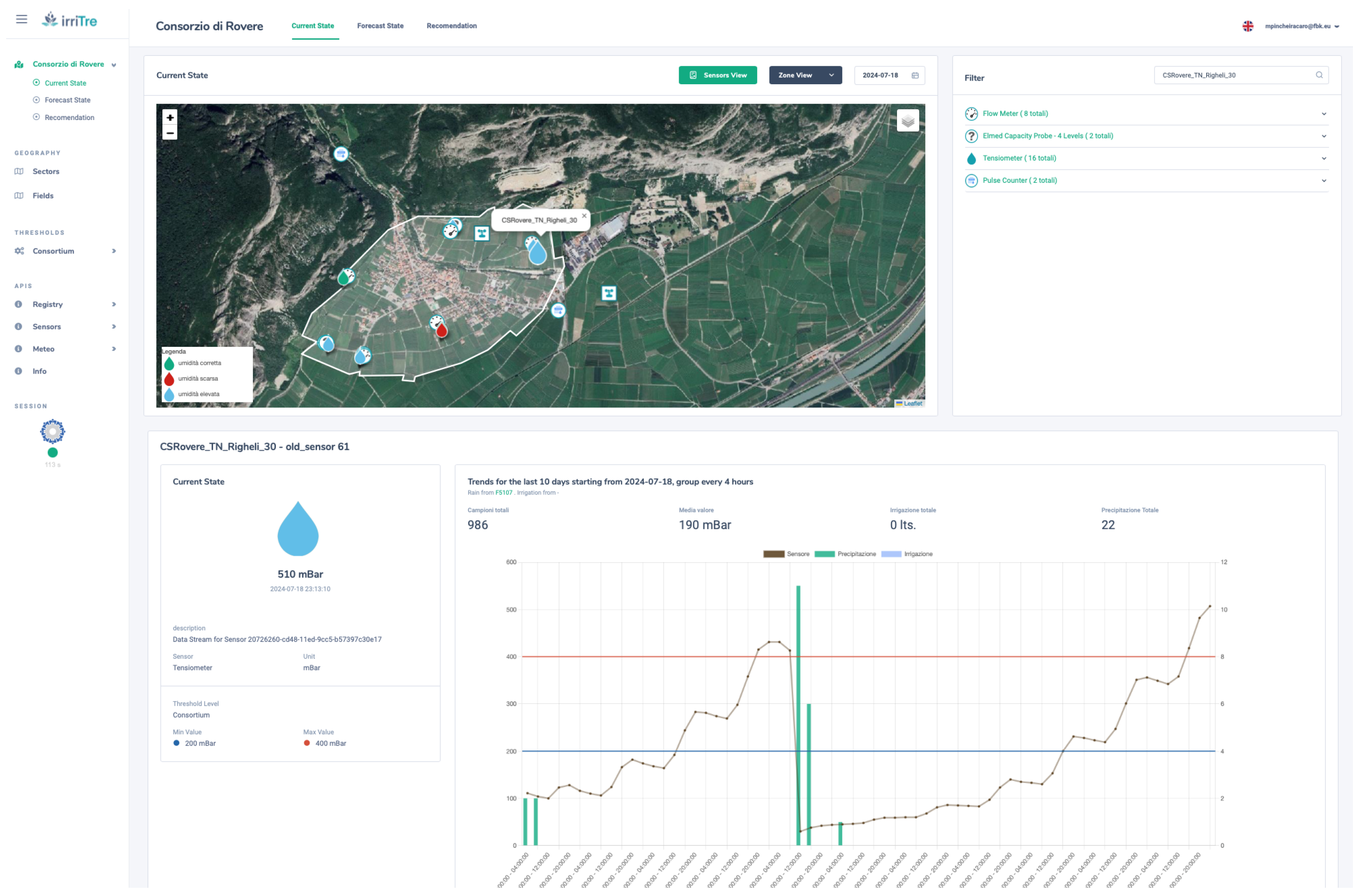

Within this context, agricultural decision support systems, and more specifically Irrigation Decision Support Systems (IDSS), have emerged as key technologies for enabling data-driven irrigation management [8]. These systems integrate real-time data on weather, soil moisture, and crop stress, possibly collected via IoT devices, to support optimized water use. Among the various soft computing techniques applied in IDSS, fuzzy logic has gained increasing traction due to its ability to handle uncertainty and imprecise data, as extensively reviewed by Patel et al. [9]. Fuzzy inference systems are particularly well-suited for translating heterogeneous sensor inputs into actionable irrigation strategies and for supporting rule-based, autonomous decision-making within decentralized IoT architectures.

However, many existing IDSS implementations, fuzzy-based or otherwise, still rely on static, physics-based models with limited integration of real-time data streams or predictive analytics [10]. A promising but underexplored direction is to combine the descriptive, rule-based reasoning of fuzzy logic with the adaptive capabilities of machine learning (ML) [11]. This hybrid approach could enable anticipatory irrigation decisions that better reflect evolving field conditions, reducing both water waste and crop stress.

In this work, we compare two fuzzy IDSS implementations: (i) a Mamdani-based inference engine with expert-defined rules [11], and (ii) a Takagi–Sugeno-based system introduced here, built on an Adaptive Neuro-Fuzzy Inference System (ANFIS), which enables automated learning from ultra-local field data. Both integrate predictive components from previously proposed machine learning models [12,13], deployed in the cloud. Experiments on real field data show that both controllers reduce water use compared to traditional irrigation while keeping soil moisture within agronomic thresholds. The Mamdani-based system reduces the occurrence of critical dry days, providing slightly better soil moisture stability. In contrast, the ANFIS-based controller achieves greater water savings by adopting a more conservative irrigation strategy (i.e., applying less water). These results reveal a trade-off between maximizing water efficiency, which for the ANFIS controller amounts to a form of deficit irrigation (i.e., deliberately allowing for mild water stress), and maintaining tighter soil moisture control, highlighting the practical relevance of both approaches depending on management priorities.

The remainder of this paper is organized as follows:

Section 2 reviews related work on precision agriculture, with a focus on IDSSs and the application of fuzzy logic in water management;

Section 3 presents the proposed fuzzy-logic-based systems and the comparison strategy;

Section 4 describes the software–hardware platform, the study area, and the datasets used;

Section 5 outlines the counterfactual simulation and bootstrap-based analysis used for assessing the performance of the systems;

Section 6 discusses the comparative results and trade-offs; and

Section 7 summarizes the findings and highlights future research directions.

2. Related Work

Advances in precision agriculture, such as remote sensing, variable-rate systems, autonomous machinery, IoT, and AI, are transforming farm management by enabling real-time monitoring, adaptive control, and data-driven decision-making [3,4,5,14]. These technologies have paved the way for decision support systems that integrate diverse data sources to provide practical, evidence-based recommendations for more efficient and sustainable agricultural practices [10,15].

In recent years, IDSS have attracted growing attention among researchers, practitioners, and policymakers [16,17]. IDSS are designed to support farmers in deciding when and how much to irrigate, ensuring that water is used precisely and efficiently. To achieve these goals, IDSS can integrate various data sources, such as weather forecasts, soil moisture data, and crop-specific water requirements, with advanced analytical and predictive methods [4]. In addition to improving water management, these systems can substantially enhance farm profitability [18,19].

Rosillon et al. [20] propose a near real-time spatial interpolation method for air temperature and humidity, improving IDSS accuracy through kriging and reanalysis data [21]. Conde et al. [22] design an adaptive DSS that integrates human inputs to improve scheduling efficiency. In viticulture, Kang et al. [23] introduce an IDSS for regulated deficit irrigation in wine grapes, focusing on soil moisture monitoring. King et al. [24] present an IoT-based IDSS with a crop water stress index and neural networks for precision irrigation. Simionesei et al. [8] present an IDSS deployed in southern Portugal, which integrates data from local weather stations, 7-day weather forecasts, and the MOHID-Land soil water balance model [25].

Regarding the use of fuzzy logic in combination with IoT technology for IDSS, Patel et al. [9] present an autonomous irrigation device that processes multiple field inputs, including current weather conditions, air temperature, soil moisture, and water availability in a storage tank. The system employs a fuzzy inference engine with 81 manually defined rules to automate the opening and closing of a water valve, thereby optimizing irrigation schedules without human intervention. Similarly, Kokkonis et al. [26] propose an IoT-based irrigation device that performs local sensing and actuation through an embedded fuzzy inference system. Their system collects data from multiple soil moisture sensors, as well as air temperature and humidity sensors, each modeled using three fuzzy membership levels. The fuzzy logic algorithm, implemented directly on the microcontroller, determines the opening angle of a central servo valve to control irrigation. These approaches enable real-time, on-device decision-making without relying on constant connectivity, making them particularly suitable for deployment in remote or infrastructure-limited agricultural settings.

More recently, and in a manner closely aligned with our work, Benzaouia et al. [27] propose an intelligent IDSS that combines IoT-based environmental and soil sensing with a Mamdani-type fuzzy logic controller implemented directly on an ATmega2560 microcontroller. The system processes real-time inputs such as soil moisture, temperature, solar irradiance, and rainfall to dynamically determine optimal irrigation timing and duration. Meanwhile, the sensed and aggregated data are transmitted via LoRa communication to a cloud-based infrastructure for storage and visualization. The main objective is to improve water and energy efficiency in semi-arid agricultural settings. Field experiments conducted in a Moroccan apple orchard demonstrated that the fuzzy controller effectively reduced irrigation during periods of high evapotranspiration and adjusted watering durations in response to varying environmental conditions.

3. Fuzzy-Based Decision Support Systems

In this section, we present the two developed IDSSs based on fuzzy logic, which are later compared using real-world data. Fuzzy logic offers a powerful framework for reasoning under uncertainty, inspired by the way humans make decisions in the presence of imprecise or incomplete information [28]. Unlike classical binary logic, which imposes a strict true/false dichotomy, fuzzy logic allows variables to assume degrees of truth, enabling more nuanced rule-based decision-making and earning it the motto of “computing with words” [29]. For the particular case of irrigation management, fuzzy logic offers a natural way to encode agronomic expertise into decision support tools. Its linguistic rule structures allow domain experts to define irrigation strategies in intuitive terms (e.g., “if soil is dry and high temperature is expected, then irrigate generously”), while the underlying inference engine translates these qualitative insights into quantitative actions.

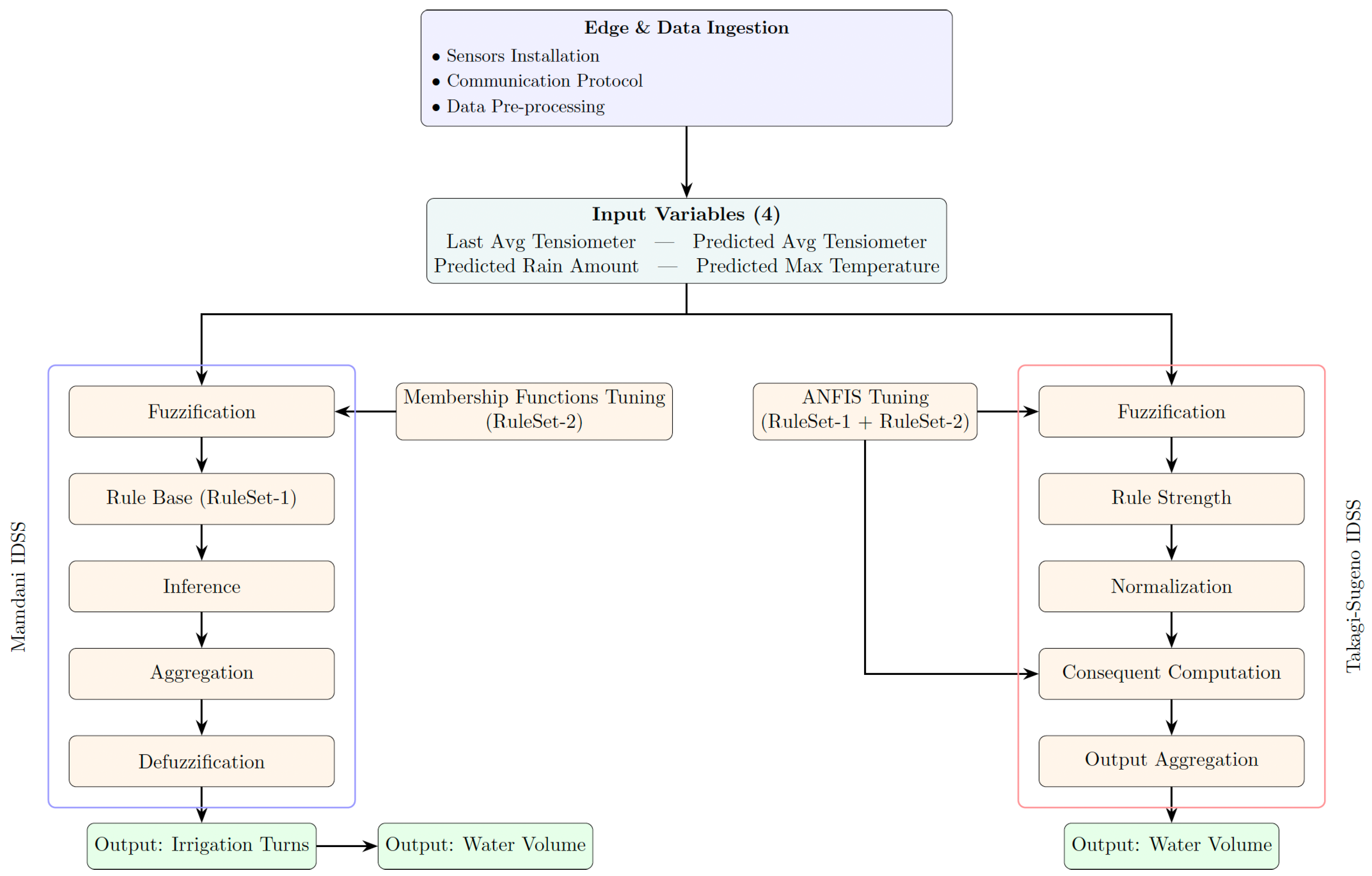

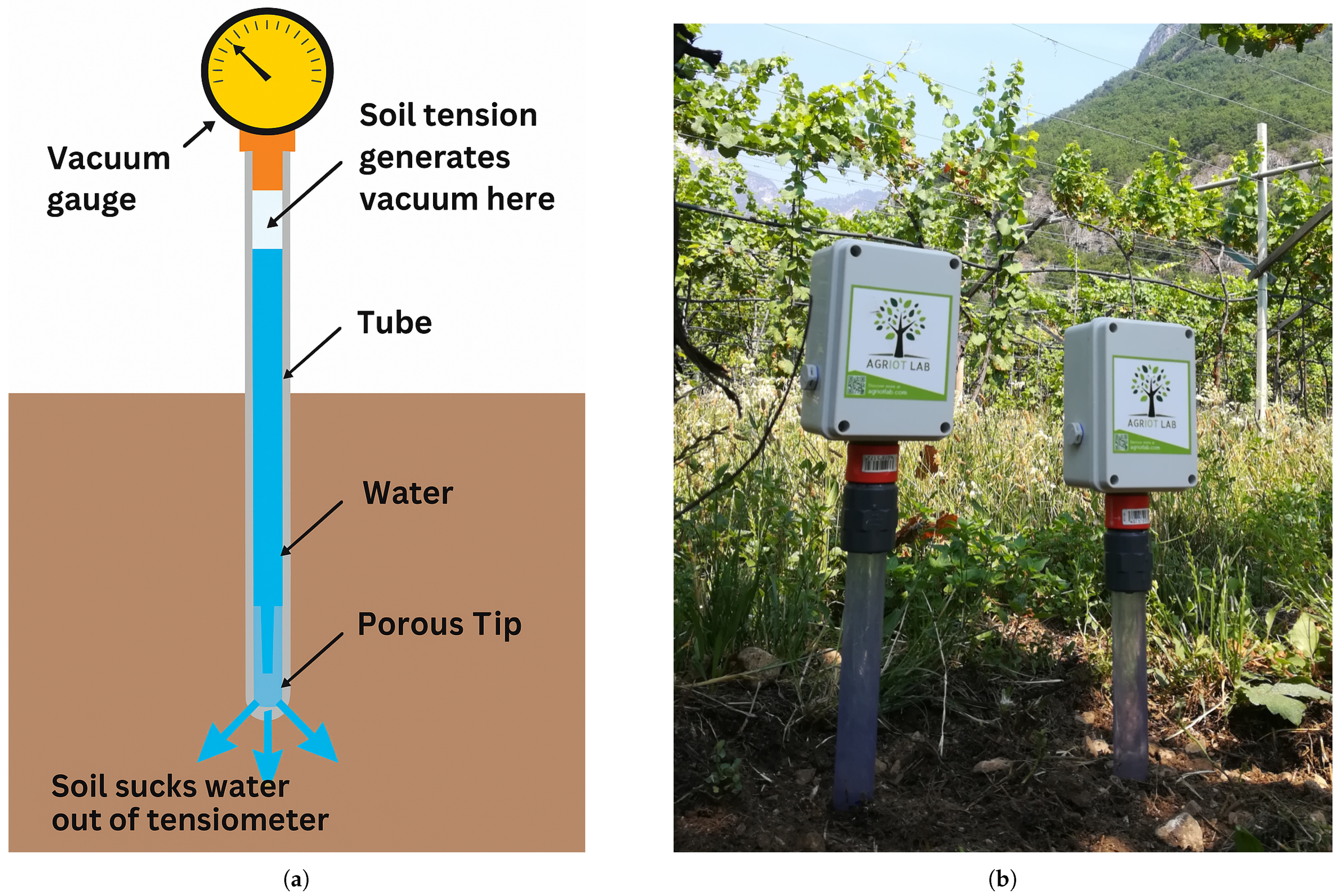

The two IDSSs differ in their fuzzy inference methods: the first uses a Mamdani-type system known for its straightforward rule-based logic, while the second employs an Adaptive Neuro-Fuzzy Inference System (ANFIS), which combines fuzzy logic with neural networks. Despite these differences, both systems share the same four input variables and produce a single output variable. Specifically, the input variables are:

Last Avg Tensiometer: the current day’s average tensiometer reading, representing the most recent soil water tension, which is directly related to the soil moisture level;

Predicted Avg Tensiometer: the predicted average tensiometer reading for the following day, generated by ultra-local Long Short-Term Memory (LSTM) machine learning models trained on historical data [12,13];

Predicted Rain Amount: the predicted cumulative rainfall over the next three days, obtained from a weather forecast service;

Predicted Max Temperature: the maximum predicted air temperature over the next three days, also obtained from a weather forecast service.

Regarding the output, this variable represents the recommended irrigation level. Depending on the irrigation system and the field layout (e.g., organized in rows or other configurations), it may carry different operational meanings. In this study, the output is defined as the duration of irrigation cycles relative to a reference vineyard row within a water sector, where each sector corresponds to a predefined and homogeneous area of the field as determined by agronomists.

The decision to employ a deep learning model rather than classical physically based soil water-balance models (e.g., FAO-56 [30] or AquaCrop [31]) is driven by data compatibility and operational practicality. While physically based models provide mechanistic insights, they require precise soil parameters and crop coefficients, which are often uncertain at the local scale. In contrast, the adopted data-driven approach leverages tensiometric time series to model soil water tension directly, capturing complex, non-linear, site-specific dynamics and facilitating deployment as new sensor data become available.

In the remainder of this section, we provide a detailed and formal description of the design and development of the two fuzzy systems analyzed and compared in this work.

3.1. Mamdani-Type Fuzzy IDSS

The first IDSS adopted in this study is the fuzzy inference model developed and validated for vineyard irrigation management in Northern Italy [11]. It addresses the critical need for sustainable water use and agronomic precision in a dynamic, weather-sensitive agricultural context.

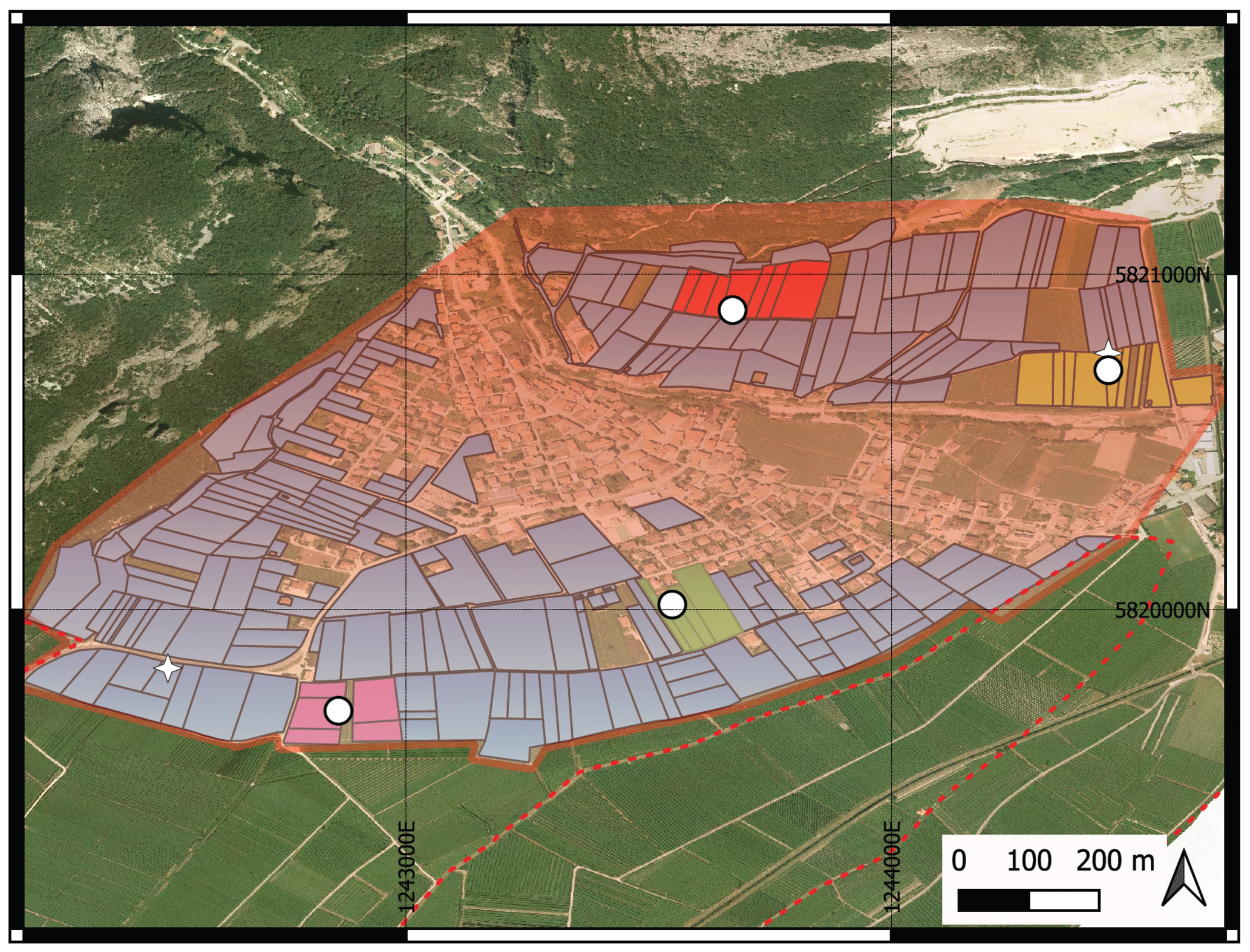

Formally, this IDSS is based on the classical Mamdani-type fuzzy system [32], which can be conceptually decomposed into five main components, schematically represented in the Mamdani branch of Figure 1 and briefly described below:

More specifically, each input variable is fuzzified into three linguistic terms (Low, Medium, and High), while the output variable is defined by four linguistic terms corresponding to the standard irrigation turns, namely No irrigation, Half-turn, Single-turn, and Double-turn. In operational terms, a Single-turn corresponds to a specific irrigation duration per vineyard row of the reference sector, which in our system represents the application of 650 L of water; the other irrigation turns are defined proportionally to this standard (i.e., 325 L for a Half-turn and 1,300 L for a Double-turn). For all variables, the linguistic terms at the extremes are modeled using trapezoidal membership functions, whereas the intermediate terms are modeled using triangular membership functions (see Table 1 for a summary of input variable domains and the fuzzy-set peaks used in the experiments).
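As a concrete illustration of fuzzification and inference, the following is a minimal pure-Python sketch of a Mamdani pipeline, not the implementation used in this work (which relies on Scikit-Fuzzy). It assumes only two of the four inputs, hypothetical breakpoints on a 0–600 mbar tension domain and a 0–50 mm rain domain, and five hypothetical rules rather than Ruleset-1. Extreme terms are trapezoidal shoulders and intermediate terms triangular, as described above; AND is the minimum, implication clips each consequent, aggregation takes the maximum, and centroid defuzzification is assumed.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: 0 outside [a, d], 1 on [b, c], linear ramps between.
    Using a == b (or c == d) gives a shoulder term for the extremes of a domain."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def triangle(x, a, b, c):
    """Triangular membership peaking at b (a trapezoid with a single-point top)."""
    return trapezoid(x, a, b, b, c)


# Hypothetical output terms on a universe of irrigation turns (0 to 2).
OUTPUT_TERMS = {
    "none": lambda y: trapezoid(y, 0.0, 0.0, 0.0, 0.5),
    "half": lambda y: triangle(y, 0.0, 0.5, 1.0),
    "single": lambda y: triangle(y, 0.5, 1.0, 2.0),
    "double": lambda y: trapezoid(y, 1.0, 2.0, 2.0, 2.0),
}


def mamdani_turns(tension_mbar, rain_mm, step=0.01):
    """Two-input Mamdani inference returning a crisp number of irrigation turns."""
    tension = min(max(tension_mbar, 0.0), 600.0)  # clamp to the hypothetical domain
    rain = min(max(rain_mm, 0.0), 50.0)
    # Fuzzification with hypothetical breakpoints (not the tuned values of Table 1).
    t = {"low": trapezoid(tension, 0, 0, 150, 300),
         "medium": triangle(tension, 150, 300, 450),
         "high": trapezoid(tension, 300, 450, 600, 600)}
    r = {"low": trapezoid(rain, 0, 0, 5, 15),
         "medium": triangle(rain, 5, 15, 25),
         "high": trapezoid(rain, 15, 25, 50, 50)}
    # Hypothetical rules: AND is the minimum of the antecedent degrees.
    rules = [
        (min(t["high"], r["low"]), "double"),
        (min(t["medium"], r["low"]), "single"),
        (min(t["medium"], r["medium"]), "half"),
        (t["low"], "none"),
        (r["high"], "none"),
    ]
    # Clip each consequent at its rule strength, aggregate with max,
    # and take the centroid over a discretized output universe.
    num = den = 0.0
    for i in range(int(round(2.0 / step)) + 1):
        y = i * step
        mu = max(min(s, OUTPUT_TERMS[term](y)) for s, term in rules)
        num += y * mu
        den += mu
    return num / den if den > 0 else 0.0
```

With these placeholder settings, `mamdani_turns(500, 0)` (dry soil, no rain expected) yields roughly 1.67 turns, whereas `mamdani_turns(100, 0)` yields about 0.16.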

Finally, the rule base of this IDSS comprises the same 21 original fuzzy rules described in [11], developed collaboratively with agronomists and irrigation managers to coherently represent various environmental scenarios and ensure comprehensive coverage of critical soil and meteorological conditions of this study area. In the following, this set of rules will be referred to as Ruleset-1. For illustrative purposes, one representative rule from Ruleset-1 is shown below:

3.2. Takagi-Sugeno Fuzzy IDSS

The second IDSS proposed in this paper is based on the Adaptive Neuro-Fuzzy Inference System (ANFIS). Formally, this system employs first-order Sugeno-type rules [33] and follows the original five-layer architecture introduced in [34]. In essence, ANFIS combines the transparent, rule-based reasoning of fuzzy logic with the learning capabilities of artificial neural networks. Instead of manually defining the rules and the parameters (e.g., the shapes) of the linguistic terms, the system automatically learns them from example data.

The same input and output variables defined at the beginning of this section are used here. However, ANFIS directly produces a crisp output, expressing the irrigation recommendation as a numerical value. In our case, this value corresponds to the number of liters of water to be applied per vineyard row.

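More in detail, the first-order Sugeno rule base comprises $K$ rules of the following form:

$$R_k:\ \text{IF } x_1 \text{ is } A_{k1} \text{ AND } x_2 \text{ is } A_{k2} \text{ AND } x_3 \text{ is } A_{k3} \text{ AND } x_4 \text{ is } A_{k4} \text{ THEN } f_k = \sum_{j=1}^{4} p_{kj}\, x_j + r_k, \qquad k = 1, \dots, K,$$

where $x_1, \dots, x_4$ are the four input variables defined at the beginning of this section, each fuzzy set $A_{kj}$ may take any (piecewise) differentiable shape (e.g., triangular, trapezoidal, Gaussian), and $p_{kj}$ and $r_k$ are linear coefficients optimized during training. The final output is produced by aggregating these local consequents via their normalized firing strengths.

The network comprises five layers, namely:

Layer 1-Fuzzification: Each crisp input $x_j$ is mapped to a set of membership values $\mu_{A_{kj}}(x_j)$ through parameterized membership functions. The shape parameters (e.g., centers, widths, slopes) are initialized heuristically and refined through learning.

Layer 2-Rule Strength: For each rule $k$, the firing strength $w_k$ is computed as the t-norm $T$ (typically the product or minimum) of the antecedent membership degrees:

$$w_k = T\big(\mu_{A_{k1}}(x_1),\ \mu_{A_{k2}}(x_2),\ \mu_{A_{k3}}(x_3),\ \mu_{A_{k4}}(x_4)\big).$$

Layer 3-Normalization: Each rule’s firing strength is normalized across all rules:

$$\bar{w}_k = \frac{w_k}{\sum_{l=1}^{K} w_l},$$

ensuring that $\sum_{k=1}^{K} \bar{w}_k = 1$.

Layer 4-Consequent Computation: The normalized strength $\bar{w}_k$ weights a local first-order polynomial function:

$$O_k = \bar{w}_k f_k = \bar{w}_k \Big(\sum_{j=1}^{4} p_{kj}\, x_j + r_k\Big),$$

where the coefficients $p_{kj}$ and $r_k$ are learned jointly with the membership function parameters.

Layer 5-Output Aggregation: The final crisp output is the weighted sum of the rule outputs:

$$\hat{y} = \sum_{k=1}^{K} O_k = \sum_{k=1}^{K} \bar{w}_k f_k.$$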
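The five layers can be traced in a single forward pass. The sketch below assumes Gaussian membership functions, the product t-norm, and a grid partition in which every combination of input terms forms one rule (with four inputs and three terms each, a full grid would give $3^4 = 81$ rules); these choices, and all function and argument names, are hypothetical and do not reflect the S-ANFIS API.

```python
import math
from itertools import product


def gaussian(x, center, sigma):
    return math.exp(-0.5 * ((x - center) / sigma) ** 2)


def anfis_forward(x, mf_params, rules, p, r):
    """One forward pass of a first-order Sugeno ANFIS.

    x          -- list of n crisp inputs
    mf_params  -- mf_params[j][m] = (center, sigma) of term m for input j
    rules      -- rules[k] = tuple with one term index per input (antecedent of rule k)
    p, r       -- p[k][j] and r[k]: consequent coefficients of rule k
    """
    # Layer 1: membership degree of each input in each of its terms.
    mu = [[gaussian(xj, c, s) for c, s in terms] for xj, terms in zip(x, mf_params)]
    # Layer 2: firing strengths with the product t-norm.
    w = [math.prod(mu[j][m] for j, m in enumerate(rule)) for rule in rules]
    # Layer 3: normalization, so the normalized strengths sum to one.
    total = sum(w)
    w_bar = [wk / total for wk in w]
    # Layer 4: first-order consequents f_k = sum_j p_kj * x_j + r_k.
    f = [sum(pkj * xj for pkj, xj in zip(pk, x)) + rk for pk, rk in zip(p, r)]
    # Layer 5: crisp output as the weighted sum of the rule outputs.
    return sum(wb * fk for wb, fk in zip(w_bar, f))


# Toy setup: 2 standardized inputs with 2 terms each -> grid partition of 4 rules.
mf_params = [[(-1.0, 1.0), (1.0, 1.0)], [(-1.0, 1.0), (1.0, 1.0)]]
rules = list(product(range(2), repeat=2))
p = [[0.5, -0.2], [0.1, 0.3], [0.0, 0.0], [1.0, 1.0]]
r = [0.0, 0.1, -0.3, 0.2]
y_hat = anfis_forward([0.2, -0.4], mf_params, rules, p, r)
```

Because the normalized strengths sum to one, $\hat{y}$ is always a convex combination of the rule consequents $f_k$. With standardized inputs the Gaussian degrees stay far from underflow, but extreme out-of-range inputs could drive the Layer 3 denominator to zero.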

With ANFIS, training is performed end-to-end using gradient-based optimization (e.g., backpropagation to minimize the mean squared error), allowing the model to adjust the fuzzy partitions and the Sugeno consequents simultaneously. This joint optimization enables the inference system to capture complex, non-linear relationships among measured and predicted soil water tension, weather forecasts, and crop water demand, while maintaining a transparent rule-based structure.

3.3. IDSS Tuning Methodology

A key step in [11] for designing the Mamdani-type IDSS was the use of Bayesian optimization to fine-tune the membership function parameters (e.g., the shapes and centers of the linguistic terms). To this end, the authors created a validation dataset, hereafter referred to as Ruleset-2, based on expert feedback collected through structured surveys that simulated realistic irrigation scenarios. Specifically, in each survey, an expert was presented with a set of numerical values for the four input variables, representing a possible field status, and was asked to indicate the corresponding number of irrigation turns to be applied. During the optimization process, each candidate fuzzy system was evaluated on Ruleset-2 by comparing its irrigation recommendations with those provided by the experts. The objective was to minimize the mean squared error (MSE), defined as:

$$\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left(y_i - \hat{y}_i\right)^2, \tag{1}$$

where $y_i$ is the expert’s recommendation and $\hat{y}_i$ is the corresponding output generated by the fuzzy system for the $i$-th of the $N$ validation samples.

In this study, to ensure an accurate and fair comparison between the two IDSSs, both were evaluated under identical conditions. For the ANFIS model, this required building a dedicated training dataset by combining Ruleset-1 and Ruleset-2. Since ANFIS operates on numerical input–output pairs rather than purely linguistic descriptors, each fuzzy rule from Ruleset-1 was systematically defuzzified into one or more crisp samples. Specifically, for every antecedent term, we selected between one and three numerical values corresponding to the points where its membership function reached its maximum before Bayesian tuning. When a rule antecedent did not constrain a particular variable (i.e., the linguistic category was unspecified), that variable was instantiated by generating one representative numerical value for each of its defined linguistic terms. This expansion converted each original fuzzy rule into multiple concrete training examples, following the approach described in [35], where membership-function peaks are sampled to generate numerical training data from a fuzzy rule base. The resulting dataset was then augmented with the expert-derived samples from Ruleset-2.
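The rule-to-sample expansion can be sketched with a Cartesian product over the candidate values of each variable. The peak values, variable keys, and the choice of the middle listed peak as the “representative” value for unconstrained variables are hypothetical; liters per turn follow the 650 L Single-turn standard with proportional Half- and Double-turns.

```python
from itertools import product

# Hypothetical peak points per term (values where the membership equals 1).
# Plateau (trapezoidal) terms list several points; triangular terms list one.
PEAKS = {
    "last_tens": {"low": [0, 75, 150], "medium": [300], "high": [450, 525, 600]},
    "pred_tens": {"low": [0, 75, 150], "medium": [300], "high": [450, 525, 600]},
    "rain": {"low": [0, 5], "medium": [15], "high": [25, 50]},
    "max_temp": {"low": [10, 20], "medium": [27], "high": [34, 40]},
}
VARIABLES = list(PEAKS)  # fixed input order of the training vectors
# Liters per row: Single-turn = 650 L, other turns proportional.
TURN_LITERS = {"none": 0, "half": 325, "single": 650, "double": 1300}


def expand_rule(antecedent, consequent):
    """Turn one linguistic rule into crisp (inputs, liters) training pairs.

    antecedent maps a variable name to a term; unconstrained variables are omitted.
    """
    choices = []
    for var in VARIABLES:
        term = antecedent.get(var)
        if term is None:
            # Unconstrained: one representative value per linguistic term
            # (here, hypothetically, the middle listed peak of each term).
            choices.append([vals[len(vals) // 2] for vals in PEAKS[var].values()])
        else:
            choices.append(PEAKS[var][term])
    target = TURN_LITERS[consequent]
    return [(list(combo), target) for combo in product(*choices)]


samples = expand_rule({"last_tens": "high", "rain": "low"}, "double")
```

For the hypothetical rule above (“IF Last Avg Tensiometer is High AND Predicted Rain Amount is Low THEN Double-turn”), the two unconstrained variables contribute three values each, so the rule expands into 3 × 3 × 2 × 3 = 54 crisp samples, all labeled 1,300 L.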

Finally, to determine the optimal configuration of the ANFIS-based IDSS, a cross-validation procedure is employed to tune its hyperparameters. The search space includes the type of membership functions used to model the linguistic terms, the learning rate of the training algorithm, and the number of training epochs. In each fold, model performance is evaluated using the MSE defined in Equation (1). The average MSE across all validation folds is used to select the best hyperparameter combination. Once cross-validation is complete, the model is retrained on the entire dataset with the selected hyperparameters, thereby leveraging all available data to refine the fuzzy partitions and decision surfaces.
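A minimal sketch of the k-fold grid search followed by retraining, in plain Python. Here `fit` stands for any training routine that returns a prediction function (for instance, a hypothetical `fit_anfis(X, y, mf_type=..., lr=..., epochs=...)` wrapper around the ANFIS trainer); assigning folds by a single seeded shuffle is an assumption, not necessarily the scheme used in this work.

```python
import itertools
import random


def mse(y_true, y_pred):
    """Equation (1): mean of the squared differences."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def kfold_indices(n, k, seed=0):
    """Shuffle sample indices once and deal them into k near-equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def grid_search_cv(X, y, grid, fit, k=5, seed=0):
    """Exhaustive grid search scored by the average validation MSE over k folds.

    grid -- dict mapping a hyperparameter name to its candidate values
    fit  -- fit(X_train, y_train, **params) returning a predict(x) callable
    """
    folds = kfold_indices(len(X), k, seed)
    names = list(grid)
    best_params, best_score = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        scores = []
        for val_idx in folds:
            held_out = set(val_idx)
            train_idx = [i for i in range(len(X)) if i not in held_out]
            model = fit([X[i] for i in train_idx], [y[i] for i in train_idx], **params)
            scores.append(mse([y[i] for i in val_idx], [model(X[i]) for i in val_idx]))
        avg = sum(scores) / len(scores)
        if avg < best_score:
            best_params, best_score = params, avg
    # Retrain once on the full dataset with the selected configuration.
    return best_params, best_score, fit(X, y, **best_params)
```

A grid such as `{"mf_type": [...], "lr": [...], "epochs": [...]}` is then explored exhaustively, and the configuration with the lowest fold-averaged MSE is retrained on all samples.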

Figure 1 provides a graphical overview of the entire workflow, illustrating both the Mamdani and ANFIS inference pipelines.

6. Results and Discussion

The simulations and analyses were fully implemented in Python (version 3.11). Scikit-Fuzzy was used for Mamdani-based fuzzy logic modeling, while the S-ANFIS library, built on PyTorch (version 2.9.1), was employed to implement the Takagi–Sugeno ANFIS model.

The optimal hyperparameter configuration of the ANFIS-based IDSS was identified by a grid search, in which each candidate was evaluated using a k-fold cross-validation procedure (with $k = 5$). Before the ANFIS learning phase, all input and output variables were standardized to zero mean and unit variance. The grid search explored different membership function types, learning rate values, and numbers of training epochs, as summarized in Table 2. Each candidate configuration was evaluated by averaging the mean squared error over the five folds.
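Because the MSE values in this section are computed on standardized outputs, it helps to recall how they relate to liters per row. A minimal sketch, assuming population statistics (as in common z-score scalers) computed on the data the model is trained on; the helper names are hypothetical.

```python
import math
from statistics import mean, pstdev


def fit_standardizer(values):
    """Return (to_z, from_z, sigma) for zero-mean, unit-variance scaling."""
    mu, sigma = mean(values), pstdev(values)
    return (lambda v: (v - mu) / sigma), (lambda z: z * sigma + mu), sigma


def mse_in_original_units(mse_std, sigma_y):
    """A standardized error e equals e * sigma_y liters, so the MSE scales by sigma_y**2."""
    return mse_std * sigma_y ** 2


def rmse_in_original_units(mse_std, sigma_y):
    return math.sqrt(mse_in_original_units(mse_std, sigma_y))
```

For example, with a purely hypothetical output standard deviation of 400 L/row, a standardized MSE of 0.118 would correspond to an RMSE of about $\sqrt{0.118} \times 400 \approx 137$ L/row; the actual figure depends on the spread of the training targets.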

The best performing configuration used the hybrid membership function shape (two sigmoidal flanks with a central Gaussian peak), a learning rate of

, and 200 epochs. In this setting, the cross-validation process produced a standardized average MSE of 0.118 (see Equation (1)). These results suggest that hybrid fuzzy partitions better reflect the natural behavior of the input variables: the sigmoidal parts capture gradual changes that stabilize at the extremes, while the Gaussian peak provides precise focus around key central values. Using these hyperparameters, the model was finally retrained on the full dataset to refine the fuzzy partitions and decision surfaces.
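One possible reading of the hybrid shape is that the Low and High terms of each variable are sigmoidal flanks saturating at the extremes, while the Medium term is a Gaussian peak. The sketch below implements that reading; the parameterization (centers, slopes, width) and the function names are assumptions and are not taken from the S-ANFIS library.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def hybrid_terms(x, low_center, low_slope, mid_center, mid_sigma, high_center, high_slope):
    """Membership of x in Low (falling sigmoid), Medium (Gaussian), High (rising sigmoid)."""
    return {
        "low": sigmoid(-low_slope * (x - low_center)),  # tends to 1 at the lower extreme
        "medium": math.exp(-0.5 * ((x - mid_center) / mid_sigma) ** 2),
        "high": sigmoid(high_slope * (x - high_center)),  # tends to 1 at the upper extreme
    }
```

With hypothetical parameters echoing the tensiometer thresholds discussed for Figure 8 (Low centered near 250 mbar, High near 400 mbar), `hybrid_terms(325, 250, 0.05, 325, 50, 400, 0.05)` gives a Medium degree of 1 and Low and High degrees of about 0.02.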

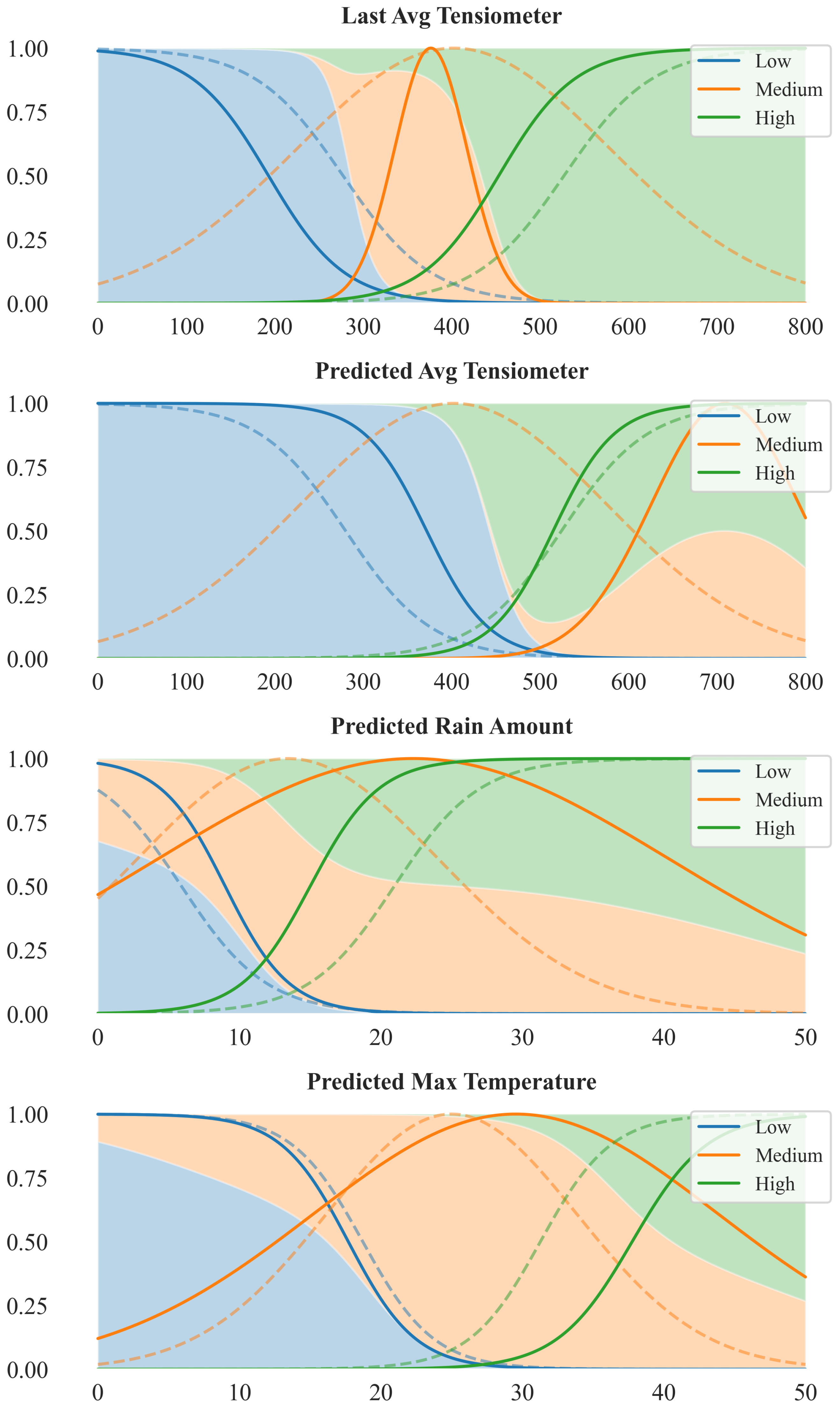

Figure 8 illustrates the changes from the initially defined membership functions (based on domain-expert knowledge and aligned with [11]) to those obtained after training, shown as dashed and solid lines, respectively.

After training, the membership functions of the Last Avg Tensiometer show a pronounced refinement: the Low term becomes sharply confined below approximately 250 millibars (mbar), and the High term rises rapidly just above 400 mbar, drastically reducing the transition zone. This suggests that the model has learned to strongly emphasize recent soil water tension measurements in identifying water stress thresholds. In contrast, the Predicted Avg Tensiometer variable maintains broader and smoother membership transitions. Its three linguistic terms remain largely overlapping, with the Medium term retaining a relatively flat and wide distribution, suggesting a more diffuse contribution of the forecasted tension values to the model’s decision boundaries. For the Predicted Rain Amount variable, the membership functions contract around the moderate precipitation range (roughly 10–25 mm). Both the Low and High terms pull away from the extremes, indicating that central rainfall values are the most informative for the model’s output. Regarding the Predicted Max Temperature variable, the optimized membership functions remain largely similar to their initial configuration. While minor sharpening occurs, particularly around the Medium region, the overall shape and boundaries of the curves are preserved, suggesting that the initial partitioning was already well aligned with the structure of the data in this dimension.

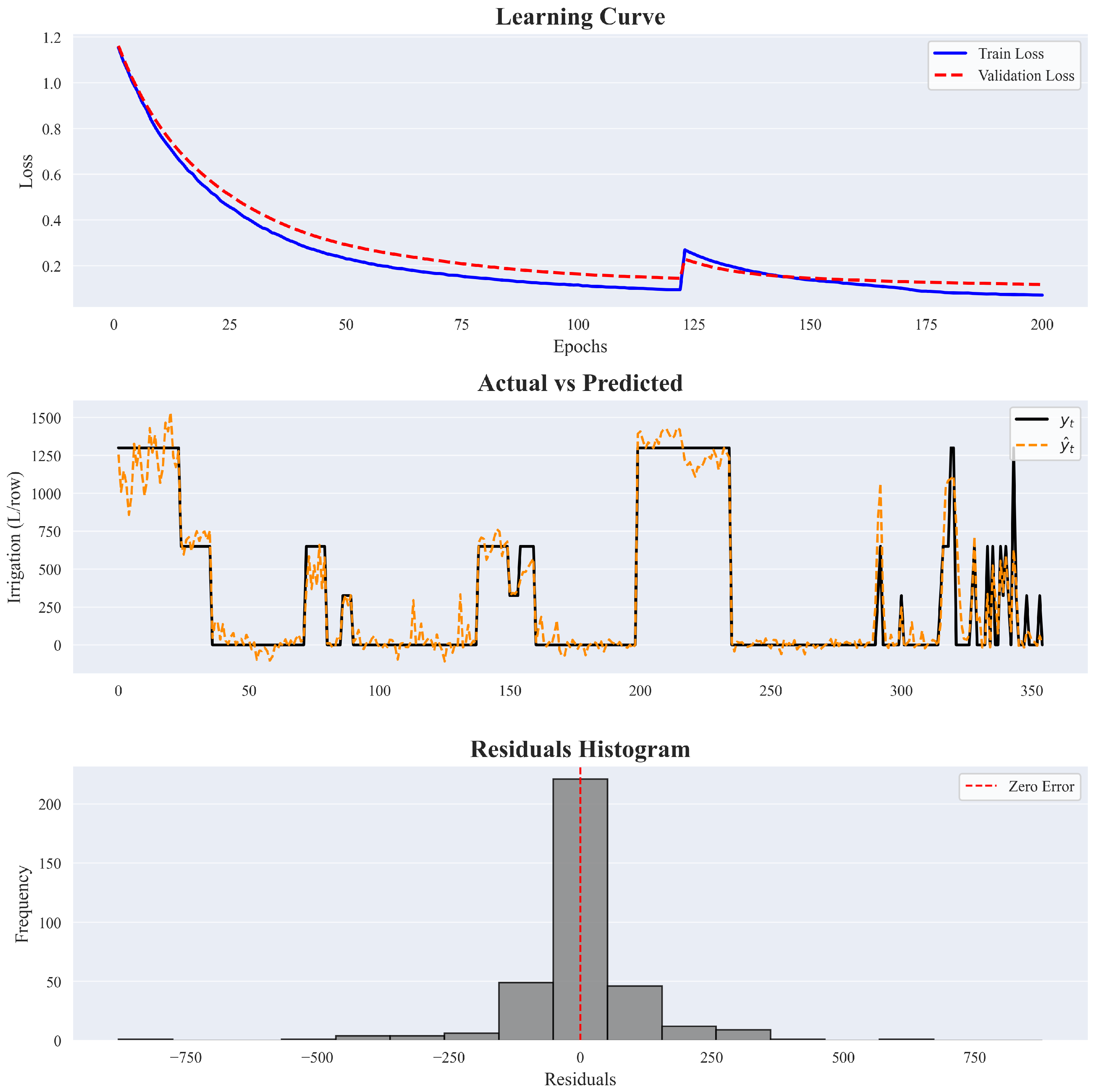

Figure 9 provides an overview of the model’s training process along with an evaluation of its fit accuracy. The first subplot illustrates the evolution of the training and validation errors, expressed as the mean standardized MSE across the five cross-validation folds. Both learning curves follow a generally decreasing trend. However, a noticeable spike occurs around the 125th epoch, where both training and validation losses increase abruptly. This likely reflects a temporary instability during training, possibly due to an unfavorable update of the rule parameters; since the training and validation losses rise together, the spike is more consistent with an optimization overshoot than with overfitting. The model quickly recovers, and both error curves resume their downward trajectory, ultimately reaching a stable minimum in the final epochs. Moreover, the training and validation curves remain consistently close throughout the process, suggesting that the model does not suffer from significant overfitting.

The second subplot shows the relationship between observed and predicted irrigation volumes after the ANFIS model was retrained on the full dataset, represented by solid black and dashed orange lines, respectively. The outputs shown are continuous values; in operational deployment, they are mapped to the nearest valid discrete irrigation volume. While this transformation is not depicted in Figure 9, it is a critical step for integration into the decision-making process. As shown in the plot, the model closely tracks the target irrigation volumes for both low and high water demands, indicating a strong fit to the data.
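The mapping from continuous ANFIS outputs to discrete irrigation volumes can be sketched as a nearest-value snap. The set of valid volumes below assumes the four standard turns (0, 325, 650, and 1,300 L/row, derived from the 650 L Single-turn standard), and breaking ties toward the smaller volume is a hypothetical, water-saving choice.

```python
# Valid volumes in liters per row: No irrigation, Half-, Single-, Double-turn
# (Single-turn = 650 L; the other turns are proportional).
VALID_VOLUMES_L = (0.0, 325.0, 650.0, 1300.0)


def snap_to_valid_volume(predicted_l, valid=VALID_VOLUMES_L):
    """Map a continuous ANFIS output to the nearest valid discrete volume.

    Ties resolve to the smaller volume because min() returns the first minimum
    and the volumes are listed in ascending order.
    """
    return min(valid, key=lambda v: abs(v - predicted_l))
```

Negative outputs snap to no irrigation and outputs beyond a Double-turn are capped at 1,300 L, so the snapped value is always an operationally meaningful irrigation turn.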

The third subplot shows the histogram of residuals, where each residual is defined as the observed minus the predicted irrigation volume. The distribution is centered around zero, with a high concentration of small errors, indicating accurate model predictions in most cases. The symmetry of the residual distribution suggests that the model does not systematically overestimate or underestimate the suggested irrigation volumes. Considering that a single irrigation turn corresponds to 650 L/row, the absolute prediction error exceeds this value only twice, once with a positive residual and once with a negative residual.

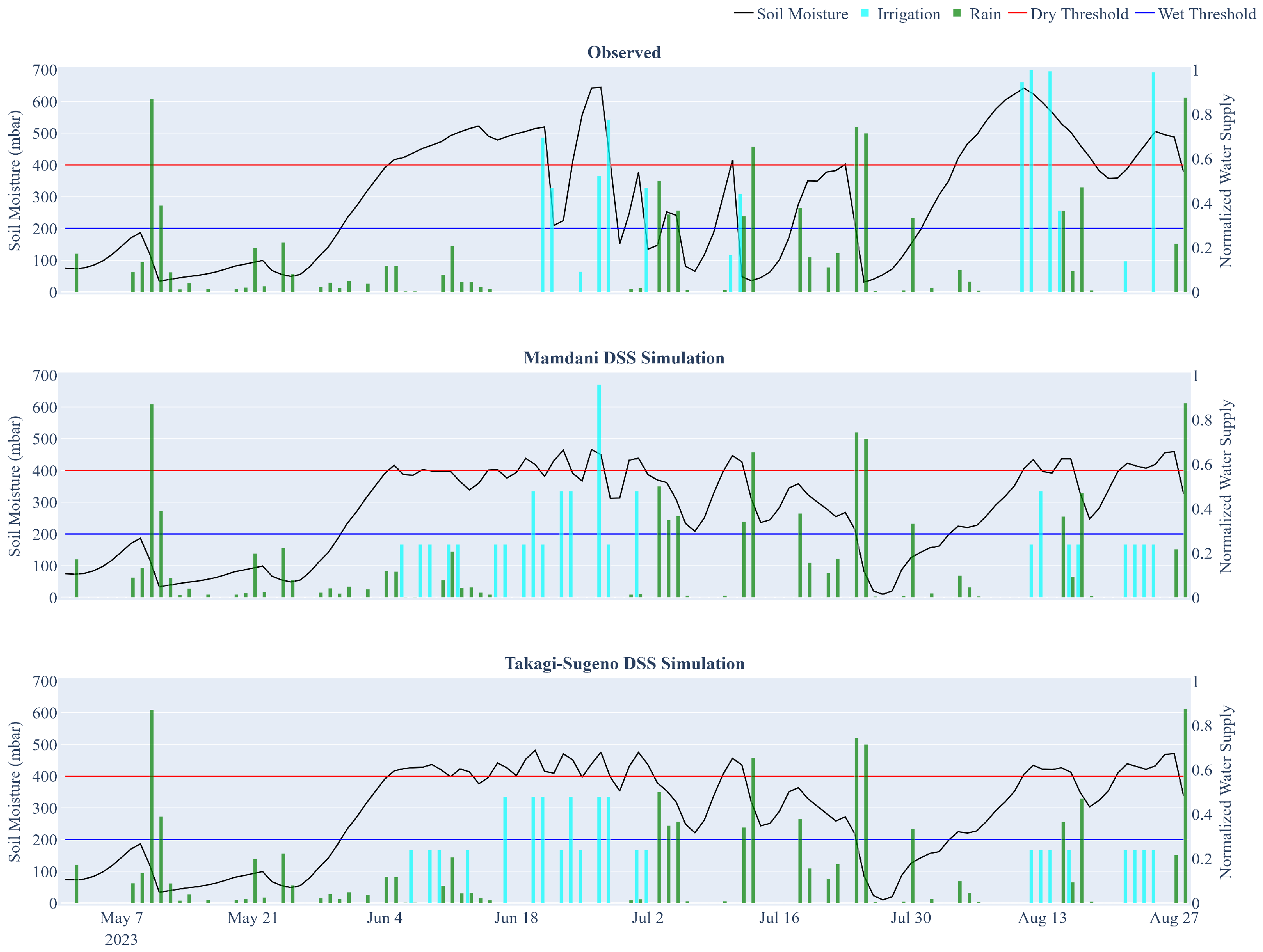

Figure 10 illustrates an example comparison of irrigation scheduling for a single water sector in the study area during the 2023 season, contrasting the actual farmer-managed strategy with simulations produced by the Mamdani-type IDSS and the Takagi–Sugeno ANFIS IDSS (IDSS$_\text{M}$ and IDSS$_\text{TS}$, respectively).

Both IDSSs improve upon the observed irrigation schedule by keeping soil water tension more consistently within the target range shown in Figure 10 (between the blue and red lines), which represents the optimal moisture window for crop health. In the observed strategy (top subplot), soil tension frequently drifts outside this range, with several irrigation events occurring shortly before or after substantial rainfall (cyan and green bars, respectively). In contrast, the IDSS simulations distribute water more effectively over time, reduce overlaps with rainfall, and produce generally more stable tensiometer readings. Notably, IDSS$_\text{TS}$ adopts a more conservative, water-saving approach than IDSS$_\text{M}$: it tends to delay irrigation events and apply slightly lower water volumes, at the cost of marginally higher tensiometer readings (i.e., drier soil).

The same analysis was conducted for four water sectors in the study area, with the results summarized in Table 3. On average, the observed strategy applied approximately 12,700 L of water per row, compared to 6,175 L for IDSS$_\text{M}$ and 5,444 L for IDSS$_\text{TS}$, corresponding to water savings of 51.25% and 57.03%, respectively.

As shown in Table 3, despite the reduction in water use, both IDSSs maintained effective moisture control. The number of days exceeding the critical dryness threshold decreased, on average, from 27.8 under observed management to 15 with IDSS$_\text{M}$ and 23 with IDSS$_\text{TS}$. As expected, the average tensiometer readings increased moderately, from 237.3 mbar to 244 mbar for IDSS$_\text{M}$ and 250.3 mbar for IDSS$_\text{TS}$, confirming more efficient water use while keeping soil tension within agronomic thresholds.

Across all sectors, both decision support strategies consistently outperformed the observed strategy in terms of water use efficiency, with IDSS$_\text{TS}$ systematically using less water than the Mamdani-based variant. Interestingly, sector-level patterns reveal the adaptive behaviors of the controllers. In Sector 2, the second most water-intensive sector under observed management (11,486 L of water used), both IDSS variants more than halved the water volume (5,200 and 4,875 L for IDSS$_\text{M}$ and IDSS$_\text{TS}$, respectively), critical dry days dropped markedly, and the average tensiometer readings remained near 250 mbar in all cases. In contrast, in Sector 4, the sector with the highest water stress, IDSS$_\text{TS}$ applied 6,825 L (versus 8,450 L for IDSS$_\text{M}$) but incurred 33 critical days, exceeding even the observed strategy. Overall, these results indicate that both IDSSs produce robust and adaptive irrigation strategies: IDSS$_\text{M}$ achieves stronger dryness control at a moderately higher water cost, while IDSS$_\text{TS}$ maximizes volume reduction at the expense of more stress days.
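As a quick check of the Sector 2 figures, relative savings can be computed as $(V_\text{obs} - V_\text{IDSS}) / V_\text{obs}$, assuming savings are expressed relative to the observed volume:

```python
def percent_savings(observed_liters, idss_liters):
    """Water saved by an IDSS, as a percentage of the observed (farmer-managed) volume."""
    return 100.0 * (observed_liters - idss_liters) / observed_liters


# Sector 2 totals in L/row, as reported in the text.
sector2 = {"observed": 11_486, "IDSS_M": 5_200, "IDSS_TS": 4_875}
for name in ("IDSS_M", "IDSS_TS"):
    print(name, round(percent_savings(sector2["observed"], sector2[name]), 1))
# prints "IDSS_M 54.7" and then "IDSS_TS 57.6"
```

Both reductions exceed 50%, i.e., each variant uses less than half of the observed Sector 2 volume.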

To assess the statistical robustness of the differences observed between the decision systems, a stratified block bootstrap procedure described in

Section 5.2 was implemented. The analysis was based on soil moisture tensiometer data from Sector 4 (i.e., the one depicted in

Figure 10), which was selected because it is considered the most stable and responsive to field variations among all monitored sectors. A total of 1,000 simulations were generated through stratified resampling of the original 2023 weather series. Each trajectory consisted of 123 days, divided into

temporal strata corresponding to the four summer months, and segmented into overlapping blocks of

days to capture short-term autocorrelation in weather patterns. The resulting

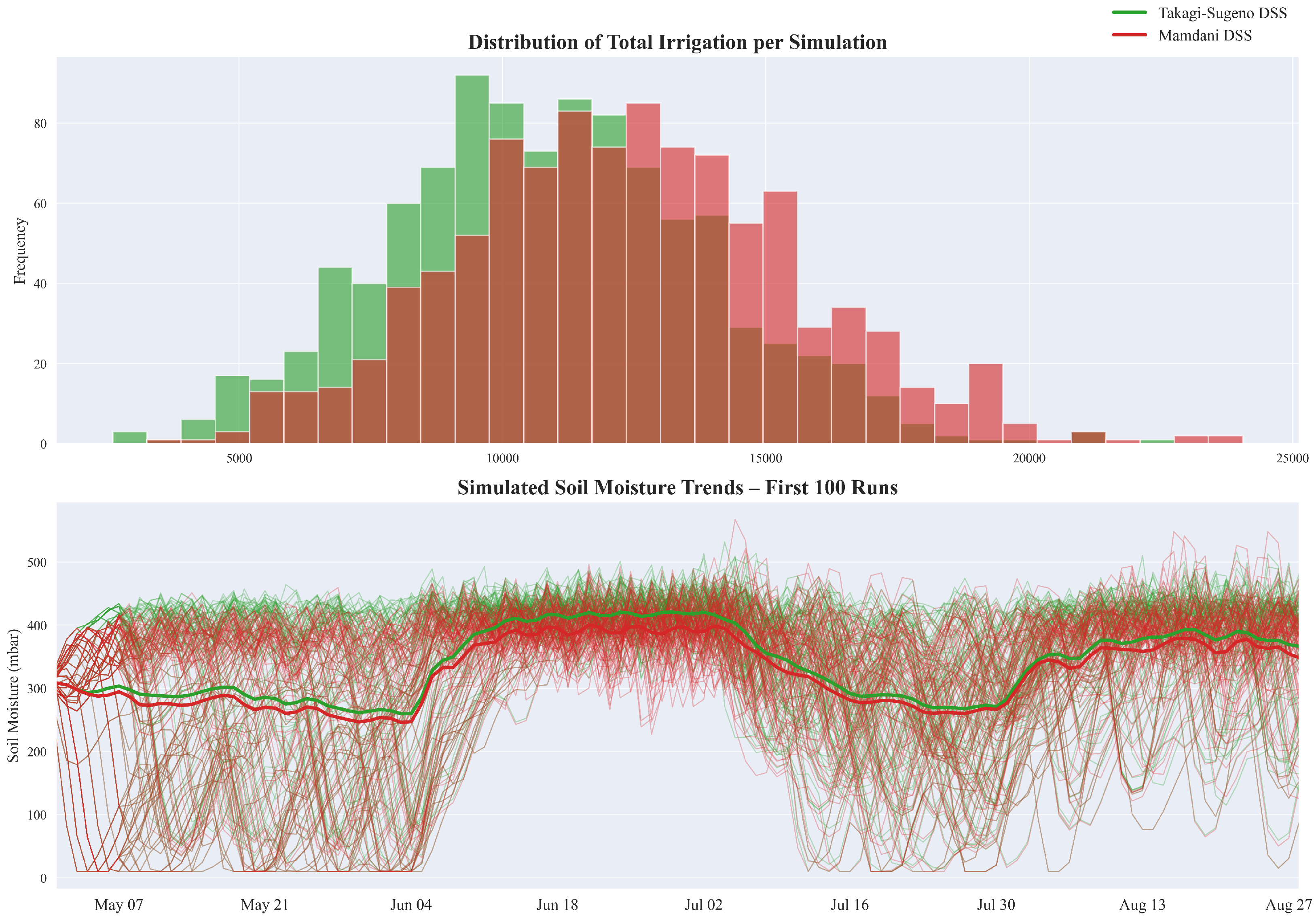

blocks (plus four remaining days) were sampled with replacement within each stratum and concatenated chronologically to preserve realistic temporal dependencies.
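The resampling scheme can be sketched as a moving-block bootstrap applied separately within each stratum. The block length, the use of all overlapping blocks as candidates, and trimming the last block to the stratum length (one way of handling leftover days) are assumptions; the exact choices are those of Section 5.2.

```python
import random


def moving_blocks(series, block_len):
    """All overlapping blocks of consecutive days within one stratum."""
    return [series[i:i + block_len] for i in range(len(series) - block_len + 1)]


def stratified_block_bootstrap(strata, block_len, rng):
    """Build one synthetic season from chronologically ordered strata.

    strata    -- list of daily series (e.g., one per month), each >= block_len days
    block_len -- number of consecutive days per block
    rng       -- a random.Random instance, for reproducibility
    """
    sample = []
    for series in strata:
        blocks = moving_blocks(series, block_len)
        resampled = []
        while len(resampled) < len(series):
            resampled.extend(rng.choice(blocks))  # draw blocks with replacement
        sample.extend(resampled[:len(series)])  # trim to preserve the stratum length
    return sample


# Illustrative strata of 30, 31, 31, and 31 days (123 days in total).
strata = [list(range(0, 30)), list(range(100, 131)),
          list(range(200, 231)), list(range(300, 331))]
rng = random.Random(42)
seasons = [stratified_block_bootstrap(strata, 7, rng) for _ in range(1000)]
```

In practice each list element would be a daily weather record rather than an integer; days never leave their own stratum, so seasonal structure is preserved while short runs of consecutive days keep their autocorrelation.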

Figure 11 displays the bootstrap distributions of key performance metrics. The top plot shows the total irrigation volume per bootstrap sample, revealing that IDSS$_\text{TS}$ consistently applies less water than IDSS$_\text{M}$. The two distributions are clearly separated, with IDSS$_\text{TS}$ shifted to the left, indicating a more conservative irrigation behavior under the same weather uncertainty. The plot below shows the corresponding distribution of the average soil water tension. In this case, IDSS$_\text{M}$ tends to maintain lower tensiometric values than IDSS$_\text{TS}$, which implies slightly wetter soil conditions. Although both controllers operate within agronomic thresholds, the two distributions overlap more here than for irrigation volume.

To statistically validate these differences, one-sided pairwise bootstrap tests were performed at a 5% significance level. Table 4 reports the corresponding means, standard deviations, 95% confidence intervals, and p-values for the hypothesis of differences in average values. A statistically significant result supports the alternative hypothesis that the two IDSSs differ, in the tested direction, on the respective metric.
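The summary statistics and one-sided test can be sketched as follows, assuming that both controllers are simulated on the same bootstrap trajectories (so samples are paired) and that the p-value is the share of paired differences that do not favor the alternative. This is one common bootstrap formulation, not necessarily the exact statistic of Section 5.2; confidence intervals use linearly interpolated percentiles.

```python
from statistics import mean, stdev


def percentile(sorted_vals, q):
    """Percentile with linear interpolation between closest ranks (q in [0, 1])."""
    pos = q * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def summarize(values):
    """Mean, sample standard deviation, and 95% percentile interval of a bootstrap metric."""
    s = sorted(values)
    return mean(values), stdev(values), (percentile(s, 0.025), percentile(s, 0.975))


def one_sided_paired_pvalue(a, b):
    """Share of paired bootstrap samples with a >= b, i.e., against H1: a tends to be below b."""
    diffs = [x - y for x, y in zip(a, b)]
    return sum(d >= 0 for d in diffs) / len(diffs)
```

For total irrigation volume, `a` would hold the per-trajectory totals of IDSS$_\text{TS}$ and `b` those of IDSS$_\text{M}$; for critical days or average tension, the roles are swapped so that the alternative states that IDSS$_\text{M}$ yields the lower value.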

In terms of total irrigation volume, IDSS$_\text{TS}$ applied substantially less water than IDSS$_\text{M}$, with a mean of 10,801.7 L/row compared to 12,349 L/row. The 95% confidence intervals for the two systems do not completely overlap, and the associated one-sided p-value of 0.001 indicates a statistically significant advantage for IDSS$_\text{TS}$ in minimizing water use. However, IDSS$_\text{M}$ shows better performance in reducing both the number of critical days (27.6 versus 46) and the average soil tension (327.8 mbar versus 342.1 mbar), indicating more favorable soil moisture conditions compared to IDSS$_\text{TS}$. In both cases, the differences were supported by highly significant p-values.

These findings highlight a clear trade-off between the two decision support systems. IDSS$_\text{TS}$ shows superior performance in minimizing water consumption, while IDSS$_\text{M}$ is more effective in maintaining favorable soil moisture conditions. The bootstrap-based analysis provides robust support for these conclusions by accounting for variability in weather-driven environmental conditions and confirming the operational distinctions between the two approaches.

7. Conclusions

This work compared two fuzzy-logic–based IDSSs for vineyard irrigation management, namely a Mamdani-type controller with expert-defined rules and a Takagi–Sugeno ANFIS trained on ultra-local data. Both were defined consistently and tested within a unified evaluation framework that combines counterfactual simulation and a stratified block bootstrap. Both systems integrate soil water tension sensing and short-term forecasts and, in counterfactual simulations driven by real field data, reduce water use while keeping soil moisture within agronomic bounds.

Across four water sectors of a real agricultural consortium, the analysis reveals a clear trade-off: the ANFIS-based IDSS achieved greater water savings, whereas the Mamdani system better mitigated plant stress, reducing critical dry days and maintaining a lower average tensiometer value (i.e., wetter soil). These complementary strengths were observed consistently in both the campaign-level comparison and the bootstrap-based statistical analysis.

From a numerical standpoint, the ANFIS strategy reduced irrigation by around 57% on average compared to the baseline, and the bootstrap analysis confirmed that it uses significantly less water than the Mamdani controller. In practical terms, if a slight increase in stress days is acceptable (as may be the case for a resilient crop such as grapevine), the ANFIS controller is preferable. Conversely, for crops that tolerate water stress less well, the Mamdani controller may be the safer choice.

From an operational perspective, this translates into a practical selection rule: when water scarcity or pumping costs are the dominant constraints, the ANFIS controller offers the greatest benefits; when minimizing crop stress and ensuring tighter moisture control is the highest priority, the Mamdani controller holds the advantage. Since both approaches are interpretable and already integrated with the IrriTre cloud platform, they can be deployed under different water management policies, or even combined in policy-driven ensembles.

Building upon the site-specific models developed in this study, future research efforts should also concentrate on enhancing their generalizability and transferability across diverse viticultural environments. Since the current models were trained exclusively under specific local conditions (e.g., local soil properties, climate, and irrigation management), a critical research direction is expanding the existing training set by systematically collecting and integrating local data from a broader range of geographic areas and management regimes. The ultimate objective is to develop robust, generalizable models capable of providing reliable predictions and effective decision support across a significantly broader range of contexts, thereby minimizing the need for extensive site-specific recalibration.