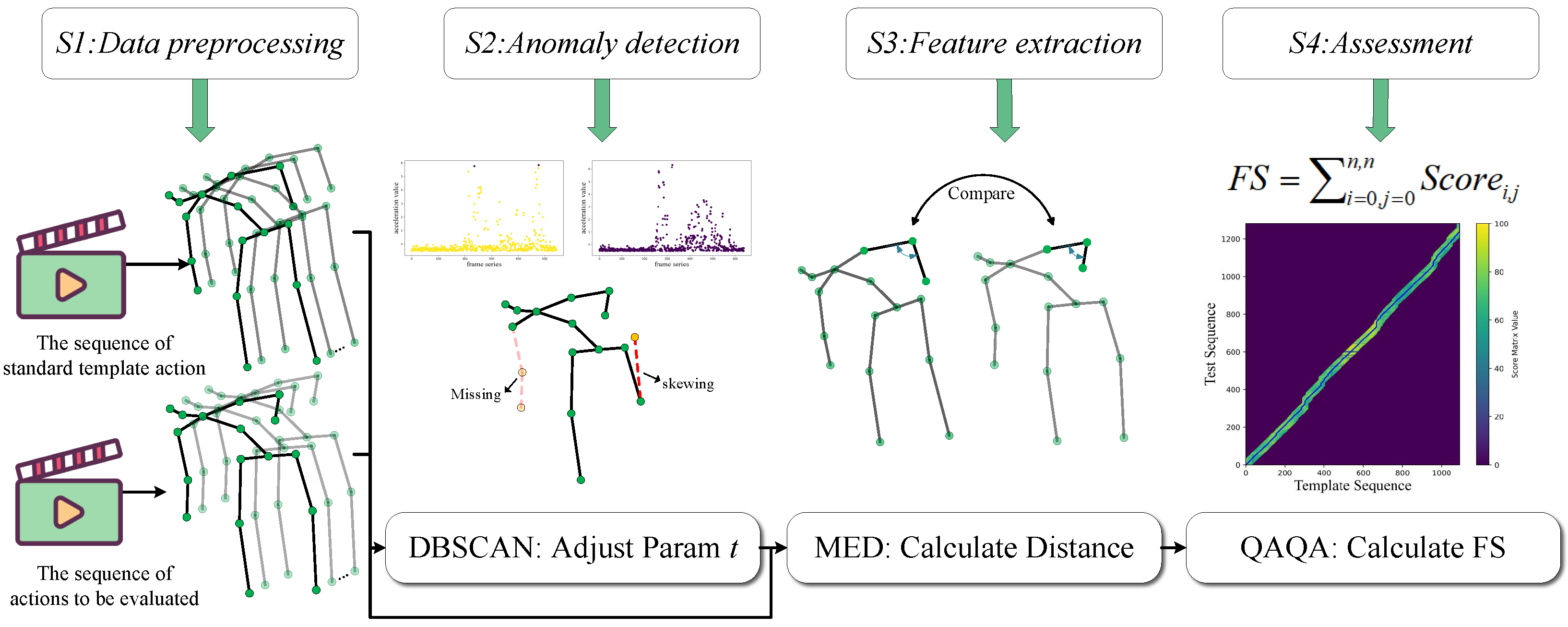

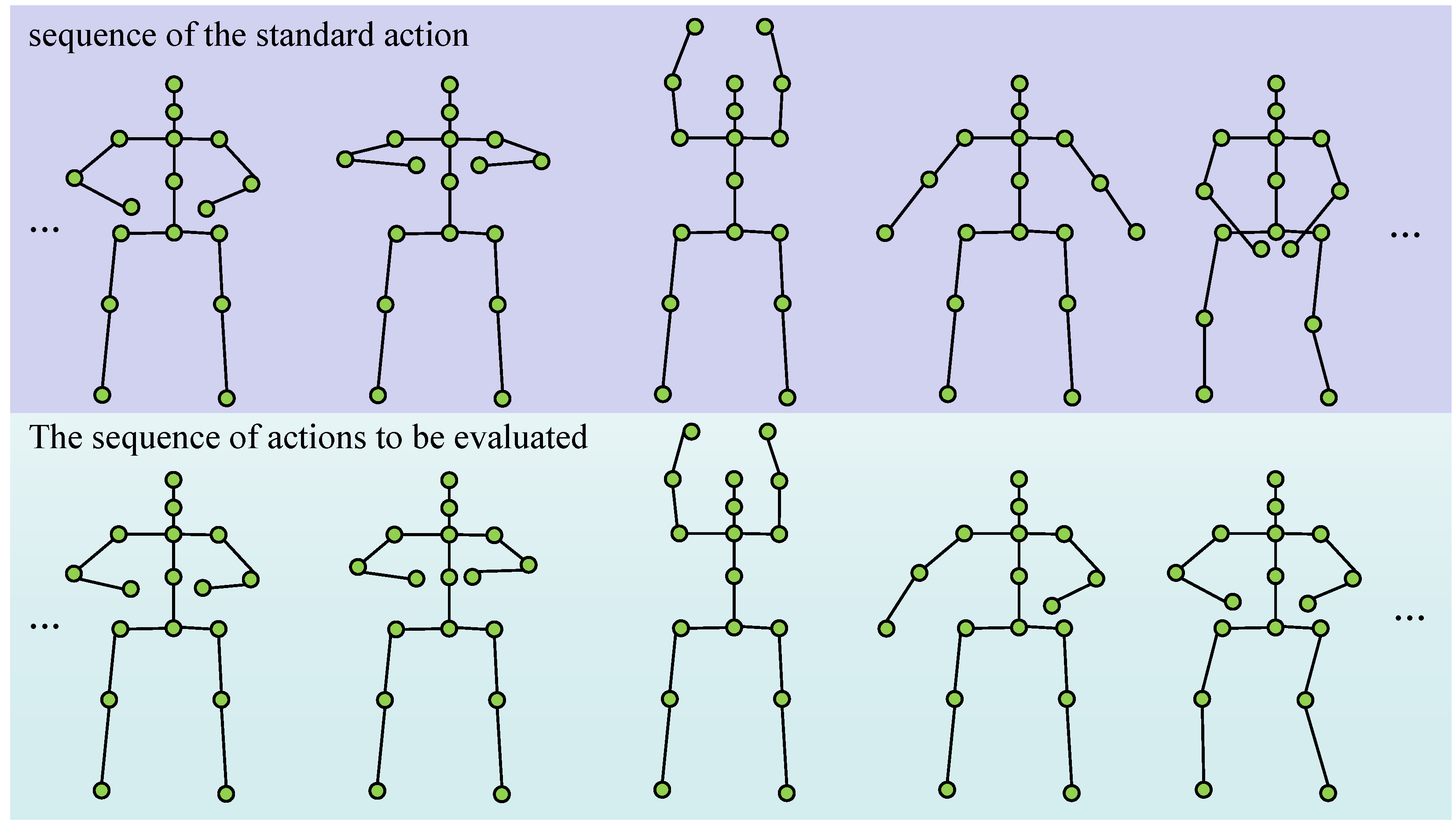

Existing AQA methods are hindered by a dependency on large annotated datasets and a failure to handle unsegmented real-world videos, which limits their generalizability and practical utility. To overcome these barriers, we propose an unsupervised, skeleton-based AQA framework. As depicted in Figure 1, our annotation-free pipeline consists of four sequential stages: (S1) data extraction and processing, (S2) anomaly detection, (S3) action feature construction, and (S4) final score computation via our proposed QAQA algorithm. This design ensures broad applicability across diverse exercise scenarios without requiring model retraining.

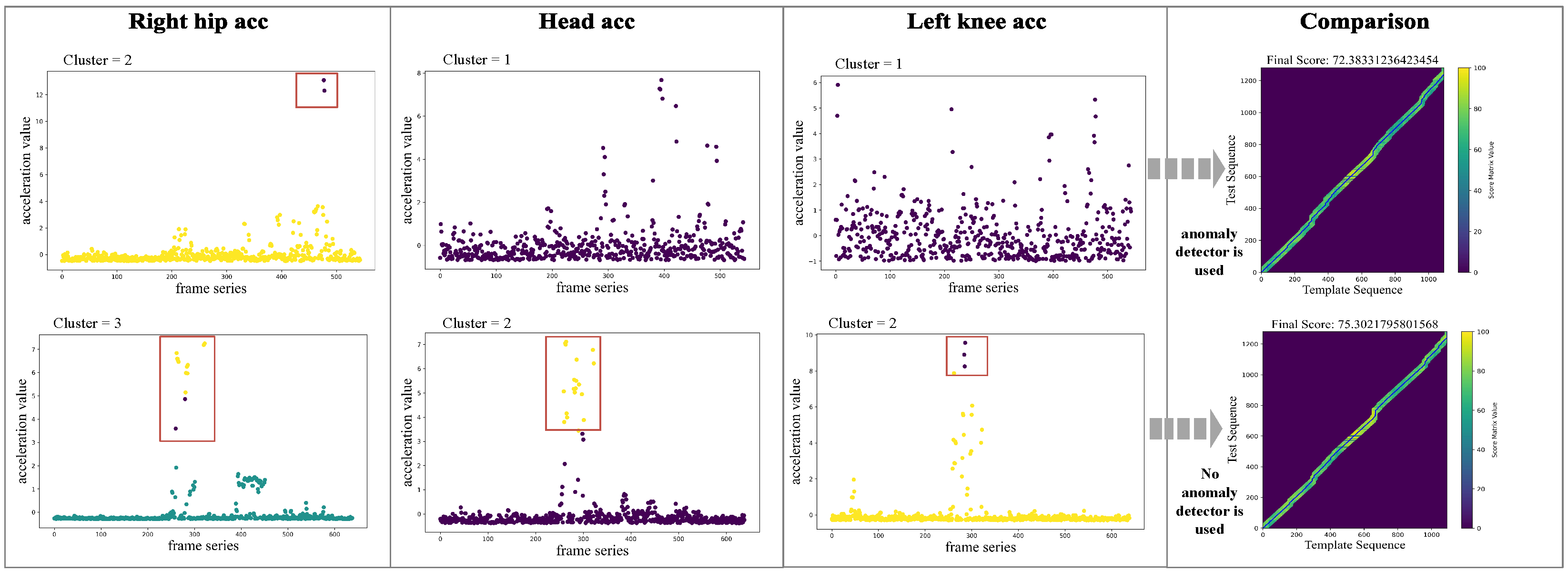

3.2. S2: Anomaly Detection

In outdoor sports training, the accuracy of human keypoint detection can be significantly affected by factors such as camera angles, lighting conditions, object occlusions, and algorithmic errors. Consequently, these errors degrade the assessment of sports actions.

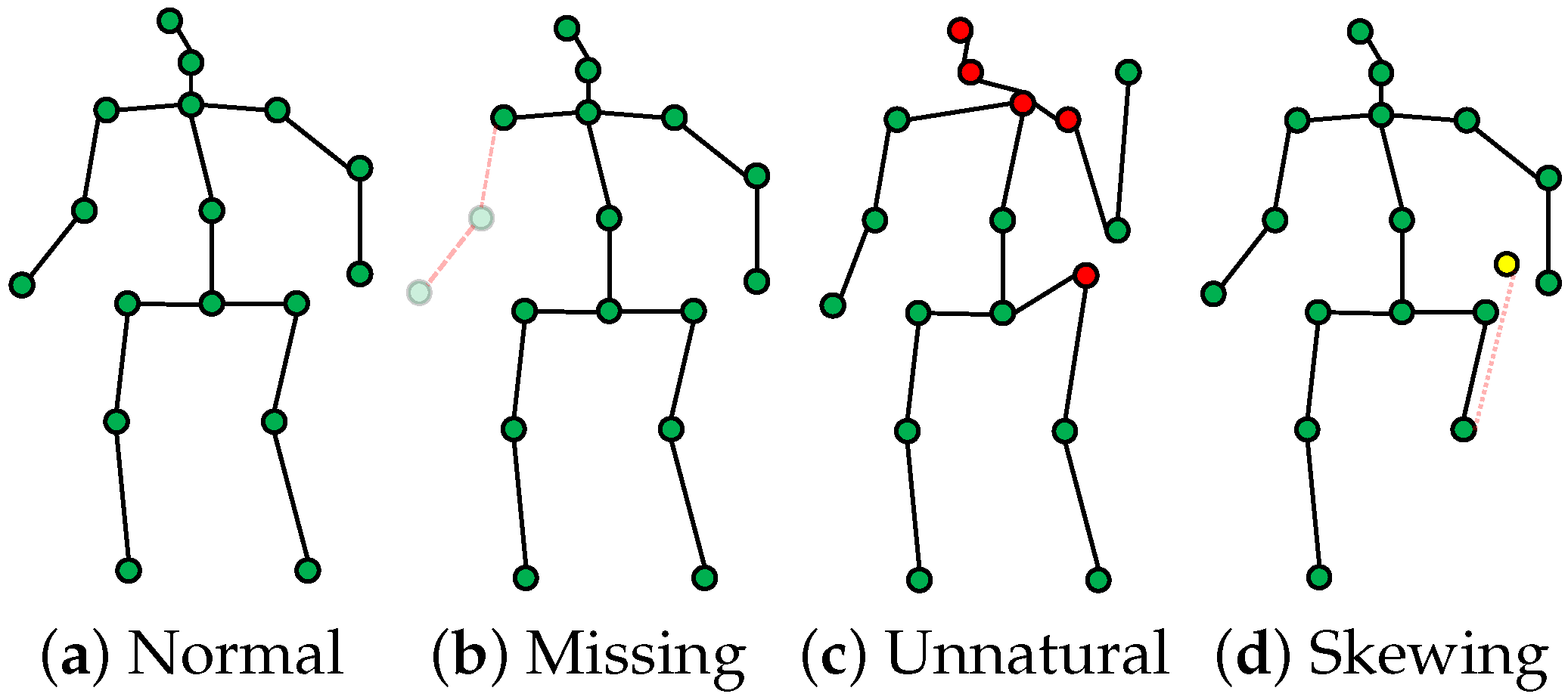

Figure 2 illustrates several common anomalies, including missing keypoints (Figure 2b), abnormal shifts (Figure 2d), and unnatural pose keypoints (Figure 2c).

To address these challenges and improve the accuracy of action assessment, we propose a pose feature enhancement method based on anomaly detection. This method identifies anomalies introduced during data preprocessing and dynamically adjusts subsequent scoring based on the type and severity of detected anomalies.

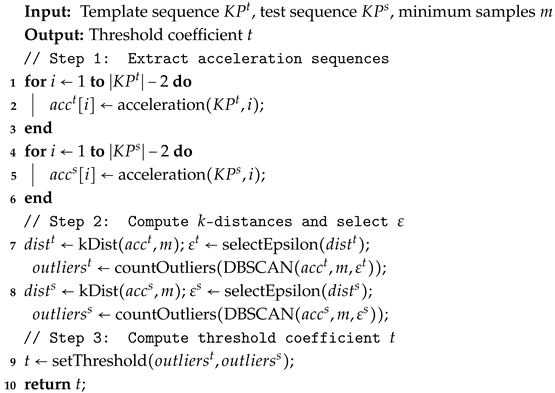

The processing flow of the anomaly detection module consists of three primary steps. First, extract the acceleration sequence for both the template and the test keypoint data. Second, determine the DBSCAN parameters (the neighborhood radius and the minimum number of samples) and detect outliers in the acceleration sequences. Finally, calculate the threshold coefficient t based on the outliers detected in Step 2.

Step 1 (Extracting Acceleration Sequences): Traditional Chinese Qigong actions typically involve slow stretching, twisting, and balancing movements, leading to relatively stable changes in the skeletal keypoints. To improve computational efficiency, we remove data from the spine, chest, and hip midpoint keypoints, as their accelerations show minimal variation. Thus, we retain acceleration data from 14 keypoints.

Each keypoint sequence is approximated as uniform motion between frames by calculating the Euclidean distance between the keypoint positions in every two consecutive frames in 3D space. The acceleration for each keypoint in frame i is calculated using Equation (1).

In Equation (1), the inputs are the 3D coordinates of a specific keypoint in each frame, n is the total frame count, the distance is measured with the Euclidean distance function, and the time step is the interval between two consecutive frames.
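The exact form of Equation (1) is not reproduced in this text, so the following is only a minimal sketch of one plausible reading: frame-to-frame Euclidean displacement is converted to a speed (uniform motion within each interval), and the change in speed between consecutive intervals is divided by the frame interval. The function name `acceleration_sequence` and the toy trajectory are illustrative assumptions, not the authors' implementation.

```python
import math


def acceleration_sequence(points, dt):
    """Approximate acceleration magnitudes for one keypoint.

    points: list of (x, y, z) coordinates, one per frame.
    dt: time interval between two consecutive frames (assumed constant).
    """
    # Speed over each interval, treating motion between frames as uniform.
    speeds = [
        math.dist(points[i + 1], points[i]) / dt
        for i in range(len(points) - 1)
    ]
    # Change in speed between consecutive intervals, per unit time.
    return [
        (speeds[i + 1] - speeds[i]) / dt
        for i in range(len(speeds) - 1)
    ]


# Toy trajectory along the x-axis with steadily increasing displacement.
track = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0), (6.0, 0.0, 0.0)]
acc = acceleration_sequence(track, dt=1.0)
```

Under this reading, a sequence of n frames yields n - 2 acceleration values per keypoint; the paper's own indexing may differ.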

Step 2 (Outlier Detection with DBSCAN): We employ DBSCAN [48] for anomaly detection within individual keypoint acceleration series because it is unsupervised, identifies outliers without pre-specifying the number of clusters, and has proven effective for density-based outlier detection. Since the motion ranges of the keypoints vary significantly across different actions, using a fixed neighborhood radius could result in suboptimal clustering. Thus, we dynamically determine the DBSCAN neighborhood radius using a k-distance method. The k-distance is the distance from each acceleration data point to its k-th nearest neighbor, computed by Equation (2).

In Equation (2), the inputs are the minimum number of samples required to form a cluster and the acceleration sequence calculated by Equation (1).

We then select the neighborhood radius at the point with the maximum slope change in the sorted k-distance graph, as shown in Equation (3).

Here,

represents the sorted

k-distance values,

indexes distances. Equation (

3) identifies the point with the maximum slope difference, which sets the value of the parameter

. The selection of

is illustrated in

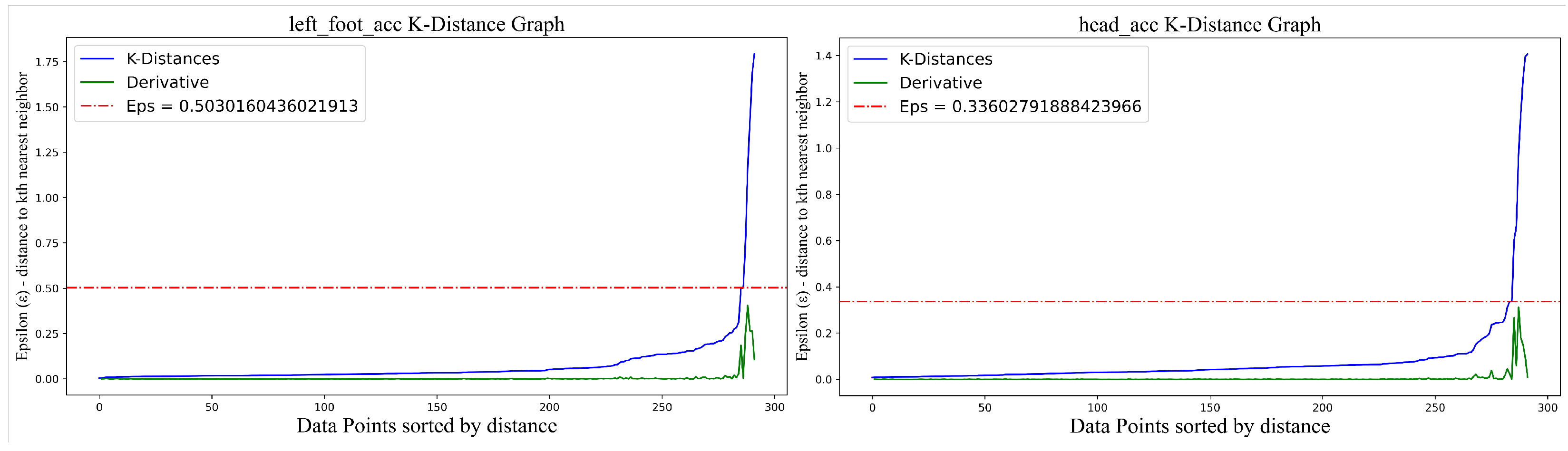

Figure 3.

Figure 3 visualizes the k-distance graph. The vertical axis shows the k-distance, that is, the distance from each data point to the farthest of the nearest neighbors required to reach the minimum cluster size, while the horizontal axis indicates the index of data points sorted by distance. The green curve represents the derivative of the k-distance values, and the red dashed line marks the optimal position for the neighborhood radius. The vertical coordinate value at this position is passed to DBSCAN as its neighborhood radius. Because the radius is chosen separately for each keypoint, clustering adapts to varying motion ranges, which improves the robustness of anomaly detection.
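The k-distance selection described above can be sketched as follows. This is a minimal illustration under stated assumptions: the acceleration values are treated as 1-D samples, the slope change is approximated by a discrete second difference, and the helper names `k_distances` and `select_eps` are hypothetical. Equations (2) and (3) in the paper may differ in detail.

```python
def k_distances(values, k):
    """Sorted distances from each 1-D sample to its k-th nearest neighbor."""
    result = []
    for i, v in enumerate(values):
        # Distances to every other sample, smallest first.
        others = sorted(abs(v - w) for j, w in enumerate(values) if j != i)
        result.append(others[k - 1])
    return sorted(result)


def select_eps(sorted_kdist):
    """Return the k-distance at the index where the slope changes most."""
    slopes = [
        sorted_kdist[i + 1] - sorted_kdist[i]
        for i in range(len(sorted_kdist) - 1)
    ]
    changes = [abs(slopes[i + 1] - slopes[i]) for i in range(len(slopes) - 1)]
    # changes[i] is the second difference centered on point i + 1.
    knee = changes.index(max(changes)) + 1
    return sorted_kdist[knee]


# Toy acceleration sequence with one obvious spike (illustrative data only).
acc = [0.10, 0.11, 0.12, 0.13, 0.14, 0.15, 0.90]
kd = k_distances(acc, k=2)
eps = select_eps(kd)
```

In this toy case the selected radius sits at the last point of the flat part of the curve, just before the jump caused by the spike, so the spike falls outside every dense neighborhood.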

Given the input action sequences, we count the acceleration outliers separately for the standard action sequence and for the test video sequence. Entering the determined parameters (the neighborhood radius and the minimum number of samples) and the acceleration sequences into the DBSCAN model yields these two counts, as shown in Equation (4).

In Equation (4), a counting function returns the number of detected outliers from the output of the DBSCAN clustering model.
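To make the outlier count concrete, the sketch below hand-implements the DBSCAN noise rule for a 1-D sequence: a point is a core point if its radius-neighborhood (including itself) holds at least the minimum number of samples, and a point is noise if it is neither core nor within the radius of a core point. The function name `count_outliers`, the parameter values, and the toy sequences are assumptions for illustration; a library implementation such as scikit-learn's `DBSCAN`, which labels noise as `-1`, would normally be used instead.

```python
def count_outliers(values, eps, min_samples):
    """Count DBSCAN noise points in a 1-D sequence."""
    n = len(values)
    # Neighborhoods include the point itself, as in the usual DBSCAN convention.
    neighbors = [
        [j for j in range(n) if abs(values[i] - values[j]) <= eps]
        for i in range(n)
    ]
    core = [len(nb) >= min_samples for nb in neighbors]
    # Noise: not a core point and no core point within eps.
    return sum(
        1
        for i in range(n)
        if not core[i] and not any(core[j] for j in neighbors[i])
    )


# Illustrative acceleration sequences for a template and a test recording.
template_acc = [0.10, 0.11, 0.12, 0.13, 0.14, 0.15, 0.90]
test_acc = [0.10, 0.11, 0.12, 0.13, 0.60, 0.15, 0.90]
n_template = count_outliers(template_acc, eps=0.025, min_samples=3)
n_test = count_outliers(test_acc, eps=0.025, min_samples=3)
```

Here the template contains one noise point and the test sequence two; the difference between such counts is what Step 3 turns into the threshold coefficient t.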

Step 3 (Threshold Coefficient Calculation): We calculate the threshold coefficient t from a ratio based on the absolute difference between the number of outliers in the standard action sequence and the number in the test action sequence. The specific threshold values assigned according to this ratio are detailed in Equation (5).

Some discrepancy between standard and test actions is expected. However, if both actions have identical distributions of joint acceleration outliers (i.e., no difference in the detected outliers), this strongly suggests the possibility of cheating. To address this, assignments suspected of cheating directly receive a threshold coefficient of 1, indicating that no further scoring is required.

The role and usage of the parameter t are described in Section 3.4. The complete anomaly detection process is summarized in Algorithm 1.

Algorithm 1: Human Skeleton Keypoint Anomaly Detection

3.3. S3: Construction of Action Feature

This study integrates motion state features, including limb angles, body orientation, and shoulder-hip angles, to construct a complete 3D human motion representation. The coordinate system is reconstructed with the neck as the origin based on the 3D spatial coordinates of 17 skeletal keypoints. Subsequently, the limb angles (covering both upper and lower limb joints) and body orientation features are calculated in both 2D and 3D space.

To address the morphological characteristics of Qigong actions, we select high-attention skeletal keypoints and establish an 11-dimensional angle feature set, as detailed in Table 1.

The keypoints are labeled using a number-name format, and each angle feature is computed as given in Equation (6).

In Equation (6), the two inputs are vectors between two joint nodes, and the output is the angle between these vectors. For simplicity, skeletal keypoints are abbreviated as KP.
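Since Equation (6) is described as the angle between two vectors that connect joint nodes, the standard arccosine of the normalized dot product gives a minimal sketch of the computation. The names `angle_between` and `joint_angle`, and the choice of the middle keypoint as the vertex, are assumptions for illustration rather than the paper's exact definition.

```python
import math


def angle_between(u, v):
    """Angle in radians between two non-zero 3-D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def joint_angle(a, b, c):
    """Angle at keypoint b formed by the segments b->a and b->c."""
    u = [p - q for p, q in zip(a, b)]
    v = [p - q for p, q in zip(c, b)]
    return angle_between(u, v)


# Toy example: upper arm along x, forearm along y, elbow at the origin.
elbow = joint_angle((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

The sketch assumes both vectors have non-zero length; coincident keypoints (for example after a detection failure) would need separate handling.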

For spatial limb segment features, we define four proximal limb segments based on anatomical landmarks: left arm (KP 8, 14, 15, and 16), right arm (KP 8, 11, 12, and 13), left leg (KP 0, 1, 2, and 3), and right leg (KP 0, 4, 5, and 6). By calculating the spatial angles between the center of each limb segment and a reference point at the hip (using the geometric center calculation in Equation (7)), we establish four-dimensional kinematic parameters for limb movement.

In Equation (7), the inputs are the 3D spatial coordinates of the keypoints in the selected limb block, and the output is the 3D coordinates of the center point.

The angle between the center point of a limb block and the midpoint of the spine is then calculated using Equation (8). In Equation (8), the two inputs are the spine vector and the vector from the neck to the center point of the limb block, and the output is the angle between these two vectors. This calculation yields four spatial upper and lower limb block features, which provide important references for subsequent AQA.
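The limb block features of Equations (7) and (8) can be sketched as a centroid followed by a vector angle. This is a minimal illustration under assumptions: the center point is taken as the arithmetic mean of the block's keypoints, the spine vector is taken from the neck to a spine point, and the names `centroid` and `limb_block_angle` are hypothetical.

```python
import math


def centroid(points):
    """Arithmetic mean of a list of 3-D points."""
    n = len(points)
    return tuple(sum(p[d] for p in points) / n for d in range(3))


def angle_between(u, v):
    """Angle in radians between two non-zero 3-D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def limb_block_angle(limb_points, neck, spine_point):
    """Angle between the spine vector and the neck-to-limb-center vector."""
    center = centroid(limb_points)
    spine_vec = tuple(s - n for s, n in zip(spine_point, neck))
    center_vec = tuple(c - n for c, n in zip(center, neck))
    return angle_between(spine_vec, center_vec)


# Neck at the origin (as in the reconstructed coordinate system), spine
# pointing down the y-axis, and an arm block stretched out along the x-axis.
neck = (0.0, 0.0, 0.0)
spine_point = (0.0, -1.0, 0.0)
arm = [(1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (1.0, -1.0, 0.0), (1.0, 0.0, 0.0)]
arm_angle = limb_block_angle(arm, neck, spine_point)
```

Applying `limb_block_angle` to each of the four limb blocks would give the four-dimensional limb feature described in the text.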

We construct a hierarchical orientation system to represent human body orientation. Using vectors connecting the shoulders (KP-11, 12) and hips (KP-0, 1), we establish a three-dimensional anatomical reference in space. Vector projection is then used to convert discrete directional parameters into continuous radian values, with one fixed value assigned to the forward direction and another to the reverse direction. These values form the two orientation features.

The opening angles between both hands and between both feet are not originally radian values, so we normalize them to radians. Specifically, if the distance between the left and right feet is greater than 0.5 times the distance between the left and right shoulders, the opening angle of the feet is set to one fixed radian value; otherwise, it is set to another. Similarly, if the distance between the left and right wrists is greater than 1.5 times the distance between the left and right shoulders, the opening angle of the hands is set to one fixed radian value; otherwise, it is set to another. These form two further features.

For four groups of upper limb–lower limb combinations, such as the left upper limb–left lower limb (KP-15, 16, 2, 3) and the right upper limb–right lower limb (KP-12, 13, 5, 6), sagittal plane movement angles are calculated using Equation (6).

Finally, by combining limb angles, body orientation, and shoulder-hip joint angles, we obtain a 27-dimensional limb motion angle feature vector: F = [ … , … , … , … , … ]. These features serve as the input for the subsequent action similarity calculation and action scoring. To further improve the efficiency of AQA, we designed a fast action quality scoring algorithm, QAQA, to address performance bottlenecks in long-sequence calculations.

3.4. S4: Quick Action Quality Assessment

To optimize the time complexity of the ACDTW algorithm [

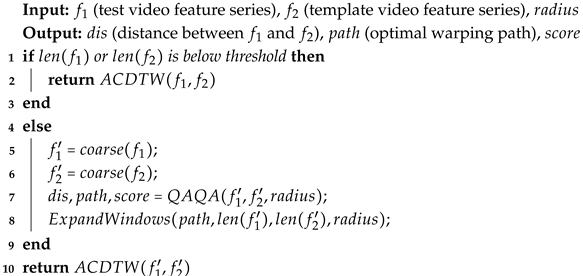

41] for the computation of action similarity, we propose the QAQA algorithm, as detailed in Algorithm 2.

QAQA uses an approximate path approach that incrementally refines the final alignment by coarsening, projecting, and refining the dynamic programming score matrix.

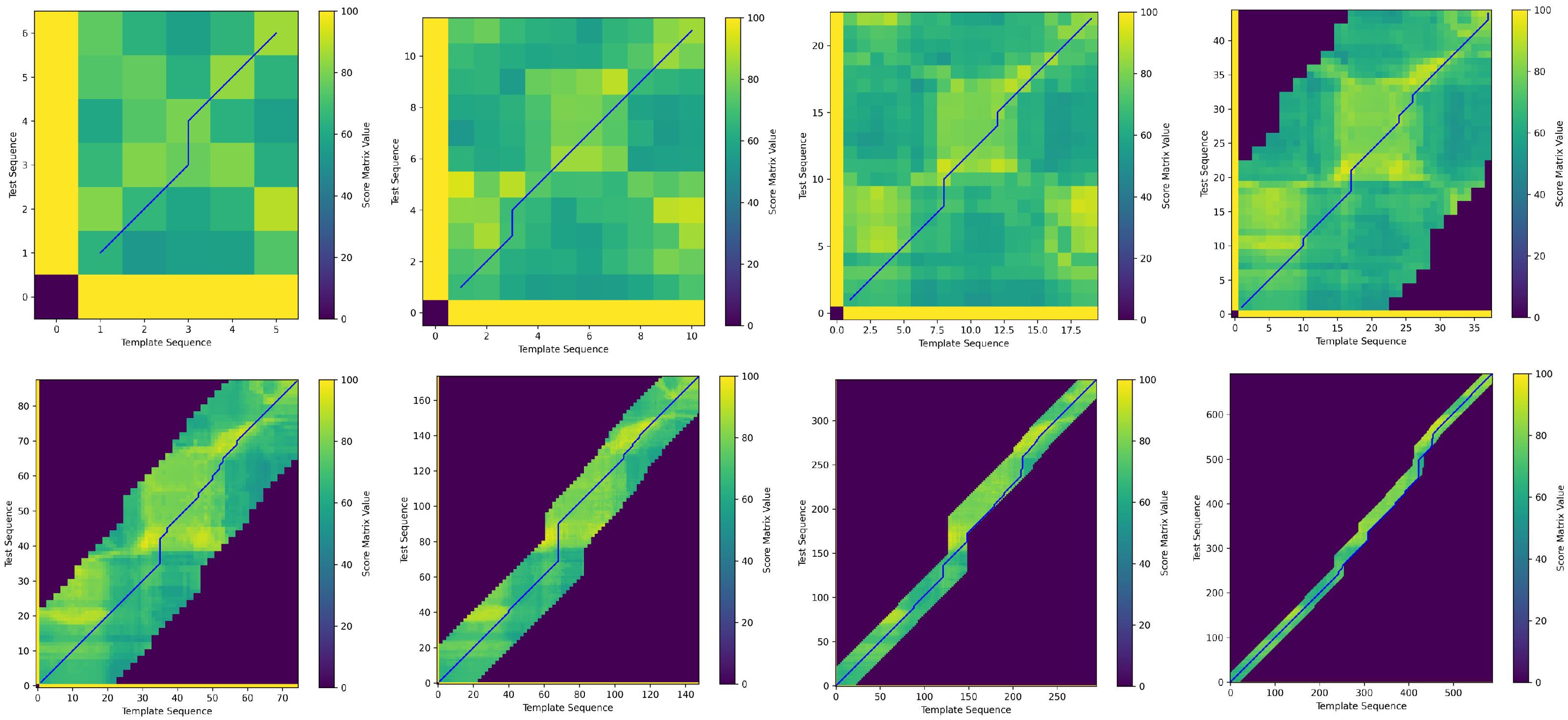

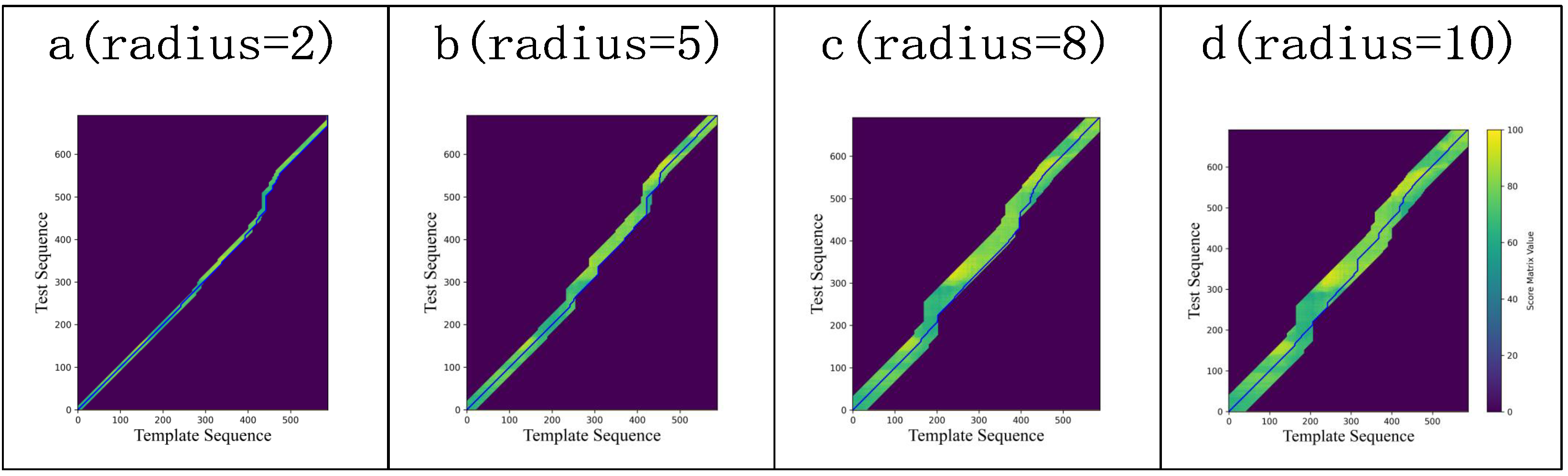

Figure 4 illustrates the iterative process of QAQA, where blue lines indicate backtracking paths from low to high resolution, and darker regions represent areas with uncomputed scores.

Algorithm 2: Quick Action Quality Assessment (QAQA)

In the coarsening step, QAQA first performs coarse-grained processing of the two time series. According to Equation (9), the series are downsampled by averaging pairs of adjacent features, reducing them to a predefined minimum length.
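The coarsening step can be sketched as repeated halving by pairwise averaging. This is a minimal illustration, not the authors' code: the names `coarsen` and `coarsen_to` are hypothetical, and keeping an unpaired last frame unchanged for odd-length series is an assumption, since Equation (9) is not reproduced here.

```python
def coarsen(series):
    """Halve a sequence of feature vectors by averaging adjacent pairs."""
    out = []
    for i in range(0, len(series) - 1, 2):
        out.append([(a + b) / 2 for a, b in zip(series[i], series[i + 1])])
    if len(series) % 2:
        # Odd length: keep the unpaired last frame as-is (an assumption).
        out.append(list(series[-1]))
    return out


def coarsen_to(series, min_len):
    """Return all resolution levels, from full length down to <= min_len."""
    min_len = max(1, min_len)  # guard: a single frame cannot be halved
    levels = [series]
    while len(levels[-1]) > min_len:
        levels.append(coarsen(levels[-1]))
    return levels


# Toy series of one-dimensional features.
frames = [[0.0], [2.0], [4.0], [6.0], [8.0]]
levels = coarsen_to(frames, min_len=2)
```

Keeping every level lets the later projection and refinement steps walk back up from the coarsest series to the full-resolution one.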

Using the downsampled series, the dynamic programming distance matrix and action score matrix are computed using ACDTW with a penalty function, resulting in a coarse-grained backtracking path. A weighting factor, typically based on local features or time series attributes, adjusts the match cost for each pair of matching points. The calculation methods for ACDTW are shown in Equations (10) and (11).

Equation (10) defines the penalty function, where N is the number of times each time series point is matched, and a and b are the lengths of the two series. Equation (11) describes the ACDTW computation, where i and j index the frames in the standard and test action sequences, respectively.

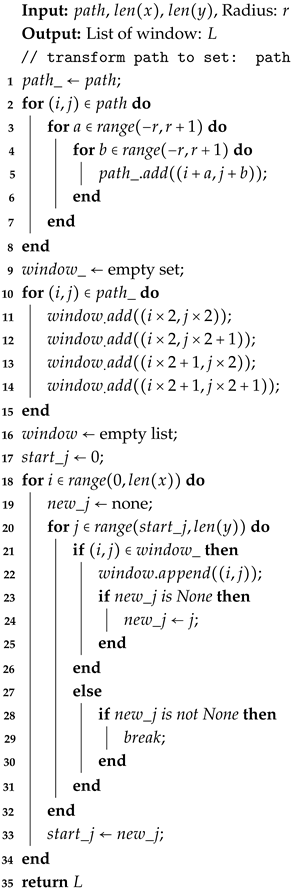

During the projection step, the algorithm calculates the extended window range based on the coarse-grained backtracking path, the radius parameter, and the two feature sequences. This window limits the computational region. Details of the extended window algorithm are provided in Algorithm 3.

Algorithm 3: ExpandWindows
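Because Algorithm 3 is not reproduced in this text, the following sketch shows only how a window expansion of this kind is commonly done in multiresolution DTW approximations such as FastDTW: each coarse path cell is widened by the radius and then mapped to its 2x2 block at the next finer resolution. The function name `expand_window` and the factor-of-two mapping are assumptions, not the authors' ExpandWindows procedure.

```python
def expand_window(path, len_a, len_b, radius):
    """Project a coarse warping path to double resolution, widened by radius.

    path: list of (i, j) cells on the coarse grid.
    len_a, len_b: lengths of the two series at the finer resolution.
    Returns the set of (i, j) cells that the refinement step may evaluate.
    """
    cells = set()
    for i, j in path:
        # Widen around each coarse path cell by `radius` in both directions.
        for di in range(-radius, radius + 1):
            for dj in range(-radius, radius + 1):
                ci, cj = i + di, j + dj
                # Each coarse cell covers a 2x2 block on the finer grid.
                for fi in (2 * ci, 2 * ci + 1):
                    for fj in (2 * cj, 2 * cj + 1):
                        if 0 <= fi < len_a and 0 <= fj < len_b:
                            cells.add((fi, fj))
    return cells


# A diagonal coarse path on a 2x2 grid, projected onto a 4x4 grid.
coarse_path = [(0, 0), (1, 1)]
narrow = expand_window(coarse_path, 4, 4, radius=0)
wide = expand_window(coarse_path, 4, 4, radius=1)
```

A larger radius trades speed for accuracy: with `radius=0` only the cells directly under the coarse path are evaluated, while larger values approach the full matrix.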

In the refinement step, the two time series are upsampled, and ACDTW updates the dynamic programming distances only within the specified window. Based on these distances, the backtracking path is recalculated. This cycle of coarsening, window computation, and refinement repeats until the full-resolution distance matrix and the final backtracking path are obtained, at which point the iteration terminates.
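The refinement step restricts the dynamic programming to the window cells. The sketch below uses plain DTW as a stand-in: it omits the ACDTW penalty function of Equation (10), which is not reproduced in this text, and the name `windowed_dtw` and its `dist` argument are illustrative assumptions.

```python
import math


def windowed_dtw(a, b, window, dist):
    """Plain DTW evaluated only on `window`, a set of allowed (i, j) cells.

    Returns (total_cost, path). The window must contain a connected path
    from (0, 0) to (len(a) - 1, len(b) - 1).
    """
    cost = {(-1, -1): 0.0}  # virtual start cell before (0, 0)
    prev = {}
    # Lexicographic order guarantees predecessors are processed first.
    for i, j in sorted(window):
        best, arg = math.inf, None
        for p in ((i - 1, j - 1), (i - 1, j), (i, j - 1)):
            if cost.get(p, math.inf) < best:
                best, arg = cost[p], p
        if arg is not None:
            cost[(i, j)] = best + dist(a[i], b[j])
            prev[(i, j)] = arg
    # Backtrack from the last cell to the virtual start cell.
    end = (len(a) - 1, len(b) - 1)
    path, cell = [], end
    while cell != (-1, -1):
        path.append(cell)
        cell = prev[cell]
    return cost[end], path[::-1]


# Toy scalar series with the full matrix as the window.
a, b = [0.0, 1.0, 2.0], [0.0, 2.0]
full = {(i, j) for i in range(len(a)) for j in range(len(b))}
total, path = windowed_dtw(a, b, full, dist=lambda x, y: abs(x - y))
```

With feature vectors instead of scalars, `dist` would be replaced by a vector distance; passing the cell set from the projection step in place of `full` limits the work to the expanded window.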

Finally, the action score is calculated along the full-resolution backtracking path using Equations (12)–(14). For each frame along the backtracking path, the action score is computed according to Equation (13). Based on the threshold coefficient t (determined by Equation (5) in Section 3.2), different penalty levels are applied to the action similarity distances. The frame-level scores are then summed and averaged to yield the final score.

Equation (12) takes the features of the test and standard action sequences at frame i and yields q, which measures the discrepancy between the evaluated and standard actions. Equation (13) gives the score of frame i along the backtracking path; when the zero-score condition in Equation (13) is met, the score for that frame is set to 0. In Equation (14), n is the total number of points along the backtracking path.