MLD-Net: A Multi-Level Knowledge Distillation Network for Automatic Modulation Recognition

Abstract

1. Introduction

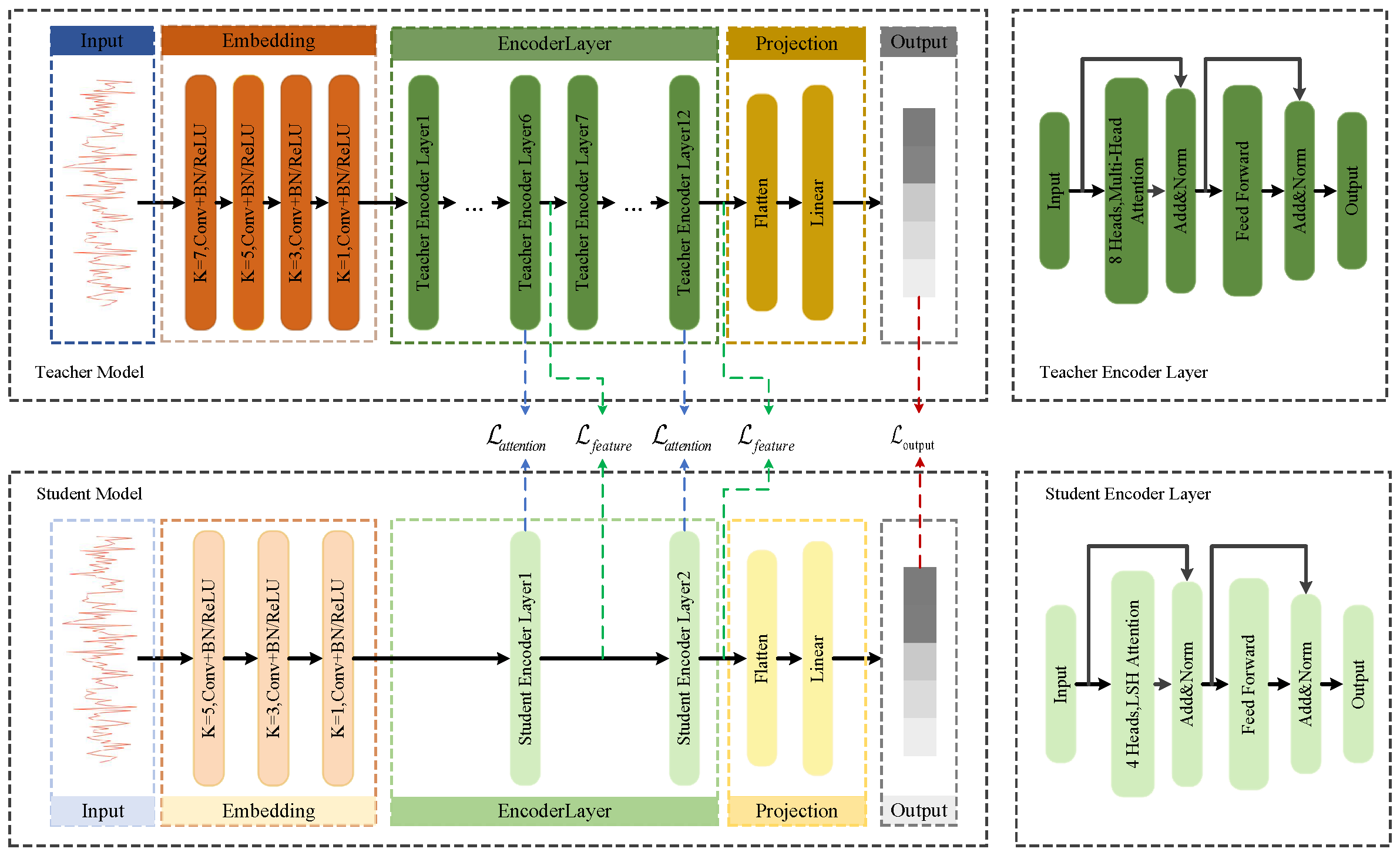

- We propose a multi-level knowledge distillation network (MLD-Net) for AMR. By transferring knowledge simultaneously at the output, feature, and attention levels, MLD-Net enables a lightweight student model to learn not only the teacher’s predictive distribution but also its internal reasoning process regarding complex signal characteristics, significantly enhancing the student model’s performance and its robustness under diverse channel conditions.

- We design an efficient yet powerful student model. We demonstrate that a compact Reformer-based architecture, when guided by a larger Transformer teacher through our multi-level distillation, can achieve state-of-the-art accuracy among lightweight models at a fraction of the computational and memory cost (0.29 M versus 38.27 M parameters), making it well suited to edge deployment.

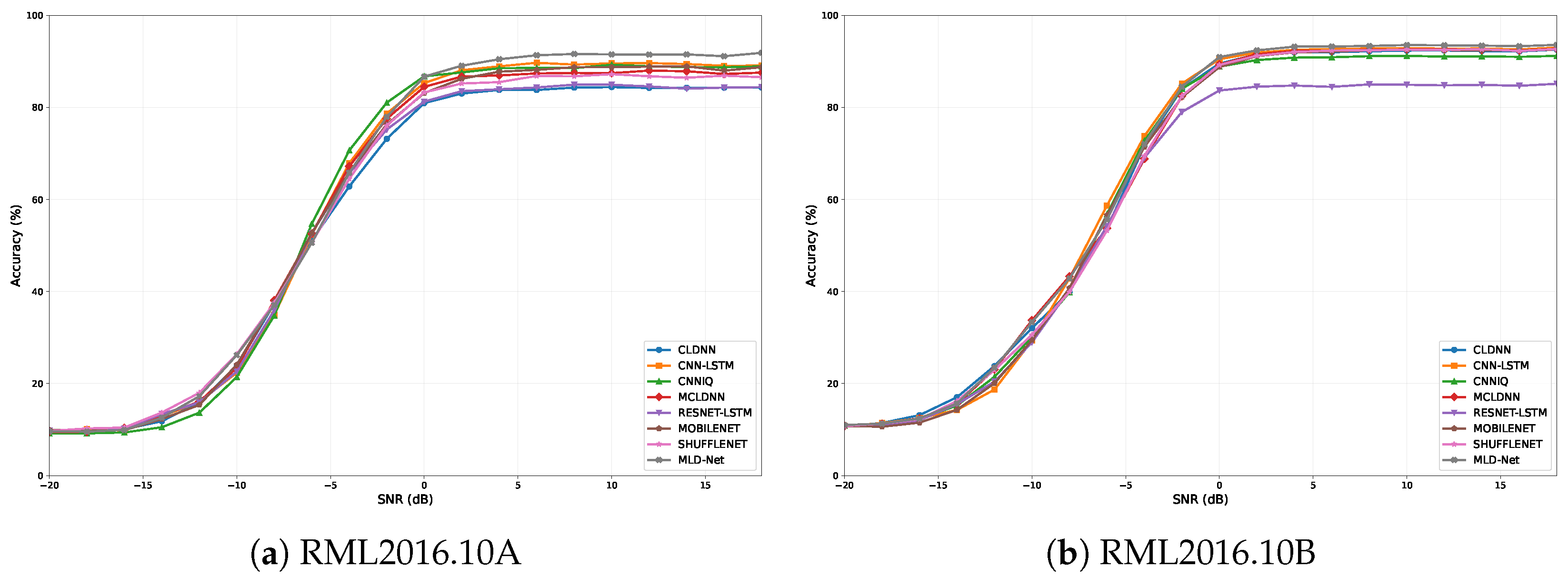

- Extensive comparative and ablation experiments on the RML2016.10A and RML2016.10B datasets show that the proposed MLD-Net achieves a favorable trade-off between efficiency and performance. It reaches the highest overall accuracy among the lightweight models compared (61.14% on 10A and 64.62% on 10B) while using approximately 131.9 times fewer parameters than the teacher model.
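The multi-level transfer described in the contributions above can be illustrated with a minimal sketch. It is not the authors' implementation: the temperature-softened KL term follows the common Hinton-style formulation, mean squared error is assumed for the feature and attention terms, and the weights `w_out`, `w_feat`, `w_attn` and all function names are hypothetical. The student's features and attention maps are assumed to have been projected to the teacher's shape and flattened beforehand.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in scaled))
    return [z - log_norm for z in scaled]


def output_kd_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    log_p_t = log_softmax(teacher_logits, temperature)
    log_p_s = log_softmax(student_logits, temperature)
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_t, log_p_s))
    return temperature ** 2 * kl


def mse(a, b):
    """Mean squared error between two equal-length flat vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def multi_level_loss(ce_loss, s_logits, t_logits, s_feat, t_feat,
                     s_attn, t_attn, w_out=1.0, w_feat=1.0, w_attn=1.0,
                     temperature=4.0):
    """Cross-entropy plus weighted output, feature, and attention terms.

    Assumption: student features and attention maps are already projected
    to the teacher's shape and flattened (hypothetical preprocessing).
    """
    return (ce_loss
            + w_out * output_kd_loss(s_logits, t_logits, temperature)
            + w_feat * mse(s_feat, t_feat)
            + w_attn * mse(s_attn, t_attn))


if __name__ == "__main__":
    # Toy values for one sample; none of these come from the paper.
    loss = multi_level_loss(
        ce_loss=0.7,
        s_logits=[2.0, 0.5, -1.0], t_logits=[2.5, 0.2, -1.5],
        s_feat=[0.1, 0.4, 0.3], t_feat=[0.2, 0.5, 0.1],
        s_attn=[0.6, 0.4], t_attn=[0.7, 0.3],
    )
    print(f"total loss: {loss:.4f}")
```

When the student reproduces the teacher exactly, all three distillation terms vanish and only the cross-entropy term remains, which is a quick sanity check for any implementation of this kind.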

2. Related Work

2.1. Automatic Modulation Recognition

2.2. Model Pruning and Lightweight Design

2.3. Knowledge Distillation

3. Methodology

3.1. Signal Model

3.2. Proposed Framework Architecture

3.3. Multi-Level Knowledge Distillation

4. Experiments

4.1. Dataset and Experimental Setup

4.2. Results

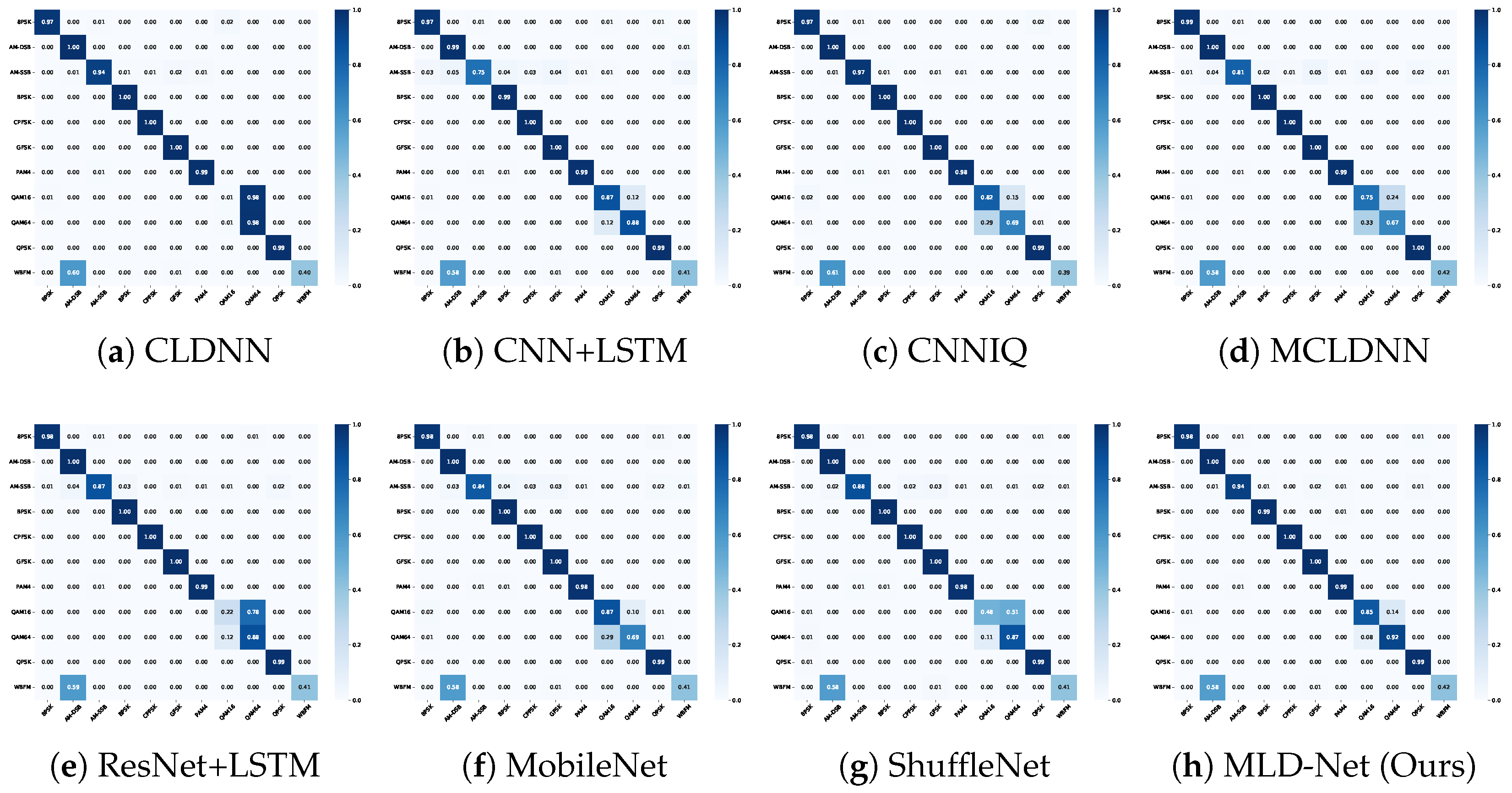

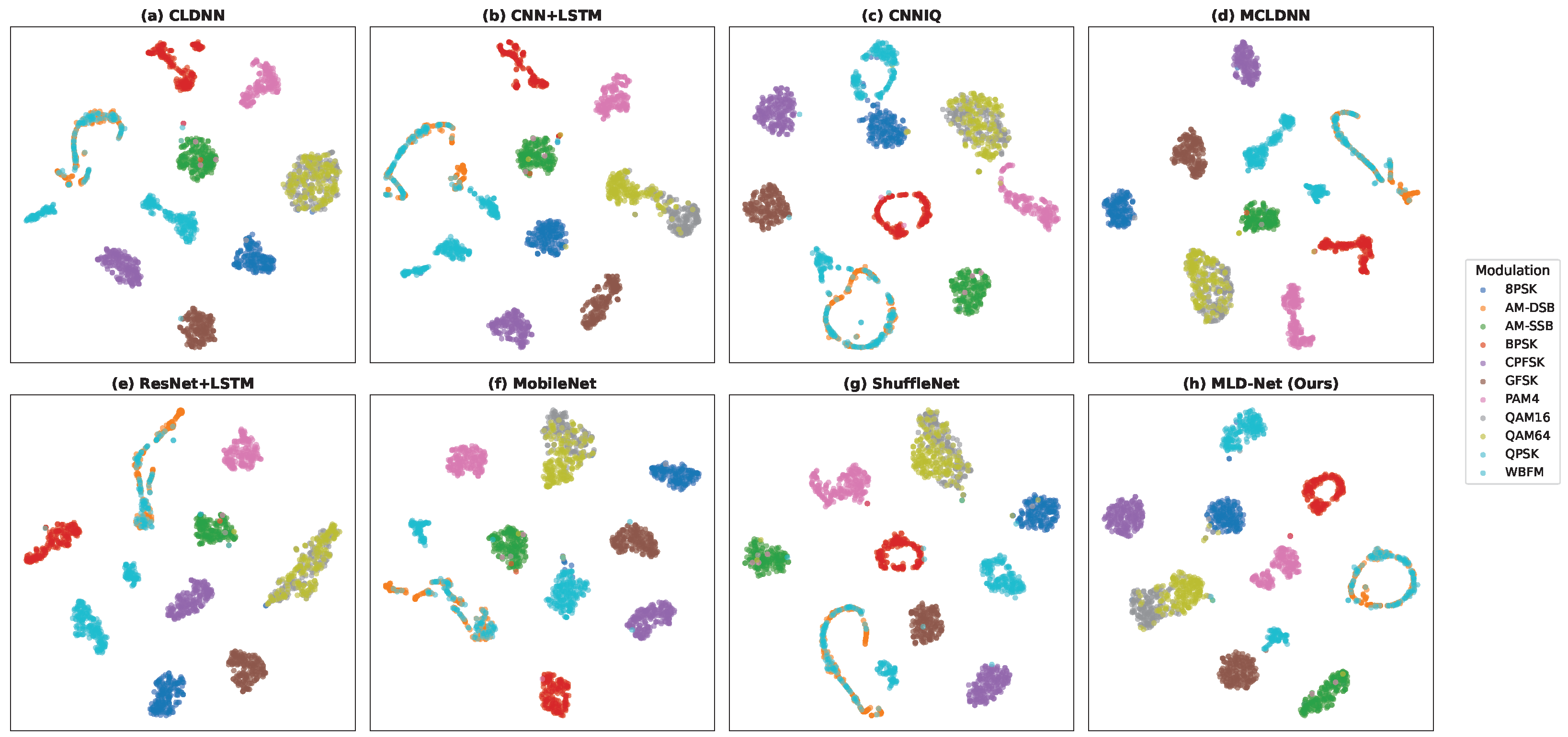

4.2.1. Comparison with State-of-the-Art Methods

4.2.2. Ablation Study

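- Student w/o KD: The baseline Reformer student model trained from scratch using only the cross-entropy loss.

- +Output KD: Student trained with the cross-entropy loss and the output-level distillation loss.

- +Feature KD: Student trained with the cross-entropy loss and the feature-level distillation loss.

- +Attention KD: Student trained with the cross-entropy loss and the attention-level distillation loss.

- +Output + Feature: Student trained with the cross-entropy loss and the two corresponding distillation losses.

- +Output + Attention: Student trained with the cross-entropy loss and the two corresponding distillation losses.

- +Feature + Attention: Student trained with the cross-entropy loss and the two corresponding distillation losses.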

- MLD-Net (Ours): The full proposed model trained with all four loss components.
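The eight ablation variants listed above differ only in which distillation terms are switched on alongside the cross-entropy loss. The following sketch shows one way such a configuration could be organized. The class `LossConfig`, the function names, and the unit default weights are illustrative assumptions rather than details taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossConfig:
    """Which distillation terms are active (cross-entropy is always on)."""
    use_output: bool = False
    use_feature: bool = False
    use_attention: bool = False


# One entry per ablation variant named in the list above.
ABLATION_CONFIGS = {
    "Student w/o KD": LossConfig(),
    "+Output KD": LossConfig(use_output=True),
    "+Feature KD": LossConfig(use_feature=True),
    "+Attention KD": LossConfig(use_attention=True),
    "+Output + Feature": LossConfig(use_output=True, use_feature=True),
    "+Output + Attention": LossConfig(use_output=True, use_attention=True),
    "+Feature + Attention": LossConfig(use_feature=True, use_attention=True),
    "MLD-Net (Ours)": LossConfig(True, True, True),
}


def num_loss_terms(cfg):
    """Count active loss components, including cross-entropy."""
    return 1 + sum([cfg.use_output, cfg.use_feature, cfg.use_attention])


def total_loss(cfg, ce, out_kd, feat_kd, attn_kd, weights=(1.0, 1.0, 1.0)):
    """Sum the cross-entropy term and whichever KD terms cfg enables.

    The weights are hypothetical; the source does not state their values here.
    """
    w_out, w_feat, w_attn = weights
    loss = ce
    if cfg.use_output:
        loss += w_out * out_kd
    if cfg.use_feature:
        loss += w_feat * feat_kd
    if cfg.use_attention:
        loss += w_attn * attn_kd
    return loss


if __name__ == "__main__":
    for name, cfg in ABLATION_CONFIGS.items():
        print(f"{name}: {num_loss_terms(cfg)} loss term(s)")
```

Keeping the variants in a single table like this makes it harder for an ablation run to silently enable the wrong combination of terms.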

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Component | Teacher Model | Student Model |
|---|---|---|
| Signal Embedding | Deep 4-layer CNN (2 channels → 512 channels) | Lightweight 3-layer CNN (2 channels → 128 channels) |
| Backbone Type | Standard Transformer Encoder | Reformer Encoder |
| Number of Layers | 12 | 2 |
| Model Dimension | 512 | 128 |
| Attention Type | Full Multi-Head Self-Attention | LSH Attention |
| Number of Heads | 8 | 4 |
| Total Parameters | 38.27 M | 0.29 M |
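As a quick check on the architecture comparison above, the reduction factors between teacher and student can be computed directly from the tabulated values. The dictionary keys and the function name below are illustrative. Because the parameter counts in the table are rounded to two decimals, the resulting ratio is approximate.

```python
# Values copied from the teacher/student comparison table.
TEACHER = {"layers": 12, "model_dim": 512, "heads": 8, "params_m": 38.27}
STUDENT = {"layers": 2, "model_dim": 128, "heads": 4, "params_m": 0.29}


def reduction_factors(teacher, student):
    """Teacher-to-student ratio for every shared configuration entry."""
    return {key: teacher[key] / student[key] for key in teacher}


factors = reduction_factors(TEACHER, STUDENT)

if __name__ == "__main__":
    for name, factor in factors.items():
        print(f"{name}: {factor:.2f}x smaller in the student")
```

From the rounded table entries the parameter ratio comes out at roughly 131.97, in line with the approximately 131.9-fold reduction stated in the contributions.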

| Model | Dataset | Parameters | FLOPs | Accuracy, SNR −20 to −2 dB | Accuracy, SNR 0 to 18 dB | Overall Accuracy | Inference Time (ms/Sample) |
|---|---|---|---|---|---|---|---|
| Teacher | A | 38.27 M | 3.263 G | 31.77% | 90.71% | 61.24% | 2.9612 |
| Teacher | B | 38.27 M | 3.263 G | 35.85% | 93.46% | 64.65% | 2.9979 |
| CLDNN | A | 1.03 M | 15.680 M | 30.48% | 83.73% | 57.10% | 0.7107 |
| CLDNN | B | 1.03 M | 15.680 M | 36.63% | 91.90% | 64.26% | 0.7061 |
| CNN+LSTM | A | 0.50 M | 16.622 M | 30.43% | 88.74% | 59.58% | 0.6006 |
| CNN+LSTM | B | 0.50 M | 16.622 M | 35.84% | 92.17% | 64.01% | 0.5658 |
| CNNIQ | A | 0.87 M | 9.149 M | 31.98% | 88.23% | 60.11% | 0.4754 |
| CNNIQ | B | 0.87 M | 9.149 M | 35.86% | 90.50% | 63.18% | 0.5604 |
| MCLDNN | A | 1.16 M | 34.358 M | 33.02% | 86.82% | 59.92% | 1.0613 |
| MCLDNN | B | 1.16 M | 34.358 M | 36.62% | 90.94% | 63.78% | 1.0430 |
| ResNet+LSTM | A | 7.40 M | 35.032 M | 32.74% | 83.74% | 58.24% | 1.7717 |
| ResNet+LSTM | B | 7.40 M | 35.032 M | 35.98% | 84.58% | 60.28% | 1.8139 |
| MobileNet | A | 0.21 M | 2.812 M | 32.21% | 86.89% | 59.55% | 1.3535 |
| MobileNet | B | 0.21 M | 2.812 M | 35.04% | 91.52% | 63.28% | 1.0255 |
| ShuffleNet | A | 0.06 M | 0.873 M | 31.79% | 86.03% | 58.91% | 2.5073 |
| ShuffleNet | B | 0.06 M | 0.873 M | 35.62% | 91.26% | 63.44% | 2.6010 |
| Student w/o KD | A | 0.29 M | 35.899 M | 30.43% | 87.68% | 59.06% | 1.5686 |
| Student w/o KD | B | 0.29 M | 35.899 M | 32.97% | 90.62% | 61.80% | 1.1479 |
| MLD-Net (Ours) | A | 0.29 M | 35.899 M | 31.62% | 90.65% | 61.14% | 1.5674 |
| MLD-Net (Ours) | B | 0.29 M | 35.899 M | 36.20% | 93.03% | 64.62% | 1.1495 |
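The efficiency and accuracy trade-off in the comparison table above can be made concrete with a few derived quantities. The sketch below copies the overall accuracy and inference time of the teacher, the undistilled student, and MLD-Net from the table; the helper names are illustrative only.

```python
# (overall accuracy in %, inference time in ms/sample), copied from the table.
RESULTS = {
    ("Teacher", "A"): (61.24, 2.9612),
    ("Teacher", "B"): (64.65, 2.9979),
    ("Student w/o KD", "A"): (59.06, 1.5686),
    ("Student w/o KD", "B"): (61.80, 1.1479),
    ("MLD-Net", "A"): (61.14, 1.5674),
    ("MLD-Net", "B"): (64.62, 1.1495),
}
TEACHER_MFLOPS = 3263.0   # 3.263 G expressed in millions of FLOPs
STUDENT_MFLOPS = 35.899   # shared by the student with and without KD


def accuracy_delta(model, reference, dataset):
    """Overall accuracy of `model` minus `reference`, in percentage points."""
    return round(RESULTS[(model, dataset)][0] - RESULTS[(reference, dataset)][0], 2)


def speedup(model, reference, dataset):
    """How many times faster `model` runs per sample than `reference`."""
    return RESULTS[(reference, dataset)][1] / RESULTS[(model, dataset)][1]


if __name__ == "__main__":
    for dataset in ("A", "B"):
        print(f"Dataset {dataset}:")
        print("  gain over student w/o KD:",
              accuracy_delta("MLD-Net", "Student w/o KD", dataset), "points")
        print("  gap to teacher:",
              accuracy_delta("MLD-Net", "Teacher", dataset), "points")
        print(f"  speedup over teacher: {speedup('MLD-Net', 'Teacher', dataset):.2f}x")
    print(f"FLOPs reduction: {TEACHER_MFLOPS / STUDENT_MFLOPS:.1f}x")
```

On these figures, distillation lifts the student by 2.08 points on dataset A and 2.82 points on dataset B, leaving it within 0.10 and 0.03 points of the teacher, respectively.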

| Method | Dataset | Cross-Entropy | Output KD | Feature KD | Attention KD | Accuracy |
|---|---|---|---|---|---|---|
| Student w/o KD | A | ✓ | | | | 59.06% |
| Student w/o KD | B | ✓ | | | | 61.80% |
| +Output KD | A | ✓ | ✓ | | | 60.21% |
| +Output KD | B | ✓ | ✓ | | | 63.15% |
| +Feature KD | A | ✓ | | ✓ | | 59.88% |
| +Feature KD | B | ✓ | | ✓ | | 63.02% |
| +Attention KD | A | ✓ | | | ✓ | 59.76% |
| +Attention KD | B | ✓ | | | ✓ | 62.85% |
| +Output + Feature | A | ✓ | ✓ | ✓ | | 60.65% |
| +Output + Feature | B | ✓ | ✓ | ✓ | | 64.25% |
| +Output + Attention | A | ✓ | ✓ | | ✓ | 60.34% |
| +Output + Attention | B | ✓ | ✓ | | ✓ | 63.79% |
| +Feature + Attention | A | ✓ | | ✓ | ✓ | 59.95% |
| +Feature + Attention | B | ✓ | | ✓ | ✓ | 63.45% |
| MLD-Net (Ours) | A | ✓ | ✓ | ✓ | ✓ | 61.14% |
| MLD-Net (Ours) | B | ✓ | ✓ | ✓ | ✓ | 64.62% |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Zhang, L.; Zhang, M.; Zhang, Z.; Li, P.; Shi, X.; Zhou, F. MLD-Net: A Multi-Level Knowledge Distillation Network for Automatic Modulation Recognition. Sensors 2025, 25, 7143. https://doi.org/10.3390/s25237143
