DSC-LLM: Driving Scene Context Representation-Based Trajectory Prediction Framework with Risk Factor Reasoning Using LLMs †

Abstract

1. Introduction

2. Related Work

2.1. Trajectory Prediction

2.2. Scene Understanding in Autonomous Driving Systems

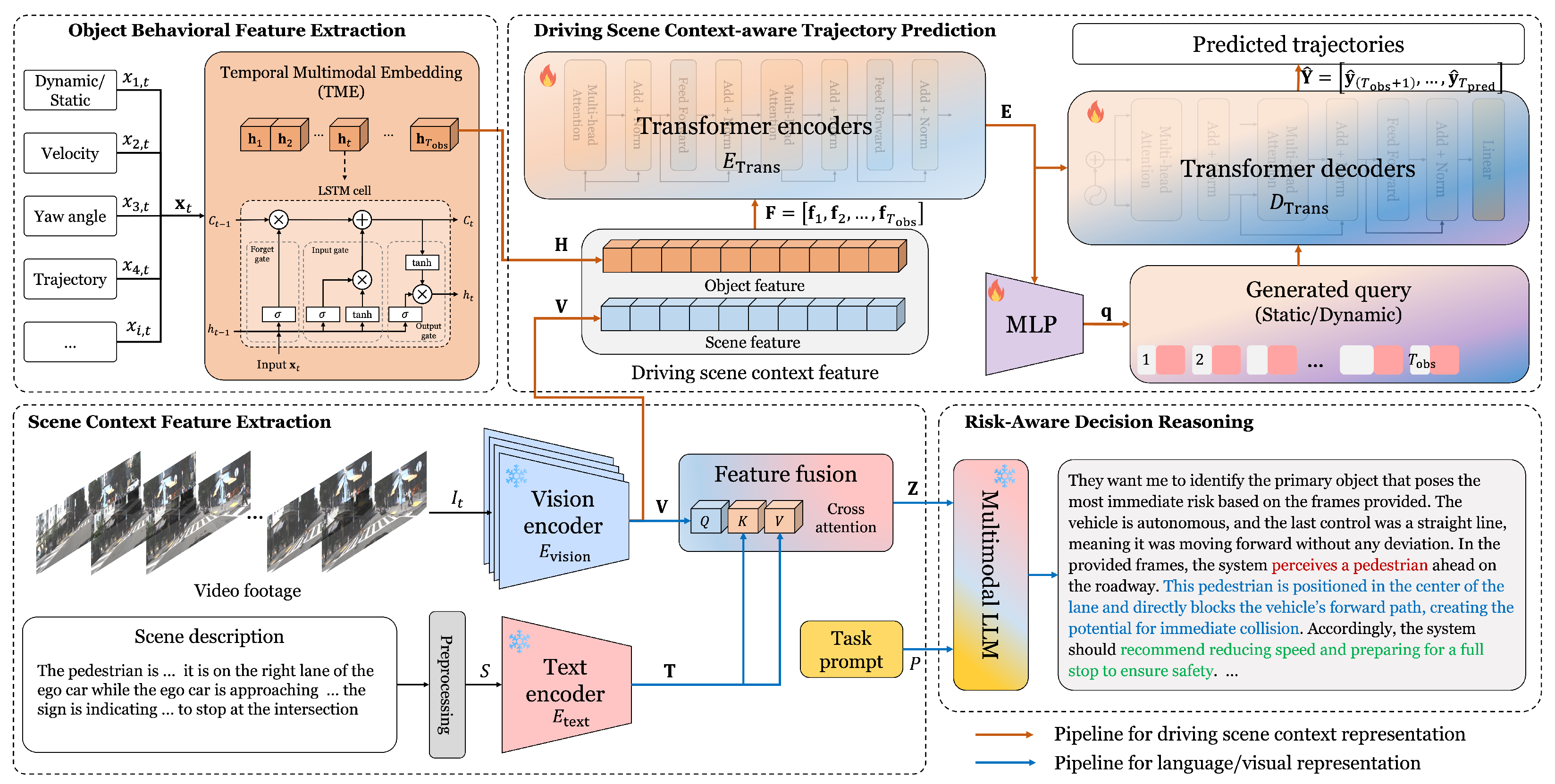

3. Methodology

3.1. Object Behavior Feature Extraction

3.2. Scene Context Feature Extraction

3.3. Driving Scene Context-Augmented Trajectory Prediction

3.4. Risk-Aware Decision Reasoning

4. Results

4.1. Experimental Details

4.1.1. Dataset

4.1.2. Training Setup

4.1.3. MLLM Configuration for Risk-Aware Reasoning

4.2. Evaluation Metrics
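The three error metrics reported in the tables below (ADE, FDE, RMSE) can be sketched as follows. ADE and FDE use their usual definitions. The RMSE convention is an assumption: RMSE taken over Euclidean displacements can never be smaller than ADE, yet the reported RMSE values are, which suggests a per-coordinate average. The sketch therefore averages squared errors over every (time step, coordinate) pair. The function names and the single-trajectory (non-multimodal) setting are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position at one time step


def _check(pred: List[Point], gt: List[Point]) -> None:
    # Both trajectories must cover the same, non-empty prediction horizon.
    if not pred or len(pred) != len(gt):
        raise ValueError("pred and gt must be non-empty and of equal length")


def ade(pred: List[Point], gt: List[Point]) -> float:
    """Average Displacement Error: mean Euclidean error over all predicted steps."""
    _check(pred, gt)
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(pred)


def fde(pred: List[Point], gt: List[Point]) -> float:
    """Final Displacement Error: Euclidean error at the last predicted step."""
    _check(pred, gt)
    return math.dist(pred[-1], gt[-1])


def rmse(pred: List[Point], gt: List[Point]) -> float:
    """RMSE over every (step, coordinate) pair (ASSUMED per-coordinate convention)."""
    _check(pred, gt)
    sq = [(pc - gc) ** 2 for p, g in zip(pred, gt) for pc, gc in zip(p, g)]
    return math.sqrt(sum(sq) / len(sq))


if __name__ == "__main__":
    pred = [(0.0, 0.0), (3.0, 4.0)]
    gt = [(0.0, 0.0), (0.0, 0.0)]
    print(ade(pred, gt), fde(pred, gt), rmse(pred, gt))  # 2.5 5.0 2.5
```

The units follow whatever coordinate frame the trajectories are expressed in. The table values are averages of these per-trajectory scores over the test set.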

4.3. Quantitative Evaluation for Trajectory Prediction

4.3.1. Baseline Experiment

4.3.2. Ablation Study

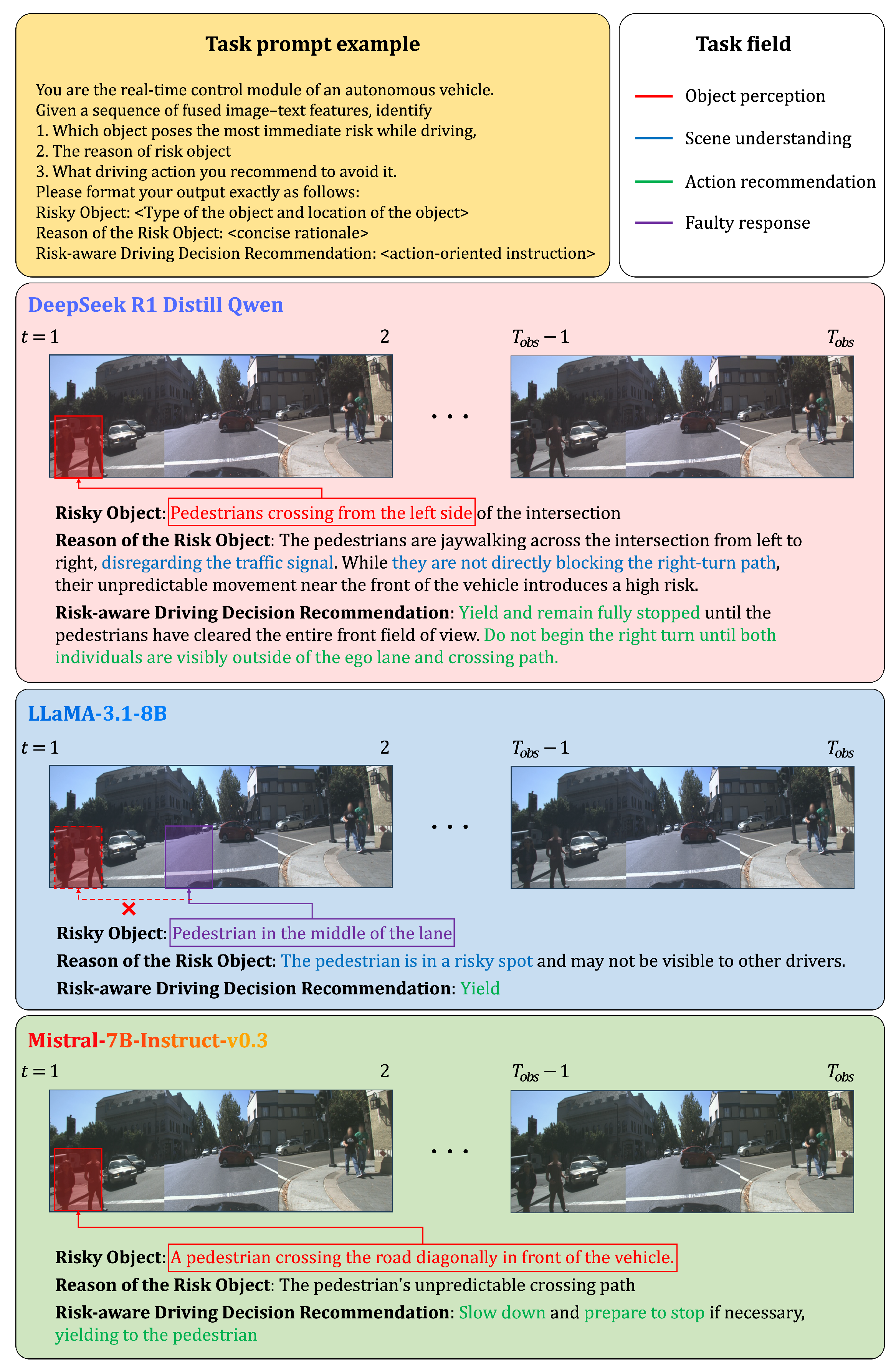

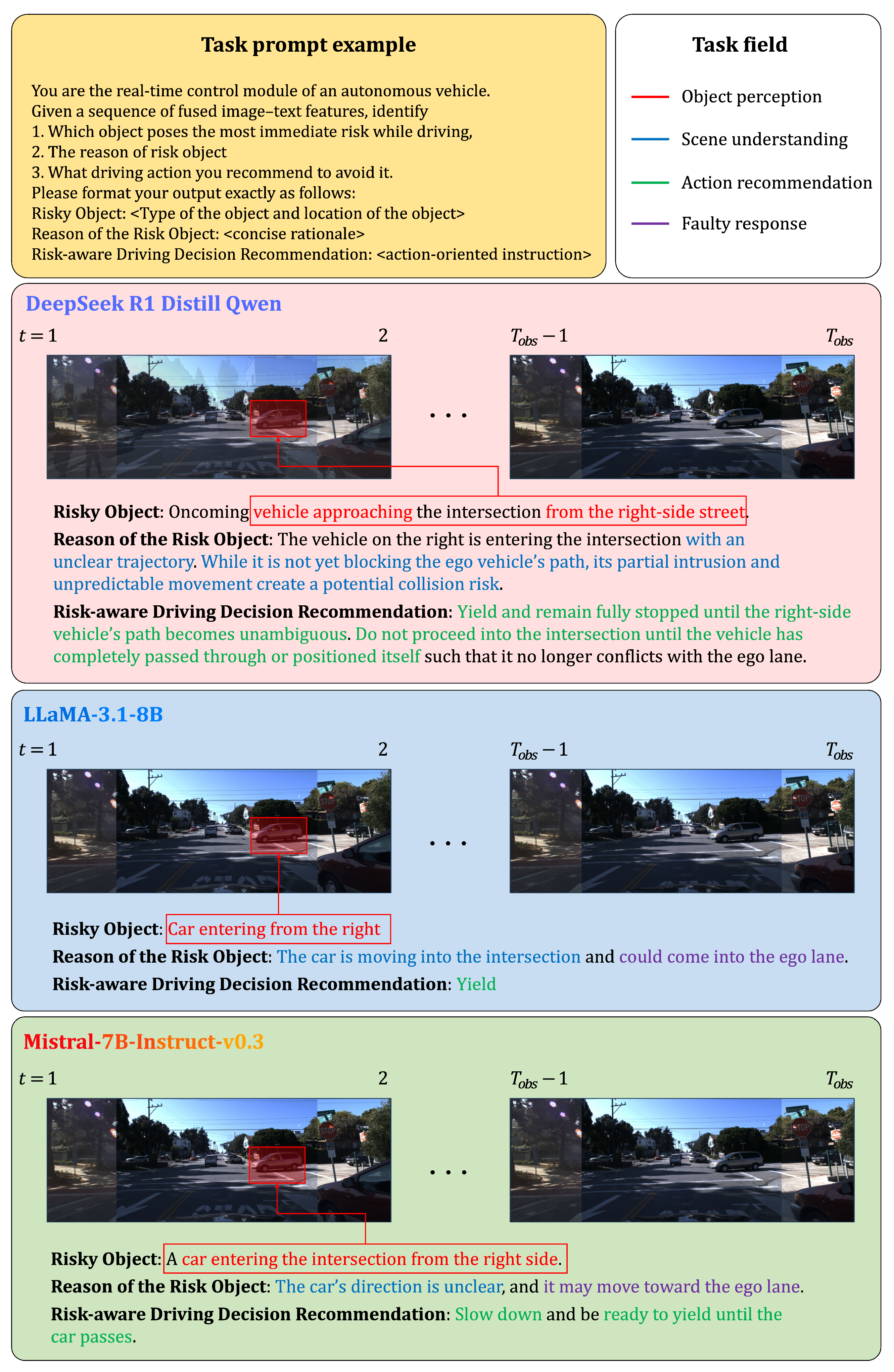

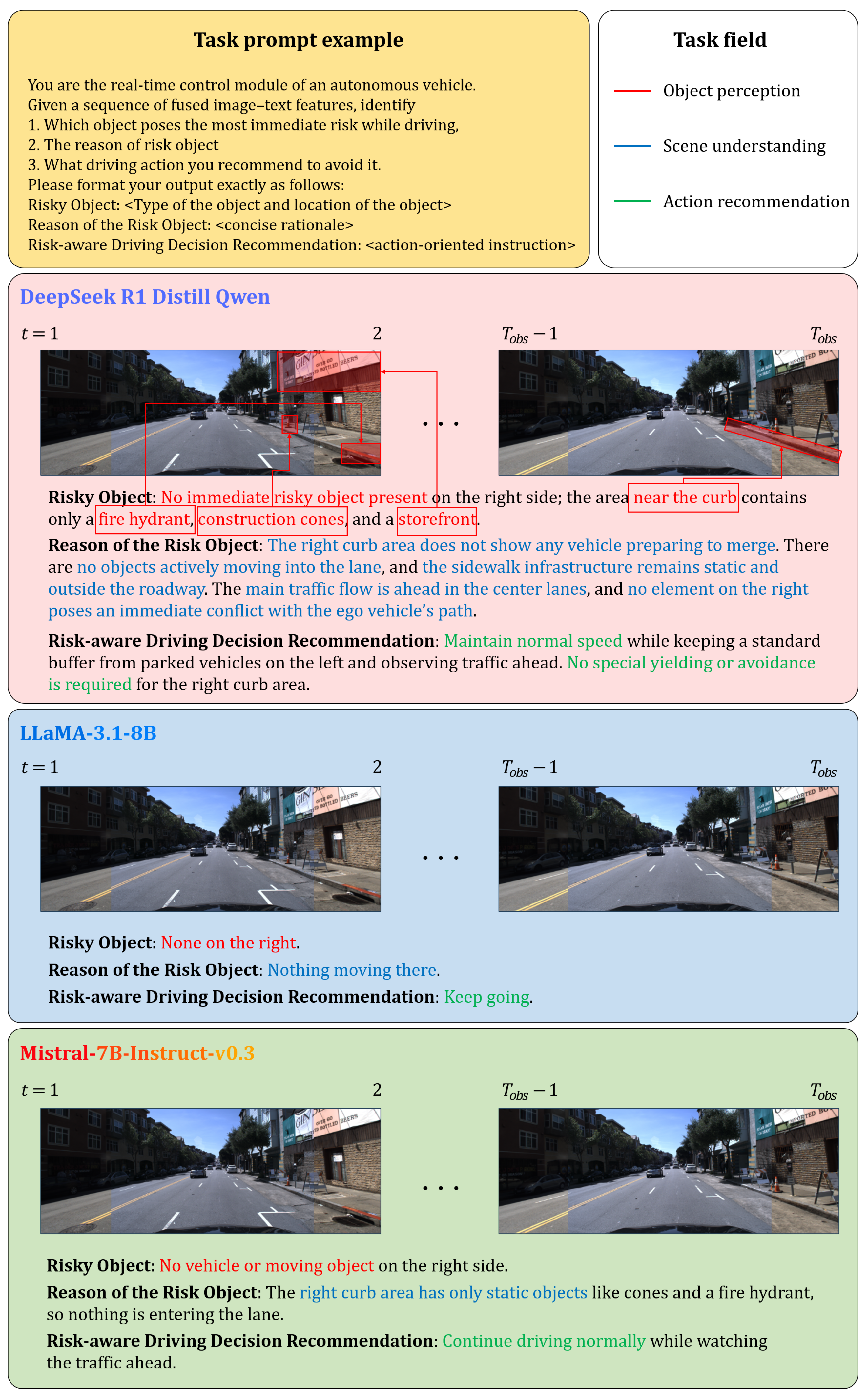

4.4. Qualitative Evaluation for Risk-Aware Decision Reasoning

4.5. Runtime Efficiency Analysis

4.6. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADE | Average Displacement Error |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| CLIP | Contrastive Language-Image Pretraining |
| CoT | Chain-of-Thought |
| DSC | Driving Scene Context |
| FDE | Final Displacement Error |
| GNNs | Graph Neural Networks |
| LLMs | Large Language Models |
| LoRA | Low-Rank Adaptation |
| LTSF-NLinear | Long-Term Time Series Forecasting Normalization Linear |
| MLLM | Multimodal Large Language Model |
| MLP | Multi-Layer Perceptron |
| MSE | Mean Squared Error |
| RMSE | Root Mean Squared Error |
| TCN | Temporal Convolutional Network |
| TME | Temporal Multimodal Embedding |
| VLMs | Vision-Language Models |

| Model | ADE-avg | FDE-avg | RMSE-avg |
|---|---|---|---|
| TCN | 18.429 ± 0.535 | 24.129 ± 0.647 | 14.203 ± 0.339 |
| Transformer | 17.598 ± 2.123 | 20.825 ± 2.765 | 13.426 ± 1.507 |
| LSTM | 13.137 ± 1.256 | 15.865 ± 1.304 | 10.282 ± 0.846 |
| Ours | 12.272 ± 1.006 | 14.889 ± 1.054 | 9.752 ± 0.757 |
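To make the size of the gains in the baseline comparison concrete, the relative error reduction of the proposed model over the strongest baseline can be computed directly from the table. This is a minimal sketch: the dictionary layout and the helper names `relative_reduction` and `best_baseline` are illustrative assumptions. Only the mean values are used and the ± spreads are ignored.

```python
# Mean values transcribed from the baseline comparison table;
# the ± spreads are deliberately ignored in this point-estimate comparison.
RESULTS = {
    "TCN": {"ADE": 18.429, "FDE": 24.129, "RMSE": 14.203},
    "Transformer": {"ADE": 17.598, "FDE": 20.825, "RMSE": 13.426},
    "LSTM": {"ADE": 13.137, "FDE": 15.865, "RMSE": 10.282},
    "Ours": {"ADE": 12.272, "FDE": 14.889, "RMSE": 9.752},
}
METRICS = ("ADE", "FDE", "RMSE")


def relative_reduction(baseline: float, ours: float) -> float:
    """Percent reduction of an error metric versus a baseline (lower error is better)."""
    return 100.0 * (baseline - ours) / baseline


def best_baseline(results: dict, metric: str, proposed: str = "Ours") -> str:
    """Name of the lowest-error model other than the proposed one."""
    return min((name for name in results if name != proposed),
               key=lambda name: results[name][metric])


if __name__ == "__main__":
    for metric in METRICS:
        base = best_baseline(RESULTS, metric)
        pct = relative_reduction(RESULTS[base][metric], RESULTS["Ours"][metric])
        print(f"{metric}: vs {base}, {pct:.2f}% lower")
```

LSTM is the strongest baseline on all three metrics. Relative to it, the means give reductions of about 6.58% (ADE), 6.15% (FDE), and 5.15% (RMSE). The reported spreads overlap (for example, ADE 12.272 ± 1.006 vs. 13.137 ± 1.256), so these figures describe the point estimates only and are not a significance test.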

| Image | Dynamic/Static | Velocity | Yaw | ADE | FDE | RMSE |
|---|---|---|---|---|---|---|
| ✓ | ✓ | ✓ | – | 11.570 | 14.079 | 9.193 |
| ✓ | ✓ | – | ✓ | 13.874 | 16.481 | 10.571 |
| ✓ | – | ✓ | ✓ | 12.055 | 14.791 | 9.517 |
| – | ✓ | ✓ | ✓ | 14.622 | 17.351 | 11.317 |
| ✓ | ✓ | ✓ | ✓ | 10.972 | 13.701 | 8.782 |
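The ablation table can be read as a leave-one-out study. Each of the first four rows drops exactly one input (marked –), so the error increase over the all-✓ row indicates how much that input contributes. The sketch below ranks the inputs by this increase. The leave-one-out reading and the helper names `removal_cost` and `rank_features` are our own assumptions. The full-feature row here (ADE 10.972) is reported as a single value without a spread and differs from the baseline table's mean, so the two tables are not combined.

```python
# Values transcribed from the ablation table: FULL is the all-features row,
# ABLATED maps each removed input to the errors obtained without it.
FULL = {"ADE": 10.972, "FDE": 13.701, "RMSE": 8.782}
ABLATED = {
    "Yaw": {"ADE": 11.570, "FDE": 14.079, "RMSE": 9.193},
    "Velocity": {"ADE": 13.874, "FDE": 16.481, "RMSE": 10.571},
    "Dynamic/Static": {"ADE": 12.055, "FDE": 14.791, "RMSE": 9.517},
    "Image": {"ADE": 14.622, "FDE": 17.351, "RMSE": 11.317},
}


def removal_cost(full: dict, ablated: dict, metric: str) -> dict:
    """Error increase caused by dropping each input (larger = input matters more)."""
    return {feat: row[metric] - full[metric] for feat, row in ablated.items()}


def rank_features(full: dict, ablated: dict, metric: str) -> list:
    """Inputs ordered from most to least important under the given metric."""
    costs = removal_cost(full, ablated, metric)
    return sorted(costs, key=costs.get, reverse=True)


if __name__ == "__main__":
    for metric in ("ADE", "FDE", "RMSE"):
        costs = removal_cost(FULL, ABLATED, metric)
        ranked = rank_features(FULL, ABLATED, metric)
        print(metric, [(f, f"+{costs[f]:.3f}") for f in ranked])
```

With these values, removing the Image input raises ADE by 3.650 and removing Velocity by 2.902, while removing Dynamic/Static or Yaw costs 1.083 and 0.598, respectively. The ordering Image > Velocity > Dynamic/Static > Yaw holds for ADE, FDE, and RMSE alike.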

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, S.; Jin, J.; Hong, S.; Ka, D.; Kim, H.; Noh, B. DSC-LLM: Driving Scene Context Representation-Based Trajectory Prediction Framework with Risk Factor Reasoning Using LLMs. Sensors 2025, 25, 7112. https://doi.org/10.3390/s25237112
